Abstract

Conventional endoscopes comprise a bundle of optical fibers, associating one fiber for each pixel in the image. In principle, this can be reduced to a single multimode optical fiber (MMF), the width of a human hair, with one fiber spatial-mode per image pixel. However, images transmitted through a MMF emerge as unrecognizable speckle patterns due to dispersion and coupling between the spatial modes of the fiber. Furthermore, speckle patterns change as the fiber undergoes bending, making the use of MMFs in flexible imaging applications even more complicated. In this paper, we propose a real-time imaging system using flexible MMFs, but which is robust to bending. Our approach does not require access or feedback signal from the distal end of the fiber during imaging. We leverage a variational autoencoder to reconstruct and classify images from the speckles and show that these images can still be recovered when the bend configuration of the fiber is changed to one that was not part of the training set. We utilize a MMF 300 mm long with a 62.5 μm core for imaging \(10~\times ~10\) cm objects placed approximately at 20 cm from the fiber and the system can deal with a change in fiber bend of 50\(^\circ\) and range of movement of 8 cm.

Similar content being viewed by others

Introduction

Multimode fibers (MMFs), the width of a human hair, potentially allow for the transmission of images formed from the thousands of spatial modes they support1,2. This creates the potential for minimally invasive and high-resolution imaging systems such as ultra-thin endoscopes which can be used for imaging objects out of the reach of conventional technology. Unfortunately, even when using temporally coherent light, images propagated through MMFs suffer from severe spatial distortions and can appear as random speckle patterns at the distal end due to modal dispersion in the fiber1,2. However, although the information is completely scrambled at either end of a MMF, the vast majority of the information is not lost and hence the image can, in principle, be recovered.

To image through MMFs, two main classes of methods have been used to date. The first consists of raster-scanning methods2,3,4,5,6,7, which rely on measuring the complex mapping of the input field onto the output field, namely the transmission matrix (TM)8,9,10,11,12. Using the TM, the input field at the proximal facet of a MMF can be specified such that focused light beams are generated at the distal end. By calculating the correct series of input fields, this focused beam can be scanned over an object with a field of view defined by the numerical aperture of the fiber. The second class of methods consists of speckle imaging approaches13,14,15,16,17. With these methods, a set of speckle patterns is recorded at the distal end of a MMF, forming a measurement matrix during a calibration stage. During imaging, the same speckle patterns are projected sequentially onto a new object and the collected overall signal provides a speckle measurement. Iterative methods have been traditionally used to reconstruct objects from the speckle measurements and the measurement matrix. Although Lan et al.17 showed that using an average of speckle patterns recorded for different fiber bends during image reconstruction can decrease the influence of changing the fiber bend, all the aforementioned methods are not robust to bending and consider a single, static, fiber configuration. It should be noted at this stage that although in principle the light propagation in MMFs is often invertible, raster scanning leads to better results due to better signal-to-noise (SNR) ratios.

To overcome the fiber bending problem and allow the use of MMFs as flexible imaging devices, extensive research has been carried out over the last decade. Caravaca et al.18 proposed a real-time TM measurement technique that allows for light refocusing at very high frame rates by placing a photodetector at the distal end of the fiber. In another example, Farahi et al.19 placed a virtual coherent point light source (a beacon) at the distal end to allow for bending compensation while focusing light through MMFs by digital phase conjugation (DPC). Similarly, Gu et al.20 attached a partial reflector to the distal end of the MMF, which reflects light back to the proximal end, allowing the MMF to be re-calibrated each time the fiber is bent. Leveraging optical memory effects, it was claimed that using a guide-star on the distal facet of an MMF, which reports its local field intensity to the proximal facet, along with the estimation of a basis where the TM is diagonal, enables TM approximation and allows for imaging through a bending MMF21. All these methods require a priori computation of the TM or feedback mechanism from the distal end of the fiber. In another work5, it was shown that bending deformations in step-index MMFs could be predicted and compensated for in imaging applications. However, this process is computationally intensive and requires precise knowledge of the fiber layout.

Recently, deep learning approaches22,23,24,25,26,27,28,29,30,31,32 have emerged that infer images from speckle patterns without prior knowledge of the fiber characteristics. Although they demonstrate promising results and real-time reconstruction, accounting for fiber bending remains a challenging problem. In this context, Li et al.33,34 showed that training an autoencoder (AE) neural network on speckles from multiple thin diffusers can be used to recover images from speckles corresponding to a new diffuser of the same type. Moreover, another study35 confirmed the existence of statistical dependencies in the optical field distribution scattered by a random medium. Motivated by these findings, Resisi et al.36 extended the work of Li et al.33,34 to MMFs and showed that an AE trained on hundreds of fiber bends can be used to reconstruct images from new configurations. However, their results were limited to the reconstruction of handwritten digits, which share very similar features, displayed on a binary DMD. Furthermore, the reflected light from the fiber was imaged on a camera which does not depict a realistic imaging scenario. Finally, preparing the data for training and testing required 14 weeks of lab work.

In this paper, we propose a high-resolution and minimally invasive imaging system leveraging MMFs. In contrast to previously reported work, our proposed framework represents a realistic imaging scenario where no access to the distal end of the fiber is required during imaging and the collected signal need not be coherent light. The object of interest is probed with speckle patterns through an illumination MMF while a secondary collection fiber, placed alongside the illumination fiber, transmits the diffuse reflections from the object back to the proximal end to be recorded by an avalanche photodiode (APD). The object is then reconstructed from the APD measurements leveraging a variational autoencoder with a Gaussian mixture latent space (GMVAE). In contrast to deterministic deep learning approaches proposed in the literature, the GMVAE learns the overall distribution representing the complicated mapping between measurements corresponding to different fiber configurations and the underlying images of different classes in a low-dimensional latent representation. The latent space learned is configuration-agnostic. This means all measurement vectors corresponding to the same class of images but to different fiber configurations share the same cluster in the latent space. Our GMVAE architecture is significantly simpler than the widely used AEs proposed for imaging through MMFs36 and scattering media33,34, resulting in a 90% reduction in training time for the same data set. Our light network does not suffer as much from generalization issues as reported for the AE architecture proposed for imaging through MMFs36. We demonstrate the robustness of our GMVAE against AE33,34 on new image classes from the fashion-MNIST data set and new configurations of a MMF that were not used during training. This research lays the foundation for a flexible, affordable, and high-resolution imaging system, particularly useful in cases highly sensitive to implant size.

Results

Observation model

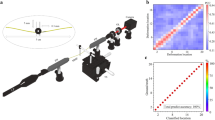

A simplified version of the proposed imaging system is shown in Fig. 1. The laser is spatially shaped by a digital micro-mirror device (DMD) to produce M speckle patterns at the far-field of the distal end of a 300 mm long and 62.5 μm core graded-index illumination fiber. The M patterns are used to probe a \(10~\times ~10\) cm object placed approximately at 20 cm from the fiber and the back-scattered light from each of the M patterns is collected by a 400 μm core step-index collection fiber (running parallel to the illumination fiber) and recorded by an APD at the proximal end (see “Methods” for details). In contrast to previously reported work, our imaging system does not require access to- or a feedback signal from the distal end of the fiber during imaging.

Assuming that the speckle patterns projected at the distal end of the fiber are known (we will discuss in the training procedure section how they are recorded before actual imaging), each measurement received by the APD can be modeled as the overlap integral between the object and one speckle pattern, hence the forward model can be formulated as

where \(\varvec{y} \in \mathbb {R}^M\) is the measurement vector, \(\textbf{A} \in \mathbb {R}^{M \times N}\) is the measurement matrix which contains the set of M speckle patterns, vectorized to form the rows of the matrix, each of size N pixels. The vector \(\varvec{x} \in \mathbb {R}^N\) is a discretized version of the object to be reconstructed and \(\varvec{n} \in \mathbb {R}^M\) represents measurement noise, modelled as a realization of a random i.i.d. Gaussian noise. The function \(\mathscr {G}\) comprises the damping effect caused by the collection fiber and the perturbations of the APD and the laser (see "Methods" for details). As discussed in the introduction, estimating the underlying object \(\varvec{x}\) from the measurements \(\varvec{y}\) yields an inverse problem that can be solved efficiently using a variety of different methods, provided that \(\textbf{A}\) and \(\mathscr {G}(\cdot )\) do not change. However, changing the configuration of the fiber means significant changes in the measurement matrix \(\textbf{A}\), and hence the measurement values. Thus, system re-calibration is usually performed to correct for changes in the measurement matrix \(\textbf{A}\). In this work, we aim to learn an \(\textbf{A}\)-agnostic variational autoencoder that can classify and reconstruct images from measurements corresponding to new and unseen configurations of the fiber.

Reconstruction algorithm

Schematic of the proposed Gaussian Mixture Variational Autoencoder (GMVAE). Arrows of different colors indicate various types of neural network layers, as explained by the color-coding in the legend above. The output dimensions for each layer are displayed within the GMVAE schematic. Note that the APD measurement vectors of size \(M=4096\) are reshaped to \(64\times 64\).

Variational autoencoders (VAEs)37,38,39 are deep generative models that consist of an encoder, a latent space and a decoder. The essence of VAEs is to regularize the encodings distribution during training to ensure that the latent space captures only the important features of the data set. This allows for reconstruction (or generation) of new data through the decoder. In contrast to the standard VAE architecture which only contains a single continuous (and multivariate) latent variable, the Gaussian mixture VAE (GMVAE)40,41,42,43,44 also contains a discrete latent variable representing the data class. Therefore, by specifying different integer numbers to different object classes, the GMVAE provides the membership probabilities of the observed object, for all the pre-defined classes, which allows for object classification.

In this context, we leverage a GMVAE (depicted in Fig. 2) for imaging through a MMF which bends. Essentially, the encoder of our GMVAE performs non-linear dimensionality reduction of a set of APD measurements corresponding to any image and any fiber configuration into a low-dimensional latent representation which only captures image information and disregards the fiber configuration. Thus, the learned latent space is trained to be \(\textbf{A}\)-agnostic, i.e., all measurement vectors corresponding to the same image but different fiber configurations share the same features in the latent space. The decoder of the GMVAE then takes as input this latent vector and generates an estimated image of the scene. Once trained, the proposed GMVAE can simultaneously classify (through the VAE encoder) and reconstruct (through the VAE decoder) objects from measurement vectors corresponding to a variety of fiber configurations, even configurations unseen during training.

Training procedure

Recall that training the GMVAE described above requires sets of input (APD measurements) and output (reference images of objects, belonging to different classes). However, recording sufficient measurement vectors at different fiber configurations and with a large image data base for training is not possible in practice as this imaging system is based on a sequential generation of illumination patterns in order to record diffuse reflection from real objects. To circumvent this problem, we use a white screen and a scientific CMOS camera (Hamamatsu Orca Flash 4.2) (sCMOS) to record the speckle patterns corresponding to M fixed DMD patterns for different configurations of the fiber. This leads to different speckle matrices \(\textbf{A}_l\), where each speckle is a row vector in \(\textbf{A}_l\) and l represents the index of the fiber configuration. As illustrated in Fig. 3, the DMD patterns, and therefore input spatial light field, are maintained for all configurations which allows the model to capture the hidden correlations between speckle patterns corresponding to the different configurations. We then use the recorded \(\textbf{A}_l\) matrices to perform speckle projection on images numerically to simulate measurement vectors. More precisely, we compute \(\varvec{y}\) as in Equation (1) for each image \(\varvec{x}\) in our training data set and for the L configurations of the fiber (replacing \(\textbf{A}\) in Eq. (1) by \(\textbf{A}_l\)).

To assess quantitatively the performance of the model in the next section, we first generate data sets in a similar fashion to the training data, however, we record speckle patterns corresponding to new configurations of the fiber which are different from those seen during training. We then perform projection on the test images numerically to simulate measurements. Measurements computed with this procedure are referred to as numerical measurements. We also assess qualitatively the performance of GMVAE on real measurements collected by an APD as presented in Fig. 1. We refer to these measurements as APD measurements. Training using numerical measurements offers the benefit of greater speed and flexibility to the model as learning new classes of images does not require the lab experiments to be repeated.

Optical setup for collecting the training data set. The figure shows three simplified fiber configuration examples and the resulting changes to the output speckle patterns. The fiber tip, imaging screen, and sCMOS camera were rotated together using the rotation stage and the middle of the fiber was clamped to- and moved by the bending arm (see Supplementary Note 1 for details).

Experiments

In the following experiments, we apply two types of bend simultaneously on the fiber. These are rotational bends applied at the final section of the fiber using a rotational stage, and arm bends performed on the middle section of the fiber leveraging a large lever arm (the experimental setup is shown in Supplementary Note 1). The lever arm has a mechanical advantage of \(\sim\)1:8 from the actuation side to the fiber-clamp side. Arm bends were measured at the actuation side in −1 mm increments from the initial position at 10 mm to 0 mm while rotational bends were in 5\(^\circ\) increments from the initial position at 230\(^\circ\) to 280\(^\circ\). This yielded \(L=11\) different fiber configurations covering a change in fiber bend of 50\(^\circ\) and range of movement of 8 cm. For simplicity, we denote each configuration by \(\text {C}_x\) where x is the corresponding arm bend position.

GMVAE training was performed using \(L=5\) fiber configurations (\(\text {C}_{10}\), \(\text {C}_{7}\), \(\text {C}_{5}\), \(\text {C}_{3}\) and \(\text {C}_{1}\)). These configurations cover the range from (10 mm, 230\(^\circ\)) to (1 mm, 275\(^\circ\)). At each configuration, we use 6000 images from each of the following 8 classes of the fashion-MNIST data set: (0) t-shirt, (1) trouser, (2) dress, (3) coat, (4) sandal, (5) shirt, (6) sneaker, and (7) boot. Testing was done on new configurations (\(\text {C}_{9}\), \(\text {C}_{8}\), \(\text {C}_{6}\), \(\text {C}_{4}\), \(\text {C}_{2}\), \(\text {C}_{0}\)) using new images from the same trained-on 8 classes and 2 new classes: pullover and bag. Here, \(\text {C}_{9}\), \(\text {C}_{8}\), \(\text {C}_{6}\), \(\text {C}_{4}\), \(\text {C}_{2}\) represent new bends which lie inside the range of the training configurations while \(\text {C}_{0}\) (0 mm, 280\(^\circ\)) represents a new bend which lies outside the range of the training configurations.

We tested the method in two scenarios, with and without wavefront shaping. Note that wavefront shaping allows for raster-scanning imaging while no wavefront shaping leads to speckle imaging. The performance of GMVAE is evaluated in terms of reconstruction quality against AE33,34 on numerical and APD measurements. Note that numerical measurements are computed at all \(L=11\) configurations while the APD measurements are only recorded at \(\text {C}_{10}\), \(\text {C}_{7}\), \(\text {C}_{5}\), \(\text {C}_{2}\) and \(\text {C}_{0}\). Since AE can only perform reconstruction, the classification accuracy of GMVAE is compared to that of a classifier trained on the images reconstructed by AE. For fairness, the chosen classifier, denoted by C-AE, has the same architecture as that used in our GMVAE (see Supplementary Note 4 for details).

First experiment with wavefront shaping

In this experiment, we use the wavefront shaping technique2 to generate focal spots at the distal end of the fiber. This allows for raster-scanning imaging which can give better results due to better SNR ratios. Speckles were recorded at the different configurations and wavefront shaping was only performed at the configuration \(\text {C}_{10}\). This means that speckles at \(\text {C}_{10}\) (10 mm, 230\(^\circ\)) are simple focal points. By bending the fiber away from the calibration position, the focal points become speckles due to coupling between spatial modes of the fiber. Therefore, \(\text {C}_{0}\) (0 mm, 280\(^\circ\)) represents the most challenging bend where speckles have the maximum distortion (see Supplementary Figure 2).

Figure 4A shows the peak signal-to-noise ratio (PSNR) curves obtained by the GMVAE and the AE at all configurations under scrutiny utilizing 10000 numerical measurements and 20 APD measurements from the fashion-MNIST dataset (8 trained-on classes and 2 new classes). We notice that both the GMVAE and the AE give the best reconstruction quality on the numerical measurements recorded at \(\text {C}_{10}\) (the AE scores PSNR = \(23.67\pm 3.79\) dB and the GMVAE scores PSNR = \(22.69\pm 3.61\) dB). This is expected since \(\text {C}_{10}\) was used during training. Moreover, \(\text {C}_{10}\) is the calibrated configuration, hence details from the original images can be seen directly in the measurements, reducing the problem of reconstruction to a denoising or a mild deconvolution problem. Although the AE was able to achieve 1 dB enhancement on numerical measurements at the calibrated configuration, the GMVAE achieves higher PSNR values at all other configurations. In contrast to the AE which exhibits a gradual decrease in PSNR values on numerical measurements as the fiber bends away from the calibrated position, the GMVAE maintains almost the same PSNR values (\(\thickapprox 20\) dB) at the seen configurations (\(\text {C}_{7}\), \(\text {C}_{5}\), \(\text {C}_{3}\) and \(\text {C}_{1}\)) and the same PSNR values (\(\thickapprox 18.5\) dB) at the unseen configurations (\(\text {C}_{9}\), \(\text {C}_{8}\), \(\text {C}_{6}\), \(\text {C}_{4}\), \(\text {C}_{2}\) and \(\text {C}_{0}\)). Albeit not significant, the difference in PSNR values between seen and unseen configurations suggests that not all the configuration-dependent features are discarded in the latent space, hence training the GMVAE with more configurations might lead to better generalization. The GMVAE also maintains around 1.5 dB of PSNR enhancement on APD measurements recorded at the seen configurations (\(\text {C}_{10}\), \(\text {C}_{7}\) and \(\text {C}_{5}\)) and the unseen configurations (\(\text {C}_{2}\) and \(\text {C}_{0}\)). Since both the AE and the GMVAE were trained on numerical measurements, we notice a steep decrease in the PSNR values when testing on APD measurements. Although the training procedure was adapted to partially compensate for the damping effect caused by the collection fiber and the perturbations of the APD and the laser (see “Methods” for details), small additional variations occur in the APD measurement. On the one side, this suggests the use of a more robust hardware and possibly enhance the forward model to improve the training efficiency. On the other hand, this demonstrates the performance of the methods in realistic scenarios including unknown signal variations.

Figure 5 compares qualitatively the reconstruction quality of the GMVAE against the AE on APD measurements collected at the following configurations: \(\text {C}_{10}\), \(\text {C}_{2}\) and \(\text {C}_{0}\). We can clearly see the good reconstruction quality for both the GMVAE and the AE at the calibrated configuration \(\text {C}_{10}\). Again, the results show a superior performance for the GMVAE in comparison to the AE for the new configurations (\(\text {C}_{2}\) and \(\text {C}_{0}\)) which are far away from the calibration position. Although \(\text {C}_{0}\) represents the most difficult bend which lies outside the range of training configurations, the GMVAE was able to maintain almost the same reconstruction quality as that for \(\text {C}_{2}\) which lies inside the training range. This shows the ability of the GMVAE to generalize to new configurations of the fiber in comparison with the AE that fails in capturing the non-linear dependencies between the different configurations. Moreover, the training time of the GMVAE is around 10 times lower than that for the AE.

Figure 6 (top row) shows the average confusion matrices obtained by the GMVAE and the C-AE on 8000 numerical measurements from the 8 trained-on classes. The confusion matrices are averaged over the unseen configurations \(\text {C}_{9}\), \(\text {C}_{8}\), \(\text {C}_{6}\), \(\text {C}_{4}\), \(\text {C}_{2}\) and \(\text {C}_{0}\). We can see that the GMVAE achieves \(77\pm 3\%\) classification accuracy with \(1\%\) average enhancement over the C-AE which scores \(76\pm 8\%\). These numbers suggest that simultaneous learning is better than sequential learning and can boost both reconstruction quality and classification accuracy for unseen configurations of the fiber.

Figure 7 (top row) presents the combined raw data and the combined GMVAE latent vectors of 8000 numerical measurements computed at the configurations \(\text {C}_{10}\), \(\text {C}_{2}\) and \(\text {C}_{0}\) and projected using principal component analysis (PCA) to the 3D space. In contrast to the combined raw data (Fig. 7A), the GMVAE combined latent space (Fig. 7B) of the testing data points shows a clear separation between the different classes. More importantly, the combined latent space is configuration-agnostic. This means that regardless of the varying configurations (\(\text {C}_{10}\), \(\text {C}_{2}\), and \(\text {C}_{0}\)), the measurement vectors belonging to the same class (e.g., t-shirt) coalesced into a single cluster in the latent space. This observation is a manifestation of the GMVAE’s ability to capture the commonalities in the underlying data structure corresponding to the same class, irrespective of the configuration changes.

Average PSNR curves obtained by GMVAE and AE. Results are shown at different configurations on 10000 numerical measurements and 20 APD measurements from the fashion-MNIST dataset (8 trained-on classes and 2 new classes). (A) results of the first experiment with wavefront shaping and (B) results of the second experiment without wavefront shaping.

Reconstruction results from the first experiment with wavefront shaping at configurations \(\text {C}_{10}\), \(\text {C}_{2}\) and \(\text {C}_{0}\). The first column displays the original images, while each set of three consecutive columns presents the APD measurements, GMVAE reconstruction, and AE reconstruction. Images highlighted by red squares correspond to a new class (pullover) not used during training. The intensity values of all images in the figure range between 0 and 1.

Average confusion matrices obtained by GMVAE and C-AE on 8000 numerical measurements from the 8 trained-on classes. (A) and (B) are results of the first experiment with wavefront shaping, and (C) and (D) are results of the second experiment without wavefront shaping. The confusion matrices are averaged over the unseen configurations \(\text {C}_{9}\), \(\text {C}_{8}\), \(\text {C}_{6}\), \(\text {C}_{4}\), \(\text {C}_{2}\) and \(\text {C}_{0}\).

3D PCA projection of the raw data and the GMVAE latent vectors. (A) and (B) are results of the first experiment with wavefront shaping, and (C) and (D) are results of the second experiment without wavefront shaping. Each panel shows the accumulation of 8000 numerical measurements computed at the configurations \(\text {C}_{10}\), \(\text {C}_{2}\) and \(\text {C}_{0}\).

Second experiment without wavefront shaping

In the second experiment, speckles were recorded at the different configurations with no configuration-specific wavefront shaping performed. This simulates a situation in which no prior calibration has been done and the fiber is to be used in-situ for imaging directly. This experiment represents a more complicated scenario as all configurations are challenging (see Supplementary Figure 2). However, it could be of interest when wavefront shaping for raster scanning is expensive or not possible.

Figure 4B presents the PSNR curves obtained by the GMVAE and the AE at all configurations on numerical and APD measurements. We see that the GMVAE achieves higher PSNR values (around 2 dB) for all configurations on both numerical and APD measurements. The GMVAE gives almost the same PSNR values at the seen configurations and the same PSNR values at the unseen configurations (except for \(\text {C}_{8}\)). The drop in PSNR values at the unseen configurations indicates that the GMVAE latent space does not only capture image information but also fiber configuration related information. This problem might be mitigated by increasing the number of training configurations.

The average confusion matrices obtained by the GMVAE and the C-AE on 8000 numerical measurements from the 8 trained-on classes are shown in Fig. 6 (bottom row). The confusion matrices are averaged over the unseen configurations. Once again, the results suggest better classification accuracy for the GMVAE compared to the C-AE. More precisely, the GMVAE scores \(66\pm 10\%\) with 8% average enhancement from the C-AE.

Finally, the combined raw data and the combined GMVAE latent vectors of 8000 numerical measurements computed at the configurations \(\text {C}_{10}\), \(\text {C}_{2}\) and \(\text {C}_{0}\) and projected by PCA to the 3D space are presented in Fig. 7 (bottom row). In contrast to the combined raw data (Fig. 7C) where we can see stripes representing measurements from the same class but different configurations, the combined GMVAE latent space (Fig. 7D) is configuration-agnostic, i.e., only features of the measurements related to the underlying images are preserved and configuration-dependent features are discarded.

Video reconstruction

We further validate the performance of the GMVAE on recorded videos in two scenarios. Firstly, we show the reconstruction of 3 static objects while moving the fiber from the calibrated position \(\text {C}_{10}\) (10 mm, 230\(^\circ\)) to \(\text {C}_{5}\) (5 mm, 255\(^\circ\)). This corresponds to change in fiber bend of 25\(^\circ\) and range of movement of 4 cm. Secondly, we show the reconstruction of a moving object while bending the fiber from \(\text {C}_{10}\) to \(\text {C}_{5}\). The reconstructed video (provided as a supplementary material) shows good reconstruction quality for the GMVAE. As seen in the reconstructed video, it is expected that the GMVAE fails to reconstruct images when objects leave the field-of-view (FOV) of the fiber. We believe this could be improved using more complex VAE architectures allowing translation-blind classification45,46,47.

Our proposed system facilitates the reconstruction of roughly 5 frames per second. Despite our GMVAE enabling swift inference times—typically in the millisecond range once properly trained—our setup’s real-time potential is predominantly constrained by the hardware and the acquisition process. Our system uses a DMD that reads binary masks from onboard DDR RAM at a rate of 21000 masks per second. To the best of our knowledge, this represents the current state of the art in arbitrary digital projection. With an FPGA upgrade and a restricted region-of-interest on the DMD, we could potentially increase this rate to roughly 40000 masks per second. Given that we scan through 4096 DMD patterns per image, we can achieve an image acquisition rate just above 5 Hz. We are actively investigating potential optimizations for the hardware, firmware, and software used in our system. It’s important to clarify that while our system can perform live reconstruction, we did not showcase this capability in this work, as the processing was conducted offline.

Discussion

We have proposed an innovative real-time imaging system using flexible MMFs, which, in contrast to previous work, is robust to bending and does not require access to the distal end of the fiber during imaging. We utilized a MMF 300 mm long with a 62.5 μm core for imaging \(10~\times ~10\) cm objects placed approximately at 20 cm from the fiber and the system could deal with a change in fiber bend of 50\(^\circ\) and range of movement of 8 cm. Objects from different classes were efficiently classified and reconstructed from the speckle measurements leveraging a Gaussian mixture variational autoencoder (GMVAE). The essence of our GMVAE is to learn a configuration-agnostic latent representation from measurements corresponding to different configurations of the fiber. The complexity of our method is much smaller than that of other works proposed for imaging through MMFs36 and scattering media33,34 and as a result the training time is reduced by around 90% for the same training data set. The results demonstrated through different experiments with and without wavefront shaping proved the efficiency of the proposed approach in reconstruction and classification of images for new configurations of the fiber. The good performance of our system is further validated on recorded videos of static and moving objects while dynamically bending the fiber, enabling reconstruction of approximately 5 frames per second. This novel approach paves the way for real-time, flexible and high-resolution imaging system for use in areas with very limited access.

Methods

Experimental design

The experimental setup, shown in Fig. 1, consists of a Q-switched laser at 532 nm with a pulse width of 700 ps and a repetition rate of 21 kHz (Teem Photonics SNG-100P-1x0). The laser is spatially shaped by a digital micro-mirror device (Vialux V-7000) (DMD) such that the far-field of the distal end of a 62.5 μm core graded index fiber with a numerical aperture (NA) of 0.275 (Thorlabs GIF625) has a desired spatial intensity pattern. Of particular note is the fact that the transmission matrix of a graded index fiber is more resistant to bending than that of a step index fiber. Given that our study aims to maximize the bend resistance of our imaging method, we have chosen to use a graded index fiber as our illumination fiber, in conjunction with a bend-agnostic reconstruction approach. Through calibration of the fiber in a particular configuration, this spatial pattern was selected to be a raster scanning spot as is described in the first experiment with wavefront shaping. As discussed in the second experiment without wavefront shaping, we also investigated the case where the holograms are not selected for any specific configuration of the fiber and, hence, the DMD masks were generated with a quasi-random algorithm. For imaging, a sample image, printed in grey-scale on white paper, is placed at the screen and the reflected light for each of M patterns generated by the DMD is collected by a second step index collection fiber, 400 μm core and 0.39 NA (Thorlabs FT400UMT), running parallel to the illumination fiber. The back-scattered light is recorded at the proximal end of the collection fiber by an AC-coupled avalanche photodiode (MenloSystems APD210) (APD) sampling at 2.5 Gs/s. The laser pulses are used a trigger for imaging and as such allow for time gating the signal from the collection fiber. In this way, only data corresponding to the distance of the sample from the fiber tip are considered and the remaining recording could be dismissed, hence reducing background illumination effects. After this temporal gating is performed, M speckle measurements remain for each image.

GMVAE training

The GMVAE architecture is explained in Supplementary Note 3. For both experiments, the training data set utilizes 48000 images of size \(64\times 64\) pixels from 8 classes of the fashion-MNIST data set (6000 images per class). We record the speckle patterns corresponding to \(M=4096\) random but fixed DMD patterns for \(L=11\) configurations of the fiber leading to different speckle matrices \(\textbf{A}_l\), where each speckle pattern of spatial resolution \(64\times 64\) is stretched as a row vector in \(\textbf{A}_l\) and l represents the fiber configuration. Then, we perform projections on the training images numerically to get the measurement vectors \(\varvec{y}\) as in Equation (1).

By expressing the function \(\mathscr {G}\) in a matrix form, Eq. (1) can be re-written as

where s accounts for the damping effect caused by the collection fiber and is fixed to 10 for the first experiment and 200 for the second experiment, and T represents the transpose operator. The vectors \(\varvec{w}\) and \(\varvec{b}\) are the white and black backgrounds recorded during the experiments. Finally, \(\overline{\textbf{A}\varvec{x}}\) is a normalized version of \(\textbf{A}\varvec{x}\) with values in the range [0,1]. We found that incorporating these two types of normalization in the training can mitigate the effects of the the perturbations of the APD and the laser and enhance both the reconstruction quality and the classification accuracy. Note that the standard deviation of the i.i.d. Gaussian noise \(\varvec{n}\) is fixed to 0.015.

Data availability

The datasets used and/or analyzed during the current study available from the corresponding author on reasonable request.

References

Psaltis, D. & Moser, C. Imaging with multimode fibers. Opt. Photonics News 27, 24–31 (2016).

Stellinga, D. et al. Time-of-flight 3d imaging through multimode optical fibers. Science 374, 1395–1399 (2021).

Čižmár, T. & Dholakia, K. Exploiting multimode waveguides for pure fibre-based imaging. Nat. Commun. 3, 1–9 (2012).

Bianchi, S. & Di Leonardo, R. A multi-mode fiber probe for holographic micromanipulation and microscopy. Lab. Chip 12, 635–639 (2012).

Plöschner, M., Tyc, T. & Čižmár, T. Seeing through chaos in multimode fibres. Nat. Photonics 9, 529–535 (2015).

Loterie, D. et al. Digital confocal microscopy through a multimode fiber. Opt. Express 23, 23845–23858 (2015).

Caravaca-Aguirre, A. M. & Piestun, R. Single multimode fiber endoscope. Opt. Express 25, 1656–1665 (2017).

Popoff, S. M. et al. Measuring the transmission matrix in optics: An approach to the study and control of light propagation in disordered media. Phys. Rev. Lett. 104, 100601 (2010).

Čižmár, T. & Dholakia, K. Shaping the light transmission through a multimode optical fibre: Complex transformation analysis and applications in biophotonics. Opt. Express 19, 18871–18884 (2011).

Carpenter, J., Eggleton, B. J. & Schröder, J. 110 x 110 optical mode transfer matrix inversion. Opt. Express 22, 96–101 (2014).

N’Gom, M., Norris, T. B., Michielssen, E. & Nadakuditi, R. R. Mode control in a multimode fiber through acquiring its transmission matrix from a reference-less optical system. Opt. Lett. 43, 419–422 (2018).

Li, S. et al. Compressively sampling the optical transmission matrix of a multimode fibre. Light: Sci. Appl. 10, 1–15 (2021).

Choi, Y. et al. Scanner-free and wide-field endoscopic imaging by using a single multimode optical fiber. Phys. Rev. Lett. 109, 203901 (2012).

Amitonova, L. V. & De Boer, J. F. Compressive imaging through a multimode fiber. Opt. Lett. 43, 5427–5430 (2018).

Caravaca-Aguirre, A. M. et al. Hybrid photoacoustic-fluorescence microendoscopy through a multimode fiber using speckle illumination. Apl Photonics 4, 096103 (2019).

Lan, M. et al. Robust compressive multimode fiber imaging against bending with enhanced depth of field. Opt. Express 27, 12957–12962 (2019).

Lan, M. et al. Averaging speckle patterns to improve the robustness of compressive multimode fiber imaging against fiber bend. Opt. Express 28, 13662–13669 (2020).

Caravaca-Aguirre, A. M., Niv, E., Conkey, D. B. & Piestun, R. Real-time resilient focusing through a bending multimode fiber. Opt. Express 21, 12881–12887 (2013).

Farahi, S., Ziegler, D., Papadopoulos, I. N., Psaltis, D. & Moser, C. Dynamic bending compensation while focusing through a multimode fiber. Opt. Express 21, 22504–22514 (2013).

Gu, R. Y., Mahalati, R. N. & Kahn, J. M. Design of flexible multi-mode fiber endoscope. Opt. Express 23, 26905–26918 (2015).

Li, S., Horsley, S. A., Tyc, T., Čižmár, T. & Phillips, D. B. Memory effect assisted imaging through multimode optical fibres. Nat. Commun. 12, 1–13 (2021).

Moran, O., Caramazza, P., Faccio, D. & Murray-Smith, R. Deep, complex, invertible networks for inversion of transmission effects in multimode optical fibres. Advances in Neural Information Processing Systems31 (2018).

Borhani, N., Kakkava, E., Moser, C. & Psaltis, D. Learning to see through multimode fibers. Optica 5, 960–966 (2018).

Rahmani, B., Loterie, D., Konstantinou, G., Psaltis, D. & Moser, C. Multimode optical fiber transmission with a deep learning network. Light Sci. Appl. 7, 1–11 (2018).

Turpin, A., Vishniakou, I. & d Seelig, J. Light scattering control in transmission and reflection with neural networks. Opt. Express 26, 30911–30929 (2018).

Kakkava, E. et al. Imaging through multimode fibers using deep learning: The effects of intensity versus holographic recording of the speckle pattern. Opt. Fiber Technol. 52, 101985 (2019).

Caramazza, P., Moran, O., Murray-Smith, R. & Faccio, D. Transmission of natural scene images through a multimode fibre. Nat. Commun. 10, 1–6 (2019).

Fan, P., Zhao, T. & Su, L. Deep learning the high variability and randomness inside multimode fibers. Opt. Express 27, 20241–20258 (2019).

Li, Y. et al. Image reconstruction using pre-trained autoencoder on multimode fiber imaging system. IEEE Photonics Technol. Lett. 32, 779–782 (2020).

Zhao, J. et al. High-fidelity imaging through multimode fibers via deep learning. J. Phys. Photonics 3, 015003 (2021).

Liu, Z. et al. All-fiber high-speed image detection enabled by deep learning. Nat. Commun. 13, 1–8 (2022).

Mitton, J. et al. Bessel equivariant networks for inversion of transmission effects in multi-mode optical fibres. Preprint at arXiv:2207.12849 (2022).

Li, Y., Xue, Y. & Tian, L. Deep speckle correlation: A deep learning approach toward scalable imaging through scattering media. Optica 5, 1181–1190 (2018).

Li, Y., Cheng, S., Xue, Y. & Tian, L. Displacement-agnostic coherent imaging through scatter with an interpretable deep neural network. Opt. Express 29, 2244–2257 (2021).

Starshynov, I., Turpin, A., Binner, P. & Faccio, D. Statistical dependencies beyond linear correlations in light scattered by disordered media. Phys. Rev. Res. 4, L022033 (2022).

Resisi, S., Popoff, S. M. & Bromberg, Y. Image transmission through a dynamically perturbed multimode fiber by deep learning. Laser Photonics Rev. 15, 2000553 (2021).

Kingma, D. & Welling, M. Auto-encoding variational bayes. In ICLR (2014).

Kingma, D. P. & Welling, M. An introduction to variational autoencoders. Found. Trends Mach. Learn. 12, 307–392 (2019).

Tonolini, F., Radford, J., Turpin, A., Faccio, D. & Murray-Smith, R. Variational inference for computational imaging inverse problems. J. Mach. Learn. Res. 21, 7285–7330 (2020).

Shu, R. Gaussian mixture vae: Lessons in variational inference, generative models, and deep nets.

Figueroa, J. A. Semi-supervised learning using deep generative models and auxiliary tasks. In NeurIPS (2019).

Collier, M. & Urdiales, H. Scalable deep unsupervised clustering with concrete gmvaes. Preprint at arXiv:1909.08994 (2019).

Charakorn, R. et al. An explicit local and global representation disentanglement framework with applications in deep clustering and unsupervised object detection. Preprint at arXiv:2001.08957 (2020).

Varolgüneş, Y. B., Bereau, T. & Rudzinski, J. F. Interpretable embeddings from molecular simulations using gaussian mixture variational autoencoders. Mach. Learn. Sci. Technol. 1, 015012 (2020).

Franceschi, J.-Y., Delasalles, E., Chen, M., Lamprier, S. & Gallinari, P. Stochastic latent residual video prediction. In International Conference on Machine Learning, 3233–3246 (PMLR, 2020).

Wu, B., Nair, S., Martin-Martin, R., Fei-Fei, L. & Finn, C. Greedy hierarchical variational autoencoders for large-scale video prediction. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 2318–2328 (2021).

Wang, L., Tan, H., Zhou, F., Zuo, W. & Sun, P. Unsupervised anomaly video detection via a double-flow convlstm variational autoencoder. IEEE Access 10, 44278–44289 (2022).

Acknowledgements

This work was supported by the Royal Academy of Engineering under the Research Fellowship scheme RF201617/16/31 and by the Engineering and Physical Sciences Research Council (EPSRC) Grant number EP/T00097X/1.

Author information

Authors and Affiliations

Contributions

All authors (A.A., S.P.M., Y.A., M.J.P., and S.M.) contributed to the the methodology and the writing of the manuscript. A.A. and S.P.M. conducted the experiments, tests and investigation. Y.A., M.J.P., and S.M. provided supervision throughout the project.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Supplementary Video 2.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abdulaziz, A., Mekhail, S.P., Altmann, Y. et al. Robust real-time imaging through flexible multimode fibers. Sci Rep 13, 11371 (2023). https://doi.org/10.1038/s41598-023-38480-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-023-38480-4

This article is cited by

-

Model-free estimation of the Cramér–Rao bound for deep learning microscopy in complex media

Nature Photonics (2025)