Abstract

Recognition memory research has identified several electrophysiological indicators of successful memory retrieval, known as old-new effects. These effects have been observed in different sensory domains using various stimulus types, but little attention has been given to their similarity or distinctiveness and the underlying processes they may share. Here, a data-driven approach was taken to investigate the temporal evolution of shared information content between different memory conditions using openly available EEG data from healthy human participants of both sexes, taken from six experiments. A test dataset involving personally highly familiar and unfamiliar faces was used. The results show that neural signals of recognition memory for face stimuli were highly generalized starting from around 200 ms following stimulus onset. When training was performed on non-face datasets, an early (around 200–300 ms) to late (post-400 ms) differentiation was observed over most regions of interest. Successful cross-classification for non-face stimuli (music and object/scene associations) was most pronounced in the late period. Additionally, a striking dissociation was observed between familiar and remembered objects, with shared signals present only in the late window for correctly remembered objects, while cross-classification for familiar objects was successful in the early period as well. These findings suggest that late neural signals of memory retrieval generalize across sensory modalities and stimulus types, and the dissociation between familiar and remembered objects may provide insight into the underlying processes.

Introduction

Decades of research in the field of recognition memory have identified a number of electrophysiological indicators of successful memory retrieval, known as old-new effects1. These effects have been studied extensively in the context of familiarity and recollection2,3,4, and have been observed across a range of sensory domains and stimulus types5,6. While the similarity and distinctiveness of these electrophysiological signals, and the underlying processes that they reflect, have received attention7,8, there have been few attempts to determine whether these findings can be generalized across experiments.

In the literature on recognition memory, two key electrophysiological components have been identified: the early mid-frontal effect (FN400) and the late parietal component (LPC). The FN400 typically appears around 300–500 ms after stimulus presentation, predominantly over the frontal regions of the brain, while the LPC is typically observed as a broad positive deflection between 400 and 800 ms after stimulus onset. Dual process theories of memory retrieval, which posit different neuro-cognitive processes underlying familiarity and recollection, find support in the behavior of these two components under different experimental manipulations. The earlier FN400 has been linked to familiarity, a fast and automatic process associated with “just knowing” a stimulus even in the absence of contextual information. In contrast, the LPC has been associated with recollection, a slower and more controlled process involving the recall of episodic information about previous encounters with the stimulus4,9.

Face perception has often been studied as a model system for investigating the organizational principles of the brain10, but faces are also a unique category of visual stimulus due to their high biological, personal and social importance. This importance is reflected in the prioritization of face detection over other types of visual stimuli11,12,13, as well as the preferential processing of familiar faces over unfamiliar ones14.

Rapid saccades toward familiar faces have been observed as early as 180 ms after stimulus presentation11, and early EEG responses, such as the N170, have in some cases been shown to be modulated by face familiarity, depending on the context of the task15. However, consistent effects of face familiarity on neural activity do not seem to emerge until after 200 ms post-stimulus. The earliest event-related potential (ERP) component that has been reliably shown to be modulated by face familiarity is the N25016, a parieto-occipital deflection occurring between 230 and 350 ms, which is thought to reflect the visual recognition of a known face17. The sustained familiarity effect, peaking between 400 and 600 ms, is theorized to reflect activity in the “familiarity hub”, where structural information about a face is integrated with additional semantic and affective information18.

The interpretation and synthesis of existing findings on the neural basis of recognition memory are limited by the considerable differences in timing and topographical distribution across studies. This means that signals related to memory processes that do not conform to the canonical configurations described above may be overlooked8,19. Additionally, the averaged waveforms elicited by the presentation of stimuli are likely to reflect a multitude of temporally overlapping but separate cognitive processes20, making it difficult to disentangle the underlying neural mechanisms21.

In this study, instead of focusing on the presence or absence of these signals of successful retrieval in separate experiments, a data-driven approach was taken to probe how shared information content in neural signals between different memory task conditions unfolds over time. A cross-experiment multivariate cross-classification (MVCC22) analysis was performed on openly available EEG data from ca. 120 participants of both sexes across six experiments23,24,25,26. This cross-dataset decoding procedure tested whether memory-related neural signals generalize across different experimental conditions, thereby probing common underlying mechanisms beyond task- and stimulus-specific contexts. The test dataset for this analysis consisted of an experiment involving personally highly familiar and unfamiliar faces27, and classifier training was conducted using data from two additional face memory experiments28,29, an experiment presenting short segments of familiar and unfamiliar music30, a study investigating remembered and forgotten object-scene associations31, and an object familiarity/recollection study19.

In multivariate pattern analysis (MVPA), a machine learning classifier is trained on a set of features (e.g., voltage amplitudes from multiple channels) to find a decision boundary that best separates the categories of interest. This trained classifier is then used to categorize data that was not part of the training set. Higher-than-chance classification indicates that the training patterns contain information that separates the categories of interest, and that this information is present in both the training and the test datasets22,32,33. One major advantage of MVPA over other methods is its flexibility in terms of what data can be used for training and testing. For example, cross-classification can be performed across different time points, regions of interest, experimental conditions, participants, or datasets from different experiments. Furthermore, pre-existing labels in the training and test datasets can be easily modified, allowing for the evaluation of cross-classification performance across different tasks and domains.

Previous studies using cross-dataset classification have found that neural signals for familiarity with face stimuli generalize across experiments that use different familiarization methods23, and that successful cross-classification can be demonstrated even across markedly different task conditions25,26. These results suggest that for faces, a general familiarity signal exists that is shared across participants, stimuli, and mode of acquisition. The current study aims to explore the generalizability of this phenomenon by investigating the neural dynamics of cross-classification involving datasets for various stimulus types and sensory modalities. This allows for probing whether similar generalizable signals exist for recognition memory across different stimulus types and sensory modalities.

Replicating previous findings23,24,26, neural signals for face stimuli generalized remarkably well starting from around 200 ms after stimulus onset. When training was performed on non-face datasets, an early (200–300 ms) and a late (post-400 ms) differentiation was observed over most regions of interest. Successful cross-classification for non-face (music and object/scene associations) datasets was more pronounced in the late period. Finally, a clear distinction was observed between familiar and remembered objects, with shared patterns only present for correctly remembered objects in the later time frame, while familiar objects also had shared patterns in the early period. Furthermore, classifiers trained on signals related to subjective familiarity and recollection (such as incorrect responses and false alarms) were effective in identifying genuine face-familiarity.

Methods

Test dataset

This analysis is based on the availability of openly accessible EEG datasets, which originated from different laboratories and were measured using different EEG devices and setups. In addition to the varying stimulus types, there were multiple other factors that varied across the experiments included in this study, such as the number of trials, repetition of unique stimuli, experimental task, and the uncertainty of the familiarity decisions (see also the Further considerations section of this paper). These varying and uncontrolled parameters can influence the signal-to-noise ratio and, as a consequence, the separability of the signals for the categories of interest. Previous research has shown that cross-classification performance is higher in the low-to-high signal-to-noise ratio direction (a phenomenon known as decoding direction asymmetry), and successful cross-classification supports the presence of shared information content in the signals34.

Therefore, it was decided that data from a single experiment, the Incidental Recognition study by Wiese et al.27, would serve as the “target” dataset for this analysis. There are several reasons for this choice. First, the sample size in this study was n = 22, which is one of the highest among the available studies. Second, the stimulus set in this study consisted of trial-unique images of highly personally familiar and unfamiliar faces, which were pre-experimentally familiar, salient, socially and emotionally relevant, and unambiguously categorizable as known or unknown. Third, the stimulus set was also participant-unique, which reduces the likelihood that signals for lower-level stimulus properties will contribute to the results of cross-participant classification analyses. Fourth, the images were presented for an extended period (1 s) that allowed for the testing of a longer time window. Finally, the trials of interest did not require any response from the participants, which means that neural patterns related to response preparation, execution, and monitoring should not contribute to the signal. The following section provides a brief overview of the experimental procedures.

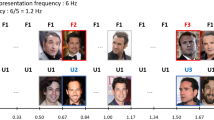

Personally familiar and unfamiliar faces (Wiese et al.27). The stimuli consisted of face photographs of unknown and highly personally familiar individuals (e.g., close friends, relatives). The 50 familiar and 50 unfamiliar face images were trial-unique, luminance-adjusted, and presented in grayscale for 1000 ms on a gray background. The familiar and unfamiliar identities were different for each participant. To keep participants engaged, a butterfly detection task was used; otherwise, the experiment did not require any responses to the face stimuli. Trials were separated by a fixation cross, with a presentation duration of 1500–2500 ms. In addition to the trial-unique, familiar/unfamiliar stimuli, an additional familiar and an unfamiliar identity were presented, each with a single image repeated 50 times. These trials were not analyzed in the current study.

Training datasets

Famous and unfamiliar faces (Wakeman and Henson28). This dataset contains data from 15 participants. The stimuli were greyscale photographs of famous (celebrity) and unfamiliar individuals of both sexes. The start of each trial was indicated by a fixation cross (400–600 ms), and the inter-stimulus interval was set to 1700 ms. The experiment included 300 famous and 300 unfamiliar trials, which were presented for an interval that varied between 800 and 1000 ms. In addition to famous and unfamiliar faces, scrambled face images were also presented during the experiment; these trials were not included in the current analysis. Participants were required to make symmetry judgments for the images. For the purposes of the current study, familiar and unfamiliar trials were selected for each participant based on their responses in a post-experiment familiarity questionnaire.

An experimentally familiarized face and unknown faces (Sommer et al.29). This dataset contains data from 15 participants. The experimental design followed the Jane/Joe paradigm described in Tanaka et al.35. Volunteers were familiarized with a target face (matched to their gender, “Joe” or “Jane”) for 10–60 s. The same male face was used for the “Joe” condition, and the same female face was used for the “Jane” condition, across all participants. The 10 unknown faces were also matched to the participant’s gender. Stimuli were presented for 500 ms, followed by a 500 ms blank period. Finally, a cue prompted the participant to indicate whether the target face was presented. A 500 ms presentation of a fixation cross separated the trials. Each face image was presented twice in 36 experimental blocks (for a total of 72 presentations of the familiarized face and 720 presentations of unfamiliar faces). Classifiers were trained on the trials presenting the experimentally familiarized image of a person (“familiar”) and images of unfamiliar persons (“unfamiliar”). The participants’ own face was also part of the stimulus set, but these trials were not included in the current analysis.

Familiar and unfamiliar music (Jagiello et al.30). This dataset contains data from 10 participants. Volunteers passively listened to short, 750 ms long segments of familiar and unfamiliar (personally relevant and acoustically matched unknown) songs. The experiment was divided into 10 blocks, each with 100 familiar and 100 unfamiliar snippets, presented randomly, with an ISI between 1000 and 1500 ms. The participants were not required to make a response, and were instructed to maintain continuous focus on a white cross set against a grey background. Only averaged ERP data in the two conditions was openly available for this experiment. Classifiers were trained on the trials with personally familiar and relevant music (“familiar”) and unfamiliar music (“unfamiliar”).

Object-scene associations (Treder et al.31). The dataset for this study contains data from 18 participants. The experiment consisted of eight runs, each of which was divided into pre-encoding delay, encoding, post-encoding delay, and retrieval blocks. During the delay blocks, participants were instructed to perform an even–odd decision task on numbers between 0 and 100. The encoding blocks consisted of 32 trials, each starting with a fixation cross presented for 1500 ± 100 ms. A unique, randomly selected object-scene combination was then displayed until the participant pressed a button (minimum 2500 ms, maximum 4000 ms). Participants were asked to make a plausibility decision for each combination (i.e., how likely it is for the combination to appear in real life). The retrieval blocks also consisted of 32 trials, starting with a fixation cross presented for 1500 ± 100 ms. In eight mini-blocks, either an object or a scene was presented as a cue (until the button was pressed, minimum 2500 ms, maximum 6000 ms). Participants were asked to indicate whether they remembered the corresponding associated pair from the encoding phase (e.g., a scene for an object cue, or an object for a scene cue). “Remember” responses were to be given if the memory was vivid enough to provide a detailed description of the associated item; this was followed up by an instruction to give a description of the target in 20% of the trials. For the purposes of this analysis, “remember” trials were relabeled as “familiar” and “forgot” trials were relabeled as “unfamiliar” to investigate the neural correlates of successful memory retrieval.

Object familiarity and recollection (Dimsdale-Zucker et al.19). The dataset for this study contains data from 38 participants. The experiment included an encoding and a retrieval phase. During the encoding phase, 180 images of objects from the Bank of Standardized Stimuli (BOSS) were shown in color for 250 ms, each accompanied by an encoding question (“Would you find this item in a supermarket/convenience store?” or “Would this item fit in a fridge/bathtub?”). During the retrieval phase, one of the 180 previously presented images or 90 novel images was shown for 700 ms, followed by a “think” cue presented for 1700 ms. During this time, participants were instructed to withhold their responses in order to minimize movement-induced artifacts. Next, the object was shown again, and participants were asked to make a self-paced “remembered”, “familiar”, or “new” decision, as well as a confidence estimation on a scale from 1 (highly confident) to 4 (not at all confident). Finally, participants were asked to make a source memory judgement (fridge, supermarket, bathtub, convenience store). Trials were separated by a variable interstimulus interval of approximately 2 s. The presentation of stimuli and the order of the familiarity and source memory options were randomized for each participant. A 45–60-min delay period separated the encoding and retrieval phases. For the purposes of this study, “old” items correctly identified as “familiar” or “remembered” were tested against correctly identified “new” items in two separate analyses. Additional trial selection and relabeling was performed to explore the neural signatures of false alarms and forgotten items, and to probe the similarity of neural signals for familiarity and recollection to those of familiar face processing.

For an overview of the datasets included in the analyses, see Supplementary Information, Tables S1A and B.

Analyses

The analysis pipeline (see Fig. 1) was based on procedures described in Dalski et al.23. Only trials with correct responses were included in the main analyses (where applicable). Linear discriminant analysis (LDA) classifiers, as implemented in scikit-learn, were used for all classification analyses. Trial counts were balanced at both training and testing by under-sampling to the minimum trial count in the classes of interest, in each participant. Within-experiment, leave-one-subject-out analyses were implemented on the test experiment to characterize the temporal evolution of the information content present for personally familiar and unfamiliar stimuli in that dataset. In a leave-one-subject-out procedure, data from one participant is excluded from the dataset, and the model is trained using the remaining data. Subsequently, the model’s performance is evaluated using the data from the excluded participant. This procedure is repeated for each participant in the dataset, so that each participant’s data serves as the test set exactly once. The mean classification accuracies for each participant are then combined to form a dataset for statistical analysis.
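The leave-one-subject-out procedure and the per-participant trial balancing described above can be sketched as follows. This is a minimal illustration on synthetic data at a single time point, not the authors' code; the helper names `balance_trials` and `loso_accuracy`, the dictionary layout, and the simulated effect size are assumptions made for the example.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def balance_trials(X, y, rng):
    """Under-sample every class to the trial count of the smallest class."""
    classes, counts = np.unique(y, return_counts=True)
    n_keep = counts.min()
    keep = np.sort(np.concatenate([
        rng.choice(np.flatnonzero(y == c), size=n_keep, replace=False)
        for c in classes
    ]))
    return X[keep], y[keep]


def loso_accuracy(data, labels):
    """Leave-one-subject-out accuracy.

    data[p]   : (n_trials, n_channels) array for participant p (one time point)
    labels[p] : (n_trials,) array of 0 (unfamiliar) / 1 (familiar)
    """
    scores = {}
    for held_out in data:
        train_ids = [p for p in data if p != held_out]
        X_train = np.concatenate([data[p] for p in train_ids])
        y_train = np.concatenate([labels[p] for p in train_ids])
        clf = LinearDiscriminantAnalysis().fit(X_train, y_train)
        scores[held_out] = clf.score(data[held_out], labels[held_out])
    return scores


# Synthetic example (assumed sizes): 6 participants, 8 channels, unequal class counts.
rng = np.random.default_rng(0)
data, labels = {}, {}
for p in range(6):
    y = np.array([1] * 50 + [0] * 40)                     # 50 familiar, 40 unfamiliar
    X = rng.normal(size=(y.size, 8)) + 1.5 * y[:, None]   # class-dependent offset
    data[p], labels[p] = balance_trials(X, y, rng)

scores = loso_accuracy(data, labels)
print({p: round(s, 2) for p, s in scores.items()})
```

Running the same loop once per time point would give a participant × time accuracy array of the kind that is later entered into the statistical tests.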

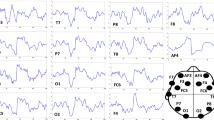

Analysis pipeline for time-resolved cross-classification. (A) EEG data (trials × times × channels) in each training dataset is aggregated across participants. (B) Linear discriminant analysis (LDA) classifiers are trained on data at each time point. (C) The fitted classifiers are then used to predict category membership in the test dataset, for each participant separately. (D) The classifier performance metrics are then aggregated to generate the sample-level cross-classification time course for each test dataset.

Multivariate cross-classification analyses (see Fig. 1) were conducted across experiments to probe the temporal dynamics of shared neural patterns related to memory recall, independent of stimulus type. Time-resolved MVPA, generalization across time, and sensor-space spatio-temporal searchlight analyses were performed. For each training dataset, linear discriminant analysis (LDA) classifiers were trained on aggregated data from all participants at each time point. These fitted classifiers were then used to predict stimulus familiarity in the test dataset for each trial in each participant at the corresponding time point. The mean classification accuracy scores for each participant in the test dataset are then combined to form a dataset for statistical analysis.
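As a rough sketch of the cross-experiment step, the snippet below trains one LDA classifier per time point on pooled training data and scores it on each test participant at the same time point. The function name `cross_classify`, the array sizes, and the simulated signal onset are illustrative assumptions; the array layout (trials × times × channels) follows the pipeline figure.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def cross_classify(X_train, y_train, test_sets):
    """Train at each time point on the pooled training experiment and
    test on every participant of the test experiment at the same time point.

    X_train   : (n_trials, n_times, n_channels), pooled over training participants
    test_sets : list of (X_test, y_test), one entry per test participant
    returns   : (n_test_participants, n_times) accuracy array
    """
    n_times = X_train.shape[1]
    acc = np.zeros((len(test_sets), n_times))
    for t in range(n_times):
        clf = LinearDiscriminantAnalysis().fit(X_train[:, t, :], y_train)
        for i, (X_test, y_test) in enumerate(test_sets):
            acc[i, t] = clf.score(X_test[:, t, :], y_test)
    return acc


def simulate(n_trials, rng, n_times=10, n_channels=6, onset=5):
    """Synthetic trials whose class difference appears from `onset` onward."""
    y = np.repeat([0, 1], n_trials // 2)
    X = rng.normal(size=(n_trials, n_times, n_channels))
    X[:, onset:, :] += 1.5 * y[:, None, None]
    return X, y


rng = np.random.default_rng(1)
X_train, y_train = simulate(400, rng)                 # pooled training experiment
test_sets = [simulate(100, rng) for _ in range(5)]    # 5 test participants
acc = cross_classify(X_train, y_train, test_sets)
print(acc.mean(axis=0).round(2))                      # sample-level time course
```

Averaging `acc` over participants gives the sample-level time course, while the per-participant rows are what a group-level test against chance would operate on.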

Time-resolved multivariate pattern analysis

Time-resolved cross-classification was carried out on data from all channels, as well as pre-defined regions of interest. The ROIs were based on Ambrus et al.36,37 and Dalski et al.23. Six scalp locations along the median (left and right) and coronal (anterior, center, and posterior) planes were defined and used for training and testing in separate analyses. Cross-classification was performed on common channels in the training and test datasets (see Supplementary Information, Figure S1). EEG data for trials in both the training and test datasets were synchronized with the onset of stimulus presentation. Time-resolved analyses included data from 200 ms before to 1000 ms after the stimulus presentation, aligning with the time window of stimulus presentation in the test experiment.
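Because the experiments were recorded with different montages, training and test arrays have to be restricted to shared channels in a matching order before a classifier fitted on one can be applied to the other. The sketch below shows one way to do this; the channel lists, the ROI membership, and the helper names `align_channels` and `pick_roi` are hypothetical and do not reproduce the ROI definitions used in the study.

```python
import numpy as np


def align_channels(X_train, train_names, X_test, test_names):
    """Restrict both datasets to their shared channels, in the same order.

    X_* : (n_trials, n_times, n_channels) arrays; *_names : channel labels.
    """
    test_set = set(test_names)
    common = [ch for ch in train_names if ch in test_set]
    train_idx = [train_names.index(ch) for ch in common]
    test_idx = [test_names.index(ch) for ch in common]
    return X_train[:, :, train_idx], X_test[:, :, test_idx], common


def pick_roi(X, names, roi_channels):
    """Keep only the channels listed for one region of interest."""
    idx = [names.index(ch) for ch in roi_channels if ch in names]
    return X[:, :, idx]


# Hypothetical montages and ROI membership, for illustration only.
train_names = ["Fz", "Cz", "Pz", "O1", "T7"]
test_names = ["Pz", "Fz", "Oz", "Cz"]
X_train = np.arange(2 * 3 * 5, dtype=float).reshape(2, 3, 5)
X_test = np.arange(2 * 3 * 4, dtype=float).reshape(2, 3, 4)

Xa, Xb, common = align_channels(X_train, train_names, X_test, test_names)
roi = pick_roi(Xa, common, ["Cz", "Pz"])
print(common, Xa.shape, Xb.shape, roi.shape)
```

Aligning by channel name rather than by position matters here, since the same column index can refer to different electrodes in different recordings.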

Spatio-temporal searchlight

All sensors were systematically tested separately by training and testing on data originating from the given channel and adjacent electrodes. For each channel and its neighbors, a time-resolved analysis on all time points was conducted.
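A sensor-space searchlight of this kind can be sketched as a loop over channels, each time restricting the features to the channel and its neighbours. The adjacency dictionary below (six sensors in a line) and the simulated data are assumptions for illustration; with real recordings the adjacency would be derived from the sensor layout, for example with MNE-Python's `mne.channels.find_ch_adjacency`.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def searchlight(X_train, y_train, X_test, y_test, neighbours):
    """Time-resolved cross-classification restricted to each channel and
    its neighbours. Arrays are (n_trials, n_times, n_channels);
    neighbours[ch] lists the channel indices adjacent to channel ch.
    Returns an (n_channels, n_times) accuracy map."""
    n_times, n_channels = X_train.shape[1], X_train.shape[2]
    acc = np.zeros((n_channels, n_times))
    for ch in range(n_channels):
        picks = [ch] + list(neighbours[ch])
        for t in range(n_times):
            clf = LinearDiscriminantAnalysis()
            clf.fit(X_train[:, t][:, picks], y_train)
            acc[ch, t] = clf.score(X_test[:, t][:, picks], y_test)
    return acc


def simulate(n_trials, rng, n_times=4, n_channels=6):
    """Class difference only on channel 0 and only at the last two time points."""
    y = np.repeat([0, 1], n_trials // 2)
    X = rng.normal(size=(n_trials, n_times, n_channels))
    X[:, 2:, 0] += 3.0 * y[:, None]
    return X, y


# Hypothetical adjacency: six sensors arranged in a line.
neighbours = {0: [1], 1: [0, 2], 2: [1, 3], 3: [2, 4], 4: [3, 5], 5: [4]}
rng = np.random.default_rng(2)
X_train, y_train = simulate(400, rng)
X_test, y_test = simulate(200, rng)
acc_map = searchlight(X_train, y_train, X_test, y_test, neighbours)
print(acc_map.round(2))
```

In the simulated example only neighbourhoods that include channel 0 decode above chance, which is the kind of spatial specificity the searchlight maps are meant to reveal.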

Generalization across time

Temporal generalization analysis was used to investigate the temporal organization of information-processing stages. For this, the classifiers were fitted on data from each time point in the training experiments; these classifiers were then tested on the data at every timepoint in the test experiment. The results were organized in a cross-temporal (training-times × testing-times) classification accuracy matrix. Similar information-processing may be indicated where classifiers successfully generalize from one timepoint to another across the experiments32.
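The cross-temporal matrix can be illustrated with the following sketch, in which a classifier fitted at each training time point is scored at every test time point. The function name `temporal_generalization`, the array sizes, and the simulated signal windows are assumptions; the example deliberately uses a different number of time points in the two experiments, since training and test epochs need not have the same length.

```python
import numpy as np
from sklearn.discriminant_analysis import LinearDiscriminantAnalysis


def temporal_generalization(X_train, y_train, X_test, y_test):
    """Fit one classifier per training time point and score it at every
    test time point. Arrays are (n_trials, n_times, n_channels); the two
    experiments may have different numbers of time points.
    Returns a (n_train_times, n_test_times) accuracy matrix."""
    n_train_t, n_test_t = X_train.shape[1], X_test.shape[1]
    acc = np.zeros((n_train_t, n_test_t))
    for i in range(n_train_t):
        clf = LinearDiscriminantAnalysis().fit(X_train[:, i, :], y_train)
        for j in range(n_test_t):
            acc[i, j] = clf.score(X_test[:, j, :], y_test)
    return acc


def simulate(n_trials, n_times, window, rng, n_channels=6):
    """One stable class pattern, present only inside `window` (start, stop)."""
    y = np.repeat([0, 1], n_trials // 2)
    X = rng.normal(size=(n_trials, n_times, n_channels))
    X[:, window[0]:window[1], :] += 1.5 * y[:, None, None]
    return X, y


rng = np.random.default_rng(3)
X_train, y_train = simulate(400, n_times=12, window=(4, 10), rng=rng)
X_test, y_test = simulate(200, n_times=8, window=(3, 8), rng=rng)
tg = temporal_generalization(X_train, y_train, X_test, y_test)
print(tg.round(1))
```

A single sustained pattern, as simulated here, produces a rectangular block of above-chance accuracy, whereas a chain of distinct processing stages would concentrate accuracy along the diagonal.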

Statistical testing

The analyses were conducted on common-average-referenced, baseline-corrected (−200 to 0 ms), and down-sampled (100 Hz) data23,37. No further processing steps were applied, retaining all available data to ensure that the analyses remain robust and data-driven. As classifiers effectively suppress noise and artefacts33,38, this approach avoids potential loss of informative signals and maintains statistical power39. A moving average of 30 ms (3 consecutive time points) was applied to all decoding accuracy data at the participant level23,36. For ROI-based time-resolved analyses, classification accuracies were entered into two-tailed, one-sample cluster permutation tests (mne.stats.permutation_cluster_1samp_test, with 10,000 iterations using random sign flips) against chance (50%). For the temporal generalization and searchlight analyses, two-tailed spatio-temporal cluster permutation tests (mne.stats.spatio_temporal_cluster_1samp_test) were used against chance level (50%), with 10,000 iterations (using random sign flips)40.
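To make the logic of the smoothing and the sign-flip cluster test concrete, the following is a simplified NumPy re-implementation for a one-dimensional time course. It is an illustrative sketch, not MNE-Python's implementation and not the authors' code: the centred window with edge padding, the fixed cluster-forming threshold of 2.08 (approximately the two-tailed 5% critical t value for 21 degrees of freedom), the use of summed t values as cluster mass, and all function names are assumptions.

```python
import numpy as np


def moving_average(acc, width=3):
    """Centred moving average along time for a (n_participants, n_times) array.
    Edges are padded by repeating the first/last value (an assumption)."""
    half = width // 2
    padded = np.pad(acc, ((0, 0), (half, half)), mode="edge")
    kernel = np.ones(width) / width
    return np.stack([np.convolve(row, kernel, mode="valid") for row in padded])


def t_values(X):
    """One-sample t statistic at each time point."""
    return X.mean(axis=0) / (X.std(axis=0, ddof=1) / np.sqrt(X.shape[0]))


def find_clusters(t, threshold):
    """Runs of adjacent time points with t above +threshold or below -threshold.
    Returns a list of (start, stop, summed t) with stop exclusive."""
    clusters = []
    for sign in (1, -1):
        flags = np.append(sign * t > threshold, False)
        start = None
        for i, flag in enumerate(flags):
            if flag and start is None:
                start = i
            elif not flag and start is not None:
                clusters.append((start, i, t[start:i].sum()))
                start = None
    return clusters


def cluster_sign_flip_test(acc, chance=0.5, threshold=2.08, n_perm=1000, seed=0):
    """Two-tailed cluster test against chance using random sign flips.
    Returns (start, stop, p) for every observed cluster."""
    rng = np.random.default_rng(seed)
    X = acc - chance
    observed = find_clusters(t_values(X), threshold)
    null_max = np.zeros(n_perm)
    for k in range(n_perm):
        flips = rng.choice([-1.0, 1.0], size=(X.shape[0], 1))  # one sign per participant
        masses = [abs(m) for _, _, m in find_clusters(t_values(X * flips), threshold)]
        null_max[k] = max(masses, default=0.0)
    return [(start, stop, (1 + np.sum(null_max >= abs(m))) / (n_perm + 1))
            for start, stop, m in observed]


# Synthetic accuracies: 22 participants, 40 time points, effect at points 20-29.
rng = np.random.default_rng(4)
acc = 0.5 + rng.normal(scale=0.05, size=(22, 40))
acc[:, 20:30] += 0.10
smoothed = moving_average(acc)
results = cluster_sign_flip_test(smoothed, n_perm=500)
print(results)
```

Comparing each observed cluster with the distribution of the largest cluster found under random sign flips is what controls the family-wise error rate across time points; the spatio-temporal variant extends the same idea to clusters that also span neighbouring sensors or training times.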

Statistical analyses were conducted using Python, MNE-Python41, scikit-learn42 and SciPy43. Data and code are available at https://osf.io/qcr2g/.

Ethics statement

The study was approved by the Bournemouth University Research Ethics committee (ID 46391).

Results

Characterization of the test dataset

To characterize the evolution of the information content in the test dataset, within-experiment, leave-one-subject-out (LOSO) classification analyses were conducted on the personally familiar and unfamiliar faces experiment27. The time-resolved classification analysis revealed strong, sustained clusters beginning around 200 ms in all regions of interest (cluster p < 0.0001, Fig. 2A, see Supplementary Table 1A). Likewise, the searchlight analysis yielded a single cluster encompassing all sensors (cluster p < 0.0001, Supplementary Table 5A). Temporal generalization analyses also showed sustained effects starting around 200 ms (cluster p-values < 0.0001, Fig. 2B, see Supplementary Table 3A).

Familiarity classification in the test dataset. Within-experiment, leave-one-subject-out classification for the personally familiar and unfamiliar faces (Wiese et al.27) experiment. (A) Time-resolved classification over all electrodes and pre-defined regions of interest. Shaded regions denote significant clusters (two-sided cluster permutation tests, p < 0.05). For detailed statistics, see Supplementary Table 1A. Spatio-temporal searchlight results are shown as scalp maps, with classification accuracy scores averaged in 100 ms steps. Regions forming part of positive clusters (p < 0.05) are marked. Statistics calculated using spatio-temporal cluster permutation tests. (B) Generalization across time. Two-sided cluster permutation test (p < 0.05). RA/LA right/left anterior, RC/LC right/left central, RP/LP right/left posterior.

Cross-experiment classification

Cross-experiment time-resolved classification over all electrodes yielded significant effects for all six datasets (Fig. 3, Supplementary Information Figure S2). Sustained, robust effects were observed from ca. 200 ms for both face-familiarity datasets (experimentally familiarized faces, Fig. 3A: 200–340 ms, cluster p = 0.013, 370–800 ms, cluster p < 0.0001; famous faces, Fig. 3B: 220–990 ms, cluster p = 0.0002), while for other stimulus types, successful cross-classification was not indicated before 300 ms post-stimulus onset (familiar music, Fig. 3C: 300–890 ms, cluster p = 0.0001, 920–970 ms, cluster p = 0.035; object-scene associations, Fig. 3D: 330–630 ms, cluster p < 0.0001; remembered objects, Fig. 3F: 410–630 ms, cluster p < 0.0001). Interestingly, the exception to this was the case of familiar objects (Fig. 3E), where the start of the significant cluster (200–620 ms, cluster p < 0.0001) coincided with that of the leave-one-subject-out classification in the test dataset (see also Supplementary Information 1).

Time-resolved cross-experiment classification accuracies over all electrodes and pre-defined regions of interest. Classifier training was performed on EEG data from a range of memory tasks, and classification accuracy was tested on EEG signals for highly personally familiar and unfamiliar faces (Wiese et al.). For comparison, the results of the leave-one-subject-out classification analysis for this test dataset are overlaid in black. Left. Face memory. Classifiers trained on EEG data for an experimentally familiarized face and a set of unfamiliar faces (Sommer et al., A, dark brown) and faces of pre-experimentally familiar celebrities and unknown faces (Wakeman and Henson, B, light brown). Middle. Non-face memory. Classifiers trained on EEG data for short segments of pre-experimentally familiar and unfamiliar music (Jagiello et al., C, green) and experimentally learned object/scene associations (Treder et al., D, purple). Significant clusters emerged most prominently in the late, post-300 ms time window. For music stimuli, the most consistent effect was observed over the right central ROI, while for scene-object associations, frontal and central regions also yielded sustained significant clusters. Right. Objects, familiar and remembered. Classifiers trained on EEG data for images of objects (Dimsdale-Zucker et al.) participants indicated as familiar (E, red) or remembered (F, blue). Significant clusters for both conditions included the late, ca. 400–600 ms window, while the early, ca. 200–300 ms window was only flagged in the ‘familiar’ condition. Statistics were calculated using two-sided cluster permutation tests (p < 0.05), shaded regions denote significant clusters. The end of the stimulus presentation window is marked by vertical dotted lines. For detailed statistics, see Supplementary Table 1. Spatio-temporal searchlight results are shown as scalp maps, with classification accuracy scores averaged in 100 ms steps. Regions forming part of positive clusters are marked. Statistics were calculated using spatio-temporal cluster permutation tests. For detailed results, see Supplementary Table 5. RA/LA right/left anterior, RC/LC right/left central, RP/LP right/left posterior.

The results of the spatio-temporal searchlight analyses yielded sustained effects that encompassed the majority of the sensors, peaking between 460 and 560 ms. Training on experimentally familiarized faces yielded a positive (190–850 ms, cluster p < 0.0001) and a negative (690–990 ms, cluster p = 0.023) cluster, while a single, sustained (190–990 ms, cluster p < 0.0001) cluster was seen in the case of famous faces. Music stimuli and object-scene associations both yielded sustained positive clusters (music: 270–990 ms, cluster p < 0.0001, object-scene associations: 200–990 ms, cluster p < 0.0001). A robust early positive cluster emerged for familiar objects (190–660 ms, cluster p < 0.0001) which was followed by a later negative cluster (560–990 ms, cluster p = 0.0046). For remembered objects, the cluster permutation test flagged a large time interval (220–990 ms, cluster p < 0.0001) as significant, although only a handful of posterior sensors were part of the cluster between 200 and 400 ms.

Region-of-interest analyses showed that for most stimulus types, the most prominent and consistent effects were observed over the right central and posterior ROIs (Fig. 3, see also Fig. 4A and B). Significant below-chance classification accuracies were seen for some training datasets; these were mostly restricted to the post-500 ms interval. For detailed statistics, see Supplementary Table 1.

Cross-classification accuracy in early and late phases. Left panel: classification accuracy at each time-point, at the region of interest of highest classification accuracy, compared to mean baseline classifier performance, (A) in the leave-one-subject-out analysis for the test dataset, and (B) the cross-classification analyses. Horizontal significance markers indicate if the time-ROI datapoint belonged to a significant cluster based on the results of the cluster permutation test reported in Figs. 2 and 3. Highest decoding accuracies were seen in the right central and right posterior regions of interest. (C) Classification accuracies in the time-resolved cross-classification analyses in early and late phases. The time points depicted correspond to accuracy peaks in the results of the LOSO analysis for the test dataset. Results in the right central and posterior ROIs, as well as all electrodes, are depicted here. Results of all ROIs can be found in Supplementary Information S3. Statistics: two-sided one-sample t-tests, uncorrected p < 0.05*, < 0.01**, < 0.001***, < 0.0001****. Boxplots: first, second (median), and third quartiles; whiskers with Q1 − 1.5 IQR and Q3 + 1.5 IQR, with horizontally jittered individual classification accuracies.

Region-of-interest analyses were followed up by examining cross-classification accuracies for the different training datasets at time-points with peak within-experiment leave-one-subject-out decoding accuracies in the test dataset. In the early window, significant positive effects were seen only in the case of the face-familiarity datasets, and in the case of objects correctly identified as “familiar”. In contrast, classification accuracies at the late peak consistently yielded significant effects in all cross-classification analyses (Fig. 4C, Supplementary Fig. 3A).

Temporal generalization analyses over all electrodes generally yielded rectangular cross-classification matrices (Fig. 5). In the case of experimentally familiar faces, an early positive and a later negative cluster emerged (train interval: 90 to 830 ms, test interval: 190 to 990 ms, cluster p < 0.0001; train interval: 820 to 1290 ms, test interval: 220 to 990 ms, cluster p = 0.014). For famous faces, a single sustained cluster was observed (train interval: 200 to 1290 ms, test interval: 200 to 990 ms, cluster p = 0.0002). Music stimuli yielded a large positive cluster (train interval: 290 to 1100 ms, test interval: 200 to 990 ms, cluster p < 0.0001) in addition to a later positive cluster (train interval: 1130 to 1490 ms, test interval: 190 to 990 ms, cluster p = 0.0029) and a number of smaller, weak effects that also included periods in the baseline. In the case of object-scene associations, two early positive clusters (train interval: −20 to 210 ms, test interval: 300 to 990 ms, cluster p = 0.0056; train interval: 310 to 640 ms, test interval: 230 to 990 ms, cluster p = 0.0005), a weaker negative cluster (train interval: 650 to 1030 ms, test interval: 200 to 710 ms, cluster p = 0.0425), and a late, weaker positive cluster (train interval: 1130 to 1290 ms, test interval: 290 to 780 ms, cluster p = 0.0249) were seen. Both familiar and remembered objects yielded an earlier positive and a later negative cluster (familiar objects: train interval: 130 to 770 ms, test interval: 160 to 990 ms, cluster p < 0.0001; train interval: 850 to 1290 ms, test interval: 240 to 990 ms, cluster p = 0.0014; remembered objects: train interval: 400 to 760 ms, test interval: 280 to 990 ms, cluster p = 0.0046; train interval: 760 to 1290 ms, test interval: 180 to 990 ms, cluster p = 0.0002). For the results and the detailed statistics of the temporal generalization analyses in the regions of interest, see Supplementary Table 3.
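The logic of a cross-experiment temporal generalization analysis can be illustrated with a short sketch: a classifier is fitted at every time point of the training dataset and evaluated at every time point of the test dataset, which yields a train-time by test-time accuracy matrix. The sketch below makes several assumptions that are not taken from this study: data are nested lists indexed as `trials[trial][time][channel]`, both datasets share the same channel layout, and a nearest-class-mean classifier is used as a simple stand-in for whichever classifier is preferred. All function names are hypothetical.

```python
def class_means(trials, labels, t):
    """Mean channel pattern per label at time index t."""
    sums, counts = {}, {}
    for trial, label in zip(trials, labels):
        pattern = trial[t]
        total = sums.setdefault(label, [0.0] * len(pattern))
        for ch, value in enumerate(pattern):
            total[ch] += value
        counts[label] = counts.get(label, 0) + 1
    return {label: [s / counts[label] for s in total] for label, total in sums.items()}


def predict(means, pattern):
    """Assign the label whose mean pattern is closest (squared Euclidean distance)."""
    def distance(mean):
        return sum((m - p) ** 2 for m, p in zip(mean, pattern))
    return min(means, key=lambda label: distance(means[label]))


def temporal_generalization(train_trials, train_labels, test_trials, test_labels):
    """Return matrix[train_time][test_time] of cross-dataset classification accuracy."""
    n_train_times = len(train_trials[0])
    n_test_times = len(test_trials[0])
    matrix = []
    for t_train in range(n_train_times):
        # Fit once per training time point, on the training dataset only.
        means = class_means(train_trials, train_labels, t_train)
        row = []
        for t_test in range(n_test_times):
            # Evaluate the same fitted classifier at every test time point.
            hits = sum(
                predict(means, trial[t_test]) == label
                for trial, label in zip(test_trials, test_labels)
            )
            row.append(hits / len(test_trials))
        matrix.append(row)
    return matrix
```

In such a matrix, each row corresponds to one training time point, so a train interval and a test interval together delimit a block of cells.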

Cross-experiment temporal generalization analysis over all electrodes. Two-sided cluster spatio-temporal permutation test (p < 0.05). For the results of the temporal generalization analyses separately in the different ROIs, see Supplementary Table 3.

Subjective effects in familiarity and recollection

The object familiarity/recollection dataset was subjected to further analyses. To test the cross-experiment representational dynamics of false alarms and forgotten items, these trials were relabeled as “familiar”, while the “unfamiliar” labels of correctly rejected novel items were kept. For forgotten items, old items incorrectly categorized as new were relabeled as “familiar”, while the “unfamiliar” label for novel items was kept (see Supplementary Information, Table S1B). Classifiers were then trained on these newly constructed datasets and tested on the EEG data for personally familiar and unfamiliar faces.

Time-resolved cross-classification over all electrodes in the case of false alarms for familiar objects yielded two positive clusters (190–340 ms, cluster p = 0.0006; 360–590 ms, cluster p < 0.0001). For false-alarm-remembered objects, three positive clusters were observed (280–330 ms, cluster p = 0.0308; 370–460 ms, cluster p = 0.0155, and 480–800 ms, cluster p < 0.0001). Spatio-temporal searchlight analysis yielded one positive cluster (180–940 ms, cluster p < 0.0001) (Fig. 6A). A single late positive cluster was observed in the case of forgotten vs. novel objects (370–560 ms, cluster p = 0.0056) in the time-resolved cross-classification analysis, while the spatio-temporal searchlight yielded a cluster (190–990 ms, cluster p < 0.0001) that also encompassed earlier time points (Fig. 6B).
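For readers unfamiliar with cluster-based statistics on time-resolved accuracies, the general idea can be sketched as follows: accuracies are tested against chance at each time point, adjacent supra-threshold time points of the same sign are grouped into clusters, and each cluster's summed t value is compared with a null distribution obtained by randomly flipping the sign of each participant's chance-corrected accuracies. The sketch below is a simplified, one-dimensional illustration only. The fixed threshold of 2.0, the number of permutations, the seed, and all function names are assumptions, and this is not the implementation used for the results reported here.

```python
import math
import random
import statistics


def t_values(diffs):
    """One-sample t value per time point; diffs[subject][time] = accuracy - chance."""
    n = len(diffs)
    out = []
    for column in zip(*diffs):
        sd = statistics.stdev(column)
        # Zero variance gives an undefined t; it is set to 0 here for simplicity.
        out.append(statistics.fmean(column) / (sd / math.sqrt(n)) if sd > 0 else 0.0)
    return out


def find_clusters(tvals, threshold):
    """Runs of adjacent time points with |t| > threshold and equal sign: (start, end, summed t)."""
    found, start = [], None
    for i, t in enumerate(tvals + [0.0]):  # trailing 0.0 closes a run that reaches the end
        inside = abs(t) > threshold
        if start is not None and (not inside or (t > 0) != (tvals[start] > 0)):
            found.append((start, i - 1, sum(tvals[start:i])))
            start = None
        if inside and start is None:
            start = i
    return found


def cluster_permutation_test(accuracies, chance=0.5, threshold=2.0, n_perm=1000, seed=0):
    """Two-sided sign-flip cluster test; returns (start, end, cluster mass, p) per cluster."""
    rng = random.Random(seed)
    diffs = [[x - chance for x in row] for row in accuracies]
    observed = find_clusters(t_values(diffs), threshold)
    null_max = []
    for _ in range(n_perm):
        # Flip the sign of each participant's whole time course at random.
        signs = [rng.choice((-1, 1)) for _ in diffs]
        flipped = [[s * d for d in row] for s, row in zip(signs, diffs)]
        masses = [abs(m) for _, _, m in find_clusters(t_values(flipped), threshold)]
        null_max.append(max(masses, default=0.0))
    return [
        (start, end, mass, (sum(m >= abs(mass) for m in null_max) + 1) / (n_perm + 1))
        for start, end, mass in observed
    ]
```

Here `accuracies[subject][time]` holds one accuracy value per participant and time point, and the returned start and end values are time indices that would still need to be converted to milliseconds. In practice the cluster-forming threshold is usually derived from the t distribution rather than fixed by hand.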

Time-resolved cross-classification for false alarms, forgotten items, and subjective old and new, but incorrect answers in the object recognition dataset. These analyses required the relabeling of specific trials in the training dataset. (A) False alarms for familiar objects. familiar: new item, response: familiar. unfamiliar: new item, response: new. (B) False alarms for remembered objects. familiar: new item, response: remembered. unfamiliar: new item, response: new. (C) Forgotten objects. familiar: old item, response: new. unfamiliar: new item, response: new. (D) Subjective old/new answers in trials with incorrect responses. familiar: new item, response: old. unfamiliar: old item, response: new. Results of the leave-one-subject-out classification for personally familiar and unfamiliar faces are presented in black for comparison (two-sided cluster permutation tests, p < 0.05). Spatio-temporal searchlight results are shown as scalp maps, with classification accuracy scores averaged in 100 ms steps. Sensors and time points forming part of significant clusters are marked (two-sided spatio-temporal cluster permutation tests, p < 0.05). RA/LA: right/left anterior, RC/LC: right/left central, RP/LP: right/left posterior.

Time-resolved analysis for forgotten objects over all sensors yielded a late positive cluster (370–560 ms, cluster p = 0.0056), while a positive cluster (160–990 ms, cluster p < 0.0001) was observed in the results of the searchlight analysis. Interestingly, both ROI-based and searchlight results have shown weaker effects over frontal electrodes (Fig. 6C).

To test the neural signals for subjective (but incorrect) recall (Fig. 6D), only trials with erroneous responses were retained. Data for these trials were then labeled according to the participants’ response (i.e., incorrect “old” trials were labeled “familiar”, and incorrect “new” trials were labeled “unfamiliar”). Remarkably, the time-resolved analysis also yielded positive clusters at both early and late time points (210–270 ms, cluster p = 0.0262; 360–450 ms, cluster p = 0.006; 470–610 ms, cluster p = 0.004). The searchlight analysis also yielded a positive cluster with an early onset (190–920 ms, cluster p < 0.0001), as well as a weak late negative cluster (840–990 ms, cluster p = 0.0416).
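The relabeling schemes described in this section amount to a lookup from item status and response to a training label, with all other trials discarded. A minimal sketch is given below. The trial representation (dictionaries with `item` and `response` keys), the scheme names, and the function `relabel` are hypothetical, and treating both “familiar” and “remembered” responses as “old” in the subjective scheme is an assumption.

```python
# Mapping (item status, response) -> training label, following the schemes
# described in the text. Trial format and scheme names are hypothetical.
SCHEMES = {
    "false_alarm_familiar": {
        ("new", "familiar"): "familiar",
        ("new", "new"): "unfamiliar",
    },
    "false_alarm_remembered": {
        ("new", "remembered"): "familiar",
        ("new", "new"): "unfamiliar",
    },
    "forgotten": {
        ("old", "new"): "familiar",
        ("new", "new"): "unfamiliar",
    },
    # Only erroneous responses are retained and labeled by the response given.
    # Assumption: an "old" response covers both "familiar" and "remembered".
    "subjective_incorrect": {
        ("new", "familiar"): "familiar",
        ("new", "remembered"): "familiar",
        ("old", "new"): "unfamiliar",
    },
}


def relabel(trials, scheme):
    """Return the trials covered by the scheme, each with an added 'label' key."""
    mapping = SCHEMES[scheme]
    relabeled = []
    for trial in trials:
        label = mapping.get((trial["item"], trial["response"]))
        if label is not None:  # trials outside the scheme are dropped
            relabeled.append({**trial, "label": label})
    return relabeled
```

A classifier trained on the output of `relabel(trials, "forgotten")`, for example, would contrast old items judged as new with correctly rejected novel items.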

To explore the similarity of neural signals for familiarity with, and recollection of objects to those of familiar face processing, classifiers were trained to categorize EEG data for objects judged as familiar, and objects judged as remembered. These classifiers were then tested on EEG signals elicited by familiar faces. Time-resolved cross-classification (Fig. 7) revealed an early (190–410 ms, cluster p = 0.002) cluster in favor of familiarity, while a late cluster favored recollection (660–840 ms, cluster p = 0.003). Similarly, the spatio-temporal searchlight analysis yielded early evidence for familiarity (190–530 ms, cluster p = 0.002), and late evidence for recollection (370–990 ms, cluster p < 0.0001). For detailed statistics, see Supplementary Tables 2 and 6.
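One way to read this analysis is that every familiar-face trial is assigned either the “familiar” or the “remembered” label, so the proportion of trials receiving one label, relative to 0.5, indicates which of the two training conditions the face signal resembles more at a given time point. The sketch below illustrates this reading only. How evidence was actually quantified is not specified in this section, and the function name and input format are hypothetical.

```python
def evidence_over_time(predictions_by_time, target="familiar"):
    """Proportion of test trials assigned to `target` at each time point, minus 0.5.

    Positive values indicate that the test signal resembles the `target`
    training condition more; negative values favor the other condition.
    """
    return [
        sum(p == target for p in predictions) / len(predictions) - 0.5
        for predictions in predictions_by_time
    ]


# Hypothetical predictions for four familiar-face trials at two time points.
example = [
    ["familiar", "familiar", "remembered", "familiar"],
    ["remembered", "remembered", "remembered", "familiar"],
]
scores = evidence_over_time(example)
```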

Familiar vs. Remembered objects. Classifiers were trained to categorize EEG data for familiar and remembered objects and were tested on EEG data for familiar faces. Regions shaded in red denote significant evidence for familiarity, regions shaded in blue denote significant evidence for recollection. Results of the leave-one-subject-out classification for familiar faces are presented in black for comparison (two-sided cluster permutation tests, p < 0.05). Spatio-temporal searchlight results are shown as scalp maps, with classification accuracy scores averaged in 100 ms steps. Sensors and time points forming part of the significant cluster evidencing familiarity are shown in the top row, sensors and time points forming part of the significant cluster evidencing recollection are shown in the bottom row (two-sided spatio-temporal cluster permutation tests, p < 0.05). RA/LA: right/left anterior, RC/LC: right/left central, RP/LP: right/left posterior.

Discussion

The aim of this study was to probe the shared signals of memory functions using a data-driven approach. Much of the previous work in this area focused on canonical ERP components in a priori spatial and temporal windows. While this has led to the identification of a number of components related to memory processes, it is also limited in a number of ways. For example, the timing of certain components may shift depending on experimental factors, relevant signals might be mixed with those of other cognitive processes, and signatures of memory processes that do not conform to canonical configurations might be overlooked.

Here, time-resolved cross-experiment classification of EEG signals23,24,25,26 was used to find shared information content indicative of similar underlying memory-related processes across participants, stimulus modalities and tasks, and to probe how these signals unfold over time. Classifier training was conducted on data from experiments investigating face familiarity28,29, object familiarity and recollection19, familiar and unfamiliar music30, and remembered and forgotten object-scene associations31. EEG data for highly familiar and unfamiliar faces comprised the test dataset27.

The main findings of these analyses were the following. (1) Cross-classification performed on all training datasets yielded sustained significant effects; (2) depending on the training dataset, dissociations between an early (ca. 200 to 400 ms) and a late (ca. 400 to 600 ms) time window were observed; (3) familiar and remembered objects differed substantially in the early phase; (4) subjective recognition judgements also yielded positive patterns; and (5) significant late negative decoding was observed for some training datasets.

Early and late effects

Broadly defined early and late effects, such as the FN400 and the LPC, have long been investigated in memory research. Person-perception research uses a different nomenclature, somewhat independent from that of the rest of the field, such as the N170, the N250, and the SFE (but see for example44,45,46). The fact that face-specific components, such as the N250, have also been described for familiar objects trained for expertise, as well as for personally familiar and newly learned objects47,48,49 further complicates the picture. Due to the considerable differences in the timing and topographical distribution across studies using different stimulus types and experimental paradigms8,19, the relationship between these effects remains unclear.

Another factor to consider is the functional separation between components described for different time windows. While an extensive body of literature exists evidencing the differential modulation of the FN400 and the LPC effects, such evidence for early and late face-related effects, that share a similar topographic distribution, has started to emerge only recently. Dalski et al.23 reported sustained cross-dataset temporal generalizability of face-familiarity information between ca. 200 and 600 ms following stimulus onset, a finding that was interpreted as an indication of a single automatic processing cascade that is maintained over time. In this present analysis, temporal cross-classification on face-datasets (Fig. 5A) yielded very similar results. Nevertheless, while similar in scalp distribution, evidence exists that suggests that the early (200–400 ms) and late (> 400 ms) effects might be functionally distinct. Wiese and colleagues found that ERP markers of familiarity are modulated differentially in these two time-windows: while the N250 (200–400 ms) is not substantially affected by attentional load, the sustained familiarity effect (SFE, > 400 ms) relies on the availability of attentional resources50. Cross-classification evidence comes from Li et al.24 who found that cross-classification accuracy for famous faces correlated with the level of familiarity in the ca. 400–600 ms window only, and Dalski et al.25, who reported differential modulation in the early and late windows as a function of the task.

Although the N250 is commonly interpreted as a face-specific ERP component that reflects access to the visual representation of a face, it has been suggested that it also indexes enhanced access to post-perceptual representations. The SFE is theorized to reflect the integration of visual information with person-related (for example, semantic and affective) knowledge18, or an additional elaboration phase in the perceptual face recognition process50.

The results of this present analysis, when training on non-face datasets, also argue for at least a partial functional separation between these two phases. In the right occipito-temporal regions of interest, where within-experiment classification yielded the highest decoding accuracies, a marked dissociation between early and late effects can be observed. Early effects (Fig. 4C, top row) were unambiguously present in the case of face training datasets, and were not significantly above chance in other comparisons, with the important exception of familiar vs. novel objects (see the Familiarity and Recollection section below). In contrast, significant late effects were observed in all comparisons (Fig. 4C, bottom row).

Stimulus material

In all comparisons, strong effects were observed in the approximately 300–400 to 600 ms time window, and these effects encompassed a large number of channels and regions of interest (see Supplementary Information Figure S3B).

Faces. In Dalski et al.23 we reported on the generalizability of face-familiarity signals across experiments, where familiarization was achieved either perceptually, via media exposure, or by personal interaction37. The significant cross-experiment face-familiarity classification involved all three datasets, predominantly over posterior and central regions of the right hemisphere in the 270–630 ms time window. This current study replicates this finding on three independent datasets, providing further evidence for the existence of a robust, general face-familiarity signal.

Familiar and unfamiliar music. The authors of the report30 noted that the EEG responses they observed were similar to those commonly observed in old/new memory retrieval paradigms, suggesting that similar mechanisms may play a role in their generation. This present analysis supports this observation. Evidence for sustained positive cross-classification emerged ca. 300 ms after stimulus onset, most prominently over right central and posterior sites.

The interpretation of these findings needs to take into account that the music material in this experiment was also tied to person-knowledge in at least two different ways. First, the familiar music presented was chosen to be personally relevant; as such, effects of personal semantics and autobiographical access cannot be ruled out51. Second, the stimulus set consisted of contemporary popular music. This means that the auditory stimuli might also be associated with access to biographic knowledge of the artists, as well as visual imagery from the accompanying music videos. As evidence exists for the cross-domain activation of related representations, priming being one example52,53, our understanding would benefit from systematically investigating how these factors relate to shared neural patterns of memory functions.

Although large and sustained effects were observed, in contrast to pre-experimentally familiar faces and experimentally familiarized objects, significant cross-classification effects did not manifest earlier than ca. 300 ms after stimulus onset. Two mutually non-exclusive hypotheses present themselves. First, music is inherently sequential in nature; as such, in contrast to visual information, the generation of a recognition signal might be delayed by the time needed for the accumulation of the necessary evidence. Studies suggest that a 500 ms long excerpt is needed to reliably identify familiar and unfamiliar music54,55; in a closed set, such as the dataset used here, 100 ms has been shown to be sufficient56. Indeed, the authors measured pupillary responses concurrently with EEG and found an early familiarity effect starting ca. 100 ms, for which they did not observe a corresponding early ERP component. The temporal generalization matrix in this present analysis, however, yielded significant clusters in central and posterior ROIs for training times between ca. 100 and 170 ms. Although caution must be exercised in taking this weak effect at face value, in the interest of completeness, it is nevertheless reported in Supplementary Information 4. Second, while both the training and test datasets used long-term, personally relevant stimuli, they differed in the type of stimulation they employed: the test dataset used visual stimuli, while the training dataset used auditory stimuli. The delay in cross-decodable early effects may therefore also suggest that early signals of recognition vary depending on the type of stimulation.

Object-scene associations. Successful, sustained effects were observed approximately 330 ms following stimulus onset over all electrodes, while significant effects over central and posterior clusters were seen as early as 230 ms. Frontal effects first emerged around 350 ms following stimulus onset. It is worth noting that, in contrast to the other experiments analyzed in this study, all stimuli in the test phase were presented in the learning phase and the “familiar” and “unfamiliar” labels were based on whether the participant reported remembering them or not. This means that there were two ways to generate “forgotten” responses: either the participant failed to recall both the cue and the target stimuli, or they remembered (or felt familiarity with) the cue but failed to recall the paired associate. In contrast, “remembered” responses, indicating successful recall, required the participants to remember both the cue and the related target stimulus. How much the recognition of the cue and the successful recall of the target contributed to successful cross-classification is difficult to disentangle, but for reasons detailed in the following sections, it is possible that early peaks over posterior and central regions of interest are more related to a sense of familiarity with the cue, while the later effects are more related to the recollection of the paired associate.

Experimentally familiarized objects. Training on EEG data for familiar objects and testing on personally familiar and unfamiliar faces yielded strong, early (200 ms) effects. In fact, besides the two face-EEG datasets, this condition yielded the most stable early patterns across all regions of interest. As the stimulus material in these two studies was vastly different, the effect of low-level image properties can be ruled out with confidence. This may imply that by this time point, for both faces and objects, the visual information is processed in adequate detail to elicit a stimulus-independent familiarity signal. In stark contrast, cross-classification patterns for images of objects judged as remembered first emerged around 400 ms post-stimulus onset. A more detailed discussion is given in the following section.

Familiarity and recollection

As hinted above, while both early and late cross-classification accuracies in the case of familiarity for newly learned objects and personally familiar faces were remarkably high, no early effect was seen in recollection for objects. It is important to emphasize that this lack of early cross-classification between remembered objects and familiar faces does not necessarily indicate the absence of recognition information present in this early period, only that there were no utilizable shared neural signals between the processes by which recognition is mediated for remembered objects and familiar faces in this early stage. Late signals, on the other hand, were highly informative both in the case of familiar and remembered objects, with more evidence for recollection from ca. 500 ms onward.

It is an open question whether the neural signals for memory representations and decision-making processes play a causal role in supporting mnemonic experiences, such as familiarity and recollection. Additionally, it is not well understood how these signals manifest in the case of false memories. To date, reliable neural markers of false memories have not been established57.

This present study made use of the flexibility of MVPA to explore these questions by selecting and relabeling evoked responses in the training datasets (see Supplementary Information Table 1B) to align with those of the test experiment. In the case of object-scene associations (where no novel items were presented in the test phase), this was achieved by changing the “remembered” and “forgotten” labels to “familiar” and “unfamiliar”. In the case of objects, for false alarms, this meant relabeling novel (i.e., first presented in the test phase) objects for which participants responded “familiar” or “remembered” as such, while keeping the “unfamiliar” labels of correctly identified novel objects. Remarkably, training on remembered and forgotten object/scene pairs, and on false alarms for both familiar and remembered objects, yielded robust and sustained cross-classification patterns.

For false-alarm-familiar objects, the patterns of cross-classification accuracies were very similar to those of correctly identified familiar objects up to ca. 600 ms, with an extended positive decoding effect (Fig. 6A). In contrast to correctly identified remembered objects, training on false-alarm-remembered objects (Fig. 6B) led to early effects as well; the steeply rising late-phase peak, while present, was delayed by ca. 20–50 ms over central-posterior ROIs (see Supplementary Information 5). In the case of forgotten (versus novel) objects, cross-classification over all electrodes yielded a positive cluster for the 400 to 600 ms window, while the early peak seen in the case of familiar objects in bilateral posterior and right central regions of interest was not observed, and no significant clusters were found in frontal ROIs.

Are faces ‘special’?

The human face is possibly the most extensively investigated high-level visual stimulus in cognitive science, and face perception is often viewed as a model system for investigating the development and organization of information processing in the brain10,58,59. Their social and personal importance, for individuals and for the species, makes faces a salient stimulus category. For some of the very same reasons, the generalizability of findings in face perception research to other stimulus categories is a matter of debate, summarized in the question: “are faces special?”60,61. In the field of memory research, arguments are made that the recognition of faces may be “inherently different from recognition of other kinds of nonverbal stimuli”62. The question of whether the processes that contribute to face recognition are unique can be probed by investigating how these processes unfold for various other stimulus types63. In this present study, the choice of a face recognition dataset as the test set makes it possible to tackle this question.

The results presented here show substantial overlap between recognition signals for faces and other stimulus categories. These effects were most consistent in the later, ca. post-300–400 ms phase, but were also found in the earlier, 200 ms time window in some cases (see the previous Early and Late Effects section). Training classifiers on data for various stimulus types and using these to test familiarity in data for highly personally familiar and unfamiliar faces yielded extensive positive effects, arguing for similar information processing.

At first sight, the results of this analysis argue for at least a partial overlap between systems that subserve the recognition of faces and other stimulus categories, particularly in the later (ca. 400 ms onward) stages of processing. However, alternative explanations for this shared effect must be considered. Due to the low spatial resolution of EEG, the exact structural localization of the origin of these signals is challenging. For example, regions thought to be category-selective are found in nearby anatomical locations in the lateral occipital (e.g., OFA for faces, LO for objects, EBA for bodies, OPA for places) and temporal cortices (FFA for faces, pFs for objects, FBA for bodies, PPA for scenes)64,65,66,67. It is thus possible that while successful cross-classification in part reflects similar activity in the same areas of the brain (e.g., the hippocampus), other parts of the shared signal may result from information flow in parallel processing pathways between different nodes within centimeters of distance. Source-localized MEG and fMRI-M/EEG fusion methods should investigate this question in the future.

Time since acquisition

Three of the experiments in this report used single-session experimental familiarization: Sommer et al.29 involved a 10 to 60 s familiarization phase with a previously unfamiliar single face image immediately before the start of the experiment. The 180-item acquisition phase and the test phase in Dimsdale-Zucker et al.19 were separated by a 45–60-min interval. Treder et al.31 had eight, 32-item encoding/recall blocks, each with a 3-min delay between learning and test. In two studies, stimuli were pre-experimentally familiar: Wakeman and Henson28 used faces of well-known celebrities, while Jagiello et al.30 presented personally relevant music. Stimuli in the test dataset27 were also pre-experimentally familiar (faces of personally highly familiar identities). Despite these differences, consistent and overlapping cross-classification accuracies were observed in all analyses.

Old/new effects similar to those observed for long-term memory have been observed for short-term memory tasks. Using a wide range of stimulus types (single letters, words and pseudowords, pictures of objects, dots at different spatial locations, and two-dimensional sinusoidal gratings), Danker and colleagues62 have found that the amplitude of the FN400 showed old/new effects only for verbal stimuli, which increased with recency. Old/new effects indexed by the LPC, on the other hand, were seen for a range of stimulus types, and were not modulated by recency.

Although systematic studies on other stimulus types are yet to be conducted, EEG patterns for short-term, experimentally familiarized faces have been shown to generalize to longer-term personal familiarity23,26. The results of this present study further support these findings, as training on single-session familiarization data for faces29, familiar objects19, and object-scene associations31 all yielded positive effects.

Negative decoding

Post-500 ms sustained, significant below-chance classification accuracies were seen in several training datasets. Similar below-chance classification patterns are commonly observed, especially in temporal generalization analyses68,69,70,71. Interestingly, the reasons for such reversal phenomena are not yet understood32, and the issue has received little attention so far. That this reversal is not merely an epiphenomenon related to the end of the stimulus presentation but is a meaningful effect that reflects cognitive processing is indicated by its attenuation when false alarms are tested against correct rejections in the familiar/remembered objects experiment (Fig. 6A and B, Supplementary Information 5). Sustained positive cross-decodability in these cases may reflect neural activity related to extended search processes, or, alternatively, extra-experimental familiarity.

In this present investigation, sustained, significant below-chance classification performance was only observed in studies that involved short-term, laboratory-based familiarization methods, i.e., in the cases of the experimentally familiarized face and the familiar/remembered objects experiments. Cross-classification did not yield such patterns when the pre-experimentally familiar (famous) faces and pre-experimentally familiar music datasets were used for training. Thus, it is possible that such pattern reversal effects are related to control processes for items with recent or weak memory traces.
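As a purely arithmetic illustration of what significant below-chance classification implies, the toy example below shows that a classifier fitted to one mapping between signal and label scores below 50% when the same signal is present in the test data with reversed polarity. It does not address why such reversals occur. The single-feature nearest-mean classifier, the values, and the names are hypothetical.

```python
def nearest_mean_label(means, value):
    """Assign the label whose class mean is closest to the observed value."""
    return min(means, key=lambda label: abs(means[label] - value))


def accuracy(means, trials):
    """Proportion of (value, label) trials classified correctly."""
    return sum(nearest_mean_label(means, v) == label for v, label in trials) / len(trials)


# Class means as they might be learned from a hypothetical training dataset.
train_means = {"familiar": 1.0, "unfamiliar": -1.0}

# Test trials carrying the same signal, and the same trials with reversed polarity.
same_polarity = [(0.8, "familiar"), (1.2, "familiar"), (-0.9, "unfamiliar"), (-1.1, "unfamiliar")]
reversed_polarity = [(-value, label) for value, label in same_polarity]

acc_same = accuracy(train_means, same_polarity)
acc_reversed = accuracy(train_means, reversed_polarity)
```

Below-chance accuracy therefore still reflects shared, decodable information; only the direction of the mapping differs between training and test data.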

Further complicating the interpretation of these findings is the fact that the studies where stimuli were newly learned were also the ones that directly tested recollection for these items. The experimentally familiarized face and the familiar/remembered objects experiment both required responses that pertained to memory recall. The familiar music experiment required no response, while the task—symmetry judgement—was not related to familiarity in the famous faces study. As the type of the required response and the length of familiarity were not independent factors, further research is needed to study the causes of this phenomenon.

Further considerations

This analysis was based on publicly available EEG datasets that were collected in different laboratories using different EEG systems under varying conditions. The stimulus presentation times, number of trials and repetitions, and control for low-level properties also varied significantly across the studies. In addition to the stimulus categories analyzed in this study, some of the experiments also presented additional stimulus types, such as the participant's own face in the study by Sommer et al.29, scrambled faces in the study by Wakeman and Henson28, and a “no variance” condition using a second familiar/unfamiliar pair in the study by Wiese et al.27. It is highly likely that the complete stimulus pool has an influence on the neural signals for individual stimulus conditions. Therefore, it is important to note that this analysis does not represent a systematic investigation into the factors that contribute to successful cross-classification. Further research is needed to systematically investigate these factors, and to replicate and extend these findings in more controlled and standardized conditions.

Considering that the spatio-temporal pattern of the effects is dependent on the information content in both the training and test datasets, neural signals of recognition that are not present in either the training or test dataset will not be captured by the analysis. Therefore, it is important to keep in mind that the current study used a single test dataset that focused on face familiarity. Future studies should explore the potential effects of other stimulus types, such as written and spoken words, which are commonly used in neuroscience research and have not been investigated here.

Stimulus type specificity in familiarity and recollection has been reported in lesion case studies. For example, patient N.B.72,73, who underwent surgical resection of left anterior temporal-lobe structures, demonstrated a deficit in familiarity assessment (with preservation of recollection) for non-words but performed normally with abstract pictures and faces. This material-specificity suggests a dissociation in familiarity assessment, unrelated to task difficulty or sensory modality, supporting the notion that the neural mechanisms and representations facilitating familiarity assessment in the temporal lobe are influenced by stimulus type. Therefore, it would be valuable to expand the research presented here to include stimulus classes not examined in this study, e.g., words and pseudowords. Additionally, employing cross-dataset classification could be beneficial for comparing healthy individuals with those experiencing memory impairments.

Additionally, it would be interesting to explore how other sensory modalities, such as auditory or tactile stimuli, may influence these shared neural representations. Furthermore, it would be valuable to examine the interplay between recollection, neural representations of various stimulus properties, and attention in the context of familiarity and recollection.

Conclusion

In this study, multivariate cross-dataset classification analysis was used to investigate the shared signals of recognition memory across a range of different stimulus types and experimental conditions. This approach allows for the identification of patterns of neural activity that are shared across different conditions and datasets, providing a more comprehensive view of the neural dynamics underlying memory processes. The results indicate that the neural signals for face stimuli generalized remarkably well starting from around 200 ms following stimulus onset. For non-face stimuli, successful cross-classification was most robust in the later period. Furthermore, a dissociation between familiar and remembered objects was observed, with shared signals present only in the late window for correctly remembered objects, while cross-decoding results for familiar objects were present in the early period as well. In addition, the subjective sense of familiarity and/or recollection, i.e., false alarms and incorrect responses, also produced similar patterns of cross-classification. Overall, these findings show that multivariate cross-classification across datasets can contribute to our understanding of the neural dynamics of memory processes.

Data and code availability

Data and code are openly available at https://osf.io/qcr2g/.

References

Rugg, M. D. & Curran, T. Event-related potentials and recognition memory. Trends Cogn. Sci. 11, 251–257. https://doi.org/10.1016/j.tics.2007.04.004 (2007).

Brown, M. W. & Banks, P. J. In search of a recognition memory engram. Neurosci. Biobehav. Rev. 50, 12–28. https://doi.org/10.1016/j.neubiorev.2014.09.016 (2015).

Wolk, D. A. et al. ERP correlates of recognition memory: Effects of retention interval and false alarms. Brain Res. 1096, 148–162 (2006).

Yonelinas, A. P. The nature of recollection and familiarity: A review of 30 years of research. J. Mem. Lang. 46, 441–517 (2002).

Donaldson, D. I. & Rugg, M. D. Recognition memory for new associations: Electrophysiological evidence for the role of recollection. Neuropsychologia 36, 377–395 (1998).

Wilding, E. L. & Rugg, M. D. An event related potential study of memory for words spoken aloud or heard. Neuropsychologia 35, 1185–1195 (1997).

Mecklinger, A. & Jäger, T. Episodic memory storage and retrieval: Insights from electrophysiological measures. In Neuroimaging of Human Memory: Linking Cognitive Processes to Neural Systems (eds Rösler, F. et al.) 357–382 (Oxford University Press, 2009). https://doi.org/10.1093/acprof:oso/9780199217298.003.0020.

Kwon, S., Rugg, M. D., Wiegand, R., Curran, T. & Morcom, A. M. A meta-analysis of event-related potential correlates of recognition memory. Psychon. Bull. Rev. 30, 2083–2105 (2023).

Jacoby, L. L. A process dissociation framework: Separating automatic from intentional uses of memory. J. Mem. Lang. 30, 513–541 (1991).

Young, A. W. Faces, people and the brain: The 45th Sir Frederic Bartlett Lecture. Quart. J. Exp. Psychol. 71, 569–594 (2018).

Visconti di Oleggio Castello, M. & Gobbini, M. I. Familiar face detection in 180ms. PLoS One 10, e0136548 (2015).

Crouzet, S. M. Fast saccades toward faces: Face detection in just 100 ms. J. Vis. 10, 1–17 (2010).

Morrisey, M. N., Hofrichter, R. & Rutherford, M. D. Human faces capture attention and attract first saccades without longer fixation. Vis. Cogn. 27, 158–170 (2019).

Ramon, M. & Gobbini, M. I. Familiarity matters: A review on prioritized processing of personally familiar faces. Vis. Cogn. 26, 179–195 (2018).

Johnston, P., Overell, A., Kaufman, J., Robinson, J. & Young, A. W. Expectations about person identity modulate the face-sensitive N170. Cortex 85, 54–64 (2016).

Huang, W. et al. Revisiting the earliest electrophysiological correlate of familiar face recognition. Int. J. Psychophysiol. 120, 42–53 (2017).

Schweinberger, S. R. & Neumann, M. F. Repetition effects in human ERPs to faces. Cortex https://doi.org/10.1016/j.cortex.2015.11.001 (2016).

Wiese, H. et al. A robust neural index of high face familiarity. Psychol. Sci. 30, 261–272 (2019).

Dimsdale-Zucker, H. R., Maciejewska, K., Kim, K., Yonelinas, A. P. & Ranganath, C. Relationships between individual differences in dual process and electrophysiological signatures of familiarity and recollection during retrieval. Neuropsychologia https://doi.org/10.1101/2021.09.15.460509 (2022).

Campbell, A., Louw, R., Michniak, E. & Tanaka, J. W. Identity-specific neural responses to three categories of face familiarity (own, friend, stranger) using fast periodic visual stimulation. Neuropsychologia 141, 107415 (2020).

Staresina, B. P. & Wimber, M. A neural chronometry of memory recall. Trends Cogn. Sci. 23, 1071–1085 (2019).

Kaplan, J. T., Man, K. & Greening, S. G. Multivariate cross-classification: Applying machine learning techniques to characterize abstraction in neural representations. Front. Hum. Neurosci. https://doi.org/10.3389/fnhum.2015.00151 (2015).

Dalski, A., Kovács, G. & Ambrus, G. G. Evidence for a general neural signature of face familiarity. Cerebral Cortex 32, 2590–2601 (2022).

Li, C., Burton, A. M., Ambrus, G. G. & Kovács, G. A neural measure of the degree of face familiarity. Cortex 155, 1–12 (2022).

Dalski, A., Kovács, G., Wiese, H. & Ambrus, G. G. Characterizing the shared signals of face familiarity: Long-term acquaintance, voluntary control, and concealed knowledge. Brain Res. 1796, 148094 (2022).

Dalski, A., Kovács, G. & Ambrus, G. G. No semantic information is necessary to evoke general neural signatures of face familiarity: Evidence from cross-experiment classification. Brain Struct. Funct. 228, 449–462 (2023).

Wiese, H. et al. Detecting a viewer’s familiarity with a face: Evidence from event-related brain potentials and classifier analyses. Psychophysiology 59, 1–21 (2022).

Wakeman, D. G. & Henson, R. N. A multi-subject, multi-modal human neuroimaging dataset. Sci. Data 2, 150001 (2015).

Sommer, W. et al. The N250 event-related potential as an index of face familiarity: A replication study. R. Soc. Open Sci. 8, 202356 (2021).

Jagiello, R., Pomper, U., Yoneya, M., Zhao, S. & Chait, M. Rapid brain responses to familiar vs. unfamiliar music – an EEG and pupillometry study. Sci. Rep. 9, 1–13 (2019).

Treder, M. S. et al. The hippocampus as the switchboard between perception and memory. Proc. Natl. Acad. Sci. https://doi.org/10.1073/pnas.2114171118 (2021).

King, J. R. & Dehaene, S. Characterizing the dynamics of mental representations: The temporal generalization method. Trends Cogn. Sci. 18, 203–210. https://doi.org/10.1016/j.tics.2014.01.002 (2014).

Grootswagers, T., Wardle, S. G. & Carlson, T. A. Decoding dynamic brain patterns from evoked responses: A tutorial on multivariate pattern analysis applied to time series neuroimaging data. J. Cogn. Neurosci. 29, 677–697 (2017).

van den Hurk, J. & Op de Beeck, H. P. Generalization asymmetry in multivariate cross-classification: When representation A generalizes better to representation B than B to A. bioRxiv https://doi.org/10.1101/592410 (2019).

Tanaka, J. W., Curran, T., Porterfield, A. L. & Collins, D. Activation of preexisting and acquired face representations: The N250 event-related potential as an index of face familiarity. J. Cogn. Neurosci. 18, 1488–1497 (2006).

Ambrus, G. G., Kaiser, D., Cichy, R. M. & Kovács, G. The neural dynamics of familiar face recognition. Cerebral Cortex 29, 4775–4784 (2019).

Ambrus, G. G., Eick, C. M., Kaiser, D. & Kovács, G. Getting to know you: Emerging neural representations during face familiarization. J. Neurosci. 41, 5687–5698 (2021).

Carlson, T. A., Grootswagers, T. & Robinson, A. K. An introduction to time-resolved decoding analysis for M/EEG. In The cognitive neurosciences (eds Poeppel, D. et al.) 679–690 (The MIT Press, 2020). https://doi.org/10.7551/mitpress/11442.003.0075.

Delorme, A. EEG is better left alone. Sci. Rep. 13, 2372 (2023).

Maris, E. & Oostenveld, R. Nonparametric statistical testing of EEG- and MEG-data. J. Neurosci. Methods 164, 177–190 (2007).

Gramfort, A. et al. MNE software for processing MEG and EEG data. Neuroimage 86, 446–460 (2014).

Pedregosa, F. et al. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 12, 2825–2830 (2011).

Virtanen, P. et al. SciPy 1.0: Fundamental algorithms for scientific computing in Python. Nat. Methods 17, 261–272 (2020).

Herzmann, G. & Sommer, W. Effects of previous experience and associated knowledge on retrieval processes of faces: An ERP investigation of newly learned faces. Brain Res. 1356, 54–72 (2010).

Nessler, D., Mecklinger, A. & Penney, T. B. Perceptual fluency, semantic familiarity and recognition-related familiarity: An electrophysiological exploration. Cogn. Brain Res. 22, 265–288 (2005).

MacKenzie, G. & Donaldson, D. I. Dissociating recollection from familiarity: Electrophysiological evidence that familiarity for faces is associated with a posterior old/new effect. Neuroimage 36, 454–463 (2007).

Scott, L. S., Tanaka, J. W., Sheinberg, D. L. & Curran, T. A reevaluation of the electrophysiological correlates of expert object processing. J. Cogn. Neurosci. 18, 1453–1465 (2006).

Scott, L. S., Tanaka, J. W., Sheinberg, D. L. & Curran, T. The role of category learning in the acquisition and retention of perceptual expertise: A behavioral and neurophysiological study. Brain Res. 1210, 204–215 (2008).

Pierce, L. J. et al. The N250 brain potential to personally familiar and newly learned faces and objects. Front. Hum. Neurosci. 5, 1–13 (2011).

Wiese, H. et al. Later but not early stages of familiar face recognition depend strongly on attentional resources: Evidence from event-related brain potentials. Cortex 120, 147–158 (2019).

Jakubowski, K. & Ghosh, A. Music-evoked autobiographical memories in everyday life. Psychol. Music 49, 649–666 (2021).

Ambrus, G. G., Amado, C., Krohn, L. & Kovács, G. TMS of the occipital face area modulates cross-domain identity priming. Brain Struct. Funct. 224, 149–157 (2019).

Koelsch, S. et al. Music, language and meaning: Brain signatures of semantic processing. Nat. Neurosci. 7, 302–307 (2004).

Tillmann, B., Albouy, P., Caclin, A. & Bigand, E. Musical familiarity in congenital amusia: Evidence from a gating paradigm. Cortex 59, 84–94 (2014).

Filipic, S., Tillmann, B. & Bigand, E. Judging familiarity and emotion from very brief musical excerpts. Psychon. Bull. Rev. 17, 335–341 (2010).

Schellenberg, E. G., Iverson, P. & McKinnon, M. C. Name that tune: Identifying popular recordings from brief excerpts. Psychon. Bull. Rev. 6, 641–646 (1999).

Xue, G. The neural representations underlying human episodic memory. Trends Cogn. Sci. 22, 544–561 (2018).

Scherf, K. S., Behrmann, M. & Dahl, R. E. Facing changes and changing faces in adolescence: A new model for investigating adolescent-specific interactions between pubertal, brain and behavioral development. Dev. Cogn. Neurosci. 2, 199–219 (2012).

Yovel, G., Wilmer, J. B. & Duchaine, B. What can individual differences reveal about face processing?. Front. Hum. Neurosci. 8, 562 (2014).

Yue, X., Tjan, B. S. & Biederman, I. What makes faces special?. Vision Res. 46, 3802–3811 (2006).

Liu, C. H. & Chaudhuri, A. What determines whether faces are special?. Vis. Cogn. 10, 385–408 (2003).

Danker, J. F. et al. Characterizing the ERP old-new effect in a short-term memory task. Psychophysiology 45, 784–793 (2008).

Tovée, M. J. Is face processing special?. Neuron 21, 1239–1242 (1998).

Taylor, J. C., Wiggett, A. J. & Downing, P. E. Functional MRI analysis of body and body part representations in the extrastriate and fusiform body areas. J. Neurophysiol. 98, 1626–1633 (2007).

Kanwisher, N. Functional specificity in the human brain: A window into the functional architecture of the mind. Proc. Natl. Acad. Sci. USA 107, 11163–11170 (2010).

Kamps, F. S., Julian, J. B., Kubilius, J., Kanwisher, N. & Dilks, D. D. The occipital place area represents the local elements of scenes. Neuroimage 132, 417–424 (2016).

Weiner, K. S., Natu, V. S. & Grill-Spector, K. On object selectivity and the anatomy of the human fusiform gyrus. Neuroimage 173, 604–609 (2018).

Carlson, T. A., Hogendoorn, H., Kanai, R., Mesik, J. & Turret, J. High temporal resolution decoding of object position and category. J. Vis. 11, 9–9 (2011).

Carlson, T., Tovar, D. A., Alink, A. & Kriegeskorte, N. Representational dynamics of object vision: The first 1000 ms. J. Vis. 13, 1–1 (2013).

King, J. R., Gramfort, A., Schurger, A., Naccache, L. & Dehaene, S. Two distinct dynamic modes subtend the detection of unexpected sounds. PLoS One 9, e85791 (2014).

Nikolić, D., Usler, S. H., Singer, W. & Maass, W. Distributed fading memory for stimulus properties in the primary visual cortex. PLoS Biol 7, e1000260 (2009).

Köhler, S. & Martin, C. B. Familiarity impairments after anterior temporal-lobe resection with hippocampal sparing: Lessons learned from case NB. Neuropsychologia 138, 107339 (2020).

Bowles, B. et al. Impaired familiarity with preserved recollection after anterior temporal-lobe resection that spares the hippocampus. Proc. Natl. Acad. Sci. 104, 16382–16387 (2007).

Acknowledgements

This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Author information

Contributions

G.G.A. is the sole author of the manuscript.

Ethics declarations

Competing interests

The author declares no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Ambrus, G.G. Shared neural codes of recognition memory. Sci Rep 14, 15846 (2024). https://doi.org/10.1038/s41598-024-66158-y

DOI: https://doi.org/10.1038/s41598-024-66158-y
