Abstract

Objective measurements in real-world optimization problems are often subject to noise, which arises from sources such as human measurement error or environmental factors. The performance of an optimization algorithm degrades when the effect of noise exceeds a negligible limit. Existing noise handling optimization algorithms use a large population size or repeated sampling in the same region, which increases the total number of function evaluations, and some methods work only for a particular problem type. To address these challenges, a Differential Evolution based Noise handling Optimization algorithm (NDE) is proposed to solve noisy bi-objective optimization problems. NDE is a Differential Evolution (DE) based optimization algorithm in which the trial vector generation strategies and the DE control parameters are self-adapted using a fuzzy inference system to improve population diversity during evolution. In NDE, an explicit averaging based denoising method is applied when the noise level is higher than the negligible limit. Extending the noise handling method in this way enhances the performance of the optimization algorithm on real-world optimization problems. To improve the convergence characteristics of the proposed algorithm, a restricted local search procedure is proposed. The performance of NDE is evaluated on the DTLZ and WFG problems, which are benchmark bi-objective optimization problems. The results are compared with other state-of-the-art algorithms using the modified Inverted Generational Distance and Hypervolume performance metrics, and they indicate that the proposed NDE algorithm performs better on noisy bi-objective problems than the other methods. To further strengthen the claim, statistical tests are conducted using the Wilcoxon and Friedman rank tests, and the proposed NDE algorithm shows a significant difference over the other algorithms, rejecting the null hypothesis.

Introduction

Many real-world problems are formulated as optimization problems. When such problems are modeled, noise may arise from different sources, and the resulting problems are called noisy optimization problems. Sources of noise include measurement errors, incomplete data and environmental factors, which make it difficult to obtain an accurate objective function value1,2,3. One impact of noise is that repeated evaluations of the same individual return different objective values. Another is that, when the noise strength is high, the population contains a large number of poor solutions, which disturbs the environmental selection process and steers the search away from the true front4. Thus, a high noise level can strongly influence the search process5. It is therefore vital to consider the noise factor while modelling the optimization problem. The inclusion of the noise factor in the objective function value during evaluation is presented in Eq. 1.

\[\widetilde{f}(x)=f(x)+\varepsilon \quad (1)\]

where \(\varepsilon\) is the noise factor, \(\varepsilon \sim N(0,{\sigma }^{2}I)\). The variance \({\sigma }^{2}\) denotes the noise strength and \(I\) is the identity matrix.
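The additive noise model described above can be illustrated with a minimal sketch. It assumes zero-mean Gaussian noise added independently to each objective; the function names `noisy_evaluate` and `sphere_pair` and the toy objective are hypothetical and are not taken from the paper.

```python
import random


def noisy_evaluate(objective, x, sigma, rng=random):
    """Evaluate `objective` at x and add N(0, sigma^2) noise to every objective value."""
    clean = objective(x)
    return [value + rng.gauss(0.0, sigma) for value in clean]


def sphere_pair(x):
    """Hypothetical bi-objective toy function, used only for illustration."""
    f1 = sum(v * v for v in x)
    f2 = sum((v - 1.0) ** 2 for v in x)
    return [f1, f2]


if __name__ == "__main__":
    rng = random.Random(0)
    x = [0.5, 0.5]
    # Two evaluations of the same individual return different objective values.
    print(noisy_evaluate(sphere_pair, x, sigma=0.1, rng=rng))
    print(noisy_evaluate(sphere_pair, x, sigma=0.1, rng=rng))
```

With `sigma=0.0` the call returns the noise-free objective vector, which makes the sketch convenient for checking an optimizer on the clean problem first.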

Evolutionary algorithms (EAs) mimic the principles of natural selection and genetic inheritance. An EA samples a population of candidate solutions, generates new solutions through selection, mutation and crossover operations, and selects promising solutions for the next generation. EAs are robust in handling noisy optimization problems, but their performance may deteriorate when the noise level is high. Notable drawbacks of EAs include the need to fine-tune the control parameters for each problem and premature convergence.

Real-world engineering optimization problems mostly require the simultaneous optimization of multiple objectives. These problems are classified as multi-objective optimization problems (MOPs). The mathematical representation of a MOP is given by Eq. 2,

where \(Y=[{Y}_{1}, {Y}_{2},\dots , {Y}_{d}]\) is a vector of \(d\) decision variables subject to boundary constraints and \(F(Y)\) is the objective vector with \(m\) objective functions. The goal of multi-objective optimization is to obtain a set of Pareto optimal solutions that exhibits good convergence and diversity characteristics.
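The notion of Pareto optimality used here rests on the dominance relation between objective vectors. The following minimal sketch assumes that all objectives are minimized; the helper names are illustrative only.

```python
def dominates(a, b):
    """Return True if objective vector a Pareto-dominates b (minimization assumed)."""
    no_worse = all(x <= y for x, y in zip(a, b))
    strictly_better = any(x < y for x, y in zip(a, b))
    return no_worse and strictly_better


def non_dominated(points):
    """Return the points that are not dominated by any other point in the list."""
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    candidates = [[1, 4], [2, 2], [4, 1], [3, 3]]
    print(non_dominated(candidates))
```

In this toy set, `[3, 3]` is dominated by `[2, 2]`, so only the remaining three vectors form the non-dominated set.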

Evolutionary algorithms have been among the most widely used methods for solving such multi-objective optimization problems over the past two decades, since they are simple, require little prior knowledge and have strong potential for global search. Nondominated sorting genetic algorithm II (NSGA-II)6 and Strength Pareto evolutionary algorithm (SPEA2)7 are popular elitism based multi-objective evolutionary algorithms. As stated earlier, the performance of an EA can be affected if the noise level is high.

Existing techniques for optimization problems with a noise factor can be categorized into averaging, ranking and modeling based methods. Averaging based methods are further divided into explicit and implicit averaging. Explicit averaging evaluates a solution multiple times and takes the average as the objective function value. Implicit averaging increases the population size to reduce the impact of noise. Ranking based methods are grouped into probabilistic ranking and clustering based ranking. In probabilistic ranking, the process of selecting fitter individuals is modified: instead of the conventional dominance relation based selection operator, a probabilistic dominance factor, which estimates the probability that one solution dominates another, is used to mitigate the effect of noise. Clustering based ranking estimates a clustering radius in order to select fitter solutions. In modeling based methods, a model is derived from a solution set, on which the impact of noise can be smaller than on a single solution.

Given such a wide range of algorithms for noisy optimization problems, the limitations of each method must also be considered. In general, averaging based methods are computationally expensive, since they require a high number of function evaluations. In ranking based methods, the results may be inaccurate since dominance based ranking is replaced with probabilistic or other alternative techniques. Modeling based algorithms may not be applicable to a wide range of optimization problems and may suit only problems with specific characteristics.

Motivated by the above study, we propose a Differential Evolution based noise handling optimization algorithm (NDE) to optimize noisy bi-objective optimization problems. The optimizer used is FAMDE-DC8, as it is robust and efficient in handling optimization problems of varied characteristics. The main contributions are:

- A Differential Evolution based noise handling optimization algorithm (NDE) is developed using the FAMDE-DC algorithm as the base optimizer, combined with a noise handling method.

- The adaptive switching technique9 is extended so that the denoising method is applied only when the noise factor is high. An explicit averaging based denoising method is used for handling noise.

- To improve the exploitation properties of the NDE algorithm, a restricted local search technique is proposed.

- The performance of the NDE algorithm is evaluated experimentally. Benchmark optimization problems from the DTLZ and WFG suites are used with added noise. The modified inverted generational distance and hypervolume indicators are used for performance evaluation. The results are compared with other algorithms, and non-parametric statistical tests are conducted to strengthen the findings.

The paper is organized as follows. Related work is discussed in section “Related works”. The FAMDE-DC optimizer and the technique for measuring the noise strength are described in section “Preliminaries”. The proposed NDE algorithm is detailed in section “Proposed algorithm”. The experimental setup, test problems, performance metrics and results are presented in section “Experimentation”. The conclusions are given in section “Conclusions”. The notations used in this work and their expansions are listed in Table 1.

Related works

The Differential Evolution algorithm10 is a robust single-objective optimization algorithm that is simple to extend. Several researchers have extended DE to solve MOPs. In11, the authors introduced a DE algorithm based on the Pareto dominance approach for multi-objective problems, named the Pareto-frontier differential evolution algorithm (PDE). In Differential evolution for multi-objective optimization (DEMO)12, the base DE algorithm is combined with Pareto ranking and crowding distance based sorting to handle multi-objective optimization problems. The DEMO algorithm is further extended by13 to solve many-objective optimization problems, using correlation-based ordering of the objectives to select a subset of conflicting objectives; the resulting algorithm is named \(\alpha\)-DEMO. In14, the authors presented a multi-objective self-adaptive differential evolution (MOSADE) in which the non-dominated solutions are retained in an external elitist archive and diversity is further improved using a crowding entropy measure. The FAMDE-DC algorithm8 performs differential evolution based multi-objective optimization by controlling the population diversity through self-adaptation, using a fuzzy system, of the trial vector generation strategies and the control parameters. In15, the authors proposed a self-adaptive trajectory optimization method for a UAV based mobile edge computing system. The Differential Evolution algorithm has also been improved using a distance indicator and a two-stage mutation strategy to optimize multimodal multi-objective problems16. The above works show the importance and potential of these algorithms in solving optimization problems.

Some existing multi-objective evolutionary algorithms developed for noisy optimization problems are summarized next. In16, the authors used an iterative resampling procedure to lower the effect of noise, adapting the number of samples per solution to the current noise factor in the search space. Two approaches to uncertainty reduction, resampling and increasing the population size17, have been applied to an optimization problem in the feedback control of combustion. Explicit averaging and modeling based denoising9 is used to reduce noise, and the authors proposed an adaptive switch strategy that chooses the noise treatment and its type based on the effect of noise. In18, the population size is increased or decreased based on the objective function values observed over a predefined number of iterations. In the population size control based evolution strategy pcCMSA-ES19, linear regression and a hypothesis test are used to detect the effect of noise, upon which the population size is varied. In20, the learning rate parameter is adapted based on the noise ratio; empirically, this learning rate adaptation performs better than resampling or increasing the sample size, but the convergence rate is not optimal.

Stochastic and significance based dominance methods are used for solution selection to reduce noise21. Probability based ranking is used to select the fitter solutions under noise22. A clustering based ranking scheme23 is used to handle noisy optimization problems. An algorithm based on the restricted Boltzmann machine is used to build a probabilistic model and is hybridized with a particle swarm algorithm to handle noisy optimization problems24. A regularity model is combined with the NSGA-II algorithm for denoising25. An adaptive switch strategy9 is introduced to switch between denoising techniques, namely averaging and modeling methods, based on noise strength measurements. In26, the authors used radial basis function networks as the denoising method.

In Filters based NSGA-II (FNSGA-II)27, mean and Wiener filters are combined with the optimization algorithm to handle noise in images and signals. These filters help to reduce the noise factor while balancing convergence and diversity. A noise handling method for surrogate assisted evolutionary algorithms28 using radial basis functions has been proposed, in which sampling strategies are chosen based on convergence and diversity characteristics using an adaptive switch technique. A Gaussian and regularity model based NSGA-II algorithm named GMRM-NSGA-II has been suggested to handle noisy multi-objective optimization problems. The population is divided into subpopulations and the two models are applied to each subpopulation to improve convergence and diversity30.

A summary of the literature review is given in Table 2.

Preliminaries

The base optimizer FAMDE-DC and the technique for measuring the noise strength are presented in this section.

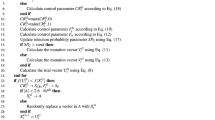

FAMDE-DC algorithm

FAMDE-DC8 is a Differential Evolution (DE) based multi-objective optimization algorithm. The crossover rate \((CR)\), a vital control parameter, is self-adapted using a fuzzy system in order to improve the diversity of the population. A pool of trial vector generation strategies29 is used to generate trial vectors, and the strategies are self-adapted based on their success index in previous generations, which indicates how successful a strategy is at generating solutions that enter the next generation after the selection process. Fitter solutions are selected through fast non-dominated sorting6, improved with controlled elitism30 and dynamic crowding distance31 techniques. Algorithm 1 shows the steps of the FAMDE-DC algorithm, which are detailed below.

Initialization

The initial population of size \(NP\) is generated randomly within the boundary limits of the search space. Next, the control parameters \(CR\) and \(F\) of the DE algorithm are initialized. The learning period \((LE)\) is set to 50.

Fitness evaluation

The objective function values are evaluated for all the solutions in the population.

Crossover rate adaptation

The crossover rate control parameter is adapted using a fuzzy inference system to control the diversity of the population. After the fitness function evaluation, the population diversity of the current generation \((popdiversity)\) is estimated using the distance-to-average-point technique32. The reference diversity variable \(\left(refdiversity\right)\) is set to 0.15. The difference between the current population diversity and the reference diversity is computed and given as the input to a single-input, single-output fuzzy inference system. The fuzzy system maps this input through its implication rule set and outputs the change required in the crossover rate in order to improve the population diversity. This change factor is used when generating the crossover rate value, so the crossover rate is self-adapted.
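The input to the fuzzy system can be sketched as follows. The sketch assumes the commonly used form of the distance-to-average-point measure, in which the mean Euclidean distance of the individuals to the population centroid is normalized by the length of the search space diagonal. The sign convention of the difference (current minus reference) and all function names are assumptions; the fuzzy rule set itself is not reproduced here.

```python
import math

REF_DIVERSITY = 0.15  # refdiversity, as stated in the text


def distance_to_average_point(population, lower, upper):
    """Mean distance to the centroid, normalized by the search space diagonal."""
    n = len(population)
    dim = len(lower)
    centroid = [sum(ind[j] for ind in population) / n for j in range(dim)]
    diagonal = math.sqrt(sum((u - l) ** 2 for l, u in zip(lower, upper)))
    total = sum(math.dist(ind, centroid) for ind in population)
    return total / (diagonal * n)


def diversity_error(population, lower, upper, ref=REF_DIVERSITY):
    """Input to the fuzzy system: current diversity minus reference diversity (assumed sign)."""
    return distance_to_average_point(population, lower, upper) - ref


if __name__ == "__main__":
    pop = [[0.0, 0.0], [1.0, 1.0]]
    print(diversity_error(pop, lower=[0.0, 0.0], upper=[1.0, 1.0]))
```

A negative error would indicate that the population is less diverse than the reference level, which is the situation in which the crossover rate adaptation is expected to act.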

Trial vector generation

Various strategies are widely used in DE based algorithms for trial vector generation. The performance of a strategy varies with the optimization problem to which it is applied, and even across the stages of evolution, so choosing a strategy for a particular optimization problem by trial and error is time consuming. Therefore, a strategy pool with four trial vector generation strategies is used29. During the initial learning period \((LE)\) the strategies are chosen with equal probability, and later they are chosen based on their success index values. The success index of a strategy estimates the percentage of solutions generated with that strategy that are chosen for the next generation after the selection process. The crossover rate \((CR)\) is drawn from a normal distribution \(N(Mean,Std)\), where the crossover rate mean \((CRm)\) is initially set to 0.5 and is later adapted using the population diversity and the success index. The standard deviation is set to 0.1. The scaling factor \((F)\) is drawn from a normal distribution with mean 0.5 and standard deviation 0.3.
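The strategy selection and parameter sampling described above can be sketched as follows. Several details are assumptions rather than the authors' exact procedure: the success index is taken as the ratio of successful to attempted trial vectors and normalized into selection probabilities, \(CR\) is clipped to [0, 1], and non-positive \(F\) values are redrawn. The four strategies themselves are not specified in this text, so they are represented only by their indices.

```python
import random

LEARNING_PERIOD = 50  # LE, as stated in the text


def strategy_probabilities(successes, attempts, generation, learning_period=LEARNING_PERIOD):
    """Equal probabilities during the learning period, success-proportional afterwards."""
    k = len(successes)
    uniform = [1.0 / k] * k
    if generation < learning_period:
        return uniform
    rates = [s / a if a > 0 else 0.0 for s, a in zip(successes, attempts)]
    total = sum(rates)
    if total == 0.0:
        return uniform  # no strategy has succeeded yet, fall back to equal probability
    return [r / total for r in rates]


def pick_strategy(probabilities, rng=random):
    """Draw the index of one strategy according to the given probabilities."""
    return rng.choices(range(len(probabilities)), weights=probabilities, k=1)[0]


def sample_control_parameters(cr_mean=0.5, rng=random):
    """Draw CR ~ N(CRm, 0.1) and F ~ N(0.5, 0.3); clipping and redraw are assumptions."""
    cr = min(1.0, max(0.0, rng.gauss(cr_mean, 0.1)))
    f = rng.gauss(0.5, 0.3)
    while f <= 0.0:
        f = rng.gauss(0.5, 0.3)
    return cr, f


if __name__ == "__main__":
    rng = random.Random(0)
    probs = strategy_probabilities([5, 5, 0, 0], [10, 10, 10, 10], generation=60)
    print(probs, pick_strategy(probs, rng), sample_control_parameters(rng=rng))
```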

Selection

The trial and target vectors are combined into a population of \(2NP\) solutions. The fast non-dominated sorting6 technique is used to split the solutions into front levels based on non-domination. Solutions are then selected using the controlled elitism30 and dynamic crowding distance31 techniques, which ensure that solutions from all the fronts are selected in order to improve the lateral diversity of the Pareto front.
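The front-splitting step can be sketched with the standard fast non-dominated sorting procedure. The sketch assumes minimization and returns lists of indices into the combined population; controlled elitism and dynamic crowding distance are not included.

```python
def dominates(a, b):
    """Return True if objective vector a Pareto-dominates b (minimization assumed)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objs):
    """Split solution indices into fronts; the first front holds the non-dominated solutions."""
    n = len(objs)
    dominated = [[] for _ in range(n)]  # dominated[i]: indices of solutions dominated by i
    counts = [0] * n                    # counts[i]: number of solutions that dominate i
    fronts = [[]]
    for i in range(n):
        for j in range(n):
            if dominates(objs[i], objs[j]):
                dominated[i].append(j)
            elif dominates(objs[j], objs[i]):
                counts[i] += 1
        if counts[i] == 0:
            fronts[0].append(i)
    k = 0
    while fronts[k]:
        next_front = []
        for i in fronts[k]:
            for j in dominated[i]:
                counts[j] -= 1
                if counts[j] == 0:
                    next_front.append(j)
        fronts.append(next_front)
        k += 1
    return fronts[:-1]  # drop the trailing empty front


if __name__ == "__main__":
    combined = [[1, 4], [2, 2], [4, 1], [3, 3], [5, 5]]
    print(fast_non_dominated_sort(combined))
```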

Measuring noise strength

Resampling is a common method for measuring and quantifying the strength of noise. The steps involved in estimating the noise strength are given below9,33. The first step is to determine the number of solutions to be resampled in order to observe the noise strength, which is computed using Eq. 3.

\[{N}_{res}={f}_{pro}({\lambda }_{res}\times NP) \quad (3)\]

where \(NP\) is the size of the population, \({\lambda }_{res}\) is the resampling ratio, which limits the percentage of solutions to be re-evaluated, \({N}_{res}\) is the number of solutions to be resampled, and \({f}_{pro}\) is the probabilistic rounding function.
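A minimal sketch of this first step follows. It assumes that the resampling ratio is multiplied by the population size before rounding, and that the probabilistic rounding function rounds up with probability equal to the fractional part and down otherwise; both are assumptions about the form of Eq. 3, and the function names are illustrative.

```python
import math
import random


def probabilistic_round(value, rng=random):
    """Round up with probability equal to the fractional part, otherwise round down."""
    base = math.floor(value)
    fraction = value - base
    return base + 1 if rng.random() < fraction else base


def resample_count(pop_size, resample_ratio, rng=random):
    """Number of solutions to resample, N_res, from NP and the resampling ratio."""
    return probabilistic_round(resample_ratio * pop_size, rng)


if __name__ == "__main__":
    rng = random.Random(0)
    # With NP = 25 and a ratio of 0.1 the expected count is 2.5,
    # so individual draws are either 2 or 3.
    print([resample_count(25, 0.1, rng) for _ in range(10)])
```

Rounding probabilistically keeps the expected number of resampled solutions equal to the unrounded product, which matters when that product is small.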

After the number of solutions to be resampled has been determined, the second step is to randomly choose the \({N}_{res}\) solutions to be resampled. The third step is to resample the solutions; the resampling technique differs depending on whether explicit or implicit averaging is used for denoising. Resampling yields two sets. For instance, let the solution with noise included be \({x}_{i}^{\prime}={x}_{i}+N(0,{\sigma }^{2})\), where \(i\in \{1,2,\dots ,d\}\) and \(d\) represents the problem dimension. The sets before and after resampling are \({\mathcal{L}}_{1}=\{{\widetilde{f}}_{1}, {\widetilde{f}}_{2},\dots ,{\widetilde{f}}_{{N}_{res}},{\widetilde{f}}_{{N}_{res}+1},\dots ,{\widetilde{f}}_{N}\}\) and \({\mathcal{L}}_{2}=\{{\widetilde{f}}_{1}^{\prime}, {\widetilde{f}}_{2}^{\prime},\dots ,{\widetilde{f}}_{{N}_{res}}^{\prime},{\widetilde{f}}_{{N}_{res}+1},\dots ,{\widetilde{f}}_{N}\}\).

The fourth step is to calculate the rank change for each resampled solution, as given in Eq. 4.

where \(rank\left({\widetilde{f}}_{i}\right)\) and \(rank\left({\widetilde{f}}_{i}^{\prime}\right)\) are the ranks of the respective solutions in the set \(\mathcal{L}\). The \(\text{sign}()\) function returns +1, -1 or 0 depending on whether its argument is positive, negative or zero; it eliminates the change of rank created by the resampled solution itself. The rank change falls within the range \(\left|{\Delta }_{i}\right| \in \{0,1,2,\dots ,2N-2\}\).
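The rank change computation can be sketched for scalar fitness values. The sketch assumes that Eq. 4 has the form \({\Delta }_{i}=rank({\widetilde{f}}_{i}^{\prime})-rank({\widetilde{f}}_{i})-\text{sign}(rank({\widetilde{f}}_{i}^{\prime})-rank({\widetilde{f}}_{i}))\), with ranks taken in the combined set of original and resampled values; this form is inferred from the description above and is not confirmed by the text. For simplicity, every solution is treated as resampled, and ties are broken by listing order.

```python
def sign(value):
    """Return +1, -1 or 0 for a positive, negative or zero argument."""
    return (value > 0) - (value < 0)


def rank_changes(original, resampled):
    """Rank change of each solution between its original and resampled fitness value."""
    tagged = [(v, 0, i) for i, v in enumerate(original)]
    tagged += [(v, 1, i) for i, v in enumerate(resampled)]
    # Rank all 2N values together; rank 1 is the smallest (best) value.
    rank = {(src, i): r for r, (_, src, i) in enumerate(sorted(tagged), start=1)}
    deltas = []
    for i in range(len(original)):
        diff = rank[(1, i)] - rank[(0, i)]
        deltas.append(diff - sign(diff))  # remove the shift caused by the solution's own copy
    return deltas


if __name__ == "__main__":
    # Small perturbations leave the ordering intact, so no rank change is reported.
    print(rank_changes([1.0, 2.0, 3.0], [1.1, 2.1, 3.1]))
    # A large perturbation of the first solution moves it past the second one.
    print(rank_changes([1.0, 2.0], [3.0, 2.1]))
```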

The last step is to measure the noise strength \(s\), as given in Eq. 5.

where the rank change \({\Delta }_{i}\) is compared with \({\Delta }_{\theta }^{lim}\), a limit based on the acceptance threshold \(\theta\) that denotes the \(\theta /2\)-th percentile of the sequence of possible rank changes \({\mathbb{S}}=\{\left|1-R\right|,\left|2-R\right|, \dots ,|2N-1-R|\}\). To obtain it, the sequence \({\mathbb{S}}\) is generated, the sequence \({\mathbb{S}}^{\prime}\) is obtained by sorting \({\mathbb{S}}\), and finally the \(\theta /2\)-th percentile is calculated from \({\mathbb{S}}^{\prime}\). \(\mathbb{1}\) is the indicator function, which returns 1 if its argument is true and 0 otherwise.

Thus, the noise strength \(s\) quantifies the level of noise in the objective function. If \(s<0\), the noise is within the acceptance limit; otherwise, the resampled solutions have changed rank beyond the acceptable limit and the noise has a strong effect on the objective function.

Proposed algorithm

The proposed Differential Evolution based noise handling optimization algorithm (NDE) is detailed in this section. The algorithm and its flowchart are given in Algorithm 2 and Fig. 1, respectively.

Initialization

An initial population of size \(NP\) is generated randomly.

Trial vector generation

Trial vectors are generated using a pool of trial vector generation strategies8,29, as in the base optimizer FAMDE-DC. The strategies are adapted based on the success index, and the crossover rate is adapted using the fuzzy system.

Noise estimation and reduction

The strength of the noise is estimated using the technique discussed in section “Measuring noise strength”, with the following modifications to make it applicable to multi-objective optimization problems. The \(NP\) trial vectors are evaluated with the fast non-dominated sorting technique6 and categorized into non-dominated sets, or fronts, based on the domination count. The fitness of each solution is thus given by its front. The solutions resampled to measure the noise strength are selected randomly from the first front; if the first front does not contain enough solutions, solutions from subsequent fronts are chosen until the required \({N}_{res}\) solutions are selected. If the estimated noise strength \(s\) is less than 0, the effect of noise is minimal and denoising is not required. Otherwise, the noise is reduced using the denoising method. The process for noise strength measurement and reduction is given in Algorithm 3.

An explicit averaging based denoising method is applied to reduce the strength of noise. This method uses resampling to denoise the objective function values of the selected solutions. In general, resampling re-evaluates every solution a specified number of times and takes the average of the obtained values as the objective function value. Resampling only a selected solution set has been shown to significantly improve denoising performance, and it uses the function evaluations spent on resampling more economically9.
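Explicit averaging can be sketched as follows. The noisy objective used in the example is a hypothetical toy function with additive Gaussian noise; the number of samples is a free parameter of the sketch.

```python
import random


def explicit_average(noisy_objective, x, samples):
    """Re-evaluate x `samples` times and return the per-objective mean."""
    evaluations = [noisy_objective(x) for _ in range(samples)]
    m = len(evaluations[0])
    return [sum(e[k] for e in evaluations) / samples for k in range(m)]


def make_noisy_objective(sigma, rng):
    """Build a hypothetical noisy bi-objective function for illustration."""
    def noisy(x):
        f1 = sum(v * v for v in x)
        f2 = sum((v - 1.0) ** 2 for v in x)
        return [f1 + rng.gauss(0.0, sigma), f2 + rng.gauss(0.0, sigma)]
    return noisy


if __name__ == "__main__":
    noisy = make_noisy_objective(sigma=0.1, rng=random.Random(0))
    x = [0.5, 0.5]  # the noise-free objective vector at x is [0.5, 0.5]
    print(noisy(x))                          # a single noisy evaluation
    print(explicit_average(noisy, x, 2))     # two samples
    print(explicit_average(noisy, x, 200))   # many samples, closer to [0.5, 0.5]
```

Each additional sample costs one function evaluation per selected solution, which is why restricting resampling to a subset of the population keeps the evaluation budget under control.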

In the proposed NDE algorithm, a set of solutions is selected for resampling and each solution in the set is resampled twice. Fast non-dominated sorting is applied to the trial vectors and the solutions are categorized into fronts. The solutions in the first front form the set of non-dominated solutions, and the solutions for resampling are selected from this front. During the early stage of evolution the first front contains few solutions, while at later stages it contains more non-dominated solutions. Thus, the solutions for resampling are chosen from the non-dominated solution set by forming a hyper box9 using the ideal point \(({pop}_{min})\) and the nadir point \(({pop}_{max})\). These ideal and nadir points are chosen as in Eq. 6.

\(\forall i\in \{1,2,\dots ,m\}\), where \(m\) is the number of objectives and \(R\) is the number of non-dominated solutions in the first front obtained after non-dominated sorting. The solutions inside the hyper box are chosen for resampling.
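The hyper box construction can be sketched as follows, assuming that the ideal point takes the per-objective minimum and the nadir point the per-objective maximum over the \(R\) first-front solutions. This reading of Eq. 6 is an assumption, and the function names are illustrative.

```python
def hyper_box(front_objs):
    """Return (pop_min, pop_max): per-objective minimum and maximum over the first front."""
    m = len(front_objs[0])
    pop_min = [min(f[i] for f in front_objs) for i in range(m)]
    pop_max = [max(f[i] for f in front_objs) for i in range(m)]
    return pop_min, pop_max


def inside_box(objs, pop_min, pop_max):
    """Indices of the solutions whose objective vectors lie inside the hyper box."""
    return [
        idx
        for idx, f in enumerate(objs)
        if all(lo <= v <= hi for v, lo, hi in zip(f, pop_min, pop_max))
    ]


if __name__ == "__main__":
    first_front = [[1, 4], [2, 2], [4, 1]]
    all_objs = [[1, 4], [2, 2], [4, 1], [3, 3], [5, 5]]
    pop_min, pop_max = hyper_box(first_front)
    print(pop_min, pop_max, inside_box(all_objs, pop_min, pop_max))
```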

Selection

To select solutions for the next generation, the target and trial vectors are combined to form a set of \(2NP\) solutions, where \(NP\) is the size of the population. This combined population is subjected to fast non-dominated sorting. The required \(NP\) solutions are selected from the first front, which is the set of non-dominated solutions. If the first front does not contain enough solutions, solutions from subsequent fronts are used. If a front contains more solutions than required, the Dynamic Crowding Distance (DCD)30 technique is used to select the required solutions from that front. DCD maintains uniform diversity across the selected solutions.
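The truncation of an over-full front can be illustrated with the standard NSGA-II crowding distance. This is a simpler stand-in and not the Dynamic Crowding Distance technique used by the authors, which is not specified in this text; the function names are illustrative.

```python
def crowding_distance(front_objs):
    """Standard crowding distance for each solution of one front (not the dynamic variant)."""
    n = len(front_objs)
    if n == 0:
        return []
    m = len(front_objs[0])
    distance = [0.0] * n
    for k in range(m):
        order = sorted(range(n), key=lambda i: front_objs[i][k])
        low, high = front_objs[order[0]][k], front_objs[order[-1]][k]
        # Boundary solutions are always kept.
        distance[order[0]] = distance[order[-1]] = float("inf")
        if high == low:
            continue
        for pos in range(1, n - 1):
            gap = front_objs[order[pos + 1]][k] - front_objs[order[pos - 1]][k]
            distance[order[pos]] += gap / (high - low)
    return distance


def truncate_front(front_objs, keep):
    """Indices of the `keep` least crowded solutions of the front, in ascending index order."""
    distance = crowding_distance(front_objs)
    order = sorted(range(len(front_objs)), key=lambda i: distance[i], reverse=True)
    return sorted(order[:keep])


if __name__ == "__main__":
    front = [[0.0, 4.0], [1.0, 3.0], [1.1, 2.9], [4.0, 0.0]]
    print(truncate_front(front, keep=3))
```

In the toy front, the second and third solutions are close together, so one of them is discarded when only three solutions can be kept.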

Local search

The function evaluations consumed by the explicit averaging based denoising method may weaken the convergence of the algorithm. Moreover, the self-adaptation of the crossover rate \(CR\) through fuzzy population diversity control may also affect convergence. Thus, to improve convergence, solutions in promising search regions are exploited using a restricted local search algorithm. A local search based optimization method searches the proximity of the current solution chosen for exploitation for a locally better solution, and if such a solution is found it is added to the population, which improves the convergence rate.

In the NDE algorithm, we propose a restricted local search technique. The local search is performed at regular prespecified intervals \({LS}_{Int}\) and for a limited number of function evaluations \({LS}_{Feval}\), which prevents excessive exploitation. The current population best solution is chosen from the first front (the set of non-dominated solutions). The proximity of the chosen solution is searched \({LS}_{Feval}\) times, and if the search identifies a solution that dominates the current population best solution or any other solution in the first front, the identified solution replaces a random solution in the population. Thus, this restricted local search procedure, applied once every \({LS}_{Int}\) generations, tries to find a better solution through exploitation and to improve the convergence rate. The \({LS}_{Int}\) and \({LS}_{Feval}\) values are tuned by trial and error, and the best parameter values are used for further experimentation. The restricted local search method is given in Algorithm 4.
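A minimal sketch of one restricted local search call is given below. Several choices are assumptions and are not taken from Algorithm 4: the best solution is picked at random from the first front, neighbours are generated by a Gaussian perturbation whose width is a fraction `step` of each variable range, and minimization is assumed. A caller would invoke the function once every \({LS}_{Int}\) generations.

```python
import random


def dominates(a, b):
    """Return True if objective vector a Pareto-dominates b (minimization assumed)."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def restricted_local_search(population, objs, evaluate, first_front,
                            lower, upper, ls_feval, step=0.05, rng=random):
    """Spend at most ls_feval evaluations near one first-front solution.

    population and objs are modified in place; the number of replacements is returned.
    """
    best = rng.choice(first_front)  # assumed choice of the current best solution
    replaced = 0
    for _ in range(ls_feval):
        base = population[best]
        # Gaussian perturbation clipped to the variable bounds (assumed neighbourhood).
        candidate = [
            min(u, max(l, v + rng.gauss(0.0, step * (u - l))))
            for v, l, u in zip(base, lower, upper)
        ]
        candidate_obj = evaluate(candidate)
        if any(dominates(candidate_obj, objs[i]) for i in first_front):
            victim = rng.randrange(len(population))  # replace a random solution
            population[victim] = candidate
            objs[victim] = candidate_obj
            replaced += 1
    return replaced


if __name__ == "__main__":
    rng = random.Random(0)

    def evaluate(x):
        return [sum(v * v for v in x), sum((v - 1.0) ** 2 for v in x)]

    pop = [[rng.random(), rng.random()] for _ in range(6)]
    objs = [evaluate(p) for p in pop]
    front = [i for i in range(6) if not any(dominates(objs[j], objs[i]) for j in range(6))]
    print(restricted_local_search(pop, objs, evaluate, front, [0.0, 0.0], [1.0, 1.0],
                                  ls_feval=10, rng=rng))
```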

Experimentation

To evaluate the performance of the proposed NDE algorithm, the DTLZ34 and WFG35 test problems are used. These test problems are multi-objective unconstrained optimization problems, and varying noise levels (0.1, 0.2, 0.5) are used for experimentation, as detailed in section "Test problems". The performance metrics used are the modified inverted generational distance (IGD+)36 and hypervolume (HV)37, which assess both the convergence and diversity properties of the attained solution set; the metrics are detailed in section "Performance metrics".

The performance of the proposed NDE algorithm is compared with six multi-objective evolutionary algorithms, namely the two stage evolutionary algorithm (TSEA)9, the two archive algorithm (Two_Arch2)38, the regularity model in NSGA-II (RM-NSGA-II)25, the Noise-tolerant Strength Pareto Evolutionary Algorithm (NTSPEA)2, the rolling tide evolutionary algorithm (RTEA)39 and Filters based NSGA-II (FNSGA-II)27. Except for Two_Arch2, all of these algorithms were developed to solve noisy optimization problems. The initial values of the parameters used in the proposed NDE algorithm are listed in Table 3.

Test problems

Sixteen test problems are used to evaluate the performance of the proposed algorithm. All the problems are multi-objective unconstrained optimization problems. Seven problems (DTLZ1 to DTLZ7) are taken from the DTLZ test suite34 and nine problems (WFG1 to WFG9) from the WFG test suite35. The number of decision variables and objectives can be scaled as required. These sixteen problems exhibit different properties (concave, multimodal, biased, etc.), which makes them suitable for testing the performance of the proposed optimization algorithm. Three objective problems are considered for investigation.

The properties of these problems are listed in Table 4. These problems are valuable for evaluating optimization algorithms because their true Pareto optimal fronts are available. Further, in the DTLZ problems the number of objectives is scalable, DTLZ5 and DTLZ6 have degenerate Pareto fronts, and distance functions are included in all the DTLZ test problems. The WFG problems have complex characteristics: many are non-separable, a few are deceptive, and some have Pareto fronts of mixed shape. The availability of problems with such complex characteristics, together with their true optimal fronts, contributes significantly to experimentation in the optimization field.

Performance metrics

The performance of the proposed algorithm is investigated using two performance metrics, modified Inverted Generational Distance (IGD+)36 and HyperVolume (HV)37.

IGD+ is a modified inverted generational distance metric that quantifies both the convergence and diversity characteristics of an optimization algorithm. It estimates the distance between the attained and true Pareto fronts; a lower IGD+ value indicates a better attained solution set. It is calculated as given in Eq. 7.

\[\text{IGD}^{+}(A,B)=\frac{1}{|A|}\sum_{x\in A}d(x,B) \quad (7)\]

where \(A\) represents the reference points on the true front and \(B\) represents the solutions in the attained Pareto front. \(d(x,B)\) is the distance from \(x\) to the nearest solution in the attained front, where the distance from \(x\) to a solution \(y\) in \(B\) is \(d\left(x,y\right)=\sqrt{\sum_{j=1}^{n}{\left(\max \{{y}_{j}-{x}_{j},0\}\right)}^{2}}\) and \(n\) represents the number of objectives.
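The metric can be sketched directly from these definitions. The sketch assumes minimization and that IGD+ is the mean, over the reference points, of the modified distance to the nearest attained solution.

```python
import math


def igd_plus_distance(x, y):
    """Modified distance from reference point x to attained solution y (minimization)."""
    return math.sqrt(sum(max(yj - xj, 0.0) ** 2 for xj, yj in zip(x, y)))


def igd_plus(reference, attained):
    """Mean over reference points of the modified distance to the nearest attained solution."""
    total = sum(min(igd_plus_distance(x, y) for y in attained) for x in reference)
    return total / len(reference)


if __name__ == "__main__":
    reference = [[0.0, 1.0], [1.0, 0.0]]
    print(igd_plus(reference, reference))                 # attained set equals the reference set
    print(igd_plus(reference, [[0.5, 1.5], [1.5, 0.5]]))  # attained set shifted away from the front
```

Only the components in which the attained solution is worse than the reference point contribute to the distance, so an attained solution that weakly dominates a reference point contributes zero.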

The HV performance metric evaluates the convergence and diversity properties of the obtained solutions. It calculates the hypervolume between the attained front and a given reference point. The formula for HV is given in Eq. 8. A higher HV value indicates that better solutions are attained.

where \(S\) represents the attained solution set and \(Le\) denotes the Lebesgue measure. \(n\) denotes the number of objective functions. \(Re=({re}_{1}, {re}_{2},\dots ,{re}_{m})\) is the reference point, whose value on every objective is set to its maximum, (1,1). Before the HV metric is calculated, the objective functions are normalized using min-max normalization.
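For two objectives, the hypervolume with reference point (1,1) reduces to an area that can be computed with a sweep over the sorted solutions. The sketch below assumes minimization; normalizing with the minimum and maximum of the attained set itself is an assumption, since the bounds used by the authors are not stated here.

```python
def min_max_normalize(points):
    """Scale each objective to [0, 1] using the minimum and maximum of the given set."""
    m = len(points[0])
    low = [min(p[k] for p in points) for k in range(m)]
    high = [max(p[k] for p in points) for k in range(m)]
    return [
        tuple((p[k] - low[k]) / (high[k] - low[k]) if high[k] > low[k] else 0.0
              for k in range(m))
        for p in points
    ]


def hypervolume_2d(points, ref=(1.0, 1.0)):
    """Area dominated by the points and bounded by ref (both objectives minimized)."""
    inside = sorted(p for p in points if p[0] <= ref[0] and p[1] <= ref[1])
    area = 0.0
    best_f2 = ref[1]
    for f1, f2 in inside:  # ascending in the first objective
        if f2 < best_f2:   # the point adds a new horizontal strip
            area += (ref[0] - f1) * (best_f2 - f2)
            best_f2 = f2
    return area


if __name__ == "__main__":
    attained = [(2.0, 10.0), (4.0, 6.0), (6.0, 2.0)]
    print(hypervolume_2d(min_max_normalize(attained)))
```

For more than two objectives a dedicated hypervolume algorithm is needed; the sweep above does not generalize directly.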

Results and discussion on DTLZ problems

The mean IGD+ values for the DTLZ problems are given in Table 5 and the Pareto fronts are given in Fig. 2. The HV results are listed in Table 6. Overall, the NDE algorithm performs better than all the compared algorithms. DTLZ1 is a linear, multimodal test problem, and the chance of getting stuck at locally optimal solutions is higher for such problems; the obtained Pareto front is shown in Fig. 2a. The NDE algorithm performs best, followed by TSEA and the other algorithms. This is attributed to the fuzzy system based control parameter adaptation, which regulates the population diversity, and to the applied denoising method. DTLZ2 is a concave, unimodal problem on which noise is likely to affect performance. NDE outperforms the other algorithms, and the attained front is shown in Fig. 2b. The effectiveness of the averaging based denoising method is evident from these results. DTLZ3 is also multimodal, and the proposed NDE algorithm again performs better; the attained front is given in Fig. 2c.

Fig. 2. Obtained Pareto fronts for the DTLZ problems: (a) DTLZ1, (b) DTLZ2, (c) DTLZ3, (d) DTLZ4, (e) DTLZ5, (f) DTLZ6, (g) DTLZ7.

DTLZ4 is a modification of DTLZ2, and its solutions are usually densely populated near the planes. The NTSPEA algorithm performs best on this problem, followed by NDE and then the other methods. It can be observed that the NTSPEA algorithm associates a survival time factor with every solution in the population and that the DTLZ4 problem is sensitive to the initial population; the attained front is given in Fig. 2d. The test problems DTLZ5, DTLZ6 and DTLZ7 have irregular characteristics, and the Pareto front of DTLZ7 is discontinuous; the obtained Pareto fronts for these problems are illustrated in Fig. 2e, f and g, respectively. The proposed NDE algorithm attains better results for these three problems. The evolution of the IGD+ and HV values across the function evaluations for the DTLZ2 and DTLZ7 test problems is given in Fig. 3a and b, respectively.

Results and discussion on WFG problems

The WFG test problems are relatively complex compared with the DTLZ problems. The mean values of the IGD+ and HV performance metrics are given in Tables 7 and 8, respectively. The attained Pareto fronts for the WFG problems are given in Fig. 4. WFG1 is a complex test problem with mixed and biased characteristics. On this problem the performance of the proposed NDE algorithm is on par with that of the NTSPEA algorithm and better than that of the remaining algorithms taken for comparison; the obtained Pareto front is illustrated in Fig. 4a. WFG2 is a multimodal test problem on which NDE performs best, and the respective front is given in Fig. 4b. WFG3 is a linear, non-separable test problem; the attained solutions and the mean IGD+ and HV values are better than those of the other methods, and the Pareto front is given in Fig. 4c.

Fig. 4. Obtained Pareto fronts for the WFG problems: (a) WFG1, (b) WFG2, (c) WFG3, (d) WFG4, (e) WFG5, (f) WFG6, (g) WFG7, (h) WFG8, (i) WFG9.

WFG4 is a multi-modal problem with multiple locally optimal solutions. The population diversity control and the appropriate denoising method aid in escaping from such locally optimal solutions, as is evident from the obtained results and the front given in Fig. 4d. WFG5 is a deceptive, separable problem; the attained concave Pareto front and the mean IGD+ and HV values are better than those of the other methods, and the front is illustrated in Fig. 4e. WFG6, WFG8 and WFG9 are non-separable test problems, and WFG9 is multi-modal as well. For WFG6, the performance of NDE and RTEA is on par, followed by the other algorithms, and the attained Pareto front is illustrated in Fig. 4f. For WFG8 and WFG9, the results of the NDE algorithm are promising, and the obtained Pareto fronts are given in Fig. 4h and i, respectively. WFG7 is a unimodal, separable problem on which the proposed NDE algorithm gives the best results, and the Pareto front is given in Fig. 4g. The evolution of the IGD+ and HV values across the function evaluations for the WFG9 and WFG2 test problems is given in Fig. 5a and b, respectively.

Population effect and population diversity analysis

The population plays a vital role in search and optimization algorithms. The initial population is randomly generated within the variable bounds. Along the evolution, fitter solutions are selected and used, which helps to attain optimal or Pareto-optimal solutions. In the proposed research, population diversity plays a key role and is controlled adaptively in order to improve the search performance. The solutions in the population must be diverse enough during the initial stages of evolution to explore the search space well, whereas at later stages the population diversity should be low to favour exploitation. A population that helps to balance the explore-exploit cycle is required to attain optimal or Pareto-optimal solutions. The effect of population diversity in the present research is presented below, and the explore-exploit cycle analysis is presented in the section "Exploration–Exploitation analysis".

To perform the population diversity analysis, the diversity of the population is calculated using the “distance to average point” measure [32], as given in Eq. 9. This measure accounts for the population size, the problem dimension and the search region of the variables, and is therefore used for population diversity estimation.

where \(PP\) represents the population with size \(NP\), \(\left|LenDia\right|\) is the diagonal length of the search space, and \({d}_{i}\) is the problem dimension. \({y}_{ij}\) is the \({j}^{th}\) value of the \({i}^{th}\) solution, and \({\overline{y}}_{j}\) is the \({j}^{th}\) value of the average point \(\overline{y }\).
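The measure described above can be sketched in code as follows. This is a hedged illustration that assumes the commonly used form of the distance-to-average-point measure, that is, the mean Euclidean distance of the solutions to the average point, scaled by the diagonal length of the search space; the function and parameter names are hypothetical.

```python
import math


def population_diversity(population, lower, upper):
    """Distance-to-average-point diversity of a population of real vectors.

    population: list of solutions, each a sequence of `dim` values
    lower, upper: per-variable bounds of the search space
    """
    np_size = len(population)
    dim = len(lower)
    # Diagonal length of the box-shaped search space.
    diag = math.sqrt(sum((u - l) ** 2 for l, u in zip(lower, upper)))
    # Average point of the population, one coordinate per variable.
    mean = [sum(ind[j] for ind in population) / np_size for j in range(dim)]
    # Sum of Euclidean distances from every solution to the average point.
    total = sum(
        math.sqrt(sum((ind[j] - mean[j]) ** 2 for j in range(dim)))
        for ind in population
    )
    return total / (diag * np_size)
```

Under this form, a population collapsed onto a single point has diversity 0, and the two opposite corners of the unit square give a diversity of 0.5.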

Figure 6a and b show the diversity of the population along the iterations for two problems. It is evident that higher diversity is observed during the initial stages of evolution, which improves exploration, and that the diversity value is lower in the later stages, which improves exploitation.

Exploration–Exploitation analysis

To analyse the balancing property of the exploration–exploitation cycle, the exploration and exploitation rates along the evolution are calculated using Eqs. 10 and 11 [40].

where, \(popdiversity\) indicates the population diversity and is calculated using Eq. 9.
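Because Eqs. 10 and 11 are not reproduced in this text, the sketch below assumes a widely used diversity-based formulation in which the exploration percentage is the current diversity relative to the maximum diversity observed over the run, and the exploitation percentage is its complement. The function name and the choice of the run maximum as the normalizer are assumptions, not details confirmed by the source.

```python
def exploration_exploitation(diversity_history):
    """Return per-iteration exploration and exploitation percentages.

    diversity_history: population diversity recorded at every iteration.
    Assumed formulation: exploration = 100 * div / div_max,
    exploitation = 100 * |div - div_max| / div_max.
    """
    div_max = max(diversity_history)
    if div_max == 0:
        raise ValueError("diversity is zero throughout the run")
    exploration = [100.0 * d / div_max for d in diversity_history]
    exploitation = [100.0 * abs(d - div_max) / div_max for d in diversity_history]
    return exploration, exploitation
```

With this formulation the two percentages sum to 100 at every iteration, so a decreasing diversity curve yields falling exploration and rising exploitation.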

The exploration–exploitation graphs for two problems are given in Fig. 7a and b. It can be observed that exploration starts with a higher value and decreases during the later stages, whereas exploitation begins with a smaller value and increases towards the final stages, which ensures the balance between the cycles of exploration and exploitation.

Statistical test results

Statistical analysis through the Wilcoxon signed rank test and the Friedman test [41] is performed to further validate the performance of NDE.

The Wilcoxon signed rank test is a pairwise comparison test used to find whether there is a significant difference between two algorithms. The test is performed using the IBM SPSS package and the significance level \(\alpha\) is set to 0.05. The \(\rho\)-value is computed through the above test, and if the obtained \(\rho\)-value is less than \(\alpha\), the null hypothesis can be rejected, which implies that there is a significant difference between the algorithms taken for evaluation. The test results using the mean HV values with \(\sigma\) value 0.5 obtained through various algorithms for the DTLZ problems are presented in Table 9. The \(\rho\)-value is less than 0.05 (the significance level), so the null hypothesis can be rejected. It is evident that the proposed NDE algorithm is significantly better than the other algorithms taken for comparison.
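The pairwise test described above can be illustrated with a small, self-contained sketch. It computes the signed-rank statistic and an exact two-sided value for paired per-problem scores of two algorithms. This is an assumption-laden illustration, not the SPSS procedure used in the study: zero differences are dropped, tied absolute differences receive average ranks, and the function name is hypothetical.

```python
def wilcoxon_signed_rank(x, y):
    """Exact two-sided Wilcoxon signed-rank test for paired samples x and y."""
    diffs = [a - b for a, b in zip(x, y) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    # Rank the absolute differences; tied values share their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = avg
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    w = min(w_plus, w_minus)
    # Exact null distribution: every rank joins the positive sum with
    # probability 1/2. Ranks are doubled so half-ranks stay integers.
    counts = {0: 1}
    for r in (int(round(2 * rk)) for rk in ranks):
        nxt = {}
        for s, c in counts.items():
            nxt[s] = nxt.get(s, 0) + c
            nxt[s + r] = nxt.get(s + r, 0) + c
        counts = nxt
    tail = sum(c for s, c in counts.items() if s <= 2 * w)
    p_value = min(1.0, 2.0 * tail / 2 ** n)
    return w, p_value
```

For example, when one algorithm is better on all seven problems of a suite with distinct differences, the statistic is 0 and the exact two-sided value is 2/128 = 0.015625, which is below the 0.05 significance level.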

Table 10 gives the Wilcoxon signed rank test results using the mean IGD+ values with \(\sigma\) value 0.5 obtained through various algorithms for the WFG test problems. It is evident that the NDE algorithm is significantly better than the other algorithms taken for comparison, as the \(\rho\)-value is less than 0.05.

The competence of the proposed NDE algorithm is also statistically analysed using the Friedman rank test, which is a multiple comparison test. A joint analysis of the algorithms taken for comparison is performed through this test. The test is performed using the IBM SPSS package and the significance level \(\alpha\) is set to 0.05. The \(\rho\)-value is computed through the above test, and if the obtained \(\rho\)-value is less than \(\alpha\), it implies that there is a significant difference among the algorithms taken for evaluation.
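The ranking step behind the Friedman test can be sketched as follows. The code ranks the algorithms on every problem, averages the ranks, and computes the Friedman chi-square statistic; it is a minimal illustration with a hypothetical function name and without the tie correction that statistical packages may apply.

```python
def friedman_ranks(scores, lower_is_better=True):
    """Mean ranks and Friedman chi-square statistic.

    scores[i][j] is the metric value of algorithm j on problem i.
    Use lower_is_better=True for IGD+ and False for HV.
    """
    n = len(scores)      # number of problems
    k = len(scores[0])   # number of algorithms
    rank_sums = [0.0] * k
    for row in scores:
        keyed = [v if lower_is_better else -v for v in row]
        order = sorted(range(k), key=lambda j: keyed[j])
        i = 0
        while i < k:
            j = i
            # Group tied values so that they share their average rank.
            while j + 1 < k and keyed[order[j + 1]] == keyed[order[i]]:
                j += 1
            avg = (i + j) / 2 + 1
            for t in range(i, j + 1):
                rank_sums[order[t]] += avg
            i = j + 1
    mean_ranks = [s / n for s in rank_sums]
    chi_square = 12.0 * n / (k * (k + 1)) * (
        sum(r * r for r in mean_ranks) - k * (k + 1) ** 2 / 4.0
    )
    return mean_ranks, chi_square
```

The algorithm with the smallest mean rank is ranked first. The statistic is then compared against a chi-square distribution with k - 1 degrees of freedom to obtain the value that is checked against \(\alpha\).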

Table 11 gives the Friedman rank test results using the mean IGD+ values with \(\sigma\) value 0.5 obtained through various algorithms for the DTLZ problems.

The proposed NDE algorithm is ranked first in the above test, and the obtained \(\rho\)-value of 0.0001 is less than the significance level \(\alpha =0.05\), which indicates the significance of the proposed optimization algorithm.

Table 12 gives the Friedman rank test results using the mean HV value results with \(\sigma\) value 0.5 obtained through various algorithms for WFG problems.

The proposed NDE algorithm is again ranked first in the above statistical test, and the obtained \(\rho\)-value of 0.00008 is less than the significance level \(\alpha =0.05\), which shows the significance of the proposed optimization algorithm.

Experimentation on CEC 2017 test problems

To further investigate the effectiveness of the proposed Differential Evolution based Noise handling Optimization algorithm (NDE), experimentation is conducted on the CEC 2017 test problems [42], which are single objective optimization problems. For this study, the selection process in the proposed NDE algorithm is modified to handle single objective optimization problems. The trial vector generated after the crossover operation is subjected to fitness function evaluation. The fitness function values of the target and trial vectors are compared, and the better solution is chosen as the candidate for the next generation.
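The modified selection step can be sketched as the one-to-one greedy selection that is standard in single objective DE. The sketch assumes minimization and keeps the trial vector on ties, which is a common DE convention rather than a detail stated in the source; the function name is hypothetical.

```python
def greedy_selection(targets, trials, fitness):
    """One-to-one DE selection for a minimization problem.

    targets: current population (target vectors)
    trials: trial vectors produced by mutation and crossover, same order
    fitness: callable returning the objective value of a vector
    """
    next_generation = []
    for target, trial in zip(targets, trials):
        # The trial replaces its own target only if it is not worse.
        if fitness(trial) <= fitness(target):
            next_generation.append(trial)
        else:
            next_generation.append(target)
    return next_generation
```

Because each trial competes only with its own target, the best objective value in the population never gets worse from one generation to the next.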

The CEC 2017 test suite comprises 29 test problems (test problem F2 has been removed from the CEC 2017 suite). The parameter settings follow the technical report and are given in Table 13.

The result analysis is performed by calculating the error value, which is the difference between the best objective function value attained in a run and the true optimal value. This error value is calculated for all 51 runs, and the mean and standard deviation values are recorded. The results are compared with two other algorithms, Effective Butterfly Optimizer using Covariance Matrix Adapted Retreat phase (EBOwithCMAR) [43] and jSO [44], which secured the first and second ranks in the CEC 2017 competition, and are presented in Table 14. The best results are highlighted in boldface. It can be observed that the proposed NDE algorithm performs better on 19 functions, similarly on 3 functions and worse on 7 functions. This shows that the NDE algorithm, with its population diversity control through strategy adaptation, its restricted local search and its noise handling techniques, exhibits robust performance.
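The error statistics described above amount to a short calculation, sketched below. The sample standard deviation is used here as an assumption, since the section does not state which variant is reported, and the function name is hypothetical.

```python
import statistics


def error_statistics(best_values, true_optimum):
    """Mean and standard deviation of the error over independent runs.

    best_values: best objective value attained in each run (minimization)
    true_optimum: known optimal objective value of the test function
    """
    errors = [best - true_optimum for best in best_values]
    return statistics.mean(errors), statistics.stdev(errors)
```

For instance, best values of 101, 103 and 102 on a function whose optimum is 100 give errors of 1, 3 and 2, that is, a mean error of 2 and a standard deviation of 1.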

Limitations

The main limitations of the research are that the experiments are restricted to a number of benchmark problems with varied and complex characteristics and that no experimentation is conducted on a real-world optimization problem [45,46,47]. A widely recognized limitation in applying an optimization algorithm to a real-world problem is the need to fine-tune its parameters for that problem. In the proposed NDE algorithm, however, the major parameters such as the crossover rate and the trial vector generation strategies are self-adapted according to the population domain of the problem. Fine-tuning the other associated parameters may still be a challenge. The above test results show the robustness of the proposed NDE algorithm in solving a wider range of problems. Further improvements will be studied in future work by integrating multiple denoising models, fine-tuning the algorithm to handle constrained optimization problems and conducting experiments on real-world optimization problems.

Conclusions

In the present work, a Differential Evolution based Noise handling Optimization algorithm (NDE) for noisy bi-objective optimization problems is proposed. FAMDE-DC is used as the base optimizer, in which the fuzzy system is extended to adapt the crossover rate in order to control the population diversity. An adaptive switching technique is used to test whether the noise ratio is within the acceptable limits. If the noise ratio exceeds the prespecified limits, an explicit averaging based denoising method is applied. To further improve the convergence characteristics, a restricted local search procedure is applied at prespecified intervals. To evaluate the performance of the proposed NDE algorithm, the DTLZ and WFG test problems are used with noise of different levels. The modified Inverted Generational Distance (IGD+) and Hypervolume (HV) are chosen as the performance indicators. The results attained by the NDE algorithm for most of the above problems are better than those of the SOTA algorithms taken for comparison, which shows the effectiveness of the NDE algorithm in handling noisy optimization problems. The results for test problem DTLZ4 are not the best: the Pareto-optimal solutions of this problem are densely populated near the planes, and the convergence characteristics of the attained solutions need to be improved, which indicates a future direction for improving the NDE algorithm. Further, the non-parametric Wilcoxon signed rank test and Friedman rank test are conducted on the attained results, and the test results show the significance of the proposed NDE algorithm over the other algorithms taken for comparison.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Brewster, B. S., Cannon, S. M., Farmer, J. R. & Meng, F. Modeling of lean premixed combustion in stationary gas turbines. Prog. Energy Combust. Sci. 25, 353–385 (1999).

Buche, D., Stoll, P., Dornberger, R. & Koumoutsakos, P. Multiobjective evolutionary algorithm for the optimization of noisy combustion processes. IEEE Trans. Syst. Man Cybern. 32, 460–473 (2002).

Jin, Y. & Branke, J. Evolutionary optimization in uncertain environments-a survey. IEEE Trans. Evol. Comput. 9, 303–317 (2005).

Beyer, H. G. Evolutionary algorithms in noisy environments: theoretical issues and guidelines for practice. Comput. Methods Appl. Mech. Engrg. 186, 239–267 (2000).

Goh, C. K. & Tan, K. C. An investigation on noisy environments in evolutionary multiobjective optimization. IEEE Trans. Evol. Comput. 11, 354–381 (2007).

Deb, K., Pratap, A., Agarwal, S. & Meyarivan, T. A fast and elitist multiobjective genetic algorithm: NSGA-II. IEEE Trans. Evol. Comput. 6, 182–197 (2002).

Zitzler, E., Laumanns, M. & Thiele, L. SPEA2: Improving the strength pareto evolutionary algorithm. Technical Report, Computer Engineering and Networks Laboratory (TIK), ETH Zurich, Switzerland. 103, 1–21 (2001).

Brindha, S. & Amali, M. J. A robust and adaptive fuzzy logic based differential evolution algorithm using population diversity tuning for multi-objective optimization. Eng. Appl. Artif. Intell. 102, 1–14 (2021).

Zheng, N. & Wang, H. A two-stage evolutionary algorithm for noisy bi-objective optimization. Swarm Evolut. Comput. 1(78), 101259 (2023).

Storn, R. & Price, K. Differential evolution - a simple and efficient heuristic for global optimization over continuous spaces. J. Global Optim. 11, 341–359 (1997).

Abbass, H.A., Sarker, R. & Newton, C. PDE: A pareto-frontier differential evolution approach for multi-objective optimization problems. In: Proceedings of the Congress on Evolutionary Computation (CEC 2001). 971–978. (2001)

Robic, T. & Filipic, B. DEMO: Differential evolution for multiobjective optimization. In: International Conference on Evolutionary Multi-criterion Optimization. 520–533 (2005).

Bandyopadhyay, S. & Mukherjee, A. An algorithm for many-objective optimization with reduced objective computations: a study in differential evolution. IEEE Trans. Evol. Comput. 3, 400–413 (2015).

Wang, Y., Wu, L. & Yuan, X. Multi-objective self-adaptive differential evolution with elitist archive and crowding entropy-based diversity measure. Soft Comput. 14, 193–209 (2010).

Brindha, S., Uma Maheswari, J., Vinothini, A. & Amali, M. J. Self-adaptive trajectory optimization algorithm using fuzzy logic for mobile edge computing system assisted by unmanned aerial vehicle. Drones 7, 1–19 (2023).

Wang, Y., Liu, Z. & Wang, G. G. Improved differential evolution using two-stage mutation strategy for multimodal multi-objective optimization. Swarm Evolut. Comput. 1(78), 101232 (2023).

Syberfeldt, A., Ng, A., John, R. I. & Moore, P. Evolutionary optimisation of noisy multi-objective problems using confidence-based dynamic resampling. Eur. J. Oper. Res. 204, 533–544 (2010).

Hansen, N., Niederberger, A. S. P., Guzzella, L. & Koumoutsakos, P. A method for handling uncertainty in evolutionary optimization with an application to feedback control of combustion. IEEE Trans. Evol. Comput. 13, 180–197 (2009).

Nguyen, D.M. & Hansen, H. Benchmarking CMAES-APOP on the BBOB noiseless testbed. In: Proc. Genet. Evol. Comput. Conf. Companion. 1756–1763 (2017).

Hellwig, M. & Beyer, H.G. Evolution under strong noise: A self-adaptive evolution strategy can reach the lower performance bound-the pccmsa-es. In: International Conference on Parallel Problem Solving from Nature. 26–36 (2016).

Krause, O. Large-scale noise-resilient evolution-strategies. In: Proceedings of the Genetic and Evolutionary Computation Conference. 682–690 (2019).

Eskandari, H. & Geiger, C. D. Evolutionary multiobjective optimization in noisy problem environments. J. Heurist. 15, 559–595 (2009).

Hughes, E.J. Evolutionary multi-objective ranking with uncertainty and noise. In: International Conference on Evolutionary Multi-Criterion Optimization. 329–343 (2001).

Babbar, M., Lakshmikantha, A. & Goldberg, D.E. A modified NSGA-II to solve noisy multiobjective problems. In: Genetic and Evolutionary Computation Conference (GECCO 2003). 21–27 (2003).

Shim, V. A., Tan, K. C., Chia, J. Y. & Al Mamun, A. Multiobjective optimization with estimation of distribution algorithm in a noisy environment. Evol. Comput. 21, 149–177 (2013).

Wang, H., Zhang, Q., Jiao, L. & Yao, X. Regularity model for noisy multi-objective optimization. IEEE Trans. Cybern. 46, 1997–2009 (2015).

Zheng, N., Wang, H. & Yuan, B. An adaptive model switch-based surrogate-assisted evolutionary algorithm for noisy expensive multi-objective optimization. Complex Intell. Syst. 8, 1–18 (2022).

Liu, R., Li, Y., Wang, H. & Liu, J. A noisy multi-objective optimization algorithm based on mean and Wiener filters. Knowledge-Based Syst. 27(228), 107215 (2021).

Zheng, N., Wang, H. & Yuan, B. An adaptive model switch-based surrogate-assisted evolutionary algorithm for noisy expensive multi-objective optimization. Complex Intell. Syst. 8, 4339–4356 (2022).

Liu, R., Li, N. & Wang, F. Noisy multi-objective optimization algorithm based on Gaussian model and regularity model. Swarm Evolut. Comput. 1(69), 101027 (2022).

Qin, A. K., Huang, V. L. & Suganthan, P. N. Differential evolution algorithm with strategy adaptation for global numerical optimization. IEEE Trans. Evol. Comput. 13, 398–417 (2009).

Deb, K. Multi-objective optimization using evolutionary algorithms (Wiley, 2001).

Luo, B., Zheng, J., Xie, J. & Wu, J. Dynamic Crowding Distance? A New Diversity Maintenance Strategy for MOEAs. In: Proceedings of the Fourth International Conference on Natural Computation. 580–585 (2008).

Ursem, R.K. Diversity-guided evolutionary algorithms. In: Proceedings of seventh International Conference on Parallel Problem Solving from Nature VII (PPSN-2002). 462–471 (2002).

Li, Z. et al. Noisy optimization by evolution strategies with online population size learning. IEEE Trans. Syst. Man Cybern. 52, 5816–5828 (2021).

Deb, K., Thiele, L., Laumanns, M. & Zitzler, E. Scalable multi-objective optimization test problems. In: Proceedings of the 2002 Congress on Evolutionary Computation. 825–830 (2002).

Huband, S., Hingston, P., Barone, L. & While, L. A review of multiobjective test problems and a scalable test problem toolkit. IEEE Trans. Evol. Comput. 10, 477–506 (2006).

Ishibuchi, H., Masuda, H., Tanigaki, Y. & Nojima, Y. Modified distance calculation in generational distance and inverted generational distance. In: International Conference on Evolutionary Multi-Criterion Optimization. 110–125 (2015).

Bader, J. & Zitzler, E. HypE: an algorithm for fast hypervolume-based many-objective optimization. Evol. Comput. 19, 45–76 (2011).

Wang, H., Jiao, L. & Yao, X. Two_Arch2: an improved two-archive algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 19, 524–541 (2014).

Fieldsend, J. E. & Everson, R. M. The rolling tide evolutionary algorithm: a multiobjective optimizer for noisy optimization problems. IEEE Trans. Evol. Comput. 19, 103–117 (2014).

Chauhan, D. & Yadav, A. Optimizing the parameters of hybrid active power filters through a comprehensive and dynamic multi-swarm gravitational search algorithm. Eng. Appl. Artif. Intell. 1(123), 106469 (2023).

Derrac, J., Garcia, S., Molina, D. & Herrera, F. A practical tutorial on the use of nonparametric statistical tests as a methodology for comparing evolutionary and swarm intelligence algorithms. Swarm Evol. Comput. 1, 3–18 (2011).

Awad, N. H., Ali, M. Z., Liang, J. J., Qu, B. Y., & Suganthan, P. N. Problem definitions and evaluation criteria for the CEC 2017 special session and competition on single objective bound constrained real-parameter numerical optimization. In Technical report. 1–34 (2016).

Kumar, A., Misra, R.K., & Singh, D. Improving the local search capability of Effective Butterfly Optimizer using Covariance Matrix Adapted Retreat Phase. In: 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia, Spain. 1835–1842 (2017).

Brest, J., Maučec, M.S., & Bošković, B. Single objective real-parameter optimization: Algorithm jSO. In: 2017 IEEE Congress on Evolutionary Computation (CEC), Donostia, Spain. 1311–1318 (2017).

Chauhan, S. et al. An Adaptive feature mode decomposition based on a novel health indicator for bearing fault diagnosis. Measurement 28(226), 114191 (2024).

Chauhan, S. et al. A quasi-reflected and Gaussian mutated arithmetic optimisation algorithm for global optimisation. Inform. Sci. 6, 120823 (2024).

Sumika, C., Govind, V., Laith, A. & Anil, K. Boosting salp swarm algorithm by opposition-based learning concept and sine cosine algorithm for engineering design problems. Soft Comput. 27, 18775–18802 (2023).

Funding

Open access funding provided by Vellore Institute of Technology.

Author information

Contributions

The study conception and design were performed by Brindha Subburaj. Material preparation, data collection and analysis were performed by Brindha Subburaj, Uma Maheswari J, Syed Ibrahim S P and Muthu Subash Kavitha, and the manuscript was prepared by Brindha Subburaj, Uma Maheswari J, Syed Ibrahim S P and Muthu Subash Kavitha. All the authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article's Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article's Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Subburaj, B., Maheswari, J.U., Ibrahim, S.P.S. et al. Population diversity control based differential evolution algorithm using fuzzy system for noisy multi-objective optimization problems. Sci Rep 14, 17863 (2024). https://doi.org/10.1038/s41598-024-68436-1
