Abstract

Accurately detecting voltage faults is essential for ensuring the safe and stable operation of energy storage power station systems. To swiftly identify operational faults in energy storage batteries, this study introduces a voltage anomaly prediction method based on a Bayesian optimized (BO)-Informer neural network. First, the temporal characteristics and actual data collected by the battery management system (BMS) are used to establish a long-term operational dataset for the energy storage station, and the Pearson correlation coefficient (PCC) is used to quantify the correlations between these data. Second, an Informer neural network with BO-tuned hyperparameters is used to build the voltage prediction model. The performance of the proposed model is assessed by comparing it with several state-of-the-art models. With a 1 min sampling interval and one-step prediction, trained on 70% of the available data, the proposed model reduces the root mean square error (RMSE), mean square error (MSE), and mean absolute error (MAE) of the predictions to 9.18 mV, 0.0831 mV, and 6.708 mV, respectively. Furthermore, the influence of different sampling intervals and training set ratios on the prediction results is analyzed using actual grid operation data, leading to a dataset that balances efficiency and accuracy. The proposed BO-based method achieves more precise voltage anomaly prediction than the existing methods.

Introduction

With the construction of new power systems, lithium (Li)-ion batteries are essential for storing renewable energy and improving overall grid security1,2,3. As a type of new energy battery, Li-ion batteries are not only more environmentally friendly but also offer superior performance4. However, safety problems have arisen as the industry pursues higher energy densities in Li-ion batteries5. Frequent fire accidents at energy-storage power stations in recent years have made the public increasingly anxious about the safety of large-scale Li-ion battery energy-storage systems6. In the fields of electric vehicles and electrochemical energy storage, frequent incidents of spontaneous combustion and explosion show that Li-ion battery failures are latent, sudden, and destructive. To achieve the required voltage and capacity, arrays of battery cells are connected in various series/parallel configurations7,8. Degradation of battery cells, electrical faults, or misuse can cause faults to occur and escalate within these constituent cells. Faults in battery systems are considered among the most severe problems because, if they are not attended to, they can result in thermal runaway and fire9,10. Numerous studies indicate that voltage anomalies may reveal or induce various battery malfunctions. For example, over-voltage suggests that the battery system has been over-charged and the charge protection circuit has been disabled, whereas under-voltage suggests that the battery system may have been over-discharged or internally short-circuited11. Early and precise prediction of voltage anomalies during the operation of energy storage stations is crucial for preventing voltage-related faults, as these anomalies often indicate the possibility of more serious issues. This also implies that some human intervention, such as real-time monitoring and accurate prediction of battery voltage, is needed to identify potential issues caused by voltage anomalies and to ensure the secure operation of energy storage systems.

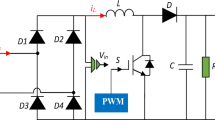

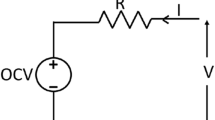

Research on battery fault diagnosis is abundant and falls into three main categories: statistical analysis-based methods12, model-based methods13, and data-driven methods14. Statistical analysis-based methods apply mathematical statistics to the data and information collected from Li-ion battery (LIB) systems. Different sensors collect information such as current, voltage, temperature15, and internal resistance, and various statistical methods are then applied directly to the collected data. However, these methods can usually only detect that a fault exists; identifying the type of fault is difficult16. Model-based methods develop high-fidelity battery models to characterize fault-related information, including terminal voltage17, state of charge (SOC)18, and temperature19. Comparing the model parameters with measured values yields a set of residuals, which are used to generate the signals on which fault diagnosis is based. Currently, electrochemical models20, equivalent circuit models (ECM)21, thermal models22, and multi-factor coupling models23 are widely applied in battery fault diagnosis. Ref.24 achieves multi-fault detection and isolation by integrating equivalent circuit models into a hybrid automaton and tracking continuous/discrete dynamics from the cell level to the module level. Ref.25 accomplishes the joint estimation of battery voltage and SOC based on the ECM and quantitatively calculates the positive and negative virtual insulation resistance for fault diagnosis. Ref.26 develops a fault diagnosis method for detecting voltage and current sensor errors in battery packs, using an integrated ECM and an unscented particle filter. Ref.27 proposes a thermo-electrochemical coupling modeling approach to predict the electrochemical and thermal behaviors of batteries. By considering heat generation and temperature-related characteristics, this model can analyze the average battery temperature and the temperature distribution. However, the accuracy and reliability of these methods depend primarily on the fidelity of the constructed model. Because the internal characteristics of the electrochemical reactions in Li-ion batteries are not yet fully understood, establishing high-fidelity models that cover all possible operating conditions remains challenging.

Data-driven methods do not need to consider the complicated battery mechanism and have become a research hotspot in the field of fault diagnosis28. This approach directly analyzes and processes operational data to detect battery faults, greatly simplifying the implementation process. Data-driven methods are primarily divided into signal processing-based methods and machine learning methods. The former typically use techniques such as root mean square amplitude, spectral analysis, wavelet transform, entropy-based methods, rough sets, and principal component analysis to extract fault characteristic parameters, such as bias, variance, entropy, and correlation coefficient29. Faults are then identified by comparing these parameters with their values in the normal state. Currently, most voltage anomaly predictions use data-driven methods. For battery safety in electric vehicles and electric scooters, Ref.11 introduces long short-term memory (LSTM) neural networks for accurate multi-step voltage prediction, combined with alert thresholds to achieve fault diagnosis. In Ref.30, an adaptive enhancement approach integrates the LSTM neural network with the ECM of the LIB for voltage prediction and diagnosis of voltage faults. Ref.31 develops a precise multi-step voltage prediction and voltage fault diagnosis method based on a gated recurrent unit (GRU) neural network and incremental training. For the degradation performance of proton exchange membrane fuel cells (PEMFC), Ref.32 combines bidirectional (Bi)-LSTM, Bi-GRU, and echo state networks to create a hybrid model for predicting short-term voltage degradation and performance. Ref.33 uses a combined LSTM and support vector regression (SVR) model to forecast short-term voltage degradation and long-term durability. Ref.34 introduces a voltage prediction model using a residual convolutional neural network (CNN)-LSTM-random attention architecture, which improves short- and mid-term predictions.

Currently, real-world operational data come mainly from electric vehicles, electric scooters, and PEMFCs. In contrast, actual operational data from lithium-ion batteries in energy storage stations that provide grid ancillary services are limited, especially for accurately predicting voltage fault anomalies. Traditional models, such as LSTM and GRU, are unable to handle long-term dependencies effectively, and their performance degrades as the time series grows longer. These models cannot process time series data in parallel because the computation of the current state depends on the previous states. This dependency limits the flexibility of the models and increases the computational complexity. The Transformer model overcomes the limitations of LSTM and GRU in parallel processing. Informer, a notable Transformer variant, leverages the attention mechanism to capture long-term dependencies and local features in voltage time series effectively, which optimizes information utilization and enhances predictive performance. However, the implementation of Informer is relatively complex, particularly in terms of parameter tuning and hyperparameter selection. With the rapid development of deep learning and optimization, the Bayesian optimization (BO) algorithm has gradually become a research hotspot35. The BO algorithm is widely used to solve complex and high-dimensional optimization problems, especially in the hyperparameter optimization of machine learning models, neural network structure search, and automated machine learning36,37.

To address these issues, this study proposes an Informer prediction method based on the BO algorithm. First, the raw data and the extracted time-series parameters are standardized. The Pearson correlation coefficient (PCC) is then employed to assess the influence of these parameters on battery voltage and to select high-quality parameters as inputs for the voltage prediction model. Second, the data are reconstructed into datasets with different time intervals. Third, the BO algorithm is employed to obtain appropriate model hyperparameters. Finally, the prediction results are compared across different training sets. The experimental results indicate that the BO-Informer prediction method achieves more accurate short-term voltage anomaly predictions than the non-optimized Informer, Transformer, GRU, and LSTM neural networks.

The primary contributions of this study are as follows: 1) For the first time, the relevant information from the battery management system (BMS) is integrated with the temporal information extracted by the Informer from voltage data. The PCC is then used to identify high-quality parameters to be input into the voltage prediction model. 2) The Informer model is employed for the first time in voltage prediction for energy storage stations. To enhance voltage prediction performance, BO is combined with iterative training, resulting in the BO-Informer model. This integration optimizes the model’s hyperparameters, leading to enhanced predictive capabilities and an improved ability to learn and memorize complex relationships within the voltage data. The model forecasts future voltage in advance, demonstrating its potential for early prediction of voltage anomalies. 3) The proposed hybrid model is compared with the commonly used Transformer, GRU, and LSTM models and with the non-optimized Informer model. The experimental results demonstrate that the proposed model achieves the best performance across all three evaluation metrics: mean absolute error (MAE), mean square error (MSE), and root mean square error (RMSE).

The remainder of this paper is structured as follows. Section “Data collection and processing” details the process of data collection and preprocessing. Section “Methodology” introduces the development of the BO-Informer model. Section “Result and discussion” presents the results and discussion. Finally, Section “Conclusion” draws the main conclusions.

Data collection and processing

Data and structure of energy storage station

The energy storage power station studied here is located in western China and is composed of three battery cabins. Each cabin contains two stacks (1, 2), and each stack comprises three clusters. Each cluster is composed of 19 modules, with each module consisting of 2 cells in parallel and 12 cells in series. In this study, the term ‘individual cell’ refers to one series unit within the 2-parallel, 12-series configuration. Figure 1 illustrates the arrangement of battery cells in a battery module. The individual battery cells have a rated voltage of 3.2 V and a nominal capacity of 120 Ah, while the battery pack has a rated voltage of 38.4 V and a capacity of 240 Ah. Real-time operational data were continuously recorded by the BMS at 60-s intervals, encompassing parameters such as battery current, voltage, temperature, and SOC. The variability and unpredictability of factors such as grid peak shaving and frequency regulation often lead to random fluctuations in most time-varying parameters. Fig. 2 shows the curves of some representative parameters, including battery voltage (normal and abnormal), cluster voltage, SOC, temperature, and current. The figure shows that the battery voltage and cluster voltage fluctuate mainly in the ranges of 3.6 to 4.2 V and 50.4 to 58.8 V, respectively. The abnormal voltage ranges from 0 to 3.39 V, and the temperature ranges from 22 to 28 °C. The current jumps are caused by the switching between charging and discharging of the energy storage power station. The SOC ranges from 17.5 to 86.6%. This range can be explained by the ancillary services provided by the storage station, which limit the possibility of complete battery discharge. The confidence level of the SOC is relatively low, as it is difficult to estimate accurately even with state-of-the-art methods. The SOC significantly influences voltage changes, but the relationship is non-linear and exhibits a time lag. Other factors affecting the battery voltage within the storage station include current, temperature, and inevitable aging effects.
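The module ratings quoted above follow directly from the series/parallel layout, and the short sketch below reproduces that arithmetic. The constant names are illustrative, and the total cell count is only derived from the stated hierarchy (cabins, stacks, clusters, modules); it is not a figure reported in the study.

```python
# Ratings and layout as stated in the text; names are illustrative.
CELL_VOLTAGE_V = 3.2
CELL_CAPACITY_AH = 120
CELLS_IN_SERIES = 12
CELLS_IN_PARALLEL = 2

# Series connections add voltage; parallel connections add capacity.
module_voltage_v = round(CELLS_IN_SERIES * CELL_VOLTAGE_V, 1)
module_capacity_ah = CELLS_IN_PARALLEL * CELL_CAPACITY_AH

# Hierarchy: 3 cabins x 2 stacks x 3 clusters x 19 modules.
modules_total = 3 * 2 * 3 * 19
cells_total = modules_total * CELLS_IN_SERIES * CELLS_IN_PARALLEL

print(module_voltage_v, module_capacity_ah, modules_total, cells_total)
```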

Data standardization process

Different evaluation indicators often have different scales and units of measurement, and such differences can affect the results of data analyses. To eliminate these effects, the data must be standardized so that the indicators are comparable. A linear transformation is an effective method for mapping the raw data into the range [0, 1]; the standardization formula is shown in Eq. (1). The method normalizes the raw data by scaling them in equal proportions.

\[D_{norm} = \frac{D - D_{min}}{D_{max} - D_{min}} \tag{1}\]

In this equation, Dnorm represents the normalized BMS data, which are the data used in all subsequent sections; D denotes the original data; and Dmax and Dmin are the maximum and minimum values of the original data set, respectively.
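As a minimal sketch of the min-max scaling in Eq. (1), the function below maps one parameter column into [0, 1]. The sample voltages are made up for illustration, and the handling of a constant column (mapped to zeros) is an assumption, since the text does not discuss that case.

```python
def min_max_normalize(values):
    """Scale a sequence linearly into [0, 1], following Eq. (1)."""
    d_min, d_max = min(values), max(values)
    span = d_max - d_min
    if span == 0:
        # Assumption: a constant column carries no information, so map it to zeros.
        return [0.0 for _ in values]
    return [(v - d_min) / span for v in values]


# Made-up cell voltages in volts, for illustration only.
cell_voltage = [3.61, 3.75, 4.20, 3.60, 3.98]
normalized = min_max_normalize(cell_voltage)
print(normalized)
```

In practice Dmin and Dmax would typically be taken from the training portion only and reused for the test portion, so that no information from the test period leaks into training.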

PCC correlation analysis

Currently, online estimation of the state of health (SOH) and remaining useful life (RUL) of batteries is still a challenging problem. To account more fully for the temporal characteristics of the battery voltage, we introduce the Informer model, which is naturally equipped to handle temporal characteristics. The model captures the correct order of the input sequence by introducing positional encoding in the data input. The positional encoding involves both local and global timestamps; the equations for the local timestamps are shown in Eqs. (2) and (3).

\[PE_{(pos,2i)} = \sin \left( \frac{pos}{(2L)^{2i/d}} \right) \tag{2}\]

\[PE_{(pos,2i + 1)} = \cos \left( \frac{pos}{(2L)^{2i/d}} \right) \tag{3}\]

Here, pos denotes the position of the current element in the whole input sequence; i indexes the dimension of the value being computed, with dimensions ranging from 1 to d; d denotes the dimension of the input sequence; and L denotes the length of the sequence. Based on the above equations, three time series features, i.e., the weekly, daily, and hourly parameters of the voltage data of battery 60 for the period of August 1 to August 31, were extracted. Additionally, ten parameters related to battery voltage data were recorded using the BMS, including cell temperature, stack voltage (the total voltage of a battery stack), stack current (the current flowing through an entire battery stack), stack SOC (the state of charge of a battery stack), cluster voltage (the voltage of a battery cluster), cluster current (the current flowing through a specific battery cluster), and cluster SOC (the state of charge of a particular battery cluster). To extract the most valuable features from these parameters and quantify their correlation with voltage, the PCC method was employed to eliminate weakly correlated parameters and retain the high-quality parameters as inputs to the voltage prediction model. The details are as follows:

\[\rho = \frac{\sum\nolimits_{i = 1}^{n} (x_{i} - \overline{x})(y_{i} - \overline{y})}{\sqrt{\sum\nolimits_{i = 1}^{n} (x_{i} - \overline{x})^{2}} \sqrt{\sum\nolimits_{i = 1}^{n} (y_{i} - \overline{y})^{2}}} \tag{4}\]

where n indicates the number of samples of each parameter; xi indicates the voltage; \(\overline{x}\) indicates the average value of xi; yi indicates the characteristic variable of the voltage at the moment i; and \(\overline{y}\) indicates the average value of yi. The value of ρ ranges from −1 to 1, and its absolute value reflects the strength of the correlation between the characteristic variable and the voltage. An absolute value close to 1 indicates a strong linear correlation between the two parameters. The PCC values of the selected 11 parameters are shown in Fig. 3. Selecting highly correlated parameters can reduce the computational burden while maintaining high predictive performance. The PCC values of the stack voltage, cluster voltage, cluster SOC, and day feature are all higher than 0.85, indicating a strong linear correlation. Therefore, they are chosen as inputs to the model. Since the model aims to use historical data of battery clusters/battery packs for abnormal voltage prediction, the battery voltage itself is also included as an input to the model.
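The PCC-based selection described above can be sketched as follows. The 0.85 threshold comes from the text; the function names, the candidate dictionary, and the sample series are hypothetical and serve only to show the mechanics.

```python
import math


def pearson(x, y):
    """Pearson correlation coefficient between two equally long sequences."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    return cov / math.sqrt(var_x * var_y)


def select_features(target, candidates, threshold=0.85):
    """Keep the candidates whose |PCC| with the target exceeds the threshold."""
    return [name for name, series in candidates.items()
            if abs(pearson(target, series)) > threshold]


# Made-up samples, for illustration only.
voltage = [3.60, 3.70, 3.80, 3.90, 4.00]
candidates = {
    "cluster_voltage": [50.4, 51.9, 53.1, 54.7, 56.0],
    "temperature": [25.0, 22.0, 28.0, 23.0, 26.0],
}
selected = select_features(voltage, candidates)
print(selected)
```

Because the PCC only measures linear association, a parameter with a strong but non-linear or lagged influence on voltage (as the text notes for SOC) may still score low.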

Methodology

Figure 4 shows the entire implementation process of the proposed voltage anomaly prediction scheme, which is divided into four stages: data preparation, BO-Informer model training, Bayesian hyperparameter optimization, and discussion of prediction results. First, operational data of the energy storage station are obtained from the BMS. The data are then preprocessed, including correlation analysis, normalization, and partitioning into training and testing datasets. Next, an Informer model suited to the data structure is trained and used to predict future battery voltages with an integrated multi-step prediction design. To further enhance the performance, BO is employed to optimize the model’s hyperparameters. This combined approach results in the BO-Informer prediction model.

The neural network structure of Informer

The Informer is a Transformer-based model proposed by Zhou et al.38 that aims to improve the computational efficiency of the self-attention mechanism, multilayer network stacking, and stepwise decoding in Transformer networks. The Informer network performs better than LSTM in capturing long-term correlations. It has therefore been successfully applied in fields such as long time series electrical line trip fault prediction and wind power prediction39,40. This study applies the Informer model for the first time to the prediction of voltage anomalies in energy storage batteries. The model consists of an encoder and a decoder. The encoder processes the time series and reduces the time complexity and memory usage through a probabilistic sparse (ProbSparse) self-attention mechanism. The temporal dimension of the input sequence is then reduced by a self-attention distillation process, which lowers the space complexity. Finally, the predicted output is generated at the decoder stage.

Unified input representation

The position information of the data cannot be recorded directly in the input, so position embedding is required. Informer incorporates position encoding into the data input to ensure that the model captures the correct order of the input sequence. This position encoding is divided into local and global timestamps. The equations for local timestamps are provided in Eqs. (2) and (3).

Following the encoding steps, the input data for the encoder layer is obtained as follows:

where ui represents the original data sequence, i ∈ [1, 2, …, L]; L is the length of the data sequence; t is the series number; and α, set to 1 for normalized input sequences, is a factor balancing the size between the mapping vector and the position encoding.
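A minimal sketch of the local (sinusoidal) position encoding is given below. It assumes the form used in the original Informer paper, in which the frequency base is twice the sequence length; the function name and the example sizes are illustrative, and the global timestamp embedding is not covered.

```python
import math


def positional_encoding(seq_len, d_model):
    """Sinusoidal local position encoding; base 2 * seq_len is an assumption
    taken from the Informer paper, not confirmed by this text."""
    base = 2 * seq_len
    table = []
    for pos in range(seq_len):
        row = [0.0] * d_model
        for j in range(0, d_model, 2):
            angle = pos / (base ** (j / d_model))
            row[j] = math.sin(angle)          # even dimensions
            if j + 1 < d_model:
                row[j + 1] = math.cos(angle)  # odd dimensions
        table.append(row)
    return table


# Example: a 24-step history window encoded into 8 dimensions.
pe = positional_encoding(seq_len=24, d_model=8)
print(len(pe), len(pe[0]))
```

Each row depends only on the position, so the table can be computed once and added to every input window of the same length.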

ProbSparse self-attention

Self-attention is an important feature extraction mechanism used in the encoding and decoding process. The self-attention mechanism originally proposed by Vaswani et al. maps the query, key, and value vectors to the output. The weights are derived by calculating the association of each query with the corresponding keys. A weighted sum of the values is then calculated based on these weights, as shown in Eq. (6).

\[Attention(Q,K,V) = Softmax\left( \frac{QK^{T}}{\sqrt{d}} \right)V \tag{6}\]

where \(Q \in R^{{L_{Q} \times d}}\), \(K \in R^{{L_{K} \times d}}\), \(V \in R^{{L_{V} \times d}}\), and d represents the input dimension. This attention mechanism is also known as dot product attention because it computes the dot product of each query with all keys. However, this approach requires \(O(L_{Q} L_{K})\) memory and quadratic computation time, which severely limits the predictive power of the model on long sequences.
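The canonical dot product attention can be sketched in a few lines of plain Python. This is an illustrative single-head version without learned projections or masking; the function names and the toy matrices are assumptions made for the example.

```python
import math


def softmax(row):
    """Numerically stable softmax over one list of scores."""
    peak = max(row)
    exps = [math.exp(v - peak) for v in row]
    total = sum(exps)
    return [e / total for e in exps]


def attention(Q, K, V):
    """Scaled dot product attention: every query is compared with every key."""
    d = len(Q[0])
    output = []
    for q in Q:
        scores = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in K]
        weights = softmax(scores)
        output.append([sum(w * v[c] for w, v in zip(weights, V))
                       for c in range(len(V[0]))])
    return output


# Toy example with two queries, two keys, and two values.
Q = [[1.0, 0.0], [0.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0]]
out = attention(Q, K, V)
print(out)
```

The nested loop over queries and keys makes the quadratic cost visible: doubling the sequence length quadruples the number of dot products.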

According to previous experimental results, the score of self-attention follows a long-tailed distribution, i.e., a small number of dot products contribute to most of the attention. Therefore, identifying the main attention is important to reduce the computational complexity, which is the core strategy of ProbSparse self-attention.

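We define the ith query as qi and the probability of the attention of qi to all keys as p(kj|qi). When the contribution of qi to the attention is not essential, p(kj|qi) will be close to the uniform distribution u(kj|qi) = 1/LK. We use the adjusted Kullback–Leibler divergence to measure the difference between the distributions p and u:

\[KL(u\parallel p) = \ln \sum\limits_{l = 1}^{L_{K}} e^{\frac{q_{i} k_{l}^{T}}{\sqrt{d}}} - \frac{1}{L_{K}}\sum\limits_{j = 1}^{L_{K}} \frac{q_{i} k_{j}^{T}}{\sqrt{d}} - \ln L_{K} \tag{7}\]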

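The sparsity measurement of the ith query is defined as

\[M(q_{i},K) = \ln \sum\limits_{j = 1}^{L_{K}} e^{\frac{q_{i} k_{j}^{T}}{\sqrt{d}}} - \frac{1}{L_{K}}\sum\limits_{j = 1}^{L_{K}} \frac{q_{i} k_{j}^{T}}{\sqrt{d}} \tag{8}\]

M(qi, K) denotes the importance of qi. It increases with the ‘diversity’ of the attention probability distribution, so a query with a larger M(qi, K) is more likely to contain the dominant dot product pairs. Here, only the n highest-scoring dominant queries are used:

\[A(Q,K,V) = Softmax\left( \frac{\overline{Q} K^{T}}{\sqrt{d}} \right)V \tag{9}\]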

where \(\overline{Q}\) consists of the top n queries ranked by the sparsity measurement. We set n = a ln LQ, where a is a constant parameter. As a result, the memory usage of ProbSparse self-attention is reduced to O(LK ln LQ).

However, computing M(qi,K) requires traversing all dot products of the queries and the keys, so the complexity of this process is still quadratic, O(LQLK). Moreover, the log-sum-exp (LSE) operation (the first term of M(qi,K)) is not numerically stable. For this reason, two methods are used to reduce the complexity: approximate simplification and sampling. M(qi,K) has clear upper and lower bounds (proofs are given in Zhou’s study38). The adjusted measurement is shown in Eq. (10).

\[\hat{M}(q_{i},K) = \mathop {\max }\limits_{j} \left\{ \frac{q_{i} k_{j}^{T}}{\sqrt{d}} \right\} - \frac{1}{L_{K}}\sum\limits_{j = 1}^{L_{K}} \frac{q_{i} k_{j}^{T}}{\sqrt{d}} \tag{10}\]

Because of the long-tailed distribution, there is no need to evaluate \(\hat{M}\)(qi,K) on every dot product pair. We can randomly sample N = LK ln LQ dot product pairs to compute \(\hat{M}\)(qi,K) and set the remaining pairs to zero. This approach makes the numerical computation more stable and avoids unnecessary traversals. If LK = LQ = L, the time and space complexity is O(L ln L).
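The query selection step can be sketched as below: each query is scored with the max-minus-mean measurement of Eq. (10) on a sample of keys, and the n = a ln LQ highest-scoring queries are kept. The function names, the per-query key sampling with a fixed seed, and the toy data are assumptions for illustration; the full mechanism then applies ordinary attention to the selected queries only.

```python
import math
import random


def sparsity_scores(Q, K, n_sampled_keys, seed=0):
    """Approximate sparsity measurement (max minus mean) on sampled keys."""
    rng = random.Random(seed)
    d = len(Q[0])
    scores = []
    for q in Q:
        sampled = rng.sample(K, n_sampled_keys)
        dots = [sum(a * b for a, b in zip(q, k)) / math.sqrt(d) for k in sampled]
        scores.append(max(dots) - sum(dots) / len(dots))
    return scores


def top_queries(Q, K, a=1.0, n_sampled_keys=None):
    """Indices of the n = ceil(a * ln(L_Q)) queries with the largest scores."""
    n_keys = len(K) if n_sampled_keys is None else n_sampled_keys
    n = min(len(Q), max(1, math.ceil(a * math.log(len(Q)))))
    scores = sparsity_scores(Q, K, n_keys)
    ranked = sorted(range(len(Q)), key=lambda i: scores[i], reverse=True)
    return ranked[:n]


# Toy data: only the second query has a peaked attention pattern.
K = [[1.0, 0.0], [0.0, 1.0], [-1.0, 0.0], [0.0, -1.0]]
Q = [[0.0, 0.0], [5.0, 0.0], [0.0, 0.0], [0.0, 0.0]]
dominant = top_queries(Q, K)
print(dominant)
```

A query whose dot products are all similar scores close to zero, which matches the idea that near-uniform attention contributes little.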

Encoder

The encoder is mainly responsible for extracting dependencies from lengthy data sequences. As a consequence of the probabilistic sparse self-attention mechanism, the encoder feature map contains redundant combinations of the value vectors V. The distillation operation therefore gives higher weight to the dominant features. The distillation process from stage j to stage j + 1 is as follows:

\[X_{j + 1}^{t} = MaxPool\left( ELU\left( Conv1d\left( [X_{j}^{t} ]_{AB} \right) \right) \right) \tag{11}\]

where [.]AB denotes the key operations in the multi-head probabilistic sparse self-attention block, and ELU is an activation function. The convolution and pooling halve the length of the input sequence, thus reducing the memory usage to O((2 − λ)L log L).
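The effect of one distillation stage on sequence length can be illustrated with a single-channel sketch: a width-3 convolution, the ELU activation, and a max pooling step with stride 2. The kernel weights and function names are hypothetical, and a real implementation would operate on multi-channel tensors with learned weights.

```python
import math


def elu(x, alpha=1.0):
    """Exponential linear unit."""
    return x if x > 0 else alpha * (math.exp(x) - 1.0)


def conv1d_same(seq, kernel):
    """1-D convolution with zero padding so the output keeps the input length."""
    half = len(kernel) // 2
    padded = [0.0] * half + list(seq) + [0.0] * half
    return [sum(w * padded[i + j] for j, w in enumerate(kernel))
            for i in range(len(seq))]


def max_pool_stride2(seq, width=3):
    """Max pooling with stride 2, which roughly halves the sequence length."""
    half = width // 2
    padded = [float("-inf")] * half + list(seq) + [float("-inf")] * half
    return [max(padded[i:i + width]) for i in range(0, len(seq), 2)]


def distill(seq, kernel):
    """One distillation stage: convolution, ELU, then stride-2 max pooling."""
    return max_pool_stride2([elu(v) for v in conv1d_same(seq, kernel)])


# Hypothetical smoothing kernel and an 8-step feature sequence.
kernel = [0.25, 0.5, 0.25]
features = [0.1, -0.4, 0.9, 0.3, -0.2, 0.7, 0.0, 0.5]
stage1 = distill(features, kernel)
stage2 = distill(stage1, kernel)
print(len(features), len(stage1), len(stage2))
```

Stacking stages shrinks the sequence geometrically, which is where the memory saving of the encoder comes from.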

Decoder

The decoder receives the following input vectors:

\[X_{de}^{t} = Concat\left( X_{token}^{t} ,X_{0}^{t} \right) \tag{12}\]

where \(X_{de}^{t}\) denotes the input to the decoder, \(X_{token}^{t}\) is the start token of the sequence, and \(X_{0}^{t}\) denotes the placeholder of the target sequence. To keep the input dimensions consistent, the placeholder is padded with zeros. Next, masked multi-head attention is used so that each position attends only to the current and preceding positions, which avoids step-by-step autoregressive decoding. Finally, the prediction result is obtained. The voltage dataset used here has a high sampling frequency. Based on the correlation heat map, we selected the parameters that are strongly correlated with the voltage to train the model and predict long sequence data. The validation prediction length was 4012 sample points, and the results showed that the model was able to process and predict long data sequences with many features effectively. After several tests, the Informer converged by round 10 of training on the dataset. Given the characteristics of battery voltage data from energy storage power stations, traditional methods are unable to complete model training quickly when facing newly generated data. Therefore, while maintaining the prediction accuracy, the model size and computational runtime were reduced by choosing three encoder layers and one decoder layer. The specific structure is shown in Fig. 5.
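The decoder input described above, a start token followed by a zero placeholder, can be sketched for a single feature as follows. Taking the start token from the tail of the known history is how the original Informer paper builds it and is an assumption here; the function name and the sample values are illustrative.

```python
def build_decoder_input(history, token_len, pred_len):
    """Concatenate a start token with a zero placeholder for the targets.

    Assumption: the start token is the last `token_len` known values.
    """
    start_token = list(history[-token_len:])
    placeholder = [0.0] * pred_len
    return start_token + placeholder


# Made-up normalized voltages: use the last 2 as token, predict 3 steps.
history = [0.41, 0.43, 0.44, 0.47, 0.46]
decoder_input = build_decoder_input(history, token_len=2, pred_len=3)
print(decoder_input)
```

Because all placeholder positions are filled in one forward pass, the decoder produces the whole prediction window at once instead of one step at a time.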

Loss functions

In the back propagation process of neural networks, the loss function plays a crucial role and essentially reflects the error of the network. The smaller the value of the loss function, the better the network performs on the problem. It is therefore important to choose an appropriate loss function so that the network parameters are optimized in a reasonable direction. Many loss functions are available, including the absolute value loss, the mean square loss, and the cross entropy loss. In this experiment, we used the MSE. The mean square loss function is expressed as follows:

\[Loss = \frac{1}{N}\sum\limits_{i = 1}^{N} \left( V_{True,i} - V_{Predict,i} \right)^{2} \tag{13}\]

where N is the number of samples, VTrue is the actual voltage, and VPredict is the voltage predicted by the network.

Model parameters

In Informer modeling, the crucial parameters include the historical data length (h-len), the initial learning rate, the dropout rate, the number of encoder layers, and the batch size. Based on the basic hyperparameters proposed by Zhou et al.38 and the prediction results from the dataset discussed in section “Decoder” of this paper, the model’s structure and hyperparameters were preliminarily determined. The experimental model parameters are shown in Table 1.

Bayesian optimization

The BO-Informer neural network involves several hyperparameters, including the loss function, the number of encoder layers, the number of decoder layers, h-len, the learning rate, the dropout rate, and the batch size. These parameters are typically set based on experience, which makes it difficult to obtain the optimal values. Because these hyperparameters significantly affect the runtime and prediction accuracy of neural networks, their optimization is crucial. This study focuses on the historical data length, learning rate, dropout rate, and batch size as the hyperparameters to be tuned. A BO algorithm is employed to efficiently identify the optimal configuration of these hyperparameters.

h-len dictates the number of past time steps the model takes into account during predictions. A proper h-len enables the model to capture long-term dependencies and trends within the time series accurately, thereby enhancing prediction accuracy. Bayesian optimization is an approach that optimizes black-box functions by building Gaussian process models. Its fundamental principle is to select, in each iteration, the parameter values that are most likely to improve the objective according to the current Gaussian process model. It leverages Bayes’ theorem to update the model’s prior probability distribution with information obtained from function evaluations. This allows BO to choose the next sampling point based on the current Gaussian process model and to improve the best observed value of the black-box function iteratively. Figure 4 depicts the BO process for Informer.

Bayesian optimization parameters

Similar to the original Informer model, the BO-based Informer model also includes certain parameters from the Informer. However, BO is specifically utilized to optimize parameters such as h-len, initial learning rate, dropout rate, and batch size. To ensure effective optimization and the feasibility of the resulting parameters, it is essential to set appropriate ranges for the hyperparameters, as indicated in Table 2.
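To make the Gaussian process loop concrete, the sketch below runs Bayesian optimization over a single hyperparameter with an RBF kernel and the expected improvement criterion. Everything in it is an assumption for illustration: the study tunes four hyperparameters within the ranges of Table 2 and does not state which BO implementation it used, and `validation_loss` is a hypothetical stand-in for training the Informer and returning its validation error.

```python
import math
import random


def rbf(a, b, length=0.2):
    return math.exp(-0.5 * ((a - b) / length) ** 2)


def cholesky(A):
    n = len(A)
    L = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = A[i][j] - sum(L[i][k] * L[j][k] for k in range(j))
            L[i][j] = math.sqrt(max(s, 1e-12)) if i == j else s / L[j][j]
    return L


def solve_lower(L, b):  # forward substitution for L y = b
    y = []
    for i in range(len(b)):
        y.append((b[i] - sum(L[i][k] * y[k] for k in range(i))) / L[i][i])
    return y


def solve_upper_t(L, y):  # back substitution for L^T x = y
    n = len(y)
    x = [0.0] * n
    for i in reversed(range(n)):
        x[i] = (y[i] - sum(L[k][i] * x[k] for k in range(i + 1, n))) / L[i][i]
    return x


def gp_fit(xs, ys, noise=1e-4):
    K = [[rbf(a, b) + (noise if i == j else 0.0) for j, b in enumerate(xs)]
         for i, a in enumerate(xs)]
    L = cholesky(K)
    return L, solve_upper_t(L, solve_lower(L, ys))


def gp_predict(xs, L, alpha, x_new):
    k_star = [rbf(a, x_new) for a in xs]
    mean = sum(k * a for k, a in zip(k_star, alpha))
    v = solve_lower(L, k_star)
    return mean, math.sqrt(max(1.0 - sum(t * t for t in v), 1e-12))


def expected_improvement(mean, std, best):  # for minimization
    z = (best - mean) / std
    cdf = 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)
    return (best - mean) * cdf + std * pdf


def bayes_opt(objective, low, high, n_init=3, n_iter=10, seed=0):
    rng = random.Random(seed)
    xs = [rng.uniform(low, high) for _ in range(n_init)]
    ys = [objective(x) for x in xs]
    grid = [low + (high - low) * i / 200 for i in range(201)]
    for _ in range(n_iter):
        mu = sum(ys) / len(ys)
        centred = [y - mu for y in ys]                 # zero-mean targets
        units = [(x - low) / (high - low) for x in xs]  # inputs scaled to [0, 1]
        L, alpha = gp_fit(units, centred)
        best = min(centred)

        def ei(x):
            m, s = gp_predict(units, L, alpha, (x - low) / (high - low))
            return expected_improvement(m, s, best)

        x_next = max(grid, key=ei)  # most promising candidate on the grid
        xs.append(x_next)
        ys.append(objective(x_next))
    i_best = min(range(len(ys)), key=ys.__getitem__)
    return xs[i_best], ys[i_best]


def validation_loss(dropout):
    """Hypothetical stand-in for a full training run."""
    return (dropout - 0.3) ** 2 + 0.01


best_x, best_y = bayes_opt(validation_loss, 0.0, 0.5)
print(best_x, best_y)
```

In the real setting each objective call is a complete training run, which is why a sample-efficient search of this kind is preferred over an exhaustive grid.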

The experiments were run on a Windows 11 64-bit operating system with an i5-10400F CPU, 16 GB RAM, and a GTX-3060 graphics card. The experimental code was written in Python using the scikit-learn and PyTorch libraries and was developed in PyCharm.

Performance evaluation criteria

Error computation is vital for evaluating prediction accuracy. Therefore, standard metrics including RMSE, MSE, and MAE were used. These metrics can be calculated using Eqs. (14), (15), and (16), respectively. Equation (17) is applied for determining the neural network’s maximum error.

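\[RMSE = \sqrt {\frac{1}{N}\sum\limits_{i = 1}^{N} \left( V_{True,i} - V_{Predict,i} \right)^{2} } \tag{14}\]

\[MSE = \frac{1}{N}\sum\limits_{i = 1}^{N} \left( V_{True,i} - V_{Predict,i} \right)^{2} \tag{15}\]

\[MAE = \frac{1}{N}\sum\limits_{i = 1}^{N} \left| V_{True,i} - V_{Predict,i} \right| \tag{16}\]

\[Error_{max} = \mathop {\max }\limits_{i} \left| V_{True,i} - V_{Predict,i} \right| \tag{17}\]

where N is the number of data points in the dataset, VTrue is the actual voltage of a single lithium-ion battery (including the anomalous values), and VPredict is the voltage predicted by the neural network.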
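The four error measures can be computed together as in the sketch below. The function name, the dictionary keys, and the sample values (in mV) are illustrative and are not taken from the study's results.

```python
import math


def prediction_errors(v_true, v_pred):
    """Return RMSE, MSE, MAE, and maximum absolute error for two sequences."""
    residuals = [t - p for t, p in zip(v_true, v_pred)]
    n = len(residuals)
    mse = sum(r * r for r in residuals) / n
    return {
        "RMSE": math.sqrt(mse),
        "MSE": mse,
        "MAE": sum(abs(r) for r in residuals) / n,
        "MaxError": max(abs(r) for r in residuals),
    }


# Made-up voltages in mV.
v_true = [3400, 3300, 3200]
v_pred = [3410, 3280, 3200]
errors = prediction_errors(v_true, v_pred)
print(errors)
```

Note that the MSE carries squared units, so its numerical value is not directly comparable with the RMSE and MAE.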

Result and discussion

Comparison of Informer and BO-Informer

The BMS dataset of the energy storage plant was sampled at a time interval of 60 s, and 11,671 data points from battery 60 for the period 3 August 2023 to 11 August 2023 were used as the dataset. In the dataset, abnormal voltage faults were identified for the time periods from 22:18:48 on 8 August 2023 to 9:51:49 on 9 August 2023, and from 21:40:48 on 9 August 2023 to 10:24:49 on 10 August 2023. A portion of the initial dataset contained missing data points, which could prevent the neural network model from identifying abnormal voltage characteristics precisely. Data preprocessing is therefore an essential preparatory stage that brings the data into a suitable format for constructing and training the neural network model. This study adopts a forward-filling strategy to address missing values, which replaces each missing entry with the value from the previous time step. The data were then normalized for improved model training, as described in Eq. (1) in section “Data standardization process”. The processed data were examined by correlation analysis, and the four most strongly correlated parameters were selected as inputs. Based on the pre-processed dataset, the Informer and Bayesian-Informer neural network models were used to predict battery voltage anomalies in the energy storage plant. In this study, the dataset was divided into training and test sets in the ratio of 7:3. Figure 6a shows the battery voltage anomaly prediction results of the Informer neural network without parameter optimization.
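The forward-filling and 7:3 split described above can be sketched as follows. The use of `None` to mark missing readings, the function names, and the chronological (unshuffled) split are assumptions for illustration; the text states only the fill strategy and the ratio.

```python
def forward_fill(series):
    """Replace each missing entry (None) with the previous known value."""
    filled, last = [], None
    for value in series:
        if value is None:
            value = last  # a leading gap stays None: nothing to copy yet
        filled.append(value)
        last = value
    return filled


def chronological_split(series, train_percent=70):
    """Split a time-ordered series into training and test parts."""
    cut = len(series) * train_percent // 100
    return series[:cut], series[cut:]


# Made-up voltage readings in volts with two gaps.
raw = [3.61, None, 3.63, 3.64, None, 3.40, 3.39, 3.62, 3.63, 3.65]
clean = forward_fill(raw)
train, test = chronological_split(clean)
print(clean, len(train), len(test))
```

For the 11,671 samples mentioned in the text, the same integer arithmetic would give 8169 training points and 3502 test points.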

The prediction outcomes of the Informer neural network model differ significantly from the actual data. Throughout the forecasting phase, errors accumulate progressively, so that subsequent predicted values deviate from the actual values. Figure 6b illustrates the absolute errors of the prediction results relative to the experimental data. During training, the model maintained low absolute errors between predicted and actual values. However, the absolute error gradually rose in subsequent predictions, potentially because of abrupt voltage fluctuations. The absolute errors mostly range from 0 to 165 mV, with the highest absolute error reaching 1030 mV at 7423 min. Alternatively, this behavior may indicate an overfitting issue stemming from an unsuitable hyperparameter configuration. The Informer model with Bayesian hyperparameter optimization effectively determines the most suitable hyperparameters within the specified search range. Figure 7 illustrates the prediction outcomes of the Bayesian-optimized Informer neural network trained on the first 70% of the experimental data. The BO-Informer model demonstrates a significant improvement in prediction accuracy over the base Informer neural network. Across the overall validation, the absolute errors mostly ranged from 0 to 43 mV. The maximum absolute error in the prediction results is 890 mV, occurring at the 7423-min mark. Consequently, the Bayesian algorithm enhances model performance and yields more precise prediction outcomes.

Comparative experiment of different neural network predictions

The performance of the proposed model was compared with baseline models including Informer, Transformer, GRU, and LSTM, using historical data lengths of 24 time steps. This comparative analysis encompassed one-step, two-step, and three-step prediction tasks. The results of the error comparison among these models on the test set are presented in Table 3, with the optimal errors highlighted in bold. Figures 8, 9, and 10 show bar charts illustrating the error comparison. The experimental findings demonstrate that the predictive performance of Informer surpasses that of the other baseline models. The proposed hybrid model, built upon Informer, is further refined and achieves optimal outcomes across all error metrics. Compared to Informer, reductions in RMSE, MSE, and MAE are observed, particularly in multi-step predictions. The most typical one-step prediction result is shown in Fig. 11. From the enlarged view, it can be seen that all these models can generally predict voltage anomalies. The proposed BO-Informer closely aligns with the actual values, achieving the best prediction results.
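The input/target construction implied by a 24-step history and one- to three-step prediction can be sketched as below. This assumes direct multi-output targets (the model emits all future steps at once); the text does not state whether multi-step prediction was direct or recursive, and the function name is illustrative.

```python
import numpy as np

def make_windows(series, history=24, horizon=1):
    """Slice a 1-D series into (history, horizon) input/target pairs."""
    series = np.asarray(series, dtype=float)
    n_samples = len(series) - history - horizon + 1
    if n_samples <= 0:
        raise ValueError("series is too short for the requested history and horizon")
    # Each input row holds `history` consecutive samples ...
    X = np.stack([series[i:i + history] for i in range(n_samples)])
    # ... and each target row holds the `horizon` samples that follow it.
    y = np.stack([series[i + history:i + history + horizon]
                  for i in range(n_samples)])
    return X, y

# Hypothetical series of 30 samples, just to show the resulting shapes.
series = np.arange(30, dtype=float)
for horizon in (1, 2, 3):
    X, y = make_windows(series, history=24, horizon=horizon)
    print(horizon, X.shape, y.shape)
```

Each additional prediction step removes one usable window from the end of the series, so the three tasks are evaluated on slightly different sample counts.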

The effect of training set proportion on prediction results

Data-driven approaches hinge not only on the formulation of the prediction model but also on the strategic partitioning of the dataset, a pivotal aspect in the prediction workflow. The size of the training set significantly influences the model’s predictive accuracy. Generally, a larger training set proportion yields more precise predictions from the neural network model. However, the relationship between the training set size and model performance enhancement is not linear, and an increase in training set volume may reduce computational efficiency. Hence, determining an effective training set size is crucial for achieving accurate predictions. In this study, training sets comprising 25%, 50%, and 75% of the data were utilized, as depicted in Fig. 12a–c, respectively. It can be observed that predictions from the neural network model trained on the 50% and 75% sets are notably more accurate than those from the 25% set. Nevertheless, the 75% set did not yield a significant improvement in prediction accuracy over the 50% set. Relative to the 25% set, the MSE decreased by 860.89 mV and 860.74 mV, the RMSE decreased by 722.52 mV and 722.20 mV, and the MAE decreased by 59.58% and 59.66% for the 50% and 75% sets, respectively. While larger training sets typically enhance the model’s ability to learn complex patterns within the data, they also demand more computational resources. The results suggest that increasing the training set size from 50% to 75% did improve prediction accuracy, but the gains were marginal compared to the computational costs incurred.
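To make the partitioning concrete, the sketch below computes the training and test sizes that each ratio would give for the 11,671-point dataset, assuming a chronological split with the training size rounded down. It also includes a helper for the percentage reductions quoted above; the helper names are illustrative.

```python
def chronological_split(n_points, train_ratio):
    """Return (n_train, n_test) when the first `train_ratio` share is used for training."""
    if not 0.0 < train_ratio < 1.0:
        raise ValueError("train_ratio must lie strictly between 0 and 1")
    n_train = int(n_points * train_ratio)  # rounded down (an assumption)
    return n_train, n_points - n_train

def relative_reduction(baseline, improved):
    """Percentage by which `improved` is lower than `baseline`."""
    return 100.0 * (baseline - improved) / baseline

N_POINTS = 11671  # dataset size reported in the text

sizes = {ratio: chronological_split(N_POINTS, ratio)
         for ratio in (0.25, 0.50, 0.75)}
for ratio, (n_train, n_test) in sizes.items():
    print(f"{ratio:.0%}: train={n_train}, test={n_test}")
```

For example, `relative_reduction(200.0, 50.0)` returns `75.0`; the error values in that call are hypothetical and serve only to show how a relative decrease is computed.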

To validate the model’s robustness, an additional dataset containing voltage anomalies was used for testing. This dataset was collected from a BMS in a western China energy storage facility with a rated battery voltage of 3.1 V. The prediction results from the model are depicted in Fig. 12d–f. Utilizing BO, the Informer neural network model achieves superior precision in forecasting voltage anomalies. The predicted values maintain a minimal margin of error compared to the actual voltage, with an MSE of approximately 1.7 mV and an MAE of around 2.06%. The experimental results validate the model’s ability to maintain accurate predictions despite variations in battery parameters and demonstrate its robustness and generalization ability.

The effect of different time intervals on prediction results

The initial BMS data was sampled every minute. The dataset size influenced the neural network model’s training efficacy. Effective learning and desired outcomes by the neural network model require sufficiently large datasets. However, larger datasets may introduce more noise and increase training complexity for the model. Thus, this study explored time intervals of 2, 3, 4, 5, 6, and 7 min. Employing a one-step prediction approach and utilizing the first 50% of the dataset for model training improved operational efficiency. Figure 13 illustrates the prediction outcomes. The BO-Informer neural network model excels in short-term battery anomaly prediction in an energy storage facility when sampling intervals are set at 2 and 3 min. However, inadequacies in data selection lead to subpar neural network model predictions of anomalous feature variations, as shown in Fig. 13c–f. For training set ratios exceeding 25%, the MSE, RMSE, and MAE metrics initially exhibit a decreasing trend as the sampling interval increases. The fluctuation in error at the 25% training set ratio may stem from the exclusion of abnormal voltage data states, resulting in error accumulation over time.

While the study highlights the advantages of shorter sampling intervals (2–3 min) for accurate anomaly detection, it also underscores the need for a balanced approach in selecting the optimal time interval. This involves considering noise levels, computational constraints, and the specific requirements of the energy storage system.
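One simple way to derive the coarser intervals from the 1 min record is to keep every k-th sample. The sketch below assumes this decimation approach; the text does not state how the 2 to 7 min series were produced, and averaging within each interval would be an equally plausible alternative.

```python
import numpy as np

def downsample(series, step):
    """Keep every `step`-th sample of a series recorded at a 1 min interval."""
    if step < 1:
        raise ValueError("step must be a positive integer")
    return np.asarray(series)[::step]

# Placeholder for the 11,671 one-minute samples reported in the text.
one_minute = np.arange(11671)

counts = {step: len(downsample(one_minute, step)) for step in range(2, 8)}
for step, n in counts.items():
    print(f"{step} min interval: {n} samples")
```

The sample count falls roughly in proportion to the interval, which is the trade-off the section describes: fewer points reduce noise and training cost but may miss short-lived anomalous features.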

Error analysis

Sections “The effect of training set proportion on prediction results” and “The effect of different time intervals on prediction results” delve into the impacts of training set size and sampling frequency on the predictive capability of the Bayesian optimized Informer neural network model. For a more comprehensive model assessment, a detailed analysis from an error evaluation standpoint is necessary. Figure 14a–c showcase the average percentage error, RMSE, and MAE across all datasets, respectively. MAE provides a clearer picture of true prediction errors by avoiding error offsetting. MSE heightens sensitivity to large error values. RMSE maintains alignment with the data scale, making it easier to interpret changes in overall prediction accuracy. Specific error assessment metric values are listed in Table 4. The model demonstrated improved prediction accuracy as the training set proportion increased from 25% to 50%. However, further increasing the training set proportion to 75% did not yield significant changes in error assessment metrics. Notably, the lowest error occurred at a 4 min sampling interval, with MAE values of 233.85 mV and 31.99 mV, RMSE values of 513.99 mV and 62.75 mV, and MSE values of 264.18 mV and 3.94 mV across various training set proportions. Figure 14 illustrates that inadequate dataset volume fails to capture genuine data characteristics. The 4 min sampling interval likely provides the BO-Informer model with an optimal balance of data distribution and quality, enabling the model to achieve the lowest MAE in predicting voltage changes. The comprehensive error analysis reveals the intricate interplay between training set size, sampling intervals, and predictive accuracy. The analysis suggests that selecting a 4 min dataset and employing a 50% training set size can lead to more precise predictions.
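For reference, the three metrics discussed above are commonly defined as follows. This is a sketch assuming the standard definitions, with $y_i$ the measured voltage, $\hat{y}_i$ the predicted voltage, and $n$ the number of test samples; these symbols are introduced here for illustration.

```latex
\begin{align}
\mathrm{MAE}  &= \frac{1}{n}\sum_{i=1}^{n}\left|y_i-\hat{y}_i\right|, \\
\mathrm{MSE}  &= \frac{1}{n}\sum_{i=1}^{n}\left(y_i-\hat{y}_i\right)^{2}, \\
\mathrm{RMSE} &= \sqrt{\mathrm{MSE}}.
\end{align}
```

Because MAE averages absolute values, positive and negative errors cannot cancel; the squaring in MSE weights large errors more heavily; and the square root returns RMSE to the scale of the data.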

Conclusion

This study employed the Bayesian algorithm to optimize the hyperparameters of the Informer neural network model, aiming to enhance the accuracy of predicting abnormal voltage fluctuations in lithium-ion batteries. The analysis compared prediction outcomes across various methods, training set proportions, and sampling time intervals.

(1) Neural networks demonstrate effective short-term prediction capabilities for voltage fluctuations in energy storage systems. Specifically, the Informer neural network model optimized through the Bayesian algorithm exhibited superior prediction accuracy compared to Informer, Transformer, GRU, and LSTM neural network models without parameter optimization, in 1-step, 2-step, and 3-step prediction scenarios. In the case of 1-step prediction, the highest RMSE in the test set was 9.118 mV, with an MSE of 0.0831 and an MAE of 6.708 mV.

(2) The training set size within the dataset notably impacts prediction outcomes, and selecting an appropriate training set size can yield optimal performance in degradation prediction. A training set size that is too low leads to suboptimal model predictions, whereas a size exceeding 50% yields improved prediction accuracy.

(3) The experiments revealed that excessively high sampling frequencies can introduce noise in the data and potentially lead to misleading performance trends. Setting the sampling interval to 4 min allowed the BO-Informer neural network model to achieve reduced prediction errors and preserve the original data features more effectively.

(4) In real-world energy storage plant operations, tasks like peak shaving and frequency regulation are common, leading to intricate voltage fluctuations. Future studies can investigate extensions of the model to diagnose specific types of voltage anomalies, enhancing fault detection capabilities. Additionally, exploring the model’s adaptability for voltage prediction in other battery systems can also be considered.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request. All data generated or analysed during this study are included in this published article.

Funding

Project supported by the Open Project of Key Laboratory in Xinjiang Uygur Autonomous Region of China (2023D04071); National Natural Science Foundation of China (52167016); Project supported by Key Research and Development Project of Xinjiang Uygur Autonomous Region (2022B01020-3).

Author information

Contributions

Conceptualization, Z.R. and J.W.; methodology, Z.R. and J.W.; validation, Z.R. and G.L.; data collation, Z.R.; writing (original draft preparation), Z.R.; writing (review and editing), Z.R.; supervision, G.L. and H.W.; project administration, J.W. All authors have read and agreed to the submitted version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Rao, Z., Wu, J., Li, G. et al. Voltage abnormity prediction method of lithium-ion energy storage power station using informer based on Bayesian optimization. Sci Rep 14, 21404 (2024). https://doi.org/10.1038/s41598-024-72510-z
