Abstract

Disparities in surgical outcomes often result from subjective decisions dictated by surgical training, experience, and available resources. To improve outcomes, surgeons have adopted advancements in robotics, endoscopy, and intra-operative imaging, including fluorescence-guided surgery (FGS), which highlights tumors and anatomy in real time. However, technical, economic, and logistic challenges hinder widespread adoption of FGS beyond high-resource centers. To overcome these impediments, we combined laser diodes, Raspberry Pi cameras and computers, off-the-shelf optical components, and 3D-printed parts to make a battery-powered, compact, dual white light and NIR imaging system with performance comparable to existing bulkier, pricier, and wall-powered technologies. We combined these components with off-the-shelf augmented reality (AR) glasses to create a fully wearable fluorescence imaging AR Raspberry Pi-based goggle system (FAR-Pi) and validated its performance in a pre-clinical cancer surgery model. A novel device design ensures distance-independent coalignment between real and augmented views. As an open-source, affordable, and adaptable system, FAR-Pi is poised to democratize access to FGS and improve health outcomes worldwide.


Introduction

Surgery is the primary curative method for most localized solid tumors. Surgical outcomes continue to improve for most tumor types due in part to advances in preoperative and intraoperative imaging technologies. However, mortality rates after cancer surgery are disproportionately higher in low-resource than in high-resource medical centers1, regardless of the aggregate income level of any country. High-resource medical centers have access to technologies that promise to improve cancer surgery outcomes, but these technologies are often too complex or expensive to be accessible to low-resource centers2.

One such technology is near infrared (NIR, 700–900 nm) fluorescence-guided surgery (FGS), which provides high-contrast, high-resolution delineation of important anatomy or malignant tissue in real time at depths up to 1–2 cm. FGS thereby augments surgeons’ vision, allowing them to reduce complications by identifying critical anatomy and malignant tissue that is otherwise difficult to see3,4,5,6,7. For example, without FGS technology in either minimally invasive or open surgery, failure to visually identify critical anatomic structures that are obscured in the surgical field can lead to iatrogenic injuries that are associated with high patient morbidity and poor surgical outcomes. Furthermore, failure to visually identify essentially invisible cancer margins leads to incomplete cancer resection and increased patient mortality. Several studies have validated the clinical utility of FGS, including (1) a randomized controlled trial leading to the 2017 FDA approval of 5-aminolevulinic acid (5-ALA) for malignant glioma excision, in which 41% of patients with 5-ALA-guided excision had progression-free survival at 6 months compared with 21% of patients with standard white-light-guided excision8, (2) a randomized single-blind trial in patients undergoing laparoscopic cholecystectomy, in which NIR imaging of indocyanine green (ICG) was approximately 3 times more effective than white light imaging at detecting biliary structures at risk of iatrogenic injury9, and (3) a prospective trial in 66 adults with cervical cancer, in whom pelvic nerves, which are at risk of injury during surgery when not visualized, were well visualized with NIR ICG imaging in > 80% of patients10.
Beyond these examples, there are numerous completed and ongoing clinical trials (19 otolaryngology-related studies ongoing in 2021 alone3) testing both traditional non-targeted fluorophores like ICG, 5-ALA, methylene blue, and fluorescein and newer targeted fluorophores like cancer-targeting monoclonal antibodies bound to IRDye800CW and the cancer-targeting small molecule LS301 developed in our lab, in applications including lymph node identification, lymphatic and vascular perfusion mapping, nerve identification, and cancer resection11,12,13,14,15,16.

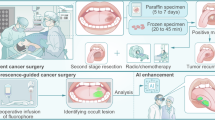

There are numerous commercial and research-grade FGS imaging systems built for both minimally invasive surgery (MIS) and open surgery7,17,18. A core technology shared across all of these systems (cart-based, endoscopic, handheld, and goggle-based FGS systems), summarized in Fig. 1A, is the combination of a targeted NIR fluorophore with the ability to detect fluorophore-specific NIR fluorescence and superimpose it on a coaligned visible light image. Further innovations in these systems include (1) sophisticated CCD, EMCCD, ICCD, or sCMOS camera sensors with high bit depth and enhanced NIR quantum efficiency, (2) multiple channels that capture white light images simultaneously with multiple fluorophores that have nonoverlapping excitation/emission spectra19,20, and (3) real-time filtering of ambient light21,22,23. The manner in which this signal is presented to surgeons varies between systems (Fig. 1B)7,17,18. In MIS settings, a separate monitor displays the NIR signal superimposed on the visible signal, effectively extending the surgeon’s white light vision to include NIR fluorescence. In the open surgery setting, however, existing systems display the fluorescent NIR signal on a separate monitor that requires clinicians to divert their attention from the surgical field. To overcome this limitation, we and others19,20,23,24,25,26,27,28,29,30,31,32,33,34 have introduced virtual reality (VR) and augmented reality (AR) FGS systems that use heads-up-display (HUD) see-through goggles to project the fluorescent signal directly onto the surgeon’s field of view in real time.

Summary of core fluorescence-guided surgery (FGS) technology present in existing FGS systems, examples of existing FGS applications, and breakdown of key components necessary to implement FGS technology with off-the-shelf accessible components. FGS systems excite and detect the fluorescence signal from a fluorophore that accumulates within a tissue of interest and display this signal to a surgeon to allow them to better visualize structures that are otherwise difficult to see. (A) The key components powering an open-surgery FGS system are reviewed in an example breast tumor excision. Prior to excision, a fluorophore that accumulates within the tumor is administered. Once superficial tissue is excised, tumor tissue (faint gray) is illuminated with an excitation light source (with room lights on), resulting in NIR fluorescent emission. Next, cameras detect both the reflected room light (VIS) and the NIR fluorescent light while filtering out any reflected excitation light. (B) In some FGS implementations, the imaging and illumination modules are housed in a handheld module that the surgeon positions over the surgical cavity, and a fusion of the visible and NIR detected images is presented on a separate display, allowing the surgeon to better visualize the tumor. An alternative is an AR implementation, where the fluorescent signal is false-colored and projected onto AR glasses in a position coaligned with the surgeon’s real-world view of the tumor. (C) The core FGS technology as well as an AR implementation of FGS can be achieved with a wearable system made of accessible components. Excitation can be achieved with compact laser diodes. Dual white light and NIR imaging can be achieved with Raspberry Pi cameras coupled with off-the-shelf optical filters and mirrors and coaligned in a compact 3D-printed assembly. 
Image acquisition and processing can be achieved with a battery-powered wearable Raspberry Pi computer, and AR display can be achieved with off-the-shelf AR glasses.

The clinical problems addressed by FGS are present around the world, but in current practice, existing FGS systems (including handheld, endoscopic, and cart-based systems) are available only to the select few with access to high-resource settings. In some applications of FGS, the complexity and expense of sophisticated targeted FGS fluorophores, such as those using costly cancer-targeting monoclonal antibodies, create a significant barrier to global adoption, and in these cases efforts to increase access to these novel targeted fluorophores are needed. However, many prominent clinical successes in FGS employ accessible non-targeted fluorophores like ICG and 5-ALA, and in these cases the barrier to global adoption of FGS is the inaccessibility of existing FGS imaging systems. Several factors drive this inaccessibility: existing systems are wall-powered, bulky (even handheld units such as the SPY portable handheld imager connect to a bulky wheeled cart), require support staff to operate, and are cost-prohibitive17,18. Our previous goggle-based AR system24,25,26,34,35 was a first step towards a more accessible wearable system that is more affordable than commercial systems and able to operate without support staff, but it was still wall-powered, used a bulky excitation source, and required custom-built NIR-enhanced camera sensors. For adoption in low-resource settings, FGS systems must be affordable, compact enough to fit into space-constrained operating rooms, battery-powered given potentially unreliable wall power, and able to function with minimal or no support staff.

In recent years there has been increased interest in using open-source hardware tools of the ‘Maker movement’36, including battery-powered compact single board computers (SBCs) like the Raspberry Pi (RPi), off-the-shelf electronics and sensors, and 3D printers, to democratize otherwise inaccessible medical technology37,38,39,40,41,42. This type of work, in which accessible solutions are sought in the face of resource constraints, has been called ‘frugal innovation’43,44. For example, the RPi coupled with off-the-shelf components has been used to develop multiple medical and research devices, including digital scanning microscopes45, syringe pumps46, ear-contactless auscultation of patients with COVID-1947, and a low-cost wireless endoscope42. In addition, several groups have attempted to build FGS systems using off-the-shelf Raspberry Pi components. Kim et al. found that the RPiV2 NoIR camera can detect NIR parathyroid tissue fluorescence48, but neither fluorescence detection limits nor laser power were characterized, and signal detection required 2-second exposure times, which are not compatible with real-time imaging. Li et al. showed that a Raspberry Pi computer could serve as the computational core of a projection-based NIR FGS system, but their system lacked a second visible camera stream, relied on long exposure times (330 ms), and used a camera that was an order of magnitude more expensive than the RPiV2 camera49. While these examples show the promise of off-the-shelf components, they fail to recapitulate key features of existing FGS systems and raise the question of whether accessible off-the-shelf technologies perform well enough to support the sophisticated functionality present in existing FGS systems.

Inspired by these efforts, we explore whether off-the-shelf RPiV2 cameras, despite having lower bit depth and NIR sensitivity than the scientific-grade cameras used in existing FGS implementations, can be combined with an RPi SBC and compact laser diodes to achieve the core NIR FGS technology of real-time coaligned white light and NIR fluorescence (VIS/NIR) imaging with ambient light subtraction. Furthermore, while this RPi-enabled core FGS functionality could support a variety of accessible FGS implementations, in the present work we pair RPi-enabled dual VIS/NIR imaging with off-the-shelf see-through HUD AR glasses to create a wearable fluorescence imaging AR RPi-based system (FAR-Pi) (Fig. 1C). We also introduce an innovative and simple solution that ensures distance-independent coalignment between the surgeon’s view through the AR glasses and the coaligned VIS/NIR signal projected on the AR glasses.

Unlike existing commercial systems, FAR-Pi is designed to be compact, wearable and battery-powered. By making the design open-source, accessible, inexpensive, and modular, FAR-Pi presents an opportunity to support multiple clinical use cases, foster innovation, allow for local manufacturing in areas where medical exports are limited, and democratize this previously inaccessible technology for the betterment of human health37,38,43.

Results

Core FGS functionality benchmarking

Performance specifications of existing commercial and research-grade FGS systems have been reported previously17,18,26,27,29,35,50, and in the sections that follow we compare the performance of the FAR-Pi excitation and light detection modules with these benchmarks. Table 1 summarizes these comparisons.

FAR-Pi excitation module – laser diode array

Existing FGS systems achieve NIR excitation with 4–31 mW/cm2 surface irradiance at working distances (distance between system and surgical field) ranging from 5 to 63 cm. The field of view (FOV) of existing FGS systems ranges from 3.3 cm2 to 280 cm2, and while the area of illumination is not specifically reported, it is reasonable to assume that it scales with FOV17,18 (Table 1). We first tested whether a battery-powered circular array of three 780 nm laser diodes (Fig. 2A) could achieve irradiance values and an area of illumination comparable to existing systems. The assembled laser diode array weighed 110 g and fit within a 3D-printed cylindrical housing 60 mm in height and 64 mm in diameter (Supplement Sect. 1, Figures S1–S3). When mounted on surgical headgear, the laser diodes were centered 5 cm above the eyes and angled 7° relative to the surgeon’s line of sight so that the center of illumination corresponded to the center of the surgeon’s view (Fig. 2B). In this assembly the laser diode array was 9 cm closer to the surgical field than the surgeon’s eyes were (Fig. 2B).
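The head-mount geometry above can be checked with simple trigonometry. The sketch below is a 2D side-view model, not the authors’ design procedure, and the function names are hypothetical. It assumes the laser sits 5 cm above the eye line and 9 cm closer to the field than the eyes, with the field 50 cm from the eyes; it also evaluates the diode inclination θ from the Fig. 2A caption for an illustrative radius d, since d is not stated in the text.

```python
import math

def head_mount_tilt_deg(height_above_eyes_cm, eye_to_field_cm, forward_offset_cm):
    """Downward tilt that makes the laser axis cross the surgeon's line of sight
    at the surgical field (2D side-view model)."""
    laser_to_field_cm = eye_to_field_cm - forward_offset_cm
    return math.degrees(math.atan2(height_above_eyes_cm, laser_to_field_cm))

def beam_center_shift_mm(tilt_deg, head_motion_cm):
    """Vertical offset between the beam center and the line of sight after the
    head moves toward or away from the field by head_motion_cm (tilt fixed)."""
    return 10.0 * abs(head_motion_cm) * math.tan(math.radians(tilt_deg))

def diode_inclination_deg(d_cm, working_distance_cm):
    """Inward tilt of a diode mounted a radius d from the array center so that its
    beam meets the array axis at the working distance (angle theta, Fig. 2A)."""
    return math.degrees(math.atan2(d_cm, working_distance_cm))

tilt = head_mount_tilt_deg(5.0, 50.0, 9.0)   # atan(5 / 41)
shift = beam_center_shift_mm(7.0, 5.0)       # 5 cm change in head distance
print(f"tilt = {tilt:.1f} deg, shift = {shift:.1f} mm")  # tilt = 7.0 deg, shift = 6.1 mm
```

With these assumed inputs the tilt comes out near 7°, and a 5 cm change in head distance moves the beam center about 6 mm off the line of sight, the same order as the 6.2 mm shift reported for the measured array.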

An illumination module consisting of a circular 780 nm laser diode array delivers sufficient laser irradiance at relevant working distances to support FGS. (A) CAD models and 3D-printed implementation of a circular arrangement of laser diodes. The distance d between each laser’s center and the array center, together with the working distance between laser diodes and surgical field, determines the angle of laser inclination θ. The 3D-printed implementation is an illumination module with a central white light LED surrounded by a 3-laser diode array. (B) To-scale schematic of an FGS implementation in which the laser diode array is head-mounted on the mid forehead approximately 5 cm above the eyes to illuminate the surgical field 50 cm away along the surgeon’s central field of view. In this position the illumination source is approximately 10 cm closer to the surgical field than the surgeon’s eyes are and makes an angle of 7° with the horizon. (C) Spectral characterization of a Laserlands 120 mW 780 nm laser diode demonstrating peak intensity at 783 nm with < 2 nm full width at half maximum. (D) Laser diode array spatial power distribution measured at distances between laser and illumination surface of 40 cm, 50 cm, and 60 cm. The spatial power distribution at 40 cm is shown as a two-dimensional heatmap, and horizontal and vertical cross sections at 40, 50, and 60 cm are plotted around the heatmap. (E) At each working distance, the area (cm2) over which irradiance exceeds threshold values of 4, 6, 8, and 10 mW/cm2 is plotted.

The laser array had a spectral peak at 783 nm with a 2 nm full width at half maximum (Fig. 2C). The distance dependence of surface irradiance, and the change in the area over which different irradiance thresholds were exceeded, were assessed by increasing the distance between laser diode and surface to a maximum of 60 cm. Maximum irradiance decreased from 22.5 to 8 mW/cm2 as distance increased from 40 to 60 cm (Fig. 2D). The area over which irradiance exceeded 10 mW/cm2 at a distance of 40 cm was 12.8 cm2. The area over which irradiance exceeded 4 mW/cm2 ranged from 27.7 to 29 cm2 at distances of 40 to 60 cm (Fig. 2E). Of note, given the shallow 7° angle between the illumination module and the surgeon’s horizon, the center of illumination shifts only slightly relative to the size of the illuminated area as the distance between the field and the eyes changes (6.2 mm shift for 5 cm deviations in head position).
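The threshold areas in Fig. 2E amount to counting the samples of a measured irradiance map that exceed each threshold and multiplying by the area of one sample. A minimal NumPy sketch, assuming a uniformly sampled map in mW/cm2 with a known sample spacing; the Gaussian spot is synthetic (peak set to the 22.5 mW/cm2 maximum at 40 cm, width chosen arbitrarily) and is not the measured distribution.

```python
import numpy as np

def area_above_threshold_cm2(irradiance, pixel_cm, threshold):
    """Area (cm^2) of a sampled irradiance map (mW/cm^2) that exceeds threshold."""
    return np.count_nonzero(irradiance > threshold) * pixel_cm ** 2

# Hypothetical Gaussian spot used only to exercise the function.
pixel_cm = 0.1
axis = np.arange(-5.0, 5.0, pixel_cm)
xx, yy = np.meshgrid(axis, axis)
peak, sigma_cm = 22.5, 1.5
irradiance = peak * np.exp(-(xx ** 2 + yy ** 2) / (2 * sigma_cm ** 2))

for thr in (4, 6, 8, 10):
    area = area_above_threshold_cm2(irradiance, pixel_cm, thr)
    print(f"> {thr} mW/cm2: {area:.1f} cm2")
```

For a Gaussian spot the exact area above a threshold T is 2πσ² ln(peak/T), which gives a quick sanity check on the pixel count.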

FAR-Pi coaligned NIR and white light detection – synchronized RPiV2 cameras

We next evaluated whether RPiV2 cameras could achieve synchronized dual NIR and white light imaging similar to some existing FGS systems. RPiV2 camera spatial resolution ranged from 138 to 227 μm (3.6–2.2 line pairs/mm, respectively) at distances between 35 and 65 cm (Fig. 3A). This agrees with theoretical predictions (Supplement, Section 2 A–B) and is within the 50–500 μm spatial resolution range of existing FGS systems (Table 1). Also in agreement with theoretical predictions, the RPiV2 camera remained in focus over a 20 cm range, from 35 to 55 cm, whereas existing FGS systems have reported depths of focus of 2–3 cm (Table 1).
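The theoretical predictions referenced above relate sensor and lens geometry to resolution and depth of field. The sketch below is one common thin-lens formulation, not necessarily the one used in the Supplement. It assumes the published Raspberry Pi Camera v2 values (1.12 μm pixels, 3.04 mm focal length, f/2.0) at full sensor resolution, and a circle of confusion of two pixels (2.24 μm); that last value is an arbitrary choice, and the depth-of-field limits are sensitive to it. The line-pair conversion reproduces the paired values quoted in the text.

```python
def lp_per_mm_to_um(lp_per_mm):
    """Smallest resolvable line width in micrometers for a line-pair frequency."""
    return 1000.0 / (2.0 * lp_per_mm)

def object_pixel_mm(distance_mm, pixel_um=1.12, focal_mm=3.04):
    """Object-space footprint of one sensor pixel (thin lens, m = f / (s - f))."""
    return (pixel_um / 1000.0) * (distance_mm - focal_mm) / focal_mm

def sampling_limit_lp_per_mm(distance_mm, **lens):
    """Pixel-limited (Nyquist) resolution: one line pair per two pixels."""
    return 1.0 / (2.0 * object_pixel_mm(distance_mm, **lens))

def depth_of_field_mm(focus_mm, focal_mm=3.04, f_number=2.0, coc_mm=0.00224):
    """Near/far limits of acceptable focus from the hyperfocal distance H."""
    h = focal_mm ** 2 / (f_number * coc_mm) + focal_mm
    near = focus_mm * (h - focal_mm) / (h + focus_mm - 2 * focal_mm)
    far = focus_mm * (h - focal_mm) / (h - focus_mm) if focus_mm < h else float("inf")
    return near, far

print(round(lp_per_mm_to_um(3.6)), round(lp_per_mm_to_um(2.2)))  # 139 227
for s in (350, 500, 650):
    print(s, round(sampling_limit_lp_per_mm(s), 2))
print([round(x) for x in depth_of_field_mm(450)])
```

Streaming at a reduced or binned resolution enlarges the effective pixel, which lowers the sampling limit accordingly.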

Raspberry PiV2 camera sensors can be combined with inexpensive optical components in a compact 3D-printed enclosure to achieve coaligned dual visible and NIR imaging. (A) Sensor dimensions and lens field of view, focal length, and aperture can be used to predict camera spatial resolution (line pairs per mm) and depth of field (depth of focus) limits as a function of object distance. Theoretical predictions were confirmed by determining the highest resolvable line pair group in a Thorlabs 1963 A resolution test target at a given distance. (B) To-scale schematic demonstrating optically aligned RPiV2 cameras, cold IR mirror that serves as a beamsplitter, excitation filter, and optical path of visible light (green line), excitation light (red line) and NIR fluorescent light (purple line). Inset shows CAD model of the assembled 3D-printed enclosure containing these components. (C) Superimposed visible (misaligned parts false-colored green) and NIR (misaligned parts false-colored purple) images of a resolution test target captured 30 cm, 50 cm, and 70 cm away from the camera assembly. (D) A projective transform defined from corresponding fiducial points obtained from the 50 cm NIR and visible images, when applied to images obtained at any distance, achieves near-perfect alignment between NIR and visible camera images independent of distance.

To achieve optically aligned visible white light and NIR imaging, the RPiV2 standard camera sensor (sensitive to visible white light but not NIR) and RPiV2 NoIR sensor (sensitive to NIR and visible light) were optically coaligned with a cold IR mirror that has > 95% visible light reflection and > 90% NIR light transmission (Fig. 3B) in a 3D-printed enclosure. This resulted in a lightweight (< 5 g) and compact (< 6 cm3) imaging module capable of synchronized white light and filtered NIR imaging.

Misalignment between the white light camera sensor (VIS) and the NIR camera sensor, arising from inaccuracies in 3D printing and sensor positioning, could be corrected through a software-based real-time image transformation applied to the VIS images. Without software correction, the alignment error between NIR and visible images was 1 pixel horizontally and 20 pixels vertically, which translates to vertical deviations of 3 and 5 mm between VIS and NIR images acquired at 30 and 50 cm, respectively (Fig. 3C). To align these images, a single projective transform was defined from matched fiducial markers present in the VIS and NIR images at 50 cm. Although the transform was determined at 50 cm, applying it to images acquired from 30 cm to 70 cm resulted in near-perfect alignment (within 1 pixel vertically and horizontally) between NIR and VIS images (Fig. 3D). Thus, a software-based projective transform, determined once at a single distance, overcame the inaccuracies inherent in aligning components with 3D-printed parts and achieved distance-independent, real-time, optically coaligned dual white light and NIR imaging. This underscores the potential for low-cost manufacturing practices like 3D printing to support the development of high-quality medical technologies in low-resource settings. Supplement Sect. 2 C–F and Figures S4–S8 provide further details on RPiV2 camera characterization and dual white light/NIR camera assembly.
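A projective transform (homography) between matched fiducials can be estimated with the direct linear transform. This NumPy sketch is illustrative only: the fiducial coordinates and the "true" misalignment are hypothetical, and in an OpenCV pipeline like the one described for FAR-Pi one would more typically call cv2.findHomography on the matched points and cv2.warpPerspective on each VIS frame. Because the cold mirror places both sensors on (nearly) the same optical axis, there is little parallax between them, which is consistent with a single calibration holding across distances.

```python
import numpy as np

def fit_homography(src_pts, dst_pts):
    """Direct linear transform: 3x3 H with dst ~ H @ [x, y, 1] from >= 4 point pairs."""
    rows = []
    for (x, y), (u, v) in zip(src_pts, dst_pts):
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # H is the right singular vector belonging to the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)
    return h / h[2, 2]

def apply_homography(h, pts):
    """Map (N, 2) pixel coordinates through H, including the perspective divide."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ h.T
    return homog[:, :2] / homog[:, 2:3]

# Hypothetical VIS -> NIR misalignment dominated by a ~20 px vertical offset.
true_h = np.array([[1.0, 0.002, 1.0],
                   [0.001, 1.0, 20.0],
                   [0.0, 0.0, 1.0]])
vis_fiducials = np.array([[50, 40], [590, 45], [585, 440], [60, 430], [320, 240]], dtype=float)
nir_fiducials = apply_homography(true_h, vis_fiducials)

est_h = fit_homography(vis_fiducials, nir_fiducials)
print(np.round(est_h, 4))
```

With noisy real fiducials, normalizing point coordinates before the SVD (or using a robust estimator) improves stability; exact synthetic points like these do not need it.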

FAR-Pi NIR fluorescence sensitivity

We next evaluated whether the NIR-sensitive NoIR RPiV2 camera, coupled with one of four excitation filter options with varying optical densities (OD) (Fig. 4A), could achieve sensitivity limits within the 50 pM to 60 nM range reported for existing commercial and research-grade systems (Table 1). Absolute pixel intensity and fluorescence signal-to-background ratio (SBR) were measured using a stable ICG-mimicking fluorescence phantom at concentrations of 250 pM–100 nM under both 13.5 mW/cm2 and 22.5 mW/cm2 irradiance (Fig. 4B–D). All data shown were acquired with a real-time exposure time (33 ms) and ISO 800. The single 830 nm longpass (LP) excitation filter had the lowest OD and, compared with the other excitation filter options, lower fluorescence SBR and higher control-well absolute pixel intensity. At both irradiance levels, the ~$40 single 830 nm LP option provided SBR > 2 for concentrations of 10 nM and higher. Control-well absolute pixel intensity was lowest for the 808 nm LP filter, which had the highest OD and highest cost (~$500). The 808 nm LP and the inexpensive double 830 nm LP (~$80) designs both had SBR exceeding 2 at concentrations of 5 nM and SBR > 1.2 at concentrations of 1 nM and above at both irradiance levels. The ~$225 832 nm BP filter performed similarly to the double 830 nm LP design. Increasing exposure time yielded higher pixel intensities and raised SBR above 1 at lower concentrations, reaching an SBR of 1.77 at 250 pM for the 808 nm LP filter at 22.5 mW/cm2 irradiance and 99 ms exposure time (see Supplement Section 2G, Figures S9–S12, for sensitivity results with varying exposure times and ISO levels).

Fluorescence sensitivity varies with excitation filter optical density and irradiance. (A) Optical densities of four excitation filter options, a single 830 nm Newport longpass filter (830 × 1 nm LP), two 830 nm LP filters in series (830 × 2 nm LP), one 832 nm Edmund bandpass filter (832 nm BP), and one 808 nm Semrock LP filter (808 nm LP), compared with the normalized emission spectrum of LS301, a tumor-targeting NIR fluorophore12. (B) Schematic of experimental determination of FAR-Pi fluorescence sensitivity limits using a 96-well plate with duplicate IR-125 concentrations ranging from 250 pM to 100 nM as well as a control well. Irradiances of 22.5 mW/cm2 and 13.5 mW/cm2 were used, corresponding to positioning of the laser diode at 40 cm and 50 cm from the phantom, respectively. The difference between images obtained with laser on and laser off defined an NIR-background-subtracted image for each well. Fluorescence SBR was calculated for each well by dividing the average background-subtracted pixel intensity of a given well by the average background-subtracted pixel intensity in the control well. (C, D) Log-log plots of average pixel intensity (out of 255; top panels) and SBR (bottom panels) vs. IR-125 concentration for each of the 4 excitation filter options with 22.5 mW/cm2 irradiance (C) and 13.5 mW/cm2 irradiance (D).
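The per-well SBR described in the Fig. 4 caption can be written in a few lines. The sketch assumes 8-bit laser-on and laser-off frames and boolean masks marking each well; the helper that reports the lowest concentration above an SBR threshold (such as the SBR > 2 criterion used in the text) is a hypothetical convenience, and the plate values are made up only to exercise the code.

```python
import numpy as np

def well_sbr(laser_on, laser_off, well_masks, control_mask):
    """Per-well SBR: mean background-subtracted intensity in each well divided by
    the mean background-subtracted intensity in the control well."""
    # Work in float so that uint8 subtraction cannot wrap around.
    diff = laser_on.astype(np.float64) - laser_off.astype(np.float64)
    control_mean = diff[control_mask].mean()
    if control_mean <= 0:
        raise ValueError("control well has no positive background-subtracted signal")
    return {key: float(diff[mask].mean() / control_mean) for key, mask in well_masks.items()}

def lowest_concentration_above(sbr_by_conc, threshold):
    """Lowest concentration whose SBR exceeds threshold, or None if none does."""
    passing = [conc for conc, sbr in sbr_by_conc.items() if sbr > threshold]
    return min(passing) if passing else None

def row_mask(r0, r1, shape=(6, 6)):
    """Boolean mask covering rows r0..r1-1 (a stand-in for one well region)."""
    mask = np.zeros(shape, dtype=bool)
    mask[r0:r1, :] = True
    return mask

# Hypothetical plate image: control well plus 10 nM and 1 nM wells (nM keys).
off = np.full((6, 6), 20, dtype=np.uint8)
on = off.copy()
control, well_10nM, well_1nM = row_mask(0, 2), row_mask(2, 4), row_mask(4, 6)
on[control] += 10
on[well_10nM] += 50
on[well_1nM] += 15

sbr = well_sbr(on, off, {10.0: well_10nM, 1.0: well_1nM}, control)
print(sbr)                                   # {10.0: 5.0, 1.0: 1.5}
print(lowest_concentration_above(sbr, 2.0))  # 10.0
```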

Computation module

Raspberry Pi SBCs are less computationally powerful than computers used in existing FGS systems18,25,26,29, but have the advantage of being wearable and relatively inexpensive49. We therefore tested if Raspberry Pi SBCs had sufficient computational power to support FGS.

Dual-camera streaming

We achieved dual-camera streaming with both the Compute Module 4 (RPiCM4) and the Raspberry Pi V4 SBC (RPiV4). Using threaded Python code, the PiCamera package, and OpenCV methods, the RPiCM4 and RPiV4 could read, process, modify, and display images via the HDMI port without noticeable delay. CSI-to-HDMI conversion boards, which allow the video signal to be transferred via HDMI cables, preserved video signal fidelity over 5 m (Supplement, Sections 3 A–C).
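One common way to keep two camera streams from blocking each other in Python is a reader thread per camera that retains only the newest frame. The sketch below illustrates that pattern; it is not the FAR-Pi code. The fake_camera function is a hypothetical stand-in for a real capture call (for example one wrapping the PiCamera package), and LatestFrameReader is an illustrative name.

```python
import threading
import time

class LatestFrameReader:
    """Pull frames from a blocking read function on a background thread and keep
    only the newest one, so the display loop never waits on a camera."""

    def __init__(self, read_frame):
        self._read_frame = read_frame
        self._lock = threading.Lock()
        self._frame = None
        self._count = 0
        self._stop = threading.Event()
        self._thread = threading.Thread(target=self._run, daemon=True)

    def start(self):
        self._thread.start()
        return self

    def _run(self):
        while not self._stop.is_set():
            frame = self._read_frame()  # blocks until the next frame arrives
            with self._lock:
                self._frame, self._count = frame, self._count + 1

    def latest(self):
        """Return (newest frame, number of frames read so far)."""
        with self._lock:
            return self._frame, self._count

    def stop(self):
        self._stop.set()
        self._thread.join()

def fake_camera(name, fps=30):
    """Hypothetical stand-in for a camera capture call."""
    counter = 0
    def read():
        nonlocal counter
        time.sleep(1.0 / fps)
        counter += 1
        return name, counter
    return read

vis = LatestFrameReader(fake_camera("vis")).start()
nir = LatestFrameReader(fake_camera("nir")).start()
time.sleep(0.3)
(vis_frame, vis_count), (nir_frame, nir_count) = vis.latest(), nir.latest()
vis.stop()
nir.stop()
print(vis_frame, nir_frame)
```

A display loop would call latest() on both readers each iteration, apply the VIS-to-NIR transform, and composite the result; dropping stale frames keeps latency bounded at the cost of occasionally skipping a frame.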

Laser diode array control and synchronization for ambient light compatible NIR imaging

We developed a custom ‘HAT’ (Hardware Attached on Top) circuit board that interfaces with the Raspberry Pi SBC general-purpose input/output (GPIO) pins and allows the laser diode to be toggled on and off through software (Figures S13, S14). To support NIR imaging with ambient lights on, we toggle the laser diode on and off with consecutive frames and compute the absolute difference between consecutive frames (∆Fn = |Fn – Fn−1|), which removes ambient NIR signal while retaining laser-induced fluorescence.
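The frame-differencing step ∆Fn = |Fn – Fn−1| needs one practical precaution with 8-bit camera data: subtracting unsigned integers directly wraps around instead of going negative. A minimal NumPy sketch with synthetic frames (constant ambient NIR plus fluorescence on alternate frames); OpenCV’s cv2.absdiff would serve the same purpose on uint8 images.

```python
import numpy as np

def ambient_subtracted(prev_frame, curr_frame):
    """|F_n - F_(n-1)| for 8-bit frames, computed in int16 so uint8 subtraction
    cannot wrap around (40 - 140 would otherwise give 156)."""
    diff = curr_frame.astype(np.int16) - prev_frame.astype(np.int16)
    return np.abs(diff).astype(np.uint8)

def ambient_subtracted_stream(frames):
    """Yield one background-subtracted frame per new frame after the first, so a
    30 fps input gives a 30 fps output whether F_n is laser-on or laser-off."""
    prev = None
    for frame in frames:
        if prev is not None:
            yield ambient_subtracted(prev, frame)
        prev = frame

# Hypothetical frames: constant ambient NIR plus fluorescence on laser-on frames.
ambient = np.full((4, 4), 40, dtype=np.uint8)
fluor = np.zeros((4, 4), dtype=np.uint8)
fluor[1:3, 1:3] = 100
frames = [ambient + fluor if n % 2 == 0 else ambient.copy() for n in range(5)]

out = list(ambient_subtracted_stream(frames))
print(len(out), int(out[0].max()), int(out[0].min()))  # 4 100 0
```

Taking the absolute value is what lets every frame pair, not just laser-on minus laser-off pairs, produce a usable output frame.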

The Raspberry Pi SBC was modified to allow continuous monitoring of camera frame read start and stop events, and these detected events were used to toggle the laser diode on and off with every other frame. Figure 5A shows consecutive frames captured using this approach with a tube of ICG, imaged with the FAR-Pi imaging module equipped with an 830 nm LP filter and a laser pulse lasting 70% of the frame duration. The “laser on” frames showed strong fluorescent signal from the ICG tube as well as background NIR signal, while “laser off” frames showed only background NIR signal. The absolute difference between consecutive frames obtained with a 70% duration laser pulse yielded a continuous 30 frames per second (fps) NIR-background-subtracted stream (Fig. 5B). When the laser pulse lasted 90% of the frame duration, ICG fluorescence was present in all frames (Supplemental Figure S15), suggesting that camera sensor exposure for the “laser off” frames had started before the laser was turned off.
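The 70% versus 90% result can be reasoned about with a toy timing model. Assume, hypothetically, that the sensor begins integrating the next frame a fixed fraction of a frame period before that frame’s event (for example, because exposure overlaps the previous readout). A pulse then leaks into the “laser off” frame whenever it is still on at that moment. The lead fraction is an assumption, not a measured value; the model only shows that any lead between 10% and 30% of a frame period reproduces the observed pattern.

```python
FRAME_PERIOD_MS = 1000.0 / 30.0  # 30 fps, so tF is about 33 ms

def laser_pulses_ms(n_frames, duty, frame_period_ms=FRAME_PERIOD_MS):
    """(on, off) times in ms for a pulse of duty * tF fired at every other frame event."""
    return [(n * frame_period_ms, (n + duty) * frame_period_ms)
            for n in range(0, n_frames, 2)]

def pulse_leaks_into_off_frame(duty, exposure_lead):
    """Toy model: the following frame starts integrating exposure_lead * tF before
    its own frame event; the pulse leaks if it has not ended by then."""
    return duty > 1.0 - exposure_lead

ASSUMED_LEAD = 0.2  # hypothetical fraction of a frame period, not measured
for duty in (0.7, 0.9):
    print(duty, pulse_leaks_into_off_frame(duty, ASSUMED_LEAD))  # 0.7 False, 0.9 True
print(laser_pulses_ms(4, 0.7))
```

Under this model, shortening the pulse buys margin against leakage at the cost of less excitation per laser-on frame, which is the trade-off the 70% setting reflects.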

Synchronization of laser excitation with alternating camera frames facilitates real-time NIR-background subtraction. (A) The device tree of the Raspberry Pi computer is modified to toggle a GPIO pin high/low with camera frame read start/stop events, generating a square wave (frame start/stop pulse, green) with 33 ms frame duration (tF) when imaging at 30 frames per second. Triggering the NIR laser diode array at the end of every other frame (double the period of the camera pulse) toggles laser excitation with each frame. Consecutive frames are shown of an Eppendorf tube filled with indocyanine green (ICG) next to a tube filled with water, captured using the NIR-sensitive camera in the FAR-Pi VIS/NIR imaging module (with an 830 nm LP excitation filter), with the laser diode pulse duration set to 70% of the frame duration (0.7tF). All frames show faint signal at the top of the image corresponding to ambient NIR light reflected off white paper (white arrows), but only alternate frames show fluorescent signal from the ICG tube. (B) To achieve ambient NIR subtraction, we determine the absolute value of the difference between the current frame (Fn) and the preceding frame (Fn−1). Three consecutive ambient-subtracted frames are shown (note the absence of ambient NIR light from the white paper at the top), generated from the 70% frame duration frames in (A).

In vivo evaluation

The ability of the 3-diode laser excitation array and dual RPiV2 VIS/NIR imaging module to detect in vivo accumulation of the intravenously administered cancer-targeting fluorophore LS30112 was tested using a subcutaneous breast cancer model in n = 4 mice. Figure 6A summarizes the experimental setup. Figure 6B shows and quantifies the excitation light that reflected off white paper, leaked through each of the 4 excitation filters, and was detected by the NIR camera. Figure 6C provides a visualization in one mouse, and a quantification across all mice, of detected fluorophore SBR for each of the 4 excitation filter options. The maximum normalized pixel intensity (out of 1.0) of NIR excitation light leaking through the single 830 nm LP filter assembly was 0.53 (Fig. 6B). The double 830 nm LP assembly, 832 nm BP filter, and 808 nm LP filter were more effective excitation light filters, with maximum leaked-excitation pixel intensities of 0.082, 0.106, and 0.090, respectively (Fig. 6B). Across all mice, the average LS301 peak SBR (Fig. 6C) measured with the single 830 nm LP filter (2.11 ± 0.16) was significantly lower than that measured with the double 830 nm LP filter (6.40 ± 0.62, p = 5.5 × 10⁻⁸), the 832 nm BP filter (4.9 ± 0.35, p = 6.0 × 10⁻⁶), or the 808 nm LP filter (5.93 ± 0.50, p = 2.0 × 10⁻⁷). LS301 peak SBR was significantly higher when measured with the double 830 nm LP filter than with the 832 nm BP filter (p = 0.0021) but was not significantly different (p = 0.47) from that measured with the 808 nm LP filter. Finally, LS301 peak SBR was significantly higher when measured with the 808 nm LP filter than with the 832 nm BP filter (p = 0.029).

Compact battery-powered FGS system consisting of an NIR laser diode array coupled with RPi v2 cameras and NIR optical filters can detect cancerous tissue in an in vivo mouse model. (A) Schematic of experimental setup. A mouse with a dorsal flank tumor is imaged 24 h after receiving the NIR cancer-targeting fluorophore LS301 via tail vein injection. The fluorophore is excited with the FAR-Pi laser diode array, and fluorophore emission is detected with the FAR-Pi dual VIS/NIR imaging module, which consists of 2 cameras coupled with a cold IR mirror as a beamsplitter (BS) and an excitation filter (ExF). Four imaging modules were tested, corresponding to 4 excitation filter options (one Newport 830 nm LP, two stacked Newport 830 nm LP, one Edmund 832 nm BP, and one Semrock 808 nm LP). (B) Pixel intensity heatmap (out of 1) of excitation light reflecting off white paper captured by the NIR-sensitive camera for each excitation filter option. Below each heatmap, the pixel intensity along the line through the image that intersects the peak intensity is plotted. Larger pixel intensity corresponds to greater excitation light leakage through the excitation filter. (C) Visual and quantitative view of in vivo NIR fluorescence emitted from the cancer-targeting NIR fluorophore LS301 measured with each of the FAR-Pi imaging module excitation filter options in the same mouse. In each case, coaligned simultaneous visible and NIR images are captured with laser on and off, the NIR signal is processed to generate an NIR-background-subtracted image, and the background-subtracted image is divided by the excitation filter-specific leaked excitation peak intensity (top half) to generate an SBR heatmap. The SBR heatmap is shown as a partially transparent overlay on the coaligned visible image using a threshold of SBR > 1.5. 
The lower panel is a box-and-whisker plot quantifying the peak SBR value across all mice, grouped by the excitation filter used during data acquisition. The median is shown in red; the top and bottom lines show the 75th and 25th percentiles, respectively. The blue notch indicates a 95% confidence interval around the median.
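The SBR heatmap and overlay in panel (C) can be sketched as below. Assumptions, none of which the caption specifies: NIR frames are 8-bit and are normalized to 0–1 so they share a scale with the leaked-excitation peak (for example 0.082 for the double 830 nm LP assembly); negative background-subtracted values are clipped to zero; and the overlay color and opacity are arbitrary choices.

```python
import numpy as np

def sbr_overlay(visible_rgb, nir_on, nir_off, leak_peak, threshold=1.5,
                color=(0, 255, 0), alpha=0.5):
    """Return (overlay, sbr, mask) for one coaligned VIS/NIR frame pair."""
    # Background-subtract in normalized units (0..1) and clip negatives to zero.
    bg_sub = np.clip((nir_on.astype(np.float64) - nir_off.astype(np.float64)) / 255.0, 0.0, None)
    # Divide by the filter-specific leaked-excitation peak (same 0..1 scale).
    sbr = bg_sub / leak_peak
    mask = sbr > threshold
    overlay = visible_rgb.astype(np.float64)  # astype copies, input is untouched
    overlay[mask] = (1.0 - alpha) * overlay[mask] + alpha * np.asarray(color, dtype=np.float64)
    return np.round(overlay).astype(np.uint8), sbr, mask

visible = np.full((3, 3, 3), 100, dtype=np.uint8)
nir_off = np.zeros((3, 3), dtype=np.uint8)
nir_on = nir_off.copy()
nir_on[0, 0] = 51  # 0.2 after normalization, SBR about 2.4 with leak 0.082
nir_on[1, 1] = 10  # SBR about 0.5, below threshold
overlay, sbr, mask = sbr_overlay(visible, nir_on, nir_off, leak_peak=0.082)
print(int(mask.sum()), overlay[0, 0].tolist())  # 1 [50, 178, 50]
```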

Using FGS core components to implement AR wearable FGS

The preceding results demonstrate that a battery-powered 780 nm laser diode array, coupled with RPiV2 visible and NIR-sensitive cameras, optical filters, and a Raspberry Pi computational module, provides the core functionality necessary for ambient light-corrected coaligned white light and NIR FGS. The sections that follow present results specific to an AR see-through wearable FGS implementation that incorporates this core FGS functionality.

Ensuring alignment of wearable visible and NIR cameras with user’s view

The signal detected by the FGS NIR-sensitive camera (“camera-view”), which is projected to the user on the AR glasses, must be aligned with the user’s real-world view (“surgeon’s-view”) to ensure surgical accuracy. When the optical axis of the camera-view differs from that of the surgeon’s-view, the two views are misaligned, and this misalignment is typically corrected through software-based image transformation or by relying on fixed fiducial markers in the surgical field28,29,51. However, because of parallax, a software-based image transformation defined at one distance fails to align the camera-view and surgeon’s-view once the distance between the surgeon and the surgical field changes. Figure 7A-C shows a schematic and 3D rendering of the experimental setup used to quantify this alignment error: the FGS imaging module is positioned at eye level, midway between the eyes, and images of a resolution target positioned 40–60 cm away are captured both with the VIS/NIR camera module and with a camera positioned behind the glasses to mimic the surgeon’s eye (‘surrogate-eye’). Figure 7C is a 3D rendering of the surgeon’s view, where the resolution target (purple) is seen through the glasses and the image of the target captured by the VIS/NIR camera module is projected onto the surface of the glasses in green. Figure 7D shows the resolution target images acquired by the VIS/NIR module (green) and the ‘surrogate-eye’ camera (purple) with the target 50 cm away; the surgeon’s view and the VIS/NIR module are misaligned by 6.9 mm vertically and 23.0 mm horizontally. Fiducial markers that match between the images define a fixed projective transform, which is then applied to VIS/NIR module images captured as the distance varies ± 10 cm from the 50-cm calibration distance. 
At each distance, the horizontal error between the transformed VIS/NIR module images and the surrogate-eye images is calculated and plotted in Fig. 7E. Above the plot, the transformed VIS/NIR and surrogate-eye camera images are displayed, zoomed in on group 1.0 of the resolution target, for − 5 cm, 0 cm, and + 5 cm from the calibration distance. While the transformed images show excellent alignment at 50 cm, the projective transform-corrected images show horizontal alignment errors that increase linearly by 1 mm for every 2 cm deviation from the calibration distance. Thus, with the imaging module placed between the eyes, a fixed projective transform derived at a single distance does not align the VIS/NIR module with the surgeon’s view, and alignment would likely require recalibrating the projective transform at each distance.
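The linear growth of this error is what simple parallax geometry predicts. The sketch below uses an idealized model that is an assumption, not something stated in the text: the camera and eye have parallel optical axes separated by a lateral offset b, and the calibration transform exactly cancels that offset at the calibration distance d0. Reusing the transform at distance d then leaves a residual misalignment, measured in the target plane, of e(d) = b(d − d0)/d0, so the error slope is b/d0. Taking b as the 23.0 mm horizontal offset measured at 50 cm (also an assumption, since part of that offset could be angular) predicts ±4.6 mm at ±10 cm, a slope of 0.46 mm/cm, which is close to the reported 1 mm per 2 cm. The function name is hypothetical.

```python
def parallax_error_mm(d_mm: float, d0_mm: float, baseline_mm: float) -> float:
    """Residual misalignment (mm, in the target plane) at distance d_mm when a
    correction calibrated at d0_mm is reused, for a camera laterally offset by
    baseline_mm from the eye with parallel optical axes (idealized model)."""
    return baseline_mm * (d_mm - d0_mm) / d0_mm


if __name__ == "__main__":
    d0 = 500.0        # calibration distance: 50 cm, expressed in mm
    b_offaxis = 23.0  # assumed lateral offset: horizontal offset seen in Fig. 7D
    for d in (400.0, 450.0, 500.0, 550.0, 600.0):
        err = parallax_error_mm(d, d0, b_offaxis)
        print(f"d = {d / 10:.0f} cm -> predicted horizontal error {err:+.1f} mm")
    # Error slope in mm per cm of distance change
    print(f"slope = {b_offaxis / d0 * 10:.2f} mm/cm")
```

In this model a coaxial arrangement corresponds to b ≈ 0, so the residual error vanishes at every distance, which is the motivation for the beamsplitter design described next.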

Alignment between the FAR-Pi dual visible/near infrared (VIS/NIR) camera module and the surgeon’s eye is maintained at any distance if the VIS/NIR module and eye are optically coaxial. In A-E) the VIS/NIR module (green cube) is between the eyes (not aligned with either eye), whereas in F-J) a beamsplitter in front of the augmented reality (AR) glasses makes the VIS/NIR module and eye optically coaxial. A) Experimental schematic with a resolution target positioned 40 to 60 cm away from the AR glasses, with the VIS/NIR module between the eyes. B-C) 3D rendering of the resolution target (purple) in front of the glasses (B) and viewed through the glasses (C), with the image captured by the VIS/NIR module projected on the glasses (green). D) A camera placed behind the glasses visualizes what the surgeon sees; this ‘surrogate-eye’ camera output is shown in purple. With the resolution target 50 cm from the glasses, VIS/NIR camera images (green) do not align with ‘surrogate-eye’ images. Matching fiducial markers define a projective alignment transformation. E) Misalignment error between transformed images and ‘surrogate-eye’ images, plotted for resolution target distances ± 10 cm from the calibration distance, showing an increase of 1 mm in horizontal misalignment per 2 cm deviation. This misalignment is visualized above the plot. At 50 cm the VIS/NIR images and the ‘surrogate-eye’ images align (0 cm box above plot), but when the resolution target is moved ± 5 cm from the calibration distance, applying the 50-cm-derived transform to the VIS/NIR images no longer achieves alignment with ‘surrogate-eye’ images (± 5 cm boxes). F-H) Similar to A-C), but with the VIS/NIR module mounted above a beamsplitter (yellow) placed in front of the glasses. I) With the resolution target 50 cm from the glasses, the ‘surrogate-eye’ image (purple) and VIS/NIR image (green) are more closely aligned than in D). 
As in D) a projective alignment transform is defined at 50 cm by matching fiducial markers. J) As in E) misalignment between transformed VIS/NIR images and ‘surrogate-eye’ images is quantified and visualized. Unlike E), images at all distances remain aligned despite the 50-cm-derived transform being applied at each distance.

Figure 7F-H shows an alternative assembly that overcomes the parallax problem seen in Fig. 7A-C by making the optical paths of the VIS/NIR camera and the surgeon’s eye coaxial. In this assembly, a beamsplitter is placed at 45° in front of the surgeon’s eye and the VIS/NIR imaging module is aligned above the beamsplitter. The baseline misalignment between the eye and the VIS/NIR cameras is 17.3 mm vertically and 3.4 mm horizontally (Fig. 7I), a smaller total offset than in Fig. 7D. As in Fig. 7D, matched fiducial markers between the surrogate-eye images and the VIS/NIR images define a projective transform that is then applied to the VIS/NIR module images as the target distance varies from 40 to 60 cm. Figure 7J repeats the analysis of Fig. 7E for the beamsplitter design of Fig. 7F-H. In this optically coaxial assembly, the fixed projective transform defined at 50 cm maintains alignment between the imaging module and the surgeon’s eye at every tested distance, with total alignment error remaining below 0.5 mm vertically and 0.3 mm horizontally as the target moves up to 10 cm closer to or farther from the calibration distance. Thus, when the VIS/NIR imaging module is made coaxial with the user’s eye via a beamsplitter, a single projective transform defined at one calibration distance can be applied to detected images before they are projected to the user to ensure alignment across working distances.
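A fixed projective transform (homography) of this kind can be estimated from four or more matched fiducial markers with the direct linear transform (DLT). The sketch below is a minimal NumPy version with Hartley normalization, offered as an illustration rather than the FAR-Pi implementation: the text does not state which routine was used (OpenCV's cv2.findHomography is a common alternative), and the function names are hypothetical.

```python
import numpy as np


def _normalize(pts: np.ndarray):
    """Hartley normalization: move the centroid to the origin and scale so the
    mean distance from it is sqrt(2). Returns normalized points and the 3x3 map."""
    centroid = pts.mean(axis=0)
    mean_dist = np.linalg.norm(pts - centroid, axis=1).mean()
    s = np.sqrt(2.0) / mean_dist
    T = np.array([[s, 0.0, -s * centroid[0]],
                  [0.0, s, -s * centroid[1]],
                  [0.0, 0.0, 1.0]])
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ T.T
    return homog[:, :2], T


def estimate_homography(src: np.ndarray, dst: np.ndarray) -> np.ndarray:
    """Estimate the 3x3 projective transform H with dst ~ H @ src from
    N >= 4 matched (x, y) points given as N x 2 arrays (normalized DLT)."""
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    if src.shape != dst.shape or src.shape[0] < 4:
        raise ValueError("need at least 4 matching (x, y) point pairs")
    src_n, T_src = _normalize(src)
    dst_n, T_dst = _normalize(dst)
    rows = []
    for (x, y), (u, v) in zip(src_n, dst_n):
        # Each correspondence gives two linear equations in the 9 entries of H.
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    # Least-squares solution: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(np.asarray(rows))
    H_n = vt[-1].reshape(3, 3)
    # Undo the normalization on both sides, then fix the arbitrary scale.
    H = np.linalg.inv(T_dst) @ H_n @ T_src
    return H / H[2, 2]


def apply_homography(H: np.ndarray, pts: np.ndarray) -> np.ndarray:
    """Map N x 2 points through H using homogeneous coordinates."""
    pts = np.asarray(pts, dtype=float)
    homog = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return homog[:, :2] / homog[:, 2:3]
```

Calibrating once at 50 cm then amounts to calling estimate_homography(camera_fiducials, eye_fiducials) a single time and reusing the returned matrix for every later frame. In the coaxial design of Fig. 7F-J, that reuse remains valid as the working distance changes.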

Incorporating a see-through augmented reality display

To provide the user with a projected view of the fluorescent signal detected by the FAR-Pi imaging module, see-through augmented reality (AR) glasses (Rokid Air, Rokid, Hangzhou, China) were selected, modified with custom 3D-printed mounts, and combined with the FAR-Pi imaging and illumination modules using the beamsplitter-based design shown in Fig. 7F-H. The illumination laser diode array module was positioned mid-forehead, midway between the eyes, and angled so that the center of the illumination array corresponded to the center of the surgeon’s field of view at a 50 cm working distance. The fully assembled system (Fig. 8A) consists of head-mounted components (390 g), a waist-worn computational module (580 g), and a USB battery pack (460 g) providing 5 h of power. Assembly steps are detailed in Methods and Supplemental Sect. 5, Figures S16-24.

FAR-Pi system achieves dual NIR-visible coaligned augmented-reality fluorescence imaging in a fully wearable form using off-the-shelf components. A) The <$1400 FAR-Pi FGS is a fully wearable, battery-powered assembly that recapitulates the functionality of existing FGS systems requiring wall power and support staff, while being orders of magnitude smaller and less costly. B) Images captured while looking at a stopwatch through the glasses show both the real-world view of the stopwatch (‘see-through reality’) and a projected view of the stopwatch (‘projected’) captured by the FAR-Pi system cameras. A 50 ms delay is demonstrated between the real-world view and the projected view. C) Image captured while looking at a rectangular calibration target through the glasses. In this view a menu with green text is projected to the user; otherwise the real-world view of the calibration target is unobstructed. D) The calibration process starts, and a calibration target captured by the FAR-Pi cameras is projected to the user in purple, with the corners projected in green. The surgeon selects the corresponding corners in their field of view (open circles) to define a transformation that is subsequently applied in real time to coalign the camera signal and the surgeon’s view. E) With calibration complete, the alignment transformation is applied, aligning the camera’s and user’s views of the calibration target. F) Schematic of an experiment in which an image is captured while looking at a vial of 1 mM ICG through the glasses. G) Looking through the glasses at a vial of ICG while the laser is off. With no excitation there is no detected fluorescence and therefore no projected overlay. Thus, the user sees the real-world ICG vial through effectively transparent glasses, while also seeing a menu with green and blue text projected at the top of their view. 
H) Once the pulsed laser is turned on, the background-subtracted NIR fluorescence signal detected from the ICG vial is projected in false-color green onto the surgeon’s field of view. Note the close alignment between the projected signal and the real-world view, achieved in real time by applying the image transform derived in D).

Placing a camera behind the Rokid Air at the position of the user’s eye allows visualization of what the user sees when looking through the FAR-Pi system. Figure 8B is a screenshot from a video stream captured while looking through the glasses at a stopwatch, with the projected image shifted to appear adjacent to, rather than superimposed on, the stopwatch. The latency between the real-world view of the stopwatch and the projected view is approximately 50 ms in the screenshot. This compares favorably with the 81–94 ms latencies measured in existing head-mounted systems such as the Oculus Rift, Oculus Quest, and Valve Index and the 54 ms latency measured in a custom Acer see-through HMD developed by Microsoft52, is lower than the 100 ms latency observed in the HoloLens 253, and is consistent with the < 100 ms latency reported by Mela et al. (no lower range reported) for a custom-built stereoscopic AR FGS system29.

Figure 8C-E, Supplemental Figures S25-27, and Supplemental Video 1 demonstrate the user’s view through the glasses when looking at a rectangular calibration target. Initially, the glasses project a window with user interface elements at the top and an otherwise black background, making the glasses effectively transparent and allowing the user to see primarily the real-world view (Fig. 8C). In Fig. 8D the calibration process has begun, and a purple overlay consisting of the calibration target detected by the FAR-Pi cameras is projected onto the glasses. The user thus sees both the real-world view and the FAR-Pi camera view, which are initially misaligned. The user then selects the positions of the real-world calibration target corners that correspond to the automatically detected corners (green dots), and this defines the projective transform (following the same process as in Fig. 7D and 7I) that is applied during subsequent use to ensure distance-independent alignment between the FAR-Pi cameras and the surgeon’s eye. In Fig. 8E the FAR-Pi camera view is modified in real time using this projective transform, and the projected view of the calibration target aligns with the surgeon’s view. Figure 8F-H and Supplemental Video 1 demonstrate the user’s view through the FAR-Pi system when looking at a vial of ICG. In Fig. 8G the laser is off and there is no detected fluorescent signal to project, so the user sees the real-world view through effectively transparent glasses. In Fig. 8H the laser is turned on, and the detected fluorescent signal is processed, transformed using the projective transform derived during calibration, and projected onto the screen of the glasses in a position coaligned with the real-world view of the ICG vial.
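The per-frame display step described above (ambient subtraction, thresholding, warping with the calibration transform, and false-color rendering) can be sketched in NumPy. Assumptions not taken from the source: frames are 2-D arrays, the overlay is a binary mask from a hypothetical fixed threshold, H maps camera pixel coordinates to display pixel coordinates, and nearest-neighbour inverse mapping stands in for whatever warping routine FAR-Pi actually uses (cv2.warpPerspective would be a typical choice). Pixels without signal stay black, which the see-through display renders as transparent, consistent with the black-background behavior described for Fig. 8C and 8G.

```python
import numpy as np


def warp_nearest(img: np.ndarray, H: np.ndarray, out_shape: tuple) -> np.ndarray:
    """Warp a 2-D image into out_shape (rows, cols) with the 3x3 projective
    transform H (source -> destination) using nearest-neighbour inverse mapping."""
    rows, cols = out_shape
    yy, xx = np.mgrid[0:rows, 0:cols]
    dest = np.stack([xx.ravel(), yy.ravel(), np.ones(xx.size)])
    src = np.linalg.inv(H) @ dest  # source location for every output pixel
    sx = np.rint(src[0] / src[2]).astype(int)
    sy = np.rint(src[1] / src[2]).astype(int)
    valid = (sx >= 0) & (sx < img.shape[1]) & (sy >= 0) & (sy < img.shape[0])
    out = np.zeros(rows * cols, dtype=img.dtype)
    out[valid] = img[sy[valid], sx[valid]]
    return out.reshape(rows, cols)


def overlay_frame(nir_on, nir_off, H, out_shape, threshold):
    """Ambient-corrected NIR frame -> green-on-black RGB image for the display."""
    # Laser ON minus laser OFF removes ambient NIR; negative noise is clipped.
    signal = np.clip(nir_on.astype(float) - nir_off.astype(float), 0, None)
    mask = (signal > threshold).astype(np.uint8)  # hypothetical fixed threshold
    warped = warp_nearest(mask, H, out_shape)
    rgb = np.zeros(tuple(out_shape) + (3,), dtype=np.uint8)
    rgb[..., 1] = warped * 255  # false-color green channel only
    return rgb
```

Because the transform is fixed after calibration, its inverse and the sampling grid could be precomputed once rather than per frame, which matters on a Raspberry Pi where latency is a design constraint.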

Discussion

In this work, we show that battery-powered laser diodes, off-the-shelf optical filters and mirrors, a Raspberry Pi computer, and low-cost CMOS-based Raspberry Pi v2 cameras (without enhanced NIR sensitivity or prolonged exposure times), assembled in 3D-printed enclosures, can be combined into a novel battery-powered FGS system that (1) recapitulates the NIR fluorescence sensitivities reported for commercial systems, (2) achieves dual-channel, coaligned, simultaneous NIR and white light imaging, and (3) achieves real-time ambient light subtraction through pulsed laser excitation synchronized with camera frame capture. The goal of our animal validation studies was to demonstrate the system’s ability to detect NIR fluorophore-labelled cancer in vivo, but the core functionality of the system is also relevant to other surgical domains where AR has proven useful. For example, dual coaligned NIR and white light imaging co-registered with the surgeon’s view is the core technology in imaging-assisted AR orthopedic surgery and neurosurgery, where coaligned infrared and visible light detection is used to track fiducial markers and co-register the patient with radiologic imaging data54,55. Our system would therefore support this type of application from a hardware perspective, though integration with radiology images would likely require an alternative computational module with more computational power. The core FGS functionality that we achieve with off-the-shelf components could also help make other existing surgical AR applications, such as endoscopic FGS56 and hand-held FGS57, more accessible. 
For example, the FAR-Pi imaging and computational module could replace the RPi Zero and IMX219 camera sensor used in the low-cost endoscopic system described by Lazarus et al.42 to create an accessible FGS endoscopy system capable of dual white light and NIR fluorescence imaging. Alternatively, the FAR-Pi imaging and computational modules could be combined with the illumination module in a single enclosure to function as a compact battery-powered handheld FGS unit that expands upon the accessible handheld FGS system of Okusanya et al.58. In some surgical settings these alternative approaches may be preferable to a head-mounted system, and the fact that our core dual-camera visible and NIR imaging module could support them demonstrates the broad applicability of our system.

In the present work we show how our Raspberry Pi-based FGS illumination and imaging modules can be combined with off-the-shelf AR glasses to create an AR FGS system. A key challenge with AR systems is that what is displayed on the see-through display must be spatially aligned with the real-world view the surgeon sees through it. Without proper spatial alignment, the surgeon may see malignant tissue highlighted over benign tissue and as a result excise benign tissue while leaving malignant tissue behind. We show that when the camera and eye are not optically aligned (as is typically the case in existing see-through dual VIS/NIR systems26,31,59,60), alignment can be achieved by calibrating at a fixed distance, but this correction fails at distances other than the calibration distance. To overcome this limitation and ensure that our system accurately aligns the detected NIR and visible camera signals with the surgeon’s eye at any distance, we introduced a beamsplitter in front of the glasses that makes the camera optically coaxial with the surgeon’s eye. This simplifies the AR FGS system by achieving distance-independent registration without additional hardware such as distance-tracking sensors or fixed-position fiducial markers29,34. A similar approach was introduced by Lee et al., but unlike our system, their system lacked a second coaligned visible camera feed and required manual adjustment of camera position to ensure alignment between camera and eye33.

In addition to spatial alignment, an AR system must also minimize the delay (latency) between the projected images and the surgeon’s real-world view. While zero latency would be ideal, existing systems that use an external monitor, like endoscopic systems and some existing FGS systems, are estimated to have a 50 ms delay, while the HoloLens 2, which has been used in numerous surgical mixed reality applications, has a minimum latency of 100 ms32,53,61,62,63. Khan et al. directly tested the impact of increasing latency (by adding delays of 50–150 ms beyond baseline latency) on both surgical task completion and surgeons’ perception while working with a traditional external display or a HoloLens 2. Total latency above 150–200 ms was found to significantly affect surgeons’ perception and performance, corroborating other studies64,65 that similarly recommended keeping latency below 150–200 ms. Our system demonstrated a 50 ms latency, consistent with the < 100 ms latency reported for Mela et al.’s stereoscopic AR FGS system and within the thresholds suggested by Khan et al.29,53.

Limitations

There are several limitations of the FAR-Pi system that offer opportunities for improvement. The software interface that we designed for the FAR-Pi is intended as a working proof-of-concept; in future versions we aim to improve both processing speed and the graphical user interface based on user testing. The FAR-Pi laser diodes have a weak but long NIR tail that contributes a non-zero background signal, particularly with lower-OD filters, which decreases SBR. FAR-Pi system sensitivity could be boosted by adding excitation clean-up filters (see Figures S1 and S3). The illumination module consists of 3 lasers at a fixed angle of inclination, with a Gaussian power distribution at the illuminated surface. Dynamically adjusting the lasers’ angle of inclination could ensure a more homogeneous power distribution as working distance changes. The illuminated area and its homogeneity could also be increased by incorporating more, and higher-power, laser diodes coupled with a diffuser. Adjustable surface irradiance, a feature available in some existing FGS devices, could be achieved by laser diode pulse width modulation. Because the system relies on NIR wavelengths, imaging depth is limited to 1–2 cm7,66, which may be insufficient for deeper metastases beyond the primary tumor site. This is a general limitation of NIR FGS systems that has been highlighted in recent reviews, where potential solutions such as incorporating additional modalities like tomography, short-wave infrared (SWIR) imaging, or nuclear imaging7 have been proposed. In principle, the FAR-Pi system is easily modified by swapping the RPiV2 camera for a SWIR-sensitive USB-3 or CSI-interface camera, though at current prices SWIR cameras would increase the cost of the FAR-Pi system by an order of magnitude.

It is important to note that while the results presented demonstrate that the FAR-Pi system recapitulates the core functionality of existing FGS systems and is capable of detecting and displaying tumor-targeting NIR fluorophores in vivo, the current work is still a proof-of-concept study, and additional studies are required to optimize and validate the FAR-Pi system for surgical use. This is in line with prior AR-HMD studies from our group and others, in which initial studies have lower levels of clinical relevance (LoCR)67 and demonstrate novel technologies with surgical task completion in either phantoms or animal models24,29,30,31,35, while subsequent studies have high LoCR and demonstrate surgical task completion in real-world surgical settings with human patients26,34. For the FAR-Pi system, future studies will include user testing to help improve both the hardware and software, implementation of the FAR-Pi system in clinical trials of a novel NIR fluorophore for breast cancer surgery, and comparative trials between the FAR-Pi system and existing FGS systems. These studies are critical for demonstrating the clinical utility of the FAR-Pi system and will support future CE Mark and FDA approvals.

FGS accessibility and sustainability

As previously outlined, existing FGS systems are generally inaccessible outside of resource-rich centers, and limiting this clinical tool to a minority of patients does nothing to reduce disparities in global health outcomes. Furthermore, existing FGS systems often require expensive service contracts, and custom parts are needed if systems break down. Thus, even if existing systems were made available in low-resource settings, their technical complexity means they could end up like the majority of donated “high end” medical equipment: sitting broken or unused68. One of the inspiring aspects of the Maker movement is that it aligns with sustainability both by increasing access to technology and by reducing waste through the reuse and repurposing of technology69. This was demonstrated during the Covid-19 pandemic, when gaps in the global supply chain motivated the Maker community to develop a range of medical supplies and devices70, showing that Maker and frugal engineering tools can be used to create novel devices or custom parts to fix or repurpose existing medical devices.

Because our work is aimed at developing an FGS system that overcomes the accessibility limitations of existing systems, our efforts are in line with healthcare-related sustainability goals. Inspired by the Maker and frugal engineering movements, we show that off-the-shelf components coupled with open-source software are surprisingly capable and can recapitulate the performance of sophisticated and expensive medical imaging equipment. We hope this work serves to inspire existing FGS manufacturers as well as entrepreneurs and researchers around the world to consider using accessible low-cost components like the Raspberry Pi in novel globally accessible FGS systems. In fact, Raspberry Pi components have already been successfully implemented in medical devices; while it is widely known that these components are inexpensive and available off-the-shelf, they are also robust and reliable enough to meet the high bar needed for medical device development71. Furthermore, because these low-cost components are well-documented and fairly simple to work with, the devices become easier to repair, modify, or repurpose, all of which contributes to reducing medical device waste. Finally, while FGS device accessibility is important, it is not the only barrier to bringing the benefits of FGS to the global community. Indeed, FGS imaging systems must be coupled with FGS contrast agents, and as newer targeted agents are developed, it is important that efforts are made to ensure that such agents are also made accessible to those beyond high-resource centers.

Conclusions

In summary, the FAR-Pi system is an open-hardware-inspired, fully wearable, head-mounted, battery-powered, and relatively easy-to-build AR FGS solution that is an order of magnitude smaller, lighter, and less expensive than existing systems. Incorporating features that allow ambient light subtraction and optical alignment of the imaging module cameras with the surgeon’s view uniquely improves the reliability and capability of the FAR-Pi system, bringing the goal of globally accessible FGS within reach. Furthermore, the modular design makes the FAR-Pi system highly customizable. For example, the core FAR-Pi FGS module, which is capable of detecting coaligned white light and NIR fluorescence, could be modified to be compatible with implementations beyond AR glasses. Thus, our approach could support a range of novel low-cost, globally accessible FGS implementations beyond goggle-based systems. Future studies will focus on validating the FAR-Pi system in real-world surgical settings and performing comparative studies with existing FGS systems. Further development of the FAR-Pi system is poised to improve human health by making the benefits of FGS available to patients around the world.

Methods

Working distance between surgical field and FAR-Pi components

The working distance between the surgical field and the illumination and imaging modules varies depending on the FGS application. In AR-based FGS implementations, the relevant working distance is the distance between the surgical field and the surgeon’s eyes. For our prior CVG system, published by our lab in 2015 and 201726,51, we worked directly with surgeons ranging in height from 5’2” to 6’2” to determine an appropriate working distance. We found that an average working distance of 50 cm (range 40 to 60 cm) was appropriate, and this distance has subsequently been used in ongoing surgeon user testing of the CVG system. This average 50 cm working distance is consistent with the working distances used by other groups in their AR-based FGS systems. For example, Mela et al.27 use a working distance of 30 ± 10 cm in their stereoscopic goggle-based VIS/NIR system, Zhang et al.31 use a working distance of 25 to 45 cm in their Google Glass-based NIR-only system, and Lee et al.33 use a working distance of 50 cm in their Epson Moverio goggle-based NIR-only system. Thus, based on nearly a decade of our own user testing as well as the work of other labs building goggle-based systems, we designed the FAR-Pi system around an assumed working distance of 50 ± 10 cm. Of note, because the FAR-Pi camera is mounted on the goggles in front of the eyes, the camera-to-field distance is approximately 5 cm shorter than the eye-to-field working distance.

Laser diode array characterization

Existing FGS systems employ large and expensive cart-based laser diodes, effectively tethering the surgeon to the cart with optical fibers18. Some groups have utilized less bulky LED modules, but these have broad spectra and, unlike the laser diode array, require additional clean-up filters to ensure that their NIR tails do not overlap with the emitted fluorescence signal29. A halogen lamp27 is a relatively inexpensive alternative, but it also requires additional optical filters and poses a challenge for wearable devices given that it is wall-powered and generates significant heat.

To overcome the challenges of these approaches, we evaluated whether a low-cost, compact, fully wearable, battery-powered laser diode array could provide irradiance similar to that of tethered systems. The normalized spectral profile of ~$20, 120 mW, 780 nm laser diodes (Laserlands.net, Besram Technology Inc, Wuhan, China) was measured with a visible-NIR spectrometer (USB2000+VIS-NIR-ES, Ocean Insight, Orlando, FL) (Fig. 2C). A compact 3-laser-diode array with laser inclination θ = 3° and diameter d = 18.475 mm was constructed (Fig. 2A, Supplement S1-S3) and aimed at a black posterboard 40–60 cm away. An optical power meter (S121C and PM100D, Thorlabs Inc, Newton, NJ) was used to determine the maximum power on the posterboard. The surface temperature T of the posterboard was measured with a FLIR T650Sc thermal camera (FLIR Systems, Wilsonville, OR). Surface temperature maps before (T(x, y) at t = 0) and after laser illumination (T(x, y) at t = 5) were extracted using the FLIR ATLAS MATLAB SDK72. Subtracting the t = 0 map from the t = 5 map yields ΔT(x, y), and multiplying ΔT(x, y)/ΔTmax by the measured maximum laser power provided an estimate of the surface laser power distribution (Fig. 2D).
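The ΔT-scaling step can be written in a few lines of NumPy. This is a sketch, not the authors' MATLAB code: it assumes both thermal maps have already been exported as equally sized arrays (the FLIR Atlas SDK export step is not shown) and, as in the text, that the local temperature rise is proportional to the locally absorbed laser power. Clipping negative ΔT values (sensor noise) to zero is an added choice not described in the source, and the function name is hypothetical.

```python
import numpy as np


def laser_power_map(T_before: np.ndarray, T_after: np.ndarray, p_max: float) -> np.ndarray:
    """Estimate the surface laser power distribution as p_max * dT / dT_max.

    T_before, T_after: surface temperature maps (same shape) before and after
    illumination. p_max: maximum power measured with the optical power meter.
    Assumes temperature rise scales linearly with locally absorbed power."""
    dT = np.asarray(T_after, dtype=float) - np.asarray(T_before, dtype=float)
    dT = np.clip(dT, 0.0, None)  # treat noise-induced cooling as zero rise
    dT_max = dT.max()
    if dT_max <= 0.0:
        raise ValueError("no temperature rise detected between the two maps")
    return p_max * dT / dT_max
```

By construction the returned map peaks at p_max, so its shape, rather than its absolute scale, is the information contributed by the thermal camera.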

RPiV2 camera resolution and depth of focus

Assuming a working distance of 50 cm between the surgeon’s eyes and the surgical field25,33,34, and accounting for the fact that the RPiV2 camera is head-mounted and positioned a few centimeters closer to the surgical field than the eyes, the position of the RPiV2 lens must be adjusted so that it remains in focus at working distances around 50 cm. Theoretical analysis predicts that the RPiV2 at a distance d (in mm) from a target can resolve 1360/d line pairs per millimeter, and that focusing at a distance of 40 cm keeps the target in focus from 31 to 56 cm, while focusing at 45 cm keeps it in focus from 33 to 69 cm (Supplement Eqs. 3–4). The lens was therefore focused at d = 40 cm, and the predicted RPiV2 spatial resolution and depth of focus (Fig. 3A, solid lines) were measured by imaging a backlit Thorlabs NBS 1963A resolution test target (R2L2S1N, Thorlabs Inc, Newton, NJ) at distances between 30 cm and 65 cm in 5 cm increments. For any chosen group number, a row vector of the total gray-scale pixel intensity in each column defined the vertical line pairs, and a column vector of the total gray-scale pixel intensity in each row defined the horizontal line pairs (Figure S5). Local maxima and minima of these 1-dimensional vectors correspond to resolved line pairs, and the highest line-pair frequency whose group showed 5 clear, distinct maxima corresponding to all 5 line pairs defined the resolution limit at each distance.
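Two parts of this paragraph can be made concrete. First, the 1360/d constant is consistent with Nyquist sampling (two pixels per line pair) across the sensor: with the published Raspberry Pi Camera Module 2 specifications of 3280 horizontal pixels and a 62.2° horizontal field of view, the field width at distance d is 2d·tan(31.1°), giving 3280/(4d·tan 31.1°) ≈ 1359/d lp/mm. We assume, without confirmation from the Supplement, that this is the reasoning behind Eq. 3. Second, the line-pair criterion can be sketched by summing a cropped group image along one axis and counting distinct bright runs above the profile's mid-level. This run-counting test and its contrast cutoff are a simplified, hypothetical stand-in for the local-maxima/minima analysis described above, and it assumes the bars appear bright (invert the image first if they are dark).

```python
import math
import numpy as np


def predicted_lp_per_mm(d_mm: float, n_pixels: int = 3280, hfov_deg: float = 62.2) -> float:
    """Nyquist-limited resolution (line pairs per mm) at distance d_mm,
    assuming 2 pixels per line pair across the horizontal field of view."""
    field_width_mm = 2.0 * d_mm * math.tan(math.radians(hfov_deg / 2.0))
    return n_pixels / (2.0 * field_width_mm)


def resolved_bars(region: np.ndarray, axis: int = 0, min_contrast: float = 0.1) -> int:
    """Sum a cropped group image along `axis` (axis=0 -> one value per column,
    i.e. vertical bars) and count the distinct bright runs above mid-level."""
    profile = np.asarray(region, dtype=float).sum(axis=axis)
    lo, hi = profile.min(), profile.max()
    if hi + lo == 0 or (hi - lo) / (hi + lo) < min_contrast:
        return 0  # too little modulation to call any bar resolved
    above = profile > (lo + hi) / 2.0
    # Each False -> True transition (plus a True at index 0) starts a bright run.
    starts = np.flatnonzero(above[1:] & ~above[:-1])
    return int(above[0]) + len(starts)


def is_resolved(region: np.ndarray, axis: int = 0, n_bars: int = 5) -> bool:
    """A group counts as resolved when all n_bars bars appear as separate runs."""
    return resolved_bars(region, axis) == n_bars
```

Applying is_resolved to successively finer groups at each distance and keeping the finest passing group reproduces the structure, though not necessarily the exact thresholds, of the measurement plotted in Fig. 3A.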

Dual visible and NIR imaging

The 8 MP RPiV2 camera was chosen for the FAR-Pi imaging module because it comes in visible and NIR versions (standard and NoIR, respectively). Furthermore, miniaturization is facilitated by detaching the RPiV2 camera sensors from the RPiV2 PCB and connecting them with flexible extension cables (B0186, Arducam, Nanjing, China) (Figure S4). A 3D-printed enclosure was designed to position a 45° cold IR mirror plate beamsplitter (#62-634, Edmund Optics, Barrington, NJ) between the RPiV2 cameras so that visible light is reflected to the standard camera and optically coaligned NIR light is transmitted to the NoIR camera (Fig. 3B). To remove excitation light that may overlap with fluorophore emission, four filter options placed in front of the NoIR camera were evaluated: (1) an OD 3–4, 12.7 mm, 830 nm LP Newport filter ($40, 5CGA-830, Newport, Irvine, CA), (2) two stacked 12.7 mm 830 nm LP Newport filters, (3) a 12.5 mm OD 6, 832 nm BP Edmund filter ($240, #84-091, Edmund Optics, Barrington, NJ), and (4) a 12.5 mm OD 6, 808 nm Semrock EdgeBasic LP filter ($535, BLP01-808R-12.5-D, AVR Optics, Fairport, NY). OD vs. wavelength for each filter is summarized in Fig. 4A. For each optical filter, the positions of the cameras and beamsplitter required minor modifications to ensure coaligned, equal optical paths (Figure S6).
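Because optical density is a base-10 logarithm of attenuation, the expected benefit of stacking filters (option 2) can be estimated directly. Assuming, as an idealization not stated in the source, that reflections between the two stacked filters are negligible and both filters see the same angle of incidence, transmissions multiply and ODs add:

```latex
T = 10^{-\mathrm{OD}}, \qquad
T_{\mathrm{stack}} = T_1 T_2 = 10^{-(\mathrm{OD}_1 + \mathrm{OD}_2)}
\;\Longrightarrow\; \mathrm{OD}_{\mathrm{stack}} = \mathrm{OD}_1 + \mathrm{OD}_2
```

For two filters each rated OD 3–4, this ideal estimate gives OD 6–8 (transmission 10^-6 to 10^-8), versus 10^-3 to 10^-4 for a single filter. Interference filters placed in series can fall short of this estimate because light reflected back and forth between them is partially transmitted, so the measured excitation leakage remains the deciding quantity.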

To achieve dual-camera streaming, we used a $65 CM4104016 Raspberry Pi Compute Module 4 (RPiCM4) with a Waveshare carrier board that has two camera serial interface (CSI) inputs ($20, CM4-IO-Base-A, Waveshare, Shenzhen, China). Camera coalignment was measured by positioning dual-camera assemblies 30–70 cm from a back-illuminated resolution target, capturing images from both cameras, and then calculating the horizontal and vertical offsets between corresponding fiducial markers (Figs. 3C, S8). Corresponding fiducial marker positions at 50 cm were then used to derive a single corrective projective transform between the visible and NIR images, which was used to align the visible and NIR images at any distance. Python scripts were developed to read the dual-camera stream and apply the alignment image transforms in real time. Additional details about the RPiV2 cameras, imaging module enclosures, and camera alignment process are provided in Supplement Sect. 1.

NIR fluorescence sensitivity

Existing FGS systems have reported lower sensitivity limits for NIR fluorescent emission ranging from 50 pM for the Visionsense, 1 nM for the Solaris, 5 nM for the Fluobeam (though requiring exposures longer than 1 s), and 15 nM for the PDE Neo, to 40 nM for an AR stereoscopic system described by Mela et al.27,18 (Table 1). To determine the lower sensitivity limits of the FAR-Pi RPiV2 camera, the RPiV2 camera was coupled to the 4 alternative excitation filter options described in the “Dual visible and NIR imaging” section above.

To determine how the NIR sensitivity limits of the RPiV2 NoIR camera compare with those of existing FGS systems, a durable NIR fluorescence phantom was made, consisting of a black 96-well plate (Corning, Corning, NY) with duplicate wells of polyurethane embedded with IR-125 dye at concentrations of 0 (control), 250 pM, 500 pM, 1 nM, 5 nM, 7.5 nM, 10 nM, 25 nM, 50 nM, 75 nM, and 100 nM (Fig. 4B), as described by Ruiz et al.73 (Supplementary Sect. 6). IR-125 solid phantoms were used instead of diluted ICG vials because they have been shown to have fluorescence properties similar to ICG while being easier to handle and remaining stable for longer73.

FAR-Pi imaging modules with each of the 4 excitation filter options were placed in a fixed position 45 cm above the IR-125 phantom. The distance of 45 cm was chosen based on the assumption that the surgeon’s eyes are 50 cm from the surgical field and that the cameras are positioned approximately 5 cm in front of the eyes. In the AR FGS implementation, the laser diode array is positioned mid-forehead, 10 cm closer to the surgical field than the eyes; the laser diode array was therefore positioned 40 cm from the phantom, where peak irradiance is 22.5 mW/cm². To test how fluorescence sensitivity changes with decreased irradiance, the diode array was also moved to 50 cm from the phantom, where peak irradiance is 13.5 mW/cm², mimicking a surgeon moving 10 cm away from the surgical field. The phantom was placed on a custom 2-axis motorized stage, which positioned each well automatically to ensure consistent irradiation when imaging a given well. At each well, coaligned RPiV2 visible and NIR images were obtained with the laser ON and OFF, and ambient NIR light was removed by subtracting the laser-OFF NIR image from the laser-ON NIR image. For each well, images were acquired with auto white balance off at two irradiance levels (22.5 mW/cm² and 13.5 mW/cm²), three exposure times (33 ms, 66 ms, and 99 ms), and three ISO levels (400, 600, and 800). A Python script automated stage positioning and data acquisition. The signal-to-background ratio (SBR) for each IR-125 dye concentration was determined in MATLAB by dividing the background-subtracted pixel intensities in each fluorophore-containing well by the background-subtracted intensities in the control well.
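The acquisition grid and SBR definition above can be sketched as follows. The authors computed SBR in MATLAB; this Python version is only an illustration, and summarizing each well by the mean over a rectangular region of interest (`roi`) is an assumption about how pixel intensities were aggregated. If the control well's background-subtracted mean is zero, the ratio is undefined (NumPy returns `inf` with a warning).

```python
from itertools import product

import numpy as np

# Acquisition grid from the text: 2 irradiances x 3 exposures x 3 ISO settings per well
IRRADIANCES_MW_CM2 = (22.5, 13.5)
EXPOSURES_MS = (33, 66, 99)
ISO_LEVELS = (400, 600, 800)
CONDITIONS = list(product(IRRADIANCES_MW_CM2, EXPOSURES_MS, ISO_LEVELS))


def ambient_subtracted(nir_on: np.ndarray, nir_off: np.ndarray) -> np.ndarray:
    """Laser-ON minus laser-OFF NIR image; cast to float first so uint8 data cannot wrap around."""
    return nir_on.astype(float) - nir_off.astype(float)


def well_sbr(well_on, well_off, ctrl_on, ctrl_off, roi) -> float:
    """SBR of one dye well: mean background-subtracted intensity in the well ROI
    divided by the same quantity for the 0 nM control well.

    roi is a (row_slice, col_slice) pair locating the well in the frame; using the
    ROI mean is an assumption about how pixel intensities were aggregated.
    """
    signal = ambient_subtracted(well_on, well_off)[roi].mean()
    background = ambient_subtracted(ctrl_on, ctrl_off)[roi].mean()
    return float(signal / background)
```

Because the motorized stage brings each well to the same position under the camera, the same `roi` can be reused for every well and condition in this sketch.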

In vivo validation studies

All animal studies were performed following the Animal Research: Reporting of In Vivo Experiments (ARRIVE) guidelines and under a protocol approved by the Institutional Animal Care and Use Committee (IACUC) of Washington University in St. Louis. All studies were performed in accordance with relevant regulations and guidelines set forth by the approved protocol. Animals were housed under a 12 h light/dark cycle. Adult Fox Chase SCID Beige mice (n = 4, Charles River Laboratories) were subcutaneously implanted with 5 × 10⁵ 4T1 breast cancer cells on the left dorsal flank. After 2 weeks, when tumor growth was evident, 100 µL of 60 µM LS301-HSA (an NIR cancer-targeting fluorophore developed in our lab12) was injected via the tail vein. After 24 h, mice were imaged at a distance of 45 cm using the FAR-Pi imaging, illumination, and computation modules with each of the 4 excitation filter options. Coaligned 8-bit visible light and transcutaneous fluorescence NIR images were obtained with the laser ON and OFF to remove background ambient NIR. To determine the residual NIR signal from excitation light leaking through each of the 4 excitation filters, NIR images of white paper were obtained with the excitation laser ON and OFF (Fig. 6A). The maximum pixel intensity measured from the reflecting white paper set an excitation-filter-specific threshold to which pixel intensities were normalized to generate a pixel-by-pixel signal-to-background ratio (SBR), where an SBR of 1 corresponds to an NIR signal that is indistinguishable from leaked excitation light. The maximum LS301 SBR across all pixels defined the peak LS301 SBR for each mouse under each excitation filter option. To exclude signal from non-specific LS301 binding, a mask that excluded pixels with SBR less than 1.5 was applied, and the image was then overlaid with partial transparency on the coaligned visible FAR-Pi image (Fig. 6B).
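A minimal sketch of the leak-normalized SBR map, the SBR ≥ 1.5 mask, and the partially transparent overlay follows. Several details are assumptions not stated in the text and are labeled in the code: the paper threshold is taken from the laser-ON minus laser-OFF image, it is floored at 1 count to avoid division by zero, and the overlay color and opacity (`color`, `alpha`) are illustrative. The peak SBR for a mouse would then be `sbr.max()`.

```python
import numpy as np


def leak_threshold(paper_on: np.ndarray, paper_off: np.ndarray) -> float:
    """Excitation-filter-specific threshold: the maximum laser-ON minus laser-OFF
    intensity reflected off white paper (ambient subtraction here is an assumption).
    Floored at 1 count so that a filter with no measurable leak cannot cause
    division by zero (also an assumption)."""
    leak = (paper_on.astype(float) - paper_off.astype(float)).max()
    return max(float(leak), 1.0)


def sbr_map(nir_on: np.ndarray, nir_off: np.ndarray, threshold: float) -> np.ndarray:
    """Pixel-by-pixel SBR; a value of 1 is indistinguishable from leaked excitation light."""
    return (nir_on.astype(float) - nir_off.astype(float)) / threshold


def overlay(visible_rgb: np.ndarray, sbr: np.ndarray, min_sbr: float = 1.5,
            alpha: float = 0.5, color=(0, 255, 0)):
    """Blend a highlight color onto pixels with SBR >= min_sbr (alpha and color are
    illustrative choices); masked-out pixels keep the visible image unchanged."""
    mask = sbr >= min_sbr
    out = visible_rgb.astype(float)  # astype returns a copy, so the input is untouched
    out[mask] = (1.0 - alpha) * out[mask] + alpha * np.asarray(color, dtype=float)
    return np.clip(np.round(out), 0, 255).astype(np.uint8), mask
```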

Synchronizing laser excitation with camera frame capture to remove ambient NIR

Ambient light subtraction is possible if laser illumination can be synchronized with every other camera frame. All frames contain ambient background NIR, so if one frame is captured with the laser on and the next with the laser off, the absolute difference between the frames removes the background NIR while retaining the laser-induced NIR fluorescence. However, the RPiV2 camera has a rolling shutter: unlike a global-shutter camera, in which all pixels are exposed to light at the same time, a rolling-shutter camera exposes different rows of the frame at different times. In addition, the RPiV2 camera does not expose a frame exposure (FREX) pin, so there is no electrical signal marking the start and end of sensor exposure. It is possible, however, to detect when each camera frame is being read into memory on the Raspberry Pi computer, and to use this event as a trigger for synchronizing camera frames with laser diode toggling.
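The frame-differencing principle can be illustrated with synthetic frames: ambient NIR present in both the laser-OFF and laser-ON frames cancels in the absolute difference, leaving the fluorescence. The sketch assumes the ambient light is constant between the two frames and that the laser state is uniform across all rows of each frame, which is exactly what the rolling shutter and missing FREX pin make hard to guarantee. OpenCV's `cv2.absdiff` performs the same 8-bit operation.

```python
import numpy as np


def ambient_free(prev_frame: np.ndarray, frame: np.ndarray) -> np.ndarray:
    """Absolute difference of two consecutive 8-bit NIR frames, one laser-OFF and one laser-ON.

    Ambient NIR common to both frames cancels; laser-induced fluorescence remains.
    Widening to int16 avoids uint8 wrap-around before taking the absolute value.
    Assumes the ambient light is unchanged between the two frames and that the
    laser state is uniform across every row of each frame (rolling-shutter caveat).
    """
    diff = frame.astype(np.int16) - prev_frame.astype(np.int16)
    return np.abs(diff).astype(np.uint8)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    ambient = rng.integers(0, 100, size=(6, 8), dtype=np.int16)  # synthetic ambient NIR
    fluorescence = np.zeros((6, 8), np.int16)
    fluorescence[2:4, 3:5] = 80  # synthetic fluorescent spot
    off_frame = ambient.astype(np.uint8)
    on_frame = (ambient + fluorescence).astype(np.uint8)
    print(ambient_free(off_frame, on_frame))
```

Taking the absolute value means the result does not depend on whether the ON frame comes first or second, so a running stream can difference every consecutive pair without tracking which frame was lit.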

To detect camera frame read start and stop events, we modified the device tree74,75 on both the RPiCM4 and the RPiV4 so that a selected GPIO pin goes HIGH and LOW at the start and stop of each camera frame read, respectively (Fig. 5A, green pulse). These events, detected in Python by monitoring changes in GPIO pin state, served as delayed surrogates for camera sensor exposure, and Python code was developed to switch the laser diode ON at the end of every other frame read event. As a result, the laser effectively toggles on and off between consecutive frames. Python code was also written to calculate the absolute difference between consecutive frames in real time; this processed stream provides ambient NIR-subtracted video that would allow imaging with the room lights on.
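The toggling logic can be sketched as a small controller that counts frame-read-end events and pulses the laser after every other one. This is a hypothetical reconstruction, not the authors' code: the laser output and timer are injected so the logic runs without hardware, and on a Raspberry Pi the callback could be registered with RPi.GPIO's `GPIO.add_event_detect(pin, GPIO.FALLING, callback=...)`, whose callback receives the channel number. Python-level callbacks and timers can add millisecond-scale jitter, which matters at frame periods of about 33 ms.

```python
import threading


class AlternateFrameLaser:
    """Switch the laser ON after every other frame-read-end event, then OFF after a fixed pulse.

    set_laser is any callable taking a bool; on hardware it would drive the laser
    driver's enable line (hypothetical wiring). It is injected, along with the timer
    factory, so the toggling logic can be exercised off the Raspberry Pi.
    """

    def __init__(self, set_laser, frame_period_s: float, duty: float = 0.5,
                 timer_factory=threading.Timer):
        if not 0.0 < duty < 1.0:
            raise ValueError("the laser pulse must be shorter than one frame")
        self.set_laser = set_laser
        self.pulse_s = duty * frame_period_s
        self.timer_factory = timer_factory
        self.frames_read = 0

    def on_frame_read_end(self, *_):
        """Callback for the falling edge of the frame-read GPIO signal (extra args such as the channel are ignored)."""
        self.frames_read += 1
        if self.frames_read % 2 == 0:
            self.set_laser(True)
            # Schedule laser OFF after the pulse: threading.Timer(interval, function, args=...)
            self.timer_factory(self.pulse_s, self.set_laser, args=(False,)).start()
```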

To test this approach, two Eppendorf tubes, one filled with 1 mM indocyanine green (ICG) and one with water (negative control), were imaged with the FAR-Pi dual VIS/NIR imaging module using synchronized pulsed laser illumination. The 830 nm LP filter was chosen as the excitation filter for the imaging module because its relatively low OD would allow more ambient NIR light to be detected. Because the laser is triggered by camera frame read events, it is effectively off during one frame and on during the next; one frame therefore shows only the background ambient NIR signal, while the subsequent frame also shows the fluorescence emitted from the ICG tube. Exposure of a given frame may begin before the prior frame has been read into memory, so when laser excitation is triggered at the end of a frame read event, it likely starts after that frame’s exposure has already begun. In that case, a laser pulse lasting a full frame would bleed into the next frame, so the laser pulse must be shorter than one frame. We tested laser pulse durations of 30%, 50%, 70%, and 90% of the frame duration to determine the maximum duration at which no laser bleed-through between frames occurred.
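The bleed-through constraint can be made concrete with a deliberately simplified timing model: if the laser switches on a fraction d of a frame period after the ON frame's exposure begins, a pulse lasting a fraction f of the frame spills into the next frame when d + f > 1. The 30 fps frame rate and the 0.2 delay in the sketch are assumptions, not measured values, and the model ignores the row-by-row timing of the rolling shutter, so it only illustrates why shorter pulses are safer; the permissible duration was determined experimentally.

```python
def pulse_ms(duty: float, fps: float) -> float:
    """Laser ON time in milliseconds for a pulse lasting `duty` of one frame period."""
    return duty * 1000.0 / fps


def bleeds_into_next_frame(duty: float, trigger_delay_frac: float) -> bool:
    """Simplified timing model (global timing, rolling shutter ignored).

    The laser switches on trigger_delay_frac of a frame period after the ON frame's
    exposure has started (the read-end trigger lags exposure start). It bleeds into
    the next frame if it is still on when that frame period ends.
    """
    return trigger_delay_frac + duty > 1.0


if __name__ == "__main__":
    FPS = 30.0   # assumed frame rate (~33 ms frames)
    DELAY = 0.2  # hypothetical trigger delay as a fraction of a frame; not a measured value
    for duty in (0.3, 0.5, 0.7, 0.9):
        print(f"{duty:.0%}: {pulse_ms(duty, FPS):.1f} ms, bleeds={bleeds_into_next_frame(duty, DELAY)}")
```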

Implementing FGS with an augmented reality heads-up-display

Selection and modification of off-the-shelf AR glasses

While a custom-built AR HMD would provide more engineering flexibility, our goal was to make the FAR-Pi simple to source and build, so we repurposed off-the-shelf AR glasses for medical imaging. Several see-through AR glasses are commercially available, including the waveguide-optics-based HoloLens 2 ($3500) and Epson Moverio smart glasses ($600–$2000 depending on the model), and the birdbath-optics-based Rokid Air ($400–$500). While in principle any of these AR glasses could be incorporated into the FAR-Pi system, we used the Rokid Air because it offers high resolution (1920 × 1080) and a wide field of view (43° diagonal) that rivals the HoloLens, can be adjusted for different degrees of myopia, is readily available from commercial sources, and is relatively inexpensive (~$430 with HDMI adapter).

The FAR-Pi system produces a single white light and NIR stream, and when this non-stereoscopic stream is input into off-the-shelf AR glasses, identical images are displayed to each eye. If the displayed image is aligned for one eye, it is therefore misaligned for the other, which creates a perception of double vision. We found that we could overcome this limitation by modifying the Rokid Air, disconnecting one of its two eye displays so that it provides only a monocular display (Figure S21A-D). We are not aware of a similar modification working with other off-the-shelf AR glasses, and previously published non-stereoscopic FGS systems do not address methods for overcoming this double vision limitation25,26,33,34.

Combining FAR-Pi components with Rokid Air AR glasses

For surgeon comfort, all head-worn components are mounted to a surgical headband. The position of the illumination module relative to the AR glasses follows from the overall goal that the center of the surgeon’s field of view when looking straight ahead, which is where the surgeon’s hands would be working, should also be the center of illumination and fluorescence detection. As the surgeon moves their head to center their field of view on a particular area of concern, the center of illumination and detection should move with it. For the illumination module, this is achieved by mounting the laser diode array on an angled adjustable arm attached to a surgical headband, so that the array sits between the eyes at mid-forehead level and is angled down toward the center of the surgeon’s field of view 50 cm from the eyes, as shown in Fig. 2B. For the imaging module, this is achieved by making the FGS VIS/NIR cameras optically coaxial with the surgeon’s eyes, mounting them to the AR glasses above a beamsplitter positioned in front of the eyes, as shown in Fig. 7F-H. With this design, the imaging and illumination modules move with the surgeon’s head and stay aligned with the center of the surgeon’s field of view, which obviates the need for the AR glasses to track the surgeon’s head position or eye motion.

To mount the imaging and illumination modules to the Rokid Air glasses, and to mount the glasses themselves to a surgical headband, a custom 3D-printed coupling that secures to the mounting holes on the front of the Rokid Air glasses was designed in Autodesk Fusion 360 (Figure S21E-F). This coupling has mounting holes that accommodate different positions for the imaging module, as well as a central vertical assembly with mounting holes that secure both the surgical headband and the illumination module at a mid-forehead position (Figure S22). The remaining components of the FAR-Pi system (the RPiCM4 computational module, a PiSugar2 Plus 5000 mAh power supply (PiSugar, Guangzhou, China), the laser diode control HAT, and camera-related hardware) were combined in a 3D-printed enclosure (Fig. 8A, S18) that can be worn on the waist. Assembly details are provided in Supplement Sect. 4. As noted in Fig. 2B, because of the size of the laser diode module, the laser diode array mounted mid-forehead was 10 cm closer to the surgical field than the surgeon’s eyes. Similarly, because the imaging module sits in front of the eyes above the beamsplitter, it is 5 cm closer to the surgical field than the surgeon’s eyes.

Surgeon’s view and latency when looking through the Rokid Air AR glasses

The Raspberry Pi CM4, like other Raspberry Pi SBCs, can be connected via HDMI cable to an external monitor, which provides a graphical user interface for computing. Connecting the HDMI output of the Raspberry Pi CM4 to the USB-C video input of the Rokid Air through a powered HDMI-to-USB-C adapter effectively turns the Rokid Air glasses into an external monitor, so a user wearing the glasses sees the standard Raspberry Pi desktop projected onto their field of view. The default view includes windows with a light gray background that obstruct the user’s view when projected within the glasses (Figure S25A). Removing the desktop wallpaper and changing the operating system’s default window background color to black leaves most of the user’s view unobstructed (Figure S25B), because black pixels emit no light on the see-through display. Thus, setting every pixel below the background fluorescence signal to zero intensity (black) ensures that only the detected fluorescence signal is projected onto the user’s field of view while the surrounding structures remain unobstructed.
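Thresholding for the see-through display can be sketched as follows: pixels at or below the background fluorescence level become black, which the additive display does not draw, and the remaining pixels are drawn in a highlight color. The highlight color, the intensity scaling, and the assumption of 8-bit input are illustrative choices rather than documented FAR-Pi settings.

```python
import numpy as np


def ar_display_frame(nir: np.ndarray, threshold: float, color=(0, 255, 0)) -> np.ndarray:
    """RGB frame for an optical see-through display.

    Pixels at or below the background-fluorescence threshold are set to black,
    which the additive display does not draw, so the wearer's view stays clear;
    pixels above it are drawn in a highlight color scaled by NIR intensity.
    The color and intensity scaling are illustrative choices.
    """
    nir = nir.astype(float)
    out = np.zeros(nir.shape + (3,), dtype=np.uint8)
    mask = nir > threshold
    scale = np.clip(nir / 255.0, 0.0, 1.0)[mask][:, None]  # 8-bit input assumed
    out[mask] = np.round(scale * np.asarray(color, dtype=float)).astype(np.uint8)
    return out
```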

To determine the latency between the AR-projected video stream and the real world, an iPhone camera was positioned behind the glasses to mimic the position of the surgeon’s eye, so that it could capture both the real world seen through the glasses and the images projected on the glasses. A stopwatch was then positioned in front of the glasses, and the imaging module was mounted to the glasses so that it also imaged the stopwatch. The imaging module stream was purposely misaligned with the real-world view so that the real-world view of the stopwatch and its projected image could be seen side by side rather than overlaid. Furthermore, although typically only the NIR stream would be projected into the user’s field of view, the visible camera stream was projected instead so that the stopwatch could be seen (Fig. 8B). The time difference between the real-world view of the stopwatch and its projected view defined the system latency.
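Because each iPhone photo captures both stopwatch readings at the same instant, the latency for one photo is simply the direct reading minus the projected reading, and repeated photos can be averaged. A minimal sketch with hypothetical function names; the readings passed to it would come from the photos themselves.

```python
from statistics import mean, stdev


def latencies_ms(real_ms, projected_ms):
    """Per-photo latency: stopwatch reading seen directly through the glasses minus
    the (older) reading shown in the projected stream, both in milliseconds."""
    if len(real_ms) != len(projected_ms):
        raise ValueError("each photo needs both a real and a projected reading")
    return [r - p for r, p in zip(real_ms, projected_ms)]


def summarize(latencies):
    """Mean and sample standard deviation of latency across photos (needs >= 2 photos)."""
    return mean(latencies), stdev(latencies)
```

The resolution of each measurement is limited by the stopwatch display and by the iPhone camera's exposure time, so averaging several photos gives a more stable estimate than a single one.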

Alignment between the surgeon’s eyes and the projected images on the AR glasses