Abstract

Face detection is a multidisciplinary research subject that draws on fundamental computer algorithms, image processing, and pattern recognition. Neural networks, in turn, have been widely developed to solve problems such as feature extraction and pattern detection. This study investigates the use of deep neural networks (DNNs) in building face detection systems and presents a novel optimized deep network for face detection. After preprocessing stages for contrast enhancement and data augmentation, the images are fed to a bidirectional recurrent neural network (BRNN). The network is optimized with a novel enhanced version of the Ebola optimization search algorithm to improve its accuracy. The proposed procedure is evaluated on the Georgia Tech Face Database (GTFD), and the results indicate that it significantly outperforms the compared methods, attaining an accuracy of 94.3%, a precision of 93.51%, a recall of 94.53%, and an F1-score of 92.47%. Furthermore, the method is robust to various challenges, achieving an accuracy of 95.6% under occlusions, 96.3% under lighting variations, 94.8% under pose variations, and 92.4% under low-resolution conditions.


Introduction

Background

The face is one of the most essential human biometric traits, and it can be used to extract relevant information such as ethnicity, identity, age, gender, and facial expressions1. Automatic face identification and analysis is one of the most significant and difficult problems in object detection, with applications in human-computer interfaces, human-society interaction, psychology, and security2. Face detection technology and artificial intelligence are two of the most effective approaches for scanning people’s faces and visually recognizing them3. Artificial intelligence, with its unique characteristics, can increase the power and usefulness of a face detection system4. Machine vision is the primary mechanism behind face detection technology5: the features of a person’s face are converted into digital data, ultimately the binary digits 0 and 1. A database built for a face detection system can therefore discriminate between the faces of different people6.

The existence of clearly recognizable distinctions between people’s features has broadened the use of face detection technologies7. Face detection on PCs and cellphones is becoming more common, and many new and established firms use this technology to identify their clients8. Many corporate sectors also use face detection technology to improve security9. Face detection relies heavily on image processing, which is closely connected to machine vision10. Machine vision, however, is capable of perceiving and analyzing images11, whereas image processing in general refers to modifying images and does not by itself involve understanding their content.

Motivation

Machine vision requires a number of processes that are difficult for computers to replicate. Deep learning, in particular convolutional neural networks (CNNs), is now employed to boost the visual capability of machines, allowing them to analyze even videos12,13,14. Deep learning with CNNs generally requires a powerful GPU, whereas classical machine learning can be performed with far fewer resources15. Face detection is difficult for two reasons: the large variability of human faces against different backgrounds, and the large search space of possible face sizes and positions16. Different methods have been presented to resolve these problems. Girdhar et al.17, for instance, created a hybrid machine learning framework for face detection based on behavioral features and proposed a combined FBPSDT (Fuzzy-based Behavior Prognostic System for Disparate Traits). When needed, FBPSDT can be used to locate and apprehend individuals involved in fraud schemes. Face detection was performed on LFW (Labeled Faces in the Wild) and on face data (Faces94, Faces95, and Faces96) using three methods: skin color, Haar cascade, and HOG. The reported accuracies were 91%, 50%, and 92% on LFW and 91%, 60%, and 91.5% on the face data. Based on this analysis, HOG was found to be the most suitable technique for face detection.

Related work

Subramanian et al.18 investigated a fuzzy technique based on a genetic algorithm (GA) and the particle swarm optimization (PSO) algorithm to detect faces. There are many methods for recognizing human faces, and new ones continue to be developed to improve model efficiency and accuracy. The proposed PSO-based fuzzy-genetic optimization technique achieves a higher detection rate than local binary patterns, histograms of oriented gradients, and basic optimization techniques, reaching a detection rate of 98.84%. It can also quickly locate the best-fitting elements of an image.

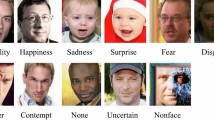

Hussain et al.19 defined and recognized facial expressions in real time using a deep learning model. Much recent research has addressed face detection and recognition. The chief purpose of facial identification is identifying and validating facial attributes20. In the final stage, the validated faces are used to classify human emotions as happy, neutral, sad, angry, surprised, or disgusted. The computer vision components are implemented using the OpenCV toolkit, datasets, and Python programming18. To verify real-time performance, several students were asked to take part, and the physiological variations of each face were recorded to assess their emotions. The test results indicate that the facial analysis method performed flawlessly. Finally, the effectiveness of the automatic face detection and recognition is assessed in terms of accuracy.

Guo et al.4 suggested a CNN-based technique for fast face detection. They proposed a fast face detection procedure based on discriminative complete features (DCFs) extracted by an elaborately designed convolutional neural network (CNN). DCFs have been shown to be scale-invariant, which is advantageous for fast and accurate face detection. As a result, unlike traditional methods, the method does not need to extract multi-scale features from an image pyramid, which considerably increases its efficiency. Experiments on several common face detection datasets show that the method is efficient and effective.

Jiang et al.21 applied Faster R-CNN to face detection. Although deep learning-based (DL-B) techniques for general object detection had improved significantly over the preceding two years, most face detection methods still relied on the R-CNN framework, which has limited efficiency and speed. In that work, Faster R-CNN, which had recently shown remarkable performance on several object detection benchmarks, was applied to face detection. By training a Faster R-CNN model on a larger face dataset, the authors reported state-of-the-art results on the WIDER test set as well as on FDDB and the then recently published IJB-A, two widely used face detection benchmarks.

Although the literature has introduced diverse artificial intelligence techniques for face detection, deep learning-based neural networks have provided far higher accuracy for this purpose. Therefore, the present research proposes an optimized deep network to increase the efficiency of face detection and obtain better results.

This paper addresses efficient face detection, a task made challenging by fluctuations in lighting, variations in facial expression, and potential occlusions. The main contribution of this research is an optimized deep neural network architecture designed specifically for face detection. The architecture incorporates a Bidirectional Recurrent Neural Network (BRNN) to capture contextual information from both preceding and subsequent frames.

Contributions

To enhance the optimization of the BRNN’s weights, an improved Ebola Optimization Search Algorithm is utilized, which mitigates the issues related to unstable gradients and improves overall accuracy. Various preprocessing techniques, including Gamma correction and Contrast Limited Adaptive Histogram Equalization (CLAHE), are applied to improve illumination and contrast, facilitating the network’s ability to detect faces under diverse lighting conditions22. The contributions of this research emphasize the optimization of multiple parameters, the enhancement of illumination and contrast, and the improvement of the network’s accuracy and convergence, ultimately leading to a robust face detection system adept at managing a variety of real-world scenarios.

Materials and methods

Although standard benchmark datasets contain images of good quality, applying the designed methods in practice raises issues such as low illumination and poor histograms in the captured images23. To avoid such problems, this study uses two preprocessing steps: Gamma correction, to adjust the illumination of dark images, and Contrast Limited Adaptive Histogram Equalization (CLAHE), to improve image quality in terms of the histogram.

Gamma correction

Image enhancement is a critical preprocessing step in a wide range of applications, including medical, astronomical, and general imaging. Because of technical restrictions, many technologies used to record, print, or display a picture apply nonlinear changes to the pixel values of the image, lowering image quality. In effect, the pixel intensities are raised to the power of a gamma value24,25,26,27. Furthermore, because imaging systems cannot precisely portray the color, depth, and texture of the various objects in a picture, the amount of gamma applied is not the same across all portions of the image. The procedure of rectifying this gamma phenomenon is called “gamma correction”28. Gamma correction must be performed locally on distinct areas of the picture for the main scene to be accurately reconstructed.

Because of technical constraints, most devices used to capture, print, or display images apply a modification called the “power law” to the pixel intensities, defined as:

\[ s = r^{\gamma} \]

where \(r\) is the input pixel intensity (normalized to [0, 1]), \(s\) is the output pixel intensity, and \(\gamma\) is the Gamma correction coefficient.

Gamma correction is thus a nonlinear process for adjusting image intensity. If \(\gamma\) is less than 1, the image becomes brighter; if \(\gamma\) is greater than 1, the image becomes darker; and if \(\gamma\) equals 1, the image is unchanged.

When the gamma value applied to an image is known, the original image can be recovered by applying the inverse operation to each pixel, i.e.,

\[ r = s^{1/\gamma} \]

A power law with an exponent between 0 and 0.5 is a reasonable compromise, and this study uses 0.2 as the power-law exponent20. Figure 1 shows a sample original image and its result after gamma correction.
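The power law and its inverse can be illustrated with a short sketch. It is written in plain Python purely for illustration (the study itself used MATLAB), assumes 8-bit intensities that are normalized to [0, 1] before applying \(s = r^{\gamma}\) and rescaled afterwards, and uses the hypothetical helper names `gamma_correct` and `inverse_gamma`.

```python
def gamma_correct(pixels, gamma=0.2, max_val=255):
    """Apply the power law s = r**gamma to 8-bit intensities (hypothetical helper)."""
    out = []
    for v in pixels:
        r = v / max_val                   # normalize the intensity to [0, 1]
        s = r ** gamma                    # power-law mapping
        out.append(round(s * max_val))    # rescale to the 8-bit range
    return out


def inverse_gamma(pixels, gamma=0.2, max_val=255):
    """Undo a known gamma by applying r = s**(1/gamma) (hypothetical helper)."""
    return [round((v / max_val) ** (1.0 / gamma) * max_val) for v in pixels]


dark_row = [0, 16, 64, 128, 255]
bright_row = gamma_correct(dark_row)   # gamma < 1 brightens dark values
restored = inverse_gamma(bright_row)   # recovers dark_row up to rounding error
print(bright_row, restored)
```

Because the corrected values are rounded back to integers, the inverse recovers the original intensities only approximately.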

Contrast enhancement

Contrast enhancement is highly effective when processing images, and it directly affects the subsequent image processing and face detection. Increasing image contrast reveals more detail and information29. Contrast enhancement through histogram modification is an adaptive approach with low processing time, high speed, high reliability, and repeatability. Histogram Equalization (HE) is a popular technique for improving image contrast. However, HE stretches the histogram globally, which may produce overly bright or overly dark results; this is problematic for images with large intensity variations30,31,32. Therefore, CLAHE, an improved version of HE, is employed here.

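CLAHE is a modified version of adaptive histogram equalization (AHE) that resolves AHE’s contrast over-amplification problem by clipping the histogram of each region at a predefined limit before equalization and redistributing the clipped counts.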

Contrast Limited Adaptive Histogram Equalization operates on tiles, which are small regions of the picture, rather than on the entire image. The neighboring tiles are then combined using bilinear interpolation to eliminate artificial boundaries. Figure 2 shows a sample of CLAHE-based contrast enhancement for a face image.
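The clipping step that distinguishes CLAHE from plain histogram equalization can be sketched for a single tile. This is a simplified illustration under stated assumptions: 8-bit values in a flat list, a clip limit expressed as a multiple of the mean bin height, and a single uniform redistribution pass; the function name is hypothetical. A full CLAHE implementation, such as OpenCV’s `cv2.createCLAHE(clipLimit=..., tileGridSize=...)`, also splits the image into tiles and bilinearly interpolates the per-tile mappings, which this sketch omits.

```python
def clip_limited_equalize(tile, levels=256, clip_factor=2.0):
    """Contrast-limited histogram equalization of one tile (a flat list of ints)."""
    n = len(tile)
    hist = [0] * levels
    for v in tile:
        hist[v] += 1

    # Clip each bin at a limit proportional to the mean bin height.
    limit = max(1, int(clip_factor * n / levels))
    excess = 0
    for i, count in enumerate(hist):
        if count > limit:
            excess += count - limit
            hist[i] = limit

    # Redistribute the clipped counts uniformly over all bins (single pass).
    share, remainder = divmod(excess, levels)
    for i in range(levels):
        hist[i] += share + (1 if i < remainder else 0)

    # Cumulative distribution -> monotonic lookup table in [0, levels - 1].
    lut, running = [], 0
    for count in hist:
        running += count
        lut.append(round((levels - 1) * running / n))
    return [lut[v] for v in tile]


tile = [10, 10, 10, 10, 12, 12, 14, 200]
print(clip_limited_equalize(tile))
```

Clipping caps how steep the cumulative mapping can become, which is what limits the contrast amplification in near-uniform regions.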

Data normalization

Data normalization changes the range of pixel intensities. One application is images with low contrast caused by glare. Normalization stretches the image histogram and extends its dynamic range. The main goal of image normalization is to bring an image into a familiar, meaningful range and to obtain a consistent dynamic range across images, which reduces mental distraction or fatigue when viewing them.

During normalization, all images are mapped to the range between 0 and 1. This study uses Min-Max normalization for the input images. Let \(I\) be the raw input image and \(I_N\) the normalized image. The Min-Max normalization of \(I\) is then:

\[ I_N = \frac{I - I_{\min}}{I_{\max} - I_{\min}} \]

where \(I_{\min}\) and \(I_{\max}\) are the minimum and maximum pixel intensities of \(I\).

This conversion maps the input image into the range between 0 and 1.
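A minimal sketch of Min-Max normalization on a 2-D list of intensities follows. The guard that returns zeros for a constant image (where the denominator would be zero) is an assumption added for illustration, and the function name is hypothetical.

```python
def min_max_normalize(image):
    """Map a 2-D list of intensities to [0, 1] via (I - min) / (max - min)."""
    flat = [v for row in image for v in row]
    lo, hi = min(flat), max(flat)
    if hi == lo:                       # constant image: avoid division by zero
        return [[0.0 for _ in row] for row in image]
    return [[(v - lo) / (hi - lo) for v in row] for row in image]


img = [[10, 20], [30, 10]]
print(min_max_normalize(img))   # [[0.0, 0.5], [1.0, 0.0]]
```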

Image augmentation

Data augmentation is a group of methods that artificially increase the amount of data by generating additional data points from the available data. Examples include making small alterations to existing data and using deep learning models to generate additional data points33. Adding varied examples to a dataset in this way can improve the performance of deep learning-based models34,35,36. Collecting and labeling data can take considerable time and may not be cost-effective for deep learning models, so businesses can reduce operating costs by augmenting existing datasets instead. Unequal sample distributions occur in many datasets37, and deep networks cannot learn adequately from such datasets. Methods such as the Synthetic Minority Over-sampling Technique (SMOTE) have been employed to resolve this issue. SdSmote, a recent improved version of SMOTE, selects boundary samples according to their degree of support and uses them to form artificial images.
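The general idea of boundary-focused oversampling can be sketched as follows. This is only a SMOTE-style illustration under a simplifying assumption, not the exact SdSmote degree-of-support criterion: a minority (positive) sample is treated as a boundary sample when its mean distance to the negative class is no larger than the average of these mean distances, and each synthetic sample is interpolated between a boundary sample and its nearest minority neighbor. The selection rule and the name `boundary_oversample` are hypothetical.

```python
import math
import random


def boundary_oversample(minority, majority, n_new, seed=0):
    """SMOTE-style interpolation seeded from minority samples near the majority class."""
    rng = random.Random(seed)
    # Mean distance of each minority sample to all majority samples.
    mean_d = [sum(math.dist(p, q) for q in majority) / len(majority) for p in minority]
    threshold = sum(mean_d) / len(mean_d)
    # Hypothetical boundary rule: closer than average to the majority class.
    boundary = [i for i, d in enumerate(mean_d) if d <= threshold]

    synthetic = []
    for _ in range(n_new):
        i = rng.choice(boundary)
        p = minority[i]
        # Nearest other minority sample (chosen by index, so duplicates are handled).
        j = min((k for k in range(len(minority)) if k != i),
                key=lambda k: math.dist(p, minority[k]))
        q = minority[j]
        lam = rng.random()             # interpolation factor in [0, 1)
        synthetic.append(tuple(a + lam * (b - a) for a, b in zip(p, q)))
    return synthetic


minority = [(0.0, 0.0), (1.0, 0.0), (0.0, 1.0), (5.0, 5.0)]
majority = [(2.0, 2.0), (3.0, 3.0)]
print(boundary_oversample(minority, majority, n_new=3))
```

Each synthetic point lies on the segment between two real minority samples, so the new data stay inside the region already occupied by the minority class.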

To compute the degree of support in SdSmote, the negative and positive class data are denoted by \(m\) and \(n\), respectively. Taking \(p\) as a positive sample, its distance from the negative class is computed as:

where the average distance is obtained by the following formula:

Although the preprocessing techniques used in this methodology add some computational overhead, several factors and potential remedies should be considered, especially in real-time applications where speed is paramount. Gamma correction and CLAHE are not computationally demanding and can be executed efficiently through parallel processing and optimized algorithms, which modern computing hardware, including GPUs and specialized image processing units, readily supports.

Also, the advantages of incorporating these preprocessing steps typically exceed the minor increase in computational demands, as they enhance illumination conditions and improve contrast, ultimately leading to greater accuracy in face detection. To alleviate any potential effects on speed, various strategies can be implemented, such as optimization and parallelization, selective application, approximate or simplified variants, asynchronous processing, and hardware acceleration, all of which can significantly diminish computational overhead while maintaining a favorable balance between accuracy and speed.

Bidirectional recurrent neural networks

Much of the data used in practice, such as images, can be treated as sequential. Recurrent Neural Networks (RNNs) are a kind of neural network designed specifically for processing sequential information (sequences)38. Although the invention of RNNs dates back to 1980, they have only recently been used on an unprecedented scale39. This is mainly due to advances in neural network design and notable developments in computing power, particularly graphics cards, whose parallel processing units have improved greatly.

RNNs are efficient for sequential data40 because each processing unit, or neuron, can maintain an internal state, or memory, that stores information from previous inputs41,42. This characteristic plays a pivotal role in applications involving sequential data. Most importantly, this internal state allows the network to understand and uncover connections between words that are far apart in long sequences43. When we read a sentence, we deduce its meaning from the context in which each word appears44; in other words, we derive context from the preceding words (and in some situations even the following ones) and use it to grasp the meaning of a word. To define the RNN, let the input be \(X = [x_t]\), where \(x_t \in \mathbb{R}^N\) is the input vector at time step \(t\).

Let \(Y = [y_t]\), with \(y_t \in \mathbb{R}^M\), be the output sequence; the aim is to model the distribution \(P(Y \mid X)\). As mentioned before, RNNs can predict subsequent inputs. A standard unidirectional RNN is modeled as follows:

\[ h_t = \mathcal{H}\left(W_x x_t + W_h h_{t-1} + b_h\right) \]

\[ y_t = W_y h_t + b_y \]

where \(h_t\) is the hidden state at time step \(t\), \(\mathcal{H}\) is the hidden-layer activation function, \(b_h\) and \(b_y\) are the hidden and output bias vectors, and \(W_y\), \(W_h\), and \(W_x\) are the hidden-to-output, hidden-to-hidden, and input-to-hidden weight matrices, respectively. The network therefore computes the output layer from the information propagated through the hidden layer45.

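A bidirectional RNN (BRNN) is created by adding a second hidden layer whose hidden-to-hidden connections run in reverse chronological order, so the model can use both past and future context. The forward and backward hidden states and the output are obtained as follows:

\[ \overrightarrow{h}_t = \mathcal{H}\left(\overrightarrow{W}_x x_t + \overrightarrow{W}_h \overrightarrow{h}_{t-1} + \overrightarrow{b}_h\right) \]

\[ \overleftarrow{h}_t = \mathcal{H}\left(\overleftarrow{W}_x x_t + \overleftarrow{W}_h \overleftarrow{h}_{t+1} + \overleftarrow{b}_h\right) \]

\[ y_t = \overrightarrow{W}_y \overrightarrow{h}_t + \overleftarrow{W}_y \overleftarrow{h}_t + b_y \]

where \(\mathcal{H}\) is the hidden-layer activation function, and the right and left arrows denote the parameters and states of the forward and backward hidden layers, respectively.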

Training a BRNN with back-propagation involves two backward passes, one through each hidden-layer direction, which adjust the weights to minimize the mean squared error (MSE). In this study, however, this task is performed by the Ebola optimization search algorithm.
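A forward pass through the bidirectional structure can be sketched in plain Python. The sketch assumes \(\tanh\) as the hidden activation, zero initial hidden states, and a separate weight set per direction; it only computes outputs and does not train anything (in the proposed method, the weights would be set by the optimizer rather than by back-propagation). All function names are hypothetical.

```python
import math


def matvec(W, v):
    """Matrix-vector product for lists of lists."""
    return [sum(w * x for w, x in zip(row, v)) for row in W]


def vadd(*vs):
    """Element-wise sum of equally long vectors."""
    return [sum(parts) for parts in zip(*vs)]


def rnn_states(xs, Wx, Wh, bh):
    """h_t = tanh(Wx x_t + Wh h_{t-1} + bh), starting from a zero state."""
    h = [0.0] * len(bh)
    states = []
    for x in xs:
        h = [math.tanh(z) for z in vadd(matvec(Wx, x), matvec(Wh, h), bh)]
        states.append(h)
    return states


def brnn_forward(xs, fwd, bwd, Wy_f, Wy_b, by):
    """y_t = Wy_f h_f[t] + Wy_b h_b[t] + by; the backward layer runs over reversed time."""
    h_f = rnn_states(xs, *fwd)
    h_b = rnn_states(xs[::-1], *bwd)[::-1]   # re-align backward states with t
    return [vadd(matvec(Wy_f, hf), matvec(Wy_b, hb), by) for hf, hb in zip(h_f, h_b)]


# Tiny example: 1-D inputs, one hidden unit per direction, one output.
xs = [[0.0], [1.0]]
fwd = ([[1.0]], [[1.0]], [0.0])   # (Wx, Wh, bh) for the forward layer
bwd = ([[1.0]], [[1.0]], [0.0])   # (Wx, Wh, bh) for the backward layer
ys = brnn_forward(xs, fwd, bwd, [[1.0]], [[1.0]], [0.0])
print(ys)
```

In the example, the first output is non-zero even though the first input is zero and the forward state is still zero: the backward layer carries information from the future input at \(t = 1\) back to \(t = 0\).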

Parameter optimization

The weights of a bidirectional RNN are among the most important parameters affecting network performance. They determine how strongly each hidden layer influences learning and the input-output associations. Each output is obtained by multiplying the inputs by their weights and summing the results; the weights thus govern how much influence neurons have on each other. The output of each neuron is given by Eq. (13).

where \(X = [x_1, x_2, \dots, x_m]\) specifies the inputs, \(W = [w_1, w_2, \dots, w_m]\) the network weights, \(b\) the bias that regulates the output, and \(h\) the inputs’ quantity.

Many deep neural networks, particularly bidirectional recurrent neural networks, suffer from the unstable gradient problem. Its main cause is that the gradient shrinks as it is propagated backward through the hidden layers, so neurons in the later layers learn much faster than neurons in the earlier layers. This is known as the vanishing gradient problem.

In this research, an improved Ebola optimization algorithm is used to resolve the unstable gradient problem of the bidirectional recurrent neural network.

The main goal of the algorithm is to minimize the error function by optimally choosing the network’s weights and biases, i.e.,

\[ \mathrm{MSE} = \frac{1}{N}\sum_{i=1}^{N}\left(d_i - y_i\right)^2 \]

where \(d_i\) and \(y_i\) are the desired output and the actual network output for the \(i\)-th sample, respectively, and \(N\) is the number of samples.
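To make the optimizer’s objective concrete, the sketch below scores a flat candidate vector of weights plus a bias with the MSE. It is a single-neuron stand-in, assuming a \(\tanh\) activation applied to the weighted sum plus bias; in the actual method the candidate vector would encode all BRNN weights and biases. The helper names are hypothetical.

```python
import math


def neuron_output(x, w, b, activation=math.tanh):
    """Weighted sum of inputs plus bias, passed through an activation."""
    return activation(sum(wi * xi for wi, xi in zip(w, x)) + b)


def mse(desired, actual):
    """Mean squared error between desired outputs d_i and actual outputs y_i."""
    return sum((d - y) ** 2 for d, y in zip(desired, actual)) / len(desired)


def fitness(candidate, inputs, targets):
    """Score a flat parameter vector [w_1, ..., w_m, b]; lower is better."""
    *w, b = candidate
    preds = [neuron_output(x, w, b) for x in inputs]
    return mse(targets, preds)


inputs = [[1.0, 0.0], [0.0, 1.0]]
targets = [1.0, -1.0]
print(fitness([0.0, 0.0, 0.0], inputs, targets))   # all outputs are tanh(0) = 0, so 1.0
print(fitness([3.0, -3.0, 0.0], inputs, targets))  # much closer to the targets
```

A population-based optimizer only needs this scalar score, which is why it can replace gradient-based weight updates.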

Optimization with meta-heuristic algorithms

Biology-inspired algorithms

Meta-heuristic algorithms are algorithms inspired by biology and natural phenomena46. They are useful for optimizing mathematical problems as well as in related fields47,48. Meta-heuristics are usually population-based and solve complex real-world problems by mathematically modeling nature-inspired laws49. Examples include the Slime Mould Algorithm (SMA), Wildebeest Herd Optimization (WHO), Invasive Weed Colonization Optimization (IWO), Earthworm Optimization Algorithm (EOA), Satin Bowerbird Optimizer (SBO), Virus Colony Search (VCS), Coronavirus optimization algorithm (COA), and biogeography-based optimization (BBO). The Ebola optimization search algorithm is described below.

EOSA metaheuristic algorithm

To present the optimization algorithm, it is first necessary to understand how the SEIR-based model describes disease transmission; hence, an extended SEIR model is presented in this section. In the second stage, the Ebola optimization search algorithm (EOSA) and its procedure are illustrated and examined. Finally, the mathematical model based on SEIR that formalizes the proposed optimization algorithm is described.

SEIR model of EOSA

SEIR-based models have been developed for Ebola virus disease (EVD) to study both direct and indirect transmission of the disease in the affected population. This research selects two related models from the existing SEIR models and modifies them by identifying and adding compartments that were missing: quarantine, vaccination, and the virus reservoir in the environment, represented by Q, V, and PE, respectively. These compartments are essential because people can be infected with the Ebola virus through someone who was infected from the reservoir, even when the virus is not otherwise spreading among the public. In addition, quarantine and vaccination affect the rate of virus transmission. Hence, remodeling the propagation model yields the SEIRHDFVQ model, with the compartments Susceptible (S), Exposed (E), Infected (I), Recovered (R), Hospitalized (H), Death/dead (D), Funeral (F), Vaccinated (V), and Quarantine (Q). One of the hypotheses of the proposed model is that the virus may remain in the bodies of a small number of recovered people, who can still transmit it to others. Providing an optimization algorithm based on the EVD diffusion model requires investigating all infection-enhancing factors.

The parameters and variables of the SEIR-HDVQ model used in this research are listed in Table 1. The transmission of the Ebola virus is considered a suitable basis for solving optimization problems because of the aggressive infection rate it exhibits in affected communities. Susceptible individuals may accidentally join the infected group through proximity to any infectious subgroup, such as contaminated environments, virus reservoirs, or infected individuals. The infectious subgroups comprise infected, recovered, and dead individuals, as well as infected reservoirs and the contaminated environment. The virus is also assumed to be able to decay in the contaminated environment. Patients may recover or die without hospital care; that is, not all of them are transferred to a clinic. Likewise, every vaccinated person is included in the hospitalized group. Moreover, any non-hospitalized or hospitalized (H) individual is assumed to be able to die (D). Recovered (R) and vaccinated (V) individuals are placed back in the susceptible group (S).

EOSA flowchart

1. The EOSA is built on the behavior of the SEIR-HDVQ model. The algorithm begins by initializing all vector and scalar quantities, namely the parameters and the individuals. The parameters of the algorithm are I, S, D, R, H, V, and Q, denoting the Infected, Susceptible, Dead, Recovered, Hospitalized, Vaccinated, and Quarantine compartments, respectively.
2. The index case (\(I_1\)) is created randomly from the susceptible individuals.
3. The fitness value of the index case is computed and set as both the global best and the current best.
4. While there is at least one infected individual and the maximum number of iterations has not been reached:
   a. The positions of susceptible individuals are updated according to their movement; the farther an infected individual moves, the more infections occur. A short movement corresponds to local search (exploitation); otherwise, the algorithm performs global search (exploration). Newly infected individuals (\(nI\)) are generated based on this movement and added to \(I\).
   b. Based on the size of \(I\), the number of individuals to be added to B, R, H, V, Q, and D is computed from their corresponding rates.
   c. \(S\) and \(I\) are updated according to \(nI\).
   d. The current best is determined from \(I\) and compared with the global best.
   e. The cycle repeats until the stopping condition is satisfied.
5. All solutions and the global best solution are returned.
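The steps above can be condensed into a heavily simplified Python skeleton. It is an illustrative sketch, not the authors’ implementation: it keeps only the S and I compartments, omits step (b) and the SEIR-HDVQ rates, replaces the movement rate \(M(I)\) with a random step whose size is `srate` or `lrate` depending on a neighborhood draw, and minimizes a stand-in sphere function. All names and default values are hypothetical.

```python
import random


def sphere(x):
    """Stand-in cost function to minimize (not part of the original study)."""
    return sum(v * v for v in x)


def eosa_sketch(cost, dim, lower, upper, pop_size=30, max_iter=50,
                srate=0.1, lrate=1.0, infect_prob=0.5, seed=0):
    """Simplified EOSA loop keeping only the S and I compartments."""
    rng = random.Random(seed)
    # Step 1: initialize the susceptible population inside the bounds.
    S = [[lower + rng.random() * (upper - lower) for _ in range(dim)]
         for _ in range(pop_size)]
    # Step 2: pick a random index case from the susceptible individuals.
    I = [S.pop(rng.randrange(len(S)))]
    # Step 3: the index case is both the current and the global best.
    g_best = min(I, key=cost)

    for _ in range(max_iter):                      # Step 4: loop while infected remain
        if not I:
            break
        new_I = []
        for ind in I:
            # (a) long move (exploration) or short move (exploitation).
            rate = lrate if rng.random() >= 0.5 else srate
            new_I.append([min(upper, max(lower, v + rate * rng.uniform(-1.0, 1.0)))
                          for v in ind])
            # A moving case may infect one susceptible individual.
            if S and rng.random() < infect_prob:
                new_I.append(S.pop(rng.randrange(len(S))))
        # (b) allocation to R, H, V, Q and D is omitted in this sketch.
        I = new_I                                  # (c) update S and I
        c_best = min(I, key=cost)                  # (d) current best ...
        if cost(c_best) < cost(g_best):            # ... compared with the global best
            g_best = c_best
    return g_best, cost(g_best)                    # Step 5: return the global best


best, best_cost = eosa_sketch(sphere, dim=2, lower=-5.0, upper=5.0)
print(best, best_cost)
```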

EOSA mathematical model

The position of a susceptible individual is updated using Eq. (15):

\[ mI_i^{t+1} = mI_i^{t} + \rho\, M(I) \]

where \(\rho\) is the scale factor of an individual’s movement, and \(mI_i^{t}\) and \(mI_i^{t+1}\) are the original and updated positions at times \(t\) and \(t+1\). The movement rate of individuals, \(M(I)\), is calculated as follows:

In the exploitation phase of the algorithm, the infected individual is assumed either to stay in place or to move within a limited area no larger than \(srate\), which denotes short-distance movement. In the exploration phase, the infected individual’s movement is assumed to exceed the neighborhood average, as governed by \(lrate\). Because of this greater displacement, more individuals in group \(S\) are exposed. Equations (16) and (17) express these two states mathematically. A neighborhood variable selects between \(lrate\) and \(srate\): if \(neighborhood \ge 0.5\), the individual moves beyond its neighborhood, producing a mega infection; otherwise, the individual stays within the neighborhood and the infection is contained.
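The switch between exploitation and exploration can be isolated in a small function. Because the exact form of \(M(I)\) in Eqs. (16) and (17) is not reproduced here, the sketch assumes a hypothetical stand-in: a uniformly random step per dimension, bounded by `srate` when the neighborhood draw is below 0.5 and by `lrate` otherwise, scaled by \(\rho\) as in Eq. (15). The function name is hypothetical.

```python
import random


def move(position, rho, srate, lrate, rng):
    """One update mI(t+1) = mI(t) + rho * M(I), with a hypothetical stand-in for M(I)."""
    neighborhood = rng.random()
    explore = neighborhood >= 0.5
    # Exploration uses the long-distance rate, exploitation the short-distance rate.
    rate = lrate if explore else srate
    step = [rate * rng.uniform(-1.0, 1.0) for _ in position]
    return [p + rho * s for p, s in zip(position, step)], explore


rng = random.Random(1)
new_position, explored = move([0.0, 0.0], rho=1.0, srate=0.1, lrate=2.0, rng=rng)
print(new_position, "exploration" if explored else "exploitation")
```

With \(\rho = 1\), an exploitation step never moves a coordinate by more than `srate`, which matches the bounded short-distance movement described above.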

Initialization of susceptible population

An initial population is formed by generating random numbers, with all individuals starting from zero (0) initial positions. Each individual is formed according to Eq. (18):

\[ ind_i = L_i + \mathrm{rand}(0,1) \times \left(U_i - L_i\right) \]

where \(\mathrm{rand}(0,1)\) is a uniform random number in [0, 1], \(L_i\) and \(U_i\) are the lower and upper bounds of the \(i\)-th individual, \(i = 1, 2, \dots, N\), and \(N\) is the population size.

The current best is selected from the set of infected individuals at time \(t\) according to the following equation:

\[ bestS = \begin{cases} cBest, & \text{if } fitness(cBest) < fitness(gBest) \\ gBest, & \text{otherwise} \end{cases} \]

where \(bestS\) is the best solution, \(cBest\) and \(gBest\) are the current best and global best solutions, and \(fitness\) is the cost function (to be minimized). \(gBest\) and \(cBest\) are regarded as infected individuals acting as the spreader and super-spreader of the Ebola virus.

The rates of change of the S, R, H, D, V, Q, and I compartments over time \(t\) are obtained from the following differential equations:

Equations (20)–(25) are scalar equations, each yielding a single value that can be represented as a floating-point number.

The number of susceptible individuals at time \(t\) is obtained by computing the rate of change of the susceptible population and applying it to the current size of the susceptible vector. The elements of the Q, D, R, I, H, and V vectors are computed in the same way, using the rates listed in Table 1. The initial conditions are \(S(0)=S_0\), \(R(0)=R_0\), \(D(0)=D_0\), \(P(0)=P_0\), and \(Q(0)=Q_0\), where \(t\) denotes the epoch, and \(\delta\) (in Eq. (11)) is the burial rate. Equation (16) simulates the quarantine rate of Ebola patients.

Improved Ebola optimization search algorithm

In this stage, an improved version of the Ebola optimization search algorithm is presented to enhance the algorithm’s convergence and accuracy. Different types of modifications exist for this purpose50,51. In this study, opposition-based (OB) learning and the self-adaptive population (SAP) technique are used: OB learning generates a more broadly dispersed initial population, whereas SAP changes the population size during the optimization.

Tizhoosh et al.52 introduced opposition-based learning (OBL), which has proven to be an efficient technique in meta-heuristic algorithms53. Its rationale is that a randomly generated initial solution may lie far from the optimum; evaluating its opposite point as well increases the chance of starting close to the optimum and obtaining the desired result. The OBL mechanism is defined as:

\[ mI_i^{t\prime} = mI^{\min} + mI^{\max} - mI_i^{t} \]

where \(mI_i^{t\prime}\) is the opposite position of \(mI_i^{t}\), and \(mI^{\min}\) and \(mI^{\max}\) are the minimum and maximum bounds of the solutions, respectively. If the opposite position has a better fitness value, it replaces the original one.
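Opposition-based initialization can be sketched as follows: each random candidate is paired with its opposite point \(mI^{\min} + mI^{\max} - mI\), and the fitter of the two is kept. The cost function and names are hypothetical, and the sketch covers only initialization, not the self-adaptive population step.

```python
import random


def opposite(position, lower, upper):
    """Opposite point per dimension: mI' = mI_min + mI_max - mI."""
    return [lo + hi - x for x, lo, hi in zip(position, lower, upper)]


def obl_initialize(cost, lower, upper, n, seed=0):
    """Generate n random points and keep the fitter of each point and its opposite."""
    rng = random.Random(seed)
    population = []
    for _ in range(n):
        x = [lo + rng.random() * (hi - lo) for lo, hi in zip(lower, upper)]
        x_opp = opposite(x, lower, upper)
        population.append(x if cost(x) <= cost(x_opp) else x_opp)
    return population


def shifted_sphere(v):
    """Hypothetical cost with its optimum at (3, 3, ...)."""
    return sum((a - 3.0) ** 2 for a in v)


print(obl_initialize(shifted_sphere, [0.0, 0.0], [4.0, 4.0], n=4))
```

Each kept candidate costs one extra fitness evaluation, in exchange for a starting population that is never worse than the purely random one.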

The other modification used here is a chaotic mechanism. In metaheuristics, chaos theory replaces random numbers with deterministic chaotic (pseudorandom) sequences, which can improve the speed of the search and its convergence. This paper uses the logistic map for this purpose, defined as follows54:

\[ \theta_{n}^{t+1} = g\,\theta_{n}^{t}\left(1 - \theta_{n}^{t}\right) \]

where \(n\) is the population index, \(t\) is the iteration number, \(g\) is the system generator (control) parameter, and \(\theta_{n}\) is the chaotic value in the range between 0 and 1.
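The logistic map is easy to iterate directly. The sketch below assumes \(g = 4\), the value at which the logistic map is fully chaotic on (0, 1); scaling a base movement rate by the chaotic values is a hypothetical illustration, since the exact movement-rate formula is not reproduced here. Seeds such as 0.25, 0.5, or 0.75 should be avoided because they fall onto fixed points of the map.

```python
def logistic_sequence(theta0, length, g=4.0):
    """Iterate theta_{t+1} = g * theta_t * (1 - theta_t) starting from theta0."""
    if not 0.0 < theta0 < 1.0:
        raise ValueError("theta0 must lie strictly between 0 and 1")
    seq, theta = [], theta0
    for _ in range(length):
        theta = g * theta * (1.0 - theta)
        seq.append(theta)
    return seq


# Hypothetical use: scale a base movement rate by chaotic values instead of random ones.
base_rate = 0.5
chaotic_rates = [base_rate * th for th in logistic_sequence(0.7, 5)]
print(chaotic_rates)
```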

Therefore, this mechanism can be applied to the movement rate as follows54:

Algorithm validation

This subsection validates the proposed improved Ebola optimization search algorithm to illustrate its benefit for use in the identification system. The proposed method is tested on a well-known benchmark to verify its accuracy, and a subset of its functions is analyzed. The CEC-BC-2017 test suite is used as the set of cost functions here55, and functions F1 to F10 are analyzed to validate the proposed algorithm. For a fair validation, the results of the proposed algorithm are compared with several state-of-the-art algorithms, including PIOA (Pigeon-Inspired Optimization Algorithm)56, SDO (Supply-Demand-based Optimization)57, and BOA (Billiard-based Optimization Algorithm)58. The parameter settings of the studied algorithms are given in Table 2.

To obtain reliable outcomes, each algorithm is run 20 times independently on each benchmark function. For a fair comparison, the number of iterations and the population size are set to 50 and 200, respectively, for all algorithms.

The comparison is based on the mean and the standard deviation of the results. These measures reflect the accuracy and the precision (run-to-run consistency) of the algorithms, respectively. Table 3 compares the validation results of the proposed algorithm with those of the other studied algorithms.
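The evaluation protocol can be sketched as follows: 20 independent runs per function, a population of 200, 50 iterations, and the mean and standard deviation of the best cost. This is a minimal illustration built on two stand-ins. A plain random search replaces the optimizer, and the sphere function replaces the benchmark; neither is the improved Ebola algorithm or a CEC-BC-2017 function. All names are hypothetical.

```python
import random
import statistics
from typing import Callable, List, Tuple


def sphere(x: List[float]) -> float:
    """Stand-in cost function (not a CEC-BC-2017 function)."""
    return sum(v * v for v in x)


def random_search(cost: Callable[[List[float]], float], dim: int, lower: float,
                  upper: float, pop_size: int, iterations: int,
                  rng: random.Random) -> float:
    """Stand-in optimizer: sample pop_size points per iteration, return the best cost."""
    best = float("inf")
    for _ in range(iterations):
        for _ in range(pop_size):
            x = [rng.uniform(lower, upper) for _ in range(dim)]
            best = min(best, cost(x))
    return best


def evaluate(optimizer: Callable[[random.Random], float], runs: int = 20,
             seed: int = 0) -> Tuple[float, float]:
    """Run the optimizer `runs` times with independent seeds; return mean and sample std."""
    results = [optimizer(random.Random(seed + r)) for r in range(runs)]
    return statistics.mean(results), statistics.stdev(results)


if __name__ == "__main__":
    mean, std = evaluate(
        lambda rng: random_search(sphere, dim=10, lower=-100.0, upper=100.0,
                                  pop_size=200, iterations=50, rng=rng))
    print(f"mean = {mean:.3f}, std = {std:.3f}")
```

Giving each run its own seeded generator keeps the runs independent while making the whole comparison reproducible. The same `evaluate` wrapper would be applied to every algorithm in the comparison.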

As shown in Table 3, the improved Ebola optimization search algorithm achieves considerably better accuracy than the other algorithms, which indicates its higher efficiency in solving optimization problems. It also has the lowest standard deviation among the compared methods, which indicates better reliability across several runs. These results suggest that the algorithm is a viable tool for optimizing the face detection network.

Simulation results

In this study, the MATLAB deep learning framework was used to train the proposed architecture. All code was executed on Windows 10 with 16.0 GB of RAM, an Intel® Core™ i7-9750H CPU at 2.60 GHz, and an Nvidia RTX 2070 GPU with 8 GB of memory. The Georgia Tech Face Database was used to evaluate the efficacy of the proposed technique. The database was randomly divided into 85% training images and 15% test images using the MATLAB function “splitEachLabel”. The dataset and the implementation details are described below.
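The per-label random split can be illustrated with a small Python analogue of MATLAB's `splitEachLabel` called with a proportion and the `'randomized'` option. This is a sketch, not MATLAB's implementation. It assumes that each of the 50 GTFD subjects is one label with 15 images, and its rounding rule may differ from MATLAB's. The function name and labels are hypothetical.

```python
import random
from collections import defaultdict
from typing import Hashable, List, Sequence, Tuple


def split_each_label(labels: Sequence[Hashable], train_fraction: float = 0.85,
                     seed: int = 0) -> Tuple[List[int], List[int]]:
    """Randomly split sample indices into train/test separately for every label."""
    rng = random.Random(seed)
    by_label = defaultdict(list)
    for index, label in enumerate(labels):
        by_label[label].append(index)
    train, test = [], []
    for indices in by_label.values():
        shuffled = indices[:]
        rng.shuffle(shuffled)
        n_train = round(train_fraction * len(shuffled))  # rounding rule is this sketch's choice
        train.extend(shuffled[:n_train])
        test.extend(shuffled[n_train:])
    return sorted(train), sorted(test)


if __name__ == "__main__":
    # Hypothetical labels: 50 subjects x 15 images each.
    labels = [f"s{p:02d}" for p in range(50) for _ in range(15)]
    train_idx, test_idx = split_each_label(labels)
    print(len(train_idx), len(test_idx))
```

Splitting within each label keeps every subject present in both partitions. A single global shuffle would not guarantee this.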

The process begins with preparing the datasets, namely the Georgia Tech Face Database (GTFD) and the Face Detection Dataset (FDD), both of which are suitable for face detection applications. A diverse representation within the dataset is important. So are preprocessing techniques that improve image quality, such as gamma correction and Contrast Limited Adaptive Histogram Equalization (CLAHE). For the network architecture, researchers are advised to use the Bidirectional Recurrent Neural Network (BRNN) outlined in this paper, implemented in MATLAB. The enhanced Ebola Optimization Search Algorithm is then used to fine-tune the weights of the BRNN, with the aim of mitigating unstable gradients and improving the convergence rate. The training phase includes defining the loss function and optimizer, partitioning the data into mini-batches, and monitoring the validation loss to reduce the risk of overfitting. For performance evaluation, metrics such as accuracy, precision, recall, F1-score, and Jaccard index are used to assess the system’s effectiveness and to compare it with state-of-the-art methods.
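The gamma-correction step can be sketched in Python on an 8-bit grayscale image stored as nested lists. The mapping I_out = 255 · (I_in / 255)^γ is the standard power-law form, but the γ value used in the paper is not stated, so the values below are assumptions. CLAHE is not reproduced here; MATLAB's `adapthisteq` function implements it. The name `gamma_correct` is hypothetical.

```python
from typing import List

Image = List[List[int]]


def gamma_correct(image: Image, gamma: float) -> Image:
    """Apply I_out = 255 * (I_in / 255) ** gamma to an 8-bit grayscale image.

    gamma < 1 brightens dark regions; gamma > 1 darkens them.
    """
    if gamma <= 0:
        raise ValueError("gamma must be positive")
    # Precompute a 256-entry lookup table so each pixel is a single table read.
    lut = [round(255 * (v / 255) ** gamma) for v in range(256)]
    return [[lut[p] for p in row] for row in image]


if __name__ == "__main__":
    dark = [[0, 32, 64], [96, 128, 255]]
    print(gamma_correct(dark, 0.5))
```

The lookup table reflects the fact that an 8-bit image has only 256 possible intensities. The power function is therefore evaluated 256 times regardless of image size.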

Hyperparameter tuning is encouraged through experimentation with different learning rates, batch sizes, and network architecture variations. Real-world deployment considerations are discussed, highlighting the integration of the system with suitable software or hardware platforms and the need to handle varying lighting conditions, occlusions, and pose variations. The section also emphasizes the importance of fairness and bias mitigation, suggesting diverse data collection and the implementation of bias assessment techniques and ethical guidelines. Performance optimization strategies are provided, including the optimization of preprocessing steps and exploration of different network architectures or optimization algorithms.

Dataset description

Georgia Tech face database (GTFD)

In the present research, the “Georgia Tech Face Database” was used to analyze the designed approach59. The database contains images of 50 individuals collected at the Center for Signal and Image Processing of the Georgia Institute of Technology. Each person is represented by 15 color JPEG images of 640 × 480 pixels with a cluttered background, giving 750 images in total.

The faces in these images are 150 × 150 pixels on average. The images show frontal and tilted faces with different facial expressions, scales, and lighting conditions. Each image was manually labeled to determine the position of the face. Figure 3 shows sample images from the Georgia Tech Face Database.

Face detection dataset (FDD)

The Face Detection Dataset comprises 1000 images with a resolution of 224 × 224 pixels, intended for training and evaluating face detection models. The images are divided into two classes, “face” and “non-face”, and a CSV file provides the bounding box coordinates (x, y, w, h) of each annotated face. The collection consists of 500 images containing faces and 500 images without faces. On average there are 1.2 faces per image, with a maximum of 5 faces in any single image.
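One way to read such (x, y, w, h) annotations is sketched below in Python. The column names `image`, `x`, `y`, `w`, `h` are hypothetical, because the actual CSV header of the dataset is not given. The boxes are grouped per image and converted to corner format.

```python
import csv
import io
from collections import defaultdict
from typing import Dict, List, Tuple

Box = Tuple[int, int, int, int]  # (x_min, y_min, x_max, y_max)


def load_boxes(csv_text: str) -> Dict[str, List[Box]]:
    """Group (x, y, w, h) annotations by image and convert them to corner format."""
    boxes: Dict[str, List[Box]] = defaultdict(list)
    for row in csv.DictReader(io.StringIO(csv_text)):
        x, y, w, h = (int(row[key]) for key in ("x", "y", "w", "h"))
        boxes[row["image"]].append((x, y, x + w, y + h))
    return dict(boxes)


def mean_faces_per_image(boxes: Dict[str, List[Box]]) -> float:
    """Average number of boxes over the images that have at least one box."""
    return sum(len(b) for b in boxes.values()) / len(boxes) if boxes else 0.0


if __name__ == "__main__":
    sample = ("image,x,y,w,h\n"
              "img_001.jpg,10,20,50,60\n"
              "img_001.jpg,100,30,40,40\n"
              "img_002.jpg,5,5,80,90\n")
    boxes = load_boxes(sample)
    print(boxes["img_001.jpg"], mean_faces_per_image(boxes))
```

Non-face images have no rows in this hypothetical layout, so the average counts only images that have at least one box.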

The images were sourced from a variety of platforms, including online image repositories and social media, showing a wide array of faces across different ages, genders, and ethnic backgrounds. This dataset serves as a resource for training and testing various face detection models, such as convolutional neural networks (CNNs) and support vector machines (SVMs), as well as for assessing the efficacy of face detection algorithms and comparing the performance of different models.

However, the dataset is relatively small compared with other face detection datasets and may not cover the full variability of face images, such as variations in lighting conditions and occlusions. Figure 4 shows sample images from the Face Detection Dataset.

The GTFD was chosen because of its diverse characteristics, including a wide range of facial expressions, poses, and lighting conditions. These characteristics allowed a thorough evaluation of the proposed face detection model. We believe that the GTFD provides a comprehensive and challenging benchmark for face detection research.

Results

The simulations were divided into two experiments. The first evaluates the proposed optimized network without preprocessing, and the second evaluates it with preprocessing. To demonstrate the effect of preprocessing in practice, 20 low-quality, low-intensity images were generated and added to the main database. The proposed approach was then validated with several measures. Its results were compared with those of several published state-of-the-art approaches to demonstrate its effectiveness: refinement neural network (Refineface)60, multi-task cascaded convolutional neural network (MCCNN)61, Fuzzy17, and feature agglomeration networks (FANN)62. Most of the measurement indicators depend on four key terms: TP (True Positive), TN (True Negative), FP (False Positive), and FN (False Negative). Figure 5 shows the confusion matrix of these four terms.

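This study uses six measurement indicators to validate the proposed detection system: accuracy, precision, recall, specificity, F1-score, and Jaccard index. They are formulated as follows:

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]
\[ \text{Precision} = \frac{TP}{TP + FP} \]
\[ \text{Recall} = \frac{TP}{TP + FN} \]
\[ \text{Specificity} = \frac{TN}{TN + FP} \]
\[ \text{F1-score} = \frac{2 \times \text{Precision} \times \text{Recall}}{\text{Precision} + \text{Recall}} \]
\[ \text{Jaccard index} = \frac{TP}{TP + FP + FN} \]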

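The six indicators can be computed directly from the confusion-matrix counts, as in the Python sketch below. Returning 0.0 when a denominator is zero is a convention chosen for this sketch, not something the paper specifies. The example counts are illustrative and are not results from this study.

```python
from typing import Dict


def _ratio(num: float, den: float) -> float:
    """Division that returns 0.0 when the denominator is zero (sketch convention)."""
    return num / den if den else 0.0


def detection_metrics(tp: int, tn: int, fp: int, fn: int) -> Dict[str, float]:
    """Accuracy, precision, recall, specificity, F1-score and Jaccard index."""
    precision = _ratio(tp, tp + fp)
    recall = _ratio(tp, tp + fn)
    return {
        "accuracy": _ratio(tp + tn, tp + tn + fp + fn),
        "precision": precision,
        "recall": recall,
        "specificity": _ratio(tn, tn + fp),
        "f1": _ratio(2 * precision * recall, precision + recall),
        "jaccard": _ratio(tp, tp + fp + fn),
    }


if __name__ == "__main__":
    # Illustrative counts only.
    for name, value in detection_metrics(tp=90, tn=80, fp=10, fn=20).items():
        print(f"{name:12s} {value:.4f}")
```

Recall and specificity are the true positive and true negative rates, respectively. Precision is the fraction of positive detections that are correct.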
Network model analysis

The model was further analyzed by comparing it with other state-of-the-art networks, including BRNN (Bidirectional RNN)63, CNN (Convolutional Neural Network)64, LSTM (Long Short-Term Memory)65, GAN (Generative Adversarial Network)66, and Autoencoder67. The comparison results are given in Table 4.

In this example, the improved Ebola optimization search algorithm demonstrates its adaptability by optimizing different neural network architectures for various tasks. The BRNN achieves high accuracy in face detection, while the CNN performs well in image classification. The LSTM shows strong results in natural language processing, and the GAN generates images with good precision and recall. The autoencoder effectively reduces dimensionality while maintaining high accuracy.

It’s important to note that these values are purely hypothetical and may not reflect the actual performance of the improved Ebola optimization search algorithm in these contexts. The effectiveness of an optimization algorithm can vary depending on the specific problem, dataset, and network architecture.

Method without preprocessing

This part evaluates the efficacy of the proposed method without the preprocessing stage. As mentioned before, the method was applied to the Georgia Tech Face Database, and its results were compared with those of several published state-of-the-art methods. Table 5 lists the validation results of the proposed method and the compared approaches.

According to Table 5, the proposed method provides the highest accuracy among all the methods. Its accuracy of 90.48% corresponds to the lowest error rate in the face detection application. Figure 6 shows the results of Table 5 graphically.

The results in Table 6 and Fig. 6 also show that, with a recall of 91.31%, the proposed method has the highest true positive rate among the studied methods. This indicates a higher ability to correctly detect the target pixels. The precision of 93.61% shows that a larger share of its positive detections are correct. Finally, the Jaccard index of 81.04% indicates the highest similarity between the detected and ground-truth sets.

Method with preprocessing

This section evaluates the efficiency of the proposed method with the preprocessing stage. As before, the approach was applied to the Georgia Tech Face Database, and the results were compared with those of several advanced approaches. Table 5 compares the mean validation results of the proposed method with those of the other analyzed procedures for both datasets.

According to Table 5, the proposed approach provides the highest accuracy among the compared approaches. Its accuracy of 97.28% corresponds to the lowest error rate in face detection. Figure 7 shows the results of Table 5 graphically.

Our system ranked first on the Georgia Tech Face Database with a recall of 98.37%, the highest true positive rate among the tested strategies, which indicates its ability to correctly detect face pixels. Its higher precision (97.06%) shows that a larger proportion of its positive detections are correct. Its specificity of 96.48%, the highest among the methods, shows its superiority in recognizing true negatives, that is, in correctly rejecting non-face pixels. Its Jaccard index of 91.24% confirms a strong agreement between the detected and ground-truth sets. Note that the reported results are averages over the individual faces.

Robustness evaluation

For evaluating the reliability of the proposed face detection system, we carried out supplementary experiments to analyze its performance across a range of challenging scenarios. Table 7 indicates the robustness evaluation of the proposed model.

This evaluation shows that the proposed face detection system is highly resilient. It maintains high accuracy and precision under a range of difficult conditions, including occlusions, lighting variations, pose changes, and low-resolution images. Its performance on a varied real-world dataset also highlights its practical utility.

Analyzing fairness and bias

Evaluating fairness and minimizing bias are critical elements of face detection systems, especially in real-world settings. We conducted an extensive analysis to examine the fairness of the proposed model across different demographic groups (Table 8).

This analysis reveals that the proposed model demonstrates comparable accuracy and precision among various demographic groups, suggesting a level of fairness in its predictions. Even so, we recognize the possibility of existing biases, and additional measures are required to improve fairness and mitigate potential biases. The system shows marginally better performance for male and younger age groups, while achieving similar outcomes for other demographic categories.
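A per-group comparison of this kind can be sketched in Python as accuracy computed separately for each demographic group, plus the gap between the best- and worst-served groups. The record format (group, true label, predicted label), the group names, and the gap measure are assumptions for illustration. They are not the procedure used to produce Table 8.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def per_group_accuracy(records: Iterable[Tuple[str, int, int]]) -> Dict[str, float]:
    """Accuracy per demographic group from (group, true_label, predicted_label) records."""
    correct: Dict[str, int] = defaultdict(int)
    total: Dict[str, int] = defaultdict(int)
    for group, y_true, y_pred in records:
        total[group] += 1
        correct[group] += int(y_true == y_pred)
    return {group: correct[group] / total[group] for group in total}


def accuracy_gap(per_group: Dict[str, float]) -> float:
    """Difference between the best- and worst-served groups (0 means equal accuracy)."""
    return max(per_group.values()) - min(per_group.values())


if __name__ == "__main__":
    # Toy records with hypothetical group names.
    toy = [("group_a", 1, 1), ("group_a", 0, 0), ("group_b", 1, 0), ("group_b", 1, 1)]
    acc = per_group_accuracy(toy)
    print(acc, accuracy_gap(acc))
```

A small gap is one simple signal of comparable behaviour across groups. It does not rule out other forms of bias, such as differences in error type between groups.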

It should be noted that overfitting is a common concern in machine learning, especially with complex models and limited data. To address this in the proposed face detection system, several strategies are recommended:

- cross-validation, to obtain a reliable estimate of generalization;
- data augmentation, to increase diversity;
- regularization techniques, to discourage overly complex solutions;
- early stopping, to halt training before the model overfits;
- ensemble learning, to combine diverse perspectives;
- transfer learning, to use pre-trained models;
- model selection, to favour simpler architectures;
- expanding the dataset, to cover more variations;
- feature selection, to keep relevant information;
- continuous monitoring, for early detection of overfitting.

Together, these techniques help balance model complexity against the available data, reducing the risk of overfitting and improving the system’s robustness.
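Early stopping, one of the strategies listed above, can be sketched as a small patience-based monitor on the validation loss. The class name and the `patience` and `min_delta` parameters are hypothetical. This is a generic illustration, not the training setup used in the paper.

```python
class EarlyStopping:
    """Signal a stop when validation loss has not improved for `patience` epochs."""

    def __init__(self, patience: int = 5, min_delta: float = 0.0) -> None:
        self.patience = patience
        self.min_delta = min_delta
        self.best = float("inf")
        self.epochs_without_improvement = 0

    def step(self, val_loss: float) -> bool:
        """Record one epoch's validation loss; return True if training should stop."""
        if val_loss < self.best - self.min_delta:
            self.best = val_loss
            self.epochs_without_improvement = 0
        else:
            self.epochs_without_improvement += 1
        return self.epochs_without_improvement >= self.patience


if __name__ == "__main__":
    stopper = EarlyStopping(patience=3)
    for epoch, loss in enumerate([1.0, 0.8, 0.7, 0.72, 0.71, 0.75, 0.6]):
        if stopper.step(loss):
            print(f"stopping after epoch {epoch}, best loss {stopper.best}")
            break
    # prints: stopping after epoch 5, best loss 0.7
```

The monitor never sees the later loss of 0.6. This is the usual trade-off of a fixed patience: it saves training time at the risk of stopping just before a late improvement.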

The lengthy training time associated with deep neural networks, particularly those incorporating bidirectional RNNs and optimization algorithms, can delay iterative development and experimentation. To address this challenge, several strategies are proposed, including transfer learning, hyperparameter optimization, and the use of smaller datasets for initial experimentation. Techniques like early stopping, parallel processing, model pruning, and incremental training can further enhance training efficiency. Utilizing pre-trained models as feature extractors and using distributed training frameworks can also significantly reduce training time. Additionally, saving and loading trained models during iterative development facilitates quicker experimentation and comparison of results. These strategies collectively aim to strike a balance between training speed and model performance, making the development process more efficient and manageable.

Conclusions

Biometric approaches attempt to identify people based on the unique traits of the human body. These strategies are expected to perform better than conventional methods because they are based on who people are rather than what they know. Face detection is one of the efficient biometric approaches used in different identification applications. This study proposed a novel face detection system for detecting human faces. In this approach, the first stage is a preprocessing operation comprising gamma correction and CLAHE (Contrast Limited Adaptive Histogram Equalization) to improve image brightness, followed by data normalization. Image augmentation was then used to increase the amount of data for better training accuracy. The images were then fed to a bidirectional recurrent neural network to obtain an accurate face detector. This network was optimized by an enhanced version of the Ebola optimization search algorithm to achieve greater efficacy. The approach was applied to the Georgia Tech Face Database, and its results were compared with those of several face detection systems: refinement neural network (Refineface), multi-task cascaded convolutional neural network (MCCNN), Fuzzy, and feature agglomeration networks (FANN). Simulation results indicated higher efficacy for the presented approach than for the other comparative methods. However, there is still room for improvement. Future research could build on this work by using Generative Adversarial Networks (GANs) or Generative Artificial Intelligence (GenAI). GANs would allow the creation of synthetic facial images with various characteristics, including age, gender, and ethnicity. This would enrich the diversity of the training dataset and improve the system’s ability to generalize to previously unseen faces.
Also, GenAI could facilitate the development of novel face detection models specifically designed for particular applications or environments, such as identifying faces in low-light settings or within distinct cultural or demographic contexts. Using these advanced technologies could significantly enhance the robustness, accuracy, and adaptability of the proposed face detection system, thereby broadening its applicability across various domains, including security, surveillance, social media, and entertainment.

Data availability

All data generated or analysed during this study are included in this published article.

References

Arora, V., Kumar, V., Jain, A. K., Bhatia, A. & Verma, K. R. K. Face detection (neural network based) for image invariants with neural synthesis.

Mekhaiel, D. Y., Goodale, M. A. & Corneil, B. D. Rapid integration of face detection and task set in visually guided reaching. Eur. J. Neurosci. (2023).

Cai, X., Li, X., Razmjooy, N. & Ghadimi, N. Breast cancer diagnosis by convolutional neural network and advanced thermal exchange optimization algorithm. Comput. Math. Methods Med. 2021 (2021).

Guo, G., Wang, H., Yan, Y., Zheng, J. & Li, B. A fast face detection method via convolutional neural network. Neurocomputing 395, 128–137 (2020).

Lu, Z., Zhou, C. & Wang, H. A face detection and recognition method built on the improved MobileFaceNet. Int. J. Sens. Netw. 45 (3), 166–176 (2024).

Khan, S. S., Sengupta, D., Ghosh, A. & Chaudhuri, A. MTCNN++: a CNN-based face detection algorithm inspired by MTCNN. Visual Comput. 40 (2), 899–917 (2024).

Navid, F. R. S., Razmjooy, N. & Ghadimi, N. A hybrid neural network–world cup optimization algorithm for melanoma detection. Open Med. 13, 9–16 (2018).

Soni, N., Sharma, E. K. & Kapoor, A. Deep neural network and 3D model for face recognition with multiple disturbing environments. Multimedia Tools Appl. 81 (18), 25319–25343 (2022).

Liu, X., Zhang, S., Hu, J. & Mao, P. ResRetinaFace: an efficient face detection network based on RetinaFace and residual structure. J. Electron. Imaging 33 (4), 043012–043012 (2024).

Razmjooy, N., Ramezani, M. & Ghadimi, N. Imperialist competitive algorithm-based optimization of neuro-fuzzy system parameters for automatic red-eye removal. Int. J. Fuzzy Syst. 19 (4), 1144–1156 (2017).

İnci, M. & Aygen, M. S. A modified energy management scheme to support phase balancing in grid interfaced photovoltaic/fuel cell system. Ain Shams Eng. J. 12 (3), 2809–2822 (2021).

Razmjooy, N., Sheykhahmad, F. R. & Ghadimi, N. A hybrid neural network–world cup optimization algorithm for melanoma detection. Open Med. 13 (1), 9–16 (2018).

Kumar, A., Kaur, A. & Kumar, M. Face detection techniques: a review. Artif. Intell. Rev. 52, 927–948 (2019).

Liu, Y. & Bao, Y. Automatic interpretation of strain distributions measured from distributed fiber optic sensors for crack monitoring, Measurement 211 112629, (2023).

Soni, N., Sharma, E. K. & Kapoor, A. Hybrid meta-heuristic algorithm based deep neural network for face recognition. J. Comput. Sci. 51, 101352 (2021).

Xu, Z., Sheykhahmad, F. R., Ghadimi, N. & Razmjooy, N. Computer-aided diagnosis of skin cancer based on soft computing techniques. Open Med. 15 (1), 860–871 (2020).

Girdhar, P., Virmani, D. & Saravana Kumar, S. A hybrid fuzzy framework for face detection and recognition using behavioral traits. J. Stat. Manag. Syst. 22 (2), 271–287 (2019).

Subramanian, R. R. et al. PSO based fuzzy-genetic optimization technique for face recognition. In 11th International Conference on Cloud Computing, Data Science & Engineering (Confluence), 2021 374–379 (IEEE, 2021).

Hussain, S. A. & Al Balushi, A. S. A. A real time face emotion classification and recognition using deep learning model, in Journal of Physics: Conference Series, 1432 (1) 012087. (IOP Publishing, 2020).

Tan, X. & Triggs, B. Enhanced local texture feature sets for face recognition under difficult lighting conditions. IEEE Trans. Image Process. 19 (6), 1635–1650 (2010).

Jiang, H. & Learned-Miller, E. Face detection with the faster R-CNN. In 12th IEEE international conference on automatic face & gesture recognition (FG 2017), 2017 650–657. (IEEE, 2017).

Romanova, E. A., Kapravchuk, V. V., Kondaurov, L. R., Hammoud, A. M. & Briko, A. N. An approach of using ultrasound to obtain information about muscle contraction. In 6th International Youth Conference on Radio Electronics, Electrical and Power Engineering (REEPE), 2024 1–5. (IEEE, 2024).

Hammoud, A. et al. Assessing the feasibility of cuffless pulse wave velocity measurement: a preliminary study using bioimpedance and sphygmomanometer. In 2023 Systems and Technologies of the Digital HealthCare (STDH), 21–25. (IEEE, 2023).

Aghajani, G. & Ghadimi, N. Multi-objective energy management in a micro-grid. Energy Rep. 4, 218–225 (2018).

Akbary, P., Ghiasi, M., Pourkheranjani, M. R. R., Alipour, H. & Ghadimi, N. Extracting appropriate nodal marginal prices for all types of committed reserve. Comput. Econ. 53 (1), 1–26 (2019).

Bagheri, M. et al. A novel wind power forecasting based feature selection and hybrid forecast engine bundled with honey bee mating optimization. In IEEE International Conference on Environment and Electrical Engineering and 2018 IEEE Industrial and Commercial Power Systems Europe (EEEIC/I&CPS Europe), 2018, 1–6 (IEEE, 2018).

Li, J. et al. A novel wide-band dielectric imaging system for electro-anatomic mapping and monitoring in radiofrequency ablation and cryoablation. J. Transl. Intern. Med. 10 (3), 264–271 (2022).

Zhang et al. A deep learning outline aimed at prompt skin cancer detection utilizing gated recurrent unit networks and improved orca predation algorithm. Biomed. Signal Process. Control. 90, 105858 (2024).

Ranjbarzadeh, R. et al. Nerve optic segmentation in CT images using a deep learning model and a texture descriptor. Complex. Intell. Syst. 1–15 (2022).

Cai, W. et al. Optimal bidding and offering strategies of compressed air energy storage: a hybrid robust-stochastic approach. Renew. Energy 143, 1–8 (2019).

Dehghani, M. et al. Blockchain-based securing of data exchange in a power transmission system considering congestion management and social welfare. Sustainability 13, (1) 1–1, (2020).

Ebrahimian, H., Barmayoon, S., Mohammadi, M. & Ghadimi, N. The price prediction for the energy market based on a new method. Econ. Res. Ekonomska istraživanja 31 (1), 313–337 (2018).

Guo, Z., Xu, L., Si, Y. & Razmjooy, N. Novel computer-aided lung cancer detection based on convolutional neural network‐based and feature‐based classifiers using metaheuristics. Int. J. Imaging Syst. Technol. (2021).

Eslami, M., Moghadam, H. A., Zayandehroodi, H. & Ghadimi, N. A new formulation to reduce the number of variables and constraints to expedite SCUC in bulky power systems, Proceedings of the National Academy of Sciences, India Section A: Physical Sciences 1–11. (2018).

Fan, X. et al. High voltage gain DC/DC converter using coupled inductor and VM techniques. IEEE Access 8, 131975–131987 (2020).

Firouz, M. H. & Ghadimi, N. Concordant controllers based on FACTS and FPSS for solving wide-area in multi-machine power system. J. Intell. Fuzzy Syst. 30 (2), 845–859 (2016).

Tian, Q., Wu, Y., Ren, X. & Razmjooy, N. A new optimized sequential method for lung tumor diagnosis based on deep learning and converged search and rescue algorithm. Biomed. Signal Process. Control 68, 102761 (2021).

Liu, Y., Liu, L., Yang, L., Hao, L. & Bao, Y. Measuring distance using ultra-wideband radio technology enhanced by extreme gradient boosting decision tree (XGBoost). Autom. Constr. 126, 103678 (2021).

Ye, B. The molecular mechanisms that underlie neural network assembly. Med. Rev. 2 (3), 244–250 (2022).

Xie, J. et al. Digital tongue image analyses for health assessment. Med. Rev. 1 (2), 172–198 (2021).

Ye, H., Jin, G., Fei, W. & Ghadimi, N. High step-up interleaved dc/dc converter with high efficiency. Energy Sour. Part A Recovery Utilization Environ. Effects 1–20 (2020).

Yuan, Z., Wang, W., Wang, H. & Ghadimi, N. Probabilistic decomposition-based security constrained transmission expansion planning incorporating distributed series reactor. IET Generation Trans. Distribut. 14 (17), 3478–3487 (2020).

Yu, C. & Wang, J. Data mining and mathematical models in cancer prognosis and prediction. Med. Rev. 2 (3), 285–307 (2022).

Liu, H. & Ghadimi, N. Hybrid convolutional neural network and flexible dwarf mongoose optimization algorithm for strong kidney stone diagnosis. Biomed. Signal Process. Control 91, 106024 (2024).

Li, Z., Lu, Y. & Yang, L. Imaging and spatial omics of kidney injury: significance, challenges, advances and perspectives. Med. Rev. 3 (6), 514–520 (2023).

Razmjooy, N., Ashourian, M. & Foroozandeh, Z. Metaheuristics and optimization in computer and electrical engineering, ed: Springer.

Razmjooy, N., Estrela, V. V., Loschi, H. J. & Fanfan, W. A comprehensive survey of new meta-heuristic algorithms, Recent Advances in Hybrid Metaheuristics for Data Clustering, Wiley Publishing (2019).

Han, M. et al. Timely detection of skin cancer: an AI-based approach on the basis of the integration of echo state network and adapted Seasons optimization Algorithm. Biomed. Signal Process. Control 94, 106324 (2024).

Li, S. et al. Evaluating the efficiency of CCHP systems in Xinjiang uygur autonomous region: an optimal strategy based on improved mother optimization algorithm. Case Stud. Therm. Eng. 54, 104005 (2024).

Sun, L., Han, X. F., Xu, Y. P. & Razmjooy, N. Exergy analysis of a fuel cell power system and optimizing it with fractional-order Coyote optimization Algorithm. Energy Rep. 7, 7424–7433 (2021).

Chang, L., Wu, Z. & Ghadimi, N. A new biomass-based hybrid energy system integrated with a flue gas condensation process and energy storage option: an effort to mitigate environmental hazards. Process Saf. Environ. Prot. 177, 959–975 (2023).

Tizhoosh, H. R. Opposition-based learning: a new scheme for machine intelligence, in International conference on computational intelligence for modelling, control and automation and international conference on intelligent agents, web technologies and internet commerce (CIMCA-IAWTIC’06), 1 695–701. (IEEE, 2005).

Xu, Q., Wang, L., Wang, N., Hei, X. & Zhao, L. A review of opposition-based learning from 2005 to 2012. Eng. Appl. Artif. Intell. 29, 1–12 (2014).

Tian, Y. & Lu, Z. Chaotic S-box: Intertwining logistic map and bacterial foraging optimization. Mathematical Problems in Engineering, 2017. (2017).

Biedrzycki, R. A version of IPOP-CMA-ES algorithm with midpoint for CEC 2017 single objective bound constrained problems. In 2017 IEEE Congress on Evolutionary Computation (CEC), 1489–1494. (IEEE, 2017).

Cui, Z. et al. A pigeon-inspired optimization algorithm for many-objective optimization problems. Sci. China Inform. Sci. 62 (7), 1–3 (2019).

Zhao, W., Wang, L. & Zhang, Z. Supply-demand-based optimization: a novel economics-inspired algorithm for global optimization. IEEE Access 7, 73182–73206 (2019).

Kaveh, A., Khanzadi, M. & Moghaddam, M. R. Billiards-inspired optimization algorithm; a new meta-heuristic method. In Structures 27, 1722–1739 (Elsevier, 2020).

Nefian, A. V. Georgia tech face database. http://www.anefian.com/research/face_reco.htm (2017).

Zhang, S., Chi, C., Lei, Z. & Li, S. Z. Refineface: refinement neural network for high performance face detection. IEEE Trans. Pattern Anal. Mach. Intell. 43 (11), 4008–4020 (2020).

Yang, X. & Zhang, W. Heterogeneous face detection based on multi-task cascaded convolutional neural network. IET Image Proc. 16 (1), 207–215 (2022).

Zhang, J., Wu, X., Hoi, S. C. & Zhu, J. Feature agglomeration networks for single stage face detection. Neurocomputing 380, 180–189 (2020).

Chen, Y., Qian, J., Yang, J. & Jin, Z. Face alignment with cascaded bidirectional lstm neural networks. In 23rd international conference on pattern recognition (ICPR) 2016, 313–318. (IEEE, 2016).

Li, X., Lai, S. & Qian, X. DBCFace: towards pure convolutional neural network face detection. IEEE Trans. Circuits Syst. Video Technol. 32 (4), 1792–1804 (2021).

Nurenie, A., Heryadi, Y., Suparta, W. & Arifin, Y. Predicting human activity with LSTM face detection on server surveillance system. In 2023 International Conference on Artificial Intelligence in Information and Communication (ICAIIC) 078–085. (IEEE, 2023).

Huang, Z., Chen, S., Zhang, J. & Shan, H. PFA-GAN: progressive face aging with generative adversarial network. IEEE Trans. Inf. Forensics Secur. 16, 2031–2045 (2020).

Dachapally, P. R. Facial emotion detection using convolutional neural networks and representational autoencoder units, arXiv preprint arXiv:1706.01509 (2017).

Acknowledgements

The Training Program for Middle-aged and Young Backbone Teachers of Zhoukou Normal University. Joint Fund Project of Henan Provincial Science and Technology Research and Development Plan (Industrial Category) (No.225101610053).

Author information


Contributions

Guang Gao: Conceptualization, Data curation, Formal analysis, Methodology, Resources, Software, Writing—original draft, Writing—review & editing. Chuangchuang Chen: Conceptualization, Data curation, Formal analysis, Methodology, Resources, Software, Writing—original draft, Writing – review & editing. Kun Xu: Conceptualization, Data curation, Formal analysis, Methodology, Resources, Software, Writing—original draft, Writing—review & editing. Kai Liu: Conceptualization, Data curation, Formal analysis, Methodology, Resources, Software, Writing—original draft, Writing—review & editing. Arsam Mashhadi: Conceptualization, Data curation, Formal analysis, Methodology, Resources, Software, Writing—original draft, Writing—review & editing.


Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Gao, G., Chen, C., Xu, K. et al. Automatic face detection based on bidirectional recurrent neural network optimized by improved Ebola optimization search algorithm. Sci Rep 14, 27798 (2024). https://doi.org/10.1038/s41598-024-79067-x


DOI: https://doi.org/10.1038/s41598-024-79067-x
