Abstract

Real-time detection of conveyor belt tearing is of great significance to ensure mining in the coal industry. The longitudinal tear damage problem of conveyor belts has the characteristics of multi-scale, abundant small targets, and complex interference sources. Therefore, in order to improve the performance of small-size tear damage detection algorithms under complex interference, a visual detection method YOLO-STOD based on deep learning was proposed. Firstly, a multi-case conveyor belt tear dataset is developed for complex interference and small-size detection. Second, the detection method YOLO-STOD is designed, which utilizes the BotNet attention mechanism to extract multi-dimensional tearing features, enhancing the model’s feature extraction ability for small targets and enables the model to converge quickly under the conditions of few samples. Secondly, Shape_IOU is utilized to calculate the training loss, and the shape regression loss of the bounding box itself is considered to enhance the robustness of the model. The experimental results fully proved the effectiveness of the YOLO-STOD detection method, which constantly surpasses the competing methods and achieves 91.2%, 91.9%, and 190.966 detection accuracy and detection speed in terms of recall, Map value, and FPS, respectively, which is able to satisfy the needs of industrial real-time detection and is expected to be used in the real-time detection of conveyor belt tearing in the industrial field.

Similar content being viewed by others

Introduction

Coal is a vital resource for national livelihood1. There are 74 large coal mines in Xinjiang, China. Belt conveyor, as one of the important tools for coal transportation, is widely used in coal mines due to its advantages of large transportation capacity, low power consumption, and high reliability. The conveyor belt, as the main component for carrying and transporting materials, plays a crucial role in the operation of belt conveyors. In the coal mines of Xinjiang, the mining areas are often subject to windy and sandy conditions due to geographical and other factors. Moreover, due to the direct contact between the conveyor belt and the materials, various damage to the conveyor belt is inevitable due to factors such as material shape and quality, belt manufacturing technology, and production equipment. Continuous friction exacerbates the damage, leading to serious faults such as tearing and even fractures. These damages may bring safety risks, shorten the conveyor’s service life, disrupt production, and cause significant economic losses. Therefore, it is of great significance to accurately, efficiently, and reliably identify these damages to prevent the aforementioned issues.

The defect detection task for conveyor belts usually involves many complex situations in practical applications. For example, defects such as tears and fractures on the conveyor belt exhibit varying sizes and directions, and are subject to background interference. In addition, real-time online monitoring is required for conveyor belt damage detection at present. Moreover, when faults like conveyor belt tears occur, replacement and maintenance work are usually carried out immediately upon discovery, which results in the insufficient on-site data retention of these incidents. It can be seen that the target detection task for conveyor belt defects faces challenges in accuracy, anti-interference, computational efficiency, and the scarcity of sample data.

The traditional method for detecting belt defects in coal mine industrial belt conveyors is mainly divided into two stages2. The typical methods in the first stage include manual inspection during shutdown, material leakage detection, electromagnetic detection, X-ray non-destructive detection, etc3. Slow manual detection speed, high false detection and missed detection rates, and the need for shutdown for testing; Leakage detection uses a balance hammer and tray device to detect whether there is material leakage at the bottom of the upper conveyor belt. This method is easily affected by the environment and has a high false detection rate; Electromagnetic detection is a method of determining whether tearing has occurred by installing electromagnetic detection devices on both sides of the conveyor, and determining whether the electromagnetic signals emitted by the electromagnetic detection devices are continuous. This method requires modification on the conveyor, which is costly and prone to false positives; X-ray non-invasive detection uses X-rays to detect whether the steel wires inside the conveyor belt are continuous to determine whether the conveyor belt is torn. This detection method is only applicable to low-speed running belts, and X-rays can emit radiation to the human body, so it is not widely used. All of these detection methods have some major shortcomings and are unable to meet the demand for detection accuracy in industrial tear detection. The second stage is intelligent detection based on machine vision4. Wang et al. constructed a SVM based belt tearing prediction model, combined with the Camshift operator to quickly track and capture moving tearing images, and built a real-time monitoring system for longitudinal tearing of conveyor belts, achieving good real-time monitoring results for conveyor belt tearing5. Hao et al. used a multi class support vector machine model to quickly extract the grayscale values of longitudinal tearing images of conveyor belts as salient values, which were used to identify the position and type of tearing, with a recognition accuracy of over 90%6. Li et al. used the SSR algorithm to detect tear damage on conveyor belts, selected appropriate scale parameters, extracted feature information such as tear area and length, and identified tears7. Li et al. proposed a machine vision inspection method based on multiple linear light sources. By irradiating the surface of the conveyor belt with multiple linear light sources, a line-array CCD camera is used to collect images of the conveyor belt, and an image processing method is used to complete real-time detection8. Machine vision technology makes inspection tasks easier and gradually meets the needs of industrial inspection tasks for inspection accuracy as well as real-time inspection. Machine vision technology makes the inspection task easier to complete, greatly reducing the difficulty of industrial inspection, and further improve the accuracy and efficiency of the inspection. Although, the development of machine vision technology has solved some of the problems in the target detection task, but how to improve the detection performance of the detection method as well as the detection of the stability of the detection of the new urgent need to solve the problem, leakage rate, high false detection rate, to adapt to the complexity of the detection environment, are today’s detection methods need to overcome the problem.

In recent years, object detection methods based on deep learning in machine vision have achieved significant prominence in various fields9,10,11. These methods uses deep learning models for tasks such as image segmentation and feature extraction, offering advantages like high detection accuracy, real-time performance, and anti-interference. The network models used in existing object detection tasks all come from the improvement and evolution of convolutional neural networks12; Zhu et al. embedded an SD module in the Yolov7 network to enhance the model’s feature extraction ability for small targets, and used LU-PAFPN as the neck network to enhance the information transmission efficiency between the upper and lower layers of the model, greatly improving the model’s processing ability for small target detection problems13; Zhang et al. built a DsPAN anchor free box network to improve the accuracy of the Yolov8 model for workpiece damage detection, enhancing the model’s ability to detect small object and obtain underlying information of the detected object14; However, despite the good results of Yolov8 in terms of detection accuracy, the application of these techniques in the industrial sector is still challenging, as the complex network structure leads to sub-optimal real-time performance, and the high demand for computational resources makes the deployment of this method a challenging task. With the development of technology, numerous scholars have proposed many excellent algorithms for equipment defect detection in industrial environment, such as attention mechanism15,16, multi-head convolution16,17, etc.

At present, methods for object detection based on machine vision and deep learning are mainly affected by two factors: model training and image data quality (including quantity, size, cleanliness, etc.) used for detection algorithms. From existing research, it can be seen that a large number of labeled datasets are the key to ensuring excellent performance of machine vision algorithm18. However, there are fewer equipment defect samples and fewer high-quality sample data, while facing a detection environment with multiple interference. These challenges greatly hinder the application of machine vision and deep learning in this field.

In summary, considering the limitations of existing methods in detecting defects in coal mine conveyor belt tearing with few samples and multiple interferences, proposed a deep learning based YOLO-STOD conveyor belt wear detection method. By incorporating the BotNet structural attention mechanism, the model’s feature extraction and learning ability are improved. Additionally, Shape_IOU is introduced to obtain the regression loss of the bounding box’s shape, which enhances the detection capability for small sample defects and the model’s robustness in complex environments, ultimately achieving superior detection performance for conveyor belt tearing defects under challenging conditions. The main contributions of this paper are outlined as follows:

-

(1)

This study proposed a YOLO-STOD method based on deep learning, which extracts deep level features of image tearing damage to achieve real-time detection of longitudinal tearing damage in conveyor belts with few samples and complex environmental conditions. A detection accuracy of 91.9% and a detection speed of 190.966 were obtained, which well meets the demand for real-time detection of conveyor belt tears in industrial sites.

-

(2)

In order to improve the model’s detection ability for small tearing targets, this study integrates the BotNet bottleneck structure in the backbone network of Yolov5 to improve the model’s feature extraction ability for targets and enhance the model’s detection accuracy for tearing.

-

(3)

In order to further improve the detection ability of the model, this study uses Shape_IOU to calculate the model loss, and by considering the shape loss of the bounding box itself, the convergence speed as well as the accuracy of the model is greatly improved, which greatly improves the detection accuracy of the model and the detection ability in complex environments.

-

(4)

This research developed a diverse dataset of conveyor belt tearing defects to support machine vision based defect target detection for coal mine conveyors and other potential industrial equipment.

The specific structure of this paper is as follows: “Related work” reviews related work on object detection. “The Basic Theory of Yolov5” discusses the basic theory and limitations of Yolov5. “Proposed model” presents the proposed algorithm. “Experiment” covers the experimental validation. Finally, “Conclusion” provides the summary.

Related work

The object detection algorithms based on machine vision and deep learning are mainly divided into two-stage and single-stage algorithms. These two stages detection methods use two independent networks to handle region localization and classification problems separately. Firstly, candidate boxes are formed to determine the regions containing objects, and then complex networks are used to detect and classify the selected regions. This type of methods focuses more on the selective regions of the network structure. However, this method has a slow detection speed and cannot meet the needs of real-time detection. A typical method is the Faster Region based Convolutional Neural Network (Faster R-CNN) series19;

The regression based single-stage detection method directly detects and classifies all regions in an image. Typical examples of this approach include SSD algorithm and Yolo series algorithm20. The detection method based on Yolo is the most typical single stage object detection method. Yolo uses convolutional neural networks to extract image features, and directly predicts bounding boxes and categories by generating anchor boxes, thereby achieving real-time object detection. This method has extremely high detection accuracy and extremely fast detection speed.

Yolo series algorithms after several years of development, has been iterated out of many versions, compared to other algorithms in the Yolo series, the Yolov5 model has stronger reliability and compatibility, more lightweight structure makes it relatively low demand for computing resources, has a greater advantage in the detection speed, but also to ensure a higher detection accuracy, Yolov7 detection speed has a slight advantage, but there is a certain decline in detection accuracy, Yolov8 has better detection accuracy, but more decline in detection speed, in the balance between detection accuracy and detection speed, Yolov5 is clearly doing better21. Yolov7 has a slight advantage in detection speed, while Yolov7 has a slight advantage in detection speed and a certain decline in detection accuracy22. Yolov8 has a better detection accuracy, but the detection speed declines more, and Yolov5 is obviously better in the balance between detection accuracy and detection speed; as verified by the experiments, Yolov8 has a higher value of Map, but there is a significant decline in detection speed, and Yolov7 performs poorly on the index of detection accuracy. Considering computational resources, detection accuracy, and detection real-time, we finally choose Yolov5 network as the baseline network in this study.

Yolov5, as an important improvement in the Yolo series, is based on Yolov3. Yolov5 adds Mosaic image enhancement technology to the input end to enhance the stability and robustness of the model, and concatenates the detected images. It also introduces adaptive anchor box calculation to enhance detection accuracy by learning optimal anchor box parameters. Additionally, adaptive image scaling addresses the issue of inconsistent input image scales. Due to the rapid development of deep learning based object detection models, Yolov5 and its improved versions have been widely applied in various fields of object detection tasks. Wang et al. optimized the Yolov5 model using SE attention mechanism and K-means algorithm for road damage detection, achieving a detection accuracy of over 95%23. Sun et al. proposed a machine vision based conveyor belt tear detection method. The method improves the model by adding the attention mechanism MCA to the Yolov5 network and replacing the Alpha-iou Loss to achieve the real-time conveyor belt tear detection task24; Wang et al. used the improved Yolov5 model to detect the X-ray images of the wire rope cores inside the conveyor belt, and further judged whether the conveyor belt was torn by detecting whether the wire rope cores were broken or not, and the detection accuracy could reach 91%25. Wei et al. used the UNet structure combined with the Yolov5 model to achieve real-time detection of pedestrians on the road in complex environments with a detection accuracy of up to 97.5% using data from a combination of visible and infrared images26. Zheng et al. combined multispectral channel attention with the Yolov5 algorithm in pedestrian and car detection, enhancing information propagation between features and greatly improving the detection accuracy of the model27. Wang et al. developed a small model fruit detection method based on the channel pruning Yolov5s algorithm, which uses the channel pruning algorithm to trim and fine tune the model, achieving fast and accurate detection of apple small fruits28.

The basic theory of Yolov5

This section provides a detailed introduction to the theoretical background of the selected basic model Yolov5, and discusses the shortcomings of existing Yolov5 algorithms in detecting targets with few samples of tearing defects and complex environmental interference.

Basic structure

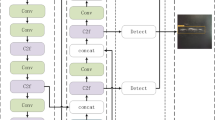

The Yolov5 algorithm includes Input, Backbone, Neck, and Head. The algorithm structure is shown in Fig. 1.

In the backbone network, Yolov5 uses CSPDarknet53 as its backbone network, which mainly includes CSPNet and Darknet53. CSPNet is mainly composed of a series of convolutional layers (Conv), pooling layers, and residual blocks to improve the detection accuracy and speed of the network. The Darknet53 structure is composed of 53 convolutional layers, mainly used to extract shallow and deep features of conveyor belt tearing. In addition to the backbone network, the CBS structure is a fundamental component of the Yolov5 model, mainly responsible for extracting target features. It consists of Conv, Batch Normalization (BN) layer, and activation function SiLu, where SiLu is used to improve the model’s representation ability and enhance its stability. CSP is a key component in Yolov5, which is a double-layer parallel structure mainly composed of CBS and n BottleNecks. When the torn feature is input into the CSP structure, it will be divided into two parts. One part is directly convolved through the convolutional layer, and the other part is processed through cross stage connections. Finally, the two parts of the features are fused. By merging features from different stages, the network’s feature extraction ability is greatly enhanced, and information loss is effectively reduced, ultimately enabling the model to detect torn features more accurately and improving the detection efficiency of the model; SPPF is an important structure in the Yolov5 model, which is an improvement of the SPP structure in the Yolov3 network. SPPF is mainly used to fuse tear features at different scales. It is composed of a CBS module and a maxpooling module in series. Compared to the parallel structure of SPP, SPPF reduces the computational complexity of the model while retaining the same functionality, improving the detection efficiency of the model2.

The Neck section of Yolov5 mainly uses the PANet structure, which is a good feature extraction structure. PANet has established a feature pyramid that enhances the model’s ability to detect tearing features by fusing low-level high-resolution tearing image features at the bottom of the pyramid with strong semantic information at the top. Compared to FPN, the use of top-down and bottom-up bidirectional feature fusion paths improves the model’s ability to process target features in images and enhances the overall performance of the model29.

The Head section is mainly responsible for the final regression prediction of tear features, which mainly includes the detection layer, output layer, and loss function. The detection layer consists of convolutional layer, batch normalization layer, and SiLu activation function layer, which is used to extract the position and category information of features from the feature map. There are three output layers in the Head network, which are responsible for decoding predicted information into the position and category of the object detection box. They can output three different scales of features, enabling the model to adapt to detection tasks at different scales and achieve multi-scale object detection. The loss function is used to measure the difference between the predicted results of the model and the actual labels, and to improve the detection ability of the model by optimizing this difference30.

Disadvantages of Yolov5

Yolov5 effectively balances detection accuracy and speed, making it a powerful network for object detection tasks. It mainly constructs a corresponding detection model by continuously learning the features of the detection target, and uses the model to complete the detection task, greatly improving the accuracy and efficiency of object detection tasks31. However, the training process of this model requires the support of a large number of data samples. When data samples are insufficient, the model may over learn the existing target features, greatly weakening its generalization ability, which is called overfitting. This overfitting results in a model that cannot be applied to data outside the training set. In addition, a lack of training data can prevent the model from learning the target’s deep features, severely impacting detection accuracy, particularly in complex and highly interfered environments.

The poor detection performance for small targets is also a major flaw of the Yolov5 algorithm32. Yolov5 uses CSP_ Net as the feature extraction network. When performing feature extraction tasks for small targets, CSP_ Net can extract very little feature information due to the target being too small, resulting in severe loss of target feature information and the model being unable to learn target features well.

Meanwhile, the detection accuracy of Yolov5 is also susceptible to interference from the detection environment, and multivariate interference is also a major factor affecting the model’s detection accuracy33. In complex situations such as occlusion and other damage interference, the model cannot recognize and learn target features well, leading to an increase in false positives and missed detections.

As a deep learning network, Yolov5 has a complex network structure and numerous hidden layers, which leads to the degradation of model capabilities and the problem of vanishing gradients, which also affects the detection performance of the model.

Proposed model

The YOLO-STOD network developed in this article is improved to address the shortcomings of the Yolov5 network in object detection tasks. BotNet attention mechanism and Shape_IOU are introduced to enhance the model’s feature extraction ability, improve the detection accuracy and small object detection performance of the model.

BotNet attention mechanism

How to further enhance the feature information extraction ability of Yolo network for small target objects and the anti-interference ability of the model in complex environments has always been an important direction for the development of Yolo algorithm. The feature extraction ability of the backbone network in Yolov5 almost determines the performance of the entire model. Therefore, the improvement of the feature extraction method of the backbone network is also the focus of this study.

The BotNet (Bottleneck Transformers Network) structure is an improvement of the ResNet bottleneck network, a hybrid model that combines a Convolutional Neural Network (CNN) with a Self-Attention mechanism, where the CNN has translational invariance and the Transformer has a global sensory field34. The specific structure of BotNet is shown in Fig. 2a.This combination enables the BotNet network to effectively mitigate the gradient vanishing problem in deep learning networks.BotNet adopts the Multi Head Self Attention (MHSA) of the Transformer module to replace the 3 × 3 convolution in the ResNet50 network, and each head of the MHSA can independently learn the tearing feature information in a different dimensional tear feature information, which greatly improves the model’s ability to acquire small target feature information and anti-interference ability in complex environments. At the same time, MHSA can use multiple heads to process the features in parallel to improve the detection efficiency.The specific structure of BotNet is shown in Fig. 2b.

In response to the shortcomings mentioned in “Disadvantages of Yolov5”, the BotNet structure is introduced into the backbone network of Yolov5. The BotNet module is a typical bottleneck structure that combines CNN networks with Transformer architecture. This combination can effectively enhance the model’s global perception ability and improve the accuracy of tearing feature detection in complex situations. And replace the 3 × 3 convolutional layers in the bottleneck structure with Multi Head Self Attention modules. This replacement enables the model to process the input torn image sequence in different subspaces in parallel, capturing semantic information of tearing at different levels and aspects, helping the model extract and integrate smaller tearing features from multiple perspectives and levels, ultimately improving the model’s recognition ability for smaller tears. And although the BotNet structure adds the computational structure of the Transformer, the use of the Transformer structure and convolutional layers in the structure work together, which helps to maintain computational efficiency and the detection performance of the model.

Shape_IOU

One limitation of Yolov5 is its low detection accuracy for objects with irregular shapes or sizes, especially for small targets, which cannot guarantee detection accuracy. One of the main reasons for this is that Yolov5 uses IOU as the target location evaluation metric. Common loss functions include GIOU35, CIOU36, SIOU37, etc. They only focused on the impact of the distance, shape, and angle between the predicted box and the real box on the regression of the bounding box from different aspects, without paying attention to the loss caused by the shape of the bounding box itself. For small object detection tasks, small targets occupy fewer pixels in the image, and the shape information of their bounding boxes is particularly important. The loss of bounding box information can lead to inaccurate loss calculation.

To address these limitations, the model loss is computed using Shape_IOU, which is a bounding-box regression loss function, as shown in Eq. (7)., can better handles conveyor belt tearing of the target boundary by optimizing the spatial overlap between the predicted box and the true bounding box. This largely reduces the shape difference of the bounding box, and Shape_IOU also gives a lower score when the predicted box is close to the real box but the shapes do not match, which is not taken into account by commonly used loss functions. This is extremely beneficial for the detection of objects with complex shapes or large variations in aspect ratios. At the same time, Shape_IOU Loss reduces the background interference through overlap evaluation and enhances the robustness of the model. The improved model performance is mainly attributed to the calculation of Shape_IOU, which prioritises the overlap area between the predicted and actual boxes, and focuses on the shape and scale of the enclosing boxes themselves, matching the shapes between the enclosing boxes, and on the correctness of the shape of the enclosing boxes themselves, as shown in Eq. (1). This leads to better alignment and reduces the likelihood of incorrectly detecting small torn targets or irregularly shaped objects. Improving the model’s detection accuracy and ability to complete complex detection tasks. In summary, the introduction of the Shape_IOU boundary regression loss function effectively improves the detection accuracy of the model. By focusing on the spatial overlap between the prediction frame and the real bounding box’s itself, the inherent limitations associated with traditional loss functions are effectively mitigated38. When considering the geometric constraints between the ground truth (b) box and the prediction box(b(gt)), Shape_IOU calculates the loss by adjusting the scale of the anchored box itself. The calculation principle is shown in Fig. 3. Experiments show that the computational power of the Shape_IOU is correlated with a scale factor, which represents the scale of the target in the dataset. When the scale factor is 1.0, the detection accuracy of the model reaches its peak. As the scale factor gradually increases, the prediction accuracy and other related measures all decrease to varying degrees. The computational principle and process of Shape_IOU are deduced as follows:

-

a.

Calculate IoU.

The formula for calculating the standard IoU is as follows:

where \(\:\text{b}\) and \(\:\text{b}\left(\text{g}\text{t}\right)\) represent the predicted box and the ground truth box, respectively.

Introduce the scale factor scale to calculate the weighted width \(\:ww\) and weighted height hh as follows:

-

b.

Calculate shape distance.

The specific calculation formula is outlined as follows:

-

c.

Calculate the shape consistency term obtained by cumulatively computing weighted width and height differences. The exponential decay function here is used to evaluate shape consistency.

The following formula defines the precise computation:

where ww and wh are the proportions of weighted differences in width and height, calcu-lated by the following formula:

-

d.

Calculate Shape-IoU.

The exact mathematical expression for the calculation is as follows:

In the formula, ww and hh represent the weight coefficients in the width and height directions of the bounding box, respectively, while scale represents the scale factor, which is an important parameter in Shape_IOU. The value of scale is generally between 0 and 1.5, corresponding to the scale of smaller detection targets and larger detection targets. The selection of scale parameters directly affects the detection performance of the model.

In the detection of longitudinal tearing on conveyor belts, the first step is to deal with complex and ever-changing detection environments. Secondly, the detection of smaller tears on conveyor belts is also a major challenge in today’s detection tasks. Introducing Shape_IOU in Yolov5 to focus on the shape loss of the bounding box itself is a good solution, which can greatly improve the overall detection accuracy of the model.

Shape_IOU solves the problem of losing shape information of bounding boxes when the detection environment is complex or the detection target is small. It also introduces a scale factor to adapt the size of the detection target, especially solving the problem of low detection accuracy caused by missing detection object information in small object detection tasks. In addition, it combines the shape loss of bounding boxes to improve the model’s anti-interference ability in complex environments.

Overall algorithm structure

The improved YOLO-STOD algorithm still focuses on the Yolov5 network, but replaces the last CSP3 layer in the backbone network with a BotNet structure, which optimizes the Yolov5 model’s poor detection accuracy for small object detection tasks and enhances its robustness, allowing the model to still have relatively stable and excellent detection accuracy in complex situations. The optimized network is shown in Fig. 4. The parameters of the layers of the model are shown in Table 1.

Experiment

This section will introduce a large number of experiments conducted by our research institute to verify the effectiveness and superiority of the proposed method.

Image acquisition and datasets description

Considering the gap in existing data on conveyor belt tearing in coal mining industry, this study first builds an experimental platform for conveyor belt tearing defects, and conducts corresponding data collection and datasets production.

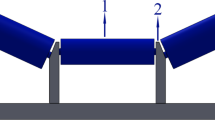

The experimental platform for longitudinal tearing defects on conveyor belts is shown in Fig. 5. The conveyor belt used in the experiment is a nylon rope core type (NN-300 L), with a width of 800 mm and a thickness of 8 mm, and a running speed of 3 m/s. The camera used for image acquisition equipment is an industrial CCD camera (MV-CS050-20GM) with a resolution of 2592 × 2048 and a maximum frame rate of 22.7 fps. The experiment set up a longitudinal tear fault on the conveyor belt, taking into account the harsh working environment of the coal mine conveyor belt and the multiple types of damage caused by long-term transportation of materials. Horizontal breakage of the conveyor belt and scratches on the conveyor belt skin were added as interference sources, and shielding factors were added to enhance the model’s anti-interference ability. The experiment initially obtained 1800 images, including 1300 background images without tearing and 500 images with tearing. The specific data is shown in Table 2. Using data augmentation techniques to enhance the dataset, 1500 background images and 1600 images with tears were obtained, totaling 3100 image data with a size of 640 × 640. The data augmentation effect is shown in Fig. 6. Divide all images into training set 1860, testing set 620, and validation set 620 in a 6:2:2 ratio, and ensure that the ratio of torn and non torn images in each sample set reaches 1:1.

Experimental environment

The model training platform is as follows: Windows operating system, Inter i5 12,400 F processor, NVIDIA GeForce RTX3060Ti 8G. The deep learning development environment is Python 11.3 and PyTorch 2.0.1. The parameters and hyperparameters for model training and testing in this article are shown in Table 3.

Evaluating indicator

In order to directly and comprehensively evaluate the performance of the model, accuracy, recall, F1 score, and Map values are used to evaluate the superiority of the improved model, and FPS, GFLOPs and Parameters indexes are used to evaluate the detection speed of the model. The specific form of indicators is as follows:

In the formula, \(\:pre-process\:\left(t\right)\), \(\:inference\:\left(t\right)\), and \(\:NMS\:\left(t\right)\) respectively represent the time required for prepossessing, inference, and NMS to detect an image.

Among the above evaluation indicators, mAP value is a comprehensive evaluation of model performance, which is the average of AP values for each detection category. F1score is the harmonic average of accuracy and recall. FPS is the number of frames per second that a model can process, measuring the model’s detection speed. GFLOPs denotes the number of computational floats per second that the model can perform, the larger the value, the faster the model computes and the better it can handle more complex devices. Parameters denotes the number of parameters of the model, the larger the number of parameters indicates that the model’s computational task is heavier and the computational cost is higher.

Result analysis

Performance comparison

In order to verify the superiority of the proposed model, the current popular attention mechanism and commonly used backbone network are added to Yolov5 to verify the effectiveness of the proposed model. The performance of the various improved models is shown in Table 4.

The results in Table 4 showed that integrating EfficientNet, BotNet, SimAm, and two attention mechanisms into the Yolov5 network achieved better detection performance than Yolov5s, with the BotNet attention mechanism achieving the best detection results among all detection accuracy indicators. After integrating the EfficientNet network structure into Yolov5s, the map value increased significantly, with a 1.3% increase. This is because the EfficientNet network model uses composite scaling coefficients and inverted residual structures to enhance the model’s feature extraction ability and representation performance, improving the network’s detection accuracy. However, due to the high computational requirements of the EfficientNet network itself, the integration into Yolov5s network increases the complexity and computational burden of the model, resulting in a decrease in the network’s detection speed. After adding the SimAm attention mechanism to the Yolov5s network, the recall rate showed impressive performance, increasing by 3.8%. This is because the SimAm attention mechanism can better capture the spatial relationships and contextual information of objects, expand the appeal field of the model, reduce false positives and missed detections in the model detection process, and enhance the model’s recall rate. In this article, the BotNet attention mechanism was added to the Yolov5s network, resulting in a 4.4% increase in recall, a 2.9% increase in Map value, a 2.06% increase in F1 score, and a 1% increase in FPS, all of which showed significant improvements. At the same time, the model incorporating the BotNet structure has the best scores in the GFLOPs metric, having the best GFLOPs scores with the least number of Parameters, which indicates that the model has the lowest computational cost and has the best computational power, due to the way that the multi-head self-attention mechanism in the BotNet structure processes the data in parallel, which reduces the model’s number of parameters and increases the processing power of the model, which greatly improves the detection speed of the model. The FPS index achieved the best performance among all comparison networks, indicating that adding BotNet to the Yolov5s network can help the model converge at the fastest speed and effectively reduce target loss during the detection process. The comparison of target loss between training and testing is shown in Fig. 7. Based on the above analysis, the overall performance of the BotNet attention mechanism is the best among all networks. This change is due to the introduction of the Transformer’s multi head self attention mechanism by the BotNet attention mechanism on the basis of the residual network, which enhances the accuracy of the model in detecting small targets and enhances the robustness of the model in complex environments, thereby improving the overall performance of the model. The detection results are shown in Fig. 8.

Subsequently, in order to verify the effectiveness of the loss function used in this article, commonly used loss functions were used in Yolov5s to detect the superior performance of Shape_IOU. The performance comparison of various loss functions is shown in Table 5.

From Table 5, it can be seen that using CIOU, Mpd IOU, DIOU, and Shape_IOU all have good results, which can make the overall Map value of the model reach over 90%. Among them, CIOU is the default loss function calculation method in Yolov5s, which considers the differences in shape and position between the predicted box and the real box, enhances the model’s ability to learn features, but also increases the computational complexity of the model and reduces its detection speed. DIOU calculates the loss by minimizing the distance between the center points of the target box and the detection box, but when the center points of the target box and the detection box are close, it will degenerate into the original IOU calculation method. MPD IOU calculates losses by calculating the distance between the target box and the detection box, greatly increasing computational costs and being susceptible to geometric shapes such as the length and width of the bounding box. By adding Shape_IOU, the accuracy of model detection has been improved by 1.8%, the Map value has been improved by 1.1%, the F1 score has been improved by 0.92%, and the detection speed has been improved by 8.54%. This is because Shape_IOU not only considers the loss of the position and shape of the bounding box, but also pays more attention to the shape matching between the predicted box and the real box, increasing the weight of the shape loss part of the bounding box itself. Compared with other loss functions, this calculation method can more accurately capture the shape regression loss of the bounding box, enhance the model’s ability to extract target feature information, improve the network’s detection accuracy for small target objects, and the model’s anti-interference ability. The comparison of bounding box loss of the model is shown in Fig. 9; The comparison of detection results for the same image is shown in Fig. 10.

Ablation experiment

In order to verify the contribution of the attention mechanism and loss function added in this article to the task of detecting small sample defects in longitudinal tearing of conveyor belts, ablation experiments were designed to determine the effects of BotNet attention mechanism and Shape_IOU on the detection results. The results of the ablation experiments are shown in Table 6.

As shown in the table above, the added BotNet attention mechanism and Shape_IOU have both improved the detection results of the model to a certain extent. The detection results of Yolov5s baseline network for longitudinal tearing of conveyor belts under the same conditions were 83.6%, 86.9%, and 88.9%, respectively. The BotNet attention mechanism is integrated into the Yolov5 network alone, and the model data is processed in parallel by combining the MHSA multi-head self-attention mechanism, which reduces the computational cost of the model, enhances the ability of the model to detect small objects, and enhances its robustness in complex environments. Under the condition that the detection speed is basically unchanged, the detection performance of the model is greatly improved. Compared to the Yolov5s network, the detection results showed a 4.4% increase in recall, a 1.9% increase in map values, and a 1% increase in FPS. The Shape_IOU was integrated into Yolov5s alone, and the calculation of the shape information of the detection frame itself was added to the model, which reduced the probability of missed and false detection, and improved the recall rate of the model. Finally, the BotNet attention mechanism and Shape_IOU were integrated into the Yolov5s network, which comprehensively improved the detection performance of the model. Although the number of parameters is not significantly reduced, the GFLOPs are improved by 31%, which greatly improves the detection efficiency of the model. In terms of detection accuracy, compared with the baseline network Yolov5, The accuracy was improved by 1.8%, the recall was improved by 4.3%, the Map value was improved by 3%, the F1 score was improved by 2.98%, and the FPS was improved by 9%. The experiment shows that the improved method in this article has a comprehensive improvement on the detection performance of the model, and also enhances the robustness of the model for tear detection under complex conditions, ensuring detection accuracy. The comparison of detection results is shown in Fig. 11.

Comparison of different models

In the field of object detection, the Yolo series methods have outstanding performance among single-stage detection methods. Therefore, a comparative experiment was designed to compare the Yolo series algorithms with the algorithm proposed in this paper, verifying the superiority and effectiveness of the algorithm proposed in this paper. The results are shown in Table 7.

As can be seen from the table below, the improved method in this paper outperforms Yolov7, Yolov7 Tiny, Yolov8 and Yolov9 in terms of detection accuracy and speed. In terms of detection speed, the Yolov5s model is smaller and faster than the other Yolo versions. Compared to the Yolov5, the Yolov7 has a 34 per cent lower FPS, the Yolov8 has a 24 per cent lower FPS and the Yolov9 has a 79 per cent lower FPS. Among them, Yolov9 performs the worst in the metric of FPS, which is due to the introduction of the PGI component and the GELAN module to the Yolov9 model, which greatly increases the number of parameters, complexity, computational complexity, and detection speed of the model. Yolov9 also has the highest GFLOPs, which suggests that the algorithm may be adapted to more complex detection tasks, but at the same time, it also greatly increases the computational cost of the model and has a high demand for computing equipment.Yolov8 has the highest Parameters score, which indicates that the algorithm has the highest computational cost. This is due to the fact that the Yolov8 model adopts the C2f module with richer gradient flow in Backbone, which greatly enhances the model’s detection ability. In addition, Yolov8 adopts the new Ancher-Free detection head in the Head part, which improves the flexibility and accuracy of the detection, but at the same time, it also increases the number of parameter counts of the model with the computational cost, and decreases the detection speed. Compared with Yolov5s method, the algorithm proposed in this paper improves 9% in FPS, which is due to the adopted MHSA self-attention module in the BotNet bottleneck structure, which processes the data in parallel in multiple heads to improve the detection efficiency of the model. In addition, in terms of detection accuracy, it can be observed that the Yolov8 model has a better performance in terms of Map values, which is mainly due to the C2f gradient flow module adopted by Yolov8 and the Ancher-Free detection head Ancher-Free detection head does not need to preset anchor frames, but directly predicts the bounding box and category of the target. This improvement increases the flexibility and accuracy of the detection and enhances the detection accuracy of the model. Compared to the Yolov5 method, the Map value of the Yolov7 model decreased by 1.4%, the Map value of the Yolov8 model increased by 1.5%, and the Map value of the Yolov9 model decreased by 0.2%. Compared to Yolov5 algorithm, the algorithm proposed in this paper has 3% improvement. In summary, the algorithm proposed in this paper also has some advantages and effectiveness in detection accuracy. A comparison of the detection results is shown in Fig. 12.

Conclusion

In order to maintain the safe production of coal resources, this paper proposes an improved model YOLO-STOD based on Yolov 5 for the limitations of the existing target detection methods.In this study, taking into account the problems and disturbances of conveyor belt tear detection in mining, we design a conveyor belt tear detection dataset based on the actual coal mines with fewer samples and more sources of disturbances, and we add the other damages, such as the horizontal breaks and the wear of the belt surface, as interferences are added to the dataset to enhance the diversity of the dataset. In order to improve the detection performance, the BotNet bottleneck structure and the Shape_IOU border loss function are innovatively integrated into the Yolov5 model, which effectively improves the detection accuracy and speed of the model, and also improves the anti-interference ability of the model and the performance of the detection task under the complex multi-interference environment. The experimental results show that the proposed method works well in small target detection, and the detection accuracy can reach more than 91% even under multi-source interference. Although this paper has strengthened the detection performance of the model target detection method for small targets, it has not been applied in practice, nor has it studied and experimented on the occlusion problem, and in the practical application, it is necessary to consider the harshness of the actual working conditions, as well as the protection measures of the image acquisition equipment, which is the limitation of the detection method in this paper. Although the deep learning model and method given in this study can be used for tear detection in mining belt conveyors, it can be extended to more industrial detection scenarios.

In the future, this study will further explore the model’s detection performance in real-world applications under other interference conditions such as occlusion and foreign objects. This is a problem given that is not addressed by the methods in this paper. In addition, we will also explore the use of techniques from other fields to improve the model performance, such as diffusion models and Neural Architecture Search (NAS) techniques.

Data availability

Data availability Currently, the data used in this study is not publicly available, they are part of an ongoing study. The data sup-porting this study’s findings are available on request from the corresponding author.

References

Caineng, Z. O. U. et al. Resource types, formation, distribution and prospects of coal-measure gas. Pet. Explor. Dev. 46 (3), 451–462 (2019).

Hou, C. et al. Multispectral visual detection method for conveyor belt longitudinal tear. Measurement 143, 246–257 (2019).

Zhang, M. et al. Deep learning-based damage detection of mining conveyor belt. Measurement 175, 109130 (2021).

Zhao, Z. Q. et al. Object detection with deep learning: a review. IEEE Trans. Neural Netw. Learn. Syst. 30 (11), 3212–3232 (2019).

WANG, F. & SUN, H. Visual inspection for extended edge belt tearing based on SVM. China Mech. Eng. 30 (04), 455 (2019).

Hao, X. & Liang, H. A multi-class support vector machine real-time detection system for surface damage of conveyor belts based on visual saliency. Measurement 146, 125–132 (2019).

Li, J. & Miao, C. The conveyor belt longitudinal tear on-line detection based on improved SSR algorithm. Optik 127 (19), 8002–8010 (2016).

Yang, Y. et al. On-line conveyor belts inspection based on machine vision. Optik 125 (19), 5803–5807 (2014).

Petrovic, A. et al. Computer-vision unmanned aerial vehicle detection system using yolov8 architectures. Int. J. Rob. Autom. Technol. 11, 1–12 (2024).

Gawande, U., Hajari, K. & Golhar, Y. Novel person detection and suspicious activity recognition using enhanced YOLOv5 and motion feature map. Artif. Intell. Rev. 57 (2), 16 (2024).

Liang, Y. et al. A stable diffusion enhanced YOLOV5 model for metal stamped part defect detection based on improved network structure. J. Manuf. Process. 111, 21–31 (2024).

Zeng, C., Zheng, J. & Li, J. Real-time conveyor belt deviation detection algorithm based on multi-scale feature fusion network. Algorithms 12 (10), 205 (2019).

]Zhu, J., Qin, C. & Choi, D. YOLO-SDLUWD: YOLOv7-based small target detection network for infrared images in complex backgrounds. Digit. Commun. Netw., (2023).

Zhang, Y. et al. DsP-YOLO: an anchor-free network with DsPAN for small object detection of multiscale defects. Expert Syst. Appl. 241, 122669 (2024).

Jiang, C. et al. AM-YOLO: Improved YOLOV4 based on attention mechanism and multi-feature fusion. In 2022 IEEE 6th Information Technology and Mechatronics Engineering Conference (ITOEC), vol. 6, 1403–1407 (IEEE, 2022).

Sekharamantry, P. K. et al. A seamless deep learning approach for apple detection, depth estimation, and tracking using YOLO models enhanced by multi-head attention mechanism. Computers 13 (3), 83 (2024).

Ying, Z. et al. A modified-YOLOv5s model for detection of wire braided hose defects. Measurement 190, 110683 (2022).

Hussain, M. Yolov5, yolov8 and yolov10: the go-to detectors for real-time vision. arXiv Preprint. arXiv:2407.02988 (2024).

Wang, Y. et al. A big coal block alarm detection method for scraper conveyor based on YOLO-STOD. Sensors 22 (23), 9052 (2022).

Li, R., Wu, Y. Improved YOLO v5 wheat ear detection algorithm based on attention mechanism. Electronics 11 (11), 1673 (2022).

Chitraningrum, N. et al. Comparison study of corn leaf disease detection based on deep learning YOLO-v5 and YOLO-v8. J. Eng. Technol. Sci. 56 (1), 61–70 (2024).

Yu, Q. et al. Detection and analysis of corrosion on coated metal surfaces using enhanced YOLO v5 algorithm for anti-corrosion performance evaluation. J. Mar. Sci. Eng. 12 (7), 1090 (2024).

Liu, Y. An improved faster R-CNN for object detection. In 2018 11th International Symposium on Computational Intelligence and Design (ISCID), vol. 2, 119–123 (IEEE, 2018).

Sun, Y. et al. MVAID. Conveyor belt tear detection algorithm based on improved YOLOv5s. In Third International Conference on Machine Vision, Automatic Identification, and Detection, 2024, vol. 13230, 269–275 (SPIE, 2024).

Wang, B. et al. Damage object detection of steel wire rope-core conveyor belts based on the improved YOLOv5. Int. J. Image Graph. 2550057 (2024).

Wei, J. et al. Infrared pedestrian detection using improved UNet and YOLO through sharing visible light domain information. Measurement 221, 113442 (2023).

Zheng, Y. et al. YOLOv5s FMG: an improved small target detection algorithm based on YOLOv5 in low visibility. IEEE Access. (2023).

Wang, D. & He, D. Channel pruned YOLO V5s-based deep learning approach for rapid and accurate apple fruitlet detection before fruit thinning. Biosyst. Eng. 210, 271–281 (2021).

Guo, K. et al. A pavement distresses identification method optimized for YOLOv5s. Sci. Rep. 12 (1), 3542 (2022).

Li, L. et al. ET-YOLOv5s: toward deep identification of students’ in-class behaviors. IEEE Access. 10, 44200–44211 (2022).

Yang, X. et al. Lightweight and efficient deep learning models for fruit detection in orchards. Sci. Rep. 14 (1), 26086 (2024).

Saidani, T. Deep learning approach: YOLOv5-based custom object detection. Eng. Technol. Appl. Sci. Res. 13 (6), 12158–12163 (2023).

Jung, H. K. & Choi, G. S. Improved yolov5: efficient object detection using drone images under various conditions. Appl. Sci. 12 (14), 7255 (2022).

Ye, X. et al. Rapid and accurate crayfish sorting by size and maturity based on Improved YOLOv5. Appl. Sci. 13 (15), 8619 (2023).

Wang, Z. et al. A smoke detection model based on improved YOLOv5. Mathematics 10 (7), 1190 (2022).

Cao, J. et al. A novel YOLOv5-Based hybrid underwater target detection algorithm combining with CBAM and CIoU. In 2023 China Automation Congress (CAC). IEEE. 8060–8065 (2023).

Xu, L. et al. Defect signal intelligent recognition of weld radiographs based on YOLO V5-IMPROVEMENT. J. Manuf. Process. 99, 373–381 (2023).

Zhang, H. & Zhang, S. Shape-iou: more accurate metric considering bounding box shape and scale. arXiv Preprint. arXiv:2312.17663 (2023).

Acknowledgements

This work has been awarded the Key R&D Project of Xinjiang Uygur Autonomous Region [No. 2022B01037]; the Tianshan Talent Science and Technology Innovation Leading Talent Project of Xinjiang Uygur Autonomous Regiont[No. 2023TSYCLJ0051].

Author information

Authors and Affiliations

Contributions

W.L.: Conceptualization, methodology, software, validation, writing—original draft, visualization. T.Q.: Methodology, validation, formal analysis, writing—original draft, supervision. N.W.: Software, validation. W.X.: Writing—review and editing. C.P.: Visualization.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, W., Tao, Q., Wang, N. et al. YOLO-STOD: an industrial conveyor belt tear detection model based on Yolov5 algorithm. Sci Rep 15, 1659 (2025). https://doi.org/10.1038/s41598-024-83619-6

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-024-83619-6