Abstract

Due to complex environmental conditions and varying noise levels, traditional models are limited in their effectiveness for detecting anomalies in video sequences. Aiming at the challenges of accuracy, robustness, and real-time processing requirements in the field of image and video processing, this study proposes an anomaly detection and recognition algorithm for video image data based on deep learning. The algorithm combines the innovative methods of spatio-temporal feature extraction and noise suppression, and aims to improve the processing performance, especially in complex environments, by introducing an improved Variable Auto Encoder (VAE) structure. The model named Spatio-Temporal Anomaly Detection Network (STADNet) captures the spatio-temporal features of video images through multi-scale Three-Dimensional (3D) convolution module and spatio-temporal attention mechanism. This approach improves the accuracy of anomaly detection. Multi-stream network architecture and cross-attention fusion mechanism are also adopted to comprehensively consider different factors such as color, texture, and motion, and further improve the robustness and generalization ability of the model. The experimental results show that compared with the existing models, the new model has obvious advantages in performance stability and real-time processing under different noise levels. Specifically, the AUC value of the proposed model is 0.95 on UCSD Ped2 dataset, which is about 10% higher than other models, and the AUC value on Avenue dataset is 0.93, which is about 12% higher. This study not only proposes an effective image and video processing scheme but also demonstrates wide practical potential, providing a new perspective and methodological basis for future research and application in related fields.

Similar content being viewed by others

Introduction

Research background and motivations

With the advancement of technology and the rapid growth of digital information, video image data plays an increasingly important role in people’s daily lives and many industrial fields1,2,3. Especially in the fields of safety monitoring, self-driving cars, medical diagnosis, and industrial automation, the analysis and processing of video image data has become crucial4,5,6. These applications highlight an urgent need for the detection and identification of abnormal events in video image data7,8. For example, the security monitoring system needs to identify potential illegal activities or dangerous situations in real time. The autonomous driving system needs to accurately identify abnormal situations on the road surface to ensure driving safety9,10,11.

Despite the substantial demand, anomaly detection and recognition of video image data are facing many challenges12. First, the magnitude of video data is usually very large, and it is necessary to deal with high-dimensional data streams13,14,15. Second, the diversity of abnormal events and the complex changes in scenes increase the difficulty of detection16,17,18. Traditional rule-based methods and machine learning techniques are often limited in dealing with such problems because they are difficult to adapt to the high dimension and complexity of data19,20,21. In this context, deep learning technology shows the potential to solve the above problems due to its powerful feature learning and presentation ability22. In particular, deep learning can achieve more accurate anomaly detection and recognition by learning the deep features of video data23,24,25.

Aiming at the challenges of accuracy, robustness, and real-time processing in the field of image and video processing, it is urgent to propose innovative algorithms to solve these problems. The purpose of this study is to improve the processing performance, especially in complex conditions, by combining the new model of spatio-temporal feature extraction and noise suppression. The model framework proposed in this study is called Spatio-Temporal Anomaly Detection Network (STADNet). It aims to solve the challenges of accuracy, robustness, and real-time processing requirements in the current image and video processing field.

Research objectives and research contributions

This study aims to develop a video image data anomaly detection and recognition algorithm based on deep learning with the aim of improving the accuracy and efficiency of abnormal event detection. The specific research objectives include the designing and implementing an efficient deep learning model to detect and identify abnormal events from video image data. Compared with the existing technology, the performance of the proposed algorithm is comprehensively evaluated, including key indicators such as accuracy and detection speed. Finally, the application potential of the developed algorithm in different fields (such as safety monitoring, automatic driving, etc.) is discussed, and its effectiveness and feasibility in actual scenes are evaluated.

The main contributions of this study can be summarized as follows:

(1) Multi-scale 3D convolution and spatio-temporal attention mechanism: By introducing multi-scale 3D convolution module and spatio-temporal attention mechanism, STADNet can effectively capture the multi-dimensional spatio-temporal characteristics of video sequences and dynamically focus on key areas and important time periods in the video, thus significantly improving the recognition accuracy of abnormal events. (2) Multi-stream network architecture and cross-attention fusion mechanism: Double-stream network architecture is used to extract color and motion features, and multi-modal information is fused through cross-attention mechanism to enhance the correlation between different features and improve the robustness and generalization ability of the model. (3) Efficient anomaly location and detection: Through the probability scoring mechanism, STADNet can accurately locate the abnormal events in the video and make the final decision, which improves the accuracy of anomaly detection and real-time processing ability.

Literature review

Before the rise of deep learning, anomaly detection mainly relied on traditional machine learning methods26. For example, Li et al. (2020)27 used the method based on support vector machine (SVM) to identify abnormal behaviors in traffic videos. Although it has achieved certain results, this method usually needs to design features manually, and it has high computational complexity when dealing with large-scale video data, so it is difficult to adapt to complex and changeable scenes. Sönmez et al. (2019)28 proposed a method based on extreme learning machine for traffic flow analysis, and introduced the basic principle of this method and its potential application in the field of traffic flow analysis. Similarly, Giriprasad et al. (2018)29 used deep neural network classifier trained by support vector to detect anomalies in video surveillance, and evaluated its performance. Palmieri et al. (2013)30 used wavelet analysis and SVM to detect card sharing traffic, and discussed the practical application of this method in network traffic analysis.

With the improvement of computing power and the popularity of big data, deep learning technology has gradually become the focus of video anomaly detection research31. For example, Sabokorou et al. (2018)32 proposed a full convolutional neural network (CNN) called Deep-anomaly, which was used to quickly detect abnormal behaviors in crowded scenes. Shin et al. (2020)33 proposed a comprehensive method combining 3D CNN, Generative Adversarial Network (GAN) and automatic encoder to improve the ability of video surveillance system to detect abnormal events. Hu et al. (2022)34 designed a video anomaly detection method based on 3D convolutional automatic encoder, focusing on extracting features from video data, and verified its performance in anomaly detection.

In recent years, researchers began to pay attention to how to effectively fuse multimodal information in video data to improve the performance of anomaly detection. For example, Kumari and Anand (2024)35 used CNN to extract the spatial features of video frames and capture the time series information through RNN, thus significantly improving the accuracy of anomaly detection. In addition, automatic encoders are also widely used in anomaly detection tasks. By learning the normal mode of video data, it is considered abnormal to detect the input that deviates significantly from the reconstructed image36. For example, Wang & Yang (2022)37 proposed a convolutional recursive automatic encoder to capture temporal patterns and spatial irregularities respectively. The fusion of multimodal information is another research hotspot, which includes visual features, motion trajectories of audio signals and video frames38. For example, Wu and Li (2023)39 proposed a multimodal emotion recognition method combining facial expressions and EEG signals to improve the accuracy and robustness of emotion recognition. Huang et al. (2024)40 proposed a multi-modal fusion network in video salient object detection, which improved the performance through channel rearrangement. Another example is Min et al. (2016)41, who used a deep learning model that combines visual and auditory information to predict the gaze position of human eyes in complex environments. The model not only considered visual information, but also combined audio data to enhance the perception ability of the target area. Min et al. (2020)42 also proposed a new video saliency model to model the high correspondence between audio and visual signals in video. The results showed that multi-modal model had higher accuracy and robustness than single-modal model when dealing with audio and video content.

In practical application, some researches also focus on the optimization of anomaly detection in specific scenarios. For example, Shams et al. (2024)43 proposed a Self-Attention Neural Network (SANN) model based on self-attention layer, which was used to detect emergency vehicle alarms and road traffic noise in acoustic data. Taha et al. (2023)44 proposed a deep learning method combining mask detection and robust principal component analysis (RPCA), which improved the performance of face recognition in the scene of wearing masks. Ullah et al. (2021)45 proposed the LSTM network based on attention mechanism for action recognition in sports. This method dynamically weights key areas in video frames through attention mechanism, which greatly improves the performance of motion recognition. Khan et al. (2016)46 studied crowd behavior analysis in natural environment, especially identifying source and sink points and describing main flows. In this study, an analysis method based on behavior pattern was proposed, which was suitable for abnormal event detection in crowded environment. In the aspect of image quality evaluation, Min et al. (2018)47 designed an innovative distortion enhancement method, which artificially increased the distortion on the non-reference image to simulate a variety of common distortion types, and used these distortion features in the training model to estimate the image quality. Zhai & Min (2020)48 deeply discussed the methods and techniques of image quality perception assessment, and summarized the research progress of image quality assessment, especially the latest development of perceived image quality assessment.

Additionally, multi-modal fusion technology has gained wide attention in video anomaly detection. For example, Liu et al. (2023) proposed a saliency detection method based on scene understanding. By extracting high-level semantic information from aerial images49, they effectively improved target recognition in complex environments. This provides a reference for the design of the spatio-temporal attention mechanism in this study. Bai et al. (2024) achieved high-precision feature fusion in multi-modal fake news detection. They used a frequency-aware cross-modal interaction method. Their work further confirmed the effectiveness of cross-attention mechanisms in multi-modal data processing50. Moreover, Song et al. (2024) built a fine-grained multi-modal emotion analysis dataset. By combining video and text information, they improved emotion classification performance. Their multi-modal feature alignment strategy offered new insights for this study’s cross-modal fusion mechanism51. Pan et al. (2024) proposed a multi-modal fatigue detection framework for miners. By fusing visual and physiological signal features, they demonstrated the practical value of multi-modal technology in dynamic scenarios52. These studies show that combining multi-source information and optimizing feature fusion strategies is key to improving model performance in complex environments.

Generally, the existing research has made some progress in video image anomaly detection, but there are still the following main gaps:

(1) Limitations of multimodal fusion: The existing multimodal fusion methods mainly focus on the simple fusion of visual and audio signals, and fail to effectively consider the complex temporal and spatial characteristics of video data. Video data not only contains visual information (such as color and texture), but also has strong time series properties. Traditional methods often ignore the characteristics of time dimension, so it is impossible to fully explore the temporal and spatial correlation in video. (2) Challenges of high-dimensional complex data processing: Although Autoencoders and recurrent neural networks (RNNs) have made remarkable achievements in dealing with anomaly detection of time series data, they still have some limitations in the face of high-dimensional and complex video data structures. These models usually can’t effectively deal with multi-dimensional information and noise interference in video, which leads to performance degradation. (3) There are many shortcomings in traditional methods. For example, methods based on rules or simple models are often difficult to cope with different noise environments and complex background interference, resulting in unstable performance in practical applications. Many deep learning methods fail to fully capture the temporal and spatial characteristics of video data, especially the key changes in time series, which affects the accuracy of the model in dealing with video anomaly detection. The generalization ability of existing models is poor, especially when applied across datasets, the performance often drops significantly, and it lacks sufficient robustness to deal with anomaly detection tasks in different scenarios.

In view of these research gaps, this study proposes a spatio-temporal anomaly detection network (STADNet), which has made contributions in the following aspects and filled the shortcomings of the existing methods: On the one hand, by introducing a multi-scale 3D convolution module and a spatio-temporal attention mechanism, STADNet can fully capture the spatio-temporal features in videos and make up for the problem that traditional methods ignore spatio-temporal information. This design effectively improves the model’s ability to express temporal and spatial features in video data, and can improve the accuracy of anomaly detection in complex background and dynamic environment. By introducing the improved VAE structure, STADNet enhances the noise suppression function and improves the robustness of the model in high noise environment. Traditional deep learning models often have unstable performance when dealing with noise, while VAE can effectively suppress noise interference and ensure stable operation under different noise conditions.

In addition, STADNet adopts multi-stream network architecture to extract color, texture, and motion features from video images respectively, and fuse them through cross-attention mechanism. This design effectively enhances the correlation between different patterns, making the model more stable and accurate when dealing with multidimensional information. This cross-modal fusion method solves the problem that the existing model is not robust enough on a single feature or channel. In addition, STADNet specially optimizes the computational complexity in the architecture design, so that it can meet the requirements of real-time processing while maintaining high detection performance. This optimization makes up for the shortcomings of existing methods in real-time, and can meet the requirements of efficient processing in real-time video surveillance and other practical application scenarios. Through these innovative designs, STADNet has achieved remarkable performance improvement in dealing with video image data anomaly detection in complex environment, especially in accuracy, robustness and real-time, which fills many gaps in existing methods.

Research methodology

Deep learning model architecture

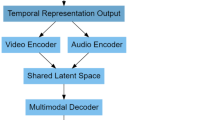

The framework of the proposed deep learning model is named Spatio-Temporal Anomaly Detection Network (STADNet). STADNet is composed of a series of carefully designed components, which aims to capture the temporal and spatial features in video images efficiently. The overall model architecture is shown in Fig. 1 below.

Figure 1 shows that STADNet first receives a series of continuous video frames as input, and carries out preliminary processing through a multi-scale 3D convolution module. The module is composed of 3D convolution layers stacked in multiple layers, which combines batch normalization layer and ReLU activation function to ensure the stability and nonlinear expression ability of feature extraction. In order to capture spatio-temporal information effectively, the convolution kernel size and pace of each layer of convolution can be customized according to specific tasks. The larger convolution kernel can capture the global context, while the smaller convolution kernel pays more attention to local details. This flexible design enables the model to obtain rich information at different spatial resolutions. Assume that the size of the input video frame is W×H×D (where W and H are space dimensions and D is time dimension), the mathematical expression of 3D convolution operation is:

X is the convolution characteristic map of the input. W is the convolution kernel, and Y is the output characteristic map. The size of convolution kernel W is \(\:{K}_{w}\)×\(\:{K}_{h}\)×\(\:{K}_{d}\), which respectively represents the size of convolution kernel in space and time. The introduction of multi-scale 3D convolution module is mainly to capture the multi-dimensional spatio-temporal features in video sequences. In the traditional 2D convolution, usually, only the spatial information of images can be processed, but the change of time dimension cannot be fully considered. However, 3D convolution can deal with both spatial and temporal dimensions, so that the model can better capture the motion and change of objects in the video, as well as local and global background information. Through multi-scale design, features can be extracted at different time scales and spatial scales, thus avoiding the details that may be ignored by traditional methods when dealing with complex scenes. Compared with other models, STADNet can obtain more comprehensive spatio-temporal features through multi-scale 3D convolution module, especially in the environment with large noise and interference, which greatly improves the performance of the model.

After obtaining the preliminary features, the model introduces the spatio-temporal attention mechanism, which highlights the important spatial areas and key time periods through dynamic weighting. This process enhances the model’s attention to specific features, thus improving the accuracy in anomaly detection. Specifically, the weight calculated by the attention mechanism is multiplied by the feature map at the element level, and the temporal and spatial features that have a significant impact on the detection results are emphasized, so that the model still has strong identification ability in complex environments. Assume that the input feature maps are Xspatial and Xtemporal. The attention weight is obtained by calculation, which is expressed as αspatial and αtemporal. The weighted feature map can be obtained:

αspatial and αtemporal are weights in spatial and temporal dimensions respectively, which are dynamically calculated according to the spatio-temporal characteristics of input data. Traditional anomaly detection methods often have the defect of ignoring the importance of spatio-temporal information, especially when dealing with complex spatio-temporal interaction. Spatio-temporal attention mechanism can adaptively pay attention to those moments and areas related to abnormal events and ignore irrelevant background noise or unimportant information by weighting features in time and space. This method is especially suitable for dynamic scenes and complex environments, which is usually not fully considered in many existing anomaly detection models. By introducing the spatio-temporal attention mechanism, STADNet can capture abnormal events in video more accurately while maintaining high efficiency and accuracy, and significantly improve the detection effect.

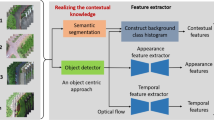

In order to integrate various modal information in video, STADNet also designs a multi-stream network architecture. Among them, the color stream is processed by the standard two-dimensional convolutional neural network (CNN) to extract the color and texture features in the video frame. On the other hand, motion flow calculates the motion information between adjacent frames, uses optical flow method to model, and uses specially designed convolution layer to extract motion features. After extracting their own features, the two streams merge advanced features through an efficient multi-modal fusion layer. This fusion strategy combines color, texture, and motion information, which significantly improves the robustness and generalization ability of the model.

In multi-modal information fusion, STADNet uses the cross-attention fusion mechanism to make the characteristics of color flow and motion flow interact and weigh at the channel level. This process involves transforming the high-level features of each stream into attention vectors, and then strengthening the correlation between different modes through element-level multiplication operation, thus highlighting important feature areas. This method makes the model not only capture abundant multimodal information, but also effectively integrate this information to enhance the final detection effect. Assume that there are two modal streams Xcolor and Xmotion, which are fused by attention weighting mechanism:

\(\:{X}_{i}\) represents the characteristics of each stream. \(\:{\alpha\:}_{i}\) is the corresponding attention weight.

Finally, after the processing of each module, the fused features are reduced and compressed through the fully connected layer, and finally a probability score generated by sigmoid activation function is output, which is used to represent the normality or abnormality of each video segment. By aggregating and sorting the probability scores of all segments in the whole video sequence, STADNet can accurately locate potential abnormal events and make a final judgment at the video level, thus providing an effective and high-performance solution for the anomaly detection task.

In order to further optimize the model and reduce the calculation requirements, a series of concrete and in-depth measures have been taken. Firstly, in the design of network architecture, the model is significantly simplified and optimized. The depth and width of the network are reduced, and the overall structure is lighter by reducing the number of layers and neurons. At the same time, a smaller convolution kernel (for example, 3 × 3 convolution kernel) is adopted to reduce the number of parameters and computation. This design can reduce the complexity of each layer of calculation, while maintaining effective information extraction ability. This optimization makes the model greatly reduce the consumption of computing resources without sacrificing performance.

Secondly, in the process of data preprocessing and enhancement, the strategy of parallel processing and batch processing is introduced. By dividing the data into multiple batches and using multi-thread parallel processing, the speed of data loading and preprocessing is significantly accelerated. In the aspect of data enhancement, intelligent enhancement methods, such as random cropping, rotation and brightness adjustment, are adopted. These methods effectively reduce the computational overhead on the premise of maintaining data diversity and information richness to avoid the computational burden caused by excessive enhancement.

Aiming at the optimization of specific hardware platform, the parallel computing ability of hardware accelerator is fully utilized. By adjusting model parameters, such as using lower precision and optimizing calculation strategy, the compiler of graph calculation framework TensorFlow is adopted here. The model can achieve higher computational efficiency in hardware environment. This adaptive adjustment ensures that the model can give full play to the performance of hardware and reduce the reasoning time.

In the process of training and reasoning, mini-batch training is selected, that is, the dataset is divided into smaller batches for training, which can not only reduce the occupied memory resources, but also accelerate the convergence of the model. In the reasoning stage, lightweight reasoning algorithms are adopted, such as pruning and quantization of convolution operations to reduce redundant calculation and memory occupation, significantly improve the reasoning speed in practical application scenarios and reduce the calculation requirements.

Through the above optimization measures, the computational complexity of the model is reduced, which makes it perform well in real-time application and limited computing resources environment. These measures not only improve the running efficiency and performance of the model, but also provide feasible technical support for practical application, ensuring the reliability and practicability of the model in various situations.

In STADNet model, the core purpose of loss function design is to ensure that the model can effectively detect abnormal behavior. Specifically, the Binary Cross-Entropy Loss function based on binary classification task is used to measure the difference between the abnormal probability predicted by the model and the real label. Firstly, after multi-scale 3D convolution, spatio-temporal attention mechanism and multi-modal feature fusion, the model will generate a probability score to indicate whether each video sequence is normal or abnormal. This probability output is limited to 0 to 1 by sigmoid activation function to get the anomaly score of video sequence. For each video clip, the prediction score is compared with its real label (0 stands for normal and 1 stand for abnormal), and the cross-entropy loss is calculated. The expression of the loss function is as follows:

N is the total number of video clips. \(\:{y}_{i}\) is the real tag of the ith video clip. \(\:{p}_{i}\) is the abnormal probability predicted by the model. In addition, in order to further enhance the stability of the model in complex environment, regularization term is also used to prevent the model from over-fitting. By L2 regularization (also called weight attenuation) of model parameters, the complexity of the model can be effectively controlled and its generalization ability can be ensured when dealing with different noise levels. In the training process, cross entropy loss and regularization term work together to drive the model to gradually optimize parameters, thus improving the accuracy and robustness of anomaly detection. The equation of regularization term is as follows:

λ is the regularization parameter and wi is the model parameter.

Spatio-temporal feature extraction

In STADNet framework, the extraction of spatio-temporal features is the core of model checking performance. The capture of temporal and spatial features is realized by two main parts: spatial feature extraction and time series feature extraction.

In the aspect of spatial feature extraction, the focus of the model is to obtain internal structure and texture information from a single frame image. This is done by CNN, and the core of CNN is to model the correlation between pixels in local area. Through the application of multi-layer convolution operation and pooling layer, CNN can abstract and capture advanced semantic features in images layer by layer. For example, the first convolution layer mainly identifies edges and basic shapes, while subsequent convolution layers can gradually identify more complex object parts, even the layout of the whole scene. STADNet adopts multi-scale 3D convolution module, which can not only capture these local features at multiple spatial resolutions, but also consider different levels of details and background information at the same time, which makes the model still maintain high detection accuracy in complex scenes. For spatial feature extraction, the mathematical expression of convolution operation is shown in Eq. 6:

\(\:X\) is the input feature map. \(\:W\) is the convolution kernel. \(\:Y\) is the output feature map. \(\:{K}_{w}\) and \(\:{K}_{h}\) are the size of the convolution kernel in the spatial dimension, and \(\:(x,y)\) is the spatial coordinate.

In the aspect of time series feature extraction, the design of STADNet aims to capture the continuity and consistency of actions in video over time. In order to capture these dynamic changes, the model combines the advantages of long and short-term memory network (LSTM) and three-dimensional convolutional neural network (3D CNN). LSTM understands and remembers the past input information through the transition of its internal state, thus predicting the action trend in future frames. On the other hand, by introducing convolution operation in time dimension based on two-dimensional convolution, 3D CNN makes the model not only capture spatial proximity, but also consider temporal continuity. In this way, the model can learn the spatio-temporal characteristics from the video sequence at the same time. STADNet’s multi-stream network architecture effectively deals with the complex dynamic changes in video through this spatio-temporal convolution, and can capture the occurrence of abnormal behaviors on different time and space scales. In the process of time series feature extraction, the mathematical expression of 3D convolution is shown in Eq. 7:

\(\:X\) is the feature map of the input video frame. \(\:W\) is the 3D convolution kernel. \(\:Y\) is the output feature map. \(\:{K}_{d}\) is the size of the convolution kernel in the time dimension. \(\:(t,x,y)\) is the 3D coordinates of time and space.

In addition, the spatio-temporal attention mechanism introduced by STADNet further enhances the effect of feature extraction. This mechanism can dynamically weight the important areas in the video frame in the space-time dimension, especially when abnormal events occur, which can highlight these key areas and filter out irrelevant background information. By combining spatio-temporal features with cross-modal fusion mechanism, STADNet can not only capture the local and global features in video, but also improve the robustness and generalization ability of detection through the synergy of multi-modal information. This series of spatio-temporal feature extraction designs enable STADNet to maintain efficient anomaly detection performance when processing video data in a complex environment, and show significant advantages in experimental results. The mathematical expression of spatio-temporal attention mechanism is shown in Eq. 8:

\(\:{X}_{spatial}\) and \(\:{X}_{temporal}\) represent features in spatial and temporal dimensions respectively. \(\:{\alpha\:}_{spatial}\) and \(\:{\alpha\:}_{temporal}\) are dynamically calculated spatial and temporal attention weights. Through the above-mentioned spatio-temporal feature extraction design, STADNet can keep efficient anomaly detection performance when processing video data in a complex environment, and it shows significant advantages in the experimental results.

Variational autoencoder for anomaly detection of video data

The Variational Autoencoder (VAE) is a powerful unsupervised learning model, which can not only learn the low-dimensional potential representation of data, but also be especially suitable for anomaly detection tasks. VAE model consists of encoder and decoder. In VAE, given the input data x, the encoder maps it to the potential space (hidden variable) z, and its distribution is usually assumed to be Gaussian distribution. The mathematical equation of this process is as follows:

µ(x) and \(\:{\sigma\:}^{2}\left(\text{x}\right)\) are the potential spatial mean and variance of input data x, respectively, and the encoder network learns these two parameters through neural network. Then, the encoder will realize the sampling from the potential space through the reparameterization technique:

ϵ∼\(\:\mathcal{N}\)(0,I) is noise with standard normal distribution. The decoder decodes the variable z in the potential space back to the input space to generate reconstructed input data \(\:\stackrel{\sim}{\text{x}}\). The mathematical expression of the decoding process is:

f(z) is the reconstructed data generated by the decoder network.

Under the framework of a VAE, the definition of anomaly degree is mainly based on the concept of reconstruction error. When the input data is encoded into variables in the latent space by the VAE model, the model will try to reconstruct the original input from this low-dimensional hidden space. For normal samples, because the VAE mainly encounters and learns the normal data distribution in the training stage, the model can reconstruct these samples well. On the contrary, for abnormal samples, because they deviate from the normal distribution of training data, there will be great errors in the reconstruction by VAE.

In the anomaly detection of video image data, the application of VAE is particularly potential, because video image data usually has high dimensions and complex structural characteristics. Figure 2 is the specific steps used by VAE for anomaly detection when processing such data:

Figure 2 shows the application process of the VAE in video data anomaly detection. The entire process can be divided into two main parts: the encoder and the decoder, with their internal structures detailed in the figure. In the encoder part, the input video frames first pass through a mean layer and a variance layer to generate a low-dimensional latent space representation. The mean layer is responsible for generating the center of the latent space, while the variance layer generates the distribution range of the latent space. Together, these two parts enable the model to learn the hidden features of the input data. In the decoder part, the reconstruction layer of the decoder takes the low-dimensional latent space representation and restores it to high-dimensional video frames through a series of transposed convolutional layers (or other similar up sampling techniques). The output of each layer is gradually reconstructed until a complete video frame is generated. The reconstruction error is calculated by comparing the input video frame with the reconstructed video frame. A larger reconstruction error indicates a greater deviation of the input video frame from the normal patterns learned by the model, resulting in a higher anomaly score. The anomaly score is used to identify abnormal events or behaviors in the video data.

Moreover, in addition to the reconstruction error, the distribution in the potential space can be directly analyzed. The potential vectors corresponding to abnormal samples may fall outside the trained probability distribution or at the edge of the distribution, which can be quantified by calculating statistics such as the Kullback-Leibler Divergence (KL). In conclusion, the application of VAE in video image anomaly detection cleverly combines the representational learning ability of deep learning and the statistical principle of probabilistic modeling, enabling automatic anomaly detection of complex video data. This approach is beneficial for problem discovery and early warning in real-time monitoring systems, industrial defect detection, security monitoring, and other fields. The pseudo code of STADNet model is shown in Fig. 3.

The workflow of STADNet model is to preprocess the input video sequence, including normalizing the size and pixel value of the video frame and applying data enhancement technology to improve the adaptability of the model to different environmental conditions. Then, the spatio-temporal features of video frames are extracted by multi-scale 3D convolution module, and the key areas and time periods in the video are highlighted by spatio-temporal attention mechanism. In the multi-stream network architecture, the model handles color stream and motion stream respectively. The former extracts color and texture features through standard 2D CNN, while the latter calculates and extracts motion information through optical flow method and special convolution layer. The cross-attention fusion mechanism fuses the characteristics of these two streams to form a unified temporal and spatial representation. The fused features are reduced and compressed through the fully connected layer, and then the probability score of each video segment is generated through the activation function to represent the normal or abnormal state of the video content. Finally, the segment scores of the whole video sequence are aggregated and sorted to accurately locate potential abnormal events. The whole process involves complex data processing and deep learning technology, aiming at improving the accuracy of anomaly detection and the ability of real-time processing.

Model training and optimization

In this study, to accurately measure the model performance and guide the model learning, a compound loss function is designed, which includes the following parts:

1) Reconstruction Loss: In this study, the MSE is used as the measure of reconstruction loss, because MSE can effectively measure the pixel-level difference between the input image and the reconstructed image in most cases, which provides a clear optimization direction for the model.

2) Anomalous loss: To enhance the sensitivity of the model to abnormal data, the abnormal loss is introduced, which is based on the difference between the reconstruction error of normal data and the reconstruction error of abnormal data. Specifically, the model is encouraged to produce higher reconstruction error when reconstructing abnormal data to distinguish normal data from abnormal data.

3) Regularization Term: To prevent the model from over-fitting, L2 regularization term is added to the loss function. It restricts the weight of the model and promotes the model to learn a smoother and more generalized feature representation.

Considering the diversity and complexity of video data, the input data is carefully preprocessed and enhanced to improve the generalization ability and robustness of the model. The specific process is shown in Fig. 4:

In Fig. 4, in the preprocessing step, image size normalization and pixel value normalization are included. Additionally, data enhancement techniques, such as random cropping, rotation, flipping, and adjusting brightness and contrast, are adopted. These techniques can effectively simulate the video acquisition conditions in different environments and provide richer data background for model training. During the process of model training, the Adam optimizer is selected to optimize the parameters.

Experimental design and performance evaluation

Datasets collection

To assess the effectiveness of the proposed algorithm in different scenes, two enhanced public video datasets are selected: the UCSD Ped2 dataset (dataset A), which mainly contains outdoor scenes of pedestrians walking, and abnormal events include the appearance of non-pedestrian entities such as cyclists and scooters. The dataset adds reactive synthesis elements through computer graphics and visual computing technology. Specifically, the enhancement content includes responding to real pedestrians and performing abnormal actions, such as falling, jumping and walking on the grass. It includes dogs, cats, horses, etc. that respond to real pedestrians. It is randomly assigned to real pedestrians, and dropped after a random time as an abnormal event. The synthetic model achieves a realistic occlusion effect through real pedestrians and foreground parts. The test data is attached with a frame-level label to indicate abnormal or normal data in the form of. npy file. Dataset address: https://www.kaggle.com/datasets/ivannikolov/reactive-anomaly-synthetic-data. The Avenue dataset (dataset B) provides a more complex urban video environment, with abnormal situations involving unusual behaviors such as running and jumping. These two datasets are widely used in the field of video anomaly detection, providing a standardized benchmark for comparing different methods. In order to facilitate data manipulation, the data originally downloaded in PDF format is converted into CSV format. Dataset address: https://www.kaggle.com/datasets/alfredmalaza/top-10-most-common-traffic-violations-along-edsa.

In order to further verify the performance of the model in various complex environments, two new datasets are introduced: ShanghaiTech dataset (Dataset C) and UCF-Crime dataset (Database D). The dataset of Shanghai University of Science and Technology contains videos collected on campus and urban roads, and abnormal events include non-pedestrian behaviors such as cycling and skateboarding. The UCF-Crime dataset covers more abnormal behaviors, such as fighting, theft and arson, and provides video data in a more complex urban environment.

The dataset features and scale are shown in Table 1.

In the data preprocessing stage, the duplicate items and incomplete records in the dataset are removed to ensure the accuracy and integrity of the data. The data in PDF format is converted into CSV format for further data analysis and processing. The video frames are labeled, including the classification of abnormal behaviors and normal behaviors to provide the supervision information needed for model training. In the aspect of data enhancement, video frames are randomly cropped to simulate the video acquisition conditions from different perspectives. The video frames are randomly rotated and flipped to increase data diversity. The brightness and contrast of video frames are randomly adjusted to simulate video data in different lighting environments.

Experimental environment

The following Table 2 lists the hardware and software configurations used in this research experiment in detail:

Parameters setting

To consider the diversity and complexity of video data, the input data is carefully preprocessed and enhanced to improve the generalization ability and robustness of the model. Table 3 shows all parameters and value ranges of experimental analysis:

The parameter range in Table 3 provides flexibility, allowing the model to be hyperparametric tuned in the training process to find the optimal configuration and improve the performance and generalization ability of the model. In the experiment of this study, the key parameters of the deep learning model are set as shown in Table 4 below:

Performance evaluation

To verify the performance of the proposed video image data anomaly detection and recognition method, the performances of AlexNet, CNN + LSTM, 3D CNN and the proposed model are compared in accuracy, precision, recall, F1 score and AUC value. The specific calculation method of indicators is as follows:

In the above equation, TP represents the number of samples correctly predicted by the model as positive class. TN represents the number of samples correctly predicted by the model as negative. FP indicates the number of samples that the model incorrectly predicts as positive, that is, the samples that actually belong to negative are predicted as positive. FN indicates the number of samples that the model incorrectly predicts as negative class, that is, the samples that belong to positive class are predicted as negative class.

Frames Per Second (FPS) is a commonly used index to measure the speed of video playback or processing, especially in the field of real-time video processing and video games. It represents the number of video frames that can be processed and displayed per second. The higher the FPS, the better the fluency of video playback or processing. Equation 5 shows the calculating FPS:

In Eq. 16, Frames is the total number of video frames processed during the measurement period. Seconds is the total time taken to process these frames, usually in seconds. In practical application, when calculating FPS, the time point at the beginning of recording video processing is \(\:{T}_{1}\), and after processing a certain number of video frames, the time point at the end of recording is \(\:{T}_{2}\). The time difference \(\:\varDelta\:T\) between \(\:{T}_{2}\) and \(\:{T}_{1}\) is calculated, and the total time for processing these frames is gotten. In the same time period, the total number of processed video frames \(\:N\) is calculated.

In order to visually compare the performance of different models, the performance results of different datasets in this model are shown in Table 5. Besides comparing with some classic deep learning models, it is also compared with the latest anomaly detection models mentioned in Baradaran & Bergevin (2024)53 and Qiu et al. (2024)54.

Table 5 shows that STADNet model performs significantly better than other models on different data sets. Firstly, on the UCSD Ped2 dataset, STADNet performs well in some important indexes, such as AUC value, F1 score, accuracy and recall rate, especially its AUC value reaches 0.95, which is much higher than that of AlexNet (0.85), CNN + LSTM (0.89) and 3D CNN (0.91). This proves STADNet’s powerful ability in distinguishing normal and abnormal events. In addition, the accuracy of STADNet is 0.93, and the recall rate is 0.91, which shows that STADNET is excellent in correctly identifying abnormal events, and it can still maintain high accuracy based on high recall rate, reflecting its comprehensiveness and accuracy in video processing. In terms of real-time processing frame rate, STADNet is ahead of other models with a frame rate of 28fps, which can meet the needs of real-time video surveillance. Although the performance of the model is close to that of Baradaran & Bergevin (2024) and Qiu et al. (2024), the model has some advantages in real-time processing frame rate (28fps), which is very important for practical application.

On the Avenue dataset, STADNet still maintains strong performance, and its AUC value is 0.94, which surpasses AlexNet (0.86), CNN + LSTM (0.88) and 3D CNN (0.90). It is also superior to other methods in accuracy, recall and F1 score, showing strong robustness and accuracy, especially in the face of complex background and diversified abnormal behaviors. The frame rate of real-time processing is 26fps, which is also significantly higher than other models, showing that STADNet can adapt to the dynamic and complex environment while maintaining efficient processing. Although Qiu et al. (2024) model is similar to in AUC value (0.90) and real-time processing frame rate (23fps), the new method still has some leading advantages.

On the ShanghaiTech dataset, the AUC value of STADNet is 0.93, which is superior to other models and shows strong adaptability, especially in the complex urban background. Despite the complicated background, STADNet can still maintain a high accuracy (0.91) and recall (0.89), and its F1 score is 0.89, which shows that it can balance the accuracy and recall requirements well. STADNet achieves a real-time processing frame rate of 28fps, meeting practical application requirements. Although the performance of the model is close to that of Baradaran & Bergevin (2024) and Qiu et al. (2024), the model still has advantages in real-time processing frame rate (28fps).

On the UCF-Crime dataset, STADNet also performs well, and its AUC value is 0.91, which is ahead of other models, which proves its efficiency in dealing with complex abnormal behaviors (such as fighting, theft, arson, etc.). The accuracy rate is 0.89 and the recall rate is 0.85, which shows its good ability to identify abnormal behaviors, especially in the mixed environment of various abnormal events. The frame rate of real-time processing is 26fps, which still maintains high real-time performance, ensuring that the model can run effectively in dynamic monitoring. Although it is similar to Qiu et al. (2024), the real-time processing frame rate (26fps) and comprehensive indicators of the model make it more advantageous in practical application.

On the whole, STADNet has demonstrated obvious performance advantages on all datasets, especially on key indicators such as accuracy, recall, AUC value and F1 score, and its performance far exceeds that of the comparative models such as AlexNet, CNN + LSTM and 3D CNN. More importantly, STADNet also performs well in real-time processing frame rate, which can meet the requirements of real-time applications, which makes its application prospects in complex environments very broad. STADNet’s high efficiency, robustness and good versatility make it have high application value in anomaly detection tasks in different scenarios.

Figure 5 shows that the performance of STADNet is always better than other models under different noise levels, showing its excellent robustness in noise environment. Under the condition of low noise, the F1 score of STADNet is generally higher than other models, especially on the UCSD Ped2 dataset, the F1 score of STADNet is 0.92, which is much higher than that of AlexNet (0.78), CNN + LSTM (0.83) and 3D CNN (0.86). With the increase of noise level, although the F1 scores of all models decrease, STADNet still maintains a high detection performance, especially in high noise environment, the F1 score of STADNet is 0.85, which is still ahead of other models (Alex Net: 0.70, CNN + LSTM: 0.75, 3D CNN: 0.78). This stability is not only reflected in the UCSD Ped2 dataset, but also in the Avenue and ShanghaiTech datasets. Even in the case of increasing noise, the performance of STADNet remains superior to other models, which proves its strong noise robustness and adaptability to complex environments. Especially under the condition of high noise, STADNet can effectively resist the interference of noise and ensure the high accuracy of anomaly detection.

In addition, on UCF-Crime dataset, STADNet also shows its robustness, and the F1 score under low noise is 0.87, which is significantly higher than other models. With the increase of noise, STADNet’s F1 score is still ahead, especially in high noise environment, STADNet’s F1 score is 0.80, which is better than other models (Alex Net: 0.63, CNN + LSTM: 0.69, 3D CNN: 0.72). These results show that STADNet can not only provide high-precision anomaly detection in low-noise environment, but also maintain good detection effect in noisy complex environment, thus verifying the strong adaptability and generalization ability of the model under different noise conditions. To sum up, STADNet performs better than other existing models in different noise levels, especially in complex environments. STADNet can always maintain a high F1 score in both low noise and high noise conditions, which proves its effectiveness in video anomaly detection and shows the obvious advantages of this model in dealing with noise interference.

Figure 6 focuses on real-time processing performance, with frame rate and average delay as the primary indicators. The proposed model demonstrates the highest processing frame rate and the lowest average delay under low, medium, and high loads. It indicates that it not only performs well with regards to accuracy, but also excels in real-time processing. AlexNet has the lowest frame rate under high load, likely due to its relatively simple structure, which requires more resources when dealing with complex tasks, leading to the decrease in processing speed. The real-time processing capabilities of CNN + LSTM and 3D CNN lie between AlexNet and the proposed model. It could be attributed to their complex structure and computational requirements, but are still adequate for specific application scenarios.

The time consumption of each model on different datasets is evaluated, including training time and reasoning time. The evaluation results of time consumption are shown in Table 6. Table 6 shows that the proposed model has good performance in training time and reasoning time, especially in reasoning time, which has obvious advantages compared with other models. This shows that the proposed model not only improves the accuracy, but also shows superiority in real-time processing ability.

Figures 7 and 8 show the anomaly detection heat maps of different models on UCSD Ped2 dataset and Avenue dataset, respectively.

Figure 7 shows the anomaly detection heat map on the UCSD Ped2 dataset. First, from the average calorific value of the normal area, the average calorific value of AlexNet is 0.15, which is relatively high, indicating that its sensitivity to the normal area is low. In contrast, the average calorific value of the Proposed Model in the normal region is only 0.08, which is significantly lower than other models, showing that it has the highest sensitivity to the normal region and can distinguish the normal region more accurately. Secondly, from the average calorific value of abnormal area, the average calorific value of abnormal area of AlexNet is 0.70, which is relatively low, indicating that its ability to detect abnormal areas is weak. However, the average calorific value of the Proposed Model is 0.85, which is significantly higher than other models, showing that it has the strongest ability to detect abnormal areas and can identify abnormal areas more effectively. Finally, from the perspective of the recognition accuracy of abnormal areas, the recognition accuracy of AlexNet is 85%, and the performance is average. The recognition accuracy of the Proposed Model is as high as 97%, which is significantly better than other models, indicating that it has the best overall performance in anomaly detection tasks. To sum up, the Proposed Model shows the highest anomaly detection ability on the UCSD Ped2 dataset, which is obviously superior to other models in both sensitivity to normal areas and detection ability to abnormal areas. However, the traditional AlexNet model is weak in anomaly detection tasks, and there is much room for improvement.

In Fig. 8, on the Avenue dataset, the average calorific value of the normal area of AlexNet is 0.13, which is relatively high, indicating that its sensitivity to the normal area is low. In contrast, the average calorific value of the Proposed Model in the normal region is only 0.07, which is significantly lower than other models, showing that it has the highest sensitivity to the normal region and can distinguish the normal region more accurately. Secondly, from the average calorific value of abnormal area, the average calorific value of abnormal area of AlexNet is 0.68, which is relatively low, indicating that its ability to detect abnormal areas is weak. However, the average calorific value of the Proposed Model is 0.83, which is significantly higher than other models, showing that it has the strongest ability to detect abnormal areas and can identify abnormal areas more effectively. Finally, from the perspective of the recognition accuracy of abnormal areas, the recognition accuracy of AlexNet is 82%, and its performance is average. The recognition accuracy of the Proposed Model is as high as 96%, which is significantly better than other models, indicating that it has the best overall performance in anomaly detection tasks. To sum up, the Proposed Model also shows the highest anomaly detection ability on the Avenue dataset, which is obviously superior to other models in both the sensitivity to normal areas and the detection ability to abnormal areas. However, the traditional AlexNet model is weak in anomaly detection tasks, and there is much room for improvement.

Figures 9 and 10 show the loss curves of different models in the training process to help better understand the convergence and performance of the model (the first 10 iterations).

In Fig. 9, from the training loss point of view, the initial training loss of AlexNet is 2.30, and after 10 rounds of training, it drops to 1.34, which shows that its convergence speed is slow. In contrast, the initial training loss of the Proposed Model is 2.00, and it drops to 1.05 after 10 rounds of training, which shows that the proposed model has the fastest convergence speed in the training process. Secondly, from the perspective of verification loss, the initial verification loss of AlexNet is 2.25, and after 10 rounds of training, it drops to 1.33, which shows that its performance on verification set is slow. In contrast, the initial verification loss of the Proposed Model is 1.95, and after 10 rounds of training, it drops to 1.00, which shows that the performance of the proposed model is the fastest. To sum up, the training loss and verification loss of the Proposed Model on the UCSD Ped2 dataset decrease the fastest, showing its efficiency and stability in the training process. However, the AlexNet model converges slowly in the training process, which shows that its adaptability on complex datasets is poor.

Figure 10 shows a similar situation. From the training loss point of view, the initial training loss of AlexNet is 2.25, and after 10 rounds of training, it drops to 1.30, which shows that its convergence speed is slow. In contrast, the initial training loss of the Proposed Model is 1.95, and after 10 rounds of training, it drops to 1.00, which shows that the proposed model has the fastest convergence speed. Secondly, from the perspective of verification loss, the initial verification loss of AlexNet is 2.20, and after 10 rounds of training, it drops to 1.25, which shows that its performance on verification set is slow. In contrast, the initial verification loss of the Proposed Model is 1.90, and after 10 rounds of training, it drops to 0.95, which shows that the performance of the proposed model is the fastest. To sum up, the training loss and verification loss of the Proposed Model on the Avenue dataset decrease the fastest, which shows its efficiency and stability in the training process. However, the AlexNet model converges slowly in the training process, which shows that its adaptability on complex datasets is poor.

In order to further improve the robustness of the model and ensure that it can effectively deal with malicious input in security applications, adversarial attack experiments are added to the existing research. Antagonistic attack refers to the intentional input disturbance to the deep learning model, aiming at misleading the output results of the model. This kind of attack may seriously affect the performance and security of the model, especially in security-sensitive application scenarios. The following two antagonistic attack techniques are used in the experiment: Fast Gradient Sign Method (FGSM) generates antagonistic samples by adding disturbances based on the input gradient direction to the original input. Projected Gradient Descent (PGD) is more aggressive than FGSM by adding small disturbances iteratively in multiple steps. Firstly, FGSM and PGD attacks are carried out on the original video data to generate adversarial samples. The generated Adversarial samples are used to evaluate the proposed model STADNet, and to compare the robustness of AlexNet, CNN + LSTM and 3D CNN. The results of antagonism attack experiment and model robustness evaluation are shown in Table 7:

In Table 7, the proposed model STADNet performs best in the case of no attack, and still maintains a high accuracy and F1 score in the face of FGSM and PGD confrontational attacks, which is more robust than other models. This shows that STADNet not only performs well under normal conditions, but also can effectively resist attacks to a certain extent in the face of malicious input, so it has high application potential.

In the design of STADNet, the computational complexity and time consumption of the model are also considered to ensure the high detection accuracy and meet the requirements of real-time processing. For the same video sequence, the complexity of different models is measured by the following indicators, and the comparison results are shown in Table 8:

On the two indexes of Table 8 shows Parameter quantity (M) and FLOPs, STADNet still maintains a high parameter quantity and computational complexity. Compared with other models, it performs better in accuracy and performance, but it needs more computational resources. Nevertheless, its processing speed (35ms/frame) is better than that of LSTM and other models. The AUC values of UCSD Ped2 and Avenue datasets show that STADNet performs well in these two datasets, which is ahead of other models. The AUC value of ShanghaiTech dataset is 0.93, which shows that STADNet still has good detection performance in complex and dynamic urban environment. The AUC value of STADNet on UCF-Crime dataset is 0.91, which is significantly improved compared with 0.79–0.83 of other models, which proves its ability to detect various types of abnormal behaviors (such as fighting, theft, arson, etc.). By comparing the performance of different models on various datasets, it shows that STADNet is the best in both accuracy (AUC value) and processing speed (average processing time), especially in the detection of complex environments and different types of anomalies. Although the computational complexity of STADNet is high, its efficient real-time processing ability and excellent performance make it suitable for many practical application scenarios.

Although STADNet performs well in most test scenarios, it is observed that the anomaly detection effect in some complex environments is not ideal. Figure 11; Table 9 show several unsuccessful cases:

In these cases, false negatives (the model failed to detect real abnormal events) and false positives (the model wrongly detected normal events as abnormal events) are the main problems. In Case 1, the model fails to capture the abnormal behavior in the video due to the drastic change of illumination, which reflects the lack of robustness of the model when the illumination conditions changed. In Case 2, the appearance of angle similarity makes the model unable to effectively identify potential abnormal behaviors, which shows the limitations in high-density crowd scenes. Case 3 shows the interference of complex background, which leads the model to misjudge normal activity as abnormal behavior, which shows the influence of background noise on detection performance.

To more comprehensively demonstrate the performance differences of different datasets and methods in anomaly detection tasks, three cases are provided: Case D, Case E, and Case F. These correspond to the crowd conflict scenes in the UCF-Crime dataset, extreme lighting variation scenes in the ShanghaiTech dataset, and dynamic background interference scenes in the Avenue dataset, as shown in Fig. 12. Each case includes four sets of comparison results: detection heatmaps from STADNet, AlexNet, CNN + LSTM, and 3D CNN. The true anomaly regions (GT) and model prediction regions (Pred) are marked with red bounding boxes. For example, in Case D (crowd conflict), STADNet’s heatmap shows a response value of 0.85 in the anomaly regions (such as areas with intense physical movements), which is significantly higher than AlexNet (0.62) and 3D CNN (0.78). However, there are still some local misses (such as actions of people on the edge). Analysis indicates that high-density occlusion prevents the model from fully capturing local spatiotemporal features.

Discussion

The proposed model shows its excellent performance in multiple datasets and different conditions, especially in terms of accuracy, robustness and real-time processing ability when dealing with complex image and video data. Compared with the ResNet structure proposed by Wickramasinghe et al. (2021)55, although ResNet performs well in deep network training, its performance improvement is limited when dealing with high-noise data. The model proposed in this study can maintain a high-performance level even in high noise environment through more effective noise processing mechanism. In terms of real-time, compared with DenseNet of Zhang et al. (2021)56, although DenseNet has some advantages in parameter efficiency, its frame rate drops obviously when dealing with high-load tasks. By optimizing the calculation process and structure, the model can maintain high processing speed and low delay even under high load conditions, and meet the needs of real-time processing. Compared with AlexNet of Khan et al. (2022)57 and Guo et al. (2020)58, the proposed model not only performs well on benchmark datasets, but also shows better generalization ability on different noise levels and multiple datasets. This is due to the model’s deep understanding of features and effective information fusion strategy. The visible model comprehensively considers accuracy, robustness and real-time, which not only improves the accuracy of image processing, but also ensures the reliability and effectiveness in complex environments and real-time application scenarios. However, there are still some false detections. To improve these problems, the following measures are planned: The first is increasing lighting variations and occlusion samples to enhance the model’s adaptability to different environmental conditions. Meanwhile, more context information and more advanced feature extraction modules are introduced to enhance the model’s understanding of complex scenes. Combined with the output results of other models, the accuracy of overall detection is improved by ensemble learning. Through the in-depth analysis of these failure cases, it is expected to further optimize STADNet to improve its performance in various complex environments.

Generally speaking, this study fills the gaps in the existing video image anomaly detection methods in terms of spatio-temporal feature extraction, noise suppression, multi-modal information fusion and real-time processing, especially in the performance of anomaly detection in complex environment and high noise conditions. Compared with traditional methods, STADNet can capture multi-dimensional spatio-temporal information in video data more effectively, and improve the accuracy, robustness and real-time performance of detection through innovative model design. At the same time, the design of STADNet model fully considers its adaptability and expansibility on different datasets. By adopting multi-stream network architecture and cross-attention fusion mechanism, the model can extract the color, texture, and motion features of video frames respectively, and improve the robustness and generalization ability of the model through multi-modal fusion.

Because the model shows excellent robustness in complex environment, it can effectively deal with practical problems such as high noise and dynamic background interference. Therefore, in addition to experimental conditions, STADNet model also has a wide range of application potential in practical applications, especially in the fields of safety monitoring, intelligent transportation, industrial defect detection and so on. For example, in the intelligent traffic monitoring system, STADNet can detect abnormal events in real time, such as traffic accidents or abnormal behaviors. In industrial production, the model can help to detect equipment failure or abnormal production line; In video surveillance, it can be used to find abnormal behaviors or security threats, and improve public security prevention and control capabilities. In addition, STADNet can also be widely used in automatic quality control, medical video analysis and other fields, providing more accurate and efficient anomaly detection solutions for related industries. Therefore, STADNet is not only innovative in academic research, but also has a wide range of application values in many practical scenarios, which can provide theoretical support and practical guidance for technological progress in related fields.

However, the model still has some potential limitations in different anomaly detection scenarios. For example, although STADNet performs very well on a variety of datasets, its generalization ability in different fields or new scenarios is still uncertain. Different types of anomalies, video scenes and environmental factors may affect the performance of the model. For example, the model may face challenges when dealing with unconventional scenes (such as video data in outdoor and extreme weather conditions), especially in these new scenes, the labeled data is scarce, which makes the effect of training the model difficult to predict. Secondly, with the extensive deployment of deep learning model in practical applications, STADNet, as an anomaly detection model based on deep learning, may face the problem of adversarial attacks. Antagonistic attack refers to making the model make a wrong prediction or misjudgment through tiny and carefully designed disturbances. In the task of video anomaly detection, this may mean that malicious disruptor can avoid detection by forging normal behavior or video data. Therefore, how to improve the robustness of the model and reduce the impact of confrontational attacks has become an important challenge in the application of deep learning models. In the future, it is planned to add more confrontation training mechanisms or introduce defense strategies in the model design to enhance the security and reliability of the model.

In addition, in practical applications, the deployment of STADNet may involve issues of user privacy and data security, especially when dealing with video data involving sensitive information. Although the model is mainly used for video anomaly detection, in some cases, the anomaly detection results may reveal data related to sensitive information such as personal behavior and location. Therefore, when deploying such models, people must abide by relevant laws and regulations, protect users’ privacy, and ensure the security of data processing. In a word, although STADNet performs well on multiple data sets, in actual deployment, people still need to pay attention to its generalization ability in different application scenarios, the prevention of antagonistic attacks, and data security and ethical issues.

Conclusion

Research contribution

This study proposes a STADNet based on deep learning. Through the innovative combination of multi-scale 3D convolution module, spatio-temporal attention mechanism, multi-stream network architecture and VAE, the anomaly detection performance of video image data is effectively improved. The experimental results show that STADNet is significantly superior to the existing models in accuracy and robustness on UCSD Ped2 and Avenue datasets, with AUC values reaching 0.95 and 0.93, respectively, which are about 10% and 12% higher than other models. In addition, STADNet shows higher stability and stronger real-time processing ability under different noise levels. At high noise level, the F1 score of STADNet has the smallest decline, which shows its advantages in noise processing and feature extraction. In terms of real-time processing performance, STADNet shows the highest processing frame rate and the lowest average delay under low, medium and high loads, which shows that it not only has excellent accuracy, but also has excellent real-time processing ability. This study not only puts forward an effective image and video processing scheme in theory, but also shows a wide range of potential in practical application, providing a new perspective and methodological basis for future research and application in related fields.

In this study, a novel method of anomaly detection and recognition of video image data is proposed. The model significantly improves the image and video processing ability under multiple indicators and conditions, especially in accuracy, robustness and real-time processing. The model shows superior performance on multiple datasets and noise levels, including accuracy, precision, recall, F1 score and AUC value, demonstrating its robustness and efficiency. By integrating spatio-temporal-feature-extraction and noise suppression mechanism, the model effectively improves the processing quality and speed, and provides a novel approach for solving complex problems in practical application.

Future works and research limitations

Limitations and discussion

In this study, although a new deep learning model is proposed for anomaly detection and recognition of video image data, there are still some limitations and room for improvement. In this section, these limitations will be discussed and the future research direction will be put forward.

(1) Model performance and complex scenes.

Although the model shows good performance in dealing with complex scenes, there are still some challenges in some extreme conditions. For example, in a dense and crowded environment, due to mutual occlusion and dynamic changes between targets, the model may have a certain degree of false detection or missed detection. Therefore, future research can explore more complex model structures or introduce more advanced technologies to further improve the adaptability of the model in complex scenes.

(2) Diversity and generalization performance of datasets.

In this study, two enhanced public video datasets are selected for experimental verification, but these datasets still cannot cover all possible scenes and abnormal situations. Therefore, there are still some limitations in evaluating the generalization performance of the model. Future research can consider collecting more diverse and real datasets and evaluating the generalization ability of the model through cross-data set verification.

(3) Computing resources and efficiency.

Although the model has achieved good performance in the experiment, its training and reasoning process requires a lot of computing resources, especially when dealing with large-scale video data. This limits the expansibility and universality of the model in practical application. Therefore, future research can explore more efficient algorithms or optimization strategies to reduce the computational complexity of the model and improve its practicability in resource-constrained environments.

Although this study has achieved remarkable results, there are still some future work directions and research limitations. While discussing the performance of the model, the proposed model has some limitations. Firstly, although the model performs well in dealing with general image and video data, it may encounter challenges in dealing with specific types of abnormal situations. For example, the performance of the model may be affected when the lighting conditions change extremely or there are a lot of obstructions, which needs further improvement and optimization. Secondly, people should also be aware of the possible bias in the process of model training and verification. Although this study tries best to ensure the diversity and representativeness of datasets, there may be some deviations in the process of data collection, which will limit the generalization ability of the model in some cases. In addition, although data enhancement technology is helpful to improve the robustness of the model, it may also introduce unnecessary prejudice if it is not used properly. Therefore, it is necessary to pay more attention to these problems in future research and try to solve them in order to further improve the performance and reliability of the model.

Future works

Although the results of this study show that STADNet has obvious advantages in anomaly detection and recognition, there are still some areas worthy of further exploration and improvement:

Lightweight of the model: While maintaining the performance of the model, further optimize the model structure and reduce the consumption of computing resources to meet the application requirements of embedded devices and mobile terminals.

Multimodal data fusion: In addition to video data, other types of sensor data (such as audio, temperature, vibration, etc.) can be considered to improve the accuracy and robustness of anomaly detection.

Adaptive learning mechanism: An adaptive learning mechanism is introduced to enable the model to learn and adjust online according to new data in the deployment process, thus improving its adaptability to dynamic environmental changes.

Optimization of data enhancement methods: Explore more advanced data enhancement technologies, such as the synthetic data enhancement method based on GAN, to further enrich the diversity of training data and improve the generalization ability of the model.

Expansion of application scenarios: The model is applied to more practical scenarios, such as intelligent traffic monitoring, industrial equipment fault detection and medical image analysis, to verify the practicability and effectiveness of the model in different fields. Through the above-mentioned future work, it is expected to further improve the performance and application breadth of STADNet, and provide a more comprehensive and efficient solution for anomaly detection and identification of image and video data.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author Yiping Lu on reasonable request via e-mail luyiping2006@sina.com.

References

Chang, Y. et al. Video anomaly detection with spatio-temporal dissociation[J]. Pattern Recogn. 122, 108213 (2022).

Alafif, T. et al. Generative adversarial network based abnormal behavior detection in massive crowd videos: a Hajj case study[J]. J. Ambient Intell. Humaniz. Comput. 13 (8), 4077–4088 (2022).

Cho, M. A. et al. Unsupervised video anomaly detection via normalizing flows with implicit latent features[J]. Pattern Recogn. 129, 108703 (2022).

Ingle, P. Y. & Kim, Y. G. Real-time abnormal object detection for video surveillance in smart cities[J]. Sensors 22 (10), 3862 (2022).

Khan, S. W. et al. Anomaly detection in traffic surveillance videos using deep learning[J]. Sensors 22 (17), 6563 (2022).