Abstract

Electroencephalography (EEG) signals are an important source of information about the brain, and EEG is one of the least expensive methods for gathering such information. EEG signals have commonly been used to detect epilepsy. Therefore, the main objective of this research is to demonstrate the epilepsy detection capability of a new-generation relation-centric feature extraction function. To this end, we present a new-generation EEG signal classification model, which is an explainable feature engineering (XFE) model. As part of this XFE model, a feature extraction function termed friend pattern (FriendPat) is introduced. FriendPat is a distance- and voting-based feature extraction function. The features extracted by FriendPat are selected using a cumulative and iterative feature selector, and the selected features are classified using a t algorithm-based k-nearest neighbors (tkNN) classifier. Using the channel information and a Directed Lobish (DLob) look-up table based on the brain cap used, DLob symbols are generated, and these symbols form the DLob string. By using this DLob string and statistical analysis, explainable results are obtained. To investigate the classification performance of the FriendPat XFE model, we used a publicly available EEG epilepsy detection dataset. The model attained classification accuracies of 99.61% and 79.92% using 10-fold cross-validation (CV) and leave-one-subject-out (LOSO) CV, respectively. The XFE model also generates a connectome diagram for epilepsy detection.


Introduction

Electroencephalography (EEG) is frequently used to detect electrical brain activity non-invasively1,2 and is commonly employed in the diagnosis of neurological disorders, particularly epilepsy3. Epilepsy is a neurological disorder characterized by recurrent seizures with a wide variety of symptoms. It affects millions of people worldwide and can be difficult to diagnose4. Correct identification of epilepsy from EEG signals is vital in clinical environments, as it supports timely treatment and management5.

EEG signals are complex and highly dynamic, and understanding and interpreting them requires advanced techniques6,7. These signals vary across brain regions, frequencies, and amplitudes, all of which can be used to recognize abnormal phenomena in the brain8. Furthermore, brain wave activity can be similar during seizure and non-seizure states, making it difficult to reach a clear diagnosis of epilepsy from EEG data alone9.

With the development of feature extraction and signal classification techniques, researchers have been able to utilize EEG data more efficiently10. These improvements focus on enhancing diagnostic efficiency and decreasing computational overhead1. These methods form the basis for the development of robust tools that can be integrated into clinical workflows for optimal patient care11.

Research aimed at improving the interpretability and classification of EEG signals remains active10,12. Such work not only enables the detection of abnormalities but also helps explain the mechanisms underlying these patterns, paving the way for more efficient and explainable diagnostic solutions13.

Literature review

Recent epilepsy detection models presented in the literature are summarized below.

For patient-independent seizure classification, Aboyeji et al.14 employed the CHB-MIT EEG dataset, which comprises recordings from 22 pediatric patients. Their method combined Dilated Convolutional Squeeze and Excitation Networks (DCSENets) with spectrograms derived from the short-time Fourier transform, using tapering window functions (Hann and Gaussian) to minimize spectral distortion. Leave-one-patient-out cross-validation yielded accuracies of 87.20% and 87.29% with the Hann and Gaussian windows, respectively.

Lin et al.15 introduced a strange attractor reconstruction framework for classifying epileptic seizures in EEG signals from the CHB-MIT and Freiburg datasets. The CHB-MIT dataset contained 24 cases from 23 subjects with EEG sampled at 256 Hz, and the Freiburg dataset included invasive recordings from 21 patients. On the CHB-MIT dataset, the method achieved an average sensitivity of 94.58% and a specificity of 93.38%.

Cao et al.16 developed a hybrid CNN-Bi-LSTM model for recognizing epileptic seizures in EEG signals from the Bonn, New Delhi, and CHB-MIT datasets. The Bonn dataset comprises five subsets of segments with 4097 data points each, sampled at 173.61 Hz, while the New Delhi and CHB-MIT datasets support various seizure analysis tasks. In binary classification tasks on the Bonn and New Delhi datasets, their approach attained 100% accuracy, sensitivity, specificity, and F1-score, and it achieved an overall accuracy of 98.43% on the CHB-MIT dataset.

Verma et al.17 developed an epilepsy detection system using the Bonn EEG dataset, which comprises 500 segments of 23.6 s each sampled at 173.61 Hz. Using the continuous wavelet transform (CWT) followed by a deep CNN with transfer learning, they attained 94.5% validation accuracy.

Li et al.18 proposed the CNN-Informer model for detecting epileptic seizures and tested it on the CHB-MIT and SH-SDU datasets. The CHB-MIT dataset consisted of 983 h of EEG recordings from 23 pediatric patients, and the SH-SDU dataset contained 143 h from 10 adult patients. On CHB-MIT, their model achieved an average sensitivity of 99.54% and specificity of 98.55%. On SH-SDU, it achieved an average sensitivity of 94.83% with a false detection rate of 1.24/h.

Liu et al.19 presented a dual-stream CNN model for seizure detection using the CHB-MIT and Bonn datasets. The CHB-MIT dataset consisted of 980 h of EEG recordings from 24 patients, and the Bonn dataset consisted of five subsets of EEG segments sampled at 173.61 Hz. On the CHB-MIT dataset, their model attained an average sensitivity of 79.59% and specificity of 92.23%.

el-Dajani et al.20 introduced a CNN-LSTM model for epileptic seizure detection using the EPItect dataset, which consists of 4340 h of 21-channel EEG recordings sampled at 256 Hz from 90 patients. With the full 10–20 electrode system, their model achieved a sensitivity of 73% with 9.9 false alarms per hour. In mobile scenarios with electrodes placed behind the ear, sensitivity dropped to 68% with 10 false-positive events per hour.

Khalfallah et al.21 investigated the effectiveness of machine learning and deep learning techniques for EEG-based neurological disorder classification on the CHB-MIT, IBIB PAN, and AHEPA datasets. They preprocessed the EEG signals using FIR filters followed by independent component analysis (ICA) and used band power and Shannon entropy as features. They reported 99.85% accuracy using Random Forest for autism classification and 100% accuracy using Support Vector Machines for dementia detection. The deep learning models (CNN and ChronoNet) achieved accuracies between 92.5% and 100%.

Pain et al.22 introduced the MSSTNet model for detecting mind wandering (MW) episodes from EEG recordings. The publicly available EEG dataset used in the study included recordings from two subjects, with 22 sessions sampled at 1024 Hz. Their model achieved a mixed-subject classification accuracy of 95.07%, with intra- and cross-subject accuracies of 94.48% and 83.13%, respectively.

Literature gaps

-

Deep learning models are very popular in EEG signal classification14,23,24, but these models have high time complexity25,26. Therefore, deep learning models are computationally expensive for EEG signal classification27.

-

Most researchers have focused on classification performance28,29, whereas explainable artificial intelligence (XAI) for EEG signal classification has received comparatively little attention.

Motivation and our method

The main motivation of this research is to demonstrate the effectiveness of a new relation-based feature extraction function named Friend Pattern (FriendPat). At each time point, FriendPat computes the distances between the channel values. A counter-based voting model then turns these distances into a feature vector. We are also motivated to fill the literature gaps identified above.

To fill the first literature gap, we present a new feature engineering model designed to show the classification ability of FriendPat. In this model, FriendPat is combined with two self-organized methods to obtain classification results. These are the cumulative weighted iterative neighborhood component analysis (CWINCA)30 feature selector and the t algorithm-based k-nearest neighbors (tkNN)31 classifier. CWINCA generates various selected feature vectors and chooses the best one for classification. tkNN produces multiple classification outputs and automatically chooses the best among them. By utilizing the classification power of these two self-organized methods, we demonstrate the classification capability of FriendPat. The feature engineering model has linear time complexity, since the methods used have linear time complexities. It is also a highly accurate EEG signal classification model.

To fill the second literature gap, the Directed Lobish (DLob)32 symbolic language has been integrated into the FriendPat-centric model, yielding a new-generation explainable feature engineering (XFE) model. This XFE model generates a DLob sentence from the identities of the selected features, and this sentence is used to create a connectome diagram. In this way, DLob provides interpretable results.

Innovations and contributions

Innovations:

-

In this research, we present a new-generation feature extraction function termed FriendPat.

-

To investigate the classification ability of FriendPat, we present a new-generation XFE model. To the best of our knowledge, this is also the first epilepsy diagnosis study to use the DLob XAI method.

Contributions:

-

The FriendPat-centric XFE model has been tested on a large EEG epilepsy detection dataset containing more than 10,000 EEG signals. We evaluated it using both 10-fold cross-validation (CV) and leave-one-subject-out (LOSO) CV. The model attained classification accuracies of 99.61% and 79.92% with 10-fold CV and LOSO CV, respectively. In this respect, the XFE model competes with deep learning models, and the FriendPat-centric model contributes to the feature engineering research area.

-

To understand the hidden mechanism of epilepsy detection, DLob has been used in this research to extract interpretable results from the XFE model. In this respect, the model contributes to neurology.

Dataset

To test the FriendPat-centric model, we used a publicly available EEG epilepsy detection dataset33,34. The data were collected in a hospital, and neurologists removed noise and cropped the regions of interest before release. We downloaded these preprocessed recordings and did not apply any further cleaning. The dataset has 35 channels and two classes: (i) epilepsy and (ii) control. Data were collected from 50 participants with epilepsy and 71 control participants. All EEG signals have the same length of 15 s, and the sampling frequency of the brain cap used is 500 Hz. In total, the dataset contains 10,356 EEG signals, of which 4,465 are epilepsy signals and the remaining 5,891 are control signals.
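The dataset figures above imply a few derived quantities, computed below from the reported numbers only. Each 15 s signal sampled at 500 Hz has 7,500 time points per channel. Because the classes are not balanced, a trivial classifier that always predicts the majority (control) class would already reach about 56.9% accuracy. This is a useful reference point when reading the reported accuracies.

```python
# Figures taken from the dataset description above.
FS_HZ = 500         # sampling frequency of the brain cap
DURATION_S = 15     # every EEG signal lasts 15 s
N_EPILEPSY = 4465   # epilepsy signals
N_CONTROL = 5891    # control signals

samples_per_channel = FS_HZ * DURATION_S
total_signals = N_EPILEPSY + N_CONTROL
# Accuracy of a trivial model that always predicts the majority (control) class.
majority_baseline = max(N_EPILEPSY, N_CONTROL) / total_signals

print(samples_per_channel)                 # 7500
print(total_signals)                       # 10356
print(round(100 * majority_baseline, 1))   # 56.9
```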

Friend pattern

In this research, we present a new-generation feature extraction function termed FriendPat. FriendPat is a channel-based feature extractor. At each time point, a channel vector is created, and the pairwise distance matrix of this channel vector is computed. These distances are then used to build the feature vector. Specifically, the average distance is computed. The counters of channel pairs whose distances are smaller than or equal to the average are incremented, and the counters of the remaining pairs are decremented. In this respect, FriendPat is a simple yet effective feature extractor. The general block diagram of FriendPat is shown in Fig. 1.

The block diagram of FriendPat is illustrated with a numerical example. In this example, the maximum distance is highlighted in bold and the minimum distance in italics. Distances smaller than or equal to the average receive +1, and the smallest distance receives a total of +2. Conversely, distances larger than the average receive −1, and the largest distance receives a total of −2. In this way, the friendships between channels are represented as a feature vector.

Figure 1 shows the graphical outline of the FriendPat feature extractor using a 5 × 5 example for clarity. The dataset used in this research has 35 channels; therefore, the distance matrix is 35 × 35. The steps are detailed below.

S1: Create the channel vector from each point of the multichannel EEG signal.

Herein, \(cv\): channel vector, \(nc\): number of channels and \(len\): length of the EEG signal.

S2: Compute the distance matrix of the created channel vector using the L1-norm distance metric.

where \(D\): distance matrix.

S3: Compute the average value of the distance matrix, which serves as the pivot value for creating the friendship matrix.

Here, \(av\): average distance value.

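S4: Create the friendship matrix using the average distance value. The counter of each channel pair whose distance is smaller than or equal to \(av\) is incremented by +1, and the counters of the remaining pairs are decremented by −1. The friendship matrix is initialized with zeros.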

where \(\:F\): friendship matrix.

S5: Identify the indices of the minimum and maximum values in the distance matrix, and adjust the corresponding friendship values by +1 and −1, respectively.

Here, \(r\) and \(c\) denote the row and column indices. Because S4 has already applied +1 to the minimum-distance pair and −1 to the maximum-distance pair, these two pairs are incremented twice and decremented twice, respectively.

S6: Repeat steps S1–S5 for each point in the EEG signal, accumulating the votes in the friendship matrix.

S7: Convert the friendship matrix into a vector to obtain the feature vector.

Herein, \(fv\): the generated feature vector, with a length of \(\frac{nc(nc-1)}{2}\) (595 for the 35-channel dataset).
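Steps S1–S7 can be summarized by the update rules below. This is a reconstruction consistent with the step descriptions rather than the authors' exact notation. Here \(x_c(t)\) denotes the value of channel \(c\) at time point \(t\), and \(F\) starts at zero. Restricting the votes and the average to channel pairs \(i<j\) is an assumption. It was chosen because it yields 35 × 34 / 2 = 595 features for 35 channels.

```latex
\begin{aligned}
cv_t &= \left[x_1(t),\, x_2(t),\, \dots,\, x_{nc}(t)\right], \qquad t = 1, \dots, len \\
D_t(i,j) &= \left| cv_t(i) - cv_t(j) \right|, \qquad 1 \le i < j \le nc \\
av_t &= \frac{2}{nc(nc-1)} \sum_{i<j} D_t(i,j) \\
F(i,j) &\leftarrow F(i,j) +
  \begin{cases} +1, & D_t(i,j) \le av_t \\ -1, & D_t(i,j) > av_t \end{cases} \\
F(r_{\min}, c_{\min}) &\leftarrow F(r_{\min}, c_{\min}) + 1, \qquad (r_{\min}, c_{\min}) = \arg\min_{i<j} D_t(i,j) \\
F(r_{\max}, c_{\max}) &\leftarrow F(r_{\max}, c_{\max}) - 1, \qquad (r_{\max}, c_{\max}) = \arg\max_{i<j} D_t(i,j) \\
fv &= \left[F(1,2),\, F(1,3),\, \dots,\, F(nc-1, nc)\right]
\end{aligned}
```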

These seven steps defined above represent the FriendPat.
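A minimal Python sketch of steps S1–S7 for a single multichannel signal follows. It rests on several assumptions that may differ from the authors' MATLAB implementation:

- Only channel pairs \(i<j\) are counted, giving \(nc(nc-1)/2\) features.
- The S3 average is taken over those pairs.
- Ties for the minimum or maximum resolve to the first pair.
- The function name `friendpat` is hypothetical.

```python
from typing import List, Sequence


def friendpat(signal: Sequence[Sequence[float]]) -> List[int]:
    """Sketch of FriendPat for one EEG signal.

    signal[t][ch] is the value of channel ch at time point t (len x nc).
    Returns the friendship counters of all channel pairs (i < j), in
    row-major upper-triangle order, as a vector of length nc*(nc-1)/2.
    """
    nc = len(signal[0])
    if nc < 2:
        raise ValueError("FriendPat needs at least two channels")
    pairs = [(i, j) for i in range(nc) for j in range(i + 1, nc)]
    friendship = [0] * len(pairs)             # S4: counters start at zero
    for cv in signal:                         # S1/S6: channel vector per point
        # S2: L1 distance between every pair of channel values
        dist = [abs(cv[i] - cv[j]) for i, j in pairs]
        av = sum(dist) / len(dist)            # S3: pivot = average distance
        for k, d in enumerate(dist):          # S4: +1 if close, -1 otherwise
            friendship[k] += 1 if d <= av else -1
        # S5: extra vote for the closest and for the farthest pair
        friendship[dist.index(min(dist))] += 1
        friendship[dist.index(max(dist))] -= 1
    return friendship                         # S7: already in vector form


print(friendpat([[0, 1, 5], [2, 2, 9]]))            # [4, -4, -2]
print(len(friendpat([[float(c) for c in range(35)]])))  # 595
```

The inner loop touches every channel pair once per time point. This matches the \(O(cn^{2}L)\) cost stated for FriendPat in the complexity analysis.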

The FriendPat-centric XFE model

In order to investigate the classification capability of the presented FriendPat, we have developed a FriendPat-centric XFE model. In this XFE model, the features have been extracted using the introduced FriendPat, and the most informative features have been selected using the CWINCA feature selector. The features selected by CWINCA have been used for both classification and XAI results generation. Moreover, the CWINCA feature selector is a self-organized feature selector. In the classification phase, the tkNN self-organized classifier has been used to obtain the classification results. In the last (fourth) phase, DLob has been employed to produce the XAI results. The general block diagram (graphical overview) of the presented FriendPat-centric XFE model is depicted in Fig. 2.

The phases of the FriendPat-centric XFE model shown in Fig. 2 are explained below:

Phase 1: Extract the features of the used EEG signals deploying the introduced FriendPat.

Herein, the feature matrix is computed as \(X = FP(data)\), where \(data\) is the EEG signal dataset and \(FP(\cdot)\) is the FriendPat feature extractor.

Phase 2: Choose the most distinctive features using the CWINCA32 feature selector, an improved version of the NCA35 and INCA36 feature selectors. NCA is a distance-based feature selector, but selecting the optimal number of features is challenging with NCA. Therefore, Tuncer et al.36 introduced INCA in 2020, solving this problem with an iterative feature selection methodology based on an iterative checking process. However, determining the start and stop values of the loop is a challenging problem for INCA. To address this issue, Tuncer et al.32 presented CWINCA in 2024, in which the start and stop values of the loop are computed from the cumulative weights of the features. Therefore, CWINCA has been utilized as the feature selection function in this study. Like INCA, CWINCA is a self-organized feature selector.

Herein, the selection is written as \([id, sX] = CWN(X, y)\). \(id\) denotes the identities of the chosen features, \(sX\) the selected feature matrix, \(CWN(\cdot,\cdot)\) the CWINCA feature selector and \(y\) the actual labels. The threshold values used to determine the range of the loop are 0.5 and 0.9999.
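A minimal sketch of the CWINCA loop described above follows. Features are ranked by weight, and the loop range is set where the cumulative weight first reaches 0.5 and 0.9999 of the total. Each candidate top-k subset is then scored with a classifier. The sketch makes these assumptions, all of which are illustrative:

- The weights come from NCA. They are passed in here because NCA itself is not reimplemented.
- The scoring classifier is 1-NN with L1 distance under round-robin k-fold CV.
- The smallest best-scoring subset wins.
- All function names are hypothetical.

```python
from typing import List, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def l1(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(abs(x - y) for x, y in zip(a, b))


def knn1_cv_accuracy(X: Matrix, y: Sequence[int], folds: int = 10) -> float:
    """Accuracy of a 1-NN (L1 distance) classifier under round-robin k-fold CV."""
    n = len(X)
    folds = min(folds, n)
    correct = 0
    for f in range(folds):
        train = [j for j in range(n) if j % folds != f]
        for i in range(f, n, folds):  # test indices of fold f
            nearest = min(train, key=lambda j: l1(X[i], X[j]))
            correct += y[nearest] == y[i]
    return correct / n


def cwinca(X: Matrix, y: Sequence[int], weights: Sequence[float],
           low: float = 0.5, high: float = 0.9999,
           folds: int = 10) -> Tuple[List[int], List[List[float]]]:
    """Hypothetical CWINCA sketch: returns (selected ids, selected matrix)."""
    # Rank features by weight, highest first.
    order = sorted(range(len(weights)), key=lambda k: weights[k], reverse=True)
    total = sum(weights)
    # Loop range: subset sizes at which the cumulative weight reaches low/high.
    start, stop, cum = None, len(order), 0.0
    for size, k in enumerate(order, start=1):
        cum += weights[k]
        if start is None and cum >= low * total:
            start = size
        if cum >= high * total:
            stop = size
            break
    start = start or 1
    # Iterative selection: keep the top-`size` subset with the best accuracy.
    best_acc, best_ids = -1.0, order[:start]
    for size in range(start, stop + 1):
        ids = order[:size]
        acc = knn1_cv_accuracy([[row[k] for k in ids] for row in X], y, folds)
        if acc > best_acc:
            best_acc, best_ids = acc, ids
    return best_ids, [[row[k] for k in best_ids] for row in X]


X = [[0, 5], [0, 1], [0, 3], [1, 2], [1, 4], [1, 0]]
y = [0, 0, 0, 1, 1, 1]
ids, sX = cwinca(X, y, weights=[0.9, 0.1])
print(ids)  # [0]
```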

Phase 3: Generate the classification outcomes of the FriendPat-centric XFE model using the tkNN31 classifier. tkNN is an iterative, ensemble-based, and self-organizing classifier designed to enhance the classification performance of the kNN algorithm, which is why it was selected for this study. Since both tkNN and CWINCA are distance-based methods, we combined them to maximize classification performance.

Here, \(ct = tkNN(sX, y)\) is the computed classification outcome, and \(tkNN(\cdot,\cdot)\) is the tkNN classifier.
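The tkNN idea can be sketched in three steps:

1. Generate several parameter-based kNN outcomes.
2. Combine them with iterative majority voting (IMV), voting over the top 3, 4, ..., P outcomes.
3. Greedily keep the most accurate result.

The parameter grid (k ∈ {1, 3, 5}, L1 and L2 distances), the fold assignment and all function names are assumptions for illustration. The original tkNN31 uses its own parameter set.

```python
import math
from collections import Counter
from itertools import product
from typing import List, Sequence

Matrix = Sequence[Sequence[float]]


def distance(a: Sequence[float], b: Sequence[float], metric: str) -> float:
    if metric == "l1":
        return sum(abs(x - y) for x, y in zip(a, b))
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))  # "l2"


def knn_cv_predictions(X: Matrix, y: Sequence[int], k: int, metric: str,
                       folds: int = 10) -> List[int]:
    """Out-of-fold kNN predictions with round-robin fold assignment."""
    n = len(X)
    folds = min(folds, n)
    pred = [0] * n
    for f in range(folds):
        train = [j for j in range(n) if j % folds != f]
        for i in range(f, n, folds):
            nearest = sorted(train, key=lambda j: distance(X[i], X[j], metric))[:k]
            pred[i] = Counter(y[j] for j in nearest).most_common(1)[0][0]
    return pred


def accuracy(pred: Sequence[int], y: Sequence[int]) -> float:
    return sum(p == t for p, t in zip(pred, y)) / len(y)


def majority(column: Sequence[int]) -> int:
    return Counter(column).most_common(1)[0][0]


def tknn(X: Matrix, y: Sequence[int], ks=(1, 3, 5),
         metrics=("l1", "l2"), folds: int = 10) -> List[int]:
    # 1) Parameter-based outcomes: one prediction vector per (k, metric).
    outcomes = [knn_cv_predictions(X, y, k, m, folds)
                for k, m in product(ks, metrics)]
    # 2) IMV: majority vote over the top-m outcomes, for m = 3 .. P.
    ranked = sorted(outcomes, key=lambda p: accuracy(p, y), reverse=True)
    voted = [[majority(col) for col in zip(*ranked[:m])]
             for m in range(3, len(ranked) + 1)]
    # 3) Greedy step: return the most accurate of all generated outcomes.
    return max(outcomes + voted, key=lambda p: accuracy(p, y))


X = [[0.0], [0.1], [0.2], [1.0], [1.1], [1.2]]
y = [0, 0, 0, 1, 1, 1]
print(tknn(X, y))  # [0, 0, 0, 1, 1, 1]
```

In this sketch the greedy step scores every outcome against the same labels used to rank them. The selected accuracy should therefore be read as an optimistic estimate.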

Phase 4: Produce the XAI results using the DLob32 symbolic language. In this research, we have used an EEG dataset with 35 channels. Therefore, a look-up table (LUT) based on the used channels has been employed. By using the identities of the selected features and the created LUT, DLob symbols have been generated. The DLob symbol extraction method used is defined below.

Herein, \(p\) is the channel pointer and \(sn\) is the number of selected features. Using the generated pointers and the LUT, the DLob symbols are extracted as follows.

By utilizing the channel information and LUT, symbols have been created.

Herein, \(DS\): the created DLob string.

The DLob and Cardioish symbols are defined in Table 1.

Using these symbols and their meanings, we extracted explainable results. Because of the channel layout of the brain cap used, 13 of the 16 DLob symbols appear in this research.
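The mapping from selected features to DLob symbols can be sketched as follows. Each FriendPat feature corresponds to one channel pair, so each selected feature contributes two symbols. This is why 82 selected features give a 164-symbol string. The sketch assumes the features are ordered row by row over the upper triangle of the friendship matrix. The channel names and the five-entry LUT are hypothetical placeholders, not the 35-channel LUT used in this study.

```python
from typing import Dict, List, Sequence, Tuple


def pair_of_feature(k: int, nc: int) -> Tuple[int, int]:
    """Channel pair (i, j), i < j, of feature k in row-major upper-triangle order."""
    for i in range(nc):
        row_len = nc - i - 1          # pairs (i, i+1) ... (i, nc-1)
        if k < row_len:
            return i, i + 1 + k
        k -= row_len
    raise IndexError("feature index out of range")


def dlob_string(selected_ids: Sequence[int], channels: Sequence[str],
                lut: Dict[str, str]) -> str:
    """Two DLob symbols per selected feature, concatenated into one string."""
    symbols: List[str] = []
    for k in selected_ids:
        i, j = pair_of_feature(k, len(channels))
        symbols += [lut[channels[i]], lut[channels[j]]]
    return "".join(symbols)


# Hypothetical 5-channel cap and LUT, for illustration only.
CHANNELS = ["Fp1", "Fp2", "T7", "T8", "Cz"]
LUT = {"Fp1": "FL", "Fp2": "FR", "T7": "TL", "T8": "TR", "Cz": "Cz"}
print(dlob_string([9, 2], CHANNELS, LUT))  # TRCzFLTR
```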

Experimental results

The experimental setup, computational complexity analysis and results (classification and XAI) have been presented in this section.

Experimental setup

First, we describe the experimental setup of this research. The model was implemented in the MATLAB (version 2024a) programming environment, with the FriendPat-based model written as functions stored in .m files. The parameters of this model are listed in Table 2.

Computational complexity analysis

This model is considered a lightweight XFE model due to its linear time complexity. The time complexity of the introduced FriendPat-centric XFE model has been computed using Big O notation, and the computation is provided below. Additionally, the parameters used in this study are outlined below.

FriendPat-based feature extraction: The presented FriendPat is a distance-based feature extractor. Thus, the complexity of the FriendPat feature extractor is \(O\left( {c{n^2}L} \right)\). Herein, \(cn\): the number of the channels and L: length of the signal and \(cn<L\).

CWINCA: It is an iterative feature selection process. Therefore, the time complexity of it is \(O\left( {C+N+IK+G} \right)\). Here, C: time complexity of the cumulative weight computation, N: time complexity of the NCA, I: range of the iteration, K: time complexity of the utilized classifier and G: time burden coefficient of the greedy algorithm.

tkNN: The tkNN classifier is an iterative and self-organizing classifier that generates both parameter-based and voted outcomes. Therefore, its time complexity is \(O\left( {PK+V+G} \right)\), where P: number of parameters and V: time complexity of the iterative majority voting (IMV).

XAI: In the XAI phase, we have used DLob and statistical analysis. Thus, the time complexity of this phase is \(O\left( {sn} \right)\). Here, \(sn\): the number of the selected features.

Overall: The computed complexities are summarized in Table 3, from which the overall time complexity is derived.

According to Table 3, the overall time complexity of the FriendPat-centric XFE model is \(O\left(cn^{2}L + C + N + IK + PK + V + G + sn\right)\). The number of channels \(cn\) is fixed by the brain cap, so this complexity grows linearly with the signal length \(L\). This shows that the FriendPat-centric XFE model has linear time complexity.
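As a rough illustration of the \(cn^{2}L\) FriendPat term on this dataset, the arithmetic below counts one L1 distance per channel pair \(i<j\) at every time point. Counting only the upper-triangle pairs is an assumption about the implementation.

```latex
\begin{aligned}
\text{pairs per time point} &= \frac{35 \times 34}{2} = 595 \\
\text{time points per signal} &= 15\,\text{s} \times 500\,\text{Hz} = 7500 \\
\text{distances per signal} &= 595 \times 7500 = 4{,}462{,}500 \\
\text{distances for all 10,356 signals} &= 4{,}462{,}500 \times 10{,}356 \approx 4.6 \times 10^{10}
\end{aligned}
```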

Classification results

The first output of the FriendPat-centric XFE model is the classification output. From it, we computed (i) accuracy, (ii) sensitivity, (iii) specificity, (iv) precision, (v) F1-score, and (vi) geometric mean. Two validation techniques were used: (1) 10-fold CV and (2) LOSO CV.
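The six metrics follow the standard binary definitions, sketched below from the four confusion-matrix counts. Treating epilepsy as the positive class is an assumption. The counts in the usage example are made up for illustration and are not the values in Fig. 3.

```python
import math
from typing import Dict


def binary_metrics(tp: int, fn: int, fp: int, tn: int) -> Dict[str, float]:
    """Standard binary metrics; epilepsy is assumed to be the positive class."""
    sensitivity = tp / (tp + fn)      # true positive rate (recall)
    specificity = tn / (tn + fp)      # true negative rate
    precision = tp / (tp + fp)
    return {
        "accuracy": (tp + tn) / (tp + fn + fp + tn),
        "sensitivity": sensitivity,
        "specificity": specificity,
        "precision": precision,
        "f1": 2 * precision * sensitivity / (precision + sensitivity),
        "geometric_mean": math.sqrt(sensitivity * specificity),
    }


# Made-up counts for illustration (not the values in Fig. 3).
m = binary_metrics(tp=90, fn=10, fp=5, tn=95)
print(m["accuracy"], m["sensitivity"], m["specificity"])  # 0.925 0.9 0.95
```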

The computed confusion matrices for these validation techniques are shown in Fig. 3.

According to Fig. 3, the computed classification performances have been tabulated in Table 4.

Table 4 demonstrates that the introduced FriendPat-centric XFE model achieved 79.92% and 99.61% classification accuracies using LOSO CV and 10-fold CV, respectively. In this regard, the presented model exhibits high classification performance.
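The gap between the two protocols is easier to see from how the folds are formed. In LOSO CV, all segments of one participant are held out together, so the classifier never sees the test subject during training. With 50 epilepsy and 71 control participants, this yields 121 folds. In segment-level 10-fold CV, segments from the same participant can fall into both training and test folds. The sketch below builds LOSO splits; the per-segment subject ids are hypothetical.

```python
from typing import Hashable, Iterator, List, Sequence, Tuple


def loso_splits(subject_ids: Sequence[Hashable]
                ) -> Iterator[Tuple[Hashable, List[int], List[int]]]:
    """Yield (held-out subject, train indices, test indices), one per subject."""
    for subject in dict.fromkeys(subject_ids):  # unique ids, first-seen order
        test = [i for i, s in enumerate(subject_ids) if s == subject]
        train = [i for i, s in enumerate(subject_ids) if s != subject]
        yield subject, train, test


# Hypothetical subject id for each EEG segment.
for subject, train, test in loso_splits(["p1", "p1", "p2", "p3", "p2"]):
    print(subject, train, test)
```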

Interpretable results

The second output of the introduced FriendPat-centric XFE model is the XAI. The generated XAI results are shown in Fig. 4.

According to Fig. 4, the most frequently used DLob symbol is TR, indicating that most of the collected epileptic EEGs belong to temporal lobe epilepsy. However, other symbols have also been used to detect epilepsy. The information entropy of the DLob string has been computed as 2.3408, and its complexity ratio as 63.26%. In this regard, epilepsy is predictable according to DLob. The generated DLob string is provided below.
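The entropy and complexity ratio can be computed from symbol frequencies, as sketched below. The sketch uses the Shannon entropy in bits. It assumes the complexity ratio normalizes this entropy by \(\log_2\) of the number of distinct symbols. With the 13 symbols used here, this reproduces the reported value, since 2.3408 / log2(13) ≈ 0.6326. The function names are hypothetical.

```python
import math
from collections import Counter
from typing import List, Tuple


def dlob_symbols(ds: str) -> List[str]:
    """Split a DLob string into its two-character symbols (e.g. 'TR', 'Cz')."""
    ds = ds.rstrip(".")
    return [ds[i:i + 2] for i in range(0, len(ds) - 1, 2)]


def entropy_and_ratio(ds: str) -> Tuple[float, float]:
    symbols = dlob_symbols(ds)
    counts = Counter(symbols)
    n = len(symbols)
    # Shannon entropy in bits over the symbol frequencies.
    h = -sum(c / n * math.log2(c / n) for c in counts.values())
    # Assumed complexity ratio: entropy relative to log2(#distinct symbols).
    ratio = h / math.log2(len(counts)) if len(counts) > 1 else 0.0
    return h, ratio


h, ratio = entropy_and_ratio("TRFLTRFL")
print(h, ratio)  # 1.0 1.0
print(round(100 * 2.3408 / math.log2(13), 2))  # 63.26
```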

TRPRTRCzTRFLTRFLTRFRTRFRTRCLTRFRTRPRTRFRTRCRTRFLTRFRTRTRTRPRTRFRTRPRTRPRTRTRTRPLTRFLTRORTRTLTRTRTRFRTRPRTRTRTRFzTRCRTRCzTRFzTRFRTRPRTRCRTRCLTRTRTRFRTRTLTRPLTRPLTRFzTRFLTRTLTRTRTRCzTRTRTRPRTRCRTRTLTRTRTRTRTRFRTRPRTRPRTRFRTRCRTRCLTRORTRFLTRFRTRTLTRCRTRFLTRFLTRTRTRCzTRCLTRFLTRFRTRFzTRCLTRCLTRTLTRCLTROLTRPzTRFRTRTRTRTRTRFRTRTRTRPR.

Discussions

The results and findings of this paper are discussed in this section.

Overview

This research presents a new-generation feature extraction function called FriendPat. To investigate the classification and explainable capabilities of the introduced FriendPat, an XFE model has been proposed. The presented XFE model utilizes FriendPat for feature extraction, CWINCA for feature selection, tkNN for classification, and DLob for XAI results generation.

In this XFE model, CWINCA is a critical component for obtaining both classification and interpretable results. CWINCA is a self-organized model that selects the most informative feature vector through an iterative selection process. tkNN is a self-organized classifier that yielded higher classification performance than the other classifiers tested.

Comparisons

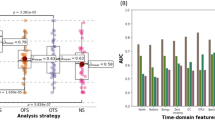

To demonstrate the superior performance of the tkNN classifier, comparisons were made with Decision Tree (DT), Linear Discriminant (LD), Quadratic Discriminant (QD), Binary Generalized Linear Model Logistic Regression (BGLMLR), Naïve Bayes (NB), Support Vector Machine (SVM), kNN, Neural Network (NN), and Bagged Tree (BaT). These comparative results were obtained using 10-fold CV and are presented in Fig. 5.

According to Fig. 5, the best classifier is tkNN, which achieved 99.61% classification accuracy on the dataset used. kNN and SVM achieved 99.53% and 99.51% accuracy, respectively, so kNN performed best among the remaining classifiers. Therefore, we selected the tkNN classifier for this study.

The presented model has low time complexity while also demonstrating high classification performance. It achieved 99.61% and 79.92% classification accuracies using 10-fold CV and LOSO CV, respectively. In this regard, the introduced FriendPat-centric XFE model is both highly accurate and lightweight. Furthermore, this model is a strong competitor to deep learning models.

To highlight the high classification performance of the presented model, we have included a comparative results table. In this table, the presented model is compared to state-of-the-art models. These results are provided in Table 5.

Table 5 clearly demonstrates that the presented FriendPat-centric XFE model achieved the highest classification accuracies among the state-of-the-art (SOTA) models. It also highlights that the introduced FriendPat-centric model is a strong competitor to deep learning models.

Testing additional dataset

To demonstrate the general classification ability of the proposed FriendPat-XFE, we used the publicly available MAT dataset41. It contains recordings from 36 healthy subjects performing arithmetic tasks, labeled as “good” (1) or “bad” (0). The dataset includes 1,149 good and 449 bad EEG segments recorded with a 23-channel cap. FriendPat extracts 253 features, of which CWINCA selects 34. Using tkNN, the model achieved 99.87% accuracy under 10-fold CV. The resulting confusion matrix is shown in Fig. 6.

According to Fig. 6, FriendPat XFE attained 99.87% classification accuracy and a 99.84% geometric mean. The computed results are compared with state-of-the-art (SOTA) models in Table 6.

These results demonstrate that the proposed model achieves excellent classification performance on the MAT dataset.

Discussion of the interpretable results

The FriendPat-centric XFE model can generate explainable results. Based on these results, the DLob sentence is predictable, with a complexity ratio of 63.26%, which indicates that epilepsy can be detected using the DLob sentence. For this dataset, the most frequently used DLob symbol is TR, suggesting that most of the collected epilepsy cases are temporal lobe epilepsy. The generated DLob sentence also shows evidence of frontal and parietal activation. These findings demonstrate the discriminative ability of the DLob sentence, from which a cortical connectome diagram for epilepsy detection has been generated.

Highlights

The most important points of this research are discussed below:

Findings:

-

FriendPat uses a channel distance matrix to detect channel relations.

-

To achieve high classification performance, two self-organized methods, CWINCA and tkNN, have been used.

-

FriendPat extracts 595 features, and CWINCA selected 82 of them.

-

The tkNN classifier attained 99.61% and 79.92% accuracies using 10-fold CV and LOSO CV, respectively.

-

The generated DLob sentence has 164 (= 82 × 2) DLob symbols, since each feature contains information from two channels.

-

Using the generated DLob sentence, a cortical connectome diagram has been created. This connectome diagram shows that the central node is TR.

-

According to the generated DLob sentence, the most activated lobe is the temporal lobe, suggesting that most of the collected epilepsy cases are temporal lobe epilepsy.

-

The generated DLob sentence also shows frontal and parietal lobe activation, suggesting that the dataset also contains frontal and parietal epilepsies.

-

The complexity ratio of the generated DLob sentence is 63.26%, which suggests that epilepsy is a predictable process in terms of the cortical (DLob) language.

Advantages:

-

The FriendPat-centric XFE model is highly accurate, yielding classification accuracies of 99.61% and 79.92% using 10-fold CV and LOSO CV, respectively. Using 10-fold CV and LOSO CV together provides both comparative and reliable results. According to the comparative results table, the model is competitive with deep learning models, since it attained higher classification performance than the deep learning models on the dataset used.

-

To the best of our knowledge, the FriendPat-centric XFE model is the first model to use DLob on this dataset. Using DLob, we have identified the types of epilepsy present in this dataset.

-

In this research, we have presented a lightweight, highly accurate, and explainable model for epilepsy detection.

Limitations and future directions

Limitation:

-

The LOSO CV results (e.g., sensitivity) are considerably lower than the 10-fold CV results.

Future directions:

-

We plan to develop a new generation of FriendPat-like models to improve classification performance under LOSO CV. To achieve this, our plans are as follows:

-

We will design new FriendPat-like extractors that incorporate cross-subject normalization and domain-adaptation techniques. This should reduce variability between training and test subjects and boost LOSO CV sensitivity.

-

By integrating channel- and temporal-attention mechanisms, the model can learn to focus on the most discriminative signal segments for each subject.

-

This model can be applied to other biomedical signal datasets, such as ECG and EMG.

-

New deep learning models can be presented by using FriendPat and attention mechanism together.

-

DLob can be improved to get more interpretable results.

Potential applications:

-

Personalized medicine applications for epilepsy can be developed using the FriendPat-centric XFE model.

-

DLob can be integrated into the user interfaces of EEG devices to simplify manual EEG reading.

-

The FriendPat-centric XFE model can be used to monitor the effects of epilepsy treatments and medications.

-

The effects of antiepileptic drugs can be tracked using an application based on the FriendPat-centric XFE model.

-

New-generation smart applications based on the FriendPat-centric XFE model can be used in medical centers and hospitals to diagnose epilepsy and its type.

Conclusions

This research introduces the FriendPat-centric XFE model, an innovative and explainable approach for EEG signal classification, particularly for epilepsy detection. The model combines the FriendPat feature extraction function, CWINCA feature selector, tkNN classifier, and DLob symbolic language to achieve high classification accuracy and interpretability. It demonstrated its effectiveness by achieving 99.61% accuracy with 10-fold CV and 79.92% accuracy with LOSO CV on a large EEG epilepsy dataset containing more than 10,000 EEG signals. Additionally, the complexity ratio of the DLob string generated by the model is calculated as 63.26%. The complexity ratio computed also indicates that epilepsy has a predictable structure in the cortex. The classification results highlight the model’s ability to outperform feature engineering and deep learning models, making it a competitive and lightweight alternative.

The FriendPat-centric XFE model is computationally efficient due to its linear time complexity. Therefore, the presented XFE model is well-suited for real-time and resource-constrained applications. The integration of DLob enhances interpretability by generating symbolic representations and cortical connectivity diagrams, providing insights into the underlying mechanisms of epilepsy. For instance, the model identifies that most epileptic cases in the dataset are linked to temporal lobe activity, with fewer cases related to frontal and parietal regions, consistent with the dataset’s annotations. These findings demonstrate the model’s ability to detect epilepsy while offering clinically relevant and explainable insights, supporting its application in personalized medicine and clinical settings.
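To make the DLob step more concrete, the sketch below maps the channels of selected features to lobe-and-hemisphere symbols. It then counts how often each lobe occurs, which is the kind of summary behind a statement such as "most epileptic cases are linked to temporal lobe activity". The look-up table here is hypothetical. It is derived from the standard 10-20 naming convention: the letter prefix gives the region, odd numbers denote the left hemisphere, even numbers the right, and "z" the midline. The actual DLob look-up table depends on the brain cap used and is defined in the original DLob work. The montage, the selected channel indices, and the helper `dlob_symbol` are invented for illustration.

```python
from collections import Counter

# Hypothetical look-up table from 10-20 region prefixes to lobe letters;
# this is NOT the official DLob table.
REGION = {"Fp": "F", "F": "F", "C": "C", "T": "T", "P": "P", "O": "O"}


def dlob_symbol(channel):
    """Map a 10-20 channel name such as 'T3' or 'Cz' to a lobe+hemisphere symbol."""
    prefix = "Fp" if channel.startswith("Fp") else channel[0]
    suffix = channel[len(prefix):]
    if suffix.lower() == "z":
        side = "z"  # midline electrode
    else:
        side = "L" if int(suffix) % 2 == 1 else "R"  # odd = left, even = right
    return REGION[prefix] + side


# Hypothetical montage and channel indices of the selected features.
channels = ["Fp1", "Fp2", "F3", "F4", "C3", "C4", "P3", "P4", "O1", "O2",
            "F7", "F8", "T3", "T4", "T5", "T6", "Fz", "Cz", "Pz"]
selected_channel_idx = [12, 13, 14, 12, 2, 15, 7, 13]

# Symbol sequence (a DLob-like string) and per-lobe frequencies.
dlob_string = [dlob_symbol(channels[i]) for i in selected_channel_idx]
lobe_counts = Counter(symbol[0] for symbol in dlob_string)
print(" ".join(dlob_string))
print(lobe_counts.most_common())
```

In this toy example, most selected features come from temporal channels. A frequency summary of this kind is what allows the symbolic output to be read in terms of cortical regions.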

Overall, the FriendPat-centric XFE model is a robust solution for epilepsy detection, combining high accuracy, computational efficiency, and interpretability. It has the potential to be integrated into EEG device interfaces to support diagnosis and treatment monitoring and to simplify manual interpretation. This research paves the way for the next generation of explainable artificial intelligence (XAI)-based medical applications across various fields.

Data availability

We used a publicly available dataset [33,34], which can be downloaded from https://www.kaggle.com/datasets/buraktaci/turkish-epilepsy.

References

Kumar, S. & Sharma, A. Advances in non-invasive EEG-based brain-computer interfaces: signal acquisition, processing, emerging approaches, and applications. Signal Process. Strateg. 2025, 281–310 (2025).

Sharif, S. M., Butta, R., Rao, D. V., Murthy, G. & Devarajan, N. M. Improved LSTM-Squeeze net architecture for brain activity detection using EEG with improved feature set. Biomed. Signal Process. Control 101, 107222 (2025).

Bergonzini, P. et al. Clinical, etiological, and therapeutic profile of early-onset absence seizures: a case series analysis. Clin. Neurol. Neurosurg. 249, 108673 (2025).

Firdous, S. M., Mallik, S. & Paria, B. Antioxidant effects of medicinal plants for the treatment of epilepsy. Antioxidants: Nature’s Defense Against Dis. 2025, 441–489 (2025).

Mourid, M. R., Irfan, H. & Oduoye, M. O. Artificial intelligence in pediatric epilepsy detection: balancing effectiveness with ethical considerations for welfare. Health Sci. Rep. 8 (1), e70372 (2025).

Huang, H., Hu, H., Xu, F., Zhang, Z. & Yang, J. Electroencephalography-based assessment of worker vigilance for evaluating safety interventions in construction. Adv. Eng. Inform. 64, 102973 (2025).

Prateek, M. & Rathore, S. P. S. Clinical validation of AI disease detection Models—An overview of the clinical validation process for AI disease detection models, and how they can be validated for accuracy and effectiveness. AI Dis. Detect. Adv. Appl. 2025, 215–237 (2025).

Furutani, N. et al. Utility of complexity analysis in electroencephalography and electromyography for automated classification of sleep-wake states in mice. Sci. Rep. 15 (1), 3080 (2025).

Chakkarapani, A. A., Singh, I. & Balakrishnan, U. Comprehensive insights into neonatal seizure: etiologies, diagnostic tools, management and future directions. Paediatr. Child Health (2024).

Sharma, R. & Meena, H. K. Emerging trends in EEG signal processing: a systematic review. SN Comput. Sci. 5 (4), 1–14 (2024).

Basak, M., Maiti, D. & Das, D. EEG innovations in neurological disorder diagnostics: a five-year review. Asian J. Res. Comput. Sci. 17 (6), 226–249 (2024).

Yang, H., Chen, C. P., Chen, B. & Zhang, T. Improving the interpretability through maximizing mutual information for EEG emotion recognition. IEEE Trans. Affect. Comput. (2024).

Bouazizi, S. & Ltifi, H. Enhancing accuracy and interpretability in EEG-based medical decision making using an explainable ensemble learning framework application for stroke prediction. Decis. Support Syst. 178, 114126 (2024).

Aboyeji, S. T. et al. DCSENets: interpretable deep learning for patient-independent seizure classification using enhanced EEG-based spectrogram visualization. Comput. Biol. Med. 185, 109558 (2025).

Lin, Y., Dong, L., Jiang, Y. & Lian, J. Epileptic EEG classification via deep learning-based strange attractor. Biomed. Signal Process. Control 100, 106965 (2025).

Cao, X., Zheng, S., Zhang, J., Chen, W. & Du, G. A hybrid CNN-Bi-LSTM model with feature fusion for accurate epilepsy seizure detection. BMC Med. Inf. Decis. Mak. 25 (1), 6 (2025).

Verma, A., Shrivastava, A. & Chaturvedi, D. Epilepsy detection system using CWT and deep CNN. In Artificial Intelligence in Biomedical and Modern Healthcare Informatics 211–222 (Elsevier, 2025).

Li, C. et al. CNN-Informer: a hybrid deep learning model for seizure detection on long-term EEG. Neural Netw. 181, 106855 (2025).

Liu, Y., Liu, G., Wu, S. & Tin, C. Phase spectrogram of EEG from S-transform enhances epileptic seizure detection. Expert Syst. Appl. 262, 125621 (2025).

El-Dajani, N., Wilhelm, T. F. L., Baumann, J., Surges, R. & Meyer, B. T. Patient-independent epileptic seizure detection with reduced EEG channels and deep recurrent neural networks. Information 16 (1), 20 (2025).

Khalfallah, S., Peuch, W., Tlija, M. & Bouallegue, K. Exploring the Effectiveness of Machine Learning and Deep Learning Techniques for EEG Signal Classification in Neurological Disorders (IEEE Access, 2025).

Pain, S., Chatterjee, S., Sarma, M. & Samanta, D. MSSTNet: a multi-stream time-distributed spatio-temporal deep learning model to detect mind wandering from electroencephalogram signals. Comput. Electr. Eng. 122, 110005 (2025).

Chaibi, S., Mahjoub, C. & Kachouri, A. EEG-based cognitive fatigue recognition using relevant multi-domain features and machine learning. In Brain-Computer Interfaces 327–344 (Elsevier, 2025).

Jalilpour, S. & Müller-Putz, G. A framework for interpretable deep learning in cross-subject detection of event-related potentials. Eng. Appl. Artif. Intell. 139, 109642 (2025).

Javed, H., El-Sappagh, S. & Abuhmed, T. Robustness in deep learning models for medical diagnostics: security and adversarial challenges towards robust AI applications. Artif. Intell. Rev. 58 (1), 1–107 (2025).

Li, W. & Law, K. E. Deep Learning Models for Time Series Forecasting: A Review (IEEE Access, 2024).

Sawan, A., Awad, M., Qasrawi, R. & Sowan, M. Hybrid deep learning and metaheuristic model based stroke diagnosis system using electroencephalogram (EEG). Biomed. Signal Process. Control 87, 105454 (2024).

Lu, S-Y., Zhu, Z., Tang, Y., Zhang, X. & Liu, X. CTBViT: a novel ViT for tuberculosis classification with efficient block and randomized classifier. Biomed. Signal Process. Control 100, 106981 (2025).

Gao, Q., Long, T. & Zhou, Z. Mineral identification based on natural feature-oriented image processing and multi-label image classification. Expert Syst. Appl. 238, 122111 (2024).

Cambay, V. Y., Hafeez Baig, A., Aydemir, E., Tuncer, T. & Dogan, S. Minimum and maximum pattern-based self-organized feature engineering: fibromyalgia detection using electrocardiogram signals. Diagnostics 14 (23), 2708 (2024).

Tuncer, T. et al. Lobish: symbolic language for interpreting electroencephalogram signals in language detection using channel-based transformation and pattern. Diagnostics 14 (17), 1987 (2024).

Tuncer, T. et al. Directed Lobish-based explainable feature engineering model with TTPat and CWINCA for EEG artifact classification. Knowl. Based Syst. 305, 112555 (2024).

Tasci, I. et al. Epilepsy detection in 121 patient populations using hypercube pattern from EEG signals. Inform. Fusion 96, 252–268 (2023).

Tasci, B. Turkish Epilepsy EEG Dataset (2023, accessed 21 Jan 2024). https://www.kaggle.com/datasets/buraktaci/turkish-epilepsy.

Goldberger, J., Hinton, G. E., Roweis, S. & Salakhutdinov, R. R. Neighbourhood components analysis. Adv. Neural Inf. Process. Syst. 17, 513–520 (2004).

Tuncer, T., Dogan, S., Özyurt, F., Belhaouari, S. B. & Bensmail, H. Novel multi center and threshold ternary pattern based method for disease detection method using voice. IEEE Access. 8, 84532–84540 (2020).

Vaswani, A. et al. Attention is all you need. Adv. Neural Inf. Process. Syst. 30 (2017).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L-C. MobileNetV2: inverted residuals and linear bottlenecks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 4510–4520 (2018).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the inception architecture for computer vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 2818–2826 (2016).

Dişli, F. et al. Epilepsy diagnosis from EEG signals using continuous wavelet transform-based depthwise convolutional neural network model. Diagnostics 15 (1), 84 (2025).

Zyma, I. et al. Electroencephalograms during mental arithmetic task performance. Data 4 (1), 14 (2019).

Yedukondalu, J., Sharma, D. & Sharma, L. D. Subject-wise cognitive load detection using time–frequency EEG and Bi-LSTM. Arab. J. Sci. Eng. 49 (3), 4445–4457 (2024).

Yedukondalu, J. et al. Cognitive load detection through EEG lead wise feature optimization and ensemble classification. Sci. Rep. 15 (1), 842 (2025).

Aslam, M. et al. Electroencephalograph (EEG) based classification of mental arithmetic using explainable machine learning. Biocybern. Biomed. Eng. 45 (2), 154–169 (2025).

Acknowledgements

We would like to thank Assoc. Prof. Dr. Irem Tasci and Assoc. Prof. Dr. Serkan Kirik for their neurological insights into the findings.

Funding

This work was supported by the TEKF.23.49 project fund provided by the Scientific Research Projects Coordination Unit of Firat University.

Author information

Contributions

Conceptualization, TT, SD; formal analysis, TT, SD; investigation, TT, SD; methodology, TT, SD; project administration, TT; resources, TT, SD; supervision, TT; validation, TT, SD; visualization, TT, SD; writing—original draft, TT, SD; writing—review and editing, TT, SD. All authors have read and agreed to the published version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

The electronic supplementary material is available with the online version of this article.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tuncer, T., Dogan, S. An explainable EEG epilepsy detection model using friend pattern. Sci Rep 15, 16951 (2025). https://doi.org/10.1038/s41598-025-01747-z

DOI: https://doi.org/10.1038/s41598-025-01747-z
