Abstract

To address the complex requirements of network intrusion detection in IoT environments, this study proposes a hybrid intelligent framework that integrates the Whale Optimization Algorithm (WOA) and the Grey Wolf Optimization (GWO) algorithm—referred to as WOA-GWO. This framework leverages a cooperative mechanism to balance global exploration and local exploitation capabilities. WOA’s spiral bubble-net search strategy endows the model with efficient global optimization in large-scale feature spaces, while GWO’s hunting behavior, based on a social hierarchy, enhances fine-tuned optimization in key feature regions. The complementary design of the two algorithms effectively overcomes the limitations of single-algorithm approaches, such as susceptibility to local optima and slow convergence speed. Compared with traditional models like the Long Short-Term Memory Recurrent Neural Network (LSTM-RNN) and Support Vector Machine (SVM), the proposed framework significantly improves the sensitivity and generalization ability for detecting various types of attacks through dynamic feature selection and parameter optimization. Experimental results demonstrate that the hybrid algorithm exhibits superior real-time responsiveness in binary classification tasks, thanks to its lightweight design that reduces dependency on computational resources. In multi-class attack identification scenarios, the framework mitigates feature confusion between rare attacks (e.g., user-to-root attacks) and normal traffic through adaptive feature weight allocation. This study further validates the potential of swarm intelligence algorithms in the field of IoT security, offering a novel methodological foundation for efficient threat detection in resource-constrained environments.

Similar content being viewed by others

Introduction

With the rapid development of Internet of Things (IoT) technology, the number of smart devices globally has experienced exponential growth, widely applied in various domains such as smart homes, smart healthcare, and industrial automation1,2,3. These devices communicate and transmit data over networks, forming a vast IoT ecosystem that significantly enhances the convenience of daily life and work4. However, as the scale of IoT devices expands and application scenarios diversify, the security challenges faced by IoT networks have become increasingly severe5. Due to limited computing power, constrained storage capacity, and inadequate firmware update mechanisms, IoT devices generally exhibit weak security defenses and are highly vulnerable to network intrusions6,7,8. For example, attacks such as Distributed Denial of Service (DDoS) and malware propagation not only result in direct consequences like data breaches and device failures but can also trigger cascading security incidents due to the high degree of interconnectivity among devices9,10. This dual challenge—resource constraints and increasing attack complexity—poses significant difficulties for traditional security defense mechanisms in IoT contexts.

In the realm of cybersecurity, Intrusion Detection System (IDS) has been widely adopted as a crucial means of defending against malicious attacks11,12. IDS monitor network traffic in real time, identifying anomalous behaviors to promptly detect potential attacks. Although existing IDSs perform well in conventional network environments, their adaptability in IoT scenarios is markedly limited. Rule-based detection methods, such as signature detection, rely on predefined attack pattern databases13 and struggle to cope with novel, dynamically evolving threats. While machine learning–based approaches are better suited for feature mining, they often falter in IoT settings due to hardware limitations. For instance, handling high-dimensional features demands substantial computational resources, resulting in slow training and inference times. Complex deep learning models, such as LSTM, are often infeasible to deploy on memory-constrained devices. Furthermore, suboptimal feature selection and limited model generalization can further diminish detection efficiency. Of particular concern is the fact that most existing optimization algorithms adopt a single strategy, which fails to balance global search and local refinement. As a result, models are prone to becoming trapped in local optima or exhibit inadequate convergence speeds. These limitations not only compromise detection accuracy but may also lead to excessive computational overhead, making it difficult to meet the real-time demands of IoT applications14. With the advancement of machine learning and deep learning technologies, data-driven detection methods have begun to emerge, particularly demonstrating advantages in handling large-scale data and unknown attack behaviors15,16. However, existing machine learning-based intrusion detection methods still face limitations in IoT environments, including unreasonable feature selection, unstable classifier performance, and prolonged training times17.

To address these issues, this study proposes a hybrid optimization algorithm that combines the Whale Optimization Algorithm (WOA) and the Gray Wolf Optimization (GWO) algorithm (referred to as WOA-GWO) to enhance the performance of IDS in IoT environments. During the global search phase, WOA enhances the model’s search capability by extensively exploring the search space. In contrast, GWO simulates the hunting behaviors of wolf packs during the local search phase to conduct refined searches in specific areas, improving the detection system’s ability to recognize different types of attacks. This algorithm possesses significant application potential in IoT environments and is expected to provide new solutions for future network security defenses.

Literature review

Network security challenges in IoT environments

The security issues associated with the IoT have emerged as a significant research focus in the field of information security in recent years. With the increasing number of connected devices, IoT faces various types of network attacks, including Denial of Service (DoS) attacks, DDoS attacks, man-in-the-middle attacks, malware attacks, and network sniffing18,19,20. These attacks are not only highly complex but also exhibit rapid propagation characteristics, posing substantial challenges to existing network security defense systems21,22. In response to these challenges, IDS have been widely deployed as an effective defense mechanism within IoT environments. The primary function of an IDS is to monitor and analyze network traffic, detecting potential security threats by identifying anomalous patterns23. For instance, Santhosh et al. (2023)24 noted that IDS provided a defensive barrier by effectively monitoring internet traffic to guard against attacks on the internet and connected devices. Although traditional rule-based methods (such as signature detection and anomaly detection) perform well in identifying known attacks, their effectiveness diminishes when confronted with continuously evolving novel attacks25.

Current state of network IDS

Existing machine learning-based methods for network intrusion detection have made significant progress, particularly in feature selection and the construction of classification models. For example, Bin (2023)26 proposed a feature selection method based on information gain to enhance the classification accuracy of intrusion detection. Alabsi et al. (2023)27 introduced a method that utilized a combination of two convolutional neural networks (CNN) to detect attacks on IoT networks. By calculating the information entropy of different features in network traffic, this approach filtered out the features most contributory to attack behavior recognition, thereby optimizing the model’s detection performance. However, such methods often exhibited limitations when addressing the complexity and diversity of attack types, as they frequently overlooked the interdependencies among features during the selection process, leading to insufficient model generalization.

Meanwhile, the introduction of deep learning methods has further advanced the development of IDS. For instance, IDS models based on Long Short-Term Memory (LSTM) networks are capable of handling temporal dependencies within network traffic, demonstrating strong performance in detecting persistent attacks28. Kasongo and Sun (2020)29 proposed a wireless IDS classifier based on deep LSTM architectures. Additionally, Recurrent Neural Network (RNN) has been widely applied in intrusion detection, enabling the identification of hidden patterns within complex network traffic data30. However, these models are often characterized by long training times and a susceptibility to overfitting when dealing with high-dimensional features.

To further enhance the performance of IDS, optimization algorithms have been increasingly utilized in the design of IDS in recent years. The WOA and the GWO are two commonly employed swarm intelligence optimization algorithms, which address optimization problems by simulating the hunting behaviors of whales and wolves in nature31. WOA excels in the global search phase, allowing for extensive exploration of the search space while avoiding local optima, whereas GWO demonstrates strong fine-tuning capabilities in local searches, facilitating deep exploration of specific areas32. In the context of network intrusion detection, optimization algorithms are primarily used for feature selection and model parameter optimization. For example, Tajari Siahmarzkooh and Alimardani (2021)33 utilized WOA for feature selection in network traffic, significantly enhancing the accuracy of intrusion detection. Kaliraj (2024)34 employed a WOA-based weighted extreme learning machine to identify network intrusions, randomly assigning weights to the network prior to training the neural network and obtaining output weights. Jyothi et al. (2024)35 introduced a novel optimization method termed the enhanced WOA for training neural networks.

However, current research often relies solely on individual optimization algorithms, making it challenging to achieve a good balance between global and local searches, which leaves room for improvement in the detection capabilities of intrusion systems. To further enhance the performance of IDS, some researchers have proposed hybrid optimization algorithm strategies that integrate the advantages of multiple optimization algorithms. Although these hybrid optimization algorithms have improved IDS accuracy to some extent, their complex algorithm design and high computational costs pose limitations for practical applications.

Hybrid optimization algorithms in intrusion detection

In recent years, hybrid optimization algorithms have become a prominent research focus in the field of intrusion detection. For instance, although Khaled et al. (2025) investigated groundwater resource prediction using random forest regression36, their proposed hybrid optimization method provides valuable insights applicable to this study. Similarly, while Elshabrawy (2025) focused on waste management technologies for energy production37, the optimization techniques employed for processing large-scale datasets offer practical guidance for IoT-based IDSs. In intrusion detection-specific research, Alzakari et al. (2024) introduced a deep learning model combining an enhanced CNN with a LSTM network for the early detection of potato diseases38. By integrating CNN’s powerful image feature extraction capabilities with LSTM’s ability to process temporal sequences, the model effectively identifies disease characteristics in leaf images, enabling early warning and precise disease control—thus providing a valuable tool for agricultural disease management.

El-Kenawy et al. (2024) applied machine learning and deep learning techniques to predict potato crop yields, supporting sustainable agricultural development39. Their study utilized algorithms such as Random Forest (RF), Support Vector Machine (SVM), and Deep Neural Networks (DNN) to analyze multi-source data, including soil conditions, climate variables, and planting density. These models significantly improved prediction accuracy and provided critical decision-making support, helping to optimize agricultural strategies and reduce resource waste.

Recent innovations in optimization algorithms have also introduced new ideas for improving intrusion detection. El-Kenawy et al. (2024) proposed the Greylag Goose Optimization (GGO) algorithm, inspired by the migratory and foraging behaviors of greylag geese40. This multi-stage search strategy offers biologically inspired solutions for designing more effective hybrid algorithms. Additionally, Hassib et al. (2019) developed a framework for mining imbalanced big data41, demonstrating significant improvements in algorithmic performance, which were highly relevant for enhancing IDSs.

Machine learning and deep learning technologies continue to push the boundaries of technical applications, offering innovative solutions to complex challenges. For example, Khafaga et al. (2022) significantly improved the prediction of metamaterial antenna bandwidth by applying adaptive optimization to LSTM networks42. By leveraging the LSTM model’s strengths in sequential and nonlinear data processing and fine-tuning it through adaptive optimization, the study overcame the limitations of conventional prediction methods in high-frequency electromagnetic contexts. This enhanced model not only improved prediction accuracy but also provided solid data support for antenna design and optimization, thereby accelerating technological development in the field.

Alzubi et al. (2025) proposed an IoT intrusion detection framework that integrated the Salp Swarm Algorithm (SSA) with an Artificial Neural Network (ANN), significantly improving the recognition efficiency of complex attack patterns by dynamically adjusting feature weights43. Their findings demonstrated that hybrid optimization strategies could effectively balance global exploration and local exploitation capabilities, providing theoretical support for the design of the WOA-GWO hybrid algorithm in this study. Furthermore, Alzubi et al. (2024) developed a hybrid deep learning framework for edge computing environments. By optimizing model compression and parallel computing techniques, they successfully reduced computational overhead on resource-constrained devices, validating the feasibility of lightweight models in IoT scenarios44. In response to increasingly complex cyberattacks in the IoT security domain, researchers continue to explore innovative detection methods. For instance, Alzubi (2023) introduced a MALO-based XGBoost model—optimized using the Moth-Flame Algorithm with Levy Flight and Opposition-Based Learning—for detecting Botnet attacks in IoT environments45. This model enhances XGBoost’s parameter tuning by simulating moth navigation behavior and incorporating Levy flight strategies, significantly improving both global search capability and local refinement. As a result, the model can swiftly and accurately detect Botnet attacks in complex and dynamic IoT settings. This study underscores the potential of optimization algorithms to boost the detection performance of machine learning models and offers a powerful tool for addressing emerging cyber threats in IoT contexts.

Similarly, Alweshah et al. (2023) employed the Emperor Penguin Colony Optimization (EPCO) algorithm to optimize feature selection, demonstrating the potential of swarm intelligence algorithms in reducing model dimensionality and accelerating detection speed46. Collectively, these studies highlight the growing trend of cross-domain applications of hybrid optimization algorithms as an effective approach to tackling complex security challenges. Alzubi et al. (2022) also proposed an optimized machine learning-based IDS specifically designed for fog and edge computing environments47. This system incorporates multiple machine learning algorithms, such as SVM and RF, combined with feature selection and parameter optimization techniques to enhance detection accuracy and efficiency. Moreover, the system addresses latency and bandwidth limitations common in fog and edge computing by performing local data preprocessing and feature extraction at edge nodes. This approach reduces data transmission volume and eases computational load on central servers, offering a valuable reference for achieving efficient intrusion detection in resource-constrained computing environments.

In the field of Industrial Wireless Sensor Networks (IWSNs), intrusion detection faces significant challenges due to complex network topologies and the limited computational capacity of individual nodes. Alzubi (2022) proposed a deep learning-based intrusion detection model incorporating Fréchet and Dirichlet distributions for use in IWSNs. By integrating probabilistic distribution models within the deep learning architecture, the study not only improved detection accuracy48 but also enhanced the model’s adaptability to various types of attacks, such as Denial-of-Service (DoS) and malware propagation. Additionally, the model leveraged transfer learning during training, utilizing pre-trained model knowledge to significantly reduce both training time and computational resource consumption. In a related advancement, Qiqieh et al. (2025) developed a swarm-optimized machine learning framework that employed adaptive feature sampling to address data imbalance issues49, offering methodological insights for multi-class attack detection. These recent developments help bridge the limitations of traditional methods in terms of real-time performance and generalizability, providing a technical foundation for the construction of a hybrid optimization model that balances efficiency and accuracy.

Integrating insights from the aforementioned studies reveals a clear trend in IDS optimization—from the application of standalone algorithms to the adoption of multi-strategy collaborative innovations. However, most existing hybrid methods are still primarily designed for traditional network environments. Given the dual challenges of resource constraints and attack diversity in IoT devices, there remains a pressing need for more efficient algorithm fusion mechanisms. The WOA-GWO framework proposed in this study addresses this study gap by implementing a dynamically coupled global–local search strategy, enabling precise detection of complex attacks under limited resources. This offers a novel technical pathway for enhancing IoT security systems.

Summary and research gaps

In summary, current network IDS face various challenges in the context of the IoT. Existing intrusion detection methods based on machine learning and deep learning encounter issues such as insufficient feature selection, limited model generalization capabilities, and prolonged computational times when addressing diverse attack behaviors. Although optimization algorithms have introduced new ideas for intrusion detection, single optimization methods still face limitations in balancing global and local searches, making them insufficient to fully cope with the complex attack scenarios prevalent in IoT environments. This study aims to fill this gap by combining the strengths of the WOA and the Grey Wolf Optimization (GWO) algorithm to propose a hybrid optimization algorithm, WOA-GWO, which seeks to enhance both the global and local search capabilities of IDS. During the global search phase, WOA extensively scans the feature space to avoid falling into local optima; during the local search phase, GWO refines the processing of specific feature areas by simulating the hunting behaviors of wolf packs. This study not only aims to improve the accuracy and timeliness of IoT IDS but also provides new insights into the application of optimization algorithms in the field of cybersecurity.

Research methodology

Dataset selection and preprocessing

In this study, the NSL-KDD dataset was selected as the experimental foundation for evaluating the performance of network IDS. The NSL-KDD dataset is a meticulously constructed dataset specifically designed for research in network intrusion detection, standing out for its integrity and diversity compared to other datasets. It contains various types of network traffic records, including normal traffic and multiple types of attack traffic, such as Denial of Service (DoS), Probe, Remote to Local (R2L), and Local to Remote (U2R) attacks. Each record in the NSL-KDD dataset is described by 41 features, which include basic connection features (such as source IP address, destination IP address, and protocol type), content features (such as packet length and transmission time), and time features (such as connection duration and login time). These features comprehensively reflect the state of network connections, providing rich information for subsequent feature selection and model training, ensuring that the model can capture critical network behavior patterns.

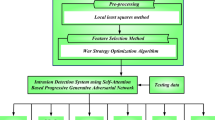

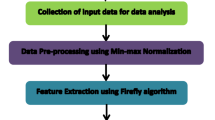

In the data preprocessing phase (as shown in Fig. 1), data cleaning is first performed to remove missing values and outliers. Methods for handling missing values include deleting samples containing missing values or filling in missing data with the mean or median. Outliers are detected and addressed using the interquartile range method to ensure the accuracy and integrity of the dataset. After data cleaning, standardization methods are applied to scale numerical features, resulting in a mean of 0 and a variance of 1. Standardization helps eliminate scale differences between features, thereby enhancing the training efficiency of the model and avoiding instability during training due to inconsistent feature scales.

Finally, to facilitate subsequent model training and performance evaluation, the dataset is divided into a training set and a testing set, with a ratio set at 80% for training and 20% for testing. The training set is used for model training and optimization, while the testing set is employed to assess the detection accuracy of the model. This division ensures the model’s generalization capability on unseen data, making the experimental results more reliable and valid.

Application of feature selection methods

In IoT intrusion detection scenarios, selecting appropriate feature selection methods requires balancing computational efficiency, compatibility with various feature types, and model interpretability. Information Gain (IG), an information-theoretic evaluation method, offers a key advantage by quantifying the nonlinear correlation between features and classification targets. Compared to the Chi-square Test, which is suited for assessing the independence between discrete features and target variables but performs poorly with continuous features (e.g., transmission time or packet length in network traffic), IG calculates mutual information without assuming any specific data distribution. This makes it particularly suitable for the complex, mixed-type feature sets often found in IoT traffic data. Embedded methods such as Recursive Feature Elimination (RFE) can iteratively select optimal feature subsets based on feedback from specific classification models (e.g., SVM). However, their computational complexity increases exponentially with the number of features. In resource-constrained IoT environments, frequent model retraining significantly increases latency and energy consumption. In contrast, IG ranks feature importance in a single pass, with a time complexity of \(O(n \cdot m)\) (where n is the number of samples and m is the number of features), making it well-suited for real-time detection tasks. Moreover, multi-class attack identification—such as DoS, Probe, Remote-to-Local (R2L), and User-to-Root (U2R)—requires feature selection methods that are sensitive to diverse class boundaries. IG measures the reduction in entropy for each class label and can naturally extend to multi-class problems. In contrast, methods like ANOVA or Least Absolute Shrinkage and Selection Operator (LASSO) often require adaptation for multi-class tasks. For instance, while LASSO performs feature sparsification via L1 regularization, its penalty coefficient must be dynamically tuned for different attack types, increasing the complexity of hyperparameter optimization.

Feature selection is a crucial step in enhancing the performance of IDS. In this study, the information gain ranking method is employed for feature selection to optimize the training efficiency and classification accuracy of the model. Information gain is a metric used to evaluate the contribution of features to classification tasks, helping to identify the features that provide the most information for distinguishing between different types of attacks. In the feature selection process, the information gain value for each feature is first calculated, and features are ranked according to this value. The detailed feature selection steps are illustrated in Fig. 2.

In Fig. 2, the original feature set represents all 41 features extracted from the NSL-KDD dataset. These features include basic connection features, content features, and time features of network traffic, which comprehensively reflect network behavior. At this stage, all features are in an unfiltered state and may contain redundant or irrelevant information. Information gain is calculated based on the original feature set. This process aims to assess the correlation between each feature and the target label (normal traffic or different types of attack traffic). Information gain measures the amount of information provided by a feature for classification decisions; the higher the information gain, the greater the feature’s contribution to the classification task. This step provides the basis for subsequent feature selection, identifying the most influential features for classification.

Next, a “feature ranking” process is conducted. In this phase, all features are ranked according to their information gain values, resulting in a new list. The purpose of ranking is to quantify the importance of the features, facilitating the selection of the most effective ones. In this process, the priority of features is clarified, allowing for clear visibility of which features play a critical role in classification. Once the ranking is completed, the “feature selection” phase begins. In this step, a threshold for information gain is set, and features with information gain exceeding this threshold are selected. At this point, features with lower information gain are filtered out, reducing the dimensionality of the model and avoiding the negative impact of feature redundancy on model performance. This ensures that the features input into the model are efficient and relevant, thereby improving the learning effectiveness and predictive capabilities of the subsequent model.

Finally, a “selected feature set” is formed; this set contains the features that have been filtered based on information gain and typically includes those with the highest discriminative power. This optimized feature set will be used in the subsequent model training process, providing reliable input for the model and enhancing the overall performance of the IDS.

Model construction and optimization

This study selects LSTM networks as the primary model for network intrusion detection. LSTM is a special type of RNN that effectively handles sequential data, particularly suited for tasks with long-distance dependencies. With its unique gating mechanisms, LSTM can retain long-term information and avoid the vanishing gradient problem, making it particularly effective in analyzing network traffic data. The network model is illustrated in Fig. 3:

The basic structure of LSTM is illustrated in Eqs. (1)–(6):

ft: The activation value of the forget gate, which determines the proportion of the previous state information retained in the current cell state. it: The activation value of the input gate, which controls the degree of influence of the current input information on the cell state. \(\tilde{C}_{t}\): The candidate value, representing the contribution of the current input to the cell state. Ct: The current cell state, which is influenced by both the previous state and the current input. ot: The activation value of the output gate, which determines the influence of the current cell state on the output. ht: The current hidden state, which serves as the model’s output. Wf, Wi, WC, WC: Weight matrices associated with the forget gate, input gate, candidate value, and output gate, respectively, controlling each gate’s impact on the information flow. bf, bC, b0: Bias terms that adjust each gate’s output to enhance the model’s flexibility. σ: The sigmoid activation function, which restricts output values between 0 and 1. tanh: The hyperbolic tangent activation function, which maps values to the range of −1 to 1, helping to maintain the balance of information.

To assess the model’s performance across different tasks, the following performance metrics were adopted: accuracy, precision, recall, F1-score, and other evaluation metrics for assessing the detection model’s performance. The calculation formulas for these metrics are provided in Eqs. (7) to (10):

TP refers to true positives, TN refers to true negatives, FP refers to false positives, and FN refers to false negatives.

To enhance the performance of the LSTM network model, a hybrid optimization algorithm (WOA-GWO) is constructed by combining the WOA and the GWO. This algorithm not only optimizes the hyperparameters of the LSTM model but also effectively improves the model’s training speed and detection accuracy. The specific steps of the optimization algorithm are illustrated in Fig. 4:

In Fig. 4, a certain number of candidate solutions are randomly generated, with each candidate solution representing a set of hyperparameter combinations. Each candidate solution is represented by a vector, where each element corresponds to a hyperparameter, such as learning rate, batch size, and the number of hidden layer units. The diversity of the population ensures that the algorithm can find better solutions within a broader search space.

Next, fitness evaluation is conducted. The fitness of each candidate solution is determined by training the LSTM model and calculating its accuracy on the validation set. The higher the accuracy, the greater the fitness value. The fitness function is given by Eq. (11):

X represents the candidate solution (hyperparameter combination), while Accuracy(X) represents the accuracy on the validation set.

Following this, both global and local searches are performed. The WOA primarily explores the hyperparameter space through global search, while GWO utilizes local search to refine high-quality candidate solutions. Specifically, WOA explores new candidate solutions by simulating the hunting behavior of whales. The swimming patterns of the whales in water represent extensive exploration of the hyperparameter space. Conversely, GWO achieves local search by mimicking the hunting behavior of wolf packs. Wolf packs typically cooperate around a target to determine the optimal hunting path. This mechanism enables the algorithm to quickly converge to local optima. In each iteration, the candidate solutions are improved through the update formulas of WOA and GWO.

Xt+1 represents the updated candidate solution position. Xt represents the updated candidate solution position. C represents the distance to the current best solution Xbest. A represents the control parameter that influences the movement range of the candidate solutions, calculated as shown in Eq. (14):

As the number of iterations increases, a gradually decreases to reduce the search space range step by step, enhancing the model’s local search capability. C is another control parameter used to adjust the search direction, as shown in Eq. (15):

The introduction of the random number r2 ensures the algorithm’s randomness and flexibility. To balance global exploration and local exploitation, an adaptive switching threshold mechanism was introduced. Specifically, when population diversity—measured by the variance in Euclidean distances among individuals—drops by more than 20% over five consecutive generations, the algorithm activates WOA’s forced random walk strategy. This resets the search space and boosts global exploration. Conversely, if diversity remains stable, the GWO module is reinforced to enhance local exploitation. This dynamic “exploration–exploitation–disturbance” cycle maintains solution diversity throughout convergence and systematically reduces the risk of becoming trapped in local optima.

During the algorithm’s iteration process, a threshold for fitness improvement is set (for instance, if the fitness does not improve over several consecutive generations) as the termination condition. When the termination condition is met, the current best candidate solution is returned as the optimal hyperparameter combination. Finally, the optimized hyperparameter combination is applied to the training of the LSTM model. The model is retrained using these optimal hyperparameters, achieving higher accuracy and faster convergence speed. Ultimately, the effectiveness of the optimization algorithm is validated by evaluating the model’s performance on the test set. This process is represented in pseudocode in Table 1.

Furthermore, the algorithm adopts dual stopping criteria: (1) a predefined maximum number of iterations (MaxIter) as a hard constraint, and (2) a dynamic convergence check based on fitness improvement. Specifically, training is terminated early if the relative improvement in the best fitness across 10 consecutive iterations falls below a threshold \((\Delta Fitness < 1 \times 10^{ - 5} )\), thereby preventing overfitting and reducing computational overhead.

Experimental design

For model ensemble, a soft voting strategy is employed, with weights dynamically assigned based on each base model’s F1-score on the validation set. The predictive probabilities of LSTM, RF, and SVM are combined with weights of 0.5, 0.3, and 0.2, respectively. Soft voting is favored over hard voting because it preserves the confidence levels of individual models regarding different attack classes. This is particularly beneficial in imbalanced datasets, where probability weighting can alleviate majority-class dominance. Additionally, the ensemble weights are optimized via grid search on the validation set, ensuring a balanced trade-off between precision and recall.

The experiments evaluated two tasks: binary classification and multi-class classification. The overall framework of the experiments includes several key steps: first, the construction of an LSTM-based network intrusion detection model, followed by the optimization of hyperparameters using the WOA-GWO optimization algorithm. The model is then trained using the training set, with real-time monitoring of its performance. Finally, the performance of the optimized model is evaluated on the test set and compared with traditional models. The dataset has been detailed previously. To ensure the effectiveness and stability of the model, the parameter settings listed in Table 2 were selected: For parameter settings, WOA’s shrinkage factor a linearly decreases from 2 to 0 to control the global search range. In GWO, the number of hunting wolves is fixed at three (Alpha, Beta, Delta), and the position update coefficient CCC varies within the range [0, 2]. For the LSTM model, key hyperparameters are configured as follows. The learning rate is sampled from a log-uniform distribution between 1e⁻4 and 1e⁻2. Batch size is chosen from the set {32, 64, 128} using grid search. The number of hidden units is adaptively adjusted based on the input feature dimensionality. All parameters are initialized using the Xavier normal distribution to help prevent the vanishing gradient problem.

The population size NNN for the WOA-GWO algorithm is set to 50 to balance search efficiency and computational cost. The maximum number of iterations is defined as MaxIter = 50, combined with a dynamic convergence criterion—early termination is triggered if the improvement in fitness (ΔFitness) remains below 1 × 10−5 for 10 consecutive generations—to prevent overfitting. In the WOA, the contraction factor a is initialized to 2 and linearly decreased according to the rule \(a = 2 - \frac{2 \cdot iteration}{{MaxIter}}\), thereby gradually narrowing the global search space. For the Grey Wolf Optimizer (GWO), the hierarchical weights are fixed in proportion \((\alpha :0.5,\beta :0.3,\delta :0.2)\) to ensure that the most competitive individuals dominate the direction of local exploitation. Inertia weights during position updates are adaptively adjusted using the random vectors A and C, where \(A = 2a \cdot r_{1} - a(r_{1} \in [ - 1,1])\), \(C = 2r_{2} (r_{2} \in [0,1])\), which facilitates a dynamic balance between exploration and exploitation phases.

The configuration of the experimental environment is shown in Table 3.

Results and discussion

Detection results for different classification tasks

The proposed WOA-GWO optimized LSTM model is compared with other models in both binary and multi-class classification tasks, including the LSTM-RNN model, a feature selection method based on information gain ranking, and multi-class SVM. The detection performance of binary classification tasks is compared as shown in Figs. 5:

In Fig. 5, the performance metrics of the proposed WOA-GWO optimized LSTM model in the binary classification task demonstrate its strong detection capabilities. With an accuracy of 96.6%, the model can almost flawlessly distinguish between normal traffic and attack traffic, highlighting its advantages in handling complex network environments. This result not only surpasses that of the LSTM-RNN (90.4%) and the information gain-based feature selection method (88.4%) but also significantly exceeds the performance of the multi-class SVM (88.5%).

Notably, the precision and recall of the WOA-GWO optimized LSTM model are 96.5% and 96.7%, respectively, indicating the model’s effectiveness in reducing false positives and false negatives. The WOA-GWO optimization algorithm enhances the model’s sensitivity and accuracy through effective feature selection and model training, enabling it to capture attack features more comprehensively. This improvement can be attributed to the combination of WOA’s global search capabilities and GWO’s local optimization strategies, allowing the model to explore the underlying patterns in the data more thoroughly during training. Compared to the LSTM-RNN model, the performance in network intrusion detection is significantly enhanced, suggesting that traditional RNN models have certain limitations when dealing with complex data, while the introduction of WOA-GWO optimization considerably strengthens the model’s capabilities.

Figure 6 is a comparison of detection performance for multi-classification tasks.

In Fig. 6, the WOA-GWO optimized LSTM model also performs exceptionally well in multi-class tasks, achieving an accuracy of 94.3%. Compared to the LSTM-RNN (89.1%) and the information gain-based feature selection method (87.2%), the WOA-GWO optimized LSTM model shows significant improvements across all metrics. This result indicates that the WOA-GWO optimization algorithm effectively reduces confusion that may arise during classification tasks when handling multiple types of attack behaviors, enhancing the model’s overall recognition ability.

The optimization of feature selection through the WOA-GWO algorithm strengthens the model’s adaptability to multi-class problems, enabling accurate differentiation between various attack types (such as Denial of Service (DoS), Probe, etc.). The improvements in precision and recall indicate that the model not only excels in identifying positive cases but also effectively reduces the risk of misclassification when dealing with negative cases. The increase in F1-score further demonstrates that the model achieves a good balance between precision and recall, which is crucial for detecting multi-class network attacks.

Overall, the excellent performance of the WOA-GWO optimized LSTM model in both binary and multi-class tasks underscores the importance of optimization algorithms in enhancing model performance. Its potential application in real-time network intrusion detection, particularly in responding to complex and rapidly changing network attack patterns, shows great promise. These results provide new insights and methods for future research in the field of IoT security protection, advancing the development of intelligent detection systems.

Comparison of computation time and efficiency

In terms of computation time and efficiency, this study evaluates the model’s efficiency using multiple metrics. Figure 7 shows the comparison of computational efficiency of binary classification tasks. Computational efficiency in multi-class tasks is shown in Fig. 8.

In Figs. 7 and 8, the WOA-GWO optimized LSTM model shows a computation time of 3.5 s for binary classification tasks, significantly outperforming the LSTM-RNN, which takes 5.2 s, and the multi-class SVM, which takes 6.0 s. This demonstrates the advantages of the WOA-GWO optimization algorithm in accelerating model training and inference. In terms of training time, the WOA-GWO + LSTM model takes 120 s, which is also less than that of other models, indicating that this algorithm can more quickly find the optimal solution, thereby reducing the total training time. Regarding memory usage, the WOA-GWO + LSTM’s 300 MB is relatively low, making it more efficient to run in resource-constrained environments and suitable for practical applications where memory consumption is a concern.

In terms of inference time, the WOA-GWO optimized LSTM model performs excellently, requiring only 20 ms, which is far lower than the inference times of other models. This high inference efficiency is crucial for real-time network IDS, ensuring that the system can respond quickly to potential threats. In multi-class tasks, the WOA-GWO optimized LSTM model also demonstrates outstanding performance, with a computation time of 4.8 s, a training time of 125 s, memory usage of 310 MB, and an inference time of 22 ms. Compared to other models, these metrics further confirm the efficiency of the WOA-GWO optimization algorithm in handling complex tasks. These results indicate that the WOA-GWO optimized LSTM model possesses advantages in both accuracy and efficiency, making it a powerful tool in the field of network security defense, capable of playing a significant role in addressing complex and rapidly changing network attacks.

Stability evaluation

To evaluate the model’s stability, experiments were conducted using different random seeds, and the corresponding performance metrics were recorded. Results are presented in Fig. 9 (Random Seed 1), Table 4 (Random Seed 2), and Table 5 (Random Seed 3).

The performance metrics of the WOA-GWO optimized LSTM model show minimal fluctuations across different random seeds, maintaining an accuracy of over 96.5%. This indicates its strong robustness in the face of dataset variations. In contrast, the performance of other models is relatively unstable, with their accuracies exhibiting significant fluctuations across the different random seeds, demonstrating their sensitivity to varying data conditions.

Table 6 presents a comparison of performance metrics across models. The WOA-GWO optimized model achieved an accuracy of 96.6% ± 0.4% in binary classification tasks (95% confidence interval: [96.2%, 97.0%]), significantly outperforming LSTM-RNN (90.4% ± 0.7%) and SVM (88.5% ± 1.1%). This suggests a narrower performance fluctuation and greater stability. In the multiclass classification task, the WOA-GWO model achieved an F1-score of 94.3% ± 0.5%. In contrast, the confidence interval widths of the baseline models generally exceeded 1.2%. This further confirms the robustness advantage provided by the hybrid optimization algorithm.

The results of statistical significance tests are presented in Table 7. Based on paired t-tests, the WOA-GWO model consistently demonstrated statistically significant superiority across all comparison experiments. In the binary classification task, the accuracy difference between WOA-GWO and LSTM-RNN produced a t-value of 18.7 (p < 0.001). This indicates that the observed performance gap is highly unlikely to be due to random variation. In the multiclass scenario, the F1-score comparison between WOA-GWO and SVM resulted in a t-value of 15.2 (p < 0.001), further validating the robustness of the hybrid algorithm in detecting diverse attack patterns. Even when compared to the InfoGain-based feature selection method, the binary classification t-value reached 12.4 (p < 0.001), highlighting the significant advantage achieved through the integrated use of feature selection and optimization.

ROC curve and AUC analysis

The AUC values for the binary classification task are shown in Fig. 10. As depicted, the WOA-GWO model achieved an AUC of 0.983, with a standard deviation of only 0.003, significantly surpassing that of LSTM-RNN (0.942) and SVM (0.921). The ROC curve of WOA-GWO approaches that of a perfect classifier (AUC = 1.0), indicating stable classification performance across different thresholds. Notably, the model maintains a true positive rate (TPR) above 98% even in the low false positive rate (FPR < 5%) region.

Figure 11 further highlights the models’ detection capabilities across different attack types in the multiclass classification task. WOA-GWO achieved an AUC of 0.978 for DoS attacks, representing a 3.5% improvement over LSTM-RNN (0.943). For the rare U2R attack class, the AUC reached 0.907, outperforming other models by 6.2% to 8.1%. Notably, both the InfoGain method and SVM underperformed on U2R detection (AUC < 0.83), underscoring the hybrid algorithm’s advantage in capturing rare attack features. This result is consistent with the low false positive rate observed in the U2R section of the confusion matrix, confirming that WOA-GWO effectively mitigates class imbalance through dynamic feature selection and parameter tuning.

Confusion matrix analysis

The confusion matrix results for the binary classification task are presented in Table 8. The WOA-GWO model exhibited a false positive rate (FP) of only 1.2% and a false negative rate (FN) of 0.8%, which are significantly lower than those of LSTM-RNN (3.5% and 4.1%, respectively). These results highlight the notable advantage of the hybrid optimization algorithm in reducing both false alarms and missed detections. This is especially critical in high-security IoT scenarios, where low false positive rates help prevent legitimate services from being wrongly blocked.

By attack category, the classification accuracies of the multiclass confusion matrix are shown in Table 9. The results further illustrate that the WOA-GWO model achieved an 89.7% detection accuracy for the rare U2R attack type, representing a 13.3% improvement over LSTM-RNN (76.4%). It also maintained superior performance in detecting common attack types such as DoS, achieving an accuracy of 98.5%. Notably, traditional methods (e.g., InfoGain feature selection and SVM) performed the worst on U2R detection (accuracy < 74%), highlighting the capability of the WOA-GWO hybrid algorithm to capture low-frequency attack patterns through dynamic feature optimization.

Discussion

The performance improvement achieved by the proposed WOA-GWO hybrid optimization framework can be attributed to the synergistic design of swarm intelligence algorithms. As noted by Badman et al. (2020), a key advantage of swarm intelligence lies in its ability to balance multi-scale search capabilities through the simulation of biological behaviors50. In this study, the global spiral search mechanism of the WOA was combined with the hierarchical hunting strategy of the GWO algorithm. This hybrid design effectively mitigates common limitations associated with single algorithms, such as susceptibility to local optima or insufficient convergence speed. The dynamic coupling strategy aligns with the “exploration–exploitation adaptive switching” theory proposed by Blanco et al. (2024)51, enabling efficient localization of optimal feature subsets and model parameter combinations in complex solution spaces, thereby enhancing the precision of attack feature recognition.

However, in real-world IoT environments, device heterogeneity may pose deployment challenges. For instance, variations in computational capabilities across edge nodes could lead to performance degradation of the optimized lightweight model under high-latency or low-bandwidth conditions. This observation is consistent with Ramamoorthi’s (2023) findings on “dynamic adaptation in edge intelligence”52. Additionally, the uneven spatiotemporal distribution of attack traffic—such as the coexistence of bursty DDoS attacks and low-frequency stealth attacks—may impact the stability of feature extraction. To address these dynamic threat environments, online incremental learning mechanisms should be introduced, such as the sliding window strategy proposed by Gou et al. (2022) for processing dynamic data streams53.

Regarding the contribution of ANN within the ensemble framework, their relatively limited performance may be related to feature space adaptability. In ensemble learning, RF, due to their parallel structure and randomized feature selection, are better suited to capturing nonlinear patterns in high-dimensional data. In contrast, ANNs are more sensitive to input feature scaling and reliant on large volumes of training data, which may hinder their generalization capabilities for certain attack types. This phenomenon aligns with the analysis by Allen et al. (2022) on “complementarity in heterogeneous models.” Their findings suggest that when base models differ significantly in how they extract features, ensemble weights should be dynamically adjusted to balance each model’s contribution54.

To prevent overfitting, this study employs a dual mechanism to ensure model robustness. First, training is halted early based on the Early Stopping strategy, whereby training is terminated when the validation loss fails to improve over several consecutive epochs. Second, both Dropout and L2 regularization are applied to constrain neural network weights, reducing sensitivity to noisy features. These techniques parallel the “regularization–pruning joint optimization” framework introduced by Li et al. (2024)55. In particular, when integrating high-weight RF, constraining the depth of decision trees and the number of leaf nodes effectively prevents overfitting to the training data.

In terms of execution time and real-time performance, the WOA-GWO hybrid optimization increases training overhead during the initial phase. However, through feature dimensionality reduction and parameter consolidation, the time complexity during inference is significantly reduced. For example, on resource-constrained devices, the optimized model can achieve millisecond-level response times by leveraging precomputed feature mapping tables and parameter caching techniques. This approach aligns with the “offline optimization–lightweight online inference” paradigm advocated by Gupta et al. (2020)56. Nonetheless, for ultra-large-scale IoT networks, further exploration of distributed optimization frameworks is needed to balance global model consistency with localized adaptation requirements. In addition, the model’s varying detection performance across attack types can be attributed to differences in feature separability and the inherent nature of the attack behaviors. For instance, DoS attacks typically involve abrupt traffic rate changes and protocol anomalies, making their statistical features easier to capture. In contrast, U2R attacks exploit privilege escalation vulnerabilities and exhibit stealthy behaviors, resulting in feature distributions that closely resemble those of normal operations. This challenge may be addressed using the multimodal feature fusion approach proposed by Huddar et al. (2020)57. Their method incorporates contextual semantic analysis and protocol semantic embedding to strengthen the model’s ability to represent complex, low-frequency attacks at the semantic level. As a result, it helps reduce detection blind spots. Future study will integrate knowledge graph technologies to construct an inference network linking attack behaviors with device states, aiming to improve the comprehensiveness of threat perception in heterogeneous scenarios.

At the methodological level, compared with recent hybrid frameworks that combine Particle Swarm Optimization (PSO) with machine learning models, the proposed WOA-GWO framework introduces two key innovations. First, in terms of the search mechanism, PSO relies on a linear combination of global and individual historical bests, which can lead to premature convergence to local optima. In contrast, WOA-GWO synergistically combines the spiral bubble-net feeding strategy of whales (global search) with the hierarchical hunting behavior of grey wolves (localized triangular encirclement), achieving multi-scale coverage of the solution space. Second, regarding the integration strategy, most existing studies use PSO to optimize only the parameters of a single model. In contrast, WOA-GWO jointly optimizes both feature selection and LSTM hyperparameters, thereby enhancing model robustness through a two-stage optimization coupling process. For example, the PSO-enhanced deep autoencoder reinforcement learning model proposed by Roy et al. (2025)58 focuses solely on optimizing neural network weights and does not incorporate dynamic pruning of the feature space. In comparison, the method presented in this study achieves adaptive feature subset selection through the combined use of information gain and optimization algorithms.

At the data level, although the Network Security Laboratory–Knowledge Discovery in Databases (NSL-KDD) dataset is widely used in intrusion detection research, its attack types are primarily derived from the KDD Cup 1999 dataset, and it lacks coverage of emerging advanced persistent threats (APTs) and zero-day attacks. In contrast, more recent benchmark datasets such as Canadian Institute for Cybersecurity Intrusion Detection Dataset 2017 (CICIDS2017) include not only traditional attacks like DDoS and brute force, but also modern attack patterns such as web-based intrusions and botnet command-and-control activities. To evaluate and enhance the generalization capability of the proposed model, future research will adopt a feature abstraction strategy during the design phase. This involves standardizing raw traffic data into unified vectors composed of temporal features, protocol characteristics, and payload statistics. By decoupling attack patterns from specific protocol implementations through feature engineering, the WOA-GWO hybrid optimization framework can adaptively extract generalized attack features across datasets, thereby offering strong potential for transferability to modern datasets such as CICIDS2017.

Conclusion

This study presents a hybrid intelligent framework that combines the WOA with the GWO algorithm to significantly improve network intrusion detection in IoT environments. By integrating WOA’s global spiral search with GWO’s local social hierarchy hunting strategy, the model effectively balances complex attack feature extraction with adaptability to resource-constrained settings. Experimental results show that the framework outperforms traditional methods in both binary and multi-class attack detection, notably enhancing detection of low-frequency threats such as U2R attacks. Nonetheless, some limitations persist. First, the experiments were conducted using the widely adopted NSL-KDD dataset, which lacks coverage of emerging threats like zero-day attacks and does not capture traffic heterogeneity or device dynamics characteristic of real-world IoT environments. Second, the current optimization focuses mainly on offline training and has not been validated for latency sensitivity or computational load balancing in real-time traffic processing.

Future research will address three key areas. First, the model’s generalizability will be tested on modern datasets, such as the CICIDS2017, to evaluate its adaptability to contemporary attack patterns. Second, a lightweight incremental learning mechanism will be developed to leverage the resource constraints of edge computing nodes, allowing dynamic online updates of model parameters to handle the spatiotemporal evolution of attacks in large-scale IoT networks. Finally, distributed intrusion detection under a federated learning framework will be explored, combining local model optimization on edge devices with global knowledge aggregation to enhance system resilience while preserving data privacy. These advances aim to transition the hybrid optimization algorithm from theoretical proof-of-concept to practical deployment, supporting the development of adaptive, low-latency IoT security systems.

Data availability

The datasets used and/or analysed during the current study available from the corresponding author on reasonable request.

References

Mazhar, T. et al. Analysis of IoT security challenges and its solutions using artificial intelligence. Brain Sci. 13(4), 683 (2023).

Kumar, M. et al. Healthcare Internet of Things (H-IoT): Current trends, future prospects, applications, challenges, and security issues. Electronics 12(9), 2050 (2023).

Yaacoub, J. P. A. et al. Ethical hacking for IoT: Security issues, challenges, solutions and recommendations. Internet Things Cyber-Phys. Syst. 3, 280–308 (2023).

Kamalov, F. et al. Internet of medical things privacy and security: Challenges, solutions, and future trends from a new perspective. Sustainability 15(4), 3317 (2023).

Telo, J. Smart city security threats and countermeasures in the context of emerging technologies. Int. J. Intell. Autom. Comput. 6(1), 31–45 (2023).

Abed, A. K. & Anupam, A. Review of security issues in Internet of Things and artificial intelligence-driven solutions. Secur. Privacy 6(3), e285 (2023).

Sharma, R. & Arya, R. Security threats and measures in the Internet of Things for smart city infrastructure: A state of art. Trans. Emerg. Telecommun. Technol. 34(11), e4571 (2023).

Cvitić, I. et al. An overview of smart home iot trends and related cybersecurity challenges. Mobile Netw. Appl. 28(4), 1334–1348 (2023).

Paul, M. et al. Digitization of healthcare sector: A study on privacy and security concerns. ICT Express 9(4), 571–588 (2023).

Ahad, A. & Tahir, M. Perspective—6G and IoT for intelligent healthcare: Challenges and future research directions. ECS Sens. Plus 2(1), 011601 (2023).

Heidari, A. & Jabraeil Jamali, M. A. Internet of Things intrusion detection systems: A comprehensive review and future directions. Clust. Comput. 26(6), 3753–3780 (2023).

Thakkar, A. & Lohiya, R. A survey on intrusion detection system: Feature selection, model, performance measures, application perspective, challenges, and future research directions. Artif. Intell. Rev. 55(1), 453–563 (2022).

Hnamte, V. & Hussain, J. DCNNBiLSTM: An efficient hybrid deep learning-based intrusion detection system. Telemat. Inform. Rep. 10, 100053 (2023).

Awajan, A. A novel deep learning-based intrusion detection system for IOT networks. Computers 12(2), 34 (2023).

Diro, A. A. & Chilamkurti, N. Distributed attack detection scheme using deep learning approach for Internet of Things. Futur. Gener. Comput. Syst. 82, 761–768 (2018).

Al-Shareeda, M. A., Manickam, S. & Saare, M. A. DDoS attacks detection using machine learning and deep learning techniques: Analysis and comparison. Bull. Electr. Eng. Inform. 12(2), 930–939 (2023).

Zhang, J. et al. Deep learning based attack detection for cyber-physical system cybersecurity: A survey. IEEE/CAA J. Autom. Sin. 9(3), 377–391 (2021).

Saheed, Y. K. et al. A machine learning-based intrusion detection for detecting internet of things network attacks. Alex. Eng. J. 61(12), 9395–9409 (2022).

Butun, I., Österberg, P. & Song, H. Security of the Internet of Things: Vulnerabilities, attacks, and countermeasures. IEEE Commun. Surv. Tutor. 22(1), 616–644 (2019).

Shafiq, M. et al. The rise of “Internet of Things”: Review and open research issues related to detection and prevention of IoT-based security attacks. Wirel. Commun. Mobile Comput. 2022(1), 8669348 (2022).

Parra, G. D. L. T. et al. Detecting Internet of Things attacks using distributed deep learning. J. Netw. Comput. Appl. 163, 102662 (2020).

Mishra, N. & Pandya, S. Internet of things applications, security challenges, attacks, intrusion detection, and future visions: A systematic review. IEEE Access 9, 59353–59377 (2021).

Alabsi, B. A., Anbar, M. & Rihan, S. D. A. Conditional tabular generative adversarial based intrusion detection system for detecting ddos and dos attacks on the internet of things networks. Sensors 23(12), 5644 (2023).

Santhosh Kumar, S. V. N., Selvi, M. & Kannan, A. A comprehensive survey on machine learning-based intrusion detection systems for secure communication in Internet of Things. Comput. Intell. Neurosci. 2023(1), 8981988 (2023).

Rashid, M. M. et al. A federated learning-based approach for improving intrusion detection in industrial internet of things networks. Network 3(1), 158–179 (2023).

Bin, S. Social network emotional marketing influence model of consumers’ purchase behavior. Sustainability 15(6), 5001 (2023).

Alabsi, B. A., Anbar, M. & Rihan, S. D. A. CNN-CNN: Dual convolutional neural network approach for feature selection and attack detection on internet of things networks. Sensors 23(14), 6507 (2023).

Laghrissi, F. E. et al. Intrusion detection systems using long short-term memory (LSTM). J. Big Data 8(1), 65 (2021).

Kasongo, S. M. & Sun, Y. A deep long short-term memory based classifier for wireless intrusion detection system. ICT Express 6(2), 98–103 (2020).

Khan, M. A. HCRNNIDS: Hybrid convolutional recurrent neural network-based network intrusion detection system. Processes 9(5), 834 (2021).

Vijayanand, R. & Devaraj, D. A novel feature selection method using whale optimization algorithm and genetic operators for intrusion detection system in wireless mesh network. IEEE Access 8, 56847–56854 (2020).

Pingale, S. V. & Sutar, S. R. Remora whale optimization-based hybrid deep learning for network intrusion detection using CNN features. Expert Syst. Appl. 210, 118476 (2022).

Tajari Siahmarzkooh, A. & Alimardani, M. A novel anomaly-based intrusion detection system using whale optimization algorithm WOA-based intrusion detection system. Int. J. Web Res. 4(2), 8–15 (2021).

Kaliraj, P. Intrusion detection using whale optimization based weighted extreme learning machine in applied nonlinear analysis. Commun. Appl. Nonlinear Anal. 31(2s), 186–203 (2024).

Jyothi, K. K. et al. A novel optimized neural network model for cyber attack detection using enhanced whale optimization algorithm. Sci. Rep. 14(1), 5590 (2024).

Khaled, K. & Singla, M. K. Predictive analysis of groundwater resources using random forest regression. J. Artif. Intell. Metaheuristics 9, 11–19 (2025).

Elshabrawy, M. A review on waste management techniques for sustainable energy production. Metaheuristic Optim. Rev. 3, 47–58 (2025).

Alzakari, S. A. et al. Early detection of Potato Disease using an enhanced convolutional neural network-long short-term memory Deep Learning Model. Potato Res. 2024, 1–19 (2024).

El-Kenawy, E. S. M. et al. Predicting potato crop yield with machine learning and deep learning for sustainable agriculture. Potato Res. 2024, 1–34 (2024).

El-Kenawy, E. S. M. et al. Greylag goose optimization: Nature-inspired optimization algorithm. Expert Syst. Appl. 238, 122147 (2024).

Hassib, E. M. et al. An imbalanced big data mining framework for improving optimization algorithms performance. IEEE Access 7, 170774–170795 (2019).

Khafaga, D. S. et al. Improved prediction of metamaterial antenna bandwidth using adaptive optimization of LSTM. Comput. Mater. Continua 73(1), 865–881 (2022).

Alzubi, O. A. et al. An IoT intrusion detection approach based on salp swarm and artificial neural network. Int. J. Netw. Manag. 35(1), e2296 (2025).

Alzubi, J. A. et al. A blended deep learning intrusion detection framework for consumable edge-centric iomt industry. IEEE Trans. Consum. Electron. 70(1), 2049–2057 (2024).

Alzubi, O. A. BotNet attack detection using MALO-based XGBoost model in IoT environment. In International Conference on Computing and Communication Networks, 679–690 (Springer Nature Singapore, Singapore, 2023).

Alweshah, M. et al. Intrusion detection for the internet of things (IoT) based on the emperor penguin colony optimization algorithm. J. Ambient. Intell. Humaniz. Comput. 14(5), 6349–6366 (2023).

Alzubi, O. A. et al. Optimized machine learning-based intrusion detection system for fog and edge computing environment. Electronics 11(19), 3007 (2022).

Alzubi, O. A. A deep learning-based frechet and dirichlet model for intrusion detection in IWSN. J. Intell. Fuzzy Syst. 42(2), 873–883 (2022).

Qiqieh, I. et al. An intelligent cyber threat detection: A swarm-optimized machine learning approach. Alex. Eng. J. 115, 553–563 (2025).

Badman, R. P., Hills, T. T. & Akaishi, R. Multiscale computation and dynamic attention in biological and artificial intelligence. Brain Sci. 10(6), 396 (2020).

Blanco, N. J. & Sloutsky, V. M. Exploration, exploitation, and development: Developmental shifts in decision-making. Child Dev. 95(4), 1287–1298 (2024).

Ramamoorthi, V. Real-time adaptive orchestration of AI microservices in dynamic edge computing. J. Adv. Comput. Syst. 3(3), 1–9 (2023).

Gou, X. et al. A sketch framework for approximate data stream processing in sliding windows. IEEE Trans. Knowl. Data Eng. 35(5), 4411–4424 (2022).

Allen, R. & Rehbeck, J. Latent complementarity in bundles models. J. Econom. 228(2), 322–341 (2022).

Li, X. et al. An adaptive joint optimization framework for pruning and quantization. Int. J. Mach. Learn. Cybern. 15(11), 5199–5215 (2024).

Gupta, B. B. & Quamara, M. An overview of Internet of Things (IoT): Architectural aspects, challenges, and protocols. Concurr. Comput. Pract. Exp. 32(21), e4946 (2020).

Huddar, M. G., Sannakki, S. S. & Rajpurohit, V. S. Multi-level feature optimization and multimodal contextual fusion for sentiment analysis and emotion classification. Comput. Intell. 36(2), 861–881 (2020).

Roy, D. K. & Kalita, H. K. Enhanced deep autoencoder-based reinforcement learning model with improved flamingo search policy selection for attack classification. J. Cybersecur. Privacy 5(1), 3 (2025).

Funding

The study was supported by “Shanxi Provincial Basic Research Program of Youth Science Research Project, China (Grant No. 20210302124522)”;“Shanxi Provincial Science and technology innovation project, China (Grant No. 2020L0705)”;“Key research and development project of Luliang City, China (Grant No. 2022NYGG28)”.

Author information

Authors and Affiliations

Contributions

As the sole author, I was responsible for all aspects of the research, including research design, data collection and analysis, result interpretation, as well as the writing and revision of the paper. I confirm my full responsibility for the content of the manuscript and academic ethics.

Corresponding author

Ethics declarations

Competing interests

The author declares no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Shan, L. (IoT) Network intrusion detection system using optimization algorithms. Sci Rep 15, 21706 (2025). https://doi.org/10.1038/s41598-025-04638-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-04638-5

Keywords

This article is cited by

-

Design of an iterative method for adaptive federated intrusion detection for energy-constrained edge-centric 6G IoT cyber-physical systems

Scientific Reports (2025)

-

IoHT attack detection using transformer-aware feature selection with CNN-BiLSTM optimized by hybrid WOA–GWO

Discover Artificial Intelligence (2025)