Abstract

Ovarian cancer is a malignancy that develops from ovarian cells and is frequently characterized by aberrant cell proliferation that leads to tumor formation within the ovaries. Its high death rate and often delayed discovery make it a serious healthcare concern. With 314,000 new cases and 207,000 deaths worldwide each year, ovarian cancer poses a serious threat to public health, which makes fast and precise detection and classification techniques essential. This work discusses the importance of ovarian cancer diagnosis and presents a new model, OvCan-FIND, for ovarian cancer classification, together with a comparative analysis against other state-of-the-art models. Using an ovarian cancer image dataset with five classes (Clear Cell, Endometri, Mucinous, Serous, and Non-Cancerous), it compares the proposed OvCan-FIND model with a wide range of CNN-based architectures: Inception V3, several EfficientNet variants, ResNet152V2, MobileNet, MobileNetV2, VGG16, VGG19, and Xception. The study examines recent ovarian cancer classification algorithms in this context with the aim of improving prognosis and diagnostic accuracy; the proposed OvCan-FIND model outperforms the base models with an accuracy of 99.74%. The model offers significant prospects for improving the early identification and diagnosis of ovarian cancer, which could ultimately improve patient outcomes.

Introduction

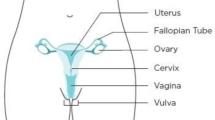

Ovarian cancer (OC), the eighth most common cancer in women globally, poses a significant threat to women’s health because it is difficult to detect early and is therefore frequently diagnosed at a late stage with a poor prognosis1. OC consists primarily of carcinomas, that is, epithelial-derived tumors, and has a diverse histological landscape that includes mucinous, clear cell, endometrioid, high-grade serous, and low-grade serous subtypes, as well as less common non-epithelial ovarian malignancies such as mesenchymal, germ cell, and sex cord-stromal tumors. Notably, high-grade serous carcinoma accounts for 70% of all OC cases and is the most common subtype2. In the early stages, OC is asymptomatic2. This, combined with the absence of effective screening techniques, presents a significant public health concern. OC is notoriously difficult to recognize and diagnose because its ambiguous symptoms resemble those of menopause. By the time symptoms appear, the disease has often progressed significantly, with primary malignant tumors extending throughout the abdomen and covering the ovaries, Fallopian tubes, and peritoneum (FIGO4 Stage 3)1. This delayed diagnosis contributes to an alarming mortality rate, with 314,000 new cases detected each year and 207,000 fatalities worldwide1. Several histological subtypes of the illness exist, the most prevalent of which are epithelial ovarian carcinomas2. Because these subtypes have distinct morphological and prognostic features, precise classification is crucial for developing individualized and more effective treatment plans2.
OC diagnosis is still best achieved by histopathological evaluation, which involves microscopic inspection of tissue specimens. However, because the malignancy is often detected at an advanced stage and is linked to a poor prognosis and high fatality rates, developing strong diagnostic tools is essential for early diagnosis and intervention. Automated classification techniques are needed to improve efficiency and accuracy because manual histopathological evaluation is labor-intensive, time-consuming, and susceptible to inter-observer variability. Such systems can speed up the diagnostic procedure, help identify OC early, and allow for individualized treatment plans based on histological subtypes. The global scarcity of pathologists further highlights the need for computer-aided diagnosis methods that help interpret histopathological images and stratify OC cases by subtype and stage. These tools can also enable medical professionals to provide prompt and accurate diagnoses in resource-limited settings, which may eventually improve patient care and survival rates. Advances in artificial intelligence and digital pathology have transformed the study of digitized whole slide images (WSIs) of ovarian tissue samples through automated classification approaches, notably those based on convolutional neural networks (CNNs)3. These algorithms offer an appealing alternative to manual histological examination and have demonstrated excellent accuracy in differentiating between OC subtypes. CNN-based models classify samples by extracting complex features from histopathology images, which speeds up the diagnostic process and supports early detection. Furthermore, these AI-driven systems have considerable potential for guiding personalized treatment regimens based on histological subtypes, thereby improving patient care and outcomes3.
Thus, further research is needed to improve outcomes and patient treatment.

Several recent studies have significantly advanced the field of OC prediction and treatment through the application of artificial intelligence methodologies. One such study4 conducted a comprehensive examination of OC prediction, focusing on the decision tree algorithm and machine learning techniques. The investigation introduced the MIDR (Machine Intelligent Doctor) model, designed to enhance accessibility to OC prediction, particularly for individuals with limited access to medical resources. Encouraging findings revealed the significant accuracy of the AI-based approach in OC classification, surpassing conventional prediction methods. Additionally, the study underscored the importance of scrutinizing factors such as dataset quality and generalizability across diverse populations to ensure the model’s reliability. Another pioneering study2,5 introduced a methodology for evaluating intratumoral cellularity in high-grade ovarian epithelial cancer, leveraging quantitative features derived from medical images and AI algorithms. The proposed technique achieved impressive accuracy in personalized OC treatment, emphasizing the potential of AI-driven methodologies in improving patient care. Furthermore, a study5 introduced a deep-learning AI framework utilizing histopathology images to anticipate OC responsiveness to platinum-based chemotherapy, demonstrating the model’s efficacy in accurately predicting treatment outcomes. Additionally, another study6 utilized a deep learning ensemble framework to predict treatment response in OC patients, achieving near-perfect prediction of patient response to bevacizumab treatment. Lastly, a study2 employed a deep learning approach to derive a signature from preoperative MRI data, facilitating the prediction of recurrence risk among patients diagnosed with high-grade serous OC. 
These studies collectively highlight the transformative potential of AI-driven strategies in advancing predictive healthcare solutions for OC diagnosis and treatment planning. However, the real-world applicability of current OC classification algorithms is hampered by limited generalizability, overfitting, a lack of clinical data integration, and dataset restrictions. Because of small and unbalanced datasets, many existing deep learning models perform inconsistently, which lowers the accuracy and reliability of classification. Our proposed OvCan-FIND model addresses these issues with a task-specific deep learning framework intended to reduce overfitting and improve robustness. By streamlining the feature extraction and classification procedures, our methodology aims to improve safety and accuracy in the classification of histopathology images. Furthermore, thorough tests against state-of-the-art models across a variety of classification metrics confirm its strong performance, making it a more dependable and effective method for ovarian cancer screening. Despite the large body of existing research, there is still room for more accurate diagnosis. The main purpose of this paper is therefore to help close these gaps and achieve more accurate OC classification.

The development and application of robust classification algorithms offer great potential for transforming OC diagnosis and treatment as research in this area progresses. The aim is to support personalized patient care worldwide by improving the identification of OC through new insights obtained from this collaborative, multidisciplinary research initiative. The overall contributions of this work are:

- We proposed a task-specific advanced deep learning model for Ovarian Cancer Classification.
- Our strong and robust OvCan-FIND model is built for image classification by eliminating overfitting and performance issues.
- Enhancing safety and accuracy for Classification tasks on Histopathological images deploying the proposed model.
- We evaluated our proposed model with comparison of various state-of-the-art models and multiple classification task performance metrics.

Following the Introduction, the paper is structured as follows. Section 2 presents the Literature Review, and Section 3 covers the Methodology. Section 4 presents the Results Analysis, followed by the Discussion in Section 5. Finally, Section 6 presents the Conclusion.

Literature review

Numerous latest studies have made significant strides in leveraging advanced technologies for the early detection, classification, diagnosis, and prognosis of OC. One study6,7 introduces a novel convolutional neural network algorithm tailored for predicting and diagnosing OC with impressive accuracy for classification. Trained on a histopathological image dataset, the CNN achieved a remarkable 94% accuracy rate, accurately identifying 95.12% of cancerous cases and 93.02% of healthy cells. However, limitations such as reliance on a public TCGA dataset, retrospective design, and absence of clinical data underscore the need for future research collaborations to validate the model on diverse patient populations and incorporate comprehensive clinical datasets to enhance its robustness and real-world applicability. Another study8 delves into prognostic indicators within pathological images of OC, utilizing a deep survival network. Leveraging the TCGA-OV dataset, the study employs meticulous preprocessing and segmentation techniques to predict hazard scores, aiding in stratifying cases into high and low-risk groups. Despite achieving a mean C-index value of 0.5789, indicating moderate prediction ability, the study highlights the potential of deep learning frameworks to offer clinically relevant prognostic insights in OC. Additionally, a paper9 presents a novel Deep Semi-Supervised Generative Learning with Enhanced U-Net and fused Deep Convolutional Neural Network (DSSGL-EUNet-DCNN) model for segmenting and classifying OC from CT scans. Experimental results demonstrate that the proposed model outperforms traditional approaches, reducing training errors and time costs while achieving higher accuracy in segmentation and classification tasks. 
Furthermore, another innovative technique10 introduces optical photothermal infrared (O-PTIR) imaging coupled with deep learning to enhance spatial resolution and enable sub-cellular spectroscopic examination of tissue for OC diagnosis and classification. Leveraging a dataset comprising patient samples, the approach achieves a high classification accuracy for ovarian cell subtypes and proposes quantitative biomarkers for early cancer detection and classification. Lastly, a comprehensive examination of several machine learning algorithms used in ovarian tumor classification11 highlights the efficiency of models like the C5.0 in predicting OC recurrence and recognizes CNNs as particularly effective for tumor diagnosis. While machine learning techniques show promise in improving accuracy compared to standard statistical methods, the study acknowledges limitations in discussing algorithm shortcomings and potential data quality difficulties, as well as constraints in ultrasound imaging, such as examiner-dependency and outcome variability. Furthermore, the study12 achieves a 100% accuracy improvement in cancer subtype classification by employing Support Vector Machine (SVM) and Cat Swarm Optimization (CSO) for feature selection. High processing requirements, the possibility of overfitting, and the requirement for more extensive validation on a variety of datasets are some of the drawbacks. Wavelet scattering transform (WST) and YOLO-based deep learning approaches have been used in recent studies to construct automated computer vision models for diagnosing dengue from peripheral blood smear (PBS) pictures13. Notwithstanding issues with dataset specificity, the WST approach and YOLOv8 models demonstrated the potential for early dengue detection and their applicability to other mosquito-borne diseases, achieving a classification accuracy of 98.7% with support vector machines and a mean accuracy of 99.3%, respectively13. 
In a similar vein, another study presented a deep learning approach for the detection of Alzheimer’s disease (AD) using T1-weighted MRI images. Using a volumetric convolutional neural network (ConvNet) that was improved by preprocessing and augmentation techniques, the study achieved 97% accuracy in differentiating AD from normal controls (NC)14. In order to advance automated AD diagnosis, this study addressed the difficulties posed by small datasets and highlighted the crucial importance of high-resolution MRI scans14. Additionally, by comparing GoogLeNet, ResNet18, and ResNet50, the identification of sickle cell disease (SCD) utilising deep neural networks and explainable artificial intelligence (XAI) was investigated; the best accuracy of 94.90% was attained by ResNet5015. In addition to outlining future strategies to address dataset generality, class imbalance, and ethical challenges in AI-driven healthcare solutions, the work used Grad-CAM and transfer learning to improve classification accuracy and interpretability, providing pathologists with significant benefits15.

Moreover, recent research has showcased a myriad of innovative approaches aimed at enhancing the detection, classification, and diagnosis of ovarian tumors, leveraging cutting-edge technologies such as deep learning and machine learning. For instance, one study16 proposes an advanced methodology utilizing the Anisotropic Diffusion Filter (ADF) for preprocessing, Improved Whale Search Optimization (IWSO) for segmentation, and Deep Neural Network (DNN) for classification in ultrasound images. The approach demonstrates superior performance across various metrics, including sensitivity, specificity, accuracy, and error rates, making it a promising technique for clinical use. This study17 analyzes AI techniques in omics data processing, highlighting their application in precision therapy, biomarker discovery, and disease classification. A small sample size, difficulties with data preprocessing, and the requirement for additional AI algorithm tuning are some of the limitations, too. Another investigation18 delves into machine learning approaches for accurately classifying benign ovarian tumors and OC, highlighting the significance of feature selection in improving model performance. While Random Forest with feature selection obtains the highest accuracy, deep learning approaches show comparable results, albeit with limitations such as dataset reliance on Kaggle and lack of detailed methodology information. Additionally, a study19 focuses on predicting OC types using classifiers like Support Vector Machine (SVM), Random Forest, and XGBoost. Despite challenges posed by an imbalanced dataset, the research underscores the need for more robust preprocessing methods and feature engineering techniques. Furthermore, another investigation20 introduces OCCNet, an Ensemble Attention Mechanism (EAM) designed to handle imbalanced datasets and categorize OC subtypes. 
The study demonstrates the model’s excellent subtype identification capabilities, albeit acknowledging the necessity for further fine-tuning and data augmentation to improve consistency. Moreover, a pioneering attempt21 utilizes the VGG-16 deep convolutional neural network architecture for automatic detection and classification of OC subtypes from histopathology images, showcasing its effectiveness despite dataset size constraints. Meanwhile, a novel transformer-based deep learning network22 demonstrates remarkable performance in detecting OC, yet issues regarding model generalizability and real-world applicability warrant further investigation. Additionally, a study23 explores the efficacy of deep learning models in distinguishing between malignant and non-cancerous ovarian histopathology images, with VGG-19 emerging as the top-performing model. Furthermore, an automated MRI analysis technique24 utilizing deep convolutional neural networks (DCNNs) shows promise in improving diagnostic precision, albeit requiring further validation in real-world clinical settings. Lastly, a unique strategy25 combining CNNs with Grey Wolf Optimization (GWO) demonstrates high accuracy in early OC diagnosis, while Ocys-Net26 showcases promising results in accurately classifying and diagnosing ovarian cysts, highlighting its potential for clinical applications.

Additionally, another paper11 addressed a wide range of methods used in OC research, from deep learning models for tumor segmentation and classification to preprocessing methods for CT scan images. The importance of deep learning approaches in medical image processing has been highlighted by the use of various architectures for classification, segmentation, and detection, including CNN, ResNet, DeepLabv3, TransUnet, and UNet, for a variety of applications3,27,28,29. Furthermore, high accuracy in tumor localization and segmentation has been shown by hybrid approaches combining detection and segmentation models, such as the attention U-Net segmentation model and the YOLO v5 detection model30. It highlights the significance of subtype-specific research in improving treatment outcomes. Furthermore, developments in model development and physician involvement suggest that computer-aided diagnostics (CAD) based on machine learning holds the potential for objective tumor assessment. Nevertheless, issues like overfitting and the requirement for bigger datasets as well as outside validation continue to exist31. However, there is still room for improvement due to constraints such as small dataset sizes and potential bias from manual segmentation. In the meantime, the integration of radiomics characteristics with deep learning has sought to increase tumor classification accuracy, sensitivity, and specificity32. In addition, research on molecular pathways and predictive models, such as evaluating the NHEJ pathway and forecasting treatment response, provides information on possible therapeutic targets and individualized treatment plans33,34. An extensive analysis of AI techniques used on histopathology images emphasizes the significance of physician participation and open reporting. Future developments include strong validations and better accessibility of data35. 
A thorough assessment of AI algorithms applied to histopathology images emphasizes the significance of clinician involvement and transparent presentation of data and code for enhancing therapeutic value. Furthermore, it has been demonstrated that combining multiomics data with artificial intelligence can improve model performance; in particular, data integration can help address issues like inter-observer variability and data scarcity that arise from manual segmentation36.

Table 1 summarizes and compares the existing research methods, their results, and their limitations. Taken together, these studies show the potential of deep learning models to improve diagnostic accuracy and to offer prognostic insights that are relevant in clinical settings. Despite encouraging outcomes, issues such as overfitting, dataset dependence, and the need for larger, more varied datasets and thorough clinical data persist. This literature review focuses on the use of artificial intelligence (AI) and deep learning techniques in OC research and covers a wide range of novel approaches and methodologies. Studies have investigated a variety of AI models, including convolutional neural networks, decision tree algorithms, and deep learning frameworks, for tasks such as tumor segmentation, classification, treatment response prediction, and prognosis evaluation. Among the important findings is the considerable accuracy attained by AI-driven methods for OC diagnosis, prognosis, and treatment planning. Nevertheless, the results achieved so far cannot be considered the best possible, and there is room for further improvement. Our research aims to build on these earlier approaches and achieve better results. In summary, the review highlights how AI-driven approaches can transform predictive healthcare for OC.

Methodology

This section describes the data collection approach, including the sources, sample standards, and any filtering methods employed to ensure high-quality inputs for the model; sample data are shown in Figure 1. It also details the data pre-processing workflow, including normalization, augmentation, and data splitting, all of which are crucial for enhancing model robustness and minimizing overfitting. Finally, it discusses the development of the proposed model, emphasizing the architecture’s distinctive design and the choice of evaluation metrics (accuracy, precision, recall, and F1 score) used to evaluate the model’s classification performance.

General overview of the method

The main focus of this study is the classification of OC through the comparison of different CNN models. Data pre-processing begins by verifying that files are accessible, converting images to JPG format, and shrinking and padding them to a standard size. The dataset is then split into training, validation, and testing sets. To improve model generalization and increase dataset diversity, augmentation techniques such as brightness modification, rescaling, flipping, random rotation, and translation are applied. A base model with preloaded ImageNet weights serves as the backbone of the proposed architecture. Using the Functional API, the top layer is removed and new layers are added: global average pooling, dropout layers for regularization, dense layers with ReLU activation and kernel regularization, and a final dense layer with softmax activation for multi-class prediction. For fine-tuning, the first 100 layers are kept frozen and the remaining layers are unfrozen so that their weights can be updated. For multi-class classification, a categorical cross-entropy loss function and the Adam optimizer are used. Early stopping and learning rate scheduling are used to limit overfitting and improve training. Overall, the goal of this approach is to classify OC efficiently with deep learning while maximizing model performance through architectural adjustments and data augmentation.

Dataset description

The dataset used in this research includes 85 images taken from 42 patients who were receiving care at Smt. Kashibai Navale Medical College and General Hospital in Pune, India42. Notably, these hospital images contain no malignant cells, which is a limitation: few patients in the hospital’s database had been treated for OC other than serous carcinoma. Competent pathologists facilitated the collection of patient samples and the subsequent preparation of slides in their lab. The stained cell samples were imaged with a Leica ICC50 microscope camera, which allowed real-time transfer of high-definition images to laptops and smartphones. In addition, images of carcinomas representative of each OC subtype were obtained from The Cancer Repository, an open-access resource that requires registration. As a preprocessing step, the RGB images were uniformly resized to ensure compatibility with state-of-the-art (SOTA) models for OC prediction. The dataset therefore contains images of carcinomas associated with OC, covering malignant tumors across four subtypes, along with images of normal tissue and benign, non-tumorous glandular tissue. With a focus on the subtypes classified as carcinomas, the main goal of this dataset is to facilitate automatic classification of OC from histopathological images. Example images of the four subtypes and of non-cancerous tissue are displayed in Figure 1. Table 2 shows the per-class image distribution across the training, validation, and test sets. The images were divided into training, validation, and test sets in an 80%/10%/10% ratio.
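The 80%/10%/10% division described above can be illustrated with a small stratified split. This is only a sketch under stated assumptions: the file names, the 100-images-per-class count, and the `stratified_split` helper are hypothetical, and the paper does not state whether its split was stratified by class.

```python
import random
from collections import defaultdict


def stratified_split(samples, ratios=(0.8, 0.1, 0.1), seed=42):
    """Split (path, label) pairs into train/val/test, class by class."""
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append((path, label))

    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, val, test = [], [], []
    for label in sorted(by_class):
        items = by_class[label]
        rng.shuffle(items)
        n_train = int(len(items) * ratios[0])
        n_val = int(len(items) * ratios[1])
        train += items[:n_train]
        val += items[n_train:n_train + n_val]
        test += items[n_train + n_val:]  # remainder goes to the test set
    return train, val, test


# Hypothetical file list; class names follow the dataset description.
classes = ["Clear_Cell", "Endometri", "Mucinous", "Serous", "Non_Cancerous"]
samples = [(f"{c}/img_{i:03d}.jpg", c) for c in classes for i in range(100)]

train, val, test = stratified_split(samples)
print(len(train), len(val), len(test))
```

Splitting per class keeps the class proportions similar in all three subsets, which matters when some subtypes are rare.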

Data pre-processing

To increase our model’s robustness and versatility, the image dataset preparation procedure includes several important steps. We first normalize pixel values so that the input scale of our neural network remains consistent. Images are also converted to JPG format and resized to a uniform size. Following normalization, we apply data augmentation to enrich the dataset and reduce the likelihood of overfitting; the augmentations include rotating, flipping, zooming in or out, and shifting the images. These augmentations increase the variety of our training data and improve the model’s capacity to handle the distortions and variations found in real-world images. The purpose of these preprocessing steps is to obtain a more robust and flexible model that learns and generalizes efficiently from the given data, improving its performance on unseen images.
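As a rough illustration of the resizing and normalization steps, the sketch below computes the geometry for shrinking an image and padding it to a square input, and scales 8-bit pixel values to the [0, 1] range. The helper names and the choice of centered padding are assumptions made for illustration; the paper does not specify how padding is distributed.

```python
def letterbox_geometry(width, height, target=224):
    """Return the resized (w, h) and the (left, top, right, bottom) padding
    needed to fit an image into a target x target square without distortion."""
    scale = target / max(width, height)
    new_w = round(width * scale)
    new_h = round(height * scale)
    pad_w = target - new_w
    pad_h = target - new_h
    left, top = pad_w // 2, pad_h // 2  # split padding as evenly as possible
    return (new_w, new_h), (left, top, pad_w - left, pad_h - top)


def normalize_pixels(pixels):
    """Scale 8-bit intensities (0..255) to floats in [0, 1]."""
    return [p / 255.0 for p in pixels]


# A 625 x 450 image mapped onto a 224 x 224 network input.
size, padding = letterbox_geometry(625, 450)
print(size, padding)
print(normalize_pixels([0, 128, 255]))
```

Padding instead of stretching preserves the aspect ratio of tissue structures, at the cost of some constant-valued border pixels.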

Proposed model

The benchmarking models are trained on the histopathology image dataset, as shown in Figure 2. To ensure data consistency and accessibility, pre-processing included verifying file accessibility, converting files to a common format, and resizing images. Data augmentation techniques such as random rotation, translation, flipping, zooming, and brightness alteration were applied to the training data to improve the robustness and generalization of the model. To enable model evaluation and performance assessment, the dataset was divided into training, validation, and testing sets according to a standard ratio. Classification was built on a state-of-the-art base model pre-trained on ImageNet, which provided an effective, standardized benchmarking protocol for classification evaluation.
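To make the augmentation step more concrete, here is a minimal sketch of three of the listed transformations (flipping, translation, and brightness alteration) applied to a tiny grayscale image stored as nested lists with values in [0, 1]. The function names, probability, and ranges are illustrative assumptions rather than the settings used in the study; a real pipeline would typically rely on an image library.

```python
import random


def hflip(img):
    """Mirror each row (horizontal flip)."""
    return [row[::-1] for row in img]


def translate_x(img, shift, fill=0.0):
    """Shift columns right (shift > 0) or left (shift < 0), padding with fill."""
    width = len(img[0])
    shift = max(-width, min(width, shift))  # keep the shift within the image
    if shift >= 0:
        return [[fill] * shift + row[:width - shift] for row in img]
    return [row[-shift:] + [fill] * (-shift) for row in img]


def adjust_brightness(img, delta):
    """Add delta to every pixel and clip the result to [0, 1]."""
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in img]


def augment(img, rng):
    """Apply a random flip, a small horizontal shift, and a brightness change."""
    if rng.random() < 0.5:
        img = hflip(img)
    img = translate_x(img, rng.randint(-1, 1))
    return adjust_brightness(img, rng.uniform(-0.1, 0.1))


image = [[0.1, 0.2, 0.3],
         [0.4, 0.5, 0.6]]
augmented = augment(image, random.Random(0))
print(augmented)
```

Each call produces a slightly different variant of the same image, so the network sees more variation during training without any new data being collected.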

Our proposed model, OvCan-FIND, is designed with layers specifically aimed at enhancing classification accuracy. To condense feature maps, we implemented techniques that streamline the data. We then added layers to extract higher-level features and incorporated methods to prevent overfitting. By randomly deactivating certain neurons during training, we reduced the risk of overfitting and improved the model’s ability to generalize. Finally, the model is capable of predicting the likelihood that an image belongs to one of the five ovarian cancer classifications, ensuring accurate and reliable results.

The reason for selecting the EfficientNetV2B1 model as our base is that Tan and Le (2021) have shown that EfficientNetV2B1 balances accuracy and efficiency, making it a good choice for cancer classification on moderate-sized datasets (thousands to tens of thousands of images)43. Because of its compact architecture, which lowers computational needs, it performs well even on GPUs with low memory43. Faster training and inference are also made possible by its optimized design, which makes it a viable option for real-time clinical applications where prompt diagnosis is essential44. EfficientNetV2B1’s efficacy in medical imaging applications is further supported by its excellent accuracy in picture categorization tests44.

So, building on the EfficientNetV2B1 architecture, the OvCan-FIND model incorporates domain-specific improvements for the classification of ovarian cancer while utilizing its improved feature extraction capabilities. Depthwise separable convolutions, which preserve high representational capacity while lowering computing complexity, are incorporated into the model. The network’s capacity to recalibrate feature maps is further improved by the addition of squeeze-and-excitation (SE) blocks, which highlight important patterns that are essential for distinguishing between subtypes of ovarian cancer. Batch normalization is also used at various stages to reduce internal covariate shifts, speed up convergence, and stabilize learning. The model uses a hybrid activation method, using ReLU in the initial layers for effective feature extraction and the Swish activation function in deeper layers for smooth gradient flow. To increase robustness against fluctuations in histopathology pictures, a progressive resizing method is also used, in which images go through multi-scale training. OvCan-FIND is optimized for high generalization performance by fusing these cutting-edge methods with meticulous data augmentation and regularization, guaranteeing accurate classification results across a variety of datasets.
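The two activation functions and the squeeze-and-excitation idea mentioned above can be sketched in a few lines of plain Python. This is an illustrative toy, not the OvCan-FIND implementation: the weight matrices `w1` and `w2` are hypothetical hand-picked values (in a real network they are learned), biases are omitted, and the use of ReLU inside the bottleneck is an assumption.

```python
import math


def relu(x):
    return max(0.0, x)


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def swish(x):
    """Swish (also called SiLU): x * sigmoid(x), a smooth alternative to ReLU."""
    return x * sigmoid(x)


def squeeze_excite(feature_maps, w1, w2):
    """Recalibrate channels with a squeeze-and-excitation gate.

    feature_maps: list of C channels, each a flat list of activations.
    w1: R x C reduction weights; w2: C x R expansion weights.
    """
    # Squeeze: global average pooling gives one descriptor per channel.
    squeezed = [sum(ch) / len(ch) for ch in feature_maps]
    # Excitation: small bottleneck network ending in sigmoid gates in (0, 1).
    hidden = [relu(sum(w * s for w, s in zip(row, squeezed))) for row in w1]
    gates = [sigmoid(sum(w * h for w, h in zip(row, hidden))) for row in w2]
    # Scale: multiply every activation in a channel by that channel's gate.
    return [[g * v for v in ch] for g, ch in zip(gates, feature_maps)]


maps = [[1.0, 3.0], [0.5, 0.5]]   # two channels, two activations each
w1 = [[1.0, 0.0]]                 # hypothetical reduction to one hidden unit
w2 = [[1.0], [-1.0]]              # hypothetical expansion back to two channels
recalibrated = squeeze_excite(maps, w1, w2)
print(swish(1.0), recalibrated)
```

In this toy example the first channel is amplified relative to the second, which is the sense in which SE blocks "highlight important patterns": channels judged informative receive gates closer to 1.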

The convolutional neural network model OvCan-FIND was trained with the hyperparameters given in Table 3, which describe the best-performing configuration for classifying OC. The model architecture has six layers and roughly 6.93 million parameters. The first three layers are 3x3 convolutional filters, while the next three are fully connected layers in the head. During preprocessing, the images are scaled to (625, 450), and the training inputs are standardized to a resolution of (224, 224) with three channels. The model is trained with the Adam optimizer and an adjustable learning rate initially set to 0.0001. The ReduceLROnPlateau scheduler and the EarlyStopping method are used to control learning. The categorical cross-entropy loss function is used for multi-class classification, with a label smoothing value of 0.1. To improve data diversity, augmentation techniques such as rotation, flipping, normalization, and zooming are used. Regularization includes L2 kernel regularization and dropout rates of 0.5 and 0.3. The network uses the Swish and ReLU activation functions in different places. The classifier uses the softmax function to produce probabilities over the five OC classes. Training runs for a maximum of 30 epochs with a batch size of 32.
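The effect of label smoothing on the categorical cross-entropy loss can be worked through for the five-class case. The sketch below assumes the common formulation in which a one-hot target y is replaced by y(1 - ε) + ε/K, with ε = 0.1 and K = 5; the helper names are hypothetical, and the paper does not spell out which formulation it uses.

```python
import math


def smooth_labels(one_hot, smoothing=0.1):
    """Move a fraction of the target mass from the true class to all classes."""
    k = len(one_hot)
    return [y * (1.0 - smoothing) + smoothing / k for y in one_hot]


def softmax(logits):
    """Numerically stable softmax over a list of logits."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def cross_entropy(target, probs, eps=1e-12):
    """Categorical cross-entropy between a target distribution and predictions."""
    return -sum(t * math.log(p + eps) for t, p in zip(target, probs))


# One sample whose true class is the third of five OC classes.
target = smooth_labels([0, 0, 1, 0, 0])        # roughly [0.02, 0.02, 0.92, 0.02, 0.02]
probs = softmax([0.5, 0.1, 3.0, -0.2, 0.0])    # hypothetical network outputs
print(target, cross_entropy(target, probs))
```

With smoothing, the target for the true class becomes 0.92 instead of 1.0, so the model is no longer rewarded for pushing its confidence all the way to 100%, which tends to discourage overconfident predictions.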

Result analysis

This section outlines the hardware and software specifications, libraries, and frameworks used in the environment setup for the proposed model, with the aim of achieving good performance and reproducibility. The results analysis comprises a thorough assessment of the model’s outputs, contrasting its classification performance with that of other architectures and benchmarks. To evaluate the performance of the implemented models and compare them with our proposed OvCan-FIND, we used several evaluation metrics: Accuracy (Acc), Precision (Pre), Recall (Rec), and F1 Score. In addition, we produced a confusion matrix, which, while not strictly a performance metric itself, is the source from which the other metrics are derived. The confusion matrix visualizes the difference between the predicted labels and the ground-truth labels. Columns in the confusion matrix describe cases in an actual class, whereas rows describe cases in a predicted class. Four terms are defined from the confusion matrix: False Positive (FP), False Negative (FN), True Positive (TP), and True Negative (TN).

The definitions underlying Precision (Pre), Recall (Rec), Accuracy (Acc), and F1 Score are given below, where:

TP = the number of positive class samples that the model correctly predicted as positive.

TN = the number of negative class samples that the model correctly predicted as negative.

FP = the number of negative class samples that the model incorrectly predicted as positive.

FN = the number of positive class samples that the model incorrectly predicted as negative.

Accuracy (Acc) = \(\frac{T P+T N}{T P+T N+F P+F N}\)

Precision (Pre) = \(\frac{T P}{T P+F P}\)

Recall (Rec) = \(\frac{T P}{T P+F N}\)

F1 Score = \(\frac{2 \times \text{ Precision } \times \text{ Recall } }{ \text{ Precision } + \text{ Recall } }\)
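The formulas above can be illustrated with a short sketch that derives one-vs-rest TP, TN, FP, and FN for a chosen class from a multi-class confusion matrix and then evaluates the four metrics. The matrix uses rows as predicted classes and columns as actual classes, following the orientation described in this section; the counts are made up for illustration and are not results from this study.

```python
def per_class_metrics(cm, k):
    """One-vs-rest metrics for class k.

    cm[p][a] = number of samples predicted as class p whose actual class is a
    (rows = predicted, columns = actual).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    tp = cm[k][k]
    fp = sum(cm[k]) - tp                       # predicted k, actually another class
    fn = sum(cm[p][k] for p in range(n)) - tp  # actually k, predicted another class
    tn = total - tp - fp - fn
    acc = (tp + tn) / total
    pre = tp / (tp + fp) if tp + fp else 0.0
    rec = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * pre * rec / (pre + rec) if pre + rec else 0.0
    return {"TP": tp, "TN": tn, "FP": fp, "FN": fn,
            "Acc": acc, "Pre": pre, "Rec": rec, "F1": f1}

# Toy 3-class matrix with made-up counts (not results from the paper).
cm = [
    [8, 1, 0],
    [2, 7, 1],
    [0, 2, 9],
]
m = per_class_metrics(cm, 0)
print(m)
```

For a multi-class problem such as the five OC classes, these per-class values are typically averaged (for example, macro-averaged) to report a single Precision, Recall, and F1 Score.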

Overall, the study presents a comprehensive approach to improving OC classification and compares it against ten baseline Convolutional Neural Networks: Inception V3, EfficientNet B1, EfficientNet B2, EfficientNet B3, ResNet152V2, MobileNet, MobileNetV2, VGG16, VGG19, and Xception. To enhance the representation learned from the dataset, the research focuses on extending OvCan-FIND with extra layers. The proposed approach applies deep learning to the image dataset and highlights the hyperparameter configuration of OvCan-FIND for the classification of OC.

Environment setup

Table 4 summarizes the environment configuration used to train the different CNN models. Each table row corresponds to an individual model and includes key information: Model Name, GPU Name, Batch Size, Optimizer, Learning Rate, Epoch, Activation, and Data Augmentation.

All models were trained on an NVIDIA Tesla T4 GPU for a maximum of 30 epochs with a consistent input resolution of 224×224. To add non-linearity to the network, the ReLU activation function was applied consistently in every model. The baseline models used the Adam optimizer with a fixed learning rate of 0.0001, whereas the proposed OvCan-FIND model used Adam with an adaptive learning rate that starts at 0.0001. Data augmentation was used only in the training phase of the proposed model, with the goal of incorporating random modifications to improve the model’s robustness and generalization performance. This configuration facilitates reproducibility and comparison across experiments and architectures by documenting the typical setting for CNN model training in the context of OC classification.
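The adaptive learning rate can be pictured with the following simplified sketch, which replays a sequence of validation losses and applies plateau-based learning-rate reduction together with early stopping. It only imitates the general idea behind ReduceLROnPlateau and EarlyStopping; the reduction factor, the two patience values, and the loss sequence are hypothetical, since the text only states the starting rate of 0.0001 and the 30-epoch limit.

```python
def train_schedule(val_losses, lr=1e-4, factor=0.5, lr_patience=2,
                   stop_patience=5, min_lr=1e-7):
    """Replay per-epoch validation losses.

    Returns (learning rate used at each epoch, index of the last epoch run).
    factor, lr_patience, and stop_patience are illustrative assumptions.
    """
    best = float("inf")
    since_best = 0     # epochs since the last improvement (early stopping)
    since_lr_cut = 0   # non-improving epochs since the last LR reduction
    lrs = []
    for epoch, loss in enumerate(val_losses):
        lrs.append(lr)
        if loss < best:
            best = loss
            since_best = 0
            since_lr_cut = 0
            continue
        since_best += 1
        since_lr_cut += 1
        if since_lr_cut >= lr_patience:
            lr = max(lr * factor, min_lr)  # reduce the rate on a plateau
            since_lr_cut = 0
        if since_best >= stop_patience:
            return lrs, epoch              # stop early
    return lrs, len(val_losses) - 1

# Hypothetical validation losses, capped at 30 epochs as in the setup above.
losses = [1.0, 0.8, 0.7, 0.72, 0.71, 0.69, 0.70, 0.70, 0.70, 0.70, 0.70, 0.70][:30]
lrs, last_epoch = train_schedule(losses)
print(last_epoch, lrs)
```

In this toy run the rate is halved each time the validation loss fails to improve for two epochs, and training stops once five epochs pass without a new best loss.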

Result analysis

Comparing the performance of the different models on the histopathology OC subtype dataset in Table 5 reveals significant differences in accuracy. EfficientNetB1, B2, and B3 showed reasonable validation accuracies between 0.67 and 0.79 and corresponding testing accuracies between 0.71 and 0.83. MobileNetV2, on the other hand, performed noticeably worse on all criteria, suggesting difficulties in correctly identifying OC subtypes. ResNetV152V2 was competitive among the baseline models, with a testing accuracy of 0.83 and a validation accuracy of 0.75. The proposed model, OvCan-FIND (OvC-EfficientNetV2B1), fared better than all the others, with testing and validation accuracies of 0.9974 and 0.9933, respectively. This result highlights the effectiveness of the proposed methodology and demonstrates how well it can classify OC subtypes based on the histopathology dataset.

According to an analysis of the confusion matrices in Figure 3, the proposed OvCan-FIND model performed better than the base CNN models: Inception V3, EfficientNet B1, EfficientNet B2, EfficientNet B3, ResNet152V2, MobileNet, MobileNetV2, VGG16, VGG19, and Xception.

The OvCan-FIND model’s confusion matrix shows outstanding classification performance, with almost flawless accuracy across all ovarian cancer subtypes and non-cancerous cases. The diagonal values, which are near 1.00, indicate a high proportion of correctly identified instances. This is especially true for the Clear Cell, Endometri, Mucinous, and Non-Cancerous classes, which exhibit nearly no misclassification. The overall misclassification rate is very low; only the Serous class shows a small number of errors (0.01 misclassified as Clear Cell or Mucinous). This demonstrates the model’s resilience, high accuracy, and dependability in differentiating between ovarian cancer subtypes, making it a viable tool for precise categorization of histopathological images. The base models also yielded positive results, and their performance is notable45,46,47, but our OvCan-FIND model had the best overall performance.
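Diagonal values near 1.00 and off-diagonal values such as 0.01 arise when raw counts are normalized per actual class. The sketch below shows one way this normalization could be done, assuming rows are predicted classes and columns are actual classes; the counts are invented for illustration and do not reproduce Figure 3.

```python
def normalize_by_actual(cm):
    """Divide each column (actual class) by its total so entries are fractions.

    cm[p][a] = samples predicted as class p whose actual class is a.
    """
    n = len(cm)
    col_totals = [sum(cm[p][a] for p in range(n)) for a in range(n)]
    return [[cm[p][a] / col_totals[a] if col_totals[a] else 0.0
             for a in range(n)]
            for p in range(n)]

# Made-up counts for three classes with 100 samples each.
cm = [
    [99, 0, 1],
    [0, 100, 1],
    [1, 0, 98],
]
norm = normalize_by_actual(cm)
for row in norm:
    print([round(v, 2) for v in row])
```

After normalization, each diagonal entry is the recall of that class, and each off-diagonal entry is the fraction of that class assigned to a wrong label.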

The blue hues along the diagonal of the confusion matrix diagram show the proportion of the model’s predictions that match the ground truth. Figures 4 and 5 display the accuracy and loss curves of our model.

Comparing the OvCan-FIND model with the implemented base models provides valuable insights into the effectiveness of deep learning architectures in medical imaging applications. By exceeding the base models in terms of accuracy, F1 score, recall, precision, and MCC (Matthews Correlation Coefficient) score, the proposed model displays its potential for better diagnostic accuracy. This superiority implies that, in comparison to its competitors, the OvCan-FIND model has better sensitivity and specificity because it can capture complex patterns and variables related to OC diagnosis. Moreover, the analysis of training and validation loss across epochs shows that the proposed model not only converges better but also reduces overfitting, suggesting that it can generalize robustly to unseen data. These results highlight the potential contribution of customized deep learning models in improving the precision and dependability of OC diagnosis.
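Because the MCC is reported alongside the other metrics but not defined in this section, the following sketch shows the standard multi-class generalization of the Matthews Correlation Coefficient computed from a confusion matrix. The function name and the example matrices are illustrative assumptions; whether the authors used this exact formulation is not stated.

```python
import math

def multiclass_mcc(cm):
    """Multi-class Matthews Correlation Coefficient from a square confusion matrix.

    The value is symmetric in predicted/actual, so the row/column orientation
    of cm does not matter.
    """
    n = len(cm)
    s = sum(sum(row) for row in cm)                          # total samples
    c = sum(cm[k][k] for k in range(n))                      # correct predictions
    p = [sum(cm[k]) for k in range(n)]                       # row totals
    t = [sum(cm[r][k] for r in range(n)) for k in range(n)]  # column totals
    num = c * s - sum(pk * tk for pk, tk in zip(p, t))
    den = math.sqrt((s * s - sum(pk * pk for pk in p)) *
                    (s * s - sum(tk * tk for tk in t)))
    return num / den if den else 0.0

perfect = multiclass_mcc([[5, 0], [0, 5]])  # every sample classified correctly
mixed = multiclass_mcc([[8, 1], [2, 9]])    # a few errors in each class
print(perfect, round(mixed, 4))
```

Unlike accuracy, the MCC accounts for all cells of the confusion matrix, so it remains informative when class sizes differ.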

The OvCan-FIND model’s performance evaluation in comparison to a set of well-known base models highlights the importance of architectural design decisions in maximizing OC classification accuracy. This focused strategy not only produces better performance measures but also emphasizes how crucial domain-specific model creation is for applications involving medical imaging. The OvCan-FIND model’s success in outperforming its competitors in terms of accuracy, F1 score, recall, precision, and MCC score highlights the importance of continued research and innovation in improving these models for use in actual clinical settings. It also confirms the promise of deep learning methodologies in transforming cancer diagnosis. Figures 6, 7, and 8 compare the Accuracy, Loss, F1 score, Precision, Recall, and MCC graphs of our model with those of the other models.

Table 6 compares model accuracies and performance indicators for image classification tasks. It includes the proposed OvCan-FIND model along with a number of well-known models from previous studies. The proposed model considerably exceeds the prior research, attaining an accuracy of 99.74%, whereas the earlier works reported accuracies ranging from 93% to 95.7%. Moreover, with a precision of 99.68%, a recall of 99.56%, and an F1 score of 99%, the proposed model also performs exceptionally well in terms of precision, recall, and F1 score. This demonstrates the OvCan-FIND model’s effectiveness and establishes it as a strong option for image classification problems.

Discussion, challenges and future work

The OvCan-FIND model marks a significant stride in the field of medical imaging, reaching an accuracy of 99.74% in OC classification and outperforming established CNN architectures such as Inception V3 and ResNet152V2. This performance is indicative of the potential of advanced deep learning systems in healthcare diagnostics. The model’s architecture integrates a GlobalAveragePooling2D layer, a Dense layer of 1024 units, Dropout, another Dense layer of 512 units, additional Dropout, and a final Dense layer of 5 units, with the Dense layers accompanied by L2 kernel regularizers. These layers contribute significantly to the model’s performance metrics, including accuracy, recall, precision, and F1 score. The Adam optimizer, the categorical cross-entropy loss function, and the ReLU and Softmax activation functions have also been pivotal in the model’s success. Furthermore, refining the EfficientNetV2 framework requires fewer computational resources than other models while delivering precise and robust results. This balance of efficiency and accuracy underscores the model’s potential as a significant contribution to the field of medical diagnostics.
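As a rough illustration of the size of the classification head described above, the sketch below counts the trainable parameters of the three Dense layers. The width of the pooled feature vector (1280) is an assumption, since the text does not state the number of channels entering the GlobalAveragePooling2D layer; pooling and Dropout layers add no trainable parameters.

```python
def dense_params(n_in, n_out):
    """Trainable parameters of a fully connected layer: weights plus biases."""
    return n_in * n_out + n_out

FEATURES = 1280  # assumed pooled feature width; not stated in the text

# (inputs, outputs) for the Dense layers of 1024, 512, and 5 units.
head = [(FEATURES, 1024), (1024, 512), (512, 5)]
counts = [dense_params(n_in, n_out) for n_in, n_out in head]
total = sum(counts)

for (n_in, n_out), count in zip(head, counts):
    print(f"Dense {n_in} -> {n_out}: {count:,} parameters")
print(f"Head total: {total:,} parameters")
```

Under this assumption the first Dense layer dominates the head, which is one reason dropout and L2 regularization are commonly applied at that point.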

The model’s ability to discern intricate patterns and variables crucial for OC diagnosis underscores its potential to enhance the precision and dependability of diagnostics. These results offer novel insights and opportunities for improving patient outcomes in the management of complex conditions such as OC, and they exemplify the impact that state-of-the-art deep learning models can have on medical imaging and healthcare diagnostics, pointing towards heightened diagnostic accuracy and more tailored therapeutic approaches in the future. Furthermore, the results and the lack of major computational overhead show a clear divide between this model and the other models: OvCan-FIND achieves strong results while remaining efficient and less demanding on resources. While models like MobileNet may be more efficient, they do not reach the performance that OvCan-FIND has achieved. However, this study lacks a comparison with other research that has used the same dataset. This omission somewhat limits the ability to benchmark our findings against existing models and assess relative performance. Although the proposed OvCan-FIND model shows encouraging results in classifying ovarian cancer subtypes, there are a number of possible risks to its validity that need to be taken into account:

Dataset Bias: Because the model was trained and assessed using a particular dataset, it might not be as generalizable to data gathered from other organizations, scanners, or staining techniques. Validating the model on external, more varied datasets will be the main goal of future research.

Class Imbalance: Despite efforts to ensure that the training data was balanced, small differences in the distribution of classes may have an impact on the sensitivity of the model to under-represented subtypes.

Overfitting Risk: Given the excellent performance metrics noted, there is still a chance of overfitting even when data augmentation and regularization procedures are used. The robustness of the model would be further confirmed by additional validation on bigger, unseen cohorts.

Lack of Explainability: Explainable AI (XAI) methods like Grad-CAM and LIME were not used in this work. This restricts the model predictions’ interpretability, which is crucial for clinical implementation. This will be addressed in future research by incorporating XAI techniques.

Evaluation Metrics: Standard measures like accuracy, precision, recall, and F1-score were employed, but additional evaluation criteria, including uncertainty estimation and clinician-in-the-loop input, would be needed for real-world clinical implementation.

By recognizing these risks, we hope to show the study’s shortcomings and suggest areas for further development.

Conclusion

This study highlights the revolutionary potential of deep learning in medical imaging, namely in the use of sophisticated CNNs for the categorization of OC. With an astounding 99.74% accuracy rate, our suggested OvCan-FIND model marks a substantial breakthrough in OC detection. By showing how task-specific deep learning architectures can outperform conventional models in terms of accuracy and dependability, this work advances the discipline. By contrasting our model with well-known architectures like Xception, ResNet152V2, MobileNet, MobileNetV2, VGG16, VGG19, and Inception V3, we show how CNN-based classification has changed over time and position our model as a new standard for OC detection efficiency and accuracy. This study demonstrates the need for customized AI-driven methods in medical imaging and demonstrates how well-suited deep learning solutions may greatly enhance diagnostic performance.

Practically speaking, the OvCan-FIND model provides significant benefits in actual clinical settings. Early detection and prompt care are essential for improving patient outcomes and lowering OC-related mortality rates, and the model’s high classification accuracy lowers diagnostic errors. Additionally, by tackling overfitting and performance instability, our approach is intended to generalize better across various histopathology datasets, which makes it a feasible option for automated and objective OC classification. The increased dependability of AI-based diagnostic tools may reduce the workload for pathologists, reduce interpretive subjectivity, and speed up clinical judgment, all of which could result in a more effective and easily accessible cancer diagnosis.

This study has certain limitations in spite of these contributions. First, even though our model shows excellent accuracy, more testing is necessary to confirm its resilience across various imaging scenarios and patient demographics. Second, because of the dependence on histopathology pictures, real-time applicability in a clinical context is still unknown, which calls for future research to combine multimodal data, including genetic and radiological information, for a more thorough diagnosis approach. Last but not least, even while deep learning models are capable of achieving high classification accuracy, they frequently function as “black-box” systems, which restricts interpretability and clinician confidence in diagnoses made by AI. It will be necessary to address these issues in order to ensure smooth clinical adoption.

Future studies should pursue several directions. First, using multi-modal fusion techniques that combine genomic, radiographic, and histopathological data may improve diagnostic precision and offer a more comprehensive understanding of the course and outcome of OC. Second, research into explainable AI (XAI) strategies to increase model transparency would lead to better decision-making and greater clinician trust. Finally, the accessibility and effect of the OvCan-FIND model would be greatly increased by creating computationally efficient and lightweight versions for real-time deployment in clinical settings, especially in contexts with restricted resources. These developments have the potential to further transform AI-powered cancer diagnostics and move oncology closer to precision and personalized medicine.

Data availability

The datasets analyzed for this study are available on GitHub at: https://github.com/Anika-Saba-Ibte-Sum/Dataset

References

Binas, D. A. et al. A novel approach for estimating ovarian cancer tissue heterogeneity through the application of image processing techniques and artificial intelligence. Cancers 15, 1058 (2023).

Liu, L. et al. Deep learning provides a new magnetic resonance imaging-based prognostic biomarker for recurrence prediction in high-grade serous ovarian cancer. Diagnostics 13, 748 (2023).

Aghayousefi, R. et al. A diagnostic mirna panel to detect recurrence of ovarian cancer through artificial intelligence approaches. J. Cancer Res. Clin. Oncol. 149, 325–341 (2023).

Das, S. et al. A comparative analysis and prediction of ovarian cancer using ai approach. Asia-Pacific J. Manag. Technol. (AJMT) 3, 22–32 (2023).

Liu, Y., Lawson, B. C., Huang, X., Broom, B. M. & Weinstein, J. N. Prediction of ovarian cancer response to therapy based on deep learning analysis of histopathology images. Cancers 15, 4044 (2023).

Wang, C.-W. et al. Ensemble biomarkers for guiding anti-angiogenesis therapy for ovarian cancer using deep learning. Clin. Transl. Med. https://doi.org/10.1002/ctm2.1162 (2023).

Ziyambe, B. et al. A deep learning framework for the prediction and diagnosis of ovarian cancer in pre-and post-menopausal women. Diagnostics 13, 1703 (2023).

Wu, M. et al. Exploring prognostic indicators in the pathological images of ovarian cancer based on a deep survival network. Front. Genet. 13, 1069673 (2023).

Nagarajan, P. H. & Tajunisha, N. Optimal parameter selection-based deep semi-supervised generative learning and cnn for ovarian cancer classification. ICTACT J. on Soft Comput. 13 (2023).

Gajjela, C. C. et al. Leveraging mid-infrared spectroscopic imaging and deep learning for tissue subtype classification in ovarian cancer. Analyst 148, 2699–2708 (2023).

Sundari, M. J. & Brintha, N. A comparative study of various machine learning methods on ovarian tumor. In 2021 Sixth International Conference on Image Information Processing (ICIIP), vol. 6, 314–319 (IEEE, 2021).

Ali, A. M. & Mohammed, M. A. Optimized cancer subtype classification and clustering using cat swarm optimization and support vector machine approach for multi-omics data. J. Soft Comput. Data Min. 5, 223–244 (2024).

Dsilva, L. R. et al. Wavelet scattering-and object detection-based computer vision for identifying dengue from peripheral blood microscopy. Int. J. Imaging Syst. Technol. 34, e23020 (2024).

Goenka, N. et al. A regularized volumetric convnet based Alzheimer detection using T1-weighted MRI images. Cogent Eng. 11, 2314872 (2024).

Goswami, N. G. et al. Detection of sickle cell disease using deep neural networks and explainable artificial intelligence. J. Intell. Syst. 33, 20230179 (2024).

Srilatha, K., Chitra, P., Sumathi, M., Jayasudha, F. & Sanju, I. M. S. Performance comparison of ovarian tumour classification using deep neural network. In 2023 Fifth International Conference on Electrical, Computer and Communication Technologies (ICECCT), 1–7 (IEEE, 2023).

Ali, A. M. & Mohammed, M. A. A comprehensive review of artificial intelligence approaches in omics data processing: evaluating progress and challenges. Int. J. Math. Stat. Comput. Sci. 2, 114–167 (2024).

Aditya, M., Amrita, I., Kodipalli, A. & Martis, R. J. Ovarian cancer detection and classification using machine leaning. In 2021 5th International Conference on Electrical, Electronics, Communication, Computer Technologies and Optimization Techniques (ICEECCOT), 279–282 (IEEE, 2021).

Akter, L. & Akhter, N. Ovarian cancer classification from pathophysiological complications using machine learning techniques. In 2021 12th International Conference on Computing Communication and Networking Technologies (ICCCNT), 1–6 (IEEE, 2021).

Ahmed, A. et al. Occnet: Improving imbalanced multi-centred ovarian cancer subtype classification in whole slide images. In 2023 20th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), 1–8 (IEEE, 2023).

Kasture, K. R., Sayankar, B. B. & Matte, P. N. Multi-class classification of ovarian cancer from histopathological images using deep learning-vgg-16. In 2021 2nd Global Conference for Advancement in Technology (GCAT), 1–6 (IEEE, 2021).

Shanthini, A. & Boyanapalli, A. Dual-path residual unet with convolutional attention based swin-spectral transformer network for segmentation and detection of ovarian cancer. In 2023 International Conference on Advanced Computing Technologies and Applications (ICACTA), 1–5 (IEEE, 2023).

Falana, W. O., Serener, A. & Serte, S. Deep learning for comparative study of ovarian cancer detection on histopathological images. In 2023 7th International Symposium on Multidisciplinary Studies and Innovative Technologies (ISMSIT), 1–6 (IEEE, 2023).

Jenefa, A. et al. Automating mri-based ovarian cancer diagnosis with a dcnn. In 2023 International Conference on Sustainable Communication Networks and Application (ICSCNA), 1353–1360 (IEEE, 2023).

Mishra, R. et al. An efficient deep learning model for intraoperative tissue classification in gynecological cancer. In 2023 9th International Conference on Smart Structures and Systems (ICSSS), 1–6 (IEEE, 2023).

Fan, J., Liu, J., Chen, Q., Wang, W. & Wu, Y. Accurate ovarian cyst classification with a lightweight deep learning model for ultrasound images. IEEE Access https://doi.org/10.1109/ACCESS.2023.3321408 (2023).

Kodipalli, A., Fernandes, S. L., Gururaj, V., Varada Rameshbabu, S. & Dasar, S. Performance analysis of segmentation and classification of ct-scanned ovarian tumours using u-net and deep convolutional neural networks. Diagnostics 13, 2282 (2023).

Liu, C.-J. et al. Platelet rna signature independently predicts ovarian cancer prognosis by deep learning neural network model. Protein Cell 14, 618–622 (2023).

Hu, D., Jian, J., Li, Y. & Gao, X. Deep learning-based segmentation of epithelial ovarian cancer on T2-weighted magnetic resonance images. Quant. Imaging Med. Surg. 13, 1464 (2023).

Maria, H. H., Jossy, A. M. & Malarvizhi, S. A hybrid deep learning approach for detection and segmentation of ovarian tumours. Neural Comput. Appl. 35, 15805–15819 (2023).

Koch, A. H. et al. Analysis of computer-aided diagnostics in the preoperative diagnosis of ovarian cancer: A systematic review. Insights into Imaging 14, 34 (2023).

Jan, Y.-T. et al. Machine learning combined with radiomics and deep learning features extracted from ct images: A novel ai model to distinguish benign from malignant ovarian tumors. Insights Imaging 14, 68 (2023).

Walker, T. D. et al. The DNA damage response in advanced ovarian cancer: Functional analysis combined with machine learning identifies signatures that correlate with chemotherapy sensitivity and patient outcome. Br. J. Cancer 128, 1765–1776 (2023).

Crispin-Ortuzar, M. et al. Integrated radiogenomics models predict response to neoadjuvant chemotherapy in high grade serous ovarian cancer. Nat. Commun. 14, 6756 (2023).

Breen, J. et al. Artificial intelligence in ovarian cancer histopathology: A systematic review. NPJ Precis. Oncol. 7, 83 (2023).

Hatamikia, S. et al. Ovarian cancer beyond imaging: Integration of AI and multiomics biomarkers. Eur. Radiol. Exp. 7, 50 (2023).

Nobel, S. N. et al. Retracted: Modern subtype classification and outlier detection using the attention embedder to transform ovarian cancer diagnosis. Tomography 10, 105–132 (2024).

El-Latif, E. I. A., El-Dosuky, M., Darwish, A. & Hassanien, A. E. A deep learning approach for ovarian cancer detection and classification based on fuzzy deep learning. Sci. Rep. 14, 26463 (2024).

Zelisse, H. S. et al. Improving histotyping precision: The impact of immunohistochemical algorithms on epithelial ovarian cancer classification. Hum. Pathol. 151, 105631 (2024).

Pirone, D. et al. Clinically informed intelligent classification of ovarian cancer cells by label-free holographic imaging flow cytometry. Adv. Intell. Syst. https://doi.org/10.1002/aisy.202400390 (2024).

Du, Y. et al. Preoperative molecular subtype classification prediction of ovarian cancer based on multi-parametric magnetic resonance imaging multi-sequence feature fusion network. Bioengineering 11, 472 (2024).

Kasture, K. R., Patil, W. V. & Shankar, A. Comparative analysis of deep learning models for early prediction and subtype classification of ovarian cancer: A comprehensive study. Int. J. Intell. Syst. Appl. Eng. 12, 507–515 (2024).

Tan, M. & Le, Q. Efficientnetv2: Smaller models and faster training. In International conference on machine learning, 10096–10106 (PMLR, 2021).

Zhao, Z., Bakar, E. B. A., Razak, N. B. A. & Akhtar, M. N. Corrosion image classification method based on efficientnetv2. Heliyon https://doi.org/10.1016/j.heliyon.2024.e36754 (2024).

Christopher, M. V. et al. Comparing age estimation with cnn and efficientnetv2b1. Procedia Comput. Sci. 227, 415–421 (2023).

Suciu, C. I., Marginean, A., Suciu, V.-I., Muntean, G. A. & Nicoară, S. D. Diabetic macular edema optical coherence tomography biomarkers detected with efficientnetv2b1 and convnext. Diagnostics 14, 76 (2023).

Khushi, H. M. T., Masood, T., Jaffar, A., Rashid, M. & Akram, S. Improved multiclass brain tumor detection via customized pretrained efficientnetb7 model. IEEE Access (2023).

Acknowledgements

This work was carried out at AIUB. The authors thank the AIUB authority for their financial support. We would also like to express our sincere gratitude to the Advanced Machine Intelligence Research Lab (AMIR Lab) for their invaluable support and guidance, which significantly contributed to the successful completion of this study.

Funding

Open access funding provided by Mälardalen University.

Author information

Contributions

A.K.S. conceived the research and worked on the conceptualization of this study, methodology, resources, results analysis, discussion, writing (original draft), and investigation. M.R. worked on methodology, resources, results analysis, discussion, and writing (original draft). A.S.I.S. also worked on methodology, resources, results analysis, discussion, and writing (original draft). M.F.M. contributed to investigation, supervision, validation, formal analysis, and writing (review). Lastly, M.M.K. also contributed to investigation, supervision, validation, formal analysis, and writing (review).

Ethics declarations

Competing interests

The authors declare that they have no known competing financial interests or personal relationships that could have appeared to influence the work.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Saha, A.K., Rabbani, M., Sum, A.S.I. et al. An enhanced deep learning model for accurate classification of ovarian cancer from histopathological images. Sci Rep 15, 21860 (2025). https://doi.org/10.1038/s41598-025-07903-9

DOI: https://doi.org/10.1038/s41598-025-07903-9