Abstract

To address the challenges associated with the digital transformation of the power industry, this research develops an optimization and benefit evaluation model for cloud computing platforms tailored to power enterprises. It responds to the current lack of systematic optimization mechanisms and evaluation methods in existing cloud computing applications. The proposed model focuses on resource scheduling optimization, task load balancing, and improvements in computational efficiency. A multidimensional optimization framework is constructed, integrating key parameters such as path planning, condition coefficient computation, and the regulation of task and average loads. The model employs an improved lightweight genetic algorithm combined with an elastic resource allocation strategy to dynamically adapt to task changes across various operational scenarios. Experimental results indicate a 46% reduction in failure recovery time, a 78% improvement in high-load throughput capacity, and an average increase of nearly 60% in resource utilization. Compared with traditional on-premise architectures and static scheduling models, the proposed approach offers notable advantages in computational response time and fault tolerance. In addition, through containerized deployment and intelligent orchestration, it achieves a 43% reduction in monthly operating costs. A multi-level benefit evaluation system—spanning power generation, grid operations, and end-user services—is established, integrating historical data, expert weighting, and dynamic optimization algorithms to enable quantitative performance assessment and decision support. In contrast to existing studies that mainly address isolated functional modules such as equipment health monitoring or collaborative design, this research presents a novel paradigm characterized by architectural integration, methodological versatility, and industrial applicability. 
It thus addresses the empirical gap in multi-objective optimization for industrial-scale power systems. The theoretical contribution of this research lies in the establishment of a highly scalable and integrated framework for optimization and evaluation. Its practical significance is reflected in the notable improvements in operational efficiency and cost control in real-world applications. The proposed model provides a clear trajectory and quantitative foundation for promoting an efficient and intelligent cloud computing ecosystem in the power sector.

Introduction

As society advances and technology evolves, the power industry is undergoing a comprehensive digital transformation. Traditional design platforms used by power enterprises must adapt to meet emerging challenges and seize new opportunities, requiring solutions that are more efficient, flexible, and intelligent1,2,3. In this context, researchers have focused on optimizing these platforms to improve their performance and adaptability. Although the digitalization of power enterprises continues to progress—driven by the rapid development of network information technology and the emergence of big data—the depth and flexibility of its application remain limited. Establishing an efficient asset operation monitoring platform is therefore essential. Such a platform enables real-time, comprehensive, and accurate monitoring of enterprise benefit indicators, business processes, and operational performance, thereby reducing internal consumption, lowering production costs, and supporting the implementation of quality and efficiency enhancement strategies4,5.

As an advanced information technology, cloud computing offers innovative approaches to optimizing the design platforms of power enterprises6. By leveraging its elastic resources and distributed computing capabilities, power enterprises can effectively handle growing data volumes, accelerate processing speeds, and implement more flexible and adaptive business processes7,8,9. Benefit evaluation is a critical component of assessing the economic performance and operational outcomes of power enterprises. This process relies on a robust and well-structured indicator system. During implementation, it is essential to scale the evaluation system appropriately and enhance its optimization by applying effective methodologies that incorporate both scientific and practical approaches to economic benefit analysis. Such integration enables a more effective response to market demands and supports the high-quality development of power enterprises. To ensure efficient resource utilization and cost control during platform operation, several mechanisms have been introduced. First, workload balancing is achieved using Nginx or Kubernetes Service to distribute traffic. This is integrated with Prometheus and Grafana for real-time load monitoring and dynamic resource adjustment. Second, a cost minimization strategy is implemented through workload-based horizontal pod auto-scaling (HPA), which automatically adjusts resource allocation based on task volume, scaling down resources during idle periods to reduce operational expenses. Third, economic resource utilization is improved by employing cloud-based Spot instances and reserved instances, thereby lowering long-term operational costs. Additionally, non-real-time tasks are offloaded to edge nodes, reducing the burden on core computing resources.

This research highlights the key challenges currently confronting the power industry, including demand uncertainty, the complexity of energy management, and the urgent need for advanced information technology solutions. By integrating cloud computing technology, the research aims to enhance the efficiency and performance of design platforms within power enterprises and to support the sustainable development of the industry.

An integrated design scheme for a cloud computing platform tailored to power enterprises is proposed, combining an optimization scheduling mechanism with a performance evaluation model. This research addresses a central question: in the complex, resource-sensitive operational environment of power enterprises, how can the elastic computing capabilities of cloud technology and container orchestration mechanisms be leveraged to achieve system-level performance optimization under dynamic workload, failure, and cost constraints? To answer this question, an optimization model is developed that focuses on path scheduling, task load management, and elastic resource allocation. Simultaneously, a performance evaluation index system is constructed, encompassing three structural layers—power generation, grid operations, and end-user services. This system facilitates quantitative analysis of platform performance by holistically assessing cost control, resource utilization, and security metrics. In comparison with existing approaches—such as migration-based design frameworks10, equipment parameter optimization methods11, and monitoring platform concepts12—the model proposed in this research exhibits superior industrial adaptability, characterized by architectural integration and compatibility with multiple objectives. Through the incorporation of a lightweight optimization algorithm and a distributed real-time decision-making mechanism, the proposed model addresses common limitations of traditional systems, including high coupling, elevated operational costs, and insufficient flexibility. This research fills both theoretical and practical gaps in system-level optimization and benefit evaluation for cloud computing platforms in the power industry. Furthermore, it provides a replicable technical pathway and empirical foundation for promoting the transition toward more intelligent, efficient, and cost-effective operations.

Literature review

To enhance resource utilization and reduce platform operational costs, various load balancing and cost control mechanisms have been implemented. The platform employs Nginx services alongside Kubernetes’ built-in load balancing modules to dynamically distribute incoming requests across multiple backend computing nodes. Real-time monitoring of system resource utilization is achieved through the integration of Prometheus and Grafana. By adopting a workload-based HPA mechanism, the system automatically adjusts resource allocation in response to fluctuations in task volume, thereby minimizing resource wastage. In terms of cost control, the platform makes extensive use of Spot instances and reserved instances offered by cloud service providers. Intelligent task allocation strategies—based on task criticality and latency requirements—are employed to reduce long-term operational expenditures. Additionally, certain non-critical and non-real-time tasks are offloaded to edge computing nodes, thereby alleviating pressure on core computing resources and further reducing cost.

Research on the application of cloud computing in power enterprises has shown significant progress. Yahia et al.13 conducted pioneering work by transitioning traditional power grid planning and design platforms to a cloud computing environment. This approach enabled elastic resource allocation and high-performance computing, allowing power enterprises to optimize grid planning, improve equipment configuration, and address the complexities of power system operations. Tian et al.14 focused on optimizing power equipment design parameters through cloud-based computing. By utilizing large-scale cloud resources, rapid parameter optimization and simulation analysis were achieved, resulting in improved equipment performance and reduced energy consumption. Paukstadt and Becker15 developed a cloud-based simulation and monitoring platform for power systems, providing real-time operational monitoring and simulation analysis. Cloud services facilitated convenient access to system status, fault simulation, and timely system adjustments, thereby enhancing reliability and operational stability. Al-Wesabi et al.16 investigated the use of cloud computing in the planning of new energy power plants. They established a collaborative cloud-based design platform that supported multi-team coordination, improved design efficiency, and enabled information sharing and collaborative innovation. Mustapha et al.17 introduced a cloud-based power equipment health management platform by integrating sensor data with cloud-based monitoring systems. This platform enabled real-time equipment condition tracking, failure prediction, and remote maintenance services, ultimately reducing equipment downtime and maintenance costs. 
These studies demonstrate the diverse applications of cloud computing in the context of power enterprise design platforms, encompassing areas such as planning and design, equipment parameter optimization, system simulation and monitoring, collaborative development, and health management. Collectively, these advancements have contributed to improved operational efficiency, enhanced system performance, and the sustainable and innovative development of the power industry.

Performance evaluation in power enterprises is a complex and critical domain that involves multiple interrelated factors. Several recent studies have contributed to this field. Senjab et al.18 employed data mining techniques to analyze big data from power enterprises, aiming to establish more accurate performance evaluation models. These models utilize historical data to predict future performance and support informed decision-making. Yang et al.19 developed an intelligent operation and maintenance management system by integrating Internet of Things (IoT) technology and artificial intelligence (AI). This system enabled real-time monitoring and predictive maintenance of power equipment, thereby improving equipment availability and overall performance. In light of the growing emphasis on sustainability, Uslu et al.20 proposed indicators and models for assessing the environmental, social, and economic sustainability of power enterprises, offering a comprehensive evaluation framework. Devaraj et al.21 focused on the development of smart grids and assessed their benefits, including enhanced grid reliability and reduced energy waste, to support strategic decision-making in power enterprises. Jiao22 examined the competitive dynamics of the power market, providing performance evaluation and strategic insights for enterprises operating in highly competitive environments. This analysis included market structure, pricing strategies, and corporate performance indicators. These studies reflect the diversity and complexity of performance evaluation approaches in the power industry and highlight a growing trend toward integrating emerging technologies to support enterprise development.

The reviewed studies primarily focus on localized or domain-specific optimizations, such as parameter tuning, equipment health management, or collaborative platform design. While effective in their respective contexts, these approaches generally fall short of establishing a unified, multi-objective, and cross-departmental optimization framework. Moreover, few provide systematic comparisons of cloud architecture innovations or robust empirical validation of performance outcomes. In contrast, the cloud-based optimization and benefit evaluation model proposed in this research offers a more integrated and system-level approach. It constructs a multi-layered performance evaluation system spanning power generation, grid operations, and end-user applications, and incorporates dynamic optimization algorithms and lightweight scheduling mechanisms. This enables high-performance responsiveness and effective cost control under diverse workload and failure conditions. Compared with existing methodologies, the proposed model demonstrates superior scalability and practical applicability in terms of computational architecture, evaluation scope, and optimization efficiency. This research addresses critical gaps in the current literature by presenting a comprehensive framework capable of supporting industrial-scale deployment and cross-system assessment. Its contribution lies not only in technical performance but also in advancing theoretical and methodological understanding, offering a more universal solution for the digital transformation of power enterprises.

Motivation

The motivation for this research arises from the complex and unprecedented challenges facing power enterprises in the context of ongoing digital transformation. As society advances and technological development accelerates, the power industry encounters not only emerging opportunities but also significant obstacles, including massive data volumes, uncertain market demand, and the inability of traditional design platforms to meet evolving business requirements. In this rapidly changing environment, power enterprises require more adaptable, efficient, and sustainable solutions to navigate current and future challenges. Accordingly, this research responds to the urgent need for digital transformation by focusing on the optimization of design platforms to enhance operational efficiency, increase flexibility, and promote sustainable innovation. By addressing core issues in the industry’s transformation, this research aims to provide effective solutions to support power enterprises in overcoming both existing and anticipated difficulties.

In parallel, the research emphasizes the integration of cloud computing technology, which represents a leading trend in the contemporary information technology domain. The elastic resource provisioning and distributed computing capabilities of cloud platforms offer new possibilities for improving the design platforms of power enterprises. This integration is expected to overcome the limitations of traditional systems in handling large-scale data and complex business operations, thereby advancing power enterprises toward a future marked by increased efficiency, intelligence, and sustainability. The research is driven by a commitment to accelerating the adoption of advanced technologies in the power sector, with the goal of achieving more holistic, innovative, and sustainable development outcomes.

Contribution

This research proposes a comprehensive cloud-based design platform tailored for power enterprises, addressing critical challenges related to operational efficiency, flexibility, cost control, and sustainability in the context of digital transformation. Central to this platform are load balancing and cost optimization mechanisms designed to ensure efficient resource utilization and minimize operational expenses. To distribute user requests efficiently, the platform integrates Nginx services with Kubernetes’ native load balancing modules. Real-time monitoring and visualization of resource usage are achieved through a system built on Prometheus and Grafana. To adapt to workload fluctuations, the platform employs an HPA mechanism that dynamically adjusts computing resources, thereby preventing resource waste and improving responsiveness. For cost optimization, the system leverages Spot instances and reserved instances provided by cloud service vendors. Task scheduling is based on task priority and interruptibility, allowing the system to allocate resources intelligently and reduce long-term operational costs. Furthermore, certain low-priority and non-real-time tasks are offloaded to edge computing nodes, thereby alleviating the computational burden on core platform components and further reducing overall cloud resource costs.
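The placement rule described above (priority and interruptibility decide between reserved and Spot capacity, with low-priority, non-real-time work sent to edge nodes) can be sketched as follows. The `Task` fields, the priority scale, the `high_priority` threshold, and the exact ordering of the checks are hypothetical choices made for illustration; the source does not specify them.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    priority: int        # hypothetical scale: higher means more critical
    interruptible: bool  # True if the task can be safely restarted
    real_time: bool      # True if the task has latency requirements


def choose_pool(task: Task, high_priority: int = 8) -> str:
    """Return an illustrative resource pool label for a task.

    Assumed rule, not the authors' published policy:
    - real-time or high-priority work goes to reserved instances;
    - remaining interruptible work goes to Spot instances;
    - everything else (low priority, non-real-time) goes to edge nodes.
    """
    if task.real_time or task.priority >= high_priority:
        return "reserved"
    if task.interruptible:
        return "spot"
    return "edge"


if __name__ == "__main__":
    for t in [
        Task("fault-response", 9, False, True),
        Task("batch-simulation", 3, True, False),
        Task("report-archive", 1, False, False),
    ]:
        print(t.name, "->", choose_pool(t))
```

Keeping the rule in a single pure function makes it easy to replace the threshold or add further pools without touching the scheduler that calls it.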

This research, through a comprehensive analysis of the current state of digital transformation and the challenges facing the power industry, proposes an integrated solution aimed at enhancing the operational efficiency, flexibility, and sustainability of power enterprises. A central focus of the research is the adoption of cloud computing technology. Beyond identifying its potential applications in power design platforms, the research proposes innovative implementation strategies to maximize the benefits of elastic resource allocation and distributed computing inherent to cloud architectures.

To further improve resource utilization and reduce platform operational costs, a combination of load balancing and cost control mechanisms has been implemented. The platform employs Nginx services alongside Kubernetes’ built-in load balancing modules to dynamically distribute incoming external requests across multiple backend computing nodes. In conjunction with Prometheus and Grafana, real-time system resource monitoring is achieved. Leveraging an HPA mechanism based on workload conditions, the system dynamically adjusts resource allocation, scaling up or down in response to task volume, thus minimizing resource waste. From a cost management perspective, the platform makes extensive use of Spot instances and reserved instances provided by cloud service vendors. Through task allocation strategies that consider task type and interruptibility, long-term operational costs are reduced. Additionally, by offloading non-critical, non-time-sensitive tasks to edge computing nodes, the system not only enhances overall cost efficiency but also improves load distribution across the computing infrastructure.

This research also introduces a multi-level benefit evaluation model that accounts for the complex and diverse demands of power enterprises. The model provides decision-makers with a more accurate and comprehensive tool for assessing performance and guiding strategic decisions. By embedding sustainability assessment into the evaluation framework, the model promotes balanced development across economic, environmental, and social dimensions, offering holistic guidance for enterprises striving toward sustainable transformation.
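One common way to turn a multi-level indicator system into a single figure is a two-stage weighted average: indicators are combined within each layer, and the layer scores are then combined across layers. The sketch below assumes that form. The layer names follow the text, while the indicator names, the values, and all weights are hypothetical placeholders rather than the authors' expert weights.

```python
def weighted_score(values, weights):
    """Weighted average of values[k] using weights[k]; weights need not sum to 1."""
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("weights must sum to a positive number")
    return sum(values[k] * w for k, w in weights.items()) / total


def benefit_score(layers, indicator_weights, layer_weights):
    """Aggregate normalized indicators (0..1) per layer, then across layers."""
    layer_scores = {
        name: weighted_score(indicators, indicator_weights[name])
        for name, indicators in layers.items()
    }
    return weighted_score(layer_scores, layer_weights), layer_scores


if __name__ == "__main__":
    # Hypothetical, already-normalized indicator values per layer.
    layers = {
        "generation": {"cost": 0.8, "utilization": 0.6, "security": 0.7},
        "grid": {"cost": 0.5, "utilization": 0.5, "security": 0.8},
        "end_user": {"cost": 0.9, "utilization": 0.4, "security": 0.5},
    }
    equal = {"cost": 1, "utilization": 1, "security": 1}
    indicator_weights = {name: equal for name in layers}
    layer_weights = {"generation": 2, "grid": 1, "end_user": 1}
    overall, per_layer = benefit_score(layers, indicator_weights, layer_weights)
    print(per_layer, overall)
```

A linear aggregate is only one option; it is shown here because it keeps the contribution of each weight easy to audit.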

To respond adaptively to fluctuating workloads, the system employs an HPA mechanism, which dynamically scales computing resources based on real-time demand. This approach prevents overprovisioning and minimizes resource waste. For cost optimization, the platform makes extensive use of Spot instances and reserved instances provided by cloud service vendors. By implementing a resource scheduling strategy based on task interruptibility, the system reduces long-term operational expenditures. Moreover, low-priority and non-real-time tasks are offloaded to edge computing nodes, which helps relieve the computational burden on core platform resources and further lowers overall cloud infrastructure costs.
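The scaling decision made by the Kubernetes Horizontal Pod Autoscaler follows a simple documented rule: the desired replica count is the current count multiplied by the ratio of the observed metric to its target, rounded up, and no change is made when the ratio is within a tolerance (0.1 by default). The sketch below reproduces that rule in isolation. The function name and the `min_replicas` and `max_replicas` defaults are illustrative assumptions, and the metric and bounds used by the platform are not stated in the source.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Replica count suggested by the HPA ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    # Within tolerance of the target: leave the deployment unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    return max(min_replicas, min(max_replicas, desired))


if __name__ == "__main__":
    print(desired_replicas(4, 90, 60))   # load above target: scale out
    print(desired_replicas(4, 20, 60))   # idle period: scale in
    print(desired_replicas(4, 62, 60))   # within tolerance: no change
```

Rounding up biases the rule toward slight overprovisioning, which is generally safer than undershooting during a load increase.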

Architecturally, the platform adopts a layered design aligned with cloud computing paradigms. It consists of four primary layers:

- User Layer: Offers a unified web-based portal for designers and project managers to submit tasks and access results.
- Platform Service Layer: Comprises Computer-Aided Design (CAD) services, process management modules, and data analytics tools, deployed as microservices using Spring Cloud or Kubernetes to ensure modularity and scalability.
- Resource Scheduling Layer: Utilizes Docker containers and Kubernetes clusters for automatic orchestration, elastic resource allocation, and efficient task execution.
- Infrastructure Layer: Built on mainstream Infrastructure-as-a-Service (IaaS) providers such as Alibaba Cloud and Huawei Cloud, offering high availability, scalability, and elastic resource provisioning.

In terms of security, the platform employs OAuth 2.0 for unified user authentication and access control. Data security is further strengthened through the use of Virtual Private Networks (VPN) and encryption technologies, safeguarding system integrity and confidentiality.

The platform adopts a cloud computing-based layered architecture, designed to ensure scalability, flexibility, and high availability in power enterprise applications. It is structured into four primary layers: the user layer, platform service layer, resource scheduling layer, and infrastructure layer. The user layer provides a unified web-based access portal through which designers and project managers can submit tasks, monitor progress, and retrieve results. This interface ensures intuitive interaction and transparent task management throughout the operational workflow. The platform service layer encompasses key functional modules such as CAD, process management, and data analytics. These services are deployed using a microservice architecture based on Spring Cloud and Kubernetes, which ensures the scalability and modular decoupling of platform components. The resource scheduling layer integrates Docker containers with Kubernetes clusters to support containerized deployment and dynamic resource allocation, thereby enhancing the platform’s responsiveness and operational flexibility. At the infrastructure level, the platform leverages computing and storage resources from leading IaaS cloud providers, such as Alibaba Cloud and Huawei Cloud, to ensure high availability and elasticity. To safeguard platform security, the system employs the OAuth 2.0 protocol for unified authentication and access control, supplemented by VPNs and data encryption mechanisms to mitigate the risk of data breaches.

The resource scheduling layer integrates Docker containers and Kubernetes clusters to enable containerized deployment, automatic orchestration, and elastic resource allocation. This dynamic scheduling mechanism enhances the platform’s responsiveness to fluctuating workloads and ensures high availability of services. The infrastructure layer is built on mainstream IaaS cloud platforms, including Alibaba Cloud and Huawei Cloud. It provides reliable and elastic computing, storage, and network resources, supporting stable and scalable system performance. To ensure security and data integrity, the platform adopts the OAuth 2.0 protocol for unified user authentication and access control. This is further reinforced by the use of VPNs and data encryption technologies, which collectively safeguard against data breaches and unauthorized access.

Paper organization

This research begins by outlining the background and objectives, with a particular focus on optimizing and evaluating the benefits of power enterprise platforms through the integration of cloud computing. The second section explores in detail the application of cloud computing technologies within the power industry, identifying the key opportunities and challenges. The third section presents core research findings, including the proposed platform optimization model and a comprehensive benefit evaluation model tailored for power enterprises. The final section summarizes the main contributions of this research, highlighting the models’ potential to significantly enhance the operational efficiency, economic performance, and sustainability of power enterprises. These findings provide practical insights for advancing intelligent and efficient power industry practices. A glossary of key terms used in this research is presented in Table 1.

Experimental environment and data description

This research is conducted using enterprise-grade servers and a high-performance network environment to deploy a cloud platform based on OpenStack. The underlying infrastructure includes one control node and four compute nodes, all configured to support KVM virtualization along with multiple OpenStack service components. The software environment comprises CentOS 7.9, Python, Docker, and Kubernetes, while MySQL, RabbitMQ, and Redis are utilized as the database and middleware stack. Experimental data are obtained from a medium-sized power design enterprise, spanning a six-month period. The dataset encompasses records related to equipment monitoring, task scheduling, and resource utilization. After undergoing data preprocessing—such as deduplication, handling of missing values, normalization, and feature extraction—the data are employed for model development and performance evaluation. A summary of the experimental specifications and configurations is presented in Table 2.
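The preprocessing steps named above (deduplication, handling of missing values, normalization) can be illustrated with a small standard-library sketch. The record layout, the field names `node`, `timestamp`, and `cpu_util`, the mean-imputation choice, and min-max scaling are all assumptions made for the example; the source does not state which imputation or normalization method was used.

```python
def preprocess(records, key="cpu_util"):
    """Deduplicate, mean-impute missing values, and min-max normalize one field."""
    # 1) Deduplicate on (node, timestamp), keeping the first occurrence.
    seen, unique = set(), []
    for rec in records:
        ident = (rec["node"], rec["timestamp"])
        if ident not in seen:
            seen.add(ident)
            unique.append(dict(rec))  # copy so the input is not modified

    # 2) Replace missing values (None) with the mean of the observed ones.
    observed = [rec[key] for rec in unique if rec[key] is not None]
    if not observed:
        raise ValueError("no observed values to impute from")
    mean = sum(observed) / len(observed)
    for rec in unique:
        if rec[key] is None:
            rec[key] = mean

    # 3) Min-max normalize into [0, 1]; a constant column maps to 0.0.
    lo = min(rec[key] for rec in unique)
    hi = max(rec[key] for rec in unique)
    span = hi - lo
    for rec in unique:
        rec[key + "_norm"] = 0.0 if span == 0 else (rec[key] - lo) / span
    return unique


if __name__ == "__main__":
    raw = [
        {"node": "n1", "timestamp": 1, "cpu_util": 20.0},
        {"node": "n1", "timestamp": 1, "cpu_util": 20.0},  # duplicate
        {"node": "n2", "timestamp": 1, "cpu_util": None},  # missing
        {"node": "n3", "timestamp": 1, "cpu_util": 60.0},
    ]
    for row in preprocess(raw):
        print(row)
```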

The design of power enterprise platform based on cloud computing

Cloud workflow architecture design for power enterprises

Cloud computing, a network-based computing paradigm, fundamentally transforms the way computing resources are accessed and utilized by offering on-demand, pay-per-use services for computing power, storage, and applications23,24. Supported by large-scale data centers, cloud computing provides elastic computing capabilities and flexible service delivery, enabling efficient resource management over the internet at reduced operational costs25. Cloud computing services are typically categorized into three primary models: IaaS, Platform as a Service (PaaS), and Software as a Service (SaaS)26. Together, these models deliver scalable, flexible, and cost-effective solutions, accelerating digital transformation across various industries27,28,29. Figure 1 illustrates the service scope associated with each cloud computing service model.

The cloud computing-based platform developed in this research represents a significant technological innovation tailored for the operational needs of power enterprises. By strategically leveraging the elastic and distributed computing capabilities of cloud computing, the platform substantially enhances the flexibility and operational efficiency of power enterprises. The migration of computing power, storage resources, and applications from local infrastructure to the cloud enables optimized resource allocation, cost reduction, and improved adaptability to business and data processing demands. This platform integrates advanced technologies, including IoT and AI, to enable real-time monitoring of power equipment. As a result, it significantly improves equipment availability and maintenance efficiency, supporting safe and efficient operation. The cloud workflow architecture further facilitates dynamic resource allocation and automated task processing, thereby enhancing system scalability and stability. A key innovation lies in the seamless integration of this cloud-based platform with the asset operation monitoring system. By bridging the design and operational stages, the platform enables real-time feedback and monitoring throughout the lifecycle of power systems and equipment. This integration ensures that design processes are streamlined and operational insights are continuously fed back into system management, facilitating intelligent decision-making and lifecycle optimization. Additionally, the platform’s collaborative features support real-time updates on equipment operational status, allowing enterprises to adjust operational strategies promptly. This capability minimizes equipment downtime, maximizes operational efficiency, and promotes continuous improvement of enterprise processes. Overall, the proposed solution provides a flexible, intelligent, and efficient architecture that drives the digital transformation of the power industry. 
It enhances market competitiveness, improves resource utilization, and offers valuable practical and technical guidance for the development of future-oriented power enterprises.

This design framework strategically capitalizes on the core advantages of cloud computing—namely, elasticity and distributed computing—to improve the agility and operational capabilities of power enterprises30. The inherent characteristics of cloud computing allow these enterprises to manage large volumes of data, accelerate processing throughput, and respond flexibly to evolving business needs. Central to the design is the integration of cloud technologies within the power enterprise design platform. By shifting computing resources and applications to the cloud, enterprises achieve improved resource utilization and cost-effectiveness. Furthermore, the design incorporates an intelligent operation and maintenance management system, underpinned by IoT and AI technologies, to support real-time monitoring and analysis of equipment status. This approach enhances both equipment availability and maintenance performance31,32. The specific architecture of the proposed cloud workflow is depicted in Fig. 2.

In summary, this cloud-based platform design introduces an innovative and forward-looking solution for the power industry. It delivers intelligent, scalable, and flexible capabilities that are expected to significantly enhance the digital competitiveness of power enterprises.

The proposed architecture integrates an improved genetic algorithm (IGA) for global optimization scheduling with a dynamic load balancing strategy that monitors node workloads in real time and adjusts task assignments accordingly. This dual mechanism is designed to address the complex operational demands of power enterprises, particularly in large-scale, multi-task processing scenarios. It suits cloud-based platforms in the electric power sector that are undergoing digital and intelligent transformation, where systems are required to deliver high performance, low cost, and high reliability simultaneously.
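The load balancing half of this mechanism can be pictured with a greedy "least-loaded node first" assignment, shown below. This is a generic heuristic swapped in for illustration, not the platform's actual balancer; the task names, costs, and node names are hypothetical.

```python
import heapq


def assign_least_loaded(task_costs, node_names):
    """Assign tasks, largest first, to whichever node currently has the least load."""
    heap = [(0.0, name) for name in node_names]  # (current load, node)
    heapq.heapify(heap)
    assignment = {name: [] for name in node_names}
    # Placing the most expensive tasks first tends to give a more even result.
    for task, cost in sorted(task_costs.items(), key=lambda kv: -kv[1]):
        load, name = heapq.heappop(heap)
        assignment[name].append(task)
        heapq.heappush(heap, (load + cost, name))
    loads = {name: load for load, name in heap}
    return assignment, loads


if __name__ == "__main__":
    tasks = {"a": 5, "b": 4, "c": 3, "d": 2}
    assignment, loads = assign_least_loaded(tasks, ["n1", "n2"])
    print(assignment)
    print(loads)
```

In a running system the heap would be refreshed from monitoring data rather than from accumulated estimates, so that assignments track the measured node load.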

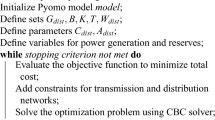

To illustrate the core scheduling mechanism, the following pseudocode outlines the logic of the optimization algorithm tailored to cloud workflow platforms in the power industry. The objective is to improve task scheduling efficiency, optimize resource utilization, and enhance overall system stability.
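As an illustrative stand-in only, and not the authors' IGA, the sketch below shows a plain genetic algorithm with elitism that assigns tasks to nodes so as to minimize the variance of node loads. The encoding (one node index per task), the single-point crossover, the mutation rate, the population size, and the fixed random seed are all assumptions chosen to keep the example short and reproducible.

```python
import random


def load_variance(assignment, costs, n_nodes):
    """Variance of per-node load for an assignment (assignment[t] = node of task t)."""
    loads = [0.0] * n_nodes
    for task, node in enumerate(assignment):
        loads[node] += costs[task]
    mean = sum(loads) / n_nodes
    return sum((load - mean) ** 2 for load in loads) / n_nodes


def genetic_schedule(costs, n_nodes, pop_size=30, generations=60,
                     mutation_rate=0.1, seed=0):
    """Search for a low-variance assignment; needs at least two tasks."""
    rng = random.Random(seed)  # fixed seed so runs are repeatable
    n = len(costs)

    def fitness(individual):
        return load_variance(individual, costs, n_nodes)

    population = [[rng.randrange(n_nodes) for _ in range(n)]
                  for _ in range(pop_size)]
    for _ in range(generations):
        population.sort(key=fitness)
        elite = population[: pop_size // 5]  # keep the best 20% unchanged
        children = []
        while len(elite) + len(children) < pop_size:
            parent_a, parent_b = rng.sample(elite, 2)
            cut = rng.randrange(1, n)              # single-point crossover
            child = parent_a[:cut] + parent_b[cut:]
            for i in range(n):                     # per-gene mutation
                if rng.random() < mutation_rate:
                    child[i] = rng.randrange(n_nodes)
            children.append(child)
        population = elite + children
    best = min(population, key=fitness)
    return best, fitness(best)


if __name__ == "__main__":
    costs = [5, 4, 3, 2, 6, 1, 7]
    best, variance = genetic_schedule(costs, n_nodes=3)
    print(best, variance)
```

Because the elite individuals are carried over unchanged, the best fitness never gets worse from one generation to the next, which is the property that makes this simple variant usable as a baseline.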

Development of an asset operation monitoring platform for power enterprises

The integration of an asset operation monitoring platform plays a pivotal role in optimizing the design platform for power enterprises. This integration enables comprehensive and coordinated management, ensuring seamless connectivity between the design and operational phases. Enhancing the design platform not only streamlines the development process for power equipment and systems, but—when combined with the asset monitoring platform—also supports real-time monitoring of design implementations during operational stages33. This facilitates the incorporation of operational considerations, such as equipment performance, maintenance requirements, and benefit evaluations, directly into the design phase32. As a result, the synergistic integration of the design platform and the asset operation monitoring platform enables power enterprises to achieve more intelligent, efficient, and sustainable operational management33,34,35. The overall framework of the asset operation monitoring platform is depicted in Fig. 3.

To ensure efficient enterprise operational management (higher task completion rates, better timeliness, and real-time fault response through mobile applications), it is essential to optimize scheduling strategies, design accurate reference models, define high-precision objective functions, and develop robust solution algorithms. Organizational planning must align closely with business requirements, typically through mathematical modeling of the problem36,37, as elaborated below:

1) Path Coefficient: This metric quantifies the direct causal effect between variables and is commonly used in path analysis or structural equation modeling. It is represented as a standardized regression coefficient ranging from -1 to 1, indicating both the magnitude and direction of the influence of an independent variable on a dependent variable. For instance, in analyzing the relationship between income and consumption, the path coefficient reflects the immediate effect of income changes on consumption behavior. A positive value indicates a favorable influence, a negative value indicates an adverse effect, and a value near zero suggests a weak or negligible relationship.

2) Conditional Coefficient: This metric assesses the strength of variable relationships under specific conditions or contextual settings. It is typically applied in studies involving interaction effects or multilevel models and reveals how relationships vary across different environments or subgroups. For example, when exploring how education level affects consumption expenditure, the conditional coefficient may highlight differences in this relationship across high- and low-income groups. This coefficient thus captures the moderating role of contextual factors in shaping variable interactions.

The objective functions are defined as follows:

Here, \({s}_{j(j+1)}^{{\prime}i}\) represents the distance from site j to site j + 1 in plan i; \({\rho }_{j(j+1)}^{i}\) denotes the path condition coefficient for the corresponding segment. Equation (1) aims to minimize total cost consumption. Equation (2) introduces \({\theta }_{i}\), the workload associated with plan i, and \(\mu\), the average workload across all plans. This objective seeks to minimize workload variance, thereby promoting balanced task allocation.

The workload \({\theta }_{i}\) for each plan is calculated as Eq. (3):

In Eq. (3), \({\gamma }_{k}^{i}\) denotes the difficulty coefficient of the k-th task in plan i; \({\omega }_{1}\) and \({\omega }_{2}\) are the respective weights assigned to road conditions and task difficulty. Here, i ranges from 1 to N, and k ranges from 1 to n.
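For reference, Eqs. (1) to (3) can be written out from the symbol definitions given in the text. The block below is one reading consistent with those definitions, offered as an assumption rather than as the authors' exact notation: the \(1/N\) factor in Eq. (2), the summation range over j, the use of the product of distance and path condition coefficient in Eq. (3), and the definition of \(\mu\) as the mean of \({\theta }_{i}\) are all assumed.

```latex
% Assumed reconstruction from the surrounding definitions
\begin{align}
\min f_{1} &= \sum_{i=1}^{N} \sum_{j} s_{j(j+1)}^{\prime i}\, \rho_{j(j+1)}^{i} \tag{1} \\
\min f_{2} &= \frac{1}{N} \sum_{i=1}^{N} \left(\theta_{i} - \mu\right)^{2},
\qquad \mu = \frac{1}{N} \sum_{i=1}^{N} \theta_{i} \tag{2} \\
\theta_{i} &= \omega_{1} \sum_{j} s_{j(j+1)}^{\prime i}\, \rho_{j(j+1)}^{i}
 + \omega_{2} \sum_{k=1}^{n} \gamma_{k}^{i} \tag{3}
\end{align}
```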

To reduce computational complexity, this research simplifies the multi-objective function by treating \({f}_{1}\), the total cost of Eq. (1), as the primary objective. The secondary objective, \({f}_{2}\), is bounded by a threshold and therefore acts as a constraint rather than a separate optimization goal. This reformulation transforms the multi-objective problem into a single-objective one, streamlining the algorithm and improving computational efficiency38,39,40.
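The constraint-based reformulation can be illustrated with a small Python sketch. It assumes that the total cost is the sum of distance times path condition coefficient over all segments, that the workload combines path cost and task difficulty with weights \({\omega }_{1}\) and \({\omega }_{2}\), and that the balance term is the workload variance; the function names, the equal weights, and the two candidate schedules are hypothetical.

```python
def plan_cost(segments):
    """Sum of distance * path condition coefficient over a plan's segments."""
    return sum(distance * rho for distance, rho in segments)


def workload(plan, w_road=0.5, w_task=0.5):
    """Weighted workload of one plan (assumed form of the workload equation)."""
    return w_road * plan_cost(plan["segments"]) + w_task * sum(plan["difficulty"])


def objectives(schedule):
    """Return (f1, f2): total cost and workload variance of a schedule."""
    f1 = sum(plan_cost(plan["segments"]) for plan in schedule)
    thetas = [workload(plan) for plan in schedule]
    mu = sum(thetas) / len(thetas)
    f2 = sum((theta - mu) ** 2 for theta in thetas) / len(thetas)
    return f1, f2


def select_schedule(candidates, variance_threshold):
    """Minimize f1 among candidates whose f2 stays within the threshold."""
    feasible = []
    for index, schedule in enumerate(candidates):
        f1, f2 = objectives(schedule)
        if f2 <= variance_threshold:
            feasible.append((f1, index))
    if not feasible:
        return None  # no candidate satisfies the balance constraint
    return min(feasible)[1]


# Two hypothetical candidate schedules, each with two plans.
schedule_a = [  # cheaper overall, but very unbalanced
    {"segments": [(10, 1.0), (5, 1.0)], "difficulty": [1, 1]},
    {"segments": [(2, 1.0)], "difficulty": [1]},
]
schedule_b = [  # slightly more expensive, well balanced
    {"segments": [(6, 1.0), (3, 1.0)], "difficulty": [1, 1]},
    {"segments": [(5, 1.0), (4, 1.0)], "difficulty": [1]},
]
chosen_strict = select_schedule([schedule_a, schedule_b], variance_threshold=1.0)
chosen_loose = select_schedule([schedule_a, schedule_b], variance_threshold=100.0)
```

With the strict threshold the balanced schedule is chosen even though its total cost is higher; relaxing the threshold lets the cheaper schedule win, which shows how the threshold trades cost against load balance.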

Constructing an evaluation model for power enterprise benefits

A clear definition of key evaluation indicators is fundamental for accurately assessing the benefits of power enterprises. Based on extensive literature and relevant research, this research establishes a multi-level evaluation indicator system, as illustrated in Fig. 4:

The model systematically evaluates the performance of power companies from three critical perspectives: the generation side, the grid side, and the user side. This comprehensive framework supports the economic and operational assessment of companies advancing through digital and intelligent transformation. From the generation side, the focus lies on cost savings and efficiency improvements attained via technological optimizations and enhanced scheduling strategies. Key indicators include avoided peak load capacity costs, fuel costs, and power generation construction costs. The grid side emphasizes minimizing investment and operational expenditures related to grid infrastructure while enhancing system flexibility and stability. This is achieved through investments in smart equipment and load optimization, reflected in avoided grid investment costs, system operation and maintenance expenses, and improvements in system load factors. On the user side, the evaluation centers on economic benefits realized through policy incentives and technological adoption, such as gains from policy subsidies, reduced distribution costs for terminal users, and long-term savings from investments in efficient equipment.

The evaluation method employs hierarchical weighted aggregation to quantitatively combine multi-dimensional indicators according to their relative importance. This method integrates historical data analysis, expert judgment, and calculated weighting factors to ensure scientific rigor and reliability. Furthermore, dynamic optimization algorithms adapt key parameters to reflect the actual operating environment, effectively reducing computational complexity and improving practical applicability. By providing a quantitative analysis across multiple levels, this model assists power enterprises in accurately identifying benefit sources and delivers data-driven support for optimizing resource allocation and enhancing market competitiveness. The model’s distinct advantage lies in its holistic approach, incorporating cost savings, revenue enhancement, and long-term investment value. Consequently, it offers a robust and comprehensive solution for evaluating the benefits of power enterprises undergoing digital transformation.

Pseudocode for cloud-based electric power enterprise performance evaluation model:
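As a minimal sketch of hierarchical weighted aggregation (an illustration under stated assumptions, not the authors' listing), the Python code below combines tertiary indicator scores into side-level scores and then into one overall score. The weights are the AHP weights reported in the Results section, assumed to follow the order in which the indicators are listed; the indicator scores are hypothetical values already normalized to [0, 1], and the function names are introduced here for illustration.

```python
# AHP weights reported in the Results section (assumed to follow listing order).
SECONDARY_WEIGHTS = {"A1": 0.221, "A2": 0.319, "A3": 0.459}
TERTIARY_WEIGHTS = {
    "A1": {"A11": 0.435, "A12": 0.330, "A13": 0.113, "A14": 0.122},
    "A2": {"A21": 0.128, "A22": 0.185, "A23": 0.182, "A24": 0.505},
    "A3": {"A31": 0.582, "A32": 0.309, "A33": 0.110},
}


def weighted_mean(scores, weights):
    """Weighted average; dividing by the weight sum absorbs rounding in the weights."""
    total_weight = sum(weights[name] for name in scores)
    return sum(weights[name] * scores[name] for name in scores) / total_weight


def evaluate(tertiary_scores):
    """Aggregate tertiary scores to side-level scores, then to one overall score."""
    side_scores = {}
    for side, weights in TERTIARY_WEIGHTS.items():
        scores = {name: tertiary_scores[name] for name in weights}
        side_scores[side] = weighted_mean(scores, weights)
    overall = weighted_mean(side_scores, SECONDARY_WEIGHTS)
    return side_scores, overall


# Hypothetical normalized scores in [0, 1] for one enterprise.
example_scores = {
    "A11": 0.8, "A12": 0.6, "A13": 0.4, "A14": 0.7,
    "A21": 0.5, "A22": 0.9, "A23": 0.3, "A24": 0.6,
    "A31": 0.7, "A32": 0.5, "A33": 0.2,
}
side_scores, overall = evaluate(example_scores)
```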

Explanation of secondary indicators

A1: Generation-side benefits

Generation-side benefits encompass the cost savings and efficiency improvements realized throughout the power production process by employing optimized and advanced technological methods. This includes direct reductions in operational costs, improvements in equipment maintenance, more efficient fuel consumption, and enhanced scheduling practices. Collectively, these factors enable power generation enterprises to achieve higher economic returns and strengthen their capacity for sustainable development in a competitive market environment.

A2: Grid-side benefits

Grid-side benefits refer to the cost reductions and operational efficiency gains attained during power transmission and distribution through technological innovations and optimized management strategies. These benefits include savings in capital investment and operational expenditures, as well as enhancements in grid reliability, flexibility, and intelligence. Such improvements contribute to increasing the overall economic performance and stability of the power system.

A3: User-side benefits

User-side benefits describe the cost savings and additional economic advantages realized by power consumers through the application of advanced technologies and supportive policies during electricity usage. This encompasses direct reductions in electricity expenses, the reception of policy-driven subsidies, and investments in more efficient electrical appliances and systems. As a result, end users experience both economic and qualitative improvements in their energy consumption.

Explanation of tertiary indicators

A11: Avoidable peak load capacity costs

Avoidable peak load capacity costs represent the reduction in additional capacity investments required during peak electricity demand periods achieved through effective load management and demand response strategies. By actively controlling and shifting demand during peak usage times, the necessity for constructing new generation facilities is minimized, resulting in substantial savings in infrastructure development and ongoing maintenance costs.

A12: Avoidable generation fuel costs

Avoidable generation fuel costs refer to the savings in fuel expenditures attained by improving generation efficiency and optimizing the energy mix. The adoption of more efficient generation technologies or increasing the integration of renewable energy sources reduces dependence on conventional fossil fuels, thereby significantly lowering overall fuel consumption and associated costs.

A13: Avoidable unit start-up and shut-down costs

Avoidable unit start-up and shut-down costs denote the cost savings derived from optimizing power system operational scheduling to decrease the frequency of generator start-up and shut-down cycles. This optimization reduces fuel usage and mitigates mechanical wear during these processes, leading to lower maintenance demands and extending equipment lifespan, ultimately decreasing maintenance and replacement expenses.

A14: Avoidable power generation construction costs

Avoidable power generation construction costs refer to the reduction in the need for building new power generation facilities by improving the utilization and efficiency of existing power systems. This reduction is achieved through the application of smart grid technologies and distributed energy systems, which help optimize existing assets and thereby save on large-scale infrastructure investments, effectively lowering long-term capital expenditures.

A21: Avoidable grid investment costs

Avoidable grid investment costs represent the decreased requirement for new grid infrastructure investments, attained through effective demand management and grid optimization. By maximizing the use of existing grid resources and enhancing load management capabilities, unnecessary grid expansions and new construction projects can be avoided, resulting in significant capital cost savings.

A22: Avoidable system operation and maintenance costs

Avoidable system operation and maintenance costs indicate the reduction in resources and expenses necessary for daily grid operation and maintenance. This is accomplished by deploying intelligent and automated technologies that enhance fault detection, streamline inspections, and facilitate equipment upgrades, thereby lowering operational expenditures related to repairs and routine upkeep.

A23: Smart equipment investment costs

Smart equipment investment costs refer to the capital expenditures involved in deploying advanced grid intelligence devices such as smart meters, sensors, and control systems. Although these investments entail higher upfront costs, they enable more precise monitoring, real-time management, and operational efficiency, ultimately delivering substantial long-term economic benefits and improved grid performance.

A24: Enhancing system load factor

Enhancing the system load factor involves improving the utilization rate of grid equipment by optimizing power demand management and load scheduling. This approach ensures the power system operates efficiently across varying load conditions, reducing idle capacity and thereby increasing the overall economic efficiency and operational stability of the grid.

A31: End-user forced power rationing costs

End-user forced power rationing costs represent the economic losses and inconveniences experienced by users when electricity supply is forcibly limited due to tight supply conditions. Effective power supply and demand optimization can minimize the frequency and impact of forced rationing, thereby reducing both direct financial losses and indirect disruptions to users’ operations.

A32: Benefits of policy subsidies

Benefits of policy subsidies refer to the financial incentives and support provided by government programs to users who participate in energy-saving initiatives and renewable energy projects. These subsidies directly reduce users’ electricity expenses while promoting investments in green technologies and energy-efficient equipment.

A33: User equipment investment costs

User equipment investment costs denote the expenditures incurred by end-users to improve electricity efficiency and lower energy costs through investments in high-efficiency electrical appliances and systems. Such investments may include energy-saving devices, smart home technologies, and distributed energy resources. Despite higher initial costs, these investments generate significant long-term savings and economic benefits.

Performance testing section

To ensure database consistency, data insertion operations are executed in a single-threaded manner. Table 3 presents a comparison of insertion times for two systems using identical data volumes. The results demonstrate that the proposed system significantly outperforms the simulation system in terms of insertion speed. Moreover, the performance advantage becomes increasingly pronounced as the data volume grows. Specifically, the insertion efficiency for datasets of 50,000, 500,000, and 5,000,000 battery data entries is quantitatively compared. As shown, the efficiency gap widens with larger datasets, confirming the proposed system’s superior capability in handling large-scale data imports.

This section also evaluates concurrent multi-threaded data access performance for both MySQL and distributed HBase databases. The results, summarized in Table 4, show that despite MySQL optimizations such as indexing, its query performance remains inferior to that of HBase. The performance gap further widens with increasing data volume and thread concurrency. Remarkably, the current HBase cluster maintains stable query times and has not reached its processing capacity even with tens of millions of records and high concurrency levels.

Furthermore, a variance calculation test was conducted on a specified data volume to compare computational capabilities between the simulation system and the proposed system. The results, shown in Table 5, clearly indicate that the proposed system achieves significantly lower computation times. Although overheads such as Hadoop task initialization, file I/O, and inter-cluster communication latency prevent the cluster from reaching a fivefold performance gain over a single server, the improvements remain substantial.

Table 6 illustrates the significant advantages of the optimized cloud computing platform proposed in this research across various operational scenarios. Under normal load conditions, the average task completion time is nearly halved, while Central Processing Unit (CPU) and memory utilization increase substantially. This demonstrates that the optimization strategy effectively enhances computing density and response speed. During high load conditions, the platform achieves an approximately 78% increase in system throughput and reduces average response latency by over 57%, owing to more efficient scheduling mechanisms and elastic resource allocation. These improvements contribute markedly to system stability, as evidenced by a 75% reduction in service interruptions. In fault scenarios, the optimized platform leverages container orchestration and intelligent rescheduling mechanisms to significantly shorten fault recovery time by nearly 67%, decrease task loss rate by almost 79%, and improve fault-tolerant task rescheduling success rate by over 50%. These enhancements ensure robust fault tolerance and business continuity. From a cost perspective, the platform demonstrates excellent economic benefits, reducing monthly power consumption by nearly 32% and labor maintenance costs by over 54%, leading to an estimated overall monthly cost saving of approximately 43%.

During the functional testing phase, the system’s eight functional modules are evaluated using real-world data, with key modules undergoing rigorous result verification. Particular emphasis is placed on assessing data insertion, retrieval, and computation performance. Comparative tests simulate traditional data analysis systems, and the results demonstrate that the current system significantly outperforms conventional power data analysis solutions, effectively satisfying the performance requirements for the current implementation phase.

This experimental section details the technical specifications and procedures employed to validate the proposed system’s performance advantages. To ensure database consistency and maintain stability and accuracy during data insertion, a single-threaded execution mode is adopted, minimizing the impact of concurrent operations on data integrity. Table 3 compares data insertion performance with that of a traditional simulation system, revealing substantial efficiency gains. Specifically, insertion of 50,000, 500,000, and 5 million battery records requires only 1.24 s, 7.39 s, and 60.11 s, respectively. These results represent improvements of 8.6, 13.1, and 16.5 times compared to the simulation system, underscoring the proposed system’s superior capacity for handling large-scale data ingestion.

Subsequently, multi-threaded concurrent access tests simulate multiple users simultaneously querying the database. Despite optimizations such as indexing applied to the MySQL database, its query performance lags significantly behind that of the distributed HBase system. Table 4 presents query time comparisons under varying thread counts and data volumes. For example, querying 1 million records with a single thread takes 1.45 s with MySQL versus 0.72 s with HBase. As concurrency increases, MySQL’s query time rises sharply, whereas HBase maintains relatively stable performance. This highlights HBase’s clear advantage over traditional relational databases in handling high-concurrency workloads.

Finally, data computation capability is evaluated by comparing variance calculation times between the current system and the simulation system. Table 5 shows that the current system achieves computation times of 285 s, 1,556 s, and 3,112 s for datasets of 300,000, 10 million, and 100 million records, respectively. These results reflect efficiency gains of approximately 3.4, 3.1, and 2.9 times compared to the traditional system. Although the cluster-based approach does not achieve a fivefold speedup over a single server due to overheads such as Hadoop task startup, file reading, and inter-node communication latency, it still delivers significant improvements in processing capacity.

In summary, comprehensive testing of data insertion, retrieval, and computation clearly demonstrates that the current system outperforms traditional power data analysis platforms. It effectively meets the performance demands of practical applications, providing a more efficient, scalable, and stable solution for large-scale power data analysis and processing.

This research presents a novel multi-level benefit evaluation model for power enterprises, integrating cloud computing technology and intelligent management techniques, which offers significant advancements compared to traditional evaluation methods. Conventional approaches tend to emphasize single-dimensional analyses focused solely on cost or efficiency. In contrast, this model adopts a comprehensive perspective by evaluating benefits across three key dimensions: the generation side, the grid side, and the user side. This hierarchical, multi-dimensional framework effectively captures the overall benefits of power enterprises during their digital transformation. Moreover, the model leverages cloud computing and IoT technologies to enable real-time data monitoring and intelligent scheduling optimization. These technological integrations not only enhance calculation efficiency and accuracy but also substantially improve resource utilization, cost savings, and system reliability. Compared to traditional methods, this integrated and innovative model facilitates more precise and dynamic benefit evaluations while reducing computational complexity. As a result, it supports the intelligent and precise development of power enterprise management and decision-making processes.

Additionally, the research investigates four types of new energy grid connection and absorption methods. It develops an economic benefit evaluation indicator system for new energy grid integration, centered on demand response and involving power users, grid operators, and power generators as principal stakeholders. Empirical analysis evaluates comprehensive absorption pathways for various new energy types and establishes their specific prioritization order41.

In traditional domains, the values of indicators in the positive domain matrix, interval domain positive domain matrix, and the to-be-tested positive domain matrix may differ significantly. Therefore, it is necessary to normalize the values of each indicator to facilitate efficient and consistent calculations42. For positive indicators, where a larger value is preferable (“larger is better”), normalization is performed as follows:

For negative indicators, where a smaller value is preferable (“smaller is better”), the normalization equation is:

In these equations, (\({\alpha }_{pi},{\beta }_{pi}\)) represents the range of values for the i-th indicator, known as its domain43.
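A minimal Python sketch of this normalization step is given below. It assumes the standard min-max form over the indicator's domain \(({\alpha }_{pi},{\beta }_{pi})\), which may differ in detail from the paper's Eqs. (4) and (5); clipping values to the domain and the function name `normalize` are additions made here for illustration.

```python
def normalize(value, lower, upper, larger_is_better=True):
    """Min-max normalize a raw indicator value to [0, 1] over its domain.

    lower/upper play the role of the domain bounds (alpha, beta).
    Positive indicators map the upper bound to 1; negative indicators
    ("smaller is better") map the lower bound to 1.
    """
    if upper <= lower:
        raise ValueError("domain must satisfy lower < upper")
    clipped = min(max(value, lower), upper)  # assumption: clip out-of-domain values
    score = (clipped - lower) / (upper - lower)
    return score if larger_is_better else 1.0 - score


# Hypothetical indicator with domain (50, 100) and raw value 60.
positive_score = normalize(60, 50, 100, larger_is_better=True)
negative_score = normalize(60, 50, 100, larger_is_better=False)
```

After this step every indicator lies on the same [0, 1] scale with 1 always meaning "best", so indicators with different units can be weighted and summed.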

Following normalization, the weights of each indicator are determined by constructing the judgment matrix A, which satisfies the following conditions:

In Eq. (6), \({\alpha }_{ij}\) represents the relative weight of indicator i compared to indicator j. The scale values and their corresponding qualitative descriptions are listed in Table 7.

The consistency index C1 is expressed as Equation (7):

In Eq. (7), \({\varphi }_{max}\) represents the maximum eigenvalue of the judgment matrix, and \(n\) is the order (size) of the matrix. To judge whether C1 is acceptably small, the random consistency index R1 is introduced44,45,46, and the consistency ratio CR = C1/R1 is computed; the judgment matrix is regarded as consistent when CR < 0.1. The corresponding values of R1 for different matrix orders are illustrated in Fig. 5.
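The weighting and consistency check can be sketched in Python as follows. The sketch assumes the standard AHP formulation: a reciprocal judgment matrix (unit diagonal, with entry (j, i) the reciprocal of entry (i, j)), weights taken from the principal eigenvector, a consistency index of (maximum eigenvalue minus n) divided by (n minus 1), and a consistency ratio equal to that index divided by the random index. The random index values are the commonly cited Saaty values and may differ from those in the paper's Fig. 5; power iteration and the example matrix are choices made here for illustration.

```python
# Commonly cited Saaty random index values (assumption; see lead-in).
RANDOM_INDEX = {2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12, 6: 1.24,
                7: 1.32, 8: 1.41, 9: 1.45}


def ahp_weights(matrix, iterations=200):
    """Return (weights, lambda_max, consistency_index, consistency_ratio).

    Expects a square reciprocal matrix of order 2 to 9.
    """
    n = len(matrix)
    weights = [1.0 / n] * n
    for _ in range(iterations):  # power iteration toward the principal eigenvector
        product = [sum(matrix[i][j] * weights[j] for j in range(n))
                   for i in range(n)]
        total = sum(product)
        weights = [value / total for value in product]
    product = [sum(matrix[i][j] * weights[j] for j in range(n))
               for i in range(n)]
    lambda_max = sum(product[i] / weights[i] for i in range(n)) / n
    consistency_index = (lambda_max - n) / (n - 1)
    random_index = RANDOM_INDEX[n]
    ratio = consistency_index / random_index if random_index > 0 else 0.0
    return weights, lambda_max, consistency_index, ratio


# Hypothetical 3 x 3 judgment matrix on the 1-9 scale.
judgment = [
    [1.0, 2.0, 4.0],
    [1 / 2, 1.0, 3.0],
    [1 / 4, 1 / 3, 1.0],
]
weights, lambda_max, ci, cr = ahp_weights(judgment)
is_consistent = cr < 0.1
```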

Results and discussion

Results

The Analytic Hierarchy Process is first applied to determine the subjective weights of each indicator. Through expert scoring, judgment matrices for the secondary indicators of efficiency assessment are constructed, as shown in Fig. 6.

From Fig. 6, the weights of the secondary indicators for efficiency evaluation in power enterprises are 0.221, 0.319, and 0.459, respectively. The consistency test results yield C1=0.0008 and CR=0.0013<0.1, confirming that the judgment matrix satisfies the consistency requirements.

The judgment matrix and weights for the tertiary indicators of economic efficiency on the generation side are shown in Fig. 7.

From Fig. 7, the weights of tertiary indicators for economic efficiency on the generation side are 0.435, 0.330, 0.113, and 0.122, respectively. The consistency test results show C1=0.005 and CR=0.006<0.1, indicating that the matrix has passed the consistency test.

Similarly, Fig. 8 presents the judgment matrix and weights for tertiary indicators related to economic efficiency on the grid side.

According to Fig. 8, the weights of tertiary indicators on the grid side are 0.128, 0.185, 0.182, and 0.505, respectively. The consistency test yields C1=0.036 and CR=0.039<0.1, confirming the matrix’s consistency.

The judgment matrix and weights for tertiary indicators on the user side are shown in Fig. 9.

From Fig. 9, the weights of tertiary indicators for economic efficiency on the user side are 0.582, 0.309, and 0.110, respectively. The consistency test results are C1=0.002 and CR=0.003<0.1, indicating good consistency.

These computed weights from the three indicator levels are integrated using the entropy weighting method, which adjusts and combines them with the weights of the secondary indicators to obtain the final composite weights. The statistical results are summarized in Fig. 10.
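The text does not spell out how the entropy weighting method is combined with the AHP weights, so the Python sketch below shows one common variant only, as an assumption: objective weights are computed from the dispersion of normalized indicator data, then multiplied by the subjective AHP weights and renormalized. The sample data are hypothetical, the function names are introduced here, and the AHP weights used are the user-side values reported above.

```python
import math


def entropy_weights(rows):
    """Entropy weights for a matrix of m samples (rows) by n indicators (columns).

    Values must be non-negative, each column must have a positive sum, and at
    least one column must vary across samples.
    """
    m, n = len(rows), len(rows[0])
    k = 1.0 / math.log(m)
    divergences = []
    for j in range(n):
        column = [row[j] for row in rows]
        total = sum(column)
        entropy = 0.0
        for value in column:
            p = value / total
            if p > 0:  # treat 0 * log(0) as 0
                entropy -= k * p * math.log(p)
        divergences.append(1.0 - entropy)  # more dispersion gives a larger weight
    divergence_sum = sum(divergences)
    return [d / divergence_sum for d in divergences]


def combine_weights(subjective, objective):
    """Multiplicative combination, renormalized to sum to 1 (assumed scheme)."""
    products = [s * o for s, o in zip(subjective, objective)]
    total = sum(products)
    return [p / total for p in products]


# Hypothetical normalized data: 3 samples x 3 user-side indicators.
samples = [
    [0.2, 0.5, 0.9],
    [0.4, 0.5, 0.1],
    [0.6, 0.5, 0.5],
]
objective = entropy_weights(samples)
combined = combine_weights([0.582, 0.309, 0.110], objective)
```

In this example the second indicator takes the same value in every sample, so it carries no discriminating information and its entropy weight is essentially zero, regardless of its AHP weight.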

Figure 10 illustrates the weight distribution of the multi-level indicators within the cloud-based electric power enterprise performance evaluation model, highlighting the relative contributions of different dimensions to overall enterprise performance. On the generation side, the indicator “Avoidable Generation Fuel Costs (A12)” holds the highest comprehensive weight (0.17), underscoring its key role in improving economic benefits by optimizing generation efficiency and reducing fossil fuel dependence. However, its final weight is comparatively low (0.04), suggesting that although this indicator is theoretically important, its overall contribution balances out in the comprehensive evaluation. On the grid side, “Enhancing System Load Factor (A24)” also attains a comprehensive weight of 0.17 and retains a relatively high final weight (0.08), highlighting its importance in enhancing grid resource utilization and minimizing redundant investments. On the user side, “Benefits of Policy Subsidies (A32)” commands the highest final weight of 0.06, reflecting significant economic benefits driven by policy incentives for energy-saving transformations. Conversely, indicators such as “User Equipment Investment Costs (A33)” maintain relatively low weights in both comprehensive and final evaluations, indicating weaker short-term returns.

Overall, this model establishes a hierarchical, multi-dimensional indicator system encompassing generation, grid, and user perspectives. By combining historical data and expert weighting with real-time processing and dynamic optimization on a cloud computing platform, it achieves a comprehensive evaluation of economic, social, and environmental benefits. Compared with traditional methods, this approach is better at identifying primary sources of benefits, adapting dynamically to real-world operating conditions, supporting efficient resource allocation and strategic decision-making, and promoting sustainable development throughout the digital and intelligent transformation of electric power enterprises.

Discussion

Azimi47 explored optimization strategies for power utility design platforms based on cloud computing and proposed a benefit evaluation model. Their study validated the model’s effectiveness through real-world cases, demonstrating improvements in platform performance and reductions in operational costs. Similarly, Li48 focused on performance optimization in cloud computing applications for power utilities, proposing innovative solutions through a detailed examination of system architecture and resource management. The experimental results indicated notable improvements in response time and overall platform efficiency. While both studies contributed significantly to cloud computing and the optimization of power utility platforms, they lack thorough validation in practical application contexts and do not fully integrate benefit evaluation models. In contrast, the present research emphasizes real-world applicability by incorporating platform optimization elements with a comprehensive benefit evaluation framework. This integration enhances the model’s practicality and feasibility, offering robust support for the digital transformation of power enterprises.

Most existing models tend to optimize a single objective, such as minimizing operational costs or improving equipment utilization, with limited consideration of multi-objective optimization. The model proposed in this research distinguishes itself by integrating multi-objective optimization algorithms with a benefit evaluation system. This enables the simultaneous optimization of various factors, including cost, efficiency, and sustainability, in complex power system environments. By incorporating cloud computing technologies, the model not only enhances data processing speed and resource utilization but also significantly increases the platform’s flexibility and adaptability, enabling it to better address the uncertainties encountered in the digital transformation of power enterprises. Table 8 presents a performance comparison of the proposed model with other optimization approaches.

Existing models for benefit evaluation predominantly emphasize economic indicators, often overlooking environmental and social dimensions. In contrast, the benefit evaluation model developed in this research adopts a more comprehensive approach, encompassing economic, environmental, and social aspects. By quantitatively analyzing key indicators such as demand response and the integration of new energy sources into the grid, the model offers a more robust foundation for decision-making that supports the sustainable development of power enterprises. For example, in assessing new energy grid integration pathways, the proposed model is capable of more precisely identifying the optimal balance between economic viability and environmental impact, thereby providing scientific support for formulating integrated energy management strategies. From a technical implementation perspective, unlike traditional models that rely on locally deployed computing infrastructure, the proposed model fully capitalizes on the elastic resource allocation and distributed computing capabilities of cloud computing. This significantly reduces hardware investment requirements and simplifies maintenance. Furthermore, by integrating real-time data monitoring and intelligent operation and maintenance management systems, the model enables dynamic resource allocation, thereby enhancing both the operational efficiency and reliability of the platform.

To facilitate a quantitative comparison, Table 8 translates qualitative descriptors into numerical values: “Yes”/“High” = 3, “Medium” = 2, “Low”/“Economic” = 1, and “No” = 0, with “Economic, Environmental, Social” also equating to 3.
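This encoding amounts to a simple lookup table, sketched below in Python. The score mapping follows the values stated above; the criterion names and the example row are hypothetical and do not reproduce Table 8.

```python
# Descriptor-to-score mapping as stated in the text.
SCORE_MAP = {
    "Yes": 3,
    "High": 3,
    "Economic, Environmental, Social": 3,
    "Medium": 2,
    "Low": 1,
    "Economic": 1,
    "No": 0,
}


def encode_row(row):
    """Translate one model's qualitative descriptors into numeric scores."""
    return {criterion: SCORE_MAP[descriptor] for criterion, descriptor in row.items()}


# Hypothetical row; the criterion names are illustrative only.
example_row = {
    "multi_objective": "Yes",
    "benefit_scope": "Economic",
    "flexibility": "Medium",
    "real_time_monitoring": "No",
}
encoded = encode_row(example_row)
```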

Figure 11 presents a bar chart comparing the performance of four optimization models across multiple dimensions.

As illustrated in Fig. 11, the proposed model consistently outperforms traditional local deployment, big data optimization, and smart grid optimization models across key evaluation criteria, demonstrating a significant comprehensive advantage. It supports multi-objective optimization, allowing for an effective balance among economic, environmental, and social benefits, thereby addressing the common limitation of single-objective focus in conventional models. Moreover, the use of lightweight optimization algorithms achieves high precision with low computational complexity. Architecturally, the model is deployed through a cloud computing platform using containerized services, which contributes to enhanced data processing speed, improved resource utilization, greater platform flexibility, and lower costs. This deployment approach also substantially reduces the complexity and cost of hardware maintenance. Additionally, the system supports real-time monitoring and intelligent operational control, thereby enhancing automation, system robustness, and overall performance. These advantages are rooted in the model’s innovative computational framework and advanced platform design, positioning it as superior to traditional models in terms of performance, scalability, and practical application in the digital transformation of power enterprises.

Conclusion

This research systematically constructs and validates a cloud computing platform that integrates benefit evaluation and performance optimization for the power sector. A multi-level indicator system is developed, covering the generation, grid, and user sides. By incorporating real-time data, expert-assigned weights, and lightweight optimization algorithms, the platform enables precise assessment of enterprise benefits and substantial improvements in system performance. Experimental results under various scenarios—including normal operation, high load, and fault conditions—demonstrate significant advantages in task processing speed, resource utilization, fault tolerance, and cost reduction. Specifically, the average task response time is reduced by 50%, system throughput increases by nearly 80%, and cost savings reach 43%. These improvements reflect the effectiveness of coordinated optimization in cloud resource scheduling, containerized management, and intelligent operation and maintenance. In addition, the research constructs an optimized model tailored to power platform operations, establishes a three-level indicator system, and verifies the internal consistency of the indicators. The secondary indicator weights for evaluating power enterprise performance are 0.221, 0.319, and 0.459, with a consistency index of 0.0008. For tertiary indicators of economic benefits on the user side, the weights are 0.582, 0.309, and 0.110, with a consistency test result of 0.002. Among the indicators, “avoidable fuel cost” on the generation side and “system load factor improvement” on the grid side emerge as critical factors, while policy incentives play a dominant role on the user side. 
This multidimensional, full-chain evaluation approach enables not only the quantitative assessment of operational benefits for power enterprises but also strategic guidance in resource allocation, thereby playing a vital role in advancing digital and intelligent transformation within the power industry.
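The weighting and consistency check summarized above can be illustrated with a minimal sketch. It assumes that the expert weights come from an AHP-style pairwise comparison matrix, which this section does not state explicitly. The matrix `pairwise`, the indicator scores, and all function names are hypothetical and are not the authors' data or implementation; the matrix was chosen only so that the resulting weights are of a similar magnitude to those reported.

```python
import math

# Saaty's random consistency index (RI) for matrix orders 1 to 5.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12}


def ahp_weights(matrix):
    """Approximate AHP priority weights with the row geometric-mean method."""
    n = len(matrix)
    geo_means = [math.prod(row) ** (1.0 / n) for row in matrix]
    total = sum(geo_means)
    return [g / total for g in geo_means]


def consistency(matrix, weights):
    """Return (CI, CR) for a pairwise comparison matrix and its weights."""
    n = len(matrix)
    if n <= 2:
        # Matrices of order 1 or 2 are always consistent.
        return 0.0, 0.0
    # Estimate the principal eigenvalue as the mean of (A w)_i / w_i.
    aw = [sum(a * w for a, w in zip(row, weights)) for row in matrix]
    lambda_max = sum(v / w for v, w in zip(aw, weights)) / n
    ci = (lambda_max - n) / (n - 1)
    cr = ci / RANDOM_INDEX[n]
    return ci, cr


def composite_score(weights, scores):
    """Weighted sum of normalized indicator scores in [0, 1]."""
    return sum(w * s for w, s in zip(weights, scores))


# Hypothetical expert judgments for three secondary indicators
# (illustrative only, not taken from the study).
pairwise = [
    [1.0, 2 / 3, 1 / 2],
    [3 / 2, 1.0, 2 / 3],
    [2.0, 3 / 2, 1.0],
]

weights = ahp_weights(pairwise)
ci, cr = consistency(pairwise, weights)
score = composite_score(weights, [0.7, 0.8, 0.9])  # hypothetical scores

print("weights:", [round(w, 3) for w in weights])
print("CI:", round(ci, 4), "CR:", round(cr, 4))
print("composite score:", round(score, 3))
```

Under this assumption, a consistency ratio below the conventional threshold of 0.1 indicates that the pairwise judgments are coherent enough for the derived weights to be used in the composite benefit score.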

Despite the model’s demonstrated strengths in performance and functionality, limitations remain in terms of scalability and extensibility. First, the platform’s scalability may be constrained when it is applied to large-scale, multi-regional electric power enterprise clusters, where bottlenecks in data synchronization, task scheduling, and edge node resource allocation may arise. Second, under frequent failures or large-scale network anomalies, the current fault recovery and rescheduling mechanisms may not fully satisfy stringent continuity requirements, indicating a need for enhanced system robustness. Third, although the model performs effectively within the power industry, extending it to other sectors, such as water resource management, transportation, or manufacturing, would require the indicator system and optimization logic to be redeveloped for domain-specific requirements. At its current stage, the model’s generalizability and cross-industry transferability remain limited.

To address these challenges, three directions for future work are proposed. First, integrating federated learning or other cross-domain data collaboration frameworks could enhance generalization in multi-regional and multi-entity data fusion scenarios. Second, incorporating edge computing technologies may improve real-time responsiveness and enable greater autonomy at terminal nodes. Third, embedding dynamic external factors, such as carbon asset management and energy market price fluctuations, into the evaluation framework could yield a more forward-looking and adaptive decision-support system. These enhancements are expected to improve the model’s practical utility, operational resilience, and cross-sector applicability, facilitating the broader adoption of intelligent energy platforms.

While the research demonstrates strong innovation in theoretical modeling and system architecture, as well as notable engineering applicability, it falls short in terms of reproducibility and alignment with open science practices. Although the paper provides detailed descriptions of platform structure, optimization mechanisms, and the indicator system, it does not disclose the original experimental datasets, source code, or a reproducibility package. This lack of transparency poses challenges for independent verification and extended evaluation by other researchers. As the scientific community increasingly emphasizes data openness, code sharing, and reproducibility tools, the absence of these components limits the broader dissemination and impact of the research. Future iterations of this research would benefit significantly from providing standardized data interfaces, open-source algorithm libraries, and executable experimental scripts. Such enhancements would improve its scientific rigor and reusability across disciplines, thereby supporting further advances in energy digitalization, intelligent scheduling, and collaborative innovation.

Data availability

The data presented in this study are available on request from the corresponding author.
