Abstract

Accurate localization and segmentation of the optic disc (OD) are crucial for the early detection of ophthalmic diseases such as glaucoma and diabetic retinopathy. Existing methods often struggle with variable image quality, high background noise, and insufficient edge information. To address these issues, an adaptive framework is proposed in which the Fast Circlet Transform (FCT) is combined with entropy-based features derived from retinal blood vessels for robust OD localization. Minkowski weighted K-means clustering is used to assess feature importance dynamically, improving resilience to dataset variations. Following localization, partial differential equation (PDE)-based image inpainting is employed for blood vessel removal, and the OD is segmented using the Chan-Vese active contour model. Localization efficacy is demonstrated through extensive evaluations on multiple public datasets (DRISHTI-GS, DRIONS-DB, IDRID, and ORIGA), and segmentation achieves Dice coefficients of 0.94–0.95 and Jaccard indices of 0.9 on the ORIGA and DRISHTI-GS datasets. These results highlight the robustness and generalizability of the proposed method for clinical applications in retinal image analysis.


Introduction

The timely detection and treatment of retinal disorders, including glaucoma, diabetic retinopathy, hypertensive retinopathy, and age-related macular degeneration, are crucial for preventing irreversible vision loss and associated complications. Among these conditions, glaucoma exemplifies the urgent need for early intervention, as it is characterized by progressive optic nerve damage that is frequently associated with elevated intraocular pressure. Current projections indicate that the number of people with glaucoma worldwide will increase to 111.8 million by 2040. The burden falls disproportionately on populations in Asia and Africa, where healthcare infrastructure and screening programs are often less accessible1. Consequently, the development of robust and accessible diagnostic tools has been identified as a critical priority in ophthalmology.

To address this pressing challenge, the Optic Disc (OD) is used as an important biomarker for assessing the severity of these diseases. Anatomically known as the Optic Nerve Head (ONH), the OD appears in fundus images as a roughly circular, typically yellowish-white region that is usually the brightest structure in the image. It is the point at which the retinal vasculature converges and the site where retinal nerve fibers exit the eye, which makes it a fundamental structure for clinical assessment.

Despite the OD’s distinctive morphological characteristics, including its circular shape, elevated brightness, and vascular convergence, many localization and segmentation methods struggle to delineate its boundaries and position accurately. Many traditional localization and segmentation techniques2,3 focus on detecting the circular shape of the OD; however, this approach becomes problematic for boundary-depleted images, in which the OD margins are poorly defined.

Prior to the advent of deep learning, various supervised and unsupervised algorithms were proposed4,5. While unsupervised methods have been shown to be robust against bright lesions, they are affected by vessel occlusion and imaging artifacts such as haze, blurry boundaries, and illumination variations. Traditional supervised learning methods are more robust to such distractions, but these shallow learning approaches depend on manually designed feature extractors, which rely heavily on expert experience and often lead to model overfitting6.

Despite the significant progress made by deep learning approaches7,8 in OD detection, their need for large amounts of training data limits robust classification, and determining optimal network hyperparameters remains a challenge. Given the significant variation in fundus images across geographical populations, unsupervised domain adaptation9 has been emphasized as necessary to ensure consistent performance across diverse datasets.

To circumvent these limitations, a novel technique is proposed that draws on the Fast Circlet Transform (FCT)10 and on entropy-based features for OD localization11. Although the FCT has previously been applied successfully to OD detection10,12, its performance degrades in challenging clinical scenarios. To improve performance, the FCT is integrated with entropy features, which capture the vascular convergence pattern at the OD.

In our approach, the OD is localized by targeting image patches with strong circular patterns (indicated by high FCT coefficients) and significant blood vessel convergence (characterized by high entropy values). A weighted linear combination of these features is computed for each image patch, with the weights learned adaptively using Minkowski weighted K-means clustering. This allows the system to adjust dynamically to diverse image conditions, providing a data-driven strategy that improves robustness over fixed-weight methods. Following OD center localization, an active contour-based segmentation strategy is implemented. First, a Partial Differential Equation (PDE)-based inpainting method is applied as pre-processing to reduce vascular interference; Chan-Vese active contour segmentation is then initialized at the detected center. The resulting hybrid methodology combines circlet features, vascular information, adaptive learning, and variational segmentation for precise OD delineation.

The key contribution of this work is a novel feature extraction and unsupervised adaptive localization method that addresses the practical limitations of deep learning approaches. Unlike deep models, which require large annotated datasets and high-end GPUs for training, the proposed method is robust across different datasets owing to its adaptive feature weighting and does not require retraining for new data. Most importantly, it is interpretable: it relies on clinically meaningful features, such as circular structure strength and vessel convergence, that clinicians can readily understand. These properties make the proposed approach well suited to clinical use.

The remainder of this paper is organized as follows: “Related works” reviews existing methods in the field. “Methodology” introduces the proposed unsupervised framework. “Datasets and evaluation metrics” describes the datasets and evaluation criteria used in the study. “Results” presents the performance outcomes, and “Discussion” covers comparative results and limitations. Finally, “Conclusion and future scope” summarizes the key contributions and outlines future research directions.

Related works

OD feature extraction methodologies are generally categorized into appearance-based methods, model-based methods, and pixel-based approaches13.

Appearance-based methods leverage color, intensity, and texture properties for OD detection. Automated OD detection was pioneered by Sinthanayothin et al.2, who converted RGB fundus images to the HSI color space and identified regions of maximum intensity variance. Blanco et al.3 subsequently improved robustness with the Fuzzy Circular Hough Transform (FCHT), in which fuzzy logic handles imperfect circular OD boundaries. The FCT was proposed by Chauris et al.10 to detect the OD efficiently by decomposing images into circular components; by analyzing circles in the frequency domain, it outperformed traditional circle detection algorithms such as the Hough Transform (HT). However, these methods struggle when the OD’s circular shape and color characteristics are distorted in pathological cases.

Model-based methods incorporate prior geometric knowledge of OD morphology, particularly by exploiting vessel convergence information. To account for anatomical variability, blood vessel components are extracted using various algorithms14,15,16,17,18,19,20,21, and the vessel convergence region is identified for OD detection. Mahfouz and Fahmy22 achieved ultrafast OD localization by projecting vessel orientation information onto the image axes. Zhang and Zhao23 combined vessel density and directional features with the HT. Panda et al.24 further refined the approach by exploiting global and local vessel symmetry patterns. Although these methods are efficient, they remain sensitive to vascular abnormalities: when retinal blood vessels are thin or absent, accurate OD localization may not be achieved.

Hybrid approaches, where multiple features are integrated, have gained considerable attention in recent years. Morphological operations were fused with the Circular Hough Transform (CHT) by Aquino et al.25, while line operators and level sets were combined for boundary detection by Ren et al.26. Confidence scores based on intensity and directional vessel features were introduced by Xiong and Li27, resulting in improved robustness in pathological images. Despite such advancements, hybrid methods are observed to struggle in the presence of low-contrast OD regions and retinal abnormalities.

The deep learning revolution has fundamentally transformed OD analysis, with pixel-based approaches employed for precise segmentation and localization at the pixel level. Hasan et al.28 developed DRNet, which uses Gaussian heatmaps for OD localization. Abdullah et al.4 integrated fuzzy clustering with active contours for segmentation. Transformer-based architectures such as JOINEDTrans5 and R-CNN frameworks7 have been shown to generalize effectively across diverse datasets. However, they require large annotated datasets, and domain adaptation challenges arise in clinical settings8.

Recently, unsupervised and lightweight methods have been introduced to bridge the gap between accuracy and practical usability. Zahoor and Fraz29 employed polar transforms for rapid localization, while Dinc and Kaya30 combined ConvNet region proposals with mathematical priors. Emerging foundation models such as the Fundus Segment Anything Model (FundusSAM) highlight the promise of unsupervised OD segmentation, offering greater flexibility across datasets31. Recent advances in attention modules and multi-scale fusion have also been used to enhance OD feature extraction. While these approaches deliver strong performance, they still depend on annotated data and often generalize poorly across diverse domains32,33. Additionally, fine boundary delineation and domain-specific variations remain challenging.

Despite the considerable progress made across all methodological categories, existing approaches continue to face persistent challenges: appearance-based methods fail under pathological variation; model-based techniques struggle with abnormal vasculature; deep learning-based models require large datasets and lack adaptability; and current unsupervised methods trade off accuracy for computational simplicity. This gap has motivated the development of our adaptive approach, in which the geometric robustness of the FCT is combined with entropy-based vascular information through dynamic feature weighting, thereby enabling both precision and practical applicability without extensive training requirements.

Methodology

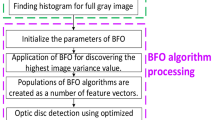

The proposed method is designed to integrate frequency-domain coefficients from the FCT with spatial-domain vessel entropy features, allowing the structural and anatomical characteristics of the OD to be captured. The methodology is divided into five key stages: image preprocessing based on color dominance analysis, patch-based FCT for circular structure detection, blood vessel entropy analysis for anatomical feature extraction, adaptive feature fusion for robust OD localization, and a segmentation pipeline.

Image preprocessing based on color dominance

Retinal fundus images exhibit significant variations in illumination, contrast, and color characteristics due to different acquisition systems, patient demographics, and pathological conditions. These variations can severely impact the performance of OD detection algorithms, as the disc’s circular boundary and vascular patterns may become obscured or distorted. Traditional preprocessing approaches using fixed color channels often fail to adapt to these diverse imaging conditions, leading to suboptimal feature extraction. In34, a preprocessing pipeline is proposed that addresses these challenges by automatically selecting the optimal color channels based on the specific characteristics of each image. This approach ensures consistent enhancement of crucial retinal structures across diverse datasets.

Following this approach, the input cropped fundus image \((512 \times 512)\), denoted as \(I_{\text {RGB}}\), is considered in the RGB color space. The variance of the blue channel B(i, j) is then computed to determine the image’s color dominance:

\[ \sigma^2 = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} \bigl(B(i, j) - \mu_B\bigr)^2 \]

where \(\mu _B\) is the mean intensity of the blue channel, and \(m \times n\) denotes the dimensions of the image. A low variance in the blue channel indicates red dominance (commonly observed in OD regions), while high variance suggests the presence of more chromatic variation, possibly due to pathology (e.g., exudates, hemorrhages). Using a threshold \(\theta = 1500\), if \(\sigma ^2 \le \theta\), the image is classified as red-dominant and the \(a^*\) channel (red-green) in Lab space is enhanced; otherwise, the \(b^*\) channel (blue-yellow) is enhanced34.
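A minimal sketch of the color-dominance test, assuming the blue channel is available as a list of rows of pixel intensities and that \(\sigma^2\) is the population variance, compared against the threshold \(\theta = 1500\) described above. The function names are hypothetical, and the subsequent Lab conversion and CLAHE steps are not shown.

```python
def blue_channel_variance(blue):
    """Population variance of the blue channel B(i, j), given as a list of rows."""
    values = [v for row in blue for v in row]
    mu_b = sum(values) / len(values)  # mean intensity of the blue channel
    return sum((v - mu_b) ** 2 for v in values) / len(values)


def select_lab_channel(blue, theta=1500.0):
    """Pick the Lab channel to enhance: 'a*' if red-dominant, else 'b*'."""
    return "a*" if blue_channel_variance(blue) <= theta else "b*"


# Two tiny synthetic blue channels: nearly uniform vs. strongly varying.
print(select_lab_channel([[40, 42], [41, 43]]))  # a* (variance 1.25)
print(select_lab_channel([[0, 200], [0, 200]]))  # b* (variance 10000)
```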

Subsequently, \(I_{\text {RGB}}\) is converted to L*a*b* space, and Contrast Limited Adaptive Histogram Equalization (CLAHE) is applied to the selected \(a^*\) or \(b^*\) channel. The enhanced L*a*b* image is then converted back to RGB space. The enhanced RGB image is split, and CLAHE is applied to the green channel G(i, j) due to its superior vessel visibility and reduced noise susceptibility. The green channel closely resembles the lightness component and exhibits high contrast for vessels and OD regions. To remove noise while preserving edges, a bilateral filter is applied.

The bilateral filter smooths flat regions while retaining edge features such as vessel boundaries and OD edges. Finally, brightness and contrast are adjusted automatically using a linear transformation:

\[ \text{Gray}'(i, j) = \alpha \cdot \text{Gray}(i, j) + \beta, \]

where \(\alpha = \frac{255}{\text {Gray}_{\max } - \text {Gray}_{\min }}, \quad \beta = -\text {Gray}_{\min } \cdot \alpha\). This scaling ensures dynamic range expansion and standardized intensity distribution, thereby enhancing the visibility of anatomical structures before entropy and circlet-based analysis. Figure 1 illustrates the preprocessing effect, where retinal blood vessels and OD pixel intensities are visibly enhanced, facilitating more effective feature detection.
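The linear stretch with the \(\alpha\) and \(\beta\) defined above can be sketched as follows, assuming a grayscale image stored as nested lists. Mapping a constant image to zeros is a hypothetical choice made here to avoid dividing by zero; a real pipeline would also round and clip the result to 8-bit values.

```python
def stretch_contrast(gray):
    """Linear stretch alpha * Gray + beta that maps [Gray_min, Gray_max] onto [0, 255]."""
    flat = [v for row in gray for v in row]
    g_min, g_max = min(flat), max(flat)
    if g_max == g_min:
        # Constant image: alpha is undefined, so return zeros (assumption).
        return [[0.0 for _ in row] for row in gray]
    alpha = 255.0 / (g_max - g_min)
    beta = -g_min * alpha
    return [[alpha * v + beta for v in row] for row in gray]


print(stretch_contrast([[50, 100], [150, 50]]))  # darkest pixel -> 0, brightest -> 255
```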

Patch-based FCT for circular structure detection

The FCT is a specialized algorithm for circular structure detection introduced by Chauris et al.10. Unlike traditional approaches such as the Hough Transform (HT), the FCT decomposes images into circular components across various radii and frequency scales while accounting for the finite frequency content of features. This enables efficient detection of imperfect circular shapes in noisy environments, making it particularly valuable for applications in medical imaging, astronomy, and oceanography10,12,35,36.

The FCT methodology is based on frequency-domain filter design. The process begins with the construction of one-dimensional frequency-domain filters \(H_j(\xi )\), which partition the frequency domain to isolate bands associated with circular structures:

where \(j\) represents the scale index, \(N\) denotes the number of filters, and \(\xi _j = \frac{j - 1}{N - 1}\). The partition of unity property ensures complete coverage of the frequency domain without amplification or attenuation of any frequency component. These 1-D filters are then extended to two dimensions through a phase shift operation:

where \(\xi = (\xi _1, \xi _2)\), \(|\xi | = \sqrt{\xi _1^2 + \xi _2^2}\), and \(r_m\) represents the radius of the target circular structure. These 2-D filters are designed to satisfy perfect reconstruction:

Subsequently, the preprocessed input image \(I_{\text {enhanced}}\) is converted to grayscale \(g(x, y)\). Then, the 2D Fast Fourier Transform (FFT) is applied to \(g(x, y)\) to obtain its frequency representation:

For each combination of radius \(r_m\) and scale \(j\), the transformed image is filtered:

where \(n = (r_m, x_p, j)\) encodes the radius, center position, and scale. The inverse FFT is then applied to obtain the circlet coefficients:

FCT-based approaches typically identify the OD location by selecting the pixel with the maximum circlet coefficient across the entire image. However, this global approach often fails when the OD region exhibits low contrast or is partially obscured, as pathological conditions tend to distort the circular appearance of the OD.

This issue is addressed by dividing the FCT coefficients into patches and analyzing each independently. In the proposed method, local contrast differences are exploited, reducing the impact of global illumination variations while enabling the detection of subtle circular features that may be missed in a global analysis. Additionally, patch-level entropy features are incorporated. In contrast to previous works that use radii ranging from 30 to 80 pixels (targeting the OD itself), this method focuses on the optic cup using smaller radii of \(r = 10\) and \(r = 20\) pixels. This choice is anatomically justified, as the optic cup represents the central depression within the OD and typically comprises one-third to one-half of the OD diameter.

The analysis is further constrained to scale \(j = 2\) based on experimental results indicating that lower scales (\(j = 1\)) capture excessive high-frequency noise, while higher scales (\(j \ge 3\)) yield minimal additional information at the expense of increased computational cost.
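The scales \(j\) correspond to the bands of the 1-D filter bank \(H_j(\xi)\) on the grid \(\xi_j = (j-1)/(N-1)\). Since the exact filter profile is not reproduced in this text, the sketch below builds one possible smooth filter bank from squared-cosine windows and checks the partition-of-unity property numerically. The window shape is an illustrative assumption and may differ from the filters of Chauris et al.; the function name is hypothetical.

```python
import math


def filter_bank(xi, n_filters):
    """Evaluate N squared-cosine windows H_1..H_N at normalized frequency xi.

    Window j is centred at xi_j = (j - 1) / (N - 1) and is non-zero only within
    one grid spacing of its centre, so at most two windows overlap anywhere.
    """
    h = 1.0 / (n_filters - 1)      # spacing between consecutive centres
    xi = min(max(xi, 0.0), 1.0)    # clamp to the normalized band [0, 1]
    values = []
    for j in range(1, n_filters + 1):
        t = abs(xi - (j - 1) * h) / h  # distance to the centre, in grid spacings
        values.append(math.cos(0.5 * math.pi * t) ** 2 if t < 1.0 else 0.0)
    return values


# Between two centres the overlapping windows are cos^2 and sin^2 of the same
# angle, so the filters sum to one at every frequency.
print(max(abs(sum(filter_bank(k / 100, 5)) - 1.0) for k in range(101)))
```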

This parameter selection optimizes the trade-off between detection accuracy and computational efficiency. The patch-based FCT implementation divides the coefficient map \(c_n(x, y)\) into 100 non-overlapping patches \(P_i\), each measuring \(60 \times 60\) pixels. The patch size is chosen to ensure that even the smallest ODs in standard fundus images are wholly contained within at least one patch. For each patch, the maximum circlet coefficient from both radii at scale \(j = 2\) is extracted:

where \(i = 1, 2, \dots , 100\) indexes the patches. This approach enables the detection of circular structures even when they are located at patch boundaries or exhibit size variations.

The complete feature set from all patches and both radii is defined as:

where the superscripts denote the radius values.
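The patch-wise maximum can be sketched as below for a single coefficient map (one radius at scale \(j = 2\)); calling it once per radius yields the two circlet features per patch. The sketch assumes an even 10 × 10 grid: on a 512 × 512 map this gives patches of roughly 51 × 51 pixels rather than 60 × 60, so the exact tiling used in the method may differ. The function name is hypothetical.

```python
def patch_maxima(coeff_map, grid=10):
    """Maximum of a 2-D coefficient map inside each cell of a grid x grid tiling.

    Returns grid * grid values in row-major order (patch 0 is top-left).
    Assumes the map has at least `grid` rows and `grid` columns.
    """
    rows, cols = len(coeff_map), len(coeff_map[0])
    # Cell boundaries spread as evenly as possible over the map.
    r_edges = [round(k * rows / grid) for k in range(grid + 1)]
    c_edges = [round(k * cols / grid) for k in range(grid + 1)]
    maxima = []
    for a in range(grid):
        for b in range(grid):
            maxima.append(max(
                coeff_map[r][c]
                for r in range(r_edges[a], r_edges[a + 1])
                for c in range(c_edges[b], c_edges[b + 1])))
    return maxima


demo = [[1, 2, 3, 4], [5, 6, 7, 8], [9, 10, 11, 12], [13, 14, 15, 16]]
print(patch_maxima(demo, grid=2))  # one maximum per 2 x 2 quadrant
```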

Blood vessel entropy analysis for anatomical feature extraction

To enhance detection accuracy across diverse imaging conditions, complementary entropy features are incorporated alongside FCT features. These entropy features are derived from retinal blood vessel patterns, a key anatomical characteristic that remains relatively consistent even under challenging imaging conditions. The convergence of major retinal blood vessels at the OD creates a distinctive vascular pattern that can be leveraged for localization. This anatomical arrangement persists even when the circular shape of the OD is distorted by pathology or when image contrast deteriorates. By analyzing the entropy of vessel distributions across image patches, regions of high vascular complexity, which indicate the OD region, can be identified effectively.

A comprehensive approach to vessel segmentation is adopted, extending beyond conventional green-channel analysis. The methodology proposed by Coye37 is utilized, which leverages the complete color information via a weighted L*a*b* color model processed through Principal Component Analysis (PCA). The resulting binary image B contains segmented vessels (value = 1) against the background (value = 0), capturing the retinal vascular network with high fidelity.

To maintain consistency with the FCT analysis framework, the binary vessel image B is divided into the same set of 100 non-overlapping patches \(P_i\), each of size \(60 \times 60\) pixels. For each patch, the entropy \(H_i\) is calculated to quantify the randomness and complexity of the vessel distribution:

\[ H_i = -p_0 \log_2 p_0 - p_1 \log_2 p_1, \qquad \text{with } 0 \log_2 0 := 0, \]

where \(p_0\) represents the proportion of background pixels (value = 0) and \(p_1\) represents the proportion of vessel pixels (value = 1) in patch \(P_i\).

Entropy serves as an effective measure of vessel pattern complexity and exhibits several key properties relevant to OD localization. High entropy values (\(H_i \approx 1\)) indicate patches with nearly equal proportions of vessel and background pixels, typically observed at the OD boundary where vessels enter and branch. Medium entropy values occur in regions with moderate vessel density, such as areas adjacent to the OD. Low entropy values correspond to patches dominated by either vessels or background, as commonly seen in peripheral retinal regions.
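A minimal sketch of the patch entropy, assuming a binary vessel patch stored as rows of 0/1 values and the usual convention \(0 \log_2 0 = 0\); the function name is hypothetical.

```python
import math


def patch_entropy(patch):
    """Shannon entropy (in bits) of a binary vessel patch given as rows of 0/1."""
    values = [v for row in patch for v in row]
    p1 = sum(values) / len(values)  # proportion of vessel pixels
    p0 = 1.0 - p1                   # proportion of background pixels
    # Terms with p = 0 are skipped, i.e. 0 * log2(0) is treated as 0.
    return sum(-p * math.log2(p) for p in (p0, p1) if p > 0)


print(patch_entropy([[0, 1], [1, 0]]))  # 1.0: half vessel, half background
print(patch_entropy([[0, 0], [0, 0]]))  # 0.0: no vessels at all
```

This reproduces the behavior described above: a balanced mix of vessel and background pixels reaches the maximum of 1 bit, while a patch dominated by either class approaches 0.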

The complete vessel-based feature set is defined as:

For each patch, the entropy value summarizes the available vessel information and complements the FCT-derived circularity features. Combining the two improves the identification of OD candidates. A weighted linear combination of these feature sets is then computed, and the patch with the maximum combined response is selected as the candidate OD location.

Adaptive feature fusion for robust OD localization

The complete feature set Y is constructed by concatenating the FCT-derived features with the vessel entropy features:

This unified feature space captures both structural (circular) and anatomical (vascular) characteristics of the OD region. The optimal patch containing the OD is identified by evaluating a weighted linear combination of the extracted features:

where \(w_1\), \(w_2\), and \(w_3\) denote the weights associated with each feature. Initially, equal weights (\(w_1 = w_2 = w_3 = \frac{1}{3}\)) are employed, yielding promising results across several test cases. To further improve robustness under varying imaging conditions, a Minkowski weighted K-means clustering algorithm is implemented. This algorithm adaptively learns the optimal weights based on the specific characteristics of each image, enabling dynamic feature weighting.

By leveraging the complementary strengths of FCT-based circular structure detection and entropy-based vascular analysis, the proposed adaptive fusion strategy improves OD localization even in the presence of image noise, illumination variability, or pathological deformation.
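The fusion and selection steps can be sketched as a weighted sum followed by an argmax, assuming the three per-patch feature lists (the two circlet maxima and the entropy) have already been computed. Because circlet coefficients and entropies live on different scales, the sketch includes an optional min-max normalization; this normalization is an assumption, not a step stated in the text. Patch indices are 0-based here, and all names are hypothetical.

```python
def min_max(values):
    """Rescale a list to [0, 1]; a constant list maps to zeros (assumption)."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0] * len(values)
    return [(v - lo) / (hi - lo) for v in values]


def select_od_patch(f10, f20, entropy, weights=(1 / 3, 1 / 3, 1 / 3), normalize=True):
    """Return (best_index, scores) for the weighted linear combination of features."""
    if normalize:
        f10, f20, entropy = min_max(f10), min_max(f20), min_max(entropy)
    w1, w2, w3 = weights
    scores = [w1 * a + w2 * b + w3 * h for a, b, h in zip(f10, f20, entropy)]
    best = max(range(len(scores)), key=scores.__getitem__)  # argmax over patches
    return best, scores


best, _ = select_od_patch([0.2, 0.9, 0.1], [0.3, 0.8, 0.2], [0.5, 0.9, 0.1])
print(best)  # patch 1 has the strongest combined evidence
```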

The patch corresponding to the maximum linear combination score is selected as the final OD location:

Adaptive feature weighting

To determine suitable feature weights, the Minkowski weighted K-means algorithm is adopted; it clusters the patches in feature space according to the information available in each patch. As an unsupervised method, it does not require labeled data and effectively exploits the structure present in unlabeled datasets38,39,40,41.

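The algorithm minimizes the following objective function:

\[ W(S, C, w) = \sum_{k=1}^{K} \sum_{i=1}^{n} \sum_{v=1}^{M} s_{ik}\, w_v^{\gamma}\, |z_{iv} - c_{kv}|^{\gamma}, \qquad \text{subject to } \sum_{v=1}^{M} w_v = 1, \]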

where \(\text {K} = 3\) is the number of clusters, \(n = 100\) is the number of patches, \(M = 3\) is the number of features, \(s_{ik}\) indicates the assignment of patch i to cluster k (1 if assigned, 0 otherwise), \(z_{iv}\) denotes the value of feature v for patch i, \(c_{kv}\) is the centroid of cluster k for feature v, \(w_v\) is the weight for feature v, and \(\gamma\) is the Minkowski exponent adaptively selected via silhouette analysis.

To ensure stable clustering, an anomalous cluster initialization strategy is employed. A reference point is computed as the mean of all patch features, and the patch farthest from this reference is identified. Centroids are initialized by iteratively selecting points from the largest clusters, enhancing convergence stability.

The algorithm proceeds through three main iterative steps:

Cluster Assignment: Each patch is assigned to the nearest centroid using the weighted Minkowski distance:

\[ d(z_i, c_k) = \sum_{v=1}^{M} w_v^{\gamma}\, |z_{iv} - c_{kv}|^{\gamma} \]

Centroid Update: Cluster centroids are recalculated as the mean of the features of the assigned patches:

\[ c_{kv} = \frac{\sum_{i=1}^{n} s_{ik}\, z_{iv}}{\sum_{i=1}^{n} s_{ik}} \]

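Weight Optimization: Feature weights are updated to minimize the objective function:

\[ w_v = \frac{1}{\sum_{u=1}^{M} \left( E_v / E_u \right)^{1/(\gamma - 1)}}, \qquad E_v = \sum_{k=1}^{K} \sum_{i=1}^{n} s_{ik}\, |z_{iv} - c_{kv}|^{\gamma}, \]

where \(E_v\) represents the within-cluster dispersion for feature v (the update requires \(\gamma > 1\)).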
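The three iterative steps can be sketched as a small, self-contained Minkowski weighted K-means. For simplicity the sketch fixes \(\gamma\) instead of selecting it by silhouette analysis, replaces the anomalous-cluster initialization with a deterministic max-min seeding, and updates centroids as arithmetic means as described above (for \(\gamma \neq 2\) the exact minimizer would be a Minkowski center instead). A small constant is added to each dispersion to avoid division by zero; all names are hypothetical.

```python
def mwk_means(data, k=3, gamma=2.0, max_iter=100, eps=1e-12):
    """Minkowski weighted K-means sketch; returns (labels, centroids, weights).

    data: list of n feature vectors (lists of M floats); gamma must exceed 1.
    """
    n, m = len(data), len(data[0])
    weights = [1.0 / m] * m  # start from equal feature weights

    def dist(x, c, w):
        # Weighted Minkowski distance: sum_v w_v^gamma * |x_v - c_v|^gamma.
        return sum((w[v] * abs(x[v] - c[v])) ** gamma for v in range(m))

    # Simplified deterministic seeding (stand-in for anomalous-cluster init):
    # farthest point from the grand mean, then repeated max-min selection.
    grand = [sum(x[v] for x in data) / n for v in range(m)]
    first = max(range(n), key=lambda i: dist(data[i], grand, weights))
    centroids = [list(data[first])]
    while len(centroids) < k:
        nxt = max(range(n), key=lambda i: min(dist(data[i], c, weights) for c in centroids))
        centroids.append(list(data[nxt]))

    labels = None
    for _ in range(max_iter):
        # 1) Cluster assignment with the current weights.
        new_labels = [min(range(k), key=lambda j: dist(x, centroids[j], weights)) for x in data]
        if new_labels == labels:
            break
        labels = new_labels
        # 2) Centroid update: mean of the assigned points (old centroid kept if empty).
        for j in range(k):
            members = [x for x, lab in zip(data, labels) if lab == j]
            if members:
                centroids[j] = [sum(x[v] for x in members) / len(members) for v in range(m)]
        # 3) Weight update from the within-cluster dispersion E_v of each feature.
        disp = [sum(abs(x[v] - centroids[lab][v]) ** gamma for x, lab in zip(data, labels)) + eps
                for v in range(m)]
        weights = [1.0 / sum((disp[v] / disp[u]) ** (1.0 / (gamma - 1.0)) for u in range(m))
                   for v in range(m)]
    return labels, centroids, weights
```

Features whose values are tight within clusters (small \(E_v\)) receive large weights, whereas a feature that varies as much within clusters as between them is pushed toward zero weight.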

Using the optimized weights, the weighted linear combination defined in Equation 1 is applied to each patch. The patch with the highest score, as defined in Equation 2, is selected as the candidate OD region. This patch, denoted as \(\text {Patch}_{\text {max}}\), reflects the strongest combined evidence from both circular structure detection and vascular pattern complexity.

The proposed adaptive weighting scheme automatically emphasizes the most discriminative features while suppressing noisy ones: features with a smaller within-cluster dispersion \(E_v\) receive larger weights, which enhances robustness across diverse datasets without manual parameter tuning. In scenarios where FCT-derived features are more informative than entropy features, the algorithm inherently assigns higher weights to the FCT terms, and vice versa.

Finally, the maximum-intensity pixel within the selected patch is identified, and its coordinates \((x_0, y_0)\) are taken as the localized OD center. This point is subsequently used to initialize the active contour model for precise OD segmentation.
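Locating the brightest pixel inside the selected patch can be sketched as follows, assuming the patch is given by its top-left corner and side length on a grayscale image stored as nested lists. Which image is searched (for example, the preprocessed grayscale image) is an assumption, and the helper name is hypothetical. Returning (column, row) matches the \((x_0, y_0)\) convention.

```python
def brightest_pixel(image, row0, col0, size):
    """Return (x0, y0) of the maximum-intensity pixel in a square patch.

    image: grayscale image as a list of rows; (row0, col0) is the patch's
    top-left corner and size its side length (clipped at the image border).
    """
    best_r, best_c = row0, col0
    for r in range(row0, min(row0 + size, len(image))):
        for c in range(col0, min(col0 + size, len(image[0]))):
            if image[r][c] > image[best_r][best_c]:  # first maximum wins ties
                best_r, best_c = r, c
    return best_c, best_r  # x is the column index, y is the row index


img = [[0] * 4 for _ in range(4)]
img[3][2] = 9
print(brightest_pixel(img, 2, 2, 2))  # (2, 3)
```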

OD segmentation pipeline

Building upon the accurately localized OD center \((x_0, y_0)\) obtained from the hybrid FCT and entropy-based approach, a two-stage segmentation pipeline is implemented to address the challenges posed by retinal vasculature and pathological distortions. The process sequentially performs vessel removal and OD boundary segmentation as described below.

Vessel removal via PDE-based inpainting

In fundus images, blood vessels overlapping the OD boundary introduce intensity discontinuities, which adversely impact the performance of active contour-based segmentation techniques such as the Chan-Vese method. These discontinuities often cause contour leakage or convergence to incorrect boundaries. To mitigate this issue, a PDE-based inpainting approach is employed as a preprocessing step to selectively smooth vessel regions while preserving the global OD morphology42.

The original grayscale image is represented as \(f(x, y): \Omega \rightarrow \mathbb {R}\), where \(\Omega = [0, W] \times [0, H] \subset \mathbb {R}^2\), with W and H denoting the image width and height, respectively. A binary vessel mask \(B(x, y): \Omega \rightarrow \{0, 1\}\) is used to identify vessel pixels in the image. The inpainting domain is defined as \(D = \{ (x, y) \in \Omega \ | \ B(x, y) = 1 \}\), corresponding to pixels occupied by vessels requiring restoration. The complement \(\Omega \setminus D\) defines the known, vessel-free regions.

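The objective is to compute an inpainted image \(u(x, y, t)\), which evolves over artificial time \(t\) according to the heat equation with a data fidelity term:

\[ \frac{\partial u}{\partial t} = \lambda\, \Delta u + \chi_{\Omega \setminus D}\,(f - u) \quad \text{in } \Omega, \]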

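where \(\lambda\) controls the diffusion rate, \(\Delta u\) is the Laplacian of \(u\), and \(\chi _{\Omega \setminus D}\) is the characteristic function defined as:

\[ \chi_{\Omega \setminus D}(x, y) = \begin{cases} 1, & (x, y) \in \Omega \setminus D, \\ 0, & (x, y) \in D. \end{cases} \]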

In regions where \(\chi = 1\), the evolution of \(u\) is driven toward the original image \(f\), thereby preserving the intensities in known areas. In contrast, within vessel regions (\(\chi = 0\)), the evolution is governed solely by diffusion, promoting smooth intensity propagation from neighboring pixels. The initial condition is given by \(u(x, y, 0) = f(x, y)\), for all \((x, y) \in \Omega .\)

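To prevent artifacts near image boundaries, homogeneous Neumann boundary conditions are imposed:

\[ \frac{\partial u}{\partial x} = 0 \ \text{at } x \in \{0, W\}, \qquad \frac{\partial u}{\partial y} = 0 \ \text{at } y \in \{0, H\}, \]

where W and H denote the image width and height, respectively. These conditions ensure zero intensity flux across the boundaries.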

The PDE is solved iteratively using explicit finite differences, and the iteration continues until convergence, determined by the tolerance criterion \(\Vert u^{n+1} - u^n\Vert < \varepsilon\). The resulting inpainted image exhibits reduced vessel artifacts near the OD boundary, thereby facilitating robust segmentation using active contours.
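A minimal explicit finite-difference sketch of the inpainting step, assuming the update \(u^{n+1} = u^n + \Delta t\,[\lambda \Delta u^n + \chi (f - u^n)]\) with a five-point Laplacian, replicated border pixels to realize the zero-flux Neumann condition, and the maximum absolute change as the norm in the stopping test. The parameter values are illustrative; with \(\lambda = 1\), a step of \(\Delta t = 0.2\) keeps this explicit scheme stable. The function name is hypothetical.

```python
def inpaint_vessels(f, mask, lam=1.0, dt=0.2, tol=1e-4, max_iter=5000):
    """Explicit scheme for du/dt = lam * Laplacian(u) + chi * (f - u).

    f: grayscale image (list of rows); mask: 1 on vessel pixels (domain D), 0 elsewhere.
    """
    h, w = len(f), len(f[0])
    u = [[float(v) for v in row] for row in f]  # initial condition u(x, y, 0) = f(x, y)
    for _ in range(max_iter):
        new = [[0.0] * w for _ in range(h)]
        change = 0.0
        for y in range(h):
            for x in range(w):
                # Out-of-range neighbours reuse the pixel itself: zero flux (Neumann).
                up = u[max(y - 1, 0)][x]
                down = u[min(y + 1, h - 1)][x]
                left = u[y][max(x - 1, 0)]
                right = u[y][min(x + 1, w - 1)]
                lap = up + down + left + right - 4.0 * u[y][x]  # five-point Laplacian
                chi = 0.0 if mask[y][x] else 1.0  # fidelity only outside the vessels
                new[y][x] = u[y][x] + dt * (lam * lap + chi * (f[y][x] - u[y][x]))
                change = max(change, abs(new[y][x] - u[y][x]))
        u = new
        if change < tol:  # max-norm stopping test ||u^{n+1} - u^n|| < tol
            break
    return u


# A dark one-pixel-wide "vessel" column in an otherwise uniform image is filled in.
f = [[0.0 if x == 3 else 100.0 for x in range(8)] for _ in range(8)]
mask = [[1 if x == 3 else 0 for x in range(8)] for _ in range(8)]
print(round(inpaint_vessels(f, mask)[4][3], 1))
```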

In this approach, PDE-based inpainting is used as a preprocessing step to improve the performance and convergence of the Chan-Vese algorithm. Following inpainting, the green channel is discarded to suppress residual vessel artifacts. A weighted linear combination of the red and blue channels is computed to enhance the intensity transitions at the OD boundary. The enhanced image \(I_w\) is defined as:

where \(I_R\) and \(I_B\) denote the red and blue channels, respectively, of the inpainted image. This vessel-suppressed image \(I_w\) is used as input to the Chan-Vese segmentation method. An example of the resulting vessel-free fundus image is illustrated in Fig. 2.

Boundary segmentation via Chan-Vese model

Following vessel removal, the Chan-Vese segmentation method is applied to accurately delineate the OD region from the vessel-free image \(I_w(x, y)\). This active contour technique formulates segmentation as a piecewise constant approximation problem and evolves a contour \(C\) that separates regions of homogeneous intensity by minimizing an energy functional43.

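The Chan-Vese energy functional is given by:

\[ F(\alpha_1, \alpha_2, C) = \mu \cdot \text{Length}(C) + \lambda_1 \int_{\text{inside}(C)} |I_w(x, y) - \alpha_1|^2 \, dx\, dy + \lambda_2 \int_{\text{outside}(C)} |I_w(x, y) - \alpha_2|^2 \, dx\, dy \]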

where \(\alpha _1\) and \(\alpha _2\) denote the average intensities inside and outside the contour \(C\), respectively. The parameter \(\mu\) controls the smoothness of the contour, while \(\lambda _1\) and \(\lambda _2\) govern the data fidelity terms inside and outside the contour.

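To facilitate numerical implementation, the contour \(C\) is represented implicitly as the zero level set of a Lipschitz-continuous function \(\varphi\), such that \(C = \{ (x,y) \in \Omega : \varphi (x,y) = 0 \}\), with \(\varphi > 0\) inside \(C\). The segmentation functional is reformulated in terms of \(\varphi\) using a regularized Heaviside function \(H_\epsilon (\varphi )\) and its derivative, the Dirac delta function \(\delta _\epsilon (\varphi )\):

\[ F_\epsilon(\alpha_1, \alpha_2, \varphi) = \mu \int_\Omega \delta_\epsilon(\varphi)\, |\nabla \varphi| \, dx\, dy + \lambda_1 \int_\Omega |I_w - \alpha_1|^2 H_\epsilon(\varphi)\, dx\, dy + \lambda_2 \int_\Omega |I_w - \alpha_2|^2 \bigl(1 - H_\epsilon(\varphi)\bigr)\, dx\, dy \]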

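The regularized Heaviside function is defined as:

\[ H_\epsilon(z) = \frac{1}{2}\left(1 + \frac{2}{\pi} \arctan\left(\frac{z}{\epsilon}\right)\right), \]

and the corresponding regularized Dirac delta function is given by:

\[ \delta_\epsilon(z) = H_\epsilon'(z) = \frac{1}{\pi} \cdot \frac{\epsilon}{\epsilon^2 + z^2}. \]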

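For a fixed \(\varphi\), the optimal values of \(\alpha _1\) and \(\alpha _2\) are the mean intensities within the regions defined by \(H_\epsilon (\varphi )\):

\[ \alpha_1 = \frac{\int_\Omega I_w\, H_\epsilon(\varphi)\, dx\, dy}{\int_\Omega H_\epsilon(\varphi)\, dx\, dy}, \qquad \alpha_2 = \frac{\int_\Omega I_w \bigl(1 - H_\epsilon(\varphi)\bigr)\, dx\, dy}{\int_\Omega \bigl(1 - H_\epsilon(\varphi)\bigr)\, dx\, dy}. \]

Subsequently, the level set function \(\varphi\) is evolved using gradient descent as follows:

\[ \frac{\partial \varphi}{\partial t} = \delta_\epsilon(\varphi) \left[ \mu\, \operatorname{div}\!\left( \frac{\nabla \varphi}{|\nabla \varphi|} \right) - \lambda_1 (I_w - \alpha_1)^2 + \lambda_2 (I_w - \alpha_2)^2 \right] \]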

This iterative update continues until convergence is achieved, resulting in a stable contour that accurately segments the OD region by balancing the contour’s smoothness with the fidelity to image intensities.

The parameters \(\mu , \lambda _1, \lambda _2\), and \(\epsilon\) are empirically selected based on the image quality and resolution. Upon convergence, the largest segmented circular region is extracted and designated as the final OD mask.
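The level-set evolution can be sketched on a small grayscale image as below. The sketch assumes the arctan-regularized Heaviside \(H_\epsilon(z) = \tfrac{1}{2}\bigl(1 + \tfrac{2}{\pi}\arctan(z/\epsilon)\bigr)\) with its derivative as the Dirac function, central differences for the curvature term, clamped (replicated) borders, and a circular initial contour of hypothetical radius around the detected center. Parameter values are illustrative, and the final step of keeping the largest circular region is not shown; for real images, a tested implementation such as `skimage.segmentation.chan_vese` in scikit-image may be preferable.

```python
import math


def heaviside(z, eps=1.0):
    """Arctan-regularized Heaviside function H_eps(z)."""
    return 0.5 * (1.0 + (2.0 / math.pi) * math.atan(z / eps))


def dirac(z, eps=1.0):
    """Derivative of heaviside(): the regularized Dirac delta."""
    return eps / (math.pi * (eps * eps + z * z))


def chan_vese(img, center, radius, mu=0.1, lam1=1.0, lam2=1.0,
              eps=1.0, dt=0.5, n_iter=200):
    """Evolve a Chan-Vese level set; return the binary mask phi > 0.

    img: grayscale image as rows of floats; center: (row, col) of the detected
    OD centre; radius: radius of the initial circular contour.
    """
    h, w = len(img), len(img[0])
    cy, cx = center
    # Positive inside the initial circle, negative outside.
    phi = [[radius - math.hypot(y - cy, x - cx) for x in range(w)] for y in range(h)]

    def at(y, x):
        # Out-of-range indices are clamped to the border (replicated ghost pixels).
        return phi[min(max(y, 0), h - 1)][min(max(x, 0), w - 1)]

    for _ in range(n_iter):
        # Region means alpha_1 (inside) and alpha_2 (outside) for the current phi.
        hs = [[heaviside(phi[y][x], eps) for x in range(w)] for y in range(h)]
        inside = sum(map(sum, hs))
        a1 = sum(img[y][x] * hs[y][x] for y in range(h) for x in range(w)) / inside
        a2 = sum(img[y][x] * (1.0 - hs[y][x]) for y in range(h) for x in range(w)) / (h * w - inside)
        new = [row[:] for row in phi]
        for y in range(h):
            for x in range(w):
                # Central differences for the curvature div(grad phi / |grad phi|).
                px = (at(y, x + 1) - at(y, x - 1)) / 2.0
                py = (at(y + 1, x) - at(y - 1, x)) / 2.0
                pxx = at(y, x + 1) - 2.0 * phi[y][x] + at(y, x - 1)
                pyy = at(y + 1, x) - 2.0 * phi[y][x] + at(y - 1, x)
                pxy = (at(y + 1, x + 1) - at(y + 1, x - 1)
                       - at(y - 1, x + 1) + at(y - 1, x - 1)) / 4.0
                kappa = (pxx * py * py - 2.0 * px * py * pxy + pyy * px * px) / (
                    (px * px + py * py) ** 1.5 + 1e-8)
                force = (mu * kappa - lam1 * (img[y][x] - a1) ** 2
                         + lam2 * (img[y][x] - a2) ** 2)
                new[y][x] = phi[y][x] + dt * dirac(phi[y][x], eps) * force
        phi = new
    return [[1 if v > 0 else 0 for v in row] for row in phi]
```

On a synthetic image with a bright 8 × 8 square, initializing a small circle at the square's center lets the contour grow until it matches the square.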

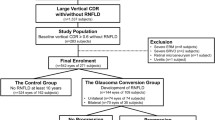

Datasets and evaluation metrics

The effectiveness and generalizability of the proposed OD localization and segmentation framework are validated using four publicly available retinal fundus image datasets: DRISHTI-GS, DRIONS-DB, IDRID, and ORIGA. These datasets are selected to ensure diversity in image characteristics, particularly variations in OD contrast and structure, which are critical for evaluating the robustness of segmentation algorithms.

The DRISHTI-GS dataset consists of 101 high-resolution fundus images with well-defined OD boundaries. These images provide an ideal setting for performance evaluation under favorable contrast conditions. Similarly, the DRIONS-DB dataset contains 110 images where OD regions are clearly delineated, facilitating precise contour detection.

The IDRID dataset presents a more challenging scenario due to the low contrast between the OD and surrounding retinal background. For this study, only the segmentation subset comprising 103 images with annotated OD masks is utilized. These images are employed specifically for the tasks of OD localization and segmentation.

The ORIGA dataset includes 650 images and introduces further complexity, such as variability in OD and cup sizes, inconsistent textures, and the presence of imaging artifacts. Additionally, the limited number of annotated segmentation samples increases the risk of overfitting, particularly when detecting subtle structural deformations associated with early-stage glaucoma.

Together, these datasets represent a comprehensive evaluation benchmark, spanning from high-contrast, well-structured images to challenging, noisy cases. This ensures a rigorous assessment of the proposed framework’s accuracy, robustness, and generalization across diverse retinal imaging conditions.

Evaluation metrics

To quantitatively assess localization precision, the normalized Euclidean distance between the predicted and ground truth OD centers is computed as follows:

where \((x_1, y_1)\) and \((x_2, y_2)\) denote the coordinates of the ground truth and predicted OD centers, respectively, and W and H denote the width and height of the image, as defined earlier. For segmentation accuracy evaluation, the Dice Coefficient and the Jaccard Index (Intersection over Union, IoU) are used. These metrics quantify the spatial overlap between the predicted segmentation and the ground truth region.

- Dice Coefficient: The Dice score measures the similarity between two binary masks and is given by:

$$\begin{aligned} \text {Dice} = \frac{2 \times |A \cap B|}{|A| + |B|}, \end{aligned}$$where \(A\) is the predicted segmentation and \(B\) is the ground truth. A Dice score closer to 1 indicates a higher degree of overlap.

- Jaccard Index (IoU): The Jaccard Index evaluates the intersection over union of the predicted and ground truth regions and is defined as:

$$\begin{aligned} \text {IoU} = \frac{|A \cap B|}{|A \cup B|}. \end{aligned}$$

These metrics are computed for each image in the dataset, and the mean values are reported to summarize the overall segmentation performance.
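The three metrics can be computed as in the following NumPy sketch. The distance formula itself is not reproduced in this section, so the per-axis normalization by W and H used here is an assumption. Scoring two empty masks as a perfect match is also an assumed convention, and the function names are illustrative.

```python
import numpy as np


def normalized_center_distance(gt_center, pred_center, width, height):
    """Distance between ground truth and predicted OD centres.

    Assumption: each axis is scaled by the image size, i.e.
    sqrt(((x1 - x2) / W) ** 2 + ((y1 - y2) / H) ** 2).
    """
    (x1, y1), (x2, y2) = gt_center, pred_center
    return float(np.hypot((x1 - x2) / width, (y1 - y2) / height))


def dice(pred, gt):
    """Dice = 2|A & B| / (|A| + |B|) for boolean masks A (pred) and B (gt)."""
    pred, gt = np.asarray(pred, dtype=bool), np.asarray(gt, dtype=bool)
    total = pred.sum() + gt.sum()
    if total == 0:
        return 1.0  # assumed convention: two empty masks agree perfectly
    return 2.0 * np.logical_and(pred, gt).sum() / total


def iou(pred, gt):
    """IoU = |A & B| / |A | B| for boolean masks."""
    pred, gt = np.asarray(pred, dtype=bool), np.asarray(gt, dtype=bool)
    union = np.logical_or(pred, gt).sum()
    if union == 0:
        return 1.0  # same assumed convention as dice()
    return np.logical_and(pred, gt).sum() / union


def mean_scores(mask_pairs):
    """Mean Dice and mean IoU over a list of (pred, gt) pairs, one per image."""
    dices = [dice(p, g) for p, g in mask_pairs]
    ious = [iou(p, g) for p, g in mask_pairs]
    return float(np.mean(dices)), float(np.mean(ious))
```

For example, two 4 × 4 masks of eight pixels each that share four pixels give a Dice score of 0.5 and an IoU of 1/3.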

Results

The performance of the proposed OD localization approach was evaluated on three publicly available datasets: IDRID, DRIONS-DB, and DRISHTI-GS. The results, summarized in Table 1, compare the adaptive and non-adaptive methods using the normalized distance metric.

On the IDRID dataset, the adaptive method achieved a mean normalized distance error of 0.2493, compared with 0.2539 for the non-adaptive method, a relative reduction of approximately 1.8%. The median error decreased from 0.2628 to 0.2520, and the minimum error from 0.0526 to 0.0479, indicating improved precision on the best-case images.

On the DRIONS-DB dataset, the adaptive method achieved a slightly lower mean error than the non-adaptive version (0.0736 vs. 0.0745) and a lower standard deviation (0.0816 vs. 0.0824). This suggests marginally more consistent localization, a desirable attribute for clinical applications.

DRISHTI-GS was the exception: the non-adaptive method achieved a slightly lower mean error (0.0756 vs. 0.0771), while the standard deviations of the two methods were comparable. The difference is small and unlikely to be clinically meaningful.

Overall, the adaptive method performed better than or comparably to the non-adaptive method across datasets, with gains on IDRID, the most challenging low-contrast dataset, and on DRIONS-DB. The observed reductions in mean error and variability suggest that the adaptive approach is a more robust and reliable option for OD localization across diverse retinal fundus image datasets.
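The relative changes discussed above follow directly from the mean errors, and the short Python check below recomputes them. The dictionary restates the means given in the text, and the helper name is illustrative.

```python
# Mean normalized distance errors quoted in the text: (adaptive, non-adaptive).
MEAN_ERRORS = {
    "IDRID": (0.2493, 0.2539),
    "DRIONS-DB": (0.0736, 0.0745),
    "DRISHTI-GS": (0.0771, 0.0756),
}


def relative_reduction(adaptive, non_adaptive):
    """Fractional reduction of the adaptive error relative to the non-adaptive baseline."""
    return (non_adaptive - adaptive) / non_adaptive


for name, (adaptive, non_adaptive) in MEAN_ERRORS.items():
    print(f"{name}: {100 * relative_reduction(adaptive, non_adaptive):+.1f}%")
```

Running it prints +1.8% for IDRID, +1.2% for DRIONS-DB, and -2.0% for DRISHTI-GS. A negative value means that the non-adaptive method had the lower mean error.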

OD segmentation performance

The proposed OD segmentation method was evaluated on two publicly available datasets, ORIGA and DRISHTI-GS, which differ substantially in image quality and pathological content. These datasets were selected to assess the robustness and generalizability of the method across a wide range of image qualities, pathological conditions, and demographic variations.

Table 2 presents the quantitative performance metrics, namely Dice Coefficient and Intersection over Union (IoU), for the proposed method on each dataset.

On the ORIGA dataset, which comprises 650 images characterized by diverse pathological features including exudates and OD deformation, the method achieved a median Dice coefficient of 0.94 and an IoU of 0.90. These results demonstrate the method’s ability to maintain high segmentation accuracy even in the presence of noise, anatomical variability, and retinal pathologies.

On the DRISHTI-GS dataset, a slightly higher median Dice coefficient of 0.95 was observed, while the IoU remained at 0.90. This marginal improvement is likely attributable to the dataset’s higher image quality and more standardized acquisition protocol, which facilitate more accurate boundary detection.

The high Dice coefficients (0.94 or higher) on both datasets indicate close agreement between the predicted and ground truth OD boundaries. Similarly, the IoU scores of 0.90 indicate precise spatial overlap, supporting the method’s clinical applicability.
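Both metrics are computed from the same overlap counts, so they are tied to each other for any single pair of masks. In the relation below, \(J\) denotes IoU and \(A\) and \(B\) are as defined in the evaluation metrics. Applying the relation to the rounded values reported here is only approximate, because Table 2 summarizes per-image scores:

$$\begin{aligned} \text {Dice} = \frac{2|A \cap B|}{|A \cup B| + |A \cap B|} = \frac{2J}{1 + J}, \qquad J = \frac{\text {Dice}}{2 - \text {Dice}}, \\ \text {Dice} = 0.94 \Rightarrow J \approx 0.887, \qquad \text {Dice} = 0.95 \Rightarrow J \approx 0.905. \end{aligned}$$

The IoU values therefore restate the Dice results on a different scale rather than providing an independent measure of overlap.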

Qualitative results are provided in Fig. 3, which presents segmentation overlays comparing predicted contours (in blue) with ground truth boundaries (in green). These visual results further support the robustness of the proposed method, showing high boundary accuracy across both normal and pathological conditions. Notably, the method remains reliable in challenging scenarios, including low-contrast fundus images and glaucomatous changes.

Discussion

Comparative analysis with state-of-the-art methods

To establish the clinical relevance and technical merit of the proposed segmentation framework, comprehensive comparisons were conducted with several state-of-the-art OD segmentation techniques using multiple evaluation metrics. Table 3 presents the Dice Coefficient and Jaccard Index (IoU) for various methods across standard datasets.

The proposed method achieved Dice scores of 0.95 and 0.94 on the DRISHTI-GS and ORIGA datasets, respectively, demonstrating competitive performance. Although certain deep learning-based approaches, such as MSGANet-OD 44 and the modified U-Net with ResNet-34 45, reported marginally higher Dice coefficients (0.96), the proposed method remains clinically viable without relying on large-scale annotated data or extensive training.

In addition, the proposed method was compared with frequency-domain-based segmentation techniques. Table 4 outlines this comparison on the DRISHTI-GS dataset. Although methods such as the wavelet-enhanced CNN 49 and Fourier contour models 50 achieved higher Dice scores (up to 0.9765), they did so at the cost of greater computational complexity and heavier reliance on data-driven learning or shape priors. In contrast, the proposed method offers a balanced trade-off between accuracy and computational efficiency.

Statistical validation and significance testing

To assess whether differences in segmentation performance are statistically significant, the proposed method was compared with established methods on the DRISHTI-GS dataset. Specifically, statistical tests were conducted against U-Net and SAM 46, both of which are widely adopted for OD segmentation.

No statistically significant differences were observed between the proposed method and U-Net (\(p = 0.598\), Cohen’s \(d = 0.117\)) or SAM (\(p = 0.609\), Cohen’s \(d = 0.206\)), although the small positive effect sizes favor the proposed approach. These outcomes indicate that the method performs comparably to both baselines on DRISHTI-GS. The slight advantage suggested by the effect sizes is not statistically significant.

The statistical test was chosen according to the data distribution: paired t-tests were used for normally distributed data, and Wilcoxon signed-rank tests otherwise. The small effect sizes (\(d < 0.3\)) indicate that the mean differences between methods are small relative to the variability of the scores across test images.
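The standard-library sketch below makes the relationship between the effect size and the paired test concrete. This section does not state which variant of Cohen’s d was reported. The sketch therefore assumes the paired form \(d_z\), defined as the mean of the per-image score differences divided by their standard deviation. For this form, the paired t statistic is \(t = d_z\sqrt{n}\) with \(n - 1\) degrees of freedom. P-values would then come from a t distribution (for example, `scipy.stats.ttest_rel`). When the differences are not normally distributed (for example, as judged by `scipy.stats.shapiro`), they would come from `scipy.stats.wilcoxon` instead. Those SciPy calls are not used in the code itself, and the function names are illustrative.

```python
import math
import statistics


def paired_effect_size(a, b):
    """Cohen's d_z for paired scores: mean(a - b) / sd(a - b)."""
    diffs = [x - y for x, y in zip(a, b)]
    return statistics.mean(diffs) / statistics.stdev(diffs)


def paired_t_statistic(a, b):
    """Paired t statistic t = d_z * sqrt(n); compare with a t distribution on n - 1 dof."""
    if len(a) != len(b):
        raise ValueError("paired samples must have equal length")
    return paired_effect_size(a, b) * math.sqrt(len(a))
```

For example, per-image differences of 1, 2 and 3 give \(d_z = 2\) and \(t = 2\sqrt{3} \approx 3.46\). The same \(d_z\) yields a larger t as n grows, which is why effect size and p-value should be read together.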

Unlike deep learning approaches that often require extensive labeled datasets and are prone to overfitting, especially under limited data availability, the proposed method exhibits robust generalization capabilities. This resilience stems from its unsupervised design and the adaptive weighting mechanism in the localization stage. Theoretically, this adaptivity aligns with the principle of maximum likelihood estimation, wherein local feature weights are dynamically adjusted to reduce reliance on any single modality, thereby enhancing robustness across varying imaging conditions and pathological scenarios.
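The sketch below makes the adaptive weighting idea concrete. It implements feature weighting in the style of Minkowski weighted k-means (de Amorim and Mirkin). In this scheme, cluster \(k\) assigns feature \(v\) the weight \(w_{kv} = 1 / \sum _u (D_{kv}/D_{ku})^{1/(\beta - 1)}\), where \(D_{kv}\) is the within-cluster Minkowski dispersion of that feature. Features that are widely scattered inside a cluster receive small weights, so no single noisy feature dominates the assignment. Several elements are assumptions, and this is not the authors’ implementation: the toy data, \(\beta = 2\), the supplied initial centres, and the use of the arithmetic mean as the cluster centre, which is the exact Minkowski centre only when \(\beta = 2\).

```python
import numpy as np


def feature_weights(X, labels, centers, beta, eps=1e-8):
    """Per-cluster weights w[k, v] = 1 / sum_u (D[k, v] / D[k, u]) ** (1 / (beta - 1))."""
    # D[k, v]: Minkowski dispersion of feature v within cluster k (eps avoids 0 / 0)
    D = np.stack([
        np.sum(np.abs(X[labels == c] - centers[c]) ** beta, axis=0) + eps
        for c in range(centers.shape[0])
    ])
    ratio = (D[:, :, None] / D[:, None, :]) ** (1.0 / (beta - 1.0))
    return 1.0 / ratio.sum(axis=2)


def mwk_means(X, init_centers, beta=2.0, n_iter=50):
    """Minkowski weighted k-means sketch returning labels, centres and feature weights."""
    X = np.asarray(X, dtype=float)
    centers = np.array(init_centers, dtype=float)
    k, m = centers.shape
    weights = np.full((k, m), 1.0 / m)  # start with equal feature weights
    labels = None
    for _ in range(n_iter):
        # Weighted Minkowski distance: sum_v w[k, v]**beta * |x_v - c[k, v]|**beta
        dist = np.sum(weights[None] ** beta
                      * np.abs(X[:, None, :] - centers[None]) ** beta, axis=2)
        new_labels = dist.argmin(axis=1)
        if labels is not None and np.array_equal(new_labels, labels):
            break  # assignments are stable
        labels = new_labels
        for c in range(k):
            if np.any(labels == c):
                # The mean is the exact Minkowski centre only for beta = 2
                centers[c] = X[labels == c].mean(axis=0)
        weights = feature_weights(X, labels, centers, beta)
    return labels, centers, weights
```

Consider two toy clusters that are well separated along the first feature but scattered along the second. In both clusters the weight of the first feature approaches 1, and the second feature is effectively ignored.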

Failure case analysis and limitations

Despite the overall strong performance, specific failure modes were identified, particularly within the ORIGA dataset, that merit detailed discussion. Figure 4 presents representative examples of segmentation failures, offering insights into scenarios where the proposed method encounters limitations. In certain cases, the OD boundary appears indistinct or blurred, which reduces the efficacy of edge-preserving and gradient-based techniques and often causes the segmentation contour to leak into adjacent tissue. As illustrated in Fig. 4a, peripapillary atrophy around the OD, combined with low image contrast, produces ambiguous transitions between the disc and background regions. This degrades the performance of intensity-driven models such as Chan-Vese. Figure 4b highlights a case where the OD exhibits a non-circular, pathological morphology. Such irregularity violates the assumptions of circularity or ellipticity embedded in many segmentation frameworks. Imaging artifacts, such as glare or blur, can further confound contour evolution, especially when they overlap the OD boundary region.

These failure cases emphasize the intrinsic complexity of medical image segmentation and underscore the importance of developing robust preprocessing pipelines. Future work could explore integrating texture-based priors, shape-aware post-processing, and adaptive contrast enhancement to further mitigate such limitations. Additionally, hybrid models that combine data-driven learning with prior-based regularization may offer improved resilience to pathological variation and imaging noise.

Conclusion and future scope

An enhanced method for OD localization is introduced in this research. It uses features derived from the FCT and from extracted blood vessel images, in conjunction with the Chan-Vese active contour method for segmentation. Substantial improvements over conventional image processing techniques are demonstrated. Performance comparable to that of deep learning models is achieved without reliance on annotated training data, including on the challenging ORIGA dataset and on DRISHTI-GS. In future work, the proposed method is intended to be incorporated as a preprocessing module within lightweight deep learning architectures. By leveraging the unsupervised nature and efficiency of the current framework, it is anticipated that both the robustness and accuracy of subsequent learning-based models will be enhanced across diverse retinal image datasets. Blurred boundaries often arise in fundus images from illumination variation and acquisition artifacts. To mitigate them, advanced frequency-domain filtering strategies, such as adaptive thresholding in the FCT domain or multiscale vessel suppression, will be explored. This strategic integration aims to endow deep learning systems with improved resilience and efficiency, thereby facilitating their deployment in resource-constrained clinical environments and large-scale population screening programs. Ultimately, the proposed direction underscores a commitment to reconciling precision with practicality in the field of medical image analysis.

Data availability

The datasets used in this study are publicly available at the following links: DRISHTI-GS: https://www.kaggle.com/datasets/deathtrooper/multichannel-glaucoma-benchmark-dataset, DRIONS-DB: http://www.ia.uned.es/~ejcarmona/DRIONS-DB/BD/DRIONS-DB.rar, IDRID: https://www.kaggle.com/datasets/aaryapatel98/indian-diabetic-retinopathy-image-dataset, ORIGA: https://www.kaggle.com/datasets/arnavjain1/glaucoma-datasets. The implementation code for the proposed method is available at: https://github.com/Gowthaman803/Optic_Disc/tree/main

References

Tham, Y. C. et al. Global prevalence of glaucoma and projections of glaucoma burden through 2040: A systematic review and meta-analysis. Ophthalmology 121, 2081–2090 (2014).

Sinthanayothin, C., Boyce, J. F., Cook, H. L. & Williamson, T. H. Automated localisation of the optic disc, fovea, and retinal blood vessels from digital colour fundus images. Br. J. Ophthalmol. 83, 902–910 (1999).

Blanco, M., Penedo, M. G., Barreira, N., Penas, M. & Carreira, M. J. Localization and extraction of the optic disc using the fuzzy circular hough transform. In Artificial Intelligence and Soft Computing-ICAISC 2006: 8th International Conference, Zakopane, Poland, June 25–29, 2006. Proceedings 8 (ed. Blanco, M.) 712–721 (Springer, 2006).

Abdullah, A. S., Rahebi, J., Ozok, Y. E. & Aljanabi, M. A new and effective method for human retina optic disc segmentation with fuzzy clustering method based on active contour model. Med. Biol. Eng. Comput. 58, 25–37 (2020).

Lei, H. et al. Prior information guided coarse-to-fine dual-branch encoding network for fovea localization and optic disc/cup segmentation. In 2023 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (ed. Lei, H.) 1218–1223 (IEEE, 2023).

Fu, Y. et al. Optic disc segmentation by u-net and probability bubble in abnormal fundus images. Pattern Recognit. 117, 107971 (2021).

Elmannai, H. et al. An improved deep learning framework for automated optic disc localization and glaucoma detection. CMES Comput. Model. Eng. & Sci. 140 (2024).

Almeshrky, H. & Karaci, A. Optic disc segmentation in human retina images using a meta heuristic optimization method and disease diagnosis with deep learning. Appl. Sci. 14, 5103 (2024).

Li, Z., Zhao, C., Han, Z. & Hong, C. Tunet and domain adaptation based learning for joint optic disc and cup segmentation. Comput. Biol. Med. 163, 107209 (2023).

Chauris, H. et al. The circlet transform: A robust tool for detecting features with circular shapes. Comput. Geosci. 37, 331–342 (2011).

Muhammed, L. A. Localizing optic disc in retinal image automatically with entropy based algorithm. Int. J. Biomed. Imaging 2018, 2815163 (2018).

Sarrafzadeh, O., Rabbani, H. & Dehnavi, A. M. Circlet based framework for optic disk detection. In 2017 IEEE International Conference on Image Processing (ICIP) (ed. Sarrafzadeh, O.) 3330–3334 (IEEE, 2017).

Morales, S., Naranjo, V., Angulo, J. & Alcaniz, M. Automatic detection of optic disc based on pca and mathematical morphology. IEEE Trans. Med. Imaging 32, 786–796 (2013).

Fu, Y. et al. Fovea localization by blood vessel vector in abnormal fundus images. Pattern Recognit. 129, 108711 (2022).

Guo, F. et al. Retinada: a diverse dataset for domain adaptation in retinal vessel segmentation. Front. Comput. Sci. 19 (2025).

Bhati, A. et al. Dynamic statistical attention-based lightweight model for retinal vessel segmentation: Dysta-retnet. Comput. Biol. Med. 186, 109592 (2025).

Feng, T. et al. Retinal vessel segmentation via cross-attention feature fusion. In 2024 IEEE International Conference on Multimedia and Expo (ICME) (ed. Feng, T.) 1–6 (IEEE, 2024).

Jin, Q. et al. Inter-and intra-uncertainty based feature aggregation model for semi-supervised histopathology image segmentation. Expert Syst. Appl. 238, 122093 (2024).

Zhang, R. & Jiang, G. Exploring a multi-path u-net with probability distribution attention and cascade dilated convolution for precise retinal vessel segmentation in fundus images. Sci. Rep. 15, 13428 (2025).

Luo, X., Peng, L., Ke, Z., Lin, J. & Yu, Z. Pa-net: A hybrid architecture for retinal vessel segmentation. Pattern Recognit. 161, 111254 (2025).

Jin, Q. et al. Semi-supervised histological image segmentation via hierarchical consistency enforcement. In International Conference on Medical Image Computing and Computer-Assisted Intervention (ed. Jin, Q.) 3–13 (Springer, 2022).

Mahfouz, A. E. & Fahmy, A. S. Ultrafast localization of the optic disc using dimensionality reduction of the search space. In International Conference on Medical Image Computing and Computer-Assisted Intervention (eds Mahfouz, A. E. & Fahmy, A. S.) 985–992 (Springer, 2009).

Zhang, D. & Zhao, Y. Novel accurate and fast optic disc detection in retinal images with vessel distribution and directional characteristics. IEEE J. Biomed. Health Inform. 20, 333–342 (2014).

Panda, R., Puhan, N. & Panda, G. Robust and accurate optic disk localization using vessel symmetry line measure in fundus images. Biocybern. Biomed. Eng. 37, 466–476 (2017).

Aquino, A., Gegúndez-Arias, M. E. & Marin, D. Detecting the optic disc boundary in digital fundus images using morphological, edge detection, and feature extraction techniques. IEEE Trans. Med. Imaging 29, 1860–1869 (2010).

Ren, F., Li, W., Yang, J., Geng, H. & Zhao, D. Automatic optic disc localization and segmentation in retinal images by a line operator and level sets. Technol. Health Care 24, S767–S776 (2016).

Xiong, L. & Li, H. An approach to locate optic disc in retinal images with pathological changes. Comput. Med. Imaging Graph. 47, 40–50 (2016).

Hasan, M. K., Alam, M. A., Elahi, M. T. E., Roy, S. & Martí, R. Drnet: Segmentation and localization of optic disc and fovea from diabetic retinopathy image. Artif. Intell. Med. 111, 102001 (2021).

Zahoor, M. N. & Fraz, M. M. Fast optic disc segmentation in retina using polar transform. IEEE Access 5, 12293–12300 (2017).

Dinç, B. & Kaya, Y. A novel hybrid optic disc detection and fovea localization method integrating region-based convnet and mathematical approach. Wirel. Pers. Commun. 129, 2727–2748 (2023).

Yu, J., Nie, Y., Qi, F., Liao, W. & Cai, H. Fundusam: A specialized deep learning model for enhanced optic disc and cup segmentation in fundus images. In 2024 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) (ed. Yu, J.) 3955–3960 (IEEE, 2024).

Jin, B., Liu, P., Wang, P., Shi, L. & Zhao, J. Optic disc segmentation using attention-based u-net and the improved cross-entropy convolutional neural network. Entropy 22, 844 (2020).

Chen, Y., Bai, Y. & Zhang, Y. Optic disc and cup segmentation for glaucoma detection using attention u-net incorporating residual mechanism. PeerJ Comput. Sci. 10, e1941 (2024).

Priyadharsini, C. & Jagadeesh Kannan, R. Retinal image enhancement based on color dominance of image. Sci. Rep. 13, 7172 (2023).

Sarrafzadeh, O., Dehnavi, A. M., Rabbani, H., Ghane, N. & Talebi, A. Circlet based framework for red blood cells segmentation and counting. In 2015 IEEE Workshop on Signal Processing Systems (SiPS) (ed. Sarrafzadeh, O.) 1–6 (IEEE, 2015).

Sarrafzadeh, O., Rabbani, H., Dehnavi, A. M. & Talebi, A. Circlet transform in cell and tissue microscopy. Opt. Laser Technol. 124, 106000 (2020).

Coye, T. A novel retinal blood vessel segmentation algorithm for fundus images. MATLAB Cent. File Exch. (2015).

Ikotun, A. M., Ezugwu, A. E., Abualigah, L., Abuhaija, B. & Heming, J. K-means clustering algorithms: A comprehensive review, variants analysis, and advances in the era of big data. Inf. Sci. 622, 178–210 (2023).

Jović, A., Brkić, K. & Bogunović, N. A review of feature selection methods with applications. In 2015 38th International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO) (ed. Jović, A.) 1200–1205 (IEEE, 2015).

De Amorim, R. C. & Komisarczuk, P. On initializations for the minkowski weighted k-means. In International Symposium on Intelligent Data Analysis (eds De Amorim, R. C. & Komisarczuk, P.) 45–55 (Springer, 2012).

De Amorim, R. C. & Mirkin, B. Selecting the minkowski exponent for intelligent k-means with feature weighting. Clust. Orders Trees Methods Appl. 92, 103–117 (2014).

Bertalmio, M., Sapiro, G., Caselles, V. & Ballester, C. Image inpainting. In Proceedings of the 27th Annual Conference on Computer Graphics and Interactive Techniques 417–424 (2000).

Getreuer, P. Chan-vese segmentation. Image Process. Line 2, 214–224 (2012).

Chowdhury, A. E., Mehnert, A., Mann, G., Morgan, W. H. & Sohel, F. Multiscale guided attention network for optic disc segmentation of retinal images. Comput. Methods Programs Biomed. Updat. 7, 100180 (2025).

Yu, S., Xiao, D., Frost, S. & Kanagasingam, Y. Robust optic disc and cup segmentation with deep learning for glaucoma detection. Comput. Med. Imaging Graph. 74, 61–71 (2019).

Kirillov, A. et al. Segment anything. In Proceedings of the IEEE/CVF International Conference on Computer Vision 4015–4026 (2023).

Faisal, A., Munilla, J. & Rahebi, J. Detection of optic disc in human retinal images utilizing the bitterling fish optimization (bfo) algorithm. Sci. Rep. 14, 25824 (2024).

Tadisetty, S., Chodavarapu, R., Jin, R., Clements, R. J. & Yu, M. Identifying the edges of the optic cup and the optic disc in glaucoma patients by segmentation. Sensors 23, 4668 (2023).

Sun, G. et al. Joint optic disc and cup segmentation based on multi-scale feature analysis and attention pyramid architecture for glaucoma screening. Neural Comput. Appl. 1–14 (2023).

Chen, C., Zou, B., Chen, Y. & Zhu, C. Optic disc and cup segmentation based on information aggregation network with contour reconstruction. Biomed. Signal Process. Control 104, 107179 (2025).

Acknowledgements

This work is supported by the Vellore Institute of Technology, Vellore.

Funding

Open access funding provided by Vellore Institute of Technology.

Author information

Contributions

S.G.: Conceptualization, Investigation, Software, Writing - original draft. A.D.: Conceptualization, Investigation, Supervision, Writing - review & editing.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gowthaman, S., Das, A. A novel method for optic disc localization using fast circlet transform and Chan-Vese segmentation. Sci Rep 15, 31399 (2025). https://doi.org/10.1038/s41598-025-11257-7
