Abstract

Mastering microsurgical skills is essential for neurosurgical trainees. Video-based analysis of target tissue changes and surgical instrument motion provides an objective, quantitative method for assessing microsurgical proficiency, potentially enhancing training and patient safety. This study evaluates the effectiveness of an artificial intelligence (AI)-based video analysis model in assessing microsurgical performance and examines the correlation between AI-derived parameters and specific surgical skill components. A dual AI framework was developed, integrating a semantic segmentation model for artificial blood vessel analysis with an instrument tip-tracking algorithm. These models quantified dynamic vessel area fluctuation, tissue deformation error count, instrument path distance, and normalized jerk index during a single-stitch end-to-side anastomosis task performed by 14 surgeons with varying experience levels. The AI-derived parameters were validated against traditional criteria-based rating scales assessing instrument handling, tissue respect, efficiency, suture handling, suturing technique, operation flow, and overall performance. Rating scale scores correlated with microsurgical experience, exhibiting a bimodal distribution that classified performance into good and poor groups. Video-based parameters showed strong correlations with various skill categories. Receiver operating characteristic analysis demonstrated that combining these parameters improved the discrimination of microsurgical performance. The proposed method effectively captures technical microsurgical skills and can assess performance.

Introduction

A surgeon’s technical proficiency is critical and has been linked to postoperative adverse events, morbidity, and mortality1,2. In extracranial-intracranial (EC-IC) bypass surgery, the fragility of cerebral arteries necessitates advanced microsurgical skills to achieve complete intimal approximation between the recipient and donor arteries3,4,5. This demanding technique requires precise instrument handling, smooth and efficient movements, and gentle tissue manipulation to prevent vessel wall tears6,7. A comprehensive, objective assessment of microsurgical skills provides a reliable means to identify deficiencies in surgical trainees through constructive feedback and validates surgeon proficiency, ultimately enhancing patient safety8.

Traditionally, microsurgical skill assessments have relied on subjective evaluations by master surgeons9. Although various criteria-based scoring systems have been developed to reduce subjectivity, they require substantial human and time resources, making real-time feedback impractical10,11,12,13. Quantitative methodologies, such as force and motion sensors affixed to surgical instruments, have been explored14,15,16; however, their reliance on specialized sensors and equipment limits their widespread adoption and raises concerns regarding generalizability and reproducibility17,18.

With the increasing availability of surgical video recordings, video analytics is gaining traction for skill assessment across various procedures19,20,21. Artificial intelligence (AI)-driven video analysis is also being increasingly applied in surgical skill evaluation22,23. Building on this trend, we previously developed two AI models for assessing microvascular anastomosis performance: one incorporating a semantic segmentation algorithm to evaluate vessel area (VA) changes24 and another using an object detection algorithm to analyze surgical instrument-tip motion25.

This study examines whether combining these AI models improves the accuracy of surgeon performance assessment and identifies which aspects of microsurgical skills correlate with AI-derived parameters.

Methods

Combined AI model

Two AI models were developed as described previously: a semantic segmentation algorithm for the VA and a trajectory tracking algorithm for the instrument tip24,25. These models were based on Residual Network 50 (ResNet-50) and You Only Look Once version 2 (YOLOv2), respectively, and were trained on clinical microsurgical videos and microvascular anastomosis practice videos.

ResNet-50 is a 50-layer deep convolutional neural network that utilizes residual learning, enabling the training of very deep architectures, and is widely applied to tasks such as segmentation and classification in medical imaging26. YOLOv2 is a real-time, deep learning-based object detection algorithm designed to detect objects quickly and accurately in a single pass. It divides the input image into grids and simultaneously predicts the presence of objects within each grid cell, making it ideal for high-speed, real-time applications such as surveillance systems for detecting people and vehicles, and identifying anatomical structures in medical images27. Both models were implemented in MATLAB (MathWorks, Natick, MA, USA). Detailed training procedures for each model can be found in our previous studies24,25.

Model accuracy was confirmed in our previous studies, with an Intersection over Union of 0.93 and a mean Dice similarity coefficient of 0.87 (refs. 24,25). We integrated both AI models to comprehensively analyze microsurgical performance, as shown in Fig. 1.
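For readers unfamiliar with the two overlap metrics quoted above, the following minimal sketch shows how Intersection over Union and the Dice similarity coefficient are computed for a pair of binary masks. The function name `iou_and_dice` and the tiny masks are hypothetical and serve only as an illustration; the original models were implemented in MATLAB, and this is not the authors' evaluation code.

```python
def iou_and_dice(pred, truth):
    """Return (IoU, Dice) for two same-sized binary masks (nested lists of 0/1)."""
    intersection = union = pred_area = truth_area = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            p, t = bool(p), bool(t)
            intersection += p and t   # pixel labeled vessel in both masks
            union += p or t           # pixel labeled vessel in at least one mask
            pred_area += p
            truth_area += t
    if union == 0:
        return 1.0, 1.0  # both masks empty: treated here as perfect agreement
    iou = intersection / union
    dice = 2 * intersection / (pred_area + truth_area)
    return iou, dice


# Hypothetical 3 x 4 masks (1 = vessel pixel), for illustration only.
predicted = [[0, 1, 1, 0],
             [0, 1, 1, 0],
             [0, 0, 0, 0]]
annotated = [[0, 1, 1, 0],
             [0, 1, 1, 1],
             [0, 0, 0, 0]]
iou, dice = iou_and_dice(predicted, annotated)
```

Both metrics range from 0 (no overlap) to 1 (identical masks), and the Dice coefficient is never smaller than the IoU for the same pair of masks.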

Participants

This study adhered to the SQUIRE guidelines and Declaration of Helsinki. This study was conducted with institutional approval from the Hokkaido University Hospital (No. 018-0291). As our facility regularly holds off-the-job microvascular anastomosis training sessions for educational purposes, surgeon participants, including both instructors and trainees, were recruited from these sessions. Fourteen surgeons with varying levels of microsurgical experience, ranging from postgraduate years (PGY) 1 to 28, participated in the experimental surgical performance analysis. Table 1 summarizes the characteristics of the participating surgeons. All participants were right-handed.

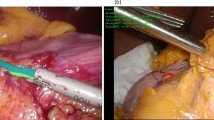

Microsurgical task

Each surgeon was assigned to perform interrupted suturing following two stay sutures in end-to-side anastomosis using artificial blood vessels (2.0 mm blood vessel model, WetLab Incorporated, Shiga, Japan) and 10-0 nylon monofilament threads (C26-004-01, Muranaka Medical Instruments Co., Ltd, Osaka, Japan), which simulated the actual EC-IC bypass procedure (Fig. 2). To standardize the anastomosis procedure and minimize procedural variability, tasks such as stabilizing the two vessels, cutting the donor artery, performing arteriotomy on the recipient vessel, and preparing two stay sutures were performed and confirmed by a single instructor. A surgical trial was defined as the completion of a single suturing process, consisting of four phases: Phase A, grasping and inserting the needle; Phase B, pushing and extracting the needle; Phase C, pulling the threads to the first knot; and Phase D, tying three knots and cutting the threads25. To minimize the learning effect of repeated trials, only the first two trials from each surgeon were selected for the performance assessment.

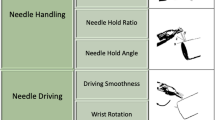

Criteria-based objective assessment

The Stanford Microsurgery and Resident Training Scale was used to assess each surgeon’s performance10,11. This rating scale consists of nine technical categories: (1) instrument handling, (2) respect for tissue, (3) efficiency, (4) suture handling, (5) suturing technique, (6) quality of the knot, (7) final product, (8) operation flow, and (9) overall performance. Three experts independently rated all surgeons’ performances on a scale of 1 to 5 for each technical category in a blinded manner, ensuring that the participants’ identities remained concealed. The score from the first two trials averaged across the three raters was used as the representative score for each surgeon.
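The aggregation described above can be sketched as follows. This is an illustrative outline only: the nested-list layout, the function names, and the example ratings are hypothetical, and the cut-off of 35 points is the one reported later in the Results for separating good from poor performance (how a score of exactly 35 is handled is not stated in the text, so it is assigned to the poor group here as an assumption).

```python
from statistics import mean

GOOD_CUTOFF = 35  # total-score cut-off reported in the Results


def representative_total(ratings):
    """Average total score over trials and raters.

    ratings[trial][rater] is a list of nine category scores (1 to 5 each).
    """
    totals = [sum(categories) for trial in ratings for categories in trial]
    return mean(totals)


def performance_group(total, cutoff=GOOD_CUTOFF):
    """Label a total score; a score of exactly `cutoff` is an assumed 'poor'."""
    return "good" if total > cutoff else "poor"


# Hypothetical ratings: two trials, three raters, nine categories each.
example_ratings = [
    [[4] * 9, [5] * 9, [4] * 9],  # trial 1
    [[4] * 9, [4] * 9, [4] * 9],  # trial 2
]
total_score = representative_total(example_ratings)
group = performance_group(total_score)
```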

Video parameters of dual AI model

Table 2 presents the parameters generated by the combined AI model. These include the coefficient of variation (CV) of all measured VA values (CV-VA), the relative change in VA over time (ΔVA), the maximum absolute value of ΔVA during the procedure (Max-ΔVA), the number of tissue deformation errors (No. of TDE), the path distance (PD) for the right and left forceps tips, and the normalized jerk index (NJI) for the right and left forceps tips24,25. As the mean ± 1.96 × SD of ΔVA for all trials by all surgeons was calculated as ± 1.13 in a previous study, this threshold was used for the definition of TDE24.
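As a rough illustration of how the vessel-area parameters could be derived from a per-frame VA series, consider the sketch below. The exact definitions are given in Table 2 and the previous study, which are not reproduced here, so several points are assumptions: ΔVA is taken as the percent change between consecutive frames, the 1.13 threshold is assumed to be in the same units, and a TDE is counted for every frame whose absolute ΔVA exceeds the threshold. The function name and the example values are hypothetical.

```python
from statistics import mean, stdev

TDE_THRESHOLD = 1.13  # value quoted in the text; units assumed to match delta_va


def vessel_area_parameters(va, threshold=TDE_THRESHOLD):
    """Summarize a vessel-area series (one value per analyzed frame).

    Assumed definitions (not taken from the source):
      delta_va = percent change in VA between consecutive frames
      TDE      = any frame whose |delta_va| exceeds the threshold
    """
    if len(va) < 2:
        raise ValueError("need at least two vessel-area measurements")
    cv_va = stdev(va) / mean(va)  # coefficient of variation (sample SD / mean)
    delta_va = [100.0 * (cur - prev) / prev for prev, cur in zip(va, va[1:])]
    max_delta_va = max(abs(d) for d in delta_va)
    n_tde = sum(1 for d in delta_va if abs(d) > threshold)
    return {"cv_va": cv_va, "max_delta_va": max_delta_va, "n_tde": n_tde}


# Hypothetical vessel areas in pixels, for illustration only.
example_va = [1000, 1005, 990, 1000, 1020, 1018]
params = vessel_area_parameters(example_va)
```

Restricting the input series to the frames of a single phase (for example Phase B or Phase C) would give the corresponding phase-specific values.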

We analyzed videos from all surgeons’ trials and calculated each parameter. The average of the first two trials was used as the representative parameter for each surgeon.

Statistical analysis

The results are expressed as the mean ± standard deviation. The interrater reliability of the criteria-based objective rating scale was assessed using Cronbach’s α coefficient among the three raters. Correlations between the AI-based parameters and each category of the rating scale were analyzed using Spearman’s rank correlation coefficient (ρ).
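The two statistics named above can be sketched in a few lines. The analyses in this study were run in JMP Pro, so the code below is only a plain-Python illustration of the standard formulas: Spearman's ρ as the Pearson correlation of average ranks, and Cronbach's α treating each rater as an item. All function names and data values are hypothetical.

```python
from statistics import mean, variance


def average_ranks(values):
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson(x, y):
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy)


def spearman_rho(x, y):
    """Spearman's rank correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))


def cronbach_alpha(ratings_by_rater):
    """ratings_by_rater: k lists (one per rater), one score per surgeon each."""
    k = len(ratings_by_rater)
    totals = [sum(scores) for scores in zip(*ratings_by_rater)]
    rater_variance = sum(variance(r) for r in ratings_by_rater)
    return k / (k - 1) * (1 - rater_variance / variance(totals))


# Hypothetical data: path distance versus total rating score for five surgeons.
path_distance_px = [120, 95, 150, 80, 110]
total_scores = [30, 38, 25, 41, 33]
rho = spearman_rho(path_distance_px, total_scores)

# Hypothetical ratings from three raters for four surgeons.
alpha = cronbach_alpha([[2, 3, 4, 5], [2, 3, 5, 5], [1, 3, 4, 5]])
```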

To assess the discriminative ability of each AI model’s parameters, we conducted discriminant analyses between the good- and poor-performance groups stratified by the distribution of the criteria-based rating scale scores of the surgeons. For each AI model, we selected the parameter that showed a significant correlation (p < 0.05) with the highest number of technical categories and included it in discriminant analysis (Models 1 and 2). Finally, to evaluate the combined use of both AI models, all the parameters from Models 1 and 2 were included in the discriminant analysis (Model 3).
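Once a discriminant function assigns each surgeon a score, the area under the ROC curve can be obtained directly from the two groups of scores. The sketch below uses the pairwise (Mann-Whitney) formulation of the AUC; it is an illustration rather than the JMP Pro procedure used in this study, and the function name and score values are hypothetical.

```python
def roc_auc(scores_good, scores_poor):
    """AUC as the fraction of (good, poor) pairs ranked correctly.

    A pair counts 1 when the good-group score is higher and 0.5 when tied.
    """
    wins = 0.0
    for g in scores_good:
        for p in scores_poor:
            if g > p:
                wins += 1.0
            elif g == p:
                wins += 0.5
    return wins / (len(scores_good) * len(scores_poor))


# Hypothetical discriminant scores, for illustration only.
good_scores = [2.1, 1.4, 0.3, 1.9]
poor_scores = [-1.2, 0.5, -0.4]
auc = roc_auc(good_scores, poor_scores)
```

An AUC of 1.00 corresponds to every good-group score exceeding every poor-group score, whereas 0.5 indicates no discrimination.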

Statistical analyses were performed using JMP Pro (version 17.0.0; SAS Institute Inc., Cary, North Carolina, USA). Statistical significance was set at p < 0.05.

Results

Criteria-based scale

The high interrater reliability for the criteria-based rating scale was confirmed with a Cronbach’s α coefficient of 0.88–0.97 for each category (Supplementary Table 1). The original criteria-based scale scores for each surgeon are provided in Table 1, and detailed in Supplementary Table 2.

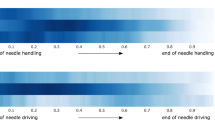

The total score of the criteria-based rating scale significantly correlated with the surgeon’s experience (Fig. 3). As the total score on the criteria-based rating scale across the 14 surgeons exhibited a bimodal distribution, performances that scored over 35 points were regarded as good, whereas trials that scored under 35 were regarded as poor (Fig. 3).

Correlation between AI-based and criteria-based performance analysis

Supplementary Table 3 provides the AI-derived performance parameters for each surgeon. Table 3 presents the p-values from the Spearman’s rank correlation analyses, and Supplementary Table 4 provides the Spearman’s rank correlation coefficient (ρ).

The No. of TDE for all phases and Phase C were significantly correlated with instrument handling, respect for tissue, efficiency and overall performance. In addition, the No. of TDE for Phase C was significantly correlated with suturing technique and final product. Although CV-VA and Max-ΔVA for all phases did not show significant correlations with technical categories, Max-ΔVA for Phase B was significantly correlated with instrument handling and respect for tissue. Therefore, the No. of TDE for Phase C and Max-ΔVA for Phase B were included in Models 1 and 3 for subsequent discriminant analysis.

The PD of the right forceps (Rt-PD) and the NJI of the left forceps (Lt-NJI) for all phases were significantly correlated with almost all performance categories. The NJI of the right forceps (Rt-NJI) for all phases was significantly correlated with five technical categories, while the Rt-NJI for Phase C and Phase D were significantly correlated with seven categories. The PD of the left forceps (Lt-PD) was significantly correlated with efficiency, suture handling, suturing technique, and operation flow. Therefore, Rt-PD, Lt-PD, and Lt-NJI for all phases and Rt-NJI for Phase C were included in Models 2 and 3 for the subsequent discriminant analysis.

Discriminative abilities of each model for surgical performance

Supplementary Table 5 provides the discriminant functions of Models 1–3.

The receiver operating characteristic (ROC) curves for Models 1–3 used to distinguish between good and poor performance are shown in Fig. 4. The AUC values for Models 1 and 2 were 0.85 (95% confidence interval [CI]: 0.53–0.97) and 0.96 (95% CI: 0.67–1.00), respectively. Model 3 demonstrated the highest AUC value of 1.00.

Discussion

We employed a combined AI-based video analysis approach to assess the microvascular anastomosis performance by integrating VA changes and instrument motion. By comparing technical category scores with AI-generated parameters, we demonstrated that the parameters from both AI models encompassed a wide range of technical skills required for microvascular anastomosis. Furthermore, ROC curve analysis indicated that integrating parameters from both AI models improved the ability to distinguish surgical performance compared to using a single AI model. A distinctive feature of this study was the integration of multiple AI models that incorporated both tools and tissue elements.

AI-based technical analytic approach

Traditional criteria-based scoring by multiple blinded expert surgeons was a highly reliable method for assessing surgeon performance with minimal interrater bias (Fig. 2 and Supplementary Table 1). However, the significant demand for human expertise and time makes real-time feedback impractical during surgery and training10,11,18. A recent study demonstrated that self-directed learning using digital instructional materials provides non-inferior outcomes in the initial stages of microsurgical skill acquisition compared to traditional instructor-led training28. However, direct feedback from an instructor continues to play a critical role when progressing toward more advanced skill levels and actual clinical practice.

AI technology can rapidly analyze vast amounts of clinical data generated in modern operating theaters, offering real-time feedback capabilities. The proposed method’s reliance on surgical video analysis makes it highly applicable in clinical settings18. Moreover, the manner in which AI is utilized in this study addresses concerns regarding transparency, explainability, and interpretability, which are fundamental risks associated with AI adoption. One anticipated application is AI-assisted devices that can promptly provide feedback on technical challenges, allowing trainees to refine their surgical skills more effectively29,30. Additionally, an objective assessment of microsurgical skills could facilitate surgeon certification and credentialing processes within the medical community.

Theoretically, this approach could help implement a real-time warning system, alerting surgeons or other staff when instrument motion or tissue deformation exceeds a predefined safety threshold, thereby enhancing patient safety17,31. However, a large dataset of clinical cases involving adverse events such as vascular injury, bypass occlusion, and ischemic stroke would be required. For real-time clinical applications, further data collection and computational optimization are necessary to reduce processing latency and enhance practical usability. Given that our AI model can be applied to clinical surgical videos, future research could explore its utility in this context.

Related works: AI-integrated instrument tracking

To contextualize our results, we compared our AI-integrated approach with recent methods implementing instrument tracking in microsurgical practice. Franco-González et al. compared stereoscopic marker-based tracking with a YOLOv8-based deep learning method, reporting high accuracy and real-time capability32. Similarly, Magro et al. proposed a robust dual-instrument Kalman-based tracker, effectively mitigating tracking errors due to occlusion or motion blur33. Koskinen et al. utilized YOLOv5 for real-time tracking of microsurgical instruments, demonstrating its effectiveness in monitoring instrument kinematics and eye-hand coordination34.

Our integrated AI model employs semantic segmentation (ResNet-50) for vessel deformation analysis and a trajectory-tracking algorithm (YOLOv2) for assessment of instrument motion. The major advantage of our approach is its comprehensive and simultaneous evaluation of tissue deformation and instrument handling smoothness, enabling robust and objective skill assessment even under challenging conditions, such as variable illumination and partial occlusion. YOLO was selected due to its computational speed and precision in real-time object detection, making it particularly suitable for live microsurgical video analysis. ResNet was chosen for its effectiveness in detailed image segmentation, facilitating accurate quantification of tissue deformation. However, unlike three-dimensional (3D) tracking methods32, our current method relies solely on 2D imaging, potentially limiting depth perception accuracy.

These comparisons highlight both the strengths and limitations of our approach, emphasizing the necessity of future studies incorporating 3D tracking technologies and expanded datasets to further validate and refine AI-driven microsurgical skill assessment methodologies.

Future challenges

Microvascular anastomosis tasks typically consist of distinct phases, including vessel preparation, needle insertion, suture placement, thread pulling, and knot tying. As demonstrated by our video parameters for each surgical phase (phases A–D), a separate analysis of each surgical phase is essential to enhance skill evaluation and training efficiency. However, our current AI model does not have the capability to automatically distinguish these surgical phases.

Previous studies utilizing convolutional neural networks (CNN) and recurrent neural networks (RNN) have demonstrated high accuracy in recognizing surgical phases and steps, particularly through the analysis of intraoperative video data35,36. Khan et al. successfully applied a combined CNN-RNN model to achieve accurate automated recognition of surgical workflows during endoscopic pituitary surgery, despite significant variability in surgical procedures and video appearances35. Similarly, automated operative phase and step recognition in vestibular schwannoma surgery further highlights the ability of these models to handle complex and lengthy surgical tasks36. Such methods could be integrated into our current AI framework to segment and individually evaluate each distinct phase of microvascular anastomosis, enabling detailed performance analytics and precise feedback.

Furthermore, establishing global standards for video recording is critical for broadly implementing and enhancing computer vision techniques in surgical settings. Developing guidelines for video recording that standardize resolution, frame rate, camera angle, illumination, and surgical field coverage can significantly reduce algorithmic misclassification issues caused by shadows or instrument occlusion18,37. Such standardization ensures consistent data quality, crucial for training accurate and widely applicable AI models across diverse clinical settings37. These guidelines would facilitate large-scale data sharing and collaboration, substantially improving the reliability and effectiveness of AI-based surgical assessment tools globally.

Technical consideration

The semantic segmentation AI models were designed to assess respect for tissue during the needle manipulation process24. As expected, the Max-ΔVA correlated with respect for tissue in Phase B (from needle insertion to extraction). Proper needle extraction requires following its natural curve to avoid tearing the vessel wall6,7, and these technical nuances were well captured by these parameters. Additionally, the No. of TDE correlated with respect for tissue in Phase C, indicating that even during the process of pulling the threads, surgeons must exercise caution to prevent thread-induced vessel wall injury6,7. These parameters also correlated with instrument handling, efficiency, suturing technique and overall performance—an expected finding, as proper instrument handling and suturing technique are fundamental to respecting tissue. Thus, the technical categories are interrelated and mutually influential.

Trajectory-tracking AI models were designed to assess motion economy and the smoothness of surgical instrument movements25. Motion economy can be represented by the PD during a procedure. The smoothness and coordination of movement are frequently assessed using jerk-based metrics, where jerk is defined as the time derivative of acceleration. Because jerk indices are influenced by both movement duration and amplitude, we utilized the NJI, first proposed by Flash and Hogan38. The NJI is calculated by multiplying the jerk index by (duration interval)^5/(path length)^2, with lower values indicating smoother movements. The dimensionless NJI has been used as a quantitative metric to evaluate movement irregularities in various contexts, such as jaw movements during chewing39,40, laparoscopic skills41, and microsurgical skills16,25. In this study, the Rt-PD and Lt-NJI correlated with a broad range of technical categories. Although the right and left hands play distinct roles in microvascular anastomosis, coordinated bimanual manipulation is essential for optimal surgical performance6,7. With regard to Rt-NJI, these trends were particularly evident in Phases C and D, highlighting the importance of motion smoothness during thread pulling and knot tying in determining overall surgical proficiency.
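The two motion metrics can be illustrated with a short sketch operating on a sequence of tracked tip positions. Several details are assumptions rather than the authors' implementation: positions are 2D image coordinates sampled at a fixed interval `dt`, jerk is approximated with a third-order finite difference, and the NJI uses one commonly reported dimensionless form, the square root of one half of the integrated squared jerk multiplied by duration^5/(path length)^2. The function names and test trajectories are hypothetical.

```python
import math


def path_distance(points):
    """Total distance travelled by the tip: sum of frame-to-frame steps."""
    return sum(math.dist(a, b) for a, b in zip(points, points[1:]))


def normalized_jerk_index(points, dt):
    """Dimensionless jerk index for positions sampled every `dt` seconds.

    Assumed form: sqrt(0.5 * integral(jerk^2 dt) * T^5 / L^2).
    """
    if len(points) < 4:
        raise ValueError("need at least four samples to estimate jerk")
    jerk_sq_integral = 0.0
    for i in range(len(points) - 3):
        jerk_sq = 0.0
        for axis in range(len(points[0])):
            # Third-order forward difference approximates the third derivative.
            third_diff = (points[i + 3][axis] - 3 * points[i + 2][axis]
                          + 3 * points[i + 1][axis] - points[i][axis])
            jerk_sq += (third_diff / dt ** 3) ** 2
        jerk_sq_integral += jerk_sq * dt
    duration = (len(points) - 1) * dt
    length = path_distance(points)
    if length == 0:
        raise ValueError("path length is zero; NJI is undefined")
    return math.sqrt(0.5 * jerk_sq_integral * duration ** 5 / length ** 2)


def minimum_jerk_profile(n):
    """Smooth point-to-point reach along x (minimum-jerk polynomial)."""
    steps = (i / (n - 1) for i in range(n))
    return [(10 * s ** 3 - 15 * s ** 4 + 6 * s ** 5, 0.0) for s in steps]


# Hypothetical trajectories: a smooth reach and the same reach with tremor in y.
smooth = minimum_jerk_profile(101)
jittery = [(x, 0.01 * (-1) ** i) for i, (x, _) in enumerate(smooth)]
nji_smooth = normalized_jerk_index(smooth, dt=0.01)
nji_jittery = normalized_jerk_index(jittery, dt=0.01)
```

Because the index is normalized by duration and path length, uniformly scaling a trajectory in space leaves the value unchanged, which is what allows comparison across movements of different amplitude.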

Overall, integrating these parameters enabled a comprehensive assessment of complex microsurgical skills, as each parameter captured different technical aspects. Despite its effectiveness, the model still exhibited some degree of misclassification when differentiating between good and poor performance. Notably, procedural time—a key determinant of surgical performance24,25—was intentionally excluded from the analysis. Although further exploration of additional parameters remains essential, integrating procedural time could significantly improve the classification accuracy.

This study employed the Stanford Microsurgery and Resident Training scale10,11 as a criteria-based objective assessment tool, as it covers a wide range of microsurgical technical aspects. Future research incorporating leakage tests or the Anastomosis Lapse Index13, which identifies ten distinct types of anastomotic errors, could provide deeper insights into the relationship between the quality of the final product and various technical factors.

Limitations

As mentioned above, a fundamental technical limitation of this analytical approach is the lack of 3D kinematic data, particularly depth information. Another constraint was that when the surgical tool was outside the microscope’s visual field, kinematic data of the surgical instrument could not be captured25. Additionally, the semantic segmentation model occasionally misclassified images containing shadows from surgical instruments or hands24. To mitigate this issue, future studies should expand the training dataset to include shadowed images, thereby improving model robustness. Given that the AI model in this study utilized the ResNet-50 and YOLOv2 networks, further investigation is warranted to optimize network architecture selection. Exploring alternative deep learning models or fine-tuning existing architectures could further improve the accuracy and generalizability of surgical video analysis18.

Our study had a relatively small sample size with respect to the number of participating surgeons, although it included surgeons with a diverse range of skills. Moreover, we did not evaluate the data from repeated training sessions to estimate the learning curve or determine whether feedback could enhance training efficacy. Future studies should evaluate the impact of AI-assisted feedback on the learning curve of surgical trainees and assess whether real-time performance tracking leads to more efficient skill acquisition.

Conclusion

A combined AI-based video analysis approach incorporating VA changes and instrument motion effectively captured a broad spectrum of microsurgical technical skills and evaluated microvascular anastomosis performance. Moreover, this approach is highly adaptable to clinical applications, can advance computer-assisted surgical education, and contributes to the improvement of patient safety.

Data availability

Due to privacy and ethical restrictions, the dataset is not publicly available. The data and materials supporting the findings of this study are available from the corresponding author upon reasonable request.

References

1. Birkmeyer, J. D. et al. Surgical skill and complication rates after bariatric surgery. N. Engl. J. Med. 369, 1434–1442 (2013).

2. Fecso, A. B., Szasz, P., Kerezov, G. & Grantcharov, T. P. The effect of technical performance on patient outcomes in surgery. Ann. Surg. 265, 492–501 (2017).

3. Rashad, S., Fujimura, M., Niizuma, K., Endo, H. & Tominaga, T. Long-term follow-up of pediatric moyamoya disease treated by combined direct–indirect revascularization surgery: Single institute experience with surgical and perioperative management. Neurosurg. Rev. 39, 615–623 (2016).

4. Matsuo, M. et al. Vulnerability to shear stress caused by altered peri-endothelial matrix is a key feature of Moyamoya disease. Sci. Rep. 11, 1–12 (2021).

5. Fujimura, M. & Tominaga, T. Flow-augmentation bypass for moyamoya disease. J. Neurosurg. Sci. 65, 277–286 (2021).

6. Villanueva, P. J. et al. Using engineering methods (Kaizen and micromovements science) to improve and provide evidence regarding microsurgical hand skills. World Neurosurg. 189, e380–e390 (2024).

7. Sugiyama, T. Mastering Intracranial Microvascular Anastomoses-Basic Techniques and Surgical Pearls. (MEDICUS SHUPPAN, Publishers Co., Ltd, 2017).

8. Sugiyama, T. et al. Forces of tool-tissue interaction to assess surgical skill level. JAMA Surg. 153, 234–242 (2018).

9. Reznick, R. K. Teaching and testing technical skills. Am. J. Surg. 165, 358–361 (1993).

10. McGoldrick, R. B. et al. Motion analysis for microsurgical training: Objective measures of dexterity, economy of movement, and ability. Plast. Reconstr. Surg. 136, 231e–240e (2015).

11. Satterwhite, T. et al. The stanford microsurgery and resident training (SMaRT) scale: Validation of an on-line global rating scale for technical assessment. Ann. Plast. Surg. 72, S84–S88 (2014).

12. Zammar, S. G. et al. The cognitive and technical skills impact of the congress of neurological surgeons simulation curriculum on neurosurgical trainees at the 2013 neurological society of India meeting. World Neurosurg. 83, 419–423 (2015).

13. Ghanem, A. M., Al Omran, Y., Shatta, B., Kim, E. & Myers, S. Anastomosis lapse index (ALI): A validated end product assessment tool for simulation microsurgery training. J. Reconstr. Microsurg. 32, 233–241 (2016).

14. Harada, K. et al. Assessing microneurosurgical skill with medico-engineering technology. World Neurosurg. 84, 964–971 (2015).

15. Sugiyama, T., Gan, L. S., Zareinia, K., Lama, S. & Sutherland, G. R. Tool-tissue interaction forces in brain arteriovenous malformation surgery. World Neurosurg. 102, 221–228 (2017).

16. Ghasemloonia, A. et al. Surgical skill assessment using motion quality and smoothness. J. Surg. Educ. 74, 295–305 (2017).

17. Sugiyama, T. et al. Tissue acceleration as a novel metric for surgical performance during carotid endarterectomy. Oper. Neurosurg. 25, 343–352 (2023).

18. Sugiyama, T., Sugimori, H., Tang, M. & Fujimura, M. Artificial intelligence for patient safety and surgical education in neurosurgery. JMA J. 8, 76–85 (2024).

19. Sarkiss, C. A. et al. Neurosurgical skills assessment: Measuring technical proficiency in neurosurgery residents through intraoperative video evaluations. World Neurosurg. 89, 1–8 (2016).

20. Davids, J. et al. Automated vision-based microsurgical skill analysis in neurosurgery using deep learning: Development and preclinical validation. World Neurosurg. 149, e669–e686 (2021).

21. Sugiyama, T. et al. A pilot study on measuring tissue motion during carotid surgery using video-based analyses for the objective assessment of surgical performance. World J. Surg. 43, 2309–2319 (2019).

22. Sugimori, H., Sugiyama, T., Nakayama, N., Yamashita, A. & Ogasawara, K. Development of a deep learning-based algorithm to detect the distal end of a surgical instrument. Appl. Sci. 10, 4245 (2020).

23. Khalid, S., Goldenberg, M., Grantcharov, T., Taati, B. & Rudzicz, F. Evaluation of deep learning models for identifying surgical actions and measuring performance. JAMA Netw. Open 3, e201664 (2020).

24. Tang, M. et al. Assessment of changes in vessel area during needle manipulation in microvascular anastomosis using a deep learning-based semantic segmentation algorithm: A pilot study. Neurosurg. Rev. 47, 200 (2024).

25. Sugiyama, T. et al. Deep learning-based video-analysis of instrument motion in microvascular anastomosis training. Acta Neurochir. (Wien). 166, 6 (2024).

26. He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 770–778 (2016). https://doi.org/10.1109/CVPR.2016.90.

27. Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In: Proceedings of the IEEE Computer Society Conference on Computer Vision and Pattern Recognition 779–788 (2016). https://doi.org/10.1109/CVPR.2016.91.

28. Dąbrowski, F. et al. Video-based microsurgical education versus stationary basic microsurgical course: A noninferiority randomized controlled study. J. Reconstr. Microsurg. 38, 585–592 (2022).

29. Baghdadi, A. et al. A data-driven performance dashboard for surgical dissection. Sci. Rep. 11, 15013 (2021).

30. Baghdadi, A., Lama, S., Singh, R. & Sutherland, G. R. Tool-tissue force segmentation and pattern recognition for evaluating neurosurgical performance. Sci. Rep. 13, 9591 (2023).

31. Curtis, N. J. et al. Clinical evaluation of intraoperative near misses in laparoscopic rectal cancer surgery. Ann. Surg. 273, 778–784 (2021).

32. Franco-González, I. T., Lappalainen, N. & Bednarik, R. Tracking 3D motion of instruments in microsurgery: A comparative study of stereoscopic marker-based vs. deep learning method for objective analysis of surgical skills. Informat. Med. Unlocked 51, 101593 (2024).

33. Magro, M., Covallero, N., Gambaro, E., Ruffaldi, E. & De Momi, E. A dual-instrument Kalman-based tracker to enhance robustness of microsurgical tools tracking. Int. J. Comput. Assist. Radiol. Surg. 19, 2351–2362 (2024).

34. Koskinen, J., Torkamani-Azar, M., Hussein, A., Huotarinen, A. & Bednarik, R. Automated tool detection with deep learning for monitoring kinematics and eye-hand coordination in microsurgery. Comput. Biol. Med. 141, 105121 (2022).

35. Khan, D. Z. et al. Automated operative workflow analysis of endoscopic pituitary surgery using machine learning: Development and preclinical evaluation (IDEAL stage 0). J. Neurosurg. 137, 51–58 (2022).

36. Williams, S. C. et al. Automated operative phase and step recognition in vestibular schwannoma surgery: Development and preclinical evaluation of a deep learning neural network (IDEAL Stage 0). Neurosurgery https://doi.org/10.1227/neu.0000000000003466 (2025).

37. Garrow, C. R. et al. Machine learning for surgical phase recognition: A systematic review. Ann. Surg. 273, 684–693 (2021).

38. Flash, T. & Hogan, N. The coordination of arm movements: An experimentally confirmed mathematical model. J. Neurosci. 5, 1688–1703 (1985).

39. Yashiro, K., Yamauchi, T., Fujii, M. & Takada, K. Smoothness of human jaw movement during chewing. J. Dent. Res. 78, 1662–1668 (1999).

40. Takada, K., Yashiro, K. & Takagi, M. Reliability and sensitivity of jerk-cost measurement for evaluating irregularity of chewing jaw movements. Physiol. Meas. 27, 609–622 (2006).

41. Chmarra, M. K., Kolkman, W., Jansen, F. W., Grimbergen, C. A. & Dankelman, J. The influence of experience and camera holding on laparoscopic instrument movements measured with the TrEndo tracking system. Surg. Endosc. 21, 2069–2075 (2007).

Acknowledgements

We would like to thank Yasuhiro Ito, Haruto Uchino, Masayuki Gekka, Masaki Ito, and Katsuhiko Ogasawara, together with the Hokkaido University Neurosurgery Residency Program, for their participation in this study. We thank Masaki Mizuhara (Integra Japan Co., Ltd.), Kazuhiro Goto (Zeiss Japan Co., Ltd.), and Shusaku Tsutsui (Johnson & Johnson Inc.) for their support in organizing microvascular hands-on training courses. We thank Editage (www.editage.com) for English language editing.

Funding

This work was supported by JSPS KAKENHI (grant numbers JP21K09091 and JP24K15785).

Contributions

T.S., M.T., and H.S. contributed to study conception and design. T.S. collected the data. M.T., H.S., and M.S. created the new software. T.S., M.T., H.S., and M.S. analyzed the data. T.S. and M.F. contributed to interpretation of data. T.S. wrote the manuscript draft, and all authors critically revised and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

This study was approved by the Institutional Review Board of Hokkaido University Hospital (No. 018-0291), Sapporo, Japan.

Consent to participate

Informed consent was obtained from all the participants included in this study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sugiyama, T., Tang, M., Sugimori, H. et al. Artificial intelligence-integrated video analysis of vessel area changes and instrument motion for microsurgical skill assessment. Sci Rep 15, 27898 (2025). https://doi.org/10.1038/s41598-025-13522-1

DOI: https://doi.org/10.1038/s41598-025-13522-1
