Abstract

To address complex background interference and insufficient detection accuracy in steel structure weld recognition, this paper proposes a target detection algorithm based on an improved YOLOv5. The Coordinate Attention (CA) mechanism is introduced into the backbone network and integrated with the C3 structure to form the C3CA module, which enhances the model's perception of the spatial position of the weld and improves detection accuracy. Experiments show that the improved YOLOv5s-C3CA model achieves 93.79% mAP@0.5 on a self-built weld dataset, 2.48 percentage points higher than the original model. At the same time, the number of model parameters is reduced by 8.53% and the floating-point operations are reduced by 10.6%, achieving a balance between detection accuracy and computational efficiency. This study verifies the effectiveness of the coordinate attention mechanism in improving the feature representation ability of the model and provides a solution for the automatic detection of welds in industrial scenarios.

Introduction

With the development of industrialization, welding technology plays an increasingly important role in modern manufacturing and is widely used in the field of steel structures. Bridge construction, shipbuilding, machinery and aerospace all depend on welding. As an important part of the steel structure connection process, welding quality affects not only the performance of the product but also its reliability and safety. However, traditional manual welding is easily affected by human factors and usually struggles to meet the accuracy and efficiency requirements of modern industrial production. Therefore, realizing automatic and intelligent welding is of great practical significance.

As the basic link of welding automation, weld seam recognition obtains the spatial coordinates, geometric parameters and surface characteristics of the weld seam through a machine vision system and provides data support for subsequent path planning and process parameter optimization. In steel structure welding, the length and shape of welds vary greatly with the structural design and actual demand, which makes weld identification difficult.

The development of image recognition technology offers an effective solution for weld recognition. A machine vision system collects the weld image, and the characteristics of the weld are extracted through image processing and analysis to recognize the weld. This approach is non-contact, precise, real-time and adaptable, overcomes shortcomings of traditional methods, and has broad application prospects in welding automation.

Early research mainly used traditional image processing techniques, involving edge detection, threshold segmentation, feature extraction, target region generation, feature classification and post-processing steps [1]. Dang Y X [2] used Otsu's method combined with an improved genetic algorithm to calculate the threshold, and then used the classification error to process the image edge. Zou L [3] proposed an improved Canny algorithm for weld pool edge detection, in which a double threshold obtained by an enhanced secondary Otsu algorithm is used to extract more accurate weld pool edge information. However, steel structure welds vary in shape and size, and the actual environment may contain interference such as strong light, shadow and noise, which exposes the weaknesses of traditional image processing methods in steel structure weld recognition. On the one hand, traditional image processing algorithms are usually suitable only for detecting specific objects and lack generality [4]; on the other hand, when dealing with complex backgrounds, noise interference and irregular weld shapes, their expressive ability is limited and it is difficult to capture the image information comprehensively, which greatly limits their application in actual steel structure welding scenes.

To overcome the shortcomings of traditional methods in obtaining weld image information and to achieve more accurate weld recognition, machine learning algorithms have been widely used in the field of welding. Among them, supervised learning algorithms have achieved remarkable results. Based on statistical features, Quadir et al. [5] used decision tree and support vector machine (SVM) classifiers to classify arc welding weld defects. Patil Rajesh V et al. [6] detected and classified weld defects efficiently using a support vector machine and an artificial neural network. JIA P et al. [7] proposed a classification and prediction algorithm for spot welding, in which a trained naive Bayes classifier distinguishes various types of welded joints. In the field of unsupervised learning, He T et al. [8] proposed a non-baseline damage detection method for welded plate structures, combined with the K-means clustering algorithm to improve the positioning accuracy. Wei Cui et al. [9] recognized different types of welding defects based on the gray-gradient co-occurrence matrix, clustering analysis and mathematical morphology. In addition, Fan R et al. [10] studied an online welding method for gas shielded metal arc welding based on active visual perception and realized real-time detection of weld feature information. However, these machine learning algorithms still face many challenges in steel structure weld recognition. Traditional machine learning methods require manually designed feature extractors, place high demands on feature engineering, and are prone to over-fitting when dealing with high-dimensional data. Weld images of steel structures usually contain rich details and complex structural information that manually designed features can hardly describe comprehensively, which degrades the recognition of steel structure welds.

To solve the problems of traditional machine learning algorithms, deep learning has gradually been applied to weld recognition and detection. Deep learning is an important branch of machine learning, and its neural network feature extraction techniques are widely used in computer vision tasks and have driven innovation in target detection. A convolutional neural network automatically extracts image features through stacked convolution and pooling layers and has a strong feature learning ability for complex images. Target detection algorithms based on convolutional neural networks have rapidly become the mainstream of research in image processing [11,12]. Cai W et al. [13] proposed a weld detection method based on a deep fully convolutional neural network, which achieves MIoU and MPA values of 78.15% and 85.18%, respectively, on the validation set. Cao et al. [14] constructed a multi-modal deep learning model to detect pseudo-defects in ultra-thick stainless steel welds, improving performance by 8% over the single-modal model. Yang et al. [15] proposed an improved Faster R-CNN for weld defect recognition, with an average accuracy of 80.1%. Gao A et al. [16] designed a feature point detection network that accurately obtains the characteristics of welding points in high-noise environments. Wang J et al. [17] used a semantic segmentation model to track and detect welds. Zhao M et al. [18] realized path planning for a seam tracking detection robot based on YOLOv8s-seg.

However, current deep learning methods still have deficiencies in steel structure weld recognition. On the one hand, the stability of existing models in complex industrial environments needs to be improved. Although some models perform well on general datasets, their performance fluctuates greatly in special scenes such as steel structure welding with strong light and noise. On the other hand, it is difficult to balance the computational efficiency and accuracy of the model. Two-stage detection models, such as R-CNN [19], Fast R-CNN [20], Faster R-CNN [21] and Mask R-CNN [22], offer high precision but require a large amount of computation and a long detection time, and often fail to meet the standard of industrial real-time detection [23]. Single-stage detection networks, such as SSD [24] and YOLO [25], remove the candidate region generation step and predict and classify targets directly from the extracted features, which shortens the detection time. However, in pursuit of speed, some of these models sacrifice detection accuracy in complex scenes and are prone to insufficient feature extraction.

In addition, public datasets mostly focus on general defect detection and lack industrial-grade data covering multiple weld types and multiple interference factors, which makes it difficult to meet the complex and diverse needs of practical industrial applications. Feature extraction has also not been sufficiently optimized for steel structure welds: the key features of weld images are not fully exploited, which limits the model's ability to recognize small or complex welds.

In this study, based on YOLOv5, the CA attention mechanism is introduced into the backbone network and fused with the C3 module to construct the C3CA module, which enhances the model's ability to extract weld features and improves recognition accuracy in complex industrial environments. At the same time, the dataset is expanded by data augmentation to improve the generalization ability of the model and make it more robust to the interference factors of actual industrial scenes. The improved model reduces the number of parameters and the computational complexity while maintaining accuracy, which makes it easier to deploy on industrial equipment with limited hardware resources. These improvements provide a more reliable and efficient solution for steel structure weld identification and help promote the application of welding automation in practical engineering.

Methods

YOLOv5 target detection model

YOLOv5 is a fast target detection algorithm based on deep learning. It can complete the target detection task through a single neural network model and convert the target detection problem into a regression problem. The algorithm uses the convolutional neural network to directly output the bounding box coordinates and category probability of the target in the image, and achieves faster detection speed while maintaining high detection accuracy. This efficiency makes YOLOv5 suitable for application scenarios with high real-time requirements.

Since its release, the YOLOv5 model has been continuously updated and optimized. It is available in several versions, mainly YOLOv5n, YOLOv5s, YOLOv5m, YOLOv5l and YOLOv5x, which differ mainly in the depth and width of the network; these differences affect the accuracy and inference speed of the model. YOLOv5s is one of the smaller models in the series in both depth and width. It has a fast inference speed and is suitable for scenarios with high real-time requirements. YOLOv5x is the model with the largest depth and width in the series. It has high detection accuracy but the slowest inference speed, and is suitable for scenarios with very high accuracy requirements.
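
The way depth and width distinguish the variants can be illustrated with a small sketch. The depth and width multipliers below are taken from the publicly released YOLOv5 configuration files and are an assumption here, since this paper does not list them; the function names and the example stage (9 repeated blocks, 512 channels) are hypothetical.

```python
import math

# (depth_multiple, width_multiple) per variant. Assumed from the public
# YOLOv5 model configuration files; not stated in this paper.
VARIANTS = {
    "YOLOv5n": (0.33, 0.25),
    "YOLOv5s": (0.33, 0.50),
    "YOLOv5m": (0.67, 0.75),
    "YOLOv5l": (1.00, 1.00),
    "YOLOv5x": (1.33, 1.25),
}


def scale_depth(base_repeats, depth_multiple):
    """Scale the number of repeated blocks, keeping at least one."""
    if base_repeats <= 1:
        return base_repeats
    return max(round(base_repeats * depth_multiple), 1)


def scale_width(base_channels, width_multiple, divisor=8):
    """Scale the channel count and round it up to a multiple of `divisor`."""
    return math.ceil(base_channels * width_multiple / divisor) * divisor


def scaled_stage(variant, base_repeats, base_channels):
    """Return (repeats, channels) of one stage for the given variant."""
    depth, width = VARIANTS[variant]
    return scale_depth(base_repeats, depth), scale_width(base_channels, width)


if __name__ == "__main__":
    # Hypothetical stage with 9 repeated blocks and 512 channels at full size.
    for name in VARIANTS:
        print(name, scaled_stage(name, base_repeats=9, base_channels=512))
```

Under these assumed multipliers, the same stage has a quarter as many repeated blocks and less than half the channels in YOLOv5s as in YOLOv5x, which is where the difference in speed and accuracy comes from.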

In industrial production, the weld must be recognized in real time so that the weld position can be found quickly and the welding operation completed. Detection speed is therefore an important consideration. Weighing accuracy against inference speed, this paper chooses YOLOv5s as the base model for training. The YOLOv5s model ensures high detection accuracy while meeting the requirements of industrial real-time detection, which makes it suitable for steel structure weld recognition tasks.

Model structure

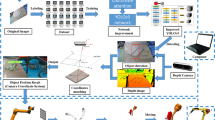

The model structure of YOLOv5 can be divided into four main parts: Input, Backbone, Neck and Head [26,27]. The specific structure is shown in Fig. 1.

The input part preprocesses the original image, including operations such as image resizing and normalization. To maintain the aspect ratio of the image and avoid distortion, YOLOv5 also uses adaptive scaling. In addition, the input side supports adaptive anchor box calculation and Mosaic data augmentation to improve the generalization ability of the model.

The backbone network is the feature extraction part of YOLOv5. The CSPDarknet53 network structure is used to obtain image features through feature splitting, convolution, feature fusion and transition layers. It is a deep neural network built from multiple Cross Stage Partial (CSP) modules. In each module, the feature map of the base layer is divided into two parts: one part is passed directly to the next stage, and the other part is convolved and then merged with the former. This retains the rich detail information of the shallow layers while extracting high-level semantic features through convolution.

The neck network is located between the backbone network and the detection head. The PAN (Path Aggregation Network) structure is used to fuse feature maps of different resolutions. PAN transmits features from bottom to top, combining the more precise localization information of the shallow, high-resolution layers with the richer semantic information of the deep, low-resolution layers to help the network extract more accurate target features.

The detection head is the output part of YOLOv5 and is responsible for generating the prediction boxes of the targets. It consists of a series of convolution layers that operate on the fused feature maps to produce the prediction boxes. Each prediction box contains the target category, the bounding box coordinates and the associated confidence score.
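
The adaptive scaling step of the input part can be sketched as a small geometry calculation. This is a minimal illustration under stated assumptions, not the YOLOv5 source code: the function name, the `minimal` option and the stride of 32 are hypothetical choices, while the 1920 × 1080 image size and the 640 input size are the values used in this paper.

```python
def letterbox_geometry(width, height, target=640, stride=32, minimal=False):
    """Return (resized_w, resized_h, pad_w, pad_h) for an aspect-preserving resize.

    The image is scaled so that its longer side fits `target`, and the
    remaining space is padded. With `minimal=True` only the padding needed
    to reach a multiple of `stride` is kept.
    """
    scale = min(target / width, target / height)
    new_w, new_h = round(width * scale), round(height * scale)
    pad_w, pad_h = target - new_w, target - new_h
    if minimal:
        pad_w, pad_h = pad_w % stride, pad_h % stride
    return new_w, new_h, pad_w, pad_h


if __name__ == "__main__":
    # A 1920 x 1080 camera frame resized for a 640 x 640 network input.
    print(letterbox_geometry(1920, 1080))
    print(letterbox_geometry(1920, 1080, minimal=True))
```

Because both sides are scaled by the same factor, the weld keeps its proportions; only padding, not stretching, fills the rest of the input.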

Improvement of YOLOv5 model

In order to improve the feature extraction ability of YOLOv5 model and enhance the recognition accuracy of the model for steel structure welds, this paper modifies the backbone network of YOLOv5. The attention mechanism is added to the backbone network, and the attention mechanism module is integrated with the C3 module, so that the network pays more attention to the key area where the weld is located while retaining the shallow detail features and depth features, and improves the ability to capture the weld features.

CA attention mechanism

Coordinate Attention (CA) is a lightweight attention mechanism proposed by Hou et al. [28] in 2021. Building on conventional channel attention, CA integrates location information into the attention calculation, so that it not only inherits the advantage of channel attention in capturing the dependencies between channels, but also improves the sensitivity of the model to spatial details through its location information encoding. This enables the model to locate features more accurately and capture a wider range of spatial position information without adding much computation.

In convolutional neural networks, channel features usually correspond to semantic information, and spatial features correspond to location information. The CA attention mechanism not only determines the importance of each channel, but also integrates the importance of spatial locations through convolution and fully connected operations. As a result, it performs well in a variety of computer vision tasks, including image classification, target detection and semantic segmentation. In image classification, it strengthens spatial position information, which enhances the model's ability to capture details and improves classification accuracy. In target detection, it makes the model pay more attention to the target area and ignore background interference, which improves detection accuracy. In semantic segmentation, it captures spatial location information and inter-channel dependencies simultaneously, which improves the segmentation accuracy of the target.

The CA attention mechanism encodes the position information of the feature map as follows. The input feature map is pooled along the horizontal and vertical directions separately, yielding two feature descriptors that carry horizontal and vertical location information, respectively. These two descriptors are then passed through a series of convolution and fully connected operations to generate the attention weights for the horizontal and vertical directions. The weights reflect both the importance of each channel and the importance of each spatial location. Finally, the attention weights are multiplied with the original feature map to highlight the locations and channels related to the target and suppress irrelevant information. The network structure of the CA attention mechanism is shown in Fig. 2.

As shown in Fig. 2, the input is a feature map of size C × H × W, where C is the number of channels and H and W are the height and width, respectively. First, the input feature map is average-pooled along the horizontal and vertical directions, respectively. The specific expressions are shown in Eq. (1) and Eq. (2).
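
The two expressions do not appear in this text. Based on the shapes defined here and on the original coordinate attention formulation of Hou et al., they can plausibly be written as follows; the channel index c and the summation indices i and j are assumed notation.

```latex
z_c^{h}(h) = \frac{1}{W} \sum_{0 \le i < W} x_c(h, i) \tag{1}

z_c^{w}(w) = \frac{1}{H} \sum_{0 \le j < H} x_c(j, w) \tag{2}
```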

Here, \(z^{h}\) is the output after average pooling along the horizontal direction, \(z^{h} \in \mathbb{R}^{C \times H \times 1}\); \(z^{w}\) is the output after average pooling along the vertical direction, \(z^{w} \in \mathbb{R}^{C \times 1 \times W}\); \(x\) is the input feature map, \(x \in \mathbb{R}^{C \times H \times W}\).

The directional feature maps generated by average pooling retain the spatial location information. Since \(z^{h}\) and \(z^{w}\) share the channel count C but differ in spatial size, the two pooled feature maps are then concatenated along the spatial dimension to obtain a single feature tensor, as shown in Eq. (3).
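
Equation (3) does not appear in this text. A plausible form, in which the symbol \(z\) and the bracket notation for concatenation are assumptions, is:

```latex
z = \left[ z^{h},\; z^{w} \right] \tag{3}
```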

The concatenated features are then fused and transformed by a 1 × 1 two-dimensional convolutional layer, batch-normalized, and passed through a nonlinear activation function to increase the expressive ability of the model. The specific expression is shown in Eq. (4).
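
Equation (4) does not appear in this text. Using the operators named in the surrounding description, and writing the concatenation of the two pooled maps as \([z^{h}, z^{w}]\) (assumed notation), it can be written as:

```latex
f = \delta\left( \mathrm{BN}\left( \mathrm{Conv}\left( \left[ z^{h},\; z^{w} \right] \right) \right) \right) \tag{4}
```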

In the formula, \(\delta\) is a nonlinear activation function, BN represents a batch normalization operation, Conv represents a 1 × 1 two-dimensional convolution, and \(f\) is an intermediate feature map.

Next, the intermediate feature map after batch normalization and nonlinear activation is split into the horizontal feature \(f^{h} \in \mathbb{R}^{C \times H \times 1}\) and the vertical feature \(f^{w} \in \mathbb{R}^{C \times 1 \times W}\), matching the shapes of \(z^{h}\) and \(z^{w}\). Each feature is passed through a two-dimensional convolution followed by the Sigmoid activation function to generate the attention weights in the two directions. The specific expressions are shown in Eqs. (5) and (6).
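
Equations (5) and (6) do not appear in this text. Following the description, they can plausibly be written as below, where the weight symbols \(g^{h}\) and \(g^{w}\), the Sigmoid symbol \(\sigma\) and the names \(\mathrm{Conv}_h\) and \(\mathrm{Conv}_w\) for the two convolutions are assumed notation.

```latex
g^{h} = \sigma\left( \mathrm{Conv}_h\left( f^{h} \right) \right) \tag{5}

g^{w} = \sigma\left( \mathrm{Conv}_w\left( f^{w} \right) \right) \tag{6}
```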

Finally, the two attention weights are multiplied by the original input feature map to obtain the weighted output feature map Y, which is shown in expression (7).
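
Equation (7) does not appear in this text. Written element by element, with \(g^{h}\) and \(g^{w}\) denoting the horizontal and vertical attention weights (assumed symbols) and \(i\), \(j\) the row and column indices, the weighting can be expressed as:

```latex
Y_c(i, j) = x_c(i, j) \times g_c^{h}(i) \times g_c^{w}(j) \tag{7}
```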

The output weighted feature map still has size C × H × W and combines the channel information and spatial position information of the original input features. The CA attention mechanism is inserted into the backbone network of the model as shown in Fig. 3.
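
The data flow of Eqs. (1) to (7) can be traced with a dependency-free sketch on nested lists. It is an illustration under stated assumptions rather than the implementation used in this paper: batch normalization is omitted, ReLU stands in for the activation \(\delta\), the pooled maps are concatenated along the spatial axis as in the original coordinate attention formulation, and all function and argument names are hypothetical.

```python
import math


def conv1x1(features, weight):
    """1x1 convolution: features [C_in][L], weight [C_out][C_in] -> [C_out][L]."""
    length = len(features[0])
    return [
        [sum(w * features[c][pos] for c, w in enumerate(row)) for pos in range(length)]
        for row in weight
    ]


def sigmoid(value):
    return 1.0 / (1.0 + math.exp(-value))


def coordinate_attention(x, w_shared, w_h, w_w):
    """Apply coordinate attention to x with shape [C][H][W].

    w_shared has shape [C_mid][C]; w_h and w_w have shape [C][C_mid].
    """
    channels, height, width = len(x), len(x[0]), len(x[0][0])

    # Eqs. (1)-(2): average each channel over its width and over its height.
    z_h = [[sum(x[c][i]) / width for i in range(height)] for c in range(channels)]
    z_w = [[sum(x[c][i][j] for i in range(height)) / height for j in range(width)]
           for c in range(channels)]

    # Eqs. (3)-(4): concatenate along the spatial axis, mix channels, activate.
    # Batch normalization is omitted and ReLU is an assumed stand-in for delta.
    z = [z_h[c] + z_w[c] for c in range(channels)]
    f = [[max(0.0, v) for v in row] for row in conv1x1(z, w_shared)]

    # Split back into the two directions, then Eqs. (5)-(6).
    f_h = [row[:height] for row in f]
    f_w = [row[height:] for row in f]
    g_h = [[sigmoid(v) for v in row] for row in conv1x1(f_h, w_h)]
    g_w = [[sigmoid(v) for v in row] for row in conv1x1(f_w, w_w)]

    # Eq. (7): reweight every position by its row and column attention.
    return [[[x[c][i][j] * g_h[c][i] * g_w[c][j] for j in range(width)]
             for i in range(height)] for c in range(channels)]


if __name__ == "__main__":
    x = [[[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]],
         [[0.0, 1.0, 0.0], [2.0, 0.0, 2.0]]]
    identity = [[1.0, 0.0], [0.0, 1.0]]
    y = coordinate_attention(x, identity, identity, identity)
    print(len(y), len(y[0]), len(y[0][0]))  # output keeps the C x H x W shape
```

Each output value is the input value scaled by one weight for its row and one for its column, so a long, thin structure such as a weld seam can be emphasized along its direction.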

C3CA module

To avoid an excessive increase in model parameters and computation after introducing the attention mechanism, this paper embeds the CA attention module into the Bottleneck residual path of the C3 module. The original CSP structure is retained to maintain efficient gradient flow, and the design is optimized through the residual connection branch. On the one hand, the CA module applies directional attention, based on coordinate information encoding, to the feature map processed by the residual block, which enhances the feature expression of the target area and suppresses background interference. On the other hand, the residual connection and the CA module form a dual feedback mechanism, which not only integrates information through feature fusion, but also mitigates gradient attenuation during back propagation because gradients pass directly through the attention weights. In addition, the coordinate information embedded by the CA attention module helps the network adaptively adjust features at different scales, thereby improving the network's ability to detect targets of different sizes.

In this study, the CABottleneck module was designed by embedding the coordinate attention mechanism into the Bottleneck module of YOLOv5, as shown in Fig. 4. The module first compresses the channel dimension and extracts spatial features by convolution, and then applies the CA module to generate direction-specific attention weights. The CA module encodes absolute position information into the features through pooling operations in the horizontal and vertical directions, generates horizontal and vertical weights, and applies them to the features by element-wise multiplication. The CABottleneck module then fuses the attention-enhanced features with the original features through the residual connection, which enables the model to dynamically adjust its sensitivity to spatial location while maintaining gradient stability. Replacing the original Bottleneck module in C3 with the CABottleneck module yields the C3CA module, whose structure is shown in Fig. 5.

In this paper, the C3 modules in the backbone network of the YOLOv5s model are replaced by C3CA modules, so that the model pays more attention to important areas and achieves higher target detection accuracy. The structure of the YOLOv5s-C3CA model is shown in Fig. 6.

Loss function

The loss function of YOLOv5 consists of three parts: classification loss, localization loss and confidence loss. These losses are combined into the final loss function by weighted summation for model optimization. The classification loss evaluates the difference between the target category predicted by the model and the real category. The confidence loss evaluates the difference between the predicted confidence that a bounding box contains a target and the actual situation. The localization loss evaluates the difference between the predicted bounding box position and the real bounding box position. YOLOv5 uses the CIoU (Complete Intersection over Union) loss to calculate the localization loss. The CIoU loss takes into account not only the overlap area of the bounding boxes, but also the center distance and the aspect ratio. The specific formula is shown in Eq. (8).
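
Equation (8) does not appear in this text. In the standard CIoU formulation, which matches the terms defined in the following paragraph, the loss reads as below; the symbol \(L_{\mathrm{CIoU}}\) is assumed notation.

```latex
L_{\mathrm{CIoU}} = 1 - \mathrm{IoU} + \frac{\rho^{2}\left(b, b^{gt}\right)}{c^{2}} + \alpha v \tag{8}
```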

Here, \(\rho^{2}(b, b^{gt})\) represents the squared Euclidean distance between the center points of the prediction box and the real box; \(c\) refers to the diagonal length of the smallest rectangle enclosing both boxes; IoU represents the intersection over union of the prediction box and the real box; \(\alpha\) is the weight coefficient; \(v\) is a parameter that measures the consistency of the aspect ratios of the prediction box and the real box. The calculation formula is shown in Eq. (9).
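
Equation (9) does not appear in this text. In the standard CIoU formulation, \(v\) is defined as below. The expression for the weight coefficient \(\alpha\) is added from the same formulation as an assumption, since this text does not state it.

```latex
v = \frac{4}{\pi^{2}} \left( \arctan\frac{w^{gt}}{h^{gt}} - \arctan\frac{w}{h} \right)^{2} \tag{9}

\alpha = \frac{v}{\left(1 - \mathrm{IoU}\right) + v}
```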

Here, \(w\) and \(h\) are the width and height of the prediction box, and \(w^{gt}\) and \(h^{gt}\) are the width and height of the real box.
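
As a worked illustration of the CIoU terms described above, the following sketch computes the loss for a single pair of boxes. It assumes boxes given as (x1, y1, x2, y2) corner coordinates and the standard form of the weight coefficient \(\alpha = v / ((1 - \mathrm{IoU}) + v)\); the function name and the small constant `eps` are hypothetical, and the code is not taken from YOLOv5.

```python
import math


def ciou_loss(box, box_gt, eps=1e-9):
    """CIoU loss for two boxes given as (x1, y1, x2, y2) corner coordinates."""
    x1, y1, x2, y2 = box
    gx1, gy1, gx2, gy2 = box_gt
    w, h = x2 - x1, y2 - y1
    w_gt, h_gt = gx2 - gx1, gy2 - gy1

    # Intersection over union of the two boxes.
    inter_w = max(0.0, min(x2, gx2) - max(x1, gx1))
    inter_h = max(0.0, min(y2, gy2) - max(y1, gy1))
    inter = inter_w * inter_h
    union = w * h + w_gt * h_gt - inter + eps
    iou = inter / union

    # Squared center distance rho^2 and squared diagonal c^2 of the enclosing box.
    rho2 = (((x1 + x2) - (gx1 + gx2)) ** 2 + ((y1 + y2) - (gy1 + gy2)) ** 2) / 4
    c2 = (max(x2, gx2) - min(x1, gx1)) ** 2 + (max(y2, gy2) - min(y1, gy1)) ** 2 + eps

    # Aspect-ratio consistency v and its weight alpha (assumed standard form).
    v = (4 / math.pi ** 2) * (math.atan(w_gt / (h_gt + eps)) - math.atan(w / (h + eps))) ** 2
    alpha = v / ((1 - iou) + v + eps)

    return 1 - iou + rho2 / c2 + alpha * v


if __name__ == "__main__":
    print(ciou_loss((0, 0, 10, 10), (0, 0, 10, 10)))    # identical boxes
    print(ciou_loss((0, 0, 10, 10), (20, 20, 30, 30)))  # disjoint boxes
```

Unlike a plain IoU loss, the center-distance term still gives a useful signal when the boxes do not overlap at all, which is why the second call returns a value above 1.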

Experiment

Construction of dataset

The steel structure weld image data set used in this study was collected from the welding factory of Xinjiang Furui Assembly Technology Co., Ltd., and was obtained by CGU2-500 C-UVC industrial camera. It contains a total of 1300 images with a resolution of 1920 × 1080.

The collected images include common weld forms such as fillet welds and butt (splicing) welds. In addition, to prevent existing solder joints from disturbing weld recognition, images of welds with solder joints between them were also collected so that the dataset covers this scenario. Fillet welds account for 45% of the images, butt welds for 35%, and welds with solder joints for 20%. To better simulate real conditions, images were collected under different lighting, shooting distances and angles, from both the front view and the side view. Normal illumination images account for 70%, strong illumination images for 15%, and low illumination images for 15%. The weld images are shown in Fig. 7.

In addition, the weld images were augmented; some examples are shown in Fig. 8. The images in the dataset were rotated by 90° and 180°, their brightness was decreased and increased by 30%, and Gaussian noise and salt-and-pepper noise were added. This further expands the number of weld images, compensates for missing data and improves the generalization ability of the model.
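
The augmentation operations listed above can be sketched on a grayscale image stored as a nested list of 8-bit values. This is a minimal illustration, not the pipeline used in this paper: the rotation direction, the noise strength (`sigma=10.0`), the salt-and-pepper fraction (`amount=0.02`) and all function names are assumptions.

```python
import random


def rotate90(image):
    """Rotate a 2D grid of pixel values 90 degrees clockwise."""
    return [list(row) for row in zip(*image[::-1])]


def rotate180(image):
    """Rotate a 2D grid of pixel values by 180 degrees."""
    return [row[::-1] for row in image[::-1]]


def clip(value):
    """Round to an integer and clip to the 8-bit range."""
    return min(255, max(0, round(value)))


def adjust_brightness(image, factor):
    """Scale pixel values by `factor`, e.g. 0.7 or 1.3 for a 30% change."""
    return [[clip(p * factor) for p in row] for row in image]


def add_gaussian_noise(image, sigma, rng):
    """Add zero-mean Gaussian noise with standard deviation `sigma`."""
    return [[clip(p + rng.gauss(0.0, sigma)) for p in row] for row in image]


def add_salt_pepper_noise(image, amount, rng):
    """Set roughly a fraction `amount` of pixels to pure black or white."""
    return [[rng.choice((0, 255)) if rng.random() < amount else p for p in row]
            for row in image]


def augment(image, seed=0):
    """Return the augmented variants of one image, keyed by operation."""
    rng = random.Random(seed)
    return {
        "rot90": rotate90(image),
        "rot180": rotate180(image),
        "darker": adjust_brightness(image, 0.7),
        "brighter": adjust_brightness(image, 1.3),
        "gaussian": add_gaussian_noise(image, sigma=10.0, rng=rng),
        "salt_pepper": add_salt_pepper_noise(image, amount=0.02, rng=rng),
    }


if __name__ == "__main__":
    sample = [[10, 20, 30], [40, 50, 60]]
    for name, variant in augment(sample).items():
        print(name, variant)
```

For object detection, the bounding box annotations must be rotated together with the image; the brightness and noise operations leave the boxes unchanged.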

After the weld images were obtained, all of them were labeled manually with the annotation tool LabelImg. All labels are saved as .xml files in VOC format under the Annotations folder. After labeling, the weld images and their label files were divided into three parts: training set, validation set and test set. The training set contains 1040 images (80%), the validation set 130 images (10%) and the test set 130 images (10%), which are used for training, validation and testing, respectively.
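
The 80/10/10 division can be reproduced with a short, seeded shuffle. The sketch below is an assumption about how such a split might be done, since the paper does not describe its procedure; the function name, the seed and the file names are hypothetical.

```python
import random


def split_dataset(items, ratios=(0.8, 0.1, 0.1), seed=0):
    """Shuffle `items` reproducibly and split them into train, val and test lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * ratios[0])
    n_val = round(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]
    return train, val, test


if __name__ == "__main__":
    # Hypothetical file names standing in for the 1300 labeled weld images.
    names = [f"weld_{i:04d}.jpg" for i in range(1300)]
    train, val, test = split_dataset(names)
    print(len(train), len(val), len(test))
```

Splitting by image name keeps each image and its .xml label file in the same subset, provided both share the same base name.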

Experimental parameters

All models in this experiment were trained under the same conditions. The operating system is Windows 10, the development environment is PyCharm, the Python version is 3.7, the CUDA version is 11.0, the deep learning framework is PyTorch 1.7.1, the CPU is an Intel(R) Core i7-13700H, and the GPU is an NVIDIA GeForce RTX 2080Ti.

The learning rate affects the training of the model. When the learning rate is set too high, the model may fail to converge; when it is set too low, training is slow. In this experiment, the initial learning rate is set to 0.001, a common value that gives good results in most tasks. The choice of optimizer also affects the convergence speed and final performance of the model. Because the Adam optimizer adaptively adjusts the learning rate of each parameter during training and performs well in most tasks, it is selected in this experiment.

The size of the input image also affects the performance and computational complexity of the model. In this paper, the input image size is set to 640 × 640, which is suitable for large steel structure weld recognition tasks that require high-resolution input. The number of training epochs is set to 250 and the batch size is set to 16, which ensures the training speed of the model and improves its generalization ability to a certain extent.

Evaluation metrics

To evaluate the performance of the model objectively, this paper uses mAP (mean Average Precision), P (Precision) and R (Recall) as metrics for the detection effect of the model. Precision is the proportion of true positive samples among all instances predicted as positive, and reflects how accurate the model's positive detections are. In this experiment, it is the ratio of the number of correctly identified weld samples to the total number of identified samples. Recall is the proportion of correctly predicted positive samples among all instances that are actually positive, and reflects the model's ability to find positive samples. In this experiment, it is the ratio of the number of correctly identified weld samples to the actual number of weld samples. mAP is the mean of the average precision (AP) over all categories. For all three metrics, a higher value indicates a better model. The specific calculation formulas are as follows:
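
The formulas do not appear in this text. The standard definitions of these metrics, which agree with the symbols explained in the next paragraph, are given below; the integral form of AP and the index \(i\) are assumed notation.

```latex
P = \frac{TP}{TP + FP}

R = \frac{TP}{TP + FN}

AP = \int_{0}^{1} P(R)\, dR

mAP = \frac{1}{k} \sum_{i=1}^{k} AP_{i}
```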

In the above formulas, P represents the precision and R represents the recall; TP is the number of true positives, that is, instances predicted as positive that are actually positive; FP is the number of false positives, that is, instances predicted as positive that are actually negative; FN is the number of false negatives, that is, instances predicted as negative that are actually positive; AP represents the average precision of a single category; k represents the number of categories.
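
A small sketch shows how these quantities can be computed from detection results. It assumes that each detection has already been matched against the ground truth at the chosen IoU threshold (0.5 for mAP@0.5) and uses all-point interpolation of the precision-recall curve, which is one common convention and may differ from the evaluation code used for the reported results. All function names are hypothetical.

```python
def precision_recall(tp, fp, fn):
    """Precision and recall from true positive, false positive and false negative counts."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall


def average_precision(detections, num_gt):
    """Area under the precision-recall curve for one category.

    detections: list of (confidence, is_true_positive) pairs.
    num_gt: number of ground-truth objects of this category (must be > 0).
    """
    ranked = sorted(detections, key=lambda d: d[0], reverse=True)
    tp = fp = 0
    points = []  # (recall, precision) after each detection, in rank order
    for _, is_tp in ranked:
        if is_tp:
            tp += 1
        else:
            fp += 1
        points.append((tp / num_gt, tp / (tp + fp)))

    # Use the best precision at any equal or higher recall (the curve's
    # envelope), then sum the rectangles under it along the recall axis.
    ap, prev_recall = 0.0, 0.0
    for i, (recall, _) in enumerate(points):
        best_precision = max(p for _, p in points[i:])
        ap += (recall - prev_recall) * best_precision
        prev_recall = recall
    return ap


def mean_average_precision(ap_per_class):
    """Mean of the per-category AP values."""
    return sum(ap_per_class) / len(ap_per_class)


if __name__ == "__main__":
    dets = [(0.9, True), (0.8, False), (0.7, True)]
    print(precision_recall(tp=2, fp=1, fn=0))
    print(average_precision(dets, num_gt=2))
```

Raising the confidence threshold corresponds to keeping only the top of the ranked list, which is why precision tends to rise and recall to fall as the threshold increases.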

Experimental results and analysis

The training results of each model obtained during the experimental training process are shown in Table 1. The complexity of the model is shown in Table 2, and the mAP@0.5 training result curve is shown in Fig. 9.

As shown in Fig. 9, the mAP@0.5 of the improved YOLOv5s-C3CA model is higher than that of the original YOLOv5s model. Table 1 shows that the mAP@0.5 of the original YOLOv5s model is 91.31%. The accuracy of the YOLOv5s-CA model improves after the CA attention mechanism is introduced, indicating that the CA attention mechanism enhances the model's attention to the target features and improves the detection accuracy. The YOLOv5s-C3CA model, which integrates the attention mechanism into the C3 module, reaches an mAP@0.5 of 93.79%, outperforming the YOLOv5s, YOLOv7 and YOLOv8 models as well as YOLOv5s-SE and YOLOv5s-CBAM, and achieving the highest accuracy among all models. Compared with the original YOLOv5s model, the mAP@0.5 of the YOLOv5s-C3CA model is 2.48 percentage points higher (93.79% versus 91.31%), which indicates that the C3CA structure effectively improves the performance of the model and identifies and locates the target more accurately. In addition, the precision and recall of the models with the attention mechanism are also higher than those of the original model, which indicates that the CA and C3CA structures not only improve the average precision but also improve the model's ability to judge positive samples, so that the model detects more of the existing targets and produces fewer false detections.

Table 2 shows that the parameter count and floating-point operations (FLOPs) of the YOLOv5s-CA model are slightly larger than those of the original YOLOv5s model, because the added CA attention mechanism introduces both extra parameters and extra computation. In contrast, the parameter count and FLOPs of the YOLOv5s-C3CA model are smaller than those of the original YOLOv5s model: while weld detection accuracy is preserved, the parameters are reduced by 8.53% and the FLOPs by 10.6%.
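The percentages reported in this section follow two conventions: the mAP@0.5 gain is an absolute difference between two percentages (93.79 − 91.31), whereas the parameter and FLOPs reductions are relative to the baseline model. The short Python sketch below makes the distinction explicit. The helper names are hypothetical, and because the raw counts from Table 2 are not repeated here, the baseline complexity is normalised to 1.0 rather than given as an actual number.

```python
def absolute_gain(baseline: float, improved: float) -> float:
    """Difference in percentage points between two percentage scores."""
    return improved - baseline


def relative_change(baseline: float, improved: float) -> float:
    """Change relative to the baseline, expressed in percent."""
    return (improved - baseline) / baseline * 100.0


def remaining_fraction(reduction_percent: float) -> float:
    """Fraction of the baseline that remains after a relative reduction."""
    return 1.0 - reduction_percent / 100.0


# mAP@0.5 values reported in the text for YOLOv5s and YOLOv5s-C3CA.
map_yolov5s, map_c3ca = 91.31, 93.79
print(f"absolute gain: {absolute_gain(map_yolov5s, map_c3ca):.2f} points")
print(f"relative gain: {relative_change(map_yolov5s, map_c3ca):.2f} %")

# Complexity relative to a YOLOv5s baseline normalised to 1.0.
print(f"parameters remaining: {remaining_fraction(8.53):.4f}")
print(f"FLOPs remaining:      {remaining_fraction(10.6):.4f}")
```

Under these definitions the 2.48-point gain corresponds to a relative mAP@0.5 improvement of roughly 2.7%, and the C3CA model keeps about 91.5% of the baseline parameters and 89.4% of the baseline FLOPs.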

Fig. 10 (a) and (b) show that, throughout training, the loss curves of the YOLOv5s-C3CA model follow a downward trend similar to those of the YOLOv5s model and tend toward a lower value in the later stage. Panels (c) and (d) show that the validation-set localization loss of the two models also declines in a similar way, but the objectness loss of YOLOv5s fluctuates more than that of the improved model, indicating that the improved model has better target detection performance.

Figure 11 shows how the precision and recall of the YOLOv5s-C3CA model vary with the confidence threshold. When the threshold is low, recall is high and precision is low; when the threshold is high, precision is high and recall is low. At a confidence threshold of 0.5, both precision and recall are relatively high.
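The trade-off described above can be reproduced with a small Python sketch that sweeps the confidence threshold over a list of scored detections. The detections, the ground-truth count and the function name are hypothetical toy values, not data from this study. The sketch also assumes that each detection has already been matched against the ground truth (for example by an IoU criterion) and labelled as a true or false positive, and it adopts the convention that precision is 1.0 when no detection survives the threshold.

```python
from typing import List, Tuple

# Hypothetical detections: (confidence, is_true_positive).
detections: List[Tuple[float, bool]] = [
    (0.95, True), (0.90, True), (0.80, True), (0.60, False), (0.55, True),
    (0.40, False), (0.30, True), (0.20, False), (0.10, False),
]
NUM_GROUND_TRUTH = 6  # hypothetical number of annotated welds


def precision_recall_at(dets: List[Tuple[float, bool]],
                        num_ground_truth: int,
                        threshold: float) -> Tuple[float, float]:
    """Precision and recall when only detections with conf >= threshold are kept."""
    kept = [is_tp for conf, is_tp in dets if conf >= threshold]
    tp = sum(kept)            # kept detections that match a ground-truth weld
    fp = len(kept) - tp       # kept detections that match nothing
    p = tp / (tp + fp) if kept else 1.0  # convention: no detections, no errors
    r = tp / num_ground_truth if num_ground_truth else 0.0
    return p, r


for t in (0.1, 0.5, 0.9):
    p, r = precision_recall_at(detections, NUM_GROUND_TRUTH, t)
    print(f"threshold={t:.1f}  precision={p:.3f}  recall={r:.3f}")
```

On this toy data, raising the threshold from 0.1 to 0.9 raises precision and lowers recall, while the intermediate value of 0.5 keeps both at a moderate level, which is the qualitative behaviour reported for Fig. 11.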

To verify the actual detection performance of the model, it is evaluated on the test set with the confidence threshold set to 0.5; the results are shown in Fig. 12. The model accurately identifies the weld targets in structures such as butt welds, fillet welds and solder joint welds, showing stable detection performance.

To verify the robustness of the model, this study uses the public weld data set JPEGWD for cross-dataset testing. The data set contains 12,000 welding defect classification images and 1,800 splicing weldment images, covering butt welds, fillet welds and other types, and including interference scenes such as strong light and shadow. The results are shown in Table 3.

Table 3 shows that the mAP@0.5 of the YOLOv5s-C3CA model reaches 90.8% on the JPEGWD public weld data set, and that it also outperforms models such as YOLOv5s-SE and YOLOv5s-CBAM. This verifies the effectiveness of the algorithm on a data set other than the self-made one and shows that the model has good adaptability and robustness.

Conclusion

This paper proposes an improved YOLOv5 model that integrates the coordinate attention mechanism for the steel structure weld recognition task. The C3CA structure is constructed by embedding the coordinate attention module into the C3 module. While retaining the efficient gradient propagation of the CSP network, the direction-aware feature enhancement strategy significantly improves the model’s fine-grained perception of the spatial position of the weld and strengthens its weld recognition ability. The experimental results show that the improved YOLOv5s-C3CA model achieves an mAP@0.5 of 93.79%, which is 2.48 percentage points higher than the original model, while the parameter count is reduced by 8.53% and the FLOPs by 10.6%, demonstrating a balance between a lightweight design and high precision. Compared with other detection models, the proposed method shows better overall performance and higher detection accuracy in weld detection tasks. This study provides a scalable technical framework for industrial-grade weld inspection and has important reference value for promoting intelligent welding.

However, this study still has some limitations: (1) the diversity of weld types and working conditions covered by the data set needs to be further expanded; (2) the existing model relies only on visual information and does not make full use of multi-source data such as ultrasound and infrared to improve its recognition ability.

Future research can focus on the following directions: (1) exploring the deep integration strategy of attention mechanisms and feature pyramids; (2) conducting multi-sensor data fusion experiments to enhance adaptability in complex scenes; (3) further developing an online learning framework to meet the real-time detection requirements of dynamic welding conditions.

Data availability

The datasets used and/or analysed during the current study are available from the corresponding author on reasonable request.

Author information

Contributions

Shijie Zhu conducted the experiments and wrote the paper. Lixin Zhang and Jiawei Zhao reviewed and revised the paper and guided the methods and ideas. Xue Hu reviewed the text. Xi Luo assisted in obtaining the weld image data.

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhu, S., Zhang, L., Zhao, J. et al. Research on steel structure weld seam recognition algorithm based on improved YOLOv5. Sci Rep 15, 34276 (2025). https://doi.org/10.1038/s41598-025-16550-z
