Abstract

Classification of indoor scenes is a crucial task in computer vision, with widespread applications in smart homes, smart cities, robotics, and related areas. Primitive classification methods such as Support Vector Machines (SVM) and K-Nearest Neighbors (KNN) perform poorly in complex indoor environments because of lighting variations, intra-class similarities, and occlusions. Deep Learning (DL) models, especially Convolutional Neural Networks (CNNs), have improved classification accuracy significantly by learning image features automatically. This study proposes and implements a novel MultiData model, which integrates DL with Linear Discriminant Analysis (LDA) and Federated Learning (FL) to enhance classification performance while preserving data privacy. A comparative performance analysis against VGG16, VGG19, and ResNet152 shows that MultiData achieves near-perfect accuracy (99.99%) and minimal validation loss (0%). The proposed model was further evaluated with FL-based training across four clients using IID and non-IID datasets. The results confirm the model’s robustness, with 100% accuracy in training and over 95% in validation. This research is beneficial for stakeholders in healthcare, smart infrastructure, surveillance, and other IoT-based automation, aligning with SDG 9 (Industry, Innovation, and Infrastructure), SDG 11 (Sustainable Cities and Communities), and SDG 12 (Responsible Consumption and Production). By enabling efficient and privacy-preserving scene classification, this work contributes to the development of smart and sustainable environments and enhances AI-based decision-making across various sectors.

Introduction

Classification of objects is a typical research topic in the image domain. Classifying or categorizing indoor images into predefined classes is termed indoor scene classification1. The images focus on indoor settings or enclosed spaces. It is a subdomain of image processing, computer vision, and scene recognition in which images are mainly classified into classes such as office, kitchen, library, living room, gym, and bedroom, based on their visual content. Monitoring and analyzing domestic activities also makes it easier to understand indoor environment behaviors and human activities2.

The performance of Machine Learning (ML) models is highly dependent on the quality of the input training data, and proper organization of the dataset. An enhanced output is obtained when the training data is of high quality. The high cost and time-consuming process of data collection often results in low-quality samples or an imbalanced dataset which further creates performance gaps and degrades the performance of ML models3. Diverse fields are explored to provide a personal service of recognizing the activities and surroundings of users. Data augmentation has been applied in various fields to address data-related issues. Various operations like rotating, cropping, or adding noise to the image are used in the image domain and acoustic studies. One of the computer vision tasks that analyze background and target objects and predicts the scene category is called ‘scene classification’4. The same scene can have multiple points of view or scene areas, which makes it difficult to obtain feature space. Indoor scene classification is also integrated with robotic applications to perceive and recognize the background environment5.

DL models have also emerged as effective methods to classify indoor images6. Before DL models emerged, primitive methods like traditional image processing and feature extraction techniques were used to categorize indoor images into predefined classes. Primitive methods heavily depended on basic classification techniques like SVM, KNN, and other manual feature extraction techniques7. Though such primitive methods were somewhat effective, they were inefficient with complex scenes with cluttered, overlapped, or dimly lit objects. The DL models emerged with significant advancements in this field. Several challenges related to the variability and complexity of the object have been overcome with the use of DL in this domain. Approaches like DL-based CNNs can automatically learn from robust features of raw images and have replaced primitive classification methods8.

FL offers many newly developed services and applications that can revolutionize existing IoT systems. It plays a major role in classifying indoor images by enabling collaborative learning, addressing privacy concerns, and enhancing performance without centralized data storage9. Common applications of FL in indoor image classification include smart homes, retail, security systems, and healthcare, where sensitive information cannot be shared among devices10. The foremost advantage of FL is that it preserves the privacy of indoor images, which may contain confidential information. The data is kept on user devices such as cameras and smartphones, and each device trains its own local ML model on its images11. The devices then send mathematical representations of their local model updates, called gradients, to the central server, where they are aggregated into a global model that is redistributed to the devices. The global model thus accumulates knowledge from all devices without any data sharing. This methodology reduces the risk of data breaches and enforces data privacy because raw data is never shared and ML models are trained collaboratively on multiple local devices12. FL also allows models to be trained on diverse datasets collected across different locations, further enhancing the robustness of the classification models. It can reduce the computational load by distributing it across the local devices13.
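The server-side aggregation step described above can be illustrated with a minimal sketch. It assumes a FedAvg-style weighted average of client updates, which is one common aggregation rule and not necessarily the rule used in this study; the function name `aggregate_updates`, the flat list-of-floats parameter layout, and the client sample counts are hypothetical.

```python
from typing import List, Sequence


def aggregate_updates(client_params: Sequence[Sequence[float]],
                      client_sizes: Sequence[int]) -> List[float]:
    """Weighted average of client parameter vectors (FedAvg-style assumption).

    Each client contributes in proportion to its number of local samples.
    Only parameters are passed in; raw images never leave the devices.
    """
    if not client_params or len(client_params) != len(client_sizes):
        raise ValueError("need exactly one sample count per client vector")
    total = sum(client_sizes)
    if total <= 0:
        raise ValueError("total number of samples must be positive")
    dim = len(client_params[0])
    global_params = [0.0] * dim
    for params, size in zip(client_params, client_sizes):
        if len(params) != dim:
            raise ValueError("all clients must share the same parameter layout")
        weight = size / total
        for j, value in enumerate(params):
            global_params[j] += weight * value
    return global_params


# Hypothetical example: four clients, two parameters each, equal data sizes.
updates = [[1.0, 0.0], [3.0, 2.0], [5.0, 4.0], [7.0, 6.0]]
sizes = [100, 100, 100, 100]
global_model = aggregate_updates(updates, sizes)
```

With equal client sizes the result reduces to a plain mean of the four vectors; unequal sizes shift the global model toward the clients holding more data.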

Several factors affect the classification of indoor scenes: high intra-class variability, inter-class similarity, feature extraction, occlusion and clutter, and inconsistent lighting conditions. Handling high intra-class variability is essential14. Indoor scenes of the same class or category can exhibit significant visual variations caused by differences in structure, object placement, and lighting. A robust and reliable classification method must therefore be able to handle such variations. Another major factor is the similarity of objects across classes15. Many classes share visually similar objects, e.g., chairs and tables appear in both living rooms and offices. A reliable classifier must differentiate scenes based on their contextual information and spatial arrangements rather than on the objects alone.

The classification method must be sufficiently resilient to ensure accuracy. Occlusion and clutter add complexity to indoor scenes; feature extraction must therefore provide a comprehensive understanding of the scene that captures both spatial and object-specific details16. Lighting is another major aspect that affects the classification of indoor scenes. Indoor images may be subject to inconsistent lighting caused by shadows, reflections, etc., so classification methods must perform reliably under diverse lighting conditions.

Classification of indoor scenes plays an important role in understanding real-world indoor scenarios. Domains where indoor scene classification is important include robotics for context-aware navigation, IoT-enabled smart homes for personalized automation, drones and CCTV for surveillance and disaster management, augmented and virtual reality for interior design simulation, and assistive technologies for the elderly or the visually impaired17. In this way, ML and DL have revolutionized image classification tasks by accurately identifying and classifying objects.

The present research work addresses several key objectives regarding the classification of images. The key objectives are as follows:

- A basic DL algorithm is implemented.

- A new LDA-based approach, which combines ML and DL models, is implemented as shown in Fig. 3.

- A new approach is implemented with FL, as depicted in Fig. 4.

The rest of the manuscript is organized in 5 sections. Section 2 comprises the background literature study in various sections relevant to the experiment. The third section comprises the materials and methods required for the implementation of this study. Section 4 comprises the results and discussions of the study, followed by the conclusion section.

Literature survey

Indoor image classification is becoming increasingly important in applications like smart cities, smart healthcare, robotics, and security systems. Several research works have been done to propose and implement robust computational approaches and facilitate indoor scene classifications in various research domains18.

Traditional indoor image classification relied on feature extraction methods like Scale-Invariant Feature Transform (SIFT) and Histogram of Oriented Gradients (HOG)19. Such techniques use manually designed algorithms to detect textures and edges in an image. Though effective in simple settings, these methods performed poorly in complex indoor environments with variable lighting or occlusions, and they depended heavily on domain expertise.

The advent of ML algorithms like SVMs and Random Forest (RF) significantly enhanced the learning of patterns from features extracted using traditional methods20. Though comparatively more effective, these models were still constrained by the quality of the hand-crafted features, a limitation that was eventually removed by the transition to DL models21. CNNs improved performance on diverse and complex indoor scenes by learning features automatically from raw images. This evolution allows better generalization across application domains and datasets by minimizing the dependence on manual feature extraction.

a. Applications of ML in indoor image classification.

Various researchers have recognized the importance of classifying indoor images in diverse application areas, and several ML methods have been used to classify indoor scenes into distinct classes. The lightweight GenericConv architecture is proposed in22 to classify images using convolutional layers, max-pooling, dense layers, dropout, and few-shot learning, which helps prevent overfitting and extracts features efficiently. The MiniSun, MiniPlaces, and MIT-Indoor 67 datasets were used to test the model, and superior performance was reported. In23, the authors used the S3DIS dataset to classify 3D indoor point clouds using the XGBoost, Multi-Layer Perceptron (MLP), Random Forest (RF), and TabNet algorithms, with RF achieving 86% accuracy. A novel ML model named RepConv is introduced in24 to classify scenes on the Intel scenes dataset, which was recategorized for binary and multi-class classification. The authors compared RepConv with ResNet 50 and SE ResNext 101, using fewer parameters and epochs. For multi-class classification, accuracies of 93.55% and 75.54% were achieved on training and validation data, respectively, whereas for binary classification, 98.08% and 92.70% were achieved on training and validation data, respectively.

A comprehensive review of ML in various image processing techniques highlights the challenges of foggy images and the use of ML for enhancing, segmenting, and denoising images. It emphasizes the advantages of transfer learning for small datasets and the need for better datasets and lightweight architectures for real-time defogging applications. A Scene-Aware Label Graph Learning (SALGL) framework for multi-label image classification is introduced in25; it captures label co-occurrence dynamically by associating labels with scene categories. A semantic attention module aligns visual label features, a graph propagation mechanism refines label predictions, and a scene-aware co-occurrence module models label interactions. The framework handles label dependencies robustly across diverse datasets and significantly outperforms existing methods. In26, the authors present a semantic classification of LiDAR-based sensory data for indoor robot navigation. A cost- and memory-efficient framework is introduced to distinguish between doorways, rooms, halls, and corridors, and missing or infinite LiDAR values are handled during preprocessing. SVM achieved the best testing accuracy of 97.21%. Table 1 summarizes the various ML approaches used for classifying indoor images.

ML uses algorithms and statistical methods that can be trained using data patterns without explicit programming. ML models extract features to classify images into identified classes. Some of the most widely used ML methods for image classification are:

- SVMs classify images by segregating features into specific classes using hyperplanes. They can distinguish indoor images from outdoor images using edges, colors, or textures27.

- RF and DT classify images by learning decision rules based on their extracted features. They generally detect indoor objects based on their shapes28.

- KNN identifies the class of an image based on its nearest neighbors. It classifies images based on their visual similarity29.

Though ML algorithms are efficient at classification, they face several challenges. They require manual feature extraction, which makes them more time-consuming, and they exhibit limited performance on large-scale, complex datasets30.

b. Applications of DL in indoor image classification.

Various DL approaches and frameworks are also used in indoor scene classification, and the literature demonstrates diverse applications of DL in this domain. A novel DL framework for multi-label image classification is presented in31, where label-specific pooling (LSP) is introduced. The MS-COCO and PASCAL VOC datasets were used to evaluate the method, which achieved better classification results, mean average precision (mAP), recall, and precision than existing models. A multi-scale CNN and LSTM-based scene classification method, optimized by a Whale Optimization Algorithm (WOA), is proposed in32. Classification accuracies of 98.91% and 94.35% were obtained on the lab and FR079 public datasets. By optimizing the learning rate and regularization, the WOA raised the accuracy to 99.76%.

A fusion of hand-crafted methods with DL features from the EfficientNet-B7 model has been proposed for indoor scene classification. The fusion model obtained an accuracy of 93.87%, outperforming state-of-the-art methods33. A lightweight DL model for big data applications in indoor scene classification has also been presented: IRDA-YOLOv3, an enhanced lightweight architecture that improves the YOLOv3-Tiny model for scene classification and object detection. IRDA-YOLOv3 improved efficiency, reducing forward computation time by 0.2 ms, parameters by 56.2%, and computation by 46.3%34. Multimodal DL techniques for indoor and outdoor scene classification and recognition have been reviewed, demonstrating advancements in CNNs and computer vision. The review highlights the integration of transfer learning and CNNs to enhance parameter optimization and feature extraction, and reports significantly enhanced accuracy on Kaggle’s Indoor Scene dataset35. The various DL models used for indoor scene classification are summarized in Table 2.

These methods automate feature extraction and achieve higher accuracy when classifying images. Some of the commonly used DL methods are listed as follows:

- CNNs can classify large datasets with thousands of categories. They use convolutional layers to learn hierarchical features like shapes, edges, or textures for classification. The most popular CNN models are AlexNet, VGG, ResNet, and DenseNet36.

- RNNs with CNNs: In sequence-based or video classification, such as indoor surveillance videos, RNNs capture temporal relationships while CNNs perform feature extraction37.

- Generative Adversarial Networks (GANs): These networks augment training datasets with synthetic data to improve classification accuracy. They are generally used when training models with limited indoor images, by generating realistic samples38.

- Transfer Learning: Transfer learning leverages pre-trained models such as ResNet or VGG. These models are fine-tuned for indoor image classification tasks with limited datasets. This approach reduces training time and computational requirements while also enhancing accuracy39.

Though ML methods like SVM, KNN, etc., have laid the basis for image classification, DL methods like CNNs have improved their scalability and accuracy. These aspects help in diverse applications across various fields like healthcare, robotics, security and surveillance, drones, autonomous vehicles, and real-time indoor and outdoor classifications.

c. Applications of FL in indoor image classification.

FL can be applied to classify indoor scenes to address challenges in distributed data across multiple devices. It creates and empowers robust and privacy-preserving models, especially in distributed environments40. The indoor images are collected from different devices and classified locally without transferring the data to a centralized server. Some of the major applications of FL in indoor scene classification are in smart homes, indoor robotics, healthcare, retail, and smart public places where there are multifold benefits like preservation of data privacy, scalability, and learning from diverse heterogeneous data41. Another crucial aspect that affects the model performance and training process in FL is Independent and Identically Distributed (IID) and Not Independent and/or Not Identically Distributed (Non-IID) data42.

IID refers to data that is independently and identically distributed across the devices. It has the advantages of faster convergence during training and a lower risk of model bias. Non-IID, in contrast, refers to data that is distributed non-uniformly across the clients. Unlike IID data, non-IID data leads to comparatively slower convergence, biases in the model, and difficulties in aggregation.

In43, the authors designed a blockchain-based FL scheme, namely FedBG, to address privacy concerns and the shortage of medical image classification data. It incorporates EC-GAN, an enhanced classification GAN, to improve image diversity while optimizing classification accuracy. Experimental results show that FedBG reduces training time by 27–38% and improves accuracy by 0.9-2%, while preserving data privacy and generating high-quality medical images. An FL-based indoor image recognition positioning system is proposed in44 to address privacy concerns in image-based indoor positioning methods. The system preserves client privacy and achieves 94% accuracy by implementing the MobileNet model with the FedAvg and FedOpt algorithms. In45, four FL algorithms, namely FedBABU, FedAvg, FedProto, and APPLE, are evaluated on non-IID data from the Fashion MNIST dataset. The FedAvg and FedBABU algorithms obtained accuracies of 76.04% and 74.62%, respectively, and were surpassed by the APPLE and FedProto algorithms, which attained 99.21% and 99.40%. Personalized FL (P-FL) and generalized FL (G-FL) were bridged for image classification through the proposed FED-ROD framework, which outperformed other methods on non-IID data across diverse datasets with superior scalability and balanced accuracy46. An FL-based MRI brain tumor classification was proposed, leveraging the FedAvg algorithm and the EfficientNet-B0 model to minimize privacy breaches and enhance diagnostic accuracy. EfficientNet-B0 outperformed ResNet-50, with a maximum test accuracy of 80.17% and a minimum loss of 0.612, compared with 65.32% accuracy and 1.017 loss for ResNet-50. The FL model was resilient to heterogeneous data and achieved 99% accuracy46. The research in47 highlights the transformative impact of FL on medical imaging while addressing data privacy concerns. The results demonstrate that FL achieved results comparable to centralized models in diagnosing conditions, disease classification, etc. Despite ongoing optimization improvements, several issues remain prevalent, such as communication bottlenecks, heterogeneity, and computational costs.

The effectiveness of FL in securely analyzing histopathological images of breast cancer is examined in48 using the BreakHis dataset. The research compares federated, independent, and centralized training models; FL achieves results comparable to centralized learning, with negligible differences in F1 scores, accuracy, and precision. DenseNet-201 and ResNet-152 achieved high diagnostic odds ratios and Kappa values and outperformed other models in reliability and consistency, thus validating FL as a solution to privacy concerns in the collaborative analysis of medical images. In another research work49, the authors introduce the FLBIC-CUAV framework, a combination of blockchain and FL for efficient and secure classification of UAV network images in Industrial IoT (IIoT) environments. The proposed framework leverages FL with ResNet for classification, beetle swarm optimization (BSO) for UAV clustering, and blockchain for secure data transmission. The framework produced superior outcomes compared with existing methods, with up to 99.15% accuracy, reduced delay, improved throughput, and lower energy consumption. A concise summary of the various FL approaches used to classify indoor images is given in Table 3.

Problem description

The extensive literature survey found that the existing research does not address several aspects in various domains. ML and DL algorithms play a pivotal role in classifying indoor images providing reliable and robust techniques for essential decision-making based on pattern recognition and feature extraction. The roles of ML and DL algorithms in indoor image classification include better feature extraction, enhanced classification accuracy, and integration with multimodal data. Apart from these, DL proves to be a dominant paradigm in this domain, providing further advantages over ML in terms of scalability and generalization, where DL models can scale up to larger datasets and provide better generalization as compared to ML models, which have limited capacity to learn from large datasets50. ML also lags in using contextual information in classification, which is overcome by using DL models, along with handling occlusions, cluttering, and lighting variations51. The pre-trained DL models like ResNet, MobileNet, and VGG can help in reducing the indoor classification time and improving performance.

Various smart domains like smart homes, indoor robotic navigation, and augmented reality (AR) require privacy preservation as a primary consideration in scene classification. Multiple devices are used in the data collection process, and the resulting data distribution may be either IID or Non-IID52. FL models are effective in these cases, where privacy preservation and data integrity are the key concerns. Unlike centralized training, FL trains the model on the respective devices where the data resides, and only the gradients are shared with the central server53.

The key contributions of this work are:

- To analyze ML and DL algorithms for indoor scene classification.

- To design and develop hybrid pre-trained model-based feature extraction for better classification.

- To implement the proposed hybrid pre-trained model-based feature extraction technique in an FL environment with identical and non-identical data distribution.

Materials and methods

The various materials and the corresponding method used in this study are explained in this section. The various materials include the dataset, the algorithms used, and the performance metrics, whereas the methodology includes a detailed description of the process flow for the entire study.

Dataset description

The Indoor Scene Recognition Dataset, introduced in the CVPR 2009 paper by MIT, is a standard benchmark dataset designed for the challenging task of indoor scene classification. It is taken from Kaggle54 and is widely used for evaluating scene recognition methods in computer vision. Due to the increasing complexity of the indoor scenes, this dataset was created to broaden the scope of existing scene recognition algorithms, helping the researchers to develop DL methods and evaluate them for indoor scene classification. It has also inspired advancements in DL architectures, feature extraction, and object detection.

Figure 1 shows a screenshot of the dataset. The dataset contains 15,620 images in 67 categories of indoor scenes. The categories and their image counts are: airport (66), art studio (63), auditorium (4), bakery (58), bar (57), bathroom (197), bedroom (350), bookstore (380), bowling (213), buffet (111), casino (515), children room (112), church (180), classroom (113), cloister (120), closet (135), clothing store (106), computer room (114), concert hall (103), corridor (346), deli (258), dental office (131), dining room (274), elevator (101), fast food restaurant (116), florist (103), game room (127), garage (103), greenhouse (101), grocery store (213), gym (231), hair salon (239), hospital room (101), inside bus (102), inside subway (457), jewellery shop (157), kindergarten (127), kitchen (734), laboratory (125), laundromat (276), library (107), living room (706), lobby (101), locker room (249), mall (176), meeting room (233), movie theatre (175), museum (168), nursery (144), office (109), operating room (135), pantry (384), inside pool (174), prison cell (103), restaurant (513), restaurant kitchen (107), shoe shop (116), staircase (155), studio music (108), subway (539), toystore (347), train station (153), tv studio (166), video store (110), waiting room (151), warehouse (506), and wine cellar (269). Each image belongs to exactly one category. All the images in this dataset are provided explicitly for research purposes only. Clutter and complex spatial layouts cause high intra-class variability and inter-class similarity, making the classification problem more challenging.

The dataset is partitioned into IID and non-IID configurations across four clients. Initially, the images of the 67 classes are divided equally among the four clients, which gives an IID distribution. The image features are extracted using pre-trained models and the best model is identified. The extracted features are then used in the FL environment for collaborative training and model improvement using LDA. The same process is repeated with an uneven, random distribution of the images of the 67 classes across all 4 clients (non-IID).
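The two partitioning schemes can be sketched as follows. This is a minimal illustration under stated assumptions, not the authors' partitioning code: the function names `split_iid` and `split_non_iid`, the random class-specific weights used to skew the non-IID split, and the toy data are all hypothetical.

```python
import random
from collections import defaultdict
from typing import Dict, List, Tuple

Sample = Tuple[str, int]  # (hypothetical image identifier, class label)


def split_iid(samples: List[Sample], n_clients: int, seed: int = 0) -> List[List[Sample]]:
    """Deal the images of every class out evenly, so all clients see the same class mix."""
    rng = random.Random(seed)
    by_class: Dict[int, List[Sample]] = defaultdict(list)
    for sample in samples:
        by_class[sample[1]].append(sample)
    clients: List[List[Sample]] = [[] for _ in range(n_clients)]
    for label in sorted(by_class):
        items = by_class[label]
        rng.shuffle(items)
        for i, sample in enumerate(items):
            clients[i % n_clients].append(sample)  # round-robin within the class
    return clients


def split_non_iid(samples: List[Sample], n_clients: int, seed: int = 0) -> List[List[Sample]]:
    """Assign each image to a random client using class-specific random weights."""
    rng = random.Random(seed)
    labels = sorted({label for _, label in samples})
    # A separate random weight vector per class skews the class mix on each client.
    weights = {label: [rng.random() + 0.05 for _ in range(n_clients)] for label in labels}
    clients: List[List[Sample]] = [[] for _ in range(n_clients)]
    for sample in samples:
        owner = rng.choices(range(n_clients), weights=weights[sample[1]])[0]
        clients[owner].append(sample)
    return clients


# Toy data: 3 classes with 40 images each (the real dataset has 67 classes).
toy = [(f"img_{label}_{k}", label) for label in range(3) for k in range(40)]
iid_clients = split_iid(toy, n_clients=4)
non_iid_clients = split_non_iid(toy, n_clients=4)
```

In the IID split every client receives the same number of images per class (up to a remainder of one), whereas the non-IID split leaves both the client sizes and the per-client class proportions uneven.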

LDA is a statistical method commonly used to separate classes in ML and DL. It finds the linear combination of features that best separates the classes, and is mainly used for dimensionality reduction and classification applications55. It has two main goals:

- To maximize the inter-class separation while minimizing the intra-class variation (high inter-class scatter, low intra-class scatter).

- To retain maximum class-discriminatory information while reducing feature dimensions.

These goals are achieved using the optimization in Eq. 1, which maximizes the ratio of inter-class scatter to intra-class scatter, where O’ denotes the optimization objective of LDA.
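For reference, the standard Fisher criterion, which matches the verbal description above, can be written as follows. This is the conventional textbook form and is assumed here rather than taken from the authors' equation; the symbols $W$ (projection matrix), $S_B$ (inter-class scatter), $S_W$ (intra-class scatter), $\mu_c$, $\mu$, $N_c$, and $C$ are notation introduced for illustration, and only $O'$ is named in the text.

```latex
O' = \max_{W} \frac{\left| W^{\top} S_B W \right|}{\left| W^{\top} S_W W \right|},
\qquad
S_B = \sum_{c=1}^{C} N_c \,(\mu_c - \mu)(\mu_c - \mu)^{\top},
\qquad
S_W = \sum_{c=1}^{C} \sum_{x_i \in c} (x_i - \mu_c)(x_i - \mu_c)^{\top}
```

Because $S_B$ is a sum of $C$ rank-one terms constrained by the overall mean, its rank is at most $C - 1$, so LDA on the 67 classes of this dataset yields at most 66 discriminant directions regardless of the input feature dimension.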

Methodology

The methodology followed in this study is shown in Fig. 2. Images are collected into a dataset, the data is cleaned, and features are extracted using pre-trained models so that an efficient classification can be carried out. The privacy and integrity of the images are preserved, and the resulting features are then used in an FL environment on IID and non-IID datasets separately.

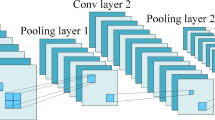

The process begins with collecting indoor images to form a dataset. The data is then preprocessed, by cleaning and transforming it, to prepare the raw data for training and model evaluation. Pretrained DL models like EfficientNet-B3, DenseNet-201, InceptionResNetV2, EfficientNet V2S, VGG16, and MobileNet are used to extract features from the preprocessed data. The best DL algorithm is identified based on evaluation parameters like accuracy, recall, precision, F1-Score, and loss. The extracted features form compact representations of the images, which reduces their size and preserves privacy, and these representations are used in the FL environment. FL is implemented on IID and non-IID datasets separately to evaluate and analyze the resulting outcomes.

The process of performance analysis of the models is depicted in Fig. 3. Features are extracted from the image data using the DL models mentioned above. In the feature extraction step, EfficientNetB3 extracted 1536 features, DenseNet201 extracted 1920 features, InceptionResnetV2 extracted 1536 features, EfficientNetV2S extracted 1280 features, VGG16 extracted 4096 features, and MobileNet extracted 1000 features. The LDA approach to image classification is then applied to the corresponding extracted features. The performance of each model is computed and compared with that of the proposed MultiData model, which is found to outperform the other models with superior accuracy, precision, recall, and F1-Score and minimal loss.
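The LDA step applied to extracted features can be illustrated on a toy problem. The sketch below is a two-class, two-feature Fisher discriminant in pure Python; the feature values, class names, and function names are hypothetical. The actual pipeline works on feature vectors with more than a thousand dimensions and 67 classes, where a library implementation of LDA would normally be used instead.

```python
from typing import List, Sequence, Tuple

Vector = Tuple[float, float]


def mean(points: Sequence[Vector]) -> Vector:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def scatter(points: Sequence[Vector], mu: Vector) -> List[List[float]]:
    """2x2 scatter matrix of one class around its own mean."""
    sxx = sxy = syy = 0.0
    for x, y in points:
        dx, dy = x - mu[0], y - mu[1]
        sxx += dx * dx
        sxy += dx * dy
        syy += dy * dy
    return [[sxx, sxy], [sxy, syy]]


def project(w: Vector, x: Vector) -> float:
    return w[0] * x[0] + w[1] * x[1]


def fisher_direction(class_a: Sequence[Vector],
                     class_b: Sequence[Vector]) -> Tuple[Vector, float]:
    """Return w = S_W^{-1} (mu_b - mu_a) and a midpoint threshold on w . x."""
    mu_a, mu_b = mean(class_a), mean(class_b)
    sa, sb = scatter(class_a, mu_a), scatter(class_b, mu_b)
    s = [[sa[i][j] + sb[i][j] for j in range(2)] for i in range(2)]  # intra-class scatter
    det = s[0][0] * s[1][1] - s[0][1] * s[1][0]
    if abs(det) < 1e-12:
        raise ValueError("intra-class scatter matrix is singular")
    inv = [[s[1][1] / det, -s[0][1] / det], [-s[1][0] / det, s[0][0] / det]]
    d = (mu_b[0] - mu_a[0], mu_b[1] - mu_a[1])
    w = (inv[0][0] * d[0] + inv[0][1] * d[1], inv[1][0] * d[0] + inv[1][1] * d[1])
    threshold = 0.5 * (project(w, mu_a) + project(w, mu_b))
    return w, threshold


def predict(w: Vector, threshold: float, x: Vector) -> int:
    """Return 1 for class_b and 0 for class_a."""
    return 1 if project(w, x) > threshold else 0


# Made-up 2-D "features" for two scene classes.
kitchen = [(1.0, 2.0), (2.0, 3.0), (3.0, 3.0), (2.0, 1.0)]
office = [(6.0, 5.0), (7.0, 8.0), (8.0, 6.0), (7.0, 7.0)]
w, threshold = fisher_direction(kitchen, office)
predictions = [predict(w, threshold, x) for x in kitchen + office]
```

Projecting onto `w` collapses each feature vector to a single number while keeping the two classes apart, which is the dimensionality-reduction role LDA plays before classification.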

This method is followed across four different clients, and their results are averaged to obtain a consolidated performance. The implementation runs for 10 communication rounds of 10 epochs each, for a total of 100 epochs. The average performance of all four clients is collected by the server and the model performance is analyzed as depicted in Fig. 4.
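The round structure described above can be sketched with a toy model. The example assumes a scalar linear model trained by gradient descent and a sample-weighted average on the server; the learning rate, the client data, and the function names are hypothetical, and only the 10 rounds, 10 local epochs, and four clients come from the text.

```python
from typing import List, Tuple

ROUNDS = 10            # communication rounds, as stated in the text
LOCAL_EPOCHS = 10      # local epochs per round, as stated in the text
LEARNING_RATE = 0.01   # hypothetical value chosen for the toy problem

Dataset = List[Tuple[float, float]]  # (x, y) pairs


def local_train(w: float, data: Dataset, epochs: int, lr: float) -> float:
    """Full-batch gradient descent on mean squared error for y ~ w * x."""
    for _ in range(epochs):
        grad = sum(2.0 * (w * x - y) * x for x, y in data) / len(data)
        w -= lr * grad
    return w


def run_federated(clients: List[Dataset], rounds: int = ROUNDS,
                  epochs: int = LOCAL_EPOCHS, lr: float = LEARNING_RATE) -> float:
    """Each round: broadcast the global weight, train locally, average on the server."""
    w_global = 0.0
    sizes = [len(data) for data in clients]
    for _ in range(rounds):
        local_ws = [local_train(w_global, data, epochs, lr) for data in clients]
        w_global = sum(w * n for w, n in zip(local_ws, sizes)) / sum(sizes)
    return w_global


# Four hypothetical clients whose data all follow y = 3x.
clients = [
    [(x, 3.0 * x) for x in (1.0, 2.0, 3.0)],
    [(x, 3.0 * x) for x in (2.0, 4.0)],
    [(x, 3.0 * x) for x in (1.0, 5.0)],
    [(x, 3.0 * x) for x in (0.5, 1.5, 2.5, 3.5)],
]
w_final = run_federated(clients)
total_local_epochs = ROUNDS * LOCAL_EPOCHS
```

Each client performs `ROUNDS * LOCAL_EPOCHS` = 100 local epochs in total, but the clients exchange information with the server only 10 times, once per round.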

The classifiers used for each model were evaluated on performance metrics including accuracy, precision, recall, F1-Score, Zero-One Loss (ZOL), Matthews Correlation Coefficient (MCC), and Kappa Statistics.

Where,

-

Accuracy is the proportion of correct instances that are classified. It can be defined as in Eq. 2.

-

Validation accuracy measures how well a trained DNN generalizes to unseen data by evaluating performance on a separate validation set. A high validation accuracy indicates good generalization, while a large gap from training accuracy suggests overfitting.

It can be represented by Eq. 3.

where,

N = Total validation samples.

y = True label for the ith sample.

z = Predicted output for the ith sample.

L(y, z) = Selected loss function.

-

Precision is the proportion of the true positive predictions among all the positive predictions. It can be calculated according to the Eq. 4.

-

Recall is the proportion of the true positives among all the actual positives. Equation 5 describes the loss calculation.

-

F1-Score is the harmonic mean of precision and recall. F1-Score can be evaluated using the formula in Eq. 6.

-

ZOL is the measure of incorrect predictions. The lower the ZOL, the better it is. Equation 7 defines the calculation of ZOL.

N = Total samples.

y = True label of the ith sample.

z = Predicted label of the ith sample.

1(y ≠ z) = Indicator function: 1 - prediction is incorrect and

0 - prediction is correct.

-

Validation loss is the measure of the performance of a trained DNN on unknown data. The lower the validation loss, the better the generalization. Equation 8 demonstrates the formula for the calculation of validation loss.

-

MCC is a balanced metric that considers false positives, true positives, false negatives, and true negatives. It can be derived using Eq. 9.

Where,

TP (True Positives) = Correctly predicted positive cases.

TN (True Negatives) = Correctly predicted negative cases.

FP (False Positives) = Incorrectly predicted positive cases.

FN (False Negatives) = Incorrectly predicted negative cases.

-

Kappa Statistics is a measure of correlation between predicted and actual classifications. It is measured using Eq. 10.
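The metrics listed above can be computed directly from the four confusion-matrix counts. The sketch below uses the standard textbook definitions for the binary case; the function name `classification_metrics` and the example counts are assumptions made for illustration and are not results from this study. Multi-class problems such as indoor scene classification typically average these per-class quantities.

```python
import math

def classification_metrics(tp, tn, fp, fn):
    """Standard binary metrics from confusion counts.

    Assumes non-degenerate counts (no zero denominators).
    """
    n = tp + tn + fp + fn
    accuracy = (tp + tn) / n
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    # Zero-one loss: fraction of samples with y != z.
    zol = (fp + fn) / n
    # Matthews correlation coefficient.
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    # Cohen's kappa: observed agreement corrected for chance agreement.
    p_observed = accuracy
    p_expected = ((tp + fp) * (tp + fn) + (tn + fn) * (tn + fp)) / n ** 2
    kappa = (p_observed - p_expected) / (1 - p_expected)
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "zol": zol,
        "mcc": mcc,
        "kappa": kappa,
    }

# Hypothetical counts, for illustration only.
metrics = classification_metrics(tp=40, tn=45, fp=5, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.4f}")
```

Note that ZOL and accuracy always sum to 1, so reporting both is a consistency check rather than independent evidence.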

Results and discussions

The results of the indoor image classification are divided into four subsections. First, features are extracted from the indoor images using various DL models, and their performance on several metrics is compared with that of the proposed MultiData architecture. Second, the DNN architectures are compared with the proposed MultiData model on several metrics. Third, the client-wise training and validation plots for the IID dataset are presented and explained, followed by the client-wise training and validation plots for the non-IID dataset. Finally, all the results are combined in a table of comparative results.

Performance analysis of the proposed vs. other DL models

Features of indoor scenes are extracted using various DL models, namely VGG16, VGG19, ResNet152, InceptionResNet, MobileNet, DenseNet, and the proposed MultiData model. Classifiers were then applied to the extracted features to classify the indoor images. The classifiers used in the experiment were Decision Tree (DT), Logistic Regression (LR), Random Forest (RF), Extra Trees (ET), Histogram-Based Gradient Boosting, and Multi-Layer Perceptron.

- VGG16: a deep convolutional neural network (CNN) architecture that has 16 layers and uniform 3 × 3 convolutional filters. It is effective for image feature extraction and is widely used in transfer learning for various computer vision tasks.

- VGG19: an extension of the VGG16 architecture with 19 layers. It keeps the 3 × 3 convolutional filters and adds depth for enhanced feature extraction.

- ResNet152: a deep CNN with 152 layers that uses residual (skip) connections to mitigate the vanishing gradient problem. It is a highly effective architecture for image recognition and transfer learning, combining great depth with efficient training.

- InceptionResNet: combines the Inception architecture with residual connections to improve training accuracy and efficiency in deep networks. It attains enhanced performance in image recognition.

- MobileNet: a lightweight CNN designed for efficient on-device applications. It reduces model size and computational complexity.

- DenseNet: a deep CNN in which each layer receives the feature maps of all preceding layers, which promotes feature reuse, better efficiency, and enhanced performance. It mitigates the vanishing gradient problem and also reduces the number of parameters.

Table 4 summarizes the performance comparison of the various models. The proposed MultiData model outperforms all the other models on every performance metric.

The tabular results show that the VGG16 and VGG19 feature extractors performed well, with an accuracy of more than 85%. ResNet152 and InceptionResNet showed lower overall performance, attaining around 60% accuracy with some classifiers. MobileNet and DenseNet showed moderate performance, with LR and RF attaining the better results. The proposed MultiData model performs markedly better across classifiers, attaining an accuracy close to 100%.

An analysis of the best classifier for each feature extractor shows that the LR, RF, ET, and Histogram-Based Gradient Boosting models perform consistently well with all the feature extractors, whereas the DT and Multi-Layer Perceptron (MLP) classifiers are less effective, with lower accuracy. Overall, the MultiData model achieves near-perfect accuracy across all classifiers.

Performance analysis of the proposed vs. other DNN models

After the comparison with the DL feature extractors, the proposed MultiData model was compared with several DNN models: DenseNet201, InceptionResNetV2, MobileNetV2, ResNet152V2, VGG16, and VGG19. Performance was measured in terms of accuracy, validation accuracy, loss, and validation loss. The comparison of the proposed model with these DNN models is presented in Table 5.
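As a brief illustration of how validation accuracy and validation loss are obtained from a model's outputs, the sketch below averages a per-sample loss and a correctness indicator over N samples. Categorical cross-entropy is assumed as the loss function because the text does not specify which loss was selected; the function name `validation_metrics` and the example probabilities are likewise hypothetical.

```python
import math

def validation_metrics(probabilities, labels):
    """Return (accuracy, mean cross-entropy loss) over a validation set.

    probabilities: one list of class probabilities per sample.
    labels: the true class index for each sample.
    """
    n = len(labels)
    correct = 0
    total_loss = 0.0
    for probs, y in zip(probabilities, labels):
        # Predicted class: index of the largest probability.
        predicted = max(range(len(probs)), key=lambda k: probs[k])
        correct += int(predicted == y)
        # Cross-entropy for one sample: -log of the true-class probability.
        total_loss += -math.log(probs[y])
    return correct / n, total_loss / n

# Made-up outputs for three samples and three classes.
example_probs = [[0.7, 0.2, 0.1], [0.1, 0.8, 0.1], [0.3, 0.3, 0.4]]
example_labels = [0, 1, 0]
val_accuracy, val_loss = validation_metrics(example_probs, example_labels)
print(val_accuracy, val_loss)
```

A validation loss near zero therefore requires the model to assign probability close to 1 to the correct class on almost every validation sample.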

The following observations can be drawn from the table. Among the baseline models, VGG16 and VGG19 attained the highest validation accuracies, 87.47% and 85.21% respectively, and their low validation loss indicates strong generalization. DenseNet201 and ResNet152V2 demonstrate moderate validation accuracies of 72.92% and 60.15% respectively but show a relatively high validation loss. InceptionResNetV2 and MobileNetV2 perform the worst, attaining a validation accuracy below 60% and moderately high validation loss values. In contrast, the MultiData model achieves the best accuracy and validation accuracy, 99.99% and 99.93% respectively, with a validation loss close to zero. The performance of the models is plotted in Fig. 5 for clearer insight.

Figure 6 shows the performance of DenseNet201, InceptionResNetV2, MobileNetV2, ResNet152V2, VGG16, VGG19, and the proposed MultiData model during training. MultiData performs best, with the highest accuracy, precision, and recall, and its lower loss further supports its superior performance. Figure 4 is a graphical depiction of the validation results of the models as compared to the proposed MultiData model.

After training, the models are compared during validation. The proposed model's accuracy, precision, and recall are close to 100% in validation, and its loss and validation loss are almost negligible. Thus, the proposed model is the best-performing among all the DL models.

Federated learning based proposed ecosystem for IID dataset

Having outperformed the DL and DNN models, the proposed model was then evaluated with four clients in a federated learning ecosystem, first on an IID dataset and then on a non-IID dataset.
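The difference between the two settings can be sketched with a toy split of a labeled dataset across four clients. The text does not describe how the non-IID split was constructed, so the label-skewed sharding below is an assumption chosen for illustration; `iid_partition` and `label_skew_partition` are hypothetical helper names, and the 100 labels are synthetic.

```python
import random

def iid_partition(labels, n_clients, seed=0):
    """Shuffle all sample indices and deal them out evenly to the clients."""
    indices = list(range(len(labels)))
    random.Random(seed).shuffle(indices)
    return [indices[c::n_clients] for c in range(n_clients)]

def label_skew_partition(labels, n_clients):
    """Sort indices by label and cut contiguous shards (non-IID)."""
    indices = sorted(range(len(labels)), key=lambda i: labels[i])
    shard = len(indices) // n_clients
    parts = [indices[c * shard:(c + 1) * shard] for c in range(n_clients)]
    # Any leftover samples go to the last client.
    parts[-1].extend(indices[n_clients * shard:])
    return parts

# Synthetic dataset: 4 classes with 25 samples each.
labels = [k for k in range(4) for _ in range(25)]
iid_parts = iid_partition(labels, 4)
skew_parts = label_skew_partition(labels, 4)

for name, parts in (("IID", iid_parts), ("non-IID", skew_parts)):
    classes_per_client = [sorted({labels[i] for i in p}) for p in parts]
    print(name, classes_per_client)
```

In the label-skewed split each client sees a single class, which is an extreme case; practical non-IID benchmarks often use milder skew.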

For the IID dataset, the accuracy, precision, and recall during training are approximately 100% for all four clients, while the clients' loss is found to be 0%. All the training-time performance parameters of the clients are shown in Fig. 7.

The blue bar represents the accuracy, the green bar represents the precision, the yellow line represents the recall, and the red line represents the loss function of the various clients. During the validation, the clients’ performances are evaluated based on the same parameters, i.e. accuracy, precision, recall, and loss.

Figure 8 shows the client-wise validation plots for the IID dataset. After a certain number of epochs, all four clients converge in accuracy, precision, and recall. Although the metrics vary from client to client, the final average over all four clients after 100 epochs shows very high accuracy, precision, and recall. Here, one communication round comprises 10 epochs, and 10 communication rounds are used, for a total of 100 epochs. Thus, the proposed federated learning based ecosystem performs exceptionally well on IID datasets.

Federated learning based proposed ecosystem for non-IID dataset

For the non-IID dataset, the client-wise training plots are generated using the same evaluation parameters. The blue line represents the accuracy, the green line represents the precision, the yellow line represents the recall, and the red line represents the loss, each expressed as a percentage.

During training, all four clients converge to exceptionally high accuracy, precision, and recall after 10 communication rounds of 10 epochs each. Of all the parameters, the loss varies the most; even so, the average loss across the four clients is close to zero, as shown in Fig. 9.

The clients' performance during validation is plotted in Fig. 10. All the clients behave consistently, converging in accuracy, precision, and recall.

Discussions

Table 6 presents the final results, obtained by averaging and consolidating all the performance figures of the proposed model.

The results demonstrate that the proposed MultiData model attains near-perfect accuracy, precision, and recall in the training and validation phases. All four clients perform excellently on both the IID and non-IID datasets, which shows the strong ability of the model to classify indoor images in both scenarios.

Conclusion and future research directions

Classifying indoor images is important in many respects, and considerable research has been devoted to identifying the best method for doing so. This study proposes and implements the MultiData architecture, which is tested and compared with various DL models and DNN models, as well as in an FL environment. The experiments also cover both IID and non-IID datasets. The results show that the proposed MultiData model performs best when compared with various DL models. It also outperforms various DNN models on all the performance metrics.

Beyond the DL and DNN comparisons, the proposed model is also evaluated in a federated learning environment. Its client-wise performance is assessed in both the training and validation phases, and the client average shows strong results for both IID and non-IID datasets. In all the comparisons, the proposed MultiData model achieves accuracy, precision, and recall close to 100%, with negligible loss.

This work is a computational experiment in which performance is calculated and analyzed offline rather than in real time. Analyzing the model's real-time performance, for example by deploying it on low-memory devices, is left as future work.

Data availability

The dataset is available at [https://www.kaggle.com/datasets/itsahmad/indoor-scenes-cvpr-2019].

Change history

06 October 2025

The original online version of this Article was revised: The Funding section in the original version of this Article was incomplete. The Funding section now reads: “Open access funding provided by Parul University. This research was also funded by Taif University, Taif, Saudi Arabia (TU-DSPP-2024-17).” The original Article has been corrected.

References

Khan, S. D. & Othman, K. M. Indoor scene classification through dual-stream deep learning: a framework for improved scene Understanding in robotics. Computers 13 (5), 121 (2024).

Soroush, R. & Baleghi, Y. NIR/RGB image fusion for scene classification using deep neural networks. Visual Comput. 39 (7), 2725–2739 (2023).

Thepade, S. D. & Idhate, M. E. Machine Learning-Based Scene Classification Using Thepade’s SBTC, LBP, and GLCM. in Futuristic Trends in Networks and Computing Technologies: Select Proceedings of Fourth International Conference on FTNCT 2021. Springer. (2022).

Lei, Y. et al. Research on indoor robot navigation algorithm based on deep reinforcement learning. in 2023 International Conference on Image Processing, Computer Vision and Machine Learning (ICICML). IEEE. (2023).

Ahmed, M. W. & Jalal, A. Indoor Scene Classification Using RGB-D Data: A Vision Transformer and Conditional Random Field Approach.

Pereira, R. et al. A deep learning-based global and segmentation-based semantic feature fusion approach for indoor scene classification. Pattern Recognit. Lett. 179, 24–30 (2024).

Tran, H. N. et al. Enhancing semantic scene segmentation for indoor autonomous systems using advanced attention-supported improved UNet. Signal. Image Video Process. 19 (2), 190 (2025).

Yue, H. et al. Recognition of Indoor Scenes Using 3D Scene Graphs (IEEE Transactions on Geoscience and Remote Sensing, 2024).

Govea, J., Gaibor-Naranjo, W. & Villegas-Ch, W. Securing critical infrastructure with blockchain technology: an approach to Cyber-Resilience. Computers 13 (5), 122 (2024).

Wen, J. et al. A survey on federated learning: challenges and applications. Int. J. Mach. Learn. Cybernet. 14 (2), 513–535 (2023).

Banabilah, S. et al. Federated learning review: fundamentals, enabling technologies, and future applications. Inf. Process. Manag. 59 (6), 103061 (2022).

Blanco-Justicia, A. et al. Achieving security and privacy in federated learning systems: survey, research challenges and future directions. Eng. Appl. Artif. Intell. 106, 104468 (2021).

Chen, R. et al. Service delay minimization for federated learning over mobile devices. IEEE J. Sel. Areas Commun. 41 (4), 990–1006 (2023).

Bharati, S. et al. Federated learning: applications, challenges and future directions. Int. J. Hybrid. Intell. Syst. 18 (1–2), 19–35 (2022).

Song, C. et al. Semantic-embedded similarity prototype for scene recognition. Pattern Recogn. 155, 110725 (2024).

Alazeb, A. et al. Remote intelligent perception system for multi-object detection. Front. Neurorobotics. 18, 1398703 (2024).

Qiao, D. et al. AMFL: Resource-efficient adaptive metaverse-based federated learning for the human-centric augmented reality applications. IEEE Trans. Neural Networks Learn. Syst. 36(5), 7888–7902 (2024).

Fadhel, M. A. et al. Comprehensive systematic review of information fusion methods in smart cities and urban environments. Inform. Fusion 102317 (2024).

Chang, Y. et al. SAR image matching based on rotation-invariant description. Sci. Rep. 13 (1), 14510 (2023).

Li, P. Machine Learning Techniques for Pattern Recognition in High-Dimensional Data Mining. arXiv preprint arXiv:2412.15593, (2024).

Liu, T., Zheng, P. & Bao, J. Deep learning-based welding image recognition: A comprehensive review. J. Manuf. Syst. 68, 601–625 (2023).

Lee, J. & Kim, M. Rare data image classification system using Few-Shot learning. Electronics 13 (19), 3923 (2024).

Sen, A. & Bilgili, A. Indoor Mapping Using Machine Learning Based Classification of 3D Point Clouds. in 18th International Conference on Location Based Services. (2023).

Soudy, M., Afify, Y. & Badr, N. RepConv: A novel architecture for image scene classification on intel scenes dataset. Int. J. Intell. Comput. Inform. Sci. 22 (2), 63–73 (2022).

Zhu, X. et al. Scene-aware label graph learning for multi-label image classification. in Proceedings of the IEEE/CVF International Conference on Computer Vision. (2023).

Alenzi, Z. et al. A semantic classification approach for indoor robot navigation. Electronics 11 (13), 2063 (2022).

Roy, A. & Chakraborty, S. Support vector machine in structural reliability analysis: A review. Reliab. Eng. Syst. Saf. 233, 109126 (2023).

Ibrahim, H. B. et al. Smart monitoring of road pavement deformations from UAV images by using machine learning. Innovative Infrastructure Solutions. 9 (1), 16 (2024).

Cheng, K. Prediction of emotion distribution of images based on weighted K-nearest neighbor-attention mechanism. Front. Comput. Neurosci. 18, 1350916 (2024).

Salehin, I. et al. AutoML: A systematic review on automated machine learning with neural architecture search. J. Inform. Intell. 2 (1), 52–81 (2024).

Xu, J. et al. Joint input and output space learning for multi-label image classification. IEEE Trans. Multimedia. 23, 1696–1707 (2020).

Ran, Y. et al. Scene classification method based on multi-scale convolutional neural network with long short-term memory and Whale optimization algorithm. Remote Sens. 16 (1), 174 (2023).

Anami, B. S. & Sagarnal, C. V. A fusion of hand-crafted features and deep neural network for indoor scene classification. Malaysian J. Comput. Sci. 36 (2), 193–207 (2023).

Liu, L. Scene classification in the environmental Art design by using the lightweight deep learning model under the background of big data. Comput. Intell. Neurosci. 2022 (1), 9066648 (2022).

Tyagi, B., Nigam, S. & Singh, R. A review of deep learning techniques for crowd behavior analysis. Arch. Comput. Methods Eng. 29 (7), 5427–5455 (2022).

Chen, L. et al. Review of image classification algorithms based on convolutional neural networks. Remote Sens. 13 (22), 4712 (2021).

Alam, M. S. et al. A review of recurrent neural network based camera localization for indoor environments. IEEE Access. 11, 43985–44009 (2023).

Lu, Y. et al. Generative adversarial networks (GANs) for image augmentation in agriculture: A systematic review. Comput. Electron. Agric. 200, 107208 (2022).

Sayed, A. N., Himeur, Y. & Bensaali, F. Deep and transfer learning for Building occupancy detection: A review and comparative analysis. Eng. Appl. Artif. Intell. 115, 105254 (2022).

Himeur, Y. et al. Federated learning for computer vision. arXiv preprint arXiv:2308.13558, (2023).

Wu, J. et al. Topology-aware federated learning in edge computing: A comprehensive survey. ACM Comput. Surveys. 56 (10), 1–41 (2024).

Abdel-Basset, M. et al. Privacy-preserved learning from non-iid data in fog-assisted iot: A federated learning approach. Digit. Commun. Networks. 10 (2), 404–415 (2024).

Liu, W. et al. An efficient federated learning method based on enhanced classification-GAN for medical image classification. Multimedia Syst. 31 (1), 1–17 (2025).

Yu, H. S., Jhuang, Y. C. & Leu, J.-S. Image Recognition-Based Indoor Positioning System Using Federated Learning. in 2024 IEEE 100th Vehicular Technology Conference (VTC2024-Fall). IEEE. (2024).

Abir, M. R., Zaman, A. & Mursalin, S. Efficiency measurement of FL algorithms for image classification. GSC Adv. Res. Reviews. 18 (3), 356–366 (2024).

Chen, H. Y. & Chao, W. L. On bridging generic and personalized federated learning for image classification. arXiv preprint arXiv:2107.00778, (2021).

Nazir, S. & Kaleem, M. Federated learning for medical image analysis with deep neural networks. Diagnostics 13 (9), 1532 (2023).

Li, L., Xie, N. & Yuan, S. A federated learning framework for breast cancer histopathological image classification. Electronics 11 (22), 3767 (2022).

Abunadi, I. et al. Federated learning with blockchain assisted image classification for clustered UAV networks. Comput. Mater. Contin. 72, 1195–1212 (2022).

Ahmed, S. F. et al. Deep learning modelling techniques: current progress, applications, advantages, and challenges. Artif. Intell. Rev. 56 (11), 13521–13617 (2023).

Gupta, P. Presence detection for lighting control with ML models using RADAR data in an indoor environment. (2021).

Qamar, F. et al. Federated learning for millimeter-wave spectrum in 6G networks: applications, challenges, way forward and open research issues. PeerJ Comput. Sci. 10, e2360 (2024).

Savazzi, S., Nicoli, M. & Rampa, V. Federated learning with cooperating devices: A consensus approach for massive IoT networks. IEEE Internet Things J. 7 (5), 4641–4654 (2020).

https://www.kaggle.com/datasets/itsahmad/indoor-scenes-cvpr-2019. accessed 3 Apr 2025.

Balakrishnama, S. & Ganapathiraju, A. Linear discriminant analysis-a brief tutorial. Institute for Signal and Information Processing 18, 1–8 (1998).

Acknowledgements

The authors extend their appreciation to Taif University, Saudi Arabia, for supporting this work through project number (TU-DSPP-2024-17).

Funding

Open access funding provided by Parul University. This research was also funded by Taif University, Taif, Saudi Arabia (TU-DSPP-2024-17).

Author information

Contributions

M.D. and D.G. wrote the initial draft, V.K. performed the supervision, S.J. and R.A. wrote the final draft, P.J. wrote the methodology and provided the software. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Dutta, M., Gupta, D., Khullar, V. et al. Hybrid pre trained model based feature extraction for enhanced indoor scene classification in federated learning environments. Sci Rep 15, 30803 (2025). https://doi.org/10.1038/s41598-025-16673-3

DOI: https://doi.org/10.1038/s41598-025-16673-3