Abstract

Personalized out-of-hospital management could significantly improve quality of life of breast cancer patients. We aimed to evaluate the accuracy, effectiveness, safety, personalization and emotional care of Large Language Models (LLMs) in the out-of-hospital management of breast cancer. We established a data cleaning and classification pipeline to summarize three major scenarios of out-of-hospital management. Authentic electronic health record (EHR) datasets for data collection were generated using 10 patients with ID information masked from Breast Cancer Database in Affiliated Sir Run Run Shaw Hospital, Zhejiang University. Then we matched the EHR datasets with three out-of-hospital management scenarios as 100 virtual patients (VPs) for LLMs to perform the conversation generation using GPT-o3 and DeepSeek-R1. Further, we incorporated four human specialists to rate the responses of LLMs in five dimensions using Likert scale. As of April 1, 2025, the 4 evaluator specialists rated the conversations of LLMs and 100 VPs. The results demonstrate that both DS-R1and GPT-o3 performed well, with scores primarily concentrated at 3 and 4 points. We revealed statistically significant differences between DS-R1and GPT-o3 in accuracy, personalization, and emotional care (P < 0.01). However, the P-values for effectiveness and safety were 0.231 and 0.086. Furthermore, DS-R1generated more tokens (approximately 1.8 times) in identical time with less economic cost, and it also had shorter response time than GPT-o3. GPT-o3 and DS-R1 demonstrated personalized, empathetic, and accurate performance in the out-of-hospital management for breast cancer patients. DS-R1 had better overall performance than GPT-o3, especially in personalization, emotional care and accuracy. More research is warranted in the development specific knowledge embedding LLMs to reduce the detractors like hallucinatory or verbose responses.

Similar content being viewed by others

Introduction

Breast cancer has been a critical threat to public health due to its high incidence and heavy social economic burden1. Out-of-hospital management for breast cancer consists of patient education, psychological management, and disease-related queries. Appropriate out-of-hospital management can effectively reduce the recurrence rate among low-risk breast cancer patients2, but it still needs improvements in availability, personalization, and other areas3. Home-based multidimensional survivorship programs for breast cancer survivors (HBMS), which are one kind of out-of-hospital management, have been proposed to improve the quality of life (QoL) of breast cancer patients via education and training provided by health care professionals, but the benefit is still restricted by availability and cost4. Therefore, more accessible, low-cost and personalized out-of-hospital management for breast cancer patients is urgently needed.

The emergence of Large Language Models (LLMs) has revolutionized the care for breast cancer. Chatbots based on LLMs were developed to collect the data of PROs5, to respond top cancer-related search queries6, and to assist in the tumor board decision making7. For instance, Pan et al. evaluated the understandability of 4 AIs (ChatGPT version 3.5 (OpenAI), Perplexity (Perplexity.AI), Chatsonic (Writesonic), and Bing AI (Microsoft)) regarding 5 most searched queries for skin, lung, breast, colorectal, and prostate cancer. The results showed that 4 AIs had accurate responses, but had limited actionability6. Similarly, another study reported the feasibility of a medical LLM in assisting disease diagnosis. This medical LLM demonstrated superior performance compared to other baseline LLMs and specialized models8. However, personalized out-of-hospital management, like education of limb rehabilitation, psychological management, and other breast cancer related queries, remained unsolved in clinical practice due to the distance between the patients and care-givers. Could the LLMs like DeepSeek-R1 (DS-R1), GPT-o3 powered by sophisticated reasoning models, be the key to solving these problems in the era of AIs? How is the performance of these reasoning enhanced LLMs according to human physicians? Till now, no study has answered these questions.

To explore the role of reasoning-enhanced LLMs in out-of-hospital management for breast cancer, we conducted a multi-phase randomized single-blind study. First, we established a data cleaning and classification pipeline to summarize three major scenarios of out-of-hospital management, and we also constructed authentic EHR datasets for data collection. Second, we matched the EHR datasets with three out-of-hospital management scenarios to create virtual patients (VP) (N = 10) for LLMs to perform the conversation generation using GPT-o3 and DS-R1. Third, we recruited four human specialists to evaluate the responses of LLMs in five dimensions, which highlighted the potential of involved LLMs in the scenarios of out-of-hospital management. To the best of our knowledge, this is the first study evaluating the role of reasoning enhanced LLMs in out-of-hospital management for breast cancer.

Method

Ethics

This study was approved and supervised by the Institutional Review Board of SRRSH (IRB#: 20210910-30). All the patients enrolled in this study were fully informed and consented of their rights, with their identification information masked. The study was performed in accordance with the Declaration of Helsinki.

Overview

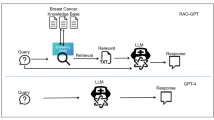

As shown in Fig. 1, the methodology consists of three steps: Data Collection, Conversation Generation, and Human Evaluation.

First, in the data collection phase, we established a data cleaning and classification pipeline to summarize a question dataset from publicly available breast cancer-related dialogue datasets. This dataset covers three typical out-of-hospital management application scenarios: disease consultation, rehabilitation guidance, and psychological management. Additionally, we selected authentic patient data with ID information masked from the Breast Cancer Database of Affiliated Sir Run Run Shaw Hospital, Zhejiang University to construct an EHR dataset.

Based on these two datasets, in the conversation generation phase, we matched each patient’s EHR data with several sets of questions corresponding to the three out-of-hospital management scenarios. Using the EHR data and its corresponding question set, we designed a prompt engineering framework that drives LLMs to act as VPs, simulating out-of-hospital management scenarios and engaging in multi-round dialogues with GPT-o3 and DS-R1(released on 2025.01.20). This process generates a question-and-answer dataset that mimics real-world interactions.

In the human evaluation phase, the question-and-answer datasets generated by GPT-o3 and DS-R1were randomly and evenly divided into two parts, each assigned to two groups of evaluators. A Likert scale9 was used to obtain subjective evaluations across five dimensions, including effectiveness, safety, accuracy, personalization, and emotional care. Further details of these three steps will be elaborated in the following three subsections.

We have open-sourced the code, anonymized dataset, and evaluation results used in this experiment on GitHub (https://github.com/Maxin-C/LLM-Evaluation).

Data collection

In this section, we aim to identify frequently mentioned issues by patients during the out-of-hospital management of breast cancer and obtain real patient EHR data to facilitate subsequent steps where the model is required to act as a virtual patient interacting with the LLM under evaluation. For the construction of the common issues dataset, we selected the Huatuo-BC dialogue dataset extracted from Huatuo-26 M as the raw data. Huatuo-26 M was derived from Qianwen Health, offering more than 26 million real-world doctor-patient dialogues. Huatuo-BC is a breast cancer-related subset of this dataset, comprising 208 K question-answer pairs. First, by constructing a dataset filtering prompt (Appendix A1), we used the API (Application Programming Interface) to instruct DeepSeek-V3-0324 (DeepSeek-V3) to extract dialogue content related to out-of-hospital breast cancer management from the original dataset, resulting in 29 K question-answer pairs. Then, by clearly defining three scenarios—disease consultation, rehabilitation guidance, and psychological management—we designed classification and summarization prompts (Appendix A2) to drive DeepSeek-V3 to categorize the question-answer data. Ultimately, we obtained 256, 309, and 350 common question datasets for the three scenarios, respectively.

Ten patient data with complete indicators and different conditions (with Stage as the standard) were extracted as the background information of EHR information to support personalized question and answer. The main data distribution of the EHR dataset is shown in Table 1.

Conversation generation

The process of conversation generation is illustrated in Fig. 2. Based on the question dataset and the EHR dataset, we matched the questions with patient data, assigning 10 sets of questions to each patient, with each set including one question from each of the three clinical scenarios. Considering that patients at different stages may raise different questions, we designed a question matching prompt (Appendix A3) to instruct DeepSeek-V3 to identify the 10 most likely sets of questions based on the patient’s clinical background, thereby achieving dataset pairing. Through this process, we obtained background information for 100 VPs, which includes EHR data and one likely question from each of the three scenarios.

For each set of virtual patient information, the virtual patient prompt (Appendix A4) can drive DeepSeek-V3 to act as a patient undergoing post-operative out-of-hospital management for breast cancer and engage in conversation with the model under evaluation. To make the virtual patient’s questions more closely resemble the conversation process of real patients, this study extracted a total of 1,775 dialogue histories from a WeChat group chat focused on post-operative management for breast cancer patients, spanning from June 2023 to January 2025. After removing private information, these dialogues were used as few-shot inputs in the virtual patient prompt, instructing the model to mimic the conversation style in its outputs. Upon receiving the needs raised by the virtual patient, the model under evaluation will act as a doctor providing out-of-hospital management services through the virtual doctor prompt (Appendix A5). The virtual patient and virtual doctor will engage in multiple rounds of conversation. To avoid meaningless conversations, we additionally used a conversation monitoring prompt (Appendix A6) to instruct DeepSeek-V3 to determine whether the current content has addressed the needs raised by the virtual patient. If the judgment is affirmative or the conversation exceeds 8 rounds, the conversation will be terminated, and the next conversation will begin. In this experiment, the reasoning parameters of the models were kept consistent, with the temperature set to 0.1 and top-p set to 1, to ensure the stability of text generation and enhance the reproducibility of the experiment.

Human evaluation

Based on 100 sets of virtual patient information, evaluating GPT-o3 and DS-R1 yielded 200 sets of conversations. Four breast doctors were invited to assess the dataset. The dataset was evenly and randomly divided into two parts, each containing 50 sets of conversations from GPT-o3 and 50 sets from DS-R1. Each part was evaluated by 2 doctors who were aware of the EHR data but unaware of the model sources. Since GPT-o3’s reasoning process is in English, which naturally distinguishes it from DS-R1’s content, a translation prompt (Appendix A7) was used to instruct DeepSeek-V3 to convert the English content into Chinese, and model responses were uniformly formatted as “Reasoning: … Answer: …”. Translated content (shown in Appendix B) was manually reviewed by non-evaluators with advanced English-Chinese bilingual competence (Master’s degree or higher) to ensure fidelity and mitigate potential misinterpretations. The data content was rendered into images using unified HTML rendering code for display. The models were evaluated using a five-point Likert scale9 (as shown in Table 2). The evaluation results were collected through electronic forms.

Result

Human evaluation result

We compiled the ratings of conversations from four evaluators and calculated the average scores of DS-R1 and GPT-o3 across five dimensions, as shown in Fig. 3a. The radar chart indicates that the mean values of DS-R1across all five dimensions are higher than those of GPT-o3. We tested the normality of the data distributions for both LLMs across the five dimensions using the Shapiro-Wilk test. The results showed that the data did not follow a normal distribution (P < 0.01). Therefore, we applied the Mann-Whitney U test, which revealed statistically significant differences between DS-R1and GPT-o3 in accuracy, personalization, and emotional care (P < 0.01). However, the P-values for effectiveness and safety were 0.231 and 0.086, respectively. Additionally, we used the Hodges-Lehmann estimator as a non-parametric measure of effect size to estimate the median difference in the data. Consistent with the results of the Mann-Whitney U test, the two LLMs showed statistically significant differences in accuracy, personalization, and emotional care (P < 0.01), while the p-values for effectiveness and safety were 0.231 and 0.086, respectively. Figure 3b shows the average evaluation scores from the four raters. The results indicate that, except for slight advantages of GPT-o3 over DS-R1 in Rater 1’s emotional care, Rater 2’s safety, Rater 3’s safety, and Rater 4’s effectiveness—none of which were statistically significant—DS-R1outperformed GPT-o3 in all other dimensions, consistent with the overall conclusion. We plotted the score distributions of the two LLMs across the five dimensions as bar charts, as shown in Fig. 3c. The results demonstrate that both models performed well, with scores primarily concentrated at 3 and 4 points. Furthermore, DS-R1 had a lower proportion of scores below 3 and a significantly higher proportion of scores at 4 compared to GPT-o3 across all five dimensions, indicating that DS-R1received more positive evaluations and a higher proportion of high scores.

Human evaluation results: (a) The average scores of LLMs, where the blue area and numbers represent DS-R1, and the yellow area and numbers represent GPT-o3. The labels with “*” indicate statistically significant differences in that dimension; (b) The average scores from each of the four raters. The color correspondence with the models remains consistent with (a), and the labels with “*” indicate statistically significant differences in that dimension.

Time and economic costs evaluation result

As shown in Fig. 4, the results indicate that whether measured per single-round dialogue or per entire conversation of a virtual patient, the number of tokens generated by DS-R1 is approximately 1.8 times that of GPT-o3. This suggests that, excluding differences in the number of rounds, DS-R1 tends to generate more characters for reasoning and explanation.

The verbose content, on one hand, makes the model’s responses harder to quickly scan and understand, preventing its effectiveness from achieving a statistically significant difference compared to GPT-o3 in human evaluation. On the other hand, despite having a lower per-token cost, the total economic expense of DS-R1 reaches about 1.6 times that of GPT-o3, which is counterintuitive.

Thanks to DS-R1’s faster inference speed, the time costs of the two LLMs are nearly identical even when generating more tokens. Moreover, DS-R1has a shorter total response time, enabling it to meet demands more quickly.

Discussion

In this study, we systematically evaluated the performance of mainstream LLMs in the scenarios of out-of-hospital management for breast cancer patients. We simulated 100 VPs from real breast cancer cases, and engaged multiple rounds of dialogues under out-of-hospital management scenarios with GPT-o3 and DS-R1. The performance of LLMs was evaluated in five dimensions, including effectiveness, safety, accuracy, personalization, and emotional care. The results showed that both LLMs had satisfactory performance in out-of-hospital management. Compared to GPT-o3, DS-R1 behaved better in all dimensions according to human specialists except in Rater 1’s emotional care, Rater 2’s safety, Rater 3’s safety, and Rater 4’s effectiveness. Also, DS-R1generated more tokens in identical time with less economic cost, and it also had shorter response time than GPT-o3. Therefore, this study suggested that LLMs could be deployed in the scenarios of out-of-hospital management for breast cancer patients, DS-R1seems to have better performance compared to GPT-o3.

The LLMs’ role in out-of-hospital management of cancer patients remains in debate. Our study suggested that the majority of human physicians rated LLMs’ responses at the score of 3, which means satisfactory performance in out-of-hospital management. However, still there are existing problems, like hallucinatory responses. During the evaluation, we occasionally encountered hallucinatory responses (accounting for 2.0%, 4/200), which could severely mislead patients and cause hazardous events. For instance, LLMs sometimes suggested a HER2 negative patient to receive target therapy, or suggested a stage 0 VP to receive chemotherapy in our study. Though the case is rare, it could result in irretrievable consequences. This is in accordance with another study employing GPT-3.5 and GPT-4. They conducted an intrinsic evaluation study rating 60 GPT-powered VP-clinician conversations to evaluate the clinical performance of LLMs and to rate the quality of dialogues and feedback. The result showed that the quality of LLMs-generated ratings of feedback is similar to human physicians, but it still has detractors like lower authenticity, verbose vocabulary and failure to mention important weaknesses or strengths10. Similar conclusions were also generated by other studies focusing on Alzheimer’s disease management11 and pain management12. To cope with the inaccuracy of response by LLMs, Ge et al. suggested using liver disease-specific LLM “LiVersa”, which enhance the LLMs with retrieval-augmented generation (RAG). The LiVersa demonstrated better performance than GPT-4 in answering hepatology-related questions13. Therefore, we believe in the promising future of LLMs in out-of-hospital management of cancer patients, however, before it could be universally deployed, we may need to address problems like hallucinatory responses. RAG specific LLM could be a future direction to improve the performance of LLMs in various medical scenarios.

Both GPT-o3 and DS-R1demonstrated substantial potential in assisting out-of-hospital management, but DS-R1 had better overall performance and less cost than GPT-o3 in our study. As newly emerged AI, DS-R1has little research in breast cancer, whereas GPT has the most applications among existing LLMs in multiple scenarios of the practice. One retrospective, cross-sectional study reported that over one-third recommendations for breast, prostate, and lung cancer by GPT-3.5 were not consistent with the standard care set provided by the National Comprehensive Cancer Network (NCCN)14, though the updated GPT-4 has significant improvement in accuracy and details of recommendations15. Another cross-sectional study assessed the response to the 5 most searched queries in Google by 4 mainstream AIs. GPT-3.5 demonstrated relatively high readability (DISCERN score) and understandability (PEMAT score), but relatively low actionability6. This is consistent with our findings that GPT has satisfied accuracy, personalization, safety, effectiveness and emotional care in out-of-hospital management according to human specialists, though it is inferior to DeepSeek-R1. Further, DS-R1generates more tokens than GPT-o3 at similar time, though it has higher cost in total. Compared to human being, LLMs application in remote care management could save tremendous resources16, however, few study make comparison among existing LLMs in the field of cost-effectiveness17. We believe that with the fast iteration of LLMs, the cost of chatbot derived from them will continuously decrease, with significantly increased efficacy.

Although LLMs have demonstrated promising applications in the out-of-hospital management for breast cancer, limitations are still exist. According to the human specialists, the responses of involved LLMs have moderate risk of misleading for the patients (Likert scale 2.92/2.77). The reason for the misleading risk could be derived from wrong suggestions based on VPs, which is consistent with previous literatures indicating the limited applicability of LLMs18,19. Deng et al. reported that GPT-4 has superiority over GPT-3.5 and Claude2 in terms of quality, relevance and applicability in the analyses of breast cancer cases, however, the applicability remains limited according to human raters18. Similarly, another study also reported LLM makes considerably fraudulent decisions at times, which could mislead multidisciplinary tumor board for breast cancer (MTB) decisions19. Therefore, LLMs are not yet ready for the full application in out-of-hospital management for breast cancer, more research is warranted in the improvement of accuracy of responses in future.

Our study indicated that LLMs could provide personalized, empathetic, and accurate suggestions in the out-of-hospital management for breast cancer patients. LLMs could identify the emotional requirement of the patients and provide support for the psychological problems. This is consistent with a previous study that chatbot based on GPT could generate empathetic, quality and readable responses to patient questions compared to human physicians in social media20. Another study reported that Chatbot “Vivibot” could deliver positive personalized psychology skills to young adults who have undergone cancer treatment, which could significantly reduce anxiety21. Therefore, LLMs could be a strong supporter of physicians as well as cancer patients during the treatment and out-of-hospital management.

Our study has significant advantages of randomized, and multi-phase study design, LLM-human physician evaluation and validation for the results. However, we also confess several limitations. First, we only evaluated two most up-to-dated reasoning enhanced LLMs, other LLMs like Grok3, were not included in the study. Second, only 10 VPs were simulated for the test, though over 100 question datasets were created, still the sample size is limited. Third, we included 4 human physicians participating in the evaluation of the responses from LLMs, inter-person heterogeneity could also affect the results. However, we employed Cohen’s Kappa test to reduce the potential bias. In Dataset A, the Cohen’s Kappa test results were 0.52 for DS-R1 scores (P < 0.01) and 0.68 for GPT-o3 scores (P < 0.01). In Dataset B, the results were 0.80 for DS-R1 scores (P < 0.01) and 0.54 for GPT-o3 scores (P < 0.01). Fourth, limited by the time of follow-up, we have no events of prognosis, which restricts our exploration of the association between LLM deployed out-of-hospital management and the prognosis of the disease. Last, our study is a single center study, additional validation is required.

Conclusion

Our findings demonstrate that LLMs like GPT-o3 and DS-R1 show significant promise for the out-of-hospital management of breast cancer patients. Both models delivered personalized and empathetic responses, with DS-R1 showing superior overall performance, particularly in personalization, emotional care, and accuracy. However, the critical barrier to their autonomous application is the risk of generating factually incorrect and dangerous medical advice. These “hallucinations,” while infrequent, pose an unacceptable threat to patient safety, limiting the current applicability of LLMs. Therefore, before LLMs can be safely integrated into the era of digital healthcare, future research must prioritize improving the safety and reliability of their answers. The focus must be on eliminating these critical errors, potentially through advanced methods like Retrieval-Augmented Generation (RAG), to increase their real-world clinical applicability.

Data availability

We have open-sourced the code, anonymized dataset, and evaluation results used in this experiment on GitHub (https://github.com/Maxin-C/LLM-Evaluation).

References

Kim, J. et al. Global patterns and trends in breast cancer incidence and mortality across 185 countries. Nat. Med. (2025).

Houzard, S. et al. Out-of-hospital follow-up after low risk breast cancer within a care network: 14-year results. Breast 23 (4), 407–412 (2014).

Dai, S. et al. Current status of Out-of-Hospital management of cancer patients and awareness of internet medical treatment: A questionnaire survey. Front. Public. Health. 9, 756271 (2021).

Cheng, K. K. F. et al. Home-based multidimensional survivorship programmes for breast cancer survivors. Cochrane Database Syst. Rev. 8 (8), CD011152 (2017).

Chen, Z. et al. Chat-ePRO: development and pilot study of an electronic patient-reported outcomes system based on ChatGPT. J. Biomed. Inf. 154, 104651 (2024).

Pan, A. et al. Assessment of artificial intelligence chatbot responses to top searched queries about cancer. JAMA Oncol. 9 (10), 1437–1440 (2023).

Sorin, V. et al. Large Language model (ChatGPT) as a support tool for breast tumor board. NPJ Breast Cancer. 9 (1), 44 (2023).

Liu, X. et al. A generalist medical Language model for disease diagnosis assistance. Nat. Med. 31 (3), 932–942 (2025).

Allen, E. S. & Christopher Likert Scales and Data Analyses (Quality Progress, 2007).

Cook, D. A. et al. Virtual patients using large Language models: scalable, contextualized simulation of Clinician-Patient dialogue with feedback. J. Med. Internet Res. 27, e68486 (2025).

Zeng, J. et al. Assessing the role of the generative pretrained transformer (GPT) in alzheimer’s disease management: comparative study of Neurologist- and artificial Intelligence-Generated responses. J. Med. Internet Res. 26, e51095 (2024).

Young, C. C. et al. Racial, ethnic, and sex bias in large Language model opioid recommendations for pain management. Pain 166 (3), 511–517 (2025).

Ge, J. et al. Development of a liver disease-specific large Language model chat interface using retrieval-augmented generation. Hepatology 80 (5), 1158–1168 (2024).

Chen, S. et al. Use of artificial intelligence chatbots for cancer treatment information. JAMA Oncol. 9 (10), 1459–1462 (2023).

Tsai, C. Y. et al. ChatGPT v4 outperforming v3.5 on cancer treatment recommendations in quality, clinical guideline, and expert opinion concordance. Digit. Health. 10, 20552076241269538 (2024).

Tawfik, E., Ghallab, E. & Moustafa, A. A nurse versus a chatbot – the effect of an empowerment program on chemotherapy-related side effects and the self-care behaviors of women living with breast cancer: a randomized controlled trial. BMC Nurs. 22 (1), 102 (2023).

Laymouna, M. et al. Roles, users, benefits, and limitations of chatbots in health care: rapid review. J. Med. Internet Res. 26, e56930 (2024).

Deng, L. et al. Evaluation of large Language models in breast cancer clinical scenarios: a comparative analysis based on ChatGPT-3.5, ChatGPT-4.0, and Claude2. Int. J. Surg. 110 (4), 1941–1950 (2024).

Griewing, S. et al. Challenging ChatGPT 3.5 in senology-an assessment of concordance with breast cancer tumor board decision making. J. Pers. Med. 13 (10). (2023).

Chen, D. et al. Physician and artificial intelligence chatbot responses to cancer questions from social media. JAMA Oncol. 10 (7), 956–960 (2024).

Greer, S. et al. Use of the chatbot vivibot to deliver positive psychology skills and promote Well-Being among young people after cancer treatment: randomized controlled feasibility trial. JMIR Mhealth Uhealth. 7 (10), e15018 (2019).

Author information

Authors and Affiliations

Contributions

The study was designed by QW and ZC. ZC and QW contributed to data acquisition. Biostatistical analysis was done by ZC, XL, and QW. Patient recruitment, sample collection as well as data collection was done by QW, HZ, YZ, CD, WH, and HM.Z. All authors had full access to all the data in the study and all authors interpreted the data. The first draft of the report was written by QW. Verification of the underlying data was done by ZC, QW, and HZ. The decision to submit the report for publication was made by all the authors. All authors contributed to the review of the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Wang, Q., Chen, Z., Zhang, H. et al. Large language models could be applied in personalized out-of-hospital management for breast cancer: a prospective randomized single blind study. Sci Rep 15, 33589 (2025). https://doi.org/10.1038/s41598-025-18759-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-18759-4