Abstract

A disability is one of the significant problems which has been introduced and leads to current problems. Disability is and continues to be a basis of frustration as it is observed as a limitation, a physical, cognitive, and mental handicap, which limits the individual’s growth and involvement. Therefore, considerable effort is put into removing this type of limitation. The problems that disabled individuals face are tackled in the initiative. Disabled individuals must depend on others to meet their requirements. Machine learning (ML) is performing great work to make a smart city and provide a safe presence for disabled people. Emerging as a smart city is possible when we can perceptively treat people with disabilities. This paper introduces a novel Sustainable Emotion Recognition System for Disabled Persons Using Deep Learning and Equilibrium Optimiser for Real-Time Communication Enhancement (SERDP-DLEOCE) approach. The SERDP-DLEOCE approach is designed as an advanced approach for emotion recognition in text to facilitate enhanced communication for people with disabilities. The process begins with text pre-processing, which involves multiple distinct stages to convert the raw text into a more suitable format for analysis. Furthermore, Word2Vec is employed for word embedding to capture semantic meaning by mapping words to dense vector representations. Moreover, the Elman neural network (ENN) model is used for emotion recognition in the test. Finally, the equilibrium optimizer (EO) model adjusts the hyperparameter values of the ENN model optimally and results in greater classification performance. The experimental validation of the SERDP-DLEOCE methodology is performed under the Emotion detection from text dataset. The comparison study of the SERDP-DLEOCE methodology portrayed a superior accuracy value of 95.15% over existing techniques.

Similar content being viewed by others

Introduction

Emotion drives the common decision-making of humans in their daily activities. Additionally, emotional conditions can indirectly affect human attention, communication, and the ability to remember information1. However, the detection and understanding of emotional states often come naturally to humans; these task poses critical challenges to computational sequences. The term affective computing denotes the models for identifying, predicting, and detecting human emotions, such as anger, joy, trust, sadness, anticipation, and surprise, to adjust computational methods to these states2. Emotional information is transferred through a diversity of physiological and physical features3. Emotions play a significant role in the survival and the overall individual makeup4. They observe information relating to our existing state and well-being. For people and businesses to offer optimal services to customers, they have to detect the diverse emotions expressed by individuals with disabilities and utilize the source to provide bespoke suggestions to meet the individual necessities of their customers5. Emotion recognition for individuals with disabilities will play a favourable role in human-computer interaction and the field of artificial intelligence (AI)6.

Several kinds of models were utilized to identify emotions from humans, such as body movements, facial expressions, textual information, heartbeat, and blood pressure. In computational linguistics, human emotion recognition in a text for disabled individuals is becoming progressively significant from an applicative viewpoint7. Currently, there is a massive amount of textual information. It is captivating to remove emotion from many targets, such as those in business. Numerous studies have attained acceptable outcomes in the emotion recognition field from text8. Most ML approaches excessively depend on handcrafted aspects that necessitate extensive manual design and adjustment, and it is cost-intensive and time-consuming9. Nevertheless, this concern is assisted prominently by the application of deep learning (DL) recently. DL models have effectively addressed difficulties in multiple fields, like machine translation, image classification, text-to-speech generation, speech recognition, and other relevant ML fields10. Likewise, extensive performance improvements are attained when DL models are utilized for statistical speech processing.

This paper introduces a novel Sustainable Emotion Recognition System for Disabled Persons Using Deep Learning and Equilibrium Optimiser for Real-Time Communication Enhancement (SERDP-DLEOCE) approach. The SERDP-DLEOCE approach is designed as an advanced approach for emotion recognition in text to facilitate enhanced communication for people with disabilities. The process begins with text pre-processing, which involves multiple distinct stages to convert the raw text into a more suitable format for analysis. Furthermore, Word2Vec is employed for word embedding to capture semantic meaning by mapping words to dense vector representations. Moreover, the Elman neural network (ENN) model is used for emotion recognition in the test. Finally, the equilibrium optimizer (EO) model adjusts the hyperparameter values of the ENN model optimally and results in greater classification performance. The experimental validation of the SERDP-DLEOCE methodology is performed under the Emotion detection from text dataset. The key contribution of the SERDP-DLEOCE methodology is highlighted below.

-

The SERDP-DLEOCE approach applies a comprehensive text pre-processing to clean, normalize, and structure raw text data, thus improving its suitability for analysis. This process ensures that the irrelevant and noise are removed, enabling more precise word embedding and overall performance of the emotion recognition model.

-

The SERDP-DLEOCE methodology employs the Word2Vec model for generating meaningful word embeddings, which effectually captures semantic relationships within the text, thus enhancing the word representation in a dense vector space. This improves the capability of the model in comprehending context and subtle variances, resulting in more accurate emotion recognition and better overall classification performance.

-

The SERDP-DLEOCE technique implements the ENN model for robustly recognizing textual emotions by effectively capturing temporal dependencies in sequential data, which also improves the sensitivity of the model to contextual cues in text. This approach enhances the accuracy and reliability of emotion classification across diverse samples.

-

The SERDP-DLEOCE model applies the EO technique for effectually tuning the hyperparameters, thus enhancing the performance of the model by finding optimal settings that efficiently balance accuracy and computational efficiency. This step ensures more stable and effective training outcomes, contributing to an improved emotion recognition output.

-

The integration of Word2Vec embeddings with the ENN and EO models forms a novel unified framework that improves emotion recognition accuracy. This methodology uniquely balances semantic understanding and dynamic modelling with optimized hyperparameters, resulting in enhanced performance. Additionally, it maintains computational efficiency, making it appropriate for real-world applications.

Literature works

Tanabe et al.11 proposed a concept for emotion recognition methods that depend on AI employing motion and physiological signals. Primarily, the heartbeat interval of a child with PIMD was determined, and the correlation between the emotion and RRI was shortly examined in a primary experiment. Afterwards, an emotion recognition method for children with PIMD was formed employing motion and physiological signals by applying an RF classifier. Chhimpa et al.12 advanced a video-oculography (VOG) based method under natural head movements for cursor movement and eye-tracking. Furthermore, a GUI is designed to analyze the fulfilment and performance of the day-to-day essential needs of disabled people. Komuraiah et al.13 developed a web-based facial emotion recognition method intended to aid ASD children with ASD in understanding and recognizing emotions in others, finally improving their social skills. This method was applied by utilizing the CNN and Flask web structure, offering a user-friendly interface for both children and therapists or caregivers. This work utilizes DL to identify faces and investigate facial expressions and precisely classify emotions. In14, an Inception-based CNN structure is developed for emotion prediction. The presented technique has attained better precision, resulting in a six per cent enhancement around the current network structure for emotion classification.

This technique is tested over seven diverse datasets to verify its sturdiness. Furthermore, the real-world performance abilities of the verified humanoid robot NAO. Priya and Sandesh15 focused on increasing communication for the speech and hearing-impaired communities by detecting alphabets and ISL digits of static images in either offline or real-time situations. This work utilized both DL and ML with CNN to classify the ISL numbers and alphabets. In the ML method, image pre-processing models like skin mask generation, HSV conversion, Gabor filtering and skin portion extraction were utilized to divide the region of interest (ROI) that is fed into 5 ML methods for sign prediction. Conversely, the DL method applied CNN techniques. The authors16 developed a multimodal emotion recognition platform (MEmoRE) approach. MEmoRE utilizes advanced emotion recognition models, incorporating textual sentiment analysis, vocal intonation, and facial expression, to extensively recognize stakeholder emotions. This technique is additionally designed to be employed. Nie et al.17 developed an avant-garde pig FER method called CReToNeXt-YOLOv5. The presented approach comprises multiple refinements modified for adeptness and heightened precision in detection.

The existing studies exhibit various limitations and research gaps in emotion recognition. Several techniques heavily depend on controlled environments or specific signal types, restricting generalizability across diverse real-world scenarios and sensor modalities. The model also lacks effective fusion models to capture complementary emotional cues. The integration of multimodal data (e.g., physiological, motion, facial, and vocal) also remains underexplored. Furthermore, additional refinement is required for real-time implementation and user-friendly interfaces for individuals with varied disabilities. Various techniques concentrate on isolated datasets or specific disabilities, illustrating a research gap in developing robust, scalable models adaptable to varied populations and varying data quality. Addressing these gaps is significant for enhancing the accuracy, accessibility, and practical applicability of emotion recognition systems.

The proposed model

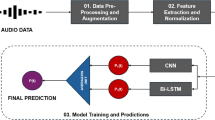

In this paper, a novel SERDP-DLEOCE method is introduced. The SERDP-DLEOCE method is designed as an advanced approach for emotion recognition in text to facilitate enhanced communication for people with disabilities. Figure 1 portrays the workflow of the SERDP-DLEOCE technique.

Text pre-processing

Text pre-processing is an essential stage in emotion recognition, as it prepares raw textual data for analysis by eliminating noise and normalizing the input18. Efficient pre-processing guarantees that the text data is in a systematic format appropriate for feature classification and extraction. The method typically includes many phases, all intended to deal with particular challenges in textual data.

-

1.

Tokenization: This phase splits the input text into small components, like sentences or words, to enable study. It assists in breaking down composite sentences into controllable units for additional processing.

-

2.

Lowercasing: To remove case sensitivity, each text is transformed to lowercase. This guarantees that words like \(\:Happy\) and \(\:happy\) are considered equal, minimizing redundancies in the data.

-

3.

Removal of stop words: Normally applied words, namely, \(\:is\), \(\:and\), \(\:the\), and \(\:of\), are eliminated as they give little to the emotional or sentiment content. This decreases noise and concentrates on the words, which transfer meaningful context.

-

4.

Removal of punctuation and special characters: Special characters and punctuation marks are eliminated to streamline the text and guarantee uniformity. Nevertheless, in similar cases, particular punctuation marks might be maintained after they convey emotion (for example, exclamation marks).

-

5.

Lemmatisation and stemming: Words are limited to their root or base forms. For instance, \(\:running\) becomes\(\:\:run\), guaranteeing reliability in the example for related words.

-

6.

Handling negations: Negations, namely \(\:not\:happy\), are recognized and considered as individual concepts for preserving the projected sentiments.

By performing these pre-processing methods, emotion recognition systems can successfully examine textual data, enhancing the precision of downstream tasks such as feature classification and extraction, and word embedding.

Word embedding process

Afterwards, the Word2Vec is employed for word embedding to capture semantic meaning by mapping words to dense vector representations19. This model effectually captures semantic relationships between words by mapping them into dense vector representations. This technique also provides a continuous, low-dimensional space that preserves meaningful linguistic patterns, allowing the model to comprehend context and similarity in language. The capability of the model in both syntactic and semantic information makes it superior to other embedding models. Furthermore, this is an ideal technique for balancing performance with resource constraints, and hence is computationally efficient and scalable for massive datasets. This balance between accuracy and efficiency justifies its use in the proposed framework.

Word2Vec is the word embedding approach commonly used in NLP for text classification. Its primary aim is to characterize words as dense vectors within the constant vector area. For numerous reasons, with its capability to capture semantic relationships among words, Word2Vec is confirmed as a valuable tool for text classification. Using Word2Vec for text classification provides many advantages, including its ability to determine semantic relations between words. Therefore, the classifier performs well on the tasks of classification as it may acquire a better knowledge of the meanings of words in the text. It is introduced to characterize a word as a constant-valued vector in this higher-dimensional vector space. The two main models are a Continuous Bag of Words (CBOW) and Skip-gram.

(i). CBOW: According to the contextual words near targeted words, it tries to predict such terms. For maximizing the probability of accurately forecasting the targeted word \(\:w\_t\) provided its contextual words \(\:w\_c\), whereas c travels from \(\:-C\) to \(\:C\) as exposed in Eq. (1)

\(\:\left(1\right)\)

Whereas T indicates each of the targeted words within the training data.

The likelihood of forecasting the targeted word, given its contextual words, is demonstrated by utilizing the functions of softmax exposed in Eq. (2):

Here, \(\:{\theta\:}_{-}\)w_t: Target word embedding as vector representations of the targeted word \(\:w\_t\). \(\:{\phi\:}_{-}{w}_{-}c\): The contextual word \(\:w\_c\) (contextual word embedding) is characterized as vectors. Dot \(\:\left(\bullet\:\right)\) product among dual vectors.

b. Skip-gram: For estimating the contextual words for a targeted word, it is applied. The aim is to improve the probability of precisely forecasting the targeted word \(\:\left({w}_{-}t\right)\) specified by the contextual word \(\:\left({w}_{-}c\right)\) exposed in Eq. (3).

The function of the SoftMax is applied to signify the probability of accurately guessing the contextual word given the target exposed in Eq. (4)

Emotion classification using ENN model

For the emotion recognition in text, the ENN model is utilized20. This model is chosen for its robust capability in capturing contextual data and temporal dependencies in sequential data. This technique effectually remembers prior inputs, making it significant in comprehending the flow and variances of language, unlike conventional feedforward networks. This methodology also presents a simple architecture with fewer parameters, making it computationally effective while still efficiently modelling short-term dependencies, compared to more intrinsic techniques such as LSTM or GRU. This balance between performance and efficiency makes ENN appropriate for emotion recognition tasks where capturing the sequence and context of words is crucial for accurate classification.

The ENN is a dynamical recurrent neural network (RNN) with local feedback networks. It usually contains four layers: the context, input, output layers, and hidden layer (HL). The contextual neuron layers relate one-to-one to the HL neurons. The HL output is generated in response to the HL input over the contextual layer’s storage and delay, attaining shorter-term memories and searching for local information. This tool characterizes a particular NN object, summarising the network’s weights, biases, training parameters, and structure, enabling fast utilization of the Elman code for calculation and investigation.

The mathematical formulation for its networking architecture is provided as shown:

Here, \(\:x\) stands for an n-dimensional vector of the intermediate layer node unit; \(\:y\) refers to an m-dimensional vector of the output node. \(\:{x}_{c}\) denotes \(\:n\:\)dimensional feedback state vector;\(\:\:u\) signifies \(\:r\)-dimensional input vector;\(\:\:{w}_{1}\) characteristics connected weight; \(\:{w}_{2}\) symbolize connected weighting from an input to intermediate layers;\(\:{\:w}_{3}\) represents connected weighting from the middle layer to the output layer; \(\:0\le\:\alpha\:<1\) indicates the self‐feedback gain feature; \(\:f(\bullet\:)\) epitomizes the transfer function of the intermediate layer neuron, typically utilizing Sigmoid and\(\:\:g(\bullet\:)\) means an output neuron’s transfer function, demonstrating the linear mixture of the outputs of the intermediate layer.

Parameter optimizer using EO

Finally, the EO approach adjusts the hyperparameter values of the ENN approach optimally and results in greater classification performance21. This approach is chosen for its capability in effectually exploring and exploiting the search space, resulting in optimal parameter settings. This model also converges more efficiently and avoids getting trapped in local optima, unlike conventional techniques such as grid or random search, thus improving the overall performance of the model. The method also effectively balances exploration and exploitation dynamically, and its physics-inspired optimization mechanism allows for effective tuning. This results in an enhanced accuracy while mitigating the computational overhead, making EO appropriate and effective for improving the ENN model.

The EO is a heuristic optimizer algorithm. This theory is represented using the following equation: The rate of change in mass is computed as the rate of inflow minus the rate of outflow, resulting in the final mass.

During this EO model, particles characterize solutions, and their attentions are applied to searching parameters. All particles try to discover an equilibrium point arbitrarily from a particular collection of best solutions within the exploration area. Attention directs the location of the solution in this regard. The model effectively directs particles to this equilibrium place to discover optimum solutions. The equilibrium candidates are successively applied to build a vector, which imitates the set of equilibria that is theoretically described as demonstrated:

Here, \(\:{X}_{eq1}\left(Iter\right),\dots\:,{X}_{eq4}\left(Iter\right)\) represent controller variables similar to the four best-fitted people in generation \(\:Ite{r}^{th}\:{X}_{eq,ave}\left(Iter\right)\) specifies the average value of these four individuals. \(\:X\left(Iter\right)\) are selected arbitrarily amongst these individuals. In every cycle, all particle locations are upgraded by arbitrarily choosing one such option with equivalent likelihood. For instance, in the initial testing, the primary particle can update its position according to \(\:{X}_{eq1}\left(Iter\right)\), then in the next iteration, the average value \(\:{X}_{eq,ave}\left(Iter\right)\) may be applied. Using the result of the optimizer method, all particles should be upgraded to an equivalent repeatedly for each of the possible solutions. \(\:\lambda\:\) refers to a random vector distributed uniformly in the interval of \(\:\left[\text{0,1}\right],\) \(\:F\) denotes a randomly generated vector described by the relations provided in Eq. (9), and \(\:G\) is another arbitrary vector shown in Eq. (10).

\(\:r\) stands for a randomly generated vector by a uniform distribution across the component interval. \(\:{r}_{1}\) and \(\:{r}_{2}\) represent randomly formed values through a uniform distribution inside the component interval. \(\:l\) denotes the parameter presented by Eq. (11).

The fitness selection refers to a substantial factor that manipulates the performance of the EO model. The hyperparameter range procedure comprises the solution-encoded technique for evaluating the effectiveness of the candidate solution:

Here, \(\:TP\) and \(\:FP\) signify the true and false positive values.

Experimental result and analysis

The performance validation of the SERDP-DLEOCE methodology is verified under Emotion detection from text dataset22. The method runs on Python 3.6.5 with an i5-8600k CPU, 4GB GPU, 16GB RAM, 250GB SSD, and 1 TB HDD, using a 0.01 learning rate, ReLU, 50 epochs, 0.5 dropout, and batch size 5. The dataset contains 31,390 samples under seven sentiments as depicted in Table 1. Table 2 signifies the sample text.

Table 3; Fig. 2 represent the emotion detection of the SERDP-DLEOCE technique under dissimilar epochs. The results imply that the SERDP-DLEOCE technique correctly identified the samples. On Epoch 500, the SERDP-DLEOCE technique attains an average \(\:acc{u}_{y}\) of 91.02%, \(\:pre{c}_{n}\) of 76.70%, \(\:rec{a}_{l}\) of 45.51%, \(\:F{1}_{score}\:\)of 44.85%, and \(\:{AUC}_{score}\) of 69.81%. Moreover, on Epoch 1000, the SERDP-DLEOCE technique attains an average \(\:acc{u}_{y}\) of 91.91%, \(\:pre{c}_{n}\) of 75.20%, \(\:rec{a}_{l}\) of 50.87 \(\:F{1}_{score}\:\)of 52.04%, and \(\:{AUC}_{score}\) of 72.79%. Besides, on Epoch 2000, the SERDP-DLEOCE methodology attains an average \(\:acc{u}_{y}\) of 93.05%, \(\:pre{c}_{n}\) of 78.07%, \(\:rec{a}_{l}\) of 57.81 \(\:F{1}_{score}\:\)of 61.15%, and \(\:{AUC}_{score}\) of 76.65%. Also, on Epoch 2500, the SERDP-DLEOCE methodology accomplishes attains an \(\:acc{u}_{y}\) of 93.93%, \(\:pre{c}_{n}\) of 78.89%, \(\:rec{a}_{l}\) of 63.48 \(\:F{1}_{score}\:\)of 67.14%, and \(\:{AUC}_{score}\) of 79.80%. At last, on Epoch 3000, the SERDP-DLEOCE methodology attains an average \(\:acc{u}_{y}\) of 95.15%, \(\:pre{c}_{n}\) of 80.95%, \(\:rec{a}_{l}\) of 71.65 \(\:F{1}_{score}\:\)of 75.00%, and \(\:{AUC}_{score}\) of 84.29%.

In Fig. 3, the training (TRA) \(\:acc{u}_{y}\) and validation (VAL) \(\:acc{u}_{y}\) analysis of the SERDP-DLEOCE technique below Epoch 3000 is illustrated. The \(\:acc{u}_{y}\:\)analysis is computed throughout 0-3000 epochs. The figure highlights that the TRA and VAL \(\:acc{u}_{y}\) analysis exhibits an increasing tendency, which informed the capacity of the SERDP-DLEOCE methodology with maximal outcomes over several iterations. Simultaneously, the TRA and VAL \(\:acc{u}_{y}\) remains closer across the epochs, which indicates inferior overfitting and exhibits better outcomes of the SERDP-DLEOCE methodology, assuring reliable prediction on hidden samples.

In Fig. 4, the TRA loss (TRALOS) and VAL loss (VALLOS) curve of the SERDP-DLEOCE approach under Epoch 3000 is shown. The values of loss are computed across the range of 0-3000 epochs. The TRALOS and VALLOS analysis exhibits a decreasing trend, demonstrating the capacity of the SERDP-DLEOCE method in balancing a trade-off between simplification and data fitting. The continuous reduction in values of loss moreover guarantees the maximum outcomes of the SERDP-DLEOCE method and tunes the prediction results over time.

In Fig. 5, the precision-recall (PR) curve results of the SERDP-DLEOCE methodology below Epoch 3000 present clarification into its results by plotting Precision alongside Recall for all the classes. The steady increase in PR analysis across all class labels illustrates the efficacy of the SERDP-DLEOCE model in the classification procedure.

In Fig. 6, the ROC graph of the SERDP-DLEOCE technique below Epoch 3000 is examined. The outcomes imply that the SERDP-DLEOCE technique accomplishes maximal ROC outcomes across all classes, demonstrating an essential capacity for discriminating the classes. This dependable trend of maximal ROC analysis across several classes indicates the capable outcomes of the SERDP-DLEOCE approach on predicting classes, highlighting the robust nature of the classification procedure.

Table 4; Fig. 7 present a comparison of the SERDP-DLEOCE methodology with the existing techniques23,24,25. The results emphasized that the CGT-2, AraBERT, DenseNet201, EfficientNet-B3, ResNet50, and NB + lexicon methods have reported worse performance. Meanwhile, the MGT-2 model has gotten closer to outcomes. Furthermore, the SERDP-DLEOCE technique reported superior performance with higher \(\:pre{c}_{n}\), \(\:rec{a}_{l},\) \(\:acc{u}_{y},\:\)and \(\:{F1}_{score}\) of 80.95%, 71.65%, 95.15%, and 75.00%, respectively.

In Table 5; Fig. 8, the comparative results of the SERDP-DLEOCE methodology are identified in terms of execution time (ET). The results imply that the SERDP-DLEOCE methodology gets maximum performance. Depending on ET, the SERDP-DLEOCE model delivers a lower ET of 1.01 min, whereas the MGT-2, CGT-2, AraBERT, DenseNet201, EfficientNet-B3, ResNet50, and NB + lexicon models achieve better ET values of 2.75 min, 3.37 min, 5.47 min, 5.21 min, 3.89 min, 4.57 min, and 5.45 min, disparately.

Table 6; Fig. 9 specifies the error analysis of the SERDP-DLEOCE technique with existing methods. The MGT-2 and CGT-2 models exhibit better balance across metrics, with MGT-2 attaining a higher F1-Score of 33.45%, illustrating more stable classification. Additionally, the AraBERT, DenseNet201, and ResNet50 models illustrate robust representation learning. The NB + lexicon technique surpasses the SERDP-DLEOCE model by attaining an \(\:acc{u}_{y}\) of 30.15% and \(\:{F1}_{score}\) of 26.59%. The SERDP-DLEOCE model attains an \(\:acc{u}_{y}\) of 4.85%, \(\:pre{c}_{n}\) of 19.05%, \(\:rec{a}_{l}\) of 28.35%, and \(\:{F1}_{score}\) of 25.00%, underperforms significantly compared to other baseline models.

Table 7; Fig. 10 indicates the ablation study of the SERDP-DLEOCE methodology. The SERDP-DLEOCE methodology attained the highest performance with an \(\:acc{u}_{y}\) of 95.15%, \(\:pre{c}_{n}\) of 80.95%, recall of 71.65%, and \(\:{F1}_{score}\) of 75.00%. When only the ENN model is used without the full integration, performance drops slightly to an \(\:acc{u}_{y}\) of 94.61% and \(\:{F1}_{score}\) of 74.21%, indicating the robust baseline capabilities of the ENN model. The EO method achieves an \(\:acc{u}_{y}\) of 94.02% and \(\:{F1}_{score}\) of 73.33%, illustrating its efficiency in optimization but also highlighting the necessity of integration with other components for best results. The Word2Vec technique achieves an \(\:acc{u}_{y}\) of 93.24% and \(\:{F1}_{score}\) of 72.66%, reflecting its contribution to semantic understanding. These results highlight the superior output of the SERDP-DLEOCE model.

Conclusion

In this paper, a novel SERDP-DLEOCE method is introduced. The SERDP-DLEOCE method is designed as an advanced approach for emotion recognition in text to facilitate enhanced communication for people with disabilities. Initially, the SERDP-DLEOCE method begins with text pre-processing, which involves several distinct stages to transform the raw text into a more suitable format for analysis. Furthermore, Word2Vec is employed for word embedding to capture semantic meaning by mapping words to dense vector representations. For the emotion recognition in text, the ENN model is utilized. Finally, the EO technique adjusts the hyperparameter values of the ENN technique optimally and results in greater classification performance. The experimental validation of the SERDP-DLEOCE methodology is performed under the Emotion detection from text dataset. The comparison study of the SERDP-DLEOCE methodology portrayed a superior accuracy value of 95.15% over existing techniques.

Data availability

The data that support the findings of this study are openly available in the Kaggle repository at https://www.kaggle.com/datasets/pashupatigupta/emotion-detection-from-text, reference number [22].

References

Kratzwald, B., Ilić, S., Kraus, M., Feuerriegel, S. & Prendinger, H. Deep learning for affective computing: Text-based emotion recognition in decision support. Decis. Support Syst. 115, 24–35 (2018).

Zhao, S., Jia, G., Yang, J., Ding, G. & Keutzer, K. Emotion recognition from multiple modalities: fundamentals and methodologies. IEEE. Signal. Process. Mag. 38 (6), 59–73 (2021).

Hassouneh, A., Mutawa, A. M. & Murugappan, M. Development of a real-time emotion recognition system using facial expressions and EEG based on machine learning and deep neural network methods. Informatics in Medicine Unlocked, 20, p.100372. (2020).

Yoon, S., Byun, S. & Jung, K. December. Multimodal speech emotion recognition using audio and text. In 2018 IEEE Spoken Language Technology Workshop (SLT) (112–118). IEEE. (2018).

Argaud, S., Vérin, M., Sauleau, P. & Grandjean, D. Facial emotion recognition in parkinson’s disease: a review and new hypotheses. Mov. Disord. 33 (4), 554–567 (2018).

Zhang, J., Yin, Z., Chen, P. & Nichele, S. Emotion recognition using multimodal data and machine learning techniques: A tutorial and review. Inform. Fusion. 59, 103–126 (2020).

Al-Nafjan, A., Hosny, M., Al-Ohali, Y. & Al-Wabil, A. Review and classification of emotion recognition based on EEG brain-computer interface system research: a systematic review. Applied Sciences, 7(12), p.1239. (2017).

Li, M., Xu, H., Liu, X. & Lu, S. Emotion recognition from multichannel EEG signals using K-nearest neighbor classification. Technol. Health Care. 26 (S1), 509–519 (2018).

Batbaatar, E., Li, M. & Ryu, K. H. Semantic-emotion neural network for emotion recognition from text. IEEE access. 7, 111866–111878 (2019).

Thenappan, S. et al. Quasi oppositional Jaya algorithm with computer vision based deep learning model for emotion recognition on autonomous vehicle drivers. Journal Intell. Syst. Internet Things, (1), (2025). pp.141 – 41.

Tanabe, H. et al. A concept for emotion recognition systems for children with profound intellectual and multiple disabilities based on artificial intelligence using physiological and motion signals. Disabil. Rehabilitation: Assist. Technol. 19 (4), 1319–1326 (2024).

Chhimpa, G. R., Kumar, A., Garhwal, S. & Dhiraj Development of a real-time eye movement-based computer interface for communication with improved accuracy for disabled people under natural head movements. Journal of Real-Time Image Processing, 20(4), p.81. (2023).

Komuraiah, B., Perna, S., Mudapu, V., Sridasyam, A. & Koyyada, N. June. Web-Based Facial Emotion Recognition System for Enhancing Social Interaction in Autistic Spectrum Disorder Children Using CNN & Flask Framework. In 2024 15th International Conference on Computing Communication and Networking Technologies (ICCCNT) (pp. 1–7). IEEE. (2024).

Jaiswal, S. & Nandi, G. C. Optimized, robust, real-time emotion prediction for human-robot interactions using deep learning. Multimedia Tools Appl. 82 (4), 5495–5519 (2023).

Priya, K. & Sandesh, B. J. Developing an offline and real-time Indian sign language recognition system with machine learning and deep learning. SN Computer Science, 5(3), p.273. (2024).

Cheng, B. et al. September. Multimodal emotion recognition for enhanced requirements engineering: a novel approach. In 2023 IEEE 31st International Requirements Engineering Conference (RE) (pp. 299–304). IEEE. (2023).

Nie, L. et al. Deep learning strategies with CReToNeXt-YOLOv5 for advanced pig face emotion detection. Scientific Reports, 14(1), p.1679. (2024).

Ali, M. A. & Kulkarni, S. B. Pre-processing of text for emotion detection and sentiment analysis of Hindi movie reviews. (2020).

Krishna, D. S., Srinivas, G. & Reddy, P. P. A Deep Parallel Hybrid Fusion Model for disaster tweet classification on Twitter data. Decision Analytics J., 11, p.100453. (2024).

Cao, S. et al. The Debris Flow Risk Prediction Model Based on PCA-Elman. Appl. Sci., 14(24), p.11960. (2024).

Abdelmalek, F., Afghoul, H., Krim, F., Bajaj, M. & Blazek, V. Experimental validation of novel hybrid grey Wolf equilibrium optimization for MPPT to improve the efficiency of solar photovoltaic system. Results Eng., p.103831. (2024).

https://www.kaggle.com/datasets/pashupatigupta/emotion-detection-from-text

Ghosh, S. & Paul, G. A Type-2 approach in emotion recognition and an extended Type-2 approach for emotion detection. Fuzzy Inform. Eng. 7 (4), 475–498 (2015).

Jain, P. R., Quadri, S. M. K. & Khattar, A. EM-UDA: emotion detection using unsupervised domain adaptation for classification of facial images. IEEE Access (2024).

Al Maruf, A. et al. Challenges and opportunities of text-based emotion detection: A survey. IEEE Access (2024).

Acknowledgements

The authors acknowledge the King Salman center For Disability Research for funding this work through Research Group no KSRG-2024- 217.

Funding

The authors extend their appreciation to the King Salman Centre for Disability Research for funding this work through Research Group no. KSRG-2024- 217.

Author information

Authors and Affiliations

Contributions

Turki Alghamdi: Methodology, Formal analysis, Investigation, Writing - original draft, Project administration, Funding acquisition.Saud Alotaibi: Methodology, Validation, Data curation, Visualization, Writing - review & editing.Reem Alharthi: Resources, Investigation, Formal analysis, Writing - review & editing, Supervision.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Alghamdi, T.A., Alotaibi, S.S. & Alharthi, R.M. Improving real-time emotion recognition system in assistive communication technologies for disabled persons using deep learning with equilibrium algorithm. Sci Rep 15, 38291 (2025). https://doi.org/10.1038/s41598-025-22031-0

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-22031-0