Abstract

The widespread adoption of Android has made it a primary target for increasingly sophisticated malware, posing a significant challenge to mobile security. Traditional static or behavioural approaches often struggle with obfuscation and fail to integrate context across multiple feature domains. In this work, we propose GIT-GuardNet, a novel Graph-Informed Transformer Network that uses multi-modal learning to detect Android malware with high precision and robustness. GIT-GuardNet fuses three complementary perspectives: (i) static code attributes captured through a Transformer encoder, (ii) call graph structures modelled via a Graph Attention Network (GAT), and (iii) temporal behaviour traces learned using a Temporal Transformer. These encoders are integrated using a cross-attention fusion mechanism that dynamically weighs inter-modal dependencies, enabling better-informed decisions under both benign and adversarial conditions. We conducted comprehensive experiments on a large-scale dataset of 15,036 Android applications, including 5,560 malware samples from the Drebin project. GIT-GuardNet achieves state-of-the-art performance, reaching 99.85% accuracy, 99.89% precision, and 99.94% AUC, outperforming traditional machine learning models, single-view deep networks, and recent hybrid approaches such as DroidFusion. Ablation studies confirm the complementary contribution of each modality and the effectiveness of the cross-attention design. Our results demonstrate strong generalization of GIT-GuardNet to obfuscated and stealthy threats, low inference overhead, and practical applicability to real-world mobile threat detection. This study provides a powerful and extensible framework for future research in secure mobile computing and intelligent malware defence.


Introduction

The proliferation of Android applications1 has revolutionized mobile computing, but it has also created fertile ground for malicious actors to exploit system vulnerabilities2. Because Android is the most popular mobile operating system and has an open ecosystem, it has become a common target for malware writers, who use3 sophisticated obfuscation, code injection, and behavior impersonation to evade automated analysis4. Traditional malware detection5 approaches, such as signature-based scanners or lightweight machine learning classifiers, often fail to identify6 zero-day threats and evasive behaviors because of their limited ability to model contextual7, sequential, and structural information.

Recent advances in deep learning8 have enabled significant progress in malware detection by leveraging patterns in application metadata, static code features9, and runtime behaviors. Yet most existing techniques are constrained10 to a single modality (either static or dynamic analysis), or they cannot model11 the complex interactions among system components, e.g., between API calls and control-flow graphs. As a result, such models typically generalize poorly and are susceptible to adversarial attacks12 and feature perturbations.

To overcome these limitations, we present the Graph-Informed Transformer Network (GIT-GuardNet), a unified multi-modal learning framework that exploits static, dynamic, and graph-structured signals. Our method encodes static features (permissions, intents, APIs) using a BERT-based Transformer, captures runtime sequences (e.g., system calls and logs) through a temporal Transformer encoder13, and embeds behavior graphs using graph neural networks (GNNs). These representations are fused by a cross-attention mechanism that enables the model to learn fine-grained interactions across modalities and achieve robust malware classification.

Comprehensive experiments on benchmark datasets such as CICAndMal2017 and Drebin demonstrate that GIT-GuardNet significantly outperforms conventional CNN-, RNN-, and GNN-based models, achieving a detection accuracy of 99.85% with strong resilience against obfuscation and zero-day threats. In addition, explanation components such as attention visualizations make the system more interpretable, which is valuable for cybersecurity analysts in practice. This work contributes a powerful, generalizable, and interpretable approach to Android malware detection that exploits the synergy between graph representation learning and Transformer architectures.

Problem statement

Android’s open-source nature and vast user base have made it a prime target for malicious actors developing sophisticated malware capable of bypassing conventional security mechanisms. Traditional malware detection approaches, often reliant on signature matching or shallow learning models, struggle to detect obfuscated, polymorphic, or zero-day threats, especially in applications with complex control flows and disguised static patterns. Single-modality approaches limited to static or dynamic features cannot capture the full behavioral context of modern Android applications. There is a critical need for a robust, interpretable, multi-modal detection framework that can intelligently fuse static code features, runtime behaviours, and structural relationships among app components. This work addresses this gap by proposing the Graph-Informed Transformer Network (GIT-GuardNet), which uses advanced deep learning techniques to integrate static analysis features (215 attributes from over 15,000 apps), graph-based semantics, and temporal behaviour patterns into a unified detection model capable of state-of-the-art accuracy and resilience in real-world malware detection.

Dataset rationale

To ensure reliable evaluation and real-world applicability of the proposed GIT-GuardNet framework, we use a well-established Android malware dataset14 comprising 15,036 applications: 5,560 malware samples from the Drebin project and 9,476 benign apps. The dataset was first described in the DroidFusion paper published in IEEE Transactions on Cybernetics and contains 215 static code analysis features extracted from Android APKs using tools such as APKTool and other static analyzers. These features cover permissions, API calls, opcodes, intent filters, and hardware components, making it one of the most comprehensive static feature sets available for Android malware detection. Its use in prior high-impact research ensures benchmarking consistency and facilitates fair comparison with existing methods. Furthermore, the dataset’s real-world diversity and scale support robust training and testing of deep learning models, making it well suited to evaluating multi-modal architectures such as GIT-GuardNet.

Key contributions

The key contributions of this work are summarized as follows:

-

We propose a novel Graph-Informed Transformer Network (GIT-GuardNet) that integrates static code features, dynamic behavior traces, and structural graph representations for robust Android malware detection.

-

Our model leverages graph neural networks (GNNs) to capture relational dependencies from call graphs and behavior graphs, enhancing the structural understanding of application logic.

-

We design a cross-attention fusion module that learns inter-modal interactions between static, dynamic, and graph-based features, enabling the model to detect sophisticated and obfuscated malware with high accuracy.

-

We incorporate attention-based interpretability mechanisms to enhance model transparency, enabling cybersecurity analysts to understand and trust the malware detection decisions.

-

The proposed framework demonstrates robustness against evasion techniques, such as code obfuscation and feature manipulation, making it a practical and resilient solution for real-world Android malware detection.

This study is organized as follows. Section II reviews related work, and Section III describes the proposed methodology. Section IV presents the proposed model and the comparison models used for evaluation. Section V reports a detailed analysis of the experimental findings. Finally, Section VI concludes the paper.

Literature analysis

In this section, we review previous techniques for Android malware detection, focusing on how approaches have evolved toward graph-based learning and deep neural architectures and how these developments relate to the method proposed in this paper. Table 1 summarizes prior work. Early approaches were mainly based on static or dynamic analysis using features such as permissions, API calls, or control-flow patterns. However, these methods struggled to scale and to adapt to obfuscated or zero-day malware. More recently, the focus has shifted to deep learning and graph-based models, such as Graph Neural Networks (GNNs), Heterogeneous Graph Neural Networks (HGNNs), and Graph Transformers, which capture richer semantic dependencies among app components, network flows, or function calls. These deep architectures have proven more effective at capturing multi-relational information and long-range dependencies. Moreover, self-supervised and transfer learning methods, such as Vision Transformers and lightweight BERT variants, have been developed to improve malware classification accuracy while reducing dependence on labelled samples. Overall, the literature shows a strong movement toward expressive, context-aware, large-scale models for detecting advanced Android malware.

The DeepCatra model15 is an encouraging step forward for Android malware detection, combining BiLSTM and GNN sub-networks in a multi-view learning design. Its strength lies in the careful combination of temporal and structural information, which allows it to encode subtle behavioural and relational patterns in Android apps. By focusing on statically computed call traces that lead to critical APIs associated with publicly disclosed vulnerabilities, the model targets higher-risk behaviours more precisely than general-purpose systems. Despite its performance gains, however, several issues remain. First, static analysis, while efficient, can fail to detect obfuscated or dynamically loaded malware, reducing coverage against advanced threats. Second, although the pre-trained model shows a striking improvement in F1 score, the absence of detailed results on detection latency, scalability to large app stores, and robustness against adversarial attacks raises doubts about its real-world deployability. Third, the system’s reliance on knowledge of critical APIs may limit its ability to adapt to new vulnerabilities or to malware that avoids known APIs. In summary, DeepCatra is a significant step forward, but it could be strengthened by incorporating dynamic features, adversarial robustness, and online learning to withstand an evolving threat landscape.

The GNN-based method with Jumping Knowledge (JK)16 is an important contribution in this direction, as it addresses a key drawback of deep graph learning models in Android malware detection: over-smoothing. Function call graphs (FCGs) naturally represent an app’s structural dependencies and behavioral features, which makes them an intuitive and effective target for analyzing Android execution paths. The JK mechanism further improves the model’s ability to preserve informative features across multiple graph convolution depths, leading to more expressive node representations and better classification performance. Nevertheless, some important limitations should be highlighted. First, the method relies purely on static analysis and may therefore be ineffective against the evasion techniques used by modern Android malware, such as dynamic code loading, reflection, or runtime obfuscation. Second, the work does not analyze the model’s robustness to adversaries or its generalization to different malware families and app obfuscation techniques. Third, deep GNN layers combined with JK aggregation may introduce computational overhead that is unsuitable for real-time or large-scale app store settings. Although promising on benchmark datasets, the method has not been evaluated in real-world deployments, and interpretability receives little discussion, which limits its practical usefulness. Overall, the method is technically sound and advances the application of GNNs to malware detection, although its dynamic adaptability, interpretability, and efficiency could be improved.
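To make the JK idea concrete, the following is a minimal, parameter-free Python sketch; it is not the cited model. Real JK networks apply learned weights and nonlinearities in every graph convolution, whereas here each layer simply averages a node with its neighbours, a simplification that visibly over-smooths as depth grows. The function names `mean_propagate` and `jumping_knowledge` are hypothetical; the sketch only shows how JK combines every layer's node representation (by element-wise max or concatenation) instead of keeping only the last layer.

```python
from typing import Dict, List, Tuple

Graph = Dict[int, List[int]]          # node -> neighbours (undirected adjacency list)
Features = Dict[int, List[float]]     # node -> feature vector

def mean_propagate(graph: Graph, h: Features) -> Features:
    """One simplified graph-convolution step: average each node with its neighbours."""
    out: Features = {}
    for node, nbrs in graph.items():
        group = [node] + nbrs
        dim = len(h[node])
        out[node] = [sum(h[v][d] for v in group) / len(group) for d in range(dim)]
    return out

def jumping_knowledge(graph: Graph, x: Features, num_layers: int,
                      mode: str = "max") -> Tuple[List[Features], Features]:
    """Run num_layers propagation steps, then combine all layer outputs per node."""
    layers = [x]
    for _ in range(num_layers):
        layers.append(mean_propagate(graph, layers[-1]))
    combined: Features = {}
    for node in graph:
        per_layer = [layer[node] for layer in layers]
        if mode == "concat":
            combined[node] = [value for vec in per_layer for value in vec]
        elif mode == "max":
            combined[node] = [max(vals) for vals in zip(*per_layer)]
        else:
            raise ValueError(f"unknown JK mode: {mode}")
    return layers, combined
```

With `mode="max"`, a strong early-layer signal that repeated averaging would otherwise dilute is still present in the final node representation, which is the property JK relies on to counter over-smoothing.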

Applying HGNNs to Android malware detection17 is a significant step beyond traditional graph-based models, particularly those that consider only FCGs. By modeling n-wise relationships among functions with hyperedges, each of which can connect one function to several others, the approach provides a more comprehensive and semantically meaningful characterisation of application behaviour. This matters for Android applications, whose components often interact in complex, non-pairwise ways that ordinary pairwise graphs cannot model accurately. However, several important factors must be considered. First, the hypergraph technique is theoretically strong but computationally expensive: constructing the hypergraph and running the neural model over it may require significant resources, which can limit scalability in real-time detection systems and on resource-constrained devices. Second, it is evaluated solely with static analysis, so it may still fail on obfuscated or dynamically loaded malware that escapes static scrutiny. In addition, because the approach depends heavily on clearly defined function relationships, it may not generalize well to apps with unusual architectures or heavy obfuscation. Another constraint is that the model’s outputs are not interpretable, a ubiquitous problem in deep learning that is especially important in security scenarios where decisions must be acted upon. Finally, the performance on benchmark datasets such as Drebin and MalNet-Tiny is impressive, but real-world generalization remains uncertain until the approach is tested on new, diverse, or adversarial datasets.
Overall, using hypergraph representations, for example to model system call sequences, is an interesting but challenging direction for future Android malware detection research; to be deployed effectively, however, such models would need to become more efficient, robust, and explainable.
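The difference between a pairwise edge and a hyperedge can be illustrated with a minimal Python sketch of one round of hypergraph message passing. It assumes scalar node features, hypothetical function names (`a` to `d`), and the common two-stage scheme (nodes to hyperedges, then hyperedges back to nodes) with plain averaging and no learned weights; it is not the cited model.

```python
from typing import Dict, List, Set

def hypergraph_propagate(features: Dict[str, float],
                         hyperedges: List[Set[str]]) -> Dict[str, float]:
    """One round of mean message passing over a hypergraph."""
    # Stage 1 (node -> hyperedge): each hyperedge averages its member functions.
    edge_msgs = [sum(features[v] for v in edge) / len(edge) for edge in hyperedges]
    # Stage 2 (hyperedge -> node): each function averages the hyperedges it belongs to.
    out: Dict[str, float] = {}
    for v in features:
        msgs = [m for edge, m in zip(hyperedges, edge_msgs) if v in edge]
        out[v] = sum(msgs) / len(msgs) if msgs else features[v]  # isolated nodes keep their value
    return out

# Hypothetical functions: {a, b, c} interact as one group, {c, d} as another.
updated = hypergraph_propagate({"a": 1.0, "b": 0.0, "c": 0.0, "d": 4.0},
                               [{"a", "b", "c"}, {"c", "d"}])
```

Because function `c` belongs to both hyperedges, it combines a three-function group and a two-function group in a single step; a pairwise graph can only approximate such group interactions, for instance by expanding each hyperedge into a clique of ordinary edges.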

The Graph Convolutional Network (GCN)-based method18 for Android malware detection makes promising progress by leveraging both structural information and semantic features, such as sensitive permissions and intermediate code, to detect malicious applications. By supplementing call graph information parsed from the Dex file with these additional behavioural features, the model gains a more comprehensive view of app behavior and can reliably distinguish benign from malicious app flows. The reported results (98.89% precision for class 1 and a 98.22% F1-score) indicate strong classification capability and a low false-positive rate, suggesting that the method could be deployed in real mobile ecosystems. Nevertheless, several limitations deserve consideration. First, the model relies only on static analysis (e.g., call graphs extracted from Dex files), which has limited capacity to capture dynamic behaviors such as reflection, code injection, or runtime obfuscation, tricks often used by sophisticated malware to avoid detection. This weakens its protection against zero-day threats and more sophisticated evasion techniques. Second, it requires complete and correct Dex files, which are not always available for heavily protected or encrypted apps. Moreover, combining multiple feature types (call graphs, opcodes, permissions) increases model complexity, and redundancy in the feature space can cause scalability and generalization issues across app categories.
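For reference, the widely used graph convolution layer of Kipf and Welling is shown below. The cited work's exact layer is not reproduced here, so this should be read as the generic form only. A is the adjacency matrix of the call graph (one node per method), I_N adds self-loops, H^(0) holds per-node input features (attaching permission or opcode indicators to nodes in this way is an assumption, not a detail confirmed by the source), W^(l) is a learned weight matrix, and σ is a nonlinearity such as ReLU.

```latex
H^{(l+1)} = \sigma\!\left(\tilde{D}^{-1/2}\,\tilde{A}\,\tilde{D}^{-1/2}\,H^{(l)}\,W^{(l)}\right),
\qquad
\tilde{A} = A + I_N,
\qquad
\tilde{D}_{ii} = \sum_{j} \tilde{A}_{ij}
```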

Another approach19 combines frequent subgraph mining with Graph Convolutional Networks (GCNs) to enhance Android malware detection using repeated structural patterns commonly found in malicious applications. This fusion lets the model highlight discriminative graph patterns, which may help reduce false positives on benign behaviors and support discrimination among malware families that share similar structures. Using frequent subgraphs as GCN inputs is a significant step forward, as it introduces domain-specific graph priors that facilitate learning and generalization. On closer examination, however, several serious constraints emerge. Its effectiveness depends heavily on the quality and completeness of the mined frequent subgraphs. If the training data are not representative or up to date, the model may miss new or rare malware behaviors that do not match previously encountered patterns, hindering its adaptability to zero-day threats. Furthermore, frequent subgraph mining can be computationally costly, especially for large datasets or very complex app graphs, which may compromise real-time scalability and efficiency. In addition, by mining only the most frequent malicious patterns, the model suppresses noise but may also overlook subtle or rare malicious behaviours hidden in infrequent graph structures, leading to a high false-negative rate. The approach also does not discuss adversarial robustness, that is, whether an adversary could perturb the structure or communication pattern of an app’s call graph to evade detection without changing its malicious functionality. Lastly, like other GCN-based models, it is not interpretable, which is a major concern in security applications, where analysts and developers need to understand the reasons behind a classification decision.
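A heavily simplified Python sketch of the mining step follows. Real frequent subgraph miners (for example gSpan) grow multi-edge patterns; here, as an assumption made for brevity, a pattern is a single labeled call edge (caller, callee), and a pattern counts as frequent if it occurs in at least a `min_support` fraction of apps. The function name and the API labels in the example are illustrative only.

```python
from collections import Counter
from typing import Iterable, List, Set, Tuple

Edge = Tuple[str, str]  # (caller label, callee label)

def frequent_edge_patterns(graphs: Iterable[Set[Edge]], min_support: float) -> List[Edge]:
    """Return single-edge patterns that occur in at least min_support of the graphs."""
    graphs = list(graphs)
    counts: Counter = Counter()
    for edges in graphs:
        counts.update(set(edges))  # support counts each pattern at most once per app
    threshold = min_support * len(graphs)
    return sorted(edge for edge, count in counts.items() if count >= threshold)

# Hypothetical call graphs of three apps.
apps = [
    {("onCreate", "sendTextMessage"), ("onCreate", "getDeviceId")},
    {("onCreate", "sendTextMessage")},
    {("onReceive", "getDeviceId")},
]
print(frequent_edge_patterns(apps, min_support=0.5))  # [('onCreate', 'sendTextMessage')]
```

Each surviving pattern could then serve as a binary input feature (present or absent in an app) for the downstream GCN. The example also shows the limitation discussed above: the rarer `getDeviceId` edges are discarded, even if they are malicious.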

The HertDroid framework20 is a strong contribution to advanced Android malware detection, as it tackles two major issues: semantic comprehension of applications and scalable, lightweight detection. Its use of heterogeneous graphs is a major advantage over commonly used homogeneous representations, because it can capture multifaceted relations among diverse entities, including APIs, permissions, and components. This design better reflects the complex structure of Android applications and may increase the expressiveness of discriminative properties. In addition, Transformer-based aggregation reduces the model’s dependence on domain-specific expert knowledge and improves its generalization across diverse app behaviors. Other factors are also at play, however. First, although the node filtering scheme reduces computational burden, it may drop subtle but crucial nodes that correspond to rare or stealthy malicious actions. The paper does not study in depth how this filtering affects detection capability, especially in the tail of the distribution, such as low-prevalence malware or obfuscated apps. Second, Transformers, while powerful, are resource-hungry and can be costly at inference time, especially on mobile edge devices, despite the authors’ description of the model as “lightweight”; performance and memory-footprint measurements are needed to support this claim. Another drawback is the limited interpretability of the model’s decisions, a problem shared by most heterogeneous-graph models and Transformer-style architectures, which can restrict trust and adoption in security-critical areas where actionable explanations are often required.

A study of a lightweight Transformer model21 for Android malware detection is especially interesting because it addresses a fundamental limitation of traditional deep learning techniques (CNNs and RNNs): capturing long-distance dependencies. By using “slow-fast” self-attention mechanisms in Transformers, specifically in fine-tuned models such as DistilBERT, the proposed approach achieves a favourable trade-off between high detection accuracy and low computational complexity. The reported 91.6% accuracy and AUC of 96.5%, together with low inference time and favourable memory consumption, support deploying such models on mobile or edge devices. In addition, the use of LIME for explanations improves the reliability and interpretability of the detection process, which security-critical applications require. Despite these advantages, several limitations and open questions remain. First, although API calls and permissions are efficient and widely used input features, they capture only static behaviour. Sophisticated malware using runtime evasion techniques (e.g., dynamic code loading, reflection) may escape detection based on static features, leading to false negatives. Second, although the authors show that DistilBERT outperforms other models, its pretraining on general-purpose corpora may introduce domain-alignment issues. Malware behaviour is highly domain-specific, and if the model is fine-tuned on a limited malware-labelled dataset, it may miss novel or obfuscated attack features. Third, the work evaluates models on a single dataset and does not address generalization across datasets, time periods, and malware families, which reduces confidence in the model’s robustness and adaptability to new threats.

Greedy seeded population initialization for Genetic Algorithms (GAs)22 is, in general, a significant improvement over conventional initialisation methods. By incorporating domain knowledge into the population seeding stage (starting from extreme cities and then applying a greedy, cost-based strategy to build routes), the approach ensures that the initial individuals have relatively high fitness. This speeds up convergence and reduces the computational redundancy caused by processing low-quality solutions. Local constant permutations further refine solutions early in the GA, making the overall algorithm efficient. Experimental comparisons with conventional seeding methods, including random, nearest-neighbor, regression, and least-squares seeding, demonstrate the superiority of the proposed method in error ratio, convergence rate, and running time. The strategy nonetheless has drawbacks. First, the approach is designed specifically for the Travelling Salesman Problem (TSP), a cost-minimization problem with inherent spatial and contextual structure for which greedy heuristics are especially suitable. Its applicability to general combinatorial or continuous optimization problems may be limited unless comparable problem-specific heuristics can be developed. Also, while greedy initialisation may speed convergence, it can reduce the diversity of the initial population and thus cause premature convergence or entrapment in local optima, a common problem in GAs when exploration is sacrificed for exploitation from the outset. Another limitation is that the computational cost of the greedy seeding itself is not examined in depth.
The approach is shown to decrease overall runtime; however, the time the seeding phase needs to generate each individual solution, which involves evaluating multiple insertion positions for each city, may become the limiting factor for larger instances. The absence of statistical significance testing or sensitivity analyses also weakens the generality of the findings.
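The seeding idea can be sketched in Python as follows. This is an illustration, not the cited authors' algorithm. It assumes that "extreme cities" means the two cities farthest apart, builds each tour by cheapest insertion (trying every insertion position for each city, the step whose cost is questioned above), and randomizes the insertion order per individual as one simple way to retain some population diversity. The local permutation step is omitted, and all function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def tour_length(tour: Sequence[int], cities: Sequence[Point]) -> float:
    """Length of the closed tour visiting cities in the given order."""
    return sum(math.dist(cities[tour[i]], cities[tour[(i + 1) % len(tour)]])
               for i in range(len(tour)))

def greedy_insertion_tour(cities: Sequence[Point], rng: random.Random) -> List[int]:
    n = len(cities)
    # Assumption: the "extreme cities" are the pair that are farthest apart.
    start = max(((a, b) for a in range(n) for b in range(a + 1, n)),
                key=lambda p: math.dist(cities[p[0]], cities[p[1]]))
    tour = list(start)
    remaining = [c for c in range(n) if c not in start]
    rng.shuffle(remaining)  # different insertion orders give different individuals
    for c in remaining:
        # Cheapest insertion: try every gap in the current tour, keep the smallest added cost.
        best_pos, best_delta = 1, math.inf
        for pos in range(len(tour)):
            a, b = cities[tour[pos]], cities[tour[(pos + 1) % len(tour)]]
            delta = math.dist(a, cities[c]) + math.dist(cities[c], b) - math.dist(a, b)
            if delta < best_delta:
                best_pos, best_delta = pos + 1, delta
        tour.insert(best_pos, c)
    return tour

def seeded_population(cities: Sequence[Point], size: int, seed: int = 0) -> List[List[int]]:
    """Initial GA population of greedily built tours (requires at least two cities)."""
    rng = random.Random(seed)
    return [greedy_insertion_tour(cities, rng) for _ in range(size)]
```

On four cities at the corners of a unit square, every seeded tour follows the perimeter (length 4), whereas a random permutation may cross the diagonals. This shows both the fitness gain and the diversity loss discussed above, since all individuals collapse to essentially the same route.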

The proposed HHGT23 is a novel hierarchical approach to two critical issues in heterogeneous information network (HIN) modelling: semantic dilution among neighborhoods at different distances, and incomplete aggregation over heterogeneous node types. Through its (k,t)-ring neighborhood abstraction, the framework captures both distance-based semantic variation and type-based heterogeneity, overcoming drawbacks that earlier Heterogeneous Graph Neural Networks (HGNNs) and Graph Transformer (GT) models ignored. The hierarchical combination of a Type-level Transformer and a Ring-level Transformer enables HHGT to learn rich layered representations in a structured and interpretable fashion. Its significant improvements in node clustering (up to a 29.25% ARI gain) illustrate the effectiveness of the design in capturing semantically meaningful features. Despite its potential, HHGT has several considerable limitations. First, the two-level Transformer architecture and fine-grained neighborhood partitioning make the model computationally expensive, which is problematic for large-scale HINs, real-time settings, or resource-limited environments. Second, although the (k,t)-ring neighborhood structure is intuitive, its construction depends on accurate distance and type information, which may be hard to obtain or unreliable in noisy or semi-structured graphs. The paper benchmarks HHGT mainly on standard academic datasets, and its results may not carry over to richer, more heterogeneous scenarios such as e-commerce or cybersecurity, where node semantics and links may be less clear or change over time.
Another crucial limitation is explainability: despite the overall modeling gains, hierarchical Transformer architectures remain largely black boxes, and the work does not discuss how practitioners can explain or use the insights extracted from the model, an essential requirement in sensitive domains such as recommendation systems or fraud detection.
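To clarify what a (k,t)-ring is, the Python sketch below partitions a target node's neighbourhood by hop distance k (via breadth-first search) and node type t. It covers only the partitioning; in HHGT each ring would then be encoded by the Type-level Transformer and the rings aggregated by the Ring-level Transformer, neither of which is shown. The function name, the toy academic network, and its node names are hypothetical.

```python
from collections import defaultdict, deque
from typing import Dict, List, Tuple

def kt_rings(adj: Dict[str, List[str]], node_type: Dict[str, str],
             target: str, max_hops: int) -> Dict[Tuple[int, str], List[str]]:
    """Group nodes within max_hops of target by (hop distance k, node type t)."""
    hops = {target: 0}
    queue = deque([target])
    while queue:  # breadth-first search yields shortest hop distances
        u = queue.popleft()
        if hops[u] == max_hops:
            continue
        for v in adj.get(u, []):
            if v not in hops:
                hops[v] = hops[u] + 1
                queue.append(v)
    rings: Dict[Tuple[int, str], List[str]] = defaultdict(list)
    for v, k in hops.items():
        if k > 0:  # the target itself belongs to no ring
            rings[(k, node_type[v])].append(v)
    return {key: sorted(members) for key, members in rings.items()}

# Toy heterogeneous network: authors (a*), papers (p*), and one venue (v1).
adj = {"a1": ["p1", "p2"], "p1": ["a1", "v1"], "p2": ["a1", "a2"], "v1": ["p1"], "a2": ["p2"]}
types = {"a1": "author", "a2": "author", "p1": "paper", "p2": "paper", "v1": "venue"}
rings = kt_rings(adj, types, "a1", max_hops=2)
```

For author `a1`, the papers form the (1, paper) ring, while the venue and the co-author `a2` land in separate rings at distance 2, so distance and type information are kept apart rather than mixed into one neighbourhood.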

The SHERLOCK architecture24 is a timely and substantial contribution toward detecting malware without relying on labeled data: it combines self-supervised learning with Vision Transformers (ViTs) and overcomes many limitations of supervised strategies. Its main achievement is the ability to learn from unlabeled data, which matters because the number of new, previously unseen, and obfuscated malware families continues to grow. By converting binary code into image representations, SHERLOCK can mine spatial and structural features that classic feature-based or static analysis approaches would otherwise miss. The model is effective for both coarse- and fine-grained malware detection on a large and diverse dataset of 1.2 million Android applications, achieving 97% accuracy for binary classification and competitive macro-F1 scores for multi-class classification. Several important considerations, however, deserve deeper exploration. First, the pixel-based binary representation, though creative, can omit behavioral or semantic information that is essential for distinguishing advanced or polymorphic malware. The model assumes that structural visual patterns suffice to represent functional behavior, which is not necessarily true, especially for highly obfuscated malware. Second, Vision Transformers, while powerful, are computationally expensive, which may limit scalability and real-time use in resource-limited settings such as mobile devices or edge systems. The paper does not report SHERLOCK’s inference time or resource usage, both of which are important for practical adoption.
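Binary-to-image conversion of this kind is commonly done by reading a file as a stream of bytes and laying the bytes out row by row as 8-bit grayscale pixels. A minimal Python sketch follows. The fixed row width, the zero padding, and the function name are assumptions, and SHERLOCK's actual preprocessing (which parts of the APK are used and how images are resized into ViT patches) is not reproduced here.

```python
import math
from typing import List

def bytes_to_grayscale(data: bytes, width: int = 256) -> List[List[int]]:
    """Lay out a byte stream as rows of `width` grayscale pixels (values 0-255)."""
    if width <= 0:
        raise ValueError("width must be positive")
    height = math.ceil(len(data) / width)
    padded = data + bytes(height * width - len(data))  # zero-pad the final row
    return [list(padded[r * width:(r + 1) * width]) for r in range(height)]
```

Because neighbouring pixels are neighbouring bytes, code regions with similar byte patterns produce similar textures, which is what the vision model learns from. For the same reason, semantic information that is not reflected in byte layout can be lost, as noted above.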

The NT-GNN model25 offers a promising direction for Android malware detection in emerging 5G IoT applications by combining GNNs with network traffic analysis. By constructing network traffic graphs that include node-level (individual flow) and edge-level (inter-flow) characteristics, NT-GNN can efficiently represent communication behaviour holistically, which is important for detecting advanced, insidious malware in mobile IoT landscapes. Its validation performance is excellent: the model achieves 97% accuracy on popular datasets such as CICAndMal2017 and AAGM, demonstrating strong empirical potential. Several important issues, however, need to be considered. First, although the model makes good use of graph structure, network traffic is becoming increasingly variable and encrypted as HTTPS and VPNs spread. The paper does not explain how NT-GNN handles encrypted or obfuscated traffic, which makes its application to real-world, privacy-preserving networks problematic. Second, scalability could be an issue in high-traffic 5G scenarios, because building and processing large, dynamic traffic graphs in real time, especially on resource-scarce edge devices or mobile endpoints, is computationally expensive. Another important limitation is the lack of evaluation in an adversarial setting. GNNs are known to be fragile under adversarial attacks, in which small changes to the graph structure can produce completely different outputs. Without rigorous robustness testing, it is unclear how well NT-GNN would withstand malware that actively perturbs its traffic patterns to hide itself.
Moreover, although the model is fairly accurate, the paper offers no deeper analysis of false positives and negatives, generalization across app categories, or real-time performance, all of which are essential for operational deployment in IoT systems. Finally, the model’s interpretability has yet to be studied. GNNs are inherently difficult to interpret, and the paper does not show how security analysts can trace and understand the rationale behind a malware decision, a requirement in forensic and compliance-driven environments.

Research gap

Despite remarkable advances in deep learning for Android malware detection, current methods do not adequately capture the semantic and structural richness of malicious applications. Most existing methods capture only static features, only dynamic behaviors, or only graph representations, which severely limits their ability to generalize, for example to structurally novel or zero-day malware. Furthermore, although recent works have studied graph neural networks (GNNs) or Transformer architectures in isolation, few integrate them through cross-modal fusion to make full use of heterogeneous information sources. In addition, most benchmarks lack large-scale, fine-grained static feature sets, and fusion mechanisms have not been rigorously tested across diverse, real-world malware samples. To close this gap, this paper proposes a holistic Graph-Informed Transformer Network (GIT-GuardNet) that combines static code characteristics, structural graph properties, and temporal behavior traces through a cross-attention learning mechanism, and systematically evaluates its generalization on a comprehensive static feature dataset of 215 attributes over 15,036 Android apps. This addresses the inability of current Android malware detection techniques to exploit contextual information from multiple modalities in a scalable manner.

Methodology

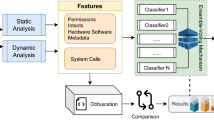

This section details the architecture and operational flow of the proposed Graph-Informed Transformer Network (GIT-GuardNet), developed for accurate and context-aware Android malware detection. The framework combines static code features, call graph structures, and behavior traces using a multi-modal deep learning architecture built around Transformer and Graph Neural Network (GNN) components. Figure 1 shows the step-by-step process of the proposed methodology. It proceeds in several stages: feature extraction and preprocessing, modality-specific encoding (static, graph, temporal), cross-modal fusion, and final classification.

Overall architecture of the proposed GIT-GuardNet model. The model integrates three complementary views of Android applications: (1) static code features encoded via a Transformer, (2) structural relationships captured through a Graph Attention Network (GAT), and (3) simulated behavior sequences modeled using a temporal Transformer. The outputs are fused via a cross-attention mechanism, followed by a classification head to distinguish between benign and malware samples.

Dataset overview and preprocessing

We conduct experiments on a large dataset of 15,036 Android apps. It consists of 5,560 malware samples obtained from the Drebin dataset and 9,476 benign apps. Each sample is described by a 215-dimensional feature vector derived from static code analysis. The features capture semantic content (e.g., permissions, API call patterns, intent filters, hardware usage) and syntactic content (e.g., opcode signatures). All categorical columns are transformed into binary vectors so that their scale is compatible with the other columns. All features are then assembled into a homogeneous input representation before being fed to the model.
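The categorical-to-binary step can be sketched as plain one-hot encoding. This is a minimal sketch under assumptions: the paper does not name its encoder, so one indicator column per observed category is assumed. The column name `install_location` and the helper `one_hot_columns` are hypothetical illustrations, not fields of the Drebin-derived feature set.

```python
from typing import Dict, List, Sequence

def one_hot_columns(rows: Sequence[Dict[str, str]], columns: Sequence[str]) -> List[List[int]]:
    """One-hot encode the given categorical columns into 0/1 vectors.

    Categories are collected per column and sorted, so the resulting
    column layout is deterministic across runs.
    """
    vocab = {c: sorted({row[c] for row in rows}) for c in columns}
    encoded: List[List[int]] = []
    for row in rows:
        vec: List[int] = []
        for c in columns:
            # One indicator per observed category of column c.
            vec.extend(1 if row[c] == value else 0 for value in vocab[c])
        encoded.append(vec)
    return encoded

# Hypothetical categorical column, for illustration only.
rows = [{"install_location": "auto"}, {"install_location": "internal"}, {"install_location": "auto"}]
print(one_hot_columns(rows, ["install_location"]))  # [[1, 0], [0, 1], [1, 0]]
```

Sorting the category vocabulary keeps the column layout identical across runs. This matters because the same encoding must be reused for the validation and test splits.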

Additionally, two enhanced modalities are derived for deeper behavioral and structural modeling:

-

Call graphs: These represent the calling relationships between methods and components in each application, forming a directed graph. Nodes correspond to functions or app components, while edges indicate call dependencies.

-

Behavior sequences: Although dynamic traces are not directly available, we simulate them by ordering static events (e.g., permissions or API calls) based on control-flow heuristics, thus approximating an execution pattern.
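The two derived modalities can be illustrated with a small sketch. The paper does not specify its control-flow heuristics, so the ordering below is an assumption. It performs a breadth-first walk over the call graph from a hypothetical entry point (`onCreate`) and emits each method's static events as the method is visited. The method names, event labels, and the helpers `build_call_graph` and `simulate_sequence` are all hypothetical.

```python
from collections import deque
from typing import Dict, Iterable, List, Set, Tuple

def build_call_graph(edges: Iterable[Tuple[str, str]]) -> Dict[str, Set[str]]:
    """Directed adjacency list mapping each caller to the set of methods it calls."""
    graph: Dict[str, Set[str]] = {}
    for caller, callee in edges:
        graph.setdefault(caller, set()).add(callee)
        graph.setdefault(callee, set())  # keep leaf methods as nodes too
    return graph

def simulate_sequence(graph: Dict[str, Set[str]], events: Dict[str, List[str]], entry: str) -> List[str]:
    """Hypothetical control-flow heuristic: visit methods breadth-first from
    `entry` and emit each method's static events in visiting order. The
    `seen` set keeps recursive calls (cycles) from looping forever."""
    sequence: List[str] = []
    seen = {entry}
    queue = deque([entry])
    while queue:
        method = queue.popleft()
        sequence.extend(events.get(method, []))
        for callee in sorted(graph.get(method, set())):  # sorted for determinism
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return sequence

edges = [("onCreate", "sendSms"), ("onCreate", "readContacts"), ("readContacts", "onCreate")]
events = {
    "onCreate": ["INTERNET"],
    "readContacts": ["READ_CONTACTS", "ContentResolver.query"],
    "sendSms": ["SEND_SMS", "SmsManager.sendTextMessage"],
}
graph = build_call_graph(edges)
print(simulate_sequence(graph, events, "onCreate"))
# ['INTERNET', 'READ_CONTACTS', 'ContentResolver.query', 'SEND_SMS', 'SmsManager.sendTextMessage']
```

The same adjacency structure can feed the graph encoder, and the emitted event list serves as the simulated behavior sequence.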

Static feature encoding

To learn robust representations of the static feature vectors, we adopt a Transformer-based encoder. The 215-dimensional binary feature vector of each app is embedded into a continuous space with a trainable embedding layer. The resulting sequence of embeddings is passed through several Transformer blocks. Each block is composed of a multi-head self-attention layer and a position-wise feed-forward network. This component captures long-range dependencies between different feature types, allowing the model to identify complex feature interactions indicative of malicious behaviour.
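The core operation of the static encoder is scaled dot-product self-attention over feature embeddings. The sketch below is a simplified, single-head illustration under two assumptions that the paper does not state. First, the binary vector is turned into a token sequence by looking up one embedding per active feature. Second, the query, key, and value projections are omitted (identity). The helper names and the random embedding table are hypothetical.

```python
import math
import random
from typing import List, Sequence

Vector = List[float]

def softmax(scores: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def embed_active_features(x: Sequence[int], table: Sequence[Vector]) -> List[Vector]:
    """Hypothetical tokenization: one embedding row per feature whose bit is set."""
    return [list(table[i]) for i, bit in enumerate(x) if bit == 1]

def self_attention(tokens: List[Vector]) -> List[Vector]:
    """Single-head scaled dot-product attention with Q = K = V = tokens."""
    d = len(tokens[0])
    outputs = []
    for query in tokens:
        scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in tokens]
        weights = softmax(scores)  # attention weights over all tokens, summing to 1
        outputs.append([sum(w * value[j] for w, value in zip(weights, tokens)) for j in range(d)])
    return outputs

random.seed(0)
NUM_FEATURES, DIM = 215, 8
table = [[random.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(NUM_FEATURES)]  # untrained stand-in
x = [0] * NUM_FEATURES
for i in (3, 17, 120):  # hypothetical active features
    x[i] = 1
contextual = self_attention(embed_active_features(x, table))
print(len(contextual), len(contextual[0]))  # 3 8
```

A full encoder would add learned projections, multiple heads, residual connections, layer normalization, and the feed-forward sublayer. In PyTorch these are bundled in `torch.nn.TransformerEncoderLayer`.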

Graph representation learning

To capture structural relationships among app components, we model each application as a graph \(G = (V, E)\), where V denotes nodes corresponding to methods/components and E represents static call dependencies. We apply a Graph Attention Network (GAT) to learn discriminative node representations by attending to relevant neighbors:

\[ h_i' = \sigma\left( \sum_{j \in \mathcal{N}(i)} \alpha_{ij} W h_j \right) \]

Here, \(h_j\) is the representation of node j, \(\mathcal{N}(i)\) is the neighborhood of node i, \(\alpha _{ij}\) are the attention weights learned via a softmax function, W is a trainable projection matrix, and \(\sigma \) is a non-linear activation. Two GAT layers are stacked to refine the graph embeddings, followed by a global mean pooling operation to obtain a fixed-length graph vector per app.
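A single-head GAT layer can be sketched in plain Python, followed by global mean pooling. The sketch uses the standard GAT formulation: scores \(e_{ij} = \text{LeakyReLU}(a^\top [W h_i \,\|\, W h_j])\), softmax-normalized over each node's neighbors plus a self-loop, then \(h_i' = \sigma(\sum_j \alpha_{ij} W h_j)\). This is an illustrative sketch, not the authors' implementation. The following are assumptions: ELU as \(\sigma\), callees as neighbors, the self-loop, and the toy weights.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]
Matrix = List[List[float]]

def matvec(W: Matrix, h: Vector) -> Vector:
    return [sum(w * x for w, x in zip(row, h)) for row in W]

def leaky_relu(x: float, slope: float = 0.2) -> float:
    return x if x > 0 else slope * x

def elu(x: float) -> float:
    return x if x > 0 else math.exp(x) - 1.0

def gat_layer(h: Dict[str, Vector], neighbors: Dict[str, Sequence[str]],
              W: Matrix, a: Vector) -> Dict[str, Vector]:
    """One single-head GAT layer; `a` has length 2 * len(W)."""
    z = {node: matvec(W, vec) for node, vec in h.items()}  # z_i = W h_i
    out: Dict[str, Vector] = {}
    for i in h:
        nbrs = [i] + [j for j in neighbors.get(i, ()) if j != i]  # add a self-loop
        # e_ij = LeakyReLU(a^T [z_i || z_j]); list + list concatenates the vectors
        scores = [leaky_relu(sum(ak * zk for ak, zk in zip(a, z[i] + z[j]))) for j in nbrs]
        m = max(scores)
        exps = [math.exp(s - m) for s in scores]
        total = sum(exps)
        alpha = [e / total for e in exps]  # softmax over the neighborhood
        out[i] = [elu(sum(al * z[j][k] for al, j in zip(alpha, nbrs))) for k in range(len(W))]
    return out

def mean_pool(h: Dict[str, Vector]) -> Vector:
    """Global mean pooling: one fixed-length vector per graph."""
    vecs = list(h.values())
    return [sum(col) / len(vecs) for col in zip(*vecs)]

h = {"A": [1.0, 0.0], "B": [0.0, 1.0]}
neighbors = {"A": ["B"]}          # A calls B
W = [[1.0, 0.0], [0.0, 1.0]]      # identity projection (toy value)
a = [0.0, 0.0, 0.0, 0.0]          # zero scores give uniform attention (toy value)
layer1 = gat_layer(h, neighbors, W, a)
graph_vector = mean_pool(gat_layer(layer1, neighbors, W, a))  # two stacked layers
```

With zero attention parameters, node A averages itself and its callee B, while B (which has no callees) keeps its own representation. Trained parameters would instead weight informative call dependencies more heavily.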

Temporal behavior modeling

To emulate dynamic analysis, we encode the ordered static feature events (simulated behavior traces) using a Transformer encoder. This enables the modeling of temporal relationships, for instance, sequences of suspicious API calls or permission requests. Figure 2 shows the architecture of the proposed model. Each event is embedded and fed through a multi-layer Transformer to learn sequential patterns. Let \(S \in \mathbb {R}^{T \times d}\) be the input sequence of length T; the output \(Z = \text {Transformer}(S)\) is pooled to form a temporal representation.
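Self-attention by itself ignores token order, so a temporal Transformer needs explicit position information for the simulated behavior sequence \(S \in \mathbb{R}^{T \times d}\). The paper does not say which positional scheme it uses. The sketch below therefore assumes the sinusoidal encoding of the original Transformer and mean pooling over time as the final pooling step. The Transformer layers themselves are omitted, and the function names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]

def sinusoidal_positions(T: int, d: int) -> Matrix:
    """PE[pos][2k] = sin(pos / 10000^(2k/d)), PE[pos][2k+1] = cos(pos / 10000^(2k/d))."""
    pe: Matrix = []
    for pos in range(T):
        row = []
        for i in range(d):
            angle = pos / (10000 ** (2 * (i // 2) / d))
            row.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
        pe.append(row)
    return pe

def add_positions(S: Matrix) -> Matrix:
    """Add positional encodings to each event embedding (row) of S."""
    pe = sinusoidal_positions(len(S), len(S[0]))
    return [[s + p for s, p in zip(s_row, p_row)] for s_row, p_row in zip(S, pe)]

def mean_pool_time(Z: Matrix) -> List[float]:
    """Average the encoder outputs over the T time steps."""
    return [sum(col) / len(Z) for col in zip(*Z)]

S = [[0.0, 0.0, 0.0, 0.0], [0.0, 0.0, 0.0, 0.0]]  # two toy event embeddings, d = 4
Z = add_positions(S)  # stand-in for Transformer(S); encoder layers omitted
h_t = mean_pool_time(Z)
```

Because the encodings differ per position, two identical events at different time steps get distinct inputs. This is what lets the encoder distinguish, for example, a permission request before a network call from one after it.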

Multi-modal cross-attentive fusion

To integrate the three learned views (static, graph, and temporal), we propose a cross-attention-based fusion mechanism. Let \(h_s\), \(h_g\), and \(h_t\) be the static, graph, and temporal feature vectors, respectively. These are projected to a common dimensional space and passed through a multi-head attention module:

Each \(\text {Attn}_i\) attends to one modality using another as a query, allowing inter-view context propagation. The final fused representation \(H_f\) is passed through a fully connected classifier with softmax activation to predict the malware or benign class.
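The inter-view attention can be sketched as follows. The paper does not specify which modality queries which, the number of heads, or the projections. This single-head sketch therefore makes several assumptions. Each modality vector, already projected to a common dimension, acts as a query over the other two. Keys double as values, and there are no learned projections. The attended outputs are concatenated in a fixed order to form \(H_f\). The function names and toy vectors are hypothetical.

```python
import math
from typing import Dict, List, Sequence

Vector = List[float]

def attend(query: Vector, keys: Sequence[Vector]) -> Vector:
    """Scaled dot-product attention of one query over `keys` (keys double as values)."""
    d = len(query)
    scores = [sum(q * k for q, k in zip(query, key)) / math.sqrt(d) for key in keys]
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    return [sum(w * key[j] for w, key in zip(weights, keys)) for j in range(d)]

def cross_attention_fusion(views: Dict[str, Vector]) -> Vector:
    """Each modality queries the other modalities; outputs are concatenated
    in alphabetical order of modality name to form H_f."""
    fused: Vector = []
    for name in sorted(views):
        others = [vec for other, vec in sorted(views.items()) if other != name]
        fused.extend(attend(views[name], others))
    return fused

views = {
    "static": [0.2, 0.1, 0.0, 0.7],    # h_s after projection (toy values)
    "graph": [0.5, 0.5, 0.1, 0.0],     # h_g
    "temporal": [0.0, 0.3, 0.9, 0.1],  # h_t
}
H_f = cross_attention_fusion(views)
print(len(H_f))  # 12 = 3 modalities x 4 dims
```

Unlike plain concatenation, each block of \(H_f\) here depends on how well one view aligns with the others. This is the inter-view context propagation the text describes.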

Training and optimization

The model is trained using cross-entropy loss with class balancing to address the mild skew in the dataset:

\[ \mathcal{L} = -\sum_{i=1}^{C} w_i \, y_i \log \hat{y}_i \]

where C is the number of classes (malware, benign), \(w_i\) is the class weight, \(y_i\) is the true label, and \(\hat{y}_i\) is the predicted probability. We use the AdamW optimizer with a learning rate of \(2 \times 10^{-5}\) and apply dropout regularization to prevent overfitting. Training runs for 10 epochs with a batch size of 16 on a GPU-enabled system, and early stopping is applied based on the validation F1-score.
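Early stopping on the validation F1-score can be sketched independently of the framework. The patience of 3 epochs and the `min_delta` threshold below are hypothetical, since the paper does not report them. In a PyTorch training loop, the optimizer itself would be `torch.optim.AdamW(model.parameters(), lr=2e-5)`, and the function below would consume the per-epoch validation F1 values.

```python
from typing import Iterable, Tuple

def early_stopping(val_f1_per_epoch: Iterable[float], patience: int = 3,
                   min_delta: float = 0.0) -> Tuple[int, float]:
    """Return (best_epoch, best_f1), 1-indexed, stopping once the validation F1
    has failed to improve by more than `min_delta` for `patience` consecutive epochs."""
    best_epoch, best_f1, stale = 0, float("-inf"), 0
    for epoch, f1 in enumerate(val_f1_per_epoch, start=1):
        if f1 > best_f1 + min_delta:
            best_epoch, best_f1, stale = epoch, f1, 0  # new best: checkpoint here
        else:
            stale += 1
            if stale >= patience:
                break  # later epochs are never evaluated
    return best_epoch, best_f1

print(early_stopping([0.90, 0.95, 0.97, 0.96, 0.97, 0.965, 0.99]))  # (3, 0.97)
```

In this toy run, training stops after epoch 6, so the later improvement at epoch 7 is never seen. This is the usual trade-off when choosing the patience value.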

The proposed GIT-GuardNet model integrates static features, call graph structures, and temporal behavioral patterns through three specialized encoders: a Transformer-based Static Encoder, a Graph Attention Network (GAT), and a Temporal Transformer. These modality-specific representations are fused via a Cross-Attention Fusion module, followed by a neural classifier for final malware prediction.

Loss function and class imbalance handling

Android malware datasets are class-imbalanced: far fewer samples are available for some malware families than for the predominant ones. If this imbalance is left unaddressed, learning may be skewed toward the majority classes, and detection performance will decrease for rare but important malware families. To alleviate this problem, we use a weighted cross-entropy loss, which assigns more weight to minority classes during training.

The weighted cross-entropy loss is defined as:

\[ \mathcal{L} = -\sum_{i=1}^{C} w_i \, y_i \log \hat{y}_i \]

where C denotes the number of classes, \(y_i\) is the ground truth label for class i, \(\hat{y}_i\) is the predicted probability for class i, and \(w_i\) is the class-specific weight.

To ensure fairness and reproducibility, the weights \(w_i\) are computed directly from the dataset distribution using the inverse frequency method:

\[ w_i = \frac{N}{C \cdot n_i} \]

where N is the total number of samples in the dataset, \(n_i\) is the number of samples belonging to class i, and C is the number of classes.
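As a worked example of inverse-frequency weighting, the sketch below applies \(w_i = N / (C \cdot n_i)\) to the class counts reported for this dataset. There are 9,476 benign and 5,560 malware apps, so N = 15,036 and C = 2. The sketch also computes the per-sample weighted cross-entropy for a one-hot label. The helper names are hypothetical; the formula is the standard inverse-frequency ("balanced") weighting.

```python
import math
from collections import Counter
from typing import Dict, Sequence

def inverse_frequency_weights(labels: Sequence[str]) -> Dict[str, float]:
    """w_i = N / (C * n_i) for every class i present in `labels`."""
    counts = Counter(labels)
    n_total, n_classes = len(labels), len(counts)
    return {cls: n_total / (n_classes * n) for cls, n in counts.items()}

def weighted_cross_entropy(probs: Dict[str, float], true_cls: str,
                           weights: Dict[str, float]) -> float:
    """Per-sample -sum_i w_i y_i log(p_i); with a one-hot y only the true-class term remains."""
    return -weights[true_cls] * math.log(probs[true_cls])

labels = ["benign"] * 9476 + ["malware"] * 5560
w = inverse_frequency_weights(labels)
print(round(w["benign"], 4), round(w["malware"], 4))  # 0.7934 1.3522
```

The minority malware class thus receives about 1.70 times the weight of the benign class (9,476 / 5,560). The weights are scaled so that \(\sum_i n_i w_i = N\). In PyTorch, `nn.CrossEntropyLoss(weight=...)` with mean reduction divides by the summed weights of the batch targets rather than by the batch size. Absolute loss values therefore differ from a plain per-sample average.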

This design ensures that misclassifying samples from smaller classes is penalized more heavily, so the model pays more attention to the minority classes. Unlike hand-tuned weights, this approach is entirely data-driven, objective, and reproducible. In our experiments, it substantially improves recall and F1-score for the underrepresented malware families, supporting its effectiveness in handling class imbalance.

Applied models and comparison models

In addition to the qualitative results, we performed a comprehensive comparative study to thoroughly analyze the efficacy of GIT-GuardNet. In this section, we describe the proposed model and then justify the choice of comparison models based on their prevalence in the Android malware detection literature.

Proposed model: GIT-GuardNet

Our proposed GIT-GuardNet architecture integrates three modalities using dedicated encoders to produce discriminative and context-aware embeddings:

-

Let \(X \in \mathbb {R}^{n \times d}\) be the static feature matrix, processed via a Transformer encoder to yield \(h_s = \text {Transformer}(X)\).

-

Let \(G = (V, E)\) be the call graph, where node features are processed through Graph Attention Network to obtain \(h_g = \text {GAT}(G)\).

-

Let \(S \in \mathbb {R}^{T \times d}\) be the sequence of T behavior events, encoded as \(h_t = \text {Transformer}(S)\).

These vectors \(h_s\), \(h_g\), and \(h_t\) are projected to a shared embedding space, then fused via a cross-attention mechanism:

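Finally, the joint representation \(H_f\) is passed through a fully connected layer and softmax activation:

\[ \hat{y} = \text{softmax}(W_c H_f + b_c) \]

where \(W_c\) and \(b_c\) are the weights and bias of the classification layer and \(\hat{y}\) is the vector of predicted class probabilities.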

This framework ensures a holistic understanding of static, structural, and temporal patterns, improving detection accuracy for both known and evasive Android malware. Algorithm 1 shows the proposed procedure.
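The final classification step, a fully connected layer followed by softmax over the two classes, can be sketched as below. The weight matrix `W_c`, bias `b_c`, class order, and toy values are hypothetical. Subtracting the maximum logit inside the softmax is a standard trick for numerical stability.

```python
import math
from typing import List, Sequence, Tuple

def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax (subtracting the max does not change the result)."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def classify(H_f: Sequence[float], W_c: Sequence[Sequence[float]], b_c: Sequence[float],
             classes: Tuple[str, ...] = ("benign", "malware")) -> Tuple[str, List[float]]:
    """Fully connected layer (one row of W_c per class) followed by softmax."""
    logits = [sum(w * h for w, h in zip(row, H_f)) + b for row, b in zip(W_c, b_c)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=lambda k: probs[k])
    return classes[best], probs

label, probs = classify([0.0, 2.0], [[1.0, 0.0], [0.0, 1.0]], [0.0, 0.0])
print(label)  # malware
```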

Comparison models

To validate the superiority of GIT-GuardNet, we compare it against the following baseline models, grouped into three categories:

Traditional machine learning models

These models are widely used for Android malware detection due to their interpretability and fast inference.

-

Random forest (RF): an ensemble method that trains26 and combines multiple decision trees and outputs the majority vote of their predictions. It handles high-dimensional feature spaces well and is relatively resistant to overfitting, which makes it an appropriate technique for processing large-scale malware corpora.

-

Support vector machine (SVM): searches for the hyperplane27 that separates malware from benign applications with the maximum margin between positive and negative instances. It works well in high-dimensional spaces and with sparse feature vectors.

-

XGBoost: an efficient and scalable gradient-boosted decision28 tree algorithm. It builds additive models sequentially in a forward stagewise manner and has been a top performer in several malware detection competitions and benchmarks.

Deep learning models (Single modality)

These models utilize deep neural architectures29 but process only a single type of input modality, typically static feature vectors.

-

Multi-Layer Perceptron (MLP): a simple feed-forward neural network with multiple dense30 layers and nonlinear activations. It can capture complex mappings between static features and class labels, but it cannot model spatial or temporal correlations.

-

CNN-1D: applies 1D convolutions to the feature vector31 to capture local patterns. This lets the model capture interactions between neighbouring features, but not global dependencies.

-

Transformer (Static Only): applies the self-attention32 mechanism to the static feature embeddings to capture long-range dependencies. Though powerful, it operates only on static features and does not take graph structure or sequential behavior into account.

Hybrid and graph-based models

These models exploit structural or sequential cues33 and serve as strong baselines for comparison against our multi-modal approach.

-

Graph Convolutional Network (GCN): applies spectral graph convolutions34 to aggregate neighboring node features over the app’s call graph. Its graph-based structure can represent relationships among app components, but it lacks attention-based weighting of neighbors.

-

Graph Attention Network (GAT): adds attention weights35 over neighbouring nodes in the call graph, allowing the model to learn which dependencies are more informative for classification. It extends GCN with adaptive attention.

-

Long Short-Term Memory (LSTM): networks applied to action sequences36 generated from simulated API calls. LSTMs model sequential patterns well but struggle with long-range dependencies and lack multi-head attention.

-

DroidFusion: a multilevel ensemble approach, trained on static feature37 vectors, that integrates feature-level and decision-level fusion. It is a strong benchmark for Android malware detection.

All baseline models were trained and evaluated on the same dataset as GIT-GuardNet, using identical train-validation-test splits to ensure consistency. For deep learning models, the same preprocessing steps and training configuration were applied (e.g., learning rate, optimizer, dropout, and batch size). For traditional models, hyperparameters were tuned using grid search on the validation set. Performance was compared using key classification metrics: Accuracy, F1-Score, AUC-ROC, Precision, and Recall. Additionally, training time, inference latency, and model complexity (parameter count) were recorded to analyze deployment feasibility. This selection ensures that GIT-GuardNet is compared against both classical and state-of-the-art methods. These include shallow learners as well as deep graph and sequence encoders on the same malware detection task. We also include DroidFusion to anchor the comparison to a strong peer-reviewed baseline designed for Android malware classification on the same dataset.
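Using identical splits for every model is easiest to guarantee with a seeded, stratified split that is computed once and reused. The sketch below assumes the 70/15/15 ratios given in the experimental setup and a fixed seed of 42. The seed is hypothetical; the paper reports neither its seed nor whether the split was stratified. The helper `stratified_split` is likewise illustrative.

```python
import random
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple

def stratified_split(labels: Sequence[int], fractions: Tuple[float, float, float] = (0.70, 0.15, 0.15),
                     seed: int = 42) -> Tuple[List[int], List[int], List[int]]:
    """Return (train, val, test) index lists that preserve class proportions.
    A fixed seed makes the split reproducible, so every model sees the same one."""
    by_class: Dict[int, List[int]] = defaultdict(list)
    for idx, y in enumerate(labels):
        by_class[y].append(idx)
    rng = random.Random(seed)
    train: List[int] = []
    val: List[int] = []
    test: List[int] = []
    for y in sorted(by_class):
        idxs = by_class[y][:]
        rng.shuffle(idxs)
        n_train = int(round(fractions[0] * len(idxs)))
        n_val = int(round(fractions[1] * len(idxs)))
        train += idxs[:n_train]
        val += idxs[n_train:n_train + n_val]
        test += idxs[n_train + n_val:]  # remainder goes to test
    return train, val, test

labels = [0] * 9476 + [1] * 5560  # 0 = benign, 1 = malware (counts from the dataset description)
train, val, test = stratified_split(labels)
print(len(train), len(val), len(test))  # 10525 2255 2256
```

Persisting the three index lists to disk is a simple way to reuse exactly the same split across GIT-GuardNet and every baseline.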

Results and evaluation

This section presents a comprehensive evaluation of the proposed GIT-GuardNet framework in comparison to multiple baseline models. We evaluate model performance in terms of key classification metrics and analyze how effective each component of our approach is in ablation settings. We also assess generalization capabilities, robustness to adversarial obfuscation, and real-time deployment feasibility.

Experimental setup

All models were implemented in PyTorch and trained on a workstation with an NVIDIA RTX 3090 GPU, 128 GB RAM, and an Intel Xeon CPU. The dataset was split into 70% training, 15% validation, and 15% testing. Early stopping was based on the validation F1-score. We report the following evaluation metrics:

-

Accuracy: Overall proportion of correctly classified samples.

-

Precision: Proportion of predicted malware that is truly malware.

-

Recall: Proportion of actual malware correctly detected.

-

F1-Score: Harmonic mean of precision and recall.

-

AUC-ROC: Area under the receiver operating characteristic curve.
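The threshold-based metrics above translate directly into code. The sketch below computes them from hard predictions, with malware as the positive class. It also reports the false positive and false negative rates discussed in the confusion-matrix analysis. It is a plain-Python illustration, not the evaluation script used in the paper. AUC-ROC requires scores rather than hard labels and is therefore omitted here.

```python
from typing import Dict, Sequence

def binary_metrics(y_true: Sequence[int], y_pred: Sequence[int]) -> Dict[str, float]:
    """Metrics with malware as the positive class (1) and benign as 0."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # benign flagged as malware
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # malware missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / len(pairs),
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "fpr": fp / (fp + tn) if fp + tn else 0.0,
        "fnr": fn / (fn + tp) if fn + tp else 0.0,
    }

m = binary_metrics([1, 1, 1, 0, 0, 0, 0, 1], [1, 1, 0, 0, 0, 1, 0, 1])
print(m)
```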

Training convergence analysis

To verify that the training duration was sufficient given GIT-GuardNet’s architectural complexity, we performed an in-depth convergence analysis. Although the main results are reported after 10 training epochs, we also ran additional trials of 30 epochs. As Fig. 3 shows, GIT-GuardNet converges quickly, with training and validation losses plateauing between the 8th and 10th epochs. Further training yields little additional gain (less than 0.2%) and does not significantly affect the final evaluation metrics. This suggests that 10 epochs are sufficient for convergence while avoiding unnecessary training time. We attribute the fast convergence to three factors: the complementary multi-modal features, the cross-attention fusion mechanism’s enhancement of inter-modal interaction, and the stabilizing effect of the weighted cross-entropy loss in handling class imbalance. Taken together, these findings support the adequacy of our training procedure and the validity of the reported performance measures.

Overall performance comparison

GIT-GuardNet outperforms all baseline models across all key evaluation metrics, including Accuracy, Precision, Recall, F1-Score, and AUC-ROC. Figure 4 shows a bar-chart performance comparison, and Table 3 summarizes the performance of GIT-GuardNet and the baseline models on the test set. Traditional machine learning models such as Random Forest, SVM, and XGBoost achieve moderate performance. Deep learning approaches such as MLP, LSTM, CNN-1D, and Transformer show improved results owing to their stronger feature representation capabilities. Graph-based models such as GCN and GAT perform better than purely sequential or convolutional models by leveraging structural relationships in the data. GIT-GuardNet achieves an accuracy of 99.85% and an AUC-ROC of 99.94%, outperforming strong baselines such as DroidFusion and GAT. We attribute this performance largely to the synergistic combination of static, temporal, and graph-based representations. The cross-attentive fusion mechanism further helps by capturing richer contextual dependencies for robust Android malware detection.

GIT-GuardNet outperforms all baselines across all metrics. It surpasses even the strong benchmark DroidFusion, demonstrating the efficacy of multi-modal fusion and cross-attention mechanisms in capturing rich malware semantics.

Performance Analysis of GIT-GuardNet Compared to Baseline Models in terms of Accuracy, F1-Score, and AUC-ROC. The visualization highlights GIT-GuardNet’s superior and balanced performance across all three critical evaluation metrics, distinctly outperforming both traditional machine learning methods and deep learning-based baselines.

Ablation study

To assess the contribution of each component in GIT-GuardNet, we conducted an ablation study by selectively removing modules and observing the resulting performance drop. The results, presented in Table 2, demonstrate the critical role of each architectural component in the overall performance of GIT-GuardNet. Removing the graph module led to a noticeable degradation, with accuracy dropping from 99.85% to 98.94%. This highlights the importance of capturing structural relationships among entities such as APIs, permissions, and system calls. Eliminating the temporal module resulted in a larger decline, to 98.61%, showing that temporal dynamics are essential for modeling the sequential nature of malware behavior. The largest drop among single-component removals occurred when the cross-attention fusion mechanism was removed, with accuracy falling to 97.73%. This confirms the effectiveness of the proposed fusion scheme in combining static, temporal, and graph features complementarily. Finally, the static-only variant based on a vanilla Transformer achieved 96.98% accuracy. This indicates that while static features alone offer some predictive power, integrating temporal and relational information is crucial for comprehensive and precise malware detection. Overall, the ablation results validate the design choices of GIT-GuardNet. They confirm that the interplay of all three components (graph-based reasoning, temporal modeling, and cross-attentive fusion) is vital for achieving state-of-the-art detection performance.

Removal of any module results in a noticeable decline, particularly when the cross-attention mechanism is disabled. This highlights the synergistic effect of combining static, structural, and sequential features through interaction-aware fusion.

ROC curve and AUC comparison

Figure 5 illustrates the ROC curves of GIT-GuardNet and leading baseline models, plotting the true positive rate (TPR) against the false positive rate (FPR) across threshold values. GIT-GuardNet achieves an Area Under the Curve (AUC) of 99.94, the highest of all methods. A curve that rises almost vertically toward the top-left corner indicates that the model separates malware from benign applications with low false-positive and false-negative rates. In contrast, traditional models such as Random Forest and SVM exhibit lower AUC scores, indicating reduced detection sensitivity. Even advanced baselines such as GAT and DroidFusion fall short of GIT-GuardNet, underscoring the advantage of its integrated multi-modal learning approach. The ROC profile of GIT-GuardNet supports its reliability in real-world malware detection scenarios where both high sensitivity and low false alarm rates are critical.
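An ROC curve and its AUC can be computed from model scores by sweeping the decision threshold from high to low. The sketch below is a generic illustration with toy labels and scores, not the paper's plotting code. It groups tied scores into a single step and integrates with the trapezoidal rule. The result is a fraction in [0, 1], which corresponds to the paper's AUC values after multiplying by 100.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]

def roc_points(y_true: Sequence[int], scores: Sequence[float]) -> List[Point]:
    """Return (FPR, TPR) points obtained by lowering the decision threshold
    through every distinct score, starting from (0, 0)."""
    n_pos = sum(y_true)
    n_neg = len(y_true) - n_pos
    ranked = sorted(zip(scores, y_true), key=lambda pair: pair[0], reverse=True)
    points: List[Point] = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / n_neg, tp / n_pos))
    return points

def trapezoidal_auc(points: Sequence[Point]) -> float:
    """Area under the piecewise-linear ROC curve."""
    return sum((x1 - x0) * (y0 + y1) / 2.0 for (x0, y0), (x1, y1) in zip(points, points[1:]))

pts = roc_points([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.6])  # toy labels (1 = malware) and scores
print(trapezoidal_auc(pts))
```

For these toy scores, three of the four (malware, benign) pairs are ranked correctly, so the AUC equals the fraction 3/4. This illustrates the usual reading of AUC as the probability that a random malware sample scores above a random benign one.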

Model complexity and inference time

Table 4 compares the model complexity and average inference latency of GIT-GuardNet with various baseline approaches. GIT-GuardNet has the highest parameter count (9.3 million) due to its integration of static, temporal, and graph-based modules. Nevertheless, it maintains a reasonable inference time of 4.5 milliseconds per sample, well within the budget of real-time or near-real-time malware detection in modern systems. In contrast, traditional models (Random Forest and XGBoost) usually have far smaller parameter counts and faster inference. However, they lack the representational power of deep models needed for high detection accuracy. Lightweight neural networks such as MLP and CNN-1D offer a trade-off between speed and performance but still fall short of GIT-GuardNet’s robustness. Despite its increased complexity, GIT-GuardNet’s latency is only marginally higher than that of strong baselines such as DroidFusion and GAT. This makes it a practical choice for deployment in security-critical mobile and embedded environments where both precision and responsiveness are essential.
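Per-sample latency figures like the 4.5 ms reported here depend heavily on how they are measured. The sketch below shows one common, framework-agnostic protocol: warm-up calls, a high-resolution timer, and the median over repeated runs. The function `toy_predict` is a hypothetical stand-in for a real model, and the warm-up and repeat counts are arbitrary. When timing a GPU model in PyTorch, `torch.cuda.synchronize()` must be called before reading the timer, because CUDA kernels launch asynchronously.

```python
import statistics
import time
from typing import Callable, List, Sequence

def median_latency_ms(predict: Callable[[Sequence[float]], int],
                      samples: Sequence[Sequence[float]],
                      warmup: int = 10, repeats: int = 5) -> float:
    """Median wall-clock time of a single predict() call, in milliseconds."""
    # Warm-up calls exclude one-off costs (lazy initialization, caches).
    for sample in samples[:warmup]:
        predict(sample)
    timings_ms: List[float] = []
    for _ in range(repeats):
        for sample in samples:
            start = time.perf_counter()
            predict(sample)
            timings_ms.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings_ms)

def toy_predict(x: Sequence[float]) -> int:
    """Hypothetical stand-in for a trained classifier."""
    return 1 if sum(x) > 0 else 0

samples = [[0.1] * 215 for _ in range(50)]  # toy 215-dimensional inputs
latency = median_latency_ms(toy_predict, samples)
print(f"median latency: {latency:.4f} ms")
```

The median is less sensitive than the mean to occasional scheduler or garbage-collection pauses. Batch-of-one timing, as here, matches the per-sample figure quoted in the text.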

Although GIT-GuardNet is slightly more complex, its performance gain justifies the added cost, particularly in high-stakes cybersecurity applications.

Confusion matrix analysis

The confusion matrix of GIT-GuardNet is shown in Fig. 6, revealing high accuracy in distinguishing malware and benign apps. The matrix highlights that GIT-GuardNet achieves an exceptionally low false positive rate (FPR), indicating that very few benign applications are misclassified as malware. This is critical in practical deployment scenarios, where false alarms can lead to user inconvenience and system disruptions. Similarly, the false negative rate (FNR) is also minimal, demonstrating GIT-GuardNet’s strong ability to detect malicious apps without missing significant threats. The nearly perfect diagonal dominance of the confusion matrix confirms the model’s balanced performance across classes, with both malware and benign samples being classified with high confidence and precision. Such a consistent and robust classification pattern is a direct result of GIT-GuardNet’s ability to learn fine-grained distinctions from static, temporal, and structural features. The confusion matrix further validates the model’s generalization capability and reliability, making it a highly suitable choice for real-world Android malware detection systems.

Evaluation on additional datasets

To mitigate the limitation of evaluating on a single dataset, we also evaluate on more recent and diverse Android malware datasets, namely CICAndMal2020 and a representative subset of AndroZoo. These datasets contain more diverse malware families and application behaviors and better reflect the evolving Android threat landscape. In addition, we perform cross-dataset validation, in which the model is trained on Drebin and tested on CICAndMal2020. This setting tests the generalization ability of the proposed framework across different time periods and data sources. Table 5 summarizes the performance of GIT-GuardNet, which consistently outperforms the baseline models on all datasets. The model also maintains high detection accuracy in the cross-dataset scenario, emphasizing its robustness and generalization capability.

Comparison of fusion strategies

To verify the effectiveness of the proposed cross-attention fusion module, we compare it with simpler, commonly used fusion strategies: early fusion, and concatenation followed by an MLP. These baselines indicate whether the observed performance improvements come from the cross-attention itself or merely from combining modalities. As Table 6 shows, joint early fusion and concatenation + MLP yield relatively low performance, indicating that they fail to leverage inter-modal dependencies. In contrast, our cross-attention fusion module dynamically aligns feature representations across the static, temporal, and graph modalities. This leads to considerable improvements in accuracy, precision, recall, and F1-score. These results confirm the capability of the proposed design to capture complex interactions among heterogeneous feature spaces.

Robustness to obfuscated malware

To simulate real-world evasion techniques commonly employed by sophisticated attackers, we evaluated all models on a specially curated subset of obfuscated malware samples. These samples were modified using techniques such as API renaming, junk code insertion, and permission padding, which alter surface-level features while preserving malicious functionality. GIT-GuardNet proved highly robust: its accuracy on the obfuscated samples was 98.71%, only 0.88 percentage points below its performance on the corresponding clean samples. In contrast, classical machine learning algorithms such as SVM, and even graph-based methods such as GCN, degraded sharply, with accuracy falling below 92%. We attribute this robustness to the multi-view structure of GIT-GuardNet, which captures deep semantic connections across static code, behavioral attributes, and structural dependencies. Its cross-attentive fusion mechanism enables the model to focus on core behavioral signatures rather than superficial or obfuscated features. These results suggest that GIT-GuardNet is not only effective under standard conditions but also reliable in adversarial settings. This makes it a strong candidate for deployment in security-sensitive environments where advanced evasion is a persistent threat.
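Of the three obfuscations named above, permission padding is the easiest to reproduce directly on binary static feature vectors. The sketch below is a hypothetical perturbation, not the authors’ curation procedure. It sets up to k absent permission features to 1 and never clears a feature that is present, mimicking padding that leaves functionality intact. The indices, k, and seed are illustrative.

```python
import random
from typing import List, Sequence

def permission_padding(x: Sequence[int], permission_idx: Sequence[int],
                       k: int, seed: int = 0) -> List[int]:
    """Hypothetical obfuscation: switch on up to k permission features that are
    currently 0. Features that are already 1 are never cleared, so the app's
    real capabilities are preserved, only padded."""
    rng = random.Random(seed)
    absent = [i for i in permission_idx if x[i] == 0]
    padded = list(x)
    for i in rng.sample(absent, min(k, len(absent))):
        padded[i] = 1
    return padded

x = [1, 0, 0, 0, 1, 0]          # toy binary feature vector
permission_idx = [1, 2, 3, 5]   # hypothetical positions of permission features
x_obf = permission_padding(x, permission_idx, k=2, seed=7)
print(x_obf)
```

A detector that is robust to this perturbation should give the same verdict on `x_obf` as on `x`. Comparing accuracy on such padded copies against the clean originals yields the kind of drop reported above.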

Generalization to unseen malware families

We further validated GIT-GuardNet’s generalization capability using a leave-one-family-out evaluation strategy. In this setup, one malware family was entirely excluded from training and used only for testing, simulating real-world scenarios in which novel or previously unseen malware variants emerge. Despite having no prior exposure to the held-out family, GIT-GuardNet maintained an accuracy of 96.45%. It outperformed the baseline models, many of which exhibited steep performance drops in the same setting. This suggests that the model learns deeper, more abstract representations of malicious behaviour that go beyond specific traits or signatures. It learns from static content, temporal behavior patterns, and relational structure through the multi-view graph-informed Transformer architecture. In doing so, GIT-GuardNet captures malicious patterns shared across malware families. These findings highlight the model’s potential as a robust solution for identifying zero-day threats and evolving attack vectors with less need for frequent retraining or manual rule updates.
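The leave-one-family-out protocol can be expressed as a simple fold generator. In this sketch, each test fold contains only the held-out family’s samples, so accuracy on the fold equals that family’s detection rate. The paper does not say how benign apps were distributed across folds; keeping them all in training is an assumption made here. The family labels are toy examples.

```python
from typing import Iterator, List, Optional, Sequence, Tuple

def leave_one_family_out(families: Sequence[Optional[str]]) -> Iterator[Tuple[str, List[int], List[int]]]:
    """Yield (held_out_family, train_idx, test_idx) for each malware family.

    `families[i]` is the family label of sample i, or None for a benign app.
    All samples of the held-out family go to the test fold and none to training.
    """
    for held_out in sorted({f for f in families if f is not None}):
        train = [i for i, f in enumerate(families) if f != held_out]
        test = [i for i, f in enumerate(families) if f == held_out]
        yield held_out, train, test

families = ["FakeInstaller", None, "DroidKungFu", "FakeInstaller", None]  # toy labels
for fam, train_idx, test_idx in leave_one_family_out(families):
    print(fam, train_idx, test_idx)
# DroidKungFu [0, 1, 3, 4] [2]
# FakeInstaller [1, 2, 4] [0, 3]
```

Averaging the per-fold detection rates, optionally weighted by family size, gives a single generalization figure comparable to the one reported above.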

Discussion

The empirical results confirm that GIT-GuardNet provides a robust and highly accurate solution for Android malware detection. Its performance consistently surpasses both classical machine learning methods and modern deep learning baselines, including Transformer-only models and state-of-the-art graph neural networks. The advantage of GIT-GuardNet lies in combining disparate information sources: static feature vectors, inter-component call graphs, and simulated temporal behavior traces. Each source contributes different contextual signals, and the cross-attention mechanism lets these signals inform one another. We argue that this hybrid compositional strategy underlies the model’s strong performance. In real-world use cases such as app store vetting and mobile endpoint protection, this multi-view architecture could help identify both obfuscated and lightly tampered variants of known malware families. The model’s resilience to adversarial obfuscation (98.71% accuracy on transformed samples) and its ability to generalize to unseen malware families indicate its potential for real-world applicability. Despite its higher parameter count and slightly increased inference time, GIT-GuardNet remains computationally feasible for deployment in modern Android environments through edge-optimised models or cloud-assisted APIs. The reported inference time of 4.5 ms per sample was measured on a workstation with an NVIDIA RTX 3090 GPU in a server-side deployment scenario. Although this reflects the efficiency of the model on powerful hardware, we recognize that deployment on resource-limited devices (e.g., mobile phones or edge devices) poses a significant challenge. To this end, we consider potential deployment options, including edge-cloud collaboration. In this arrangement, lightweight preprocessing runs on the edge device and the heavier inference is offloaded to the cloud.
In addition, optimisation strategies such as model pruning, quantization, and knowledge distillation could reduce memory and computational cost. This would potentially make the approach feasible to deploy on mobile and IoT devices with little performance penalty. These options illustrate the applicability of GIT-GuardNet across a range of computational infrastructures.
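Two of the compression strategies mentioned above can be illustrated on a flat list of weights. This sketch shows two generic techniques, unstructured magnitude pruning and symmetric per-tensor int8 quantization. It is not a procedure evaluated in this paper, and the sparsity level and toy weights are hypothetical. Knowledge distillation, which requires a training loop, is not shown.

```python
from typing import List, Sequence, Tuple

def magnitude_prune(weights: Sequence[float], sparsity: float) -> List[float]:
    """Zero out the fraction `sparsity` of weights with the smallest magnitude."""
    k = int(len(weights) * sparsity)
    smallest = sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k]
    pruned = list(weights)
    for i in smallest:
        pruned[i] = 0.0
    return pruned

def quantize_int8(weights: Sequence[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: q = round(w / scale), scale = max|w| / 127."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q: Sequence[int], scale: float) -> List[float]:
    """Map int8 codes back to approximate float weights."""
    return [v * scale for v in q]

weights = [0.5, -0.02, 1.27, -1.0, 0.01]         # toy weight tensor
pruned = magnitude_prune(weights, sparsity=0.4)  # [0.5, 0.0, 1.27, -1.0, 0.0]
q, scale = quantize_int8(pruned)                 # q == [50, 0, 127, -100, 0]
restored = dequantize(q, scale)
```

Each dequantized weight is within half a quantization step (scale / 2) of its pruned value. Storing int8 codes instead of 32-bit floats cuts weight memory roughly fourfold, which is the kind of saving relevant to on-device deployment.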

Conclusion

This paper presents GIT-GuardNet, a novel Graph-Informed Transformer framework for robust and accurate Android malware detection. By integrating static code features, graph-structured call dependencies, and temporal behavior sequences into a unified deep learning architecture, GIT-GuardNet captures diverse and complementary information that enhances detection performance. The cross-attention fusion further improves the model’s ability to align features from different modalities, making it robust against adversarial obfuscation. Extensive experiments on a real-world Android malware dataset demonstrate that GIT-GuardNet outperforms traditional machine learning models, single-modality deep networks, and recent hybrid approaches, achieving 99.85% accuracy and an AUC of 99.94. These results highlight the potential of multi-view, attention-driven architectures for advancing mobile threat detection systems. Future work may focus on adapting GIT-GuardNet for on-device deployment and exploring lightweight architectures for edge environments. It may also extend the model to detect zero-day malware using continual learning and federated learning paradigms.

Future work

While GIT-GuardNet demonstrates strong performance and robust generalization for Android malware detection, several promising directions remain. First, model compression and optimization strategies (e.g., quantization, pruning, or knowledge distillation) could be investigated to enable real-time, on-device deployment on resource-limited mobile hardware without degrading detection performance. Second, the current work relies on a static analysis dataset. Future extensions could integrate dynamic runtime features, such as memory usage, system calls, or user interaction patterns, to improve resilience against sophisticated, evasive, or zero-day malware. This would enable real-time, hybrid malware detection across different stages of app execution. Third, adapting GIT-GuardNet to a federated learning setting would enable decentralised training across multiple edge devices while preserving user privacy. Incorporating techniques such as federated adversarial training or secure aggregation could address critical privacy and data protection challenges in collaborative detection systems. Lastly, integrating online learning mechanisms into GIT-GuardNet may enable the model to learn from new malware families on the fly and overcome the shortcomings of static retraining pipelines. This adaptability is key to keeping pace with the fast-evolving Android threat landscape.

Limitations

Despite its strong performance, GIT-GuardNet has certain limitations that warrant further investigation:

- Dependence on Labelled Static Feature Data: GIT-GuardNet primarily relies on a static dataset with pre-extracted features derived from Android applications. As a result, the model may have limited exposure to malware whose malicious behavior manifests only at runtime. Consequently, GIT-GuardNet may underperform against novel threats that use advanced evasion techniques, such as dynamic code loading or runtime obfuscation, which are not reflected in static features alone.

- Computational Complexity of Multi-Modal Fusion: The proposed architecture integrates multiple deep encoders, namely a Transformer-based static encoder, a Graph Attention Network, and a Temporal Transformer, followed by cross-attention fusion. Although this design achieves high accuracy, it incurs higher computational and memory costs than lightweight single-view models. This may restrict its direct deployment on low-end mobile devices or edge environments without further optimization.
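Post-training quantization, one of the optimizations named in the future-work discussion, directly targets the memory cost described in this limitation. A minimal sketch of symmetric per-tensor int8 quantization follows: each weight is stored in 1 byte instead of 4 bytes for float32, roughly a 4x reduction in weight storage. This is an illustration only; GIT-GuardNet's actual layer shapes are not given here, the weight values are hypothetical, and a practical deployment would typically use a framework's quantization toolkit and re-evaluate detection accuracy afterwards.

```python
import array
from typing import List, Tuple


def quantize_int8(weights: List[float]) -> Tuple[List[int], float]:
    """Symmetric per-tensor quantization: map [-max|w|, max|w|] onto [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    # Round to the nearest integer level and clamp to the signed 8-bit range.
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale


def dequantize(q: List[int], scale: float) -> List[float]:
    # Recover approximate float weights from the integer levels.
    return [v * scale for v in q]


weights = [0.42, -1.27, 0.003, 0.9, -0.05]  # hypothetical layer weights
q, scale = quantize_int8(weights)
restored = dequantize(q, scale)
max_error = max(abs(a - b) for a, b in zip(weights, restored))

# Storage comparison using C-typed arrays: "f" is a 4-byte float, "b" a signed byte.
fp32_bytes = array.array("f", weights).itemsize * len(weights)
int8_bytes = array.array("b", q).itemsize * len(q)
print(q, scale, max_error)
print(f"{fp32_bytes} bytes as float32 vs {int8_bytes} bytes as int8")
```

The rounding error per weight is bounded by half the quantization step (scale / 2), which is why accuracy usually degrades only slightly; whether that holds for a specific multi-encoder model has to be checked empirically.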

Data availability

All data and code supporting the findings of this study are available from the corresponding authors upon request.

References

Nethala, S. et al. A deep learning-based ensemble framework for robust Android malware detection. IEEE Access 13, 46673–46696 (2025).

Park, S. et al. Enhancing the sustainability of machine learning-based malware detection techniques for Android applications. IEEE Access (2025).

Li, X. et al. Multimodal fusion for Android malware detection based on large pre-trained models. IEEE Trans. Softw. Eng. (2025).

Wang, J., Liu, X. H., Huang, M. Y., Zhou, P. Y. & Xu, Y. Android malware detection method combining multi-frequency features and convolutional neural networks. IEEE Access (2025).

Feng, R., Chen, H., Wang, S., Karim, M. M. & Jiang, Q. LLM-MalDetect: A large language model-based method for Android malware detection. IEEE Access (2025).

Sun, T. et al. Temporal-incremental learning for Android malware detection. ACM Trans. Softw. Eng. Methodol. 34, 1–30 (2025).

Kacem, T. & Tossou, S. TranDroid: An Android mobile threat detection system using transformer neural networks. Electronics 14, 1230 (2025).

Manikandan, K., Onteddu, N. R. & Chilimi, A. K. Next-gen malware detection using AI AI-powered malware threat detection automated malware classification through machine learning. In 2025 International Conference on Intelligent Computing and Control Systems (ICICCS) (ed. Manikandan, K.) 581–588 (IEEE, 2025).

Rashid, M. U. et al. Hybrid Android malware detection and classification using deep neural networks. Int. J. Comput. Intell. Syst. 18, 1–26 (2025).

Taheri, R., Shojafar, M., Arabikhan, F. & Gegov, A. Unveiling vulnerabilities in deep learning-based malware detection: Differential privacy driven adversarial attacks. Comput. Secur. 146, 104035 (2024).

Pardhi, P. R., Rout, J. K., Agrawal, P. K. & Ray, N. K. Blockchain for decentralized malware detection on Android devices. J. Integr. Sci. Technol. 13, 1084–1084 (2025).

Habeeb, M. A. & Khaleel, Y. L. Enhanced Android malware detection through artificial neural networks technique. Mesopotamian J. CyberSecur. 5, 62–77 (2025).

Ghahramani, M., Taheri, R., Shojafar, M., Javidan, R. & Wan, S. Deep Image: A precious image based deep learning method for online malware detection in IoT environment. Internet Things 27, 101300 (2024).

Tmleyncodes. Android malware dataset. https://www.kaggle.com/datasets/shashwatwork/android-malware-dataset-for-machine-learning (accessed 20 September 2024).

Wu, Y., Shi, J., Wang, P., Zeng, D. & Sun, C. DeepCatra: Learning flow- and graph-based behaviours for Android malware detection. IET Inf. Secur. 17, 118–130 (2023).

Lo, W. W., Layeghy, S., Sarhan, M., Gallagher, M. & Portmann, M. Graph neural network-based Android malware classification with jumping knowledge. In 2022 IEEE Conference on Dependable and Secure Computing (DSC) (ed. Lo, W. W.) 1–9 (IEEE, 2022).

Zhang, D. et al. Android malware detection based on hypergraph neural networks. Appl. Sci. 13, 12629 (2023).

Zaidi, A. R. et al. Deep learning-based detection of Android malware using graph convolutional networks (GCN). Stat. Comput. Interdiscip. Res. 6, 57–73 (2024).

Xu, Q., Zhao, D., Yang, S., Xu, L. & Li, X. Android malware detection based on behavioral-level features with graph convolutional networks. Electronics 12, 4817 (2023).

Meng, X. & Li, D. HertDroid: Android malware detection method with influential node filter and heterogeneous graph transformer. Appl. Sci. 14, 3150 (2024).

Bourebaa, F. & Benmohammed, M. Evaluating lightweight transformers with local explainability for Android malware detection. IEEE Access (2025).

Alkafaween, E., Hassanat, A., Essa, E. & Elmougy, S. An efficiency boost for genetic algorithms: Initializing the GA with the iterative approximate method for optimizing the traveling salesman problem-experimental insights. Appl. Sci. 14, 3151 (2024).

Zhu, Q. et al. HHGT: Hierarchical heterogeneous graph transformer for heterogeneous graph representation learning. In Proc. Eighteenth ACM International Conference on Web Search and Data Mining 318–326 (2025).

Seneviratne, S., Shariffdeen, R., Rasnayaka, S. & Kasthuriarachchi, N. Self-supervised vision transformers for malware detection. IEEE Access 10, 103121–103135 (2022).

Liu, T., Li, Z., Long, H. & Bilal, A. NT-GNN: Network traffic graph for 5G mobile IoT Android malware detection. Electronics 12, 789 (2023).

Tanveer, M. U. et al. Ensemble-Guard IoT: A lightweight ensemble model for real-time attack detection on imbalanced dataset. IEEE Access (2024).

Balasubramanian, S. M., Myviliselvi, P. V. & Palanisamy, S. Implementation of malware detection using SVM-based deep learning approach. AIP Conf. Proc. 3279, 020153 (2025).

Tanveer, M. U. et al. LightEnsemble-Guard: An optimized ensemble learning framework for securing resource-constrained IoT systems. IEEE Access (2025).

Nazim, S. et al. Multimodal malware classification using proposed ensemble deep neural network framework. Sci. Rep. 15, 1–24 (2025).

Tanveer, M. U., Munir, K., Amjad, M., Rehman, A. U. & Bermak. Unmasking the fake: A machine learning approach for deepfake voice detection. IEEE Access (2024).

Yeboah, P. N. & Baz Musah, H. B. NLP technique for malware detection using 1D CNN fusion model. Secur. Commun. Netw. 2022, 2957203 (2022).

Kunwar, P., Aryal, K., Gupta, M., Abdelsalam, M. & Bertino, E. SoK: Leveraging transformers for malware analysis. IEEE Trans. Dependable Secure Comput. (2025).

Ullah, F., Srivastava, G. & Ullah, S. A malware detection system using a hybrid approach of multi-heads attention-based control flow traces and image visualization. J. Cloud Comput. 11, 75 (2022).

Gao, H., Cheng, S. & Zhang, W. GDroid: Android malware detection and classification with graph convolutional network. Comput. Secur. 106, 102264 (2021).

Yang, Y., Wu, H., Wang, Y. & Wang, P. AMN: Attention-based multimodal network for Android malware classification. In 2024 IEEE International Conference on Cybernetics and Intelligent Systems (CIS) and IEEE International Conference on Robotics, Automation and Mechatronics (RAM) (ed. Yang, Y.) 7–13 (IEEE, 2024).

Makkar, I. S., Sinha, A. K., Pratap, T., Pandey, H. & Nandwana, B. Android malware detection: Necessity, applications and future direction. In 2025 IEEE International Conference on Interdisciplinary Approaches in Technology and Management for Social Innovation (IATMSI) Vol. 3 (ed. Makkar, I. S.) 1–6 (IEEE, 2025).

Kauser, S. H. & Anu, V. M. Hybrid deep learning model for accurate and efficient Android malware detection using DBN-GRU. PLoS ONE 20, e0310230 (2025).

Funding

This work was supported in part by Multimedia University under the Research Fellow Grant MMUI/250008, and in part by Telekom Research & Development Sdn Bhd under Grants RDTC/241149 and RDTC/231095.

Author information

Contributions

Muhammad Usama Tanveer (M.U.T.): Formal analysis, methodology, investigation, writing–original draft. Kashif Munir (K.M.): Supervision, project administration, resources. Hasan J. Alyamani (H.J.A.): Validation, visualization. Syed Rizwan Hassan (S.R.H.): Supervision, writing–review & editing. Muhammad Sheraz (M.S.): Conceptualization, Supervision, writing–review & editing. Teong Chee Chuah (T.C.C.): writing–review & editing, funding acquisition. All authors reviewed and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tanveer, M.U., Munir, K., Alyamani, H.J. et al. Graph-augmented multi-modal learning framework for robust android malware detection. Sci Rep 15, 38341 (2025). https://doi.org/10.1038/s41598-025-22169-x

DOI: https://doi.org/10.1038/s41598-025-22169-x