Abstract

On resource-constrained platforms, small object detection in Unmanned Aerial Vehicle (UAV) images faces significant challenges due to the requirements of high-resolution input and real-time processing. We propose a real-time UAV aerial images small object detection framework, RT-UAV-SOD, which is based on the Transformer architecture and aims to improve the detection performance of small objects in UAV aerial images. In order to improve the detection performance, the model introduces two key innovations. First, we embed the Cascade Group Attention mechanism (CGA) into the inverted residual structure to construct the Cascade Group Attention-inverted Residual Mobile Block (CGA-iRMB) and form the backbone network. The optimized residual design strengthens the feature expression ability of the backbone network to enhance multi-scale feature extraction and to reduce computational complexity. Then, the cross-stage fusion module improves the multi-scale feature fusion process to achieve a balance between detection accuracy and inference speed. These improvements not only ensure the model’s compatibility with edge devices but also enhance the robust processing capability for aerial images. Experiments on the VisDrone2019-DET dataset demonstrate that our model increases precision by 3.3%, improves mAP50 by 4.5%, and enhances mAP50:95 by 2.5%. Experiments on the DOTA dataset demonstrate that our model increases precision by 4.3%, improves mAP50 by 1.1%, and enhances mAP50:95 by 2.6%. These results confirm that RT-UAV-SOD delivers an efficient solution for real-time object detection in UAV applications.

Similar content being viewed by others

Introduction

UAVs support critical applications, including search and rescue, traffic monitoring, and environmental analysis. They capture high-resolution aerial imagery, enabling precise observation and swift decision-making. However, detecting small objects remains a formidable challenge in these tasks. Targets such as pedestrians, vehicles, or crops typically occupy a small fraction of UAV aerial images. Limited pixel coverage, combined with high image resolution and complex backgrounds, complicates detection efforts. Moreover, UAV systems often operate on edge devices with constrained computational resources to minimize cloud dependency and to enhance processing efficiency. This constraint intensifies the difficulty of small object detection. As UAV technology advances, enhancing detection capabilities becomes increasingly critical to unlock its full potential across these domains.

Deep learning significantly advances object detection, yet small object detection in UAV aerial images continues to challenge existing models. Traditional lightweight architectures prioritize speed for real-time needs but struggle to detect small objects with limited pixel data. Their weak feature representation often leads to poor performance. Conversely, complex models leverage robust feature extraction to boost accuracy. However, their high computational demands make them impractical for resource-limited UAV platforms. Transformer-based methods balance feature modeling capability with inference efficiency. However, they face challenges in capturing fine-grained details of small objects and optimizing multi-scale feature fusion. These shortcomings underscore the need for tailored enhancements in UAV small object detection.

This paper presents RT-UAV-SOD, an efficient framework for small object detection in UAV aerial images. Built on a Transformer-based detection architecture, RT-UAV-SOD enhances small object recognition by refining feature extraction and fusion processes. It adapts seamlessly to edge deployment demands. The framework strengthens multi-scale feature modeling to capture fine details of small objects and improve cross-scale feature representations. Unlike traditional approaches, RT-UAV-SOD avoids reliance on computation-heavy operations. Instead, it employs innovative feature processing strategies to manage aerial scene complexity while sustaining detection performance. Its primary contributions include:

-

Enhancing the backbone network by integrating the Cascade Group Attention mechanism (CGA) into an optimized inverted residual structure to improve multi-scale feature extraction under real-time constraints.

-

Designing a compact and lightweight architecture that ensures compatibility with resource-limited edge devices commonly used in UAV applications.

-

Evaluating the model on the VisDrone2019-DET and DOTA datasets, demonstrating its effectiveness in UAV-based small object detection tasks.

Related work

Object detection methods

Over the past two decades, object detection has advanced significantly, transitioning from traditional methods before 2014 to deep learning-based approaches afterward. This transformation primarily stems from the emergence of convolutional neural networks (CNNs) and Transformer-based models. In 2012, CNNs gained widespread attention for their superior feature extraction capabilities1, which laid the foundation for modern detection techniques. In 2014, R-CNN2 was introduced, marking the first application of CNNs to region-based object detection and paving the way for a series of subsequent breakthroughs.

Modern deep learning-based object detection methods are generally classified into two categories: two-stage and single-stage detectors. Two-stage detectors, such as R-CNN2, Fast R-CNN3, Faster R-CNN4, Feature Pyramid Network (FPN)5, and Mask R-CNN6, first generate candidate regions before performing object classification and localization. While these methods achieve high accuracy, their computational complexity and slow inference speeds limit their suitability for real-time applications. In contrast, single-stage detectors, such as SSD7, FSSD8 and the YOLO family9,10,11,12,13,14,15,16,17, directly predict object classes and bounding boxes, prioritizing speed for applications like video surveillance and autonomous driving. However, their weaker feature extraction capability often impairs small object detection performance, especially under low-resolution conditions.

A major milestone in object detection was the introduction of a Detection Transformer (DETR)18, which reformulated object detection as a set-based prediction task. By eliminating the reliance on anchor boxes and non-maximum suppression (NMS), DETR enabled global feature modeling. However, its high computational cost hindered real-time applications. To address this, Deformable DETR19 incorporated deformable attention mechanisms, focusing on key regions to improve efficiency. RT-DETR20 further optimized the balance between speed and accuracy, surpassing YOLOv8 in performance. In 2024, YOLOv921 introduced programmable gradient information to enhance feature learning, achieving state-of-the-art results on standard datasets. These advancements, from CNN-based local feature extraction to Transformer-based global modeling, have significantly improved detection speed and accuracy, surpassing traditional methods22 and reinforcing applications such as UAV image processing and autonomous driving.

Transformer-based object detection

Transformer models significantly change the object detection landscape by overcoming the limitations of CNN-based methods. Although traditional methods such as Faster R-CNN4 and YOLO9 perform well in local feature extraction, they fall short in global context modeling. With its self-attention mechanism, Transformers can effectively capture long-range dependencies and show excellent performance in complex scenes. DETR18 pioneered this shift by adopting an end-to-end framework to directly predict bounding boxes and labels, eliminating the traditional region proposal step. To address the high computational complexity of DETR18, Deformable DETR19 introduces a deformable attention mechanism, enhancing efficiency and small object detection performance by focusing on key regions. Furthermore, RT-DETR20 further optimizes the real-time performance through efficient feature aggregation, which achieves a better balance between speed and accuracy.

Recently, Transformer-based methods have continued to advance the field. For example, DINO23 proposed in 2023 enhanced DETR by introducing contrastive denoising and a hybrid backbone network, which not only accelerated model convergence but also significantly improved detection accuracy. Moorthy et al.24 proposed HyMATOD, improving video object detection robustness with a hybrid multi-attention mechanism. These advances highlight the potential of Transformers for multi-scale object detection in real-time applications. However, high computational cost is still a major challenge for Transformer models, which also motivates research to shift to lightweight designs25 for resource-constrained practical application scenarios. A recent approach improves DETR for high-resolution UAV imagery by introducing a dual-head focus-attention mechanism to enhance small object detection and reduce computational redundancy26. In addition, hybrid architectures combining convolutional and Transformer components have been proposed to leverage both local and global feature modeling for enhanced small object detection in UAV imagery27. Collectively, these developments demonstrate that Transformer-based frameworks are becoming increasingly powerful and flexible, continually evolving to meet diverse application requirements.

Small object detection in UAV aerial images

Small object detection in UAV aerial images presents significant challenges due to wide-scene coverage, small object sizes, diverse scales, and complex backgrounds. These factors collectively complicate detection tasks, particularly in high-resolution images where small objects occupy only a few pixels. Feature extraction and recognition become even more difficult in cluttered environments. Models trained on general-purpose datasets such as COCO often fail to generalize effectively to drone-specific scenarios, leading to suboptimal performance on benchmarks like VisDrone2019 and UAVDT.To address this, some methods leverage scene context to improve localization accuracy. Nguyen et al.28 proposed a multi-task framework that incorporates road-region guidance to enhance vehicle detection in UAV imagery.

To address these challenges, researchers have developed specialized solutions. Lightweight CNN-based models, such as YOLOv5s16 and EfficientDet29, optimize feature pyramid structures and anchor designs to enhance small object detection while maintaining real-time processing capabilities. Cheng et al. introduced CSSDet30, which further improves the detection of small and dense objects in UAV aerial images by enhancing cross-scale feature fusion.

Compared with traditional CNN-based methods, Transformer-based models have emerged in the field of small object detection in recent years, with the significant advantage of effectively capturing long-range dependencies and making full use of global context information. For example, Zhang et al31 proposed a novel model that integrates an inverse residual enhancement module with a parallel sampling strategy, which not only significantly improves the accuracy of small object detection, but also effectively alleviates the interference caused by complex background. In addition, RT-DETR20 optimized for UAV application scenarios shows excellent robustness in multi-scale object detection tasks, which fully reflects the academic value and practical significance of adapting the cutting-edge architecture to specific application scenarios.

According to the characteristics of UAV aerial images, researchers have further developed a series of improved models based on the YOLO framework. Typical examples include YOLODrone32 and MFFSODNET33. These models significantly extend the application power of the original YOLO framework by incorporating optimization strategies specifically designed for UAV scenarios, such as enhancing the feature extraction ability of small objects, improving the adaptability to multi-scale objects, and improving the detection performance in cluttering environments. Tan et al.34 proposed RT-DETR-UAVs, enhancing small object detection in UAV imagery through global feature aggregation and dynamic attention mechanisms. In summary, both the innovative approach based on CNN and Transformer, and the variant of YOLO model for specific requirements, these research advances collectively highlight the need for customized methods to achieve efficient small object detection in UAV image processing. It also provides a more systematic and comprehensive technical tool for solving the inherent complex challenges in this field.

Method

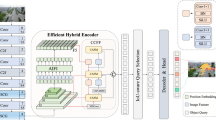

As shown in Fig. 1, which illustrates the overall architecture, the RT-UAV-SOD model aims to provide efficient and accurate small object detection capabilities for UAV applications. The model combines a backbone network with an optimized multi-scale fusion module to ensure real-time processing on edge devices. Additionally, the model incorporates a refined detection head to enhance the recognition performance of small objects in aerial images. By introducing advanced techniques in the feature extraction and fusion stages, RT-UAV-SOD achieves an excellent balance between computational efficiency and detection performance. In this section, its two core components are explored in depth: the Cascade Group Attention-inverted Residual Mobile Block (CGA-iRMB) backbone network and the Grouped Shuffle Fusion (GS-Fusion) multi-scale fusion module.

Cascade group attention-inverted residual mobile block

In UAV scenarios, fast inference and limited computing resources are crucial, and traditional backbone architectures such as deep convolutional networks are often not suitable for edge device deployment due to high computational requirements. Similarly, the attention mechanism adopted in some lightweight designs, which usually processes the whole feature map with the whole head, can introduce redundant computations and limit feature diversity, thus hindering the detection of small objects in complex aerial environments. To address these challenges, we propose CGA-iRMB, which significantly enhances the richness and diversity of extracted features while reducing computational overhead by integrating the CGA into the inverted residual structure.

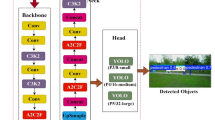

The CGA-iRMB backbone network replaces the traditional attention mechanism with CGA22. As shown in Fig. 2, CGA refines the information in the feature map layer by layer through multiple stages, dividing the input feature map into multiple channel groups, each processed independently by a dedicated attention head. Unlike standard multi-head self-attention, which processes the entire feature map across all heads, CGA restricts each attention head to a unique channel subset, reducing redundant computations while adjusting its attention weights based on the output of the previous stage. The output of each attention head is gradually integrated into the input of the subsequent heads in a cascade manner, enriching the feature representation without adding extra parameters. Subsequently, the outputs of all heads are aggregated and projected back to the original feature dimension, ensuring compatibility with downstream tasks.

As shown in Fig. 1, the CGA is embedded in iRMB35, following its typical sandwich structure. The input channel is expanded by pointwise convolution to enhance representation capability, followed by a depthwise convolution to efficiently capture local spatial patterns. Finally, another pointwise convolution is used to compress the features back to the original dimension. The CGA-iRMB backbone network employs a hierarchical multi-stage architecture to generate multi-scale feature maps across various resolutions. Each stage is configured by increasing the channel depth and repeating blocks, which gradually transition from shallow layers focusing on high-resolution details to deep layers capturing semantic information. This design not only optimizes the computational efficiency, but also provides diverse and robust feature support for UAV small object detection. We formulate the feature extraction process of CGA-iRMB as follows:

where the symbols are defined as follows: l is the Stage index, where \(l=1,2,\ldots ,L\) and L is the total number of stages. \(X^{l-1}\) is the Input feature map from the previous stage (or the original image for \(l=1\)). \(PW_1^l\) is the First pointwise convolution, expanding the channel dimension. \(DW^l\) is the Depthwise convolution, capturing local spatial patterns. \(\text {Attn}_g^l\) is the Attention mechanism applied to the g-th group. \(G^l\) is the Number of channel groups at stage l. \(PW_2^l\) Second pointwise convolution, compressing features to produce \(P^l \in {\mathbb {R}}^{H_l \times W_l \times C_l}\), the output feature map.

Multi-scale feature aggregation for small object detection

Multi-scale feature fusion actively enables UAV systems to detect targets of varying sizes. Traditional methods often employ computation-heavy operations, which impede efficient performance on resource-constrained edge devices. This issue proves critical in UAV detection tasks demanding rapid processing. We propose GS-Fusion, a multi-scale fusion module that enhances detection efficiency and robustness. GS-Fusion simplifies feature integration and reduces computational overhead, drawing inspiration from convolution techniques that blend standard and depthwise separable convolutions. This strategy preserves feature representation power while cutting complexity, paving the way for effective object detection on UAV edge platforms.

As shown in Fig. 1, GS-Fusion actively processes multi-resolution feature maps from the CGA-iRMB backbone to support object detection in UAV aerial images. It adopts a hybrid convolution strategy with two core operations. The first operation captures dense, channel-rich features to extract deep semantic information, similar to standard convolution. The second operation targets spatial context with low computational cost, akin to depthwise separable convolution, retaining object details. GS-Fusion combines these operations seamlessly. An aggregation mechanism then fuses features from multiple backbone stages in a single, efficient step. This method outperforms traditional multi-layer convolution stacks by actively reducing parameters and eliminating redundant computations. We formulate the GS-Fusion process as:

where the symbols are defined as follows: \(P^l \in {\mathbb {R}}^{H_l \times W_l \times C_l}\) is the Feature map from stage l. \(W_{SC} \in {\mathbb {R}}^{K \times K \times C_l \times C_{\text {out}}}\) is the Standard convolution weights, transforming \(P^l\) into features with \(C_{\text {out}}\) channels. \(W_{DW} \in {\mathbb {R}}^{K \times K \times C_l \times 1}\) is the Depthwise convolution weights, processing each channel independently. \(W_{PW} \in {\mathbb {R}}^{1 \times 1 \times C_l \times C_{\text {out}}}\) is the Pointwise convolution weights, adjusting channel dimensions. \(\alpha \in [0,1]\) is the Weight balancing contributions of Standard Convolution(SC) and Depth-wise Separable Convolution(DSC). \(F_{GS}^l \in {\mathbb {R}}^{H_l \times W_l \times C_{\text {out}}}\) is the Hybrid feature output.

As shown in Fig. 3 VoV-GSCSP applies a hybrid convolution via GSConv. We define this output as:

where the symbols are defined as follows: \(F_{GS}^l\) is the Hybrid feature output from GSConv (Eq. 2). \(\text {Up} \left( F^{l+1} \right)\) is the Upsampling operation on the deeper stage feature map \(F^{l+1}\), aligning to \(H_l \times W_l \times C_{\text {out}}\). \(\text {Down} \left( F^{l-1} \right)\) is the Downsampling operation on the shallower stage feature map \(F^{l-1}\), aligning to \(H_l \times W_l \times C_{\text {out}}\). \(\text {Agg}\) is the Aggregation operation, combining features via weighted summation or channel concatenation. \(F_{\text {fused}}^l \in {\mathbb {R}}^{H_l \times W_l \times C_{\text {out}}}\) is the Fused feature map for stage l.

Experiments

Datasets

VisDrone2019-DET

The VisDrone2019-DET dataset is a widely recognized benchmark for object detection in aerial imagery. It contains more than 10,000 images captured by UAV, covering diverse environments such as urban and rural. The dataset provides detailed annotations for multiple object categories, including pedestrians, vehicles, and small objects, with bounding box labels. One of the main challenges lies in the detection of small objects in high-density scenes, where occlusion and overlapping objects are common.

DOTA

The Dataset for Object Detection in Aerial Images (DOTA) focuses on object detection in aerial images taken by satellites and UAVs. It contains 2,806 high-resolution images covering 15 categories with over 188,000 annotated object instances. with a total of over 188,000 annotated object instances. Images in DOTA usually have a resolution of 800\(\times\)800 pixels, which provides rich samples and challenges for object detection research in aerial scenes.

Implementation details

All experiments were conducted on carefully configured computing platforms to ensure the reproducibility and consistency of results. The RT-UAV-SOD framework was implemented following strict specifications. As show in Table 1, the hardware setup includes an NVIDIA RTX 3090 GPU with 24 GB of video memory and an Intel i7-13700H CPU with 16 cores and 24 threads. The system operates on Ubuntu 20.04 with 32 GB of RAM, ensuring a stable training and inference pipeline. To fully leverage GPU acceleration, CUDA 11.8 is utilized for computational optimization. The RT-UAV-SOD model is developed using Python 3.8 and PyTorch 2.0. The input image resolution is fixed at 640\(\times\)640 pixels, with a training batch size of 4.

Experimental results

RT-UAV-SOD is designed for real-time object detection in UAV aerial images. We benchmarked other object detection models with similar parameter counts and model depth to ensure a fair comparison. Before our model was proposed, the field of object detection was mainly dominated by the YOLO series. We selected five mainstream YOLO series object detection models and used two datasets, VisDrone2019-DET and DOTA, to conduct comparative experiments.

As shown in Tables 2 and 3, the detection performance of the proposed model on both VisDrone2019-DET data set and DOTA data set is significantly improved. In the VisDrone2019-DET dataset, compared with the traditional RT-DETR model, RT-UAV-SOD has a 3.5% improvement on mAP50. Compared with the latest object detection model YOLOv11n, RT-UAV-SOD achieves an 11.6% improvement in mAP50. This represents an 11.7% improvement over the more popular YOLOv8 model. In the DOTA dataset, compared with the RT-DETR model, RT-UAV-SOD achieves a 1.1% improvement in mAP50. Compared with the latest object detection model YOLOv11n, 7.4% improvement; Compared with the more popular YOLOv8 model, it has a 6.2% improvement.

Ablation study

We performed ablation experiment on the VisDrone2019-DET dataset to systematically assess the effectiveness of the proposed components in RT-UAV-SOD. These experiments evaluate the contributions of various architectural enhancements within our model.The results of these ablation analyses are summarized in Table 4, showing the performance variations when different features and modules are incorporated or excluded. By comparing these findings, we demonstrate the contributions of CGA-iRMB and GS-Fusion to the model’s overall detection accuracy and computational efficiency. Figure 4 presents qualitative results for each ablation variant, showing the progressive improvements in small object detection and false positive suppression as each component is added.

Visual comparison of ablation variants on VisDrone2019-DET. Sequentially: Original image, Baseline (RT-DETR), iRMB only, CGA embedded into iRMB (CGA-iRMB), RT-UAV-SOD. The proposed improvements lead to progressively better detection of small and occluded objects, reducing false positives and missed detections compared to the baseline.

Qualitative analysis

We present qualitative results through Figs. 5 and 6 to evaluate the effectiveness of RT-UAV-SOD on VisDrone2019-DET and DOTA datasets, respectively. Figure 5 presents the detection results of RT-UAV-SOD on representative VisDrone2019-DET images, compared with those of the baseline model RT-DETR. RT-UAV-SOD achieves fewer missed detections and significantly lower false positives, particularly for small objects like pedestrians and vehicles. These improvements benefit from its enhanced feature modeling and fusion strategy.

Qualitative comparison of different methods on the VisDrone2019-DET dataset. Detection results from YOLOv5s, YOLOv8s, YOLOv9t, YOLOv10s, YOLOv11n, RT-DETR and the proposed RT-UAV-SOD are shown. RT-UAV-SOD demonstrates superior performance in detecting small and dense objects such as pedestrians and vehicles, with fewer false positives and missed detections.

Qualitative comparison of different methods on the DOTA dataset. Visualization of detection results from YOLOv5s, YOLOv8s, YOLOv9t, YOLOv10s, YOLOv11n, RT-DETR, and RT-UAV-SOD. The proposed method achieves better localization of aerial objects such as ships and aircraft, effectively reducing both false positives and missed detections.

Figure 6 shows the detection results of RT-UAV-SOD on selected images in the DOTA dataset, demonstrating its superior performance in detecting aerial objects, such as ships and aircraft. This method effectively reduces missed detections and false positives compared with RT-DETR. We attribute these improvements to the design of optimized backbone networks and fusion modules. Qualitative analysis of both sets of data confirms the superiority of RT-UAV-SOD, indicating its significant advantages in dealing with the challenge of small object detection in UAV aerial images.

Generalization experiment

Small object detection in UAV aerial images is often used in the field of energy detection, such as the detection of solar panels, wind turbines, high-voltage power lines, etc. To assess RT-UAV-SOD’s generalization beyond VisDrone2019-DET and DOTA, we performed an experiment on a UAV wind turbine inspection dataset, a critical case for energy infrastructure monitoring. This dataset comprises aerial images of wind turbines captured at varying altitudes, scales, and orientations, posing challenges such as object deformation and occlusion. As shown in Fig. 7, RT-UAV-SOD significantly outperforms RT-DETR in wind turbine detection, maintaining robust performance and demonstrating strong cross-domain generalization for real-world UAV-based inspection tasks.

Conclusion

To address the problem of high computational complexity of small object detection in high-resolution images, this paper proposes a real-time small object detection framework, RT-UAV-SOD, for UAV aerial images. Our framework integrates a backbone network enhanced by CGA, an optimized multi-scale fusion module, and a transformer-based detection head. These components work synergistically to significantly improve the multi-scale feature extraction capability, enhance the feature representation quality, and optimize the fusion process of cross-scale features, thus maintaining compatibility with resource-constrained edge devices commonly used in UAV systems and ensuring robust detection performance.

Experimental results on VisDrone2019-DET and DOTA datasetes demonstrate that RT-UAV-SOD achieves an excellent balance between detection accuracy and processing speed. Comparative analysis with existing real-time detection methods shows that the proposed framework is competitive in terms of accuracy and efficiency, and effectively deals with typical scale and density changes in aerial images. Ablation studies further verify the contributions of each component: CGA improves the ability to capture the critical multi-scale details of small objects, the optimized backbone design improves the computational efficiency and feature expression quality, and the fusion module significantly improves the detection performance by improving feature integration. Qualitative evaluation results further corroborate these findings, showing that RT-UAV-SOD significantly reduces missed detections and false positives in cluttered or dense scenes.

As a practical and efficient solution for UAV small object detection, RT-UAV-SOD provides a scalable framework that effectively balances detection accuracy and inference latency. It is well-suited for real-time applications on edge platforms, supporting a wide range of applications in surveillance, environmental analysis, and disaster response. Future research can further explore the adaptability of the framework to different UAV datasets and its performance in dynamic real-world scenarios, such as different flight altitudes. In addition, it is expected to further improve its generality by integrating adaptive mechanisms to cope with extreme scale changes or extending the model to multi-modal data. By laying a solid foundation for efficient small object detection, RT-UAV-SOD opens a new path for the sustainable development of UAV-based vision systems and promotes its expanding influence in practical applications.

Limitations and future work

While RT-UAV-SOD demonstrates strong performance in small object detection on UAV imagery, it still has several limitations. First, although our multi-scale fusion strategy improves detection across varied object sizes, extreme scale variations within a single image may still reduce detection accuracy. Second, the model has not yet been evaluated on real-time video streams or under diverse environmental conditions such as night, fog, or glare, which are common in UAV missions. Addressing challenges such as extreme scale variations and performance under adverse conditions, such as night or fog, will be part of our future research directions.

Data availability

The datasets generated and/ or analysed during the current study are not publicly available due further research work has not been completed but are available from the corresponding author on reasonable request.

References

Krizhevsky, A., Sutskever, I. & Hinton, G. E. Imagenet classification with deep convolutional neural networks. Adv. Neural Inform. Process. Syst. 25 (2012).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Region-based convolutional networks for accurate object detection and segmentation. IEEE Trans. Pattern Analysis Machine Intell. 38, 142–158 (2015).

Girshick, R. Fast r-cnn. In Proceedings of the IEEE international conference on computer vision, 1440–1448 ( 2015).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inform. Process. Syst. 28 (2015).

Lin, T.-Y. et al. Feature pyramid networks for object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, 2117–2125 (2017).

He, K., Gkioxari, G., Dollár, P. & Girshick, R. Mask r-cnn. In Proceedings of the IEEE international conference on computer vision, 2961–2969 ( 2017).

Liu, W. et al. Ssd: Single shot multibox detector. In Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, October 11–14, 2016, Proceedings, Part I 14, 21–37 ( Springer, 2016).

Li, Z., Yang, L. & Zhou, F. Fssd: feature fusion single shot multibox detector. arXiv preprint arXiv:1712.00960 ( 2017).

Redmon, J., Divvala, S., Girshick, R. & Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE conference on computer vision and pattern recognition, 779–788 ( 2016).

Redmon, J. & Farhadi, A. Yolo9000: better, faster, stronger. In Proceedings of the IEEE conference on computer vision and pattern recognition, 7263–7271 ( 2017).

Redmon, J. & Farhadi, A. Yolov3: An incremental improvement. arXiv preprint arXiv:1804.02767 ( 2018).

Bochkovskiy, A., Wang, C.-Y. & Liao, H.-Y. M. Yolov4: Optimal speed and accuracy of object detection. arXiv preprint arXiv:2004.10934 ( 2020).

Ge, Z., Liu, S., Wang, F., Li, Z. & Sun, J. Yolox: Exceeding yolo series in 2021. arXiv preprint arXiv:2107.08430 ( 2021).

Li, C. et al. Yolov6: A single-stage object detection framework for industrial applications. arXiv preprint arXiv:2209.02976 ( 2022).

Wang, C.-Y., Bochkovskiy, A. & Liao, H.-Y. M. Yolov7: Trainable bag-of-freebies sets new state-of-the-art for real-time object detectors. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 7464–7475 ( 2023).

Ultralytics. Yolov5. GitHub repository https://github.com/ultralytics/yolov5 ( 2020). Accessed: 2025-2-27.

Wang, A. et al. Yolov10: Real-time end-to-end object detection. Adv. Neural Inform. Process. Syst. 37, 107984–108011 (2024).

Carion, N. et al. End-to-end object detection with transformers. In European conference on computer vision, 213–229 ( Springer, 2020).

Zhu, X. et al. Deformable detr: Deformable transformers for end-to-end object detection. arXiv preprint arXiv: 2010.04159 ( 2020).

Zhao, Y. et al. Detrs beat yolos on real-time object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 16965–16974 ( 2024).

Wang, C.-Y., Yeh, I.-H. & Mark Liao, H.-Y. Yolov9: Learning what you want to learn using programmable gradient information. In European conference on computer vision, 1–21 ( Springer, 2024).

Liu, X. et al. Efficientvit: Memory efficient vision transformer with cascaded group attention. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 14420–14430 ( 2023).

Zhang, H. et al. Dino: Detr with improved denoising anchor boxes for end-to-end object detection. arXiv preprint arXiv: 2203.03605 ( 2022).

Moorthy, S., KS, S. S., Arthanari, S., Jeong, J. H. & Joo, Y. H. Hybrid multi-attention transformer for robust video object detection. Eng. Appl. Artif. Intell. 139, 109606 (2025).

Li, H. et al. Slim-neck by gsconv: A lightweight-design for real-time detector architectures. J. Real-Time Image Process. 21, 62 (2024).

Hoanh, N. & Pham, T. V. Focus-attention approach in optimizing detr for object detection from high-resolution images. Knowl.-Based Syst. 296, 111939 (2024).

Nguyen, H., Ngo, T. Q., Uyen, H. T. T. & Duong, M. K. Enhanced object recognition from remote sensing images based on hybrid convolution and transformer structure. Earth Sci. Inform. 18, 1–15 (2025).

Hoanh, N. & Pham, T. V. A multi-task framework for car detection from high-resolution uav imagery focusing on road regions. IEEE Trans. Intell. Transport. Syst. (2024).

Tan, M., Pang, R. & Le, Q. V. Efficientdet: Scalable and efficient object detection. In Proceedings of the IEEE/CVF conference on computer vision and pattern recognition, 10781–10790 ( 2020).

Cheng, G. et al. Cssdet: Small object detection via cross-scale feature enhancement on drone-view images. Int. J. Digital Earth 17, 2414848 (2024).

Zhang, Y., Wu, C., Zhang, T., Liu, Y. & Zheng, Y. Self-attention guidance and multiscale feature fusion-based uav image object detection. IEEE Geosci. Remote Sensing Lett. 20, 1–5 (2023).

Sahin, O. & Ozer, S. Yolodrone: Improved yolo architecture for object detection in drone images. In 2021 44th International Conference on Telecommunications and Signal Processing (TSP), 361–365 ( IEEE, 2021).

Jiang, L. et al. Mffsodnet: Multi-scale feature fusion small object detection network for uav aerial images. IEEE Transactions on Instrumentation and Measurement ( 2024).

Tan, L., Liu, Z., Liu, H., Li, D. & Zhang, C. A real-time unmanned aerial vehicle (uav) aerial image object detection model. In 2024 International Joint Conference on Neural Networks (IJCNN), 1–7 ( IEEE, 2024).

Zhang, J. et al. Rethinking mobile block for efficient attention-based models. In 2023 IEEE/CVF International Conference on Computer Vision (ICCV), 1389–1400 ( IEEE Computer Society, 2023).

Ultralytics. Ultralytics. GitHub repository https://github.com/ultralytics/ultralytics ( 2024). Accessed: 2023-10-27.

Acknowledgements

This work was supported by the National Natural Science Foundation of China (62472010) and the Chongqing Natural Science Foundation (CSTB2024NSCQ-MSX0687).

Author information

Authors and Affiliations

Contributions

Li Tan conceived the research and designed the experiments. Chen Zhang conducted the experiments, including the implementation of the RT-UAV-SOD framework and testing on the VisDrone2019-DET dataset. Hua Bai and Zikang Liu analyzed the results, focusing on the performance evaluation of the module. Yibo Li contributed to data preparation and validation. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tan, L., Zhang, C., Bai, H. et al. A real-time and efficient detector for small object in UAV aerial images. Sci Rep 15, 39233 (2025). https://doi.org/10.1038/s41598-025-22855-w

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-22855-w