Abstract

In recent years, robust estimation of model parameters has attracted considerable attention in statistics and machine learning, particularly in the context of modeling data with outliers and related inverse problems. This paper introduces a novel log-truncated minimization estimator and a corresponding stochastic gradient descent (SGD) algorithm for quasi-generalized linear models. This approach provides a robust alternative to ordinary GLMs without assuming light-tailed error distributions. For independent non-identical distributed (i.n.i.d.) data, we derive non-asymptotic excess risk and \(\ell _2\)-risk bounds using log-truncated Lipschitz losses, assuming only a finite \(\beta\)-th moment for \(\beta \in (1,2]\). Notably, our estimator does not require higher moments or finite variance. Our contribution is to robustify the objective while allowing i.n.i.d. sampling. We also analyze the iteration complexity of SGD for finding stationary points of non-convex log-truncated minimization. Empirically, our SGD algorithm outperforms non-robust methods. We demonstrate its practical effectiveness through a real-data analysis of the German health care demand dataset using robust negative binomial regression.

Similar content being viewed by others

Introduction

Backgrounds

Quasi-generalized linear models (quasi-GLMs) extend ordinary and generalized linear regressions. They allow response variables to follow distributions beyond the normal and exponential families1,2,3,4,5. Robust estimation of heavy-tailed GLMs, especially those with infinite variance data, has recently gained attention6,7,8,9,10. In practice, data often contains noise or contamination, leading to heavier tails, as observed in finance, engineering, and genomic studies. For instance11, found that normalized gene expression levels often exhibit high kurtosis, indicating outliers and heavy tails. Outliers, although few, can introduce substantial bias in standard estimation. In finance, extreme events and outliers significantly affect models12,13, with infinite variance distributions like t-distributions and Pareto families modeling extreme risks. Ignoring outliers or applying overly aggressive bounded transformations can lead to inconsistent and inefficient estimates14.

Non-identically distributed data. An important factor contributing to data outliers is that samples may originate from different distributions. Classical machine learning and statistical theories often assume that n samples \(\{(X_i, Y_i)\}_{i=1}^n\) are i.i.d., which is often too restrictive for real-world data. The i.i.d. assumption is frequently violated for three main reasons15: 1. Data points are influenced by outliers, such as dataset bias or domain shift; 2. Distribution changes occur at some point (change point); 3. Data is generated from multiple distinct distributions rather than a single one, such as multi-armed bandit problems16.

The independent non-identically distributed (i.n.i.d.) data assumption is more relevant to real-world applications, particularly in industrial and complex social problems. I.n.i.d. data often arises in time-dependent contexts where distributions change over time9,10. In behavioral and social applications, significant heterogeneity exists that cannot be captured by the i.i.d. assumption17. For example18, performed a classification task on a dataset of cats and dogs with unknown breeds, violating the i.i.d. assumption. In computational biology, datasets often include multiple organisms and phenotypes19. In finance, factor models assume returns are driven by common factors, with effects varying across assets20. GLMs with i.n.i.d. data enable modeling such heterogeneity, improving understanding and prediction. Studies by21,22 have also developed distributionally robust i.n.i.d. learning methods for real-world applications.

Heavy-tailed data under finite moments. Extensive research assumes both input and output variables exhibit sub-Gaussian behavior23,24,25. When inputs or outputs are contaminated with measurement errors, these errors are also often assumed to be sub-Gaussian26. However, this assumption lacks robustness for unbounded covariates, as sub-Gaussian distributions have all k-th moments27. In practice, particularly in finance and extreme value theory, heavy-tailed data often lacks exponential moments, presenting additional challenges13.

Determining data corruption precisely is often impossible, making moment conditions a more practical assumption. We focus on data contaminated by heterogeneous, heavy-tailed errors, assuming heavy tails with bounded lower-order moments (e.g., Pareto distributions). Our goal is to develop robust estimators for general GLMs to ensure reliable estimation.

Notation. For \(\theta \in \mathbb {R}^p\), the \(\ell _q\)-norm is \({\Vert \theta \Vert _{\ell _q}} = {( \sum _{j=1}^p \theta _j^q )^{1/q}}\). The \(L_p\)-norm is \({\Vert Z \Vert _p} = (\textrm{E}|Z|^p)^{1/p}\) for \(p> 0\). Let \({\textrm{E}_n}f(X) = \frac{1}{n}\sum _{i=1}^n f(X_i)\) be the average moment for independent random variables \(\{X_i\}_{i=1}^n\) in a space \({\mathscr {X}}\). Let \((\Theta , d)\) be a metric space, and let \(K \subset \Theta\). A subset \({\mathscr {N}}(K,\varepsilon ) \subset \mathbb {R}^p\) is an \(\varepsilon\)-net for K if, for all \(x \in K\), there exists \(y \in {\mathscr {N}}(K,\varepsilon )\) such that \(\Vert x - y\Vert _{\ell _2} < \varepsilon\). The covering number \(N(K,\varepsilon )\) is the smallest number of closed balls of radius \(\varepsilon\) centered at points in K that cover K. For a smooth function f(x) on \(\mathbb {R}\), let \(\dot{f}(x) = df(x)/dx\) and \(\ddot{f}(x) = d^2 f(x)/dx^2\). For differentiable \(f: \mathbb {R}^p \rightarrow \mathbb {R}\), let \(\nabla f\) denote the gradient. For any scalar random variable Z, \(\Vert \cdot \Vert _{\psi _2}\) is the sub-Gaussian norm defined by \(\Vert Z\Vert _{\psi _2}=\sup _{p \ge 1} \frac{\left( \textrm{E}|Z|^p\right) ^{1 / p}}{\sqrt{p}}\).

Problem setup and contributions

Let the loss function be \(l(y, x, \theta )\), where \(y \in \mathbb {R}\) is the output, \(x \in \mathbb {R}^p\) is the input, and \(\theta \in \Theta\) is the parameter from the hypothesized space \(\Theta\). The common assumption that data is i.i.d. with a shared distribution F is often unrealistic in robust learning. Instead, we assume the random variables \(\{(X_i, Y_i) \sim F_i\}_{i=1}^n \in \mathbb {R}^p \times \mathbb {R}\) are independent, as concentration inequalities require either independence or a common mean. Define the expected empirical risk as: \(R_l^n(\theta ):= \frac{1}{n} \sum _{i=1}^n \textrm{E} l(Y_i, X_i, \theta )\). The true parameter \(\theta _n^*\in \mathbb {R}^p\) (which may not be unique) is defined as the minimizer of the empirical mean of the expected loss

We refer to \(\theta _n^*\) as the sample-dependent true parameter. In regression, the loss function is \(l(y, x, \theta ) = \ell (y, x^\top \theta )\), where \(\ell (\cdot , \cdot )\) is a bivariate function on \(\mathbb {R}^2\). Since \(\theta _n^*\) depends on n, it differs from the i.i.d. version: \(\theta ^*:= \arg \min _{\theta \in \Theta } \mathbb {E}[l(Y_1, X_1, \theta )]\), which is independent of n. The excess risk, \({{R}_l^{n}}(\hat{\theta }) - {{R}_l^{n}}({\theta ^*})\), is a commonly used measure of prediction accuracy for any estimator \(\hat{\theta }\) approximating the true value \({\theta ^*}\) in machine learning. When \({{R}_l^{n}}(\hat{\theta }) - {{R}_l^{n}}({\theta ^*})=o_p(1)\), it indicates prediction consistency in statistical regressions. The loss-function-based estimator is obtained through the empirical risk minimization (ERM):

However, \(\bar{\theta }\) is not a robust estimator under the heavy tailed assumption; see28 and the Remark 4 below.

This paper studies log-truncated minimization for quasi-GLMs under potentially unbounded Lipschitz losses. Although the resulting optimization is non-convex, we derive excess risk bounds for it. These bounds demonstrate robustness against heavy-tailed data and ensure predictive consistency, even for cases with finite or infinite variance. Additionally, we establish \(\ell _2\)-estimation error bounds using excess risk bounds from the log-truncated loss for quasi-GLMs. The contributions of this work are in 4 aspects:

-

Independent non-identical distributed data and \((1+\varepsilon )\)-moment conditions, \(\varepsilon \in (0,1]\). A major theoretical contribution of our work is relaxing the i.i.d. assumption for the random input \(X \in \mathbb {R}^p\) and output \(Y \in \mathbb {R}\), allowing for independent but non-identically distributed data. Additionally, we impose only weak moment conditions by the a log-truncated loss, better reflecting real-world scenarios.

-

Sharper excess risk bounds. Using refined proof techniques, we derive excess risk bounds with tighter constants, improving upon Theorem 2 in29, which had looser bounds. Additionally, our focus on the convex loss case addresses a gap in29, which primarily examined non-convex losses (e.g., regularized deep neural networks) without \(\ell _2\)-risk bounds.

-

Iteration complexity. Under mild moment conditions, we derive an iteration complexity of \(O(\epsilon ^{-4})\) for the stochastic gradient descent (SGD) log-truncated optimizer, guaranteeing that the average gradient norm is reduced to below \(\epsilon\).

-

Misspecified quasi-GLMs. We relax the requirement for a correctly specified output distribution, allowing for misspecification. Despite this, our results show that the SGD algorithm enables computationally feasible estimation for quasi-GLM regression, even with potential outliers.

Our goal is (i) to robustify against heavy-tailed noise via truncation and (ii) to allow non-identical sampling across i (drift in scale/design). Plain SGD tolerates i.n.i.d. under light tails(sub-Gaussian), but guarantees deteriorate with heavy tails with infinite-variance condition of data; our analysis shows truncation restores stability while retaining i.n.i.d. flexibility. The robust approach to GLMs with SGD is well-aligned with the goals of inverse problems, addressing challenges such as heavy-tailed noise and ill-posedness of Hessian matrix.

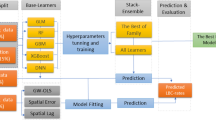

Outlines

The rest of the paper is organized as follows. In Section 2, we address the non-convex learning problem for quasi-GLMs using log-truncated loss functions. Section 3 establishes excess risk bounds under both finite and infinite moment assumptions, and analyzes the iteration complexity of the SGD algorithm for finding stationary points under mild conditions. We also demonstrate the robustness of log-truncated loss for misspecified GLMs. Sections 4 and 5 present simulations and real-world data analyses, showing the superiority of log-truncated quasi-GLMs over standard MLE-based GLMs in handling heterogeneous distributions and heavy-tailed noise. Proofs are provided in Supplementary Materials, along with key examples of quasi-GLMs, such as negative binomial and self-normalized Poisson regression, demonstrating the established excess risk bounds.

Robust quasi-GLMs for the i.n.i.d. data

Log-truncated loss

To relax exponential moment conditions to finite moment conditions, a robust procedure can be achieved by log-truncating the original estimating equations or loss functions, requiring only finite second moments30. For i.i.d. data \(\{X_i\}_{i=1}^{n}\) with finite variance, Catoni’s M-estimator is defined as the minimizer

where \(L_n(\theta ):=\frac{1}{\alpha ^2 n}\sum _{i=1}^{n}\phi (\alpha (X_i-\theta ))\) with \(\phi (x)=\int _{0}^{x}\psi (s)ds\); see31, and \(\alpha\) is a tuning parameter. Here, the truncation function is non-decreasing and satisfies

The rationale is that a function \(\psi (x)\) which grows significantly slower than a linear function–for example, a logarithmic function–reduces the impact of extreme outliers. This makes the outliers comparable to typical data points. The unbounded nature of the log-truncated function \(\psi (x)\) retains substantial data variability, offering flexibility without imposing restrictive bounds. This contrasts with traditional bounded M-estimators, which may overly constrain data and lose valuable information. For example, the Catoni log–truncated score function

The function \(\psi\) is odd, nondecreasing, and satisfies \(\psi (x)=x+O(x^{2})\) as \(x\rightarrow 0\) while \(|\psi (x)|\asymp \log (x^{2})\) as \(|x|\rightarrow \infty\), thereby downweighting extreme residuals. As is well known, the Cauchy distribution is heavy–tailed and has no finite moments; the classical Cauchy loss \(\rho _{c}(x):=\log (1+c x^{2})\) (\(c>0\)) is a standard robust alternative. Our choice may be viewed as an extension of the Cauchy loss/score, with the additional linear term inside the logarithm ensuring a smooth interpolation between the quadratic regime near zero and a logarithmic growth in the tails. We apply the \(\psi (x)\) to quasi-GLMs in below.

GLMs, quasi-GLMs and robust quasi-GLMs

The exponential family is a flexible class of distributions, including commonly used sub-exponential and sub-Gaussian distributions such as binomial, Poisson, negative binomial, normal, and gamma. This family has convexity properties that ensure finite variance, making it foundational in many statistical models. To explore the loss function from the exponential family, we begin with a dominating measure \(\nu (\cdot )\). Now, consider a random variable Y that follows the natural exponential family \(P_{\eta }\), which is indexed by the canonical parameter \(\eta\):

where c(y) is independent of \(\eta\), and \(\eta\) lies in \(\Theta = \left\{ \eta : \int c(y) \exp \{y \eta \} \, \nu (dy) < \infty \right\}\). This structure highlights the adaptability of exponential family models. They are effective at capturing diverse data-generating processes and are well-suited for supporting robust statistical analysis.

Let \(\eta _i = u(X_i^\top \theta )\) in \(dF_{Y_i}(y) = c(y_i) \exp \{y_i \eta _i - b(\eta _i)\}\), representing a non-identical distribution for \(i \in [n]\), where \(u(\cdot )\) is a known link function. The conditional likelihood of \(\{Y_i | X_i\}_{i=1}^{n}\) is the product of the n individual terms in (3). The average negative log-likelihood function is defined as the empirical risk

where the loss function is \(l(y, x^\top \theta ):= k(x^\top \theta ) - y u(x^\top \theta )\), with \(k(t):= b \circ u(t)\). If \(u(t) = t\), this is called the canonical link, aligning the model with the natural parameterization of the exponential family.

In robust GLMs, we do not assume that \(Y_i\) follows an exponential family distribution as in (3). For example,32 assumed that \(Y_i\) is sub-Gaussian. Additionally, the sample may contain outliers, and the data may not be i.i.d. Instead, we assume that \(\{ Y_i\}_{i=1}^{n}\) satisfies certain lower-order moment conditions(established in Theorem 1 below). The function in (4) is called the quasi-log-likelihood, from which we obtain the quasi-GLM loss \(k(x^\top \theta ) - y u(x^\top \theta )\). Let \(\alpha\) and \(\rho\) be tuning parameters. The robust log-truncated ridge-penalized estimator \(\hat{\theta }\) for quasi-GLMs is given by:

where \({{{\hat{R}}}_{\psi \circ l}}(\theta ):=\frac{1}{{n\alpha }}\sum _{i = 1}^n \psi [\alpha (k(X_{i}^ \top \theta )-Y u(X_{i}^ \top \theta ))]\) with Catoni’s log-truncated function \(\psi (x) = \mathrm{{sign}}(x)\log (1 + |x| + {x^2}/2)\). Note that Catoni’s score function is \(\dot{\psi }(x) = \frac{{1 + |x|}}{{1 + |x| + {x^2}/2}}\in (0,1]\), and thus the gradient of the penalized loss is given by

where the approximation follows from

Note that \(\ddot{\psi }(x) = \frac{{ - 2x(2 + \left| x \right| )}}{{{{(2 + 2\left| x \right| + {x^2})}^2}}}\in (-0.5,0.5)\), and the Hessian matrix of \({{{\hat{R}}}_{{\psi } \circ l} }(\theta )\) is

as \(\rho \rightarrow 0\). The last approximation holds by (6), provided that \({\mathrm{{E}}_n}\{ XX_{}^ \top {{\dot{l}}^2}(Y,X,{\theta _n^*})\ddot{\psi }[\alpha l(Y,X,{\theta _n^*})]\mathrm{{\} < }}\infty .\) The empirical Hessian matrix of the log-truncated ERM approximates the Hessian matrix of the original ERM. Therefore, an appropriate choice of a sufficiently small parameter \({\alpha }\) ensures that the resulting estimating equation closely resembles the original one.

The classical Newton’s algorithm becomes inapplicable when the empirical Hessian matrix is singular or lacks finite moments, which often occurs when the dimension p is large. This is due to the instability of inverting the Hessian. The problem is exacerbated by heavy-tailed data and the non-convexity of the log-truncated ERM problem. SGD is effective for solving non-convex optimization problems due to its simple iterative updates and avoidance of computationally expensive Hessian inversion33. This makes SGD particularly well-suited for robust machine learning, where traditional second-order methods are impractical. In Section 5, we use SGD to avoid Hessian inversion, relying only on high-probability bounds on the Hessian and moment conditions of the loss. This approach simplifies optimization, enhances efficiency, and effectively handles robust GLM estimation.

Main result

Excess risk bound under only variance conditions

Technically, we consistently impose the following compact space assumption (G.0) to simplify the analysis of the excess risk bound. This assumption allows us to leverage concentration inequalities for certain suprema of empirical processes defined over the compact space.

-

(G.0) Compact parameter space: the domain \(\Theta \subseteq \mathbb {R}^{p}\) and its radius is bounded with a constant r: \(\Vert \theta \Vert _{\ell _2} \le r, \quad \forall \theta \in \Theta\).

Assumption (G.0) ensures the existence of a finite covering number for the \(\varepsilon\)-net. That is, for any \(\varepsilon>0,\) there exists a finite \(\varepsilon\)-net of \(\Theta\). Next, we introduce several regularity conditions that are essential for establishing excess risk bound and the rate of convergence of the proposed log-truncated ERM estimators.

-

(G.1): Given \(0<A<\infty\), assume that u(x) is continuous differentiable and \(\dot{u}(x)\ge 0\) is locally bounded. That is, there exists a positive function \({g_A}({x})\) such that: \(0 \le \dot{u}(x^ \top \theta ) \le {g_A}(x),~\text {for}~\Vert \theta \Vert _{\ell _2}\le A\).

-

(G.2): Suppose that k(x) is continuous differentiable and \(\dot{k}(x)\ge 0\) is locally bounded. That is there exists a positive function \({h_A}({x})\) such that: \(0<\dot{k}(x^ \top \theta ) \le {h_A}(x),~\text {for}~\Vert \theta \Vert _{\ell _2}\le A.\)

-

(G.3): \(\mathrm{{E}}_n\Vert {{X}{g_{r + \frac{1}{n} }}({X}){Y}} \Vert _{\ell _2}^2 <\infty\) and \(\mathrm{{E}}_n\Vert {{X}{h_{r + \frac{1}{n} }}({X})}\Vert _{\ell _2}^2<\infty\).

-

(G.4): Let \(R_{{l^2}}^n(\theta ): = {\mathrm{{E}}_n}[k(X_{}^ \top \theta ) - Yu(X_{}^ \top \theta )]^2\). We have \(\sigma _R^\mathrm{{2}}(n): ={\sup }_{\theta \in {\Theta }} {R_{l^2}^n}(\theta )<\infty\).

Remark 1

The (G.1) and (G.2) are technical conditions to ensure the local Lipschitz condition of the loss function \(l(y, x, \theta ) = k(x^\top \theta ) - y u(x^\top \theta )\), that is, \(\exists\) a Lipschitz function of the data H(y, x) s.t.

see (C.3) in29. Lipschitz modulus to control the supremum over \(\theta \in \Theta\) via covering numbers. In covering arguments, the local Lipschitz condition translates the pointwise concentration at a net point to the whole parameter ball. By the first-order Taylor expansion of \(l(y,x,\cdot )\) as the following

We can choose H(y, x) satisfying \(H(y,x)\ge \sup \limits _{{\eta _1},{\eta _2} \in B_{r_n}} \Vert {\nabla _\eta l(y,x,(t{\eta _2} + (1 - t){\eta _1}))}\Vert _2\) with \(r_n>0\). Fix a \(\eta \in B_{r_n}\), we compute the gradient function \(\nabla _\eta l(y,x^\top \eta )= [-y\dot{u}(x^\top \eta )+\dot{k}(x^\top \eta ) ]x^\top\). From (8), H(y, x) is given by

Under condition (G.2) and put \({r_n}=r+1/n\), one has \({\mathrm{{E}}_n}H^2(Y,X)\le 2(\mathrm{{E}}_n\Vert {{X}{g_{r + \frac{1}{n} }}({X}){Y}} \Vert _{\ell _2}^2+\mathrm{{E}}_n\Vert {{X}{h_{r + \frac{1}{n} }}({X})}\Vert _{\ell _2}^2) <\infty\), which is exactly the moment condition (C.4) in29.

A trivial example of (G.1) is \(u(x)=x\) with \({g_A}(x) \equiv 1\), which corresponds to the natural link function in GLMs. Recall that \(k(t):=b \circ u (t)\), giving \(\dot{k}(t) = \dot{u}(t)\dot{b}(u(t))\). If \(\{{Y_i}|{X_i}\}_{i=1}^n\) follows a distribution in the exponential family, then we have \(\mathrm{{E}}\{ {Y_i}|{X_i}\} = \dot{b}(u(X_i^ \top \theta _n^*)), i = 1,2, \cdots ,n.\) Under (G.0) and (G.1), it follows that (G.2) holds with \(A=r\) and \({h_r}({X_i}) = {g_r}({X_i})\mathrm{{E}}\{ {Y_i}|{X_i}\}\), as implied by the inequality

The (G.3) only need \(\mathrm{{E}}_n\Vert {{X}{Y}} \Vert _{\ell _2}^2 <\infty\) and \(\mathrm{{E}}_n\Vert X\Vert _{\ell _2}^2<\infty\), provided that both \({h_A}({x})\) and \({g_A}({x})\)are bounded. For quasi-GLMs with i.i.d. data34, imposed the moment condition \(\mathrm{{E}}{Y_1^{7/3}} < \infty\) to establish the laws of iterated logarithm for the quasi-maximum likelihood estimator in GLMs with bounded inputs; a similar bounded input condition is required in3. However, our (G.4) only requires the second moment condition on the responses, that is,

where \(C_1\) and \(C_2\) are constants, assuming that both \({h_A}({x})\) and \({g_A}({x})\) are bounded.

The first main result is presented as follows.

Theorem 1

(Excess risk bound under finite variance conditions) Let \(\hat{\theta }\) be defined by (5) and \({\theta _n^*}\) be given by (1) with \(l(y, x,\theta ):=k(x^ \top \theta )-y u(x^ \top \theta )\). If \(\alpha \le \sqrt{\frac{1}{{2n}}\mathop {\sup }\nolimits _{\theta \in {\Theta }}[ R_{l^2}^{ n}(\theta )]^{-1}[\log (\frac{1}{{{\delta ^2}}}) + p\log ( {1 + 2rn})]}\) with \(\delta \in (0,1)\), under (G.0)-(G.4), we have

with probability at least \(1-2\delta\). Further more, if \(u(t)=t\), we have

Remark 2

If \(\rho \rightarrow 0\), Theorem 1 provides non-asymptotic excess risk bounds, forming the theoretical foundation for establishing the convergence rate

and the prediction consistency of the proposed estimators. In the data i.i.d. setting, the constants \(3/(2\sqrt{2})\approx 1.06\) and \(\sqrt{2}\) of \(\frac{\mathrm{{E}}_n[\Vert {{X}{g_{r + \frac{1}{n} }}({X}){Y}} \Vert _{\ell _2}^2 + \Vert {{X}{h_{r + \frac{1}{n} }}({X})}\Vert _{\ell _2}^2]}{{{\sigma _R(n)}{n^2}}}+ \sqrt{2}{\sigma _R}(n)\) in Theorem 1 is sharper than the constants \(2/\sqrt{3}\approx 1.15\) and 6 in Theorem 2 of29 (also summarized in Corollary 2 with \(\beta =2\) in the following subsection). To see this constant, Theorem 4 (\(\beta =2\)) and equation (43) in29 gives

with probability at least \(1 - 2\delta\), where \({R_l}(\hat{\theta }_{n} )\), \({R_l}({\theta ^*})\) and \(\sigma _R\) are the i.i.d. version of \({R_{l}^n}(\hat{\theta })\), \({R_{l}^n}({\theta _n^*})\) and \(\sigma _R(n)\), respectively. So the constant of \(\frac{{{\mathrm{{E}}}[\Vert {{X}{g_{r + \frac{1}{n} }}({X}){Y}} \Vert _{\ell _2}^2 + \Vert {{X}{h_{r + \frac{1}{n} }}({X})}\Vert _{\ell _2}^2]}}{{{{\sigma _R}n^2}}}\) is \(2/\sqrt{3}\) and the constant of \(\sigma _R\) is 6.

Remark 3

If the tuning parameter \(\alpha\) is misspecified, the excess–risk bound deteriorates. Letting \(\alpha \downarrow 0\) recovers the untruncated estimating equation \(\frac{1}{n}\sum _{i=1}^n X_i\,\dot{\ell }\!\big (Y_i, X_i^\top \theta \big )=0,\) corresponding to the ERM without truncation. Thus \(\alpha\) plays the usual variance–bias principle. Theorem 1 yields consistency when \(\alpha\) balances stochastic and truncation errors; optimizing the bound gives \(\alpha \asymp \sqrt{\frac{p\log n}{n}}.\) In the proof we enforce the balance by equating the “variance” and “bias” terms, \(2\alpha \sup _{\theta \in \Theta } R^{\,n}_{\ell ^{2}}(\theta ) \;=\; \frac{1}{n\alpha }\,\log \!\left( \frac{{\mathscr {N}}(\Theta ,\varepsilon )}{\delta ^{2}}\right) ,\) where \(R^{\,n}_{\ell ^{2}}(\theta )=n^{-1}\sum _{i=1}^{n}\ell ^{2}\!\big (Y_i,X_i^\top \theta \big )\) and \(N(\Theta ,\varepsilon )\) denotes the \(\varepsilon\)-covering number of \(\Theta\). This choice yields the stated order for \(\alpha\) and the resulting excess–risk rate.

Remark 4

In the i.i.d. setting,25 analyzed the high-probability performance of the excess risk bounds for the ERM estimator \(\bar{\theta }\), stating that \(\bar{\theta }\): \({R_l}(\bar{\theta }) - {R_l}({\theta _n^*})\le \frac{O(1)}{{n }}({{ {{\Vert {{\left\| {{X_1}} \right\| _{\ell _2}}} \Vert }_{{\psi _2}}}}})\) with high probability. This result holds under the assumptions of a three-times continuously differentiable self-concordant and convex loss function, as well as the sub-Gaussian exponential moment condition on \(X\). Similarly, for regression with quadratic loss35, established the high-probability excess risk bound: \({R_l}(\bar{\theta }_H) - {R_l}({\theta _n^*})\le O(\frac{d}{{n }}+\frac{1}{{n^{3/4} }})\) under the 8th moment condition for the regression noise, where \(\bar{\theta }_H\) is the ERM of a smoothed convex Huber loss.

However, if \({{ {{| {{\left\| {{X_1}} \right\| _{\ell _2}}}\Vert }_{{\psi _2}}}}}= \infty\) or the higher moments do not exist, consistency of the excess risk fails, and \(\bar{\theta }\) becomes non-robust under heavy-tailed assumptions. In contrast, under the moment conditions (G.3) and (G.4), our estimator \(\hat{\theta }\), based on the log-truncated loss, is more robust than \(\bar{\theta }\).

In the following result, we present an explicit upper bound for the \(\ell _2\)-error \({\Vert {\hat{\theta }- \theta _n^*} \Vert _{\ell _2}^2}\) under two additional assumptions: one concerning model specification and the other regarding the Hessian matrix condition.

-

(G.5) Specification of the average model: Assume that

$$\begin{aligned} \sum _{i=1}^n\mathrm{{E}}\{X_i\dot{u}(X_i^ \top \theta _n^*)[Y_i - \dot{b}(u(X_i^ \top \theta _n^*))]\}=0. \end{aligned}$$(11) -

(G.6) Hessian matrix condition(local strong convexity): Assume that there exists a positive constant \({c_{2r} }\) such that

$$\begin{aligned} \inf _{\Vert \eta \Vert _{\ell _2}\le 2r}{\mathrm{{E}}_n}\left\{ { - XX^ \top \{ \ddot{u}(X^ \top \eta )[Y - \dot{b}(u(X^ \top \eta ))] + {{\dot{u}}^2}(X_{}^ \top \eta )\ddot{b}(u(X^ \top \eta ))\} } \right\} \succ \textrm{I}_{p}{c_{2r} }. \end{aligned}$$(12)

(G.5) is the population first-order stationarity of \(R_l^n(\theta )\); it does not require pointwise correct specification. It only requires that misspecification be on average to zero, when residuals are mean-zero and orthogonal to \(X_i\dot{u}(\cdot )\). (G.6) is a local strong-convexity: the average negative Hessian on the ball \(\{\Vert \eta \Vert _2\le 2r\}\) is uniformly positive definite with constant \(c_{2r}>0\). With the canonical link \(u(t)=t\), it reduces to \(\mathrm{{E}}_n[X X^\top \ddot{b}(X^\top \eta )]\succeq c_{2r} I_p\), which holds if \(\mathrm{{E}}_n[XX^\top ]\succeq \kappa I_p\) and \(\ddot{b}\) is bounded below on the relevant range (e.g., logistic: \(\ddot{b}(z)\in (0,1/4]\); Poisson: \(\ddot{b}(z)=e^z\ge e^{-M}\) over \(|z|\le M\)). For smooth non-canonical links, the term with \(\ddot{u}(\cdot )[Y-\dot{b}(u(\cdot ))]\) is neutralized by (G.5); positive curvature comes from \(\dot{u}^2\,\ddot{b}\).

With (G.5) and (G.6), we can obtain the following non-asymptotical \(\ell _2\)-estimation error.

Corollary 1

(\(\ell _2\)-estimation error) Under the notations and assumptions in Theorem 1, and assuming that Conditions (G.5) and (G.6) hold, then we have with probability at least \(1 - 2\delta\)

Corollary 1 with (G.5) implies that our robust quasi-GLMs can be misspecified, i.e., \({\textrm{E}}\{ {Y_i}|{X_i}\} \ne \dot{b}(u(X_i^ \top \theta _n^*))\) for some i. Conditions (G.5) and (G.6) are the minimal additional requirements necessary to ensure consistency in terms of the \(\ell _2\)-estimation error, provided that the excess risk bound in Theorem 1 holds.

Excess risk bound under infinite variance conditions

Motivated by the log-truncated loss for mean estimation under \((1+\varepsilon )\)-moment condition in29,36 and references therein, we employ the almost surely continuous and non-decreasing function \(\psi : \mathbb {R} \rightarrow \mathbb {R}\) as the truncated function, that is,

where \(\lambda (x)>0\) is a higher-order function of |x| that satisfies29:

-

(C.1) The function \(\lambda (x): \mathbb {R}_+ \rightarrow \mathbb {R}_+\) is a continuous non-decreasing function: \(\lim _{x \rightarrow \infty } \frac{\lambda (x)}{x}=\infty\). Moreover, there exist some \(c_2>0\) and a function \(f: \mathbb {R}_+ \rightarrow \mathbb {R}_+\) such that

-

(C.1.1) \(\lambda (tx) \le f(t)\lambda (x)\) for all \(t, x \in \mathbb {R}_+\), where \(\lim _{t \rightarrow 0^{+}} {f(t)}/{t}=0\);

-

(C.1.2) \(\lambda (x+y)\le c_2[\lambda (x)+\lambda (y)]\) for all \(x, y \in \mathbb {R}_+\).

-

Throughout this section, we specifically choose \(\lambda (x)=|x|^{\beta }/{\beta }\) in (13), resulting in the following expression:

According to (C.1), for sufficiently small values of x, we have \(\psi _\beta (x) \approx x\), whereas for larger values of x, \(\psi _\beta (x)\) is significantly smaller than x (\(\psi _\beta (x) \ll x\)). Based on (14), the log-truncated robust estimator \(\hat{\theta }\) is defined as

where \(l(y, x,\theta ):=k(x^ \top \theta )-y u(x^ \top \theta )\), and \(\rho>0\) is the penalty parameter.

Next, following the theoretical framework of29, we present the second result, which provides insights into determining the appropriate order of the tuning parameter \(\alpha\) in Theorem 2. Our proposed tuning parameter is variance-dependent, ensuring that the SGD optimization remains computationally feasible for a wide class of loss functions.

Corollary 2

(Excess risk bound under infinite variance conditions) Let \(\hat{\theta }\) be defined by (15) with \(\beta \in (0,2]\) and \({\theta _n^*}\) be given by (1). Under (G.0)-(G.4), and with the tuning parameter \(\alpha \le \frac{1}{n^{1/\beta }}\left( \frac{\log ({\delta ^{ - 2}}) + p\log \left( {1 + 2rn} \right) }{[(2^{{\beta }-1} + 1)\sup _{\theta \in \Theta } R_{\lambda \circ l}^n(\theta )]}\right) ^{1/\beta }\), we have with probability at least \(1 - 2\delta\),

where we allow \(p=p_n\) to grow slowly with n.

Remark 5

If Condition (G.4) does not holds, we additionally assume that \({\mathrm{{E}}_n}\{[|Y|{g_{r}}(X)+{h_{r}}(X)]\Vert X \Vert _2\} \ll O(n)\), \({\mathrm{{E}}_n}\{ ||Y|{g_{r}}(X)+{h_{r}}(X)]\Vert X \Vert _2|^\beta \} = O({n^\beta })\) and \(p = o({n^{ - 1}}\log n).\) Under these conditions, the consistency of the excess risk holds, i.e.,

\({R_l^n}(\hat{\theta }) - {R_l^n}({\theta _n^*})=O\left( {{{\left( {\frac{{p\log n}}{n}} \right) }^{ \frac{{\beta - 1}}{\beta }}}}+\rho \right) =o_p(1),\) provided that \(\rho =o(1)\).

By the definition of the true risk minimization (1), we have \({R_l^n}(\hat{\theta }) - {R_l^n}({\theta _n^*})>0\) for all \(\theta\) such that \(\Vert \theta \Vert _{\ell _2} \le r\). Furthermore, the lower bound condition for excess risk in terms of \(\ell _2\)-error is indispensable.

-

(G.7) Hessian condition for the risk function:

\({R_l^n}(\theta ) - {R_l^n}({\theta _n^*})\ge C_L{\left\| {\theta - \theta _n ^*} \right\| _{\ell _2}^2}\) for all \(\theta \in \Theta\), where \(C_L\) is a constant.

Suppose that \({R_l^n}(\theta )\) admits a Taylor expansion at \({\theta _n^*}\), i.e.,

for some \(t\in (0, 1)\). Since \(\nabla {R}_l^n(\theta _n^*)=0\) by the definition of the true risk minimization (1), the first-order term vanishes. Under (G.0), Condition (G.7) holds naturally if the Hessian matrix \({\nabla ^2{R}_l^n}(t\theta _n^* + (1 - t)\theta )\) is positive definite under the restriction \(\left\| {t\theta _n^* + (1 - t)\theta } \right\| \le \left\| {\theta _n^*} \right\| + \left\| {\theta } \right\| \le 2r\). Combing this with (G.7), Theorem 2 immediately yields the \(\ell _2\)-risk bounds.

Corollary 3

Under the same conditions as in Theorem 2, if (G.7) holds with a constant \({C_L}\), then with probability at least \(1 - 2\delta\), we have

i.e., \({\Vert {\hat{\theta }- \theta _n^*} \Vert _{\ell _2}^2}=O_p( {{{( {\frac{{p\log n}}{n}})}^{\frac{{\beta - 1}}{\beta }}}}+\rho )\).

The SGD algorithm

SGD for Parameter Estimations

Consider the general log-truncated regularized optimization with a \(\ell _2\)-regularization penalty:

where \(l(y,x^\top \theta ):=b(u(x^ \top \theta ))-y u(x^ \top \theta )\) and \({\psi }(x)\) satisfies (13). Here \(\rho> 0\) serves as the penalty parameter to control model complexity, and \(\alpha> 0\) is a robust tuning parameter that needs to be calibrated appropriately. In our simulations, the optimization problem is addressed using SGD, which is implemented as follows:

where \(i_t\) is an index randomly sampled from the data, and \(\{r_t\}\) is the learning rate sequence.

The SGD algorithm aims to approximate the minimizer of the empirical risk by iteratively updating the parameter vector \(\theta\) in the direction opposite to the gradient of the objective function. This iterative approach provides a computationally efficient way to solve the non-convex optimization problem, particularly in robust learning scenarios.

To select the two tuning parameters, \(\alpha\) and \(\rho\), we employ a five-fold cross-validation (CV) procedure to identify the optimal parameter pair \((\alpha , \rho )\) within an effective subset of \(\mathbb {R}_{+}^2\). The selection criterion is based on minimizing the mean absolute error (MAE) between the observed outputs \(Y_i\) and the estimated outputs \(\hat{Y}_i\), is computed as \(\frac{1}{n_0}\sum _{i=1}^{n_0}|\hat{Y}_i(\alpha ,\rho )-Y_i|.\)

For comparison, we also consider the standard ridge regression without truncation, where the optimization problem is \(\hat{\theta }_n(\rho ): =\textrm{argmin }_{\theta \in {\Theta }}\{\frac{1}{{n}}\sum _{i = 1}^n l({Y_i}, {{X}}_i^\top \theta )+\rho \Vert \theta \Vert _{\ell _2}^2\}\), where \(\rho\) is the regularization parameter. The corresponding SGD iterations for solving this optimization problem are given by: \(\theta _{t+1}=\theta _{t}-r_t \nabla _{\theta }l(Y_{i_t}, X_{i_t}^\top \theta _{t}) - 2r_t\rho \theta _t, t=0,1,2,\cdots\), where the penalty parameter \(\rho\) is also selected via cross-validation. This approach ensures that the model complexity is controlled while minimizing the empirical risk, thereby providing a balance between bias and variance.

Iteration Complexity of SGD

In this section, we study the iteration complexity of SGD for minimizing the proposed log-truncated loss functions. Our results show that that the number of iterations required to make the average gradient norm less than \(\epsilon\) is \(O(\epsilon ^{-4})\).

Recall that the gradient of the empirical log-truncated loss function is

The Hessian matrix of the empirical log-truncated GLM’s loss function is

where \(l(y, x^\top \theta )=b(u(x^\top \theta ))-y u(x^\top \theta )\). The bounded Hessian matrix is required to ensure the iteration complexity of SGD.

We establish the iteration complexity theory for SGD, based on the second-moment assumption of the gradient function of data33,37. This assumption ensures that the gradient’s variance is controlled, allowing the algorithm to converge efficiently. The results indicate that, under appropriate conditions, the SGD algorithm can reliably minimize the log-truncated loss function, even in non-convex settings.

Theorem 2

Let \(F_n(\theta ):= \frac{1}{{n\alpha }}\sum _{i = 1}^n {\psi }[\alpha l({Y_i}, {X}_i^\top \theta )]+\rho \Vert \theta \Vert _{\ell _2}^2\) be the penalized empirical log-truncated loss function. Define the input vector \(\varvec{X}:= \{X_i\}_{i=1}^n\) and \(\theta ^*: = \mathop {\arg \min }\nolimits _{\theta \in {\Theta }}\textrm{E} [F_n(\theta )\mid \varvec{X}]\), where \(l(y, x^\top \theta )\) is the quasi-GLMs loss function. Suppose the following high-probability Hessian matrix condition holds:

where \(\Vert \cdot \Vert\) is the spectral norm of the matrix. Assume the following moment condition on the gradient function:

holds for a randomly selected data \((Y_{i_t}, X_{i_t})\) from \(\{(Y_{i}, X_{i})\}_{i=1}^n\).

-

(a)

. If \(r_t\) satisfies (19) in the SGD algorithm (16), then with probability at least \(1-\varepsilon\)

$$\begin{aligned} \textrm{E}_R[\textrm{E}[\Vert \nabla F_n(\theta _R )\Vert ^{2}\mid {\varvec{X}}] \le \frac{2J_\epsilon (X) \textrm{E}[F_n(\theta _{1}) - F_n(\theta ^*)\mid \varvec{X}]}{\sqrt{T}} + \frac{\sigma _{\alpha }^2(X)}{{\sqrt{T}}}, \end{aligned}$$(20)where \(R \sim U([T])\) (U([T]) is the discrete uniform random variable on the support [T]).

-

(b)

. Under the setting in (a), if \(T = O\left( \frac{\max \{\sigma _{\alpha }^4(X), J_\epsilon (X)^2\}}{\epsilon ^4}\right)\) with \(\epsilon> 0\), then the SGD sequence \(\{\theta _t\}_{t \ge 1}\) finds an approximate stationary point with probability at least \(1-\varepsilon\) .

\(\textrm{E}_R[\textrm{E}\left\| \nabla F_n(\theta _R )\right\| _{\ell _2}^{2}\mid \varvec{X}] \le \epsilon ^2\) for \(R \sim U([T])\)

Remark 6

The high-probability Hessian bound \(\Vert \nabla ^2 F_n(\theta )\Vert \le J_\epsilon (X)\) is a local smoothness condition (with that yields the one-step descent inequality underlying (20). The moment condition in (19) upper-bounds the conditional variance of the stochastic gradient; the log-truncation (via \(\psi\) and the scale \(\alpha\)) makes \(\sigma _\alpha ^2(X)\) finite even under heavy tails. When the data is i.i.d., we set \(r_t = 1/(J_\epsilon (X) \sqrt{t})\) in the SGD algorithm (16) to satisfy (19)[See the proof in A.5].

Compared with standard SGD analyses37 that assume uniformly bounded second moments of stochastic gradients, the log-truncated loss induces bounded effective gradients (captured by \(\sigma _\alpha ^2(X)\)) without requiring sub-Gaussian tails. This is analogous in spirit to gradient clipping schemes, but keeps the update as an unbiased gradient of a smoothly modified objective.

The theorem matches the state-of-the-art nonconvex SGD iteration complexity under smoothness and bounded variance, while extending it to heavy-tailed settings via log-truncation of loss function.

Simulations

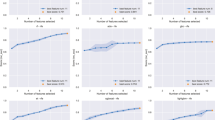

In this section, we conduct simulations to evaluate the performance of our proposed roobust GLMs. The experiments compare the estimation errors of log-truncated logistic and negative binomial regressions against those of standard models. All models are optimized via SGD and are evaluated under various data-generating processes.

For these two types of GLMs, we first generate their covariates, assuming that the covariates are contaminated by heavy-tailed distributions. Specifically, the covariates \(\{X_i\}_{i=1}^n\) for each \(X_i \in \mathbb {R}^p\) can be expressed as \(X_i=X_i'+\xi _i\), where \(\{X_i'\}_{i=1}^n\) are \(\mathbb {R}^p\)-valued random vectors sampled from different normal distributions \(N(\textbf{0},{\textbf{Q}}(\varsigma ))\), each characterized by a distinct covariance structure. The covariance matrix \({\textbf{Q}}(\varsigma )\) takes the form of either an identity matrix (when \(\varsigma = 0\)) or a Toeplitz matrix (when \(\varsigma \ne 0\)). The Toeplitz matrix is given by

In our simulations, we set \(\varsigma\) to 0, 0.3, and 0.5. For a heterogeneous sample set \(\{X_i'\}_{i=1}^n\), \(\lceil n/3 \rceil\) samples are drawn from \(N({\textbf{0}}, {\textbf{I}})\), \(\lceil n/3 \rceil\) samples from \(N({\textbf{0}}, {\textbf{Q}}(0.3))\), and \(\lfloor n/3 \rfloor\) samples from \(N({\textbf{0}}, {\textbf{Q}}(0.5))\). The noise terms \(\{\xi _i\}_{i=1}^n\) are i.i.d. \(\mathbb {R}^p\)-valued random vectors, with each component \(\xi _i = (\xi _{i1}, \dots , \xi _{id})^\top\) independently drawn from a Pareto distribution with a scale parameter of 1 and shape parameters \(\tau \in \{1.6, 1.8, 2.01, 4.01, 6.01\}\). We set \(\beta = 1.5\) if \(\tau < 2\), and \(\beta = 2\) if \(\tau \ge 2\). The true regression coefficients for both GLMs are sampled independently from a uniform distribution on [0, 1]. Tables 1 and 2 present the average \(\ell _2\)-estimation and standard errors of 100 times for the logistic regression coefficient with and without regularization, respectively.

Table 1 compares the average \(\ell _2\)-estimation errors and standard errors for logistic regression under Pareto-distributed noise across various settings of the noise tail parameter \(\tau\), robust loss parameter \(\beta\), and dataset dimensions (n, p). The results demonstrate that truncation significantly reduces \(\ell _2\)-estimation errors compared to non-truncation, particularly for heavy-tailed noise (\(\tau \le 2.01\)) and smaller datasets (\((n, p) = (100, 50), (200, 100)\)). For instance, at \(\tau = 2.01\), \(\beta = 2.0\), and \((n, p) = (100, 50)\), truncation yields an error of 0.351, while non-truncation results in 0.503, a 30.2% reduction. Optimal performance is observed with larger datasets, such as \((n, p) = (700, 300)\) and (1000, 1000), where errors drop to 0.169–0.185 under truncation. For heavy-tailed noise (\(\tau = 1.60, 1.80, 2.01\)), \(\beta = 2.0\) consistently outperforms \(\beta = 1.5\) for smaller datasets, reducing errors (e.g., 0.351 vs. 0.369 at \(\tau = 1.60\), \((n, p) = (100, 50)\)). For lighter-tailed noise (\(\tau \ge 2.01\)), \(\beta = 2.0\) achieves comparable or better performance, particularly for larger datasets.

Table 2 highlights the superior performance of the robust method (Truncation) in logistic regression under Pareto noise, with low and stable \(\ell _2\)-estimation errors (0.318–0.340) and standard errors (0.008–0.013) across all settings. The method without regularization struggles in the Non-truncation setting with heavy-tailed noise (\(\tau = 1.60, 1.80\)), exhibiting high errors (up to 63.558) and variability (up to 5.744), but improves for lighter-tailed noise (\(\tau = 4.01, 6.01\)). The Robust method is preferable for heavy-tailed noise, while Ridge becomes competitive as noise tails lighten. It should be noted that the logistic regression has bad behavior without ridge penalty and truncation, since the Hessian matrix of optimization under heavy-tailed input is unbounded and thus is unstable.

Tables 3 and 4 present the average \(\ell _2\)-estimation and standard errors of the negative binomial regression parameters \(\theta _n^*\) with and without regularization, respectively. Table 3 reports average \(\ell _2\)-estimation errors for robust negative binomial regression under Pareto noise, varying the noise tail parameter \(\tau\), robust loss parameter \(\beta\), and dataset dimensions \((n, p)\). Consistent with Table 1, truncation significantly outperforms non-truncation, especially for heavy-tailed noise (\(\tau \le 2.01\)) and smaller datasets. For instance, at \(\tau = 2.01\), \(\beta = 2.0\), and \((n, p) = (100, 50)\), truncation achieves an error of 0.250 versus 0.300 for non-truncation (16.7% reduction). Lowest errors occur at \((n, p) = (700, 300)\) and \((1000, 1000)\), with truncation errors of 0.330–0.358. For heavy-tailed noise (\(\tau = 1.60, 1.80\)), \(\beta = 1.5\) slightly outperforms \(\beta = 2.0\) in smaller datasets (e.g., 0.263 vs. 0.250 at \(\tau = 1.60\), \((n, p) = (100, 50)\)), while \(\beta = 2.0\) performs comparably for lighter-tailed noise (\(\tau \ge 4.01\)).

From Table 4, it can be seen that the robust method (truncation) slightly outperforms the non-robust method (without regularization), showing a lower \(\ell _2\text {-error}\) (0.284–0.365 vs. 0.316–0.392) and \(\text {standard errors}\) (0.038–0.172 vs. 0.056–0.296). The differences compared to a regularized scenario are likely minimal due to the inherent stability of the Robust method through truncation and the negative binomial model’s capability to manage overdispersed noise. In comparison to logistic regression, the negative binomial model demonstrates more consistent performance across noise levels, thereby reducing the necessity for regularization to achieve stable estimates.

Real data analysis

To investigate count data regressions, we apply the robust negative binomial regressions to the German health care demand database, which is provided by the German Socioeconomic Panel (GSOEP). This dataset can be accessed at:

http://qed.econ.queensu.ca/jae/2003-v18.4/riphahn-wambach-million/.

The dataset contains 27,326 patient observations (each identified by a unique ID) collected over seven years: 1984, 1985, 1986, 1987, 1988, 1991, and 1994. The sample sizes for each year are {3874, 3794, 3792, 3666, 4483, 4340, 3377}.

For each patient, there are 21 covariates available for analysis, along with two alternative dependent variables: DOCVIS (the number of doctor visits in the three months preceding the survey) and HOSPVIS (the number of hospital visits in the last calendar year). Table 5 presents the descriptive statistics of the variables in the dataset. It is noteworthy that the distributions of some covariates, such as age, handper, hhninc, and educ, exhibit heavy tails, as indicated by their high kurtosis coefficients. Consequently, we employ our proposed robust NBR to separately explore the relationships between the independent variables DOCVIS, HOSPVIS, and the 21 covariates. One challenge with the NBR model is the unknown dispersion parameter \(\eta\). To address this, we first estimate \(\eta\) by maximizing the joint log-likelihood function of the model.

Once we obtain the estimated \(\hat{\eta }\), we incorporate it into the NBR optimization process. For each year, we randomly split the German health care demand dataset into a training set \((X_{\text {train}}, Y_{\text {train}})\), comprising 33% of the observations, and a testing set \((X_{\text {test}}, Y_{\text {test}})\), comprising the remaining 67%. We use the truncation function \(\lambda (|x|) = |x|^{\beta }/\beta\), where \({\beta } \in (1, 2]\) is incremented in steps of 0.1 to determine the optimal moment order. The training dataset is used to fit the NBR models, after which we compute the mean absolute errors (MAEs) of the predicted values of \(Y_{\text {test}}\) to evaluate model accuracy. A standard NBR is also trained using the same procedure.

Table 6 reports MAEs for NBR on the German health care demand dataset, comparing non-truncation and truncation (gradient clipping) methods for doctor visits (DOCVIS) and hospital visits (HOSPVIS) across 1984–1994. Truncation consistently achieves lower MAEs than non-truncation for both outcomes. For DOCVIS, truncation reduces MAEs by 5.0–9.5%, with the largest improvement in 1991 (\(\beta = 2.0\): 2.167 vs. 2.350, 7.8% reduction). For HOSPVIS, truncation yields 49.7–50.7% lower MAEs, with the smallest error in 1984 (\(\beta = 1.9\): 0.127 vs. 0.256). In contrast to synthetic datasets with heavy-tailed Pareto noise (cf. Table 3), where truncation also excels, these results suggest truncation’s robustness extends to real-world health care data, likely due to effective handling of outliers or noise in the dataset.

Discussion

In this paper, we introduce log-truncated robust regression models specifically designed to address heavy-tailed contamination in both input and output data, with a particular emphasis on quasi-GLMs. Under the independent non-identical distributed data, we derive sharp excess risk bounds for the proposed log-truncated ERM estimator, accommodating both finite and infinite variance conditions. The optimization of the log-truncated ERM estimator is carried out via SGD, and we analyze the iteration complexity of SGD in minimizing the associated non-convex loss functions. This ensures the computational feasibility of the proposed methods even for non-identical distributions datasets with potential outliers.

Our numerical simulations demonstrate that the proposed log-truncated quasi-GLMs consistently outperform standard approaches in terms of robustness, particularly in scenarios involving non-identically independently distributed data and heavy-tailed noise. By considering various covariance structures and Pareto-distributed noise, the simulation results reveal that our models achieve substantially lower estimation errors compared to conventional models.

Furthermore, we applied our robust NBR model to the German health care demand dataset, which is characterized by heavy-tailed covariates, to examine the relationship between medical visit frequencies and socio-economic factors. The results indicate that our truncated NBR model yields significantly smaller prediction errors than standard NBR models, thereby underscoring the practical advantages of our approach. These findings underscore the effectiveness of our robust models in handling real-world data with heavy-tailed distributions. The practical relevance of our methods extends beyond healthcare to various domains involving count data regression, making them particularly valuable for applications in fields with unpredictable or non-Gaussian data behavior, such as finance, insurance, and epidemiology.

Data availability

Data is provided in http://qed.econ.queensu.ca/jae/2003-v18.4/riphahn-wambach-million/.

References

McCullagh, P. & Nelder, J. A. Generalized Linear Models Vol. 37 (CRC Press, 1989).

Chen, X. Quasi likelihood method for generalized linear model (in chinese) (Press of University of Science and Technology of China, 2011).

Yang, X., Song, S. & Zhang, H. Law of iterated logarithm and model selection consistency for generalized linear models with independent and dependent responses. Front. Math. China 1–32 (2021).

Yu, J., Wang, H., Ai, M. & Zhang, H. Optimal distributed subsampling for maximum quasi-likelihood estimators with massive data. J. Am. Stat. Assoc. 117, 265–276 (2022).

Xue, L. Doubly robust estimation and robust empirical likelihood in generalized linear models with missing responses. Stat. Comput. 34, 39 (2024).

Zhu, Z. & Zhou, W. Taming heavy-tailed features by shrinkage. In International Conference on Artificial Intelligence and Statistics 3268–3276 (PMLR, 2021).

Awasthi, P., Das, A., Kong, W. & Sen, R. Trimmed maximum likelihood estimation for robust generalized linear model. Adv. Neural Inf. Process. Syst. 35, 862–873 (2022).

Chen, J. Robust estimation in exponential families: from theory to practice (University of Luxembourg, 2023).

Baraud, Y. & Chen, J. Robust estimation of a regression function in exponential families. J. Stat. Plan. Inference 233, 106167 (2024).

Chen, J. Estimating a regression function in exponential families by model selection. Bernoulli 30, 1669–1693 (2024).

Wang, L., Peng, B. & Li, R. A high-dimensional nonparametric multivariate test for mean vector. J. Am. Stat. Assoc. 110, 1658–1669 (2015).

Coles, S., Bawa, J., Trenner, L. & Dorazio, P. An introduction to statistical modeling of extreme values Vol. 208 (Springer, 2001).

Peng, L. & Qi, Y. Inference for heavy-tailed data: applications in insurance and finance (Academic press, 2017).

Sun, Q. Do we need to estimate the variance in robust mean estimation? arXiv:2107.00118 (2021).

Darrell, T., Kloft, M., Pontil, M. & Rätsch, G. Machine learning with interdependent and non-identically distributed data (dagstuhl seminar 15152). In Dagstuhl Reports Vol. 5 (Schloss Dagstuhl-Leibniz-Zentrum fuer Informatik, 2015).

Zhou, P., Wei, H. & Zhang, H. Selective reviews of bandit problems in ai via a statistical view. Mathematics 13, 665 (2025).

Cao, L. Non-iidness learning in behavioral and social data. The Comput. J. 57, 1358–1370 (2014).

Casteels, W. & Hellinckx, P. Exploiting non-iid data towards more robust machine learning algorithms. arXiv:arXiv:2010.03429 (2020).

Widmer, C., Kloft, M. & Rätsch, G. Multi-task learning for computational biology: Overview and outlook. Empir. Inference 117–127 (2013).

Pourbabaee, F. & Shams Solari, O. Tail probability estimation of factor models with regularly-varying tails: Asymptotics and efficient estimation. Available at SSRN 4078304 (2022).

Li, X., Huang, K., Yang, W., Wang, S. & Zhang, Z. On the convergence of fedavg on non-iid data. In International Conference on Learning Representations (2019).

He, Y., Shen, Z. & Cui, P. Towards non-iid image classification: A dataset and baselines. Pattern Recognit. 110, 107383 (2021).

Lecué, G. & Mendelson, S. Learning subgaussian classes: upper and minimax bounds. Top. Learn. Theory-Societe Math. de France,(S. Boucheron and N. Vayatis Eds.) (2013).

Loh, P.-L. Statistical consistency and asymptotic normality for high-dimensional robust \(m\)-estimators. The Annals Stat. 45, 866–896 (2017).

Ostrovskii, D. M. & Bach, F. Finite-sample analysis of \(m\)-estimators using self-concordance. Electron. J. Stat. 15, 326–391 (2021).

Belloni, A., Rosenbaum, M. & Tsybakov, A. B. Linear and conic programming estimators in high dimensional errors-in-variables models. J. Royal Stat. Soc. Ser. B 79, 939–956 (2017).

Zhang, H. & Chen, S. X. Concentration inequalities for statistical inference. Commun. Math. Res. 37, 1–85 (2021).

Lerasle, M. Lecture notes: Selected topics on robust statistical learning theory. arXiv:arXiv:1908.10761 (2019).

Xu, L., Yao, F., Yao, Q. & Zhang, H. Non-asymptotic guarantees for robust statistical learning under infinite variance assumption. J. Mach. Learn. Res. 24, 1–46 (2023).

Catoni, O. Challenging the empirical mean and empirical variance: a deviation study. In Annales de l’IHP Probabilités et statistiques 48, 1148–1185 (2012).

Yao, Q. & Zhang, H. Asymptotic normality and confidence region for catoni’s z-estimator. Stat 11, e495 (2022).

Cui, C., Jia, J., Xiao, Y. & Zhang, H. Directional fdr control for sub-gaussian sparse glms. Commun. Math. Stat. (2025).

Xu, Y. et al. Learning with non-convex truncated losses by sgd. In Uncertainty in Artificial Intelligence 701–711 (PMLR, 2020).

Xiao, Z. & Liu, L. Laws of iterated logarithm for quasi-maximum likelihood estimator in generalized linear model. J. statistical planning and inference 138, 611–617 (2008).

Mathieu, T. & Minsker, S. Excess risk bounds in robust empirical risk minimization. Inf. Inference: A J. IMA (2021).

Lee, K., Yang, H., Lim, S. & Oh, S. Optimal algorithms for stochastic multi-armed bandits with heavy tailed rewards. In Advances in Neural Information Processing Systems, vol. 33 (2020).

Ghadimi, S. & Lan, G. Stochastic first-and zeroth-order methods for nonconvex stochastic programming. SIAM journal on optimization 23, 2341–2368 (2013).

Acknowledgements

H.Z. is supported in part by National Natural Science Foundation of China Grant (No. 12101630) and the Beihang University under Youth Talent Start up Funding Project (No. KG16384201); P.W. is supported by Natural Science Foundation of China Grant (12301333) and the Department of Education of Liaoning Province Grant (No. JYTQN2023169); Q.Y is supported by Research on Privacy and Security of Artificial Intelligence Using Robust Statistical Learning (No. 2024KQNCX160). B.Z. is supported by the National Key Research and Development Program of China (Grant No. 2023YFC3306401), Zhejiang Provincial Natural Science Foundation of China under Grant No. LD24F020007, Beijing Natural Science Foundation L223024, L244043, Z241100001324017 and “One Thousand Plan” projects in Jiangxi Province Jxsq2023102268.

Author information

Authors and Affiliations

Contributions

H.Z. and P.W. conceived the experiments, W.T. and Q.Y. conducted the experiments, W.T. and B.Z. analysed the results. H.Z. gave the proofs. All authors reviewed the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Zhang, H., Tian, W., Yao, Q. et al. Robust learning for ridge-penalized quasi-GLMs under non-identical distributions. Sci Rep 15, 39955 (2025). https://doi.org/10.1038/s41598-025-23598-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-23598-4