Abstract

Retinal fundus images are extensively used in diagnosis, and their quality can affect diagnostic results. However, owing to limitations in existing datasets and algorithms, current fundus image quality assessment (FIQA) methods often lack the granularity that clinical practice requires. To address these limitations, we introduce a new benchmark FIQA dataset, Fundus Quality Score (FQS), which contains 2,246 images annotated with continuous mean opinion scores (MOSs) ranging from 0 to 100 and three-level quality categories. We also design a novel FIQA Transformer-based Hypernetwork (FTHNet). Unlike common classification-based approaches, FTHNet treats FIQA as a regression task and predicts the continuous MOS. On our dataset, FTHNet achieves a Pearson Linear Correlation Coefficient of 0.9423 and a Spearman Rank Correlation Coefficient of 0.9488, significantly outperforming the compared methods while using fewer parameters and lower computational complexity. Furthermore, model deployment experiments demonstrate its potential for use in automated medical image quality control workflows. We have released the code and dataset to facilitate future research in this field.


Introduction

The retinal fundus image is one of the most commonly used ophthalmic imaging modalities. Many ophthalmologists use fundus images to assist in the clinical diagnosis of diabetic retinopathy (DR)1,2,3, age-related macular degeneration (AMD)4, polypoidal choroidal vasculopathy (PCV)5, and other retinal diseases6,7. Precise diagnosis of eye diseases relies on high-quality (HQ) fundus images. However, fundus images captured with different equipment by ophthalmologists with varying levels of experience vary widely in quality. A screening study of 5,575 patients found that about \(12\%\) of fundus images were of inadequate quality for ophthalmologists to read8. Another study based on the UK Biobank showed that more than 25\(\%\) of fundus images were not of sufficient quality to allow accurate diagnosis. Consequently, low-quality (LQ) fundus images account for a significant percentage of clinical fundus images. According to ophthalmologists' experience, the common degradation types of LQ fundus images include out-of-focus blur, motion blur, artifacts, over-exposure, and over-darkness. Such degradation may prevent ophthalmologists or computer-aided systems from making a reliable clinical diagnosis. Fundus image quality assessment (FIQA) has therefore been proposed to help ophthalmologists control the quality of fundus images. FIQA can be integrated into the fundus image acquisition process, speeding it up and avoiding unnecessary repeat captures. Quality control in medical record systems can also benefit from FIQA methods. Research in FIQA is therefore important.

Traditional FIQA methods9,10,11 rely primarily on hand-crafted features. However, these approaches often achieve unsatisfactory performance and generalize poorly. In recent years, Convolutional Neural Networks (CNNs) have been widely adopted in image quality assessment (IQA)12,13,14,15, and, inspired by this success, they have likewise been applied to FIQA16,17. Although they achieve impressive results, CNN-based methods struggle to capture long-range dependencies effectively. More recently, the Transformer architecture18 was introduced to computer vision and has surpassed CNN-based methods in numerous tasks. The Multi-head Self-Attention (MSA) mechanism within the Transformer excels at modeling non-local similarity and long-range dependencies, a strength that can overcome the limitations of CNN-based models. Consequently, Transformer-based methods have been widely applied to fundus-related tasks, such as disease screening19,20,21, lesion segmentation22,23, and image restoration24,25. This strong performance suggests that the Transformer architecture also holds significant potential for FIQA. We therefore selected the Transformer as one of the foundational feature extraction architectures of our model.

Meanwhile, most current FIQA methods are data-driven, so their performance depends on the quality of the data. Unfortunately, a professionally annotated, publicly available clinical benchmark is still lacking. Firstly, some datasets16,26,27 treat FIQA as a classification task rather than a regression task, roughly dividing fundus images into categories such as “Good”, “Usable”, and “Bad” quality. Such coarse labels can place images of markedly different quality in the same category. Secondly, some datasets that do use a quality scale do not consider that image quality in different fundus regions affects clinical diagnosis differently. For example, the quality of the optic disc region should influence overall fundus image quality more than that of the peripheral regions. Thirdly, many works train and test on private datasets that are labeled according to their own standards and are not publicly available, which makes it difficult to benchmark FIQA methods.

Examples of the Fundus Quality Score (FQS) dataset. In the FQS dataset, each fundus image has two labels: a three-level classification label (good, reject, and usable) and a continuous MOS varying from 0 to 100. Our FQS dataset covers the most common degradation types in clinical diagnoses, such as out-of-focus blur, haze, uneven illumination, and over-darkness.

In this paper, we set out to address the limitations of algorithms and datasets in FIQA. First, we establish a new clinically acquired dataset, Fundus Quality Score (FQS), comprising 2246 fundus images with continuous mean opinion scores (MOSs) ranging from 0 to 100 and three-level labels (“Good”, “Reject”, and “Usable”). Based on this dataset, we propose a novel FIQA method, the FIQA Transformer-based HyperNetwork (FTHNet). FTHNet consists of four parts: the Transformer Backbone, the Distortion Perception Network, the Parameter Hypernetwork, and the Target Network. Specifically, the Transformer Backbone is built from Basic Transformer Blocks (BTBs), whose self-attention mechanism captures non-local self-similarity and long-range dependencies that existing CNN-based methods struggle to model. The Distortion Perception Network collects distortion information at different resolutions. The Parameter Hypernetwork dynamically generates weights and biases according to the content of each fundus image, and the Target Network uses these weights and biases to predict the fundus image quality score. The method is designed to produce scores consistent with ophthalmologists' experience and perception.

Our contributions can be summarized as follows:

- We establish a new clinical dataset, FQS, to evaluate FIQA algorithms. This is the first professionally annotated, publicly available FIQA dataset with both continuous MOSs and three-level classification labels.
- We propose FTHNet, a novel FIQA model built on a Transformer-based hypernetwork. It is the first attempt to combine the Transformer with a hypernetwork for FIQA tasks.
- Experimental results demonstrate that FTHNet significantly outperforms current algorithms on FIQA tasks with fewer parameters and lower computational complexity, and thus has excellent potential for real-time diagnosis assistance.

Material and methods

Fundus quality score dataset

This subsection details the Fundus Quality Score (FQS) clinical dataset, which contains 2246 fundus images. The cropped images measure approximately \(1942\times 1942\) pixels and were resized to \(1024\times 1024\) pixels for consistent processing. Each image has two labels: a categorical quality rating (“Good”, “Usable”, or “Reject”) and a Mean Opinion Score (MOS). The MOS represents perceived image quality on a continuous scale from 0 (minimum) to 100 (maximum). The data are available at https://figshare.com/articles/dataset/FIQS_Dataset_Fundus_Image_Quality_Scores_/28129847?file=51531041 and can be downloaded for reproduction and any other FIQA research. Sample images from this dataset are shown in Fig. 1.

Data collection

We included all fundus images collected at the partner hospital between December 2021 and June 2022; more than 10,000 eye instances were collected during this period. To compile a dataset reflecting the natural distribution of image quality, we retained all degradation types encountered in clinical practice. Duplicate images and images of similar content and quality were then excluded. Duplication and potential data leakage were carefully managed during this selection process, so the same image never appears in different subsets. After collection, selection, and exclusion, 2246 images remained. These raw images have a spatial size of \(2656 \times 1992\) pixels. After acquisition, the images were preprocessed as follows. First, the black peripheral borders of each fundus image were removed, which yielded images of \(1942\times 1942\) pixels. To ensure maximum fidelity and prevent data loss, these images were saved in the lossless PNG format. Finally, to standardize the input size and improve computational efficiency in subsequent analyses, all images were resized to \(1024\times 1024\).
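The border-removal step can be illustrated with a short sketch. It is not the authors' preprocessing code: it assumes a grayscale image held as a list of pixel rows, treats every pixel at or below a hypothetical intensity `threshold` as black background, and keeps the tight bounding box of the remaining (retinal) pixels. Because the retinal field of view is roughly circular, this box is approximately square, as with the \(1942\times 1942\) crops described above.

```python
from typing import List, Tuple

Image = List[List[int]]


def foreground_bbox(gray: Image, threshold: int = 10) -> Tuple[int, int, int, int]:
    """Return (top, bottom, left, right) of pixels brighter than `threshold`.

    `bottom` and `right` are exclusive. The default threshold is a
    hypothetical value chosen for illustration only.
    """
    if not gray or not gray[0]:
        raise ValueError("empty image")
    rows = [r for r, row in enumerate(gray) if any(p > threshold for p in row)]
    if not rows:
        raise ValueError("image contains no foreground pixels")
    cols = [c for c in range(len(gray[0])) if any(row[c] > threshold for row in gray)]
    return rows[0], rows[-1] + 1, cols[0], cols[-1] + 1


def crop_black_border(gray: Image, threshold: int = 10) -> Image:
    """Crop the image to the bounding box of its non-black pixels."""
    top, bottom, left, right = foreground_bbox(gray, threshold)
    return [row[left:right] for row in gray[top:bottom]]
```

For a color image, the same box would be applied to all three channels before the crop is saved as PNG and resized.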

The fundus images were captured by ophthalmologists using a ZEISS VISUCAM200 fundus camera or a Canon fundus camera, both mainstream fundus camera products. Sensitive information, such as names and diagnosis results, was deleted from the beginning of data collection. EXIF metadata may contain further data that could compromise confidentiality, such as GPS coordinates, timestamps, and device identifiers. These data elements could inadvertently lead to the re-identification of patients, particularly if correlated with other available information. Therefore, to minimize any potential risk of identity leakage, we removed all EXIF metadata from every image included in the FQS dataset. This ensures patient anonymity and allows wider research use without compromising privacy.
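Because the released images are PNG files, metadata removal can be sketched at the level of PNG chunks. The following stdlib-only approach is illustrative and not necessarily the tool the authors used: it copies every chunk except a hypothetical blocklist (`METADATA_CHUNKS`) of ancillary chunk types that can carry EXIF data (`eXIf`), free text (`tEXt`, `zTXt`, `iTXt`), or a modification time (`tIME`). Pixel data (`IDAT`) is copied byte for byte, so the image itself is unchanged.

```python
import struct
import zlib

PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"
# Ancillary chunk types that can hold EXIF, free text, or timestamps.
# This blocklist is an assumption for illustration; extend it as needed.
METADATA_CHUNKS = {b"eXIf", b"tEXt", b"zTXt", b"iTXt", b"tIME"}


def make_chunk(ctype: bytes, payload: bytes) -> bytes:
    """Serialize one PNG chunk: length, type, payload, CRC-32 of type+payload."""
    crc = zlib.crc32(ctype + payload) & 0xFFFFFFFF
    return struct.pack(">I", len(payload)) + ctype + payload + struct.pack(">I", crc)


def iter_chunks(data: bytes):
    """Yield (chunk_type, raw_chunk_bytes) for each chunk up to and including IEND."""
    if not data.startswith(PNG_SIGNATURE):
        raise ValueError("not a PNG file")
    pos = len(PNG_SIGNATURE)
    while pos < len(data):
        (length,) = struct.unpack(">I", data[pos:pos + 4])
        ctype = data[pos + 4:pos + 8]
        end = pos + 12 + length  # 4 (length) + 4 (type) + payload + 4 (CRC)
        yield ctype, data[pos:end]
        pos = end
        if ctype == b"IEND":
            break


def strip_png_metadata(data: bytes) -> bytes:
    """Return a copy of the PNG without metadata chunks; pixel chunks are untouched."""
    kept = [raw for ctype, raw in iter_chunks(data) if ctype not in METADATA_CHUNKS]
    return PNG_SIGNATURE + b"".join(kept)
```

A file could then be cleaned in place with `pathlib.Path`, e.g. `p.write_bytes(strip_png_metadata(p.read_bytes()))`.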

The collection of the FQS dataset was approved and supervised by Shenzhen Eye Hospital. This study was conducted in accordance with the ethical standards of the institutional and national research committee and with the 1964 Declaration of Helsinki and its later amendments. The study protocols and experimental procedures for the collected data were approved by the Institutional Ethics Committee of Shenzhen Eye Hospital (ETHICAL NUMBER: 2022KYPJ062). The use of historical clinical data met the following criteria: (1) All personally identifiable information was removed to ensure patient anonymity and privacy. (2) The research posed minimal or no risk to the subjects both during and after the study. (3) Patients provided consent for their data to be used in future research during the initial data acquisition. Therefore, the Institutional Ethics Committee granted a formal waiver of written consent for this specific research project (Fig. 2).

The statistical information of our FQS. (a) The MOS distribution histogram. Most of the MOSs are distributed between 60 and 80, which is consistent with actual clinical experience, and images of either extremely high or low quality are rare. (b) The standard deviation distribution histogram of MOSs. Half of the images have standard deviations under 4.34. Low SDs indicate that, though the opinion scores are given independently, the scoring criteria are consistent. (c) The three-level label distribution. The numbers of ‘Good’, ‘Usable’, and ‘Reject’ are 516, 793, and 937. There are more ‘Reject’ images to cover more degradation types.

The GUI of our FundusMarking software. This software was developed during the labeling of the FQS dataset to make labeling more convenient for ophthalmologists. Its graphical user interface (GUI) is designed to be straightforward. We have released the code for this tool, so it can easily be modified to build new IQA datasets.

Labelling

First, a reference set of 330 fundus images was established. Experienced ophthalmologists assigned each image to one of three broad quality categories, “Good,” “Usable,” or “Reject,” based on their clinical expertise and in line with the existing quality control procedures at the cooperating hospital. Corresponding initial score ranges were assigned: “Good” (80–100), “Usable” (60–79), and “Reject” (0–59), reflecting a common 100-point quality assessment paradigm.

Second, within each broad category, the ophthalmologists further graded the images into five classes based on the severity of image degradation (e.g., the extent of out-of-focus blur, haze, or uneven illumination), refining the broad score ranges into finer sub-ranges. For instance, images in the “Good” (80–100) category could be assigned to one of five classes: 100, 95, 90, 85, or 80.

Third, the scores from the second stage were fine-tuned: experts adjusted each score by up to ±2 points based on the following four aspects:

(1) The degree of fundus image degradation relative to other images within the same refined sub-category.

(2) The extent and clarity of discernible structural information for diagnosis (e.g., blood vessel morphology and density).

(3) The presence and assessability of pathological features relevant to clinical diagnosis.

(4) The visibility and clarity of critical anatomical landmarks (e.g., the macula and optic disc).

This adjustment mechanism differentiates images with subtle quality differences, yielding final scores from 0 to 100 with a resolution of 1 point.
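The last two labelling stages reduce to simple integer arithmetic, sketched below under stated assumptions. `refine_score` is a hypothetical helper: it takes a stage-2 sub-class value (for example 85 in the “Good” range) and applies the stage-3 adjustment of at most ±2 points. Since both inputs are integers, the result has the 1-point resolution described above; clamping to [0, 100] is our assumption.

```python
def refine_score(anchor: int, adjustment: int) -> int:
    """Apply the stage-3 fine adjustment (at most +/-2 points) to a stage-2 value.

    Hypothetical helper for illustration; clamping to [0, 100] is assumed.
    """
    if not -2 <= adjustment <= 2:
        raise ValueError("adjustment must lie within +/-2 points")
    return max(0, min(100, anchor + adjustment))
```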

The final MOS for each image was determined as a weighted average of the scores from six experts. Using a MOS is common in the development of image quality assessment datasets, such as KonIQ-10k28, LIVE29, CSIQ30, and TID201331, which also use MOS to capture perceived quality. Three ophthalmologists and three experienced ophthalmologists independently scored all 2246 images. To make labeling more convenient, we built the FundusMarking software, which has a straightforward GUI and can easily be modified to build new IQA datasets. An example of its GUI is shown in Fig. 3. The code has been released at https://github.com/HudenJear/FIQA-GUI-Labeling. When the opinion scores for the same image differed significantly, they were discussed and adjusted.

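Finally, we obtain the MOS as the weighted average of six independent scores:

\[ \text{MOS} = \lambda _1 \sum _{i=1}^{3} \textbf{O}_i + \lambda _2 \sum _{i=1}^{3} \textbf{Oj}_i, \tag{1} \]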

where \(\textbf{O}_i\) and \(\textbf{Oj}_i\) denote the opinion scores of the ophthalmologists and the experienced ophthalmologists, respectively, and \(\lambda _1\) and \(\lambda _2\) are the corresponding weights. To reflect their greater experience, each experienced ophthalmologist is given twice the weight of an ophthalmologist. With the six weights summing to 1, this gives \(\lambda _1 = 1/9\) and \(\lambda _2 = 2/9\).
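The weighting can be checked numerically. The sketch below (function and argument names are hypothetical) gives each experienced ophthalmologist twice the weight of an ophthalmologist and normalizes by the total weight, which for three raters per group reproduces \(\lambda _1 = 1/9\) and \(\lambda _2 = 2/9\).

```python
from typing import Sequence


def weighted_mos(ophth_scores: Sequence[float],
                 experienced_scores: Sequence[float],
                 ophth_weight: float = 1.0,
                 experienced_weight: float = 2.0) -> float:
    """Weighted mean of opinion scores; experienced raters count double by default.

    Dividing by the total weight normalizes the weights to sum to 1, so with
    three raters per group each weight becomes 1/9 or 2/9.
    """
    total_weight = ophth_weight * len(ophth_scores) + experienced_weight * len(experienced_scores)
    weighted_sum = ophth_weight * sum(ophth_scores) + experienced_weight * sum(experienced_scores)
    return weighted_sum / total_weight
```

For example, scores of 70, 72, and 74 from the ophthalmologists and 76, 78, and 80 from the experienced ophthalmologists give a MOS of (216 + 2 × 234) / 9 = 76.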

Dataset splitting

The dataset was randomly split into training, validation, and test subsets in proportions of 80%, 5%, and 15%. Because we applied strict inclusion and exclusion criteria, no image is replicated and no near-duplicate images are retained: of all images captured of one patient within a short period, only one is included. Consequently, images of the same eye of the same individual cannot fall into different subsets. However, two images from the same individual but of different eyes (left and right) may be included in different subsets (training, validation, or test). As these images have completely different image-quality characteristics, this does not cause data leakage across subsets. Together with the exclusion criteria above, performing the random splits at the image level ensures that no image is replicated across subsets.
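A minimal image-level split with the stated 80%/5%/15% proportions might look like the following. The seed and the rounding rule (floor for training and validation, remainder to test) are assumptions; with 2246 images this rule yields 1796 training, 112 validation, and 338 test images.

```python
import random
from typing import List, Sequence, Tuple


def split_dataset(items: Sequence, ratios: Tuple[float, float, float] = (0.80, 0.05, 0.15),
                  seed: int = 0) -> Tuple[List, List, List]:
    """Randomly split items (e.g. image file names) into train/val/test at image level.

    The seed and the floor-based rounding are illustrative assumptions.
    """
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # deterministic for a given seed
    n_train = int(len(shuffled) * ratios[0])
    n_val = int(len(shuffled) * ratios[1])
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder goes to the test subset
    return train, val, test
```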

Statistical information

The FQS dataset comprises 2246 fundus images in total. Of these, 92% come from routine clinical diagnosis across all ages and genders, and the remaining 8% come from adolescent myopia screening. Patient age has a mean of 45.24 years and a standard deviation of 25.17 years. Regarding eye laterality, the dataset contains 1141 right-eye images and 1105 left-eye images. The images were obtained from a cohort of 1170 male and 1076 female patients. The patient cohort is predominantly East Asian (99.55%), with a small proportion (0.45%) from Central and Southeast Asia. Further statistics are summarized in Fig. 2 and described below.

MOS Distribution Histogram Figure 2(a) depicts the distribution of MOSs. Most MOSs lie between 60 and 80; the 70–80 range contains the most images, and the 90–100 range the fewest, with only 15. This distribution is natural and consistent with actual clinical experience. Owing to equipment limitations and variable patient cooperation, very high-quality fundus images (MOS of 80 or above) are difficult to obtain in routine clinical acquisition, while fundus images of extremely low quality are also rare. The quality of most clinical fundus images therefore lies in the upper middle of the score scale.

Three-level Label Distribution The distribution of the three-level classification labels is shown in Fig. 2(c). Because high-quality images resemble one another whereas low-quality images differ in numerous aspects, the FQS dataset contains more “Reject” images to cover more degradation types. The numbers of fundus images labeled “Good”, “Usable”, and “Reject” are 516, 793, and 937, respectively. The distribution of the three-level labels is consistent with the MOSs and with routine clinical fundus image acquisition.

Standard Deviation Distribution Histogram Since the MOS of each fundus image combines independent opinion scores from six ophthalmologists, it is necessary to evaluate the consistency of these six scores. The standard deviation (SD) of the scores for an image quantifies the disagreement among raters. We therefore calculated the SD for each image and plotted the SD distribution histogram in Fig. 2(b). About a quarter of the SDs are below 2.52, half are below 4.34, and three-quarters are below 6.37. This distribution indicates that the opinion scores are consistent and suggests that the raters shared a consistent understanding of the labeling criteria.
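The quartiles quoted above can be computed with Python's `statistics` module, as in this sketch. Whether the authors used the population or the sample SD is not stated; `pstdev` (population SD) is an assumption here, as is the default `"exclusive"` method of `statistics.quantiles`, which needs at least two images.

```python
from statistics import pstdev, quantiles
from typing import List, Sequence


def sd_quartiles(scores_per_image: Sequence[Sequence[float]]) -> List[float]:
    """Quartiles [Q1, median, Q3] of the per-image SD of the opinion scores.

    Uses the population SD (an assumption) and the default 'exclusive' method.
    """
    sds = [pstdev(scores) for scores in scores_per_image]
    return quantiles(sds, n=4)
```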

Architecture of our FTHNet. (a) The Transformer Backbone includes a patch embedding layer and four feature extraction stages. (b) The Distortion Perception Network is designed to extract distorted information. (c) The Parameter Hypernetwork comprises five parameter-generating layers. (d) The Target Network contains five linear layers to predict the fundus image quality scores. (e) The structure of the Basic Transformer Block. (f) The distortion perception block extracts the distortion information from the feature maps in different resolutions. (g) Each parameter-generating layer includes two branches to generate weight and bias parameters.

Method

Overall architecture

The architecture of FTHNet is shown in Fig. 4, which consists of 4 parts: the Transformer Backbone, the Distortion Perception Network, the Parameter Hypernetwork, and the Target Network.

The Transformer Backbone contains a patch embedding layer and four stages at different resolutions. Each stage comprises several Basic Transformer Blocks (BTBs) and a downsampling layer. Given an input fundus image \(\textbf{I}_{in} \in \mathbb {R}^{H\times W \times 3}\), the Transformer Backbone first applies a non-overlapping patch embedding layer, consisting of a \(4\times 4\) convolution (conv), to extract the shallow feature \(\textbf{I}_{0} \in \mathbb {R}^{\frac{H}{4}\times \frac{W}{4} \times C}\). Next, four stages extract features from \(\textbf{I}_{0}\). We adopt a \(4 \times 4\) conv with stride 2 as the downsampling layer, which halves the spatial size of the feature maps and doubles the channel dimension. Thus, the feature of the i-th stage is denoted as \(\textbf{X}_{i} \in \mathbb {R}^{\frac{H}{4\times 2^i} \times \frac{W}{4\times 2^i} \times 2^{i}C}\), where i = 0, 1, 2, 3 indexes the four stages.
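The stage resolutions follow directly from the formula for \(\textbf{X}_{i}\). The helper below is only a shape calculator, not the network itself; it assumes the input size is divisible by 32, which holds for the \(384 \times 384\) training resolution reported in the implementation details.

```python
from typing import List, Tuple


def backbone_shapes(height: int, width: int, channels: int,
                    num_stages: int = 4) -> List[Tuple[int, int, int]]:
    """Shapes (H_i, W_i, C_i) of X_i = H/(4*2^i) x W/(4*2^i) x 2^i*C."""
    shapes = []
    for i in range(num_stages):
        scale = 4 * 2 ** i  # patch embedding (4x) followed by i halvings
        if height % scale or width % scale:
            raise ValueError(f"input size must be divisible by {scale}")
        shapes.append((height // scale, width // scale, channels * 2 ** i))
    return shapes
```

For FTHNet-S (C = 32) at 384 × 384, the four stages are 96 × 96 × 32, 48 × 48 × 64, 24 × 24 × 128, and 12 × 12 × 256; the last matches the \(\frac{H}{32} \times \frac{W}{32} \times 8C\) input of the Parameter Hypernetwork.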

We design the Distortion Perception Network to extract distortion information, as shown in Fig. 4(b). The feature map of each stage, \(\textbf{X}_{i}, i=0,1,2,3\), is processed by a Distortion Perception Block (DPB), yielding four distortion information vectors. These vectors are concatenated to obtain the semantic vector \(\textbf{V} \in \mathbb {R}^{L \times 1}\).

The parameters of the Target Network are generated by the Parameter Hypernetwork shown in Fig. 4(c). It has five stages, each consisting of one \(1\times 1\) conv layer for channel merging and one Parameter Generating Layer (PGL). The Parameter Hypernetwork takes its input directly from the final stage of the Transformer Backbone, the feature map \(\textbf{X}_{3} \in \mathbb {R}^{\frac{H}{32} \times \frac{W}{32} \times 8C}\). A \(1\times 1\) conv layer merges the channels by half; after channel merging, the feature of the i-th stage is denoted as \(\textbf{P}_{i} \in \mathbb {R}^{\frac{H}{32} \times \frac{W}{32} \times \frac{8C}{2^i}}\), where i = 0, 1, 2, 3, 4 indexes the five stages. The PGL then generates the weights \(\textbf{WP}_{i}\) and biases \(\textbf{BP}_{i}\) of the linear layers of the Target Network.

The Target Network consists of five linear layers. It takes as input the vector \(\textbf{V}\) from the Distortion Perception Network, its weights and biases are supplied by the Parameter Hypernetwork, and the five linear layers together produce the predicted fundus image quality score.
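The key idea of the hypernetwork design is that the Target Network has no fixed weights: each image brings its own. The pure-Python sketch below illustrates that idea with plain lists. `weights[k]` and `biases[k]` stand in for \(\textbf{WP}_{k}\) and \(\textbf{BP}_{k}\), and the ReLU between layers is an assumption because the activation is not specified here. The loop accepts any number of layers (five in FTHNet).

```python
from typing import List, Sequence

Matrix = List[List[float]]


def linear(x: Sequence[float], weight: Matrix, bias: Sequence[float]) -> List[float]:
    """Compute W x + b; `weight` holds one row per output feature."""
    if len(weight) != len(bias) or any(len(row) != len(x) for row in weight):
        raise ValueError("weight/bias shapes do not match the input length")
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def target_network(v: Sequence[float], weights: List[Matrix],
                   biases: List[Sequence[float]]) -> float:
    """Score one image with per-image parameters produced by a hypernetwork.

    The ReLU between layers is an assumed activation, used for illustration.
    """
    h = list(v)
    for k, (w, b) in enumerate(zip(weights, biases)):
        h = linear(h, w, b)
        if k < len(weights) - 1:  # no activation after the final layer
            h = [max(0.0, z) for z in h]
    if len(h) != 1:
        raise ValueError("the final layer must output a single score")
    return h[0]
```

Feeding the same \(\textbf{V}\) with different generated parameters yields different scores, which is how the prediction adapts to image content.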

In the implementation, we vary the patch embedding channel C and the numbers of BTBs in the four stages (\(N_1, N_2, N_3, N_4\)) of the Transformer Backbone to build two FTHNet variants: FTHNet-S with (2, 4, 6, 2) and C = 32, and FTHNet-L with (2, 4, 6, 2) and C = 64.

Basic transformer block

The Transformer offers an alternative that addresses the limitations of CNN-based methods in modeling non-local self-similarity and long-range dependencies. However, the computational cost of the standard global Transformer is quadratic in the spatial size of the input feature (HW). To avoid this cost, the Transformer Backbone uses transformer blocks with Window-based Multi-head Self-Attention (W-MSA)32, whose complexity is linear in the spatial size and is therefore more tractable than standard global MSA. We also alternate window-shifting operations across BTBs to introduce cross-window connections. As shown in Fig. 4(e), a BTB consists of one W-MSA, one Multilayer Perceptron (MLP), and two normalization layers, and can be formulated as in Eq. (2).

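\[ \textbf{F}^{\prime } = \text {W-MSA}(\text {LN}(\textbf{F}_{in})) + \textbf{F}_{in}, \qquad \textbf{F}_{out} = \text {MLP}(\text {LN}(\textbf{F}^{\prime })) + \textbf{F}^{\prime }, \tag{2} \]

where \(\textbf{F}_{in}\) denotes the input feature map of a BTB, \(\text {LN}(\cdot )\) denotes layer normalization, and \(\textbf{F}^{\prime }\) and \(\textbf{F}_{out}\) denote the outputs of the W-MSA and MLP sub-blocks, respectively.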

where Concat\((\cdot )\) denotes the concatenation operation and \(\textbf{W}^{O}\in \mathbb {R}^{{C \times C}}\) is a learnable projection matrix. We reshape \(\textbf{X}^{i}_{o}\) to obtain the output window feature map \(\textbf{X}^{i}_{out} \in \mathbb {R}^{{L \times L \times C }}\). Finally, we merge all the window representations \(\{\textbf{X}_{out}^{1}, \textbf{X}_{out}^{2},\textbf{X}_{out}^{3}, \cdots , \textbf{X}_{out}^{N} \}\) to obtain the output feature maps \(\textbf{X}_{out} \in \mathbb {R}^{{H \times W \times C }}\).
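The linear-versus-quadratic claim can be made concrete with the cost estimates reported in the Swin Transformer paper: \(4hwC^2 + 2(hw)^2C\) for global MSA and \(4hwC^2 + 2M^2hwC\) for W-MSA with \(M \times M\) windows. The sketch simply evaluates both expressions; the window size M is not given in this section, so M = 8 in the example is hypothetical.

```python
def msa_cost(h: int, w: int, c: int) -> int:
    """Global MSA cost estimate: 4hwC^2 + 2(hw)^2 C (quadratic in hw)."""
    return 4 * h * w * c ** 2 + 2 * (h * w) ** 2 * c


def wmsa_cost(h: int, w: int, c: int, m: int) -> int:
    """Window MSA cost estimate with m x m windows: 4hwC^2 + 2 m^2 hw C (linear in hw)."""
    return 4 * h * w * c ** 2 + 2 * m ** 2 * h * w * c
```

For a 96 × 96 × 32 stage-0 feature map (FTHNet-S at 384 × 384) and M = 8, the global estimate is 72.5 times the windowed one, and doubling the side length multiplies the W-MSA cost by only 4.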

Multilayer perceptron

The Multilayer Perceptron (MLP) in the BTB follows the design most commonly used in Transformers, consisting of a linear layer with a GELU activation, two dropout layers, and another linear layer.

Distortion perception block

We design the DPB in the Distortion Perception Network to extract distortion information from feature maps at different resolutions. Distortions in fundus images occur at different scales: for example, spots and flares affect only small areas, whereas over-darkness and over-exposure affect the whole image. Therefore, rather than using only the final feature map, the DPBs take inputs from all four backbone stages at different resolutions.

As depicted in Fig. 4(f), the DPB consists of one \(1\times 1\) conv layer, one SoftPool33 layer, and one linear layer. The \(1\times 1\) conv merges the channels, and SoftPool downscales the spatial size of the feature maps. The DPB can be formulated as follows:

\[ \textbf{X}^{\prime } = \text {SoftPool}(1\times 1 ~ \text {Conv}(\textbf{X})), \qquad \textbf{V} = \text {Linear}(\text {Flatten}(\textbf{X}^{\prime })), \]

where \(\textbf{X} \in \mathbb {R}^{H \times W \times C}\) is the input feature map of a DPB; \(\text {SoftPool}\), \(1\times 1 ~ \text {Conv}\), \(\text {Linear}\), and \(\text {Flatten}\) denote the SoftPool layer, the \(1\times 1\) conv layer, the linear layer, and the flatten operation, respectively; \(\textbf{X}^{\prime } \in \mathbb {R}^{\frac{H}{12} \times \frac{W}{12} \times \frac{C}{8}}\) denotes the intermediate output feature; and \(\textbf{V} \in \mathbb {R}^{l \times 1}\) is the distortion information vector.
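Taking the stated shape of \(\textbf{X}^{\prime }\) literally, the length of each flattened vector fed to a DPB's linear layer is easy to compute. The sketch assumes a \(384 \times 384\) input with C = 32 (FTHNet-S) and exact divisibility; the output length l of the linear layer is not given here, so it is not modeled.

```python
def dpb_flatten_length(h: int, w: int, c: int, pool: int = 12, reduction: int = 8) -> int:
    """Length of Flatten(X') when X' has shape H/12 x W/12 x C/8."""
    if h % pool or w % pool or c % reduction:
        raise ValueError("feature size must be divisible by the pooling/reduction factors")
    return (h // pool) * (w // pool) * (c // reduction)
```

For the four stages this gives 256, 128, 64, and 32 values, so the coarsest stage is pooled to a single spatial position.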

Parameter generating layer

The parameters of the Target Network are generated by the Parameter Generating Layer (PGL) shown in Fig. 4(g). The PGL consists of two branches: one generates the weight parameters and the other generates the bias parameters. The weight branch is formulated as follows:

\[ \textbf{WP} = \text {Reshape}(3\times 3 ~ \text {Conv}(\textbf{P})), \]

where \(\textbf{P}\) denotes the input feature map of the PGL, \(\textbf{WP}\) represents the weight parameter, and \(3\times 3 ~ \text {Conv}\) and \(\text {Reshape}\) represent the \(3 \times 3\) conv layer and the reshape operation, respectively.

Similarly, the bias branch can be formulated as follows:

where \(\textbf{BP}\) represents the bias parameter, and \(\text {Linear}\) and \(\text {SoftPool}\) denote the linear layer and the SoftPool layer, respectively.

Although this process seems complicated, it can directly generate a parameter matrix of the desired shape and length if the output channel is calculated as \(\frac{Channel_{in}\times H\times W}{\frac{L}{2^{i-1}} \times \frac{L}{2^i}}\), where \(H\times W\) is the size of the feature map and L is the input length of the Target Network in the i-th stage.

Loss function

We choose the smooth L1 loss34 as the loss function of our model. Specifically, the smooth L1 loss is formulated as

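\[ \mathcal {L}(\textbf{y}, \textbf{y}^{\prime }) = \begin{cases} 0.5\,(\textbf{y}-\textbf{y}^{\prime })^{2}, & \text {if } |\textbf{y}-\textbf{y}^{\prime }| < 1, \\ |\textbf{y}-\textbf{y}^{\prime }| - 0.5, & \text {otherwise}, \end{cases} \]

where \(\textbf{y}\) denotes the ground-truth fundus image quality score and \(\textbf{y}^{\prime }\) denotes the predicted score.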
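For reference, a plain-Python version of the smooth L1 loss averaged over a batch is sketched below. The threshold `beta` = 1 corresponds to the classic formulation; with that value and mean reduction it should match the default behavior of PyTorch's `torch.nn.SmoothL1Loss`, although whether the authors used that class is not stated.

```python
from typing import Sequence


def smooth_l1_loss(pred: Sequence[float], target: Sequence[float], beta: float = 1.0) -> float:
    """Mean smooth L1 loss: quadratic for |error| < beta, linear beyond it."""
    if len(pred) != len(target) or not pred:
        raise ValueError("pred and target must be non-empty and of equal length")
    total = 0.0
    for p, t in zip(pred, target):
        e = abs(p - t)
        # Quadratic near zero (smooth gradient), linear for large errors (robust to outliers).
        total += 0.5 * e * e / beta if e < beta else e - 0.5 * beta
    return total / len(pred)
```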

Metrics

We chose the root mean square error (RMSE), the Pearson linear correlation coefficient (PLCC), and Spearman's rank correlation coefficient (SRCC) as the metrics to evaluate the performance of our model.

The RMSE in eq. 7 measures the deviation between the predicted and target values; a lower RMSE indicates more accurate predictions.

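\[ \text {RMSE} = \sqrt{\frac{1}{n}\sum _{i=1}^{n}\left( y^{\prime }_{i} - y_{i}\right) ^{2}}, \tag{7} \]

where \(y_{i}\) denotes the ground-truth MOS of the i-th image, \(y^{\prime }_{i}\) denotes the predicted MOS, and \(n\) is the number of test images.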

PLCC and SRCC are both standard metrics in IQA work. The PLCC in eq.8 ranges from −1 to 1 and measures the linear correlation between predicted and target values; a higher PLCC indicates stronger linear agreement between the model's predictions and the images' actual scores.

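\[ \text {PLCC} = \frac{\sum _{i=1}^{n}\left( y^{\prime }_{i}-\bar{y}^{\prime }\right) \left( y_{i}-\bar{y}\right) }{\sqrt{\sum _{i=1}^{n}\left( y^{\prime }_{i}-\bar{y}^{\prime }\right) ^{2}}\sqrt{\sum _{i=1}^{n}\left( y_{i}-\bar{y}\right) ^{2}}}, \tag{8} \]

where \(\bar{y}^{\prime }\) and \(\bar{y}\) are the mean values of \(y^{\prime }_{i}\) and \(y_{i}\), respectively, over the \(n\) test images.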

The SRCC in eq.9 ranges from −1 to 1 and measures the monotonicity of the model's predictions.

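\[ \text {SRCC} = 1 - \frac{6\sum _{i=1}^{n} d_{i}^{2}}{n\left( n^{2}-1\right) }, \tag{9} \]

where \(d_{i}\) is the difference between the rank of \(y^{\prime }_{i}\) among the predicted scores and the rank of \(y_{i}\) among the ground-truth scores, and \(n\) is the number of test images.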
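The three metrics can be sketched in pure Python as follows. SRCC is computed as the Pearson correlation of the ranks, with tied values sharing their average rank; when there are no ties this equals the closed form in eq.9. Library routines such as `scipy.stats.pearsonr` and `scipy.stats.spearmanr` compute the same quantities; whether the authors used them is not stated.

```python
import math
from typing import List, Sequence


def rmse(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Root mean square error between predicted and ground-truth MOSs."""
    return math.sqrt(sum((p - g) ** 2 for p, g in zip(pred, gt)) / len(gt))


def plcc(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Pearson linear correlation coefficient."""
    mp, mg = sum(pred) / len(pred), sum(gt) / len(gt)
    cov = sum((p - mp) * (g - mg) for p, g in zip(pred, gt))
    sp = math.sqrt(sum((p - mp) ** 2 for p in pred))
    sg = math.sqrt(sum((g - mg) ** 2 for g in gt))
    return cov / (sp * sg)


def ranks(values: Sequence[float]) -> List[float]:
    """1-based ranks; tied values share the average of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    result = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1  # extend the group of tied values
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            result[order[k]] = avg
        i = j + 1
    return result


def srcc(pred: Sequence[float], gt: Sequence[float]) -> float:
    """Spearman's rank correlation as the Pearson correlation of ranks."""
    return plcc(ranks(pred), ranks(gt))
```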

Availability of data and materials

The training and validation code is available at https://github.com/HudenJear/BasiQA. The code for dataset collection and labeling is available at https://github.com/HudenJear/FIQA-GUI-Labeling. The data used in this research can be accessed at https://figshare.com/articles/dataset/FIQS_Dataset_Fundus_Image_Quality_Scores_/28129847?file=51531041. Please contact the corresponding author with any questions about the data and code.

Results

Implementation details

In each round, the training, test, and validation subsets are randomly split in proportions of 80%, 15%, and 5%, and 10-round cross-validation is applied in training. During training, fundus images are resized to \(384 \times 384\). We adopt the Adam35 optimizer and apply data augmentation consisting of horizontal/vertical flips and random crops. The learning rate is set to \(0.5 \times 10^{-4}\) with linear annealing, and the batch size is 16. We use PyTorch 1.9 and CUDA 11.2. Every model is trained for 120000 iterations, including 1000 warm-up iterations, which is equivalent to 853 epochs. Training takes about 12 hours on an NVIDIA RTX3090 GPU.
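The schedule can be sketched as a function of the iteration index. The text specifies the base rate, the 1000 warm-up iterations, the 120000 total iterations, and linear annealing; the linear shape of the warm-up and the decay to exactly zero at the last iteration are assumptions.

```python
def learning_rate(step: int, base_lr: float = 0.5e-4,
                  warmup_steps: int = 1000, total_steps: int = 120_000) -> float:
    """Linear warm-up to base_lr, then linear annealing to zero (assumed shapes)."""
    if step < 0 or step > total_steps:
        raise ValueError("step out of range")
    if step < warmup_steps:
        return base_lr * step / warmup_steps
    return base_lr * (total_steps - step) / (total_steps - warmup_steps)
```

In PyTorch, such a function could be wrapped in `torch.optim.lr_scheduler.LambdaLR`, whose `lr_lambda` returns a multiplicative factor, e.g. `lambda step: learning_rate(step) / 0.5e-4`.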

Comparisons with state-of-the-art methods

To evaluate the performance of FTHNet, we compare it with several state-of-the-art (SOTA) methods, including four model-based methods (BRISQUE37, IL-NIQE38, BIQI39, and DIIVINE40) and five deep learning-based methods (DeepIQA12, HyperIQA13, GraphIQA14, SaTQA36, and TRIQ15). These methods range from traditional algorithm-based methods to the newest deep learning methods leveraging the Transformer and contrastive learning.

As shown in Table 1, the model-based methods fall far short of FTHNet, even though they were retrained on our FQS dataset. Because model-based methods have no network parameters, their parameter counts and FLOPs are not shown in the table.

These IQA methods had not been applied to FIQA tasks before this work, so we retrained HyperIQA, GraphIQA, and TRIQ to obtain their best performance for the comparison. The quantitative comparisons on our FQS dataset are shown in Table 1. Our best model, FTHNet-L, improves PLCC by 0.0133, 0.0183, 0.5161, and 0.6690 over GraphIQA, HyperIQA, TRIQ, and DeepIQA, respectively. Meanwhile, it improves SRCC by 0.0143, 0.0072, 0.5270, and 0.6459, respectively. These improvements are substantial for IQA tasks. Furthermore, although FTHNet-L is already smaller than most other models, our lightweight model, FTHNet-S, outperforms these methods by 0.008, 0.013, 0.5108, and 0.6637 in PLCC, respectively, while requiring only 5.67 M parameters.

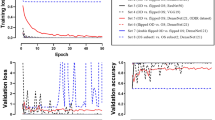

We also evaluated our model on a three-level quality classification task using the FQS dataset. Although FTHNet is designed to predict a continuous quality score, this classification task provides a simplified metric for internal validation. Images were assigned to three quality levels according to their predicted scores, using the same criteria described in Section 1.1.2. The results, presented in Fig. 5a, show that very few images were misclassified, confirming that FTHNet performs strongly in internal validation.

Fig. 5. Confusion matrices for the internal and external tests. Our pretrained model was applied to (a) the FQS dataset and (b) the EyeQ44 dataset. The quality level of each image is determined from its predicted quality score using the criteria in Section 1.1.2. FTHNet classifies quality levels accurately on the internal dataset and remains effective on the external dataset, indicating good precision and generalizability.

External validation

To perform the external validation, we conducted a complementary experiment using the heterogeneous EyeQ dataset44. This evaluation was designed to assess the generalizability of our model on an independent dataset. The 28,790 images from the EyeQ dataset were processed by our model to obtain quality score predictions. These predictions were then converted to quality levels using the same criteria outlined in Section 1.1.2 and compared against the ground-truth labels to calculate the performance metrics.

The results, presented in Fig. 5b, show that FTHNet also performs well on external data, supporting its generalizability. We observed a slightly higher error rate in the “Usable” category, which may be attributed to differences in the quality assessment criteria between our dataset and the EyeQ dataset.
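A statement such as a higher error rate in the “Usable” category refers to per-class quantities read off a confusion matrix. A minimal sketch of that computation follows; the matrix is a toy example, not the Fig. 5b counts, and rows are assumed to be ground truth.

```python
def per_class_report(matrix, labels):
    """Per-class recall, precision and error rate from a confusion matrix (rows = truth)."""
    n = len(labels)
    report = {}
    for i, label in enumerate(labels):
        true_total = sum(matrix[i])                       # images whose true level is `label`
        pred_total = sum(matrix[r][i] for r in range(n))  # images predicted as `label`
        hits = matrix[i][i]
        recall = hits / true_total if true_total else float("nan")
        precision = hits / pred_total if pred_total else float("nan")
        report[label] = {"recall": recall, "precision": precision, "error_rate": 1.0 - recall}
    return report


labels = ("Reject", "Usable", "Good")
toy = [[90, 8, 2],
       [10, 70, 20],
       [1, 9, 90]]
report = per_class_report(toy, labels)
for label, stats in report.items():
    print(label, {k: round(v, 3) for k, v in stats.items()})
```

In this toy matrix the middle class has the lowest recall because it can be confused with both neighbours, which is one plausible reason an intermediate category accumulates more errors when two datasets draw their boundaries differently.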

Ablation study

Transformer backbone

To analyze the effect of the Transformer backbone, we compare our BTB in Table 2 with three strong alternative backbones, two CNN-based (ResNet45 and ConvNeXt42) and one Transformer-based (MSG-Transformer43), while keeping the rest of FTHNet unchanged. As the table shows, our proposed BTB achieves the best performance.

Window shift operations

The window shift operations are designed to enhance information exchange between adjacent windows in the BTBs. We conducted an ablation study to analyze their effect; the results, reported in Table 2, indicate that the window shift operations build cross-window connections and improve the performance of FTHNet.
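The effect of a window shift can be illustrated with the cyclic-shift scheme of the Swin Transformer. The sketch assumes the BTBs follow that scheme (a half-window `np.roll` before partitioning); it only shows why shifted windows mix tokens from previously separate windows, and it omits the attention mask that Swin applies to wrapped-around tokens.

```python
import numpy as np


def window_partition(x: np.ndarray, window: int) -> np.ndarray:
    """Split an (H, W, C) map into non-overlapping window x window blocks.

    Returns shape (num_windows, window * window, C), windows ordered row by row.
    """
    h, w, c = x.shape
    if h % window or w % window:
        raise ValueError("H and W must be multiples of the window size")
    x = x.reshape(h // window, window, w // window, window, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (window rows, window cols, window, window, C)
    return x.reshape(-1, window * window, c)


def shifted_window_partition(x: np.ndarray, window: int) -> np.ndarray:
    """Cyclically shift the map up-left by half a window, then partition (Swin-style).

    Each new window straddles the borders of the original windows. The attention mask
    Swin uses to separate wrapped-around tokens is deliberately omitted here.
    """
    shift = window // 2
    return window_partition(np.roll(x, shift=(-shift, -shift), axis=(0, 1)), window)


def source_windows(token_ids: np.ndarray, window: int, width: int) -> set:
    """Indices of the ORIGINAL (unshifted) windows that the given token ids came from."""
    rows, cols = token_ids // width, token_ids % width
    return set(((rows // window) * (width // window) + cols // window).tolist())


H = W = 8
WINDOW = 4
ids = np.arange(H * W).reshape(H, W, 1)  # single channel holding each token's position id
regular = window_partition(ids, WINDOW)
shifted = shifted_window_partition(ids, WINDOW)

print(source_windows(regular[0, :, 0], WINDOW, W))  # tokens from a single original window
print(source_windows(shifted[0, :, 0], WINDOW, W))  # tokens from four original windows
```

Attention inside a shifted window therefore lets tokens that sat in different regular windows exchange information, which is the cross-window connection the ablation measures.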

Depth and patch embedding channel

After choosing the BTB with W-MSA as the extraction block, we explore how the number of patch embedding channels C and the depth configuration (\(N_1, N_2, N_3, N_4\)) of the extraction backbone affect model performance. The results are shown in Table 3.

Our FTHNet-L ((2, 2, 6, 2), C: 64) achieves the best performance, and among the tested patch embedding channel numbers, C = 64 is the optimal choice. Balancing performance against model parameters, we chose ((2, 4, 6, 2), C: 32) as FTHNet-S.
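The trade-off between C and the depths can be approximated with a back-of-the-envelope weight count. The sketch assumes a Swin-style hierarchy in which stage \(i\) has width \(C \cdot 2^{i}\), and that each Transformer block of width \(d\) costs roughly \(12d^2\) weights (\(4d^2\) for the Q, K, V and output projections plus \(8d^2\) for an MLP with ratio 4). Biases, norms, patch merging and the hypernetwork are ignored, so the numbers are not meant to reproduce the reported 5.67 M.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackboneConfig:
    depths: tuple       # (N1, N2, N3, N4): number of blocks in each stage
    embed_dim: int      # C: patch embedding channels
    mlp_ratio: int = 4  # ASSUMPTION: MLP hidden width = 4 x block width

    def stage_dims(self) -> list:
        """ASSUMPTION: Swin-style hierarchy where stage i has width C * 2**i."""
        return [self.embed_dim * 2 ** i for i in range(len(self.depths))]

    def approx_block_params(self) -> int:
        """Rough weight count of all Transformer blocks: (4 + 2 * mlp_ratio) * d**2 per block.

        4 d^2 covers the Q, K, V and output projections; 2 * mlp_ratio * d^2 covers the MLP.
        Biases, norms, position tables, patch merging and the hypernetwork are ignored.
        """
        return sum(n * (4 + 2 * self.mlp_ratio) * d * d
                   for n, d in zip(self.depths, self.stage_dims()))


CONFIG_L = BackboneConfig(depths=(2, 2, 6, 2), embed_dim=64)  # FTHNet-L setting from the text
CONFIG_S = BackboneConfig(depths=(2, 4, 6, 2), embed_dim=32)  # FTHNet-S setting from the text

for name, cfg in (("FTHNet-L", CONFIG_L), ("FTHNet-S", CONFIG_S)):
    print(name, cfg.stage_dims(), f"~{cfg.approx_block_params() / 1e6:.2f} M block weights")
```

Under these assumptions the estimate is about 2.97 M block weights for FTHNet-S and 11.5 M for FTHNet-L. Because per-block cost grows with \(d^2\), halving C cuts it roughly fourfold, which is why the smaller variant can afford extra blocks in its second stage.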

Downsampling structure

We conduct this ablation study to explore the effect of the downsampling structure in the Parameter Hypernetwork, which supports two options: stepwise and direct downsampling. As shown in Table 2, the stepwise structure achieves almost the same performance as the direct structure with fewer model parameters and lower computational complexity. We therefore adopt stepwise downsampling in FTHNet.

Loss function

We test several loss functions for training, including L1 loss, L2 loss, and Smooth L1 loss34. L1 loss is the most common choice in IQA, and L2 and Smooth L1 losses are also used in IQA.
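For reference, the three losses compared here can be written as follows for a batch of predicted and ground-truth MOS values. Smooth L1 follows the Fast R-CNN form with a threshold `beta` (1.0 in that paper, and the default of PyTorch's `SmoothL1Loss`); the value used for FTHNet is not stated in this section, so `beta` is an assumption.

```python
import numpy as np


def l1_loss(pred, target) -> float:
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(pred, float) - np.asarray(target, float))))


def l2_loss(pred, target) -> float:
    """Mean squared error."""
    diff = np.asarray(pred, float) - np.asarray(target, float)
    return float(np.mean(diff ** 2))


def smooth_l1_loss(pred, target, beta: float = 1.0) -> float:
    """Smooth L1: 0.5 * x**2 / beta if |x| < beta, else |x| - 0.5 * beta (averaged)."""
    diff = np.abs(np.asarray(pred, float) - np.asarray(target, float))
    per_sample = np.where(diff < beta, 0.5 * diff ** 2 / beta, diff - 0.5 * beta)
    return float(np.mean(per_sample))


pred = [50.0, 70.0, 30.5]    # toy predicted MOS values
target = [52.0, 70.5, 30.0]  # toy ground-truth MOS values
print(l1_loss(pred, target), l2_loss(pred, target), smooth_l1_loss(pred, target))
```

Smooth L1 penalizes errors smaller than `beta` quadratically, like L2, and larger errors linearly, like L1, so it is less sensitive to outlier scores than L2 while remaining smooth near zero.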

As listed in Table 2, Smooth L1 loss achieves the best PLCC and RMSE among the tested loss functions and the second-best SRCC. We therefore choose Smooth L1 loss as the loss function of FTHNet.
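The three evaluation metrics used throughout these comparisons can be sketched as below. This version computes PLCC on the raw predictions; many IQA protocols first fit a nonlinear (for example logistic) mapping before PLCC, and whether FTHNet's evaluation does so is not stated in this section. SRCC is the Pearson correlation of ranks, with tied values receiving their average rank.

```python
import numpy as np


def rankdata(x) -> np.ndarray:
    """1-based ranks; tied values share the average of their positions."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    sorted_x = x[order]
    ranks = np.empty(len(x), dtype=float)
    i = 0
    while i < len(x):
        j = i
        while j + 1 < len(x) and sorted_x[j + 1] == sorted_x[i]:
            j += 1
        ranks[order[i:j + 1]] = (i + j) / 2.0 + 1.0  # average rank of the tie group
        i = j + 1
    return ranks


def plcc(pred, mos) -> float:
    """Pearson linear correlation coefficient."""
    return float(np.corrcoef(np.asarray(pred, float), np.asarray(mos, float))[0, 1])


def srcc(pred, mos) -> float:
    """Spearman rank correlation: Pearson correlation of the ranks."""
    return plcc(rankdata(pred), rankdata(mos))


def rmse(pred, mos) -> float:
    """Root mean squared error in MOS units."""
    diff = np.asarray(pred, float) - np.asarray(mos, float)
    return float(np.sqrt(np.mean(diff ** 2)))


pred = [10.0, 20.0, 30.0, 40.0]  # toy predictions
mos = [12.0, 18.0, 35.0, 41.0]   # toy ground-truth MOS
print(plcc(pred, mos), srcc(pred, mos), rmse(pred, mos))
```

SRCC only checks whether the predicted ordering matches the MOS ordering, so it can reach 1 even when PLCC and RMSE reveal that the predicted values themselves are off, as in this toy example.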

Discussion

Deployment experiment

We deploy FTHNet as an Application Programming Interface (API) on a GPU server and integrate it with a new automatic diagnosis system. The implementation of FTHNet in the diagnosis system is shown in Fig. 6. The system processes fundus images and provides ophthalmologists with both pathology information and an indication of image reliability: the higher an image's quality score, the more trustworthy the pathology findings derived from it. Ophthalmologists using the system can therefore decide how to use each result based on its FTHNet score.
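The paper does not specify the server framework or request format behind the API, so the following is only a hypothetical sketch using Python's standard-library WSGI tools: a client POSTs raw image bytes and receives the quality score as JSON. `predict_quality` is a placeholder that returns a fixed value instead of running FTHNet.

```python
import io
import json
from wsgiref.simple_server import make_server


def predict_quality(image_bytes: bytes) -> float:
    """HYPOTHETICAL stand-in for FTHNet inference; returns a score on the 0-100 MOS scale.

    A real service would decode the image and run the trained model here.
    """
    return 87.5


def quality_app(environ, start_response):
    """Minimal WSGI endpoint: POST raw image bytes, receive {"quality_score": ...} as JSON."""
    if environ.get("REQUEST_METHOD") != "POST":
        body = json.dumps({"error": "POST an image"}).encode("utf-8")
        start_response("405 Method Not Allowed",
                       [("Content-Type", "application/json"), ("Content-Length", str(len(body)))])
        return [body]
    length = int(environ.get("CONTENT_LENGTH") or 0)
    image_bytes = environ["wsgi.input"].read(length)
    body = json.dumps({"quality_score": predict_quality(image_bytes)}).encode("utf-8")
    start_response("200 OK",
                   [("Content-Type", "application/json"), ("Content-Length", str(len(body)))])
    return [body]


def serve(host: str = "127.0.0.1", port: int = 8000) -> None:
    """Serve the endpoint with the standard-library reference server (blocks forever)."""
    with make_server(host, port, quality_app) as server:
        server.serve_forever()


def call_locally(image_bytes: bytes, method: str = "POST"):
    """Invoke the WSGI app in-process, without networking; return (status, parsed JSON)."""
    captured = {}

    def start_response(status, headers):
        captured["status"] = status

    environ = {"REQUEST_METHOD": method, "CONTENT_LENGTH": str(len(image_bytes)),
               "wsgi.input": io.BytesIO(image_bytes)}
    body = b"".join(quality_app(environ, start_response))
    return captured["status"], json.loads(body)


status, payload = call_locally(b"placeholder image bytes")
print(status, payload)
```

`serve()` starts wsgiref's reference server, which is suitable for a demonstration but not intended for production; a real deployment would run behind a production-grade server and return the score alongside the diagnosis system's pathology output.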

Potential of real-time assessment

Inference speed is one of the most critical factors for applying FTHNet in clinical diagnosis. To measure it, we deployed FTHNet as an API on a GPU server; the results are listed in Table 4, where “single test” denotes the average inference time for a single fundus image.
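A single-image latency like the one in Table 4 is usually measured as the mean over repeated calls after a few warm-up runs. The sketch below shows that procedure with a hypothetical `fake_infer` stand-in; the warm-up and run counts are assumptions, not the settings used for Table 4.

```python
import statistics
import time


def measure_latency_ms(infer, sample, warmup=5, runs=50):
    """Mean and standard deviation of infer(sample) wall-clock latency, in milliseconds.

    Warm-up calls are excluded so one-off costs (lazy initialisation, caching) do not
    inflate the average. With asynchronous GPU frameworks, infer() must wait for the
    device to finish (e.g. torch.cuda.synchronize() in PyTorch) or the timer stops early.
    """
    for _ in range(warmup):
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.mean(timings), statistics.stdev(timings)


def fake_infer(image):
    """HYPOTHETICAL stand-in for a model call; sleeps about 2 ms."""
    time.sleep(0.002)
    return 50.0


mean_ms, std_ms = measure_latency_ms(fake_infer, sample=None, warmup=2, runs=20)
print(f"single-image latency: {mean_ms:.2f} ms (std {std_ms:.2f} ms)")
```

When the model sits behind an API, timing the HTTP round trip instead of the model call also captures network and decoding overhead, so it is worth stating which of the two a reported figure measures.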

Table 4 shows that FTHNet-S takes 44.45 ms per image and even FTHNet-L takes only 56.31 ms. With such short inference times, FTHNet can provide real-time quality assessment in clinical diagnosis.
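Assuming images are processed one at a time, with no batching or pipelining, these latencies translate into throughput as follows:

\[
\text{throughput} = \frac{1000\ \text{ms/s}}{t_{\text{single}}}, \qquad
\frac{1000}{44.45} \approx 22.5\ \text{images/s (FTHNet-S)}, \qquad
\frac{1000}{56.31} \approx 17.8\ \text{images/s (FTHNet-L)}.
\]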

Effect of hypernetwork structure

In this subsection, we discuss the effect of the hypernetwork structure on FIQA tasks.

To explore the importance of the hypernetwork structure in the FIQA task, we conduct this experiment and show the results in Table 2. Note that “w/o hypernetwork” means that the parameters of the Target Network are learned directly by backpropagation as fixed weights, rather than generated for each image by the hypernetwork.

Adding the hypernetwork structure improves FTHNet's performance substantially at the cost of only a slight increase in model parameters, which indicates its importance for FIQA tasks. FTHNet is therefore built on the hypernetwork structure: the Target Network receives parameters from the hypernetwork that vary with the input image, which is essential for assessing fundus images with complex and diverse degradations.
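The difference between the two variants can be made concrete with a toy hypernetwork in the style of HyperIQA13: linear heads map an image feature to the weights and biases of a small target MLP, which then scores the same feature. All sizes are hypothetical, the heads are random rather than trained, and using one feature for both roles is a simplification; this is not FTHNet's actual architecture.

```python
import numpy as np

rng = np.random.default_rng(0)

FEAT_DIM = 16  # HYPOTHETICAL size of the pooled image feature
HIDDEN = 8     # HYPOTHETICAL hidden width of the target network

# Hypernetwork "heads": linear maps from an image feature to every target-network parameter.
# In a trained model these matrices are learned; here they are random for illustration.
W1_HEAD = rng.normal(scale=0.1, size=(FEAT_DIM, FEAT_DIM * HIDDEN))
B1_HEAD = rng.normal(scale=0.1, size=(FEAT_DIM, HIDDEN))
W2_HEAD = rng.normal(scale=0.1, size=(FEAT_DIM, HIDDEN))
B2_HEAD = rng.normal(scale=0.1, size=(FEAT_DIM, 1))


def hypernetwork(feature: np.ndarray):
    """Generate per-image target-network parameters from a (FEAT_DIM,) feature vector."""
    w1 = (feature @ W1_HEAD).reshape(FEAT_DIM, HIDDEN)
    b1 = feature @ B1_HEAD                        # shape (HIDDEN,)
    w2 = (feature @ W2_HEAD).reshape(HIDDEN, 1)
    b2 = feature @ B2_HEAD                        # shape (1,)
    return w1, b1, w2, b2


def target_network(feature: np.ndarray, params) -> float:
    """Two-layer MLP whose weights are supplied per image rather than fixed after training."""
    w1, b1, w2, b2 = params
    hidden = np.maximum(feature @ w1 + b1, 0.0)  # ReLU
    return float((hidden @ w2 + b2)[0])


feat_a = rng.normal(size=FEAT_DIM)  # toy features for two different images
feat_b = rng.normal(size=FEAT_DIM)
params_a, params_b = hypernetwork(feat_a), hypernetwork(feat_b)
score_a = target_network(feat_a, params_a)
score_b = target_network(feat_b, params_b)
print(score_a, score_b)
```

In the “w/o hypernetwork” variant, `w1`, `b1`, `w2` and `b2` would instead be fixed arrays shared by every image; here the two images receive different target-network weights.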

Failure cases

Although FTHNet performs robustly overall, an analysis of its failure cases reveals limitations under specific, challenging conditions. Fig. 7 shows examples from our FQS dataset where FTHNet’s predictions deviate from the ground truth. Most of these failure cases are images in the “Reject” category.

These failure cases are frequently associated with complex degradation profiles, primarily combinations of severe blur and dense haze. Such co-occurring severe degradations are relatively rare in the current FQS dataset, and the small number of such samples may limit how well the model generalizes to them. Even so, for most of these instances the predicted score, although inaccurate, still falls within the range of the “Reject” category. Systematically augmenting the training set with more examples of these complex and rare degradation types is expected to improve the precision of the predicted scores and enhance the model’s robustness and generalizability.

A key direction for our future work is therefore to enrich and improve the FQS dataset, specifically by adding more images that combine severe degradations. This will be crucial for improving FTHNet’s robustness and prediction precision at the lowest end of the quality scale. Addressing the data imbalance, in particular the underrepresentation of severely degraded images, may also help, so we plan to investigate oversampling techniques during model training. We expect that improving FTHNet’s robustness to imbalanced data distributions will further reduce failure cases and enhance its performance.
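The oversampling idea can be sketched with inverse-frequency sampling weights: each image is drawn with probability proportional to 1 / (size of its class), so rare classes such as “Reject” appear about as often as common ones. The labels and class sizes below are toy values, not the FQS distribution, and the same weights could instead be grouped by degradation-combination tags.

```python
import random
from collections import Counter


def inverse_frequency_weights(labels):
    """Weight each sample by 1 / (size of its class), so every class has equal total weight."""
    counts = Counter(labels)
    return [1.0 / counts[label] for label in labels]


def oversample_indices(labels, num_samples, seed=0):
    """Draw sample indices with replacement using inverse-frequency weights."""
    rng = random.Random(seed)
    weights = inverse_frequency_weights(labels)
    return rng.choices(range(len(labels)), weights=weights, k=num_samples)


# Toy, imbalanced label list (NOT the FQS distribution): few "Reject" images.
labels = ["Good"] * 80 + ["Usable"] * 15 + ["Reject"] * 5
drawn = oversample_indices(labels, num_samples=3000)
class_share = {c: n / len(drawn) for c, n in Counter(labels[i] for i in drawn).items()}
print({c: round(s, 3) for c, s in class_share.items()})
```

In PyTorch, the same per-sample weights can be passed to `torch.utils.data.WeightedRandomSampler` with `replacement=True`. Because rare images are repeated many times per epoch, oversampling is usually combined with data augmentation to limit overfitting to those few samples.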

Conclusions

Most traditional FIQA methods output only a quality class, which makes it difficult to compare images within the same category, and many rely on older algorithms that have not been deployed in practice. In contrast, FTHNet is trained to predict continuous quality scores, which are intuitive and easy to compare; it is lightweight and easy to deploy in automatic diagnosis systems; and its new Transformer-based hypernetwork architecture achieves leading performance among IQA methods.

Our extensive experimental validation has demonstrated FTHNet’s leading performance in FIQA, consistently outperforming a range of existing methods. Furthermore, ablation studies have systematically validated the efficacy of FTHNet’s individual architectural components. However, an analysis of failure cases has also highlighted areas for continued advancement. Specifically, FTHNet’s performance is less robust for certain rare and complex combinations of severe degradations, primarily due to their underrepresentation in the current version of our FQS dataset. Our future work will therefore concentrate on two key areas. First, we will expand the proposed FQS dataset by collecting more data, specifically targeting these complex cases. Second, we will focus on enhancing FTHNet’s robustness to imbalanced data distributions. This will involve investigating more advanced training techniques, such as oversampling the underrepresented classes, to further minimize failure cases and improve overall model performance.

To facilitate continued research and reproducibility, we have publicly released the source code for FTHNet and the complete FQS dataset with detailed annotations (as specified in the Availability of Data and Materials section). The tools developed for constructing the FQS dataset are also available to support the broader fundus image research community. We hope this work can serve as a baseline for FIQA and benefit future fundus image research by providing a new quantitative quality metric.

References

Majumder, S. & Kehtarnavaz, N. Multitasking deep learning model for detection of five stages of diabetic retinopathy. arXiv preprint arXiv:2103.04207 (2021).

Hua, C.-H. et al. Convolutional network with twofold feature augmentation for diabetic retinopathy recognition from multi-modal images. IEEE J. Biomed. Heal. Informatics (2020).

Peng, Y. et al. DeepSeeNet: a deep learning model for automated classification of patient-based age-related macular degeneration severity from color fundus photographs. Ophthalmology 126, 565–575 (2019).

Burlina, P., Freund, D. E., Joshi, N., Wolfson, Y. & Bressler, N. M. Detection of age-related macular degeneration via deep learning. In 2016 IEEE 13th International Symposium on Biomedical Imaging (ISBI), 184–188 (IEEE, 2016).

Cheung, C. M. G. et al. Polypoidal choroidal vasculopathy: definition, pathogenesis, diagnosis, and management. Ophthalmology 125, 708–724 (2018).

Mojab, N., Noroozi, V., Philip, S. Y. & Hallak, J. A. Deep multi-task learning for interpretable glaucoma detection. In 2019 IEEE 20th International Conference on Information Reuse and Integration for Data Science (IRI), 167–174 (IEEE, 2019).

Liao, W. et al. Clinical interpretable deep learning model for glaucoma diagnosis. IEEE J. Biomed. Heal. Informatics 24, 1405–1412 (2019).

Philip, S., Cowie, L. & Olson, J. The impact of the Health Technology Board for Scotland’s grading model on referrals to ophthalmology services. Br. J. Ophthalmol. 89, 891–896 (2005).

Lee, S. C. & Wang, Y. Automatic retinal image quality assessment and enhancement. In Medical Imaging 1999: Image Processing, vol. 3661, 1581–1590 (SPIE, 1999).

Lalonde, M. et al. Automatic visual quality assessment in optical fundus images. In Proceedings of Vision Interface, vol. 32, 259–264 (Ottawa, 2001).

Köhler, T. et al. Automatic no-reference quality assessment for retinal fundus images using vessel segmentation. In Proceedings of the 26th IEEE international symposium on computer-based medical systems, 95–100 (IEEE, 2013).

Bosse, S., Maniry, D., Müller, K.-R., Wiegand, T. & Samek, W. Deep neural networks for no-reference and full-reference image quality assessment. IEEE Transactions on Image Processing 27, 206–219. https://doi.org/10.1109/TIP.2017.2760518 (2018).

Su, S. et al. Blindly assess image quality in the wild guided by a self-adaptive hyper network. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3667–3676 (2020).

Sun, S., Yu, T., Xu, J., Zhou, W. & Chen, Z. GraphIQA: Learning distortion graph representations for blind image quality assessment. IEEE Transactions on Multimed. (2022).

You, J. & Korhonen, J. Transformer for image quality assessment. In 2021 IEEE International Conference on Image Processing (ICIP), 1389–1393, https://doi.org/10.1109/ICIP42928.2021.9506075 (2021).

Fu, H. et al. Evaluation of retinal image quality assessment networks in different color-spaces. In Shen, D. et al. (eds.) Medical Image Computing and Computer Assisted Intervention – MICCAI 2019, 48–56 (Springer International Publishing, Cham, 2019).

Shen, Y. et al. Multi-task fundus image quality assessment via transfer learning and landmarks detection. In International Workshop on Machine Learning in Medical Imaging, 28–36 (Springer, 2018).

Vaswani, A. et al. Attention is all you need. In Advances in neural information processing systems, 5998–6008 (2017).

Nazih, W., Aseeri, A. O., Atallah, O. Y. & El-Sappagh, S. Vision transformer model for predicting the severity of diabetic retinopathy in fundus photography-based retina images. IEEE Access 11, 117546–117561. https://doi.org/10.1109/ACCESS.2023.3326528 (2023).

Fan, R. et al. Detecting glaucoma from fundus photographs using deep learning without convolutions: Transformer for improved generalization. Ophthalmol. Sci. 3, 100233 (2023).

Xu, K. et al. Automatic detection and differential diagnosis of age-related macular degeneration from color fundus photographs using deep learning with hierarchical vision transformer. Comput. Biol. Medicine 167, 107616 (2023).

Wang, J. et al. ODFormer: Semantic fundus image segmentation using transformer for optic nerve head detection. Inf. Fusion 112, 102533 (2024).

Zhang, H. et al. TUNet-LBF: Retinal fundus image fine segmentation model based on transformer UNet network and LBF. Comput. Biol. Medicine 159, 106937 (2023).

Deng, Z. et al. RFormer: Transformer-based generative adversarial network for real fundus image restoration on a new clinical benchmark. IEEE J. Biomed. Heal. Informatics 26, 4645–4655. https://doi.org/10.1109/JBHI.2022.3187103 (2022).

Gong, Z. et al. Versatile cataract fundus image restoration model utilizing unpaired cataract and high-quality images. Sci. Reports 15, 11171. https://doi.org/10.1038/s41598-025-88444-z (2025).

Raj, A., Tiwari, A. K. & Martini, M. G. Fundus image quality assessment: survey, challenges, and future scope. IET Image Process. 13, 1211–1224 (2019).

Strauss, R. W. et al. Image quality characteristics of a novel colour scanning digital ophthalmoscope (SDO) compared with fundus photography. Ophthalmic Physiol. Opt. 27, 611–618 (2007).

Hosu, V., Lin, H., Sziranyi, T. & Saupe, D. KonIQ-10k: An ecologically valid database for deep learning of blind image quality assessment. IEEE Transactions on Image Process. 29, 4041–4056 (2020).

Ghadiyaram, D. & Bovik, A. C. Massive online crowdsourced study of subjective and objective picture quality. IEEE Transactions on Image Process. 25, 372–387. https://doi.org/10.1109/TIP.2015.2500021 (2016).

Larson, E. & Chandler, D. Most apparent distortion: Full-reference image quality assessment and the role of strategy. J. Electron. Imaging 19, 011006 (2010).

Ponomarenko, N. et al. A new color image database TID2013: Innovations and results. In Blanc-Talon, J., Kasinski, A., Philips, W., Popescu, D. & Scheunders, P. (eds.) Advanced Concepts for Intelligent Vision Systems, 402–413 (Springer International Publishing, Cham, 2013).

Liu, Z. et al. Swin transformer: Hierarchical vision transformer using shifted windows. arXiv preprint arXiv:2103.14030 (2021).

Stergiou, A., Poppe, R. & Kalliatakis, G. Refining activation downsampling with softpool. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 10357–10366 (2021).

Girshick, R. Fast R-CNN. In 2015 IEEE International Conference on Computer Vision (ICCV), 1440–1448, https://doi.org/10.1109/ICCV.2015.169 (2015).

Kingma, D. P. & Ba, J. Adam: A method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014).

Shi, J., Pan, G. & Jie, Q. Transformer-based no-reference image quality assessment via supervised contrastive learning. In AAAI (2024).

Mittal, A., Moorthy, A. K. & Bovik, A. C. No-reference image quality assessment in the spatial domain. IEEE Transactions on Image Process. 21, 4695–4708. https://doi.org/10.1109/TIP.2012.2214050 (2012).

Zhang, L., Zhang, L. & Bovik, A. C. A feature-enriched completely blind image quality evaluator. IEEE Transactions on Image Process. 24, 2579–2591. https://doi.org/10.1109/TIP.2015.2426416 (2015).

Moorthy, A. K. & Bovik, A. C. A two-step framework for constructing blind image quality indices. IEEE Signal Process. Lett. 17, 513–516. https://doi.org/10.1109/LSP.2010.2043888 (2010).

Moorthy, A. K. & Bovik, A. C. Blind image quality assessment: From natural scene statistics to perceptual quality. IEEE Transactions on Image Process. 20, 3350–3364. https://doi.org/10.1109/TIP.2011.2147325 (2011).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE/CVF Conf. on Comput. Vis. Pattern Recognit. (CVPR), 770–778 (2016).

Liu, Z. et al. A ConvNet for the 2020s. Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) (2022).

Fang, J. et al. MSG-Transformer: Exchanging local spatial information by manipulating messenger tokens. In CVPR (2022).

Fu, H. et al. Evaluation of retinal image quality assessment networks in different color-spaces. In International Conference on Medical Image Computing and Computer-Assisted Intervention, 48–56 (Springer, 2019).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 770–778, https://doi.org/10.1109/CVPR.2016.90 (2016).

Acknowledgements

This work was supported by the Project from the Science and Technology Innovation Committee of Shenzhen-Platform and Carrier (International Science and Technology Information Center) and Shenzhen Bay Lab [KCXFZ20211020163813019]. This work involved human subjects in its research. Approval of all ethical and experimental procedures and protocols was granted by the Shenzhen Eye Hospital under ethics approval number 2022KYPJ062.

Author information

Contributions

Zheng Gong: Conceptualization, Methodology, Software, Validation, Data Curation, Writing - Original Draft, Visualization. Zhuo Deng: Methodology, Software, Validation, Data Curation, Writing - Original Draft, Visualization. Run Gan: Conceptualization, Data Curation, Writing - Review and Editing. Zhiyuan Niu: Data Curation, Validation, Writing - Review and Editing. Weihao Gao: Validation, Writing - Review and Editing. Lu Chen: Data Curation, Project administration. Canfeng Huang: Data Curation. Jia Liang: Data Curation. Fang Li: Project administration. Shaochong Zhang: Funding acquisition. Lan Ma: Funding acquisition.

Ethics declarations

Competing interests

The author(s) declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gong, Z., Deng, Z., Gan, R. et al. Acquire continuous and precise score for fundus image quality assessment: FTHNet and FQS dataset. Sci Rep 15, 40524 (2025). https://doi.org/10.1038/s41598-025-24423-8

DOI: https://doi.org/10.1038/s41598-025-24423-8