Abstract

Approximate computing has emerged as an alternative paradigm for improving the efficiency of computing systems by trading off accuracy for better performance. This paper leverages the principles of approximate computing to design efficient multiplier architectures for FPGA platforms. Specifically, this work presents FPGA implementations of one accurate and two approximate multiplier units based on the Dadda algorithm. The multipliers employ a novel partial product reduction technique that minimizes resource utilization and critical path delay, offering a more resource-efficient alternative to traditional multipliers. Our accurate and best-performing approximate 8 × 8 multipliers show improvements of 28% and 37% in power-delay-area product (PDAP) over the Xilinx exact multiplier and the most performance-efficient existing approximate multiplier, respectively. Further evaluation on images of different modalities shows a substantial improvement in PSNR over existing approximate multipliers, especially for healthcare images, highlighting the potential of the proposed multipliers for error-resilient medical imaging tasks.


Introduction

Approximate computing has attracted considerable interest in error-resilient applications such as multimedia processing and machine learning, which can tolerate minor errors in return for improvements in area, power, and delay. The conventional approach to computing, which focuses on attaining maximum accuracy, becomes less viable as energy costs rise and modern systems grow in complexity1. Approximate computing has emerged as an alternative paradigm that improves efficiency by trading off accuracy for better performance or reduced energy consumption2. It can be applied across the layers of the computing stack, from hardware to software. At the hardware level, it involves designing circuits that sacrifice some accuracy in exchange for faster operation or lower power consumption. At the software level, it enables algorithms to prioritize speed or memory efficiency over strict accuracy3. A key motivation behind approximate computing is that not all applications require exact computation. Human perception can tolerate a certain degree of inaccuracy, especially in media such as pictures and videos4. By intentionally introducing approximations into the computation, specifically at the hardware level, the circuit logic can be simplified. This deliberate simplification reduces circuit complexity, power consumption, and processing time3.

Approximate computing exploits the error tolerance of applications such as image and audio processing to reduce hardware cost significantly. In such error-tolerant tasks, human perception masks minor numerical errors, which allows multipliers to be simplified for power and speed gains. For example, the work in5 shows that an FPGA-tailored approximate multiplier can cut LUT usage by 45.9% and reduce critical path delay by 30.6% with only 0.14% average error1. Such reductions in area and delay typically come at the cost of a modest loss of accuracy. Multiplication is essential in digital signal processing (DSP)6: most signal processing algorithms, such as convolution, filtering, the fast Fourier transform (FFT), and the discrete cosine transform (DCT), are built on the multiplication operation7. Therefore, the efficiency of the multiplier unit directly affects the overall efficiency of DSP hardware8. This efficiency is often bottlenecked by propagation delay and silicon area, which are primary concerns in Very Large-Scale Integration (VLSI) design. Multipliers typically involve three steps: partial product generation, partial product reduction (PPR), and final addition, and the primary performance constraint of the multiplier unit lies in the PPR phase9. Compressors, which are multi-input full adders with various compression ratios, are discussed extensively in the literature10, and the 4:2 compressor is considered the optimal choice for Wallace structures11. A 4:2 compressor-based Wallace multiplier with an error correction unit that enhances the accuracy of the results is proposed in12. Comparable methods are reported in11,13, where an error detection/correction unit is a fundamental component of the multiplier design. Optimized implementations of approximate 4:2 compressors are reported in9,14,15. 
In16, the authors propose an OR gate–based compressor tree that reduces the number of accumulation layers and thereby the overall complexity of the multiplier. Similarly, two approximate multipliers based on an approximate 4:2 compressor and imprecise 4-bit full adders are presented in14. Another methodology minimizes computational complexity by segmenting the PPR into several stages15; its implementation is centered on an approximate 4:2 compressor, a full adder, and a sum generation network. Dynamically reconfigurable parallel multipliers that use dual-mode 4:2 compressors are introduced in17; depending on the application's requirements, these multipliers can switch between approximate and exact modes. Besides approximate 4:2 compressors, a related strategy for designing approximate multipliers relies on approximate adders18. In19, five distinct approximate adders are introduced. Using such approximate adders reduces power consumption and on-chip resource usage, as observed in20,21.

While approximate compressor trees are commonly used to design multipliers for application-specific integrated circuit (ASIC) implementations, the method does not lend itself well to field-programmable gate arrays (FPGAs). The primary reason is the architectural difference between ASICs and FPGAs, which precludes gate-level optimizations on FPGAs. Most current approximate multiplier designs have been described and compared mainly for ASIC implementations, where fine-grained control over standard-cell libraries allows exact gate-level approximation and highly optimized timing closure18. In ASICs, approximation is normally carried out at the gate or compressor level, and designers take advantage of complete control over physical design, placement, and routing to reduce critical path delay and area overhead. In contrast, FPGA implementations use fixed look-up table (LUT)-based logic blocks and predefined routing structures. Gate-level approximation does not always map efficiently onto FPGA LUT primitives: several simplified gates may still occupy entire LUTs, and interconnect delays tend to dominate critical paths. Additionally, FPGA timing closure relies heavily on how logic is packed into LUTs and on routing congestion management, which differs considerably from ASIC flows. Consequently, directly porting ASIC-tailored approximate multiplier designs to FPGAs results in suboptimal resource utilization, timing, and power. The fundamental FPGA fabric comprises LUTs and built-in primitives such as Carry4, both of which are constrained in the number of inputs they support. Therefore, any optimization strategy for FPGA platforms must be built around these components.

Many recent efforts focus on the design of approximate multipliers for FPGA technology. One common method deploys an approximate 4 × 2 multiplier cell that truncates the least significant partial product terms22. Using this cell as a building block, the authors in22 propose two multiplier designs: one focuses on accurate summation of partial products, whereas the other emphasizes approximate summation. Both configurations are well suited to FPGA implementation. A similar modular approach is proposed in23, wherein the authors use LUT sharing and carry switching to design FPGA-specific 4 × 4 multipliers; the basic multiplier cell is then used to construct higher-order multipliers. Compared to the Xilinx exact multiplier, their 8 × 8 approximate design achieves up to 38.75% power savings, 17.29% lower delay, and 28.17% area reduction. The authors in24,25 apply variants of the approximate mirror adder (AMA) on FPGA technology. AMAs reduce chip area and power by introducing errors into the Sum or Cout outputs of full adders at the cost of accuracy. Further, five different AMA-based approximate multiply-accumulate (MAC) units are presented in26. These designs substantially reduce chip resources and dynamic power and increase the maximum operating frequency compared to exact MAC designs. The authors in4 cite a comparison suggesting that the Dadda multiplier can be faster and less complex than the Wallace tree multiplier at the gate level. Among the various architectures developed for fast multiplication, the Dadda multiplier is an advanced form of column compression multiplier that builds on earlier parallel multiplier designs. The Dadda approach minimizes the number of adder stages required to sum the partial products, giving a total delay that scales logarithmically with the operand size27. 
Recent work has also explored embedding approximation within the partial-product reduction stages of Dadda multipliers. The works in3,28 originally proposed simplified 4:2 compressor and adder circuits that drop certain carry outputs to reduce logic and power at the cost of a small accuracy loss. Building on these ideas, the authors in4,27 developed Dadda multipliers using approximate adders/compressors. For example, an almost-full-adder cell was used in an 8-bit Dadda tree to cut device usage, and gate-level 4:2 compressors were introduced in 32-bit and 8-bit Dadda designs27,29. Likewise, several error-tolerant adders were inserted into an 8 × 8 Dadda multiplier and characterized in18.

While approximate multipliers built from truncation-based cells, reduced-compressor cells, and approximate adder cells have shown attractive area and power reductions on FPGA platforms, they have shortcomings. For instance, truncation-type 4 × 2 and 4 × 4 building blocks22,23 save logic by dropping least-significant partial products, but they provide only coarse, magnitude-oriented error control and do not take full advantage of FPGA LUT packing or routing properties. They are also constrained in operand bit width, since higher-order multipliers are constructed by recursively instantiating the lower-order 4 × 2 and 4 × 4 cells. Approximate mirror adders minimize transistor count and dynamic power24,25,26, but these approaches mainly target gate-level optimizations and report resource-count or dynamic-power improvements without addressing the interconnect- and routing-constrained delay that dominates FPGA performance. Works that integrate approximation into Dadda trees and 4:2 compressors show that carry-dropping and reduced-compressor methods can lower logic depth and energy3,27,28, but such methods address approximation at the gate or topology level and thus offer limited control over where error is inserted; for example, they generally cannot keep the MSBs exact while aggressively approximating the LSB blocks. Nor do they deliver fine-grained design-time or run-time precision scaling. In summary, while the above methods provide promising results, they do not present an integrated FPGA-aware methodology that (i) rearranges Boolean expressions to fit LUT primitives more efficiently, (ii) encodes and packs partial-product logic to reduce routing and LUT fragmentation, and (iii) exposes fine-grained, area- and timing-aware precision scaling. 
Our work addresses these FPGA-specific issues through Boolean restructuring, LUT encoding, and precision scaling, with the aim of minimizing LUT packing inefficiency, alleviating routing congestion, and providing fine-grained accuracy–performance tuning within the limits of FPGA fabrics.

Besides these multiplier- and compressor-based designs, recent studies have constructed hybrid and input-aware arithmetic components that further illustrate the diversity of approximate computing. In30, the authors present an approximate hybrid square rooter (AHSQR) architecture that combines array-based and logarithmic square rooters, balancing hardware cost against computational accuracy. By using an exact restoring array unit to process the most significant bits and approximating the remaining bits with a logarithmic unit, the design achieves up to 70% area reduction, a 2.5× speedup, and 75% power reduction compared to exact square rooters while keeping errors within reasonable margins. Likewise, the designers in31 propose a significant input extraction-based approximate adder (SIEAA) that dynamically detects the most significant bits of the operands and processes only those with a minimum-width exact adder, bypassing or approximating the remaining bits. Similarly, the authors in32 investigate array and logarithmic structures in arithmetic circuits to illustrate how bit-width partitioning, pattern-aware truncation, and simplified functional units can be used to implement scalable and energy-efficient approximate computing hardware.

Although these works demonstrate the promise of hybrid and significance-driven approximation, their emphasis has mostly been on high-level algorithmic and architectural innovations, frequently in ASIC-focused settings or without specifically targeting the FPGA-specific issues of LUT mapping, routing congestion, and accuracy tuning. In particular, LUT packing efficiency, fine-grained precision scalability, and timing optimization in FPGA fabrics are either not addressed or only implicitly managed. The proposed contribution overcomes these limitations by integrating three FPGA-focused methods into one systematic approach. First, Boolean restructuring rearranges and factors the arithmetic operations so that influential terms (MSBs and key carry paths) are kept exact while less influential terms are algebraically rearranged for more compact combinational packing; this lowers logic depth and carry propagation without compromising the main contributors to result accuracy (in contrast to the strict truncation or carry-drop schemes in3,22). Second, LUT packing and encoding maps compressor and partial-product functions into single LUTs (and, where necessary, into FPGA carry chains) to minimize inter-LUT routing. This helps avoid setup/hold violations and exploits the FPGA fabric for a higher achievable maximum frequency, a mapping process not undertaken or documented by many earlier compressor/adder designs25,26,27. Third, precision scaling provides block-level, fine-grained control of the approximation degree (rather than whole-word truncation), allowing designers to balance error metrics (e.g., NMED, MED) against timing and power budgets with known granularity. 
In contrast to fixed truncation or single-design AMA methods, our scaling is parameterizable by operand width and quality-of-service requirements and can be combined with Boolean restructuring so that MSB blocks are exact while LSB blocks are heavily approximated (addressing the limited granularity of18,26). Together, these three components form a consolidated FPGA-aware design flow in which (i) error propagation is analytically bounded for the selected precision map, (ii) Boolean-level transformations yield LUT-friendly logic chunks, and (iii) a LUT-packing phase minimizes interconnect and raises the operating frequency. To the best of our knowledge, this is the first work to deliberately combine Boolean restructuring, FPGA LUT-based encoding, and fine-grained precision scaling into an overall methodology for approximate multipliers and to analyze its advantages against earlier truncation-, AMA-, and compressor-based approaches on real FPGA targets27,28,29. The outcome is a family of multipliers that retain the resource and power benefits of earlier work while providing better LUT usage, lower routing delay, tunable accuracy, and verifiable frequency gains on FPGA mappings.

This work begins by revisiting the exact 8-bit Dadda multiplier. A robust, well-structured accurate multiplier delivers predictable performance and sets the upper bound on accuracy, so that controlled simplifications can be introduced without unacceptable degradation. Building on that foundation, this paper presents accurate and approximate 8-bit Dadda multipliers and provides a comprehensive evaluation of their accuracy, power consumption, and resource utilization on a modern FPGA platform. FPGAs have also become a powerful enabler in the healthcare domain because they deliver the high-performance, low-latency, and energy-efficient computation that many healthcare applications require. The aim is to strike an optimal balance among accuracy, power, and resource utilization, keeping the design lightweight and energy-efficient while maintaining image-processing quality within acceptable bounds, which is crucial for any medical imaging task. The main contributions of this paper are as follows:

- Accurate Dadda Multiplier Baseline: A fully accurate 8-bit Dadda multiplier is implemented and rigorously characterized on an FPGA, with precise measurements of critical-path delay, power consumption, and LUT utilization. These measurements serve as a baseline for evaluating the impact of approximation.

- Controlled Approximation Methodology: A technique referred to as precision scaling is introduced and implemented at the architectural level by removing the hardware corresponding to the least significant bits (LSBs) in the Dadda reduction tree.

- Boolean-Network-Driven FPGA Mapping: In contrast to previous approaches that directly map Boolean equations onto LUTs or carry chains, the proposed method extracts and restructures the multiplier's Boolean network to eliminate redundant logic. The resulting netlist is optimally mapped onto both LUTs and dedicated Carry4 primitives, yielding a more efficient implementation than conventional synthesis flows.

- Comprehensive Metric Evaluation: The proposed approximate multipliers are evaluated against the exact baseline and recent related works, with accuracy metrics (MED and NMED), dynamic power consumption, and area utilization (LUTs) reported for a Xilinx 7 Series device.

- Image-Processing Case Studies: To demonstrate the practical applicability of the proposed multiplier, it is integrated into three representative medical image processing tasks: edge detection, image smoothing, and image sharpening. The perceptual quality of the processed images is evaluated using the Peak Signal-to-Noise Ratio (PSNR), confirming the multiplier's effectiveness for healthcare-oriented imaging applications.

The remainder of the paper is organized as follows. “Preliminary work” describes the FPGA primitives and error metrics on which the methodology builds. “Dadda algorithm” explains the Dadda algorithm and how it is used in binary multiplication. “Proposed multipliers” describes the design of the proposed accurate and approximate 8-bit Dadda multipliers. “Results and discussion” presents a comparative analysis with state-of-the-art multipliers. A subsequent section describes the use of the proposed design in image processing applications to validate it. Finally, conclusions are drawn in “Conclusion”, and references are listed at the end.

Preliminary work

In Xilinx FPGAs, LUTs and 4-bit fast Carry4 primitives are the fundamental building blocks used to implement digital logic. By changing the programmed INIT value of a LUT, one can implement any Boolean function of up to six inputs or, in modern FPGAs, even configure the LUT as a small RAM or shift register. Notably, Xilinx provides a LUT6_2 primitive, a 6-input LUT with two outputs. The LUT6_2 can be used either as a single 6-input logic element (output O6) or, with input I5 tied high, as two 5-input logic elements (outputs O6 and O5) that share inputs, as shown in Fig. 1.
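The dual-output behaviour of the LUT6_2 can be made concrete with a small behavioural model. The Python sketch below follows the addressing documented for the Xilinx LUT6_2 primitive: O6 is the INIT bit selected by all six inputs, and O5 is the bit of the lower 32-bit half selected by I0–I4. The function name `lut6_2` and the half-adder INIT value are illustrative choices, not taken from this paper.

```python
def lut6_2(init, inputs):
    """Behavioural model of a Xilinx LUT6_2 primitive.

    init   -- 64-bit INIT value
    inputs -- six bits (I0, I1, I2, I3, I4, I5)
    Returns (O6, O5): O6 reads INIT at address {I5..I0};
    O5 reads the lower half INIT[31:0] at address {I4..I0}.
    """
    addr5 = sum(bit << k for k, bit in enumerate(inputs[:5]))
    addr6 = addr5 | (inputs[5] << 5)
    return (init >> addr6) & 1, (init >> addr5) & 1


# Illustrative half adder on I0 and I1 (I2..I4 unused):
# upper half 0x66666666 holds I0 XOR I1 (read by O6 when I5 = 1),
# lower half 0x88888888 holds I0 AND I1 (read by O5).
HA_INIT = 0x6666666688888888

for a in (0, 1):
    for b in (0, 1):
        o6, o5 = lut6_2(HA_INIT, (a, b, 0, 0, 0, 1))   # I5 tied high
        print(a, b, "propagate (O6):", o6, "generate (O5):", o5)
```

With I5 held at 0, both outputs would address the lower half of INIT and therefore coincide, which is why dual-output use requires I5 to be tied high.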

Carry4 is another device primitive designed to handle carry propagation efficiently, which is essential in arithmetic operations like addition and multiplication. Unlike a general routing fabric, the Carry4 chain provides a fast and dedicated pathway for propagating carry signals across bits, significantly reducing delay in multi-bit operations. The schematic of a Carry4 primitive is shown in Fig. 2.
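The Carry4 datapath can be sketched in the same way. The model below assumes the per-bit structure documented for the 7-series CARRY4 primitive (an XOR gate and a carry multiplexer per bit, controlled by the S and DI inputs). The helper `add4` and its mapping S = a XOR b, DI = a are one common adder mapping, used here only for illustration.

```python
def carry4(di, s, ci):
    """Behavioural model of a CARRY4 primitive.

    di, s -- lists of 4 bits (index 0 = LSB); ci -- carry-in bit.
    Returns (o, co): sum outputs O[3:0] and carry outputs CO[3:0].
    """
    o, co = [], []
    c = ci
    for i in range(4):
        o.append(s[i] ^ c)           # XOR stage: O[i] = S[i] xor incoming carry
        c = c if s[i] else di[i]     # MUX stage: pass carry when S[i] = 1, else take DI[i]
        co.append(c)
    return o, co


def add4(a, b, cin=0):
    """4-bit adder on one CARRY4: S = a xor b (from LUTs), DI = a."""
    a_bits = [(a >> i) & 1 for i in range(4)]
    b_bits = [(b >> i) & 1 for i in range(4)]
    s = [x ^ y for x, y in zip(a_bits, b_bits)]
    o, co = carry4(a_bits, s, cin)
    return sum(bit << i for i, bit in enumerate(o)) | (co[3] << 4)


print(add4(9, 7, 1))   # 17
```

When S[i] = 0 the two operand bits are equal, so DI = a already equals the correct carry-out (generate or kill); when S[i] = 1 the incoming carry is simply propagated.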

Several error metrics are commonly used to quantify the accuracy of approximations3. The error distance (ED) is the magnitude of the difference between the exact and approximate outputs. The mean error distance (MED) is the average of the ED values over a collection of input-output samples. The normalized mean error distance (NMED) is the MED normalized by the maximum exact result for a collection of input patterns. The peak signal-to-noise ratio (PSNR) measures the quality of an output image relative to a reference image. The mathematical formulations of these metrics are given in Table 1.
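As an illustration of these metrics, the sketch below uses their standard definitions; the authoritative formulations are those of Table 1, which this sketch does not reproduce. The function names and the `max_exact` and `peak` parameters are introduced here for illustration only.

```python
import math


def error_distances(exact, approx):
    """ED_i = |exact_i - approx_i| for paired samples."""
    return [abs(e - a) for e, a in zip(exact, approx)]


def med(exact, approx):
    """Mean error distance over all sample pairs."""
    eds = error_distances(exact, approx)
    return sum(eds) / len(eds)


def nmed(exact, approx, max_exact):
    """MED normalized by the maximum exact output (e.g. 255 * 255 for an 8 x 8 unsigned multiplier)."""
    return med(exact, approx) / max_exact


def psnr(reference, test, peak=255):
    """PSNR in dB between two equally sized pixel sequences (infinite if identical)."""
    mse = sum((r - t) ** 2 for r, t in zip(reference, test)) / len(reference)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)
```

For an 8 × 8 unsigned multiplier, `exact` and `approx` would hold the outputs for the chosen input patterns and `max_exact` would be 255 × 255 = 65025.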

Dadda algorithm

The Dadda algorithm is a classic tree-based partial-product reduction method for binary multipliers, introduced by Luigi Dadda in 1965. It arranges the AND-generated partial products into columns and compresses them in stages using (3:2) counters (full adders) and (2:2) counters (half adders). Unlike the Wallace tree, which reduces the partial products as early as possible, the Dadda scheme defers reduction until it is necessary. It defines a sequence of maximum column heights, 2, 3, 4, 6, 9, …, in which d1 = 2 and each subsequent term is d(j+1) = ⌊1.5·d(j)⌋, and at each stage it compresses only the columns taller than the current target height, using the fewest adders needed to reach that target. This is repeated until only two rows of bits remain for the final carry-propagate addition. Because this schedule decreases the overall number of adders, the resulting reduction tree has fewer gates and therefore occupies less hardware than a less-optimized design. Figure 3 shows the configuration of the 8-bit Dadda multiplier, including all five stages of the Dadda multiplication.
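The reduction schedule can be reproduced from column heights alone. The sketch below, whose helper names are hypothetical, applies the height sequence d1 = 2, d(j+1) = ⌊1.5·d(j)⌋ and, in each column, uses as many full adders as possible plus at most one half adder to reach the current target, counting the carries that arrive from the previous column in the same stage.

```python
def dadda_targets(max_height):
    """Dadda height sequence d1 = 2, d(j+1) = floor(1.5 * d(j)), kept below max_height."""
    seq = [2]
    while seq[-1] * 3 // 2 < max_height:
        seq.append(seq[-1] * 3 // 2)
    return seq


def dadda_schedule(n):
    """Per-stage full-adder (FA) and half-adder (HA) counts for an n x n Dadda tree.

    Column c of the partial-product array holds min(c + 1, 2n - 1 - c) dots.
    Returns ([(target, FAs, HAs), ...], final_column_heights).
    """
    heights = [min(c + 1, 2 * n - 1 - c) for c in range(2 * n)]
    stages = []
    for d in reversed(dadda_targets(max(heights))):
        new_heights, carry_in, fa_total, ha_total = [], 0, 0, 0
        for h in heights:
            h += carry_in                      # carries produced by column c-1 in this stage
            excess = max(0, h - d)             # how far the column exceeds the target
            fa, ha = excess // 2, excess % 2   # an FA removes 2 bits, an HA removes 1
            new_heights.append(h - 2 * fa - ha)
            carry_in = fa + ha                 # each adder sends one carry to column c+1
            fa_total += fa
            ha_total += ha
        heights = new_heights
        stages.append((d, fa_total, ha_total))
    return stages, heights


stages, final = dadda_schedule(8)
for target, fa, ha in stages:
    print(f"reduce to height {target}: {fa} FA, {ha} HA")
```

For n = 8 this gives four reduction stages (targets 6, 4, 3, 2) with 35 full adders and 7 half adders in total, followed by a 14-bit carry-propagate addition; how these correspond to the five stages drawn in Fig. 3 depends on that figure's numbering convention.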

The design of an 8-bit Dadda multiplier starts from its distinctive dot diagram. To convert this graphical representation into a Boolean network, each dot is replaced with its corresponding logic node (AND gates for partial products and full/half adders for reduction), and the nodes are connected according to the reduction topology. The result is a directed acyclic graph of Boolean functions in which each adder is decomposed into its sum and carry output functions. Because these equations are extracted structurally from the dot diagram, the Boolean network faithfully represents the data flow and logic dependencies of the multiplier, as shown in Fig. 4, and lays the groundwork for further optimization, synthesis, and approximation studies.
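The dot-diagram-to-Boolean-network conversion can be sketched as a small netlist builder. This is an illustration under stated assumptions rather than the authors' tool: the node names ('AND', 'XOR', 'MAJ') and the ripple-form final adder are choices made here, and the node ordering does not reproduce the labels of Fig. 4. The sketch builds the partial-product AND nodes, replaces each full or half adder with its sum and carry functions following the Dadda schedule, and evaluates the resulting DAG to confirm that it computes the product.

```python
def build_dadda_netlist(n=8):
    """Return (nodes, outputs) for an n x n unsigned Dadda multiplier.

    nodes is a topologically ordered list of (op, inputs):
      'A' / 'B'           -- operand bit inputs[0]
      'AND', 'XOR', 'MAJ' -- Boolean functions of earlier node indices
      'ZERO'              -- constant 0
    A full adder becomes XOR (sum) + MAJ (carry); a half adder XOR + AND.
    outputs[c] is the node index of product bit c.
    """
    nodes = []

    def node(op, *inputs):
        nodes.append((op, inputs))
        return len(nodes) - 1

    width = 2 * n
    a = [node('A', i) for i in range(n)]
    b = [node('B', j) for j in range(n)]
    cols = [[] for _ in range(width)]
    for i in range(n):
        for j in range(n):
            cols[i + j].append(node('AND', a[i], b[j]))  # one dot of the diagram

    targets = [2]
    while targets[-1] * 3 // 2 < n:
        targets.append(targets[-1] * 3 // 2)

    for d in reversed(targets):
        nxt = [[] for _ in range(width)]
        for c in range(width):
            bits = list(cols[c])
            # nxt[c] already holds the carries column c-1 produced in this stage
            while len(bits) + len(nxt[c]) > d:
                if len(bits) + len(nxt[c]) - d >= 2:    # full adder
                    x, y, z = bits.pop(), bits.pop(), bits.pop()
                    nxt[c].append(node('XOR', x, y, z))
                    carry = node('MAJ', x, y, z)
                else:                                    # half adder
                    x, y = bits.pop(), bits.pop()
                    nxt[c].append(node('XOR', x, y))
                    carry = node('AND', x, y)
                if c + 1 < width:
                    nxt[c + 1].append(carry)
            nxt[c].extend(bits)
        cols = nxt

    # Final carry-propagate addition of the two remaining rows (ripple form)
    outputs, carry = [], None
    for c in range(width):
        bits = cols[c] + ([carry] if carry is not None else [])
        if not bits:
            outputs.append(node('ZERO'))
            carry = None
        elif len(bits) == 1:
            outputs.append(bits[0])
            carry = None
        elif len(bits) == 2:
            outputs.append(node('XOR', *bits))
            carry = node('AND', *bits)
        else:
            outputs.append(node('XOR', *bits))
            carry = node('MAJ', *bits)
    return nodes, outputs


def evaluate(nodes, outputs, a_val, b_val):
    """Evaluate the netlist for unsigned operands and return the integer product."""
    v = []
    for op, ins in nodes:
        if op == 'A':
            v.append((a_val >> ins[0]) & 1)
        elif op == 'B':
            v.append((b_val >> ins[0]) & 1)
        elif op == 'AND':
            v.append(v[ins[0]] & v[ins[1]])
        elif op == 'XOR':
            v.append(sum(v[k] for k in ins) & 1)
        elif op == 'MAJ':
            v.append(1 if sum(v[k] for k in ins) >= 2 else 0)
        else:  # 'ZERO'
            v.append(0)
    return sum(v[k] << c for c, k in enumerate(outputs))


nodes, outputs = build_dadda_netlist(8)
print(evaluate(nodes, outputs, 200, 123))   # compare with 200 * 123
```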

Proposed multipliers

This section presents two key methodologies integrated into the proposed accurate Dadda multiplier design to improve hardware efficiency. The first introduces a Hybrid Carry Look-Ahead (HCLA) adder, applied strategically in the initial stages of partial product reduction; it simplifies carry propagation to reduce switching activity and logic complexity, thereby lowering power consumption. In the subsequent reduction stages, a novel Boolean restructuring approach is integrated into the Dadda tree architecture to reduce hardware cost. Together, these yield a compact, power-aware implementation that preserves the structural advantages of the traditional Dadda multiplier. Building on the accurate multiplier, approximate multipliers are then designed using a technique called precision scaling, in which approximation is applied at the architectural level by removing all the hardware associated with generating the less significant product bits of the exact multiplier. The overarching objective is to achieve minimal device utilization and reduced power consumption while keeping the computed results close to those of exact multiplication.

Proposed 8 × 8 accurate multiplier

In our proposed methodology, the classical 8‑bit Dadda multiplier’s structure is revisited, and then two enhancement strategies are introduced. The exact 8-bit Dadda tree is constructed as shown in Fig. 5, which highlights the various types of adder stages and the final adder. This precise layout pinpoints the locations where HCLA can be integrated in the multiplier and how Boolean restructuring can be applied throughout the reduction network.

Hybrid carry look-ahead adder

For this configuration, the HCLA adder is used in four stages, as shown in Fig. 5. In this approach, the carry chain is connected to four 6-input LUTs, which produce the carry-generate signals on O5 and the carry-propagate signals on O6 for the carry chain. The carry chain then performs the function of a 4-bit carry look-ahead adder. Each LUT on its own behaves as a half carry look-ahead adder; however, when its generate and propagate signals are combined in the carry chain to form the full Sum and full Carry, the carries propagate sequentially through the chain, mimicking the behaviour of a ripple carry adder. This blend of localized carry look-ahead logic with ripple-based propagation motivates the name HCLA adder. Figure 6(a) shows the Stage 1 partial products that need to be reduced to move on to Stage 2, and Fig. 6(b) shows how the proposed HCLA adder is used in this Dadda multiplier stage, emphasizing its role in speeding up carry propagation.
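The statement that the Carry4 multiplexer reproduces carry look-ahead behaviour can be checked with a short derivation. It assumes, for illustration, that each LUT receives two operand bits x_i and y_i, drives P_i = x_i ⊕ y_i on O6 (to the S input) and G_i = x_i y_i on O5 (to the DI input); the symbols x_i and y_i are introduced here and do not come from the paper's figures.

```latex
\begin{aligned}
c_{i+1} &= P_i\,c_i + \overline{P_i}\,G_i
          && \text{(carry MUX: select } P_i\text{, data input } G_i\text{)}\\
        &= G_i + P_i\,c_i
          && \text{(} G_i = 1 \Rightarrow x_i = y_i \Rightarrow P_i = 0\text{)}\\
s_i     &= P_i \oplus c_i
          && \text{(carry-chain XOR)}\\
c_4     &= G_3 + P_3 G_2 + P_3 P_2 G_1 + P_3 P_2 P_1 G_0 + P_3 P_2 P_1 P_0\,c_0
\end{aligned}
```

Logically, the result is the look-ahead equation, but in the fabric it is evaluated bit by bit along the dedicated multiplexer chain, which is the ripple-like behaviour described above.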

Boolean restructuring- a novel approach

For the configuration shown in Fig. 5, the Boolean restructuring approach is used in the last three stages. In this approach, the original Boolean network is restructured so that every LUT uses all of its inputs when implementing the logic function. For clarity, the approach is demonstrated on the last reduction stage of the proposed multiplier. The O6 outputs of four LUTs produce the half sums for signals S44–S47. These O6 outputs are used in two ways: they are XORed in the Carry4 to generate the full sums S44–S47, and they act as the select line of each Carry4 MUX to generate the full carries C44–C47, with Cin and one of the half-adder inputs serving as the MUX data inputs. The O5 outputs are used independently: the O5 of the first LUT generates the full sum S48, that of the second LUT the full carry C48, that of the third LUT the full sum S49, and that of the last LUT the full carry C49. Figure 7(a) shows the Stage 5 partial products that need to be restructured; Fig. 7(b) shows a conventional ripple carry adder generating the outputs, where each LUT implements the same logic as the rightmost one. Figure 7(c) illustrates how the proposed approach is applied to this Dadda multiplier stage, emphasizing its role in minimizing hardware resources; the last two LUTs in Fig. 7(c) implement the logic of the first and second LUTs, respectively.

With this approach, the hardware is successfully reduced from 6 LUTs to just 4 LUTs, with optimized inputs and better critical path delay. This novel approach can be extended to design an adder of any bit length.
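One way to see how a single LUT6_2 can carry both a carry-chain half sum and an unrelated adder output is to compute its INIT value. The sketch below assumes, purely for illustration, that O6 forms the half sum of I0 and I1 while O5 holds the sum or carry of an independent full adder on I2–I4 (with I5 tied high); the actual pin assignments and INIT values of the proposed stage are those in Fig. 7 and Table 2, which this sketch does not reproduce. The helper names are hypothetical.

```python
def lut6_2_init(o6_fn, o5_fn):
    """Pack two 5-input functions into one 64-bit LUT6_2 INIT, assuming I5 is tied to 1.

    O5 reads INIT[31:0] at address {I4..I0}; with I5 = 1, O6 reads INIT[63:32].
    Each function takes the bits (i0, i1, i2, i3, i4) and returns 0 or 1.
    """
    init = 0
    for addr in range(32):
        bits = [(addr >> k) & 1 for k in range(5)]   # bits[0] is I0
        if o5_fn(*bits):
            init |= 1 << addr
        if o6_fn(*bits):
            init |= 1 << (addr + 32)
    return init


def half_sum(i0, i1, i2, i3, i4):
    """O6: half sum of I0 and I1, routed to a Carry4 S input."""
    return i0 ^ i1


def fa_sum(i0, i1, i2, i3, i4):
    """O5: sum of an independent full adder on I2, I3, I4."""
    return i2 ^ i3 ^ i4


def fa_carry(i0, i1, i2, i3, i4):
    """O5: carry (majority) of an independent full adder on I2, I3, I4."""
    return (i2 & i3) | (i2 & i4) | (i3 & i4)


SUM_LUT = lut6_2_init(half_sum, fa_sum)       # 0x66666666F00F0FF0
CARRY_LUT = lut6_2_init(half_sum, fa_carry)   # 0x66666666FFF0F000
print(hex(SUM_LUT), hex(CARRY_LUT))
```

Under this assumption, each LUT contributes one chain bit through O6 and one extra full-adder output through O5, which is consistent with four LUTs covering work that would otherwise need six.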

LUT encoding

The LUT encoding approach is designed to make maximum use of the input capacity of FPGA LUTs by packing several arithmetic sub-operations, including partial-product generation and local compressor functions, into a single LUT primitive. Traditional multiplier designs usually employ gate-level approximations or inserted compressors that are subsequently mapped to LUTs automatically by the synthesis tool. This tends to result in inefficient LUT usage, where each LUT implements only a fraction of the logic it could hold and leaves inputs unused. In contrast, our methodology systematically examines the Boolean formulas of the arithmetic blocks, refactors them, and packs sets of related signals into one LUT truth table. This decreases the total number of LUTs and the number of inter-LUT wires, and thus alleviates routing congestion. By minimizing routing and logic depth, the approach directly reduces the critical path delay and raises the maximum operating frequency of the multiplier.

In the proposed accurate 8 × 8 Dadda multiplier, LUT encoding is carried out systematically by identifying logic redundancies and reusing LUTs with identical logical functionality across different stages. In the first stage, only Type 1 (T1) LUTs are used; these perform the bitwise AND of the multiplicand and multiplier bits to form the partial products. To interface directly with the Carry4 primitives, the T1 LUTs also implement half adders (HAs) that produce generate and propagate signals, mapped to the O5 and O6 outputs, respectively. In the second stage, three LUT types are incorporated: T1, Type 2 (T2), and Type 4 (T4). T2 LUTs map full adder (FA) outputs from two Sum/Carry bits of the previous stage and a new partial product, while T4 LUTs map FA operations involving two partial products and one Sum/Carry bit from the preceding stage. The third stage exhibits the highest structural complexity and uses seven distinct LUT types: T1, Type 3 (T3), T4, Type 5 (T5), Type 7 (T7), Type 8 (T8), and Type 9 (T9). Among these, T5 LUTs map two independent outputs: O6 computes the HA Sum of a previously generated Sum/Carry bit and a partial product, while O5 maps an additional partial product. Similarly, T7 and T8 LUTs generate two independent outputs; both use O6 to compute an HA Sum from prior Sum/Carry bits, while O5 maps an FA Sum in T7 and an FA Carry in T8, both from previously generated bits. In the fourth stage, seven LUT types are used: T1, T2, T3, T4, Type 6 (T6), T7, and T8. Notably, T6 LUTs perform dual-output mapping similar to T5, with slight variations in the input logic that reflect positional shifts in the Dadda compression hierarchy. Finally, the fifth stage completes the reduction using four LUT types, T1, T2, T7, and T8, whose functionalities are described above. 
These structured, hierarchical LUT encodings ensure an efficient and scalable mapping of the Dadda tree logic onto the FPGA fabric, maintaining logic regularity and making efficient use of the O5/O6 outputs of the LUT6_2 primitives and of the Carry4 elements. The different LUT types and their associated INIT values are shown in Fig. 8 and Table 2, respectively.
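The T1 behaviour described above (two partial products half-added, with generate on O5 and propagate on O6) can be checked by deriving an INIT value. The pin assignment below, with I0·I1 and I2·I3 forming the two partial products and I4 unused, is a hypothetical example; the authors' actual T1 INIT value is the one listed in Table 2.

```python
def lut6_2_init(o6_fn, o5_fn):
    """Pack two 5-input functions into one 64-bit LUT6_2 INIT, assuming I5 is tied to 1.

    O5 reads INIT[31:0] at address {I4..I0}; with I5 = 1, O6 reads INIT[63:32].
    """
    init = 0
    for addr in range(32):
        bits = [(addr >> k) & 1 for k in range(5)]   # bits[0] is I0
        if o5_fn(*bits):
            init |= 1 << addr
        if o6_fn(*bits):
            init |= 1 << (addr + 32)
    return init


def t1_propagate(i0, i1, i2, i3, i4):
    """O6 (hypothetical pins): (I0 AND I1) XOR (I2 AND I3); I4 unused."""
    return (i0 & i1) ^ (i2 & i3)


def t1_generate(i0, i1, i2, i3, i4):
    """O5 (hypothetical pins): (I0 AND I1) AND (I2 AND I3)."""
    return (i0 & i1) & (i2 & i3)


T1_INIT = lut6_2_init(t1_propagate, t1_generate)   # 0x7888788880008000
print(hex(T1_INIT))
```

The two outputs together form a half adder on the two partial products: 2·G + P equals their arithmetic sum for every input combination, so the pair can feed the DI and S inputs of a Carry4 directly.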

Design of 8 × 8 accurate Dadda multiplier

In this subsection, the complete schematic of the accurate 8 × 8 Dadda multiplier is presented. Each LUT6_2 cell in the schematic is explicitly labelled with its Sum and Carry outputs, and the interconnect follows the reduction stages. Figure 9 describes the complete block-level schematic. Through this schematic, one can verify timing, resource utilization, and subsequent approximation and optimization strategies.

Proposed 8 × 8 approximate multipliers

As illustrated in Figs. 10 and 11, a precision-scaling approximation is applied by entirely removing the LUTs responsible for generating the Least Significant Products (LSPs) of the 8 × 8 multiplier. In Design 1, the four LSPs P0–P3 are removed, as shown in Fig. 10(a) and (b); in Design 2, the truncation is extended to five LSPs, P0–P4, as shown in Fig. 11(a) and (b). To recover some of the lost accuracy in Design 2, a carry-propagation mechanism is introduced for the contribution of P4, reconstructing its effect without allocating any additional LUTs. Because these LSBs are truncated at the AND-array stage, the proposed designs need no downstream reduction or summation logic for those positions, which directly reduces combinational depth and LUT utilization. The result is a shorter critical path through the Dadda reduction tree and fewer LUTs in the partial-product network, which reduces dynamic power and increases the achievable clock frequency. This precision-scaling approach therefore gives designers a straightforward lever for trading accuracy for resource efficiency in error-resilient applications. In addition to the nine LUT configurations used in the accurate 8 × 8 Dadda multiplier, the approximate designs incorporate a few additional LUT types, which are shown with their associated INIT values in Fig. 12 and Table 3, respectively.
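The accuracy cost of plain truncation can be estimated exhaustively in software. The sketch below assumes that Design 1 drops every partial product in columns 0–3 (k = 4) with no compensation, and it does not model the carry-propagation recovery used for P4 in Design 2, so its figures describe simple column truncation rather than the proposed designs. The function names are hypothetical.

```python
def truncated_product(a, b, k):
    """Unsigned product with every partial product a_i*b_j of weight 2**(i+j) < 2**k removed.

    Row i of the AND array contributes a_i * b << i; the bits of that row lying in
    columns below k are (b & (2**(k - i) - 1)) << i.
    """
    dropped = 0
    for i in range(k):
        if (a >> i) & 1:
            dropped += (b & ((1 << (k - i)) - 1)) << i
    return a * b - dropped


def med_nmed(k, n=8):
    """Exhaustive MED and NMED of column truncation over all n-bit operand pairs."""
    max_exact = (2 ** n - 1) ** 2
    total_ed = 0
    for a in range(2 ** n):
        for b in range(2 ** n):
            total_ed += abs(a * b - truncated_product(a, b, k))
    med = total_ed / 4 ** n
    return med, med / max_exact


for k in (4, 5):
    print(k, med_nmed(k))
```

Under this model every dropped partial-product bit is set with probability 1/4 for uniformly distributed operands, so the MED equals the sum of the dropped column weights divided by four: 12.25 for k = 4 and 32.25 for k = 5.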

Note that in the exact multiplier, carry chains are used extensively to propagate carries across the adder tree, so that key additions (such as the final accumulation of partial products) incur little LUT delay. While this helps achieve high operating frequencies, it may also lengthen the carry-propagation paths, which can then dominate the overall critical path. In the proposed approximate multipliers, Boolean restructuring and LUT encoding reduce the reliance on long carry chains in the lower-significance columns by replacing or simplifying some adder stages with approximate logic. The more significant columns continue to use the carry chain to maintain accuracy and performance, while the approximated lower-order logic is packed into LUTs without requiring full carry propagation. This selective use of carry chains shortens the effective carry path, reduces routing pressure, and yields both lower delay and better LUT utilization. By balancing LUT-based approximation against carry-chain acceleration, the approximate designs achieve a higher operating frequency than their exact counterpart while keeping error levels tolerable.
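The division of labour between LUTs and the carry chain can be illustrated with a behavioural model of the 7-series CARRY4 (Carry4) primitive. In the Python sketch below, each stage i outputs O[i] = S[i] XOR c_i. It forwards c_(i+1) = c_i when S[i] = 1 and DI[i] otherwise. A LUT is assumed to supply the propagate signal S[i] = a_i XOR b_i, with DI[i] = a_i, and two cascaded CARRY4 blocks form an 8-bit ripple adder. The function names are illustrative, and the model ignores timing entirely.

```python
def carry4(ci: int, s: list[int], di: list[int]) -> tuple[list[int], list[int]]:
    """Behavioural CARRY4: returns (O[3:0], CO[3:0]) as LSB-first bit lists."""
    o, co = [], []
    c = ci
    for i in range(4):
        o.append(s[i] ^ c)        # XOR stage: propagate XOR incoming carry
        c = c if s[i] else di[i]  # carry mux: pass the carry when propagating, else take DI
        co.append(c)
    return o, co


def add_with_carry_chain(a: int, b: int, width: int = 8) -> int:
    """Add two unsigned integers using cascaded CARRY4 blocks (width a multiple of 4)."""
    assert width % 4 == 0
    result, carry = 0, 0
    for base in range(0, width, 4):
        a_bits = [(a >> (base + i)) & 1 for i in range(4)]
        b_bits = [(b >> (base + i)) & 1 for i in range(4)]
        s = [x ^ y for x, y in zip(a_bits, b_bits)]  # LUT output: propagate signal
        di = a_bits                                   # if a_i == b_i, the carry out equals a_i
        o, co = carry4(carry, s, di)
        for i, bit in enumerate(o):
            result |= bit << (base + i)
        carry = co[3]                                 # CO[3] feeds the next CARRY4's carry input
    return result | (carry << width)


print(add_with_carry_chain(200, 100))  # 300
```

Only the propagate/generate decision needs LUT logic. The carry itself ripples through the dedicated multiplexers, which is why long chains remain fast but can still dominate the critical path as word length grows.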

Results and discussion

Experimental setup

In this paper, the proposed multipliers have been implemented in VHDL and synthesized for the Xilinx Artix-7 device xc7a15tcpg236-2 using Vivado 2022.2, which generates detailed reports on area, latency, and dynamic power. Area is measured in terms of the number of LUTs, registers (RG), and slices (SL) utilized. Since LUTs are the basic logic elements in FPGAs, performance-accuracy trade-offs are drawn with respect to LUT usage only. Timing is characterized by the critical path delay (CPD), which includes both logic and routing delays, and by the maximum operating frequency (Fmax). For optimum results, all the multiplier designs are pipelined by inserting registers after every computation stage. For the proposed multipliers, the critical path is further minimized by passing the O5 output of every LUT through a register. The inherent Digital Clock Managers (DCMs) have been used to control the mapping of the clocking resources onto the underlying FPGA fabric, which keeps clock skew to a minimum. Placement and routing congestion that may arise during implementation is mitigated by enabling the phys_opt_design step in the implementation flow, which performs timing-driven physical optimization. In addition, the keep_hierarchy option in the synthesis settings has been turned off so that the tool can apply all possible optimizations across module boundaries. Timing and physical constraints are duly provided, and complete timing closure is achieved. The post-implementation report_design_analysis command reports no timing violations for the proposed designs.

Apart from verifying the functionality of the design, simulation provides switching-activity information, which is captured in a Simulation Activity Interchange Format (SAIF) file. Node activity is critical for accurate power estimation and must realistically represent the data entering the synthesized block. For this reason, the SAIF-based approach has been used to estimate dynamic power (DP) dissipation. For FPGAs, dynamic power consists of logic, clock, and signal power. Logic power depends on the number of on-chip resources utilized, clock power varies with the clock frequency, and signal power depends on the switching activity and interconnect density. Dynamic power analysis is performed at a clock frequency of 250 MHz for all the designs.
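A SAIF file records switching activity per net, including the toggle count (TC) and the time spent at 0 and at 1 (T0, T1). A similar activity profile can be approximated from a cycle-based simulation trace. The Python sketch below counts per-bit toggles and the fraction of cycles each bit is high for a sampled bus. It is an illustrative calculation on hypothetical trace data, not a reimplementation of Vivado's power estimator, and `bus_activity` is a hypothetical helper.

```python
def bus_activity(samples: list[int], width: int) -> list[tuple[int, float, float]]:
    """Per-bit (toggle count, toggles per clock cycle, fraction of cycles at 1).

    `samples` holds the bus value captured once per clock cycle, for example
    from a post-implementation simulation of the multiplier output.
    """
    cycles = len(samples)
    stats = []
    for bit in range(width):
        values = [(s >> bit) & 1 for s in samples]
        toggles = sum(1 for prev, cur in zip(values, values[1:]) if prev != cur)
        rate = toggles / (cycles - 1) if cycles > 1 else 0.0
        stats.append((toggles, rate, sum(values) / cycles))
    return stats


# Hypothetical 4-cycle trace of a 2-bit bus.
for bit, (tc, rate, p1) in enumerate(bus_activity([0b00, 0b01, 0b11, 0b10], width=2)):
    print(f"bit {bit}: TC={tc} toggle rate={rate:.2f} P(1)={p1:.2f}")
```

These activity factors are the quantity that, together with net capacitance, supply voltage, and clock frequency, drives the signal-power term (roughly α·C·V²·f per net). This is why realistic input data matters more than default activity assumptions.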

Evaluation of proposed multipliers

To provide a comprehensive view of hardware cost and performance, our accurate 8 × 8 Dadda multiplier is evaluated against various accurate designs from the literature, as shown in Table 4. All designs are assessed in terms of LUT, register, and slice count, critical-path delay, and dynamic power dissipation. Compared to the area-optimized Xilinx IP multiplier, the proposed accurate multiplier reduces LUT, register, and slice utilization by up to 4%, 19%, and 9%, respectively. Compared to the speed-optimized Xilinx IP multiplier, it achieves an 8% higher maximum operating frequency. Dynamic power dissipation is also reduced by 13% and 5% relative to the area-optimized and speed-optimized Xilinx IP multipliers, respectively.

Building on this baseline, the approximate multipliers are evaluated for accuracy in terms of the mean error distance (MED) and the normalized mean error distance (NMED). Table 5 compares the hardware performance and error metrics of the proposed 8 × 8 approximate multipliers with those of state-of-the-art approximate multipliers. Compared with the area-optimized Xilinx IP multiplier, approximate multiplier 1 reduces LUT, register, and slice count by 15%, 29%, and 13%, respectively. For approximate multiplier 2, the corresponding reductions are 23%, 31%, and 13%. Relative to the speed-optimized Xilinx IP multiplier, approximate multipliers 1 and 2 achieve 67% and 54% higher maximum operating frequency, respectively. Relative to the area-optimized and speed-optimized Xilinx IP multipliers, dynamic power dissipation is reduced by 25% and 18%, respectively, for approximate multiplier 1, and by 41% and 35%, respectively, for approximate multiplier 2. Among the state-of-the-art approximate multipliers, only AXM-8_1 (ref. 38) achieves a slightly better MED than our approximate multiplier 1. However, this small gain comes with a penalty of 46% in LUT count, 54% in maximum operating frequency, and 32% in dynamic power dissipation.

To give a better perspective on the accuracy-performance trade-offs, Figs. 13(a) and (b) present the Power Delay Product (PDP)-LUT-NMED and Power Delay Area Product (PDAP)-NMED plots for the different multipliers. Our approximate multipliers 1 and 2 improve PDAP by 18% and 37%, respectively, over the most efficient design available in the literature (HSLP_1134). They also improve PDAP by 83% and 87%, respectively, over the most accurate reported design (AXM-8_1). This performance improvement comes at the cost of a minor reduction in accuracy. The proposed multipliers retain high accuracy primarily because of the selective optimization of the LSBs. Unlike conventional approximate multipliers that apply approximation uniformly across all bits, our method selectively preserves the critical bits, which significantly reduces the mean error distance, as demonstrated in Table 5. In addition, LUT-based encoding and controlled carry propagation enable efficient packing of the logic nodes, thereby increasing the integration level of the underlying fabric. A high integration level keeps the interconnects between LUTs mostly local, which shortens the critical paths. Furthermore, careful placement of registers along global interconnects ensures that FPGA routing does not become a bottleneck for our designs. In principle, the critical path of both the proposed accurate and approximate multipliers is limited only by the delay of a single LUT and the Carry4 primitive.
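The composite metrics plotted in Fig. 13 combine the individual hardware figures multiplicatively. The Python sketch below assumes PDP = dynamic power × critical path delay and PDAP = PDP × LUT count, since LUTs are the area measure adopted in this work. Improvement is expressed as a percentage reduction relative to a baseline. The `HwReport` class and the figures in the usage lines are hypothetical placeholders, not values from Tables 4 or 5.

```python
from dataclasses import dataclass


@dataclass
class HwReport:
    power_mw: float  # dynamic power (mW)
    delay_ns: float  # critical path delay (ns)
    luts: int        # area measured as LUT count

    @property
    def pdp(self) -> float:
        return self.power_mw * self.delay_ns  # mW x ns = pJ

    @property
    def pdap(self) -> float:
        return self.pdp * self.luts


def improvement(baseline: float, proposed: float) -> float:
    """Percentage reduction of a cost metric relative to a baseline."""
    return 100.0 * (baseline - proposed) / baseline


# Placeholder figures for illustration only.
base = HwReport(power_mw=2.0, delay_ns=4.0, luts=80)
prop = HwReport(power_mw=1.5, delay_ns=3.0, luts=60)
print(f"PDAP improvement: {improvement(base.pdap, prop.pdap):.1f}%")
```

Because the factors multiply, moderate gains in power, delay, and area compound into a much larger PDAP improvement. This is why PDAP separates designs more sharply than any single metric does.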

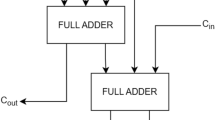

The proposed 8-bit designs can also be extended to higher-order multipliers. However, as the operand word length increases, manual encoding and placement of the LUTs become complicated. This may lead to larger interconnect delays and, in some cases, routing congestion. To overcome this problem, higher-order multipliers are constructed by recursively using the lower-order 8-bit multipliers. This has two advantages. First, the 8-bit multipliers are already optimized with respect to resource utilization and timing closure, so no routing congestion is observed. Second, this modular approach gives designers the flexibility to choose an appropriate approximate multiplier according to the application requirements. For example, a 16 × 16 multiplier can be constructed from four 8 × 8 multipliers as per the schematic of Fig. 14, and the level of accuracy can be controlled by choosing different multipliers for M1–M4. In the following analysis, four approximate 16 × 16 multipliers are presented, based on the assignments of M1–M4 specified in Table 6. For an exact 16-bit multiplier, all four 8-bit multipliers are exact. For the approximate 16-bit multipliers, only the most significant 8-bit multiplier (M1) is exact. In approximate-16-1, the three less significant 8-bit multipliers (M2–M4) are all of the type “Approximate 8-bit multiplier 1.” In approximate-16-2, the least significant 8-bit multiplier (M4) is of the type “Approximate 8-bit multiplier 2,” and the two middle multipliers (M2 and M3) are of the type “Approximate 8-bit multiplier 1.” Approximate-16-3 and approximate-16-4 are similarly constructed from different combinations of the proposed approximate 8-bit multipliers, as specified in Table 6. Table 7 presents the performance and accuracy parameters of these multipliers and compares them with several state-of-the-art 16-bit approximate multipliers. Again, the proposed approximate multipliers are observed to outperform all the compared state-of-the-art approximate multipliers.
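The recursive construction can be expressed in a few lines. Splitting A = A_H·2^8 + A_L and B = B_H·2^8 + B_L gives A·B = A_H·B_H·2^16 + (A_H·B_L + A_L·B_H)·2^8 + A_L·B_L. In the Python sketch below, M1 computes A_H·B_H, M2 and M3 compute the two cross terms, and M4 computes A_L·B_L, matching the roles described above. Two choices are assumptions: the exact mapping of M2 and M3 to the cross terms, and the use of a hypothetical column-truncated 8-bit model as a stand-in for an approximate unit.

```python
from typing import Callable

Mul8 = Callable[[int, int], int]


def exact8(a: int, b: int) -> int:
    return a * b


def truncated8(a: int, b: int, k: int = 4) -> int:
    """Hypothetical stand-in approximate 8x8 unit: drop partial products with i + j < k."""
    err = sum(1 << (i + j)
              for i in range(k) for j in range(k - i)
              if (a >> i) & 1 and (b >> j) & 1)
    return a * b - err


def mul16(a: int, b: int, m1: Mul8, m2: Mul8, m3: Mul8, m4: Mul8) -> int:
    """16x16 product assembled from four 8x8 multipliers, M1 (most significant) to M4."""
    ah, al = a >> 8, a & 0xFF
    bh, bl = b >> 8, b & 0xFF
    return (m1(ah, bh) << 16) + ((m2(ah, bl) + m3(al, bh)) << 8) + m4(al, bl)


a, b = 0xBEEF, 0x1234
print(mul16(a, b, exact8, exact8, exact8, exact8) == a * b)
# Arrangement in the spirit of approximate-16-1: exact M1, approximate M2-M4.
print(a * b - mul16(a, b, exact8, truncated8, truncated8, truncated8))
```

The shifts show why keeping M1 exact matters most: an error in M2 or M3 is scaled by 2^8, whereas an error in M1 would be scaled by 2^16.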

Image processing applications

The practical applicability of our approximate multipliers is validated using three image processing applications: image smoothing, image sharpening, and edge detection. In all the applications, the test images are imported as text files into the Vivado synthesis environment, and filtering is performed by a two-dimensional convolution unit designed in VHDL. The filtered pixel values are written to a text file and then exported to MATLAB for display and PSNR calculation. For smoothing, the 256 × 256 “cameraman” image is contaminated with Gaussian noise of variance 0.01, and a 3 × 3 averaging filter is then applied using the different approximate multipliers. The results are shown in Fig. 15. Image sharpening is performed on the 256 × 256 MR image of a “Meningioma” brain tumour by subtracting the smoothed image from the amplitude-intensified original image. The results are shown in Fig. 16. For edge detection, a 256 × 256 MR image of a “Glioma” brain tumour is used. The image is first Gaussian-filtered, and horizontal and vertical filters are then applied to detect edges. The results are shown in Fig. 17.

The images processed with the proposed approximate multipliers achieve high PSNR values. This may be attributed to the low MED/NMED values of the proposed multipliers. However, the quality of the processed images also correlates strongly with a multiplier's maximum error distance (EDmax) and its frequency of occurrence. Image smoothing yields the best PSNR with the AXM-8_1 multiplier, which has the lowest MED/NMED, whereas image sharpening and edge detection yield the best PSNR with the proposed multipliers. This is because proposed multipliers 1 and 2 have EDmax values of 37 and 62, occurring 256 and 64 times, respectively, whereas AXM-8_1 has an EDmax of 64 that occurs 768 times. A pixel is therefore more likely to be in error with AXM-8_1. During processing, more pixel values of the test image deviate from their true values, resulting in a lower PSNR for the processed image. It is therefore imperative to consider MED, NMED, EDmax, and the frequency of occurrence of EDmax when choosing an approximate multiplier for a given application.
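The smoothing flow can be prototyped in software before moving to VHDL. The Python sketch below applies a 3 × 3 kernel whose coefficients are stored as unsigned 8-bit fixed-point integers. Every pixel-coefficient product is routed through a pluggable 8 × 8 multiplier function, and PSNR is computed as 10·log10(255²/MSE). Several details are assumptions for illustration:

- coefficients in units of 1/256;
- edge pixels copied unchanged;
- the multiplier interface;
- the stand-in approximate multiplier.

The paper's convolution unit and its MATLAB-based PSNR evaluation may differ. Because the kernels are unsigned, only the smoothing case is covered; sharpening and edge-detection kernels would need signed handling.

```python
import math
from typing import Callable

Image = list[list[int]]
Mul8 = Callable[[int, int], int]


def exact_mul(a: int, b: int) -> int:
    return a * b


def convolve3x3(img: Image, kernel: Image, mul: Mul8, shift: int = 8) -> Image:
    """Filter an 8-bit image with a non-negative 3x3 fixed-point kernel.

    Coefficients are unsigned 8-bit integers in units of 2**-shift; every
    pixel*coefficient product goes through `mul`, so an approximate 8x8
    multiplier can be swapped in. Border pixels are copied unchanged.
    """
    h, w = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            acc = 0
            for dy in range(3):
                for dx in range(3):
                    acc += mul(img[y + dy - 1][x + dx - 1], kernel[dy][dx])
            out[y][x] = min(255, acc >> shift)
    return out


def psnr(ref: Image, test: Image, peak: int = 255) -> float:
    """Peak signal-to-noise ratio in dB between two equally sized images."""
    sq = [(r - t) ** 2 for rr, tr in zip(ref, test) for r, t in zip(rr, tr)]
    mse = sum(sq) / len(sq)
    return math.inf if mse == 0 else 10 * math.log10(peak ** 2 / mse)


# Hypothetical averaging kernel: 28/256 approximates 1/9.
AVG = [[28] * 3 for _ in range(3)]

img = [[(17 * x + 31 * y) % 256 for x in range(16)] for y in range(16)]
ref = convolve3x3(img, AVG, exact_mul)
# Hypothetical stand-in approximate multiplier: clear the 4 LSBs of each product.
approx = convolve3x3(img, AVG, lambda a, b: (a * b) & ~0xF)
print(f"PSNR(exact vs approximate filtering) = {psnr(ref, approx):.2f} dB")
```

Comparing against the exactly filtered image, rather than the noisy input, isolates the degradation caused by the multiplier itself.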

Conclusion

In this paper, we presented the design of accurate and approximate 8-bit multipliers based on the Dadda algorithm, with a focus on making optimal use of FPGA primitives such as LUT6_2 and Carry4. Our analysis shows that the proposed accurate multiplier provides a strong baseline, with better timing, resource utilization, and power characteristics, while controlled approximation unlocks substantial hardware savings for error-tolerant tasks. Whereas most existing approaches rely on the built-in optimization strategies of the synthesis tool, our methodology emphasizes Boolean restructuring to achieve optimized resource utilization, timing performance, and power efficiency in FPGA-based designs. The detailed analysis carried out in this work shows that the proposed designs strike a better accuracy-performance trade-off than the current state of the art. The improvement observed at the stand-alone level also carries over to the application level. A major limitation of the proposed designs is the large number of LUT types resulting from the Boolean restructuring of the Dadda multiplier trees: as many as 13 different LUT types were needed to design the approximate multipliers. While this resulted in optimal resource utilization, the design effort required to encode these LUTs manually is considerable. Our future work will therefore focus on developing restructuring methodologies that require fewer LUT types, possibly at the cost of some accuracy. This will reduce the effort of LUT encoding. It will also ease the direct design of higher-order multipliers without relying on the recursive use of lower-order multipliers, which always incurs some area overhead.

Data availability

No data were used in the study presented in this article.

References

Liang, J., Han, J. & Lombardi, F. New metrics for the reliability of approximate and probabilistic adders. IEEE Trans. Comput. 62 (9), 1760–1771 (2014).

Jiang, H., Liu, C., Liu, L., Lombardi, F. & Han, J. A review, classification, and comparative evaluation of approximate arithmetic circuits. ACM J. Emerg. Technol. Comput. Syst. 13 (4), 1–34 (2017).

Reddy, K. M., Vasantha, M. H., Kumar, Y. B. & Dwivedi, D. Design and analysis of multiplier using approximate 4–2 compressor. AEU - Int. J. Electron. Commun. 107, 89–97 (2019).

Pathak, K. C., Sarvaiya, J. N., Darji, A. D. & Diwan, S. An efficient Dadda multiplier using approximate adder. In 2020 IEEE Region 10 Conference (TENCON), Osaka (2020).

Niemann, C., Rethfeldt, M. & Timmermann, D. Approximate multipliers for optimal utilization of FPGA resources. In 24th International Symposium on Design and Diagnostics of Electronic Circuits & Systems (DDECS), Vienna, Austria (2021).

Abdelgawad, A. Low-power multiply accumulate unit (MAC) for future wireless sensor networks. In IEEE Sensors Applications Symposium Proceedings, Galveston, TX, USA (2013).

Nakahara, H. & Sasao, T. A deep convolutional neural network based on nested residue number system. In 25th International Conference on Field Programmable Logic and Applications (FPL), London, UK (2015).

Tung, C. W. & Huang, S. H. A high-performance multiply-accumulate unit by integrating additions and accumulations into partial product reduction process. IEEE Access. 8, 87367–87377 (2020).

Edavoor, P. J., Raveendran, S. & Rahulkar, A. D. Approximate multiplier design using novel dual-stage 4:2 compressors. IEEE Access. 8, 48337–48351 (2020).

Radhakrishnan, D. & Preethy, A. P. Low power CMOS pass-logic 4–2 compressor for high-speed multiplication. In Proceedings of the 43rd IEEE Midwest Symposium on Circuits and Systems, Lansing, MI, USA (2000).

Wang, Z., Jullien, G. A. & Miller, W. C. A new design technique for column compression multipliers. IEEE Trans. Comput. 44 (8), 962–970 (1995).

Lin, C. H. & Lin, I. C. High accuracy approximate multiplier with error correction. In 31st International Conference on Computer Design (ICCD), Asheville, NC (2013).

Ha, M. & Lee, S. Multipliers with approximate 4–2 compressors and error recovery modules. IEEE Embed. Syst. Lett. 10 (1), 6–9 (2018).

Rashidi, B. Efficient and low-cost approximate multipliers for image processing. Integr. VLSI J. 94, 102084 (2024).

Anguraj, P. & Krishnan, T. Design and realization of area-efficient approximate multiplier structures for image processing applications. Microprocess. Microsyst. 102, 104925 (2023).

Yang, T., Ukezono, T. & Sato, T. Low-power and high-speed approximate multiplier design with a tree compressor. In Proceedings of the 35th International Conference on Computer Design (ICCD), Boston, MA, USA (2017).

Akbari, O., Kamal, M., Afzali-Kusha, A. & Pedram, M. Dual-quality 4:2 compressors for utilizing in dynamic accuracy configurable multipliers. IEEE Trans. Very Large Scale Integr. (VLSI) Syst. 25 (4), 1352–1361 (2017).

Anusha, G. & Deepa, P. Design of approximate adders and multipliers for error tolerant image processing. Microprocess. Microsyst. 72, 7 (2020).

Gupta, V., Mohapatra, D., Raghunathan, A. & Roy, K. Low-power digital signal processing using approximate adders. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 32 (1), 124–137 (2013).

Mirzaei, M. & Mohammadi, S. Low-power and variation-aware approximate arithmetic units for image processing applications. AEU - Int. J. Electron. Commun. 138, 153825 (2021).

Ahmadi, F., Semati, R. M., Daryanavard, H. & Minaeifar, A. Energy-efficient approximate full adders for error-tolerant applications. Comput. Electr. Eng. 110, 108877 (2023).

Ullah, S., Rehman, S., Shafique, M. & Kumar, A. High-performance accurate and approximate multipliers for FPGA-based hardware accelerators. IEEE Trans. Comput. Aided Des. Integr. Circuits Syst. 41 (2), 211–224 (2022).

Guo, Y., Zhou, Q., Chen, X. & Sun, H. Hardware-efficient multipliers with FPGA-based approximation for error-resilient applications. IEEE Trans. Circuits Syst. I Regul. Pap. 71 (12), 5919–5930 (2024).

Yang, Z., Li, X. & Yang, J. Approximate compressor-based multiplier design methodology for error-resilient digital signal processing. J. Circuits Syst. Comput. 29 (14), 1–24 (2020).

Masadeh, M., Hasan, O. & Taher, S. Comparative study of approximate multipliers. In Proceedings of the ACM Great Lakes Symposium on VLSI, Chicago, IL, USA (2018).

Masadeh, M., Hasan, O. & Taher, S. Input-conscious approximate multiply-accumulate (MAC) unit for energy-efficiency. IEEE Access 7, 147129–147142 (2019).

Chanda, S. et al. An energy efficient 32-bit approximate Dadda multiplier. In IEEE Calcutta Conference (CALCON), Calcutta (2020).

Momeni, A., Han, J., Montuschi, P. & Lombardi, F. Design and analysis of approximate compressors for multiplication. IEEE Trans. Comput. 64 (4), 11 (2015).

Naresh, K., Sai, Y. P. & Majumdar, S. Design of 8-bit Dadda multiplier using gate level approximate 4:2 compressor. In 35th International Conference on VLSI Design and 2022 21st International Conference on Embedded Systems (VLSID) (2022).

Bandil, L. & Nagar, B. C. Hardware implementation of unsigned approximate hybrid square rooters for error-resilient applications. IEEE Trans. Comput. (2024).

Bandil, L. & Nagar, B. C. SIEAA: significant input extraction-based error optimized approximate adder for error resilient application. Integration 101, 102317 (2025).

Bandil, L. & Nagar, B. C. Modified restoring array-based power efficient approximate square root circuit and its application. Integration 94, 102106 (2024).

Ullah, S., Sahoo, S. S., Ahmed, N. & Chaudhary, D. AppAxO: designing application-specific approximate operators for FPGA-based embedded systems. ACM Trans. Embedded Comput. Syst. 21 (3), 1–31 (2022).

Madasu, V. & Kumres, L. Enhancing efficiency and effectiveness: An innovative design for multipliers on FPGA. In Proceedings of the IEEE International Students’ Conference on Electrical, Electronics and Computer Science, Bhopal, India (2024).

Thamizharasan, V. & Kasthuri, N. High-speed hybrid multiplier design using a hybrid adder with FPGA implementation. IETE J. Res. 69 (5), 2301–2309 (2021).

Thamizharasan, V. & Kasthuri, N. FPGA implementation of proficient vedic multiplier architecture using hybrid carry select adder. Int. J. Electron. 111 (8), 1253–1265 (2023).

Thamizharasan, V. & Kasthuri, N. Design of efficient binary multiplier architecture using hybrid compressor with FPGA implementation. Sci. Rep. 14, 8492 (2024).

Deepsita, S., Karthikeyan, T. & Mahammad, N. Energy efficient multiply-accumulate unit using novel recursive multiplication for error-tolerant applications. Integration 92, 24–34 (2023).

Toan, N. V. & Lee, J. G. FPGA-based multi-level approximate multipliers for high-performance error-resilient applications. IEEE Access. 8, 25481–25497 (2020).

Sayadi, L., Timarchi, S. & Akbari, A. S. Two efficient approximate unsigned multipliers by developing new configuration for approximate 4:2 compressors. IEEE Trans. Circuits Syst. I Regul. Pap. 70 (4), 1649–1659 (2023).

Khurshid, B. FPGA-based resource‐optimal approximate multiplier for error‐resilient applications. Int. J. Circuit Theory Appl. (2024).

Satti, P., Agrawal, P. & Garg, B. LORAx: A high-speed energy-efficient lower-order rounding-based approximate multiplier. Natl. Acad. Sci. Lett. 44 (6), 533–539 (2021).

Garg, B., Patel, S. K. & Dutt, S. LoBA: A leading one bit based imprecise multiplier for efficient image processing. J. Electron. Test. 36, 429–437 (2020).

Garg, B. & Patel, S. Reconfigurable rounding based approximate multiplier for energy efficient multimedia applications. Wireless Pers. Commun. 118, 919–931 (2021).

Acknowledgements

The authors extend their appreciation to the Deanship of Research and Graduate Studies at King Khalid University for funding this work through a small Research project under grant number RGP1/189/46.

Author information

Contributions

Aqib Amin Rather prepared the initial draft of the manuscript, performed the formal analysis, implemented the proposed designs, carried out the post-placement and routing analysis to derive the performance parameters, and was responsible for the error analysis presented in the work. Burhan Khurshid conceptualized the idea of designing approximate multipliers based on the Dadda algorithm, prepared the figures, and revised the entire manuscript to prepare it for the journal. Shoeib Amin Banday and Ali Ayed Algarni were responsible for acquiring the funding. Shoeib Amin Banday also revised the manuscript and designed the applications used to evaluate the proposed multiplier designs.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Rather, A.A., Khurshid, B., Banday, S.A. et al. Design of high-performance, accurate, and approximate Dadda-tree multipliers for image processing applications. Sci Rep 15, 41338 (2025). https://doi.org/10.1038/s41598-025-25239-2
