Abstract

Optical Character Recognition (OCR) is a part of transformative Artificial Intelligence (AI) technology which translates printed or handwritten texts into digital, machine-readable form. These OCR systems act as an assistive tool for visually impaired people helping them with real-time text recognition and to interact with their surroundings. This paper presents “OCRNet”, a robust deep learning approach to detect and recognize alphanumeric characters in dynamic environments. Firstly, an optimized neural network with 43 layers is designed to capture the spatial features of the 62 alphanumeric characters. A Gated Recurrent Unit (GRU) is then added to capture the temporal dependencies of these characters in order to enhance feature learning. This hybrid model outperforms state-of-the-art Convolutional Neural Networks (CNNs) like EfficientNetB7, MobileNetV2, ResNet50, DenseNet121 and others by achieving a notable accuracy of 95%, precision of 94%, recall of 95% and F1-score of 96%. In order to promote portability and affordability, this model is implemented and tested on a Raspberry Pi platform and has an inference time of 120ms. Tailored for visually impaired users, the proposed system provides real-time text recognition and audio feedback thus enabling seamless interaction with textual content in everyday scenarios like street signs, documents, and digital displays.

Similar content being viewed by others

Introduction

The ability to read and interpret text is an integral part of everyday life, from navigating street signs to understanding product labels. However, for visually impaired individuals, accessing textual information remains a significant challenge, often affecting their ability to live independently. Optical Character Recognition (OCR) has emerged as a transformative assistive technology, that helps to overcome this barrier by converting visual text into machine-readable formats1. When combining this OCR technology with real-time audio feedback, it helps the visually impaired users to interact with the textual content in their surroundings. This improves their quality of life and they can become more independent. Despite significant advancements in OCR, achieving reliable performance in real-world dynamic environments remains a challenge. Traditional OCR systems were primarily designed for less dynamic environments in a controlled manner such as scanned documents. These systems often struggle with complex real-world scenarios involving diverse fonts, uneven lighting, noisy backgrounds, and varying text orientations2,3. Additionally, deploying these systems on portable low-cost devices introduces computational constraints, necessitating lightweight yet accurate models4,5.

Recently advancements in deep learning have greatly enhanced the OCR capabilities, primarily through the use of Convolutional Neural Networks (CNN) and Recurrent Neural Networks (RNN)1,6. Architecture techniques such as Convolutional Recurrent Neural Network (CRNN) and Efficient and Accurate Scene Text Detectors (EAST) have greatly improved the scene text detection and recognition of text in robust environment allowing for more reliable text extraction. Additionally, the irregular and distorted text are managed properly by transformer-based models such as Vision Transformers (ViT) and Text transformers (TrOCR) and this further boosted the performance of OCR7,8,9,10. All these high-performance models require hefty computational demands. So, these are not suitable for deployment on edge devices such as Raspberry Pi.

To overcome these challenges, OCRNet, a hybrid deep learning-based OCR system is designed specifically to support the visually impaired individuals in real world environments. The primary objective is to build a lightweight, real-time and high accuracy OCR solution that can be deployed on edge devices with limited computational resources. The proposed architecture integrates a Convolutional Neural Network (CNN) for extracting spatial features and a Gated Recurrent Unit (GRU) to efficiently model the sequential nature of text. The model is trained using a comprehensive dataset of alphanumeric characters that includes a wide range of fonts and distortions, ensuring strong generalization across varied visual conditions. To further improve robustness against noise and poor image quality, classical image preprocessing methods—including binarization, dilation, and segmentation—are applied before feature extraction. The final system is implemented on a Raspberry Pi, demonstrating its potential as a cost-effective and portable assistive device capable of converting visual text into audio output in real time, thus supporting greater independence for individuals with visual impairments. The key contributions of this work include

-

Hybrid CNN-GRU model: A deep learning-based OCR system that integrates CNN for feature extraction and GRU for efficient sequence modeling, achieving high recognition accuracy in dynamic environments.

-

Real-time assistive system: Tailored for visually impaired users, the system provides real-time text recognition with immediate audio feedback to support everyday navigation and reading tasks.

-

Edge device deployment: Implementation on Raspberry Pi, demonstrating the feasibility of a lightweight, portable, and cost-effective OCR solution for real-world assistive applications.

The organization of the paper is as follows: Section “State-of-the-art” presents the state-of-the-art methods, Section “Methodology” explains the proposed methodology, Section “Results and discussions” discusses the results and Section “Conclusion” concludes the paper.

State-of-the-art

In recent years, optical character recognition (OCR) has progressed rapidly through the integration of deep learning methods. These modern approaches have replaced earlier systems that relied on manually crafted features, offering greater adaptability and improved accuracy through end-to-end learning frameworks. Early OCR systems, such as Tesseract, relied on handcrafted features and Hidden Markov Models (HMMs) but struggled with recognizing distorted, noisy, or complex text. With the advancement of deep learning, the emergence of CNN and RNN allowed models to learn features directly from raw images without manually extracting features. A significant breakthrough was the addition of Bidirectional Long Short-Term Memory (Bi-LSTM) networks with CNNs, enabling sequence modeling by capturing both forward and backward contextual information, leading to notable improvements in recognition accuracy1. Combining the CNNs with Bi-LSTMs formed a hybrid architecture and this was able recognize the text even though it is irregular or distorted especially in natural scene images. These hybrid architectures demonstrated a superior text recognition performance. Under more challenging conditions, several studies mentioned that CNN-BiLSTM-CTC (Connectionist Temporal Classification) architectures can be used to improve the text detection accuracy. These architectures play more beneficial role in scenarios involving curved or perspective-transformed text, where traditional OCR techniques fail11,12. Beyond sequence modeling, pre-trained deep learning architectures such as EfficientNet, MobileNetV2, and DenseNet121 have gained popularity in OCR due to their efficient feature extraction capabilities with lower computational overhead13,14,15. EfficientNet architecture demonstrated superior accuracy while maintaining computational efficiency in OCR applications by optimizing the scaling of convolution layers. MobileNetV2 is designed for light weight processing and has been successfully deployed in OCR systems for resource-constrained environments. To capture the fine-grained details that are crucial for text recognition tasks, DenseNet121 plays an important role with its densely connected layers.

More recently, transformer-based models have revolutionized OCR by overcoming the limitations of recurrent architectures. Initially developed for Natural Language Processing (NLP), transformers have been adapted for OCR due to their ability to recognize and retain long-range dependencies and contextual relations within images. Architectures such as the Vision Transformer (ViT) and Text transformers (TrOCR) have demonstrated remarkable performance by eliminating the need for recurrent layers. These transformers use self-attention mechanisms to process sequential information across an entire image in parallel, unlike CNN-RNN models. This feature makes them effective for recognizing complex, irregular, or noisy text7. The accuracy of OCR is improved by integrating transformers into the text recognition pipeline in TrOCR8.

Traditional computer vision preprocessing techniques plays an important role in OCR systems to tackle the challenges such as distortions, noise, and poor image quality. Binarization remains a common preprocessing step, converting grayscale images into binary formats to enhance text visibility before neural network processing16. Dilation and morphological operations are often employed to refine character structures, making text easier to segment and recognize. Additionally, attention mechanisms have been integrated into OCR models to focus on important text regions dynamically. Residual attention networks, for instance, have shown improvements in handling cluttered or overlapping text in complex images17. Various CNN-based frameworks have been reported in the literature for optical character recognition. Table 1 highlights some of the most notable studies and their corresponding methodologies and performance outcomes.

In spite of all these advancements, various challenges still persist in OCR systems. The most challenging are recognizing distorted text, handling multilingual datasets, and optimizing models for embedded systems. The promising approach to tackle these challenges are by integrating deep learning with traditional computer vision techniques. Modern OCR systems utilize CNNs for effective feature extraction, RNNs for sequential text processing, and attention mechanisms for dynamic focus on adaptive text region. Moreover, emerging techniques such as lightweight architectures, model quantization, and Edge AI optimizations are being explored to enable real-time performance on resource-constrained embedded devices, ensuring efficient and scalable OCR solutions18,19.

Recent advancements in unsupervised ensemble learning, especially particularly through the use of generative models like autoencoders, offer the ability to learn from unlabeled or noisy data which is a promising aspect to improve OCR robustness in unconstrained environments29. Similarly, Federated Learning frameworks30 have been getting popular for training models in a collaborative manner across distributed devices without sharing raw data. This paradigm is particularly important for OCR systems used in privacy-critical applications like personal assistive devices. Federated learning can improve the OCR generalization and protect user’s data privacy by performing distributed training and continual model attuning on edge devices. Together, these developments unlock new opportunities for scalable, privacy-sensitive, and practical OCR systems that are more suitable for various and quick-changing real-world applications.

Methodology

The development of a robust OCR system for real-world dynamic environments necessitates an intricate combination of advanced machine learning techniques, careful data preparation, and efficient deployment strategies. The flow diagram of OCR system is shown in Figure 1. The proposed system has three pipelines:

-

Data preprocessing pipeline- Enhance the character edges and ensure consistent lighting using binarization, noise reduction and normalization.

-

Optical character recognition pipeline- Design of an optimized neural network for accurate prediction of alphanumerical characters.

-

Real time deployment pipeline-Deploys the OCRNet on Raspberry Pi 4 to recognize the text and converts it to audio to assist the visually impaired.

Data preprocessing pipeline

This pipeline (shown in Figure 2) initially converts the captures images into binary format via Otsu’s thresholding31. Let I be the captured image with pixel intensity I(x,y). Otsu’s method computes a global threshold T which effectively separates the foreground pixels from the background pixels. The binarized image \({I}_{b}\)after applying T is given in Eq. (1).

where threshold \(T\) is computed using the between class variance \({\sigma }_{b}^{2}\left(\tau \right)\) for a potential threshold \(\tau .\) It is given in Eq. (2) as,

After obtaining the binarized image, morphological operations such as dilation followed by erosion is employed to decrease the noise and enhance the edges of the characters. Let \({I}_{d}\left(x,y\right)\) and \({I}_{e}\left(x,y\right)\) be the dilated image and eroded image, respectively. Both operations use a small structuring element \(s\) with pixel intensities \((i,j)\). The dilation operation, defined in Eq. (3) closes small gaps while the erosion operation, described in Eq. (4) removes small noise and isolate distinct edges.

Lastly, the pixel intensities are scaled to the range [0,1] to reduce sensitivity to changes in lighting conditions, as outlined in Eq. (5).

Optical character recognition pipeline

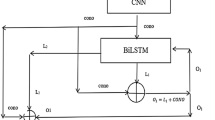

This section introduces OCRNet, a hybrid CNN-GRU architecture (shown in Figure 3) for alphanumeric characters recognition. The CNN component processes normalized grayscale input I of size 32×32 through a feature extraction module, consisting of six convolution blocks. Each block progressively learns spatial features from the input image. The first four blocks include convolution operations, batch normalization, Rectified Linear Unit (ReLU) activation, pooling, and dropout while the 5th and 6th block use separable convolutions to save the computational resources.

CNN feature extraction module

In each convolution layer, the feature map at layer \(l\) having pixel intensity \((x,y)\) after the convolution operation with the kernel size \(W\times W\) is computed as shown in Eq. (6),

where the weights, \({w}_{l}\) and biases, \({b}_{l}\) at layer \(l\) and the count of input and output channels are denoted by \({c}_{in}\) and \({c}_{out}\) respectively. After the convolution operation, the ReLU activation function shown in Eq. (7) introduces non-linearity to the feature maps which is described as,

Next, batch normalization is applied to stabilize the learning process by normalizing the feature maps. To further reduce spatial dimensions and computational complexity, max pooling is employed, retaining the most significant features in a given pooling region. For a pooling region \(R(x{\prime},y{\prime}),\) the pooled value \(P(x{\prime},y{\prime})\) is calculated in Eq. (8) as,

This hierarchical feature extraction process efficiently learns the spatial patterns in the input text images. While Blocks 1 to 4 are responsible for capturing low- and mid-level spatial features, Blocks 5 and 6 are designed to capture more abstract, high-level patterns. Experimental results indicated that model performance plateaued at around 92–93% accuracy after the fourth block. To enhance both accuracy and computational efficiency, Blocks 5 and 6 were implemented using depth wise separable convolutions. A separable convolution decomposes a standard convolution into two operations: depth wise convolution and pointwise convolution (Eq. (9) and Eq. (10)). This decomposition reduces the number of parameters and computations significantly, enabling faster inference suitable for edge deployment.

Depthwise convolution: Applies a single \({D}_{k}\times {D}_{k}\) filter per input channel:

Pointwise convolution: Applies a \(1\times 1\) filter to combine the outputs of all channels:

This reduces the computational cost from \({D}_{k}^{2}\cdot {c}_{in}\cdot {c}_{out}\) (standard conv) to \({D}_{k}^{2}\cdot {c}_{in}+{c}_{in}\cdot {c}_{out}\) (separable conv) making the architecture efficient without sacrificing learning capability. These final features are passed on to the sequential module for temporal modeling.

Sequential processing module

To further reduce the spatial dimensions while preserving the critical features of an image, global average pooling (GAP) operation is performed. For a feature map \(F(x,y,c)\) with size \(M\times N\) and \(C\) channels, the GAP operation creates a compact feature vector of size \(1\times 1\times C\) for each channel by computing the average of all the pixels in the spatial map. This is given by,

The resulting feature vector from Eq. (11) is now passed to GRU to capture the sequential patterns and to model the temporal dependencies. GRU utilizes two gates namely, reset gate and the update gate. The reset gate in Eq. (12) regulates how much previous information should be discarded, while the update gate in Eq. (13) determines the extent of past information to retain. Mathematically, the above process can be described as,

Reset gate (\({r}_{t}):\)

Update gate (\({U}_{t}):\)

Candidate hidden state \({(h}_{t}{\prime}):\)

Output hidden state (\({h}_{t}):\)

where \(\sigma\) is the sigmoid activation function, \({W}_{r}, {W}_{u},{W}_{h}, {U}_{r}, {U}_{u},{U}_{h} \text{and }{b}_{r}, {b}_{u},{b}_{h}\) are the weight matrices and biases respectively. Eq. (14) combines the current input \({x}_{t}\) and previous hidden state \({h}_{t-1}\) while Eq. (15) computes the output hidden state by the combination of old and new hidden states.

Classification module

The output hidden states of the GRU were passed through a fully connected layer, which mapped the hidden states to character logits. The logits were then converted into a probability distribution over possible characters using the softmax activation function, as given in Eq. (14).

where \(P\left({C}_{q}|I\right)\) is the probability for the character \({C}_{q}\) given the input \(I\), \({W}_{q}\) and \({b}_{q}\) are the weights and bias for the qth neuron and \(Q\) is the possible number of characters. The character with highest probability is selected as the model’s prediction for each segment of the input image.

To improve generalization and prevent overfitting, kernel regularization and dropout were applied. Kernel regularization introduced a penalty to the loss function which is determined by the magnitude of the weights as expressed in Eq. (16).

where \(\lambda\) denotes the regularization parameter, \(H\), the total number of weights, and \({w}_{i}\) represents the individual weights. Dropout randomly deactivated a few of the neurons during training phase in order to prevent co-adaptation. For a dropout rate \({p}_{r}\), the probability of retaining a neuron \({1-p}_{r}.\)

Real-time deployment pipeline

Figure 4 illustrates the schematic hardware setup of the OCRNet using Raspberry Pi 4, USB camera and speaker. The Raspberry Pi 4 equipped with a quad-core Cortex-A72 processor and 4 GB RAM, was selected due to its balance of computational power and affordability.

The USB camera acts as a visual input device, capturing real-world scenes with alphanumeric text. These images are processed onboard and fed into the quantized OCRNet model. For edge deployment, post-training quantization was done to convert weights and activations from 32-bit floating point to 8-bit integer format. Hence, this has greatly reduced its memory consumption and computational load, allowing quick inferences. Next, the optimized model was converted into the TFLite format for smooth execution on the Raspberry Pi. A Python-based custom script was developed to work with the camera module, preprocessing the input during binarization, resizing, and normalization, afterward placing it into the OCRNet model for inference. The predicted output was then converted into text, further converted to speech via the pyttsx3 text-to-speech binding, and played through the attached speaker. This real-time audio feedback helps interpret the text for the visually impaired. The hardware components were powered through the Raspberry Pi’s GPIO and USB interfaces, ensuring portability and compact deployment. This setup demonstrates a low-cost, fully embedded, and real-time OCR assistive system suitable for practical use in dynamic environments.

Results and discussions

Dataset description

The effectiveness of an OCR system relies on the diversity and quality of the dataset. For this work, a comprehensive dataset of alphanumeric characters was taken (https://www.kaggle.com/datasets/harieh/ocr-dataset), encompassing 3,475 fonts styles.

The dataset captured the complexities of real-world conditions, including varying illumination to ensure robustness to different lighting conditions, synthetic noise and blurring to simulate degraded images, random rotations and skews to account for dynamic environments, and background variations to include complex and noisy backgrounds for improved generalization. The dataset consists of 62 characters, including 10 numeric characters, 26 uppercase letters, and 26 lowercase letters, with 3,475 images per character as shown in Table 2. This resulted in a total of 2,15,450 images. This massive set is then divided into three subsets: 80% allocated for training, 10% for validation, and 10% for testing, which is presented in Table 3.

Performance assessment

In order to evaluate the efficiency of the OCRNet, various metrics such as precision, recall, F1-score and accuracy were measured. The supporting equations of which are included in Eq. (18) - Eq. (21).

where \(\alpha\) represents the true positives, \(\beta\) represents the false positives, \(\gamma\) denotes false negatives and \(\varphi\) represents the true negative samples. Another measure of a classification model’s capacity to distinguish between positive and negative classes is the Receiver Operating Characteristic - Area Under Curve (ROC-AUC) and the Precision-Recall Area Under Curve (PR-AUC). The ROC curve shows the relationship between the True Positive Rate (TPR) and the False Positive Rate (FPR) at different threshold levels. A curve closer to the top-left corner of the plot represents more favorable model performance, as it shows a greater TPR and lower FPR. The Precision-Recall (PR) curve, however, emphasizes precision-recall trade-off and thus is especially useful for imbalanced datasets.

Training environment

All simulations were performed on Google Colaboratory using an NVIDIA Tesla T4 GPU (16GB GDDR6 VRAM, 2,560 CUDA cores, and 320 Tensor cores), with Python as the programming language.

Simulation results

The development of OCRNet, a hybrid CNN-GRU model involved an iterative process, with four main configurations evaluated:

-

1.

CNN: A baseline model using only convolutional and pooling layers.

-

2.

CNN with batch normalization (CNN+BN): This configuration added batch normalization to stabilize and accelerate training.

-

3.

CNN with batch normalization and kernel regularization (CNN+BN+KR): Kernel regularization was introduced to improve generalization.

-

4.

OCRNet-CNN with batch normalization, kernel regularization, and GRU (CNN+BN+KR+GRU): The GRU layer was added to capture sequential dependencies.

Figure 5 (a) to Fig. 5 (d) illustrate the accuracy and loss plot comparisons for the above-trained models. The addition of batch normalization and kernel regularization reduced the validation loss, while further adding the GRU layer demonstrated better generalization compared to the other configurations. The proposed hybrid model significantly enhanced both training and validation accuracy, displaying its ability to model sequential dependencies effectively. The ROC curves and PR curves of the above-trained models are shown in Figure 6. From Figure 6, it is clear that the proposed OCRNet (CNN+BN+KR+GRU), achieves an AUC of 0.96 for both numeric and lowercase characters and AUC of 0.97 for uppercase characters. Similarly, the PR curve demonstrates good performance with AUC of 0.96 for all the character classes, indicating the strong robustness of the model to classify and differentiate between positive and negative classes efficiently. The objective metric comparison of the proposed model with other trained models are discussed in Table 4. The hybrid OCRNet demonstrates superior classification performance, surpassing other models in accuracy, precision, recall, and F1-score.

To substantiate the custom structure of OCRNet, the dataset in this work is evaluated against the best-performing architectures and the results are reported in Table 5. OCRNet achieves the highest accuracy of 0.95 and F1-score of 0.96 surpassing the state-of-the-art CNN models32. However, VGG19 seems to follow OCRNet closely with the accuracy of 0.94 and an F1-score of 0.93. Table 6 provides a detailed comparison of model complexity measures, including the number of parameters, the model size, inference time, and training time per epoch for various models. Among them, SqueezeNet is the lightest one with the lowest parameter counts, size, and inference time. On the other hand, models like EfficientNetB7 and VGG19 are way heavier compared to the other models, requiring more computational effort in terms of parameter count and inference time. In contrast to all the other models, OCRNet achieves a balance between complexity and performance. With 15 million parameters, model size of 50 MB, and inference time of 120ms, OCRNet achieves better performance in terms of accuracy, precision, recall, and F1-score and is moderately lightweight compared to other models like VGG19 and ResNet50. This demonstrates that OCRNet offers a favorable trade-off between model complexity and classification performance, making it suitable for real-time applications while maintaining robust results.

To further evaluate the generalization ability of OCRNet, K-fold cross-validation process33 is performed on capital letters, small letters, and digits. In this technique, the data set is divided into K subsets (folds), while the model is trained on K-1 subsets and tested on the remaining subset. This operation is repeated K times, and the final performance score is computed by averaging the result of all iterations. Tables 7 and Tables 8 present the 5-fold and 10-fold validation results on alphanumeric characters, with average values highlighted in blue. In Table 7, the highest objective values for uppercase letters, lowercase letters, and numbers were observed in the third iteration. While, in Table 8, the highest objective values were achieved in the first iteration itself. These results indicate that the proposed model demonstrates strong statistical performance in terms of K-fold cross-validation. The proposed model is compared with other OCR systems in the literature and the results are tabulated in Table 9. Models like CRNN with Bi-LSTM, CNN + LSTM, and Attention-based CNN perform well on ICDAR datasets, while Deep Text Spotter and Mask R-CNN incorporate object detection techniques and achieve an F1-score of 0.87 and 0.85 respectively. EfficientNet and MobileNetV2 balances accuracy and speed for multilingual text. DenseNet121 and Residual Attention Network achieve high F1-scores, with the latter reaching 0.92. Although a custom CNN model27 reports a higher accuracy (98%), it is limited to only uppercase characters and trained on relatively simpler datasets (MNIST and EMNIST). In contrast, OCRNet is designed for multi-class alphanumeric recognition, including uppercase, lowercase, and numeric characters, and is evaluated under more complex and varied conditions. OCRNet achieves 0.94 precision, 0.95 recall, and 0.96 F1-score, while maintaining real-time performance (8.6 FPS) on a Raspberry Pi platform, proving its superior recognition capability.

The proposed model also required fewer parameters, reducing memory usage and enabling deployment on resource-constrained devices such as mobile and edge computing platforms. The qualitative performance of OCRNet across various font styles are illustrated in Figure 7. The model was evaluated on noisy, distorted, and low-resolution text images, showcasing its robustness in handling challenging scenarios. The results confirm the proposed model’s adaptability to diverse fonts, reinforcing its effectiveness for real-world OCR applications. Additionally, Figure 8 (a) presents the hardware setup of the OCR system on a Raspberry Pi device connected with Zebronics Zeb-crisp pro digital web camera with 5P lens and Foxin FMS-475 plus 2.0 multimedia speaker, while Figure 8 (b) displays the detection results, demonstrating its suitability for deployment on resource-constrained platforms. The system efficiently detects and processes text in real-time, further emphasizing the practicality and effectiveness of the proposed solution.

To further evaluate the custom design of OCRNet, the model was tested on several benchmark datasets such as MNIST, ICDAR 2013, ICDAR 2015, and MLT 2017. As listed in Table 10, OCRNet achieved a character accuracy of 96% on MNIST dataset, which is expected due to the dataset’s clean and well-aligned grayscale images, each containing a single digit with minimal background interference. The model benefits from strong digit supervision during training, with each numeral rendered in 3,475 different fonts and subjected to extensive augmentation. On the more challenging ICDAR 2013 and 2015 datasets—comprising real-world scene text with varying orientations and background complexity—OCRNet maintained competitive accuracies of 95% and 92%, respectively, supported by strong recall and precision. Finally, when evaluated on the MLT 2017 dataset, which includes multilingual and multi-orientation scene text, OCRNet achieved 89% accuracy, reflecting its robustness under diverse and unconstrained visual conditions. The model’s ability to sustain high performance across datasets is attributed to its robust preprocessing, language-agnostic feature extraction, and GRU-based sequential context modeling, confirming its adaptability to both structured and natural scene text environments.

To enhance transparency, word-level error heatmaps based on normalized edit distance between OCRNet predictions and ground truth are generated and displayed in Figure 9. Specifically, Levenshtein distance \(d\left(x,y\right)\) is computed between the predicted string \(y\) and the ground truth string \(x\), and normalized it by the word length \(\left|x\right|\). This provides an error score as defined in Eq. (22).

A score of 0 indicates a perfect match while values closer to 1 represent completely incorrect prediction. Figure 9 illustrates an example heatmap, where shorter frequent words such as “on” and “and” were recognized with minimal error (0.0–0.1), whereas longer words such as “Systems” exhibited higher error values (\(\sim\) 0.29). This analysis provides an interpretable diagnostic tool to complement the aggregate performance metrics, thereby improving the transparency of OCRNet’s evaluation.

Ablation study

A detailed layer-wise analysis is conducted to evaluate the contribution of each convolutional block to OCRNet’s performance and the results are listed in Table 11. The first four convolutional blocks primarily extract low-to mid-level spatial features such as edges and basic shapes.

However, experimental observations revealed that the model’s performance showed minimal improvement across these blocks alone, with validation accuracy saturating around 92–93% after the fourth block. The inclusion of the fifth and sixth convolutional blocks significantly enhanced the network’s representational capacity, capturing more complex textual features and patterns. Initially implemented with standard convolutions, these two blocks increased the accuracy to 94%. Upon replacing them with depthwise separable convolutions, we observed a further gain in accuracy (up to 95%) while also reducing the model’s parameter count and inference latency. This validates the architectural decision to use separable convolutions in later layers, enabling both performance enhancement and computational efficiency, particularly suited for edge deployment on Raspberry Pi platforms. Initially implemented with standard convolutions, these two blocks increased the accuracy to 94%. Upon replacing them with depthwise separable convolutions, we observed a further gain in accuracy (up to 95%) while also reducing the model’s parameter count and inference latency. This validates the architectural decision to use separable convolutions in later layers, enabling both performance enhancement and computational efficiency, particularly suited for edge deployment on Raspberry Pi platforms.

Conclusion

This study presented OCRNet, a hybrid CNN-GRU deep learning model designed for robust and accurate OCR in dynamic environments. By integrating a 43-layer CNN for spatial feature extraction with a GRU module for sequential learning, the model achieved 95% accuracy and an F1-score of 96%, surpassing state-of-the-art CNN-based approaches. The system’s successful deployment on a Raspberry Pi with a real time inference time of 120 ms demonstrates its suitability for low power edge devices, making it an efficient OCR solution for assisting visually impaired users. Although OCRNet shows strong performance in recognizing printed alphanumeric text, its current scope does not extend to handwritten or multilingual content.

Future work will focus on enhancing OCRNet to handle diverse scripts, including handwriting and multiple languages, as well as scene text from visually complex backgrounds. In addition, the implementation of model compression strategies such as quantization-aware training and pruning will be explored to lower computational overhead and memory usage. These improvements aim to make the system more adaptable for deployment on a broader range of low-resource embedded devices.

Data availability

The dataset analyzed in this study is available in https://www.kaggle.com/datasets/harieh/ocr-dataset.

Abbreviations

- AI:

-

Artificial intelligence

- CNN:

-

Convolutional neural network

- GRU:

-

Gated recurrent unit

- RNN:

-

Recurrent neural network

- OCR:

-

Optical character recognition

- OCRNet:

-

Optical character recognition network

- TTS:

-

Text-to-speech

- ReLU:

-

Rectified linear unit

- BN:

-

Batch normalization

- KR:

-

Kernel regularization

- GAP:

-

Global average pooling

- AUC:

-

Area under curve

- ROC:

-

Receiver operating characteristic

- PR:

-

Precision-recall

- TPR:

-

True positive rate

- FPR:

-

False positive rate

- CTC:

-

Connectionist temporal classification

- ViT:

-

Vision transformer

- TrOCR:

-

Transformer-based optical character recognition

- HMM:

-

Hidden markov model

- ICDAR:

-

International conference on document analysis and recognition

- FPS:

-

Frames per second

- GPU:

-

Graphics processing unit

References

Shi, B., Bai, X. & Yao, C. An end-to-end trainable neural network for image-based sequence recognition and its application to scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 39(11), 2298–2304 (2017).

Wang, K., Babenko, B. & Belongie, S. End-to-end scene text recognition. IEEE Trans. Pattern Anal. Mach. Intell. 42(6), 1351–1363 (2020).

Long, S. et al. Scene text detection and recognition: The deep learning era. Int. J. Comput. Vis. 129(1), 161–187 (2021).

Huang, W., Gao, J. & Wang, Y. Lightweight scene text recognition with attention mechanism. Pattern Recogn. Lett. 153, 22–29 (2022).

Busta, M., Neumann, L. & Matas, J. Deep TextSpotter: An end-to-end trainable scene text localization and recognition framework. IEEE Trans. Pattern Anal. Mach. Intell. 42(10), 2327–2340 (2020).

X, et al. EAST: An efficient and accurate scene text detector. IEEE Trans. Image Process. 27(4), 1675–1689 (2019).

Khan, S. et al. Transformers in vision: A survey. ACM Comput. Surv. 54(10), Article 200 (2022).

Baek, J. et al. Character region awareness for text detection. IEEE Trans. Pattern Anal. Mach. Intell. 42(5), 1038–1052 (2019).

Lyu, P., Zhang, X., Wang, W., Wu, Y. & Bai, X. Mask text spotter: An end-to-end trainable neural network for text spotting with masked text detection. IEEE Trans. Image Process. 30, 5693–5705 (2021).

Smith, M. J. & Raskin, A. R. Assistive technologies for the visually impaired: Real-world applications and future trends. J. Assist. Technol. 14(2), 123–138 (2020).

Baek, J. et al. What is wrong with scene text recognition model comparisons? Dataset and model analysis. Int. J. Comput. Vis. 127(5), 662–684 (2019).

Wang, T., Zhu, Y., Jin, L., Luo, C. & Wang, C. Decoupled attention network for text recognition. IEEE Trans. Image Process. 28(5), 2560–2571 (2019).

Tan, M. & Le, Q. V. Efficient Net: Rethinking model scaling for convolutional neural networks. IEEE Trans. Process Anal. Mach. Intell. 42(2), 307–321 (2019).

Sandler, M., Howard, A., Zhu, M., Zhmoginov, A. & Chen, L. C. MobileNetV2: Inverted residuals and linear bottlenecks. IEEE Trans. Pattern Anal. Mach. Intell. 41(10), 2261–2268 (2018).

Huang, G., Liu, Z., Van Der Maaten, L. & Weinberger, K. Q. Densely connected convolutional networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(12), 2261–2269 (2017).

Kasar, T., Kumar, J., & Ramakrishnan, A. G. Font and background color independent text binarization. In Proc. IEEE International Conference on Image Processing (ICIP) (2012).

Wang, F. et al. Residual attention network for image classification. IEEE Trans. Pattern Anal. Mach. Intell. 39(3), 652–661 (2017).

Liu, A. Q., Lin, Y. C. & Zhao, T. F. OCR-based assistive devices for visually impaired individuals: A review. Assist. Technol. J. 13(4), 241–252 (2021).

Uchida, S. Text recognition in the wild: A survey. Pattern Recogn. J. 48(4), 1443–1466 (2015).

Gao, Hao, Ergu, Daji, Cai, Ying, Liu, Fangyao & Ma, Bo. A robust cross-ethnic digital handwriting recognition method based on deep learning. Proced. Comput. Sci. 199, 749–756 (2022).

Khan, Mohammad Meraj, Uddin, Mohammad Shorif, Parvez, Mohammad Zavid & Nahar, Lutfur. A squeeze and excitation ResNeXt-based deep learning model for Bangla handwritten compound character recognition. J. King Saud Univ. –Comput. Inform. Sci. 34(6), 3356–3364 (2022).

Harsha, S. S., Madhu Kumar, B. P. N., Raju Battula, R. S. S., John Augustine, P., Sudha, S. & Divya. T. Text Recognition from Images using a Deep Learning Model, In 2022 IEEE Sixth International Conference on I-SMAC (IoT in Social, Mobile, Analytics and Cloud) (I-SMAC) 926-931 (2022).

Sharma, N. et al. Deep learning and SVM-based approach for Indian licence plate character recognition. Comput. Mater. Contin. 74(1), 881–895 (2023).

Podder, K. K. et al. Self-ChakmaNet: A deep learning framework for indigenous language learning using handwritten characters. Egypt Inform. J. 24(4), 100413 (2023).

Rizky, A. F., Novanto, Y. & Santoso, E. Text recognition on images using pre-trained CNN, arXiv preprint arXiv:2302.05105 (2023).

Varshini, C., Yogeshwaran, S., & Mekala, V. Tamil and English handwritten character segmentation and recognition using deep learning. In IEEE 2024 International Conference on Communication, Computing and Internet of Things (IC3IoT) 1-5 (2024).

Karpagalakshmi, R. C. et al. Deep learning-based recognition of handwritten english characters. Proc. Comput. Sci. 258, 1783–1792 (2025).

Singh, G., Kalpna, G., Shagun, S. Robust optical character recognition using DenseNet121: Leveraging google fonts for dataset diversity. In 2025 International Conference on Multi-Agent Systems for Collaborative Intelligence (ICMSCI) 882 886 (2025).

Xie, Z., Wu, J., Tang, W. & Liu, Y. Advancing image segmentation with DBO-Otsu: Addressing rubber tree diseases through enhanced threshold techniques. PLOS ONE 19(3), e0297284 (2024).

Rajasekar, E., Chandra, H., Pears, N., Vairavasundaram, S. & Kotecha, K. Lung image quality assessment and diagnosis using generative autoencoders in unsupervised ensemble learning. Biomed. signal proc. control 102, 107268 (2025).

Kumarappan, J., Rajasekar, E., Vairavasundaram, S., Kotecha, K. & Kulkarni, A. Federated learning enhanced MLP–LSTM modeling in an integrated deep learning pipeline for stock market prediction. Int. J. Comput. Intell. Syst. 17(1), 267 (2024).

Hossain, M. A., Sakib, S., Abdullah, H. M. & Arman, S. E. Deep learning for mango leaf disease identification: A vision transformer perspective. Heliyon 10(17), e36361 (2024).

Mahesh, T. R. et al. The stratified K-folds cross-validation and class-balancing methods with high-performance ensemble classifiers for breast cancer classification. Healthc. Anal. https://doi.org/10.1016/j.health.2023.100247 (2023).

Acknowledgments

The authors extend their appreciation to Princess Nourah Bint Abdulrahman University, Riyadh, Saudi Arabia for funding the publication of this research through the Researchers Supporting Project number (PNURSP2025R435), Princess Nourah Bint Abdulrahman University, Riyadh, Saudi Arabia.

Funding

This work was supported in part by Princess Nourah bint Abdulrahman University, through the Researchers Supporting Program under grantPNURSP2025R435.

Author information

Authors and Affiliations

Contributions

Aishwarya N: Writing – original draft, Methodology, Investigation, Conceptualization. Almazyad: Writing – review & editing, Visualization, Project Administration. Sakthi Abirami: Writing-review & Editing, Formal Analysis, Validation. Bharath Ram: Software, Data curation, Conceptualization. Devisowjanya: Writing – original draft, Data Curation, Project Administration. Mohammed Zakariah: Supervision, Resources, Funding acquisition.Deema Mohammed:review & editing, Project Administration, Funding acquisition.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Nagasubramanian, A., Almazyad, A.S., Balakrishnan, S.A. et al. OCRNet a robust deep learning framework for alphanumeric character recognition to assist the visually impaired. Sci Rep 15, 41344 (2025). https://doi.org/10.1038/s41598-025-25278-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-25278-9