Abstract

The rising prevalence of retinal diseases is a significant concern, as certain untreated conditions can lead to severe vision impairment or even blindness. Deep learning algorithms have emerged as a powerful tool for the diagnosis and analysis of medical images. The automated detection of retinal diseases not only aids ophthalmologists in making accurate clinical decisions but also enhances efficiency by saving time. This study proposes a deep learning-based approach for the automated classification of multiple retinal diseases using fundus images. For this research, a balanced dataset was compiled by integrating data from various sources. Artificial Neural Networks (ANN) and transfer learning techniques were utilized to differentiate between healthy eyes and those affected by diabetic retinopathy, cataracts, or glaucoma. Multiple feature extraction methods were employed in conjunction with ANN for the multi-classification of retinal diseases. The results demonstrate that the model combining Artificial Neural Networks (ANN) with MobileNetV2 and DenseNet121 architectures, along with Principal Component Analysis (PCA) for feature extraction and dimensionality reduction, as well as the Discrete Wavelet Transform (DWT) algorithm, achieves highly satisfactory performance, attaining a peak accuracy of 98.2%.


Introduction

The human retina plays a vital role in vision, and any structural or functional abnormalities within it can result in visual impairments or even blindness1. Vision impairment has profound consequences for individuals across all age groups, particularly those over the age of 50. In young children, severe vision problems at an early age have been linked to lower educational attainment, while in adults, they are associated with higher rates of depression, reduced productivity, and decreased workforce participation. The causes of vision impairment and eye diseases vary significantly across different regions. For example, in low- and middle-income countries, a larger proportion of vision impairment is attributed to untreated cataracts. In contrast, conditions such as age-related macular degeneration and glaucoma are more prevalent in high-income countries.

Globally, at least 2.2 billion people suffer from near- or distance vision impairment2. Of these cases, nearly half—approximately 1 billion—involve vision impairment that could have been prevented or remains untreated3. Among this group, the primary conditions causing distance vision impairment or blindness include cataracts (94 million), refractive error (88.4 million), age-related macular degeneration (8 million), glaucoma (7.7 million), and diabetic retinopathy (3.9 million). Presbyopia (826 million) is the leading cause of near-vision impairment. Geographically, the prevalence of distance vision impairment is estimated to be four times higher in low- and middle-income regions compared to high-income areas. For instance, over 80% of individuals in western, eastern, and central sub-Saharan Africa are estimated to have untreated near-vision impairment, whereas rates in high-income regions such as North America, Australasia, western Europe, and Asia-Pacific are reported to be below 10%. Population growth and aging are expected to further increase the number of individuals affected by visual impairment.

The detection of retinal diseases represents a significant public health challenge. Traditional diagnostic methods, which rely on subjective interpretations by human experts, are often time-consuming and prone to errors. Fundus photography4 is a medical imaging technique used to detect retinal diseases and assist healthcare professionals in monitoring and treating various ocular disorders. This method provides high-resolution images of the posterior eye, capturing detailed views of the retina and its vascular network. Additionally, it offers a non-invasive and cost-effective approach to managing retinal diseases and mitigating the risk of vision loss.

There are numerous types of retinal diseases5,6, as shown in Figure 1, including:

- Diabetic Retinopathy7: This condition occurs when the small blood vessels (capillaries) in the retina deteriorate, leading to fluid leakage into and behind the retina. This can cause retinal swelling, resulting in blurred or distorted vision. In some cases, abnormal new blood vessels may form and rupture, further impairing vision.

- Macular Hole8: A macular hole is a small defect in the macula, the central part of the retina. It can result from abnormal separation of the retina and vitreous or from an eye injury.

- Glaucoma9: Glaucoma is an eye condition characterized by damage to the optic nerve, which transmits visual information from the eye to the brain. This damage is often associated with elevated intraocular pressure, though it can also occur with normal pressure levels. Glaucoma is a leading cause of blindness, particularly in individuals over the age of 60.

- Cataract10: A cataract is an eye condition where the normally clear lens becomes cloudy, obstructing the passage of light. It is a progressive condition and the leading cause of blindness worldwide.

- Retinal detachment11: This condition occurs when fluid accumulates beneath the retina, often due to a retinal tear. The fluid causes the retina to separate from the underlying tissue layers, leading to vision loss if not treated promptly.

Figure 1. Difference between retinal diseases1.

Research objectives

The proposed approach aims to achieve the following research objectives:

- Develop a Deep Learning (DL) model for multi-class retinal image classification.

- Attain high accuracy in the automated identification of prevalent eye disorders such as Diabetic Retinopathy (DR) and Glaucoma.

- Evaluate the model’s effectiveness using large and diverse datasets to ensure its generalizability and reliability.

- Investigate the model’s role in early disease detection, emphasizing improvements in patient outcomes and reductions in vision loss.

Research contribution

The key contributions of the proposed methodology include:

- Early identification of retinal diseases, such as Diabetic Retinopathy (DR) and Glaucoma, is essential to prevent irreversible vision loss.

- Extensive evidence in biomedical research demonstrates that deep convolutional networks pre-trained on large datasets outperform deep models trained from the ground up.

- The experiments utilize the balanced, publicly available Retinal Diseases Fundus Images Generalized dataset, which combines various datasets, averaging 1,760 images for each class: Diabetic Retinopathy, Glaucoma, and Normal.

Related work

In recent years, machine learning has emerged as a transformative tool in the field of medical diagnostics, revolutionizing the detection and treatment of various diseases. The application of machine learning algorithms has enabled the rapid and accurate analysis of large volumes of retinal images, facilitating early detection of retinal diseases. This early intervention can prevent permanent vision loss and improve patient outcomes. Additionally, machine learning techniques have been employed to classify retinal diseases into distinct categories.

Bilal et al.12,13,14 focused on the classification of diabetic eye diseases (DED), particularly diabetic retinopathy (DR) and its various stages, to improve automated diagnosis and support precision medicine. Convolutional neural networks (CNNs) are utilized with transfer learning for multi-stage DR classification. Additionally, a novel combination of Genetic Grey Wolf Optimization (G-GWO) and Kernel Extreme Learning Machines (KELM) is introduced to enhance the classification performance. Furthermore, a hybrid model integrating an Enhanced Quantum-Inspired Binary Grey Wolf Optimizer (EQI-BGWO) with a Self-Attention Capsule Network (SACNet) is proposed to address the detection of vision-threatening DR.

Ankitha15 proposed a model for classifying fundus images into normal, cataract, glaucoma, and diabetic retinopathy categories. The preprocessing stage involved binarization, while feature extraction was performed using the Grey Level Co-occurrence Matrix (GLCM). Feature scaling was achieved through normalization and standardization. The study experimented with classifiers such as Random Forest (RF), Support Vector Machine (SVM), K-Nearest Neighbor (KNN), and Convolutional Neural Network (CNN) on a cataract dataset16. The CNN classifier achieved the highest accuracy of 68%.

Singh, Law Kumar, et al.17 introduced an innovative system for enhancing glaucoma care by integrating Customized Particle Swarm Optimization (CPSO) with four advanced machine learning classifiers. Key features were selected using univariate methods and feature importance analysis. The CPSO-K-Nearest Neighbor hybrid approach achieved a remarkable accuracy of 99%.

Mongia et al.18 developed a Convolutional Neural Network (CNN) model for detecting and classifying eye diseases from retinal images. The model comprised three convolutional layers for feature extraction and three max-pooling layers to focus on lighter pixels. A Rectified Linear Unit (ReLU) activation function was used to transfer weighted inputs to the output layer. The model classified images into "Normal," "Cataract," "Glaucoma," and "Retinal diseases" with an accuracy of 78%.

Thanki19 created a model to distinguish between glaucomatous and normal fundus images. SqueezeNet was utilized for feature extraction due to its efficiency and adaptability to hardware with limited memory. Classification was performed using multiple machine learning algorithms, including KNN, SVM, Decision Tree (DT), Naïve Bayes (NB), Logistic Regression (LR), and Random Forest (RF). The SVM classifier achieved the highest accuracy of 76.2% on the ORIGA dataset20.

Sait21 proposed a ShuffleNet V2 model fine-tuned with the Adam optimizer (AO) for classifying fundus images. Noise and artifacts were removed using autoencoders, and key features were generated using the Single-Shot Detection (SSD) approach. Feature selection was performed using the Whale Optimization Algorithm (WOA) with a Levy Flight and Wavelet search strategy. The model achieved accuracies of 99.1% and 99.4% on the ODIR and EDC datasets, respectively.

Almustafa et al.22 utilized the STARE dataset23 to classify 14 ophthalmological defects using models such as ResNet-50, EfficientNet, InceptionV2, a 3-layer CNN, and Visual Geometry Group (VGG). EfficientNet achieved the highest accuracy of 98.43%.

Choudhary et al.24 employed a large dataset of labeled Optical Coherence Tomography (OCT) and Chest X-ray images20 to classify urgent referrals into choroidal neovascularization, diabetic macular edema, drusen, and normal retinal OCT images. Their 19-layer CNN model achieved an accuracy of 99.17%.

Sengar et al.25 used multi-class images from the multi-label RFMiD26 dataset to classify conditions such as diabetic retinopathy, media haze, optic disc cupping, and normal images. They introduced a data augmentation technique and compared their EyeDeep-Net algorithm with models like VGG-16, VGG-19, AlexNet, Inception-v4, ResNet-50, and Vision Transformer, achieving validation and testing accuracies of 82.13% and 76.04%, respectively.

Pan et al.27 developed a model to classify macular degeneration, tessellated retinas, and normal retinas using fundus images collected from Chinese hospitals. They implemented Inception V3 and ResNet-50 models, achieving accuracies of 91.76% and 93.81%, respectively, after hyperparameter tuning.

Kumar & Singh28 gathered data from datasets such as Messidor-229, EyePACS30, ARIA, and STARE31 to classify images into 10 categories, including different stages of diabetic retinopathy. Their methodology involved preprocessing, a matched filter approach, and post-processing segmentation and classification, achieving an accuracy of 99.71%.

Singh, Law Kumar, et al.32 focused on glaucoma detection using the Gravitational Search Optimization Algorithm (GSOA) for feature selection. Six machine learning models were trained, achieving a best accuracy of 95.36%.

Singh, Law Kumar, et al.33 developed a computer-assisted diagnosis (CAD) system using the Emperor Penguin Optimization (EPO) and Bacterial Foraging Optimization (BFO) algorithms for feature selection. Their ML classification approach achieved an overall accuracy of 96.55%.

Singh, Law Kumar, et al.34 proposed a hybrid feature selection approach combining the Whale Optimization Algorithm (WOA) and Grey Wolf Optimization Algorithm (GWO). This method achieved an accuracy of 96.50% on the ORIGA dataset20.

Arslan et al.35 presented a deep learning model with five CNN architectures—DenseNet, EfficientNet, Xception, VGG, and ResNet—to detect cataracts, diabetic retinopathy, and glaucoma. The dataset included 2,748 retinal fundus images36, and the 10-fold cross-validation technique was used. EfficientNet achieved the highest classification accuracy of 94.88%.

Proposed methodology

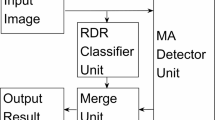

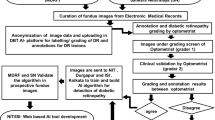

As illustrated in Figure 2, the proposed architecture is designed to classify retinal eye diseases into three categories: Diabetic Retinopathy, Glaucoma, and Normal, to assist doctors in diagnosis. The architecture employs three models: Artificial Neural Networks (ANNs), DenseNet121, and MobileNetV2. ANNs have significantly advanced medical imaging by improving the accuracy of disease detection and classification. DenseNet121, with its dense connectivity patterns, is particularly effective at capturing intricate features from retinal images, thereby enhancing diagnostic precision. Meanwhile, MobileNetV2’s lightweight architecture facilitates rapid inference and deployment, making it highly suitable for real-time applications in clinical settings. Together, these ANN-based models not only enhance the automated classification of retinal diseases but also contribute to the development of scalable, efficient, and accurate diagnostic solutions. This advancement holds the potential to improve patient care and outcomes in ophthalmology. The proposed model operates through four key stages: image acquisition, pre-processing, feature extraction, and classification.

Image acquisition phase

This research utilizes multiple datasets to evaluate the prevalence of diabetic retinopathy, glaucoma, and normal eye conditions. The data has been meticulously collected from diverse sources, providing a comprehensive overview of these ocular conditions. The retina dataset consists of color fundus images depicting normal eyes as well as retinal diseases such as diabetic retinopathy, cataracts, and glaucoma. Each disease class contains precisely 100 images, while the normal class includes 300 images.

The Ocular Disease Intelligent Recognition (ODIR) dataset37 is a structured ophthalmic database comprising data from 5,000 patients, including age, color fundus images of both left and right eyes, and diagnostic keywords provided by doctors. The Indian Diabetic Retinopathy Image Dataset (IDRiD)38 was created from real clinical examinations conducted at an eye clinic in India. It includes 516 images categorized into five Diabetic Retinopathy (DR) and three Diabetic Macular Edema (DME) classes, adhering to international clinical standards.

The Joint Shantou International Eye Centre (JSIEC) dataset16 contains 1,000 fundus images representing 39 classes, which are part of a larger collection of 209,494 images. The Messidor dataset29 focuses on the detection and classification of diabetic retinopathy, a common complication of diabetes. The EyePACS dataset30, widely used for diabetic retinopathy detection, includes high-resolution fundus images with annotations indicating the severity of the disease, categorized as 0 - No DR, 1 - Mild, 2 - Moderate, 3 - Severe, and 4 - Proliferative DR.

The ORIGA dataset20 comprises eye images, such as fundus photographs, with annotations indicating the presence or absence of glaucoma. The Glaucoma Dataset from EyePACS23 is a balanced subset of standardized fundus images from the Rotterdam EyePACS AIROGS set, optimized for machine learning applications. The REFUGE dataset39 focuses on retinal diseases, particularly glaucoma, and includes normal cases. The BinRushed dataset40 consists of images labeled for the presence or absence of glaucoma. The STARE (Structured Analysis of the Retina) dataset31 includes normal retinal images, playing a critical role in advancing retinal image analysis and improving disease detection accuracy.

The combined dataset, as summarized in Table 1, has been made publicly available on Kaggle41 for free access. It includes a total of 5,281 color fundus images across three classes: Diabetic Retinopathy, Glaucoma, and Normal. By integrating these datasets, the research enables robust analysis and comparison, facilitating advancements in the automated detection and classification of retinal diseases. This comprehensive approach supports the development of accurate diagnostic tools, ultimately contributing to improved patient care in ophthalmology.

Image preprocessing phase

Preprocessing is a critical step in the machine learning pipeline, playing a significant role in enhancing the effectiveness, efficiency, and accuracy of the training process, as well as the overall performance of the resulting model. In this research, the preprocessing stage involves several key steps to prepare the retinal fundus images for analysis.

First, the images undergo contrast-limited adaptive histogram equalization (CLAHE), a technique that adapts to the characteristics of multiple regions within the images43. This step enhances the contrast of the fundus images, ensuring that a broader range of details is captured, which is particularly important for accurate disease detection. Following this, the images are converted from the RGB color space to grayscale (GRAY), simplifying the data while retaining essential features.

Next, the pixel values of the images are rescaled by dividing by 255 to normalize the data, ensuring that all values fall within the range [0, 1]. This normalization step is crucial for improving the stability and convergence of the training process. Finally, the images are resized to a uniform dimension of 512 × 512 pixels, matching the size of the smallest image in the dataset. This standardization ensures consistency across the dataset and reduces computational complexity during model training, enabling more efficient processing.

By implementing these preprocessing steps, the dataset is optimized for machine learning, ensuring that the models can effectively learn from the data and achieve high accuracy in classifying retinal diseases.
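The grayscale, rescaling, and resizing steps described above can be sketched with NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' code: the luma weights and the nearest-neighbour interpolation are assumed choices that the text does not specify, the function names are hypothetical, and the CLAHE step is omitted because it normally relies on an image-processing library.

```python
import numpy as np

def to_grayscale(rgb):
    """Convert an H x W x 3 uint8 RGB image to grayscale.

    Uses ITU-R BT.601 luma weights (an assumption; the paper does not
    state which RGB-to-gray conversion was used).
    """
    weights = np.array([0.299, 0.587, 0.114])
    return rgb.astype(np.float64) @ weights

def rescale(gray):
    """Divide 8-bit intensities by 255 so that values lie in [0, 1]."""
    return np.clip(gray / 255.0, 0.0, 1.0)

def resize_nearest(img, size=(512, 512)):
    """Nearest-neighbour resize (assumed interpolation method)."""
    h, w = img.shape
    rows = np.arange(size[0]) * h // size[0]
    cols = np.arange(size[1]) * w // size[1]
    return img[rows[:, None], cols[None, :]]

def preprocess(rgb):
    # CLAHE would be applied before this point; it is left out of this sketch.
    return resize_nearest(rescale(to_grayscale(rgb)))

# Synthetic stand-in for a fundus photograph (random pixels, not real data).
rng = np.random.default_rng(0)
fake_fundus = rng.integers(0, 256, size=(600, 800, 3), dtype=np.uint8)
out = preprocess(fake_fundus)
```

The output is a single-channel 512 × 512 array with values in [0, 1], which is the form the feature extraction phase expects according to the description above.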

Feature extraction phase

Feature extraction44 is a crucial process in machine learning and data analysis aimed at transforming raw data into meaningful, high-level representations that can be effectively utilized by models. By identifying and extracting the most relevant information from the data, feature extraction enhances the efficiency, accuracy, and generalization capabilities of models while reducing computational complexity. In this research, pretrained models are employed during the feature extraction phase to leverage advanced deep learning techniques, thereby improving diagnostic accuracy, efficiency, and accessibility, ultimately leading to better patient outcomes.

Pre-trained models such as DenseNet121 and MobileNetV2 are utilized to learn optimal ways to fuse features through their processing layers. These models excel at capturing intricate patterns and representations from input data. Additionally, Principal Component Analysis (PCA) is applied to reduce dimensionality while preserving the variance in the data, effectively combining features for improved efficiency. Feature fusion, which involves integrating multiple diverse features into a single representation at the input level, is also implemented. This approach enhances the representation of the data, leading to more comprehensive and accurate diagnoses, particularly in medical imaging. By combining the strengths of all those steps, this research achieves robust and efficient representations of retinal fundus images. This approach not only improves the performance of classification models but also contributes to the development of scalable and accurate diagnostic solutions in ophthalmology.

DenseNet12145 is a convolutional neural network architecture renowned for its dense connectivity pattern, where each layer receives input from all preceding layers. As illustrated in Figure 3, DenseNet121’s structure enables it to efficiently encode the salient characteristics of input images using its deep convolutional layers. This architecture is particularly advantageous in transfer learning scenarios, where features learned by DenseNet121 on large datasets like ImageNet can be repurposed for specific classification tasks. By leveraging these pretrained features, the need for large-scale labeled datasets is reduced, and model convergence is accelerated, making it a powerful tool for medical image analysis.

MobileNetV246,47 is a lightweight convolutional neural network architecture optimized for mobile and embedded vision applications. As a feature extractor, MobileNetV2, with its structure shown in Figure 4, emphasizes efficiency without sacrificing performance, making it suitable for scenarios with constrained computational resources. Through transfer learning, MobileNetV2’s pre-trained weights, typically trained on large-scale datasets like ImageNet, can be utilized to extract robust features from input images. These features capture both low-level details and high-level semantic information, facilitating effective generalization to new tasks with minimal additional training data.

MobileNetV2 and DenseNet121 were selected due to their complementary strengths in performance and computational efficiency48. They are ideal for medical image analysis tasks like retinal disease classification. The combination of these two architectures not only boosts the model’s overall accuracy and generalization ability but also ensures efficient resource usage, making the solution practical and scalable.

Principal Component Analysis (PCA) is an unsupervised dimensionality reduction technique that retains the most informative components while filtering out low-variance features, thereby reducing computational overhead during artificial neural network (ANN) training49. Unlike supervised methods such as Linear Discriminant Analysis (LDA), PCA does not rely on labeled data, enhancing its versatility as a preprocessing step across diverse datasets. While PCA excels in capturing global variance for linearly separable data, t-Distributed Stochastic Neighbor Embedding (t-SNE) prioritizes local structure preservation, making it more suitable for visualization rather than feature reduction50.

Retaining 450 principal components preserved the most critical discriminative information required for effective classification. Iterative experimentation showed that increasing the number of components beyond this point yielded negligible performance gains, while fewer components led to a decline in classification accuracy.
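As an illustration of this reduction step, the following sketch projects a 2048-dimensional feature matrix onto its first 450 principal components using a singular value decomposition. The function names and the synthetic feature matrix are hypothetical; real inputs would be the deep features produced by the pre-trained networks.

```python
import numpy as np

def pca_fit(features, n_components):
    """Estimate the mean and the top principal directions of `features`."""
    mean = features.mean(axis=0)
    centered = features - mean
    # Rows of vt are principal directions, ordered by decreasing variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    explained_ratio = (s ** 2) / np.sum(s ** 2)
    return mean, vt[:n_components], explained_ratio[:n_components]

def pca_transform(features, mean, components):
    """Project features onto the retained principal directions."""
    return (features - mean) @ components.T

# Synthetic stand-in for deep features: 600 images x 2048 features.
rng = np.random.default_rng(0)
deep_features = rng.normal(size=(600, 2048))

mean, components, ratio = pca_fit(deep_features, n_components=450)
reduced = pca_transform(deep_features, mean, components)  # shape (600, 450)
```

In a train/test setting, `pca_fit` would typically be run on the training features only, with the resulting mean and components reused to transform the test features.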

The Discrete Wavelet Transform (DWT)51 is a feature extraction technique that decomposes an image into wavelet coefficients, capturing both spatial and frequency information. This multi-resolution analysis allows DWT to decompose images into four components: one approximation (LL) and three detail (LH, HL, and HH) sub-bands. The LL sub-band, produced by low-pass filtering, captures the approximation coefficients, while the horizontal (LH), vertical (HL), and diagonal (HH) detail coefficients are derived from their respective filters. Statistical measures such as the mean, standard deviation, and variance are then computed for each sub-band to preserve its essential characteristics.
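The decomposition and the per-sub-band statistics can be sketched as below. The Haar wavelet and the single decomposition level are assumptions made for illustration, since the text does not name the wavelet family or level, and the function names are hypothetical. Naming conventions for the LH and HL sub-bands also differ between libraries.

```python
import numpy as np

def haar_dwt2(img):
    """Single-level 2D Haar DWT of an image with even height and width."""
    s = np.sqrt(2.0)
    # Filter along the horizontal direction (pairs of adjacent columns).
    lo = (img[:, 0::2] + img[:, 1::2]) / s
    hi = (img[:, 0::2] - img[:, 1::2]) / s
    # Filter along the vertical direction (pairs of adjacent rows).
    ll = (lo[0::2] + lo[1::2]) / s   # approximation
    lh = (lo[0::2] - lo[1::2]) / s   # detail, low-pass then high-pass
    hl = (hi[0::2] + hi[1::2]) / s   # detail, high-pass then low-pass
    hh = (hi[0::2] - hi[1::2]) / s   # diagonal detail
    return ll, lh, hl, hh

def dwt_features(img):
    """Mean, standard deviation, and variance of each sub-band (12 values)."""
    feats = []
    for band in haar_dwt2(img):
        feats.extend([band.mean(), band.std(), band.var()])
    return np.array(feats)

# Synthetic grayscale image in [0, 1] standing in for a preprocessed fundus image.
rng = np.random.default_rng(0)
image = rng.random((512, 512))
features = dwt_features(image)   # 3 statistics x 4 sub-bands

flat = np.full((8, 8), 0.5)
flat_features = dwt_features(flat)
```

For a constant image, all detail sub-bands are zero and only the LL mean is non-zero, which is a quick sanity check on the filters.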

A hybrid approach combining DWT and PCA leverages their complementary strengths to enhance the model’s discriminative power, computational efficiency, and generalizability. DWT provides localized time-frequency representations that preserve pathological signatures in retinal images, while PCA optimizes the feature space by eliminating feature redundancy and retaining maximally informative dimensions. As a result, this approach generates 450 features from PCA and 12 features per image from DWT (3 features per sub-band × 4 sub-bands), which are then passed to the classification phase.

Classification phase

The classification phase52,53,54,55,56,57,58 is a critical step in the machine learning pipeline, where data is categorized into pre-defined classes or labels based on learned patterns and features. This phase transforms the insights derived from feature extraction into actionable predictions, enabling the identification and differentiation of various categories within the dataset. In this research, the feature extraction results are classified into three classes: Diabetic Retinopathy, Glaucoma, and Normal, using an Artificial Neural Network (ANN). The selection of an Artificial Neural Network (ANN) as the final classifier is informed by insights drawn from prior studies employing CNN, EfficientNet, and Vision Transformer-based architectures54. While these deep models achieve remarkable accuracies in retinal disease classification, they often entail substantial computational costs, large parameter counts, and limited interpretability, which may restrict their deployment in clinical environments. ANNs are well-suited for this task due to their ability to learn complex patterns and relationships from numerical features extracted from pre-processed data, as well as their flexibility in handling multi-class classification problems. The ANN used in this study consists of 3 hidden layers, each employing the Rectified Linear Unit (ReLU) activation function to introduce non-linearity and improve learning efficiency. The output layer comprises three neurons, corresponding to the three target classes (Diabetic Retinopathy, Glaucoma, and Normal). A softmax activation function is applied to the output layer to produce probability distributions across the classes, ensuring that the sum of the probabilities equals one. For this multi-class classification task, the Categorical Cross-Entropy (CCE) loss function is utilized. CCE is well-suited for multi-class problems as it measures the difference between the predicted probability distribution and the true distribution.
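A forward pass through a network of this shape, together with the softmax output and the categorical cross-entropy loss, can be sketched in NumPy as follows. The hidden-layer widths (256, 128, 64), the He-style initialization, and the input width of 912 are assumptions for illustration only; the text specifies three ReLU hidden layers and three output neurons but not the layer sizes.

```python
import numpy as np

def relu(x):
    return np.maximum(x, 0.0)

def softmax(z):
    # Subtract the row maximum for numerical stability.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)

def categorical_cross_entropy(probs, onehot, eps=1e-12):
    """Mean of -sum_c y_c * log(p_c) over the batch."""
    return float(-np.mean(np.sum(onehot * np.log(probs + eps), axis=1)))

def init_mlp(sizes, rng):
    """One (weights, bias) pair per layer; He-style scaling is an assumption."""
    return [(rng.normal(0.0, np.sqrt(2.0 / m), size=(m, n)), np.zeros(n))
            for m, n in zip(sizes[:-1], sizes[1:])]

def forward(x, params):
    for w, b in params[:-1]:
        x = relu(x @ w + b)          # three hidden layers with ReLU
    w, b = params[-1]
    return softmax(x @ w + b)        # three-class probability output

rng = np.random.default_rng(0)
layer_sizes = [912, 256, 128, 64, 3]     # hidden widths are hypothetical
params = init_mlp(layer_sizes, rng)

x = rng.normal(size=(4, 912))            # synthetic fused feature vectors
labels = np.array([0, 1, 2, 1])          # 0 = DR, 1 = Glaucoma, 2 = Normal
onehot = np.eye(3)[labels]

probs = forward(x, params)
loss = categorical_cross_entropy(probs, onehot)
```

Each row of `probs` sums to one, which is the property of the softmax output noted above; training would minimize `loss` with respect to the parameters.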

The proposed model’s hyperparameters were optimized using validation-based tuning across multiple trials. Specifically, the batch size (16, 32, 64) and learning rate (0.001, 0.0005, 0.0001) were empirically evaluated. The configuration of 20 epochs, batch size = 32, and learning rate = 0.001 yielded the most stable convergence and lowest validation loss. A Dropout rate of 0.5 and L2 regularization (λ=0.001) were used to mitigate overfitting and reduce potential bias. A fixed learning rate was used, as preliminary tests indicated stable convergence without requiring learning rate scheduling. This systematic validation-based optimization ensured robust hyperparameter selection and reliable model generalization. The model is compiled using the Adam optimizer, with a learning rate set to 0.001 to ensure efficient and stable training. Additionally, 10-fold cross-validation is employed during training to ensure robustness and reliability. After training, the best-saved weights are loaded and used to evaluate the model’s performance on the testing images. By leveraging the ANN’s ability to learn complex patterns and relationships, combined with a well-defined architecture and optimization strategy, the proposed model achieves accurate and reliable classification of retinal diseases. This approach not only enhances diagnostic precision but also contributes to the development of scalable and efficient solutions for retinal disease detection.
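The search space and the cross-validation scheme described here can be sketched as follows. This is a schematic outline rather than the authors' procedure: the helper name `kfold_indices` is hypothetical, and applying the 10 folds to the 4,224 training images (5,281 minus the 1,057 test samples reported in the results) is an assumption.

```python
import itertools
import numpy as np

# Candidate values listed in the text.
batch_sizes = [16, 32, 64]
learning_rates = [0.001, 0.0005, 0.0001]
grid = list(itertools.product(batch_sizes, learning_rates))  # 9 configurations

# Configuration reported as giving the most stable convergence.
selected = {"epochs": 20, "batch_size": 32, "learning_rate": 0.001,
            "dropout": 0.5, "l2_lambda": 0.001, "optimizer": "adam"}

def kfold_indices(n_samples, k=10, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), k)
    for i in range(k):
        val_idx = folds[i]
        train_idx = np.concatenate([folds[j] for j in range(k) if j != i])
        yield train_idx, val_idx

n_train = 5281 - 1057   # assumed size of the training portion
splits = list(kfold_indices(n_train, k=10))
```

Each candidate in `grid` would be scored by its mean validation loss across the folds, and the best-scoring configuration retained.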

Experimental results

This work reports the highest accuracy achieved by the ANN classifier with different feature extraction methods. The dataset is split into training and testing sets at a ratio of 8:2. The model achieved a classification speed of 166.7 frames per second (FPS), corresponding to an inference time of approximately 6 milliseconds per image. All experiments were conducted on a Kaggle-hosted NVIDIA Tesla P100 GPU with 16 GB HBM2 memory. Memory consumption remained within the GPU’s available VRAM, indicating that the model is well-suited for deployment in moderately resource-constrained environments. The performance of the proposed models is evaluated in the testing phase using precision (1), recall (2), F1-score (3), and accuracy (4), whose equations are shown in Table 2.
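The four measures can be computed from a confusion matrix as in the sketch below, which uses the standard definitions of precision, recall, F1-score, and accuracy. The 3 × 3 matrix is a toy example invented for illustration and is not taken from the paper's figures; rows are assumed to be true classes and columns predicted classes.

```python
import numpy as np

def per_class_metrics(cm):
    """Precision, recall, F1 per class and overall accuracy.

    Assumes cm[i, j] counts samples of true class i predicted as class j,
    and that every class has at least one true and one predicted sample.
    """
    cm = np.asarray(cm, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp      # predicted as the class, but wrong
    fn = cm.sum(axis=1) - tp      # belong to the class, but missed
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = tp.sum() / cm.sum()
    return precision, recall, f1, accuracy

# Toy confusion matrix (hypothetical values, 10 samples per class).
toy_cm = [[8, 1, 1],
          [0, 9, 1],
          [1, 0, 9]]
precision, recall, f1, accuracy = per_class_metrics(toy_cm)

# Throughput implied by the reported 6 ms per image.
fps = 1.0 / 0.006
```

The last line reproduces the relationship between the reported inference time and frame rate: 1 / 0.006 s is about 166.7 frames per second.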

Model A: Deep feature extraction by DenseNet121 with ANN classifier

This model uses a modified pre-trained DenseNet121 for feature extraction and an Artificial Neural Network (ANN) as the classifier. The 2048 features extracted from each image by DenseNet121 are reduced to 450 features by PCA and then passed to the ANN classifier. The accuracy (a) and loss (b) curves in Figure 5 show an early rise in accuracy and a rapid initial decrease in training loss to 0.07. The confusion matrix in Figure 6 indicates that 1030 out of 1057 samples were correctly classified, giving a final validation accuracy of 97.4%. Misclassifications primarily occurred between Glaucoma (class 1) and DR (class 0) due to overlapping retinal texture features.

Model B: Features extraction by MobileNetV2 and DWT with ANN classifier

This model uses a modified pre-trained MobileNetV2 together with DWT as feature extractors. The 2048 MobileNetV2 features were reduced to 450 by PCA. These were combined with the 12 DWT features, and the resulting 462 features were classified by the ANN classifier. The accuracy (a) and loss (b) curves in Figure 7 exhibit rapid convergence similar to Model A, with a slightly higher loss of 0.062 at early epochs. The confusion matrix in Figure 8 indicates that 1021 out of 1057 samples were correctly classified, giving a final validation accuracy of 96.6%. The analysis revealed increased misclassification in Glaucoma (class 1), potentially because frequency-domain features alone were insufficient to capture subtle structural differences.

Model C: Features extraction by MobileNetV2, DWT, and DenseNet121 with ANN classifier

This model uses modified pre-trained DenseNet121 and MobileNetV2 networks as feature extractors, each extracting 2048 features per image, which PCA then reduces to 450 features per network. DWT serves as a third feature extractor and contributes 12 features, so the fused vector passed to the classifier has 912 features. The accuracy (a) and loss (b) curves in Figure 9 demonstrate the most stable convergence, with a low final loss of 0.059, and the nearly diagonal confusion matrix indicates robust separability across all three classes. The confusion matrix in Figure 10 indicates that 1038 out of 1057 samples were correctly classified, giving a final validation accuracy of 98.2%. Only a few DR (class 0) samples were misclassified as Normal (class 2), possibly due to mild disease manifestations in those images.
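The feature fusion used in Models B and C amounts to concatenating the per-image feature blocks along the feature axis, as the following sketch shows. The arrays are random placeholders with the dimensions stated in the text, and the function name `fuse` is hypothetical.

```python
import numpy as np

def fuse(*blocks):
    """Concatenate per-image feature blocks along the feature axis."""
    return np.concatenate(blocks, axis=1)

n_images = 5
rng = np.random.default_rng(0)

# Placeholder feature blocks with the dimensions given in the text.
densenet_pca = rng.normal(size=(n_images, 450))    # DenseNet121 after PCA
mobilenet_pca = rng.normal(size=(n_images, 450))   # MobileNetV2 after PCA
dwt_stats = rng.normal(size=(n_images, 12))        # 3 statistics x 4 sub-bands

model_b_input = fuse(mobilenet_pca, dwt_stats)                 # 450 + 12 = 462
model_c_input = fuse(densenet_pca, mobilenet_pca, dwt_stats)   # 450 + 450 + 12 = 912
```

Because the blocks come from different extractors, their scales may differ; whether any per-block scaling was applied before fusion is not stated in the text.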

Model D: Deep feature extraction by MobileNetV2 with ANN classifier

This model uses a modified pre-trained MobileNetV2 for feature extraction and an Artificial Neural Network (ANN) as the classifier. The 2048 features extracted from each image by MobileNetV2 are reduced to 450 features by PCA and then used by the ANN to classify the images. The accuracy (a) and loss (b) curves in Figure 11 show consistent convergence, with the loss decreasing to 0.073. There is minor confusion between the Normal (class 2) and Glaucoma (class 1) classes, reflecting feature similarities in optic disc regions. The confusion matrix in Figure 12 indicates that 1037 out of 1057 samples were correctly classified, giving a final validation accuracy of 98.1%.
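The accuracies quoted for the four models follow directly from the correctly classified counts out of 1057 test samples, as this short check shows. Only the counts reported above are used; the variable names are illustrative.

```python
# Correctly classified test samples reported for each model.
correct = {"A": 1030, "B": 1021, "C": 1038, "D": 1037}
total = 1057

# Accuracy in percent, rounded to one decimal place as in the text.
accuracy_pct = {m: round(100 * c / total, 1) for m, c in correct.items()}

# Number of misclassified samples per model.
errors = {m: total - c for m, c in correct.items()}
```

The difference between the best model (C) and the next best (D) therefore corresponds to a single test image.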

Additional comparative experiments between the ANN-based classifier and CNN/ViT baselines, using identical dataset splits and preprocessing, are reported in Table 3. The ANN-based models maintain competitive accuracy of up to 98.2% while using fewer trainable parameters than the CNN and ViT baselines. This reduction in model complexity translates to much shorter training times: approximately 1–2 minutes versus 10–40 minutes. These findings demonstrate that the proposed ANN classifier effectively exploits deep feature representations and frequency-domain information, offering an efficient and computationally economical alternative to conventional CNN- and transformer-based classifiers.

Discussion

The dataset used in this work included 1761 images for Diabetic Retinopathy (DR), 1760 for Glaucoma, and 1760 for Normal classes. The near-equal number of images per class ensures a balanced distribution across all classes. The dataset was divided into training (80%) and testing (20%) subsets using a stratified sampling strategy that preserves class proportions. Consequently, each subset contained approximately 33.3% of images from each of the Diabetic Retinopathy, Glaucoma, and Normal classes. No augmentation, oversampling, or under-sampling techniques were applied, as the dataset was inherently balanced.

A detailed per-class evaluation of the proposed models, given in Table 4, confirms consistent and equitable performance across all classes. For Model A, precision ranged from 0.96 to 0.99, recall from 0.95 to 0.99, and F1-score from 0.96 to 0.99, with slightly more misclassifications in Glaucoma (class 1). Model B achieved a slightly lower recall of 0.93 for Normal (class 2), resulting in an overall accuracy of 96.6%. Model C attained the highest overall accuracy of 98.2%, with per-class precision, recall, and F1-scores between 0.96 and 1.00, indicating robust separability. Model D showed balanced per-class metrics with minimal misclassification and an overall accuracy of 98.1%. These results indicate that the equal class distribution effectively mitigated bias, maintaining high precision, recall, and F1-scores across all classes without additional augmentation or resampling. Consequently, the models achieve stable and reliable performance across all diagnostic categories.

As indicated in Table 4, Model C demonstrates superior performance, achieving an accuracy of 98.2%, reported with its 95% confidence interval, for the classification of Diabetic Retinopathy (class 0), Glaucoma (class 1), and Normal (class 2) retinal conditions. It significantly outperforms Model D, as confirmed by McNemar’s test (p < 0.05), indicating a statistically significant difference in paired prediction errors. Ten-fold cross-validation further supports the model’s robustness, yielding a mean accuracy of 98.2% ± 0.2% (standard deviation) and demonstrating consistent performance across splits. Additionally, the Area Under the Curve (AUC) values exceed 0.98 for all classes, highlighting the model’s strong discriminative capability, as illustrated in Figure 13.
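McNemar’s test compares two classifiers on the same test samples using only the discordant pairs, that is, the samples that exactly one of the two models classifies correctly. The sketch below implements the exact (binomial) two-sided form of the test. The paper does not report the discordant counts or which variant of the test was used, so the counts in the usage example are purely illustrative and are not the study’s data; the function name `mcnemar_exact` is also an assumption.

```python
from math import comb


def mcnemar_exact(b, c):
    """Two-sided exact McNemar p-value.

    b: samples the first model classified correctly and the second incorrectly
    c: samples the first model classified incorrectly and the second correctly
    Under the null hypothesis, each discordant sample is equally likely to fall
    in b or in c, so min(b, c) follows a Binomial(b + c, 0.5) distribution.
    """
    n = b + c
    if n == 0:
        return 1.0  # no discordant pairs: no evidence of a difference
    k = min(b, c)
    tail = sum(comb(n, i) for i in range(k + 1)) / 2 ** n
    return min(1.0, 2.0 * tail)


# Illustrative counts only (hypothetical, not taken from the paper).
p_value = mcnemar_exact(b=12, c=3)
print(f"p = {p_value:.4f}")
```

Samples that both models get right, or both get wrong, do not enter the statistic, which is why the test requires the paired per-sample predictions and cannot be computed from the two overall accuracies alone.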

Despite this high accuracy, several limitations are prevalent in existing studies within this domain. While some studies report reasonable classification accuracies, they often encounter challenges related to increased computational complexity due to the utilization of deep architectures with a large number of layers and parameters. This complexity can hinder the practical deployment of such models in real-world clinical settings.

The performance of the proposed model is benchmarked against other state-of-the-art models in Table 5. In prior experiments, images from the same dataset were used for both training and testing, which may lead to overfitting and inflated performance metrics. To rigorously assess the generalization capability of the proposed model, an independent and unseen dataset, ODIR59, was used for testing. The ODIR dataset comprises a structured ophthalmic database of 5,000 patients, including color fundus photographs of both eyes. It was collected from multiple hospitals and medical centers across China using various fundus cameras, resulting in heterogeneous image resolutions and illumination conditions. The training dataset remained unchanged, consisting of 5,281 fundus images, while the ODIR dataset was reserved exclusively for external validation. Despite the use of regularization techniques such as L2 regularization, dropout layers, and checkpoint saving to mitigate overfitting, Model C achieved an accuracy of only 74% on the ODIR dataset. Class-wise analysis revealed accuracy values of 78.6% for Normal, 71.2% for Glaucoma, and 72.4% for Diabetic Retinopathy. This indicates that the largest performance drop occurred in the Glaucoma class, likely due to inter-device variations and subtle optic disc texture differences. The performance decline is primarily attributed to domain shift arising from variations in imaging devices and illumination, as shown in Figure 14, which lead to distributional inconsistencies between the training and testing datasets. Standard image normalization and contrast-limited adaptive histogram equalization (CLAHE) were applied in the preprocessing phase. However, no domain adaptation or fine-tuning was performed, in order to preserve experimental isolation between datasets.
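As a quick arithmetic check on the external-validation figures, the snippet below averages the three reported class-wise accuracies and measures the gap to the internal validation accuracy. The unweighted (macro) average is an assumption made here for illustration; the reported overall 74% may instead be weighted by the number of ODIR samples per class, which this section does not give.

```python
# Class-wise accuracies on the external ODIR test set, as reported in the text (%).
class_accuracy = {"Normal": 78.6, "Glaucoma": 71.2, "Diabetic Retinopathy": 72.4}

macro_average = sum(class_accuracy.values()) / len(class_accuracy)
weakest_class = min(class_accuracy, key=class_accuracy.get)

internal_accuracy = 98.2  # Model C, internal validation (%)
external_accuracy = 74.0  # Model C, ODIR overall (%)

print(f"macro-average class accuracy: {macro_average:.1f}%")            # 74.1%
print(f"weakest class: {weakest_class}")                                # Glaucoma
print(f"internal vs external gap: {internal_accuracy - external_accuracy:.1f} points")  # 24.2 points
```

The unweighted mean of the class-wise values lands close to the reported overall accuracy, and the gap of roughly 24 percentage points gives a sense of the size of the domain shift described above.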

Conclusion & Future work

The integration of deep learning algorithms into medical diagnostics represents a significant advancement in the detection and classification of retinal diseases, such as diabetic retinopathy and glaucoma. Traditional diagnostic methods, which often rely on subjective interpretations by clinicians, are prone to variability and potential errors. These limitations are being addressed by the rapid, precise, and scalable analysis capabilities of deep learning technologies. This research demonstrates the potential of deep learning models to achieve high accuracy in diagnosing retinal conditions by leveraging a dataset of 5,281 color fundus images collected from different public sources. Contrast Limited Adaptive Histogram Equalization (CLAHE) was applied to enhance image contrast, and all images were resized to 512×512 pixels and normalized to the [0, 1] range. The balanced class distribution ensured a consistent representation without the need for oversampling or synthetic techniques such as SMOTE, which may introduce artifacts in medical imaging.

In contrast to CNN and ViT models, the ANN-based classifier adopted in this study provides a computationally lighter and more interpretable alternative capable of maintaining competitive performance. This balance between efficiency, accuracy, and practical applicability supports the rationale for employing an ANN as the final classification component in the proposed framework. Four distinct models were evaluated, each employing Artificial Neural Networks (ANNs) in conjunction with various feature extraction and dimensionality reduction techniques. Among these, Model C, which combines the MobileNetV2 and DenseNet121 architectures with Discrete Wavelet Transform (DWT) for feature extraction, emerged as the most effective, achieving a classification accuracy of 98.2%. The other evaluated models, which used different combinations of feature extraction methods, also demonstrated strong performance: DenseNet121 achieved an accuracy of 97.4%, MobileNetV2 with DWT achieved 96.6%, and MobileNetV2 alone achieved 98.1%.

For future work, it is planned to expand the application of ANNs to classify a broader range of retinal diseases, including age-related macular degeneration (AMD), retinal detachment, macular edema, retinopathy of prematurity (ROP), cardiovascular disease, hypertensive retinopathy, and cataracts, while ensuring balanced datasets for each category in multilabel classification tasks. Moreover, future efforts will focus on improving the model’s generalization performance by evaluating it on unseen datasets with similar retinal color distributions, and by exploring color normalization and domain adaptation techniques to enhance robustness and ensure more consistent generalization across diverse clinical settings. In addition, advanced deep learning techniques68,69,70,71 provide methodologies that could further enhance the preprocessing and feature extraction stages of the proposed framework. Super-resolution and denoising networks68,69 could be applied to fundus images to improve vascular and lesion visibility, potentially increasing the discriminative power of features extracted by DenseNet121 and MobileNetV2. Graph-based modeling approaches70 could complement the feature fusion strategy by explicitly capturing structural relationships between retinal regions, while generative deep learning methods71 could reconstruct subtle pathological patterns, which may help mitigate class imbalance. Integrating these enhancements into the preprocessing or ANN feature fusion pipeline may improve overall classification accuracy and robustness.

Data availability

The datasets generated and/or analyzed during the current study are available from the corresponding author on reasonable request.

References

“World report on vision.” www.who.int, www.who.int/publications/i/item/world-report-on-vision. (2019)

World Health Organization. “Blindness and vision impairment.” World Health Organization, www.who.int/news-room/fact-sheets/detail/blindness-and-visual-impairment. (2023)

Zarafshan, Shiraz. “Glaucoma, the ‘Silent Thief of Sight’: Diagnosis, Ways to Tackle Vision Loss.” Hindustan Times, www.hindustantimes.com/lifestyle/health/glaucoma-the-silentthief-of-sight-diagnosis-ways-to-tackle-vision-loss-101679214075314.html. (2023)

“Color fundus photography | Department of Ophthalmology.” Med.ubc.ca, ophthalmology.med.ubc.ca/patient-care/ophthalmic-photography/color-fundus-photography/. (2019)

“Seeing Disease Differently.” Spectacle Optometry. www.spectacleoptometry.com/blog/seeing-disease-differently.

“Retinal diseases - Symptoms and causes - Mayo Clinic.” Mayoclinic.org, https://www.mayoclinic.org/diseases-conditions/retinal-diseases/symptoms-causes/syc-20355825. (2024)

National Eye Institute. “Diabetic retinopathy | National Eye Institute.” Nih.gov www.nei.nih.gov/learn-about-eye-health/eye-conditions-and-diseases/diabetic-retinopathy. (2019)

National Eye Institute. “Age-Related Macular Degeneration | National Eye Institute.” Nih.gov www.nei.nih.gov/learn-about-eye-health/eye-conditions-and-diseases/age-related-macular-degeneration (2021)

“Glaucoma - Symptoms and causes.” Mayo Clinic, www.mayoclinic.org/diseases-conditions/glaucoma/symptoms-causes/syc-20372839.

Nizami, Adnan A., and Arun C. Gulani. “Cataract.” PubMed, StatPearls Publishing www.ncbi.nlm.nih.gov/books/NBK539699. (2024)

“Retinal Detachment | National Eye Institute.” Nih.gov, www.nei.nih.gov/learn-about-eye-health/eye-conditions-and-diseases/retinal-detachment. (2019)

Khan, A. Q., Sun, G., Khalid, M., Farrash, M. & Bilal, A. Multi-deep learning approach with transfer learning for 7-stages diabetic retinopathy classification. Int. J. Imaging Syst. Technol. 34(6), e23213. https://doi.org/10.1002/ima.23213 (2024).

Khan, A. Q. et al. A novel fusion of genetic grey wolf optimization and kernel extreme learning machines for precise diabetic eye disease classification. PLOS ONE 19(5), e0303094. https://doi.org/10.1371/journal.pone.0303094 (2024).

Bilal, A., Liu, X., Shafiq, M., Ahmed, Z. & Long, H. NIMEQ-SACNet: A novel self-attention precision medicine model for vision-threatening diabetic retinopathy using image data. Comput. Biol. Med. 171, 108099. https://doi.org/10.1016/j.compbiomed.2024.108099 (2024).

Ankitha, Gajaram. “An approach to classify ocular diseases using machine learning and deep learning.” MSc Research Project Data Analytics norma.ncirl.ie/6120/1/ankithamallikarjunagajaram.pdf.

“1000 Fundus images with 39 categories.” www.kaggle.com, www.kaggle.com/datasets/linchundan/fundusimage1000.

Singh, L. K. et al. A novel hybrid robust architecture for automatic screening of glaucoma using fundus photos, built on feature selection and machine learning-nature driven computing. Expert Syst. 39, 10. https://doi.org/10.1111/exsy.13069 (2022).

Mongia, Anvesha, et al. Eye disease detection and classification from retinal images using convolutional neural networks. Eye 10 10 106-118, https://www.academia.edu/88901925/Eye_Disease_Detection_and_Classification_from_Retinal_Images_using_Convolutional_Neural_Networks. (2022)

Thanki, Rohit. A deep neural network and machine learning approach for retinal fundus image classification. Healthcare Analytics 3 100140 https://doi.org/10.1016/j.health.2023.100140. (2023)

Srivastava, Gaurav. “Glaucoma OCT Scans (Origa) Dataset.” Kaggle.com www.kaggle.com/datasets/scipygaurav/glaucoma-oct-scans-origa-dataset (2022)

Sait, Wahab, and Abdul Rahaman. Artificial Intelligence-Driven Eye Disease Classification Model. Applied Sciences 13 20 11437, https://doi.org/10.3390/app132011437. (2023)

Khaled, M. A. et al. STARC: deep learning algorithms’ modelling for structured analysis of retina classification. Biomed. Signal Process. Control 80, 104357. https://doi.org/10.1016/j.bspc.2022.104357 (2023).

“Glaucoma Dataset: EyePACS-AIROGS-light-V2,” Kaggle.com. www.kaggle.com/datasets/deathtrooper/glaucoma-dataset-eyepacs-airogs-light-v2

Choudhary, Amit, et al. A deep learning-based framework for retinal disease classification. Healthcare 11 2 212 www.ncbi.nlm.nih.gov/pmc/articles/PMC9859538/, https://doi.org/10.3390/healthcare11020212. (2023)

Sengar, Neha, et al. EyeDeep-Net: a multi-class diagnosis of retinal diseases using deep neural network. Neural Computing and Applications https://doi.org/10.1007/s00521-023-08249-x. (2023)

Pachade, S. et al. Retinal fundus multi-disease image dataset (RFMiD): a dataset for multi-disease detection research. Data 6(2), 14. https://doi.org/10.3390/data6020014 (2021).

Pan, Y. et al. Fundus image classification using inception v3 and resnet-50 for the early diagnostics of fundus diseases. Front. Physiol. https://doi.org/10.3389/fphys.2023.1126780 (2023).

Kumar, K. S. & Singh, N. P. Retinal disease prediction through blood vessel segmentation and classification using ensemble-based deep learning approaches. Neural Comput. Appl. https://doi.org/10.1007/s00521-023-08402-6 (2023).

YongH. “Messidor.” Kaggle.com www.kaggle.com/datasets/hanhan2010/messidor?select=Messidor. (2020)

A. Neo, “Diabetic Retinopathy Arranged,” Kaggle.com www.kaggle.com/datasets/amanneo/diabetic-retinopathy-resized-arranged?select=0. (2021)

“STARE Dataset,” Kaggle.com. www.kaggle.com/datasets/vidheeshnacode/stare-dataset

Singh, Law Kumar, et al. Efficient feature selection based novel clinical decision support system for glaucoma prediction from retinal fundus images. Medical Engineering & Physics 123 104077 www.sciencedirect.com/science/article/abs/pii/S1350453323001327, https://doi.org/10.1016/j.medengphy.2023.104077. (2024)

Singh, L. K. et al. Feature subset selection through nature inspired computing for efficient glaucoma classification from fundus images. Multimed. Tools Appl. https://doi.org/10.1007/s11042-024-18624-y (2024).

Singh, L. K. et al. A three-stage novel framework for efficient and automatic glaucoma classification from retinal fundus images. Multimed. Tools Appl. https://doi.org/10.1007/s11042-024-19603-z (2024).

Arslan, Gözde, et al. View of detection of cataract, diabetic retinopathy and glaucoma eye diseases with deep learning approach. Imiens.org imiens.org/index.php/imiens/article/view/25/15. (2024)

Kermany, Daniel. Large dataset of labeled optical coherence tomography (OCT) and chest X-Ray images. Mendeley.com 3 data.mendeley.com/datasets/rscbjbr9sj/3, https://doi.org/10.17632/rscbjbr9sj.3. (2018)

“Ocular Disease Recognition.” Kaggle.com, www.kaggle.com/datasets/andrewmvd/ocular-disease-recognition-odir5k.

MariaHerreroT. “IDRiD: Diabetic Retinopathy – Grading.” Kaggle.com www.kaggle.com/datasets/mariaherrerot/idrid-dataset?select=Imagenes. (2021)

J. Vasudeva, “REFUGE-2020_classificaton,” Kaggle.com www.kaggle.com/datasets/jayesh0vasudeva/refuge2020-classificaton (2020)

with_me_saikat, “BinRushed1-Corrected-Annotated,” Kaggle.com www.kaggle.com/datasets/withmesaikat/binrushed1correctedannotated (2022)

Congesta. “Retinal Diseases Fundus Images Generalized.” Kaggle.com www.kaggle.com/datasets/congesta/retinal-diseases-fundus-images-generalized/data. (2024)

“cataract dataset.” Kaggle.com, www.kaggle.com/datasets/jr2ngb/cataractdataset. (2019)

Abood, L. K. Contrast enhancement of infrared images using Adaptive Histogram Equalization (AHE) with Contrast Limited Adaptive Histogram Equalization (CLAHE). Iraqi J. Phys. (IJP) 16(37), 127–135. https://doi.org/10.30723/ijp.v16i37.84 (2018).

Hoque, M. E. & Kipli, K. Deep learning in retinal image segmentation and feature extraction: a review. Int. J. Online Biomed. Eng. (IJOE) 17(14), 103–18. https://doi.org/10.3991/ijoe.v17i14.24819 (2021).

Nandakumar, R. et al. Detection of diabetic retinopathy from retinal images using densenet models. Comput. Syst. Sci. Eng. 45(1), 279–9. https://doi.org/10.32604/csse.2023.028703 (2022).

S.Gokul Siddarth, and Sp Chokkalingam. DenseNet 121 framework for automatic feature extraction of diabetic retinopathy images. International Conference on Emerging Systems and Intelligent Computing (ESIC), Bhubaneswar, India 338-342, https://doi.org/10.1109/esic60604.2024.10481664 (2024)

Das, S. et al. Automatic detection of diabetic retinopathy from retinal fundus images using mobilenet model. Lecture Notes Netw. Syst. Springer International Publishing https://doi.org/10.1007/978-981-97-2053-8_23 (2024).

Taifa, I. A., Setu, D. M., Islam, T., Dey, S. K. & Rahman, T. A hybrid approach with customized machine learning classifiers and multiple feature extractors for enhancing diabetic retinopathy detection. Healthcare Anal. 5, 100346. https://doi.org/10.1016/j.health.2024.100346 (2024).

Jolliffe, I. “Rainfall modelling”. Weather 57(10), 390–391. https://doi.org/10.1256/wea.80.02

van der Maaten, L., & Hinton, G. Visualizing data using t-SNE Laurens van der Maaten. Journal of Machine Learning Research 9 2579–2605. Retrieved from https://www.jmlr.org/papers/volume9/vandermaaten08a/vandermaaten08a.pdf. (2008)

Ghazali, Kamarul Hawari, et al. Feature Extraction technique using discrete wavelet transform for image classification. 5th Student Conference on Research and Development, Selangor, Malaysia 1-4 https://doi.org/10.1109/scored.2007.4451366. (2007)

Fati, S. M., Senan, E. M. & Azar, A. T. Hybrid and deep learning approach for early diagnosis of lower gastrointestinal diseases. Sensors 22(11), 4079. https://doi.org/10.3390/s22114079 (2022).

Guron, A. et al. Classification of the cause of eye impairment using different kinds of machine learning algorithms. Passer 5(2), 410–416. https://doi.org/10.24271/psr.2023.397078.1328 (2023).

Muchuchuti, Stewart, and Serestina Viriri. Retinal disease detection using deep learning techniques: a comprehensive review. Journal of Imaging 9 4 84, www.mdpi.com/2313-433X/9/4/84, https://doi.org/10.3390/jimaging9040084. (2023)

Babaqi, Tareq, et al. Eye disease classification using deep learning techniques. https://arxiv.org/pdf/2307.10501. (2023)

Aslam, A. et al. Convolutional neural network-based classification of multiple retinal diseases using fundus images. Intell. Autom. & Soft Comput. 36(3), 2607–22. https://doi.org/10.32604/iasc.2023.034041 (2023).

Samir Benbakreti, et al. The classification of eye diseases from fundus images based on CNN and pretrained models. Acta Polytechnica 64 1 CTU Central Library 1–11, https://doi.org/10.14311/ap.2024.64.0001. (2024)

Cen, Ling-Ping. et al. Automatic detection of 39 fundus diseases and conditions in retinal photographs using deep neural networks. Nat. Commun. https://doi.org/10.1038/s41467-021-25138-w (2021).

Cunha de Carvalho, L. ODIR-5K Classificação. Kaggle. https://www.kaggle.com/datasets/lucascunhadecarvalho/odir5kclassificacao (2023)

Tamim, Nasser et al. Accurate diagnosis of diabetic retinopathy and glaucoma using retinal fundus images based on hybrid features and genetic algorithm. Appl. Sci. 11(13), 6178. https://doi.org/10.3390/app11136178 (2021).

Haraburda, Patrycja, and Łukasz Dabała. Eye diseases classification using deep learning. Image Analysis and Processing – ICIAP 2022 Springer Cham –172. (2022)

Bulut, B. et al. Classification of eye disease from fundus images using efficientnet. Artif. Intel. Theor. Appl 2(1), 1–7 (2022).

Benbakreti, S. et al. The classification of eye diseases from fundus images based on CNN and pretrained models. Acta Polytechnica 64(1), 1–11. https://doi.org/10.14311/ap.2024.64.0001 (2024).

Pandey, P. U. et al. An ensemble of deep convolutional neural networks is more accurate and reliable than board-certified ophthalmologists at detecting multiple diseases in retinal fundus photographs. British J. Ophthalmol. https://doi.org/10.1136/bjo-2022-322183 (2023).

Nguyen, Toan Duc, et al. Retinal disease diagnosis using deep learning on ultra-wide-field fundus images. Diagnostics 14 1 105 www.mdpi.com/2075-4418/14/1/105, https://doi.org/10.3390/diagnostics14010105. (2024)

Das, S. et al. A deep learning-based approach for detecting diabetic retinopathy in retina images. Int. Things-Based Mach. Learn. Healthc. https://doi.org/10.1201/9781003391456-5 (2024).

Ejaz, S. et al. A deep learning framework for the early detection of multi-retinal diseases. PLoS One 19(7), e0307317. https://doi.org/10.1371/journal.pone.0307317 (2024).

Luan, S. et al. Deep learning for fast super-resolution ultrasound microvessel imaging. Phys. Med. Biol. 68(24), 245023. https://doi.org/10.1088/1361-6560/ad0a5a (2023).

Yu, X. et al. Deep learning for fast denoising filtering in ultrasound localization microscopy. Phys. Med. & Biol. 68(20), 205002. https://doi.org/10.1088/1361-6560/acf98f (2023).

Zhang, X. et al. Fast virtual stenting for thoracic endovascular aortic repair of aortic dissection using graph deep learning. IEEE J. Biomed. Health Inform. 29(6), 4374–4387. https://doi.org/10.1109/JBHI.2025.3540712 (2025).

Lu, L. et al. Generative deep-learning-embedded asynchronous structured light for three-dimensional imaging. Adv. Photonics 6(4), 046004. https://doi.org/10.1117/1.AP.6.4.046004 (2024).

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB). This research received no specific grant from any funding agency in the public, commercial, or not-for-profit sectors.

Author information

Contributions

MS collected the input data and performed the preprocessing on it. AF applied the methodology of the manuscript. SH performed the validation and was a major contributor in writing the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Hussein, S.A., Farouk, A.A. & Saeid, M.M. Intelligent retinal disease detection using deep learning. Sci Rep 15, 43282 (2025). https://doi.org/10.1038/s41598-025-28376-w

DOI: https://doi.org/10.1038/s41598-025-28376-w