Abstract

The classification of traditional patterns is of great significance for their digital protection. Most studies focus on classifying patterns of different categories; this study instead addresses the harder problem of classifying different types within the same category, taking traditional cloud patterns (TCP), which have complex structures and numerous types, as the object of classification. Because supervised deep learning algorithms require a large number of label annotations, this paper proposes a traditional cloud pattern classification algorithm based on semi-supervised learning, which achieves high-precision classification with a small number of label annotations. This paper also proposes a novel data augmentation strategy called Random Line Augment (RLA), based on the line features of cloud patterns and edge detection algorithms. The algorithm uses WideResNet as the backbone network, which captures local detail features in cloud pattern images more comprehensively by increasing the number of feature channels. The experimental results show that the algorithm is effective for classifying cloud patterns with obvious line features, reaching an accuracy of 97.41%.

Introduction

In the digital age, transforming traditional patterns into images and classifying them is the fundamental task of digital preservation1. The traditional manual classification method relies on professional background knowledge and rich experience, and is susceptible to individual subjective differences2. Exploring more efficient and accurate classification methods is therefore particularly important. With the advancement of deep learning techniques in recent years, their application to image classification has become increasingly widespread. Although many scholars have applied deep learning to pattern classification, most only classify patterns of different categories. For example, Sun Xuanming et al.3 used VGGNet, ResNet, and MobileNet to classify a pattern sample library that covers four major categories (floral patterns, bird patterns, swastika patterns, and cloud patterns), achieving an average accuracy of 83.51%. Zhao Kaiwen et al.4 classified traditional textile patterns of four different nomadic ethnic groups in Xinjiang using an optimized ResNet 18-CA model, which improved the accuracy by 3.72% compared to the original model. Because the differences between types of patterns in the same category are subtle, classifying them is relatively difficult, and research specifically targeting different types within the same category is lacking. This study selects cloud patterns, which have complex structures and numerous types, as research objects to address the problem of classifying different types within the same category.

At the same time, most existing pattern classification algorithms rely on supervised learning and require a lot of label annotation work. For example, Ge Mengjia et al.5 used LabelImg open-source software to annotate information for a large number of patterns when classifying Xinjiang stamp printing patterns, which increased the cost and complexity of classification. To address this issue, we propose a traditional cloud pattern classification algorithm TCP-RLA based on semi-supervised learning, which enables it to accurately complete complex cloud pattern classification tasks with only a few label annotations.

Secondly, in most existing pattern classification algorithms, scholars only use relatively simple data augmentation methods. For example, Duan Yongli et al.6 proposed a traditional window lattice pattern classification algorithm based on the VGG16 model in northern Shaanxi, which uses operations such as rotation, mirroring, noise addition, brightness and blur for data augmentation. In the Qin embroidery pattern recognition and classification algorithm based on GoogLeNet architecture proposed by Yang Huijun et al.7, only the data augmentation methods of mirroring and rotation were used. This study focuses on the prominent line features of cloud patterns and combines edge detection algorithms in the data augmentation process to design an innovative line augment strategy. By enhancing the line features in pattern images, the model’s sensitivity and capture ability to line information are improved. At the same time, we introduced WideResNet as the backbone network. With its powerful feature extraction ability, WideResNet can capture more local detail features in pattern images and better obtain line information in cloud pattern images by increasing the number of feature channels.

Therefore, our main contributions in this study can be summarized as follows:

- We chose cloud patterns, which mimic the flow and changes of clouds in nature and share similar structures and features, as the research object for the problem of classifying different types within the same category. We replaced the traditional fully supervised approach, which requires a large number of labels, with a semi-supervised algorithm, achieving a cloud pattern classification accuracy of 97.41% with only a few label annotations. We also introduced WideResNet as the backbone network, which extracts and integrates various local detail features by increasing the number of feature channels, allowing high-precision classification even when the types to be distinguished belong to the same category.

- We propose an innovative data augmentation strategy, Random Line Augment (RLA), based on the line features of cloud patterns and edge detection algorithms. By incorporating edge detection, the strategy moves beyond the simple augmentation schemes currently used in pattern classification and achieves significant results on cloud patterns with obvious line features.

- We collected cloud patterns from traditional clothing and, through professional refinement and segmentation, divided them into 7 types, creating a new cloud pattern database with a total of 840 images. This database not only expands existing traditional pattern databases but also further subdivides patterns of the same category, providing an example of high-precision pattern partitioning.

Related work

Semi-supervised image classification

To reduce the dependence of deep learning models on large amounts of labeled data, semi-supervised learning (SSL)8 has gradually emerged as a research hotspot in the field of image classification. Semi-supervised learning combines the advantages of supervised learning9 and unsupervised learning10. Specifically, it allows the model to train on a small amount of labeled data while fully utilizing a large amount of unlabeled data, thereby improving the model’s generalization ability and, ultimately, its classification accuracy. At present, the main techniques for semi-supervised image classification include graph-based methods, consistency regularization methods, pseudo labeling methods, and hybrid methods.

Graph-based semi-supervised learning methods utilize the graph structure of data to assist classification or regression tasks, for example through Bayesian networks or graph neural networks (GNN)11. In recent years, the Semi-Supervised Graph Convolutional Network (SGCN)12 has become a new image classification method that combines the advantages of graph convolution and semi-supervised learning. By transforming the image into a graph structure and using graph convolution to learn local features and structural information, it can achieve good classification results with limited labeled data while exploiting a large amount of unlabeled data.

The consistency regularization method was developed and refined by Bachman et al.13 and subsequently by Sajjadi et al.14. Its core principle rests on the smoothness assumption and the cluster assumption, which state that data points that are similar to each other should produce similar outputs. Specifically, for an unlabeled data point, minor perturbations (such as data augmentation or dropout) should not cause significant changes in its prediction.

In the field of semi-supervised image classification, pseudo labeling technology is an important technique. It was proposed by Lee et al.15, and the principle is to use a model trained on labeled data to predict unlabeled data, and use the predicted results as “pseudo labels”. This simple and effective pseudo labeling technique can utilize unlabeled data to improve model performance, while reducing annotation costs and enhancing the model’s generalization ability.

The mainstream hybrid methods mainly combine consistency regularization methods and pseudo labeling techniques. MixMatch16 constructed an efficient semi-supervised learning system by integrating consistency regularization, entropy minimization (generating low entropy pseudo labels), and MixUp17 data augmentation. ReMixMatch18 improves the MixMatch algorithm by introducing two new techniques, distribution alignment and augmentation anchoring, significantly enhancing data efficiency and reducing the amount of labeled data required to achieve the same accuracy. FixMatch19 achieves efficient semi-supervised learning by simplifying the consistency regularization process and combining strong and weak data augmentation with pseudo labeling. The Temporal Ensemble20 method, Mean Teacher21, and others also combine consistency regularization and pseudo labeling methods.

Data augmentation

Data augmentation involves various transformations and processing operations on existing data to generate new training samples, thereby enhancing the diversity and quantity of the dataset.

With the deepening of research, scholars have developed more comprehensive and effective data augmentation methods. AutoAugment22 is a reinforcement learning based method that automatically searches for the best data augmentation policy. RandAugment23 is a simplified version of AutoAugment that reduces the complexity of the search process by randomly selecting augmentation operations and directly setting their strength, while still achieving good results. Mixup17 fuses two images by linear combination to generate a new image; by mixing the two images and taking the same weighted average of their labels, the model can learn more abstract features and enhance its generalization ability. CutMix24 integrates the concepts of Cutout25 and Mixup: it cuts a rectangular region out of one image and replaces it with the corresponding region from another image to generate a new image. In addition, the random erasing data augmentation proposed by Zhong et al.26 simulates occlusion by randomly erasing regions of the image, enhancing the robustness of the model. The differentiable RandAugment proposed by Xiao et al.27 further optimizes the augmentation process by learning the selection weights and magnitude distributions of image transformations, significantly improving model performance on different tasks. The Sample-Aware RandAugment (SA-RandAugment) proposed by Xiao et al.28 improves image recognition performance through a search-free automatic augmentation strategy and provides a new idea for data augmentation in semi-supervised learning. In agricultural image recognition, the SpemNet proposed by Qiu et al.29 combines a multi-scale attention mechanism to improve feature extraction under complex backgrounds. These methods provide a useful reference for the data augmentation strategy in this study.

In this study, various data augmentation strategies were integrated and combined with edge detection to achieve optimal results for cloud patterns.

Image edge detection

Edges are important visual features in images and are crucial for both human and machine vision systems to recognize images. Edge detection is therefore highly significant in various fields, including high-order feature extraction, feature description, object recognition, and image segmentation30.

Early exploration of edge detection was mainly based on the sharp changes in grayscale values at image edges, and researchers used this grayscale variation to locate edges. Subsequently, gradient detection operators based on convolutional templates emerged, and classic operators such as Roberts31, Prewitt32, and Sobel33 were proposed in succession. The Laplacian34 operator, based on second-order derivatives, and the Canny35 operator are also milestone achievements in image edge detection. With the continued development of computer technology, two further approaches have emerged: edge detection based on wavelet transform and edge detection based on deep learning. Wavelet transform captures local features of images through multi-scale analysis and combines different strategies to achieve edge extraction. Convolutional neural networks (CNNs) are trained on a large amount of annotated data to automatically learn complex edge features in images. Representative methods include Holistically-Nested Edge Detection (HED) proposed by Xie et al.36, the COB algorithm of Maninis et al.37, which combines multi-scale image information with pixel classification and contour orientation, and the Bi-Directional Cascade Network (BDCN) of He et al.38, which also exploits multi-scale information.

In this study, different edge detection operators were used to extract the contour of cloud patterns, and comparative experiments were conducted to determine the optimal operator that conforms to our method.

Methods

Model construction

Backbone network

Traditional cloud patterns have complex and varied forms and fine details, usually containing rich combinations of lines, curves, and patterns. This rich texture information and these complex local structures place high demands on image recognition algorithms. We therefore chose WideResNet as the backbone network model. By increasing the network width, we can extract richer features and better capture subtle texture changes and local structural information in cloud pattern images. Moreover, WideResNet has strong feature extraction and generalization capabilities, which help it learn discriminative features between different categories of cloud patterns while reducing the impact of intra-class differences on classification results. In addition, the number of cloud pattern images in this experiment is relatively limited. Compared with deeper networks, WideResNet has fewer parameters and is easier to train on limited datasets, which helps avoid overfitting.

The basic building blocks of WideResNet39 and ResNet40 are similar; both are based on residual blocks. Each residual block contains two 3x3 convolutional layers, and a skip connection adds the input directly to the output of the second convolutional layer. The model structure is shown in Fig. 1. The WideResNet28*2 model used in this experiment has about 3.8M parameters, a training time of about 1.5 hours (100 epochs), and a single training iteration time of about 1.3 ms. In contrast, ResNet50 has 25.6M parameters, a training time of about 3 hours, and a single training iteration time of about 3.1 ms. While maintaining high accuracy, WideResNet significantly reduces the computational overhead, meets real-time requirements, and is suitable for resource-constrained practical application scenarios.

Semi-supervised learning model

By integrating consistency regularization and pseudo labeling strategies, this paper constructs a semi-supervised learning model to fully tap into the potential of unlabeled data and improve model performance. Next, the training of the model will be carried out in the following two stages:

Pseudo label generation stage: To ensure the generation of reliable pseudo labels, in the initial stage, the model uses weak augment data and Random Line Augment data to generate high confidence pseudo labels, and sets a high confidence threshold of 0.95. Only when the maximum probability value predicted by the model exceeds this threshold, will the corresponding prediction result be considered as a pseudo label.

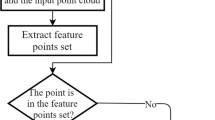

Consistency regularization stage: After generating pseudo labels, the model generates diverse views by applying different augment methods to the same input data. The cross entropy loss between the pseudo labels and the predictions for strong augment data and Random Line Augment data is then calculated and minimized by the optimization algorithm. The pseudo labels are obtained from the predicted distribution through \(\textrm{argMax}()\). The overall method is shown in Fig. 2.
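The two stages above can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the authors' implementation: the helper names `softmax`, `pseudo_label`, and `unsupervised_loss` are hypothetical, model outputs are represented as plain lists of logits, and the Random Line Augment views are left out for brevity. Only the 0.95 threshold and the use of argmax and cross entropy come from the text.

```python
import math

CONF_THRESHOLD = 0.95  # confidence threshold stated in the text


def softmax(logits):
    """Numerically stable softmax over a list of floats."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def pseudo_label(weak_logits, threshold=CONF_THRESHOLD):
    """Return (class_index, keep) for one weakly augmented sample.

    class_index is the argmax of the predicted distribution; keep is True
    only when the maximum probability exceeds the threshold.
    """
    probs = softmax(weak_logits)
    idx = max(range(len(probs)), key=probs.__getitem__)
    return idx, probs[idx] > threshold


def unsupervised_loss(weak_batch, strong_batch, threshold=CONF_THRESHOLD):
    """Mean cross entropy between pseudo labels (from the weak views) and
    the predictions on the strong views; samples whose pseudo label is
    below the threshold contribute zero."""
    total = 0.0
    for weak_logits, strong_logits in zip(weak_batch, strong_batch):
        label, keep = pseudo_label(weak_logits, threshold)
        if keep:
            total += -math.log(softmax(strong_logits)[label])
    return total / len(weak_batch)
```

A confident weak-view prediction such as `[10.0, 0.0, 0.0]` yields a pseudo label for class 0, while a nearly uniform prediction is masked out and does not affect the loss.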

The loss function \(l\) used in this model consists of two cross entropy losses, the supervised loss \(l_s\) and the unsupervised loss \(l_u\), as shown in Equation (1).

The definition of the supervised loss function \(l_s\) is presented in Equation (2). Specifically, let \(X = \{(x_b, p_b): b \in (1, \ldots , B)\}\) represent a set of B labeled samples in a batch, where \(x_b\) is the input training sample and \(p_b\) is the true label distribution corresponding to \(x_b\). Meanwhile, \(U = \{u_b : b \in (1, \ldots , B)\}\) represents the set of B unlabeled samples in the same batch. The model predicts the class distribution of the input x as \(p_m(y|x)\). To quantify the difference between two probability distributions p and q, this paper uses the cross entropy CE(p, q) as the metric function. During training, two data augmentation techniques are introduced: weak augmentation (wA) and strong augmentation (SA). For the specific forms of wA and SA, as well as their implementation details, refer to section Random line data augmentation method.

The definition of the unsupervised loss function \(l_u\) is presented in Equation (3). The generation of pseudo labels relies on the predicted distribution \(p_m(y|wA(u_b))\) output by the model for weakly augmented data. Specifically, for each unlabeled sample \(u_b\), a weak augment operation wA is first applied to obtain the augmented sample \(wA(u_b)\). Subsequently, the model performs forward propagation on \(wA(u_b)\) and outputs its class prediction distribution \(p_m(y|wA(u_b))\). To generate high-confidence pseudo labels, this paper employs \(\textrm{argMax}()\) to extract the category index with the highest probability value from the predicted distribution, specifically \(\hat{q}_b = \textrm{argMax}(p_m(y|wA(u_b)))\), where \(\hat{q}_b\) represents the pseudo label of sample \(u_b\).

On the other hand, the model’s predicted distribution for SA is denoted as \(p_m(y|SA(u_b))\). During the training process, the cross-entropy loss is calculated between the strongly augmented prediction distribution \(p_m(y|SA(u_b))\) and the pseudo label \(\hat{q}_b\). This approach effectively constrains the consistency of the model’s predictions for the same sample across different augmented views, thereby improving the model’s efficiency in utilizing unlabeled data.
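Equations (1) to (3) are not reproduced in this text. A plausible form, consistent with the definitions above and with the FixMatch-style design described earlier, is sketched below. It is an assumption rather than the authors' exact formulation: the weight \(\lambda_u\) and the symbol \(\tau\) for the 0.95 confidence threshold are introduced here for illustration, \(\hat{q}_b\) denotes the argMax pseudo label of \(u_b\), the labeled samples are assumed to be weakly augmented, and the Random Line Augment views are omitted.

```latex
% Assumed FixMatch-style forms; \lambda_u and \tau (= 0.95) are illustrative symbols
\begin{align*}
l   &= l_s + \lambda_u \, l_u \\
l_s &= \frac{1}{B} \sum_{b=1}^{B} CE\big(p_b,\; p_m(y \mid wA(x_b))\big) \\
l_u &= \frac{1}{B} \sum_{b=1}^{B}
       \mathbb{1}\big(\max p_m(y \mid wA(u_b)) > \tau\big)\,
       CE\big(\hat{q}_b,\; p_m(y \mid SA(u_b))\big)
\end{align*}
```

With \(\lambda_u = 1\) the total loss reduces to the plain sum of the two cross entropy terms, and the indicator function ensures that only unlabeled samples with a confident pseudo label contribute to \(l_u\).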

Random Line Augment strategy

Pattern edge detection

Pattern images often contain complex backgrounds (such as fabric textures and cultural relics), and direct classification is susceptible to background noise interference. At the same time, the structure of the pattern is the key basis for classification. Using edge detection can extract the contour of the pattern, filter out redundant background information, and transform complex image problems into classification based on contour features, significantly reducing the sensitivity of the model to background changes. In this experiment, the following 8 classic operators were used to extract edge features from cloud patterns, namely Sobel operator, Canny operator, Laplacian operator, LOG operator, Prewitt operator, Roberts operator, Scharr operator, and DOG operator. The edge detection performance is shown in Fig. 3.

Random line data augmentation method

Lines are the main form in which cloud patterns are composed, so this paper proposes the RLA strategy, which includes weak augment and strong augment. Weak augment adopts a flipping strategy, while strong augment combines random horizontal flipping, random cropping, and RandAugment, followed by a Cutout operation. The RLA strategy mainly consists of two core steps. First, a proportion of the labeled samples is randomly selected for feature extraction; then, a proportion of the strong and weak augment examples of the unlabeled samples is randomly selected for feature extraction. The Roberts edge detection operator is used to extract line features from the selected samples (for a comparison of edge detection operators, refer to section Comparative experiment of different edge detection operators). Afterwards, the extracted line information and the samples processed through strong and weak augment are sent to the backbone network for training.

The RLA strategy follows Equation (4), where w(.) represents weak augment, S(.) represents strong augment, R(.) represents the extraction of line features with the Roberts edge detection operator, and X and U represent labeled and unlabeled samples, respectively. The parameter \(\alpha\) is the proportion of samples randomly selected for line extraction. To determine the optimal \(\alpha\), we tested a series of candidate values (e.g., 0.1, 0.2, ..., 0.8) and selected \(\alpha\) = 0.2, which achieved the best performance on the validation set. Table 1 shows the experimental results for different values of \(\alpha\) with 7 labels using WideResNet as the backbone network.

In the semi-supervised learning model, w(X) is first generated for batch X. A subset of w(X) is then randomly selected, in the proportion given by \(\alpha\), for edge texture feature extraction. This process is called RLA and is represented by R(.). Similarly, for batch U, strong augment is applied to create S(U), and pattern edge features are then extracted through RLA.
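The random selection step can be illustrated with a short Python sketch. It assumes that the selected samples are replaced by their edge maps and that exactly `round(alpha * batch_size)` samples are chosen per batch; the text does not fix either detail, so both are assumptions. The function name `random_line_augment` and the `edge_fn` argument (a stand-in for R(.)) are hypothetical.

```python
import random


def random_line_augment(batch, edge_fn, alpha=0.2, rng=None):
    """Replace a randomly chosen fraction `alpha` of a batch with edge maps.

    batch   : list of already augmented samples, e.g. w(X) or S(U)
    edge_fn : callable standing in for R(.), e.g. a Roberts edge operator
    alpha   : proportion of samples to convert (0.2 is the value selected
              in the text)
    rng     : optional random.Random instance for reproducibility
    """
    rng = rng or random.Random()
    n_edge = round(alpha * len(batch))
    chosen = set(rng.sample(range(len(batch)), n_edge))
    return [edge_fn(x) if i in chosen else x for i, x in enumerate(batch)]
```

For a batch of 10 samples and \(\alpha\) = 0.2, two samples are passed through `edge_fn` and the remaining eight are left unchanged.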

Experiments and results

Dataset

We collected a total of 840 typical cloud patterns from traditional Chinese clothing throughout history, covering seven types: cloud thunder patterns, rolled cloud patterns, clustered cloud patterns, Ruyi cloud patterns, square Ruyi cloud patterns, Ren shaped cloud patterns, and double hook cloud patterns. Data augmentation strategies are used to expand the dataset, and the dataset is divided into training and testing sets in an 8:2 ratio. The cloud pattern dataset is shown in Fig. 4.
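As a worked example of the 8:2 split, the sketch below shuffles 840 placeholder identifiers and divides them into training and testing sets. The identifiers, the seed, and the simple non-stratified shuffle are assumptions made for illustration; the text does not describe how the split was drawn.

```python
import random


def split_dataset(items, train_ratio=0.8, seed=0):
    """Shuffle a copy of `items` and split it into (train, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(len(shuffled) * train_ratio)
    return shuffled[:n_train], shuffled[n_train:]


# 840 placeholder image identifiers (hypothetical file names)
image_ids = [f"cloud_{i:03d}" for i in range(840)]
train_ids, test_ids = split_dataset(image_ids)
print(len(train_ids), len(test_ids))  # 672 168
```

With 840 images, the 8:2 ratio gives 672 training images and 168 testing images.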

Experimental environment and settings

This experiment focuses on the classification of complex cloud patterns and proposes an RLA strategy based on semi-supervised learning. The method has two core points: first, WideResNet is used as the backbone network; second, edge detection operators are combined with data augmentation to extract pattern edge features, forming the RLA strategy. The experiment was run on an RTX 4090D GPU using the PyTorch framework, with SGD as the optimizer. Input images were resized to 64 \(\times\) 64, the learning rate was 0.0003, and the batch size was 64. The epochs were set to 64 and 100, and the number of labels was set to 7 and 70, respectively. The changes in accuracy and loss are shown in Figs. 5 and 6.

Comparative experiments

Comparative experiment of different edge detection operators

In this experiment, different edge detection operators were used to extract edge features in order to select the operator best suited to cloud pattern classification. The number of labels was set to 7 and the number of epochs to 100, and the classification comparison was conducted with the WideResNet backbone network. The experimental results are listed in Table 2. They show that the Roberts operator performs best in the task of cloud pattern classification. The Roberts operator is sensitive to thin lines and corners, which suits the curves and turning structures common in cloud patterns. Its calculation is simple, it does not easily introduce excessive noise, and it retains the clarity of the original lines. With the binarization threshold set to 128, it balances detail preservation and noise suppression well. In contrast, although the Canny and Sobel operators produce good edge continuity, they respond weakly to the fine structure of cloud patterns, which may lead to the loss of key features. Therefore, the Roberts operator is selected in the RLA strategy to extract the edge features of cloud patterns.
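As an illustration of the selected operator, the following is a minimal pure-Python sketch of Roberts cross edge extraction. It assumes a grayscale image given as a 2-D list of values in 0 to 255, and it assumes that the binarization threshold of 128 is applied to the gradient magnitude; the text does not say at which stage the threshold is applied. The function name `roberts_edges` is hypothetical, and the authors' implementation may differ.

```python
def roberts_edges(gray, threshold=128):
    """Binary edge map from a 2-D list of grayscale values (0-255).

    Applies the two Roberts cross kernels [[1, 0], [0, -1]] and
    [[0, 1], [-1, 0]], then binarizes the gradient magnitude at
    `threshold`. The output is one row and one column smaller than
    the input because each kernel covers a 2x2 neighbourhood.
    """
    height, width = len(gray), len(gray[0])
    edges = []
    for y in range(height - 1):
        row = []
        for x in range(width - 1):
            gx = gray[y][x] - gray[y + 1][x + 1]      # main diagonal difference
            gy = gray[y][x + 1] - gray[y + 1][x]      # anti-diagonal difference
            magnitude = (gx * gx + gy * gy) ** 0.5
            row.append(255 if magnitude >= threshold else 0)
        edges.append(row)
    return edges
```

On an image with a single vertical step from 0 to 255, only the pixels whose 2x2 neighbourhood straddles the step are marked as edges, which reflects the operator's response to thin lines.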

Comparison experiment between WideResNet model and ResNet model

The main differences between WideResNet and ResNet are as follows:

Width factor: WideResNet uses a width factor to adjust the number of filters in each convolutional layer. The WideResNet28*2 model used in this experiment has a depth of 28 layers and a width factor of 2, so each convolutional layer has twice as many filters as the corresponding layer of the original ResNet28. Experimental data shows that, at the same network depth, the WideResNet model achieves higher accuracy in pattern classification.

Network depth: To verify the performance characteristics of WideResNet, we conducted comparative experiments between the WideResNet28*1 and ResNet50 models. WideResNet is expected to achieve comparable or better performance than ResNet with fewer layers by increasing the width of each layer. The experimental results show that the 28-layer WideResNet28*1, despite being a shallower network, achieves better classification results than the 50-layer ResNet50. The experimental data is shown in Table 3.
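To make the roles of depth and width factor concrete, the sketch below computes the layout of a WideResNet from these two numbers. It assumes the standard WideResNet design, in which the depth has the form 6n + 4 with n residual blocks in each of three groups and the base widths 16, 32, and 64 are multiplied by the width factor; the function name `wide_resnet_layout` is hypothetical, and the configuration actually used in the experiments may differ.

```python
def wide_resnet_layout(depth, widen_factor):
    """Return (blocks_per_group, channel_widths) for a standard WideResNet.

    depth        : total number of convolutional layers, of the form 6n + 4
    widen_factor : multiplier k applied to the base widths 16, 32, 64
    The first width (16) belongs to the initial convolution and is not widened.
    """
    if (depth - 4) % 6 != 0:
        raise ValueError("depth must have the form 6n + 4")
    blocks_per_group = (depth - 4) // 6
    widths = [16] + [base * widen_factor for base in (16, 32, 64)]
    return blocks_per_group, widths


print(wide_resnet_layout(28, 2))  # (4, [16, 32, 64, 128])
print(wide_resnet_layout(28, 1))  # (4, [16, 16, 32, 64])
```

Both configurations have four residual blocks per group; the width factor of 2 doubles the number of filters in every group relative to the width factor of 1.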

Comparative experiments of different network models

To further validate the superiority of the WideResNet model, this comparative experiment selected EfficientNet, ConvNeXt, Vision Transformer, MobileNetV2, MobileNet_XXS, and GoogLeNet as the backbone networks for comparison. In the experiment, the image input size was uniformly set to 64 \(\times\) 64, and different optimal learning rates (Lr) and Epoch were used to test the classification accuracy of the model under different label numbers. The results are shown in Table 4.

This set of experimental data shows that the WideResNet backbone network can achieve optimal classification accuracy even if each class has only one label and uses the same number of epochs.

Comparative experiments of different data augmentation methods

In order to determine the optimal combination of data augmentation strategies in RLA strategy, this experiment conducted comparative experiments using different data augmentation methods. The experimental results are listed in Table 5.

The experimental data show that the optimal data augmentation combination for the RLA strategy is “Random horizontal flip+Random crop+RandAugment+Roberts edge detection operator”, which significantly improves the accuracy of cloud pattern classification. First, the Roberts edge detection operator (see section Comparative experiment of different edge detection operators for the selection process) strengthens the line features of cloud patterns and enhances the model’s perception of key structures. Second, combining RandAugment with other data augmentation methods increases the diversity of the data by simulating diverse morphological changes. In addition, the RLA strategy combines strong and weak augmentation with consistency regularization to improve the model’s use of unlabeled data. The accuracy of the RLA strategy in cloud pattern classification reaches 97.41%, which is significantly better than the 92.24% obtained using only Random horizontal flip and Random crop. This shows that the RLA strategy effectively improves classification performance by enhancing the diversity of line features and the sensitivity of the model to line information.

To demonstrate the correlation between the experimental results and the unique structure of cloud patterns, we conducted a validation experiment on a different dataset. The specific process and results can be found in the Verification test section.

Verification test

The aim of this experiment is to verify whether the differences in effectiveness among data augmentation methods observed in cloud pattern classification are related to the unique structure of cloud patterns, such as symmetry. Classification experiments with the same four data augmentation methods were conducted on the conventional dataset CIFAR-10, and the performance of these methods was compared and analyzed. The goal is to explore the applicability of data augmentation techniques across datasets.

This experiment used the CIFAR-10 dataset, which covers 10 categories with a total of 60000 32x32 color images, of which 50000 are used for training and 10000 for testing. To keep variables controlled and results comparable, WideResNet was again chosen as the backbone network for the classification experiment. The number of labels was set to 4000, the learning rate to 0.03, and the number of iterations to 100. The results are presented in Table 6.

The experimental results show that on the CIFAR-10 dataset, random horizontal flipping and random cropping, when used separately, may not produce the significant accuracy differences seen in cloud pattern classification. As data augmentation methods are combined and their complexity increases (including RandAugment and the Roberts edge detection operator), the classification accuracy on CIFAR-10 does not necessarily improve, which is inconsistent with the behavior observed in cloud pattern classification. Although the RLA strategy performs well in cloud pattern classification, its applicability may be affected by image features. For image recognition tasks with significant nonlinear characteristics (such as complex objects in natural images), the RLA strategy may need to be further optimized. Future research can explore how to combine the RLA strategy with other advanced feature extraction methods (such as self-attention mechanisms) to improve its applicability in a wider range of pattern recognition tasks.

Conclusion

This article addresses the problem of classifying different types of patterns within the same category and, through semi-supervised learning, reduces the reliance on a large number of label annotations in traditional cloud pattern classification. It also proposes the Random Line Augment (RLA) strategy, which combines edge detection operators to exploit prominent line features, and introduces WideResNet as the backbone network. By increasing the number of feature extraction channels, the model’s ability to capture cloud pattern features is significantly improved, further enhancing classification accuracy. The experimental results show that the algorithm can achieve high-precision classification even when there is only one label annotation for each class.

Although our semi-supervised method has achieved significant results in reducing the cost of cloud pattern classification, making the model more lightweight remains a key focus for future work. We will also continue to build databases for other categories of patterns, subdividing the patterns within each category to form more accurate and refined databases, and will apply this method to those databases to demonstrate its effectiveness in pattern classification.

Data availability

The authors confirm that all data generated or analysed during this study are included in this article. The cloud pattern database mentioned in the article has not yet been made public; it can be obtained on reasonable request by email. Contact email: 2712386147@qq.com.

Code availability

The custom computer code used to generate the results reported in this paper is available via the following link: Chua, C. C., & TCP - RLA Project. (2025). CChuaCC/TCP - RLA [Software]. Zenodo. https://doi.org/10.5281/zenodo.17476552.

References

Changmin, Z., Zuoming, S. & Anli, W. Classification of batik patterns based on convolutional neural networks. Mod. Comput. 72, 117–121 (2021).

Yi, C. Research on Clothing Image Retrieval Technology Based on Convolutional Neural Networks. Master’s thesis, Zhejiang University of Technology (2020).

Xuanming, S. & Miao, S. Application of deep learning based silk cultural relic pattern recognition. Silk 60, 1–10 (2023).

Kaiwen, Z., Xianshu, B., Juan, Q. & Mingxing, Y. Classification of traditional textile patterns of Xinjiang nomad ethnic groups based on Resnet 18 model. Woolen Text. Technol. 52, 111–118. https://doi.org/10.19333/j.mfkj.20240408208 (2024).

Mengjia, G. & Dawei, L. Classification and recognition of pattern printing patterns based on deep learning. Chem. Fiber Text. Technol. 53, 7–12 (2024).

Yongli, D. & Na, Z. Research on the classification of traditional window lattice patterns in northern Shaanxi based on convolutional neural networks. Beauty and Times (Part 1) 75–79, https://doi.org/10.16129/j.cnki.mysds.2024.09.023 (2024).

Huijun, Y. & Hewen, C. Research on recognition and classification of Qin embroidery patterns based on googlenet. Furnit. Inter. Decor. 30, 38–42. https://doi.org/10.16771/j.cn43-1247/ts.2023.05.007 (2023).

van Engelen, J. E. & Hoos, H. H. A survey on semi-supervised learning. Mach. Learn. 109, 373–440. https://doi.org/10.1007/s10994-019-05855-6 (2020).

Simao, M. A., Mendes, N., Gibaru, O. & Neto, P. A review on electromyography decoding and pattern recognition for human-machine interaction. IEEE Access 7, 39564–39582. https://doi.org/10.1109/ACCESS.2019.2906584 (2019).

Wang, H., Zhang, Q., Wu, J., Pan, S. & Chen, Y. Time series feature learning with labeled and unlabeled data. Pattern Recognit. 84, 55–66. https://doi.org/10.1016/j.patcog.2018.12.026 (2018).

Kitson, N. K., Constantinou, A. C., Guo, Z., Liu, Y. & Chobtham, K. A survey of Bayesian network structure learning. Artif. Intell. Rev. 56, 8721–8814. https://doi.org/10.1007/s10462-022-10351-w (2023).

Li, H. et al. A semi-supervised graph convolutional network for early prediction of motor abnormalities in very preterm infants. Diagnostics 13, 1508. https://doi.org/10.3390/diagnostics13081508 (2023).

Bachman, P., Alsharif, O. & Precup, D. Learning with pseudo-ensembles. Adv. Neural Inf. Process. Syst. 27, 3365–3373 (2014).

Sajjadi, M., Javanmardi, M. & Tasdizen, T. Regularization with stochastic transformations and perturbations for deep semi-supervised learning. In Advances in Neural Information Processing Systems (NeurIPS), 1163–1171 (2016).

Lee, D.-H. Pseudo-label: The simple and efficient semi-supervised learning method for deep neural networks. In ACM International Conference on Multimedia Retrieval, 896–903 (Pittsburgh, PA, USA, 2013).

Berthelot, D. et al. Mixmatch: A holistic approach to semi-supervised learning. In Advances in Neural Information Processing Systems 32 (NeurIPS 2019) (2019). arXiv:1905.02249.

Zhang, H., Cissé, M., Dauphin, Y. N. & Lopez-Paz, D. mixup: Beyond empirical risk minimization. arXiv preprint arXiv:1710.09412 (2018).

Berthelot, D. et al. Remixmatch: Semi-supervised learning with distribution alignment and augmentation anchoring. arXiv preprint arXiv:1911.09785 (2019).

Sohn, K. et al. Fixmatch: Simplifying semi-supervised learning with consistency and confidence. In Advances in Neural Information Processing Systems 33 (NeurIPS 2020), 596–608 (2020).

Laine, S. & Aila, T. Temporal ensembling for semi-supervised learning. arXiv preprint arXiv:1610.02242 (2016).

Tarvainen, A. & Valpola, H. Mean teachers are better role models: Weight-averaged consistency targets improve semi-supervised deep learning results. In Proceedings of the 31st International Conference on Neural Information Processing Systems, 1195–1204 (ACM, Long Beach, California, USA, 2017).

Cubuk, E. D., Zoph, B., Mane, D., Vasudevan, V. & Le, Q. V. Autoaugment: Learning augmentation strategies from data. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition, 113–123 (IEEE, Long Beach, 2019).

Cubuk, E. D., Zoph, B., Shlens, J. & Le, Q. V. Randaugment: Practical automated data augmentation with a reduced search space. arXiv preprint arXiv:1909.13719 (2019).

Yun, S. et al. Cutmix: Regularization strategy to train strong classifiers with localizable features. In Proceedings of the IEEE/CVF International Conference on Computer Vision, 6023–6032 (2019).

DeVries, T. & Taylor, G. W. Improved regularization of convolutional neural networks with cutout. arXiv preprint arXiv:1708.04552 (2017).

Zhong, Z. et al. Random erasing data augmentation. Proc. AAAI Conf. Artif. Intell. 34, 13001–13008 (2020).

Xiao, A. et al. Differentiable randaugment: Learning selecting weights and magnitude distributions of image transformations. IEEE Trans. Image Process. 32, 2413–2427 (2023).

Xiao, A., Yu, W. & Yu, H. Sample-aware randaugment: Search-free automatic data augmentation for effective image recognition. Int. J. Comput. Vis. 133, 7710–7725 (2025).

Qiu, K. et al. Spemnet: a cotton disease and pest identification method based on efficient multi-scale attention and stacking patch embedding. Insects 15, 667 (2024).

Cuijin, L. & Zhong, Q. Overview of image edge detection algorithms based on deep learning. Comput. Appl. 40, 3280–3288 (2020).

LeCun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Marr, D. & Hildreth, E. Theory of edge detection. Proc. R. Soc. Lond. Ser. B, Biol. Sci. 207, 187–217 (1980).

Wang, Z., Zhu, S., Li, Y. & Cui, Z. Convolutional neural network based deep conditional random fields for stereo matching. J. Vis. Commun. Image Represent. 40, 739–750. https://doi.org/10.1016/j.jvcir.2016.08.022 (2016).

Canny, J. A computational approach to edge detection. IEEE Trans. Pattern Anal. Mach. Intell. PAMI–8, 679–698. https://doi.org/10.1109/TPAMI.1986.4767851 (1986).

Konishi, S., Yuille, A. L., Coughlan, J. M. & Zhu, S. C. Statistical edge detection: learning and evaluating edge cues. IEEE Trans. Pattern Anal. Mach. Intell. 25, 57–74. https://doi.org/10.1109/TPAMI.2003.1159946 (2003).

Xie, S. & Tu, Z. Holistically-nested edge detection. In Proceedings of the 2015 IEEE International Conference on Computer Vision, 1395–1403. https://doi.org/10.1109/ICCV.2015.164 (IEEE, 2015).

Maninis, K.-K., Pont-Tuset, J., Arbeláez, P. & Gool, L. V. Convolutional oriented boundaries: From image segmentation to high-level tasks. IEEE Trans. Pattern Anal. Mach. Intell. 40, 819–833. https://doi.org/10.1109/TPAMI.2017.2700300 (2017).

He, J., Zhang, S., Yang, M., Shan, Y. & Huang, T. Bi-directional cascade network for perceptual edge detection. In Proceedings of the 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition, 3828–3837. https://doi.org/10.1109/CVPR.2019.00395 (IEEE, 2019).

Zagoruyko, S. & Komodakis, N. Wide residual networks. CoRR abs/1605.07146. arXiv:1605.07146 (2016).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. CoRR abs/1512.03385. arXiv:1512.03385 (2015).

Funding

This work was supported by the Beijing digital education research topic (Grant No. BDEC2023619056); the 2023 Project of BIGC, BIGC Project (Grant No. 21090525009); the Publishing science emerging interdisciplinary platform construction project of Beijing Institute of Graphic Communication (Grant No. 04190123001/003); the Open Foundation of State Key Laboratory of Networking and Switching Technology (Beijing University of Posts and Telecommunications) (SKLNST-2023-1-12); the Beijing Municipal Education Commission & Beijing Natural Science Foundation Co-financing Project (Grant No. KZ202210015019); and the Project of Construction and Support for high-level Innovative Teams of Beijing Municipal Institutions (Grant No. BPHR20220107).

Author information

Contributions

All authors contributed to the study conception and design. Material preparation, data collection and analysis were performed by CC and HJW. The first draft of the manuscript was written by CC and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, C., Wang, H. Traditional cloud pattern classification algorithm based on semi-supervision with Random Line Augment. Sci Rep 15, 45374 (2025). https://doi.org/10.1038/s41598-025-29225-6

DOI: https://doi.org/10.1038/s41598-025-29225-6