Abstract

This paper studies a conveyor belt speed detection method based on the fusion of optical flow and feature matching. The method combines the ability of optical flow to compute pixel-level motion information with the ability of feature matching to identify feature points accurately; by letting the two assist and correct each other, accurate belt speed detection is achieved. An optimized Bayesian algorithm assigns weights to the two methods according to their accuracy and reliability and to the image illumination, and the final belt speed is obtained as their weighted combination. The method improves the performance of conveyor belt speed detection and supports operation monitoring and fault diagnosis of belt conveyors.

Introduction

Research background

Belt conveyors in coal mines and similar applications usually run at a constant belt speed matched to full load. When the belt carries little or no material, continuing to run at full-load speed lowers operating efficiency, wastes electrical energy and accelerates wear of conveyor components. Measuring belt speed is therefore essential for developing energy-saving speed control and allows the operation of the conveyor to be monitored more effectively1,2.

With the continued development of industrial systems, research on belt conveyor speed measurement has advanced steadily at home and abroad. The most widely used belt conveyor speed measurement methods3 are photoelectric speed measurement and Hall speed measurement4, but both have shortcomings. Photoelectric speed measurement is a non-contact method: it causes no belt wear, responds quickly and accurately tracks changes in belt speed, but it is not robust and is easily affected by the environment. Hall speed measurement, based on the Hall effect, is robust and suitable for harsh working environments; however, Hall elements exhibit temperature drift, which can degrade measurement accuracy. With the development of machine vision, image-based speed measurement has become a common way to acquire motion information, and applying it to belt conveyor speed measurement is feasible.

Related work

With the advancement of machine vision technology in industrial applications, non-contact belt conveyor speed measurement has emerged as a viable solution. Numerous in-depth studies on image feature point matching algorithms have laid a solid foundation for image-based speed measurement5,6,7. Gauglitz et al. evaluated interest point detectors and feature descriptors for visual tracking8, and Ye et al.9 developed an optical-to-SAR image matching algorithm based on shape properties. Collectively, these studies provide a theoretical basis for image-based speed detection. In addition, Jin et al.10 presented a vehicle speed measurement method based on sequential images, and Liu et al.11 investigated a machine vision-based belt speed detection approach for belt conveyors. Their method acquires surface images of the moving belt with a fixed-interval imaging system, uses the temporal consistency between adjacent frames to compute the pixel displacements of tracked points through feature correspondence, and converts these displacements into physical speed through a calibrated image-to-world coordinate transformation. However, the low-texture surface of coal conveyor belts often yields features of insufficient saliency, which impairs the accuracy and reliability of traditional feature matching methods.

Recent developments in optical flow-based motion estimation have shown promising results in an analogous fluid-dynamics application, river current velocimetry. As demonstrated in12, optical flow-based velocimetry depends less on tracer particles and produces dense pixel-wise flow fields, attributes that are particularly advantageous for belt speed detection. The parallel between river flow estimation and conveyor belt monitoring is noteworthy: both involve continuous material flow within a constrained region of interest without distinct tracking markers, which makes optical flow algorithms theoretically suitable for belt velocity quantification13,14. Cui et al. (2023) described the canonical Lucas–Kanade (LK) formulation, which assumes brightness constancy and solves the local optical flow within a small window by least-squares optimization15. Owing to its low computational cost and ease of deployment on embedded CPUs, LK has been widely adopted in resource-constrained industrial scenarios; nevertheless, its accuracy degrades sharply under large displacements, and its sensitivity to illumination changes hampers reliable operation in harsh underground environments. FlowNet 2.0 is an end-to-end convolutional architecture that stacks multiple sub-networks to estimate optical flow at multiple scales16. Pre-trained on large-scale synthetic data, FlowNet 2.0 achieves real-time inference within 8–12 ms on a GTX 1080 and performs well in medium- to high-speed structured scenes; however, edge-region errors and occlusion artifacts remain pronounced on low-texture conveyor surfaces. GMFlow, proposed by Sun et al., reduces the optical flow estimation error in low-texture scenes by 25% through a global matching mechanism, but it has not been applied to conveyor belt speed measurement17. The Optical Flow Transformer proposed by Ranjan et al. improves the accuracy of long-distance motion matching through global attention modeling18, but its high computational complexity makes it difficult to meet the real-time requirements of industrial applications. RAFT (Recurrent All-Pairs Field Transforms) constructs a 4D correlation volume and iteratively refines the flow field with a gated recurrent unit19. At the time of its publication, RAFT achieved state-of-the-art end-point error on public benchmarks and generalized well to large displacements and complex illumination conditions. Numerous other optical flow-based speed measurement methods have also been investigated20,21,22. Nevertheless, practical deployment is limited by the inherent assumptions of optical flow: illumination changes that violate the brightness constancy assumption and large inter-frame displacements both significantly degrade measurement precision.

Innovations of this paper

In this paper, we propose a conveyor belt speed detection method that integrates optical flow and feature matching. The optical flow method computes pixel motion vectors that roughly describe the motion of the belt surface; these vectors capture the overall motion trend of the belt and provide a preliminary reference for feature extraction and matching, whose results are in turn used to verify the optical flow estimate23. A Bayesian algorithm performs decision fusion, with weights assigned from the image brightness and contrast. When lighting is good and the belt surface shows distinct features, feature matching is highly accurate and receives a higher weight; when illumination varies strongly or noise interferes, optical flow receives a higher weight and its result is corrected by the feature matching result. This fusion exploits the strengths of both methods and improves the accuracy and reliability of belt speed detection. The innovations of this paper are summarized as follows:

(1) The RAFT model is modified by incorporating the SENet module to enhance the feature adaptability under varying illumination conditions and low-texture scenarios.

(2) A Harris-BRIEF-RANSAC feature matching algorithm is proposed to improve the accuracy of traditional image-based algorithms in speed detection.

(3) A speed detection algorithm integrating feature matching with the modified optical flow is developed, which employs a Bayesian dynamic weight allocation method to enhance detection accuracy.

Principles of optical flow method and feature matching method

Optical flow algorithm

The optical flow method estimates the motion of an object by calculating the motion vectors of the pixels in an image, based on the following assumptions: constant luminance between neighboring frames and small displacements of the object motion24.

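The brightness constancy assumption means that the brightness of a point on the target object remains constant between successive frames. That is, for a pixel (x, y) observed at times t and t + Δt, the brightness satisfies

\[ I(x, y, t) = I(x + \Delta x,\; y + \Delta y,\; t + \Delta t) \tag{1} \]

where I is the image brightness, (x, y) is the pixel position, t is the time, and Δx and Δy are the displacements of the pixel during Δt. In conveyor belt speed measurement, optical flow-based speed measurement relies on the principle that the brightness of each point on the belt surface remains constant across adjacent frames.

Expanding the right-hand side of Eq. (1) in a first-order Taylor series gives

\[ I(x + \Delta x, y + \Delta y, t + \Delta t) \approx I(x, y, t) + \frac{\partial I}{\partial x}\Delta x + \frac{\partial I}{\partial y}\Delta y + \frac{\partial I}{\partial t}\Delta t \tag{2} \]

Combining Eqs. (1) and (2) under the brightness constancy assumption yields

\[ \frac{\partial I}{\partial x}\Delta x + \frac{\partial I}{\partial y}\Delta y + \frac{\partial I}{\partial t}\Delta t = 0 \tag{3} \]

Define the optical flow as (u, v), where \(u = \frac{\Delta x}{\Delta t}\) and \(v = \frac{\Delta y}{\Delta t}\). Dividing Eq. (3) by Δt gives

\[ I_x u + I_y v + I_t = 0 \tag{4} \]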

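Here \(I_x\) and \(I_y\) are the spatial brightness gradients and \(I_t\) is the temporal grayscale change. This equation links the motion velocity of belt-surface pixels to measurable image derivatives, but it is a single equation in two unknowns, u and v, so it cannot be solved at an individual pixel on its own (the aperture problem). An additional constraint is required, such as the Lucas–Kanade assumption that the flow is constant within a small window, or the iterative refinement learned by deep networks such as RAFT.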
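Because one such constraint has two unknowns, a common way to recover (u, v) is to stack it over many pixels and solve by least squares, as in the Lucas–Kanade formulation cited in the Related work. The following is a minimal NumPy sketch, assuming the whole frame is a single window (reasonable only when the belt moves as a rigid sheet by a small, sub-pixel shift) and Δt equal to one frame; it is not the RAFT-SEnet model used in this paper. Averaging the two frames' spatial gradients is an extra choice made here to reduce linearization bias.

```python
import numpy as np


def lucas_kanade_global(f1, f2, border=2):
    """Least-squares solution of Ix*u + Iy*v + It = 0 over one window.

    f1, f2: consecutive grayscale frames (2-D float arrays).
    Returns (u, v) in pixels per frame; u is along columns (x), v along rows (y).
    """
    gy1, gx1 = np.gradient(f1)          # np.gradient returns d/drow, d/dcol
    gy2, gx2 = np.gradient(f2)
    ix = 0.5 * (gx1 + gx2)              # averaged spatial gradients
    iy = 0.5 * (gy1 + gy2)
    it = f2 - f1                        # temporal difference (dt = 1 frame)
    inner = (slice(border, -border), slice(border, -border))  # skip one-sided edges
    a = np.stack([ix[inner].ravel(), iy[inner].ravel()], axis=1)
    b = -it[inner].ravel()
    (u, v), *_ = np.linalg.lstsq(a, b, rcond=None)
    return u, v


# Synthetic check: a smooth two-wave pattern moved by (u, v) = (0.4, -0.2) px.
y, x = np.mgrid[0:64, 0:64].astype(float)


def scene(x, y):
    return np.sin(0.3 * x + 0.2 * y) + np.cos(0.25 * x - 0.1 * y)


f1 = scene(x, y)
f2 = scene(x - 0.4, y + 0.2)    # content shifted +0.4 px in x and -0.2 px in y
u, v = lucas_kanade_global(f1, f2)
```

The two waves run in different directions so that the 2 × 2 normal matrix is well conditioned; a pattern varying along only one direction would reproduce the aperture problem.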

Feature matching method

The feature matching method matches features between each pair of adjacent images in the sequence captured by the camera and computes the coordinates and pixel distances of the matched feature points in the preceding and following frames. The mapping between the image coordinate system and the world coordinate system gives the physical size represented by each pixel, from which the actual displacement and speed of the conveyor belt are calculated9,25.
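The conversion from matched pixel displacement to belt speed is a two-step scaling: pixels to metres via the calibrated scale, then metres to metres per second via the frame interval. A minimal sketch, assuming the camera's optical axis is perpendicular to the belt so that one scale factor holds across the region of interest; the 25 fps frame rate matches the dataset described later, while the calibration target length and pixel values are hypothetical.

```python
def scale_from_target(length_m, length_px):
    """Metres per pixel from a calibration target of known physical length."""
    return length_m / length_px


def belt_speed(pixel_disp, metres_per_pixel, fps, frame_gap=1):
    """Belt speed (m/s) from the pixel displacement between two frames.

    pixel_disp: displacement of matched feature points (px)
    metres_per_pixel: calibrated scale, assumed uniform over the ROI
    fps: camera frame rate; frame_gap: number of frames between the pair
    """
    dt = frame_gap / fps                      # time between the two frames (s)
    return pixel_disp * metres_per_pixel / dt


scale = scale_from_target(0.5, 125.0)         # hypothetical: 0.5 m spans 125 px
v = belt_speed(9.0, scale, 25.0)              # 9 px per frame at 25 fps
```

With these numbers, 9 px × 0.004 m/px × 25 frames/s gives 0.9 m/s.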

Image matching analyzes the similarity in structure, features and gray levels between a reference image and an image to be matched in order to establish the correspondence between them. The reference image and the image to be matched are represented by the two-dimensional matrices K1 and K2, respectively, so that K1(x, y) and K2(x, y) are the gray values of the two images at the point (x, y). The mathematical model of image matching can be expressed as

\[ K_2(x, y) = G\big(K_1(F(x, y))\big) \]

where F(x, y) is a spatial transformation function corresponding to the displacement of feature points caused by belt movement, which provides the mathematical basis for converting pixel displacement into physical velocity, and G(x, y) is a grayscale transformation function that compensates for grayscale deviations induced by illumination fluctuations in underground coal mines. The improved image matching model used in this paper is

\[ K_2(x, y) = K_1(F(x, y)) \]

Neglecting the secondary grayscale transformation G(x, y) and focusing on the “spatial position correspondence of feature points” in conveyor belt speed measurement can reduce computational complexity while ensuring matching accuracy under stable illumination conditions in the laboratory.
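With G omitted and F restricted to a pure translation (an assumption that fits a belt moving along a straight run), the simplified model can be fitted by exhaustive search over integer shifts, choosing the shift that minimizes the mean squared gray-level difference in the overlap region. This is a minimal illustration of what the simplified model asks for, not the Harris-BRIEF-RANSAC pipeline used in this paper; `fit_translation` and `max_shift` are hypothetical names.

```python
import numpy as np


def fit_translation(k1, k2, max_shift=10):
    """Find integer (dy, dx) such that k2(y, x) ~= k1(y - dy, x - dx).

    Fits K2(x, y) = K1(F(x, y)) with F a pure translation by exhaustive search
    over |dy|, |dx| <= max_shift. Gray levels are compared directly (G omitted).
    """
    h, w = k1.shape
    m = max_shift
    a = k2[m:h - m, m:w - m]                 # fixed central window of K2
    best, best_err = (0, 0), np.inf
    for dy in range(-m, m + 1):
        for dx in range(-m, m + 1):
            b = k1[m - dy:h - m - dy, m - dx:w - m - dx]
            err = np.mean((a - b) ** 2)
            if err < best_err:
                best, best_err = (dy, dx), err
    return best


rng = np.random.default_rng(1)
k1 = rng.random((64, 64))
k2 = np.roll(k1, shift=(2, 7), axis=(0, 1))  # content moves 2 px down, 7 px right
shift = fit_translation(k1, k2)              # (2, 7)
```

Exhaustive search costs O(max_shift²) window comparisons per frame pair, which is one reason sparse feature matching is preferred in practice.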

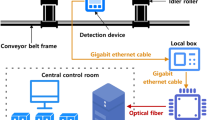

Conveyor belt speed detection method based on optical flow and feature matching fusion

The structure of the proposed conveyor belt speed detection method based on the fusion of optical flow and feature matching is shown in Fig. 1. A high-definition industrial camera captures images of the conveyor belt, and the camera is calibrated so that image coordinates can be mapped to world coordinates and pixel displacements converted into real-world distances. The RAFT-SEnet optical flow model and the Harris-BRIEF-RANSAC feature matching algorithm then each compute the belt speed, and a Bayesian algorithm fuses the two results to obtain the final belt speed.
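When the camera views the belt obliquely, a single metres-per-pixel factor no longer holds, and the belt surface is better treated as a plane related to the image by a homography. A minimal sketch of that image-to-world mapping, assuming four marked points with known belt-plane coordinates; the direct linear transform (DLT) estimator, the point coordinates and the 25 fps frame rate are illustrative choices, not the paper's calibration procedure.

```python
import numpy as np


def fit_homography(img_pts, world_pts):
    """Direct linear transform: 3x3 H with world ~ H @ [x, y, 1] (>= 4 points)."""
    rows = []
    for (x, y), (X, Y) in zip(img_pts, world_pts):
        rows.append([-x, -y, -1, 0, 0, 0, X * x, X * y, X])
        rows.append([0, 0, 0, -x, -y, -1, Y * x, Y * y, Y])
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    h = vt[-1].reshape(3, 3)          # null-space vector holds the entries of H
    return h / h[2, 2]


def to_world(h, pts):
    """Map pixel coordinates (N, 2) to belt-plane coordinates (N, 2)."""
    p = np.column_stack([pts, np.ones(len(pts))]) @ h.T
    return p[:, :2] / p[:, 2:3]


# Hypothetical calibration: four marks on the belt plane (metres) and where
# they appear in a 1920 x 1080 image taken at an angle.
img_pts = np.array([[400, 300], [1500, 260], [1600, 900], [320, 960]], float)
world_pts = np.array([[0.0, 0.0], [2.0, 0.0], [2.0, 1.2], [0.0, 1.2]])
H = fit_homography(img_pts, world_pts)

# A tracked point in two consecutive 25 fps frames.
p0, p1 = to_world(H, np.array([[800.0, 600.0], [845.0, 598.0]]))
speed = np.linalg.norm(p1 - p0) * 25.0        # m/s
```

With noisy calibration points, normalizing the coordinates before the DLT (Hartley normalization) or using OpenCV's `cv2.findHomography` is advisable.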

RAFT-SEnet model

In recent years, deep learning has been widely used in computer vision with remarkable results, and deep learning-based optical flow estimation has become mainstream because it overcomes many problems of traditional methods. In this paper, RAFT is used as the baseline network architecture to be optimized. RAFT consists mainly of a feature extractor, a visual similarity calculator and an updater.

Feature Extraction Network: The proposed feature extraction network is composed of two cascaded components: (i) a feature encoder and (ii) a context encoder.

Feature encoder: A Siamese CNN whose two branches share identical weights extracts discriminative representations from two consecutive frames of the input conveyor belt video. The resulting feature vectors are combined via an inner-product operation and forwarded to the visual-similarity calculator.

Context encoder: A separate CNN encodes contextual information from the reference frame; the produced feature map is supplied to the updater module.

Visual-Similarity Calculator: From the inner-product similarities (the correlation volume) produced from the feature encoder outputs, we build a four-level correlation pyramid to progressively encode multi-scale information. A learnable mapping function is then applied at each pyramid level to translate similarity cues into rich, semantically meaningful representations. Matching across these levels yields globally consistent features that are robust to local ambiguities.
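In the original RAFT design, the pyramid is obtained by computing an all-pairs dot-product correlation volume between the two frames' feature maps and then average-pooling its last two dimensions with kernel sizes 1, 2, 4 and 8. A minimal NumPy sketch of that construction, with random arrays standing in for the encoder outputs; the learned encoders, the lookup operator and the learnable mapping are omitted.

```python
import numpy as np


def all_pairs_correlation(g1, g2):
    """Correlation volume C[i, j, k, l] = <g1[i, j], g2[k, l]> / sqrt(D).

    g1, g2: feature maps of shape (H, W, D) from the shared-weight encoder.
    """
    d = g1.shape[-1]
    return np.einsum("ijd,kld->ijkl", g1, g2) / np.sqrt(d)


def correlation_pyramid(corr, levels=4):
    """Average-pool the last two (frame-2) dimensions by 2 at each level."""
    pyramid = [corr]
    for _ in range(levels - 1):
        c = pyramid[-1]
        h, w, h2, w2 = c.shape
        c = c[:, :, : h2 // 2 * 2, : w2 // 2 * 2]        # drop an odd last row/col
        c = c.reshape(h, w, h2 // 2, 2, w2 // 2, 2).mean(axis=(3, 5))
        pyramid.append(c)
    return pyramid


rng = np.random.default_rng(0)
g1, g2 = rng.standard_normal((2, 16, 16, 32))    # stand-ins for encoder outputs
pyr = correlation_pyramid(all_pairs_correlation(g1, g2))
print([c.shape for c in pyr])
# [(16, 16, 16, 16), (16, 16, 8, 8), (16, 16, 4, 4), (16, 16, 2, 2)]
```

Only the second frame's dimensions are pooled, so every pixel of the first frame keeps a full-resolution query against coarse and fine views of the second frame; this is what lets the updater look up both small and large displacements.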

Updater: The updater receives three inputs: (1) the current optical-flow estimate, (2) the global feature representation queried from the visual-similarity calculator, and (3) the contextual features produced by the context encoder. These concatenated inputs are processed by a gated recurrent unit (GRU) followed by two convolutional layers to predict a residual flow update. Through iterative refinement, the module generates a sequence of progressively improved optical-flow fields; the final estimate and its corresponding colour-coded flow visualization are output after convergence.

In this paper, the SENet8 attention mechanism is introduced into the motion feature extraction stage. SENet adaptively adjusts feature weights by learning the importance of each feature channel, thereby highlighting the features most valuable for optical flow estimation. Its global average pooling and sigmoid modules learn the importance weight of each feature channel. Under strong light, they suppress the weights of channels corresponding to overexposed regions; under low light, they suppress the weights of channels related to underexposed regions, thereby enhancing the signals of normally illuminated areas. In low-texture scenarios (e.g., coal surfaces with indistinct textures), they increase the weights of channels containing details such as particles and scratches while reducing background interference. In scenes with light reflection and flicker, SENet also helps the network focus on key areas and improves the quality of feature extraction. The improved network structure is shown in Fig. 2.

SENet is an attention mechanism for deep convolutional neural networks (CNNs) that enhances the network's representational ability across feature channels. It learns an importance weight for each channel and uses these weights to re-weight the feature map, so that useful information is enhanced and irrelevant information is suppressed26.
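The squeeze-and-excitation operation reduces to three steps: global average pooling per channel, a two-layer bottleneck (ReLU, then sigmoid) that outputs one weight per channel, and channel-wise rescaling. A minimal NumPy sketch of a single SE block in inference mode; the random weights, the channel count and the reduction ratio r = 16 are placeholders, and where the block is inserted inside RAFT-SEnet is not modeled.

```python
import numpy as np


def se_block(x, w1, b1, w2, b2):
    """Squeeze-and-Excitation re-weighting of a (C, H, W) feature map.

    w1: (C // r, C) and w2: (C, C // r) are the two fully connected layers.
    Returns the re-weighted map and the per-channel weights in (0, 1).
    """
    z = x.mean(axis=(1, 2))                    # squeeze: global average pooling
    s = np.maximum(w1 @ z + b1, 0.0)           # excitation: FC + ReLU
    s = 1.0 / (1.0 + np.exp(-(w2 @ s + b2)))   # excitation: FC + sigmoid
    return x * s[:, None, None], s             # scale each channel


rng = np.random.default_rng(0)
c, r = 64, 16                                  # channels and reduction ratio
x = rng.standard_normal((c, 32, 32))
w1, b1 = 0.1 * rng.standard_normal((c // r, c)), np.zeros(c // r)
w2, b2 = 0.1 * rng.standard_normal((c, c // r)), np.zeros(c)
y, weights = se_block(x, w1, b1, w2, b2)
```

Because each channel receives a single scalar weight, the block adds very few parameters (2C²/r) and leaves the spatial layout of the features untouched.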

By adding the SENet attention module, the RAFT-SEnet optical flow network focuses motion feature extraction on information-rich areas, such as pixels containing particles or scratches, and reduces the influence of pixels in unrelated areas such as shadows and background. Figure 3 shows the RAFT-SEnet optical flow network model.

Harris-BRIEF-RANSAC feature matching algorithm

To address the weak image features of the conveyor belt surface and the indistinct overlap between adjacent images, a Harris-BRIEF-RANSAC feature27 matching algorithm suited to belt conveyor images is proposed. The algorithm uses the Harris operator to extract uniformly distributed feature points from the belt image, uses the BRIEF algorithm to compute and match descriptors, and uses the RANSAC algorithm to filter the matched points; a region of interest is defined in the image to speed up the algorithm. The matching algorithm thus consists of three parts: Harris feature point extraction, BRIEF descriptor matching, and RANSAC screening of matched points. The algorithmic framework is shown schematically in Fig. 4.
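The three stages can be sketched in NumPy on a synthetic textured strip shifted by a known amount. This is a simplified sketch under stated assumptions, not the authors' implementation: keypoints are Harris local maxima (the uniform-distribution and region-of-interest steps are omitted), the BRIEF sampling pattern `PAIRS` is a hypothetical uniform random one, matching uses a Hamming cross-check, and RANSAC fits a pure translation because belt motion between adjacent frames is close to a rigid shift.

```python
import numpy as np


def box_sum(a, r):
    """Sum of a over a (2r+1) x (2r+1) window, with edge replication."""
    p = np.pad(a, r, mode="edge")
    h, w = a.shape
    out = np.zeros(a.shape, dtype=float)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            out += p[dy:dy + h, dx:dx + w]
    return out


def local_max(a, r):
    """Maximum of a over a (2r+1) x (2r+1) window."""
    p = np.pad(a, r, mode="constant", constant_values=-np.inf)
    h, w = a.shape
    m = np.full(a.shape, -np.inf)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            m = np.maximum(m, p[dy:dy + h, dx:dx + w])
    return m


def harris_keypoints(img, k=0.04, n=150, margin=12):
    """Strongest Harris corners (row, col), kept away from the image border."""
    gy, gx = np.gradient(img)
    sxx = box_sum(gx * gx, 2)
    syy = box_sum(gy * gy, 2)
    sxy = box_sum(gx * gy, 2)
    resp = sxx * syy - sxy ** 2 - k * (sxx + syy) ** 2   # det - k * trace^2
    peaks = (resp == local_max(resp, 3)) & (resp > 0)    # non-maximum suppression
    peaks[:margin, :] = False
    peaks[-margin:, :] = False
    peaks[:, :margin] = False
    peaks[:, -margin:] = False
    ys, xs = np.nonzero(peaks)
    best = np.argsort(resp[ys, xs])[::-1][:n]
    return np.stack([ys[best], xs[best]], axis=1)


# Hypothetical BRIEF test pattern: 256 random point pairs in a 17 x 17 patch.
PAIRS = np.random.default_rng(42).integers(-8, 9, size=(256, 4))


def brief(img, pts):
    """256-bit BRIEF descriptors: intensity comparisons on a smoothed image."""
    smooth = box_sum(img, 1) / 9.0
    y, x = pts[:, :1], pts[:, 1:]
    a = smooth[y + PAIRS[:, 0], x + PAIRS[:, 1]]
    b = smooth[y + PAIRS[:, 2], x + PAIRS[:, 3]]
    return a < b


def mutual_matches(d1, d2):
    """Cross-checked nearest neighbours under Hamming distance."""
    dist = (d1[:, None, :] != d2[None, :, :]).sum(axis=2)
    j = dist.argmin(axis=1)
    keep = dist.argmin(axis=0)[j] == np.arange(len(d1))
    return np.nonzero(keep)[0], j[keep]


def ransac_translation(p1, p2, iters=200, tol=1.0, seed=0):
    """RANSAC with a one-point translation model; returns (dy, dx) and inliers."""
    rng = np.random.default_rng(seed)
    disp = (p2 - p1).astype(float)
    best = np.zeros(len(disp), dtype=bool)
    for _ in range(iters):
        cand = disp[rng.integers(len(disp))]
        inliers = np.linalg.norm(disp - cand, axis=1) <= tol
        if inliers.sum() > best.sum():
            best = inliers
    return disp[best].mean(axis=0), best


# Synthetic belt texture; frame 2 shows the same surface moved 9 px along +x.
rng = np.random.default_rng(3)
texture = box_sum(rng.random((140, 140)), 2)
f1 = texture[20:116, 20:116]
f2 = texture[20:116, 11:107]
k1, k2 = harris_keypoints(f1), harris_keypoints(f2)
i, j = mutual_matches(brief(f1, k1), brief(f2, k2))
shift, inliers = ransac_translation(k1[i], k2[j])   # expected close to (0, 9)
```

With real images, OpenCV offers equivalent building blocks (`cv2.cornerHarris`, and BRIEF in the contrib module as `cv2.xfeatures2d.BriefDescriptorExtractor_create`); the one-point translation model keeps RANSAC cheap, but a belt filmed at an angle may need a homography model instead.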

Bayesian decision fusion

Because belt conveyors operate in harsh environments, weights are assigned according to the brightness and contrast of the captured images, so that the belt speed can be detected under varying illumination and interference. In this paper, a Bayesian algorithm performs decision fusion of the optical flow method and the feature matching algorithm to obtain the belt speed.

A Bayesian weight calculation function is designed from image characteristics such as brightness and contrast, which are combined linearly28:

\[ \omega = aL + bC \]

where \(\omega\) is the weight coefficient of a method before normalization, a and b are adjustment parameters tuned to the actual field conditions, L is the brightness and C is the contrast.

To satisfy the constraint that the weight coefficients sum to 1, they are normalized:

\[ \omega_{\text{optical}} = \frac{\omega_{\text{optical,raw}}}{\omega_{\text{optical,raw}} + \omega_{\text{feature,raw}}}, \qquad \omega_{\text{feature}} = \frac{\omega_{\text{feature,raw}}}{\omega_{\text{optical,raw}} + \omega_{\text{feature,raw}}} \]

where \(\omega_{\text{optical}}\) is the weight coefficient of the optical flow method, \(\omega_{\text{feature}}\) is the weight coefficient of the feature matching algorithm, and \(\omega_{\text{optical,raw}}\) and \(\omega_{\text{feature,raw}}\) are the corresponding weight coefficients before normalization.

According to the Bayesian algorithm, the fused belt speed estimate can be expressed as

\[ V_{\text{fusion}} = \omega_{\text{optical}} \times V_{\text{optical}} + \omega_{\text{feature}} \times V_{\text{feature}} \]

where \(\omega_{\text{optical}}\) and \(\omega_{\text{feature}}\) are the weight coefficients calculated from the image features, and \(V_{\text{optical}}\) and \(V_{\text{feature}}\) are the belt speed estimates obtained by the optical flow method and the feature matching algorithm, respectively.
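The weighting and fusion steps can be sketched as follows, assuming brightness L is the mean gray level and contrast C the standard deviation (RMS contrast), both scaled to [0, 1]. The separate (a, b) pairs for the two methods and their values are hypothetical placeholders for the field-tuned parameters; here the optical flow weight is driven by brightness and the feature matching weight by contrast, so low-texture frames lean on optical flow.

```python
import numpy as np


def brightness_contrast(gray):
    """Mean gray level (L) and RMS contrast (C) of an 8-bit image, in [0, 1]."""
    g = np.asarray(gray, dtype=float) / 255.0
    return g.mean(), g.std()


def fused_speed(v_optical, v_feature, gray,
                ab_optical=(0.5, 0.0), ab_feature=(0.0, 2.0), eps=1e-6):
    """Raw weights w = a*L + b*C per method, normalized to sum 1, then fused."""
    L, C = brightness_contrast(gray)
    w_opt_raw = max(ab_optical[0] * L + ab_optical[1] * C, eps)   # keep weights > 0
    w_feat_raw = max(ab_feature[0] * L + ab_feature[1] * C, eps)
    total = w_opt_raw + w_feat_raw
    w_opt, w_feat = w_opt_raw / total, w_feat_raw / total
    return w_opt * v_optical + w_feat * v_feature, w_opt, w_feat


rng = np.random.default_rng(0)
frame = rng.integers(0, 256, size=(720, 1280))      # stand-in gray frame
# Single-method values reported for the 4 m/s test (RAFT-SEnet, Harris-BRIEF-RANSAC).
v, w_opt, w_feat = fused_speed(4.21, 4.22, frame)
```

Because the normalized weights sum to 1, the fused value always lies between the two single-method estimates; the fusion can therefore only reduce error relative to the worse of the two, and its benefit depends on how well the weights track which method is more reliable in a given frame.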

Experiment and result analysis

Dataset

The experimental dataset consists of two types of scenarios: laboratory scenarios and on-site coal mine scenarios. Each scenario includes a total of 100 video sequences under two lighting conditions (strong light and weak light). Each video contains 1000–2000 frames of image data with a resolution of 1920 × 1080 and a frame rate of 25 fps.

(1) Laboratory scenarios: Illumination is set to 50 lx and 1000 lx. The conveyor belt speeds are configured as 0.5 m/s, 1 m/s, 1.5 m/s, 2 m/s, 3 m/s, 3.5 m/s and 4.5 m/s (seven speed levels), with 30 videos for each level, amounting to a total of 10,000 frames.

(2) On-site coal mine scenarios: Illumination ranges from 10 lx to 500 lx, and the conveyor belt speeds range from 1.0 m/s to 5 m/s. This subset includes 40 video sequences with dust interference, totaling 5,000 frames.

RAFT-SEnet model training

To collect images of the conveyor belt under working conditions, an image acquisition and storage device was designed and installed at the work sites of several belt conveyors. To obtain a large amount of belt image data from real scenes, pixel displacements were labeled on the collected images to build a conveyor belt speed detection dataset for training RAFT-SEnet.

Training was performed on Windows 10 with an RTX 3090 GPU and an i9-14900K CPU. The Adam optimizer was used with an initial learning rate of 0.0001, a weight decay of 1e-4, a momentum (β1) of 0.9, a batch size of 4 and 500 training epochs; the photometric loss weight was 1.0 and the smoothness loss weight was 0.01.
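The photometric and smoothness weights (1.0 and 0.01) suggest an objective of the usual unsupervised optical flow form. A minimal NumPy sketch, assuming an L1 photometric error between frame 1 and frame 2 warped back by the predicted flow, plus a first-order L1 smoothness penalty on the flow; the paper does not specify its exact loss terms or how they are combined with the labeled pixel displacements, so this is illustrative only.

```python
import numpy as np


def warp_bilinear(img, flow):
    """Sample img at (x + u, y + v) with bilinear interpolation, borders clamped."""
    h, w = img.shape
    y, x = np.mgrid[0:h, 0:w].astype(float)
    xs = np.clip(x + flow[..., 0], 0, w - 1)
    ys = np.clip(y + flow[..., 1], 0, h - 1)
    x0, y0 = np.floor(xs).astype(int), np.floor(ys).astype(int)
    x1, y1 = np.minimum(x0 + 1, w - 1), np.minimum(y0 + 1, h - 1)
    ax, ay = xs - x0, ys - y0
    top = img[y0, x0] * (1 - ax) + img[y0, x1] * ax
    bottom = img[y1, x0] * (1 - ax) + img[y1, x1] * ax
    return top * (1 - ay) + bottom * ay


def flow_loss(f1, f2, flow, w_photo=1.0, w_smooth=0.01):
    """w_photo * mean|f1 - warp(f2, flow)| + w_smooth * mean|flow gradient|."""
    photometric = np.abs(f1 - warp_bilinear(f2, flow)).mean()
    smoothness = (np.abs(np.diff(flow, axis=0)).mean()
                  + np.abs(np.diff(flow, axis=1)).mean())
    return w_photo * photometric + w_smooth * smoothness


rng = np.random.default_rng(0)
f1 = rng.random((48, 64))
f2 = np.roll(f1, 3, axis=1)                  # belt content moves 3 px along x
true_flow = np.zeros((48, 64, 2))
true_flow[..., 0] = 3.0                      # (u, v) = (3, 0) everywhere
loss_true = flow_loss(f1, f2, true_flow)
loss_zero = flow_loss(f1, f2, np.zeros_like(true_flow))
```

The small smoothness weight lets the photometric term dominate while discouraging noisy flow fields; for a belt that moves almost rigidly, the correct flow is nearly constant, so the smoothness term costs little.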

Optical flow method test: Video sequences were recorded across various belt conveyor operating conditions for speed detection experiments. Acquisition conditions for the images in Figs. 5 and 6: main conveyor belt in a coal washing plant, with an illumination of 120 lx; the camera was positioned 3 m away from the conveyor belt, and the image resolution was 1280 × 720. Figure 5 presents a representative coal transportation scene captured by an industrial camera, while Fig. 6 shows the corresponding velocity field estimated by RAFT-SEnet. In order to more intuitively reflect the effectiveness and generalization ability of the optical flow detection method, the optical flow image is visualized. Figures 7 and 8 show the image and optical flow diagram of the camera facing the conveyor belt and the camera deviating from the middle line of the conveyor belt, respectively.

To quantitatively validate the advantages of the proposed RAFT-SEnet model, three representative optical flow algorithms, namely TV-L116, FlowNet218 and the original RAFT, were selected for benchmarking. TV-L1 was run with the default parameters recommended by OpenCV 4.3, whereas FlowNet2 and RAFT were fine-tuned on the same training set used for RAFT-SEnet. All tests were conducted on a belt conveyor running at a ground-truth speed of 4 m/s, and the resulting velocity estimates are shown in Fig. 9.

Figure 9 presents the estimated belt speeds obtained by TV-L1, FlowNet2, RAFT, and the proposed RAFT-SEnet at a ground-truth belt velocity of 4 m/s. The corresponding estimates are 3.62 m/s, 3.73 m/s, 3.74 m/s, and 4.21 m/s, respectively. To quantitatively evaluate the accuracy and stability of the speed measurement methods, three evaluation metrics are selected: mean absolute error (MAE), root mean square error (RMSE), and error percentage.

(1) MAE.

It is defined as the arithmetic mean of the absolute differences between the estimated and true speeds over all test samples:

\[ \mathrm{MAE} = \frac{1}{N}\sum_{i=1}^{N} \left| V_{\text{est},i} - V_{\text{true},i} \right| \]

where N is the number of test samples, \(V_{\text{est},i}\) is the estimated speed of the i-th sample, and \(V_{\text{true},i}\) is the true speed of the i-th sample. This metric directly reflects the overall error level of a speed measurement method. Because it is less sensitive to outliers than RMSE, it characterizes the average accuracy of the method under normal operating conditions.

(2) RMSE.

It is defined as the square root of the arithmetic mean of the squared differences between the estimated and true speeds over all test samples:

\[ \mathrm{RMSE} = \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( V_{\text{est},i} - V_{\text{true},i} \right)^2} \]

Squaring amplifies the weight of larger errors, making this metric more sensitive to abnormal errors under extreme conditions, so it can be used to evaluate the error dispersion and robustness of a method. A smaller RMSE indicates more consistent speed measurements and less fluctuation caused by environmental interference. The comparison results are shown in Table 1. Under the experimental conditions, the proposed RAFT-SEnet achieves a relative speed error of 5.25%. The MAE and RMSE of each algorithm are summarized in Figure 10, which shows the better stability and accuracy of the RAFT-SEnet model compared with the benchmark optical flow algorithms.
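The metrics above can be computed directly from paired estimates and ground truth. A minimal NumPy sketch; the "error percentage" is assumed here to be the signed relative error in percent, consistent with the signed deviations reported later, since the text does not define it explicitly.

```python
import numpy as np


def mae(v_est, v_true):
    """Mean absolute error between estimated and true speeds."""
    v_est, v_true = np.asarray(v_est, float), np.asarray(v_true, float)
    return np.mean(np.abs(v_est - v_true))


def rmse(v_est, v_true):
    """Root mean square error between estimated and true speeds."""
    v_est, v_true = np.asarray(v_est, float), np.asarray(v_true, float)
    return np.sqrt(np.mean((v_est - v_true) ** 2))


def error_percent(v_est, v_true):
    """Signed relative error in percent (assumed definition)."""
    return (np.asarray(v_est, float) - v_true) / v_true * 100.0


# Single estimates reported for the 4 m/s test: TV-L1, FlowNet2, RAFT, RAFT-SEnet.
estimates = np.array([3.62, 3.73, 3.74, 4.21])
rel = error_percent(estimates, 4.0)   # last entry ~5.25, the figure quoted for RAFT-SEnet
```

With a single estimate per method, MAE reduces to the absolute error; over many frames, comparing MAE with RMSE shows whether a method's error comes from occasional large outliers (RMSE much larger than MAE) or from a consistent bias.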

Feature-Matching Algorithm Experiment: To evaluate the proposed Harris-BRIEF-RANSAC pipeline, three classical feature extractors—SIFT, FAST, and ORB—were employed for comparison. Tests were again performed on a 4 m/s belt conveyor; the detected speeds are illustrated in Fig. 11.

Figure 11 compares the results of SIFT, FAST, ORB, and the proposed Harris-BRIEF-RANSAC pipeline under the same 4 m/s belt speed. The estimated values are 4.41 m/s, 3.66 m/s, 4.27 m/s, and 4.22 m/s. The comparison results are shown in Table 2. The proposed Harris-BRIEF-RANSAC algorithm yields the best accuracy with a relative error of 5.5%, and the comparison of MAE values and error percentages across all four feature extraction algorithms is visualized in Figure 12, further confirming the effectiveness of the proposed method.

Comparative test of conveyor belt speed detection: In order to verify the advantages of this method, video data with different speeds and different illumination conditions are used to verify the method. The conveyor belt speeds in the test video are 0.5 m/s, 1 m/s, 1.5 m/s, 2 m/s, 3 m/s, 3.5 m/s and 4.5 m/s, respectively. In this paper, the fusion algorithm, the RAFT-SEnet optical flow algorithm and the feature matching algorithm are used to detect the belt speed respectively, and the results and errors are recorded. The comparison results are shown in Table 3.

The comparison results of the different algorithms are listed in the table. Under sufficient illumination, the maximum deviation of the optical flow method occurs at the set belt speed of 0.5 m/s and is −8%; the maximum deviation of the feature matching algorithm is −10%, whereas that of the fusion algorithm is only −4%, 6 percentage points smaller than that of the feature matching algorithm. Under insufficient illumination, the maximum deviation of the optical flow method occurs at the set belt speed of 2 m/s and is 19%; the maximum deviation of the feature matching algorithm is 32%, whereas that of the fusion algorithm is only 8%, 24 percentage points smaller. The mean square error, the main indicator of detection stability, is also clearly improved by the fusion algorithm. Overall, the fusion algorithm provides more accurate and stable belt speed detection under both sufficient and insufficient illumination. The distribution of MAE and RMSE for the three methods (RAFT-SEnet, Harris-BRIEF-RANSAC and the proposed fusion algorithm) across different conveyor belt speeds is further illustrated in Figure 13, which verifies the robustness of the fusion strategy in diverse operating scenarios.

The proposed belt speed detection method based on optical flow and feature matching fusion was then tested on the laboratory belt conveyor system. The experimental environment is shown in Fig. 14.

The traditional speed sensor detection results are used as the comparison data, and the experimental results are shown in Fig. 15.

The experiment shows that, across different belt speeds, the maximum deviation of the speed sensor is −7%, while that of the proposed algorithm is −4%. The error of the proposed method is thus comparable to that of the sensor, and both show good stability and reliability. The proposed method can therefore serve as a replacement for the traditional speed sensor in belt speed detection: it enables non-contact measurement of belt speed, avoids the wear that shortens the service life of contact sensors, and overcomes the susceptibility of contact-based speed measurement to the external environment.

Conclusion

In this paper, a conveyor belt speed detection method based on the fusion of optical flow and feature matching is proposed. The belt speed is calculated separately by an improved RAFT optical flow algorithm with an attention mechanism and by the Harris-BRIEF-RANSAC feature matching algorithm, and a Bayesian algorithm fuses the two results. The method addresses the bottlenecks of single-method speed measurement in industrial scenarios, such as low texture and illumination fluctuations. Compared with traditional sensors, non-contact measurement avoids equipment wear and extends the maintenance cycle. The method has been validated on a laboratory conveyor belt system and is expected to transfer to scenarios such as coal mines and ports, providing data support for energy-saving control and early fault warning of conveyor belts. In future work, we will use higher-resolution industrial cameras to obtain clearer images, introduce Transformer-based optical flow models to reduce estimation errors for long-distance belt motion, add a dark-channel dehazing preprocessing module for underground coal mine environments to further reduce environmental interference, and make the model lightweight to strengthen its engineering applicability.

Data availability

The datasets used and analysed during the current study are available from the corresponding author on reasonable request.

References

Xu, R. Zhangqi. Research on the Speed Measurement Method of Incremental Optical Encoder Based on Kalman Filter (University of Science and Technology of China, 2018).

Wang, H. Design of the speed regulation system of belt conveyor based on fuzzy PID theory. Coal Mine Mach. 40 (9), 14–16 (2019).

Huang, X., Zhang, X., Song, Y. & Qi, Y. Research on the detection of belt breakage of belt conveyor based on optical encoder. Dev. Innov. Mach. Electr. Prod. 2008 (2), 113–114 (2008).

Meng, F. Research on the Speed Regulation and Efficiency Improvement of Belt Conveyor Based on Image Processing (China University of Mining and Technology, 2020).

Calonder, M. et al. BRIEF: Binary robust independent elementary features. In: Proceedings of the European Conference on Computer Vision (ECCV). 778–792 (2010).

Lowe, D. G. Distinctive image features from scale-invariant keypoints. Int. J. Comput. Vision. 60 (2), 91–110 (2004).

Rublee, E. et al. ORB: An efficient alternative to SIFT or SURF. In: Proceedings of the IEEE International Conference on Computer Vision (ICCV). 2564–2571 (2011).

Gauglitz, S., Höllerer, T. & Turk, M. Evaluation of interest point detectors and feature descriptors for visual tracking. Int. J. Comput. Vision. 94 (3), 335–360 (2011).

Ye, Y. et al. Robust optical-to-SAR image matching based on shape properties. IEEE Geosci. Remote Sens. Lett. 14 (4), 564–568 (2017).

Jin, S., Hu, Y. & Tian, M. Vehicle-mounted speed measurement method and experiment based on sequence images. J. Hefei Univ. Technol. (Natural Science) 36 (3), 21–24 (2013).

Liu, L. & Miao, C. Research on the belt speed detection method of belt conveyor based on machine vision. Instrument Technique Sens. 2020 (7), 118–121 (2020).

Zhang, S. Research on the energy-saving optimization method of belt conveyor. Dissertation (Northeastern University, 2012).

Jin, S., Hu, Y. & Tian, M. Vehicle-mounted speed measurement method and experiment based on sequence images. J. Hefei Univ. Technol. (Natural Science) 36 (3), 21–24 (2013).

Liu, L. & Miao, C. Research on the belt speed detection method of belt conveyor based on machine vision. Instrument Technique Sens. 2020 (7), 118–121 (2020).

Cui, Y. et al. Visual optical flow computation technology and its applications. J. Electron. Inform. Technol. 45 (8), 2710–2721 (2023).

Ilg, E. et al. FlowNet 2.0: Evolution of optical flow estimation with deep networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR). arXiv:1612.01925v1 (2017).

Xu, H., Zhang, J., Cai, J., Rezatofighi, H. & Tao, D. GMFlow: Learning optical flow via global matching. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). (2022).

Ranjan, A. & Black, M. J. Optical flow transformer. IEEE Trans. Pattern Anal. Mach. Intell. 46 (12), 11491–11506 (2023).

Teed, Z. & Deng, J. RAFT: Recurrent all-pairs field transforms for optical flow. In: Proceedings of the European Conference on Computer Vision (ECCV). arXiv:2003.12039v3 (2020).

Du, T., Wang, X. & He, J. An optical flow-based velocity detection algorithm for waterway channels under complex lighting conditions. Comput. Eng. 50 (4), 60–67 (2024).

Zhang, L., Li, J. & Wang, H. Belt conveyor speed detection based on improved ORB and optical flow. IEEE Sens. J. 23 (15), 16938–16943 (2022).

Li, Y., Chen, X. & Zhang, Q. Lightweight optical flow model for embedded belt conveyor monitoring. In: Proceedings of the International Conference on Information and Automation (ICIA). (2024).

Wang, Z., Li, C. & Liu, Y. Robust feature matching for low-texture conveyor belt images. J. Sens. 49 (7) (2023).

Zheng, C., Xiang, Z. & Liu, J. Monocular visual odometry by fusing optical flow and feature point matching. J. Zhejiang Univ. (Engineering Science). 48 (2), 279–284 (2014).

Hartley, R. & Zisserman, A. Multiple View Geometry in Computer Vision (Cambridge University Press, 2003).

Chan, K. C., Wang, X., Yu, K., Dong, C. & Loy, C. C. BasicVSR: The search for essential components in video super-resolution and beyond. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 4947–4956 (2021).

Chen, H. et al. Pre-trained image processing transformer. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition. 12299–12310 (2021).

Cho, S. et al. CATs: Cost aggregation transformers for visual correspondence. In: Advances in Neural Information Processing Systems (NeurIPS). arXiv:2106.02520v4 (2021).

Funding

This work was supported in part by the National Natural Science Foundation of China under Grant 62471329 and in part by the Tianjin Science and Technology Program under Grant 23YDTPJC00130.

Author information

Contributions

Conceptualization: D. Miao and Y.M. Wang; Methodology: Y.M. Wang; Software: D. Miao; Validation: D. Miao, Y.M. Bai and J.Y. Wang; Formal analysis: G.L. Fu; Investigation: Y.M. Wang; Resources: Y.M. Wang; Data curation: J.Y. Wang; Writing (original draft preparation): D. Miao, Y.M. Bai and J.Y. Wang; Writing (review and editing): Y.M. Wang; Visualization: Y.M. Wang; Supervision: Y.M. Wang, Y.M. Bai and J.Y. Wang; Project administration: G.L. Fu; Funding acquisition: G.L. Fu.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Miao, D., Wang, Y., Bai, Y. et al. Conveyor belt speed detection via the synergistic fusion of optical flow and feature matching. Sci Rep 16, 908 (2026). https://doi.org/10.1038/s41598-025-30488-2
