Abstract

Amblyopia is the main cause of monocular vision loss in children, and early recognition and treatment are important to prevent it. As public health awareness increases, short-video platforms such as TikTok and Bilibili are increasingly used to disseminate health information. However, because they lack peer review and supervision, these platforms tend to spread incorrect and incomplete health information. The quality of videos on amblyopia has not yet been systematically evaluated. To evaluate it, this cross-sectional study used the Chinese term “amblyopia” as the search keyword to collect videos from TikTok and Bilibili. After applying the exclusion criteria, 185 videos (94 from TikTok, 91 from Bilibili) were analyzed. Data on video length and engagement metrics (likes, collections, comments and shares) were collected. The Global Quality Score (GQS), the modified DISCERN, the Journal of the American Medical Association (JAMA) benchmark criteria and the Video Information and Quality Index (VIQI) were used to evaluate video reliability and quality. Quality and reliability were then compared statistically between the two platforms and among video sources. On TikTok, videos were mainly uploaded by specialists (71.3%), whereas on Bilibili they were mainly uploaded by individual users (45%). TikTok videos scored higher in quality (GQS: 2.862 ± 1.033; modified DISCERN: 2.277 ± 0.8848; VIQI: 10.88 ± 2.531) than Bilibili videos (GQS: 2.242 ± 1.089, p < 0.0001; modified DISCERN: 1.846 ± 0.8154, p = 0.001; VIQI: 6.571 ± 1.910, p < 0.0001). Specialist-uploaded videos performed notably better in quality, with GQS, modified DISCERN, JAMA and VIQI scores of 3 (3–4), 3 (2–3), 3 (2–3) and 11 (9–13), respectively.
On both platforms, amblyopia treatment was the most frequently discussed topic, while prevention was the least discussed. TikTok videos showed significantly higher audience engagement than Bilibili videos. Correlation analysis revealed strong correlations among the interaction metrics, but these metrics had no correlation with GQS, modified DISCERN, JAMA or VIQI scores. On the whole, both user engagement and video quality are higher on TikTok than on Bilibili. However, both platforms fall short in the quality and reliability of videos related to amblyopia. Specialist-uploaded videos are more reliable, possibly because they provide information that is more valuable to the audience. Videos on both platforms pay far more attention to the treatment of amblyopia than to its prevention. The proposed intervention measures include robust platform certification, active involvement of medical specialists in content creation, and enriching the video content.

Introduction

Amblyopia is the most common cause of monocular visual impairment in children and affects 3–5% of the population1,2. It arises when the developing brain favors one eye, usually because that eye delivers a clearer image, while suppressing input from the fellow eye3,4. Typical risk factors include anisometropia, strabismus, or a combination of both, and, less frequently, visual deprivation such as congenital cataract5,6. The period from 7 to 8 years of age is a crucial time for the plasticity of the cerebral cortex. With increasing age, the effectiveness of amblyopia treatment gradually diminishes7. Treatment therefore consists of early refractive correction, occlusion or pharmacological penalization of the better-seeing eye, and, more recently, binocular training or perceptual-learning protocols designed to rebalance cortical activity8. Early identification, intervention, and greater public awareness of amblyopia are thus crucial for improving the health of pediatric patients and societal well-being.

In the current era of rapid data development, the way the public acquires health information has changed9,10. With the widespread application of platforms such as Bilibili and TikTok, people tend to obtain health information either actively or passively through short videos. Although short video platforms can quickly provide an overview of the information, due to their lack of peer review and strict regulatory mechanisms, the quality of many videos varies greatly. This poses a significant risk of misleading the public and having a negative impact on health behaviors11,12. At present, there is still a lack of in-depth research on the quality and credibility of content related to medical topics such as amblyopia.

This study aims to analyze the scientific accuracy, reliability, and potential impact of amblyopia-related short videos on TikTok and Bilibili in order to bridge this research gap. Its overall objective is to promote the development of short-video platforms such as TikTok and Bilibili and to guide the public toward a more comprehensive and responsible understanding of amblyopia, thereby facilitating the acquisition of reliable information about this disease.

Methods

Ethical considerations

This study used publicly available data and did not require ethics committee approval.

Search strategy and data extraction

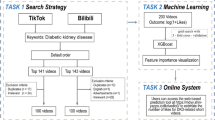

The primary goal of this study was to gather short-video data on the topic of “amblyopia” from Bilibili (https://www.bilibili.com) and TikTok (https://www.douyin.com). First, to ensure that the data were comprehensive and representative, we used the Chinese term “amblyopia” as the search keyword and collected videos published before August 31, 2025. Data collection was carried out separately on each platform. Second, we conducted all searches in private browsing mode with a newly created account, so that no browsing history or personalized recommendation algorithm could bias the search results. Finally, we collected the top 100 videos from each platform in the order in which the platform returned them, without applying a specific sorting method such as number of likes or user preferences, so that the selection would be as random as possible. Videos were included if they directly addressed the epidemiology, etiology, symptoms, diagnosis, treatment or prevention of amblyopia and were publicly accessible (private or restricted content was excluded). Videos were excluded if they (1) were identified as advertisements (n = 8), (2) were irrelevant self-promotion (n = 2) or (3) had been released for less than one week (n = 5). Thus, 185 videos were included in the analysis. During data extraction, we recorded the following for each video: the source platform (Bilibili or TikTok), upload time, video length (in seconds), and the numbers of likes, collections, comments and shares. All data were extracted through the public API provided by each platform to ensure accuracy and consistency.
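The screening arithmetic described above (200 candidate videos, 15 exclusions, 185 included) can be illustrated with a minimal sketch. The `Video` record, its field names, and the synthetic placeholder records are assumptions introduced purely for illustration; the study does not describe its record layout, and the order in which the criteria are checked is also assumed.

```python
from dataclasses import dataclass


@dataclass
class Video:
    # Hypothetical fields; not the authors' actual data structure.
    is_advertisement: bool = False
    is_self_promotion: bool = False
    days_since_release: int = 30


def screen(videos):
    """Apply the three exclusion criteria in order and tally each reason."""
    included = []
    excluded = {"advertisement": 0, "self_promotion": 0, "too_recent": 0}
    for video in videos:
        if video.is_advertisement:
            excluded["advertisement"] += 1
        elif video.is_self_promotion:
            excluded["self_promotion"] += 1
        elif video.days_since_release < 7:  # released less than one week ago
            excluded["too_recent"] += 1
        else:
            included.append(video)
    return included, excluded


def synthetic_candidates():
    """Build 200 placeholder records whose exclusion counts match the text."""
    videos = [Video() for _ in range(200)]  # top 100 from each platform
    for video in videos[:8]:
        video.is_advertisement = True
    for video in videos[8:10]:
        video.is_self_promotion = True
    for video in videos[10:15]:
        video.days_since_release = 3
    return videos


if __name__ == "__main__":
    kept, reasons = screen(synthetic_candidates())
    print(len(kept), reasons)
```

The placeholder records carry no platform label because the text reports the exclusion counts only in total, not per platform.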

Uploader characteristics

The uploaders of the videos were categorized as (1) specialists, (2) individual users and (3) organizations. Specialists, including ophthalmologists and optometrists, were distinguished from individual users, who were mainly patients or relatives of patients.

Video quality assessment

This study employed the modified DISCERN, the GQS, the JAMA benchmark criteria and the VIQI to evaluate the reliability and quality of the collected short videos. The modified DISCERN, derived from DISCERN, is mainly used to evaluate the reliability of videos across five dimensions: (1) Is the video clear, concise, and understandable? (2) Are valid sources cited? (3) Is the content presented balanced and unbiased? (4) Are additional sources of content listed for patient reference? and (5) Are areas of uncertainty mentioned? Each dimension scores 1 point for “yes” and 0 points for “no”, giving a cumulative score of 0 to 5 points (Supplementary Table 1)13. The GQS uses a 5-point Likert scale to evaluate the overall quality of a video, with scores ranging from 1 (poor) to 5 (excellent) (Supplementary Table 2)14. The JAMA benchmark criteria are mainly used to evaluate the credibility of videos on four dimensions: (a) authorship; (b) attribution; (c) currency; and (d) disclosure. Each criterion is scored separately and is worth 1 point, for a total of 4 points (Supplementary Table 3)15,16. The VIQI scale comprises four sub-evaluations: flow of information (fluency in presenting information related to the topic), accuracy of the information, video quality (one point each for the use of images, animation, interviews, video captions, and a summary), and precision (level of coherence between video title and content) (Supplementary Table 4)17,18. Furthermore, the completeness of the videos was assessed based on whether they covered epidemiology, etiology, symptoms, diagnosis, treatment, and prevention. All of the above evaluations were conducted by two assessors with relevant medical backgrounds (WZ and JX), who received unified training before the assessment to ensure consistent evaluation standards.
Inter-rater reliability for the quality and reliability assessments was evaluated with Cohen’s kappa coefficient, with values > 0.8 indicating excellent agreement, 0.6–0.8 substantial agreement, 0.4–0.6 moderate agreement, and < 0.4 poor agreement.
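As an illustration of the agreement measure described above, the following sketch computes unweighted Cohen's kappa for two raters and maps it to the agreement bands given in the text. It is not the authors' implementation: the function names are hypothetical, the choice of the unweighted form is an assumption (the text does not say whether a weighted variant was used for ordinal scores), and the handling of values falling exactly on a band boundary is also assumed.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two equal-length lists of category labels."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("ratings must be non-empty and of equal length")
    n = len(rater_a)
    # Observed agreement: share of items given the same label by both raters.
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement: product of the raters' marginal label proportions.
    count_a, count_b = Counter(rater_a), Counter(rater_b)
    expected = sum(count_a[label] * count_b[label] for label in count_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


def agreement_label(kappa):
    """Map kappa to the bands in the text (boundary handling is an assumption)."""
    if kappa > 0.8:
        return "excellent"
    if kappa >= 0.6:
        return "substantial"
    if kappa >= 0.4:
        return "moderate"
    return "poor"


if __name__ == "__main__":
    # Invented GQS ratings from two hypothetical assessors.
    scores_a = [3, 2, 4, 3, 1, 5, 2, 3]
    scores_b = [3, 2, 4, 2, 1, 5, 2, 3]
    kappa = cohens_kappa(scores_a, scores_b)
    print(round(kappa, 3), agreement_label(kappa))
```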

Statistical analysis

This study employed descriptive, differential and correlation analyses to examine the characteristics and quality indicators of the videos. The Mann-Whitney U test was used to analyze differences between two groups, the Kruskal-Wallis H test was used to analyze differences among multiple groups, and Spearman's rank correlation was used for correlation analysis. Statistical calculations were carried out using IBM SPSS Statistics 27.0, and GraphPad Prism 9.0 was used to generate the charts.
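To make the two rank-based difference tests named above more concrete, here is a minimal pure-Python sketch of their test statistics. The authors ran their analyses in SPSS; this code is only an illustration, the function names are hypothetical, the example scores are invented, and p-values are deliberately not computed.

```python
from collections import Counter


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def mann_whitney_u(x, y):
    """Mann-Whitney U statistic (the smaller of U1 and U2) for two samples."""
    ranks = average_ranks(list(x) + list(y))
    rank_sum_x = sum(ranks[:len(x)])
    u1 = rank_sum_x - len(x) * (len(x) + 1) / 2
    u2 = len(x) * len(y) - u1
    return min(u1, u2)


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H statistic with the standard correction for ties."""
    pooled = [value for group in groups for value in group]
    n = len(pooled)
    ranks = average_ranks(pooled)
    total, start = 0.0, 0
    for group in groups:
        rank_sum = sum(ranks[start:start + len(group)])
        total += rank_sum ** 2 / len(group)
        start += len(group)
    h = 12 / (n * (n + 1)) * total - 3 * (n + 1)
    tie_term = sum(t ** 3 - t for t in Counter(pooled).values())
    correction = 1 - tie_term / (n ** 3 - n)
    if correction == 0:
        raise ValueError("H is undefined when all observations are identical")
    return h / correction


if __name__ == "__main__":
    # Invented quality scores, for illustration only.
    platform_a = [3, 4, 2, 3, 5]
    platform_b = [2, 1, 3, 2, 2]
    print(mann_whitney_u(platform_a, platform_b))
    print(kruskal_wallis_h([3, 4, 3], [2, 1, 2], [2, 2, 1]))
```

In practice a statistics package would also supply the p-value; the sketch stops at the statistic so that every step stays visible.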

Results

Video characteristics

According to the established inclusion and exclusion criteria, we screened the top 100 videos from each of TikTok and Bilibili and obtained a final sample of 185 videos. The detailed screening process is shown in Fig. 1, and Table 1 lists the characteristics of the included videos. By platform, TikTok contributed 94 videos and Bilibili 91. By creator, videos uploaded by specialists accounted for the largest proportion (49.7%), followed by videos from individual users (31.9%) and organizations (18.4%) (Table 1). On TikTok, specialists uploaded the largest share of videos (71.28%), whereas on Bilibili individual users uploaded the largest share (45.05%) (Fig. 2). The median video length was 85 s (50–233.5). The median numbers of likes, collections, comments and shares per video were 84 (15–555.5), 65 (9–323), 5 (0–90) and 39 (6–261), respectively. Overall, the videos attracted an acceptable level of interaction. In terms of video quality, the median GQS score was 3 (2–3), the median modified DISCERN score was 2 (1–3), the median JAMA score was 3 (2–3), and the median VIQI score was 9 (6–11).
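The "median (first quartile–third quartile)" summaries reported above can be produced with a short sketch like the one below. The function names are hypothetical, the input values are invented, and the quartile definition (the `inclusive` method of Python's `statistics.quantiles`) is an assumption; other software, including SPSS, may use a different quartile rule and give slightly different bounds.

```python
import statistics


def median_iqr(values):
    """Return (median, first quartile, third quartile) of a numeric sample."""
    q1, q2, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return q2, q1, q3


def format_median_iqr(values):
    """Format a sample in the style used in the text, e.g. '85 (50–233.5)'."""
    median, q1, q3 = median_iqr(values)
    return f"{median:g} ({q1:g}–{q3:g})"


if __name__ == "__main__":
    # Invented video lengths in seconds, for illustration only.
    lengths = [42, 50, 61, 85, 120, 233, 410]
    print(format_median_iqr(lengths))
```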

Video content

On both platforms, amblyopia treatment was the most frequently discussed topic, covered in 80.8% of TikTok videos and 71.4% of Bilibili videos. Diagnosis and symptoms ranked second and third, respectively, on both platforms. Prevention was the least discussed topic on both platforms, covered in 28.7% of TikTok videos and 14.2% of Bilibili videos (Table 2).

Comparison of characteristics across two platforms

Table 3 provides a detailed comparison of video characteristics between TikTok and Bilibili, and the two platforms differed in many respects. On TikTok, videos were mainly uploaded by specialists (71.3%), whereas on Bilibili they were mainly uploaded by individual users (45%). The median video length on TikTok was much longer than that on Bilibili. TikTok videos also showed significantly higher audience engagement than Bilibili videos: the median numbers of likes, collections, comments, and shares on TikTok were 345.5 (110.3–1274), 175 (51.5–615), 55.5 (12.75–293), and 181.5 (40.5–814.5), respectively, compared with 15 (3–51), 13 (4–85), 0 (0–1), and 10 (2–39) on Bilibili. In terms of video quality, TikTok videos scored higher (GQS: 2.862 ± 1.033; modified DISCERN: 2.277 ± 0.8848; VIQI: 10.88 ± 2.531) than Bilibili videos (GQS: 2.242 ± 1.089, p < 0.0001; modified DISCERN: 1.846 ± 0.8154, p = 0.001; VIQI: 6.571 ± 1.910, p < 0.0001) (Fig. 3).

Comparison of characteristics across different video sources

We also compared the characteristics of the videos based on their sources. Videos uploaded by individual users were significantly longer than those uploaded by specialists and organizations. In terms of popularity, statistically significant differences were observed in the numbers of likes, collections, comments, and shares among videos uploaded by specialists, individual users, and organizations. Specialist-uploaded videos showed significantly higher audience engagement than videos uploaded by individual users or organizations. Significant differences were also found in video quality. Specialist-uploaded videos performed notably better, with GQS, modified DISCERN, JAMA and VIQI scores of 3 (3–4), 3 (2–3), 3 (2–3) and 11 (9–13), respectively (Table 4). Specialist-uploaded videos had significantly higher GQS scores (3.380 ± 0.7823) than organization-uploaded (1.765 ± 0.7410) and individual user-uploaded (1.729 ± 0.6652) videos (p < 0.0001), with no significant difference between organization and individual user videos. The same pattern was observed for the modified DISCERN, JAMA and VIQI scores (Fig. 4).

Correlation analysis between features and quality of videos

Figure 5 presents the correlation analysis between video features and video quality across two platforms. On both platforms, significant positive correlations were found among interaction data (likes, collections, comments, shares). For instance, on TikTok, the correlation coefficients between likes and comments, likes and shares, and likes and collections are 0.90, 0.85, and 0.85, respectively. On Bilibili, the correlation coefficients between shares and collections, shares and comments, and comments and collections are 0.96, 0.86, and 0.80, respectively. However, interaction data had almost no correlation with GQS, modified DISCERN, JAMA and VIQI scores.
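The correlation coefficients reported above are Spearman rank correlations. As a minimal sketch of how such a coefficient is obtained, the code below ranks both variables (averaging ranks for ties) and computes the Pearson correlation of the ranks. The function names are hypothetical and the engagement counts are invented; this is not the authors' SPSS workflow.

```python
import math


def average_ranks(values):
    """Return 1-based ranks; tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = mean_rank
        i = j + 1
    return ranks


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the two rank vectors."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("inputs must have equal length of at least 2")
    rank_x, rank_y = average_ranks(x), average_ranks(y)
    mean_x = sum(rank_x) / len(rank_x)
    mean_y = sum(rank_y) / len(rank_y)
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(rank_x, rank_y))
    var_x = sum((a - mean_x) ** 2 for a in rank_x)
    var_y = sum((b - mean_y) ** 2 for b in rank_y)
    if var_x == 0 or var_y == 0:
        raise ValueError("rho is undefined when one variable is constant")
    return covariance / math.sqrt(var_x * var_y)


if __name__ == "__main__":
    # Invented engagement counts, for illustration only.
    likes = [15, 84, 345, 1274, 40]
    comments = [0, 5, 55, 293, 9]
    print(spearman_rho(likes, comments))
```

Because the coefficient depends only on ranks, heavily skewed counts such as likes and shares do not need to be transformed first.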

Discussion

This study used the modified DISCERN, the GQS, the JAMA benchmark criteria and the VIQI to investigate the quality of videos about amblyopia on the TikTok and Bilibili platforms. Our cross-sectional study shows that for both TikTok and Bilibili, the health-related amblyopia videos uploaded by specialists received higher scores on all four assessment tools. This might be because specialists possess a richer background in medical knowledge and more professional clinical skills, indicating that the professional background of the uploader has a significant impact on the quality and credibility of the video content. This is consistent with some previous research findings, namely that the content provided by specialists is more scientific and standardized19,20,21. Compared with the Bilibili platform, people’s participation and activity levels on the TikTok platform are higher. This might be related to the fact that the videos on the Bilibili platform are longer.

However, the video quality on both platforms is rather mediocre. Most of the content on both platforms is science popularization about amblyopia treatment, which accounts for 80.8% of videos on TikTok and 71.4% on Bilibili. Based on the evaluation data from this study, we conclude that the videos need to make their content more comprehensive. For instance, many videos rarely cite references, which may reduce the credibility of the content. Some videos adopt interactive formats such as question-and-answer sessions, which align closely with the information that patients are seeking. A small number of videos use formats such as animated explanations, which may arouse the audience’s interest and make complex medical knowledge more understandable.

We also found several issues regarding scientific accuracy and misleading content about amblyopia on short-video platforms. For instance, some videos exaggerate the therapeutic effects of amblyopia training, using claims such as “amblyopia rehabilitation”, “vision recovery training” and “the visual degree will only decrease and never increase”, which may cause viewers to give up conventional medical intervention. Furthermore, some of the training methods presented in the videos are overly simplistic, ignoring individual differences, the multifactorial nature of the disease, and the importance of medical intervention. Such content is pushed to patients repeatedly, creating an information bubble around them. Moreover, many videos show children directly taking part in “amblyopia training challenges”, which not only exposes the privacy of minors but also risks encouraging imitation. Such misleading content not only affects the public’s understanding of amblyopia but may also lead to incorrect health decisions and treatment delays. Therefore, it is of utmost importance to ensure that the health information disseminated on short-video platforms is scientifically accurate and based on solid evidence.

In this study, we found that videos on the two platforms pay far more attention to the treatment of amblyopia than to its prevention. In fact, the prevention of amblyopia is as important as its treatment. Research indicates that early screening is crucial for preventing amblyopia, particularly during the critical period of visual development (from 6 months to 6 years of age). Multiple studies have found that early detection and intervention for issues such as refractive errors and strabismus can significantly reduce the incidence of amblyopia1,22.

With the rise of social media, especially the popularity of short-video platforms, the way the public obtains health information has undergone a significant transformation23,24. Platforms like TikTok and Bilibili have become major channels for disseminating health-related content, attracting a large number of users25,26,27. Although the scientific literature on social media is constantly expanding, research on ophthalmic diseases is still relatively limited. The existing research results are consistent with our findings. Cao J et al. analyzed the quality of videos on cataracts on TikTok using the JAMA benchmark criteria, GQS, modified DISCERN score, and PEMAT-A/V. They found that more videos were uploaded by institutions and physicians than by nonphysicians (p < 0.05). Doctors specializing in cataract uploaded videos of higher quality than nondoctors. For comprehensibility, 69% of videos had scores of 67–100%, indicating that the majority of videos are easy to understand. However, 62% of the videos scored 0–33% for operability, indicating more room for improvement28. Wang H et al. analyzed 152 videos (89 from TikTok, 63 from Bilibili) about thyroid eye disease. They found that TikTok videos scored higher in quality (GQS: 3.00 ± 0.58; modified DISCERN: 3.17 ± 0.73) compared to Bilibili (GQS: 2.65 ± 0.65; modified DISCERN: 2.21 ± 0.88; p < 0.001). The analysis revealed significant differences in the two platforms, with TikTok featuring predominantly Western medical specialists (46%) whose video quality scores surpassed those on Bilibili. In contrast, Bilibili exhibited a prevalence of Traditional Chinese Medicine content (62%) with lower reliability and interactive performance scores29.

The main significance of this study lies in providing a comprehensive method for evaluating the quality of health information on short-video platforms. By comparing TikTok and Bilibili, we have revealed how the quality of video content, the source of the videos, and audience interaction affect the dissemination of health information. Furthermore, we also conducted a more comprehensive and objective assessment of the quality of health information videos by integrating the modified DISCERN, the GQS, the JAMA benchmark criteria and the VIQI tools.

Of course, this study also has some limitations. First of all, the video quality rating is highly subjective, which can lead to errors. Moreover, the platform’s algorithm will recommend the retrieved videos based on the different user profiles. This will result in different outcomes for different users when searching for videos related to visual impairment. Secondly, this study only focused on the two major domestic platforms, TikTok and Bilibili, and did not cover the situations of global platforms such as YouTube. Thirdly, because this study only used the Chinese term “amblyopia” as the keyword and only collected the top 100 videos, there may be selection bias. Finally, with the advancement of technology and the popularity of artificial intelligence tools, we should actively learn to utilize advanced technologies to assist in data analysis. This might make our analysis process simpler and the data analysis more objective and reliable. This will be a direction worthy of in-depth exploration in future research.

Conclusions

In this study, the modified DISCERN, the GQS, the JAMA benchmark criteria and the VIQI were used to evaluate the quality of 185 videos related to amblyopia on the TikTok and Bilibili platforms. Overall, we found that both platforms have deficiencies in video quality and reliability. On the whole, the video quality on TikTok is slightly better than that on Bilibili. Videos uploaded by specialists are of higher quality and greater reliability and can provide more valuable health information to the audience. Videos on both platforms pay far more attention to the treatment of amblyopia than to its prevention. Judged by the existing evaluation standards, the quality of these videos has considerable room for improvement. The proposed intervention measures include robust platform certification, active involvement of medical specialists in content creation, and enriching the video content. Maintaining the effective dissemination of public health information requires the joint efforts of medical staff, short-video platforms and patients.

Data availability

All data and materials that support the findings of this study are provided within the supplementary information files.

Abbreviations

- GQS: Global Quality Scale
- JAMA: Journal of the American Medical Association
- VIQI: Video Information and Quality Index
- PEMAT-A/V: Patient Education Materials Assessment Tool for Audio Visual Content
- IQR: Interquartile Range

References

Birch, E. E., Kelly, K. R. & Wang, J. Recent advances in screening and treatment for amblyopia. Ophthalmol. Ther. 10 (4), 815–830 (2021).

Ghasia, F. & Wang, J. Amblyopia and fixation eye movements. J. Neurol. Sci. 441, 120373 (2022).

Cruz, O. A. et al. Amblyopia Preferred Practice Pattern®. Ophthalmology 130 (3), 136–178 (2023).

Kates, M. M. & Beal, C. J. Amblyopia. JAMA 325 (4), 408 (2021).

Mansouri, B., Stacy, R. C., Kruger, J. & Cestari, D. M. Deprivation amblyopia and congenital hereditary cataract. Semin. Ophthalmol. 28 (5–6), 321–326 (2013).

Vaughan, D., Harrell, F. E. & Donahue, S. P. A predictive model for amblyopia risk factor diagnosis after Photoscreening. Ophthalmology 129 (9), 1065–1067 (2022).

Cooke, S. F. & Bear, M. F. How the mechanisms of long-term synaptic potentiation and depression serve experience-dependent plasticity in primary visual cortex. Philos. Trans. R Soc. Lond. B Biol. Sci. 369 (1633), 20130284 (2013).

Tsaousis, K. T., Mousteris, G., Diakonis, V. & Chaloulis, S. Current developments in the management of amblyopia with the use of perceptual learning techniques. Med. (Kaunas). 60 (1), 48 (2023).

Liao, M. Analysis of the causes, psychological mechanisms, and coping strategies of short video addiction in China. Front. Psychol. 15, 1391204 (2024).

Kong, W., Song, S., Zhao, Y. C., Zhu, Q. & Sha, L. TikTok as a health information source: assessment of the quality of information in Diabetes-Related videos. J. Med. Internet Res. 23 (9), e30409 (2021).

Lei, Y., Liao, F., Li, X. & Zhu, Y. Quality and reliability evaluation of pancreatic cancer-related video content on social short video platforms: a cross-sectional study. BMC Public. Health. 25 (1), 1919 (2025).

Zhang, W. et al. Evaluation of the content and quality of schizophrenia on tiktok: a cross-sectional study. Sci. Rep. 14 (1), 26448 (2024).

Kanzow, P. et al. Quality of information regarding repair restorations on dentist websites: systematic search and analysis. J. Med. Internet Res. 22, e17250 (2020).

Zheng, S. et al. Quality and reliability of liver Cancer-Related short Chinese videos on TikTok and bilibili: Cross-Sectional content analysis study. J. Med. Internet Res. 25, e47210 (2023).

Bruce-Brand, R. A., Baker, J. F., Byrne, D. P., Hogan, N. A. & McCarthy, T. Assessment of the quality and content of information on anterior cruciate ligament reconstruction on the internet. Arthroscopy 29 (6), 1095–1100 (2013).

Saleh, D. et al. A systematic evaluation of the Quality, Accuracy, and reliability of internet websites about pulmonary arterial hypertension. Ann. Am. Thorac. Soc. 19 (8), 1404–1413 (2022).

Ekmez, F. & Ekmez, M. Evaluation of the quality and reliability of YouTube videos with Turkish content as an information source for gynecological cancers during the COVID-19 pandemic. Cureus 15 (9), e44581 (2023).

Kaya, H. D., Beyazgül, S. et al. Content analysis and quality evaluation of YouTube videos on Leopold’s Maneuvers: a mixed-method study. Educ. Inf. Technol. 30 (14), 20653–20672 (2025).

Shi, A. et al. Mpox (monkeypox) information on tiktok: analysis of quality and audience engagement. BMJ Glob Health. 8, e011138 (2023).

Matthews, M. R. et al. Oropharyngeal cancer and the HPV vaccine: analysis of social media content. Laryngoscope 135 (8), 2770–2776 (2025).

Song, S. et al. Short-video apps as a health information source for chronic obstructive pulmonary disease: information quality assessment of TikTok videos. J. Med. Internet Res. 23, e28318 (2021).

Zhang, W., Shi, X. F. & Li, X. T. [Further Raising prevention and treatment for amblyopia in China]. Zhonghua Yan Ke Za Zhi. 61 (1), 1–6 (2025). Chinese.

Ma, M. et al. Evaluation of medical information on male sexual dysfunction on Baidu encyclopedia and wikipedia: comparative study. J. Med. Internet Res. 24, e37339 (2022).

Guan, J. L. et al. Videos in Short-Video sharing platforms as sources of information on colorectal polyps: Cross-Sectional content analysis study. J. Med. Internet Res. 26, e51655 (2024).

Zhang, J. et al. Short video platforms as sources of health information about cervical cancer: A content and quality analysis. PLoS One. 19 (3), e0300180 (2024).

Zhu, W. et al. Information quality of videos related to esophageal cancer on tiktok, kwai, and bilibili: a cross-sectional study. BMC Public. Health. 25 (1), 2245 (2025).

Wang, J. et al. Assessing the content and quality of GI bleeding information on Bilibili, TikTok, and youtube: a cross-sectional study. Sci. Rep. 15 (1), 14856 (2025).

Cao, J., Zhang, F., Zhu, Z. & Xiong, W. Quality of cataract-related videos on TikTok and its influencing factors: A cross-sectional study. Digit. Health. 11, 20552076251365086 (2025).

Wang, H., Zhang, H., Cao, J., Zhang, F. & Xiong, W. Quality and content evaluation of thyroid eye disease treatment information on TikTok and bilibili. Sci. Rep. 15 (1), 25134 (2025).

Acknowledgements

The authors would like to express their gratitude to the participants who participated in the study.

Funding

The authors received no financial support for the research, authorship, and/or publication of this article.

Author information

Contributions

LM did conceptualization and writing the original draft. WZ did formal analysis and validation. JX performed data curation and did formal analysis. All authors contributed to the article and approved the submitted version.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical considerations

The data used in this study were sourced from publicly available video content published on platforms such as Bilibili and TikTok. These videos are publicly accessible, and no personal privacy information was involved during the data collection process. All analyzed content was publicly available, and the study did not involve the collection or processing of users’ private information. In accordance with relevant ethical review guidelines, ethical approval for this study was not required.

The authors declared no potential conflicts of interest with respect to the research, authorship, and/or publication of this article.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, M., Wang, Z. & Jiang, X. The quality and reliability of short videos about amblyopia on TikTok and bilibili: cross-sectional study. Sci Rep 16, 1946 (2026). https://doi.org/10.1038/s41598-025-31758-9

DOI: https://doi.org/10.1038/s41598-025-31758-9