Abstract

Lifestyle factors play a major role in atrial fibrillation (AF) incidence, but the effectiveness of lifestyle counseling varies among individuals. Due to limited consultation time, physicians often provide only brief guidance, leaving patients to manage changes on their own. This study assessed the clinical utility of three Large Language Models (LLMs) for delivering accurate and personalized lifestyle guidance: (1) GPT-4o, (2) a retrieval-augmented model using a curated Q&A database (DB GPT), and (3) a modular RAG model retrieving evidence from PubMed (PubMed GPT). Sixty-six questions from 16 AF patients were categorized into exercise, diet, lifestyle, and other domains. Five experienced electrophysiologists independently evaluated LLM-generated lifestyle guidance and physician-provided counseling responses using ten dimensions. GPT-4o demonstrated a comparable level of scientific consensus to electrophysiologists, while achieving a lower error rate and significantly higher levels of specialized content, empathy, and helpfulness. DB GPT and PubMed GPT showed similar error rates, proportions of specialized content, empathy, and helpfulness compared to electrophysiologists, but exhibited strengths in specialized content in exercise-related and accuracy in diet-related dimensions. These findings suggest that integrating complementary model strengths may help develop safer and more reliable medical AI systems.

Similar content being viewed by others

Introduction

Many studies have highlighted the importance of lifestyle changes in preventing atrial fibrillation (AF) from occurring. However, this crucial aspect of care is often overlooked due to the limited time physicians have for each patient during the outpatient clinic1,2.

Since the advancement of generative AI’s conversational capacity lead by ChatGPT-kind of Large Language Models (LLMs), LLMs have gained significant attention in various fields, as well as in the medical field. They demonstrate vast potentials in supporting a myriad of tasks from summarizing medical evidence to assisting diagnosis. For example, AI-based clinical decision support systems (CDSS) have been reported to be useful as diagnostic assistance tools for physicians3,4. In addition, research on lifestyle behavior change mediated by AI systems5 has shown the effectiveness of AI chatbots in facilitating behavioral changes in lifestyle, such as improved eating habits, smoking cessation, and medication adherence6,7,8,9. Thus, generative AI has great potential for application in the medical field, but at the same time, concerns exist regarding the appropriateness, safety, and reliability of responses due to hallucination (generation of false information)10,11,12.

This study aims to assess the appropriateness, safety, and reliability of LLM-generated lifestyle guidance for patients with AF. It will also evaluate the models’ bedside manner, empathy, and adherence to scientific consensus when presenting this guidance, and determine their overall clinical utility.

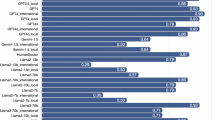

Results

The five evaluators were all experienced physicians, with an average of 17.2 years of clinical practice (range, 14–21 years). All evaluation dimensions were analyzed using a Generalized Linear Mixed Model (GLMM) with post-hoc multiple comparisons (Dunnett’s test, using the electrophysiologist as the control group). As shown in Table 1, Scientific Consensus was observed in 80.0% of responses for the electrophysiologist, 67.0% for DB GPT, 62.0% for PubMed GPT, and 88.0% for GPT-4o. Compared with the electrophysiologist, DB GPT (OR 0.46, 95% CI 0.20–1.05) and PubMed GPT (OR 0.35, 95% CI 0.16–0.81) showed lower odds of achieving consensus, whereas GPT-4o (OR 1.95, 95% CI 0.73–5.21) demonstrated a comparable rate. When compared with the electrophysiologist, the proportions rated as “No/mild” for Extent of Possible Harm were 75.8% for DB GPT, 78.0% for PubMed GPT, and 90.9% for GPT-4o, with no statistically significant differences observed (DB GPT: OR 0.82 [0.37–1.86]; PubMed GPT: OR 0.94 [0.41–2.14]; GPT-4o: OR 2.71 [0.98–7.51]). For Evidence of Incorrect Comprehension, GPT-4o demonstrated a significantly lower error rate compared with the electrophysiologist (OR 3.41, 95% CI 1.40–8.30), whereas DB GPT and PubMed GPT showed comparable performance (OR 1.06 [0.49–2.29] and 0.64 [0.30–1.37], respectively). Regarding Evidence of Incorrect Retrieval, GPT-4o also exhibited a significantly lower error frequency compared with the electrophysiologist (OR 3.46, 95% CI 1.28–9.31), while DB GPT and PubMed GPT showed no significant differences (both OR 0.91 [0.39–2.14]). For Evidence of Incorrect Reasoning, none of the LLMs showed statistically significant differences compared with the electrophysiologist (DB GPT: OR 0.57 [0.23–1.39]; PubMed GPT: OR 0.50 [0.20–1.22]; GPT-4o: OR 1.75 [0.62–4.92]). In Inappropriate Content, PubMed GPT demonstrated a significantly lower rate than the electrophysiologist (OR 0.32, 95% CI 0.10–0.99), while DB GPT and GPT-4o showed comparable rates (OR 0.76 [0.24–2.41] and 1.00 [0.31–3.19], respectively). For Incorrect Content, no significant differences were observed among any of the LLMs compared with the electrophysiologist (DB GPT: OR 0.67 [0.28–1.59]; PubMed GPT: OR 0.67 [0.28–1.59]; GPT-4o: OR 2.51 [0.92–6.91]). In contrast, for Specialized Content, GPT-4o produced a significantly higher proportion of responses containing specialized information than the electrophysiologist (OR 10.80, 95% CI 4.28–17.29), whereas DB GPT and PubMed GPT showed no significant differences (OR 1.45 [0.64–3.28] and 1.69 [0.75–3.79], respectively). As shown in Fig. 1, for Bedside Manner (rated on a 5-point scale, 1 = very empathetic, 5 = not empathetic), GPT-4o demonstrated significantly higher empathy compared with the electrophysiologist (odds ratio = 5.55, 95% CI 2.60–11.82), whereas DB GPT (OR = 0.70, 95% CI 0.34–1.45) and PubMed GPT (OR = 0.65, 95% CI 0.31–1.36) showed comparable levels. For Helpfulness of the Answer (rated on a 4-rank scale, 1 = best, 4 = worst), GPT-4o was rated as more helpful than the electrophysiologist (OR = 6.74, 95% CI 3.05–14.88), while DB GPT (OR = 0.85, 95% CI 0.43–1.68) and PubMed GPT (OR = 0.81, 95% CI 0.41–1.61) did not differ significantly from the electrophysiologist.

The distribution of Bedside Manner (empathy to the user) and Helpfulness across the four groups. Mean empathy scores (1 = highest, 5 = lowest) and distribution of ranking scores (1st–4th) among the four models. Lower ranking values indicate higher accuracy. Bars represent least-squares means and 95% confidence intervals obtained from the Generalized Linear Mixed Model (GLMM) analysis. For binary analysis, empathy scores of 1–2 were coded as “1” and 3–5 as “0”, while ranking scores of 1st–2nd were coded as “1” and 3rd–4th as “0”. p values were adjusted for multiple comparisons using Dunnett’s test with the electrophysiologist as the control.

Subsequently, each behavioral aspect (Exercise, Diet, Lifestyle, and Other) were analyzed separately. In the Exercise category (Table 2), with the exception of the response from PubMed GPT on the “Specialized Content”, there were no significant differences observed between the responses of the three LLMs and those of the electrophysiologist. In the Diet category (Table 3), the three LLMs were evaluated to be comparable to the electrophysiologist across all dimensions. In the General Lifestyle category (Table 4), no significant differences were observed between GPT-4o and the electrophysiologist across all dimensions. The rates of agreement with Scientific Consensus for DB GPT and PubMed GPT were 56.7% and 60.0%, respectively, which were lower figures compared to the electrophysiologist’s 93.3%. The responses from these models exhibited higher rates of Incorrect Retrieval and Reasoning, as well as Inappropriate and Incorrect Content, compared with those of the electrophysiologist. In the Other category (Table 5), the percentage of Specialized Content was significantly higher for GPT-4o at 84% compared to the electrophysiologist at 48.0%. The mean score for Helpfulness of the Answer was 1.20 for GPT-4o and 2.72 for the electrophysiologist, indicating that GPT-4o was significantly more helpful. The mean score for Bedside Manner (empathy to the user) was 1.48 for GPT-4o and 3.12 for the electrophysiologist, indicating that GPT-4o had significantly higher empathy. For all other dimensions, the three LLMs were evaluated to be comparable to the electrophysiologist.

Discussion

The incidence and recurrence of AF are strongly influenced by lifestyle factors, including alcohol consumption, blood pressure control, exercise habits, diet, and obesity. Higher alcohol intake has been linked to increased recurrence after ablation, whereas even modest reductions in consumption can lower recurrence risk13,14. Blood pressure control is also important, as antihypertensive treatment reduces cardiovascular events in AF patients15, and non-dipper hypertension is associated with higher recurrence risk after ablation16. With regard to physical activity, regular aerobic exercise can improve AF-related symptoms, quality of life, and exercise capacity17. Analyses from the TRIM-AF trial indicated that reduced physical activity in patients with CIEDs was associated with increased AF burden18. Similarly, findings from the PREDIMED-Plus trial showed that a weight-loss-focused dietary and lifestyle intervention led to decreases in NT-pro BNP and hs-CRP levels, highlighting the importance of proactive lifestyle interventions19. Additionally, obesity (BMI ≥ 30 kg/m2) and overweight (BMI > 25 kg/m2) are associated with higher recurrence rates after ablation, with each 5 kg/m2 increase in BMI raising recurrence risk by 13%20. In this way, lifestyle modification plays a crucial role in AF management, underscoring the importance of tools to assess and guide such interventions.

In recent years, research has surged in using generative AI, such as ChatGPT, to coach people toward healthier lifestyle habits21,22. However, while they often perform well in offering general lifestyle advice, their accuracy can vary when applied to disease-specific contexts23,24,25. To address this limitation, we developed RAG-AI, which integrates specialized databases, and evaluated its accuracy in generating responses to lifestyle-related questions for AF patients. More recently, the usefulness of combining generative AI with RAG models has been demonstrated in multiple fields. For example, Mashatian et al. reported that a RAG-AI model incorporating GPT-4 and Pinecone achieved 98% accuracy in answering questions on diabetes and diabetic foot care26. Malik et al. also found that GPT-4o-RAG outperformed GPT-3.5 and GPT-4o alone in providing accurate recommendations for anticoagulation management during endoscopic procedures27. Similarly, in the field of nutrition, integrating zero-shot learning with RAG improved accuracy by 6% in extracting information on malnutrition in the elderly28. In the present study, our RAG-AI (DB-GPT model and PubMed GPT model) achieved accuracy comparable to electrophysiologist’s responses, particularly in the “exercise” and “diet” categories. This suggests that the use of specialized databases can provide reliable information and reduce hallucinations. However, the RAG models showed weaker performance than GPT-4o alone in areas requiring comprehensive understanding and reasoning. According to Tang et al., hallucinations in large language models can be broadly classified into misinterpretation errors, fabricated errors, and attribute errors12. In our evaluation, several patterns were consistent with this framework. For example, PubMed GPT occasionally produced answers that deviated from the original intent of the question (see Appendix 1), which corresponds to a misinterpretation error. Similarly, ChatGPT sometimes generated highly empathetic responses even when no direct evidence existed (see Appendix 2), which can be interpreted as an attribute error. These findings highlight differences between models: RAG-AI is designed to avoid hallucinations by restricting answers to cited guideline articles and PubMed abstracts, whereas ChatGPT, by searching the broader Internet, may generate persuasive but less evidence-grounded responses.

To address these issues, we propose enhancing RAG-AI with fact-checking AI. This approach has been shown to be effective in other domains; for instance, incorporating explainable fact-checking AI into x-ray report generation improved accuracy by more than 40%29. Applying similar strategies could enhance the reliability of RAG-AI responses, reduce hallucinations, and broaden its applicability in clinical question answering.

Limitation

The clinical application of this study has several limitations. First, the PubMed GPT processes Japanese questions by translating them into English, extracting keywords, and citing relevant PubMed abstracts. This workflow may alter the intent of the original question, and inadequate keyword extraction can lead to errors in cited information, increasing the risk of LLM-specific hallucinations. Previous studies using Chat GPT reported reference-based accuracy as low as 7% and about 20% for medical questions30,31, suggesting that translation and keyword selection errors could affect results. Second, Chat GPT responses were obtained in Japanese, and LLM accuracy in non-English languages is reported to be about 10% lower than in English31. The RAG-AIs generated responses in English, which were then translated to Japanese via DeepL. Therefore, results might differ if all responses were originally in English. Accuracy could be improved through optimized prompts and multi-step validation. Third, although GPT-4o was used, results may vary with other chatbots or future versions. Reports indicate GPT-4o outperforms GPT-3.527, but overall response accuracy remains suboptimal according to Li et al.’ report32, consistent with our findings. Fourth, 66 questions were collected from AF patients, but they may not cover all potential queries, and editing questions could improve AI accuracy. Fifth, responses were obtained only once per question. While reproducibility may vary, existing research suggests single-response evaluation is sufficient, though future studies should verify consistency to improve reliability. Lastly, all evaluations were performed by electrophysiologists. Although their expertise in specific areas such as exercise and nutrition was limited, they routinely provide lifestyle guidance to AF patients and therefore possess sufficient knowledge regarding lifestyle counseling for AF patients. As such, the variability in evaluation results is expected to be limited. However, expanding the number and diversity of evaluators, along with prompt optimization, will be important for developing more accurate and safer medical AI systems.

In conclusion, original GPT-4o was a balanced model in providing medical information, showing high reasoning ability and empathy. On the other hand, RAG-AI (DB GPT and PubMed GPT) suppressed hallucination and was confirmed to provide highly accurate answers, especially in the areas of exercise and diet. Combining the advanced reasoning ability and empathy of GPT-4o with the hallucination suppression function of DB GPT and PubMed GPT, which utilize RAG, may enable the construction of more accurate and safer medical AI systems. In particular, the use of RAG models would be effective in areas where specialized knowledge is required, and the strengths of GPT-4o would be demonstrated in situations where more flexible and comprehensive information provision is required.

Method

This observational study was conducted at a single institution (Kyoto Prefectural University of Medicine Hospital). The study protocol was approved by the Ethics Committee of the institution (IRB-ERB-C-3102) and carried out in accordance with the principles outlined in the Declaration of Helsinki.

Implementation of the LLM for medical question and answering

When examining the use of LLM for medical question and answering tasks, three different approaches were examined in this study. This includes 1) direct prompting using a state-of-the-art general purpose large language model (GPT-4o model), 2) a retrieval augmented generation (RAG) approach which retrieves external medical knowledge from a database of question and answers curated by the authors (DB-GPT model) and 3) a three-stage modular RAG approach which analyses and summarizes external medical knowledge from relevant academic papers indexed in the PubMed database to provide responses that are better grounded in academic literature (PubMed GPT model).

Baseline model: GPT-4o

The answers in the “GPT-4o” condition were generated using the latest GPT model available at the time of the study, namely the GPT-4o model, developed by OpenAI33. This model has shown strong performance in medical question and answering tasks34, even achieving an overall correct response rate of 93.2% on the 2024 Japanese Medical Licensing Exam35 without prompt engineering. In this study, the model was prompted with a simple instruction, aimed at setting the role of the model as a doctor offering medical advice (e.g. “You are a doctor offering advice. Please answer the following questions”) and was used to provide answers to the medical questions.

RAG based on a curated Q&A knowledge base: (DB-GPT)

While traditional large language models have demonstrated strong performance, they still face critical challenges, particularly in a domain as sensitive as healthcare. A key issue concerns their tendency to hallucinate information, generating responses which at a first glance may seem plausible, but are factually incorrect thus potentially causing harm or undermining trust36. Retrieval Augmented Generation (RAG), refers to a mechanism in which the model enhances its response by retrieving information from an external knowledge base, one which is timely and carefully verified. Such an approach has been shown to produce responses which are less prone to hallucinations36. The model used in the “DB-GPT” condition adopted this approach. More specifically, the model was programmed to retrieve information from a local FAQ-style database to help answer user questions. The database consists of a collection of question–answer pairs that was constructed based on Review article “Lifestyle and Risk Factor Modification for Reduction of Atrial Fibrillation: A Scientific Statement from the American Heart Association”37. To retrieve relevant information, the questions from the database and the user query were vectorized into embeddings using the all-MiniLM-L6-v2 model. The similarity between the user query vector and the vectors of each question was compared using their dot product, and the 6 most relevant question and answer pairs (determined by those which showed the highest degree of similarity to the user query) were selected and then combined into a single prompt for GPT-4. The GPT-4 model was asked to generate a synthesized answer based only on the retrieved text. If the question cannot be addressed by the data, the model was instructed to clearly indicate that it was not able to provide an answer. More specifically, the following prompt was used: (System: “Synthesize a comprehensive answer from the following q&a dataset for the given question. Always use the provided text to support your argument. If the question cannot be answered in the document, say you cannot answer it”) and (assistant: “dataset: {DocumentList}, Question: {question}, Answer:”), with the “DocumentList” representing the relevant Question and Answer pair retrieved from the database and “question” representing the current user question.

RAG based on academic literature knowledge base: PubMed GPT

For the “PubMed GPT” model, we adopted an Advanced/Modular RAG approach. This involves decomposing the RAG system into smaller modules which specialize on specific tasks38,39. Previous studies have shown that such a multi-layer approach could perform particularly well in medical Q&A tasks40. In our case, we adopted a three-layer approach, consisting of a “pre-retrieval”, “post-retrieval” and “generation” process40. When processing Japanese questions, the PubMed GPT first translates them into English, extracts relevant keywords, and cites pertinent PubMed abstracts. In the first “pre-retrieval” stage, a GPT-4 model was used to construct and refine a query (using 10 few-shot examples) to search for relevant literature on the PubMed Database. The query was then used to retrieve the 50 most relevant research papers from the PubMed database through the Entrez API. In the following “post-retrieval stage”, a selection process was carried out, in which another GPT-4 model was used retain only the papers which likely contained answers to the user question based on the title and abstract of the papers. In the final “generation” stage, the original user question, along with the title and abstract of the related papers selected from the second stage were provided as an input to another GPT-4 model which was tasked with using the selected abstracts to generate an answer to that question.

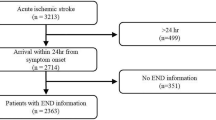

Development of a lifestyle questionnaire

In this study, about 10 lifestyle-related questions were obtained for each of 16 AF patients, for a total of 100 responses. Among the participants, 13 (81.3%) were male, the mean age was 69.4 ± 7.5 years, and 62.5% had paroxysmal AF. Duplicate questions were eliminated from the questions obtained, resulting in a final list of 66 unduplicated questions. These questions were grouped into four categories (exercise, diet, general lifestyle, and other) (see Supplementary Table 1 for details). The 66 questions obtained were then answered using each of the three types of generative AI (original Chat GPT4o, PubMed GPT and DB-GPT). To provide a physician’s response representative of usual clinical care for comparison with the three LLM models, we obtained answers to the same 66 questions from one electrophysiologist (S.S.), who was allowed to use the Internet when formulating responses. This resulted in a total of four responses being generated for each question (Fig. 2).

Evaluation of AI-generated medical responses and an usual clinical care (electrophysiologist’s response)

Five evaluators (T.M, Y.K, T.H, N.N, K.K), who are board-certified electrophysiologists, were sent a Google form with 20 randomly selected questions out of 66 questions and 4 response models to them. The 20 randomly selected questions were chosen with weighting based on the number of questions in each category: 5 from the exercise category, 4 from the diet category, 6 from the general lifestyle category, and 5 from the other category. Evaluation dimensions included Scientific Consensus, Extent of Possible Harm, Evidence of Incorrect Comprehension, Evidence of Incorrect Retrieval, Evidence of Incorrect Reasoning, Inappropriate Content, Incorrect Content, Specialized Content, Bedside Manner and Helpfulness of the Answer41. Of these, Extent of Possible Harm was rated on a 3-point scale of mild, moderate, severe or deadly, while the other measures were rated on a 2-point scale. In addition, for Helpfulness of the Answer, the responses of the four models were ranked, and Bedside Manner was rated on a 5-point scale. For example, For the evaluation of Scientific Consensus, responses from the four models, including three GPT models and one electrophysiologist, S.S., were independently evaluated by five evaluators. Five evaluators assessed all responses in a blinded manner. The source of each response (LLM or electrophysiologist) was not disclosed to the evaluators to minimize potential subjective bias and to ensure a scientifically rigorous and reliable study design. Each evaluator judged whether a response represented content that could achieve scientific consensus. The exercise category included five questions, resulting in twenty-five Yes/No evaluation, the diet category included four questions, resulting in twenty Yes/No evaluation, the lifestyle category included six questions, resulting in thirty Yes/No evaluation, and the other category included five questions, resulting in twenty-five Yes/No evaluation. In total, one hundred Yes/No evaluation were obtained for Scientific Consensus. Other dimensions, such as Incorrect Reasoning and Inappropriate Content, were evaluated in the same way.

Statistical analysis

For each clinical question (Scientific Consensus, Extent of Possible Harm, Evidence of Incorrect Comprehension, Evidence of Incorrect Retrieval, Evidence of Incorrect Reasoning, Inappropriate Content, Incorrect Content, Specialized Content, Bedside manner and Helpfulness of the answer), four sets of responses (three LLM models and one board-certified electrophysiologist) were evaluated by five independent evaluators. All evaluation results were analyzed using a generalized linear mixed model (GLMM). The model included model type (electrophysiologist or LLM models), category of question (i.e. lifestyle, exercise, diet, others), and evaluator as fixed effects, and question ID as a random effect to account for correlations among responses to the same clinical question. For each model, odds ratios and 95% confidence intervals were estimated, and significance levels were adjusted using Dunnett’s test with the electrophysiologist as the reference group. For the GLMM analysis, the ordinal variables were dichotomized as follows; 1) For “Extent of Possible Harm” variable, the “moderate” and “severe/death” categories were combined, 2) For “Bedside Manner” variable, which was evaluated on a 5-point scale (1 = very empathetic, 5 = not empathetic), 1–2 were coded as 1 (high empathy) and 3–5 as 0 (low empathy), 3) For “Helpfulness of the Answer” variable, which was evaluated on a 4-point scale (1 = best, 4 = worst), 1–2 were coded as 1 (highly helpful) and 3–4 as 0 (less helpful). All analyses were performed using Microsoft Excel and JMP statistical software version 18.

Data availability

The data supporting the findings of this study are available upon request from the corresponding author. Due to privacy and ethical concerns, the data are not publicly available.

References

Kolasa, K. M. & Rickett, K. Barriers to providing nutrition counseling cited by physicians: A survey of primary care practitioners. Nutr. Clin. Pract. 25, 502–509 (2010).

Kushner, R. F. Barriers to providing nutrition counseling by physicians: A survey of primary care practitioners. Prev. Med. 24, 546–552 (1995).

Aleksandra, S. et al. Artificial intelligence in optimizing the functioning of emergency departments; a systematic review of current solutions. Arch. Acad. Emerg. Med. 12, e22 (2024).

She, W. J. et al. An explainable AI application (AF’fective) to support monitoring of patients with atrial fibrillation after catheter ablation: Qualitative focus group, Design Session, and Interview Study. JMIR Hum. Factors. 12, e65923 (2025).

Martinengo, L. et al. Conversational agents in health care: Scoping review of their behavior change techniques and underpinning theory. J. Med. Internet Res. 24, e39243 (2022).

Aggarwal, A. et al. Artificial intelligence-based chatbots for promoting health behavioral changes: Systematic review. J. Med. Internet Res. 25, e40789 (2023).

Fitzsimmons-Craft, E. E. et al. Effectiveness of a chatbot for eating disorders prevention: A randomized clinical trial. Int. J. Eat. Disord. 55, 343–353 (2022).

He, L. et al. Effectiveness and acceptability of conversational agents for smoking cessation: A systematic review and meta-analysis. Nicotine Tob. Res. 25, 1241–1250 (2023).

Fadhil, Ahmed. A conversational interface to improve medication adherence: towards AI support in patient’s treatment. Preprint at arXiv:1803.09844 (2018).

Ji, Z. et al. Survey of hallucination in natural language generation. ACM Comput. Surv. 55, 1–38 (2023).

Tam, D. et al. Evaluating the factual consistency of large language models through news summarization. Preprint at arXiv:2211.08412 (2022).

Tang, L. et al. Evaluating large language models on medical evidence summarization. NPJ Digit. Med. 6, 158 (2023).

Grindal, A. W. et al. Alcohol consumption and atrial arrhythmia recurrence after atrial fibrillation ablation: A systematic review and meta-analysis. Can. J. Cardiol. 39, 266–273 (2023).

Takahashi, Y. et al. Alcohol consumption reduction and clinical outcomes of catheter ablation for atrial fibrillation. Circ. Arrhythm. Electrophysiol. 14, e009770 (2021).

Pinho-Gomes, A. C. et al. Blood pressure-lowering treatment for the prevention of cardiovascular events in patients with atrial fibrillation: An individual participant data meta-analysis. PLoS Med. 18, e1003599 (2021).

Watanabe, T. et al. Impact of nocturnal blood pressure fall on long-term outcome for recurrence of atrial fibrillation after catheter ablation. Circulation 148, A15200–A15200 (2023).

Osbak, P. S. et al. A randomized study of the effects of exercise training on patients with atrial fibrillation. Am. Heart J. 162, 1080–1087 (2011).

Kim, H. S. et al. Device-recorded physical activity and atrial fibrillation burden: A natural history experiment from the COVID-19 pandemic in the TRIM-AF clinical trial. Circulation 150, A4146592–A4146592 (2024).

Li, L. et al. Effect of an intensive lifestyle intervention on circulating biomarkers of atrial fibrillation related pathways among adults with metabolic syndrome: Results from a randomized trial. J. Clin. Med. 13, 2132 (2024).

Wong, C. X. et al. Obesity and the risk of incident, post-operative, and post-ablation atrial fibrillation: A meta-analysis of 626,603 individuals in 51 studies. JACC Clin. Electrophysiol. 1, 139–152 (2015).

Ponzo, V. et al. Is ChatGPT an effective tool for providing dietary advice?. Nutrients 16, 469 (2024).

Zaleski, A. L., Berkowsky, R., Craig, K. J. T. & Pescatello, L. S. Comprehensiveness, accuracy, and readability of exercise recommendations provided by an AI-based chatbot: Mixed methods study. JMIR Med. Educ. 10, e51308 (2024).

Yano, Y. et al. Relevance of ChatGPT’s responses to common hypertension-related patient inquiries. Hypertension 81, e1–e4 (2024).

Sarraju, A. et al. Appropriateness of cardiovascular disease prevention recommendations obtained from a popular online chat-based artificial intelligence model. JAMA 329, 842–844 (2023).

Ayers, J. W. et al. Comparing physician and artificial intelligence chatbot responses to patient questions posted to a public social media forum. JAMA Intern. Med. 183, 589–596 (2023).

Mashatian, S. et al. Building trustworthy generative artificial intelligence for diabetes care and limb preservation: A medical knowledge extraction case. J. Diabetes Sci. Technol. 19, 1264–1270 (2024).

Malik, S. et al. Assessing ChatGPT4 with and without retrieval-augmented generation in anticoagulation management for gastrointestinal procedures. Ann. Gastroenterol. 37, 514–526 (2024).

Alkhalaf, M., Yu, P., Yin, M. & Deng, C. Applying generative AI with retrieval augmented generation to summarize and extract key clinical information from electronic health records. J. Biomed. Inform. 156, 104662 (2024).

Mahmood, R. et al. Anatomically-grounded fact checking of automated chest X-ray reports. Preprint at arXiv:2412.02177 (2024).

Soong, D. et al. Improving accuracy of GPT-3/4 results on biomedical data using a retrieval-augmented language model. PLOS Digit. Health. 3, e0000568 (2024).

Alonso, I., Oronoz, M. & Agerri, R. MedExpQA: Multilingual benchmarking of large language models for medical question answering. Artif. Intell. Med. 155, 102938 (2024).

Li, Z. et al. Evaluating performance of large language models for atrial fibrillation management using different prompting strategies and languages. Sci. Rep. 15, 19028 (2025).

Hurst, A. et al. Gpt-4o system card. Preprint at arXiv:2410.21276 (2024).

Liu, C. et al. Custom GPTs enhancing performance and evidence compared with GPT-3.5, GPT-4, and GPT-4o? A study on the emergency medicine specialist examination. Healthcare 12, 1726 (2024).

Miyazaki, Y. et al. Performance of ChatGPT-4o on the Japanese Medical Licensing examination: Evalution of Accuracy in text-only and image-based questions. JMIR Med. Educ. 10, e63129 (2024).

Gao, Y. et al. Retrieval-augmented generation for large language models: A survey. Preprint at arXiv:2312.10997 (2023).

Whelton, P. K. et al. 2020 International Society of Hypertension global hypertension practice guidelines. Circulation 141, e750–e772 (2020).

Gao, Y., Xiong, Y., Wang, M. & Wang, H. Modular rag: Transforming rag systems into lego-like reconfigurable frameworks. Preprint at arXiv:2407.21059 (2024).

Bora, A. & Cuayáhuitl, H. Systematic analysis of retrieval-augmented generation-based LLMs for medical chatbot applications. Mach. Learn. Knowl. Extr. 6, 2355–2374 (2024).

Das, S. et al. Two-layer retrieval-augmented generation framework for low-resource medical question answering using reddit data: Proof-of-concept study. J. Med. Internet Res. 27, e66220 (2025).

Singhal, K. et al. Large language models encode clinical knowledge. Nature 620, 172–180 (2023).

Acknowledgements

We sincerely thank Dr. Satoshi Shimoo, MD, PhD. for his invaluable contributions in providing expert medical answers, which served as a benchmark for our analysis. We also extend our heartfelt appreciation to the five physician evaluators (Dr. Ken Kakita, MD, Dr. Naoto Nishina, MD, Dr. Tetsuro Hamaoka, MD, Dr. Yukinori Kato, MD, Dr. Tomonori Miki, MD, PhD) who carefully assessed the questions and both sets of responses. Their professional judgments were essential for validating the relevance and safety of the information presented. We further express our deep gratitude to Ms. Nakata for her expert statistical advice and analytical support, which greatly contributed to the methodological rigor of this study.

Funding

The study was not supported by any grant.

Author information

Authors and Affiliations

Contributions

KS and MM contributed to the conception and design of the work. WS and PS contributed to the development of the RAG-based LLM in this study. MM contributed to the analysis of data for the work. SM contributed to the interpretation of data for the work. MM drafted the manuscript. The other authors critically revised the manuscript. All gave final approval and agree to be accountable for all aspects of work ensuring integrity and accuracy.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval

The study was approved by the Medical Ethics Review Committee of the Kyoto Prefectural University of Medicine (approval number: ERB-C-3102).

Patient consent

All patients gave informed and written consent.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Makino, M., She, W.J., Siriaraya, P. et al. Evaluating the appropriateness and safety of generative AI in delivering lifestyle guidance for atrial fibrillation patients. Sci Rep 16, 3961 (2026). https://doi.org/10.1038/s41598-025-34079-z

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-34079-z