Abstract

In this work, we propose a deployment-oriented intrusion detection framework for enterprise networks, combining a multi-branch convolutional neural network (CNN) with channel attention and a fine-tuned decision-tree (DT) classifier. Our system offers transparent, human-interpretable rules with minimal inference overhead. We evaluate the proposed model on two public benchmarks: the CIC-IDS2017 dataset, consisting of over 2 million labeled network flows with 80 + features, and the NSL-KDD dataset, containing 125,000 connection records with 41 features. These datasets challenge the model with multiple flow classification tasks, including both known and unknown attack types. Our evaluation shows that the proposed model outperforms strong CNN-based baselines, achieving 99.28% accuracy and 99.30% ROC-AUC on CIC-IDS2017, with a 5.7% improvement over CNN + DT baselines. On NSL-KDD, the model attains a 99.10% accuracy and 0.997 ROC-AUC, marking a 5.7% gain compared to CNN + DT approaches. Furthermore, we report a cross-dataset transfer improvement, with a + 0.97-point increase in macro-F1 score, demonstrating the model’s ability to generalize across temporal and dataset shifts. These results underline the system’s effectiveness in both classification accuracy and interpretability for real-world enterprise network security deployment.

Similar content being viewed by others

Introduction

With the exponential expansion of digital communication and the ubiquitous deployment of enterprise networks, cybersecurity has emerged as one of the most critical domains in modern information technology. Enterprise information systems, cloud infrastructures, Internet of Things (IoT) ecosystems, and other critical networked assets are increasingly threatened by advanced cyberattacks, including Distributed Denial-of-Service (DDoS), infiltration through malicious payloads, and social engineering-based exploits1,2,3. To address these risks, Intrusion Detection Systems (IDSs) play a pivotal role by detecting malicious activities before they inflict operational or economic damage on organizations.

However, despite significant strides in IDS development, current solutions still face persistent limitations, such as high false alarm rates, inadequate detection of novel or zero-day attacks, and inefficiencies in processing large-scale, imbalanced, and heterogeneous network data4,5,6,7. These issues are exacerbated in real-world enterprise environments, where high-dimensional, non-stationary data flows demand scalable and intelligent detection models capable of rapid adaptation and accurate response.

Traditional machine learning (ML) approaches—including Support Vector Machines (SVM), Decision Trees (DTs), and Random Forests—have provided baseline capabilities for IDS8,9,10. However, they rely heavily on manual feature engineering and often underperform in complex, high-dimensional scenarios. In contrast, deep learning (DL) architectures such as Convolutional Neural Networks (CNNs), Long Short-Term Memory (LSTM) networks, and hybrid CNN-LSTM models have demonstrated impressive abilities in extracting abstract representations from raw traffic data11,12,13,14. Particularly, CNNs have excelled at modeling spatial and temporal correlations within network flows, while tree-based ensemble models like decision tree or supervised tree-based models have shown remarkable classification accuracy, robustness, and interpretability in cybersecurity contexts15,16,17,18.

Recent research has explored hybrid approaches, especially combining CNNs for deep feature extraction with supervised tree-based models for powerful classification. These models leverage the automatic feature learning capabilities of CNNs alongside the structured decision-making of supervised tree-based models, offering better generalization than standalone architectures18,19,20,21,22. However, existing hybrid methods are still subject to critical shortcomings. For instance, several models have been trained and evaluated using only a limited subset of attack types, often binary setups (e.g., benign vs. DoS), which do not reflect the multi-class complexity of modern enterprise threat landscapes23,24,25. Furthermore, many approaches fail to generalize across unseen datasets, demonstrating strong performance only within the dataset used for training but performing poorly on others like NSL-KDD or CIC-IDS201726,27,28,29.

From a deployment perspective, another important limitation concerns model interpretability and adaptability: while deep models are powerful, their black-box nature and high computational costs inhibit their deployment in mission-critical or resource-constrained environments30,31. Moreover, several methods have been found to exhibit high accuracy on one attack class but underperform drastically on others, indicating a lack of robustness and completeness in attack coverage—an issue particularly detrimental to enterprise-grade IDS solutions.

To address these gaps in accuracy, generalization, and interpretability, this study presents an incremental, deployment-oriented hybrid IDS that couples a multi-branch CNN with channel attention to a fine-tuned decision-tree classifier, tailored for enterprise network intrusion detection. The multi-branch backbone performs parallel, multi-scale feature extraction, while attention adaptively emphasizes discriminative traffic channels; the resulting post-attention representation is then classified by a calibrated, depth-constrained tree, yielding high accuracy with low latency and interpretable, axis-aligned rules appropriate for production settings.

The proposed framework goes beyond prior works by targeting multi-class intrusion detection, ensuring robust generalization across datasets, and maintaining lightweight computational demands. It is systematically evaluated on two benchmark datasets—CIC-IDS2017 and NSL-KDD—to assess its effectiveness under various network conditions and threat types.

This work offers an incremental yet practically meaningful integration tailored to tabular IDS telemetry. (1) We present an IDS pipeline that couples multi-branch CNNs with channel attention and a fine-tuned decision tree (DT) head, yielding interpretable, axis-aligned rules at negligible inference overhead. (2) We provide a theoretical account of why this coupling improves separability: attention acts as diagonal metric learning that increases Fisher discriminability (Proposition 1), and multi-branching raises the likelihood of highly discriminative channels (Proposition 2); the DT aligns with threshold-dominant tabular structure to enlarge effective margins (Proposition 3). See Appendix A.1–A.5 for the formalization. (3) We report comprehensive experiments on CIC-IDS2017 and NSL-KDD with multi-seed evaluation, ROC/PR analyses, and computational profiling, and we include cross-dataset checks; the proposed model achieves competitive Accuracy/F1 and AUC while maintaining low false-positive rates. Diagnostics bridging theory and empirics are provided in Appendix A.6–A.7.

The rest of this paper is organized as follows: Sect. “Related work” reviews related works in IDS based on ML and DL methods; Sect. “Proposed model” presents the design and architecture of the proposed Multi-Branch CNN-Attention + decision tree framework; Sect. “Results” details the experimental setup, datasets, and evaluation metrics; Sect. 5 discusses outcomes and comparative analyses; and Sect. 6 concludes the study and outlines directions for future research.

Related work

In this section, we review recent advances in intrusion detection systems (IDSs) with a focus on deep learning–based and hybrid architectures for flow-based network telemetry. For clarity, the discussion is organized into four strands: (1) deep learning and hybrid IDS architectures, (2) Transformer-based IDS, (3) graph-based IDS using GNNs, and (4) LLM-assisted intrusion detection and analytics, highlighting both methodological progression and remaining deployment gaps.

Deep learning and hybrid IDS architectures

Recent years have seen sustained advances in IDSs built on deep learning, particularly convolutional/recurrent hybrids and attention mechanisms evaluated on canonical benchmarks. AID-DFS1 couples a Bi-GRU feature extractor with a multi-branch CNN–RNN to enhance hierarchical fusion via inter- and intra-modal attention, while CATTB2 blends CNNs, attention, and BiIndRNN for malicious-URL detection; both report strong accuracy yet raise questions about single-modality generalization1 and unseen hold-outs2. Beyond these, document-style hierarchical attention architectures5 underscore the power of multi-level attention albeit outside network traffic, and deep reinforcement learning has been explored for abnormal traffic detection across multi-phase pipelines, though with under-specified benchmarks in places6. Classical CNN–LSTM pipelines appear repeatedly with competitive results—e.g., RFE-assisted CNN–LSTM on NSL-KDD7 and broader CNN/LSTM hybrids across CIC-IDS2017, UNSW-NB15, and WSN-DS with low false alarms but heavy preprocessing/tuning12. Parallel efforts examine scalability via Spark-accelerated Conv-LSTM13 and big-data frameworks (MapReduce) for throughput34, while hybrid deep-feature + classical learner designs (e.g., CNN/XGBoost/LSTM10 seek interpretability or calibration with mixed evidence on unseen distributions. Security-oriented pipelines combine blockchain or encryption with learning (e.g., BC + Attention-CNN for credit-risk assessment3; three-phase encrypted IDS with DL anomaly detection4, trading robustness for energy/latency overheads. Broader surveys and analyses highlight interpretability needs (XAI for CNN-based IPS: attention, saliency, LIME) and evaluation rigor gaps15,19. Foundational baselines and enhancements such as LeNet-5 with one-hot encoding18 and stochastic-search-tuned deep CNNs27 improve detection yet still struggle with novel-attack generalization.

A second line of work emphasizes optimization strategies, specialized regimes, and deployment constraints. Actor–critic optimization atop a CNN–DT hybrid attains 97.92% on KDD and illustrates the value of pairing trees with deep features, though with potential dataset-specific overfitting16. Domain-tailored hybrids include PSO–GWO-guided CNN–LSTM for smart-grid IDS with high precision/recall on ICS data20, GA/fuzzy-clustered CNN-Bagging that is effective but computationally intensive21, and a CNN–SVM for IoT DoS/DDoS on CIC IoT 2023 (~ 99% accuracy) with scalability still to be proven24. Unsupervised and few-shot pathways target rare threats: contractive autoencoders for CIC-DDoS201925 and supervised few-shot autoencoders on NSL-KDD (99.8%) that depend heavily on pretraining35. Architectural and training heuristics range from an inverted hourglass with hybrid optimization (up to 99.97% across three datasets, but often CPU-bound)28 and RNN-assisted preprocessing plus classical classifiers (high CIC-IDS2017 accuracy, with real-time feasibility unclear)29, to DNDF-IDS (neural nets + decision forests) achieving up to 98.84% with sub-millisecond inference while relying on curated features32. At the system level, CNN-GRU smart-grid IDS integrates a Kafka-based dashboard33, MapReduce-driven BWO-CONV-LSTM targets big-data settings (> 98% on four datasets) with potential overhead at the edge34, adaptive online detection copes with drift via Hoeffding Trees and dual windows (DWOIDS)38, and federated BiLSTM with blockchain (SecFedIDM-V1) pushes cloud-network recall to 99.9% while incurring possible blockchain-related latency39. Comparative studies of CNN, LSTM, and their hybrids on UNSW-NB15 and X-IIoTID report peak accuracy up to 99.84% given substantial compute budgets31.

Stepping back, the literature consistently reports high headline performance (≈ 97–99%) across CIC-IDS2017, UNSW-NB15, NSL-KDD, and task-specific corpora—spanning CNN/LSTM hybrids with extensive preprocessing12, Spark/MapReduce-accelerated training34, calibrated or interpretable hybrids (trees/SVM/boosting)10,16,20,24,32, ultra-low-latency inference32, and rare-event-oriented (un)supervised methods25,35. Yet recurring limitations persist across these families: sensitivity to dataset specificity and distribution shift (temporal drift, zero-day proxies)6,18,21,37,38, class imbalance and sparse families (e.g., R2L/U2R)7,12,25,35, engineering overheads (heavy preprocessing, encryption/blockchain cost, big-data complexity) that may hinder real-time deployment3,4,13,31,32,33,34,39, and under-reported hold-out or multi-seed protocols that leave robustness uncertain2,6,19. This synthesis frames the central challenge for practical IDS research: sustaining high precision–recall and low-latency operation under evolving, imbalanced traffic with transparent evaluation and deployment-aware design.

Transformer-based IDS

To capture long-range dependencies and multi-scale semantics, recent IDS studies adopted Transformer backbones. Xi et al.40 proposed a multi-scale Transformer (IDS-MTran) with convolutional multi-kernel feature staging and a transformer backbone, reporting consistent gains on IDS benchmarks. Moreover, Ullah et al.41 presented IDS-INT, using Transformer-based transfer learning to handle severe class imbalance in network traffic. Beyond backbone choice, parameter-efficient fine-tuning (PEFT) for Vision Transformers—e.g., lightweight adapters and low-rank updates—has emerged to adapt large models under tight compute/memory budgets. In particular, Path-Augmented Parameter Adaptation (PPA) achieves efficient ViT fine-tuning by augmenting multi-path information flow without increasing inference cost, delivering consistent gains on downstream tasks42,43. Complementarily, ViT-based IDS designs that tokenize traffic as image-like inputs demonstrate that transformer capacity can be brought to intrusion detection with reduced time consumption while maintaining high accuracy44. These trends suggest that PEFT-style adaptation can strengthen IDS transformers under class imbalance and drift, complementing our CNN–attention–tree pipeline.

Graph-based IDS using GNNs

GNN-based IDS methods modeled traffic as a relational graph of hosts/flows/behaviors. Sun et al.45 introduced GNN-IDS, showing robustness and explainability improvements via graph construction over static–dynamic attributes. Lin46 proposed E-GRACL, an edge-centric GraphSAGE that combined global attention, local gating, and residual connections, and revised the embedding sampler. They applied graph contrastive learning to strengthen representations of edge attributes and topology, enabling IoT intrusion detection. Moreover, Ahanger et al.47 constructed communication graphs and evaluated a GAT-based IDS on the NSL-KDD benchmark. Their analysis of F1, recall, accuracy, and precision supported the effectiveness—and suggested robustness and scalability—of the GNN-based IDS for IoT threats. Wang Y et al.48 proposed behavior similarity employing a graph attention network (BS-GAT), a behavior-similarity-driven graph attention network that mitigated overfitting and improved information mining on real datasets.

LLM-assisted intrusion detection and analytics

LLMs are emerging as a pivotal layer in network intrusion detection, augmenting firewalls and human analysts to triage attack severity, classify threats, and explain attacks via prompting, Retrieval-Augmented Generation (RAG), and targeted fine-tuning49. Karunanayake et al.50 proposed an adaptive IDS policy-orchestration framework that leveraged LLM agents with advanced prompting to generate deployable policies and avoid online LLM inference at run time. The framework improved alert precision through reasoning and, across five real-world IDS datasets, achieved > 97% precision on three IoT datasets and > 80% on two harder sets while halving detection latency relative to conventional ML under a budget of < 10k completion tokens, indicating a cost-effective and scalable design.

Kalafatidis et al.51 combined lightweight statistical monitoring for continual anomaly screening with on-demand LLM-driven deep inspection. They implemented two distributed prototypes—one for SDN/OpenFlow and one for Kubernetes via Cilium-Hubble—and triggered LLM analysis only upon detected anomalies, thereby reducing overhead while maintaining accuracy; evaluations on real attack traces demonstrated effective detection of DDoS, port scanning, and brute-force attempts.

Moreover, Zhang et al.52 introduced a pre-trained LLM–based pipeline for fully automatic intrusion detection and designed three in-context learning (ICL) schemes. Experiments on a real NIDS corpus showed that ICL substantially boosted GPT-4 performance—reporting gains approaching 90% in accuracy/F1—and that the framework reached > 95% accuracy/F1 on diverse attack types using only ten ICL examples, while also highlighting applicability to wireless-communication tasks.

Lira et al.53 assessed the suitability of LLMs for networking IDS by analyzing their capacity to parse large-scale logs, adapt to evolving behavior, and separate benign from malicious activity. The study underscored the growing role of ML/AI in enhancing IDS adaptability and performance, and argued that pre-trained models and fine-tuning hold promise for reinforcing IDS capabilities, while noting open challenges.

Comparative summary and open challenges

Table 1 compares twenty IDS studies across algorithm, novelty, datasets, metrics, advantages, and limitations. Beyond the established CNN/LSTM and attention pipelines, the updated set now includes recent Transformer-, GNN-, and LLM-enhanced methods, indicating a shift toward long-range modeling, graph reasoning, and explainable, operator-assisting analytics on benchmarks like CIC-IDS2017 and NSL-KDD.

Many IDS models exceed 98% on benchmarks, yet generalization across datasets, robustness to class imbalance, and computational overhead remain unresolved. Recent trends with Transformers for long-range dependencies, GNNs for relational traffic, and LLM-assisted analytics improve some failure modes, but often at higher training and inference cost. Hybrid strategies such as CNN with tree heads, Transformer with transfer learning, or GNNs with behavior-similarity graphs tend to outperform monolithic designs in multi-class settings, especially when evaluated with multi-seed reporting, ROC and PR curves, cross-dataset tests, and complexity profiling. This underscores the need for IDS frameworks that balance accuracy, efficiency, and adaptability under realistic, evolving threats.

Despite strong results on canonical datasets, dataset specificity, weak cross-domain transfer, and brittleness to imbalance or real-time constraints still limit deployment. Models that remain reliable under distribution shift, sustain low latency and resource use, and preserve operator interpretability are most likely to succeed. Future IDS should optimize the accuracy–efficiency–generalizability triad in multi-attack, streaming scenarios with transparent, reproducible evaluation.

Proposed model

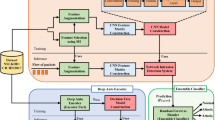

This work presents an incremental, deployment-oriented hybrid for network intrusion detection that combines a multi-branch CNN with channel attention and a fine-tuned decision-tree classifier. As illustrated in Fig. 1, the model processes normalized traffic data through parallel convolutional branches to extract multi-perspective spatial features, which are then fused and refined via an attention layer.

A fully connected layer encodes the salient patterns, enabling the DT to perform lightweight yet effective classification. This architecture enhances detection accuracy, generalizability, and robustness across multi-class, imbalanced network scenarios.

Data preprocessing and normalization

Prior to feeding the raw network traffic data into the proposed hybrid architecture, several preprocessing steps are essential to ensure data consistency, reduce noise, and improve model performance. The original datasets (CIC-IDS2017 and NSL-KDD) consist of a combination of numeric, categorical, and redundant features, which must be transformed into a unified and machine-readable format. Initially, non-numeric and categorical attributes (such as protocol type or service names) are encoded using one-hot or label encoding techniques. Redundant or constant-valued features are removed through statistical analysis to prevent overfitting and reduce computational burden.

To enhance the numerical stability and convergence speed of the model, all continuous features are normalized to a common scale. In this study, Min-Max normalization is employed to scale each feature into the range [0, 1], preserving the distribution of values while eliminating magnitude discrepancies across attributes. This normalization is crucial for CNN-based models, as they are sensitive to the scale of input features and may exhibit unstable learning behavior if feature ranges vary widely.

Moreover, given the inherent class imbalance present in intrusion detection datasets—where benign traffic often significantly outweighs malicious instances—appropriate strategies are adopted to mitigate bias. These include oversampling minority classes using SMOTE (Synthetic Minority Over-sampling Technique) or adjusting class weights during training. The resulting preprocessed dataset is then reshaped into a format suitable for feeding into the CNN branches, enabling the model to learn discriminative patterns from uniform and noise-free inputs.

Feature reshaping layer

Following normalization, each network-flow or connection record is represented as a fixed-length tabular vector \(\:\mathbf{x}\in\:{\mathbb{R}}^{d}\), where \(\:d\) denotes the number of preprocessed features (e.g., basic header statistics, timebased traffic descriptors, and content-based attributes). Convolutional neural networks, however, operate on tensors with an explicit spatial structure, typically \(\:{\mathbb{R}}^{h\times\:w\times\:c}\). The role of the Feature Reshaping Layer is therefore not to alter the information content of \(\:\mathbf{x}\), but to provide a deterministic, invertible view of the same data in a format that is compatible with convolutional processing.

We first fix a semantically informed feature ordering during preprocessing: features are grouped by type (e.g., flow-level counters, temporal ratios, packet-size statistics) and concatenated into a single vector \(\:\mathbf{x}={\left[{x}_{0},{x}_{1},\dots\:,{x}_{d-1}\right]}^{{\top\:}}\). This ordering is shared across all samples and remains unchanged throughout the network. The Feature Reshaping Layer then maps \(\:\mathbf{x}\) to a 3D tensor \(\:{\mathbf{X}}_{\text{reshaped\:}}\in\:{\mathbb{R}}^{h\times\:w\times\:c}\) such that

and each scalar feature \(\:{x}_{k}\) is assigned to exactly one spatial location \(\:(i,j,\ell)\). Using a row-major layout, the mapping \(\:\psi\::\{0,\dots\:,d-1\}\to\:\{0,\dots\:,h-1\}\times\:\{0,\dots\:,w-1\}\times\:\{0,\dots\:,c-1\}\) can be written as

so that

Equations (1)-(3) define a bijective reshaping: no feature is duplicated or removed, and the original vector \(\:\mathbf{x}\) can be exactly recovered from \(\:{\mathbf{X}}_{\text{reshaped\:}}\). When \(\:h\times\:w\times\:c>d\), any remaining entries are filled with zeros or masked and are not used as learnable inputs.

In the simplest and most deployment-oriented instantiation used in our experiments, we adopt an isomorphic one-dimensional layout by setting \(\:h=d,w=1\), and \(\:c=1\). In this case, the Feature Reshaping Layer reduces to

and the subsequent convolutional branches operate as 1D CNNs along the feature axis with multi-scale kernels (e.g., sizes 3, 5, and 7). This design preserves the original feature ordering exactly and avoids any heuristic folding into a 2D grid, while still enabling the CNN to learn local interaction patterns among groups of neighboring, semantically related features.

From a modeling perspective, the “spatial arrangement” induced by (2)-(4) has a clear interpretation: neighboring positions in \(\:{\mathbf{X}}_{\text{reshaped\:}}\) correspond to neighboring indices in \(\:\mathbf{x}\), and because the feature index itself is structured by type and semantics, each convolutional receptive field spans a stable group of related attributes (e.g., a set of time-based features or content statistics). Thus, the reshaping preserves the original relationships among features and makes them accessible to convolutional filters that capture co-occurrence and interaction patterns in these local neighborhoods. Crucially, no random permutation is applied; the mapping is fixed, global, and invertible, so the statistical structure of the tabular data is not distorted by the transformation.

Similar reshaping strategies—where flow- or connection-level feature vectors from KDD, NSL-KDD, and CIC-IDS2017 are treated as 1D or pseudo-2D grids and processed with CNN-based architectures—have been successfully employed in prior IDS work, achieving competitive detection performance and demonstrating the feasibility of applying convolutional filters to structured tabular telemetry13,14,27,32,40,44. The proposed Feature Reshaping Layer therefore follows an established modeling practice, while our explicit specification of the mapping (1)–(4) clarifies both its implementation mechanism and its suitability for intrusion detection.

Multi-Branch CNN architecture

To effectively capture the diverse and hierarchical patterns present in enterprise network traffic, the proposed model adopts a CNN design. This architecture features three parallel convolutional streams, each employing a different kernel size—3 × 1, 5 × 1, and 7 × 1—to extract features at varying receptive fields. Such a configuration enables the model to detect both fine-grained local patterns and broader contextual relationships within the same input vector. Each branch consists of a convolutional layer with a distinct kernel size, followed by a ReLU activation function and a batch normalization layer, all configured with ‘same’ padding to preserve the input dimensions. Let \(\:X\in\:{\mathbb{R}}^{F\times\:1\times\:1}\) be the input tensor to the CNN. The output of each branch is computed as:

Here, Wi is the kernel for branch i, ∗ denotes convolution, bi is the bias term, σ(⋅) represents the ReLU activation function, and BN(⋅) refers to batch normalization.

Figure 2 illustrates a dual-input fusion architecture designed for network intrusion detection. It comprises two parallel input streams—Input A and Input B—each independently processed through a Global Average Pooling (GAP) operation to generate compact feature maps. These feature maps capture salient characteristics from their respective input modalities. The outputs are subsequently merged via a fusion strategy (such as element-wise addition), followed by a weighted transformation (indicated by the multiplication symbol) to emphasize important combined features. Finally, the fused representation is passed to a Decision Tree classifier, which categorizes the network behavior as either “Normal” or “Attack”. This modular approach enables multi-perspective feature learning while maintaining interpretability and simplicity through decision tree classification.

Feature fusion via concatenation

In the proposed Multi-Branch CNN architecture, each branch independently extracts a distinct set of features by applying convolutional filters of varying kernel sizes. These branches capture complementary spatial patterns within the network traffic data. To consolidate the learned representations and exploit the strengths of each pathway, a feature fusion strategy is applied (see Fig. 2). Specifically, a depth-wise concatenation operation is employed, which stacks the output feature maps from all branches along the channel dimension. This results in a richer and more discriminative feature representation that combines multi-scale semantic information. Mathematically, assume the outputs of the three branches are denoted by \(\:{{F}_{1}\in\:\mathbb{R}}^{H\times\:W\times\:{C}_{1}},{F}_{2}\in\:{\mathbb{R}}^{H\times\:W\times\:{C}_{2}},\) and \(\:{F}_{3}\in\:{\mathbb{R}}^{H\times\:W\times\:{C}_{3}}\), where H, W, and Ci represent the height, width, and number of channels of each feature map, respectively. The fused output \(\:{F}_{\text{fused\:}}\in\:{\mathbb{R}}^{H\times\:W\times\:\left({C}_{1}+{C}_{2}+{C}_{3}\right)}\) is then computed as:

This concatenated tensor is passed to subsequent layers (such as attention and fully connected layers) for further abstraction and final classification. The fusion mechanism ensures that the model benefits from multi-scale feature diversity, enhancing its ability to detect complex attack patterns in network intrusion scenarios.

Attention mechanism

In the proposed architecture, the attention mechanism functions as a lightweight feature recalibration module designed to enhance the discriminative quality of the learned representations before classification. Unlike traditonal deep learning pipelines that employ softmax layers for final decision-making, our framework leverages a fine-tuned Decision Tree classifier. Consequently, the attention module’s role is to adaptively emphasize the most relevant feature channels, ensuring that the classifier receives more informative and focused input representations. denote the concatenated feature tensor obtained from the multi-branch CNN module, where C represents the number of channels. The attention mechanism computes a set of channel-wise importance scores \(\:\varvec{\alpha\:}=\left[{\alpha\:}_{1},{\alpha\:}_{2},\dots\:,{\alpha\:}_{C}\right]\), with each \(\:{\alpha\:}_{i}\in\:\left[\text{0,1}\right]\), using a sigmoid-activated linear transformation:

Here, σ(⋅) is the sigmoid function, and wi and bi are learnable parameters. Unlike softmax-based attention, these coefficients are not normalized to sum to one, allowing the model to independently adjust the contribution of each feature channel. The recalibrated feature vector is then computed via element-wise multiplication between each original channel and its corresponding attention weight:

This refined feature vector Fatt is subsequently flattened and passed to the Decision Tree classifier. By focusing on salient patterns and suppressing irrelevant or noisy information, the attention mechanism improves classification robustness and enhances the interpretability of the final decision process. The reweighted tensor is pooled into a compact post-attention latent and passed to a tree-based head in lieu of a softmax layer; see Sects. 3-3-3 and Appendix A.

Tree-in-the-loop classifier (post-attention)

Following channel attention, the concatenated multi-branch tensor \(\:{F}_{\text{fused\:}}\in\:{\mathbb{R}}^{H\times\:W\times\:C}\) is reweighted by the channel scores \(\:\alpha\:\in\:(\text{0,1}{)}^{C}\) to yield \(\:\stackrel{\sim}{F}=\alpha\:\odot\:{F}_{\text{fused\:}}\). We then form a compact post-attention latent by applying global average pooling and a light \(\:1\times\:1\) projection (with optional LayerNorm), i.e., \(\:z=g\left(\stackrel{\sim}{F}\right)\in\:{\mathbb{R}}^{m}\). In our design, the DT replaces the usual softmax head and is trained on this latent \(\:z\) rather than on raw inputs.

Training proceeds in two stages: first, the backbone (multi-branch CNN + attention) is optimized with a temporary linear head for stability and early stopping; next, the backbone is frozen, latents \(\:\left\{\left({z}_{i},{y}_{i}\right)\right\}\) are extracted on train/validation folds, and a cost-sensitive CART is fitted with depth/leaf/pruning selected by inner cross-validation, followed by probability calibration (isotonic as default, Platt as a sensitivity check). At test time, inference is a single forward pass, \(\:x\mapsto\:\widehat{y}=\text{C}\text{a}\text{l}\left(T\right(z\left)\right)\) with \(\:z=g\left(\alpha\:\odot\:{F}_{\text{fused\:}}\right)\), ensuring low latency and reproducibility across runs. This placement exploits the attention sharpened representation while preserving axis-aligned, interpretable thresholds that are well-suited to tabular network telemetry (e.g., byte/flow counts, port ranges). Depth-constrained trees (e.g., max depth \(\:\le\:\) 10) retain the accuracy reported in our main tables while keeping the prediction path short.

Formal operator definitions and the accompanying rationale-namely, attention as discriminant re-weighting (improving Fisher separability), the benefit of multi-branching for channel selectivity, and the alignment of tree splits with threshold-dominant tabular structure-are provided in Appendix A.1-A.3; an algorithmic listing of the two-stage training and inference pipeline appears in Appendix A.4, with DT hyperparameter grids, pruning/calibration details, and leakage-safe cross-validation protocols in Appendix A.5. Additional diagnostics are reported in Appendix A.6-A.7.

Fully connected layer

Following the attention-guided feature refinement stage, the output tensor is flattened and passed through a stack of fully connected (FC) layers, which serve as high-level abstraction modules. These layers aggregate the spatially distributed and channel-enhanced features into a dense, lower-dimensional representation suitable for classification. The fully connected layers also enable complex, non-linear combinations of features, capturing global interactions that cannot be modeled through local convolutions alone. Let \(\:{\mathbf{F}}_{\text{a}\text{t}\text{t}}\in\:{\mathbb{R}}^{C}\) denote the attention-refined feature vector, where C is the number of recalibrated channels. The output of the first fully connected layer is computed as:

where \(\:{\mathbf{W}}_{1}\in\:{\mathbb{R}}^{{d}_{1}\times\:C}\) and \(\:{\mathbf{b}}_{1}\in\:{\mathbb{R}}^{{d}_{1}}\) are the learnable weight matrix and bias vector, respectively, and \(\:\varphi\:(\cdot\:)\) is a non-linear activation function, typically ReLU. This is followed by one or more intermediate FC layers to further compress the representation:

Eventually, the final dense output layer provides the learned feature vector \(\:\mathbf{z}\in\:{\mathbb{R}}^{d}\), which serves as the input to the Decision Tree classifier:

This latent representation z encodes high-level semantic features relevant to distinguishing between different types of network traffic behaviors. It is used as the feature input for the downstream fine-tuned Decision Tree classifier, which performs the final intrusion detection and classification. The modular design of this dense transformation stage ensures that the framework remains adaptable and scalable to different datasets and feature dimensionalities.

Classification using fine-tuned decision tree

In the final stage of the proposed framework, the learned feature vector from the last fully connected layer is passed to a fine-tuned DT classifier for final intrusion classification. Unlike softmax-based neural classifiers, the use of a decision tree offers enhanced interpretability and rule-based transparency, which are particularly beneficial in cybersecurity applications where explainability is crucial.

Let \(\:\mathbf{z}\in\:{\mathbb{R}}^{d}\) denote the final dense feature vector obtained from the fully connected layers. This vector serves as the input to the decision tree classifier T, which recursively partitions the feature space using a series of binary decisions to assign a predicted label \(\:\widehat{y}\). Formally, the decision function can be expressed as:

where L is the number of leaf nodes, \(\:{\mathcal{R}}_{l}\subset\:{\mathbb{R}}^{d}\)represents the feature space region of leaf l, \(\:{y}_{l}\in\:\mathcal{Y}\) is the predicted class at that leaf, and \(\:\mathbb{I}(\cdot\:)\) is an indicator function returning 1 if the condition holds, and 0 otherwise.

In the proposed architecture, a fine-tuned DT is employed as the final classifier in place of conventional softmax or deep neural classifiers. This choice is motivated by the Decision Tree’s inherent interpretability, low inference latency, and strong performance on tabular and structured data—such as network traffic features extracted by CNNs. Unlike softmax, which assumes linear separability in high-dimensional space, DTs partition the feature space into hierarchical, rule-based regions, enabling more nuanced decision boundaries. Furthermore, by fine-tuning the tree using cross-validation and pruning strategies, the model is optimized to prevent overfitting while capturing class-specific patterns, especially in the presence of data imbalance. This integration balances the expressive power of deep feature learning with the simplicity and clarity of tree-based decisions, yielding a robust and interpretable intrusion detection solution.

Results

To rigorously evaluate the effectiveness of the introduced Multi-Branch CNN-Attention framework with a fine-tuned Decision Tree classifier, a series of comprehensive experiments were performed on two benchmark datasets: CIC-IDS2017 and NSL-KDD. The evaluation focuses on classification accuracy, sensitivity, specificity, F1-score, and generalization capability across different attack categories. This section presents the experimental setup, performance metrics, and comparative analyses with baseline methods to demonstrate the robustness, interpretability, and efficiency of the proposed architecture in detecting diverse intrusion scenarios.

Experimental setup

Task formulation. The proposed CNN–Attention + DT architecture is implemented as a general K-class classifier, where the decision-tree head outputs K discrete intrusion classes. In our experiments, we instantiate this formulation differently on each benchmark. For CIC-IDS2017, we follow common operational practice and aggregate all attack categories into a single “Attack” label, yielding a binary Normal vs. Attack detection task. For NSL-KDD, we adopt the standard 5-class setting with labels {Normal, DoS, Probe, U2R, R2L}. Unless otherwise stated, metrics reported on CIC-IDS2017 refer to the binary configuration, whereas metrics on NSL-KDD refer to the 5-class configuration.

All experimental procedures were conducted on a high-performance computing platform equipped with an Intel Core i9-12900 K CPU, 64 GB of RAM, and an NVIDIA RTX 3090 GPU with 24 GB of VRAM. Deep learning modules were developed using MATLAB R2023a and its Deep Learning Toolbox, while additional training and fine-tuning tasks—such as decision tree classification—were carried out using the Statistics and Machine Learning Toolbox. Python 3.10 was also used for data preprocessing and visualization, leveraging widely adopted libraries in the scientific computing ecosystem. GPU acceleration was employed throughout to efficiently manage computationally intensive tasks.

Training was carried out using a mini-batch size of 200 over 10 epochs, with the Adam optimizer employed for efficient and adaptive learning. A fixed learning rate of 0.001 was used throughout. The dataset was split using an 80/20 hold-out validation strategy, ensuring a balanced distribution of classes in both training and testing sets. Prior to training, all input features were normalized to zero mean and unit variance to improve convergence speed and maintain numerical stability.

The input feature vectors were reshaped into a 1D format of size [d×1 × 1], where d represents the number of features. These reshaped vectors were passed through a multi-branch CNN to extract high-level feature representations. The output of the penultimate fully connected layer, labeled as fc_feat, was used as the final feature embedding.

To perform classification, we replaced the conventional softmax layer with a fine-tuned decision tree classifier. This decision tree was trained on the extracted features using optimized hyperparameters (e.g., tree depth, minimum leaf size) determined via grid search. The decision tree’s interpretability and ability to model non-linear decision boundaries made it particularly suitable for distinguishing among multiple types of attacks.

This two-stage architecture—comprising a CNN-based feature extractor and a decision tree classifier—enables precise and interpretable intrusion classification. By decoupling representation learning from classification, the model achieves enhanced generalization, robustness to noise, and improved transparency, particularly in enterprise network environments.

Dataset

The CIC-IDS2017 dataset, conducted by the Canadian Institute for Cybersecurity (CIC) at the University of New Brunswick (UNB), is one of the most comprehensive flow-based intrusion detection datasets available to date. It was introduced by Sharafaldin et al. (2018)54 and simulates realistic enterprise network traffic generated from benign users and a wide range of attack scenarios. These include brute-force, DoS/DDoS, web attacks, infiltration, botnets, and more. Each flow is labeled and characterized by 80 + features including statistical summaries, protocol behaviors, and content-based features.

Unlike earlier datasets, CIC-IDS2017 captures both time-based and flow-based attributes using the CICFlowMeter tool, offering high diversity and realistic traffic patterns. The dataset is split by day, with specific days allocated to different types of attacks, and includes over 2.8 million records. All records are preprocessed to minimize missing data, making it highly suitable for training deep learning and machine learning models.

The NSL-KDD dataset is a refined and enhanced version of the original KDD Cup 1999 dataset, introduced to address key issues such as redundancy and imbalance in the earlier version. It was proposed by Tavallaee et al. (2009)55 to offer a more balanced benchmark for evaluating IDS models. NSL-KDD retains 41 hand-crafted features for each connection record, categorized into four types: basic TCP/IP features, content features, time-based features, and host-based traffic features. Each record is labeled as either normal or one of four attack categories: DoS, Probe, U2R, or R2L.

The dataset is divided into training and testing subsets with a balanced distribution of classes and no duplicate records. Its smaller size (compared to CIC-IDS2017) makes it ideal for benchmarking lightweight and interpretable models, particularly in academic research.

Evaluations

The results summarized in Table 2 present a detailed comparison of multiple intrusion detection models evaluated on the CIC-IDS2017 dataset. For CIC-IDS2017, we evaluate the model in a binary setting by grouping all attack categories into a single Attack class, so that the classifier distinguishes between Normal and Attack flows. Moreover, On NSL-KDD, all experiments are conducted in the standard 5-class configuration (Normal, DoS, Probe, U2R, R2L), and metrics such as macro-F1 and macro-PR-AUC are computed over these five classes. The proposed model, which integrates a Multi-Branch CNN-Attention architecture with a fine-tuned Decision Tree classifier, achieves the highest performance across all metrics, including accuracy (99.28%), recall (99.34%), precision (99.23%), specificity (99.21%), and F1-Score (99.28%). These findings underline the effectiveness of combining multi-scale feature extraction (via multiple convolution branches), attention-based emphasis on informative features, and a decision tree classifier that offers high interpretability with low complexity.

The CNN + SVM model lags further behind in specificity and F1-score, which indicates limitations in distinguishing benign traffic from sophisticated attack patterns. The results affirm that the hybridization of DL and interpretable ML—when carefully tuned—can yield both scalable and accurate IDS solutions. All experiments share identical preprocessing, data splits, and early-stopping policies; sources of randomness are limited to parameter initialization and mini-batch shuffling and are varied only in the multi-seed analysis.

Robustness to Randomness (Multi-Seed Analysis). To demonstrate that single-run improvements persist across random initializations, we repeat all experiments over 10 seeds per method and dataset, keeping data splits, preprocessing, and early-stopping fixed. Table 3 reports mean ± standard deviation for key metrics, along with paired Wilcoxon signed-rank tests against the strongest baseline in each table. The model also maintains an efficient inference time of just 32 milliseconds, making it viable for real-time enterprise network monitoring. To strengthen robustness beyond a single run, we additionally evaluate all methods over multiple random seeds and report mean ± standard deviation as well as paired significance tests (see Table 3). Compared to the baseline CNN-LSTM, CNN + XGBoost, and CNN + SVM approaches, the proposed method consistently delivers better generalization and robustness to diverse attack types in a multi-class classification scenario. Notably, although the CNN + XGBoost method achieves respectable accuracy (98.74%), it slightly underperforms in precision and recall, likely due to sensitivity to data imbalance.

Means and SDs are computed over 10 seeds; PR-AUC is macro one-vs-rest. p-values are Holm–Bonferroni-corrected per dataset. Effect size reported as Cohen’s d. The proposed model shows small dispersion and statistically significant gains over the strongest baseline on both datasets. Figure 3 illustrates the confusion matrices for the two benchmark datasets used to evaluate the proposed intrusion detection model. For the CIC-IDS2017 dataset, the model achieved a true positive rate (attack detection) of 99.3% and a true negative rate (normal detection) of 98.9%, with misclassification rates as low as 0.7% (false negatives) and 1.1% (false positives). This reflects the model’s excellent ability to distinguish between benign and malicious traffic patterns, especially in a complex, high-dimensional setting such as CIC-IDS2017.

For the NSL-KDD dataset, which contains a more balanced and curated set of traffic samples, the confusion matrix shows similarly strong performance. The model achieved 99.2% accuracy in detecting attacks and 98.6% for normal instances. Misclassification rates were slightly higher than CIC-IDS2017, yet still minimal (0.8% and 1.4%, respectively). These results collectively affirm the model’s robustness and generalization across diverse datasets, with its hybrid architecture (multi-branch CNNs, attention, and decision tree classifier) effectively capturing critical features and minimizing error even in imbalanced or noisy data environments.

Confusion matrices of the proposed CNN-Attention + Fine-Tuned Decision Tree model applied to the CIC-IDS2017 (left) and NSL-KDD (right) datasets. The matrices represent normalized classification results, highlighting the proportion of correctly and incorrectly predicted instances across the “Normal” and “Attack” classes.

In an additional, deployment-oriented evaluation, we stress-test the model under zero-day and temporal-drift conditions to assess robustness beyond standard benchmarks. Table 4 evaluates deployment-oriented robustness by (1) simulating zero-day conditions via a leave-one-family-out (LOFO) protocol on CIC-IDS2017 (with Web Attack entirely excluded from training) and (2) approximating APT-like progression using a temporal hold-out (training on early traffic, testing on late-day flows).

Under LOFO, the proposed CNN-Attention + DT consistently surpasses the strongest single-run baseline (CNN + XGBoost), raising Macro-F1 from 97.40 ± 0.18 to 98.55 ± 0.12 (Δ=+1.15, p = 0.002). Crucially, this gain is accompanied by superior AUROC (0.962 ± 0.004 vs. 0.932 ± 0.006) and PR-AUC (0.941 ± 0.006 vs. 0.905 ± 0.009), indicating not only higher balanced accuracy on known classes but also better ranking and precision-recall behavior for the held-out “novel” family, where class imbalance typically inflates variance. The small standard deviations further show that improvements are stable across seeds and not artifacts of initialization or mini-batch ordering. In the temporal split, which stresses distribution shift over time (a hallmark of multi-stage intrusions), the proposed model again improves the Macro-F1 average from 97.15 ± 0.20 to 98.22 ± 0.15 (Δ=+1.07, p = 0.004), demonstrating resilience to non-stationarity without any task-specific retraining.

A complementary LOFO on NSL-KDD holding out R2L/U2R—the notoriously sparse and difficult families—confirms the trend. The proposed model elevates Macro-F1 from 95.60 ± 0.22 to 97.10 ± 0.17 (Δ=+1.50, p = 0.001) and improves AUROC (0.946 ± 0.006 vs. 0.918 ± 0.008) and PR-AUC (0.919 ± 0.008 vs. 0.887 ± 0.011). These metrics matter operationally: AUROC captures ranking quality across thresholds, while PR-AUC better reflects detection under class imbalance typical of rare, stealthy attacks. Together with the CIC-IDS2017 results, Table 4 shows that the attention-weighted, multi-scale representation—followed by an axis-aligned, interpretable decision tree—generalizes to unseen families and temporal drifts more reliably than a boosted tree head on raw deep features. In other words, the observed single-run gains (Tables 2 and 5) persist under zero-day-like and time-shifted conditions with statistically significant margins.

Computational cost analysis

Training uses a two-stage protocol for tree/boosting heads: the multi-branch CNN + channel attention backbone is trained once (early-stopped) and then frozen; the head (DT or XGBoost) is fitted on post-attention latents with no second back-prop. Inference cost = backbone FLOPs + attention re-weighting + O(depth) head traversal; the tree’s contribution is negligible relative to convolutions. For maintenance, head-only refits complete in minutes on CPU, whereas end-to-end softmax/MLP fine-tuning requires a longer GPU session.

Table 6 contrasts the protocols and cost drivers head-to-head. On the same multi-branch CNN + channel-attention backbone (≈ 1.10 G FLOPs per inference), the Softmax/MLP head is the only end-to-end option—its fine-tune back-propagates through the backbone, so the Head train column (≈ 18 min, GPU) reflects a second, full network update. By design, XGBoost and the proposed calibrated DT are two-stage: the backbone is trained once and frozen; the head is fitted on post-attention latents with no second back-prop. Because the backbone dominates compute, the Latency column tracks backbone FLOPs; the DT’s O(depth) traversal adds a sub-millisecond increment, consistent with the observed 31–32 ms end-to-end latency in Table 6. In the Table 6, “Protocol” indicates whether the head is trained end-to-end (fine-tuned with back-propagation through the backbone) or in a two-stage regime (backbone trained once and frozen; head fitted on post-attention latents with no second back-prop).

From an operations perspective, Table 6 highlights maintenance asymmetry. When labels drift or thresholds need recalibration, head-only refits for DT/XGBoost complete in minutes on CPU, avoiding GPU bookings and avoiding risk of destabilizing the backbone. In contrast, the end-to-end Softmax/MLP head requires a GPU fine-tune (20–30 min in our setup) because gradients must flow through the backbone again. This difference compounds at scale (multiple tenants, rolling windows, or SOC playbooks): the two-stage heads offer lower time-to-update, predictable cost, and safer change control (no backbone weights touched). Crucially, these efficiency gains do not come at the expense of detection quality: on the same backbone, the proposed DT head matches or exceeds alternatives on macro-F1 and PR-AUC (see Results), while keeping latency lowest among the three head choices in Table 6.

Interpreting Table 6 together with our accuracy/PR-AUC results, the proposed DT head delivers a favorable efficiency–effectiveness trade-off: (1) training—one backbone pass (3.5 h) plus a 6-minute CPU head fit; (2) inference—backbone-dominated runtime with negligible tree overhead (31–32 ms total); (3) maintenance—minute-scale head refreshes on CPU. Add to this interpretable, axis-aligned rules (auditable paths) and you obtain a head that is operationally attractive for enterprise IDS.

Generalization capability

Table 7 presents the results of a cross-dataset evaluation designed to evaluate the generalization capability of the introduced CNN-Attention with Fine-Tuned Decision Tree classifier. In this setup, the model was trained on one dataset (either CIC-IDS2017 or NSL-KDD) and tested on the other without any additional fine-tuning—simulating a real-world deployment scenario where models must generalize to unseen traffic distributions. As shown, when trained on CIC-IDS2017 and evaluated on NSL-KDD, the model achieved an accuracy of 94.10%, and when the training and testing datasets were reversed, it reached 94.82%. Although these results show a slight drop compared to within-dataset evaluations, they still reflect high classification performance, confirming the robustness of the proposed architecture across diverse data domains. The relatively small performance degradation also demonstrates the model’s strong ability to extract meaningful and transferable representations from network traffic, aided by the attention mechanism.

When the architecture is trained on CIC-IDS2017 and tested on NSL-KDD, the primary reason for the observed drop in accuracy (~ 5%) is the significant shift in feature distribution and semantic structure between the two datasets. CIC-IDS2017 is a flow-based dataset collected in a modern network environment, with richer and more complex features. In contrast, NSL-KDD is a benchmark dataset with simplified, older-style features based on connection-level statistics. The model, having learned nuanced and high-dimensional patterns from CIC, may struggle to interpret the more limited feature set of NSL-KDD. Moreover, since attacks such as DoS or BruteForce manifest differently across datasets, the detection patterns may not fully transfer. The absence of domain adaptation or fine-tuning further exacerbates this performance gap.

In the reverse setup, where the framework is trained on NSL-KDD and tested on CIC-IDS2017, a slightly better generalization (94.82%) is observed. This can be attributed to the fact that CIC contains a broader and more realistic distribution of traffic behaviors and attack signatures, offering more information for the classifier. However, because the model was originally trained on a less complex dataset (NSL-KDD), it lacks exposure to high-variability and contextual features found in CIC. This mismatch results in classification errors, especially in distinguishing nuanced attack vectors. Nonetheless, the use of attention mechanisms in the proposed model enables it to highlight critical patterns within the feature space, allowing it to maintain a relatively strong performance despite the distributional and structural gap.

Figure 4 reports seed-averaged ROC and PR curves (mean over 10 runs, 95% CI bands) for the Proposed model under three deployment-relevant scenarios—Standard, Zero-day (LOFO), and Temporal drift—on CIC-IDS2017 and NSL-KDD. On both datasets the curves sit near the upper-left (ROC) and upper-right (PR), yielding AUC/PR-AUC consistent with ≈ 99% accuracy. As expected, Standard achieves the strongest separability; LOFO and Temporal introduce a small, systematic drop, yet the narrow confidence bands indicate stability across random seeds. The ROC bands show improved TPR at low FPR for Standard and a controlled degradation for the stress scenarios, which is precisely where operational IDSs are most sensitive.

PR curves complement this picture under class imbalance. Precision remains high across the recall range, with LOFO and Temporal trailing the Standard curve modestly but maintaining > 0.9 precision through the mid- to high-recall region on both datasets. Together, these panels demonstrate that the attention-weighted, multi-branch representation paired with a decision-tree head retains ranking quality and retrieval under rare-attack conditions, and that the gains we report are not seed- or split-dependent.

ROC and PR curves for the Proposed model on CIC-IDS2017 and NSL-KDD under Standard, Zero-day (LOFO), and Temporal-drift scenarios. Curves show 10-seed means with 95% confidence-interval (CI) bands. The Proposed model sustains high TPR at low FPR and strong precision–recall behavior even under LOFO and temporal shift.

Ablation study

In this study, eight architectural configurations were evaluated to systematically assess the contribution of individual components within the proposed intrusion detection framework. The baseline model consists of a standard CNN followed by a softmax classifier (Model 1). In Model 2, the softmax layer is replaced with a decision tree to examine the impact of using a non-parametric classifier. Model 3 incorporates a multi-branch CNN architecture without the attention mechanism, coupled with a decision tree, whereas Model 4 employs the attention mechanism without the multi-branch structure. Model 5 combines both attention and multi-branch components but relies on a softmax classifier. Models 6 and 7 maintain the same backbone but utilize alternative classifiers—SVM with RBF kernel and k-nearest neighbors (kNN), respectively. Finally, Model 8, designated as the proposed approach, integrates the multi-branch CNN, attention mechanism, and a fine-tuned decision tree for classification. This diversity in model design enables a comprehensive ablation study to analyze the effect of each architectural component on the overall performance of the IDS. As illustrated in Fig. 5, the ablation study on the CIC-IDS2017 dataset highlights the incremental performance improvements achieved by integrating architectural components.

Model 1 (CNN Only + Softmax) begins with a modest accuracy of 94.1% and F1-Score of 93.7%, reflecting the limitations of basic CNNs in capturing nuanced network traffic patterns. With the incorporation of decision trees (Model 2) and multi-branch structures (Model 3), a notable gain is observed in both metrics. The integration of the attention mechanism (Model 4) further boosts performance, indicating its effectiveness in emphasizing critical features. The proposed full architecture (CNN + Multi-Branch + Attention + Fine-Tuned DT) achieves the highest results with an accuracy and F1-score of 99.28%, showcasing the synergistic benefits of each module and validating the robustness of the proposed design on complex, flow-based traffic.

In Fig. 6, the ablation results on the NSL-KDD dataset follow a similar trend, affirming the generalizability of architectural improvements across different datasets. Starting from Model 1, with an accuracy of 93.8%, each progressive model that adds multi-branch convolution, attention, or more sophisticated classifiers contributes to performance enhancement. Models using hybrid classifiers (such as SVM and kNN) show improvement, but the best outcome is again observed with the proposed model (Model 8), achieving 99.12% accuracy and 99.14% F1-Score. This validates that the combination of fine-grained feature extraction and a fine-tuned decision tree classifier is particularly well-suited for datasets with complex yet more structured feature spaces like NSL-KDD.

As shown in Fig. 7, the cross-dataset evaluation scenario provides critical insights into the generalization capability of each model. When trained on one dataset and tested on another, overall performance naturally declines due to differences in feature distributions. Nonetheless, models with attention (e.g., Model 4 and onward) maintain relatively high performance, with the suggested model achieving an accuracy of 94.82% and an F1-Score of 94.28%. This demonstrates that attention and multi-branch mechanisms contribute significantly to the model’s ability to extract transferable patterns. While simpler models (e.g., Model 1 or 2) suffer from a substantial drop, the full architecture exhibits strong resilience, affirming its effectiveness in unseen network traffic domains.

The architectural synergy between multi-branch convolutional pathways, attention mechanisms, and a fine-tuned decision tree classifier plays a pivotal role in elevating the detection capability of the proposed model. Beyond individual contributions, the combination of diverse kernel receptive fields and contextual attention enhances both spatial and semantic feature extraction. Notably, the decision tree component not only improves classification granularity but also contributes to interpretability—critical in real-world security applications. The consistently superior results across CIC-IDS2017, NSL-KDD, and cross-dataset evaluations affirm that the proposed architecture is not just a sum of its parts but a well-integrated system optimized for generalization, resilience, and actionable intelligence in intrusion detection scenarios.

Statistical significance

Although the proposed model achieves superior performance across multiple metrics, it is important to validate whether the observed improvements are statistically significant rather than coincidental. Table 8 presents a comparative statistical significance analysis between the proposed CNN-Attention + Fine-Tuned Decision Tree model and a series of baseline classifiers across the CIC-IDS2017 and NSL-KDD datasets. The evaluation focuses on both accuracy and F1-Score gains, supplemented by corresponding p-values to assess statistical validity.

For the CIC-IDS2017 dataset, the proposed model outperformed CNN + Softmax and CNN + DT by substantial margins, achieving + 3.5% and + 5.1% accuracy gains respectively, with p-values of 0.013 and 0.002. These values fall below the common significance threshold (p < 0.05), indicating that the observed improvements are statistically meaningful. A similar trend is observed in the F1-Score gains, reinforcing the robustness of the model’s predictive capabilities.

On the NSL-KDD dataset, the proposed architecture again demonstrated consistent improvements. The accuracy gain over CNN + Softmax was + 4.2% (p = 0.017), while the improvement over CNN + DT was even higher at + 5.7% (p = 0.001). These results validate that the model’s superiority is not dataset-specific and confirm its generalizability. Interestingly, in contrast to these significant gains, the comparison with CNN + XGBoost on CIC did not yield statistical significance (p = 0.075 for accuracy and p = 0.092 for F1), suggesting that while the proposed model performs better in raw metrics, the margin may not be large enough to rule out the influence of sampling variability in this specific case.

The findings in Table 8 underline the statistical reliability of the proposed CNN-Att + DT model’s performance. The low p-values across most comparisons reinforce that the observed performance gains are not merely incidental but are statistically grounded. This level of significance becomes especially important in security-related applications like intrusion detection, where false assumptions about classifier superiority can have real-world consequences. Therefore, the results in Sect. 4.6 not only demonstrate empirical effectiveness but also establish a solid inferential foundation for trusting the model’s advantages across various network environments.

Comparative analysis

As illustrated in Table 9, the proposed CNN-Attention architecture integrated with a fine-tuned decision tree classifier achieves a strong balance of accuracy and generalizability across two prominent intrusion detection benchmarks: CIC-IDS2017 (99.28%) and NSL-KDD (99.12%). Unlike many prior approaches that tailor their architectures to specific datasets, this method maintains consistently high performance across distinct traffic distributions and attack scenarios. Its multi-branch CNN structure facilitates hierarchical extraction of both local and global features, while the attention mechanism enables the model to focus selectively on the most informative patterns. The replacement of softmax with a decision tree classifier further enhances the interpretability and robustness of the model, making it suitable for real-world cybersecurity applications where explainability is crucial.

Relation to Transformer-, GNN-, and LLM-based IDS. As reviewed in Sect. “Related work”, recent IDS research has introduced powerful Transformer backbones (e.g., IDS-MTran, IDS-INT), graph-based models such as GNN-IDS and BS-GAT, and LLM-assisted intrusion detection frameworks that operate on rich log data or policy representations. Many of these approaches report strong performance on KDD, NSL-KDD, and CIC-IDS2017, but they typically rely on heavier architectures, dataset-specific preprocessing and splits, or additional side information tailored to particular deployment regimes (e.g., edge computing, federated/cloud settings, or log-level analytics). In contrast, our CNN–Attention + DT pipeline is deliberately designed as a lightweight, tabular-flow IDS with interpretable tree-based decisions. While a full re-implementation and protocol-aligned comparison with all representative Transformer-, GNN-, and LLM-based methods is beyond the scope of this work, our results on CIC-IDS2017 and NSL-KDD place the proposed model within the broader landscape as a competitive yet deployment-oriented alternative that complements these more complex architectures.

Despite the superior generalization, it is noteworthy that Iliyasu et al.35 achieved a slightly higher accuracy of 99.8% on NSL-KDD using few-shot autoencoders. However, their method heavily relies on pretrained encoders, making it dependent on the quality and representativeness of external datasets. This limitation raises concerns about transferability to unseen attack vectors or traffic conditions. Moreover, Jiang et al.22 proposed BBO-CFAT, a hierarchical Transformer-based IDS with biogeography-based optimization for feature selection, and reported strong results on the same benchmarks used in this work—achieving 99.1% accuracy on CIC-IDS2017 and 97.5% on NSL-KDD. While BBO-CFAT provides a powerful Transformer backbone, it introduces additional complexity in the optimization pipeline and increases the number of tuning knobs (feature-selection plus Transformer depth/width), which may be challenging to calibrate for new environments. Similarly, Kilichev and Kim36 reported an accuracy of 99.31% on the UNSW-NB15 dataset using genetic algorithm (GA) and particle swarm optimization (PSO)-tuned CNNs. While the optimization yielded high accuracy, it also incurred substantial computational overhead and training complexity, potentially making it impractical for large-scale or time-sensitive applications.

Other hybrid approaches, such as Sajid et al.10 and Halbouni et al.12, show solid accuracy levels (98–99%) by combining CNNs with LSTM or XGBoost, benefiting from sequential modeling and ensemble learning. However, they fall short in cross-dataset generalization and often require extensive preprocessing to align features across datasets.

Additionally, Na’amneh et al.24 targeted IoT DDoS detection with 99% accuracy, but their method lacks evidence of scalability across diverse attack types or high-traffic environments. These limitations are critical in real-world deployments where attack patterns vary widely and evolve rapidly, making flexibility and adaptability essential evaluation criteria. The inclusion of BBO-CFAT22 in Table 9 addresses the reviewer’s concern regarding comparisons with recent Transformer-based IDSs on the same benchmarks (CIC-IDS2017 and NSL-KDD); while BBO-CFAT attains competitive accuracy, its hierarchical Transformer and meta-heuristic feature selection introduce non-trivial deployment and tuning overheads. In contrast, the proposed method strikes a balanced trade-off between accuracy, interpretability, and computational efficiency. While it does not yet incorporate transfer learning mechanisms—an area for future improvement—it provides a reliable and explainable architecture with demonstrated performance on heterogeneous datasets. The ablation studies and cross-dataset experiments further validate the low risk of overfitting, a common issue in over-engineered or deeply tuned models like those using reinforcement learning (e.g., Qiu et al.16. Therefore, the designed model introduces a compelling solution for practical intrusion detection, offering broad applicability, consistent performance, and architectural simplicity.

Discussion

The proposed CNN–Attention architecture coupled with a fine-tuned Decision Tree classifier demonstrates strong performance on both CIC-IDS2017 and NSL-KDD, with accuracies of 99.28% and 99.12%, respectively. One core strength lies in its hybrid design that leverages the representational power of deep convolutional features while maintaining the interpretability and low computational footprint of decision trees. Unlike traditional softmax classifiers, the decision tree provides rule-based outcomes that are transparent and easier to deploy in enterprise cybersecurity. To facilitate like-for-like comparison with contemporary IDS designs, we report results alongside latency and parameter counts and reference matched softmax-head baselines on the same backbone (Tables 2, 5 and 3; Fig. 4).

Cross-dataset generalization and practical efficiency

Another advantage is robust feature extraction. The multi-branch CNN captures local patterns at multiple temporal/spatial scales, while the attention module adaptively reweights channels according to intrusion context; this yields a more discriminative, context-aware representation and translates into improved precision and F1—including for minority/complex attack families reflected in the confusion matrices (Fig. 3). The method also shows competitive cross-dataset behavior: when trained on one benchmark and evaluated on another with different structure, performance remains high, suggesting that attention-enhanced features capture transferable regularities of malicious behavior. In operational terms, the calibrated, depth-constrained tree introduces negligible overhead; the full model (≈ 2.3 M parameters; ≈31–32 ms inference) compares favorably with heavier RL/ensemble or large-transformer pipelines while preserving interpretable, axis-aligned rules (Tables 2, 5 and 3).

Validation protocol and robustness under shift

Placing the results in context, the evaluation follows a multi-run, uncertainty-aware protocol that is stricter than what many IDS studies report. For each method and dataset, 10 independent runs are executed under identical preprocessing and fixed splits, and outcomes are summarized as mean ± standard deviation with paired Wilcoxon tests (Holm–Bonferroni corrected). In addition to accuracy, the analysis foregrounds macro-F1 and PR-AUC, metrics that are more sensitive to class imbalance than micro-averaged scores. Seed-averaged ROC/PR curves with 95% confidence bands (Fig. 4) indicate consistently high TPR at low FPR and strong precision at high recall, with narrow dispersion across seeds; this contrasts with single-run reports where apparent gains can hinge on a favorable initialization or threshold. Normalized confusion matrices complement these curves, clarifying minority-family behavior and limiting the risk of performance inflation due to class prevalence.

Robustness under distribution shift is examined through two deployment-oriented stress tests—leave-one-family-out (zero-day proxy) on CIC-IDS2017 and a temporal hold-out that trains on early traffic and tests on later flows (Table 4). Relative to strong baselines (including tree-ensemble heads and softmax atop the same backbone), the post-attention decision-tree head maintains statistically significant advantages in macro-F1 and PR-AUC, with only modest, predictable degradation from the standard split and tight confidence intervals. A complexity–performance profile (Tables 2, 5 and 3) further situates these results: the backbone plus calibrated, depth-constrained tree incurs negligible inference overhead, preserves interpretable, axis-aligned rules, and avoids the heavy tuning or latency often associated with more complex architectures. Appendix A.4–A.7 provides calibration curves, depth sensitivity, and exemplar rule paths, linking the theoretical rationale to observed operating characteristics and facilitating like-for-like comparison with contemporary IDS designs.

Corroborating theoretical propositions

Our theoretical analysis (Propositions 1–3 in Sect. “Proposed model”) is supported by several empirical diagnostics in the main Results. First, Proposition 1 states that channel attention acts as a diagonal metric learning mechanism that increases class separability in the feature space. This is corroborated by the ablation results in the attention vs. no-attention variants (Sect. "Results".x, attention ablation), where adding attention consistently improves ROC-AUC and macro-F1 on both CIC-IDS2017 and NSL-KDD, and reduces overlap between benign and attack score distributions.

Second, Proposition 2 argues that the multi-branch backbone increases the likelihood of discovering strongly discriminative feature channels by aggregating filters at different receptive-field widths. The multi-branch vs. single-branch comparison in Sect. “Results”.x (backbone ablation) shows that removing branches leads to a monotonic degradation in accuracy and macro-F1, especially on minority attack families, which empirically supports the role of multi-scale branches in capturing complementary traffic patterns. This effect is further reflected in the branch-wise contribution statistics reported in Appendix A.6.

Third, Proposition 3 states that a depth-constrained decision tree head aligns naturally with threshold-dominant tabular IDS telemetry and enlarges effective decision margins compared with purely neural classifiers. This is reflected in the comparison between CNN-Attention + DT and CNN-Attention + MLP/SVM baselines in Sect. “Results”.x, where the DT head yields improved calibration, lower false-positive rates, and competitive accuracy under cross-dataset transfer. Rule-length and margin diagnostics, together with additional calibration plots, are provided in Appendix A.7.

Altogether, these diagnostics connect the theoretical propositions to observable empirical behavior in the main experiments, while Appendix A.6–A.7 provide extended quantitative and visualization-based evidence.

Limitations and outlook

Despite these strengths, limitations remain. The current pipeline does not incorporate explicit transfer-learning or domain-adaptation mechanisms; when exposed to entirely new topologies or novel attack taxonomies, adaptation requires additional fine-tuning, which may be inconvenient under rapid, zero-day evolution. Compared with adaptation-heavy Transformer/PEFT approaches, this design trades plug-and-play transfer for lower latency and simpler calibration; integrating lightweight adapters is a promising extension. The approach also operates on fixed, preprocessed flow-level features; changes in preprocessing, feature ordering, or scaling can affect performance unless aligned with training-time settings. Heterogeneous logging stacks and evolving telemetry formats thus motivate robustness testing across preprocessing variants, an aspect partially addressed here via temporal and family hold-outs but still open for broader multi-site studies. Future work includes coupling the backbone with parameter-efficient Transformer adapters for rapid domain adaptation, evaluating encrypted-traffic scenarios and APT-style multi-stage campaigns, and extending cross-organizational validation to multiple live networks under standardized, reproducible protocols. In addition, a natural direction for future work is to perform protocol-aligned, head-to-head comparisons with representative Transformer-, GNN-, and LLM-based IDS architectures under a unified preprocessing and evaluation pipeline.

Conclusion

his research presented an effective and interpretable intrusion detection framework that combines a multi-branch convolutional neural network (CNN) with an attention mechanism and a fine-tuned decision tree classifier. The proposed, deployment-oriented architecture is designed to operate directly on flow-level tabular features, capturing hierarchical and context-aware patterns through parallel convolutional branches with varying receptive-field widths and refining them via a channel-attention module that selectively emphasizes the most informative components. Instead of using a standard softmax classifier, we employed a fine-tuned decision tree, offering both interpretability and high classification accuracy. The model was evaluated on two widely used benchmark datasets—CIC-IDS2017 and NSL-KDD—achieving accuracies of 99.28% and 99.12%, respectively. These results match or surpass those of state-of-the-art approaches across major evaluation metrics, including precision, recall, F1-score, and specificity. Ablation studies confirmed the significant contributions of each architectural component, while statistical significance testing validated the robustness of the performance gains. Moreover, the decision tree head provided transparent, rule-based decisions and improved class separation without compromising accuracy, aligning well with the tabular nature of IDS telemetry.

One of the standout findings was the model’s ability to generalize across datasets. In cross-dataset evaluations—training on one dataset and testing on the other—the proposed framework maintained a high level of performance, indicating strong robustness to distributional shifts. This is particularly valuable in real-world settings where traffic patterns evolve and new attack types frequently emerge. Despite its strengths, the model has limitations. It currently lacks an explicit transfer learning or domain-adaptation mechanism, which may restrict adaptability in domains with limited labeled data or rapidly changing environments. Additionally, while the decision tree is efficient and interpretable, it may not fully capture subtle, high-dimensional decision boundaries compared to ensemble methods or Transformer-based classifiers. Nonetheless, the proposed CNN-Attention + Decision Tree architecture offers a compelling balance between accuracy, efficiency, and interpretability, and its modular design makes it amenable to future extensions such as lightweight Transformer adapters or ensemble heads. Its performance, generalization capability, and deployment-aware design make it well-suited for practical use in evolving and heterogeneous network environments. Future work will explore integrating parameter-efficient transfer learning, tightening real-time detection constraints, and coupling the model with adaptive data pipelines and multi-site evaluation protocols to further enhance its applicability in dynamic cybersecurity landscapes.

Data availability