Abstract

Breast cancer (BC) is a global health problem, in large part because of limited awareness and late detection. Speeding up detection and classification is crucial for effective cancer treatment. Medical image analysis methods and computer-aided diagnosis can support this process and provide training and assistance to less experienced clinicians. Deep Learning (DL) models play an important role in accurately detecting and classifying cancer, especially when dealing with large medical image datasets. This paper presents a novel hybrid DL model that combines a Convolutional Neural Network (CNN) with Long Short-Term Memory (LSTM) for binary breast cancer classification on two datasets available in the Kaggle repository. CNNs extract mammographic features, including spatial hierarchies and malignancy patterns, whereas LSTM networks characterize sequential dependencies and temporal interactions. Our method combines these structures to improve classification accuracy and resilience. We compared the proposed model with other DL models, namely CNN, LSTM, Gated Recurrent Units (GRUs), VGG-16, and RESNET-50, using accuracy, sensitivity, specificity, F-score, and AUC as evaluation metrics. The CNN-LSTM model achieved superior performance, with accuracies of 99.17% and 99.90% on the respective datasets. These results show that the CNN-LSTM model can improve on the other breast cancer classifiers considered.

Introduction

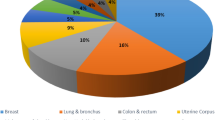

Breast cancer is a frequent and fatal illness that affects women globally and is responsible for more than 40,000 deaths each year1. Breast tissue samples may be divided into four distinct categories: normal tissue, benign tumours, in situ carcinoma, and invasive carcinoma2. The disease can be identified through a number of detection technologies, including mammography, X-rays, ultrasound, Positron Emission Tomography (PET), Computed Tomography (CT), thermography, and Magnetic Resonance Imaging (MRI)3. Histopathological imaging and genetics are crucial for cancer diagnosis and therapy4.

BC is one of the most widespread diseases among women worldwide. Precise diagnosis and timely identification improve treatment outcomes and decrease mortality. Nevertheless, the complexity of breast tissue and the relatively small size of both benign and malignant tumours make diagnosis challenging even for highly trained professionals. Despite their popularity, mammography and ultrasonography can give false positives or false negatives, resulting in needless treatments or missed diagnoses. Artificial intelligence (AI), machine learning (ML), and deep learning models are now widely used for healthcare diagnostics5,6,7,8,9, and in recent years DL has greatly increased the accuracy of BC diagnosis. Deep neural networks, especially CNNs, are important in medical image analysis because they learn and extract features on their own, without human involvement. These models have shown impressive accuracy in tasks such as BC detection and other image classification problems10. CNNs, however, are limited in their ability to model sequential data and temporal relationships, which are essential in medical imaging. LSTM networks, a form of Recurrent Neural Network, are promising for time-series analysis and sequence prediction because they can learn long-term dependencies and capture temporal dynamics within data.

Existing studies leave explicit gaps, such as limited generalization across diverse datasets and insufficient integration of spatial and temporal features for medical image analysis. The proposed model addresses these gaps through an optimized integration of CNN and LSTM layers for enhanced feature extraction and sequential analysis, and through evaluation on two diverse datasets (IDC and BreaKHis) to assess robustness. It achieves accuracies of 99.17% and 99.90%, outperforming existing models. Additionally, the model incorporates tailored preprocessing, hyperparameter tuning via grid search, and computational efficiency evaluations, highlighting its practical applicability in clinical settings.

This article focuses on developing a hybrid DL model (CNN-LSTM) for breast cancer classification. The method combines the advantages of CNNs and LSTMs to improve classification accuracy. Public BC datasets are used to evaluate the model and compare it with current models in terms of accuracy, sensitivity, specificity, F-score, and AUC. The aim is to help doctors diagnose breast cancer early and accurately, improving patient outcomes and reducing death rates through better diagnostic tools.

This paper is organized as follows. Section two reviews existing literature on BC diagnosis. Section three presents the architecture of the CNN-LSTM model for classifying breast cancer. Section four compares the novel hybrid CNN-LSTM model with other DL models, namely CNN, LSTM, GRU, VGG-16, and RESNET-50. Finally, the conclusion and suggestions for future research are discussed.

Related works

DL can increase the accuracy and efficiency of medical image analysis, which has made it popular in breast cancer detection. Several models and approaches have been devised to tackle the complexities of breast cancer diagnosis, notably the identification of malignant tumours in mammograms and MRI images. This section discusses several major studies that used different methodologies, datasets, and optimization strategies to improve breast cancer classification models.

Wang et al.11 developed a hybrid DL model to detect breast intradural ductal carcinoma using the PCam Kaggle slide image dataset. By integrating CNN and GRU layers, the model achieved its best performance, with an accuracy of 86.21%.

Zakareya et al.12 used granularity-based computing, shortcut connections, two configurable activation functions, and an attention mechanism to classify breast cancer. The model enhances diagnostic accuracy by capturing fine detail in cancer images. Compared with existing models, it achieved accuracies of 93% and 95% on ultrasound and breast histopathology images, respectively.

Jayandhi et al.13 presented an efficient DL framework that combines a VGG network with a Support Vector Machine (SVM). They used a simplified version of the 16-layer VGG network to reduce computational complexity. Because the softmax layer in the VGG model assumes that each training sample belongs to only one class, which is not always the case in medical image diagnosis, they replaced it with an SVM. Using data augmentation and testing several SVM kernels, they developed a VGG-based model that classifies mammograms with an accuracy of 98.67%.

The article14 evaluates a newly developed DL model on mammographic images from the Mammographic Image Analysis Society (MIAS) and DDSM databases. The developed CNN model proved highly effective, achieving an accuracy of 98.44% on mammograms from the DDSM dataset and 99.17% on mammograms from the MIAS dataset.

Dewangan et al.15 created the Back Propagation Boosting Recurrent Wienmed model (BPBRW) with a Hybrid Krill Herd African Buffalo Optimization (HKH-ABO) mechanism for early breast cancer diagnosis using breast MRI data. After preprocessing noisy MRI data, the model classifies tumours as either benign or malignant. Evaluation of the model’s performance in Python yielded an improved accuracy of 99.6% with a 0.12% reduction in error.

For breast lesion segmentation and radiomic extraction, Vigil et al.16 constructed a convolutional deep autoencoder model. To reduce the feature set from 354 radiomic features to 12, the model uses high-capacity imaging data outputs and spectral embedding approaches. The model was trained using 780 ultrasound images, and the most robust cross-validated model for a composite of radiomic groups achieved a binary classification accuracy of 78.5%.

Altan17 recommended Deep Belief Networks (DBN) to diagnose breast cancer using region-of-interest (ROI) images. To determine the effect of dimensionality, the ROI images were processed iteratively with the DBN at varying sizes. The DBN model reached an accuracy of 96.32%.

Kavitha et al.18 introduced a novel breast cancer diagnostic model. It uses digital mammograms and adopts an adaptive fuzzy logic-based median filter as well as an algorithm that combines Optimal Kapur’s Multilevel Thresholding with Shell Game Optimization. To identify the presence of BC, the model uses a CapsNet feature extractor and a Back-Propagation Neural Network classifier. The authors tested the model on the Mini-MIAS and Digital Database for Screening Mammography (DDSM) datasets and reported an accuracy of 98.50% on the Mini-MIAS dataset and 97.55% on the DDSM dataset.

Lin et al.19 produced a stepwise BC model framework to increase accuracy and decrease misdiagnosis. The researchers utilized a dataset including known risk factors for breast cancer and employed Artificial Neural Network (ANN) and SVM algorithms to categorize tumours. Classification accuracy was 76.6% for ANN and 91.6% for SVM. The transfer learning and optimization methods based on ADAM optimization and Stochastic Gradient Descent with Momentum (SGDM) were used to train the AlexNet, ResNet101, and InceptionV3 networks. AlexNet attained an accuracy of 81.16%, ResNet101 earned 85.51%, and InceptionV3 obtained 91.3% accuracy. The soft-voting method computed the average of the prediction findings, resulting in a test accuracy of 94.20%. This technique is an invaluable instrument for radiologists assessing mammography radiographs.

In the IMPA-ResNet50 architecture, Houssein et al.20 combined the ResNet50 model with the IMPA algorithm as a hybrid model. The model was evaluated on the MIAS dataset and the Curated Breast Imaging Subset of the Digital Database for Screening Mammography (CBIS-DDSM). It outperformed previous techniques, reaching an accuracy of 98.32%.

Rahman et al.21 presented a computational system that utilizes a ResNet-50 CNN to automatically identify mammography pictures for the purpose of detecting BC. The method employs transfer learning from the pretrained ResNet-50 CNN on ImageNet to provide training and classification of the INbreast dataset into benign or cancerous categories. In comparison to previous models trained on the same dataset, the suggested framework obtained an exceptional classification accuracy of 93%.

Saber et al.22 focused on DL model that applies pre-trained CNNs, including Inception V3, ResNet50, Visual Geometry Group networks (VGG)-19, VGG-16, and Inception-V2 ResNet, in order to sort mammograms from the MIAS dataset by their features. This model’s performance has been evaluated concentrating on a single evaluation metric with respect to accuracy. The evaluation indicates that the VGG16 model, when employed with TL, has demonstrated exceptional performance, achieving overall accuracy of 98.96% and 98.87% for the 80 − 20 method and 10-fold cross-validation, respectively. Accordingly, it could be effectively applied to breast cancer diagnosis.

The study of Hirra et al.23 introduced a newly developed patch-based DL model, Pa-DBN-BC, for BC detection and classification that employs the Deep Belief Network (DBN) on histopathological images. The model employs logistic regression in order to sort histopathological images by features that are autonomously selected from extracted image patches, using both unsupervised pre-training and supervised fine-tuning approaches. The model was developed for the detection phase, where features selected from image patches are fed into the model as input, and then the model outputs a probability matrix for detection, examining whether the patch sample is positive or negative. A large-scale histopathological image dataset has been chosen for the model test and evaluation, aggregating images from four different data cohorts. The test results indicate that the model surpasses other conventional techniques, achieving an accuracy of 86% and demonstrating its effectiveness in learning optimal features automatically compared to the other introduced DL models.

This paper presents a novel hybrid DL model that combines CNN and LSTM for binary breast cancer classification on two datasets available in the Kaggle repository. CNNs extract mammographic features, including spatial hierarchies and malignancy patterns, whereas LSTM networks characterize sequential dependencies and temporal interactions. Our method combines these structures to improve classification accuracy and resilience. We compared the proposed model with other DL models, namely CNN, LSTM, GRU, VGG-16, and RESNET-50. The CNN-LSTM model achieved superior performance, with accuracies of 99.17% and 99.90% on the respective datasets.

Table 1 presents a comparative analysis of our proposed model and the current leading approaches in breast cancer classification.

Materials and methods

In this section, we describe the datasets used in this paper, followed by the preprocessing steps applied to the data. We then outline the DL models used to assess the proposed model and present the architecture of the hybrid CNN-LSTM model in detail.

Dataset description

In this research, we used two public datasets from the Kaggle repository to evaluate the proposed model:

- The first dataset, available at https://www.kaggle.com/datasets/paultimothymooney/breast-histopathology-images/data, contains 277,524 patches extracted from 162 breast cancer slide images scanned at 40x magnification. Of these patches, 198,738 are IDC-negative and 78,786 are IDC-positive. Each patch is labelled with a patient ID, the coordinates of its origin, and its class. This data is used to train a DL model that can differentiate IDC-positive samples from IDC-negative ones.

- The second dataset, available at https://www.kaggle.com/datasets/forderation/breakhis-400x/data, is drawn from the BreaKHis database and contains breast tumor images at 400x optical magnification for training and assessment. Its diverse images of distinct tumor types improve the model’s capacity to generalize across breast cancer pathology.

The study uses two datasets, the IDC Breast Cancer Dataset and the BreaKHis Dataset, to represent real-world breast cancer cases. The IDC dataset consists of 162 whole mount slide images, while the BreaKHis dataset includes microscopic biopsy images from 82 patients. Both datasets cover diverse tumor types, sizes, and morphological variations, ensuring a balanced classification. However, the datasets have limitations, such as lack of clinical metadata and potential class imbalance.

Data preprocessing

Effective preprocessing is a key stage in ensuring that the input data is in a suitable form for the DL model, which enhances model performance and reduces computational complexity. To ensure consistency across datasets, we resized all patches from the IDC Breast Cancer Dataset and all biopsy images from the BreaKHis Database to 244 × 244 pixels, facilitating efficient training and evaluation of the DL models. Pixel values were normalized to the range [0, 1] by dividing by the maximum pixel intensity (255), ensuring consistent scaling, improving model convergence, and reducing computational complexity during training. Additionally, data augmentation techniques were applied to the training dataset to enhance model robustness and mitigate overfitting. These included random rotations (up to 15 degrees), horizontal and vertical flipping, and random zooming (up to 20%), introducing variability into the training data.
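The normalization and flipping steps described above can be sketched with NumPy as follows. This is a minimal illustration, not the authors' pipeline: the function names, the random stand-in image, and the fixed seed are assumptions, and rotation and zooming are left out to keep the example dependency-free.

```python
import numpy as np

def normalize(image_uint8):
    """Scale 8-bit pixel intensities to the range [0, 1] by dividing by 255."""
    return image_uint8.astype(np.float32) / 255.0

def random_flip(image, rng):
    """Apply a horizontal and/or a vertical flip, each with probability 0.5."""
    if rng.random() < 0.5:
        image = image[:, ::-1, :]  # horizontal flip: reverse the column order
    if rng.random() < 0.5:
        image = image[::-1, :, :]  # vertical flip: reverse the row order
    return image

rng = np.random.default_rng(0)  # fixed seed, chosen only for reproducibility
# Random stand-in for an image that has already been resized to 244 x 244 (assumption).
patch = rng.integers(0, 256, size=(244, 244, 3), dtype=np.uint8)
x = random_flip(normalize(patch), rng)
```

Flips leave the image shape and value range unchanged, so the augmented array can be fed to the same model input as the original.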

Preprocessing has a direct impact on model performance. Resizing images ensures uniformity and compatibility with the deep learning model. Normalization scales pixel values to ensure numerical stability and improve model convergence. Data augmentation artificially expands the training dataset, increasing model robustness and allowing the model to learn invariant features. The study found that resizing images to 244 × 244 pixels maintained critical structural details while reducing processing time. The combination of these techniques improved model performance on diverse test samples, increased accuracy, and made resource utilization more efficient, making the model more suitable for real-world clinical applications.

The study used Synthetic Minority Over-sampling Technique (SMOTE) to artificially augment the minority class of malignant cases, ensuring a balanced class distribution and improving the model’s ability to detect malignant cases. Random undersampling was used to prevent bias towards the majority class, but a hybrid approach was chosen to maintain dataset diversity. Data augmentation techniques like random rotations, flips, and zooming were also applied to enhance dataset variability. The balancing techniques led to a significant reduction in class bias, improved performance metrics, and a balanced confusion matrix. Future work may explore advanced resampling techniques.
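The core idea of SMOTE, interpolating between a minority sample and one of its nearest minority-class neighbours, can be sketched as below. This is a simplified illustration under stated assumptions: the function name `smote`, the value of `k`, the seed, and the toy 2-D feature vectors are all hypothetical, and the text does not say which implementation or feature representation was actually used.

```python
import numpy as np

def smote(minority, n_new, k=5, seed=0):
    """Create n_new synthetic rows by interpolating between minority neighbours."""
    rng = np.random.default_rng(seed)
    n = len(minority)
    k = min(k, n - 1)  # cannot have more neighbours than other samples
    # Pairwise squared Euclidean distances within the minority class.
    diff = minority[:, None, :] - minority[None, :, :]
    dist = (diff ** 2).sum(axis=2)
    np.fill_diagonal(dist, np.inf)  # a sample is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]

    base = rng.integers(0, n, size=n_new)                    # starting samples
    pick = neighbours[base, rng.integers(0, k, size=n_new)]  # one neighbour each
    gap = rng.random((n_new, 1))                             # factor in [0, 1)
    return minority[base] + gap * (minority[pick] - minority[base])

# Toy minority-class feature vectors (assumption: images are already
# represented as fixed-length feature vectors).
minority = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
synthetic = smote(minority, n_new=6, k=2)
```

Each synthetic row lies on the line segment between two real minority samples, which is why the technique adds variety without leaving the region the minority class already occupies.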

The proposed methodology

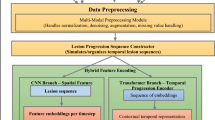

Figure 1 displays the framework of our model CNN-LSTM for BC classification (+ or -).

Figure 2 depicts the proposed model for BC classification in detail. The input consists of the breast cancer images from the two datasets used in the study. The CNN is composed of several convolutional layers that produce feature maps, pooling layers, and a “Flatten” stage that transforms the 2D feature maps into 1D vectors. The flattened CNN output is fed to an LSTM layer, which emphasizes sequential processing and temporal dependencies. A dense layer whose nodes are connected to the LSTM output follows, with an activation function applied to its hidden units. The output is a binary classification layer consisting of two nodes that indicate the potential BC classes (+/-). The probabilistic output is generated using a softmax function.

To maximize the performance of the CNN-LSTM hybrid model, hyperparameters were carefully tuned in this study. Grid search was used to find the optimum hyperparameters; the CNN and LSTM hyperparameters are listed in Table 2. We used the Rectified Linear Unit (ReLU) activation function in the CNN layers for computational efficiency and to mitigate vanishing gradients. ReLU adds non-linearity to the network, which allows it to extract complex features from the input images. A sigmoid activation function was used for the output layer because the classification problem is binary (BC +/-).

The generalization capability of the model was improved with regularization techniques: dropout layers, early stopping based on validation loss, and data augmentation through random rotations, flips, and zooming. These strategies were employed to prevent overfitting and to strengthen the robustness of the model. The hybrid model incorporates dropout layers in both the CNN and LSTM components, applying a dropout rate of 0.5 after the convolutional blocks to reduce co-dependency between neurons and a dropout rate of 0.2 to prevent overfitting.
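The description above mentions both a two-node softmax output and a sigmoid output. For a binary problem the two formulations give the same positive-class probability, since a two-class softmax reduces to a sigmoid of the difference between the two logits. The short check below illustrates this; the logit values are hypothetical.

```python
import numpy as np

def softmax(z):
    """Numerically stable softmax over a 1D vector of logits."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

def sigmoid(t):
    """Logistic function."""
    return 1.0 / (1.0 + np.exp(-t))

logits = np.array([0.3, 1.7])  # hypothetical scores for (negative, positive)
p_softmax = softmax(logits)[1]               # two-node softmax, positive class
p_sigmoid = sigmoid(logits[1] - logits[0])   # one-node sigmoid on the logit gap
```

Both expressions evaluate to the same probability, so either output layer can represent the BC (+/-) decision.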

The CNN-LSTM hybrid model is a combination of CNNs and LSTMs, which enhances performance by combining their strengths. CNNs excel at spatial feature extraction, capturing local and global patterns like textures and malignancy structures in breast cancer images. They reduce dimensionality while preserving critical features through convolutional and pooling layers. LSTMs are proficient in capturing temporal dependencies and sequential relationships within the extracted features, which are crucial in medical imaging. They help in learning long-term dependencies, allowing the model to understand feature correlations better. The memory units in LSTM prevent issues like vanishing gradients, ensuring better retention of critical features. The hybrid approach mitigates model limitations, as CNNs alone may struggle with sequential dependencies and LSTMs alone may not effectively extract complex spatial features.

The integration of CNN and LSTM layers is designed to capture both spatial and sequential features in breast cancer images. The CNN layers extract spatial features from the images, with the CNN filters acting as feature extractors; the LSTM layer then analyzes sequential dependencies, with its gating mechanisms refining these features, to improve classification accuracy. Challenges faced during integration included dimensionality mismatch, increased computational complexity, hyperparameter optimization, and overfitting. The hybrid architecture introduced higher computational demands than standalone models, requiring techniques such as dropout regularization and grid search to prevent overfitting. Balancing the number of CNN filters, kernel sizes, LSTM units, and dropout rates required extensive experimentation. Overfitting concerns were mitigated using data augmentation techniques such as random rotations, flips, and zooming.
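The dimensionality mismatch mentioned above arises because a CNN produces 4D feature maps (batch, height, width, channels) while an LSTM expects 3D input (batch, time steps, features). The sketch below shows two common ways to bridge the gap with a reshape; the shapes are hypothetical, and the text does not state which option the authors used.

```python
import numpy as np

# Hypothetical CNN output shape: 4 images, 7 x 7 spatial grid, 64 channels.
batch, height, width, channels = 4, 7, 7, 64
feature_maps = np.zeros((batch, height, width, channels), dtype=np.float32)

# Option A: treat each spatial row as one time step of width * channels features.
seq_rows = feature_maps.reshape(batch, height, width * channels)

# Option B: flatten everything and feed it as a single-step sequence.
seq_single = feature_maps.reshape(batch, 1, height * width * channels)
```

Option A gives the LSTM a genuine sequence to scan over, whereas Option B matches the "Flatten then LSTM" wording but leaves only one time step.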

The study used grid search optimization to explore combinations of CNN and LSTM parameters. Key parameters included filters, kernel sizes, activation functions, pooling types, dropout rates, and learning rates. A 5-fold cross-validation approach was used to evaluate combinations. The optimal hyperparameters were selected, resulting in improved accuracy, better generalization, reduced overfitting, faster convergence, and balanced performance across training and validation datasets.
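A grid search with 5-fold cross-validation can be sketched as follows. The parameter grid and the `toy_score` function are placeholders introduced for illustration only: in practice the scoring function would train the CNN-LSTM on the training indices and return a validation metric, and the actual grid is the one reported in Table 2.

```python
from itertools import product

import numpy as np

def kfold_indices(n_samples, n_folds=5, seed=0):
    """Yield (train_idx, val_idx) pairs for shuffled k-fold cross-validation."""
    rng = np.random.default_rng(seed)
    folds = np.array_split(rng.permutation(n_samples), n_folds)
    for i in range(n_folds):
        train = np.concatenate([f for j, f in enumerate(folds) if j != i])
        yield train, folds[i]

def grid_search(grid, score_fn, n_samples, n_folds=5):
    """Return the parameter combination with the best mean fold score."""
    best_params, best_score = None, -np.inf
    names = list(grid)
    for values in product(*(grid[name] for name in names)):
        params = dict(zip(names, values))
        scores = [score_fn(params, tr, va)
                  for tr, va in kfold_indices(n_samples, n_folds)]
        mean_score = float(np.mean(scores))
        if mean_score > best_score:
            best_params, best_score = params, mean_score
    return best_params, best_score

# Hypothetical grid; the values are illustrative, not the authors' settings.
grid = {"filters": [32, 64], "dropout": [0.2, 0.5], "learning_rate": [1e-3, 1e-4]}

def toy_score(params, train_idx, val_idx):
    """Placeholder for: train on train_idx, return accuracy on val_idx."""
    return (1.0 - abs(params["dropout"] - 0.5)
            - abs(params["filters"] - 64) / 1000 - params["learning_rate"])

best_params, best_score = grid_search(grid, toy_score, n_samples=100)
```

Averaging over folds before comparing combinations is what keeps the selection from favouring settings that happen to do well on a single validation split.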

The study used regularization techniques to improve the CNN-LSTM model. Dropout regularization was applied to deactivate a fraction of neurons during training, reducing co-dependency between neurons. Early stopping was implemented to prevent overfitting and optimize training efficiency. Data augmentation techniques like random rotations, horizontal and vertical flipping, and random zooming were used to introduce diversity and improve generalization. L2 weight regularization penalized large weight values, reducing model complexity. Batch normalization was applied after convolutional layers to stabilize the learning process. The CNN-LSTM model achieved high accuracy and consistent evaluation metrics, demonstrating a stable trend without signs of overfitting.
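Early stopping based on validation loss can be sketched in plain Python as below. The `patience` and `min_delta` values and the loss history are assumptions made for the example; the text does not report the settings used.

```python
def early_stopping_epoch(val_losses, patience=3, min_delta=0.0):
    """Return (stop_epoch, best_epoch) for a list of per-epoch validation losses.

    Training stops once the loss has failed to improve on its best value
    by more than min_delta for `patience` consecutive epochs.
    """
    best_loss = float("inf")
    best_epoch = -1
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss, best_epoch = loss, epoch  # new best: reset the counter
        elif epoch - best_epoch >= patience:
            return epoch, best_epoch             # patience exhausted
    return len(val_losses) - 1, best_epoch       # ran through every epoch

# Hypothetical validation-loss history.
history = [0.70, 0.52, 0.41, 0.43, 0.42, 0.44, 0.40]
stop_epoch, best_epoch = early_stopping_epoch(history, patience=3)
```

In this history the loss last improves at epoch 2, so training halts at epoch 5 and the weights from epoch 2 would be the ones to restore.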

DL models

CNN

CNNs are DL models for grid-like data such as images; they have revolutionized computer vision by automatically recognizing patterns and features26,27. Their layers include convolutional, pooling, and fully connected layers for classification or regression. CNNs are highly proficient at learning hierarchical features, ranging from basic edges and textures in the early layers to intricate shapes and objects in the deeper layers.
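The basic building blocks named above (convolution, non-linearity, pooling) can be illustrated on a tiny single-channel image. This is a didactic NumPy sketch with a hand-picked edge kernel, not the architecture used in the paper.

```python
import numpy as np

def conv2d_valid(image, kernel):
    """2D cross-correlation without padding (the operation used in CNN layers)."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.empty((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

def relu(x):
    """Rectified Linear Unit: keep positive responses, zero out the rest."""
    return np.maximum(x, 0.0)

def max_pool_2x2(x):
    """Non-overlapping 2 x 2 max pooling (odd trailing rows/columns are dropped)."""
    h, w = x.shape[0] // 2 * 2, x.shape[1] // 2 * 2
    return x[:h, :w].reshape(h // 2, 2, w // 2, 2).max(axis=(1, 3))

image = np.zeros((6, 6))
image[:, 3:] = 1.0                               # dark left half, bright right half
edge_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)   # responds to left-to-right brightening
features = max_pool_2x2(relu(conv2d_valid(image, edge_kernel)))
```

The kernel fires only where the window straddles the dark-to-bright boundary, and pooling then summarizes that response at half the resolution.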

LSTM

LSTM is a kind of Recurrent Neural Network (RNN) designed to capture and represent long-term dependencies within sequential data28,29,30. It has a memory cell capable of retaining information for long durations, controlled by input, forget, and output gates. LSTM models are used in several applications, such as time-series forecasting, audio recognition, and natural language processing. Their integration with CNNs in medical imaging enables the examination of sequential characteristics, making them well suited to tasks such as video analysis and temporal pattern identification.
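The role of the input, forget, and output gates can be made concrete with a single LSTM time step written in NumPy. This follows the standard LSTM formulation; the layer sizes, random weights, and gate ordering inside the stacked matrices are assumptions chosen for the sketch.

```python
import numpy as np

def sigmoid(x):
    """Logistic function used by the gates."""
    return 1.0 / (1.0 + np.exp(-x))

def lstm_step(x, h_prev, c_prev, W, U, b):
    """One LSTM step. W: (4H, D), U: (4H, H), b: (4H,); gate order i, f, o, g."""
    H = h_prev.shape[0]
    z = W @ x + U @ h_prev + b
    i = sigmoid(z[0:H])          # input gate: how much new content to write
    f = sigmoid(z[H:2 * H])      # forget gate: how much old memory to keep
    o = sigmoid(z[2 * H:3 * H])  # output gate: how much memory to expose
    g = np.tanh(z[3 * H:4 * H])  # candidate cell content
    c = f * c_prev + i * g       # updated memory cell
    h = o * np.tanh(c)           # new hidden state
    return h, c

rng = np.random.default_rng(0)
D, H, T = 8, 4, 5  # hypothetical input size, hidden size, sequence length
W = rng.normal(size=(4 * H, D))
U = rng.normal(size=(4 * H, H))
b = np.zeros(4 * H)
h, c = np.zeros(H), np.zeros(H)
for x_t in rng.normal(size=(T, D)):
    h, c = lstm_step(x_t, h, c, W, U, b)
```

Because the cell state is updated additively (`f * c_prev + i * g`), information can be carried across many steps without being repeatedly squashed, which is the mechanism behind the long-term memory described above.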

GRU

GRUs are a form of RNN developed for extracting and modeling dependencies in sequential data31. As a simplified variant of LSTM networks, GRUs employ two gates to regulate the flow of information through the network, which reduces computational complexity without sacrificing performance. This makes them well suited to tasks with sequential inputs, such as time-series analysis and modeling sequential features in medical image applications.
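For comparison with the LSTM, a single GRU step with its two gates (update and reset) can be sketched as follows. Biases are omitted for brevity, the sizes and random weights are hypothetical, and note that references differ on whether the update gate weights the old state or the candidate; the convention used here is stated in the code comment.

```python
import numpy as np

def sigmoid(x):
    """Logistic function used by the gates."""
    return 1.0 / (1.0 + np.exp(-x))

def gru_step(x, h_prev, Wz, Uz, Wr, Ur, Wh, Uh):
    """One GRU step (biases omitted). Convention: z weights the candidate state."""
    z = sigmoid(Wz @ x + Uz @ h_prev)              # update gate
    r = sigmoid(Wr @ x + Ur @ h_prev)              # reset gate
    h_tilde = np.tanh(Wh @ x + Uh @ (r * h_prev))  # candidate state
    return (1.0 - z) * h_prev + z * h_tilde        # blend old and candidate state

rng = np.random.default_rng(0)
D, H = 8, 4  # hypothetical input and hidden sizes
Wz, Wr, Wh = (rng.normal(size=(H, D)) for _ in range(3))
Uz, Ur, Uh = (rng.normal(size=(H, H)) for _ in range(3))
h = np.zeros(H)
for x_t in rng.normal(size=(5, D)):
    h = gru_step(x_t, h, Wz, Uz, Wr, Ur, Wh, Uh)

# Three weight groups here versus four in an LSTM of the same size.
gru_weights = 3 * (H * D + H * H)
lstm_weights = 4 * (H * D + H * H)
```

The GRU has no separate memory cell and one gate fewer, which is where its lower parameter count comes from.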

VGG-16

VGG-16, a widely adopted CNN model, has made considerable contributions to progress in medical image classification32. Its deep architecture has 16 weight layers: 13 convolutional layers and 3 fully connected layers. The model stacks small 3 × 3 convolutional filters in sequence, which allows it to extract intricate features from the data while keeping the design simple. This depth makes the model highly effective at learning feature representations and therefore powerful for medical image analysis.
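The benefit of stacking small 3 × 3 filters can be checked with simple arithmetic: three stacked 3 × 3 layers see the same 7 × 7 region as one 7 × 7 layer but need fewer weights. The helper names and the channel width below are assumptions for the illustration.

```python
def receptive_field(n_layers, kernel=3):
    """Receptive field of n stacked stride-1 convolutions with equal kernel size."""
    return 1 + n_layers * (kernel - 1)

def conv_weights(kernel, channels):
    """Weights in one conv layer with equal input/output channels (no biases)."""
    return kernel * kernel * channels * channels

C = 64  # hypothetical channel width
stacked = 3 * conv_weights(3, C)  # three 3 x 3 layers
single = conv_weights(7, C)       # one 7 x 7 layer with the same receptive field
```

With 64 channels the stacked version uses 110,592 weights against 200,704 for the single large filter, and it also inserts two extra non-linearities along the way.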

RESNET-50

ResNet-50 is an effective CNN model from the ResNet (Residual Networks) family, which employs residual learning to address the vanishing gradient problem inherent in deep networks33. With its 50 layers, ResNet-50 is substantially deeper than conventional CNNs, yet it remains highly effective at learning intricate features and achieves superior performance. Residual connections, the fundamental concept introduced by ResNet, let each block learn a residual function with reference to its input rather than an unreferenced mapping, which addresses the degradation problem of very deep networks. This makes it practical for such networks to learn more intricate patterns and features without degrading performance.
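The residual idea, computing y = F(x) + x so that a block only has to learn the correction F, can be shown in a few lines. In this sketch dense matrices stand in for the convolutions of a real ResNet block, and the input vector is hypothetical.

```python
import numpy as np

def relu(x):
    """Rectified Linear Unit."""
    return np.maximum(x, 0.0)

def residual_block(x, W1, W2):
    """y = relu(F(x) + x), where F is a two-layer transform.

    Dense matrices stand in for the convolutions of a real ResNet block.
    """
    fx = W2 @ relu(W1 @ x)  # residual branch F(x)
    return relu(fx + x)     # skip connection adds the input back

x = np.array([0.5, 1.0, 2.0])   # hypothetical non-negative activations
zeros = np.zeros((3, 3))
y = residual_block(x, zeros, zeros)  # with F(x) = 0 the block is the identity
```

When the weights are zero the block passes its input through unchanged, which illustrates why adding more residual blocks does not have to make a deep network worse than a shallower one.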

Experimental setup

In this section, we describe the experimental environment, followed by the evaluation metrics used to assess the proposed model.

Implementation

We ran our experiments in Jupyter Notebook 6.4.6, a Python-based data analysis and visualization application. It supports several programming languages, including Python 3.8, and runs in a web browser. The experiments were carried out on a computer with an Intel Core i7 CPU and 32 GB of random-access memory, running the Microsoft Windows 10 operating system.

Evaluation metrics

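This paper uses prediction evaluation metrics such as accuracy, sensitivity, specificity, F-score, and the AUC curve34,35,36. In the equations below, TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives. Accuracy is calculated using Eq. (1):

$$\text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \tag{1}$$

Sensitivity is the proportion of actual positive cases that are correctly predicted, given by Eq. (2):

$$\text{Sensitivity} = \frac{TP}{TP + FN} \tag{2}$$

Specificity is the proportion of actual negative cases that are correctly predicted, given by Eq. (3):

$$\text{Specificity} = \frac{TN}{TN + FP} \tag{3}$$

F-measure is calculated using Eq. (4):

$$\text{F-measure} = \frac{2\,TP}{2\,TP + FP + FN} \tag{4}$$

AUC, the area under the ROC curve of true positive rate (TPR) against false positive rate (FPR), is calculated using Eq. (5):

$$\text{AUC} = \int_{0}^{1} \text{TPR} \; d(\text{FPR}) \tag{5}$$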
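The metrics listed above can all be computed from confusion-matrix counts and predicted scores. The sketch below uses the standard textbook definitions and a small made-up set of labels and scores; the function names and the 0.5 decision threshold are assumptions, and AUC is computed in its rank-based form (the probability that a random positive is scored above a random negative), which equals the area under the ROC curve.

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """Return (TP, TN, FP, FN) for binary labels in {0, 1}."""
    y_true, y_pred = np.asarray(y_true), np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    return tp, tn, fp, fn

def classification_metrics(y_true, y_pred):
    """Accuracy, sensitivity, specificity, and F-score from hard predictions."""
    tp, tn, fp, fn = confusion_counts(y_true, y_pred)
    precision = tp / (tp + fp)
    sensitivity = tp / (tp + fn)
    return {
        "accuracy": (tp + tn) / (tp + tn + fp + fn),
        "sensitivity": sensitivity,
        "specificity": tn / (tn + fp),
        "f_score": 2 * precision * sensitivity / (precision + sensitivity),
    }

def auc(y_true, scores):
    """AUC as the fraction of positive/negative pairs ranked correctly (ties count half)."""
    y_true, scores = np.asarray(y_true), np.asarray(scores)
    pos, neg = scores[y_true == 1], scores[y_true == 0]
    wins = (pos[:, None] > neg[None, :]).sum()
    ties = (pos[:, None] == neg[None, :]).sum()
    return (wins + 0.5 * ties) / (len(pos) * len(neg))

# Made-up labels and scores for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0, 1]
scores = [0.9, 0.8, 0.4, 0.3, 0.6, 0.1, 0.2, 0.7]
y_pred = [int(s >= 0.5) for s in scores]  # assumed decision threshold of 0.5
m = classification_metrics(y_true, y_pred)
area = auc(y_true, scores)
```

On this toy data there are 3 true positives, 3 true negatives, 1 false positive, and 1 false negative, so accuracy, sensitivity, specificity, and F-score all equal 0.75, while 15 of the 16 positive/negative pairs are ranked correctly.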

Results and discussion

In this section, we focus on the outcomes of the experiments carried out to assess the performance of the CNN-LSTM model for BC classification. The study uses evaluation metrics such as accuracy, sensitivity, specificity, F-score, and the AUC curve. These metrics provide a comprehensive assessment of the model’s ability to distinguish between positive (+) and negative (-) breast cancer images. Additionally, we compare the proposed model with other DL models, namely CNN, LSTM, GRU, VGG-16, and RESNET-50.

Table 3 compares the CNN-LSTM model with the other models on the first dataset24 in terms of accuracy, sensitivity, specificity, F-score, and AUC. The best-performing model is CNN-LSTM, with an accuracy of 99.17%, a sensitivity (TPR) of 99.17%, a specificity (TNR) of 99.17%, an F-score of 99.17%, and an AUC of 0.995. The model with the lowest results is RESNET-50, with an accuracy of 89.26%, a TPR of 89.26%, a TNR of 89.26%, an F-score of 89.26%, and an AUC of 0.887. Figure 3 shows the accuracy of the proposed model compared to the others.

Representation of model’s accuracy on dataset24.

Table 4 compares the CNN-LSTM model with the other models on the second dataset25 in terms of accuracy, sensitivity, specificity, F-score, and AUC. The best-performing model is CNN-LSTM, with an accuracy of 99.90%, a sensitivity (TPR) of 99.90%, a specificity (TNR) of 99.90%, an F-score of 99.80%, and an AUC of 1.000. The model with the lowest results is RESNET-50, with an accuracy of 91.47%, a TPR of 91.47%, a TNR of 91.47%, an F-score of 91.48%, and an AUC of 0.917. Figure 4 shows the accuracy of the CNN-LSTM model compared to the others.

Representation of model’s accuracy on dataset25.

The CNN-LSTM model was compared to established deep learning architectures like VGG-16, ResNet-50, and GRU in terms of accuracy, precision, recall, and computational efficiency. The CNN-LSTM model achieved the highest accuracy, with 99.17% and 99.90% across both datasets. It demonstrated higher recall and precision, indicating its superior capability in identifying malignant breast cancer cases while minimizing false positives and false negatives. Compared to GRU, CNN-LSTM performed better in precision due to the enhanced feature fusion process. Despite its superior performance, the CNN-LSTM model exhibited higher computational complexity, with training time of approximately 2 h per epoch and inference time of 2 h. The CNN-LSTM model generalizes better across diverse datasets due to its ability to analyze both spatial and sequential features, reducing overfitting risks.

Training the hybrid CNN-LSTM model requires 2 h per epoch on a system with an Intel Core i7 processor, 32GB RAM, and an NVIDIA GPU. It needs more computational resources than simpler models because of the sequential dependencies introduced by the LSTM layers. At inference time, the model achieves real-time capability, taking an average of 50 milliseconds per image for prediction. It outperforms deeper architectures like ResNet-50, which require higher computational power. The CNN-LSTM model balances accuracy and computational efficiency, achieving superior performance compared to ResNet-50 and VGG-16 while maintaining lower computational costs during inference. The model’s high accuracy and rapid inference make it suitable for real-time clinical deployment, assisting radiologists in making quicker decisions. However, the training phase requires significant resources, limiting on-premises training in smaller healthcare facilities. Potential solutions include cloud-based inference services and edge AI implementations.

Figure 5 shows the training and testing for both loss and accuracy using CNN-LSTM model on dataset24.

Training and testing for both loss and accuracy using CNN-LSTM model on dataset24.

Figure 6 shows the training and testing for both loss and accuracy using the proposed CNN-LSTM model on dataset25. The proposed CNN-LSTM model, while achieving high accuracy, requires more computational resources than simpler models such as standalone CNNs or LSTMs. Training takes 2 h per epoch on a system with an Intel Core i7 processor, 32GB of RAM, and an NVIDIA GPU, whereas standalone CNN and LSTM models each took 60 min per epoch. This complexity may limit scalability for edge devices or clinical settings with limited computational resources. Despite its longer training times, the proposed CNN-LSTM model maintained competitive inference times similar to standalone CNNs and outperformed ResNet-50 in inference speed.

Training and testing for both loss and accuracy using the CNN-LSTM model on dataset25.

Figure 7 shows the plot of actual and predicted values using the proposed model on the dataset24.

Plot for actual and predicted values using CNN-LSTM on the dataset24.

Figure 8 shows the plot of actual and predicted values using CNN-LSTM on the dataset25.

Plot for actual and predicted values using the proposed model on the dataset25.

Figure 9 displays the AUC value for CNN-LSTM model on the dataset24.

AUC value for CNN-LSTM model on the dataset24.

Figure 10 displays the AUC value for CNN-LSTM model on the dataset25.

AUC value for CNN-LSTM model on the dataset25.
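For readers less familiar with the AUC values shown in Figures 9 and 10, the area under the ROC curve equals the probability that a randomly chosen malignant sample receives a higher score than a randomly chosen benign one, with ties counted as one half. The sketch below computes it directly from that definition. The function name `roc_auc` and the toy labels and scores are illustrative assumptions, not data from this study.

```python
def roc_auc(labels, scores):
    """Area under the ROC curve via pairwise comparison of positive and negative scores."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative sample")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0      # positive ranked above negative
            elif p == n:
                wins += 0.5      # ties count as half
    return wins / (len(pos) * len(neg))


# Toy example: 1 = malignant, 0 = benign; scores are predicted probabilities.
labels = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
auc = roc_auc(labels, scores)
print(auc)  # 0.75
```

The pairwise form is quadratic in the number of samples, so it is meant for illustration; rank-based implementations are preferable for large test sets.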

The hybrid CNN-LSTM model benefits clinical practice by providing high accuracy, sensitivity, and specificity, enabling reliable breast cancer detection and supporting clinicians in early diagnosis. Its integration of spatial feature extraction (CNN) and sequential dependency modeling (LSTM) allows it to analyze complex patterns in medical images, reducing diagnostic errors and enhancing decision-making. Clinical integration faces challenges, including high computational requirements, the need for specialized hardware, and the black-box nature of deep learning, which may limit clinician trust. The main limitations of the CNN-LSTM model include its computational complexity and resource requirements, which may restrict its applicability in resource-limited settings. The hybrid architecture demands significant memory and processing power, especially during training, making it dependent on specialized hardware such as GPUs. Additionally, the preprocessing steps, such as image resizing and normalization, add an extra layer of preparation, which may not always be feasible in real-time clinical environments.

The study focuses on improving breast cancer diagnosis models by optimizing the classification threshold, assigning higher weights to malignant classes, implementing a post-processing decision mechanism, integrating explainable AI techniques, and handling borderline cases through data augmentation. The model’s F1-score remained consistently high, indicating a balanced performance between precision and recall. The model also incorporates a confidence scoring mechanism for ambiguous cases, and uses data augmentation techniques to ensure exposure to diverse tumor presentations. These strategies aim to minimize false negatives and ensure accurate diagnosis.
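The strategies listed above (higher weights for the malignant class, a tuned classification threshold, and a confidence mechanism for ambiguous cases) can be sketched as follows. This is an illustration under stated assumptions rather than the implementation used in this study: the inverse-frequency weighting formula, the sensitivity target, the review margin, and the names `class_weights`, `pick_threshold`, and `triage` are all hypothetical choices.

```python
def class_weights(labels):
    """Inverse-frequency weights: n_samples / (n_classes * class_count).

    The rarer class (here assumed to be malignant, label 1) gets the larger weight.
    """
    n = len(labels)
    classes = sorted(set(labels))
    return {c: n / (len(classes) * labels.count(c)) for c in classes}


def pick_threshold(labels, probs, min_sensitivity=0.95):
    """Return the highest threshold whose sensitivity meets the target."""
    positives = sum(labels)
    for t in sorted(set(probs), reverse=True):
        tp = sum(1 for y, p in zip(labels, probs) if y == 1 and p >= t)
        if positives and tp / positives >= min_sensitivity:
            return t
    return 0.0  # fall back to predicting every case as malignant


def triage(prob, threshold, margin=0.1):
    """Flag predictions close to the threshold for manual review."""
    if abs(prob - threshold) < margin:
        return "review"
    return "malignant" if prob >= threshold else "benign"


# Toy validation data (1 = malignant, 0 = benign), for illustration only.
labels = [0, 0, 0, 0, 0, 0, 1, 1]
probs = [0.05, 0.1, 0.2, 0.3, 0.45, 0.6, 0.4, 0.9]

weights = class_weights(labels)
threshold = pick_threshold(labels, probs, min_sensitivity=1.0)
print(weights, threshold)
print([triage(p, threshold) for p in (0.05, 0.45, 0.9)])
```

Choosing the threshold by a sensitivity target trades some precision for fewer false negatives, and the review band routes borderline scores to a clinician instead of forcing a label.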

The hybrid CNN-LSTM model faces several challenges in clinical integration. Hardware requirements include a high-performance GPU or cloud-based infrastructure for efficient real-time inference. Cloud-based environments can mitigate the need for high-end local hardware. Software compatibility is crucial for integrating the model into existing clinical systems. The model should be compatible with standard healthcare communication protocols, deployed as a REST API service, and supported for multiple platforms. User training and adoption are also essential. Comprehensive training programs, clear documentation, and periodic workshops are necessary. Explainable AI techniques and intuitive dashboards can help clinicians interpret results. Meeting regulatory compliance and ethical standards is crucial for clinical deployment.

While the proposed CNN-LSTM model demonstrates high accuracy and robustness, its computational demands and interpretability challenges present barriers to widespread adoption in resource-constrained environments. Future work will focus on optimizing the model for efficiency, enhancing interpretability, and validating its performance across diverse clinical datasets.

Conclusion and future work

This paper introduces a novel hybrid DL model that combines a CNN and an LSTM for binary breast cancer classification on two datasets available in the Kaggle repository. CNNs extract mammographic features, including spatial hierarchies and malignancy patterns, whereas LSTM networks characterize sequential dependencies and temporal interactions. Our method combines these structures to improve classification accuracy and resilience. We compared the proposed model with other DL models, namely CNN, LSTM, GRU, VGG-16, and ResNet-50. The CNN-LSTM model achieved superior performance, with accuracies of 99.17% and 99.90% on the respective datasets. The evaluation used accuracy, sensitivity, specificity, F-score, and the AUC curve as prediction metrics. The results showed that the CNN-LSTM model can enhance the performance of breast cancer classifiers compared with the other models, reaching 99.90% accuracy on the second dataset. The findings suggest several directions for newcomers to the field. First, they can investigate variants of the CNN-LSTM architecture, such as adding recurrent layers, modifying the LSTM unit count, or integrating it with other models37,38,39,40. Second, the application41,42,43,44 of transfer learning and fine-tuning techniques enables the adaptation of existing models to novel datasets, minimizing both training time and computational resources. Third, they can consider medical imaging datasets used for cross-domain applications, and improved data augmentation can increase model resilience and generalization. Fourth, they can integrate Explainable AI (XAI) approaches into the CNN-LSTM model to provide visual or textual explanations for predictions. Finally, extending our CNN-LSTM framework to integrate45,46,47,48 genetic and MRI data holds significant potential to enhance breast cancer diagnosis and treatment planning. We plan to explore multimodal approaches in future work to further improve the model’s relevance in clinical practice.

Data availability

The data that support the findings of this study are available at https://www.kaggle.com/datasets/paultimothymooney/breast-histopathology-images/data and https://www.kaggle.com/datasets/forderation/breakhis-400x/data.

References

El-Nabawy et al. A feature-fusion framework of clinical, genomics, and histopathological data for METABRIC breast cancer subtype classification. Appl. Soft Comput. 91, 106238. https://doi.org/10.1016/j.asoc.2020.106238 (2020).

Aggarwal, R. et al. Diagnostic accuracy of DL in medical imaging: a systematic review and meta-analysis. NPJ Digital Medicine 4(1). https://doi.org/10.1038/s41746-021-00438-z (2021).

Bhatt, C. et al. The state of the art of DL models in medical science and their challenges. Multimedia Syst. 27(4), 599–613. https://doi.org/10.1007/s00530-020-00694-1 (2020).

Nassif, A. et al. Breast cancer detection using artificial intelligence techniques: a systematic literature review. Artif. Intell. Med. 127, 102276. https://doi.org/10.1016/j.artmed.2022.102276 (2022).

Elshewey, A. M. et al. Orthopedic disease classification based on breadth-first search algorithm. Sci. Rep. 14(1), 23368. https://doi.org/10.1038/s41598-024-73559-6 (2024).

Elkenawy, El-Sayed, M. et al. Greylag goose optimization and multilayer perceptron for enhancing lung cancer classification. Sci. Rep. 14(1), 23784. https://doi.org/10.1038/s41598-024-72013-x (2024).

Elshewey, A. M. et al. EEG-based optimization of eye state classification using modified-BER metaheuristic algorithm. Sci. Rep. 14(1), 24489. https://doi.org/10.1038/s41598-024-74475-5 (2024).

El-Rashidy, N. et al. Multitask multilayer-prediction model for predicting mechanical ventilation and the associated mortality rate. Neural Comput. Appl. 1–23. https://doi.org/10.1007/s00521-024-10468-9 (2024).

Hosny, K. M. et al. Explainable ensemble deep learning-based model for brain tumor detection and classification. Neural Comput. Appl. 1–18. https://doi.org/10.1007/s00521-024-10401-0 (2024).

Litjens, G. et al. A survey on DL in medical image analysis. Medical Image Anal. 42, 60–88. https://doi.org/10.1016/j.media.2017.07.005 (2017).

Wang, X. et al. Intelligent Hybrid DL Model for Breast Cancer Detection. Electronics 11(17), 2767. https://doi.org/10.3390/electronics11172767 (2022).

Zakareya, S. et al. A New Deep-Learning-Based Model for Breast Cancer Diagnosis from Medical Images. Diagnostics 13(11), 1944. https://doi.org/10.3390/diagnostics13111944 (2023).

Jayandhi, G. et al. Mammogram Learning System for breast Cancer diagnosis using DL SVM. Comput. Syst. Sci. Eng. 40(2), 491–503. https://doi.org/10.32604/csse.2022.016376 (2022).

Kavitha, T. et al. DL Based Capsule Neural Network Model for Breast Cancer Diagnosis Using Mammogram Images. Interdiscip. Sci. Computat. Life Sci. 14(1), 113–129. https://doi.org/10.1007/s12539-021-00467-y (2021).

Dewangan, K. et al. Breast cancer diagnosis in an early stage using novel DL with hybrid optimization technique. Multimedia Tools Appl. 81(10), 13935–13960. https://doi.org/10.1007/s11042-022-12385-2 (2022).

Vigil, N. et al. Dual-Intended DL Model for Breast Cancer Diagnosis in Ultrasound Imaging. Cancers 14(11), 2663. https://doi.org/10.3390/cancers14112663 (2022).

Altan, G. Breast cancer diagnosis using deep belief networks on ROI images. Pamukkale Univ. J. Eng. Sci. 28(2), 286–291. https://doi.org/10.5505/pajes.2021.38668 (2022).

Raaj, R. & Sathesh Breast cancer detection and diagnosis using hybrid DL architecture. Biomedical Signal Processing and Control 82, 104558. https://doi.org/10.1016/j.bspc.2022.104558 (2023).

Lin, R. H. et al. Application of DL to construct breast Cancer diagnosis model. Appl. Sci. 12(4), 1957. https://doi.org/10.3390/app12041957 (2022).

Houssein, E. H. et al. An optimized DL architecture for breast cancer diagnosis based on improved marine predators algorithm. Neural Comput. Appl. 34(20), 18015–18033. https://doi.org/10.1007/s00521-022-07445-5 (2022).

Rahman, H. et al. Efficient Breast Cancer Diagnosis from Complex Mammographic Images Using Deep Convolutional Neural Network. Computat. Intell. Neurosci. 2023, 1–11. https://doi.org/10.1155/2023/7717712 (2023).

Saber, A. et al. A Novel Deep-Learning Model for Automatic Detection and classification of breast Cancer using the transfer-learning technique. IEEE Access. 9, 71194–71209. https://doi.org/10.1109/access.2021.3079204 (2021).

Hirra, I. et al. Breast Cancer classification from histopathological images using Patch-based DL modeling. IEEE Access. 9, 24273–24287. https://doi.org/10.1109/access.2021.3056516 (2021).

Breast Histopathology Images. Kaggle, 19. Dec. 2017. www.kaggle.com/datasets/paultimothymooney/breast-histopathology-images/data (2017).

BreaKHis 400X. Kaggle. 29 July 2020. www.kaggle.com/datasets/forderation/breakhis-400x/data.

Alzakari, S. A. et al. Early detection of Potato Disease using an enhanced convolutional neural network-long short-term memory Deep Learning Model. Potato Res. 1–19. https://doi.org/10.1007/s11540-024-09760-x (2024).

Aljuaydi, F. et al. A deep learning prediction model to Predict Sustainable Development in Saudi Arabia. Appl. Math. Inform. Sci. 18, 1345–1366. https://doi.org/10.18576/amis/180615 (2024).

Elshewey, A. M. et al. Weight prediction using the Hybrid Stacked-LSTM Food Selection Model. Comput. Syst. Sci. Eng. 46(1), 765–781. https://doi.org/10.32604/csse.2023.034324 (2023).

Shams, M. Y. et al. Predicting Gross Domestic Product (GDP) using a PC-LSTM-RNN model in urban profiling areas. Comput. Urban Sci. 4(1), 3. https://doi.org/10.1007/s43762-024-00116-2 (2024).

Eed, M. et al. Potato consumption forecasting based on a hybrid stacked Deep Learning Model. Potato Res. 1–25. https://doi.org/10.1007/s11540-024-09764-7 (2024).

Tarek, Z. et al. An optimized model based on deep learning and gated recurrent unit for COVID-19 death prediction. Biomimetics 8(7), 552. https://doi.org/10.3390/biomimetics8070552 (2023).

Alzakari, S. A. et al. An enhanced long short-term memory recurrent neural network Deep Learning Model for Potato Price Prediction. Potato Res. 1–19. https://doi.org/10.1007/s11540-024-09744-x (2024).

Abdelhamid, A. A. et al. Potato harvesting prediction using an Improved ResNet-59 model. Potato Res. 1–20. https://doi.org/10.1007/s11540-024-09773-6 (2024).

Alkhammash, E. H. et al. A hybrid ensemble stacking model for gender voice recognition approach. Electronics 11(11), 1750. https://doi.org/10.3390/electronics11111750 (2022).

Fouad, Y. et al. Adaptive visual sentiment prediction model based on event concepts and object detection techniques in Social Media. Int. J. Adv. Comput. Sci. Appl. 14(7). https://doi.org/10.14569/ijacsa.2023.0140728 (2023).

Elshewey, A. M. et al. Enhancing Heart Disease classification based on Greylag Goose Optimization Algorithm and long short-term memory. Sci. Rep. 15(1). https://doi.org/10.1038/s41598-024-83592-0 (2025).

Khatir, A. et al. A new hybrid PSO-YUKI for double cracks identification in CFRP cantilever beam. Composite Structures 311, 116803. https://doi.org/10.1016/j.compstruct.2023.116803 (2023).

Khatir, A. et al. Advancing structural integrity prediction with optimized neural network and vibration analysis. J. Struct. Integr. Maintenance 9(3). https://doi.org/10.1080/24705314.2024.2390258 (2024).

Khatir, A., Capozucca, R., Khatir, S. & Magagnini, E. Vibration-based crack prediction on a beam model using hybrid butterfly optimization algorithm with artificial neural network. Front. Struct. Civil Eng. 16(8), 976–989. https://doi.org/10.1007/s11709-022-0840-2 (2022).

Achouri, F. et al. Structural health monitoring of beam model based on swarm intelligence-based algorithms and neural networks employing FRF. J. Brazilian Soc. Mech. Sci. Eng. 45(12). https://doi.org/10.1007/s40430-023-04525-y (2023).

Mahmood, T. et al. Breast lesions classifications of mammographic images using a deep convolutional neural network-based approach. PLOS ONE 17(1), e0263126. https://doi.org/10.1371/journal.pone.0263126 (2022).

Khan, M. A., Kadry, S., Zhang, Y. D., Akram, T. & Sharif, M. A brief survey on breast cancer diagnostic with deep learning schemes using multi-image modalities. IEEE Access. 8, 165779–165809. https://doi.org/10.1109/ACCESS.2020.3021343 (2021).

Khan, M. A., Kadry, S., Zhang, Y. D., Akram, T. & Sharif, M. Breast cancer detection using artificial intelligence techniques: a systematic literature review. Expert Syst. Appl. 211, 123747. https://doi.org/10.1016/j.eswa.2024.123747 (2024).

Khan, M. A., Kadry, S., Zhang, Y. D., Akram, T. & Sharif, M. An automated in-depth feature learning algorithm for breast abnormality prognosis and robust characterization from mammography images using deep transfer learning. Biology 10(9), 859. https://doi.org/10.3390/biology10090859 (2021).

Mahmood, T. et al. Harnessing the Power of Radiomics and Deep learning for improved breast Cancer diagnosis with multiparametric breast mammography. Expert Syst. Appl. 249, 123747. https://doi.org/10.1016/j.eswa.2024.123747 (2024).

Mahmood, T. et al. Recent advancements and future prospects in active deep learning for medical image segmentation and classification. IEEE Access. 11, 113623–113652. https://doi.org/10.1109/access.2023.3313977 (2023).

Iqbal, S. et al. On The Analyses of Medical Images Using Traditional Machine Learning Techniques and Convolutional Neural Networks. Arch. Comput. Methods Eng. 30(5), 3173–3233. https://doi.org/10.1007/s11831-023-09899-9 (2023).

Iqbal, S. et al. Improving the Robustness and Quality of Biomedical CNN Models Through Adaptive Hyperparameter Tuning. Appl. Sci. 12(22), 11870. https://doi.org/10.3390/app122211870 (2022).

Acknowledgements

This work was funded by the University of Jeddah, Jeddah, Saudi Arabia, under grant No. (UJ-24-DR-20387-1). Therefore, the authors thank the University of Jeddah for its technical and financial support.

Author information

Contributions

Mourad kaddes: Writing, Project administration and Conceptualization. Yasser M. Ayid: Writing, Investigation and Project administration. Ahmed M. Elshewey: prepared figures and recorded tables results. Yasser Fouad: Wrote the main manuscript, editing and Formal analysis. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kaddes, M., Ayid, Y.M., Elshewey, A.M. et al. Breast cancer classification based on hybrid CNN with LSTM model. Sci Rep 15, 4409 (2025). https://doi.org/10.1038/s41598-025-88459-6

DOI: https://doi.org/10.1038/s41598-025-88459-6
