Abstract

Diabetic retinopathy (DR) is a leading cause of vision impairment, characterized by the presence of microaneurysms, exudates, and other intricate retinal lesions. Traditional image segmentation methods often struggle with these complex features because of poor boundary detection, noise sensitivity, and difficulties with low-contrast images. These limitations result in suboptimal segmentation, potentially compromising accurate diagnosis. This study introduces a hybrid segmentation approach that integrates K-Means clustering with Graph Cut optimization. K-Means clustering provides an initial segmentation by categorizing the image into distinct regions, while Graph Cut refines these regions by optimizing boundary delineations based on pixel similarity and spatial continuity. This integration addresses the noise sensitivity and boundary detection issues of traditional methods, especially in low-contrast scenarios. The proposed method has been verified on standard datasets, achieving a Mean Squared Error (MSE) of 0.044, a Peak Signal-to-Noise Ratio (PSNR) of 40.84 dB, and a Structural Similarity Index (SSIM) of 0.92. These results surpass recent benchmarks reported in the literature, where existing methods typically achieve PSNR values between 30 and 35 dB, MSE values of 0.038 ± 0.007, and SSIM values of around 0.85 to 0.90. The improvements in PSNR and SSIM underscore the image quality and structural preservation offered by the approach. By merging the strengths of K-Means and Graph Cut, the proposed hybrid method provides a robust, scalable, and computationally efficient solution for retinal image segmentation, enhancing the early detection of DR and supporting global ophthalmic care initiatives. The proposed work thereby promotes innovation in healthcare technology with a focus on Sustainable Development Goal 9, aiming for accessible, accurate, and scalable solutions for ophthalmic care globally.


Introduction

DR is a primary complication of diabetes and a key cause of vision loss. Early diagnosis and precise segmentation of retinal lesions are essential for prompt intervention and efficient management of DR. Image segmentation techniques make this possible by distinguishing pathological characteristics such as hemorrhages, exudates, and microaneurysms (Fig. 1) in fundus images. However, because features such as microaneurysms and exudates are intricate, DR lesions are difficult for traditional image segmentation approaches to identify accurately. Recent hybrid approaches that combine graph-based optimisation with unsupervised learning promise improved precision. This research presents a hybrid segmentation approach intended to improve lesion identification and early diagnosis, and thereby to minimize vision loss.

Owing to its simplicity and ease of use, K-means clustering1 is a popular method for segmenting images. It clusters pixels according to their similarity in colour or intensity. In the context of diagnosing DR, K-means clustering allocates each pixel to one of K clusters and so divides the retinal image into distinct regions, such as blood vessels, lesions, and background. K is set to 5 in this study to reflect these discrete zones. Although K-means works well for locating large regions inside an image, it has a number of shortcomings. Because it relies on simple pixel intensity values, one of its main drawbacks is that it cannot handle regions that are complicated or overlapping, such as the fine features of retinal diseases. K-means also handles image noise poorly and disregards the spatial relationships between pixels, which can lead to inaccurate segmentation, particularly for objects with irregular boundaries such as lesions.

Graph cut segmentation2, on the other hand, is a more advanced region-based segmentation method that employs graph-based optimisation to provide more accurate outcomes. The image is represented as a graph in which each pixel is a node and the edges capture the relationships between adjacent pixels. By cutting the graph in a way that minimizes an energy function balancing a data-fitting term against boundary smoothness, graph cut provides more precise and coherent segmentations, especially for complicated regions such as lesions in retinal images. Although graph cut is very accurate, it has certain limitations. On large images the algorithm is computationally expensive, and it is sensitive to initialization. It also has trouble with low-contrast or noisy images, which makes it less useful for medical imaging tasks such as identifying faint lesion borders.

Taking into account the limitations of K-means and graph cut when each is employed alone, this research proposes a hybrid image segmentation method that combines the advantages of the two techniques to increase lesion identification accuracy in retinal images. To provide a broad first segmentation, the method first applies K-means clustering to divide the image into three regions: blood vessels, lesions, and background. Graph cut segmentation, which optimizes the boundaries between these regions based on both pixel similarity and spatial continuity, is then used to refine the K-means results.

To overcome the limitations of each technique when applied separately, our hybrid methodology combines the speed and efficiency of K-means to rapidly group pixels with the precision of graph cut to fine-tune the segmentation. The combined methodology enhances the detection of DR lesions and is validated against state-of-the-art techniques using comprehensive evaluation metrics.

Related works

Qiao et al.3 presented a semantic segmentation approach for classifying fundus images. The technique separates pixels according to their shared semantics in order to detect microaneurysms, offering an automated approach that helps ophthalmologists assess the fundus. Chen et al.2 reviewed graph-based medical image segmentation methods, in which pixels or regions are represented as graph nodes and segmentation is achieved through energy minimization using the s-t cut/maximum flow algorithm. The article covers various techniques, including graph-based models and integrated approaches, and their integration with deep learning for region and surface segmentation.

Ma et al.1 proposed a segmentation technique for medical visualization that enhances target feature extraction in 3D views. The method is verified on medical datasets, showing improved accuracy. Cho et al.4 proposed a non-iterative mean-shift-based method that makes the range kernel anisotropic, improving accuracy by incorporating global and local attributes. It reduces over-segmentation by merging segments based on chromatic variance and speeds up processing with a region adjacency graph. Experimental results show improved segmentation accuracy and a 42× faster merging process compared with benchmark methods.

Imran et al.5 presented a comparative analysis of various systems for automated retinal blood vessel segmentation, which is crucial for diagnosing cardiovascular and retinal diseases. The authors reviewed fundus photography, publicly available datasets, preprocessing and postprocessing techniques, and both supervised and unsupervised segmentation approaches, aiming to provide an understanding of vessel segmentation techniques. Lian et al.6 proposed a Global and Local Enhanced Residual U-Net (GLUE) that addresses the limitations of global pre-processing by incorporating local patch information and geometric constraints. The method improves segmentation accuracy, particularly for small vessels and optic disk discrimination, and outperforms the conventional U-Net.

For automated DR lesion segmentation, He et al.7 suggested a method that measures the spatial-spectral characteristics of retinal images. It uses the feature vectors across the whole spectrum to create low-dimensional spatial-spectral representations by combining a supervised regularization term with a generalized low-rank approximation of matrices. Dodo et al.8 proposed a fuzzy image processing and graph-cut-based method for robust segmentation of images into five retinal layers, addressing challenges such as speckle noise and intensity inhomogeneity. By establishing a region of interest and integrating Fuzzy C-means with continuous max-flow, the method enhances segmentation accuracy. Experimental results on 225 OCT B-scans demonstrate its effectiveness in aiding early diagnosis of major eye diseases.

Pellegrini et al.9 proposed a fully automated artery/vein classification method for ultra-wide-field retinal fundus images acquired with a scanning laser ophthalmoscope. A graph representation is formulated, followed by a graph-cut approach for optimal separation. Tested on three datasets, the method reached an accuracy of 0.883. Annunziata et al.10 proposed an unsupervised vessel segmentation method for retinal images that addresses the challenges posed by exudates and other abnormalities. The method applies a novel inpainting filter to reduce false positives and enhances vessels using a multi-scale Hessian approach, achieving over 95% accuracy on the STARE and HRF datasets.

Shi et al.11 proposed a network that jointly addresses four visual prediction tasks, including segmentation. It introduces a decoding mechanism to enhance recognition and a training strategy to mitigate domain and task gaps. Experiments on four datasets validate its effectiveness over separate task-specific models. Deng et al.12 proposed an approach to visual emotion analysis that leverages emotional prompts with multiple views to improve emotional representation. It introduces three key components: a context prompt, which translates images into captions for background information; a hybrid emotion prompt, which integrates emotional visual and textual information; and a label prompt, which strengthens emotional associations with labels. The reported metrics of the model are highly satisfactory.

Tu et al.13 proposed a network that addresses the problem of distinguishing background from anomaly features. The method uses one module to separate high-frequency from low-frequency components, a second module to emphasize anomalies, and a self-attention mechanism for accurate recovery. Wang et al.14 proposed a traffic flow prediction method that accounts for the variety of vehicles and uses visual techniques to quantify traffic. The reported metrics of the model are favourable.

The review by Feng et al.15 discusses carbon quantum dots (CQDs). It covers the methods used to synthesize and purify CQDs and explains their optical properties. Its main focus is the use of CQDs in biomedical fields, including their role in detection, imaging, and drug delivery for treatments such as targeted therapy and photodynamic therapy. The paper concludes with a look at the challenges and future potential of CQDs in medicine. Zhang et al.16 suggested that evacuating a hematoma using an image-guided tract approach, while preserving the remaining corticospinal tract, appears to work well for patients with intracerebral hemorrhage (ICH) who have high functional independence scores.

Proposed method

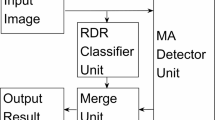

This section gives an overview of the proposed method. An input retinal image is taken from an online database and pre-processed to enhance its quality so that the subsequent operations perform well. It is then fed to the primary segmentation block, where the centroids are calculated and the initial clusters are formed. The clustered image is passed to the Graph Cut block for further refinement. The overall flow is displayed in Fig. 2, and the output of each block is shown in Fig. 3:

- (a) Represents the input fed to the proposed method from the dataset, discussed in “Input retinal images” section

- (b) Represents the pre-processed input, discussed in “Preprocessing” section

- (c) & (d) Represent the initial segmentation of the pre-processed image by the K-Means algorithm, discussed in “K-means algorithm for initial segmentation” & “Clustered image segments” sections

- (e) Represents the final segmented image produced by the Graph Cut algorithm, discussed in “Graph construction” section

Input retinal images

The input images required to train the proposed method are taken from publicly available online databases, which are described in Table 1. Of the total images, 80% are used for training the algorithm, 10% for model validation, and the remaining 10% for testing.

A healthy retina is labelled zero, a retina with mild retinopathy is labelled one, moderate retinopathy is labelled two, severe retinopathy is labelled three, and a retina with proliferative retinopathy is labelled four.
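As an illustration of the 80/10/10 partition and the grade labels described above, the following Python sketch shuffles a list of image identifiers and splits it. The identifiers, the fixed seed, and the name `split_dataset` are hypothetical; the text does not state how the split was actually drawn.

```python
import random

# DR grade labels as listed in the text.
DR_GRADES = {0: "healthy", 1: "mild", 2: "moderate", 3: "severe", 4: "proliferative"}

def split_dataset(items, train=0.8, val=0.1, seed=0):
    """Shuffle items and return (train, validation, test) lists."""
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)  # fixed seed: reproducible split (assumption)
    n_train = round(len(shuffled) * train)
    n_val = round(len(shuffled) * val)
    return (shuffled[:n_train],
            shuffled[n_train:n_train + n_val],
            shuffled[n_train + n_val:])

# Hypothetical image identifiers standing in for the real file names.
image_ids = [f"img_{i:04d}" for i in range(100)]
train_ids, val_ids, test_ids = split_dataset(image_ids)
```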

Preprocessing

Image resizing

The input images in the databases have different dimensions, so they are first resized, as displayed in Figs. 4 and 5b. Let the input image be I; the resized image is obtained as Iresized = Resize(I). For example, an image from the Messidor database with dimensions 1266 × 1306 is resized to the standard dimensions of 256 × 256.
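The resizing step can be sketched in plain Python as follows. The interpolation scheme is not stated in the text, so nearest-neighbour sampling is an assumption here, and `resize_nearest` is a hypothetical helper operating on a nested list rather than a real fundus image.

```python
def resize_nearest(image, new_h, new_w):
    """Resize a 2-D list of pixel values with nearest-neighbour sampling."""
    old_h, old_w = len(image), len(image[0])
    return [
        [image[r * old_h // new_h][c * old_w // new_w] for c in range(new_w)]
        for r in range(new_h)
    ]

# Tiny stand-in for an input image: a 4 x 6 grid reduced to 2 x 3.
small = [[r * 10 + c for c in range(6)] for r in range(4)]
resized = resize_nearest(small, 2, 3)
```

The same call with `new_h = new_w = 256` would give the standard dimensions mentioned above.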

Colour space conversion

The resized image is then converted from the RGB colour space to grayscale.

Features such as microaneurysms, exudates, and hemorrhages are primarily intensity-based rather than colour-dependent, so a grayscale representation is sufficient to retain the diagnostic details essential for segmentation. Grayscale conversion also improves the efficiency and accuracy of the K-Means and Graph Cut segmentation algorithms, which benefit from the reduced noise and clearer intensity-based regions of grayscale images. This approach is consistent with the analysis of fundus images in medical diagnostics, where intensity variations often highlight pathological regions, and it keeps the method compatible with standard practice while simplifying the segmentation process. The result is shown in Fig. 5c.
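A minimal sketch of the colour space conversion is given below. The conversion weights are not given in the text; the widely used ITU-R BT.601 luminance weights (0.299, 0.587, 0.114) are assumed, and the function names are hypothetical.

```python
def rgb_to_gray(pixel):
    """Weighted luminance of one (R, G, B) pixel; BT.601 weights are an assumption."""
    r, g, b = pixel
    return 0.299 * r + 0.587 * g + 0.114 * b

def to_grayscale(image):
    """Convert a 2-D list of (R, G, B) tuples to a 2-D list of intensities."""
    return [[rgb_to_gray(p) for p in row] for row in image]

# Four illustrative pixels: pure red, pure green, pure blue, and white.
rgb_image = [[(255, 0, 0), (0, 255, 0)],
             [(0, 0, 255), (255, 255, 255)]]
gray_image = to_grayscale(rgb_image)
```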

Noise reduction

In fundus images, high-frequency noise obscures fine details such as the edges of blood vessels or lesions. Convolution with a Gaussian kernel suppresses this noise, resulting in a clearer image with smooth intensity transitions. This improves the performance of the subsequent K-Means and Graph Cut segmentation algorithms, enabling precise lesion detection.

The Gaussian kernel is widely adopted for noise reduction in fundus images because it smooths high-frequency noise while preserving essential retinal features such as blood vessels, microaneurysms, and hemorrhages. This is crucial for keeping diagnostic details intact and for accurate lesion detection and segmentation. Because the grayscale image is found to contain some high-frequency noise, a Gaussian kernel is used to remove it, as shown in Fig. 5d.

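The grayscale image Iconverted (x, y) is convolved with the Gaussian kernel function G (x, y) to obtain the noise-reduced image Ismoothed (x, y). Mathematically,

$$I_{smoothed}(x, y) = I_{converted}(x, y) * G(x, y), \qquad G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$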

x, y are the pixel coordinates relative to the kernel’s centre.

σ is the standard deviation, controlling the degree of smoothing.

G (x, y) represents the weight assigned to the intensity of the pixel at position (x,y).
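The smoothing step can be sketched as follows. The kernel size (3 × 3), σ = 1.0, and the border handling (clamping coordinates at the image edge) are assumptions, since the text does not specify them; the kernel is normalised so that its weights sum to 1.

```python
import math

def gaussian_kernel(size, sigma):
    """Return a normalised size x size Gaussian kernel (size must be odd)."""
    half = size // 2
    kernel = [
        [math.exp(-(x * x + y * y) / (2.0 * sigma * sigma))
         for x in range(-half, half + 1)]
        for y in range(-half, half + 1)
    ]
    total = sum(sum(row) for row in kernel)
    return [[v / total for v in row] for row in kernel]

def smooth(image, kernel):
    """Convolve a 2-D list with a symmetric kernel, clamping at the borders."""
    h, w = len(image), len(image[0])
    half = len(kernel) // 2
    out = [[0.0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            acc = 0.0
            for dy in range(-half, half + 1):
                for dx in range(-half, half + 1):
                    rr = min(max(r + dy, 0), h - 1)  # clamp row index
                    cc = min(max(c + dx, 0), w - 1)  # clamp column index
                    acc += image[rr][cc] * kernel[dy + half][dx + half]
            out[r][c] = acc
    return out

# A flat patch with one noisy spike in the centre.
noisy = [[10, 10, 10, 10, 10],
         [10, 10, 10, 10, 10],
         [10, 10, 90, 10, 10],
         [10, 10, 10, 10, 10],
         [10, 10, 10, 10, 10]]
kernel = gaussian_kernel(3, sigma=1.0)
smoothed = smooth(noisy, kernel)
```

In this toy example the spike is spread over its neighbours while the flat corner pixels keep their original value.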

The input and its corresponding output across each stage of pre-processing is visualised in Fig. 5.

K-means algorithm for initial segmentation

The pre-processed image Ismoothed (x, y) is the input to the primary segmentation. Using the elbow method, the K-means algorithm13,14 is run for a range of K values and the within-cluster variance is plotted against K; the optimum K value is found to be 5 in this method. The K initial cluster centers are denoted {µ1, µ2, …, µK}. For each pixel at position (x, y), the distance to every cluster center µi is computed, and the pixel is assigned to the nearest cluster C (x, y). Mathematically,

$$C(x, y) = \arg\min_{i \in \{1, \dots, K\}} \left| I_{smoothed}(x, y) - \mu_i \right|^{2}$$

Ismoothed (x, y) represents the pixel’s intensity value with the position (x, y).

µi is the cluster’s center.

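Each cluster center is then updated to the mean intensity of the pixels assigned to it:

$$\mu_i = \frac{1}{N_i} \sum_{(x, y)\,:\,C(x, y) = i} I_{smoothed}(x, y)$$

where Ni is the number of pixels assigned to cluster i. The above steps are repeated until convergence. Once the algorithm converges, each pixel (x, y) is assigned to a cluster C (x, y).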
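The assignment and update steps, together with the within-cluster variance used by the elbow method, can be sketched on a flat list of intensities as follows. The evenly spaced initial centers and the toy intensities are assumptions chosen to make the example deterministic; the text does not state how the centers are initialised.

```python
def kmeans_1d(values, k, max_iter=100):
    """Cluster scalar intensities; returns (centres, labels)."""
    lo, hi = min(values), max(values)
    # Deterministic initialisation: centres spread evenly over the range.
    centres = [lo + (hi - lo) * (i + 0.5) / k for i in range(k)]
    labels = None
    for _ in range(max_iter):
        # Assignment step: nearest centre by squared distance.
        new_labels = [min(range(k), key=lambda i: (v - centres[i]) ** 2)
                      for v in values]
        if new_labels == labels:  # converged: assignments no longer change
            break
        labels = new_labels
        # Update step: each centre becomes the mean of its members.
        for i in range(k):
            members = [v for v, lab in zip(values, labels) if lab == i]
            if members:
                centres[i] = sum(members) / len(members)
    return centres, labels

def within_cluster_variance(values, centres, labels):
    """Sum of squared distances of each value to its cluster centre."""
    return sum((v - centres[lab]) ** 2 for v, lab in zip(values, labels))

# Toy intensities with three visible groups (dark, mid, bright).
pixels = [12, 15, 11, 120, 118, 125, 240, 238]
centres, labels = kmeans_1d(pixels, k=3)

# Elbow curve: within-cluster variance for K = 1..4.
elbow = {k: within_cluster_variance(pixels, *kmeans_1d(pixels, k))
         for k in range(1, 5)}
```

Plotting `elbow` against K and looking for the point where the curve flattens is the elbow criterion; for a real image the list would hold every pixel of Ismoothed.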

Clustered image segments

For the initial segmentation using K-Means, if the cluster size is chosen to be 3, the result is an under-segmented image (Fig. 6a). If the cluster size is chosen to be 6, the result is an over-segmented image (Fig. 6b). The algorithm converges15,16 when the cluster size K is set to 5.

Graph construction

The cluster assignments obtained from K-Means clustering are used to construct a graph representation of the image. Each pixel is treated as a node in the graph, with edges denoting the connections between adjacent pixels; each pixel is connected to its 8 neighboring pixels. Weights are allocated according to pixel similarity so that cluster boundaries are kept in place: the weight of each edge represents the dissimilarity between the connected pixels, so pixels within the same cluster have low dissimilarity, while pixels in different clusters have high dissimilarity. The graph cut method then refines the segmentation by minimizing the energy function, yielding precise and smooth lesion boundaries.

Edge weighting

The weights assigned to the edges drive the final segmentation. Edges connecting pixels within the same cluster are assigned lower weights, encouraging the graph cut algorithm to keep similar pixels together. In contrast, edges connecting pixels from different clusters are assigned higher weights, promoting separation between distinct clusters and ensuring the isolation of dissimilar pixels.
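The graph construction and edge weighting described above can be sketched as follows. The numeric weights `w_low = 1.0` and `w_high = 10.0` and the function name `build_edges` are hypothetical; the text states only that same-cluster edges receive lower weights than cross-cluster edges.

```python
def build_edges(labels, w_low=1.0, w_high=10.0):
    """Return {(node_a, node_b): weight} for an 8-connected pixel grid.

    labels is a 2-D list of K-Means cluster indices; nodes are (row, col).
    """
    h, w = len(labels), len(labels[0])
    # Four of the eight offsets; the opposite four would only duplicate
    # each undirected edge.
    offsets = [(0, 1), (1, -1), (1, 0), (1, 1)]
    edges = {}
    for r in range(h):
        for c in range(w):
            for dr, dc in offsets:
                rr, cc = r + dr, c + dc
                if 0 <= rr < h and 0 <= cc < w:
                    same = labels[r][c] == labels[rr][cc]
                    edges[((r, c), (rr, cc))] = w_low if same else w_high
    return edges

# Toy 2 x 3 cluster map with two clusters.
cluster_map = [[0, 0, 1],
               [0, 1, 1]]
edges = build_edges(cluster_map)
```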

Graph cut algorithm for refinement

The algorithm partitions the graph into 3 segments by minimizing a cost function, expressed as E(C) = D(C) + λ S(C), where C represents the segment assigned to each pixel (1 for circle and 0 for background) and λ is the parameter balancing the data and smoothness terms. The data term penalizes assigning similar pixels to different segments, and the smoothness term penalizes irregular or disconnected segments. The data term is given by:

$$D(C) = \sum_{(i, j) \in N} w_{high} \, |I(i) - I(j)| \, [C(i) \neq C(j)]$$

where N is the set of neighboring pixel pairs, w_high is the weight for dissimilar pixels, |I(i) − I(j)| is the absolute intensity difference between neighboring pixels, and [C(i) ≠ C(j)] is the indicator function that penalizes pixels of different clusters being assigned to different segments. The smoothness term is expressed as

$$S(C) = \sum_{(i, j) \in N} w_{low} \, [C(i) \neq C(j)]$$

where w_low is the weight for similar pixels and [C(i) ≠ C(j)] is the indicator function that penalizes assigning neighboring pixels to different segments.

Minimizing the cost function

The graph cut algorithm finds the segment assignment C that minimizes the overall cost function E, so that the final segmentation takes into account both the K-Means output and the spatial relationships between the pixels.
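To make the minimization concrete, the sketch below solves a small binary graph cut exactly with a max-flow (Edmonds-Karp) computation on a 1-D chain of pixels. It is an illustration under stated assumptions, not the implementation used in this work: the per-pixel costs are the absolute distances to two assumed cluster means, the smoothness penalty is a constant λ per label change, and the names `min_cut_labels`, `mu_background`, and `mu_lesion` are hypothetical.

```python
from collections import deque

def min_cut_labels(cost0, cost1, lam):
    """Binary labels minimising sum(unary costs) + lam * (number of label changes)."""
    n = len(cost0)
    s, t = n, n + 1                      # source and sink node indices
    cap = [dict() for _ in range(n + 2)]

    def add_edge(u, v, c):
        cap[u][v] = cap[u].get(v, 0.0) + c
        cap[v].setdefault(u, 0.0)        # residual (reverse) edge

    for i in range(n):
        add_edge(s, i, cost0[i])         # cut when pixel i takes label 0
        add_edge(i, t, cost1[i])         # cut when pixel i takes label 1
        if i + 1 < n:                    # neighbour links carry the smoothness cost
            add_edge(i, i + 1, lam)
            add_edge(i + 1, i, lam)

    def bfs():
        """Breadth-first search in the residual graph; returns the parent map."""
        parent = {s: None}
        queue = deque([s])
        while queue:
            u = queue.popleft()
            for v, c in cap[u].items():
                if c > 1e-12 and v not in parent:
                    parent[v] = u
                    if v == t:
                        return parent
                    queue.append(v)
        return parent

    while True:
        parent = bfs()
        if t not in parent:              # no augmenting path left: flow is maximal
            break
        bottleneck, v = float("inf"), t
        while parent[v] is not None:     # find the path's smallest residual capacity
            bottleneck = min(bottleneck, cap[parent[v]][v])
            v = parent[v]
        v = t
        while parent[v] is not None:     # push flow along the path
            u = parent[v]
            cap[u][v] -= bottleneck
            cap[v][u] += bottleneck
            v = u
    # Pixels still reachable from the source form the label-1 side of the cut.
    return [1 if i in parent else 0 for i in range(n)]

signal = [10, 12, 120, 11, 200, 205, 198]   # toy intensities with one noisy pixel
mu_background, mu_lesion = 10, 200           # assumed cluster means
cost0 = [abs(v - mu_background) for v in signal]
cost1 = [abs(v - mu_lesion) for v in signal]
refined = min_cut_labels(cost0, cost1, lam=20)
unsmoothed = min_cut_labels(cost0, cost1, lam=0)
```

With λ = 0 the noisy pixel (intensity 120) is labelled on intensity alone, whereas with λ = 20 the smoothness penalty outweighs its data cost and it joins its neighbours, which is the refinement effect the graph cut stage is meant to provide.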

Results and discussions

The aim is to provide effective segmentation of DR images, which leads to early detection and hence to prevent vision loss. The primary findings of the proposed method provide optimal, acceptable qualitative and quantitative outcomes.

Input retinal images

The retinal images used for the implementation of the proposed method are sourced from the database discussed in “Input retinal images” section. Figure 7 visualizes both a healthy retina and a retina exhibiting abnormalities.

Qualitative evaluation metrics

Qualitative metrics are used to assess the quality of an image or a process without relying on numerical measurements. They are particularly useful when the assessment involves subjective judgments or when no ground truth is available for comparison. The qualitative results for the input images and the corresponding segmented outputs of the proposed approach are shown in Figs. 8, 9 and 10.

Qualitative representation of Input image and its corresponding segmented output.

Visual inspection of the segmented images revealed clear boundaries between different regions of interest, indicating the efficacy of the recommended method in capturing meaningful structures within the images.

Quantitative evaluation metrics

Quantitative evaluation metrics play a critical role in measuring the accuracy and efficacy of retinal image segmentation techniques. In this study, three widely used metrics namely MSE, PSNR and SSIM were employed. These metrics collectively ensure a robust evaluation of segmentation performance by balancing pixel-level accuracy, image fidelity, and structural preservation, which are critical for the effective diagnosis of DR.

MSE

In retinal fundus images, MSE measures the average squared difference between the segmented regions of blood vessels, lesions or background and the ground truth annotated by experts. A lower MSE implies that the segmented image closely matches the reference, indicating fewer errors in identifying critical structures like microaneurysms, hemorrhages, or exudates.

Mathematically, MSE is given by:

$$MSE = \frac{1}{mn} \sum_{i=1}^{m} \sum_{j=1}^{n} \left[ I(i, j) - I'(i, j) \right]^{2}$$

where m is the number of rows, n is the number of columns, I(i, j) is the intensity of the pixel at position (i, j) in the reference (ground-truth) image, and I′(i, j) is the intensity of the corresponding pixel at position (i, j) in the processed image. Typical MSE values for existing systems are 0.038 ± 0.007; the MSE of the proposed method is found to be 0.044.

PSNR

PSNR evaluates the segmentation quality by comparing the segmented retinal fundus image with the original ground truth in terms of signal fidelity. It is particularly useful for assessing the way the algorithm preserves detailed structures like blood vessel networks or lesion shapes while minimizing noise introduced during processing.

Mathematically, PSNR is given by:

$$PSNR = 10 \log_{10}\left(\frac{MAX^{2}}{MSE}\right)$$

where MAX is the maximum possible pixel value of the images and MSE is the error between the segmented image and the ground truth, calculated using (7). Typical PSNR values for existing systems range between 30 and 35 dB; the PSNR of the proposed method is found to be 40.83 dB.
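The two metrics can be computed as in the following sketch, which uses the standard definitions of MSE and PSNR. The toy images, the [0, 1] intensity scale, and the default `max_value=1.0` are assumptions made for illustration.

```python
import math

def mse(reference, processed):
    """Mean squared error between two equally sized 2-D lists."""
    m, n = len(reference), len(reference[0])
    total = sum((reference[i][j] - processed[i][j]) ** 2
                for i in range(m) for j in range(n))
    return total / (m * n)

def psnr(reference, processed, max_value=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    err = mse(reference, processed)
    if err == 0:
        return float("inf")
    return 10.0 * math.log10(max_value ** 2 / err)

# Toy ground truth and segmentation on a [0, 1] intensity scale.
ground_truth = [[0.0, 1.0],
                [1.0, 0.0]]
segmented = [[0.0, 1.0],
             [1.0, 0.2]]
error = mse(ground_truth, segmented)
quality_db = psnr(ground_truth, segmented)
```

Here one pixel out of four differs by 0.2, so the MSE is 0.04 / 4 = 0.01 and the PSNR is 10·log10(1 / 0.01) = 20 dB.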

SSIM

The SSIM assesses the similarity between the ground-truth and segmented images in terms of luminance, contrast, and structural information. Mathematically, SSIM is given by:

$$SSIM(x, y) = [L(x, y)]^{\alpha} \cdot [C(x, y)]^{\beta} \cdot [S(x, y)]^{\gamma}$$

where L, C, and S represent the luminance, contrast, and structure comparisons, respectively. α, β, and γ are constants that adjust the relative importance of the three components and are typically set to 1. SSIM values range from −1 to 1, where 1 indicates perfect similarity. The numerical values of the performance metrics discussed above are shown in Table 2. Typical SSIM values for existing systems range between 0.85 and 0.90; the SSIM of the proposed method is found to be 0.92.
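With α = β = γ = 1, the three comparisons combine into a single closed-form expression, sketched below. This version computes one global SSIM value over the whole image rather than averaging over sliding windows, and it uses the conventional stabilising constants (0.01·MAX)² and (0.03·MAX)²; both choices are assumptions, as the text does not give these details.

```python
def ssim_global(x, y, max_value=1.0):
    """Single-window SSIM of two equally sized 2-D lists (alpha = beta = gamma = 1)."""
    xs = [v for row in x for v in row]
    ys = [v for row in y for v in row]
    n = len(xs)
    mu_x = sum(xs) / n
    mu_y = sum(ys) / n
    var_x = sum((v - mu_x) ** 2 for v in xs) / n
    var_y = sum((v - mu_y) ** 2 for v in ys) / n
    cov = sum((a - mu_x) * (b - mu_y) for a, b in zip(xs, ys)) / n
    c1 = (0.01 * max_value) ** 2   # stabilises the luminance comparison
    c2 = (0.03 * max_value) ** 2   # stabilises the contrast/structure comparison
    return ((2 * mu_x * mu_y + c1) * (2 * cov + c2)) / (
        (mu_x ** 2 + mu_y ** 2 + c1) * (var_x + var_y + c2))

pattern = [[0.0, 1.0],
           [1.0, 0.0]]
inverted = [[1.0, 0.0],
            [0.0, 1.0]]
same_score = ssim_global(pattern, pattern)
inverted_score = ssim_global(pattern, inverted)
```

An image compared with itself scores 1, while the inverted pattern has negative covariance with the original and therefore a negative score, which illustrates the −1 to 1 range.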

The integration of K-Means clustering algorithm with Graph Cut for image segmentation produced promising results across the tested dataset as the numerical values are within its specific limit.

MSE highlights numerical accuracy but penalizes small errors disproportionately, particularly in fine structures such as blood vessels or microaneurysms. PSNR indicates overall image quality and the ability of the method to minimize noise while retaining diagnostic features. SSIM evaluates perceptual quality, emphasizing the preservation of clinically significant structures such as lesions, the optic disc, and vascular networks.

By combining these metrics, the evaluation provides a holistic assessment of segmentation performance, ensuring that both numerical accuracy and structural integrity are maintained. This is crucial for developing segmentation methods that align with clinical diagnostic needs in retinal fundus imaging.

Comparative analysis of existing and proposed method

When compared to standalone approaches, obtained results point to a significant improvement in lesion precision and image quality.

The proposed method achieves a PSNR of 40.82 and SSIM of 0.92, demonstrating superior performance compared to standalone K-Means (PSNR: − 38.21, SSIM: 0.006) and graph cut (PSNR: 12.05, SSIM: 0.104). The results demonstrate the superior performance of the proposed method across all metrics, emphasizing its clinical relevance and precision.

Table 3 provides a comparative analysis of various image segmentation methods based on two key metrics, PSNR and SSIM. These metrics are critical for evaluating the quality of image reconstruction and structural preservation.

Table 3 presents a comparison of various image segmentation methods for enhancing DR images, focusing on image quality and structural preservation. The proposed method demonstrates clear superiority over other approaches, showcasing its effectiveness in achieving optimal results. Its enhanced performance makes it particularly suitable for clinical applications, where high-quality image reconstruction is critical for accurate diagnosis and treatment planning in DR management.

Table 4 shows that the proposed method achieves high PSNR and SSIM values, indicating significant improvements in image quality over existing methods.

To demonstrate the robustness of the proposed method, additional metrics were computed and compared with those of other methods.

Table 5 provides a performance comparison of various models applied to the Messidor dataset for DR detection. The proposed method achieves the highest accuracy of 98%, surpassing the other state-of-the-art models.

Table 6 provides a performance comparison of various models tested on the Kaggle dataset for DR detection. The proposed method achieves the highest accuracy of 96.5%, outperforming the other listed approaches.

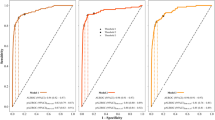

Figure 11 shows the graphical representation of performance metrics comparison between various segmentation algorithms with the proposed method.

The graph presents an analysis of several image segmentation algorithms. Three primary measures are used to evaluate each algorithm’s performance: PPV, sensitivity, and accuracy. These metrics describe the algorithms’ precision, detection ability, and overall correctness, respectively. The proposed method stands out by achieving high sensitivity and accuracy, demonstrating its strength in recognizing true positives while preserving overall reliability. The comparison highlights the benefits of the proposed approach and of deep learning models, indicating that they are more appropriate for contemporary image segmentation applications, particularly those that demand high sensitivity and accuracy.

All the obtained results were validated by an ophthalmologist.

Conclusion

This study introduced a hybrid segmentation approach that combines K-Means clustering with Graph Cut optimization to enhance retinal image segmentation. By leveraging the strengths of both techniques, the proposed method addresses challenges such as noise sensitivity, poor boundary detection, and low-contrast regions commonly encountered in traditional segmentation approaches. The integration of K-Means for initial clustering and Graph Cut for precise boundary refinement improves segmentation accuracy, making the method well suited to detecting diabetic retinopathy-related lesions. The proposed approach demonstrates significant improvements over existing methods, preserving structural details while minimizing segmentation errors. Its effectiveness has been validated on standard retinal image datasets, showing superior performance compared to standalone techniques. This development helps in the early and precise detection of diabetic retinopathy, supporting ophthalmologists in making informed clinical decisions. Future research can explore the integration of deep learning models to further enhance segmentation precision and adaptability. Additionally, real-time deployment of the method in ophthalmic diagnostic systems can enable large-scale screening and telemedicine applications. The findings of this study reinforce the importance of hybrid segmentation techniques in medical imaging, offering a scalable and efficient solution for automated retinal image analysis.

Data availability

The datasets analysed during the current study are included as a supplementary file. Detailed information and access to the dataset can be found in the supplementary file attached to this manuscript.

References

Ma, J., Muad, Y. A. & Chen, J. Visualization of medical volume data based on improved K-means clustering and segmentation rules. IEEE Access 9, 100498–100512. https://doi.org/10.1109/ACCESS.2021.3096790 (2021).

Chen, X. & Pan, L. A survey of graph cuts/graph search based medical image segmentation. IEEE Rev. Biomed. Eng. 11, 112–124. https://doi.org/10.1109/RBME.2018.2798701 (2018).

Qiao, L., Zhu, Y. & Zhou, H. Diabetic retinopathy detection using prognosis of microaneurysm and early diagnosis system for non-proliferative diabetic retinopathy based on deep learning algorithms. IEEE Access 8, 104292–104302. https://doi.org/10.1109/ACCESS.2020.2993937 (2020).

Cho, H., Kang, S.-J. & Kim, Y. H. Image segmentation using linked mean-shift vectors and global/local attributes. IEEE Trans. Circuits Syst. Video Technol. 27(10), 2132–2140. https://doi.org/10.1109/TCSVT.2016.2576918 (2017).

Imran, A., Li, J., Pei, Y., Yang, J.-J. & Wang, Q. Comparative analysis of vessel segmentation techniques in retinal images. IEEE Access 7, 114862–114887. https://doi.org/10.1109/ACCESS.2019.2935912 (2019).

Lian, S. et al. A global and local enhanced residual U-Net for accurate retinal vessel segmentation. IEEE/ACM Trans. Comput. Biol. Bioinform. 18(3), 852–862. https://doi.org/10.1109/TCBB.2019.2917188 (2021).

He, Y. et al. Segmenting diabetic retinopathy lesions in multispectral images using low-dimensional spatial-spectral matrix representation. IEEE J. Biomed. Health Inform. 24(2), 493–502. https://doi.org/10.1109/JBHI.2019.2912668 (2020).

Dodo, B. I., Li, Y., Kaba, D. & Liu, X. Retinal layer segmentation in optical coherence tomography images. IEEE Access 7, 152388–152398. https://doi.org/10.1109/ACCESS.2019.2947761 (2019).

Pellegrini, E. et al. A graph cut approach to artery/vein classification in ultra-widefield scanning laser ophthalmoscopy. IEEE Trans. Med. Imaging 37(2), 516–526. https://doi.org/10.1109/TMI.2017.2762963 (2018).

Annunziata, R., Garzelli, A., Ballerini, L., Mecocci, A. & Trucco, E. Leveraging multiscale hessian-based enhancement with a novel exudate inpainting technique for retinal vessel segmentation. IEEE J. Biomed. Health Inform. 20(4), 1129–1138. https://doi.org/10.1109/JBHI.2015.2440091 (2016).

Shi, H., Hayat, M. & Cai, J. Unified open-vocabulary dense visual prediction. IEEE Trans. Multimed. 26, 8704–8716. https://doi.org/10.1109/TMM.2024.3381835 (2024).

S. Deng et al. Learning to compose diversified prompts for image emotion classification. Preprint at http://arxiv.org/abs/2201.10963 (2022).

B. Tu et al. Anomaly detection in hyperspectral images using adaptive graph frequency location. In: IEEE Transactions on Neural Networks and Learning Systems. https://doi.org/10.1109/TNNLS.2024.3449573.

Wang, Q., Chen, J., Song, Y., Li, X. & Xu, W. fusing visual quantified features for heterogeneous traffic flow prediction. Promet Traffic Transp. https://doi.org/10.7307/ptt.v36i6.667 (2024).

Feng, H. et al. Orexin neurons to sublaterodorsal tegmental nucleus pathway prevents sleep onset rem sleep-like behavior by relieving the REM sleep pressure. Research (Wash. D. C.) https://doi.org/10.34133/research.0355 (2024).

Zhang, C. et al. Hematoma evacuation via image-guided para-corticospinal tract approach in patients with spontaneous intracerebral hemorrhage. Neurol. Ther. 10(2), 1001–1013. https://doi.org/10.1007/s40120-021-00279-8 (2021).

Shen, Z., Fu, H., Shen, J. & Shao, L. Modeling and enhancing low quality retinal fundus images. IEEE Trans. Med. Imaging 40(3), 996–1006 (2021).

Cheng, P., Lin, L., Huang, Y., Lyu, J. & Tang, X. I-SECRET: Importance-guided fundus image enhancement via semi-supervised contrastive constraining. In Proceedings of International Conference on Medical Image Computing and Computer-Assisted Intervention 87–96 (2021).

Tian, Q.-C. & Cohen, L. D. Global and local contrast adaptive enhancement for non-uniform illumination color images. In Proceedings of IEEE International Conference on Computer Vision Workshops 3023–3030 (2017).

Tai, Y.-W., Tan, P. & Brown, M. S. Richardson-Lucy deblurring for scenes under a projective motion path. IEEE Trans. Pattern Anal. Mach. Intell. 33(8), 1603–1618 (2011).

Cai, Y. et al. Mask-guided spectral-wise transformer for efficient hyperspectral image reconstruction. In Proceedings of IEEE Conference on Computer Vision and Pattern Recognition 17502–17511 (2022).

Ji, X. et al. Real-world super-resolution via kernel estimation and noise injection. In Proceedings of IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops 466–467 (2020).

Wang, X. et al. ESRGAN: Enhanced super-resolution generative adversarial networks. In Proceedings of European Conference on Computer Vision (ECCV) Workshops 63–79 (2018).

Deng, Z. et al. RFormer: Transformer-based generative adversarial network for real fundus image restoration on a new clinical benchmark. IEEE J. Biomed. Health Inform. 26(9), 4645–4654 (2022).

Wang, X., Xu, M., Zhang, J., Jiang, L. & Li, L. Deep multi-task learning for diabetic retinopathy grading in fundus images. In Proceedings of the AAAI Conference on Artificial Intelligence 2826–2834 (2021).

Wang, X. et al. Joint learning of multi-level tasks for diabetic retinopathy grading on low-resolution fundus images. IEEE J. Biomed. Health Inform. 26(5), 2216–2225 (2022).

Jia, Y., Chen, G. & Chi, H. Retinal fundus image super-resolution based on generative adversarial network guided with vascular structure prior. Sci. Rep. 14(1), 22786. https://doi.org/10.1038/s41598-024-74186-x (2024).

Pan, H., Li, Z., Fu, Y., Qin, X. & Hu, J. Reconstructing visual stimulus images from EEG signals based on deep visual representation model. Preprint at http://arxiv.org/abs/2403.06532v1 (2024).

Wang, W. et al. Low-light image enhancement based on virtual exposure. Signal Process. Image Commun. 118, 117016. https://doi.org/10.1016/j.image.2023.117016 (2023).

Chen, Y.-W., Wu, T.-Y., Wong, W.-H. & Lee, C.-Y. Diabetic retinopathy detection based on deep convolutional neural networks. In Proceedings of IEEE International Conference on Acoustics, Speech, and Signal Processing (ICASSP) 1030–1034 (2018).

Li, X. et al. CANet: Cross-disease attention network for joint diabetic retinopathy and diabetic macular edema grading. IEEE Trans. Med. Imaging 39(5), 1483–1493 (2020).

Sengupta, S., Singh, A., Zelek, J. & Lakshminarayanan, V. Cross domain diabetic retinopathy detection using deep learning. In Proceedings of SPIE, vol. 11139, 111390V. https://doi.org/10.1117/12.2529450 (2019).

He, A., Li, T., Li, N., Wang, K. & Fu, H. CABNet: Category attention block for imbalanced diabetic retinopathy grading. IEEE Trans. Med. Imaging 40(1), 143–153. https://doi.org/10.1109/TMI.2020.3023463 (2021).

Abbood, S. H. et al. Hybrid retinal image enhancement algorithm for diabetic retinopathy diagnostic using deep learning model. IEEE Access 10, 73079–73086. https://doi.org/10.1109/ACCESS.2022.3189374 (2022).

Simon, S. Retinal image enhancement and eye disease identification. In Proc. Int. Conf. Syst. Energy Environ. 1–7 (2019).

Harangi, B., Toth, J., Baran, A. & Hajdu, A. Automatic screening of fundus images using a combination of convolutional neural network and handcrafted features. In Proceedings of 41st Annual International Conference of the IEEE Engineering in Medicine and Biology Society (EMBC) 2699–2702. https://doi.org/10.1109/EMBC.2019.8857073 (2019).

Cao, P., Ren, F., Wan, C., Yang, J. & Zaiane, O. Efficient multi-kernel multi-instance learning using weakly supervised and imbalanced data for diabetic retinopathy diagnosis. Comput. Med. Imaging Graph. 69, 112–124. https://doi.org/10.1016/j.compmedimag.2018.08.008 (2018).

Qummar, S. et al. A deep learning ensemble approach for diabetic retinopathy detection. IEEE Access 7, 150530–150539 (2019).

Acknowledgements

We thank the Department of Science and Technology (DST-SERB), India, for funding this project under order number CRG/2022/008041.

Author information

Contributions

Both authors contributed equally to drafting the manuscript and developing the algorithm.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Bhoopalan, R., Priyadharshini, S. A novel approach for the segmentation of retinal images by integration K-means clustering algorithm with graph cut for image segmentation. Sci Rep 15, 35950 (2025). https://doi.org/10.1038/s41598-025-89262-z

DOI: https://doi.org/10.1038/s41598-025-89262-z