Abstract

The study aimed to develop an AI-assisted ultrasound model for early liver trauma identification, using data from Bama miniature pigs and patients in Beijing, China. A deep learning model was created and fine-tuned with animal and clinical data, achieving high accuracy metrics. In internal tests, the model outperformed both Junior and Senior sonographers. External tests showed the model’s effectiveness, with a Dice Similarity Coefficient of 0.74, True Positive Rate of 0.80, Positive Predictive Value of 0.74, and 95% Hausdorff distance of 14.84. The model’s performance was comparable to Junior sonographers and slightly lower than Senior sonographers. This AI model shows promise for liver injury detection, offering a valuable tool with diagnostic capabilities similar to those of less experienced human operators.

Similar content being viewed by others

Introduction

The liver is one of the most frequently injured organs. Liver trauma is responsible for about 15% of all abdominal injuries1,2 and approximately 5% of all emergency admissions3. Liver trauma is often combined with further complications, such as secondary bleeding, bile duct injury, bile leakage, and peritonitis syndrome, leading to high mortality that can exceed 75%4,5. The prognosis of patients could be notably improved by quick evaluation of the injury and minimally invasive treatment6,7. Thus, timely assessment of the liver is the priority for trauma patients, especially under emergency conditions.

Ultrasound (US) is a commonly used method to assess liver trauma. It is an affordable real-time imaging approach, allowing dynamic, convenient, and continuous examination2,8. However, compared to other imaging techniques, the quality of US imaging is significantly affected by the physiological characteristics of patients and is more dependent on the operation methods and human factors9. Thus, artificial intelligence (AI)-empowered ultrasonography has the potential to accelerate further the use of medical ultrasound in various clinical settings by analyzing the patient’s multimodal imaging information, simplifying the operation steps and avoiding subjective differences, saving physician resources, shortening the reporting time, and improving the diagnostic efficiency10,11,12,13.

At present, AI studies are mainly focused on investigating thyroid nodules, breast nodules and liver tumors; Due to the smaller clinical sample size compared to superficial organs such as thyroid and breast, the complexity of images containing numerous tissues and organs, and the difficulty of computer learning, there are relatively few reports on artificial intelligence assisted diagnosis in obstetrics, gynecology, pelvic floor, and vascular diseases. In the context of liver injury, AI can potentially find the differences of subtle texture features in images that cannot be recognized by the naked eye14, which may be helpful for the early diagnosis of liver parenchymal contusion and laceration missed by doctors in US images. Aside from liver tumors, successful usage of the AI was reported for non-alcoholic fatty liver disease15, cholangitis16, and focal liver lesions17. Therefore, we established and validated a new convolutional neural network-based deep learning model(CNDLM) for identifying liver trauma (parenchymal contusion and laceration). We hypothesized the AI-assisted US model could achieved comparable diagnostic efficiency with sonographers, and could assist early identifying of liver trauma.

Results

Establishment of the CNDLM

A total of 1603 US images (1373 on the training set, 230 in the validation set; 116 were selected as the test set) were obtained from pigs and 126 from patients admitted to 3 centers (30 from the first center were selected as training set for fine-tuning of CNDLM, 18 were selected as a clinical internal validation set, and 78 were selected as clinical external validation set). There were 3 animal CV models and 3 clinical CV models after training and fine-tuning, with acceptable accuracy in animal test set (Table S1 and Fig. 1). Then the CNDLM was established.

Study flow chart. The three steps were: data preparation, model construction, and model evaluation. The data preparation included collecting animal and clinical US images. The model construction was a model training process. Three-fold cross-validation was used to train the animal CV model, which was then transferred into clinical data, finally ensembling 3 CV models. The model evaluation was performed as a comparison with the trained sonographers using visual methods and quantitative metrics.

Performance of CNDLM

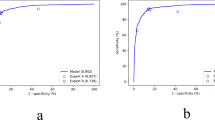

The CNDLM achieved significantly better performance (DSC = 0.91 [0.87–0.95]; TPR = 0.91 [0.86–0.95]; PPV = 0.95 [0.93–0.97]; 95_HD = 2.55 [1.85–3.56]) compared to Junior and Senior sonographers (all P < 0.001) (Table 1; Fig. 2) in animal test set. The CNDLM also demonstrated satisfactory performance (Dice = 0.88 [0.84–0.93]; TPR = 0.87 [0.81–0.92]; PPV = 0.92 [0.86–0.96]; 95_HD = 11.78 [4.08–22.04]) in the clinical internal test set (Table 1; Fig. 3), showing superior diagnostic performance compared to Junior (P = 0.001, 0.012, respectively) and Senior sonographers (both P = 0.004). In the external test set, the CNDLM achieved the DSC of 0.74 (0.66–0.81), TPR of 0.80 (0.73–0.87), PPV of 0.74(0.65–0.82), and HD-95 of 14.84 (8.34–23.11). The performance was significantly better than one of the junior sonographers, but significantly inferior to another junior sonographers (Table 1; Fig. 4).

Discussion

In this study, a deep learning model was proposed for liver injury detection, which could achieve satisfactory diagnostic performance in assessing clinical US images comparable to that of the trained sonographers. To the best of our knowledge, this is the first study that proposed the clinical DL model for liver trauma evaluation that demonstrated good accuracy. Applying AI methods in the liver US could potentially increase the quality of imaging while limiting the influence of the human factor to achieve an early, rapid, and accurate diagnosis of liver trauma.

The Bama miniature pig’s liver resembles the human liver in terms of anatomy, morphology, and texture echo18. Thus, these animals were selected as the experimental animal in this study. The automatic segmentation results of the model were compared with the standard range manually outlined by the trained sonographers, and DSC evaluated the output performance of the model. It is generally believed that the Dice coefficient > 0.7 indicates that the model has better performance19,20. In this study, the DSCs were all above 0.70, which confirmed that the automatically segmented liver injury area and the real injury area overlapped. The average positive predictive value (PPV) reached 0.884, and the average true positive rate (TPR) was about 0.780, which is comparable with the previous reports by Sato et al.21 or Saillard et al.22 for the DL models in the diagnosis of hepatocellular carcinoma. The obtained results confirmed that the model not only accurately located the wound location but also outlined the wound contour more reliably.

Despite the promising accuracy, peripheral false positives or internal false negatives were reported when the lesions were over-segmented or under-segmented. This may be at least partly explained by the following two points: firstly, the grayscale of the ultrasound image of liver trauma changes with time. Within a week of the formation of liver trauma, the trauma area often shows a heterogeneous echo area or a slightly hyperechoic area, and sometimes the grayscale contrast with the normal liver parenchyma is not significant. With time, the echo in the lesion area gradually decreases, and the grayscale contrast with normal tissue also gradually increases. Most lesions have echogenicity similar to the surrounding liver parenchyma in about two months23. Secondly, in the case of slight injury, the grayscale contrast with normal tissue is not significant, and the more severe the injury, the greater the grayscale difference from normal tissue. Therefore, the sensitivity and specificity of the proposed model should be further evaluated based on the above data.

One of the strengths of this study is the large number of animal US image data collected for training, which were used to guide the model and maintain the existing low-level features through pre-trained weights. Additionally, the proposed AI model was fine-tuned using ultrasound image data of patients to correct the difference between human and animals. This approach could potentially increase the diagnostic accuracy to the level of human sonographers, as was previously reported24. To test the potential of the model, this study compared the diagnostic accuracy of physicians with different seniority and AI. The results showed that the model outperformed some junior physicians in external test set, and the ensemble model had better diagnostic performance than physicians of all levels in the internal test set, which is consistent with previous studies24,25. Thus, in small hospitals with comparable conditions, as well as teaching hospitals that undertake the teaching work of young residents, the use of AI-assisted diagnosis could potentially help to improve the quality of ultrasound diagnosis.

Finally, the concept of “golden time” is emphasized in trauma first aid, with time becoming the most important factor affecting survival26. Therefore, the methods that shorten the assessment time before the surgery are most important. In emergency situations, US can effectively guide clinical classification and treatment, but its interpretation is significantly affected by the physiological characteristics of patients27. Moreover, ultrasound examination has certain subjective differences, and training professionals require a lot of long-term practice and learning. In contrast, combining US with AI can simplify operation steps, avoid subjective differences, save physician resources, shorten examination time, and improve diagnostic efficiency. This study demonstrated that the application of AI to diagnosing liver trauma could potentially maximize the portability and speed of real-time US interpretation, which was consistent with previous studies28,29,30.

This study has several limitations. Firstly, the number of clinical US images was limited due to the low incidence of liver trauma, resulting in an imbalance of image sources. For this issue, this study supplemented clinical data to fine-tune the animal model; through data amplification and batch normalization, overfitting was reduced as much as possible, and robustness was enhanced. Secondly, clinical data in this study were collected retrospectively, with could have led to a potential bias. The transfer learning method was adopted due to the lack of clinical US data, which cannot meet the needs of building deep learning models. Although we used methods such as increasing the data set, enhancing the data, reducing the learning rate, and using regularization parameters for data preprocessing, there was still a certain overfitting problem in the model training, which needs to be increased in future research. Finally, clinical data of patients (including weight and blood pressure) were not taken into account at this stage. Future studies should focus on validating the model in the clinical setting.

In conclusion, this study proposed a deep learning model based on RefineNet structure, where the encoding process uses ResNet101 based on pre trained weights from ImageNet. RefineNet is a generative multi-path enhancement network that utilizes residual connections to maximize the use of all information from the downsampling process when making high-resolution predictions. Therefore, this structure can capture advanced deep features related to damage in the image. This deep learning model is helpful for liver injury detection from 2D ultrasound images, which achieved comparable diagnostic performance with Junior sonographers.

Methods

The study was undertaken in two stages. The first stage included the animal experimental US images as a training set and validation set. The second stage included the clinical US images as a test set. The animal study strictly complied with the National Institute of Animal Health regulation in experimental design, animal feeding, and sample acquisition. This whole study was approved by the Ethics Committee of the PLA General Hospital (approval number: S2020-323-01). All methods were carried out in accordance with relevant guidelines and regulations.

Establishment of the experimental model

The animal experimental stage took place between January 2021 and April 2021. Eighteen Bama miniature pigs (Animal Experimentation Center, PLA General Hospital) weighing 30–35 kg underwent intramuscular anesthesia (0.3 ml/kg, mix midazolam and serrazine hydrochloride intramuscular injection in the ratio of 1:1) and endotracheal intubation. The animals were fixed, the venous channel was established through the marginal ear vein, and the liver parenchyma contusion and laceration were modeled with a self-made percussion device (Supplementary Fig. S1). After modeling, the contrast-enhanced ultrasound (Ultrasound Diagnostic Instrument with 5–15 MHz Convex probe, 3–5 MHz frequency) was performed to obtain multiple gray-scale (GS) ultrasound images of the trauma area from different angles. The liver trauma region was defined as region without enhancement in US images, evaluated by a junior sonographer (with less than 3 years of experience). The experimental animals were euthanized through deep anesthesia using Soothe 50 (10 mg/kg) and Xylazine Hydrochloride (8 mg/kg) intramuscular injection, followed by exsanguination to induce death.

Clinical data collection

Clinical US images were collected from patients with liver trauma treated in the first medical center of PLA General Hospital, the seventh medical center of PLA General Hospital, and Beijing Chaoyang emergency center between January 2015 and April 2021, to avoid data bias.

The inclusion criteria were: (1) trauma history within one week; (2) liver trauma confirmed by surgery or contrast-enhanced ultrasound; (3) complete clinical and imaging information. The exclusion criteria were: (1) poor image quality; (2) chronic liver lesions (such as liver cirrhosis); (3) non-traumatic liver rupture (such as rupture of liver tumor). The golden standard for liver trauma region was defined as a region of no enhancement in contrast-enhanced US imaging, drawn by the same sonographer. The true positive was defined as the overlap between the liver trauma region defined and the liver trauma region in the gold standard.

It is helpful for avoiding bias from single hospitals to combine the data from all hospitals and split them into 3 datasets. Thirty clinical US images from the data collected from the first medical center of PLA General Hospital and the seventh medical center of PLA General Hospital were randomly selected as fine tuning set, and the rest images from the two centers were selected as clinical internal test set. The clinical US images collected from Beijing Chaoyang emergency center were selected as clinical external test.

Establishment and evaluation of the deep learning (DL) model

In this study, animal experimental data was divided into training dataset, validation dataset, and testing dataset, with a ratio of 6:1 between the training dataset and validation dataset. The testing dataset was independent of the training and validation datasets. The training dataset is used to train the model, while the validation dataset is used to validate the trained model. After the training process, the model is evaluated using a test dataset. The detailed quantitative indicators are shown in Table S1 of the test set.

Clinical models are fine tuned from animal models by fine-tuning the dataset. We collected 48 patients from the First and Seventh Medical Centers of the People’s Liberation Army General Hospital, and 78 patients from the Beijing Chaoyang Emergency Center. Collecting data from different hospitals and obtaining differential features from animal and clinical data can be helpful in eliminating operational factors.

We constructed a three fold cross validation (CV) model using an animal dataset and trained it using pre trained weights from ImageNet. Triple fold cross validation is a method of dividing a sample set into three parts, using one part as the validation set and the other data as the training set for algorithm training, analysis, and validation. Cross validation is commonly used to evaluate machine learning model performance and hyperparameter tuning. The difference between these three models lies in the training and validation datasets. During this process, three models were trained in each experiment. Then, these three animal CV models fixed all parameters except for the last two convolutional blocks and fine tuned them using clinical training data to generate three clinical models. These three models were integrated into the final trauma assisted diagnosis model (CNDLM). And the performance of CNDLM was compared with data obtained by other ultrasound physicians using animal test sets, clinical internal validation sets, and clinical external validation sets.

The workflow of the segmentation DL model is shown in Fig. 1. The segmentation mask was extracted through the Visual Object Tagging Tool (VOTT, Microsoft, USA) and OpenCV package in python. Then, the images and segmentation mask were resized (224*224 pixels). The model was developed with the RefineNet (Supplementary Fig. S2). A Resnet101 network pre-trained on the ImageNet dataset was employed in RefineNet (Supplementary Fig. S3 and Table S2). The final model was evaluated by internal test cohort and external test cohorts with human US images. In the training process, the parameters of the model were iteratively updated through backpropagation, whose input and output was the image and segmentation mask, respectively. The dice loss of the output and segmentation mask was used as the loss function. The data enhancement strategy was employed to reduce the impact of overfitting, including random flipping and rotation of the input image. A detailed description of the model establishment and evaluation are shown in the Supplementary Methods section.

To compare the diagnostic efficiency of the final model, the animal and clinical images in validation were evaluated by two junior sonographers (with less than 3 years of experiences) and two senior sonographers (with more than 10 years of experiences).

Statistical analysis

R software (version 3.3.1; R 21 Foundation for Statistical Computing, Vienna, Austria) was used for statistical analysis. The Dice Similarity Coefficient (DSC), True Positive Rate (TPR), Positive Predictive Value (PPV), and 95% Hausdorff distance (HD-95) were used to evaluate the performance of CV, CNDLM, and sonographers. Models were compared for DSC in physician identification using the Kruskal-Wallis test. All statistical tests were two-sided, and the statistical significance level was set at two-sided P < 0.05.

Data availability

All data generated or analysed during this study are included in this article and its supplementary information file.

References

Buci, S. et al. The rate of success of the Conservative management of liver trauma in a developing country. World J. Emerg. Surg. 12, 24 (2017).

Tarchouli, M. et al. Liver trauma: What current management? Hepatobiliary Pancreat. Dis. Int. 17, 39–44 (2018).

Saviano, A. et al. Liver trauma: Management in the emergency setting and medico-legal implications. Diagnostics 12 (2022).

Pillai, A. S., Kumar, G. & Pillai, A. K. Hepatic trauma interventions. Semin. Interv. Radiol. 38, 96–104 (2021).

Sánchez-Bueno, F. et al. [Changes in the diagnosis and therapeutic management of hepatic trauma. A retrospective study comparing 2 series of cases in different (1997–1984 vs. 2001–2008)]. Cir. Esp. 89, 439–447 (2011).

Kanani, A., Sandve, K. O. & Søreide, K. Management of severe liver injuries: Push, pack, pringle—and plug! Scand. J. Trauma Resusc. Emerg. Med. 29, 93 (2021).

Naylor, J. F. et al. Emergency department imaging of pediatric trauma patients during combat operations in Iraq and Afghanistan. Pediatr. Radiol. 48, 620–625 (2018).

Pillai, A. S., Srinivas, S., Kumar, G. & Pillai, A. K. Where does interventional radiology fit in with trauma management algorithm?? Semin. Interv. Radiol. 38, 3–8 (2021).

Lada, N. E. et al. Liver trauma: Hepatic vascular injury on computed tomography as a predictor of patient outcome. Eur. Radiol. 31, 3375–3382 (2021).

Gong, J. et al. A deep residual learning network for predicting lung adenocarcinoma manifesting as ground-glass nodule on CT images. Eur. Radiol. 30, 1847–1855 (2020).

Veerankutty, F. H. et al. Artificial intelligence in hepatology, liver surgery and transplantation: Emerging applications and frontiers of research. World J. Hepatol. 13, 1977–1990 (2021).

Cai, S. L. et al. Using a deep learning system in endoscopy for screening of early esophageal squamous cell carcinoma (with video). Gastrointest. Endosc. 90, 745–753e742 (2019).

Li, C. et al. Development and validation of an endoscopic images-based deep learning model for detection with nasopharyngeal malignancies. Cancer Commun. 38, 59 (2018).

Ahn, S. H. et al. Comparative clinical evaluation of atlas and deep-learning-based auto-segmentation of organ structures in liver cancer. Radiat. Oncol. 14, 213 (2019).

Biswas, M. et al. A liver ultrasound tissue characterization and risk stratification in optimized deep learning paradigm. Comput. Methods Programs Biomed. 155, 165–177 (2018). Symtosis.

Ringe, K. I. et al. Fully automated detection of primary sclerosing cholangitis (PSC)-compatible bile duct changes based on 3D magnetic resonance cholangiopancreatography using machine learning. Eur. Radiol. 31, 2482–2489 (2021).

Schmauch, B. et al. Diagnosis of focal liver lesions from ultrasound using deep learning. Diagn. Interv. Imaging 100, 227–233 (2019).

Yekuo, L. et al. Contrast-enhanced ultrasound for blunt hepatic trauma: An animal experiment. Am. J. Emerg. Med. 28, 828–833 (2010).

Li, L. et al. Automatic multi-plaque tracking and segmentation in ultrasonic videos. Med. Image Anal. 74, 102201 (2021).

Tajbakhsh, N. et al. Embracing imperfect datasets: A review of deep learning solutions for medical image segmentation. Med. Image Anal. 63, 101693 (2020).

Sato, M. et al. Machine-learning approach for the development of a novel predictive model for the diagnosis of hepatocellular carcinoma. Sci. Rep. 9, 7704 (2019).

Saillard, C. et al. Predicting survival after hepatocellular carcinoma resection using deep learning on histological slides. Hepatology 72, 2000–2013 (2020).

Padalino, P. et al. Healing of blunt liver injury after non-operative management: Role of ultrasonography follow-up. Eur. J. Trauma Emerg. Surg. 35, 364–370 (2009).

Zhao, C. et al. Feasibility of computer-assisted diagnosis for breast ultrasound: The results of the diagnostic performance of S-detect from a single center in China. Cancer Manag. Res. 11, 921–930 (2019).

Li, J. et al. The value of S-Detect for the differential diagnosis of breast masses on ultrasound: A systematic review and pooled meta-analysis. Med. Ultrason. 22, 211–219 (2020).

Hussmann, B. & Lendemans, S. Pre-hospital and early in-hospital management of severe injuries: Changes and trends. Injury 45(Suppl 3), S39–42 (2014).

Akkus, Z. et al. A survey of deep-learning applications in ultrasound: Artificial intelligence-powered ultrasound for improving clinical workflow. J. Am. Coll. Radiol. 16, 1318–1328 (2019).

Ciompi, F. et al. Automatic classification of pulmonary peri-fissural nodules in computed tomography using an ensemble of 2D views and a convolutional neural network out-of-the-box. Med. Image Anal. 26, 195–202 (2015).

Wang, X. et al. Searching for prostate cancer by fully automated magnetic resonance imaging classification: Deep learning versus non-deep learning. Sci. Rep. 7, 15415 (2017).

Lassau, N. et al. Five simultaneous artificial intelligence data challenges on ultrasound, CT, and MRI. Diagn. Interv. Imaging 100, 199–209 (2019).

Acknowledgements

Not applicable.

Author information

Authors and Affiliations

Contributions

L.Y.K. and S.Q. conceived and supervised the study; L.Y.K., T.L. and W.K. designed experiments; W.Y.J., M.J., K.L.L. and H.P. performed experiments; W.Y.J. and H.X.L. analysed data; S.Q. and H.X.L. wrote the manuscript; G.H.J. and H.X.L. made manuscript revisions. All authors reviewed the results and approved the final version of the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

The animal study strictly complied with the National Institute of Animal Health regulation in experimental design, animal feeding, and sample acquisition. This whole study was approved by the Ethics Committee of the PLA General Hospital (approval number: S2020-323-01). All methods were carried out in compliance with the ARRIVE guidelines. The written informed consent was obtained from all patients with liver trauma.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Electronic supplementary material

Below is the link to the electronic supplementary material.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Song, Q., He, X., Wang, Y. et al. Clinical validation of AI assisted animal ultrasound models for diagnosis of early liver trauma. Sci Rep 15, 22513 (2025). https://doi.org/10.1038/s41598-025-91900-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-91900-5