Abstract

Contrast-enhanced UTE-MRA provides detailed angiographic information but at the cost of prolonged scan times, which may introduce motion artifacts and delay the diagnosis and treatment of time-sensitive diseases such as stroke. This study aims to increase the resolution of rapidly acquired low-resolution (LR) UTE-MRA data to high resolution (HR) using deep learning. A total of 20 and 10 contrast-enhanced 3D UTE-MRA datasets were collected from healthy control and stroke-bearing Wistar rats, respectively. A newly designed 3D convolutional neural network, the ladder-shaped residual dense generator (LSRDG), and other state-of-the-art models (SR-ResNet, MRDG64) were implemented, trained, and validated on healthy control data and tested on stroke data. For healthy control data, our proposed model achieved significantly improved SSIM, PSNR, and MSE of 0.983, 36.80, and 0.00021, respectively, compared with 0.964, 34.38, and 0.00037 using SR-ResNet and 0.978, 35.47, and 0.00029 using MRDG64. For stroke data, our proposed model achieved SSIM, PSNR, and MSE of 0.963, 34.14, and 0.00041, respectively, compared with 0.953, 32.24, and 0.00061 (SR-ResNet) and 0.957, 32.90, and 0.00054 (MRDG64). Moreover, by combining a well-designed network, a suitable loss function, and training with smaller patch sizes, the resolution of contrast-enhanced UTE-MRA was significantly improved from a voxel size of 234³ μm³ to 117³ μm³.


Introduction

Contrast-enhanced three-dimensional (3D) ultrashort echo time magnetic resonance angiography (UTE-MRA) with monocrystalline iron oxide nanoparticles (MION) is a promising approach for acquiring comprehensive angiographic information on both arterial and venous cerebral vasculature in animal models1,2,3,4,5. The high resolution of this technique is critical for delineating the anatomy of fine blood vessels; however, it comes at the cost of longer scan durations6,7, which may introduce motion artifacts and, more critically, delay the diagnosis and treatment of time-sensitive conditions such as stroke8,9. To illustrate, Fig. 1 depicts ischemic stroke imaged with diffusion imaging (B and C) and angiographic methods (D and E). Notably, smaller vessels are more visible in the high-resolution (HR) contrast-enhanced UTE-MRA image (E), albeit at the cost of a fourfold increase in acquisition time.

Imaging of ischemic stroke. (A) Schematic of the MCAO model. (B) Diffusion-weighted image (DWI, axial view), and (C) trace map of the apparent diffusion coefficient (ADC) in µm²/s (axial view). (D) and (E) are coronal views of low- and high-resolution UTE-MRA data, respectively, after maximum intensity projection. The MCAO model schematic was generated by overlaying cerebral vasculature on a brain template. The hyperintensity in the DWI and hypointensity in the ADC map show the region of infarction. MCAO: middle cerebral artery occlusion.

Deep learning has attracted considerable interest owing to its exceptional performance in diverse computer vision tasks, including image classification, segmentation, localization, and the generation of super-resolution (SR) images, a task with which classical algorithms have struggled9,10,11,12. Because acquiring HR 3D MRA data is challenging, using a convolutional neural network (CNN) to generate an SR angiogram from a quickly acquired low-resolution (LR) angiogram is a promising solution. This approach could enhance the sensitivity and specificity of angiographic investigations, ensure model stability, and expand preclinical availability9,10.

Based on the training strategy, single-image SR CNNs can be broadly categorized into peak signal-to-noise ratio (PSNR)-, flow-, generative adversarial network (GAN)-, and diffusion-driven approaches11,12. PSNR-driven models are easier to implement and train but struggle to recover high-frequency edge details. Flow-based models, on the other hand, have a substantial computational footprint11. GAN-based models are widely acknowledged to be difficult to train because of their adversarial nature and are susceptible to various failure modes9,10,11. Furthermore, even when successfully trained, they tend to produce natural-looking artifacts, which poses a significant challenge for medical applications. Diffusion-based SR models, the most recent category, use LR data and stochastic noise as input to generate SR images11. Although these models are stable, they are notably slower than the alternatives. Because the noise input introduces randomness, multiple SR images can also be generated from a single LR image11. In this research, both PSNR-oriented training and a combination of PSNR- and GAN-oriented training were implemented.

Beyond training methodologies, researchers have proposed various network architectures. The SR residual network (SR-ResNet) is a pioneering example that uses residual blocks for feature extraction along with various up-sampling methods, including interpolation, transposed convolution, and pixel shuffling10,13. The memory-efficient residual dense generator (MRDG) improves on SR-ResNet by replacing residual blocks with residual dense blocks, which improves performance at the expense of greater computational demand and longer inference time9. For 3D, time-sensitive medical data, efficient deep learning models with low computational cost and short inference time are crucial9,10,11. In this study, 3D SR-ResNet, MRDG64, and the newly proposed model were implemented and compared. The objective of the current study is to enhance the resolution of UTE-MRA data acquired within a short time by leveraging a tailored combination of CNN model, cost function, and training strategy. This aims to position UTE-MRA as a more effective tool for diagnosing stroke and other vascular diseases, especially in animal models.

Methods

Data

Animal preparation

Adult male and female Wistar rats (body weight 250–350 g) were kept in cages with free access to food and water in a room with a 12-h light/12-h dark cycle. All animal experiments were approved by the Institutional Animal Care and Use Committee (IACUC, IACUC-23–25) of the Ulsan National Institute of Science and Technology (UNIST) and carried out in accordance with the Animal Protection Act of Korea and the Guide for the Care and Use of Laboratory Animals of the National Institutes of Health. All animal experiments in this study are reported in accordance with the ARRIVE guidelines. Throughout the experiment, animals were anesthetized with 1.5–3% isoflurane in a mixture of 30% oxygen and 70% nitrous oxide, adjusted to keep the breathing rate at 30–45 breaths/min. During the MRI experiments, body temperature was kept constant (37 ± 1 °C) by circulating warm water through the animal bed. Ischemic stroke was induced by permanent occlusion of the left middle cerebral artery (MCA), and MRI data were acquired immediately after surgery14. An appropriately sized silicone rubber-coated monofilament (Doccol Corporation, Redlands, CA, USA) was inserted through a small hole in the common carotid artery (CCA) to maintain MCA occlusion throughout the experiment5,15.

MRI acquisition

A total of 20 paired contrast-enhanced 3D UTE-MRA datasets were collected from healthy control (HC) animals using a UTE pulse sequence with isotropic matrix sizes of 128³ (LR) and 256³ (HR), corresponding to voxel sizes of 234³ μm³ and 117³ μm³, respectively, a time of repetition (TR) of 22 ms, and an echo time (TE) of 0.012 ms, after intravenous bolus injection of MION at a dose of 360 μmol/kg5. The scan times for HR and LR data were 66 and 16 min, respectively. Diffusion-weighted images (DWIs) were acquired with b = 1000 s/mm², three orthogonal diffusion directions, a matrix size of 128 × 128, and a field of view of 30 × 30 mm², and were used only to confirm stroke induction with an apparent diffusion coefficient (ADC) map, as shown in Fig. 1(C). Additionally, 10 paired 3D UTE-MRA datasets were collected from animals with ischemic stroke using the same protocol. Both DWI and UTE-MRA data were acquired on a 7 T MR scanner (Bruker, Ettlingen, Germany) with a 40 mm volume coil. The raw MRA data were preprocessed and registered to reduce noise and motion artifacts, respectively16. To reduce the computational load and improve performance, the LR and HR volumes were divided into 32³ and 64³ patches, respectively, with 50% overlap between successive patches during training.
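With 50% overlap, the stride equals half the patch edge, so a 128³ LR volume cut into 32³ patches (stride 16) and a 256³ HR volume cut into 64³ patches (stride 32) both give 7 positions per axis, that is, 343 paired patches per volume. This matches the 5145 (15 × 343) training and 1715 (5 × 343) validation patches reported for training. The sketch below is a minimal illustration assuming cubic, co-registered volumes whose patch grid fits exactly; the helper names `positions_per_axis` and `extract_patches` are hypothetical, not the authors' code.

```python
import numpy as np


def positions_per_axis(size: int, patch: int, stride: int) -> int:
    """Number of sliding-window positions along one axis."""
    return (size - patch) // stride + 1


def extract_patches(vol: np.ndarray, patch: int, overlap: float = 0.5) -> np.ndarray:
    """Cut a cubic 3D volume into cubic patches with stride = patch * (1 - overlap).

    Patches are ordered z-major, so patch i of an LR volume and patch i of the
    co-registered HR volume (extracted with a doubled patch size) cover the same anatomy.
    """
    stride = int(patch * (1 - overlap))
    n = positions_per_axis(vol.shape[0], patch, stride)
    starts = [i * stride for i in range(n)]
    return np.stack([
        vol[z:z + patch, y:y + patch, x:x + patch]
        for z in starts for y in starts for x in starts
    ])


# Patch counts for the matrix sizes above (no large arrays needed):
lr_per_volume = positions_per_axis(128, 32, 16) ** 3   # 7 ** 3 = 343
hr_per_volume = positions_per_axis(256, 64, 32) ** 3   # 7 ** 3 = 343
```

Keeping the HR stride at exactly twice the LR stride is what keeps each LR patch aligned with its HR target.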

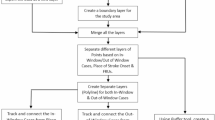

Convolutional neural networks

In this research, a new 3D single-image super-resolution generative network, the ladder-shaped residual dense generator (LSRDG), was designed and implemented using residual dense blocks. To our knowledge, previously developed SR image generators up-sample high-level features only at the end of the network, just before the last convolution, whereas LSRDG up-samples features after each residual dense block. The up-sampled features from the previous layer are passed through a simple convolution layer and added to the next up-sampled features, forming the ladder-shaped network shown in Fig. 2. The network thus provides multiple routes (skip connections) for the gradient of the loss to flow from the output back to the input layer, which helps the network remain numerically stable, be less prone to overfitting, and achieve higher performance17,18,19. Because convolution on high-spatial-resolution features is computationally expensive, a simple convolution followed by an activation was used on the HR feature track, whereas residual dense blocks were used on the LR feature track. Each building block was selected through extensive experiments to maximize generative ability under an inference-time constraint. LSRDG was optimized for performance, computational footprint, and inference time using a feature depth of 32, a kernel size of 3, a dense block growth rate of 16, and 6 residual dense blocks with Gaussian error linear unit (GELU) activation20. In our ablation experiments, adding more blocks, increasing the number of filters from 32 to 64, or using residual dense blocks instead of a single convolution layer on the HR feature track improved performance, but at the cost of longer inference time and higher computational cost.
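The PyTorch sketch below shows one possible reading of the ladder topology: residual dense blocks on the LR track, ×2 up-sampling after every block, and a simple convolution plus GELU on each rung of the HR track. It is a minimal sketch under stated assumptions, not the authors' implementation. The class names `ResidualDenseBlock` and `LadderSRGenerator` are hypothetical, each dense block is assumed to contain 4 convolution layers, nearest-neighbor interpolation is used (as stated for all models), and no global residual is added. Because the block internals are assumed, its parameter count will differ from the ~1.42 M reported in Table 1.

```python
import torch
import torch.nn as nn


class ResidualDenseBlock(nn.Module):
    """Densely connected 3D convs, 1x1x1 fusion back to `feats` channels, residual add."""

    def __init__(self, feats: int = 32, growth: int = 16, n_layers: int = 4):
        super().__init__()
        # layer i sees the block input plus the outputs of all previous layers
        self.layers = nn.ModuleList([
            nn.Sequential(nn.Conv3d(feats + i * growth, growth, 3, padding=1), nn.GELU())
            for i in range(n_layers)
        ])
        self.fuse = nn.Conv3d(feats + n_layers * growth, feats, kernel_size=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        features = [x]
        for layer in self.layers:
            features.append(layer(torch.cat(features, dim=1)))
        return x + self.fuse(torch.cat(features, dim=1))


class LadderSRGenerator(nn.Module):
    """Hypothetical ladder-shaped generator: up-sample after every residual dense block."""

    def __init__(self, in_ch: int = 1, feats: int = 32, growth: int = 16,
                 n_blocks: int = 6, scale: int = 2):
        super().__init__()
        self.head = nn.Conv3d(in_ch, feats, 3, padding=1)
        self.blocks = nn.ModuleList([ResidualDenseBlock(feats, growth) for _ in range(n_blocks)])
        self.up = nn.Upsample(scale_factor=scale, mode="nearest")
        # HR track: one simple conv + GELU per rung instead of a residual dense block
        self.rungs = nn.ModuleList([
            nn.Sequential(nn.Conv3d(feats, feats, 3, padding=1), nn.GELU())
            for _ in range(n_blocks - 1)
        ])
        self.tail = nn.Conv3d(feats, in_ch, 3, padding=1)

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        f = self.head(x)          # LR feature track
        hr = None                 # HR feature track
        for i, block in enumerate(self.blocks):
            f = block(f)
            up = self.up(f)       # up-sample after each residual dense block
            # previous rung -> simple conv, then add the newly up-sampled features
            hr = up if hr is None else self.rungs[i - 1](hr) + up
        return self.tail(hr)
```

For a 32³ LR training patch of shape (1, 1, 32, 32, 32), the output has shape (1, 1, 64, 64, 64). Only the cheap rung convolutions run at HR size, which reflects the cost argument made above.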
In addition to the architecture, different training strategies, namely PSNR-only loss and a combination of PSNR- and GAN-oriented loss, were implemented and compared. The GAN version of our model, the ladder-shaped residual dense generative adversarial network (LSRDGAN), uses a pyramid pooling discriminator with instance normalization and leaky rectified linear unit (ReLU) activation, which allows the network to discriminate images at different resolutions9.

For comparison with the proposed method, the following networks were also implemented. The first was a 3D SR-ResNet with 6 residual blocks and batch normalization, following Sánchez et al.10 A 3D super-resolution generative adversarial network (SRGAN) was also implemented using SR-ResNet as the generator and a visual geometry group (VGG)-style discriminator whose final fully connected classifier has no hidden layer, to stabilize training and reduce susceptibility to GAN failures10. The other network was MRDG64, implemented following Wang et al., with 64 residual features, a dense block growth rate of 12, and 6 memory-efficient residual dense blocks, each containing 3 residual dense blocks9. Analogous to SRGAN, an enhanced super-resolution generative adversarial network (ESRGAN) was implemented using MRDG64 as the generator and a pyramid pooling discriminator9. All models used 3D up-sampling based on nearest-neighbor interpolation, which has the lowest computational cost and performed better than transposed convolution and pixel shuffling10. For the discriminator's binary classification task, soft labeling with decay was applied instead of hard labeling to suppress overconfidence and stabilize GAN training, as shown in Eq. 121,22.
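The text does not give the soft-label schedule, so the following is only a minimal sketch of the idea, assuming a hypothetical exponential decay: the smoothing margin starts at an assumed `eps0 = 0.1` and shrinks toward hard labels (1, 0) as training progresses. With a soft real target of 0.9, binary cross-entropy is minimized at a predicted probability of 0.9 rather than 1, so a discriminator that pushes its outputs to the extremes is penalized. The function names and constants are illustrative, not the authors' settings.

```python
import math


def soft_labels(epoch: int, eps0: float = 0.1, decay: float = 0.01):
    """Hypothetical schedule: targets (1 - eps, eps) with eps = eps0 * exp(-decay * epoch)."""
    eps = eps0 * math.exp(-decay * epoch)
    return 1.0 - eps, eps


def bce(p: float, target: float) -> float:
    """Binary cross-entropy of a predicted probability p against a (possibly soft) target."""
    p = min(max(p, 1e-7), 1.0 - 1e-7)   # avoid log(0)
    return -(target * math.log(p) + (1.0 - target) * math.log(1.0 - p))


def discriminator_loss(p_hr: float, p_sr: float, epoch: int) -> float:
    """BCE on one real (HR) and one generated (SR) prediction, using decayed soft labels."""
    real_t, fake_t = soft_labels(epoch)
    return bce(p_hr, real_t) + bce(p_sr, fake_t)
```

Early in training, the moderately confident pair (0.9, 0.1) scores a lower loss than the overconfident pair (0.999, 0.001); as the margin decays, the loss approaches ordinary hard-label BCE.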

All networks were first tuned to find the best hyperparameters. The PSNR-oriented networks and their PSNR- and GAN-oriented counterparts were trained using L2 loss and a combination of L2 and adversarial loss (scaling factor 0.001), as shown in Eqs. 2 and 3, respectively. L2 loss was computed both on the images and on the gradients of the HR and SR images to recover high-frequency components. The Nesterov-accelerated adaptive moment estimation (NADAM) optimizer was used with a batch size of 16 patches and an initial learning rate of 1e-4, halved every 100 epochs23. The maximum number of epochs was set to 1000, and training was stopped before overfitting based on the validation loss. All models were implemented in PyTorch (version 1.13.1, Meta AI, Menlo Park, CA, USA) and trained on an NVIDIA A100 Tensor Core 80 GB graphics processing unit (NVIDIA Corporation, Santa Clara, CA, USA). Using k-fold cross-validation, all networks were trained on 5145 patches from 15 HC experiments, validated on 1715 patches from 5 HC experiments, and then tested on the 10 ischemic stroke datasets. To compare network performance in generating SR images from the corresponding LR images, the structural similarity index measure (SSIM), PSNR, and mean squared error (MSE) were used as evaluation metrics, in addition to visual comparison. Lastly, the performance of the proposed model with and without GAN on HC and stroke data was compared with that of the other models using the Mann–Whitney U test, with statistical significance set at P < 0.05.
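The training objective and schedule described above can be sketched in PyTorch as follows. This is a minimal illustration, not the authors' code: the helper names `spatial_gradients`, `pixel_loss`, `generator_loss`, and `make_optimizer` are hypothetical, the image gradient is assumed to be a forward difference along each axis, and the image and gradient terms are assumed to be weighted equally. `torch.optim.NAdam` and `torch.optim.lr_scheduler.StepLR` are standard PyTorch classes available in version 1.13.1.

```python
import torch
import torch.nn as nn
import torch.nn.functional as F


def spatial_gradients(v: torch.Tensor) -> list:
    """Forward differences of a (B, C, D, H, W) volume along the three spatial axes."""
    return [torch.diff(v, dim=d) for d in (2, 3, 4)]


def pixel_loss(sr: torch.Tensor, hr: torch.Tensor) -> torch.Tensor:
    """L2 on intensities plus L2 on the spatial gradients (equal weights assumed)."""
    loss = F.mse_loss(sr, hr)
    for g_sr, g_hr in zip(spatial_gradients(sr), spatial_gradients(hr)):
        loss = loss + F.mse_loss(g_sr, g_hr)
    return loss


def generator_loss(sr, hr, d_logits_sr=None, real_target: float = 1.0, alpha: float = 1e-3):
    """PSNR-oriented loss; adds alpha * adversarial BCE when discriminator logits are given."""
    loss = pixel_loss(sr, hr)
    if d_logits_sr is not None:
        target = torch.full_like(d_logits_sr, real_target)  # pass the soft label 1' here
        loss = loss + alpha * F.binary_cross_entropy_with_logits(d_logits_sr, target)
    return loss


def make_optimizer(model: nn.Module):
    """NAdam at lr 1e-4, halved every 100 epochs (call scheduler.step() once per epoch)."""
    opt = torch.optim.NAdam(model.parameters(), lr=1e-4)
    sched = torch.optim.lr_scheduler.StepLR(opt, step_size=100, gamma=0.5)
    return opt, sched
```

Because a constant intensity offset has zero gradient, only the image term penalizes it, while the gradient term specifically penalizes blurred edges. This is one way to read why gradient-domain L2 helps recover high-frequency detail.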

Loss equations

Discriminator loss

where \(y'\) is the soft label with decay for the HR and SR images.

Generator loss

where \(\nabla\) is the gradient operator along the three image axes, \(N\) is the image dimension (height = width = depth = \(N\) = 64), \(\alpha\) is the scaling factor of the adversarial loss, and \(1'\) is the soft label with decay corresponding to 1 in hard labeling.
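One plausible form of Eqs. 1–3, consistent with the symbol definitions above and with the binary cross-entropy (BCE) listed among the abbreviations, is given below. The exact normalization and relative weighting of each term are assumptions, not the authors' published equations; \(I_{\mathrm{SR}} = G(I_{\mathrm{LR}})\) denotes the generator output and \(D\) the discriminator's predicted probability.

```latex
% Eq. 1 (assumed form): discriminator BCE with decayed soft labels y'
\mathcal{L}_{D} = -\Big[ y'_{\mathrm{HR}} \log D(I_{\mathrm{HR}}) + \big(1 - y'_{\mathrm{HR}}\big) \log\big(1 - D(I_{\mathrm{HR}})\big) \Big]
                  -\Big[ y'_{\mathrm{SR}} \log D(I_{\mathrm{SR}}) + \big(1 - y'_{\mathrm{SR}}\big) \log\big(1 - D(I_{\mathrm{SR}})\big) \Big],
\qquad I_{\mathrm{SR}} = G(I_{\mathrm{LR}})

% Eq. 2 (assumed form): L2 on the image and on its gradient along the three axes
\mathcal{L}_{2} = \frac{1}{N^{3}} \sum_{x,y,z=1}^{N} \big(I_{\mathrm{HR}} - I_{\mathrm{SR}}\big)^{2}
                + \frac{1}{N^{3}} \sum_{x,y,z=1}^{N} \big\lVert \nabla I_{\mathrm{HR}} - \nabla I_{\mathrm{SR}} \big\rVert_{2}^{2}

% Eq. 3 (assumed form): PSNR- and GAN-oriented generator loss
\mathcal{L}_{G} = \mathcal{L}_{2} + \alpha \, \mathrm{BCE}\big(D(I_{\mathrm{SR}}), 1'\big), \qquad \alpha = 0.001
```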

Results

Table 1 summarizes the SR generator models in terms of the number of parameters, number of floating-point operations (FLOPs), and inference time. Compared with MRDG64, our proposed generator has substantially fewer parameters (~1.42 M) and a smaller computational footprint (5.5 TFLOPs), and it takes less time (~18 s) to transform LR into SR data. Among all models, SR-ResNet has the fewest parameters, the lowest computational cost, and the shortest inference time.

The performance of the networks trained with and without GAN was compared using SSIM, PSNR, and MSE, as shown in Tables 2 and 3. Whether trained with or without GAN, our proposed model significantly outperformed the other models on HC data in all evaluation metrics and reconstructed images similar to the original HR images. Figures 3 and 4 show a visual comparison of the SR images and the corresponding residual maps, respectively, generated by the PSNR-only models on HC data. Figure 5 shows a visual comparison of the SR images generated by the PSNR- and GAN-oriented models on HC data. As indicated by the arrows in Figs. 3 and 5, blood vessel bifurcations were better visualized by our proposed model than by the others under both training modes.

Visual comparison of generated images using PSNR-oriented training on HC data. The first row shows images after maximum intensity projection in the coronal direction. The second row shows the focused region (marked by the red square in the top row), and the third row shows the volume-rendered data. The yellow and red arrows on the images show the bifurcation points of the blood vessels that are successfully reconstructed by our model only (yellow arrows) and not reconstructed by any model (red arrows).

Visual comparison of absolute residual maps generated using PSNR-oriented training from HC data. The first row shows original HR images along with generated SR images using cubic spline interpolation and PSNR-oriented models after maximum intensity projection in the coronal direction. The second row shows the absolute difference map between the original data and the generated SR image and the third row shows the difference map of the focused region (marked by the red square in the top row).

Visual comparison of generated images using PSNR and GAN–oriented models on HC data. The first row shows images after maximum intensity projection in the coronal direction. The second row shows the focused region (marked by the red square in the top row), and the third row shows the volume-rendered data. The yellow and red arrows on the images show the bifurcation points of the blood vessels that are successfully reconstructed by our model only (yellow arrows) and not reconstructed by any model (red arrows).

The proposed model trained without and with GAN also performed better than the others on stroke data and generated images very similar to the original HR images, as shown in Figs. 6 and 7, respectively. For further comparison, Supplementary Figs. 1 and 2 and Supplementary Animations 1 and 2 show images generated on HC data by the PSNR-oriented models and by the PSNR- and GAN-oriented models, respectively. Supplementary Figs. 3 and 4 and Supplementary Animation 3 show a visual comparison of different models trained on HC data and applied to stroke data. Overall, all deep learning models performed significantly better than cubic spline interpolation on both HC and stroke data.

Testing PSNR-oriented models on stroke data. The first row shows the HR and LR along with the SR data generated using PSNR-oriented models after maximum intensity projection in the sagittal direction. The second row shows volume-rendered data. The yellow ellipse on the original image shows the region of infarction due to ischemic stroke, and the yellow arrows show the visual difference of the vessel in the contralateral hemisphere, which appears occluded in the LR image.

Testing PSNR and GAN–oriented models on stroke data. The first row shows the HR and LR along with the SR data generated using PSNR and GAN–oriented models after maximum intensity projection in the sagittal direction. The second row shows volume-rendered data. The yellow ellipse on the original image shows the region of infarction due to ischemic stroke, and the yellow arrows show the visual difference of the vessel in the contralateral hemisphere, which appears occluded in the LR image.

With PSNR-oriented training, our proposed model achieved average SSIM, PSNR, and MSE of 0.983, 36.80, and 0.00021, respectively, on HC data, compared with 0.964, 34.38, and 0.00037 for SR-ResNet and 0.978, 35.47, and 0.00029 for MRDG64. When trained only on HC data and tested on stroke data, our model achieved SSIM, PSNR, and MSE of 0.963, 34.14, and 0.00041, respectively, compared with 0.953, 32.24, and 0.00061 for SR-ResNet and 0.957, 32.90, and 0.00054 for MRDG64. LSRDG also greatly improved image quality over up-sampling by cubic spline interpolation, which achieved SSIM, PSNR, and MSE of only 0.848, 27.61, and 0.0019 on HC data and 0.831, 26.64, and 0.0025 on stroke data, respectively.
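As a quick consistency check on these metrics, and assuming intensities normalized to [0, 1] (an assumption; the normalization is not stated), PSNR = 10·log10(1/MSE). A mean MSE of 0.00021 gives about 36.78 dB, close to the reported mean PSNR of 36.80. If PSNR is averaged per volume, the mean PSNR is always at least the PSNR of the mean MSE, because the logarithm is concave. Every reported PSNR/MSE pair above sits slightly on that side of the formula. The helper names below are hypothetical.

```python
import math


def psnr_from_mse(mse: float, max_val: float = 1.0) -> float:
    """PSNR in dB, assuming intensities are normalized to [0, max_val]."""
    return 10.0 * math.log10(max_val ** 2 / mse)


def mean_psnr(mses):
    """Average of per-volume PSNRs, which is >= psnr_from_mse(mean MSE) by concavity of log."""
    return sum(psnr_from_mse(m) for m in mses) / len(mses)


print(round(psnr_from_mse(0.00021), 2))   # LSRDG mean MSE on HC data -> 36.78
```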

Statistically, our proposed PSNR-only model showed significant improvements in SSIM, PSNR, and MSE over SR-ResNet on HC data (all p < 10⁻⁵) and stroke data (p = 0.003, p < 10⁻⁴, and p < 10⁻⁴, respectively). It also significantly outperformed MRDG64 on the same metrics on HC data (p = 0.0002, p < 10⁻⁴, and p < 10⁻⁴) and stroke data (p = 0.048, p = 0.0005, and p = 0.0005). When trained with PSNR and GAN loss, LSRDGAN showed significant improvements in SSIM, PSNR, and MSE on HC data compared with SRGAN (all p < 10⁻⁶) and ESRGAN (p = 0.002, p = 0.007, and p = 0.006, respectively). On stroke data, LSRDGAN also significantly outperformed SRGAN (p = 0.007, p < 10⁻⁴, and p < 10⁻⁴ for SSIM, PSNR, and MSE, respectively). However, although our GAN-driven model performed better than ESRGAN on stroke data, the differences were not statistically significant for any of the three metrics (p = 0.05, p = 0.240, and p = 0.281).
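For readers who want to reproduce this kind of comparison, the Mann–Whitney U test can be sketched with the standard library alone. This version uses the normal approximation with a tie correction and no continuity correction; the function name is hypothetical. The text does not say whether scores were compared per volume or per patch, or which implementation was used. Library routines such as `scipy.stats.mannwhitneyu` may use an exact distribution or a continuity correction for small samples, so their p-values can differ from this approximation.

```python
import math


def mann_whitney_u(x, y):
    """Two-sided Mann-Whitney U test (normal approximation, tie-corrected, no continuity
    correction). Returns (U statistic for sample x, p-value)."""
    n1, n2 = len(x), len(y)
    n = n1 + n2
    pooled = sorted([(v, 0) for v in x] + [(v, 1) for v in y])
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        # find the run of tied values pooled[i..j] and give them their average rank
        j = i
        while j + 1 < n and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + j) / 2 + 1          # ranks are 1-based
        for k in range(i, j + 1):
            ranks[k] = avg_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    r1 = sum(r for r, (_, group) in zip(ranks, pooled) if group == 0)
    u1 = r1 - n1 * (n1 + 1) / 2
    mu = n1 * n2 / 2
    var = n1 * n2 / 12 * ((n + 1) - tie_term / (n * (n - 1)))
    if var <= 0:                             # every value tied: no evidence of a shift
        return u1, 1.0
    z = (u1 - mu) / math.sqrt(var)
    return u1, math.erfc(abs(z) / math.sqrt(2))  # two-sided p = 2 * (1 - Phi(|z|))
```

Being rank-based, the test makes no normality assumption about SSIM, PSNR, or MSE distributions, which suits small, bounded samples such as these.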

Discussion and conclusion

SR methods have commonly been applied to various categories of medical images8,9,10,24,25,26, but less so to 3D angiographic data. Previously, both 2D and 3D deep learning–based SR networks were applied to clinical MRA data to up-sample LR images generated from HR images by k-space sub-sampling by factors of 2 to 6, showing the possibility of reducing scan time by a factor of four27. More recently, the resolution of clinical time-of-flight magnetic resonance angiography (TOF-MRA) images was improved using a 2D pix2pix GAN, which significantly improved diagnostic performance compared with LR TOF-MRA28. In previous work aimed at increasing the resolution of MRI data using deep learning, LR data were frequently synthesized by degrading HR data in k-space or in the image domain9,10,27. The noise characteristics of synthetic LR data may differ from those of LR data actually acquired in MR experiments, which can create data mismatches and degrade performance when a model trained on synthetic LR data is applied to real LR data. In particular, UTE-MRA uses non-uniform radial sampling that must be regridded to a Cartesian grid before the Fourier transform, and the optimal acquisition and reconstruction parameters may differ between HR and LR data, introducing additional sources of mismatch29,30. To our knowledge, this is the first study to use separately acquired (not synthesized) LR and HR contrast-enhanced 3D whole-brain UTE-MRA angiograms with consistent registration between them.
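For contrast with the acquired LR data used here, the synthetic degradation common in earlier work can be sketched as Cartesian k-space truncation: keep only the central 1/factor of k-space along each axis and inverse-transform. This is a generic NumPy illustration of that prior approach (the function name is hypothetical), not part of the authors' pipeline. It also shows why mismatch arises: it models neither radial sampling and regridding nor the different noise of a genuinely shorter scan.

```python
import numpy as np


def kspace_truncate(hr: np.ndarray, factor: int = 2) -> np.ndarray:
    """Simulate a low-resolution magnitude volume by keeping only the central
    1/factor of Cartesian k-space along each axis (even sizes assumed)."""
    shape = np.array(hr.shape)
    lr_shape = shape // factor
    k = np.fft.fftshift(np.fft.fftn(hr))          # move the DC component to the centre
    start = (shape - lr_shape) // 2
    crop = tuple(slice(s, s + m) for s, m in zip(start, lr_shape))
    lr = np.fft.ifftn(np.fft.ifftshift(k[crop]))
    # the kept coefficients were summed over prod(shape) voxels, but ifftn divides by
    # prod(lr_shape); rescale so that a uniform region keeps its intensity
    return np.abs(lr) * (np.prod(lr_shape) / np.prod(shape))
```

Applied to a 256³ HR volume with `factor=2`, this yields a 128³ volume, the same matrix size as the acquired LR data, but with noise that is simply inherited from the HR scan.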

The quality of a generated SR image is strongly influenced by factors such as the model architecture, training data, optimization method, and cost function. Compared with SR-ResNet and MRDG64, our proposed model consistently produced superior SR images with fewer artifacts when applied to UTE-MRA data. Up-sampling features at different depths and connecting them through simple convolutions enhances the network's ability to generate accurate data. Although increasing network depth improves performance, it also increases computational cost and inference time; given our goal of reducing the overall time to obtain SR data, we fixed the depth at 6 residual dense blocks. In addition, training SR models with PSNR-oriented loss, such as L2 loss, tends to produce smoothed images, so additional methods are needed to recover edge details. Conversely, although GAN loss can generate both high- and low-frequency image components, it suffers from artifacts and unstable training28. Because medical data are highly sensitive and artifacts may lead to misdiagnosis, a cost function that is sensitive to both contrast and edge detail while producing fewer artifacts is considered appropriate. Notably, using a smaller patch size and applying L2 loss to both the images and the gradients of HR and SR significantly enhances image quality10,31.

Because MRA generally relies on flow-induced contrast, fully occluded blood vessels cannot be seen even in the original HR images owing to the absence of flow32,33. Our work therefore focuses exclusively on better visualizing the healthy blood vessels that UTE-MRA can depict, in a shorter time and with a less computationally intensive model. LR data acquisition takes 16 min, only about a quarter of the time needed to obtain an HR image, but LR images lack details of smaller vessels that are clearly visible in HR images. As Figs. 6 and 7 and Supplementary Figs. 3 and 4 show, the ipsilateral and contralateral hemispheres look similar in LR images, with some blood vessels appearing occluded even in the contralateral hemisphere (arrows in Figs. 6 and 7). This discrepancy risks confusion and misguided diagnosis and treatment. In contrast, no such discrepancy appears in the HR and SR images. Despite not being trained on stroke data, our model produced SR images that closely resembled the HR images, in substantially less time (16 min acquisition plus 18 s inference). This demonstrates the potential of training deep learning models on HC data and subsequently applying them to data from pathological conditions, which is especially pertinent when obtaining HR data from patients with time-sensitive diseases, such as acute stroke, is clinically infeasible.

The quantity of training data in this research was limited; acknowledging the well-established dependence of deep learning models on abundant data, we mitigated this constraint using k-fold cross-validation. In addition, all methods performed worse on stroke data than on HC data, likely because of dynamic vascular changes in the permanent stroke model during acquisition of the HR and LR data; this effect could be lessened by including stroke data in the training dataset. Because no SR model is perfectly accurate, it remains challenging to reconstruct all the smaller blood vessels and bifurcations visible in the original HR image, as shown by the red arrows in Figs. 3 and 5; this could be improved by training on a larger dataset and applying further optimizations28. Despite these difficulties in recovering every HR detail, the improvement over LR images is significant9,10,27,28,34,35. The proposed approach may therefore be clinically advantageous in time-sensitive cases where acquiring HR images is technically challenging or risky28,35. For stroke and non-cooperative patients, a 16-min scan may not be clinically feasible; however, because human blood vessels are larger than those of rodents, clinical translation may allow starting from lower resolutions, which require only a fraction of the acquisition time depending on the matrix size. Upon clinical translation, this approach could help clinicians and patients reduce time and cost, thereby increasing patient safety and improving diagnostic accuracy for various vascular disorders such as ischemia, hemorrhage, and aneurysm. Lastly, combined synergistically with compressed sensing and faster imaging techniques, deep learning SR models could greatly accelerate acquisition and mitigate the long scan times that are a main limitation of MRI in general.
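The reported split (15 training and 5 validation HC animals out of 20) implies 4 folds. A minimal sketch of such a split is shown below. It assumes, as seems prudent though the text does not state it, that folds are formed per animal rather than per patch, because overlapping patches from one volume would otherwise leak between training and validation. `subject_kfold` is a hypothetical helper.

```python
import random


def subject_kfold(subject_ids, k=4, seed=0):
    """Yield (train_ids, val_ids) with whole animals assigned to folds (hypothetical helper)."""
    ids = list(subject_ids)
    random.Random(seed).shuffle(ids)
    folds = [ids[i::k] for i in range(k)]        # k roughly equal, disjoint folds
    for i in range(k):
        train = [s for j, fold in enumerate(folds) if j != i for s in fold]
        yield train, folds[i]
```

With 20 animals and 343 patches per volume, each fold gives 15 × 343 = 5145 training patches and 5 × 343 = 1715 validation patches, matching the counts reported in the Methods.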

In conclusion, the resolution of contrast-enhanced UTE-MRA was significantly improved relative to LR data by combining a well-designed network, a suitable loss function, and training with a smaller patch size. Our proposed PSNR-only model outperformed the other methods and provided SR data close to the desired HR data.

Data availability

The datasets generated during and/or analysed during the current study are available from the corresponding author on reasonable request.

Abbreviations

- ADC: Apparent diffusion coefficient
- BCE: Binary cross-entropy
- CCA: Common carotid artery
- CNN: Convolutional neural network
- DWI: Diffusion weighted image
- ESRGAN: Enhanced super-resolution generative adversarial network
- FLOPs: Floating-point operations
- GAN: Generative adversarial network
- GELU: Gaussian error linear unit
- HC: Healthy control
- HR: High resolution
- IACUC: Institutional Animal Care and Use Committee
- LR: Low resolution
- LSRDG: Ladder-shaped residual dense generator
- LSRDGAN: Ladder-shaped residual dense generative adversarial network
- MCA: Middle cerebral artery
- MCAO: Middle cerebral artery occlusion
- MION: Monocrystalline iron oxide nanoparticle
- MRA: Magnetic resonance angiography
- MRDG: Memory efficient residual dense generator
- MRI: Magnetic resonance imaging
- MSE: Mean squared error
- NADAM: Nesterov-accelerated adaptive moment estimation
- PSNR: Peak signal to noise ratio
- ReLU: Rectified linear unit
- SR: Super resolution
- SRGAN: Super-resolution generative adversarial network
- SR-ResNet: Super resolution residual network
- SSIM: Structural similarity index measure
- TE: Echo time
- TOF-MRA: Time of flight magnetic resonance angiography
- TR: Time of repetition
- UNIST: Ulsan National Institute of Science and Technology
- UTE-MRA: Ultrashort echo time magnetic resonance angiography
- VGG: Visual geometry group

References

Katsuki, M. et al. Usefulness of 3 tesla ultrashort echo time magnetic resonance angiography (Ute-mra, silent-mra) for evaluation of the mother vessel after cerebral aneurysm clipping: Case series of 19 patients. Neurol. Med. Chir. (Tokyo). 61(3), 193–203. https://doi.org/10.2176/nmc.oa.2020-0336 (2021).

Suzuki, T. et al. Usefulness of silent magnetic resonance angiography for intracranial aneurysms treated with a flow re-direction endoluminal device. Interv. Neuroradiol. https://doi.org/10.1177/15910199231174546 (2023).

Suzuki, T. et al. Superior visualization of neovascularization with silent magnetic resonance angiography compared to time-of-flight magnetic resonance angiography after bypass surgery in moyamoya disease. World Neurosurg. 175, e1292–e1299. https://doi.org/10.1016/j.wneu.2023.04.119 (2023).

Suzuki, T. et al. Non-contrast-enhanced silent magnetic resonance angiography for assessing cerebral aneurysms after PulseRider treatment. Jpn. J. Radiol. 40(9), 979–985. https://doi.org/10.1007/s11604-022-01276-z (2022).

Kang, M. S., Jin, S. H., Lee, D. K. & Cho, H. J. MRI visualization of whole brain macro- and microvascular remodeling in a rat model of ischemic stroke: A pilot study. Sci. Rep. https://doi.org/10.1038/s41598-020-61656-1 (2020).

Chen, Y. et al. Efficient and accurate MRI super-resolution using a generative adversarial network and 3D multi-level densely connected network. Lect. Notes Comput. Sci. https://doi.org/10.1007/978-3-030-00928-1_11 (2018).

Higo, Y., Komagata, S., Katsuki, M., Kawamura, S. & Koh, A. 1.5 Tesla non-ultrashort but short echo time magnetic resonance angiography describes the arteries near a clipped cerebral aneurysm. Cureus https://doi.org/10.7759/cureus.16611 (2021).

Chen, Y. et al. Brain MRI super resolution using 3D deep densely connected neural networks. In Proc. International Symposium on Biomedical Imaging (ISBI 2018) 739–742. https://doi.org/10.1109/ISBI.2018.8363679 (2018).

Wang, J., Chen, Y., Wu, Y., Shi, J. & Gee, J. Enhanced generative adversarial network for 3D brain MRI super-resolution. In Proc. 2020 IEEE Winter Conference on Applications of Computer Vision (WACV 2020) 3616–3625. https://doi.org/10.1109/WACV45572.2020.9093603 (2020).

Sánchez, I. & Vilaplana, V. Brain MRI super-resolution using 3D generative adversarial networks. https://github.com/imatge-upc/3D-GAN-superresolution (2018). Accessed 23 September 2023.

Li, H. et al. SRDiff: Single image super-resolution with diffusion probabilistic models. Neurocomputing 479, 47–59. https://doi.org/10.1016/j.neucom.2022.01.029 (2022).

Lugmayr, A., Danelljan, M., Van Gool, L. & Timofte, R. SRFlow: Learning the super-resolution space with normalizing flow. Lect. Notes Comput. Sci. 12350, 715–732. https://doi.org/10.1007/978-3-030-58558-7_42 (2020).

Ledig, C. et al. Photo-realistic single image super-resolution using a generative adversarial network. In Proc. 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 105–114. https://doi.org/10.1109/CVPR.2017.19 (2017).

Gubskiy, I. L. et al. MRI guiding of the middle cerebral artery occlusion in rats aimed to improve stroke modeling. Transl. Stroke Res. 9(4), 417–425. https://doi.org/10.1007/s12975-017-0590-y (2018).

Jang, M. J., Han, S. H. & Cho, H. J. Correspondence between development of cytotoxic edema and cerebrospinal fluid volume and flow in the third ventricle after ischemic stroke. J. Stroke Cerebrovasc. Dis. https://doi.org/10.1016/j.jstrokecerebrovasdis.2023.107200 (2023).

Maggioni, M., Katkovnik, V., Egiazarian, K. & Foi, A. Nonlocal transform-domain filter for volumetric data denoising and reconstruction. IEEE Trans. Image Process. 22(1), 119–133. https://doi.org/10.1109/TIP.2012.2210725 (2013).

Szegedy, C., Ioffe, S., Vanhoucke, V. & Alemi, A. A. Inception-v4, Inception-ResNet and the impact of residual connections on learning. In Proc. 31st AAAI Conference on Artificial Intelligence, 4278–4284. https://doi.org/10.1609/aaai.v31i1.11231 (2017).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 770–778. https://doi.org/10.1109/CVPR.2016.90 (2016).

Kim, J., Lee, J. K. & Lee, K. M. Accurate image super-resolution using very deep convolutional networks. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 1646–1654. https://doi.org/10.1109/CVPR.2016.182 (2016).

Hendrycks, D. & Gimpel, K. Gaussian error linear units (GELUs). Preprint at https://arxiv.org/abs/1606.08415v5 (2016). Accessed 23 November 2023.

Meister, C., Salesky, E. & Cotterell, R. Generalized entropy regularization or: There’s nothing special about label smoothing. In Proc. Annual Meeting of the Association for Computational Linguistics, 6870–6886. https://doi.org/10.18653/v1/2020.acl-main.615 (2020).

Szegedy, C., Vanhoucke, V., Ioffe, S., Shlens, J. & Wojna, Z. Rethinking the Inception architecture for computer vision. In Proc. IEEE Computer Society Conference on Computer Vision and Pattern Recognition, 2818–2826. https://doi.org/10.1109/CVPR.2016.308 (2016).

Dozat, T. Incorporating Nesterov momentum into Adam. ICLR Workshop https://openreview.net/forum?id=OM0jvwB8jIp57ZJjtNEZ (2016). Accessed 20 November 2023.

McDonagh, S. et al. Context-sensitive super-resolution for fast fetal magnetic resonance imaging. Lect. Notes Comput. Sci. 10555, 116–126. https://doi.org/10.1007/978-3-319-67564-0_12 (2017).

You, C. et al. CT super-resolution GAN constrained by the identical, residual, and cycle learning ensemble (GAN-CIRCLE). IEEE Trans. Med. Imaging. 39(1), 188–203. https://doi.org/10.1109/TMI.2019.2922960 (2020).

Hatvani, J. et al. Deep learning-based super-resolution applied to dental computed tomography. IEEE Trans. Radiat. Plasma Med. Sci. 3(2), 120–128. https://doi.org/10.1109/TRPMS.2018.2827239 (2019).

Koktzoglou, I., Huang, R., Ankenbrandt, W. J., Walker, M. T. & Edelman, R. R. Super-resolution head and neck MRA using deep machine learning. Magn. Reson. Med. 86, 335–345. https://doi.org/10.1002/mrm.28738 (2021).

Wicaksono, K. P. et al. Super-resolution application of generative adversarial network on brain time-of-flight MR angiography: Image quality and diagnostic utility evaluation. Eur. Radiol. 33(2), 936–946. https://doi.org/10.1007/s00330-022-09103-9 (2023).

Chan, R. W., Ramsay, E. A., Cunningham, C. H. & Plewes, D. B. Temporal stability of adaptive 3D radial MRI using multidimensional golden means. Magn. Reson. Med. 61(2), 354–363. https://doi.org/10.1002/MRM.21837 (2009).

Feng, L. Golden-Angle Radial MRI: Basics, Advances, and Applications. J. Magn. Reson. Imaging 56(1), 45–62. https://doi.org/10.1002/JMRI.28187 (2022).

Isola, P., Zhu, J. Y., Zhou, T. & Efros, A. A. Image-to-image translation with conditional adversarial networks. In Proc. 30th IEEE Conference on Computer Vision and Pattern Recognition (CVPR), 5967–5976. https://doi.org/10.1109/CVPR.2017.632 (2017).

CCS, P., Gattás, G. S., Nunes, D. M., Nunes, R. F. & Bariani, D. B. A case of idiopathic progressive middle cerebral arteriopathy in a child with a lenticulostriate stroke and the cerebral perfusion improvement after EDAS surgery. Open Access J. Neurol. Neurosurg. 8(3), 48–53. https://doi.org/10.19080/OAJNN.2018.08.555737 (2018).

Tong, E., Hou, Q., Fiebach, J. B. & Wintermark, M. The role of imaging in acute ischemic stroke. Neurosurg. Focus. 36(1), E3. https://doi.org/10.3171/2013.10.FOCUS13396 (2014).

Yasaka, K. et al. Impact of deep learning reconstruction on intracranial 1.5 T magnetic resonance angiography. Jpn. J. Radiol. 40(5), 476–483. https://doi.org/10.1007/s11604-021-01225-2 (2022).

You, S. H. et al. Deep learning-based synthetic TOF-MRA generation using time-resolved MRA in fast stroke imaging. Am. J. Neuroradiol. 44(12), 1391–1398. https://doi.org/10.3174/AJNR.A8063 (2023).

Acknowledgements

This work was partially supported by grants from the National Research Foundation of Korea funded by the Korean government (Nos. 2018R1A6A1A03025810 and RS-2024-00508681). This research was also supported by the KBRI basic research program through the Korea Brain Research Institute, funded by the Ministry of Science and ICT (25-BR-03-02).

Funding

KBRI basic research program through the Korea Brain Research Institute, funded by the Ministry of Science and ICT (25-BR-03-02); National Research Foundation of Korea (RS-2024-00508681); National Research Foundation of Korea (2018R1A6A1A03025810).

Author information

Contributions

H.J.C. and A.W.T. planned and designed the experiment. A.W.T., S.H.J., and Y.L.G. collected the data. H.J.C. and A.W.T. processed the data and implemented the deep learning models. H.J.C. and A.W.T. wrote the paper together. All authors read and approved the manuscript.

Ethics declarations

Competing interests

The authors declare that they have no competing financial interests or personal relationships that could have influenced the research in this study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Tessema, A.W., Jin, S., Gong, Y. et al. Robust resolution improvement of 3D UTE-MR angiogram of normal vasculatures using super-resolution convolutional neural network. Sci Rep 15, 9383 (2025). https://doi.org/10.1038/s41598-025-92493-9

DOI: https://doi.org/10.1038/s41598-025-92493-9