Abstract

Early screening for lung nodules is mainly performed manually by reading patients’ lung CT scans. This approach is time-consuming, laborious, and prone to missed diagnoses and misdiagnoses. Current lung nodule detection methods are limited by the high cost of obtaining large-scale, high-quality annotated datasets and by poor robustness to data of varying quality. The main challenges are accurately detecting small and irregular nodules and ensuring that models generalize across data sources. This paper therefore proposes a lung nodule detection model based on semi-supervised learning and knowledge distillation (SSLKD-UNet). First, we design a feature encoder with a hybrid CNN-Transformer architecture to fully extract the features of lung nodule images. Second, we design a distillation training strategy in which a teacher model guides the student model to learn features that are more relevant to nodule regions in the CT images. Finally, following the idea of semi-supervised learning, we apply rough annotations of lung nodules to the LUNA16 and LC183 datasets and combine them with accurate lung nodule annotations to complete the model training process. Experiments show that, guided by the semi-supervised learning and knowledge distillation training strategies, the proposed model can be trained with a small amount of inexpensive, easy-to-obtain coarse-grained annotations of pulmonary nodules (that is, inaccurate or incomplete annotations, e.g., nodule coordinates instead of pixel-level segmentation masks) and can achieve early recognition of lung nodules.
The segmentation results further corroborate the model’s efficacy, with SSLKD-UNet demonstrating superior delineation of lung nodules, even in cases with complex anatomical structures and varying nodule sizes.


Introduction

Lung cancer is one of the most common malignant tumours1. Its incidence and mortality rates rank first in the world2, and it is the leading cause of cancer death for both men and women worldwide, posing a serious threat to human health. In China, over \(75\%\) of patients are diagnosed with advanced or metastatic lung cancer3, and five-year mortality is relatively high. Early diagnosis and precise treatment of lung cancer are therefore crucial for improving patient survival rates4,5. In recent years, imaging methods such as chest X-ray, positron emission tomography (PET), computed tomography (CT), and magnetic resonance imaging (MRI) have been used to monitor the occurrence and severity of lung cancer6. CT and MRI are currently important examination methods for the early detection and auxiliary diagnosis of lung cancer. From CT and MRI images, radiologists can identify lung nodules, judge their malignancy, and monitor changes in their size and growth trends, which makes these modalities important tools for early screening and detection of lung cancer7. Because lung nodules are one of the early signs of lung cancer, accurately detecting nodules and judging whether they are benign or malignant at an early stage enables early screening for lung cancer and thereby improves patient prognosis and survival. However, as the number of lung cancer patients grows, radiologists face increasing workloads, insufficient staffing, rising diagnostic standards, and increasingly complex medical images8. Radiologists’ evaluations of lung nodules are easily influenced by subjective judgment, and factors such as the location and size of the lesion and the density of the surrounding tissue can also affect their assessment. As a result, nodules are frequently missed or misdiagnosed during manual screening.
The emergence of artificial intelligence has made it possible to screen for and diagnose early lung cancer automatically from patient images. AI algorithms can learn feature information about lung cancer from massive, high-dimensional medical data of lung cancer patients and use that information to predict the incidence of lung cancer in new patients, helping doctors screen for early lung cancer more effectively and quickly. With the continuous development of deep learning, more and more deep learning algorithms are being used to assist clinicians in disease diagnosis7,9,10. These algorithms improve the accuracy of lung cancer diagnosis and reduce the workload of radiologists11,12. Xie et al.13 proposed a novel MV-KBC deep model to classify lung nodules using limited data, which outperformed existing methods. Pal et al.14 introduced a segmentation model named SAC UW-Net, which incorporates self-attention convolutional blocks in the decoder and a transient self-attention block between the encoder and decoder to improve feature representation and handle pixel intensity variations in multimodal medical images. Halder et al.15 presented an integrated lung nodule segmentation and characterization framework based on atrous convolution that effectively captures multi-scale features from HRCT images; its ATCNN2PR variant achieves high performance in both segmentation and characterization and outperforms other frameworks on the LIDC-IDRI dataset. Agnes et al.16 proposed a Wavelet U-Net++ approach for lung nodule segmentation that combines U-Net++ with wavelet pooling to capture both high- and low-frequency image information; it achieved superior performance on the LIDC-IDRI dataset and effectively segmented small and irregular nodules.
Pal et al.17 proposed an attention UW-Net for automatic segmentation and annotation of chest X-ray images, aiming to improve accuracy and to provide probabilistic maps from small datasets that reduce manual annotation. It introduces an intermediate layer between the encoder and decoder pathways, achieving high F1-scores for the segmentation of various organs and outperforming other models. However, existing lung nodule detection algorithms still face challenges: they require large medical image datasets with high-quality lung nodule annotations for training, such annotations are expensive to obtain, and the resulting models are often not robust. Data augmentation techniques such as random cropping and flipping can be employed to enhance robustness. Random cropping lets the model learn from different regions of the image, while horizontal or vertical flipping varies the orientation of lung nodules, helping the model generalize to diverse and unseen data. Developing efficient and accurate lung nodule detection algorithms that can be trained on medical datasets with only a small amount of lung nodule annotation therefore remains worthwhile. Knowledge distillation (KD) is a technique for transferring knowledge from a large, complex model (the teacher) to a smaller, more efficient model (the student)18. The key idea is to train the student to mimic the teacher’s outputs, which can include not only the final predictions but also intermediate representations. Semi-supervised learning (SSL) combines a small amount of labeled data with a large amount of unlabeled data to improve model performance. In this work, we use SSL to leverage unlabeled data and improve the model’s ability to generalize.
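The “mimic the teacher’s output” idea is often implemented with a temperature-scaled soft-target loss. The minimal sketch below shows that generic formulation in plain Python for per-pixel class logits (for example, background vs. nodule). The temperature value and the function names are illustrative assumptions, and this is not the training objective of SSLKD-UNet, whose student is trained on annotations generated by the teacher (see Methods).

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float], temperature: float = 1.0) -> List[float]:
    """Temperature-scaled softmax, shifted by the max logit for numerical stability."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kd_loss(student_logits: Sequence[float], teacher_logits: Sequence[float],
            temperature: float = 2.0) -> float:
    """Generic soft-target distillation loss: T^2 * KL(p_teacher || p_student)."""
    p_t = softmax(teacher_logits, temperature)
    p_s = softmax(student_logits, temperature)
    kl = sum(pt * math.log(pt / ps) for pt, ps in zip(p_t, p_s) if pt > 0)
    # The T^2 factor keeps gradient magnitudes comparable across temperatures.
    return temperature ** 2 * kl


def pixelwise_kd_loss(student_maps: Sequence[Sequence[float]],
                      teacher_maps: Sequence[Sequence[float]],
                      temperature: float = 2.0) -> float:
    """Average the per-pixel KD loss; each entry holds one pixel's class logits."""
    losses = [kd_loss(s, t, temperature) for s, t in zip(student_maps, teacher_maps)]
    return sum(losses) / len(losses)
```

The loss is zero when the student reproduces the teacher’s softened distribution and grows as the two diverge; a higher temperature exposes more of the teacher’s relative confidence in the non-maximal class.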

Fine-grained annotation of lung nodules is expensive, and a large amount of lung nodule image data remains unannotated; both issues pose challenges for lung nodule segmentation. To address them, we build on previous research and develop a new semi-supervised network for lung nodule segmentation based on the teacher-student framework. Our contributions in this paper are three-fold:

1. We collect CT images from a total of 183 lung cancer patients to build a private lung dataset (hereinafter referred to as LC183). We perform windowing, resampling, and cropping on the original lung nodule CT images in the LUNA16 and LC183 datasets, and use the nodule coordinates and other information contained in these datasets to locate and label the cropped CT images, obtaining both fine-grained and coarse-grained lung nodule labels. This process provides a practical method for producing fine- and coarse-grained labels for lung nodules.

2. We design a hybrid CNN-Transformer network for lung nodule segmentation, called SSLKD-UNet. The network improves the window module of the Transformer to reduce computational complexity, and encodes tokenized image patches from the UNet feature map of the convolutional neural network into an input sequence for extracting global context, fully combining the advantages of CNN and Transformer networks.

3. We design a network training process based on knowledge distillation, in which a simple lung nodule segmentation network such as UNet is trained on a small amount of coarse-grained annotated lung nodule data19,20. The trained network then labels a large amount of unannotated lung nodule data, and the resulting annotations are used to train our network. This makes use of a large amount of unannotated lung nodule data and also improves the accuracy with which the model recognizes lung nodules.

Methods

In this section, we first introduce the teacher-student training process of our model. We then present the teacher network used to generate lung nodule annotations and the student network designed for lung nodule segmentation. Finally, we describe the LUNA16 and LC183 datasets, our preprocessing pipeline, and the implementation details.

Training scheme

Figures 1 and 2 illustrate the training process we designed. Before formally commencing the training, we initially divide the dataset into three parts: lung nodule image data \(X_{t}\) and corresponding coarse-grained annotation images \(SL_{t}\) for training the Teacher Annotator, lung nodule image data \(X_{s}\) for training the Student Segmentor, and lung nodule image data \(X_{test}\) along with corresponding fine-grained annotation images \(FL_{test}\) for evaluating the model. These three sets of data are mutually exclusive with no overlap.

The following is the specific training process:

1. The first step of training uses the lung nodule image data \(X_{t}\) and the corresponding coarse-grained annotation images \(SL_{t}\) to train the Teacher Annotator. As the Teacher Annotator, we choose a lung nodule segmentation model that performs well, has a simple structure, and is easy to implement.

2. The second step of training uses the Teacher Annotator trained in the first step to generate lung nodule annotation images \(PL_{s}\) for the lung nodule image data \(X_{s}\). Together, \(PL_{s}\) and \(X_{s}\) constitute the data used to train the Student Segmentor. In this step, \(X_{s}\) is typically a large quantity of lung cancer patient images without lung nodule annotations.

3. The third step of training uses the lung nodule image data \(X_{s}\) together with the annotation images \(PL_{s}\) to train the Student Segmentor. This allows the model not only to incorporate the knowledge the Teacher Annotator encoded in \(PL_{s}\) but also to learn additional nodule-related features directly from \(X_{s}\).

4. The fourth step uses the lung nodule image data \(X_{test}\) and the corresponding fine-grained annotation images \(FL_{test}\) to evaluate the model.
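The four training steps can be sketched end to end with toy stand-ins. In this minimal sketch, “images” are flattened lists of intensities, masks are 0/1 lists, and a hypothetical one-threshold learner (`fit_threshold`) replaces network training for both the Teacher Annotator and the Student Segmentor; only the data flow (\(X_{t}, SL_{t}\) to teacher, teacher to \(PL_{s}\), \((X_{s}, PL_{s})\) to student, student evaluated on \(X_{test}, FL_{test}\)) mirrors the text.

```python
from typing import Callable, List

Image = List[float]   # toy image: flattened pixel intensities
Mask = List[int]      # binary mask, 1 = nodule
Model = Callable[[Image], Mask]


def fit_threshold(images: List[Image], masks: List[Mask]) -> Model:
    """Hypothetical stand-in for training: learn one intensity threshold halfway
    between the mean foreground and the mean background intensity."""
    fg = [p for img, m in zip(images, masks) for p, y in zip(img, m) if y == 1]
    bg = [p for img, m in zip(images, masks) for p, y in zip(img, m) if y == 0]
    if not fg or not bg:
        raise ValueError("need both foreground and background pixels")
    thr = (sum(fg) / len(fg) + sum(bg) / len(bg)) / 2
    return lambda img: [1 if p > thr else 0 for p in img]


def dice(pred: Mask, gt: Mask) -> float:
    """Dice overlap between two binary masks (1.0 when both are empty)."""
    inter = sum(p & g for p, g in zip(pred, gt))
    denom = sum(pred) + sum(gt)
    return 1.0 if denom == 0 else 2 * inter / denom


def run_pipeline(X_t: List[Image], SL_t: List[Mask], X_s: List[Image],
                 X_test: List[Image], FL_test: List[Mask],
                 fit: Callable[[List[Image], List[Mask]], Model] = fit_threshold) -> float:
    teacher = fit(X_t, SL_t)                    # step 1: teacher on coarse labels
    PL_s = [teacher(x) for x in X_s]            # step 2: annotations for unlabelled X_s
    student = fit(X_s, PL_s)                    # step 3: student on (X_s, PL_s)
    scores = [dice(student(x), y) for x, y in zip(X_test, FL_test)]
    return sum(scores) / len(scores)            # step 4: mean Dice on the test split
```

In the toy run below, the coarse box in `SL_t` also covers one background pixel, yet the student trained on the teacher’s annotations still recovers the fine test mask; real networks would of course replace `fit_threshold`.

```python
score = run_pipeline(
    X_t=[[0.1, 0.2, 0.9, 0.8]], SL_t=[[0, 1, 1, 1]],
    X_s=[[0.1, 0.7, 0.95, 0.2]],
    X_test=[[0.3, 0.85, 0.05, 0.6]], FL_test=[[0, 1, 0, 1]],
)
```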

Teacher annotator

The teacher-student framework is particularly well-suited for lung nodule segmentation in a semi-supervised context for several reasons. First, it allows knowledge to be transferred effectively from a pre-trained teacher model, which has learned to recognize key features of lung nodules, to a student model. This transfer is crucial when labeled data are scarce, as it gives the student model a strong initial understanding of the task. Second, the framework enables the student model to learn from both the limited labeled data and the pseudo-labeled data generated by the teacher, making efficient use of the available data and improving the model’s ability to generalize to new, unseen lung nodule images. This dual learning approach is advantageous in medical imaging, where acquiring large annotated datasets is often impractical and the ability to learn from a combination of labeled and unlabeled data is essential for developing robust segmentation models. Following this training setup, we first choose a deep learning-based lung nodule segmentation network as the Teacher Annotator. Previous research has demonstrated the efficiency and effectiveness of the UNet network, so we select UNet as the baseline Teacher Annotator21. We then also use networks such as ResUNet22, AttUNet23, SwinUNet24, and UTransformer25 as Teacher Annotators to annotate a large number of lung nodules.

Student segmentor

We design a hybrid CNN-Transformer architecture for medical image segmentation, called SSLKD-UNet, that leverages the strengths of both components, in particular preserving fine-grained spatial detail while capturing the global context needed for accurate localization in medical images. The model integrates a Swin-Transformer into the bottleneck layer of a CNN-based U-Net to fuse the two. Specifically, the U-Net encoder first extracts local features from the image; these features are then fed into the Swin-Transformer to capture global information; finally, the U-Net decoder upsamples the features to restore the original resolution. Figure 3 shows the model. The hybrid CNN-Transformer design addresses the loss of feature resolution introduced by Transformers, combining detailed high-resolution spatial information from CNN features with the global context encoded by the Swin-Transformer. The window mechanism in the Swin-Transformer limits the attention span of each token to a fixed-size window, reducing the computational complexity from \(O(n^2)\) to \(O(n\times w)\), where n is the sequence length and w is the window size (the number of tokens per window). This makes it far more efficient than global self-attention, which attends to all tokens, while still capturing local context and scaling to large inputs such as high-resolution images.
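The complexity reduction can be made concrete by counting query-key score pairs. The sketch below assumes a \(96 \times 96\) crop, so that \(N=\frac{H}{4}\times \frac{W}{4}=24\times 24=576\) tokens, and a hypothetical \(8\times 8\) window (chosen so that 24 divides evenly; the paper does not state its window size).

```python
def attention_pairs_global(n_tokens: int) -> int:
    """Global self-attention scores every token against every token: n^2 pairs."""
    return n_tokens * n_tokens


def attention_pairs_windowed(h: int, w: int, m: int) -> int:
    """Non-overlapping m x m windows on an h x w token grid: each token is
    scored only against the m^2 tokens of its own window, i.e. n * m^2 pairs."""
    if h % m or w % m:
        raise ValueError("token grid must be divisible by the window size")
    num_windows = (h // m) * (w // m)
    return num_windows * (m * m) ** 2


n = 24 * 24                                   # tokens for a 96 x 96 crop at 1/4 resolution
global_pairs = attention_pairs_global(n)      # 331,776 pairs
window_pairs = attention_pairs_windowed(24, 24, 8)  # 36,864 pairs, 9x fewer
```

The windowed count equals \(n \times M^2\), so it grows linearly with the number of tokens for a fixed window, whereas the global count grows quadratically.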
SSLKD-UNet is designed for medical image segmentation and is applied here to segmenting lung nodules in CT images. Its architecture follows the well-known U-Net encoder-decoder structure, with three layers in both the encoder and the decoder. Encoding the image before it enters the Swin-Transformer efficiently extracts local image features and keeps the computational cost of the early stages of the model low. The feature map produced by the encoder is then fed into the Swin-Transformer for further processing, and the decoder mirrors the structure of the encoder.

The Swin-Transformer uses a shifted-window operation to reduce the computational complexity of self-attention. It contains 12 Swin-Transformer layers, each of which performs an attention computation. The attention computation is performed in two stages: first within regular non-overlapping windows, and then within new windows obtained by shifting the first windows to the right by half of their width. This operation substantially reduces the computational cost of the model, making it practical for image segmentation. Within each Swin-Transformer layer, self-attention is computed per window as follows. First, the H and W dimensions of the feature map are flattened into a single dimension to obtain the input sequence \(z_0\in R^{d\times N}\) of the Swin-Transformer, where \(N=\frac{H}{4}\times \frac{W}{4}\) is the number of tokens in the sequence. The self-attention computation for each window follows Equation 1 and Equation 2. These equations involve the parameter matrices \(W_q \in R^{d \times d}\), \(W_k \in R^{d \times d}\), and \(W_v \in R^{d \times d}\), where d is the feature dimension and \(x_i \in R^{M^2 \times d}\) are the features of the \(M^2\) tokens in each \(M \times M\) window. Multiplying the window features by these matrices produces three matrices: \(Q \in R^{M^2 \times d}\), \(K \in R^{M^2 \times d}\), and \(V \in R^{M^2 \times d}\). In addition, the self-attention calculation incorporates positional information through a learnable relative position bias \(B \in R^{M^2 \times M^2}\).
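Assuming Equations 1 and 2 take the standard Swin form, \(Q = x_i W_q\), \(K = x_i W_k\), \(V = x_i W_v\) and \(\mathrm{Attention}(Q,K,V)=\mathrm{SoftMax}(QK^{T}/\sqrt{d}+B)V\), the computation for a single window can be sketched in plain Python as below. This is a single-head illustration without the window partitioning, shifting, or masking of the full layer, and the helper names are hypothetical.

```python
import math
from typing import List

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain matrix product a @ b."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(a: Matrix) -> Matrix:
    """Row-wise softmax, shifted by each row's max for numerical stability."""
    out = []
    for row in a:
        m = max(row)
        e = [math.exp(v - m) for v in row]
        s = sum(e)
        out.append([v / s for v in e])
    return out


def window_attention(x: Matrix, w_q: Matrix, w_k: Matrix, w_v: Matrix, bias: Matrix) -> Matrix:
    """x: M^2 x d features of one window; w_*: d x d projections; bias: M^2 x M^2."""
    d = len(x[0])
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    scores = matmul(q, transpose(k))                        # M^2 x M^2 similarities
    scores = [[s / math.sqrt(d) + b for s, b in zip(s_row, b_row)]
              for s_row, b_row in zip(scores, bias)]        # scale and add relative bias
    return matmul(softmax_rows(scores), v)                  # weighted sum of values
```

Because the score matrix is only \(M^2 \times M^2\) per window, its size is independent of the full sequence length N, which is where the savings described above come from.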

To match the size of the encoder’s feature map, the Swin-Transformer output is passed through two consecutive convolution operations that reshape it to \(d\times \frac{H}{4}\times \frac{W}{4}\). After these operations, the feature map has the same shape as the output of the encoder.
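The shape bookkeeping can be checked with two small helpers. The sketch assumes the token sequence is laid out row-major over the \(\frac{H}{4}\times\frac{W}{4}\) grid and that the two convolutions are \(3\times 3\) with stride 1 and padding 1 (hypothetical choices; the paper does not give kernel sizes). `conv2d_out` follows the output-size formula documented for PyTorch’s `Conv2d`.

```python
from typing import List


def sequence_to_map(z: List[List[float]], h: int, w: int) -> List[List[List[float]]]:
    """Fold a d x N token sequence (N = h * w, row-major) back into a d x h x w map."""
    if any(len(channel) != h * w for channel in z):
        raise ValueError("each channel must hold exactly h * w tokens")
    return [[channel[r * w:(r + 1) * w] for r in range(h)] for channel in z]


def conv2d_out(size: int, kernel: int, stride: int = 1, padding: int = 0, dilation: int = 1) -> int:
    """Spatial output size of a 2D convolution along one axis."""
    return (size + 2 * padding - dilation * (kernel - 1) - 1) // stride + 1


# A 96 x 96 crop gives a 24 x 24 token grid; two 3x3, stride-1, padding-1 convolutions keep it 24 x 24.
side = conv2d_out(conv2d_out(24, kernel=3, padding=1), kernel=3, padding=1)
```

With padding equal to half the kernel width (rounded down) and stride 1, each convolution preserves the spatial size, so only the channel dimension needs to be set to d.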

Dataset

To evaluate our model more thoroughly, we test it on a public dataset and on a private dataset that we built. The datasets are described in detail below.

Ethical approval

All methods used in this study followed the relevant ethical guidelines and regulatory requirements. All experimental protocols were approved by the Ethics Review Committee of the First Affiliated Hospital of Dalian Medical University. Before the study began, all participants or their legal guardians signed informed consent forms confirming that they were fully informed about the purpose, procedure, potential risks, and benefits of the study.

LUNA16

The LUNA16 dataset26 is a subset of LIDC-IDRI27, the largest public lung nodule dataset, and is intended for automatic detection and segmentation of lung cancer. It includes 888 lung CT scans, each containing 1-4 nodules, for a total of 1186 nodules. Image sizes range from \(512\times 512\times 95\) to \(512\times 512\times 733\), with a voxel size of \(0.78 \times 0.78 \times 1.25\) \(mm^3\). The ground-truth lung masks were generated using an automatic segmentation algorithm28 and label the right lung, left lung, and trachea separately. LUNA16 nodule annotations are given as nodule locations (x-, y-, and z-axis coordinates), and the original in-plane image size is \(512\times 512\).
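LUNA16 nodule locations are given in world coordinates (millimetres) together with a nodule diameter, so they must be mapped onto the voxel grid before cropping or labelling. A minimal sketch, assuming the scan origin and spacing have been read from the image header and that the direction matrix is the identity (no rotation); the function names are illustrative. If the volume is loaded as an array in (z, y, x) order, as SimpleITK’s `GetArrayFromImage` returns it, the returned (x, y, z) index must be reversed before indexing.

```python
from typing import Sequence, Tuple


def world_to_voxel(world_xyz: Sequence[float], origin_xyz: Sequence[float],
                   spacing_xyz: Sequence[float]) -> Tuple[int, ...]:
    """Map a world-space point (mm) to the nearest (x, y, z) voxel index.

    Assumes an identity direction matrix between image and world axes.
    """
    return tuple(round((w - o) / s) for w, o, s in zip(world_xyz, origin_xyz, spacing_xyz))


def diameter_in_voxels(diameter_mm: float, spacing_mm: float) -> float:
    """Convert a nodule diameter in mm into voxels along one axis."""
    return diameter_mm / spacing_mm


# Hypothetical header values with the voxel size quoted above (0.78 x 0.78 x 1.25 mm).
idx = world_to_voxel((-50.0, 20.0, -100.0), (-200.0, -180.0, -300.0), (0.78, 0.78, 1.25))
```

Because the slice spacing usually differs from the in-plane spacing, the same diameter spans a different number of voxels along z than along x and y, which is one reason the CT volumes are resampled during preprocessing.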

LC183

We collect CT data from 183 lung cancer patients at the First Affiliated Hospital of Dalian Medical University; we refer to this dataset as LC183. The data are collected with the informed consent of all participants and in accordance with the ethical guidelines of the hospital’s Ethics Committee. Each patient has between one and three pulmonary nodules in the images. Image sizes range from \(512\times 512\times 49\) to \(512\times 512\times 368\), with voxel sizes ranging from \(0.50 \times 0.50 \times 0.50\) \(mm^3\) to \(1.25 \times 1.25 \times 5\) \(mm^3\). LC183 lung nodule labels are stored in nii.gz format as mask images of the nodules.

Preprocessing

We perform a series of preprocessing operations on both datasets to make them more suitable for model training.

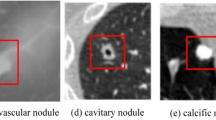

The lung cancer images of patients in the LUNA16 dataset are computed tomography (CT) images. CT imaging scans a cross-section of the body of a given thickness with precisely collimated X-rays and reconstructs a 3D image of that section from the received X-ray signals, providing higher spatial resolution and clearer three-dimensional views of lesions than plain X-rays. The original CT files contain images of all organs within the scanned region of the body, including interfering regions such as air and blood vessels. To improve the model’s segmentation of lung nodules, following29, we first apply windowing to the CT images to highlight the lung region. Fig. 4 shows several CT images from the LUNA16 dataset before and after windowing. We then resample the CT images in the LUNA16 dataset with a linear interpolation algorithm so that the spacing between scan planes in each patient’s CT image is 1 mm. This addresses the inconsistent spatial scales caused by differing scan-plane spacings across CT images (Fig. 5).
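The two preprocessing steps can be sketched as follows. The window level and width (−600 HU and 1500 HU, a commonly used lung window) are assumptions, since the values used here are not stated, and the resampler interpolates one axis (for example, the intensities along z at a fixed in-plane position) onto a 1 mm grid. A full 3D pipeline would more typically call a library routine such as `scipy.ndimage.zoom` with `order=1`.

```python
from typing import List, Sequence


def apply_window(hu: Sequence[float], level: float = -600.0, width: float = 1500.0) -> List[float]:
    """Clip Hounsfield units to [level - width/2, level + width/2] and rescale to [0, 1]."""
    lo, hi = level - width / 2, level + width / 2
    return [(min(max(v, lo), hi) - lo) / (hi - lo) for v in hu]


def resample_linear(values: Sequence[float], spacing: float, new_spacing: float = 1.0) -> List[float]:
    """Linearly interpolate samples taken every `spacing` mm onto a grid every `new_spacing` mm."""
    if len(values) < 2:
        return list(values)
    extent = (len(values) - 1) * spacing      # physical length covered, in mm
    n_new = int(extent // new_spacing) + 1    # number of output samples
    out = []
    for i in range(n_new):
        pos = i * new_spacing / spacing       # position in input-index units
        j = min(int(pos), len(values) - 2)    # left neighbour index
        t = pos - j                           # interpolation weight for the right neighbour
        out.append(values[j] * (1 - t) + values[j + 1] * t)
    return out


windowed = apply_window([-2000.0, -600.0, 1000.0])          # air, lung window centre, bone
resampled = resample_linear([0.0, 10.0, 20.0], spacing=2.0)  # 2 mm slices onto a 1 mm grid
```

Windowing first discards intensity ranges irrelevant to lung tissue; resampling afterwards makes a voxel step correspond to the same physical distance in every scan.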

The LUNA16 dataset includes the coordinates of lung nodules in the CT images. Previous studies30 have generated fine-grained lung nodule annotations from these coordinates. To explore how well our model learns from coarse-grained annotations and how well it recognizes lung nodules, we use the nodule coordinates to generate both fine-grained and coarse-grained annotations. As shown in Fig. 6, we first crop each original \(512 \times 512\) CT image around the lung nodule locations to obtain \(96 \times 96\) images. We then annotate the lung nodules in the cropped images using the LUNA16 nodule coordinates. The fine-grained annotation is an irregular region that precisely covers the nodule, whereas the coarse-grained annotation is a rectangular box that covers the nodule and part of its surroundings. From the fine-grained and coarse-grained annotations, we generate detailed and rough label maps for training and validating the model.
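The cropping and box-labelling steps can be sketched on 2D slices. In this minimal sketch, a \(96 \times 96\) patch is centred on the nodule and shifted inward at the image border, and the coarse label is the fine mask’s bounding box enlarged by a margin. The margin value, the border handling, and the idea of deriving the box from a fine mask (as would be possible for LC183’s mask labels) are assumptions for illustration.

```python
from typing import List, Tuple

Grid = List[List[int]]


def crop_around(image: Grid, center_rc: Tuple[int, int], size: int = 96) -> Tuple[Grid, Tuple[int, int]]:
    """Crop a size x size patch centred on (row, col), shifted inward at the borders.
    Returns the patch and its top-left corner in the original image."""
    h, w = len(image), len(image[0])
    if h < size or w < size:
        raise ValueError("image smaller than crop size")
    r0 = min(max(center_rc[0] - size // 2, 0), h - size)
    c0 = min(max(center_rc[1] - size // 2, 0), w - size)
    return [row[c0:c0 + size] for row in image[r0:r0 + size]], (r0, c0)


def coarse_box_mask(fine_mask: Grid, margin: int = 2) -> Grid:
    """Rectangular coarse label: bounding box of the fine mask, grown by `margin` pixels."""
    h, w = len(fine_mask), len(fine_mask[0])
    out = [[0] * w for _ in range(h)]
    rows = [r for r in range(h) if any(fine_mask[r])]
    cols = [c for c in range(w) if any(row[c] for row in fine_mask)]
    if not rows:
        return out  # no nodule pixels: empty coarse label
    r0, r1 = max(rows[0] - margin, 0), min(rows[-1] + margin, h - 1)
    c0, c1 = max(cols[0] - margin, 0), min(cols[-1] + margin, w - 1)
    for r in range(r0, r1 + 1):
        for c in range(c0, c1 + 1):
            out[r][c] = 1
    return out
```

By construction the coarse box always contains every fine-mask pixel, so it over-covers the nodule but never misses it, which matches the description of the coarse-grained annotation above.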

The preprocessed LUNA16 dataset is randomly divided into three non-overlapping sets in a 5 : 3 : 2 ratio. The first set comprises lung nodule image data \(X_{t}\) and the corresponding coarse-grained annotation images \(SL_{t}\), used to train the Teacher Annotator. The second set contains lung nodule image data \(X_{s}\), for which the Teacher Annotator generates lung nodule annotations \(PL_{s}\); both \(X_{s}\) and \(PL_{s}\) are used to train the Student Segmentor. The third set, \(X_{test}\), together with its corresponding fine-grained annotation images \(FL_{test}\), is reserved for testing the model’s performance.
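A reproducible 5 : 3 : 2 split can be sketched with a seeded shuffle. The seed and the choice to send the rounding remainder to the test set are assumptions; the function splits whatever identifiers it receives, so passing patient IDs rather than crop IDs keeps all crops from one patient in the same subset.

```python
import random
from typing import List, Sequence, Tuple


def split_532(ids: Sequence[str], seed: int = 0) -> Tuple[List[str], List[str], List[str]]:
    """Shuffle ids with a fixed seed and split them 5:3:2 into teacher / student / test sets.
    Any remainder from integer division ends up in the test set."""
    shuffled = list(ids)
    random.Random(seed).shuffle(shuffled)
    n = len(shuffled)
    n_teacher = n * 5 // 10
    n_student = n * 3 // 10
    teacher = shuffled[:n_teacher]                         # X_t with coarse labels SL_t
    student = shuffled[n_teacher:n_teacher + n_student]    # X_s, labelled by the teacher
    test = shuffled[n_teacher + n_student:]                # X_test with fine labels FL_test
    return teacher, student, test
```

Using a dedicated `random.Random` instance keeps the split independent of any other use of the global random state.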

We invite several clinicians with extensive experience in diagnosing pulmonary nodules to label the CT images of patients in the LC183 dataset; the labelling process is the same as that described above for the LUNA16 dataset. For example, Fig. 6 shows the images of one lung nodule in the LC183 dataset. The clinicians use Slicer 5.2.1 for labelling.

Implementation details

We implement our network in PyTorch 1.10 and train it on an NVIDIA GeForce RTX 3090 for 150 epochs to ensure that the loss function converges. We optimize the network with the Adam optimizer at a learning rate of \(10^{-5}\), which, based on previous experience with similar tasks, balances training speed against the granularity of optimization. If the validation loss does not improve within 10 epochs, we stop training early to avoid overfitting. During testing, we evaluate network performance with pixel accuracy, Dice coefficient, sensitivity, specificity, and Hausdorff distance2,31.
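The five evaluation metrics can be sketched for a pair of 2D binary masks as below. The conventions for empty masks (ratios with an empty denominator reported as 1.0, Hausdorff distance reported as infinity) are assumptions, since conventions vary between implementations; the Hausdorff distance is computed by brute force in pixel units and would be multiplied by the pixel spacing to obtain millimetres.

```python
import math
from typing import Dict, List, Sequence, Tuple

Mask = List[List[int]]  # 2D binary mask, 1 = nodule


def confusion(pred: Mask, gt: Mask) -> Tuple[int, int, int, int]:
    """Count TP, FP, FN, TN pixels between a predicted and a reference mask."""
    tp = fp = fn = tn = 0
    for row_p, row_g in zip(pred, gt):
        for p, g in zip(row_p, row_g):
            if p and g:
                tp += 1
            elif p:
                fp += 1
            elif g:
                fn += 1
            else:
                tn += 1
    return tp, fp, fn, tn


def overlap_metrics(pred: Mask, gt: Mask) -> Dict[str, float]:
    tp, fp, fn, tn = confusion(pred, gt)

    def ratio(num: int, den: int) -> float:
        return num / den if den else 1.0  # assumed convention for empty denominators

    return {
        "pixel_accuracy": ratio(tp + tn, tp + fp + fn + tn),
        "dice": ratio(2 * tp, 2 * tp + fp + fn),
        "sensitivity": ratio(tp, tp + fn),   # recall on nodule pixels
        "specificity": ratio(tn, tn + fp),   # recall on background pixels
    }


def hausdorff(pred: Mask, gt: Mask) -> float:
    """Symmetric Hausdorff distance (pixels) between the two foreground point sets."""
    a = [(r, c) for r, row in enumerate(pred) for c, v in enumerate(row) if v]
    b = [(r, c) for r, row in enumerate(gt) for c, v in enumerate(row) if v]
    if not a or not b:
        return math.inf  # undefined when either mask is empty

    def directed(x: Sequence[Tuple[int, int]], y: Sequence[Tuple[int, int]]) -> float:
        return max(min(math.dist(p, q) for q in y) for p in x)

    return max(directed(a, b), directed(b, a))
```

Dice, sensitivity, and specificity measure pixel overlap, while the Hausdorff distance captures the worst-case boundary error, so a prediction can score well on the former and still be penalized by an isolated false-positive blob.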

Results

In this section, we first briefly describe the evaluation metrics used in the experiments. We then conduct ablation experiments to examine how different configurations affect the model’s performance. Next, we investigate how changing the Teacher Annotator affects the Student Segmentor’s lung nodule segmentation. Finally, we compare our proposed SSLKD-UNet model with other competitive lung nodule segmentation models and analyze the reasons for the differences.

Influence of different teacher annotators

In the real world, teachers often need a broader knowledge base than their students, or play a guiding role in their learning. UNet has proven effective and efficient in medical image segmentation, including lung nodule segmentation. We therefore choose UNet as the baseline Teacher Annotator, along with the variants ResUNet22, AttUNet23, SwinUNet24, and UTransformer25, to investigate and compare how different Teacher Annotator models affect the segmentation performance of the Student Segmentor.

Table 1 presents the segmentation performance of the SSLKD-UNet model under different Teacher Annotators on the LUNA16 dataset. Table 1 shows that SSLKD-UNet performs relatively well when the Teacher Annotator is UNet, ResUNet, or UTransformer; with UNet as the Teacher Annotator, most of its indicators are relatively high. When the Teacher Annotator is AttUNet or SwinUNet, however, SSLKD-UNet performs relatively poorly across the metrics. We attribute this outcome to the relative increase in parameter count among the UNet, AttUNet, and ResUNet models serving as Teacher Annotators. A model’s parameter count is typically associated with its representational and learning capacity: more parameters often allow a model to learn features and patterns in finer detail and to fit the training data more closely. Although more parameters can improve performance by adapting to complex data distributions, when the Teacher Annotator’s training data are limited, an excess of parameters can leave the model inadequately trained. The teacher then learns insufficiently from the data and generates inaccurate lung nodule annotations for SSLKD-UNet to learn from, which ultimately degrades SSLKD-UNet’s performance.
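To make the parameter-count argument concrete, the sketch below counts the weights of a single UNet-style double-convolution block (two \(3\times 3\) convolutions with bias). The channel widths are hypothetical and chosen only for illustration; they are not the configurations of the Teacher Annotators in Table 1.

```python
def conv2d_params(c_in: int, c_out: int, kernel: int = 3, bias: bool = True) -> int:
    """Learnable parameters of one 2D convolution: k*k*c_in*c_out weights (+ c_out biases)."""
    return kernel * kernel * c_in * c_out + (c_out if bias else 0)


def double_conv_params(c_in: int, c_out: int) -> int:
    """Two stacked 3x3 convolutions, c_in -> c_out -> c_out, as in a UNet stage."""
    return conv2d_params(c_in, c_out) + conv2d_params(c_out, c_out)


first_stage = double_conv_params(1, 64)     # 37,568 parameters
second_stage = double_conv_params(64, 128)  # 221,440 parameters
```

Because the weight count scales with the product of input and output channels, doubling a network’s width roughly quadruples the parameters of each stage, which is why wider or attention-augmented teachers need more data to train adequately.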

Table 2 shows the performance of SSLKD-UNet under various Teacher Annotators on the LC183 dataset. The Teacher entries denote the models used as Teacher Annotators, and bold marks the most favorable result for each indicator.

Table 2 shows that SSLKD-UNet performs well when the Teacher Annotator is either UNet or ResUNet. With ResUNet as the Teacher Annotator, SSLKD-UNet achieves the highest Dice coefficient and sensitivity among all models considered; with UNet as the Teacher Annotator, it achieves the best accuracy and specificity. However, the performance of SSLKD-UNet drops notably when the Teacher Annotator is SwinUNet or AttUNet. This discrepancy might be attributed to differences in the architectures and complexities of the Teacher models.

The segmentation performance of the SSLKD-UNet model under different Teacher Annotators on the LUNA16 dataset, where SwinUNet, AttUNet, ResUNet, UTransformer, and UNet are the names of the Teacher Annotator models used in the corresponding columns. GT denotes the fine-grained annotated images corresponding to the lung nodule images.

The segmentation performance of the SSLKD-UNet model under different Teacher Annotators on the LC183 dataset, where SwinUNet, AttUNet, ResUNet, UTransformer, and UNet are the names of the Teacher Annotator models used in the corresponding columns. GT denotes the fine-grained annotated images corresponding to the lung nodule images.

The Transformer-based Teacher model SwinUNet has a relatively large number of parameters, which makes it difficult to train sufficiently on our experimental dataset. As a result, SwinUNet is not accurate enough as a teacher in recognizing lung nodules and provides misleading guidance during the subsequent training of SSLKD-UNet. By contrast, the Transformer-based UTransformer has a small number of parameters and can exploit the advantages of both Transformer and CNN; it can therefore be trained sufficiently on the same dataset and generates guidance that helps SSLKD-UNet learn lung nodule features.

Figure 7 illustrates the segmentation of lung nodules by SSLKD-UNet under different Teacher Annotators on the LUNA16 dataset, and Figure 8 shows the same for the LC183 dataset.

Ablation experiment

Table 3 shows that on the LUNA16 dataset, both removing the Swin Transformer layer from the model and omitting the teacher-student training strategy during training decrease model performance. This supports the effectiveness of our proposed training strategy and of the SSLKD-UNet design for detecting lung nodules.

As Table 4 shows, the ablation experiments on the LC183 dataset likewise demonstrate that both removing the Swin Transformer layer from the model and omitting the teacher-student training strategy during training decrease model performance. This further supports the effectiveness of our proposed training strategy and of the SSLKD-UNet design for detecting lung nodules.

Performance of different student segmentors

To assess how competitive our proposed model, SSLKD-UNet, is for lung nodule segmentation, we compare its performance side by side with that of other models, such as UNet, ResUNet, and AttUNet, while keeping the Teacher Annotator fixed.

The segmentation performance of different Student Segmentors under the same Teacher Annotator on the LUNA16 dataset. UTransformer, AttUNet, ResUNet, SwinULCNet, and SSLKD-UNet are the names of the Student Segmentor models used in the corresponding columns. GT denotes the fine-grained annotated images corresponding to the lung nodule images.

The segmentation performance of different Student Segmentors under the same Teacher Annotator on the LC183 dataset. UTransformer, AttUNet, ResUNet, SwinULCNet, and SSLKD-UNet are the names of the Student Segmentor models used in the corresponding columns. GT denotes the fine-grained annotated images corresponding to the lung nodule images.

With the same Teacher Annotator, different Student Segmentors perform differently in lung nodule segmentation. Table 5 shows that SSLKD-UNet performs relatively well across the metrics. We attribute this to the observation that, among the Student Segmentors trained on annotations generated by the same Teacher Annotator (SSLKD-UNet, AttUNet, ResUNet, SwinUNet, and UTransformer), SSLKD-UNet shows the strongest learning and correction capabilities. It not only learns the lung nodule features provided by the Teacher Annotator but can also, to some extent, correct imprecise information supplied by the Teacher Annotator. For instance, when the Teacher Annotator wrongly annotates regions around some lung nodules as nodule, SSLKD-UNet can learn to identify and correct such areas.

Table 6 shows a consistent pattern: under the same Teacher Annotator, the Student Segmentors again differ in lung nodule segmentation performance, and SSLKD-UNet demonstrates superior performance across the metrics. This further suggests that, when trained on annotations generated by the Teacher Annotator, SSLKD-UNet can both learn the nodule features these annotations provide and partly rectify their errors, such as surrounding regions erroneously annotated as nodules.

Figures 9 and 10 illustrate the segmentation results of SSLKD-UNet and the other models under the same Teacher Annotator on the LUNA16 and LC183 datasets, respectively.

Discussion

Relative to existing work, our results indicate that semi-supervised learning and knowledge distillation can substantially benefit lung nodule segmentation. Conventional approaches are limited by the high cost and labor of acquiring large volumes of finely annotated medical images. By exploiting coarse annotations through a teacher-student framework, SSLKD-UNet reduces this dependence and improves segmentation accuracy, as shown by higher Dice coefficients and lower Hausdorff distances than models trained without this strategy. This is consistent with a broader trend in deep learning for medical imaging toward training paradigms that make better use of the available data.
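The Dice coefficient and Hausdorff distance referred to above are standard segmentation metrics: Dice = 2|P ∩ G| / (|P| + |G|) measures the overlap between a predicted mask P and the ground truth G, while the Hausdorff distance is the largest distance from a point in one mask to the nearest point in the other. This section does not state how the paper computes them, so the NumPy sketch below is only an illustration under stated assumptions: 2-D binary masks, distances in pixels (no voxel spacing), and a Hausdorff distance taken over all foreground pixels rather than over extracted boundaries or as the 95th-percentile variant (HD95) that some studies report.

```python
import numpy as np


def dice_coefficient(pred: np.ndarray, target: np.ndarray, eps: float = 1e-7) -> float:
    """Dice = 2|P ∩ G| / (|P| + |G|) for binary masks P (prediction) and G (ground truth)."""
    pred = pred.astype(bool)
    target = target.astype(bool)
    intersection = np.logical_and(pred, target).sum()
    # eps avoids division by zero when both masks are empty.
    return float((2.0 * intersection + eps) / (pred.sum() + target.sum() + eps))


def hausdorff_distance(pred: np.ndarray, target: np.ndarray) -> float:
    """Symmetric Hausdorff distance (in pixels) between the foreground pixels of two masks.

    Assumption: brute-force O(|P| * |G|) version over all foreground pixels,
    adequate for small nodule crops; no physical voxel spacing is applied.
    """
    p = np.argwhere(pred.astype(bool)).astype(float)
    g = np.argwhere(target.astype(bool)).astype(float)
    if len(p) == 0 or len(g) == 0:
        return float("inf")  # undefined when either mask is empty
    # Pairwise Euclidean distances between all foreground pixel coordinates.
    d = np.linalg.norm(p[:, None, :] - g[None, :, :], axis=-1)
    # Directed distances: the farthest point of each set from its nearest neighbour in the other.
    return float(max(d.min(axis=1).max(), d.min(axis=0).max()))
```

For two 3×3 squares offset by one column on an 8×8 grid, the sketch gives a Dice of 2/3 (6 shared pixels out of 9 + 9) and a Hausdorff distance of 1 pixel.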

Our findings have practical implications. In clinical settings, accurately segmenting lung nodules from CT scans with minimal reliance on detailed annotations could speed up lung cancer diagnosis, potentially enabling earlier intervention and better patient outcomes. At the same time, possible bias or errors in the annotations must be considered: inaccurate nodule annotations could lead to misdiagnosis or missed cases and affect treatment plans and outcomes, so the annotations used for training and validation must be reliable if segmentation models are to support clinical decision-making. Theoretically, our work adds to the understanding of how hybrid architectures that combine the strengths of CNNs and Transformers can be optimized for specific tasks. It also provides a framework for future research on integrating larger volumes of unlabeled data and on developing more sophisticated models that further improve the precision and efficiency of medical image analysis.

Despite these promising results, SSLKD-UNet has several limitations and computational costs that warrant discussion. First, although the model performs robustly with coarse annotations, the quality of these annotations can still significantly affect the final segmentation accuracy. When the coarse annotations are particularly imprecise, the teacher model may propagate errors to the student model, limiting the overall performance gain. Second, the computational complexity of the model is high because it integrates both CNN and Transformer components. The Swin Transformer layer in particular, although it restricts self-attention to local shifted windows, still demands substantial computational resources, which may pose challenges for real-time applications or deployment in resource-constrained environments. Third, the training process, especially the knowledge distillation phase, requires careful tuning and can be time-consuming, which may hinder rapid iteration and model updates in a clinical workflow. Future work will focus on optimizing the model architecture to reduce computational overhead while maintaining or improving performance, and on exploring more efficient training strategies to make the model more practical in clinical settings.
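The cost of the Swin Transformer layer comes from self-attention. For reference, Swin Transformer limits attention to non-overlapping M×M windows and shifts the window grid by M/2 between consecutive blocks; the Swin Transformer paper gives the cost of global multi-head self-attention as 4hwC^2 + 2(hw)^2 C and of window attention as 4hwC^2 + 2M^2 hwC for an h×w map with C channels. The NumPy sketch below illustrates only the window partition, the cyclic shift, and the second (attention) term of each cost; it is not the SSLKD-UNet implementation, it omits the attention mask that Swin applies after the shift as well as the attention computation itself, and the function names are illustrative.

```python
from typing import Optional

import numpy as np


def window_partition(x: np.ndarray, window: int) -> np.ndarray:
    """Split an (H, W, C) feature map into non-overlapping (window x window) patches.

    Returns shape (num_windows, window * window, C); self-attention would then be
    computed independently inside each window.
    """
    h, w, c = x.shape
    assert h % window == 0 and w % window == 0, "H and W must be divisible by the window size"
    x = x.reshape(h // window, window, w // window, window, c)
    x = x.transpose(0, 2, 1, 3, 4)  # (num_rows, num_cols, window, window, C)
    return x.reshape(-1, window * window, c)


def shifted_window_partition(x: np.ndarray, window: int) -> np.ndarray:
    """Cyclically shift the map by window // 2 before partitioning, so that the next
    block's windows straddle the previous block's window borders."""
    shift = window // 2
    shifted = np.roll(x, shift=(-shift, -shift), axis=(0, 1))
    return window_partition(shifted, window)


def attention_cost(h: int, w: int, c: int, window: Optional[int] = None) -> int:
    """Multiply count of the QK^T and AV products only (the 4hwC^2 projection term is ignored)."""
    n = h * w
    if window is None:
        return 2 * n * n * c  # global attention: quadratic in the number of tokens
    return 2 * n * window * window * c  # window attention: linear in the number of tokens
```

On a 64×64 map with M = 7, the attention term of window attention is roughly 4096 / 49 ≈ 84 times smaller than that of global attention, which is why Swin scales to CT-sized inputs; the remaining projection and MLP costs still make the layer comparatively expensive.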

Conclusion

In this study, we designed a novel lung nodule segmentation network, SSLKD-UNet, based on a hybrid CNN-Transformer architecture, with the aim of detecting lung nodules precisely and thereby enabling early screening for lung cancer. To leverage the large amount of lung cancer CT image data that lacks lung nodule annotations, we devised a teacher-student training process: a Teacher Annotator is first trained to generate lung nodule annotations for lung cancer CT images, and a Student Segmentor is then trained on these annotations to improve its lung nodule segmentation accuracy. Inference on a single image takes on the order of milliseconds. After preprocessing the LUNA16 and LC183 datasets to obtain coarse-grained and fine-grained lung nodule annotation images, we conducted experiments that validate the performance of SSLKD-UNet on lung nodule segmentation and demonstrate the effectiveness of the proposed training process. Although the model performs well on these datasets, further validation on additional datasets is needed to establish its applicability in real-world clinical settings. In future work, we plan to further explore how to incorporate large amounts of unannotated data into the training of lung nodule segmentation networks and to design improved lung nodule segmentation networks.
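The data flow of the teacher-student process described above can be sketched as follows. This is a hedged illustration, not the authors' code: it assumes that the Teacher Annotator outputs a per-pixel nodule probability map, that pseudo-labels are obtained by thresholding at 0.5, and that the Student Segmentor is trained with a soft Dice loss on the combined pseudo-labelled and finely annotated data. None of these details is stated in this section, the function names are hypothetical, and the actual model training (for example in a deep learning framework) is left out.

```python
from typing import Callable, List, Sequence, Tuple

import numpy as np

# Hypothetical type aliases for this sketch: a 2-D CT slice and its binary nodule mask.
Image = np.ndarray
Mask = np.ndarray


def generate_pseudo_labels(
    teacher: Callable[[Image], np.ndarray],
    images: Sequence[Image],
    threshold: float = 0.5,
) -> List[Mask]:
    """Run the (already trained) teacher on unannotated slices and binarise its probability maps."""
    return [(teacher(img) >= threshold).astype(np.uint8) for img in images]


def build_student_training_set(
    teacher: Callable[[Image], np.ndarray],
    unannotated: Sequence[Image],
    annotated: Sequence[Tuple[Image, Mask]],
    threshold: float = 0.5,
) -> List[Tuple[Image, Mask]]:
    """Pair each unannotated slice with its teacher pseudo-mask, then append the annotated pairs."""
    pseudo = generate_pseudo_labels(teacher, unannotated, threshold)
    return list(zip(unannotated, pseudo)) + list(annotated)


def soft_dice_loss(prob: np.ndarray, target: np.ndarray, eps: float = 1e-6) -> float:
    """Assumed student objective: 1 - soft Dice between a probability map and a (pseudo-)mask."""
    intersection = float((prob * target).sum())
    return 1.0 - (2.0 * intersection + eps) / (float(prob.sum() + target.sum()) + eps)
```

For example, with a toy teacher that simply returns pixel intensities as probabilities, the slice [[0.9, 0.2], [0.6, 0.1]] receives the pseudo-mask [[1, 0], [1, 0]] at the default threshold.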

Data availability

Data supporting the findings of the current study are available from the corresponding author on reasonable request.

References

Siegel, R. L. et al. Colorectal cancer statistics, 2020. CA Cancer J. Clin. 70, 145–164 (2020).

Mederos, N., Friedlaender, A., Peters, S. & Addeo, A. Gender-specific aspects of epidemiology, molecular genetics and outcome: lung cancer. ESMO Open 5, e000796 (2020).

Zeng, H. et al. Cancer survival in China, 2003–2005: A population-based study. Int. J. Cancer 136, 1921–1930 (2015).

Rendon-Gonzalez, E. & Ponomaryov, V. Automatic lung nodule segmentation and classification in CT images based on SVM. In 2016 9th International Kharkiv Symposium on Physics and Engineering of Microwaves, Millimeter and Submillimeter Waves (MSMW) 1–4 (IEEE, 2016).

Hosseini, S. H., Monsefi, R. & Shadroo, S. Deep learning applications for lung cancer diagnosis: A systematic review. Multimed. Tools Appl. 1–31 (2023).

Illa, P. K., Kumar, T. S. & Hussainy, F. S. A. Deep learning methods for lung cancer nodule classification: A survey. J. Mob. Multimed. 18, 421–450 (2021).

Monkam, P. et al. Detection and classification of pulmonary nodules using convolutional neural networks: A survey. IEEE Access 7, 78075–78091 (2019).

Zhang, J. et al. Detection-guided deep learning-based model with spatial regularization for lung nodule segmentation (2024). arXiv:2410.20154.

Winkels, M. & Cohen, T. S. Pulmonary nodule detection in CT scans with equivariant CNNs. Med. Image Anal. 55, 15–26 (2019).

Zhang, G. et al. Automatic nodule detection for lung cancer in CT images: A review. Comput. Biol. Med. 103, 287–300 (2018).

Cao, W., Wu, R., Cao, G. & He, Z. A comprehensive review of computer-aided diagnosis of pulmonary nodules based on computed tomography scans. IEEE Access 8, 154007–154023 (2020).

Ghosal, P. et al. Autcd-net: An automated framework for efficient COVID-19 diagnosis on computed tomography scans. In Machine Learning in Information and Communication Technology (eds Deva Sarma, H. K. et al.) 109–116 (Springer Nature Singapore, 2023).

Xie, Y. et al. Knowledge-based collaborative deep learning for benign-malignant lung nodule classification on chest CT. IEEE Trans. Med. Imaging 38, 991–1004. https://doi.org/10.1109/TMI.2018.2876510 (2019).

Pal, D., Meena, T., Mahapaatra, D. & Roy, S. Sac uw-net: A self-attention-based network for multimodal medical image segmentation. In 2024 IEEE International Symposium on Biomedical Imaging (ISBI) 1–5. https://doi.org/10.1109/ISBI56570.2024.10635611 (2024).

Halder, A. & Dey, D. Atrous convolution aided integrated framework for lung nodule segmentation and classification. Biomed. Signal Process. Control 82, 104527. https://doi.org/10.1016/j.bspc.2022.104527 (2023).

Akila Agnes, S., Arun Solomon, A. & Karthick, K. Wavelet U-Net++ for accurate lung nodule segmentation in CT scans: Improving early detection and diagnosis of lung cancer. Biomed. Signal Process. Control 87, 105509. https://doi.org/10.1016/j.bspc.2023.105509 (2024).

Pal, D., Reddy, P. B. & Roy, S. Attention UW-Net: A fully connected model for automatic segmentation and annotation of chest X-ray. Comput. Biol. Med. 150, 106083. https://doi.org/10.1016/j.compbiomed.2022.106083 (2022).

Umirzakova, S., Abdullaev, M., Mardieva, S., Latipova, N. & Muksimova, S. Simplified knowledge distillation for deep neural networks bridging the performance gap with a novel teacher-student architecture. Electronics https://doi.org/10.3390/electronics13224530 (2024).

Kim, S. et al. Federated learning with knowledge distillation for multi-organ segmentation with partially labeled datasets. Med. Image Anal. 95, 103156. https://doi.org/10.1016/j.media.2024.103156 (2024).

Gui, S. et al. MT4MTL-KD: A multi-teacher knowledge distillation framework for triplet recognition. IEEE Trans. Med. Imaging 43, 1628–1639. https://doi.org/10.1109/TMI.2023.3345736 (2024).

Agarwal, R., Ghosal, P., Sadhu, A. K., Murmu, N. & Nandi, D. Multi-scale dual-channel feature embedding decoder for biomedical image segmentation. Comput. Methods Programs Biomed. 257, 108464. https://doi.org/10.1016/j.cmpb.2024.108464 (2024).

Ibtehaz, N. & Rahman, M. S. MultiResUNet: Rethinking the U-Net architecture for multimodal biomedical image segmentation. Neural Netw. 121, 74–87. https://doi.org/10.1016/j.neunet.2019.08.025 (2020).

Carmo, D., Rittner, L. & de Alencar Lotufo, R. Multiattunet: Brain tumor segmentation and survival multitasking. In BrainLes@MICCAI (2020).

Cao, H. et al. Swin-Unet: Unet-like pure transformer for medical image segmentation. In Proceedings of the European Conference on Computer Vision Workshops (ECCVW) (2022).

Petit, O. et al. U-Net transformer: Self and cross attention for medical image segmentation. In Machine Learning in Medical Imaging (eds Lian, C. et al.) 267–276 (Springer International Publishing, 2021).

Setio, A. A. A. et al. Validation, comparison, and combination of algorithms for automatic detection of pulmonary nodules in computed tomography images: The LUNA16 challenge. Elsevier (2017).

Armato, S. G. et al. The Lung Image Database Consortium (LIDC) and Image Database Resource Initiative (IDRI): A completed reference database of lung nodules on CT scans. Acad. Radiol. 14, 1455–1463 (2007).

Eva, et al. Automatic lung segmentation from thoracic computed tomography scans using a hybrid approach with error detection. Med. Phys. 36, 2934–2947 (2009).

Zhao, L., Shao, Y., Jia, C. & Ma, J. Time-series lung cancer CT dataset. In 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM), 3914–3915. https://doi.org/10.1109/BIBM55620.2022.9995198 (2022).

Manivannan, D., Manikandan, N. & Kavitha, M. Discovering lung cancer cell using machine learning. In 2022 International Conference on Data Science, Agents & Artificial Intelligence (ICDSAAI), vol. 01, 1–3. https://doi.org/10.1109/ICDSAAI55433.2022.10028961 (2022).

Al-Shabi, M., Shak, K. & Tan, M. ProCAN: Progressive growing channel attentive non-local network for lung nodule classification. Pattern Recognit. 122, 108309 (2022).

Acknowledgements

This work was supported by the Natural Science Foundation of Liaoning Province (No.2022-B5-243), the Dalian Science and Technology Innovation Fund (No.2023JJ12SN029) and the First Affiliated Hospital of Dalian Medical University.

Author information

Contributions

Conception and design of study (Wenjuan Liu, Min Feng, and Yanxia Li); Collection of data (Wenjuan Liu, Limin Zhang, and Xiangrui Li); Analysis and interpretation of data (Yanxia Li, Min Feng, and Wenjuan Liu); Writing the article (Wenjuan Liu, Min Feng, and Yanxia Li). All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Liu, W., Zhang, L., Li, X. et al. A semisupervised knowledge distillation model for lung nodule segmentation. Sci Rep 15, 10562 (2025). https://doi.org/10.1038/s41598-025-94132-9

DOI: https://doi.org/10.1038/s41598-025-94132-9

This article is cited by

GLANCE: continuous global-local exchange with consensus fusion for robust nodule segmentation

npj Digital Medicine (2025)