Abstract

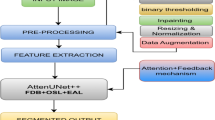

Skin lesion segmentation presents significant challenges due to the high variability in lesion size, shape, color, and texture and the presence of artifacts like hair, shadows, and reflections, which complicate accurate boundary delineation. To address these challenges, we proposed ARCUNet, a semantic segmentation model including residual convolutions and attention techniques to improve segmentation accuracy to address the challenges of skin lesion segmentation, By incorporating residual convolutions and attention mechanisms, ARCUNet enhances feature learning, stabilizes training, and sharpens focus on lesion boundaries for improved segmentation accuracy. Residual convolutions ensure better gradient flow and faster convergence, while attention mechanisms refine feature selection by emphasizing critical lesion regions and suppressing irrelevant details. The model was tested on the ISIC 2016, 2017, and 2018 datasets with outstanding segmentation results with accuracy measures of 98.12%, 96.45%, and 98.19%, Dice measures of 94.68%, 91.21%, and 95.34%, and Jaccard measures of 91.14%, 88.33%, and 93.53%, respectively. These findings signify the ability of ARCUNet to segment skin lesions accurately and thus as an effective tool for computerized skin disease diagnosis.

Similar content being viewed by others

Introduction

Skin lesions are any abnormal alteration in the texture or aspect of the skin. These can appear as sores, lumps, growths, or changes in skin color and texture1. Skin lesions can be benign, such as moles and warts, or malignant, such as skin cancer. Healthcare professionals typically commence the detection of skin lesions by conducting a visual examination, in which they seek out any irregularities or changes in existing skin patches or new growths2. A variety of diagnostic techniques, such as dermoscopy, may be employed by dermatologists to conduct a more thorough examination of the lesion3. The most prevalent form of cancer is skin cancer, with an estimated 2–3 million non-melanoma skin malignancies and 132,000 melanoma cases occurring annually on a global scale4. It is expected that several additional skin disorders also pose a major burden on the community. Around two to three percent of the world’s population, or more than 125 million people, are affected by psoriasis5. The kind of eczema known as atopic dermatitis affects as much as twenty percent of teenagers and three percent of adults all over the world. There are around fifty million people in the United States who are plagued by acne alone each year6. Acne is the most common skin disorder in the country.

The facts show that skin illnesses affect all demographics and regions of the world, emphasizing the need for early detection, diagnosis, and treatment. This method requires a special magnifying lens7. Skin biopsies (removal of a tiny sample of the lesion and microscopical inspection to determine malignancy) can provide a more complete investigation. Severity, origin, and kind determine skin lesion therapy8. Benign lesions may not need treatment until they hurt or seem bad. Benign lesions are treated with cryotherapy, topical medicines, or minor surgery9. For severe cases, skin cancer treatment may need for surgical excision, radiation treatment, chemotherapy, or immunotherapy—more invasive approaches10. To guarantee treatment success and identify recurrences, regular follow-up and monitoring are essential. patient should direct sunlight, apply sunscreen, wear protective clothing to avoid skin cancer11.

Image segmentation is necessary for the identification of skin lesions as it helps to separate the boundaries and characteristics of lesions from the tissue around them, therefore converting complicated visual data into understandable information12. Correct segmentation in dermatology is essential for accurate lesion isolation which is required for the diagnosis of many skin illnesses including melanoma and other skin cancers. Medical professionals can evaluate the degree and course of the disease by using segmented photographs, therefore obtaining a complete awareness of the size, form, and position of skin lesions13. Moreover, perfect segmentation helps identify lesion characteristics, therefore allowing better treatment planning and monitoring throughout the course of treatment. More efficient patient management and greater diagnostic accuracy follow from increased clarity and resolution of lesion borders arising from advanced segmentation algorithms, such as those used by UNet14. Since image segmentation significantly enhances the diagnosis, identification, and treatment of skin lesions15, overall, it is a vital approach in dermatological imaging.

Because of its specialized architecture which is especially meant for exact pixel-level classification—the UNet segmentation model is quite successful in segmenting skin lesions. Originally intended for biological image segmentation, UNet is especially sensitive in precisely and detailed recognizing structures inside pictures16. Essential for the preservation and use of spatial information during segmentation, the symmetric encoder-decoder structure of the model is marked by skip connections17.

This paper used the ARCUNet to segment the skin lesion disease. The following are the significant contributions of the proposed research.

-

In this paper a semantic segmentation model ARCUNet is proposed which is integrated with residual convolutions and attention mechanism to improve the segmentation accuracy.

-

Residual convolutions are employed in UNet to improve gradient flow and feature learning, thereby improving training stability and model accuracy. Convergence is improved through skipping redundant layers, which is made possible by residual connections. Improved segmentation accuracy is the result of this, especially in deeper models, without compromising performance.

-

While rejecting pointless data at the same time, the UNet attention mechanism increases the model’s ability for detecting significant features and enhances segmentations emphasising on the most important traits. For complex or noisy images, it allows more specific segmentations, better boundary placements, and usually higher accuracy.

-

Residual convolutions and attention mechanisms play important roles in UNet architecture for segmentation. Residual convolutions improve gradient flow, let the network learn complex features without performance loss, hence enabling more stable and effective training. With the application of the attention mechanism, the model refines its attention on the certain areas of the image, learning to emphasize the most relevant parts of the image while dropping irrelevant information. This pairing has the effect of creating faster convergence, more accurate boundary definition, and, generally, higher segmentation accuracy, particularly in difficult or noisy data.

-

Three distinct datasets—ISIC 2016, 2017, and 2018—are used to evaluate the proposed model ARCUNet, which is based on skin lesion illness. Performance metrics including accuracy, dice coefficient, and Jaccard are used to assess the proposed model.

The primary challenges in skin lesion segmentation are variability in lesion appearance and the occurrence of artefacts. Skin lesions vary greatly in size, shape, colour, and texture, making correct segmentation challenging. Moreover hair, shadows, and reflections often interfere with lesion boundaries, reducing segmentation precision. The Proposed ARCUNet model addresses these challenges by implanting Residual convolution and Attention Mechanism. Residual Convolutions improve gradient flow, allowing deeper feature learning and ensuring stable training while preserving fine details. Attention Mechanism focuses on important lesion regions while suppressing irrelevant background noise, improving boundary precision and segmentation accuracy.

The paper has been arranged as follows: “Literature survey” Section addresses the literature review for skin lesion disease segmentation. “Material and Methods” Section addresses the used input datasets of skin lesions. “Result and Discussion” Section mostly addresses the suggested ARCUNet architecture. “State of Art Analysis” Section deals with the outcome and analysis of the suggested model to assess the model’s performance. “Conclusion” Section is state of the art analysis; Sect. 7 marks the end of the paper.

Literature survey

Active study on the segmentation of skin lesions has led to the proposal of several approaches to increase the accuracy and resilience of segmentation models. By providing an overview of the datasets used, the techniques applied, and significant performance measures including the Jaccard index and accuracy, this section evaluates the recent developments in this area.

The abbreviation for High-order Spatial Interaction UNet is MHorUNet. Wu et al.1 designed their model specifically for lesion segmentation tasks. The developed model achieved Jaccard index of 78% alongside accuracy of 94% while utilizing ISIC 2018 data for evaluation. MHORUnet uses its high-order spatial interaction mechanism to identify complex spatial relationships during segmentation processes. Wu et al.2 created Ultralight VM-UNet through the application of parallel vision mamba techniques to decrease model parameter numbers significantly. The lightweight model used ISIC dataset from 2017 as its basis for validation producing a Jaccard Index score of 71% and an accuracy level of 91%. When deployed on limited devices the low parameter count makes this model the most suitable option.

Ahamed et al.3 used attention guidance as well as the UNet model to produce significant improvements in skin lesion segmentation Test Time Augmentation (TTA). When the model processed PH2 data it achieved 92% accuracy measurement and 74% Jaccard index value. The model performance achieved significant enhancement through this method. The attention directing mechanism produced better final results during segmentation by focusing on optimal spots. The DSEUNet represents a compact version of the UNet which employs dynamic space aggregation for improved skin lesion segmentation according to Li et al.4. When the model processed ISIC 2018 dataset its Jaccard index measurement reached 73%. The accuracy rate produced by the model reached 99.99 percent during the testing phase. Dynamic space clustering allows the model to achieve better segmentation results by discovering suitable cluster patterns for lesions that vary in shape and size.

The researchers from Zhu et al.5 introduced a UNet architecture which segments skin lesions using Multi-Spatial-Shift MLP layers. The accuracy rating along with Jaccard index measurement reached 89% and 69% respectively for testing the model on the ISIC 2017 dataset. The model acquires knowledge of global contextual information and successfully detects tough lesions as a result of applying MLP layers. The study by Liu et al.6 made use of Triple UNet to detect areas which had been segmented from skin lesions throughout the examination process. The model processed the 2018 ISIC database to achieve 70% Jaccard index performance with a 90% accuracy level. The method places primary importance on improving rough surface segmentation.

The research by Choudhary et al.7 confirmed that cutaneous lesion segmentation achieved enhanced outcomes with UNet and VGG topologies. Their method combined both architecture types to reach 92% accuracy with 72% Jaccard index on the ISIC 2017 dataset. The hybrid technique provides more accurate extraction and segmentation of the features. Kaur et al.8 transformed skin lesion segmentation by creating an attention residual UNet framework which performs autonomous lesion segmentation in 2024 using an efficient encoder-decoder structure. The model achieved amazing results while processing the ISIC 2018 dataset with 93% accuracy and 74% Jaccard index. The attention residual mechanism improves model segmentation because it focuses its attention most strongly on locations with important lesions.

Zhao et al.9 put forth an improved U-shaped network for skin lesion separation in 2024. On the ISIC 2017 data, this network obtained a Jaccard score of 71% and an accuracy of 90%. Improved U-shaped network architecture helped the model to segregate lesions of various diameters and forms more naturally. The authors Liang et al.10 presented an improved variation of the UNet grounded on contour attention in order to efficiently segment skin lesions. Analysed with the ISIC database for 2018, the model’s Jaccard index was 72% and its accuracy was found to be 91%. Furthermore, the contour attention technique improves the model’s capacity to precisely segment lesion borders, therefore increasing segmentation accuracy generally.

For skin lesion segmentation, Öztürk et al.11 had already created the CNN, which was enhanced. Their model using the ISIC 2018 data attained a Jaccard index of 70% and a 90% accuracy rate. Changes in CNN design helped to enhance lesion segmentation; optimising convolutional layers was therefore essential for that improvement. Ahammed et al.12 presented a machine-learning based approach for the identification and categorisation of skin disorders. This approach makes advantage of the picture segmentation technique. This model achieved 85% accuracy along with a 63% Jaccard index when evaluated on their specialized dataset. The research showed the necessity to connect disease diagnosis through combining segmentation and classification techniques for complete disease analysis.

Anand et al. designed a modified UNet architecture to segment skin lesions13. The new design structure introduced skip connections while adding additional convolutional layers. The model obtained 92% accuracy along with 74% Jaccard index during its complete testing stage by using the ISIC 2018 dataset. The model gained improved capabilities in detecting small characteristics along lesion edges due to the implemented improvements. Zafar et al.14 created a CNN-based technique that accomplished segmentation of dermoscopic skin lesions in 2020. The researchers applied their model to ISIC 2017 data achieving an accuracy level of 89% while preserving 69% of the available points as per the Jaccard index results. CNNs helped extract other features that improved segmentation accuracy through their own independent mechanism.

Kaymak et al.15 studied skin lesion segmentation through the implementation of FCNs while comparing their results experimentally. Test results based on the ISIC 2017 database indicate that FCNs successfully recovered lesion contours along with skin patterns achieving Jaccard measures of 70% and 89%. Anand et al.16 developed a hybrid model which uses dermoscopy photos to segment and classify skin lesions through combining CNN with UNet. The model showed a 91% accuracy rate together with a 72% Jaccard index after testing with 2018 ISIC database information. The model achieved improved performance for segmentation and classification duties through unification of these two methodologies.

The researchers from Fu and Deng17 developed MASDF-Net which features selective and dynamic fusion that applies multi-attention codec networking abilities to enhance skin lesion segmentation. Through an advanced functionality the model performed feature extraction that yielded high accuracy rates within ISIC dataset segmentation tasks. The researchers of Sumithra et al.18 demonstrated how diagnostic skin lesion capabilities emerge from combining segmentation with classification. The method uses traditional machine learning principles for its operation but modern methods require advanced deep learning technology.

Researchers Lameski et al.19 used convolutional neural networks (CNNs) within deep learning to advance skin lesion boundary detection capabilities. Medical image segmentation developed its first deep learning capabilities through the research approach adopted by these specialists. The researchers from Goyal et al.20 developed automatic deep extreme cut for boundary segmentation of skin lesions. The system produced by this team tracked lesions autonomously yet showed inconsistent performance when applied to different datasets.

Ibtehaz and Rahman21 designed MultiResUNet which optimizes the U-Net architecture specifically for biomedical multimodal image segmentation tasks. The integration of residual connections into the model structure allowed better utilization of features to achieve higher accuracy levels while handling intricate medical images. The authors of Ronneberger and Fischer22 improved the training processes of classic U-Net architecture for skin lesion segmentation applications. These modifications solved the problems with small lesion visibility which made U-Net perform better for medical imaging work.

The authors Salih and Viriri23 introduced a technique for skin lesion segmentation that combined stochastic region merging with pixel-based Markov random field methods. To improve segmentation refinement particularly for irregular lesion contours the probabilistic approach was developed. Nazi and Abir24 developed a transfer learning method which applied U-Net alongside DCNN-SVM for the segmentation of skin lesions and detection of melanoma. The integrated system which comprised feature extraction and classification functions reached competitive accuracy levels in their studies.

The authors of InSiNet designed a deep convolutional network to detect and segment skin cancer lesions as described by Reis et al.25. The network achieved high performance across various ISIC datasets thus proving its capability to handle diverse lesion types. The lightweight skin cancer segmentation network LSCS-Net uses dense connected multi-rate atrous convolution according to Din et al.26. The approach delivered faster segmentation results through enhanced efficiency without compromising detection accuracy.

The research team of Rehman et al.27 built Attention Res-UNet which combined attention mechanism and residual features from U-Net with focal Tversky loss to boost lesion segmentation procedures. The model successfully managed avoidable class problems and enhanced its segmentation performance. Ruan et al.28 presented MALUNet as a multi-attention lightweight U-Net system built for fast skin lesion segmentation. This model delivered high computational performance without compromising segmentation accuracy making it ideal for real-time processing needs29,30.

Xu et al.31 introduced an Attentive GAN for highlight removal in grayscale images, demonstrating the effectiveness of attention mechanisms in enhancing image quality. Similarly, our proposed ARCUNet leverages residual convolutions and attention mechanisms to improve skin lesion segmentation by refining feature extraction and suppressing irrelevant regions. The integration of attention-based techniques in both works highlights their significance in medical image processing, ensuring improved accuracy and robustness in challenging imaging conditions32.

Table 1 offers a concise summary of the several approaches and their main contributions to the study of skin lesion segmentation.

Material and methods

This section describes detailed architecture of proposed ARCUNet model. A detailed description of and the three employed input datasets based on skin Lesion: ISIC 2016, 2017 and 2018.

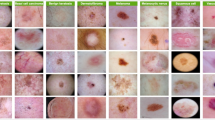

Input dataset

The research utilizes three different publicly accessible datasets including ISIC 2016, ISIC 2017, and ISIC 2018 which accumulate 1279, 3600, and 3700 dermoscopic images respectively. Included in this research are three datasets from the International Skin Imaging Collaboration (ISIC) challenge whose goal is to enhance melanoma detection capabilities. The collection of images came from different dermatology clinics and research centers to achieve diversity among lesions based on type and patient characteristics. The data was divided into training and validation (80%) and testing (10% + 12.5%) subsets according to a specific partitioning process. The three datasets follow different image distribution levels including 895 training images and 192 test images and 192 validation images for ISIC 2016 and 2520 training images alongside 540 test images and 540 validation images for ISIC 2017 and 2590 training images accompanied by 555 test images and 555 validation images for ISIC 2018. Dermatoscopic imaging technology provided standardized acquisition of the ISIC datasets through high-resolution RGB imaging of controlled pigmented skin lesions under standardized light conditions. The images display different lesion features in size, shape, color and texture which makes them optimal for deep learning segmentation models. The datasets contain expert dermatology annotations and lesion diagnoses that make up the metadata for reliable truth labels. Each yearly ISIC challenge involves growing complexities in segmentation tasks as ISIC 2016 uses a simple binary classification between melanoma and non-melanoma but ISIC 2017 added three classes then ISIC 2018 included seven classes to cover diverse skin conditions. Standardized evaluations may take place because datasets are publicly available which ensures that research can be replicated and state-of-the-art models can be properly compared. The current datasets primarily work with RGB dermoscopic images so future research should explore extending their capabilities to include work with non-dermatoscopic photography and multispectral imaging for clinical use. Figure 1a–c respectively show ISIC 2016, 2017, and 2018 dataset images (Table 2).

The model training performance alongside protection against overfitting can be achieved through data augmentation methods. Randomized image transformations that include transposition and flipping in both vertical and horizontal directions and random rotation are applied to these datasets.

The ISIC 2016 and 2017 and 2018 datasets became the selection because they provide diverse examples which are relevant to clinical practice and commonly used for evaluating skin lesion segmentation approaches. The datasets accurately represent real medical situations through their collection of different lesion measurements and color and texture attributes which suits robustness tests well. The scope of classification tasks changes from binary in 2016 to three classes in 2017 to seven classes in 2018 throughout the ISIC series of datasets. Standard evaluation and comparison against modern models becomes possible due to these datasets which are publicly available. The testing results on these datasets prove that ARCUNet shows high precision combined with versatile resilience across multiple medical imaging situations.

Proposed model

The proposed ARCUNet model enhances the segmentation by improving feature extraction, and spatial details, and concentrating on relevant regions. ARCUNet provides a robust architecture for precise segmentation, residual convolutions address gradient vanishing issues, and attention mechanisms refocus the model on important features. The performance of skin lesion segmentation is managed by ARCUNet through its combination of residual convolutions and attention mechanisms because it maintains continuous feature learning capabilities while elevating regional focus. Skip connections within residual convolutional blocks allow deep feature extraction in the encoder because they both protect training stability by reducing gradient vanishing. Proper segmentation accuracy results from the attention gates that operate between encoder and decoder sections to block unnecessary background sounds and better focus on vital lesion regions. The processor uses spatially-consistent operations to upsampling features and adds residual convolutional layers into its architecture for progressive refinement of output boundaries. The integrated approach enables superior gradient flow with optimized feature selection for boundary accuracy which results in improved segmentation performance according to higher scores of Dice coefficient and Jaccard index. Figure 2 shows the architecture of the proposed model.

The UNet encoder in the ARCUNet architecture is a crucial component designed to progressively extract and condense features from the input image while reducing its spatial dimensions. The study30 highlights the effectiveness of U-Net in capturing spatial features while preserving fine details crucial for accurate segmentation. The proposed encoder consists of four sequential blocks, each starting with a residual convolutional block. This block includes two 3 × 3 convolutional layers followed by batch normalization and ReLU activation. The residual connections within each block involve a 1 × 1 convolutional layer that is added back to the output of the convolutional layers, facilitating better gradient flow and learning. Every encoder block ends with a 2 × 2 max-pooling layer and residual convolutional block, which downscales the feature maps by half and lets the network pick more abstract features as it advances. Working together, each encoder—which consists of nine layers—three convolutional layers, three batch normalizing layers, two activation layers, and one max-pooling operation—compresses the image into a rich feature representation for later bottleneck and decoder stage processing.

Reconstructing the spatial dimensions of the feature maps while including high-level information acquired by the encoder is accomplished in the ARCUNet architecture using the upsampling path. Four consecutive decoder blocks make up it; they mimic the structure of the encoder but in reverse. Starting a transposed convolutional layer that upsamples the feature maps to double their spatial dimensions, each decoder block aligning the upsampled maps with corresponding features from the encoder via convolutional layers and a sigmoid activation, the attention block within the decoder refines the features. Preserving spatial details, this enhanced feature map is subsequently concatenated with the matching feature map from the encoder. To further hone the features, the concatenated map passes through a residual convolutional block comprising extra convolutional layers, batch normalization, and ReLU activation. With transposed convolution, attention mechanisms, concatenation, and residual convolution each decoder block totals sixteen layers overall. This complex mix lets the decoder segment the original image precisely and preserve fine features from the output of the encoder.

Residual convolutions

Deep networks often suffer from the vanishing gradient problem, where gradients become too small during backpropagation, leading to slow or stalled learning. Residual convolutions (ResConv) address this issue by introducing skip connections that allow gradients to bypass certain layers, ensuring stable training and preventing loss of crucial low-level features. The residual convolution block is essentially based on the integration of shortcut or skip connections that let the input feature map avoid some layers and be added straight to the output. Under the proposed model, the batch normalisation and a ReLU activation function follow a 3 × 3 convolution operation applied to the input feature map to form the residual convolution block. This first convolution catches key input characteristics. The activations are normalised in the batch normalising stage to stabilise the training process and raise convergence. The ReLU activation adds non-linearity to the network which is needed to understand complex patterns.

The output of the first convolution is next given to a second 3 × 3 convolution layer. This even more polishes the acquired traits and prepares them for the addition step. Another batch normalization ensures the stability of the activations after this convolution. The residual connection is added at last determines the block’s efficiency. The first 1 × 1 convolution serves as a shortcut layer to match the output dimensions of the convolutional layers. Two convolutional layers produce output that is added to the shortcut layer which can be seen in Fig. 3.

An extra ReLU activation function is added after the addition of the shortcut layer and convolved output. This addition helps the network to learn the residuals—that is, the fluctuation between the input and its learnt output. Concentrating on learning these residuals will help the network to more effectively collect complex information and sustain performance as its depth increases. This residual learning approach helps prevent the degradation problem often observed in very deep networks, where performance might degrade rather than rise with further layers.

Residual connections allow the residual convolution block to assure effective gradients flow, therefore improving the training of deep networks and hence guaranteeing performance gains with depth. Learning residuals instead of complete transformations helps the block to simplify the learning process, stabilise training, and enable the construction of more potent and accurate deep neural networks. Residual convolutions is calculated using following Eq. (1):

Y—is the output of the residual block, x—is the input to the residual block, {wi}—are the weights of the convolutional layers, F(x,{wi})—is the transformation function applied to the input x by the convolution layer and shortcut layer. This transformation function consists of two convolutional layers followed by batch normalization and an activation function represented below in Eq. (2):

W1 and W2—are the weights of the 1st and 2nd convolutional layers, *—denotes the convolution operations, BN—is the batch normalization layer, σ—is the activation function.

Attention mechanism

The attention mechanism is an advanced technique designed to enhance feature representation by focusing on the most relevant parts of the input data. The Attention Guided Segmentation (AGS) mechanism enables the model to focus on critical regions of interest in the images, improving segmentation accuracy29. Traditional convolutional models treat all pixels equally, leading to potential misclassification in complex lesion structures. Attention mechanisms help focus on relevant lesion areas while suppressing background noise, improving segmentation accuracy. The attention module in ARCUNet follows a gated attention approach, where: The encoder’s feature maps guide the decoder to focus on high-importance regions. Spatial and channel-wise attention filters out irrelevant regions. We can see in Fig. 4, the attention mechanism enables the proposed model eliminate less important features while selectively focus on key features. After passing through two separate convolutional layers, the input feature map ‘x’ obtained from the matching encoder and the up sampled feature map “g” are rendered. The spatial dimensions of x are reduced and local features are captured using a 2 × 2 convolution with stride 2, while the dimensions of ‘g’ are aligned with the attention map using a 1 × 1 convolution. The outputs of these convolutions are combined using an addition function to create an integrated feature representation that incorporates both inputs. After going through a ReLU activation function, this total brings in non-linearity and enhances feature learning. A preliminary attention map is created by 1 × 1 convolving the activated feature map; a sigmoid activation function is then applied to this map to give attention weights. The relative significance of the various feature map regions is determined by these weights. To make sure the attention weights are properly aligned spatially, they are upsampled using UpSampling2D until they are the same size as the original feature map x. These upsampled attention weights are then multiplied element-wise with the original feature map x, therefore scaling the features according to their importance. At last, the output is polished with another 1 × 1 convolution followed by batch normalisation, hence stabilising the learning process and changing the feature scale. This attention method enables the model to dynamically concentrate on critical areas of the input, therefore enhancing the capacity of the network to generate correct predictions by stressing important features and suppressing less relevant data. Incorporating attention helps the network to handle difficult jobs where feature importance fluctuates greatly over several spatial regions, hence improving general performance and interpretability. The mechanism of the attention block can be reparented mathematically by Eq. (3).

X—is the feature map from the encoder, g—is the upsampled feature map from the decoder, Wθ, Wϕ and Wψ—are learnable parameters, σ—is the sigmoid function, ⊙—Element-wise multiplication.

Result and discussion

The proposed ARCUNet model with Residual convolutions and Attention mechanism is trained and tested on three different datasets; ISIC 2016, 2017, and 2018 skin lesion datasets. The model is trained on the Adam optimizer, 8 batch size, and 30 epochs with early stopping. The proposed ARCUNet model is evaluated on several parameters i.e. accuracy, dice coefficient, and Jaccard.

Qualitative results on ISIC 2016 dataset

Over the 30 epochs, the dice coefficient for training keeps increasing and reaches 0.9668 at last. From this, it seems that the model learns from the more cases it encounters in segmenting the training data properly. The validation Dice coefficient shows a good increase first but calms down about epoch 20. It finally comes to 0.9468. This suggests that the model’s performance on yet unmet data-the validation set-is not considerably increasing beyond epoch 20.

Over the 30 epochs, the Jaccard index usually rises; at last, it achieves the final value of 0.9345. The model is learning to more precisely partition the training data. Though at a slower speed than the training Jaccard index, the validation Jaccard index also rises. It ends up with 0.9114. This implies that although not as quickly as on the training set, the performance of the model on unseen data the validation set is becoming better.

The training accuracy exhibits a consistent increase over the course of the 30 epochs, culminating in a final value of 0.9932. This indicates the model is improving its ability to classify the training data. The validation accuracy also increases, albeit at a slightly slower pace than the training accuracy. It ultimately attains a value of 0.9812. This implies that the model’s performance on the validation set is improving, albeit at a slower pace than its performance on the training set. Figure 5 qualitative results on ISIC 2016 dataset.

Qualitative results on ISIC 2017 dataset

The training Dice coefficient experiences a consistent increase over the course of the 30 epochs, culminating in a final value of 0.9831. It shows that the model is getting capable of segmenting the training images in successful manner. Though at a rather slower speed than the training Dice coefficient, the validation Dice coefficient also rises. It finally comes to 0.9645. This suggests that validity set performance of the model is becoming better.

Throughout the 30 epochs, the training Jaccard index exhibits fluctuations, including spikes and drops. It typically increases, resulting in a final value of 0.9065. This indicates that the proposed model is improving its performance on the training data, albeit with some degree of variability in its performance. The validation Jaccard index exhibits fluctuations, but it exhibits a similar pattern to the training Jaccard index. It attains a final value of 0.8833, which is significantly lower than the training Jaccard index.

The training accuracy consistently increases throughout the 30 epochs, resulting in a final value of 0.9831. This shows that the model is improving its ability to analyse the training data. The validation accuracy also increases, albeit at a slightly slower pace than the training accuracy. It ultimately achieves a value of 0.9645. This implies that the model’s performance on the validation set is improving, unfortunately at a slower pace than its performance on the training set. Figure 6 illustrates qualitative Results on ISIC 2017 dataset.

Qualitative results on ISIC 2018 dataset

The training Dice coefficient consistently increases throughout the 30 epochs, resulting in a final value of 0.9845. It implies that the model gained the ability to segment the training images in an efficient way. Though at a rather slower rate than the training Dice coefficient, the validation Dice coefficient also rises. It finally comes to 0.9534. This indicates that, while at a slower rate than on the training set, the model’s performance on the validation set is increasing.

The training Jaccard index shows oscillations including peaks and troughs over the 30 epochs. It rises till it gets to 0.9566 at last. His shows that although the model performs with some fluctuation, it is learning to better segment the training data. The validation Jaccard index exhibits fluctuations, but it generally exhibits a similar pattern to the training Jaccard index. It attains a final value of 0.9353, which is significantly lower than the training Jaccard index.

The training accuracy exhibits a consistent increase over the course of the 30 epochs, achieving a final value of 0.9909. This indicates that the model is learning to better classify the training data. The validation accuracy also increases, although at a slightly slower pace than the training accuracy. It ultimately attains a value of 0.9819. Figure 7 illustrates the qualitative results on ISIC 2018 dataset.

Visual analysis

Visual analysis of skin lesion segmentation offers a vital viewpoint on the performance of a segmentation model by comparing the input image, the ground truth image, and the predicted image created by the model. This is a parallel with the ground truth picture and the input image. The evaluation for this to occur is dependent on an input image—usually a dermoscopic picture of the skin lesion. Using accurate segmentation of the lesion, the ground truth image offers the basis for the model performance. Medical professionals sometimes annotate this division. For evaluating segmentation accuracy and quality, the proposed ARCUNet model produces a projected image that will be matched with the actual image. Figure 8a–c illustrate the predicted mask, ground truth mask, and original images from dataset ISIC 2016, 2017, and 2018 respectively, implemented through the proposed model.

Ablation study

An ablation study is necessary for understanding the contributions of various components and methodologies within a segmentation framework. The model is tested on various combinations i.e. UNet, UNet with Residual convolutions (RC), UNet with Residual convolutions, and Attention mechanism (AM). Table 3 exhibits performance analysis of three different datasets on three different models.

Table 3exhibits performance analysis of three models for the ISIC 2016 datasheet where it includes UNet and UNet with Residual Convolution (RC) and Attention Residual Convolutional UNet (UNet + RC + AM). The basic UNet produced validation results which fell slightly below its training performance oтмeчaя: accuracy level at 93.99% and Dice coefficient at 88.84% and Jaccard index at 85.66%. Additions of residual convolution to the network architecture (UNet + RC) boosted performance metrics to 97.91% accuracy and 90.09% Jaccard index and 92.93% Dice coefficient which proved that the network gained better generalization features. The Attention Residual Convolutional UNet (UNet + RC + AM) model produced the best results with 99.32% accuracy together with 96.80% Dice coefficient and 93.45% Jaccard index. Studies confirmed that this advanced model demonstrates the best performance because attention mechanisms combine with residual connections to boost training along with validation evaluation results.

Table 3 showcases three models including UNet and two variants: UNet with Residual Convolution (RC) and Attention Residual Convolutional UNet (UNet + RC + AM) for ISIC 2017 dataset. Using limited validation data the base UNet reached an accuracy of 91.99% and Dice coefficient of 86.42% as well as a Jaccard index of 85.66%. Residual convolution (UNet + RC) delivered superior outcomes that included an accuracy of 94.91% and both Dice coefficient of 91.93% and Jaccard index of 89.89%. This indicates improved feature extraction capability and better resilience. The Attention Residual Convolutional UNet (UNet + RC + AM) demonstrated the most successful performance reaching 98.31% accuracy and Dice coefficient 93.21% and Jaccard index 90.65%. The combination of residual and attention mechanisms in network architecture leads to better training and validation performance on the ISIC 2018 dataset, three models in Table 3 UNet, UNet with Residual Convolution (RC), and Attention Residual Convolutional UNet (UNet + RC + AM) have their performance shown in a table. With validation measures somewhat lower, the UNet model attained an accuracy of 84.29%, a Dice coefficient of 92.84%, and a Jaccard index of 88.90%. With an accuracy of 96.51%, a Dice coefficient of 95.93%, and a Jaccard index of 92.89%, including residual convolution (UNet + RC) produced a considerable improvement showing greater generalizing and feature learning. With an accuracy of 99.09%, a Dice coefficient of 98.45%, and a Jaccard index of 95.66%, the Attention Residual Convolutional UNet (UNet + RC + AM) performed best. These results show how well residual connections and attention processes help to improve training and validation measures on the ISIC 2018 dataset.

State of art analysis

Modern skin lesion segmentation studies expose the success of several advanced models and approaches. Reaching an accuracy of 95.67%, a Jaccard index of 93.40%, and a Dice coefficient of 96.12% on the ISIC 2018 dataset, Fu et al. presented MASDF-Net, a Multi-Attention Codec Network with Selective and Dynamic Fusion17. On skin lesion datasets Ronneberger and Fischer obtained an accuracy of 88.50%, a Jaccard index of 85.20%, and a Dice coefficient of 87.80% by using a U-Net model with optimal training procedures22. Salih and Viriri obtained an accuracy of 91.00%, a Jaccard index of 89.20%, and a Dice coefficient of 90.50%23 by using a stochastic region-merging technique in tandem with a pixel-based Markov Random Field. On the ISIC dataset24, Nazi and Abir used U-Net and DCNN-SVM in a transfer learning manner producing an accuracy of 93.40%, a Jaccard index of 90.10%, and a Dice coefficient of 92.70%. Showing an accuracy of 96.00%, a Jaccard index of 93.20%, and a Dice coefficient of 94.00% Reis et al. presented InSiNet, a deep convolutional network built for skin cancer detection and segmentation25. On relevant datasets, Din et al. then introduced LSCS-Net, a lightweight skin cancer segmentation network with densely linked multi-rate atrous convolution, obtaining an accuracy of 94.50%, a Jaccard index of 92.00%, and a Dice coefficient of 93.80%. Each of these models shows great accuracy, Jaccard index, and Dice coefficient in many datasets, especially the ISIC dataset, helping to contribute to the continuous developments in skin lesion segmentation. The proposed ARCUNet model was implemented on three datasets ISIC 2016, 2017, and 2018 focusing on skin lesion diseases. The value of the accuracy comes out to be 98.12, 96.45, and 98.19 for ISIC 2016, 2017, and 2018 datasets respectively. The value of Jaccard is evaluated as 91.14, 88.33, and 93.53 respectively whereas values of dice coefficient are measured as 94.68, 91.21, and 95.34 respectively. In Table 4 and in Fig. 8a–c illustrate the state of art analysis of ISIC 2016, 2017 and 2018 datasets respectively.

The scalability of the models in the Table 4 depends on their architectural complexity and adaptability across datasets. High-performing models like LSCS-Net and ARCUNet, with superior accuracy and segmentation metrics, show strong generalization but require significant computational resources, posing challenges for real-time deployment. Variability in skin tones, lesion types, and imaging conditions further complicates real-world implementation, necessitating large-scale clinical validation and regulatory approvals. While models like UNet with optimized training strategies perform well on a single dataset (ISIC 2018), they may struggle with cross-dataset generalization. InSiNet, with relatively lower computational demands, is more suitable for mobile health applications and edge computing. The best-performing models, such as ARCUNet, can be integrated into AI-assisted dermatology tools, automated screening systems, and telemedicine platforms, enhancing early diagnosis and treatment planning.

Conclusion

The proposed ARCUNet model acts positively regarding the challenge problems in skin lesion segmentation, which are the variability of the characteristics of lesions and the presence of artifacts. Attention mechanisms and residual convolutions are added for improved feature learning and stabilizing training, sharpening the focus on critical image regions that may provide more precise boundary delineation. The results of this model are implemented on three datasets, namely ISIC 2016, 2017, and 2018. This proposed model brought a huge improvement in segmentation accuracy, Dice coefficient, and Jaccard index, thereby proving its robustness and generalizability. The value of the accuracy comes out to be 98.12%, 96.45%, and 98.19% for ISIC 2016, 2017, and 2018 datasets respectively. The value of Jaccard is evaluated as 91.14%, 88.33%, and 93.53% respectively whereas values of dice coefficient are measured as 94.68%, 91.21%, and 95.34% respectively. ARCUNet seems to ensure the highest performance and eventually can be trusted for skin lesion segmentation in challenging medical images.

Data availability

The datasets used in this study are publicly available at Kaggle: https://www.kaggle.com/datasets/mahmudulhasantasin/isic-2016-original-dataset; https://www.kaggle.com/datasets/awsaf49/isic-2017/data; https://www.kaggle.com/datasets/tschandl/isic2018-challenge-task1-data-segmentation.

References

Wu, R. et al. MHorUNet: High-order spatial interaction UNet for skin lesion segmentation. Biomed. Signal Process. Control 88, 105517 (2024).

Wu, R., Liu, Y., Liang, P. & Chang, Q. Ultralight vm-unet: Parallel vision mamba significantly reduces parameters for skin lesion segmentation. arXiv preprint http://arxiv.org/abs/2403.20035. (2024)

Ahamed, M. F., Hossain, M. M., Mary, M. M. & Islam, M. R. Optimizing skin lesion segmentation with UNet and Attention-guidance utilizing test time augmentation. in 2024 6th International Conference on Electrical Engineering and Information & Communication Technology (ICEEICT) 568–573. (IEEE, 2024)

Li, J. et al. DSEUNet: A lightweight UNet for dynamic space grouping enhancement for skin lesion segmentation. Exp. Syst. Appl. 255, 124544 (2024).

Zhu, W., Tian, J., Chen, M., Chen, L. & Chen, J. MSS-UNet: A multi-spatial-shift MLP-based UNet for skin lesion segmentation. Comput. Biol. Med. 168, 107719 (2024).

Liu, G. et al. A region of interest focused triple UNet architecture for skin lesion segmentation. Int. J. Imaging Syst. Technol. 34(3), e23090 (2024).

Choudhary, M. & Chauhan, N. Enhancing skin lesion analysis: Leveraging UNet and VGG architectures in deep learning models. in 2024 International Conference on Integrated Circuits, Communication, and Computing Systems (ICIC3S), Vol. 1, pp. 1–8 (IEEE, 2024).

Kaur, R. & Kaur, S. Automatic skin lesion segmentation using attention residual U-Net with improved encoder-decoder architecture. Multimed. Tools Appl. https://doi.org/10.1007/s11042-024-18895-5 (2024).

Zhao, Y., Yan, T. & Yilihamu, Y. Skin lesion image segmentation based on improved U-shaped network. Int. J. Intell. Robot. Appl. 8(3), 609–618 (2024).

Liang, S., Tian, S., Yu, L. & Kang, X. Improved U-net based on contour attention for efficient segmentation of skin lesion. Multimed. Tools Appl. 83(11), 33371–33391 (2024).

Öztürk, Ş & Özkaya, U. Skin lesion segmentation with improved convolutional neural network. J. Digit. Imaging 33, 958–970 (2020).

Ahammed, M., Al Mamun, M. & Uddin, M. S. A machine learning approach for skin disease detection and classification using image segmentation. Healthc. Anal. 2, 100122 (2022).

Anand, V. et al. Modified U-net architecture for segmentation of skin lesion. Sensors 22(3), 867 (2022).

Zafar, K. et al. Skin lesion segmentation from dermoscopic images using convolutional neural network. Sensors 20(6), 1601 (2020).

Kaymak, R., Kaymak, C. & Ucar, A. Skin lesion segmentation using fully convolutional networks: A comparative experimental study. Exp. Syst. Appl. 161, 113742 (2020).

Anand, V., Gupta, S., Koundal, D. & Singh, K. Fusion of U-Net and CNN model for segmentation and classification of skin lesion from dermoscopy images. Exp. Syst. Appl. 213, 119230 (2023).

Fu, J. & Deng, H. MASDF-Net: A multi-attention codec network with selective and dynamic fusion for skin lesion segmentation. Sensors 24(16), 5372 (2024).

Sumithra, R., Suhil, M. & Guru, D. S. Segmentation and classification of skin lesions for disease diagnosis. Proc. Comput. Sci. 45, 76–85 (2015).

Lameski, J., Jovanov, A., Zdravevski, E., Lameski, P. & Gievska, S. Skin lesion segmentation with deep learning. in IEEE EUROCON 2019–18th International Conference on Smart Technologies 1–5 (IEEE, 2019).

Goyal, M., Ng, J., Oakley, A. & Yap, M. H. Skin lesion boundary segmentation with fully automated deep extreme cut methods. in Medical Imaging 2019: Biomedical Applications in Molecular, Structural, and Functional Imaging, Vol. 10953, pp. 131–137, (SPIE, 2019).

Ibtehaz, N. & Rahman, M. S. MultiResUNet: Rethinking the U-net architecture for multimodal biomedical image segmentation. Neural Netw. 121, 74–87 (2020).

Ronneberger, O. & Fischer, P. Skin lesion segmentation using a U-net and good training strategies. arXiv preprint http://arxiv.org/abs/1811.11314. (2020).

Salih, O. & Viriri, S. Skin lesion segmentation using stochastic region-merging and pixel-based Markov random field. Symmetry 12(8), 1224 (2020).

Nazi, Z. al. & Abir, T. A. Automatic Skin lesion segmentation and melanoma detection: Transfer learning approach with U-net and DCNN-SVM, 371–381 (2020).

Reis, H. C., Turk, V., Khoshelham, K. & Kaya, S. InSiNet: A deep convolutional approach to skin cancer detection and segmentation. Med. Biol. Eng. Comput. https://doi.org/10.1007/s11517-021-02473-0 (2022).

Din, S., Mourad, O. & Serpedin, E. LSCS-Net: A lightweight skin cancer segmentation network with densely connected multi-rate atrous convolution. Comput. Biol. Med. 173, 108303 (2024).

Rehman, A., Butt, M. A. & Zaman, M. Attention res-UNet: Attention residual UNet with focal tversky loss for skin lesion segmentation. Int. J. Decis. Support Syst. Technol. (IJDSST) 15(1), 1–17 (2023).

Ruan, J., Xiang, S., Xie, M., Liu, T. & Fu, Y. MALUNet: A multi-attention and light-weight unet for skin lesion segmentation. in 2022 IEEE International Conference on Bioinformatics and Biomedicine (BIBM) 1150–1156. (IEEE, 2022).

Ahamed, M. F. et al. Automated colorectal polyps detection from endoscopic images using MultiResUNet framework with attention guided segmentation. Hum.-Cent. Intell. Syst. 4(2), 299–315 (2024).

Faysal Ahamed, M., Robiul Islam, M., Hossain, T., Syfullah, K. & Sarkar, O. Classification and segmentation on multi-regional brain tumors using volumetric images of MRI with customized 3D U-Net framework. in Proceedings of International Conference on Information and Communication Technology for Development: ICICTD 2022, 223–234 (Springer Nature, 2023).

Xu, H., Li, Q. & Chen, J. Highlight removal from a single grayscale image using attentive GAN. Appl. Artif. Intell. 36(1), 1988441. https://doi.org/10.1080/08839514.2021.1988441 (2022).

Xia, J. et al. Enhanced moth-flame optimizer with quasi-reflection and refraction learning with application to image segmentation and medical diagnosis. Curr. Bioinform. 18(2), 109–142. https://doi.org/10.2174/1574893617666220920102401 (2023).

Acknowledgements

This work was supported by King Saud University, Riyadh, Saudi Arabia, through Researchers Supporting Project number RSP2025R498.

Author information

Authors and Affiliations

Contributions

Tanishq Soni: Conceptualization, Methodology, Investigation, and Data Analysis. Drafted the initial manuscript and contributed to experimental design and data processing. Sheifali Gupta: Methodology, Software Development, and Data Curation. Supported the development of the model architecture and performed preliminary experiments. Ahmad Almogren: Supervision, Project Administration, and Funding Acquisition. Provided resources and helped refine the study design and interpretation of results. Ayman Altameem: Data Interpretation, Visualization, and Review. Contributed to data analysis, graphical representation, and critically reviewed the manuscript for intellectual content. Ateeq Ur Rehman: Writing—Review and Editing, Validation, and Data Curation. Assisted in validation experiments and helped with the final manuscript revision. Seada Hussen: Experimental Design, Formal Analysis, and Validation. Participated in developing experimental protocols and contributed to analytical methods for data validation. Salil Bharany: Writing—Original Draft, Review & Editing, and Resources. Involved in manuscript writing, revision, and provided resources for model implementation.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Soni, T., Gupta, S., Almogren, A. et al. ARCUNet: enhancing skin lesion segmentation with residual convolutions and attention mechanisms for improved accuracy and robustness. Sci Rep 15, 9262 (2025). https://doi.org/10.1038/s41598-025-94380-9

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-94380-9