Abstract

The Science of Science (SoS) examines the mechanisms driving the development and societal role of science, evolving from its sociological roots into a data-driven discipline. This paper traces the progression of SoS from its early focus on the social functions of science to the current era, characterized by large-scale quantitative analysis and AI-driven methodologies. Scientometrics, a key branch of SoS, has utilized statistical methods and citation analysis to understand scientific growth and knowledge diffusion. With the rise of big data and complex network theory, SoS has transitioned toward more refined analyses, leveraging artificial intelligence (AI) for predictive modeling, sentiment annotation, and entity extraction. This paper explores the application of AI in SoS, highlighting its role as a surrogate, quant, and arbiter in advancing data processing, data analysis and peer review. The integration of AI has ushered in a new paradigm for SoS, enhancing its predictive accuracy and providing deeper insights into the internal dynamics of science and its impact on society.

Similar content being viewed by others

Introduction

Since its creation, the Science of Science (SoS), which takes science as a whole as its object of study, has always been closely linked with the development and evolution of science and technology (S&T), and has taken the study of the laws of science’s development and its social functions as the core of its disciplinary evolution. The development of S&T has promoted the transformation of SoS’s research paradigm. At present, the latest scientific and technological revolutions and industrial transformations are on the way, digital intelligence-driven scientific discoveries and scientific research paradigm transformation promote scientific development to step into the intelligent era. Moreover, “AI for Science” fully integrated into scientific research, decision-making, scientific management (governance), development strategy and other aspects. SoS witnesses unprecedented significant development opportunities and challenges.

The evolution of SoS has been significantly influenced by shifts in scientific research paradigms, tracing its roots back to philosophical and scientific thought before SoS was formally established. The spotlight on science’s role in societal development and human progress, especially noted in the early 20th century, paved the way for SoS’s formal inception. In 1925, Polish sociologist F. Znaniecki advocated for a “science of science,” laying foundational ideas for SoS. A pivotal moment came in 1931 when the former Soviet delegate N. Bukhari, at the Second International Conference on the History of Science in London, presented Boris Hessen’s report on Newton’s “Principia” linking Newtonian mechanics to social contexts, which is considered a landmark exploration in SoS. The 1939 publication “The Social Function of Science” by British physicist J. D. Bernal further established SoS, emphasizing the analysis of the connection between science and society. By the 1960s, the emergence of scientometrics shifted SoS from sociological analysis to a quantitative phase, leading to its maturity. This conventional phase of SoS has since fostered the growth of related fields such as S&T management, innovation, and policy.

In the 21st century, the emergence of data-driven scientific research has rejuvenated the Study of SoS, with “AI for Science” leading the charge into new research paradigms. This shift has sparked a greater emphasis on conducting research over understanding the deeper nuances of science itself, making the scientific process and knowledge system increasingly complex. This complexity demands a more profound understanding and explanation of science, facilitated by the advent of large-scale data, advanced computing, and precise algorithms, which significantly enhance our ability to explain scientific phenomena. Consequently, SoS is poised to evolve into a more integrated discipline, signaling its maturity and underscoring the need for a new, modern framework for SoS (Kuhn, 1962); (Shneider, 2009).

The paper delves into the evolution and history of the Study of SoS, emphasizing its pivotal role in blending science, technology, and society. By systematically examining the SoS’s growth, the paper aims to highlight how SoS has been crucial in linking and advancing both the natural and social sciences. It acknowledges and interprets the importance of science in societal roles, offering theoretical and practical frameworks to better grasp the intricate relationship between science, technology, and society. This approach aims to enrich our understanding of science by exploring the dynamic interactions among these fields.

The social function of science: Sociological research on SoS

Since the 19th century, S&T have become increasingly intertwined, forming an indissoluble bond. Unresolved scientific questions often lead to technological innovations, while scientific endeavors rely on technological advancements for experimental tools and methods. This interplay has deeply influenced modern society, which now fundamentally rests on the pillars of contemporary S&T. The impact of natural sciences on society has, in turn, become a focus of social sciences, with their methods and theories providing critical insights and approaches in social scientific inquiry. The concept of scientific labor’s socialization has also entered academic discourse, highlighted by Hessen’s (1931) exploration of the social and economic influences on Newton’s “Principia” and Merton’s works, such as “Science, Technology, and Society in Seventeenth-Century England” (Merton, 1973) and “Social Theory and Social Structure” (Merton, 1973), marking the advent of a sociological lens in science studies.

In the early 20th century, the field of ‘science studies’ began to take shape, notably with Polish sociologist Florian Witold Znaniecki coining the term in his 1925 article “The Object and Tasks of the Science of Knowledge.” The following year, N. Richevsky from the former Soviet Union expanded the concept to include the societal impacts of science. Further clarity and terminology, ‘science of science’ were introduced by Tadeusz Kotarbinski in 1929, and by Polish sociologists, the Ossowskis, in 1935, broadening the research scope within this emerging field. A landmark moment came with Boris Hessen’s 1931 presentation in London, focusing on the social factors influencing natural science, highlighting the field’s importance. American sociologist Robert King Merton, influenced by Hessen, delved into the science-society relationship in 17th-century England, adopting a sociological approach. Merton’s identification of the ‘Matthew effect’ played a crucial role in establishing the sociology of science and science studies, significantly advancing our understanding of the dynamics between science and societal structures.

In 1939, the British scientist J.D. Bernal, in his book “The Social Function of Science”, presented for the first time a comprehensive and extensive proposal to study the entire realm of scientific issues using the historical and sociological methods of natural science. Simultaneously, he advocated the integration of qualitative and quantitative research methods, aiming to conduct a comprehensive examination of the significant social phenomenon of “science” within the broader societal framework (Bernal 1939). This groundbreaking approach gave rise to a novel discipline known as the SoS. Upon its publication, this book swiftly became the foundational theoretical work in the field of SoS. Furthermore, Bernal authored numerous works that encapsulated the scientific achievements of his time, revealing the philosophical significance of science and its role in human history. His writings also addressed the contradictions in the development of science within class societies and the continuous progress of science under socialist systems. Due to Bernal’s prominent contributions to the study of the SoS, he is acknowledged in academia as the founder of the discipline of SoS.

Since the 1960s, the study of SoS has evolved to encompass a range of research methods and theories, introducing new dimensions of analysis. The historical dimension focuses on and examines the historical processes of scientific development, the evolution of scientific thought, as well as the formation and changes in scientific paradigms. In addition, one key area, scientometrics, focusing on the quantitative analysis of science, has since become a central discipline within SoS, revitalizing the field with its focus on measuring and understanding scientific output and influence.

From qualitative analysis to large-scale quantitative analysis: Scientometrics as a branch of SoS

The established research paradigm within SoS has traditionally hinged on the compilation of past experiences and the construction of speculative theories, thereby securing its academic standing and theoretical foundation. Nevertheless, the rapid progress in S&T has posed substantial challenges to this conventional approach. In the era of “big science,” the exponential expansion of scientific knowledge necessitates that the SoS discipline refine its ability to pinpoint the leading edge of scientific and technological advancements, disclose the underlying forces driving scientific progress, and furnish actionable insights for societal utility. The adoption of quantitative analysis methods that are both calculable and interpretable, grounded in the field’s extant theories, hypotheses, and models, is of paramount importance.

Statistical analysis of the laws of scientific development

The field of science studies, often traced back to the 1920s or 1930s, actually began in the late 19th century with natural scientists collecting statistical data on peers using mathematical statistical methods. Victorian naturalist Sir Francis Galton (1822–1911) was pivotal, in identifying the ‘regression toward the mean’ phenomenon (Galton, 1886) and introducing the correlation coefficient (Lee Rodgers & Nicewander, 1988). He analyzed traits of British scientists in ‘English Men of Science: Their Nature and Nurture’ using questionnaires (Galton, 1874). Inspired by Galton, James McKeen Cattell evaluated American scientists’ productivity based on peer review (Cattell, 1906).

Scientific literature became a focus of quantitative research. Alfred James Lotka (1880–1949) proposed Lotka’s law, stating that the number of authors who have written n papers is approximately 1/n2 of the number of authors who have written 1 paper (Lotka, 1926). Other milestones include Zipf’s Law and Bradford’s Law, revealing the distribution of word frequency in English literature (Kingsley Zipf, 1932) and the dispersion/concentration of scientific literature in journals (Bradford, 1934).

Early studies had limited understanding of science due to a lack of theoretical guidance. Galton proposed ‘genius is hereditary’ in ‘Hereditary Genius’, and Lotka focused on the applicability of Lotka’s law (Galton, 1891). However, these studies impacted SoS development, highlighting the value of quantitative methods. John D. Bernal (1901–1971), the founder of SoS, used data charts in ‘The Social Function of Science’ to analyze government funding, teaching staff income, and scientist numbers (Bernal, 1939).

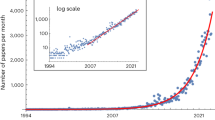

With the growth of scientific literature, establishing a quantitative research paradigm for SoS was crucial. Derek J. de Solla Price (1922–1983), influenced by Bernal, shifted from physics/mathematics to SoS. He identified the exponential growth of scientific literature, known as the Price Index (De Solla Price, 1975). Price’s works ‘Science since Babylon’ and ‘Little Science, Big Science’ organized and developed the research of Galton, Lotka, Bradford, and Zipf, proposing Price’s Law, a further deduction of Lotka’s law (D. J. D. S. Price, 1963). These works laid the foundation for quantitative research in SoS, attracting global interest.

Citation analysis and visualization in scientific knowledge networks

The development of SoS relies on scientific statistical data. American information scientist Eugene Eli Garfield (1925-2017) made significant contributions to this field by proposing in 1955 the use of citation relationships between scientific literature to analyze the dynamics and structure of scientific development (Garfield, 1955). This pivotal concept laid the groundwork for the subsequent establishment of databases like the Science Citation Index (SCI), Social Science Citation Index (SSCI), Journal Citation Report (JCR), and Arts and Humanities Citation Index (A&HCI), which collectively gather citation data from nearly 180 fields of books and journal articles. Garfield’s work not only provided a substantial data foundation for the quantitative research paradigm of SoS but also introduced new theories and methods, namely citation analysis, which greatly expanded the potential for SoS research development.

Citation frequency and its derived indicators, such as the h-index (Hirsch, 2005), have been widely adopted to quantify concepts like academic influence, scientific productivity, and labor efficiency. Garfield’s early work analyzing citation frequency identified patterns such as the correlation between rapid citation and Nobel Prize nominations (Garfield & Malin, 1968). The citation frequency has been a crucial tool for measuring the status and influence of American science (Board, 1981). Vasiliy Vasilevich Nalimov (1910-1997), the inventor of the term ‘scientometrics’ in the Soviet Union, emphasized the importance of citation rate over publication count for measuring scientific productivity (Granovsky, 2001).

Moreover, the distribution of citations over time has drawn attention, with scholars studying literature aging through citation curves. Bernal’s concept of “literature half-life” in 1958 (Xiao, 2011) and Price’s “Price index” (D. d. S. Price, 1976) in 1976 were key developments in quantifying literature aging. The “Sleeping Beauty in Science” phenomenon, identified by van Raan (Van Raan, 2004), refers to papers initially ignored that later received a surge in citations, which has attracted wide attention in SoS.

Citation relationships are used to construct networks to study the structure and evolution of scientific knowledge diffusion and cooperation. Price was the first scholar who discovered the phenomenon of ‘advantage accumulation’ in the growth of citation networks and gave a mathematical explanation for the evolution of citation networks (D. d. S. Price, 1976). The subsequent development of coupling analysis (Kessler 1963), co-citation (H. Small, 1973), co-authorship (Glänzel & Schubert, 2004), keyword co-occurrence (Callon, Courtial, & Laville, 1991), and reference co-occurrence (Porter & Chubin, 1985) have provided deeper insights into the dynamics of scientific knowledge. Social network analysis has further enriched the toolkit of scientometrics (Egghe & Rousseau, 1990).

Theoretical foundations for applying citation analysis to scientometrics have been established, considering citation motivation (Garfield, 1965) and information systems. Nalimov’s cybernetic model of science (Nalimov & Mul’chenko, 1969) and Merton’s sociological theories of citation (Merton, 1973) have contributed to understanding the role of citations in scientific evaluation (H. G. Small, 1978).

The paradigm shift from mathematical expression to visual representation in scientometrics has been facilitated by the introduction of information visualization techniques. Tools like CiteSpace (Chen, 2006), HistCite (Garfield, 2006), BibExcel (Persson, Danell, & Schneider, 2009), Vosviewer (N. Van Eck & Waltman, 2010), and CitnetExplorer (N. J. Van Eck & Waltman, 2014) have allowed for the visualization of citation networks, knowledge domains, and other complex data structures. This shift has led to the emergence of mapping knowledge domains as a new quantitative research field in SoS, enabling a more intuitive and comprehensive understanding of scientific knowledge networks and their evolution.

Large-scale and fine-grained shifts in SoS: leveraging big data and complex network

The evolution of SoS has witnessed a transformative shift towards large-scale and fine-grained analysis, driven by the emergence of big data and the application of complex network theory. This shift has been enabled by the accessibility of diverse data sources and the utilization of advanced computational tools, which have expanded the scope and depth of SoS research.

The availability of a wide range of data types, from social media to clinical trial records, has provided SoS researchers with a rich and interconnected dataset, such as OpenAlex, Publons, Dimensions, Semantic Scholar, Microsoft Academic Graph and so on. The opening of SciScinet in 2023, with its vast collection of over 134 million scientific documents and associated records, represents a significant leap in data availability and connectivity. This data deluge has not only increased the scale of SoS research but also facilitated more nuanced analyses (Z. Lin, Yin, Liu, & Wang, 2023). For instance, the study of the interaction between scientific papers and policies has become possible by constructing citation relationships between these two domains, revealing the integration of scientific research and policy-making (Yin, Gao, Jones, & Wang, 2021).

The scale of data in SoS research has exploded, enabling cross-validation of complex scientific problems and societal challenges. Research on scientists’ career dynamics (Huang, Gates, Sinatra, & Barabási, 2020; Wang, Jones, & Wang, 2019), collaboration patterns (Bu, Ding, Liang, & Murray, 2018; Bu, Murray, Ding, Huang, & Zhao, 2018; Wu, Wang, & Evans, 2019), and the evolution of science (Gates, Ke, Varol, & Barabási, 2019) itself have been enriched by the analysis of millions of scientific papers, grants, and mobility data. This scale has allowed for the exploration of subtle differences in career trajectories, collaboration dynamics, and disciplinary trends.

The introduction of complex networks has driven a shift towards more refined SoS research. These networks, characterized by self-organization, self-similarity, and scale-free properties, offer new perspectives on the dynamic processes, complex relationships, and underlying mechanisms of scientific development. Complex networks provide interpretability to the dynamic evolution of citation networks (Fortunato et al., 2018; Parolo et al., 2015), recognition of the intricate relationships between knowledge creation and diffusion (Sankar, Thumba, Ramamohan, Chandra, & Satheesh Kumar, 2020; X.-h. Zhang et al., 2019), and inference of the mechanisms driving scientific activities (Jia, Wang, & Szymanski, 2017; A. Zeng et al., 2019).

The field of complex networks has attracted notable scholars, leading to the emergence of a new paradigm in SoS research after 2010. However, this reliance on model characterization and interpretation has also led to the “trap of numbers,” (Sugimoto, 2021) highlighting the need for a balanced approach that combines quantitative data with qualitative insights to fully understand the complexities of SoS research.

From scientometrics to AI-driven research: The latest Paradigm of SoS

Since 2010, the development of scientific data infrastructures and the rise of artificial intelligence (AI) large models have led to a qualitative leap in SoS, both in terms of data sources and scale. The future of SoS lies in expanding its scope, particularly by incorporating more diverse methodologies and data sources (“Broader scope is key to the future of ‘science of science’,” 2022). This shift is crucial for addressing long-standing issues such as data scarcity, methodological limitations, and biases within the scientific community. In recent years, the ongoing global scientific and technological revolution, driven by digital intelligence and big data, has profoundly impacted traditional scientific research activities. This paradigm shift, characterized by “AI for science,” leverages massive datasets to learn scientific laws and natural principles, offering cutting-edge tools for advancing research (X. Zhang et al., 2023). AI plays a pivotal role in this transformation, acting as both a surrogate and a quant to enhance data collection, curation, and analysis (Messeri & Crockett, 2024), thereby improving the efficiency and scalability of SoS research. This evolution is propelling significant progress in SoS research and reshaping the landscape of scientific inquiry.

AI as Surrogate in SoS

In the face of complex research questions and the deluge of data collection and integration, AI serves as a powerful surrogate for scientometrics. It excels in processing vast amounts of data and generating alternative datasets, thereby reducing the burden on human researchers and enhancing the efficiency of data collection. Machine learning, deep learning and other artificial intelligence technologies address multi-dimensional, multi-modal, and multi-scenario data collection and simulation, assist researchers in a large number of experiments for verification (Peng et al., 2023), solve complex computing, and accelerate the pace of scientific research and technology development. For example, AI plays a vital role in sentiment annotation and entity extraction.

AI in sentiment annotation

Sentiment annotation is one of the most significant applications of AI in SoS. For instance, AI algorithms can analyze textual and audiovisual data from scientific publications, conference presentations, and online discussions to automatically label sentiments expressed by researchers. This not only streamlines the time-consuming process of manual annotation but also enables the detection of nuanced emotional trends within the scientific community that might otherwise be overlooked. Citation sentiment classification differs from general sentiment annotation tasks. While the latter focuses on semantic emotions in text (e.g., positive/negative/neutral), citation sentiment specifically refers to the stance (agreement, disagreement, or neutrality) that authors hold toward the cited work.

(1) Methods for sentiment annotation

Methods for sentiment analysis using artificial intelligence encompass rule-based approaches, supervised learning, semi-supervised learning, and unsupervised learning. These methods utilize predefined rules and dictionaries to identify sentiment words and phrases within a text, allowing for the judgment of the text’s sentiment. For example, a dictionary containing positive and negative words can be used to determine the sentiment of a text based on the frequency of these words. Supervised learning involves training a model to recognize sentiment words within a text and learn how to classify the text’s sentiment as positive, negative, or neutral. For instance, a large dataset of labeled text (i.e., text with known sentiment) can be used to train a classification model, such as a support vector machine (SVM) or a naive Bayes classifier, to predict the sentiment of new, unseen text. Semi-supervised learning leverages a small amount of labeled data and a large amount of unlabeled data. The model automatically labels the unlabeled data and then further trains itself using this newly labeled data. This approach is useful when labeled data is scarce and expensive to obtain. Unsupervised learning identifies sentiment patterns within a text, such as the co-occurrence of words, to infer the sentiment. For example, clustering algorithms can group similar texts based on their sentiment, allowing for the identification of different sentiment categories within a dataset.

(2) Sentiment annotation in SoS

AI has been successfully applied in analyzing the stance and sentiment within academic papers and reports to assess their scientific value and influence. For instance, citation sentiment analysis can evaluate how researchers view the contributions of cited documents—whether with agreement, disagreement, or neutrality—rather than only capturing the general semantic sentiment within the text. Using such methods, the stance in citations can be divided into positive (based on; corroboration; discovery; positive; practical; significant; standard; supply), neutral (contrast; co-citation; neutral), and negative (Aljuaid, Iftikhar, Ahmad, Asif, & Tanvir Afzal, 2021; Budi & Yaniasih, 2023; Kong et al., 2024). This distinction emphasizes that citation sentiment analysis is not just a subset of general sentiment classification, but a specialized task for academic discourse, providing a methodological foundation for the validation of classic SoS theoretical models such as social constructivist theory (Gilbert, 1977) and normative theory (Merton, 1973).

Moreover, with the rise of the “Public Understanding of Science” movement, the introduction of sentiment analysis methods helps the research of SoS to expand its research objects from the scientific community to the general public. This enables SoS to be more applicable to addressing issues regarding the relationship between modern society and science. AI-driven sentiment analysis can help policymakers identify and predict public opinions, providing essential support for decision-making processes. By analyzing large volumes of data from social media platforms and public reports, AI can track the emotional responses of different segments of the population toward scientific policies or projects. For example, during the COVID-19 pandemic, sentiment analysis of social media revealed key public concerns and emotional reactions, which could inform public health authorities on how to adjust their communication strategies to address fear and misinformation more effectively (Li et al., 2023). Besides, AI-driven sentiment analysis allows for the identification of emerging concerns that might not be immediately visible through traditional surveys or reports. This real-time analysis provides decision-makers with valuable insights to anticipate potential backlash or support for upcoming policies (Xue et al., 2020).

AI in entity extraction and annotation

Another illustrative example lies in the realm of entity extraction and annotation. AI tools can swiftly identify and classify key entities such as researchers, institutions, funding agencies, and research topics within vast corpora of scientific literature. This automation facilitates the construction of complex knowledge graphs, revealing intricate relationships and patterns that would be challenging to uncover manually.

Observation units are intelligently extracted from macro-units to micro-units of knowledge. In the past, scientific knowledge units are generally based on papers, patents, journals, or disciplines, and thus the research on scientific development in SoS is limited to macro-knowledge units (Y. Liu & Rousseau, 2010). Since the LDA was proposed in 2002, the efficiency of scientific or technical knowledge unit extraction based on scientific papers and patent texts has been greatly improved. After 2013, Large Language models (LLMs) such as Word2Vec, Top2Vec, and BERT, GPT which have powerful contextual understanding, language generation, and learning capabilities, optimize the analysis and annotation of full text, semantic recognition and prediction, strengthen the topic extraction efficiency and characterization ability of scientific text and technical text (Reimers & Gurevych, 2019), and promote the SoS’s research to explore the science from the fine-grained perspective. In SoS, AI-based entity extraction techniques have been applied to various tasks, advancing research in scientific knowledge mapping, bibliometric analysis, and the construction of knowledge graphs.

Researcher and institution identification: AI entity extraction techniques, such as those implemented in Sentence-BERT (Reimers & Gurevych, 2019) and SciSpaCy (Neumann, King, Beltagy, & Ammar, 2019), have been used to automatically identify and classify researchers, institutions, and research topics from vast corpora of academic papers and patents. This has facilitated the construction of large-scale knowledge graphs, which reveal the relationships and collaborations between different entities in scientific ecosystems. These graphs are then used to study collaboration networks, innovation patterns, and the evolution of research domains.

Patent and citation analysis: AI-based entity extraction models, such as BERT (Devlin, Chang, Lee, & Toutanova, 2018) and Word2Vec (Mikolov, Chen, Corrado, & Dean, 2013), are commonly employed in patent and citation databases to extract key entities like inventors, patents, journals, and disciplines. This allows researchers to analyze citation trends, measure scientific influence, and explore how knowledge flows between disciplines through patents and scholarly work.

Construction of fine-grained knowledge units: Since the introduction of models like LDA (Blei, Ng, & Jordan, 2003), and later enhanced by BERT-based models, entity extraction has been crucial for extracting fine-grained knowledge units from scientific texts. These models have improved the ability to identify technical terms, research outcomes, and domain-specific entities at a more granular level, advancing the study of micro-units of knowledge in SoS. Moreover, models specifically fine-tuned for scientific documents, such as SciBERT (Beltagy, Lo, & Cohan, 2019), SPECTER (Cohan, Feldman, Beltagy, Downey, & Weld, 2020), and SPECTER2 (Singh, D’Arcy, Cohan, Downey, & Feldman, 2022), have achieved high performance in tasks closely related to SoS. SciBERT significantly enhances the extraction and interpretation of scientific terms and named entities, making it highly effective for domain-specific knowledge tasks. SPECTER and SPECTER2, designed for document-level representation using citation-informed transformers, are particularly adept at capturing relationships between scientific documents based on citation networks, aiding in the construction of accurate, large-scale knowledge graphs that reveal complex interconnections within scientific research.

Biomedical and clinical research: In the context of biomedical research, entity extraction tools like Med7 (Kormilitzin, Vaci, Liu, & Nevado-Holgado, 2021) have been utilized to extract clinical entities (e.g., diseases, treatments) from scientific publications and electronic health records. These extracted entities are used to map scientific advancements in medicine, track the development of treatments, and understand trends in healthcare innovation.

AI in image recognition

AI has significantly advanced the detection of image-related issues in scientific publishing, particularly in biomedical research, ensuring data integrity and ethical compliance. Convolutional Neural Networks (CNNs), such as AlexNet, GoogleNet, ResNet, R-CNN and FCNN models are widely used to identify duplicated or manipulated images by analyzing features like contrast, brightness, and structural patterns (Yu, Yang, Zhang, Armstrong, & Deen, 2021). Similarly, Generative Adversarial Networks (GANs) and anomaly detection models are applied to recognize subtle image manipulations, such as artificial enhancements or duplicated sections, potentially leading to research misinterpretation (X. Liu et al., 2021). Further, algorithm like PCA (Plehiers et al., 2020), t-SNE (Schubert & Gertz, 2017), DBSCAN (Çelik, Dadaşer-Çelik, & Dokuz, 2011) or LSTM (Fernando, Denman, Sridharan, & Fookes, 2018) are usually used for anomaly detection, which ensured data authenticity in biomedical datasets. Additionally, Imagetwin (https://imagetwin.ai/) and Proofig (https://www.proofig.com/), are examples of tools that utilize AI to detect potential image anomalies, offering support to journals, publishers, and institutions in maintaining research integrity. In summary, the integration of AI in detecting image-related issues has become a powerful tool in SoS, reinforcing the accuracy and ethical standards of scientific research. Through advanced image recognition models and dedicated software, researchers and publishers are better equipped to monitor and verify visual data, contributing to the trustworthiness and quality of scientific knowledge.

AI as quant in SoS

AI’s prowess in computational methods significantly supplements human capabilities, particularly when dealing with immense datasets and intricate relationships. It bridges the gap between human limitations and the demands of SoS research, enhancing both predictability and interpretability.

Predictive algorithms

AI has become an influential tool in predicting various aspects of scientific careers, research team performance, and research project outcomes. By utilizing machine learning algorithms and data analytics, AI provides insights that were previously difficult or impossible to obtain through traditional methods.

Predicting citation performance and citing behavior: AI applications in citing behavior research involve analyzing citation patterns through machine learning (T. Zeng & Acuna, 2020)and natural language processing to uncover insights about scholarly communication. By utilizing algorithms such as neural networks and regression models, researchers can predict citation counts based on various factors, including publication attributes and author influence (Akella, Alhoori, Kondamudi, Freeman, & Zhou, 2021). Studies have shown that these models can effectively identify biases in citation practices and the impact of collaboration on citation dynamics (Iqbal et al., 2021). Such insights enhance our understanding of knowledge dissemination and the complex networks that shape academic impact.

Predicting scientists’ career trajectories: AI can model and predict the career patterns of scientists by analyzing their publications, citations, collaborations, and affiliations. Predictive algorithms such as decision trees, support vector machines (SVMs), and neural networks are employed to identify key factors influencing scientific productivity and career progression (Edwards, Acheson-Field, Rennane, & Zaber, 2023; Musso, Hernández, & Cascallar, 2020; Yang, Chawla, & Uzzi, 2019). For example, factors like the number of publications, the diversity of collaborations, and funding history can be fed into these algorithms to forecast future career success or the likelihood of achieving high-impact research outcomes (L. Liu, Dehmamy, Chown, Giles, & Wang, 2021; L. Liu et al., 2018; Wang et al., 2019).

Predicting research team performance: AI also plays a crucial role in evaluating and predicting the performance of research teams. By analyzing team composition, collaboration patterns, and historical project outcomes, predictive models can estimate the future productivity and success of a research team (Ghawi, Müller, & Pfeffer, 2021; Giannakas, Troussas, Krouska, Sgouropoulou, & Voyiatzis, 2022). Factors such as team size, team hierarchy, team distance, diversity in expertise, and the centrality of team members within their academic networks have been shown to correlate with team performance (Y. Lin, Frey, & Wu, 2023; Wu et al., 2019; Xu, Wu, & Evans, 2022).

Predicting research project outcomes: AI can significantly enhance the prediction of research project performance by analyzing multiple dimensions of project execution and outcomes. Through machine learning and data-driven models, AI can evaluate project success across various performance indicators, such as time management, resource allocation, collaboration dynamics, and the achievement of project goals (Yoo, Jung, & Jun, 2023). AI-powered predictive models often rely on historical data, such as past project performance, team expertise, funding amounts, and institutional resources, to make projections. These models can identify trends and patterns that forecast whether a project is likely to meet its objectives. The use of AI in predictive analytics offers insights into several key performance areas: timeline and milestones (Kim & Jang, 2024), budget and resource allocation (Jiang, Fan, Zhang, & Zhu, 2023), innovation and output (Gao, Wen, & Deng, 2022), team collaboration and productivity (Tohalino & Amancio, 2022; Yoo et al., 2023).

Machine learning for explainability

The construction of relationships in SoS spans from the investigation of extrinsic connections to the elucidation of intrinsic mechanisms. Examining the interconnections between elements within a scientific system has consistently been a pivotal aspect of SoS. During the sociological analysis phase, scientific inquiry typically employs deductive reasoning to establish relationships among elements. Regression and correlation analyses are frequently utilized to decipher the associations between elements in the realm of scientometrics. Since 2010, SoS research has leveraged causal inference models from econometrics, such as Difference in Differences, Regression Discontinuity Design, Granger Causality Test, Propensity Score Matching, and Instrumental Variables (Zhao et al., 2020), along with machine learning approaches like BART (Prado, Moral, & Parnell, 2021), TMLE (Schuler & Rose, 2017), and causal forest models (Wager & Athey, 2018) to delve into intrinsic causal mechanisms.

Machine learning methods play a crucial role in addressing the causal inference demands of large-scale and high-dimensional data. These methods are adept at processing extensive datasets, predicting nonlinear and complex relationships between causes and effects, and enhancing the precision of causal inferences. Furthermore, languages such as Python and R offer a plethora of causal inference packages, including Random Forest, XGBoost, and Super Learner, which facilitate intelligent inference of the intrinsic mechanisms governing scientific system elements within SoS research (Athey, Tibshirani, & Wager, 2019; Hill, Linero, & Murray, 2020). The application of machine learning-based causal inference is instrumental in unraveling the intricacies of complex relationships, providing deeper insights and more accurate predictions in the field of SoS research.

AI as arbiter in SoS

AI plays a significant role in the peer review process within SoS, offering the potential to enhance efficiency, reduce bias, and improve transparency. Generally speaking, the role of AI as an arbiter in peer review can be categorized into four types.

Automation of routine tasks: AI can automate many routine aspects of peer review, such as format checks, plagiarism detection, and manuscript matching. For example, tools like iThenticate help in detecting plagiarism, and Penelope.ai checks manuscript formatting (Kankanhalli, 2024). This helps to speed up the process and reduces the workload of human reviewers, allowing them to focus on more complex tasks like evaluating scientific rigor and originality. Similarly, StatReviewer and Statcheck assist with checking the statistical methods reported in papers (Nuijten & Polanin, 2020; Shanahan, 2016).

Reviewer-manuscript matching: AI tools, such as the Toronto Paper Matching System (TPMS), use machine learning algorithms to match reviewers with appropriate expertise to manuscripts (Charlin & Zemel, 2013). This process is more efficient than traditional keyword-based matching and ensures that the most suitable reviewers are selected (Kalmukov, 2020). Similarly, machine learning models have been employed by large conferences like NeurIPS to improve the accuracy of reviewer-paper matching (Kankanhalli, 2024; S. Price & Flach, 2017). By improving the quality of reviewer selection, AI helps to increase the quality and relevance of peer review feedback.

Bias mitigation: AI has the potential to reduce biases that are inherent in human peer review. Human reviewers may unintentionally introduce biases based on the author’s institution, country, or research field (Thelwall & Kousha, 2023). AI systems trained on diverse datasets can mitigate some of these biases by evaluating manuscripts based on objective criteria like novelty or methodological soundness. Recent studies have shown that AI-based tools can help assess articles more objectively, although there remain challenges in fully eliminating biases from AI algorithms (Checco, Bracciale, Loreti, Pinfield, & Bianchi, 2021).

Challenges and ethical concerns: Despite its benefits, AI in peer review also introduces concerns, particularly related to transparency and algorithmic bias. AI algorithms, especially those based on deep learning, are often “black boxes,” making it difficult for users to understand how decisions are made (Thelwall & Kousha, 2023). This raises concerns about fairness and accountability in the peer review process. Recent work has called for the development of more explainable AI models to enhance the transparency of decisions made by these systems (A & R, 2023; Vilone & Longo, 2020).

Conclusion and future work

Since the beginning of the 20th century, when the term “science of science” was introduced in Poland, SoS has witnessed over 100 years of development. From qualitative analyses of science’s social functions to large-scale quantitative approaches, such as scientometrics, the field has matured through the use of statistical methods, citation analysis, and big data. AI’s role in SoS, acting as a surrogate for data processing, a quant for data analysis, and an arbiter for peer review, marks a transformative moment in the discipline. By integrating AI into SoS, researchers can analyze vast datasets with greater precision, predict project performance, and derive actionable insights into scientific productivity and collaboration. Moving forward, AI’s capabilities will continue to reshape SoS, offering new opportunities to understand the dynamics of science and address societal challenges.

Looking into the future, after several research paradigm shifts and enlightenment, SoS’s research will open a new era of AI for SoS, facing the strategic needs and practical problems of scientific development, focusing on the political, economic, cultural, societal and other dimensions of science. First of all, it is necessary to strengthen the sense of disciplinary community in SoS, enhance the construction of disciplinary infrastructure, and cohesion of disciplinary core strength. Secondly, SoS’s research must be based on the “problem-oriented” items, especially those related to sustainable development and scientific governance. Lastly, researchers should go out of the “ivory tower” to convey valuable knowledge to the public, to expand the general public’s understanding of SoS.

Data availability

Data sharing is not applicable to this article as no datasets were generated or analysed during the current study.

References

Broader scope is key to the future of ‘science of science’. (2022). Nature Human Behaviour, 6(7), 899–900. https://doi.org/10.1038/s41562-022-01424-5

Akella AP, Alhoori H, Kondamudi PR, Freeman C, Zhou H (2021) Early indicators of scientific impact: Predicting citations with altmetrics. Journal Informetrics 15(2):101128. https://doi.org/10.1016/j.joi.2020.101128

Aljuaid H, Iftikhar R, Ahmad S, Asif M Tanvir Afzal M (2021) Important citation identification using sentiment analysis of in-text citations. Telematics and Informatics, 56. https://doi.org/10.1016/j.tele.2020.101492

Athey S, Tibshirani J, Wager S (2019) Generalized random forests. Annals Statistics 47(2):1179–1203. https://doi.org/10.1214/18-AOS1709

Beltagy I, Lo K Cohan A (2019) SciBERT: A Pretrained Language Model for Scientific Text

Bernal J D (1939) The Social Function of Science: The social function of science

Blei DM, Ng AY, Jordan MI (2003) Latent dirichlet allocation. J. Mach. Learn. Res. 3(null):993–1022

Board N S (1981) Science Indicators, 1980: Report of the National Science Board, 1981: US Government Printing Office

Bradford SC (1934) Sources of information on specific subjects. Engineering 137:85–86

Bu Y, Ding Y, Liang X, Murray DS (2018) Understanding persistent scientific collaboration. Journal Association Information Science Technology 69(3):438–448. https://doi.org/10.1002/asi.23966

Bu Y, Murray DS, Ding Y, Huang Y, Zhao Y (2018) Measuring the stability of scientific collaboration. Scientometrics 114(2):463–479. https://doi.org/10.1007/s11192-017-2599-0

Budi I, Yaniasih Y (2023) Understanding the meanings of citations using sentiment, role, and citation function classifications. Scientometrics 128(1):735–759. https://doi.org/10.1007/s11192-022-04567-4

Callon M, Courtial JP, Laville F (1991) Co-word analysis as a tool for describing the network of interactions between basic and technological research: The case of polymer chemsitry. Scientometrics 22:155–205

Cattell JM (1906) A Statistical Study of American Men of Science: The Selection of a Group of One Thousand Scientific Men. Science 24(621):658–665. https://doi.org/10.1126/science.24.621.658

Çelik M, Dadaşer-Çelik F, Dokuz A Ş (2011, 15-18 June 2011). Anomaly detection in temperature data using DBSCAN algorithm. Paper presented at the 2011 International Symposium on Innovations in Intelligent Systems and Applications

Charlin L Zemel R S (2013) The Toronto Paper Matching System: An automated paper-reviewer assignment system

Checco A, Bracciale L, Loreti P, Pinfield S, Bianchi G (2021) AI-assisted peer review. Humanities Social Sciences Communications 8(1):25. https://doi.org/10.1057/s41599-020-00703-8

Chen CM (2006) CiteSpace II: Detecting and visualizing emerging trends and transient patterns in scientific literature. Journal American Society Information Science Technology 57(3):359–377. https://doi.org/10.1002/asi.20317

Cohan A, Feldman S, Beltagy I, Downey D, Weld D S (2020) SPECTER: Document-level Representation Learning using Citation-informed Transformers

Devlin J, Chang M-W, Lee K, Toutanova K (2018) BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding

Van Eck N, Waltman L (2010) Software survey: VOSviewer, a computer program for bibliometric mapping. Scientometrics 84(2):523–538

Van Eck NJ, Waltman L (2014) CitNetExplorer: A new software tool for analyzing and visualizing citation networks. Journal Informetrics 8(4):802–823

Edwards KA, Acheson-Field H, Rennane S, Zaber MA (2023) Mapping scientists’ career trajectories in the survey of doctorate recipients using three statistical methods. Scientific Reports 13(1):8119. https://doi.org/10.1038/s41598-023-34809-1

Egghe, L & Rousseau R (1990) Introduction to informetrics. Quantitative methods in library, documentation and information science: Elsevier Science Publishers

Fernando T, Denman S, Sridharan S, Fookes C (2018) Soft + Hardwired attention: An LSTM framework for human trajectory prediction and abnormal event detection. Neural Networks 108:466–478. https://doi.org/10.1016/j.neunet.2018.09.002

Fortunato S, Bergstrom C T, Börner K, Evans J A, Helbing D, Milojević S, … Barabási A L (2018) Science of science. Science, 359 (6379). https://doi.org/10.1126/science.aao0185

Galton F (1874) English Men of Science; their Nature and Nurture, 1st ed.). Routledge, London

Galton F (1886) Regression Towards Mediocrity in Hereditary Stature. Journal Anthropological Institute Great Britain Ireland 15:246–263. https://doi.org/10.2307/2841583

Galton F (1891) Hereditary genius: D. Appleton

Gao Q, Wen T, Deng Y (2022) A novel network-based and divergence-based time series forecasting method. Inf. Sci. 612(C):553–562. https://doi.org/10.1016/j.ins.2022.08.120

Garfield E (1955) Citation indexes for science: A new dimension in documentation through association of ideas. Science 122(3159):108–111

Garfield E (1965) Can citation indexing be automated. Paper presented at the Statistical association methods for mechanized documentation, symposium proceedings

Garfield E (2006) The History and Meaning of the Journal Impact Factor. jama 295(1):90–93. https://doi.org/10.1001/jama.295.1.90

Garfield E Malin M V (1968) Can Nobel Prize winners be predicted. Paper presented at the 135th meetings of the American Association for the Advancement of Science, Dallas, TX

Gates AJ, Ke Q, Varol O, Barabási AL (2019) Nature’s reach: narrow work has broad impact. Nature 575(7781):32–34. https://doi.org/10.1038/d41586-019-03308-7

Ghawi R, Müller S, Pfeffer J (2021) Improving team performance prediction in MMOGs with temporal communication networks. Social Network Analysis Mining 11(1):65. https://doi.org/10.1007/s13278-021-00775-7

Giannakas F, Troussas C, Krouska A, Sgouropoulou C, Voyiatzis I (2022) Multi-technique comparative analysis of machine learning algorithms for improving the prediction of teams’ performance. Education Information Technologies 27(6):8461–8487. https://doi.org/10.1007/s10639-022-10900-4

Glänzel W Schubert A (2004) Analysing scientific networks through co-authorship. In Handbook of quantitative science and technology research: The use of publication and patent statistics in studies of S&T systems (pp. 257-276): Springer

Granovsky YV (2001) Is It Possible to Measure Science? V. V. Nalimov’s Research in Scientometrics. Scientometrics 52(2):127–150. https://doi.org/10.1023/A:1017991017982

Hill J, Linero A, Murray J (2020) Bayesian Additive Regression Trees: A Review and Look Forward. Annual Review Statistics Its Application 7(1):251–278. https://doi.org/10.1146/annurev-statistics-031219-041110

Hirsch JE (2005) An index to quantify an individual’s scientific research output. Proceedings National Academy Sciences USA 102(46):16569–16572. https://doi.org/10.1073/pnas.0507655102

Huang J, Gates AJ, Sinatra R, Barabási AL (2020) Historical comparison of gender inequality in scientific careers across countries and disciplines. Proceedings National Academy Sciences USA 117(9):4609–4616. https://doi.org/10.1073/pnas.1914221117

Ienna G, Rispoli G. The 1931 London Congress: The Rise of British Marxism and the Interdependencies of Society, Nature and Technology. HoST - Journal of History of Science and Technology, 2021, Ludus Association, 15(1):107–130. https://doi.org/10.2478/host-2021-0005

Iqbal S, Hassan S-U, Aljohani NR, Alelyani S, Nawaz R, Bornmann L (2021) A decade of in-text citation analysis based on natural language processing and machine learning techniques: an overview of empirical studies. Scientometrics 126(8):6551–6599. https://doi.org/10.1007/s11192-021-04055-1

Jia T, Wang D, Szymanski BK (2017) Quantifying patterns of research-interest evolution. Nature Human Behaviour 1(4):0078. https://doi.org/10.1038/s41562-017-0078

Jiang H, Fan S, Zhang N, Zhu B (2023) Deep learning for predicting patent application outcome: The fusion of text and network embeddings. Journal Informetrics 17(2):101402. https://doi.org/10.1016/j.joi.2023.101402

Kalmukov Y (2020) An algorithm for automatic assignment of reviewers to papers. Scientometrics 124(3):1811–1850. https://doi.org/10.1007/s11192-020-03519-0

Kankanhalli A (2024) Peer Review in the Age of Generative AI. Journal Association Information Systems 25(1):76–84. https://doi.org/10.17705/1jais.00865

Kim H, Jang H (2024) Predicting research projects’ output using machine learning for tailored projects management. Asian Journal Technology Innovation 32(2):346–363. https://doi.org/10.1080/19761597.2023.2243611

Kingsley Zipf G (1932) Selected studies of the principle of relative frequency in language: Harvard university press

Kong L, Zhang W, Hu H, Liang Z, Han Y, Wang D, Song M (2024) Transdisciplinary fine-grained citation content analysis: A multi-task learning perspective for citation aspect and sentiment classification. Journal of Informetrics 18(3). https://doi.org/10.1016/j.joi.2024.101542

Kormilitzin A, Vaci N, Liu Q, Nevado-Holgado A (2021) Med7: A transferable clinical natural language processing model for electronic health records. Artificial Intelligence Medicine 118:102086. https://doi.org/10.1016/j.artmed.2021.102086

Kuhn T S (1962) The Structure of Scientific Revolutions. Chicago: The structure of scientific revolutions

Lee Rodgers J, Nicewander WA (1988) Thirteen Ways to Look at the Correlation Coefficient. American Statistician 42(1):59–66. https://doi.org/10.1080/00031305.1988.10475524

Li W, Haunert J-H, Knechtel J, Zhu J, Zhu Q, Dehbi Y (2023) Social media insights on public perception and sentiment during and after disasters: The European floods in 2021 as a case study. Transactions GIS 27(6):1766–1793. https://doi.org/10.1111/tgis.13097

Lin Y, Frey CB, Wu L (2023) Remote collaboration fuses fewer breakthrough ideas. Nature 623(7989):987–991. https://doi.org/10.1038/s41586-023-06767-1

Lin Z, Yin Y, Liu L Wang D (2023) SciSciNet: A large-scale open data lake for the science of science research. Scientific Data 10(1). https://doi.org/10.1038/s41597-023-02198-9

Liu L, Dehmamy N, Chown J, Giles C L Wang D (2021) Understanding the onset of hot streaks across artistic, cultural, and scientific careers. Nature Communications 12(1). https://doi.org/10.1038/s41467-021-25477-8

Liu L, Wang Y, Sinatra R, Giles CL, Song C, Wang D (2018) Hot streaks in artistic, cultural, and scientific careers. Nature 559(7714):396–399. https://doi.org/10.1038/s41586-018-0315-8

Liu X, Gao K, Liu B, Pan C, Liang K, Yan L, Yu Y (2021) Advances in Deep Learning-Based Medical Image Analysis. Health Data Science 2021:8786793. https://doi.org/10.34133/2021/8786793

Liu Y, Rousseau R (2010) Knowledge diffusion through publications and citations: A case study using ESI-fields as unit of diffusion. Journal American Society Information Science Technology 61(2):340–351. https://doi.org/10.1002/asi.21248

Lotka AJ (1926) The frequency distribution of scientific productivity. Journal Washington academy sciences 16(12):317–323

Merton R K (1973) The sociology of science: Theoretical and empirical investigations: University of Chicago press

Messeri L, Crockett MJ (2024) Artificial intelligence and illusions of understanding in scientific research. Nature 627(8002):49–58. https://doi.org/10.1038/s41586-024-07146-0

Mikolov T, Chen K, Corrado G S Dean J (2013) Efficient Estimation of Word Representations in Vector Space. Proceedings of Workshop at ICLR, 2013

Musso MF, Hernández CFR, Cascallar EC (2020) Predicting key educational outcomes in academic trajectories: a machine-learning approach. Higher Education 80(5):875–894. https://doi.org/10.1007/s10734-020-00520-7

Nalimov V & Mul’chenko Z (1969) Naukometriya, the study of the development of science as an information process in Russian. In: Nauka, Moscow

Neumann M, King D, Beltagy I, Ammar W (2019) ScispaCy: Fast and robust models for biomedical natural language processing. Paper presented at the BioNLP 2019 - SIGBioMed Workshop on Biomedical Natural Language Processing, Proceedings of the 18th BioNLP Workshop and Shared Task

Nigel Gilbert G (1977) Referencing as persuasion. Social studies science 7(1):113–122

Nuijten MB, Polanin JR (2020) “statcheck”: Automatically detect statistical reporting inconsistencies to increase reproducibility of meta-analyses. Research Synthesis Methods 11(5):574–579. https://doi.org/10.1002/jrsm.1408

Parolo PDB, Pan RK, Ghosh R, Huberman BA, Kaski K, Fortunato S (2015) Attention decay in science. Journal Informetrics 9(4):734–745. https://doi.org/10.1016/j.joi.2015.07.006

Peng B, Wei Y, Qin Y, Dai J, Li Y, Liu A, Wen P (2023) Machine learning-enabled constrained multi-objective design of architected materials. Nature Communications 14(1):6630. https://doi.org/10.1038/s41467-023-42415-y

Persson O, Danell R, Schneider JW (2009) How to use Bibexcel for various types of bibliometric analysis. Celebrating scholarly communication studies: A Festschrift Olle Persson his 60th Birthday 5:9–24

Plehiers P P, Coley C W, Gao H, Vermeire F H, Dobbelaere M R, Stevens C V, … Green W H (2020) Artificial Intelligence for Computer-Aided Synthesis In Flow: Analysis and Selection of Reaction Components. Paper presented at the Frontiers in Chemical Engineering

Porter A, Chubin D (1985) An indicator of cross-disciplinary research. Scientometrics 8(3-4):161–176

Prado EB, Moral RA, Parnell AC (2021) Bayesian additive regression trees with model trees. Statistics Computing 31(3):20. https://doi.org/10.1007/s11222-021-09997-3

Price DDS (1976) A general theory of bibliometric and other cumulative advantage processes. Journal American Society Information Science 27:292–306

Price D J D S (1963) Little science, big science: Columbia University Press

Price S, Flach PA (2017) Computational support for academic peer review: a perspective from artificial intelligence. Commun. ACM 60(3):70–79. https://doi.org/10.1145/2979672

Van Raan AFJ (2004) Sleeping Beauties in science. Scientometrics 59(3):467–472. https://doi.org/10.1023/B:SCIE.0000018543.82441.f1

Reimers N, Gurevych I (2019) Sentence-BERT: Sentence Embeddings using Siamese BERT-Networks. Paper presented at the Proceedings of the 2019 Conference on Empirical Methods in Natural Language Processing and the 9th International Joint Conference on Natural Language Processing (EMNLP-IJCNLP)

Sankar CP, Thumba DA, Ramamohan TR, Chandra SSV, Satheesh Kumar K (2020) Agent-based multi-edge network simulation model for knowledge diffusion through board interlocks. Expert Systems Applications 141:112962. https://doi.org/10.1016/j.eswa.2019.112962

Saranya A, Subhashini R (2023) A systematic review of Explainable Artificial Intelligence models and applications: Recent developments and future trends. Decision Analytics Journal 7:100230. https://doi.org/10.1016/j.dajour.2023.100230

Schubert E Gertz M (2017, 2017//). Intrinsic t-Stochastic Neighbor Embedding for Visualization and Outlier Detection. Paper presented at the Similarity Search and Applications, Cham

Schuler MS, Rose S (2017) Targeted Maximum Likelihood Estimation for Causal Inference in Observational Studies. American Journal Epidemiology 185(1):65–73. https://doi.org/10.1093/aje/kww165

Shanahan D (2016) A peerless review? Automating methodological and statistical review. Retrieved from https://blogs.biomedcentral.com/bmcblog/2016/05/23/peerless-review-automating-methodological-statistical-review/

Shneider AM (2009) Four stages of a scientific discipline; four types of scientist. Trends Biochemical Sciences 34(5):217–223. https://doi.org/10.1016/j.tibs.2009.02.002

Singh A, D’Arcy M, Cohan A, Downey D & Feldman S (2022) SciRepEval: A Multi-Format Benchmark for Scientific Document Representations. Paper presented at the Conference on Empirical Methods in Natural Language Processing

Small H (1973) Co-citation in the scientific literature: A new measure of the relationship between two documents. Journal American Society Information Science 24(4):265–269. https://doi.org/10.1002/asi.4630240406

Small HG (1978) Cited documents as concept symbols. Social Studies Science 8(3):327–340

De Solla Price, D J (1975) Science since Babylon (300017987): Yale University Press New Haven

Sugimoto CR (2021) Scientific success by numbers. Nature 593(7857):30–31. https://doi.org/10.1038/d41586-021-01169-7

Thelwall M Kousha K (2023) Technology assisted research assessment: algorithmic bias and transparency issues. Aslib Journal of Information Management. https://doi.org/10.1108/AJIM-04-2023-0119

Tohalino JAV, Amancio DR (2022) On predicting research grants productivity via machine learning. Journal Informetrics 16(2):101260. https://doi.org/10.1016/j.joi.2022.101260

Vilone G Longo L (2020) Explainable Artificial Intelligence: a Systematic Review. arXiv:2006.00093. https://doi.org/10.48550/arXiv.2006.00093

Wager S, Athey S (2018) Estimation and inference of heterogeneous treatment effects using random forests. Journal American Statistical Association 113(523):1228–1242. https://doi.org/10.1080/01621459.2017.1319839

Wang Y, Jones B F Wang D (2019) Early-career setback and future career impact. Nature Communications, 10(1). https://doi.org/10.1038/s41467-019-12189-3

Wu L, Wang D, Evans JA (2019) Large teams develop and small teams disrupt science and technology. Nature 566(7744):378–382. https://doi.org/10.1038/s41586-019-0941-9

Xiao P (2011) A Review of the Scientific Information Conference in1958and Its Historical Significance. Information Documentation Services 32(05):27–31. https://kns.cnki.net/kcms2/article/abstract?v=tJ8vF22QX-oThckOgSpWscVgn4pI6CnfHyStwZgkqDBM9eTTx3dRS-2uoSBXiEUdi8FmAtMG1RQUiwLPBWyWNzBYdYuG6-8jtA6v8v9Lj462jyvWhDdPPXbmMGI8OjUH2_wvB4YFo-k=&uniplatform=NZKPT&language=CHS

Xu F, Wu L, Evans J (2022) Flat teams drive scientific innovation. Proceedings of the National Academy of Sciences of the United States of America, 119(23). https://doi.org/10.1073/pnas.2200927119

Xue J, Chen J, Chen C, Zheng C, Li S, Zhu T (2020) Public discourse and sentiment during the COVID 19 pandemic: Using Latent Dirichlet Allocation for topic modeling on Twitter. PLoS ONE 15(9):e0239441. https://doi.org/10.1371/journal.pone.0239441

Yang Y, Chawla NV, Uzzi B (2019) A network’s gender composition and communication pattern predict women’s leadership success. Proceedings National Academy Sciences USA 116(6):2033–2038. https://doi.org/10.1073/pnas.1721438116

Yin Y, Gao J, Jones BF, Wang D (2021) Coevolution of policy and science during the pandemic. Science 371(6525):128–130. https://doi.org/10.1126/science.abe3084

Yoo HS, Jung YL, Jun S-P (2023) Prediction of SMEs’ R&D performances by machine learning for project selection. Scientific Reports 13(1):7598. https://doi.org/10.1038/s41598-023-34684-w

Yu H, Yang LT, Zhang Q, Armstrong D, Deen MJ (2021) Convolutional neural networks for medical image analysis: State-of-the-art, comparisons, improvement and perspectives. Neurocomputing 444:92–110. https://doi.org/10.1016/j.neucom.2020.04.157

Zeng A, Shen Z S, Zhou J L, Fan Y, Di Z R, Wang Y G, … Havlin S (2019). Increasing trend of scientists to switch between topics. Nature Communications, 10. https://doi.org/10.1038/s41467-019-11401-8

Zeng T, Acuna DE (2020) Modeling citation worthiness by using attention-based bidirectional long short-term memory networks and interpretable models. Scientometrics 124(1):399–428. https://doi.org/10.1007/s11192-020-03421-9

Zhang X, Wang L, Helwig J, Luo Y, Fu C, Xie Y, … Ji S (2023). Artificial Intelligence for Science in Quantum, Atomistic, and Continuum Systems

Zhang X-h, Qian K, Ren J-j, Jia Z-p, Jiang T-p, Zhang Q (2019) Measurement and analysis of content diffusion characteristics in opportunity environmentswith Spark. Frontiers Information Technology Electronic Engineering 20(10):1404–1414. https://doi.org/10.1631/FITEE.1900137

Zhao Z, Bu Y, Kang L, Min C, Bian Y, Tang L, Li J (2020) An investigation of the relationship between scientists’ mobility to/from China and their research performance. Journal Informetrics 14(2):101037. https://doi.org/10.1016/j.joi.2020.101037

Acknowledgements

This research were supported by the Natural Science Foundation of Guangdong Province, China (No. 2021A1515012291).

Author information

Authors and Affiliations

Contributions

JH: design of the work, and supervised the development of the research; BZ: literature collection, wrote and revised the manuscript; HL: wrote and revised the manuscript; WL: wrote the manuscript. All authors contributed to manuscript revision and approved the final manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethical approval

Ethical approval was not required as the study did not involve human participants.

Informed consent

Informed consent was not required as the study did not involve human participants.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Hou, J., Zheng, B., Li, H. et al. Evolution and impact of the science of science: from theoretical analysis to digital-AI driven research. Humanit Soc Sci Commun 12, 316 (2025). https://doi.org/10.1057/s41599-025-04617-1

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1057/s41599-025-04617-1