Abstract

This paper presents the results of a novel scoping review on the practical models for generating three different types of synthetic health records (SHRs): medical text, time series, and longitudinal data. The innovative aspects of the review, which incorporate study objectives, data modality, and research methodology of the reviewed studies, uncover the importance and the scope of the topic for the digital medicine context. In total, 52 publications met the eligibility criteria for generating medical time series (22), longitudinal data (17), and medical text (13). Privacy preservation was found to be the main research objective of the studied papers, along with class imbalance, data scarcity, and data imputation as the other objectives. The adversarial network-based, probabilistic, and large language models exhibited superiority for generating synthetic longitudinal data, time series, and medical texts, respectively. Finding a reliable performance measure to quantify SHR re-identification risk is the major research gap of the topic.

Introduction

Application of digital technologies, e.g., artificial intelligence, to improve medical management, patient outcomes, and healthcare delivery, is known as digital medicine1,2. This context has significantly evolved towards intelligent decision-making after the development of deep learning (DL) models. A common feature of most DL models is the need for a large dataset for training and validation of the models. Preparing a large dataset that incorporates sufficient samples of various classes for training a DL model is sometimes problematic. This is particularly evident when it comes to medical data in which the privacy of patient data demands serious attention.

Social and medical communities impose increasingly strict regulations at different societal levels to prevent the misuse of patient data. In this light, the European Union has recently released the first regulation3 on artificial intelligence towards lowering the risk of data abuse and further governance on the developers. Such restrictions, together with the difficulties in collecting medical data, impede the preparation of a sufficiently large and fair dataset for training and validation of the DL models. Medical data is nowadays stored digitally in the Electronic Health Record (EHR), which is composed of a set of the health-related data collected from the individuals of a population during their visits to any care unit defined by the healthcare system of the population4. An EHR typically contains demographic data, clinical findings, lab values, procedures, medications, symptoms, diagnoses, medical images, physiological signals, and descriptive texts obtained from the individuals during the visits. During a visit to a care unit, and depending on the visit, an individual may undergo a sequence of investigations and/or examinations, called events, which are stored in the EHR of the individual using globally known codes, e.g., the International Classification of Diseases (ICD)5 for the diagnosis. A sequence of the tabular health records of an individual, resulting from several visits, constitutes longitudinal health data6,7.
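The structure of longitudinal health data described above can be illustrated with a minimal Python sketch. The class names (`Event`, `Visit`, `PatientRecord`), the field names, and the placeholder codes are assumptions made for illustration only; they do not correspond to any EHR standard or to the schema of any reviewed study.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import List


@dataclass
class Event:
    """One coded investigation or examination recorded during a visit."""
    code_system: str   # e.g. "ICD" for diagnoses (illustrative label)
    code: str          # placeholder code value, not a real clinical code
    description: str = ""


@dataclass
class Visit:
    """A single visit to a care unit together with its events."""
    visit_date: date
    events: List[Event] = field(default_factory=list)


@dataclass
class PatientRecord:
    """Longitudinal record: the visits of one individual in time order."""
    patient_id: str
    visits: List[Visit] = field(default_factory=list)

    def add_visit(self, visit: Visit) -> None:
        # Keep visits sorted by date so the record stays longitudinal.
        self.visits.append(visit)
        self.visits.sort(key=lambda v: v.visit_date)

    def code_sequence(self) -> List[List[str]]:
        """Return the codes of each visit, visit by visit."""
        return [[e.code for e in v.events] for v in self.visits]


record = PatientRecord(patient_id="p001")
record.add_visit(Visit(date(2021, 5, 2), [Event("ICD", "CODE_B")]))
record.add_visit(Visit(date(2020, 1, 15),
                       [Event("ICD", "CODE_A"), Event("LAB", "CODE_L")]))
print(record.code_sequence())  # [['CODE_A', 'CODE_L'], ['CODE_B']]
```

In this sketch, a generative model for longitudinal SHR would have to produce the whole nested sequence rather than a single flat row, which is one reason why the modality is treated separately in the taxonomy.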

Accessibility of the EHR can be of vital importance when it comes to patient management, and hence the highest level of security is applied to preserve the privacy of EHRs. Healthcare systems set intensive restrictions and limitations for accessing EHR contents. As a result, preparing a rich clinical dataset is considered an important research question for any study in the digital medicine context. A practical solution to this research question is to create a synthetic copy of the clinical dataset from the real one, and use it for research purposes. The created dataset, which is called a synthetic health record (SHR), can be utilized and shared with researchers, instead of the real one, for the training and validation of DL models. An SHR is created to resemble the general characteristics of EHR data while ensuring the subjects of the EHR remain unidentifiable8,9,10. Such datasets can be employed by any machine learning method for training and optimization purposes.

Health records can incorporate tabular data of the medical records, longitudinal tables of different visits, time series of physiological signals, textual clinical data, and medical images. Recent progress in developing DL models for generating various modalities of synthetic data enabled researchers to address the diverse research gaps that existed in the preparation of high-quality health data. Topics such as privacy leakage, limited data availability, uneven class distribution, and scattered data, are regarded as some of the research topics of this domain.

Despite substantial progress in developing generative models for synthetic medical data, there is still considerable room for studying the reliability of the generated data. As we will see in the discussion section, the reliability of the models is explored from two different perspectives: the quality of generated data in terms of conformity with real data, and the capability of learning models to preserve the privacy of the real data in terms of re-identification. Discrepancies in the study objectives for various data modalities, and inconsistencies in the evaluation metrics, make it a complicated task to select an appropriate model for creating SHR along with a realistic evaluation of the model.

This paper presents the results of a scoping review study on the existing DL models for generating SHRs. The review examines the DL models deployed, the modalities utilized, the datasets invoked, and the metrics employed by the researchers to explore the scope and potential of using SHR for different medical objectives. The study taxonomy is performed in both technical and applicative manners to represent the relevance of the models in conjunction with their capabilities in producing time series, text, and longitudinal SHR. The main study objectives are the identification of the practical capabilities and the knowledge gaps of generative models for creating SHR, which are detailed as follows:

- Finding the state of the art of generative models for creating synthetic medical texts, time series, and longitudinal data, along with the methodological limitations.
- Summarizing the existing performance measures in conjunction with the related metrics for evaluating the quality of SHR.
- Listing the datasets most used by the researchers for generating SHR.
- Finding the key research gaps of the field.

The unique features of this study are mainly the comprehensiveness of the review and the novel research taxonomy. As we will see in the discussion section, this paper introduces innovative features compared to the other review papers. These features initiate the following contributions to the field:

- Introducing taxonomic novelty by defining various data modalities and applications. There are review papers on SHR for tabular and image data; however, less attention has been paid to important topics such as medical texts and longitudinal data.
- Evaluation of different machine learning (ML) methods for generating various forms of SHR.
- Representing the performance measures and the evaluation metrics used for validating the quality of SHR in association with the related methodological capabilities for evaluating different data modalities.
- Introducing available datasets for generating SHR in conjunction with the related applicability per the study taxonomy.

It is worth noting that the number of publications in the SHR domain has drastically increased in the last two years. The novel aspects of this study project the scope and the possibilities of state-of-the-art methods in this new domain of digital medicine.

Results

Overview of the findings

Figure 1a illustrates an overview of the identified publications. In total, 3740 citations resulted from the bibliographic search in PubMed (n = 352), Scopus (n = 2798), and Web of Science (n = 590) in the Identification phase, of which 935 were excluded as duplicates. In the Screening phase, after carefully reading the Title and the Abstract of the publications, 2692 publications were filtered out because of topical irrelevance. In the Eligibility phase, 52 publications fulfilled the inclusion and exclusion criteria and were ultimately included in the study (PubMed = 27, Scopus = 19, and Web of Science = 6). Notably, half of the eligible publications (n = 26) were published after 2022. Additionally, we included the related review or survey papers published between 2022 and 2023 (Table 4). All of the included review papers were articles in peer-reviewed journals.
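As a consistency check, the counts reported above can be tallied in a few lines of Python. The input numbers are those stated in the text; the intermediate values (records remaining after de-duplication and after screening) are derived here and are not reported in the text, so they should be read as arithmetic only.

```python
# Counts reported in the text for each phase of the selection process.
identified = {"PubMed": 352, "Scopus": 2798, "Web of Science": 590}
duplicates_removed = 935
screened_out = 2692
included = {"PubMed": 27, "Scopus": 19, "Web of Science": 6}

total_identified = sum(identified.values())
after_deduplication = total_identified - duplicates_removed
# Derived value: records left for the Eligibility phase (not stated in the text).
after_screening = after_deduplication - screened_out
total_included = sum(included.values())

print(total_identified)     # 3740
print(after_deduplication)  # 2805
print(after_screening)      # 113
print(total_included)       # 52
```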

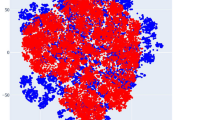

Figure 2 represents an overview of the findings. Methods based on generative adversarial networks (GANs) were predominantly employed for generating medical time series, and much less often for generating the longitudinal and text modalities. The diffusion model was used only for the time series modality. Although large language models (LLMs) have been well received mainly for generating synthetic texts, their application in generating longitudinal data showed promising results. The variational auto-encoder (VAE) method was used in a minority of the studies, equally for generating longitudinal and text data, but not for time series. The probabilistic models, e.g., Bayesian networks, were mostly used for longitudinal data, and only marginally for time series.

This Figure shows an overview of the findings in the paper. In summary, generative adversarial networks (GANs) were dominantly employed for generating medical time series. Large language models (LLMs) have been widely used for generating synthetic texts. The variational auto-encoder (VAE) method was employed in a minority of the studies, equally for generating longitudinal and text data, but it was not used for time series. Probabilistic models were dominantly utilized for longitudinal data.

Medical time series data

Generation of synthetic physiological time series was observed in 22 reports (42%) of the published peer-reviewed papers, among which the synthetic electrocardiogram was the most common case study (10 studies). The electroencephalogram was the second most common topic, where the diffusion model resulted in the optimal utility11. Data scarcity and privacy were found to be the main objectives of 12 and 6 studies, respectively. Class imbalance and imputation constituted the objectives of the remaining 4 studies. A great majority of the studies relied on various forms of GAN-based methods, whereas statistical models, such as the diffusion model and the hidden Markov model, were seen in a minority of 10% of the studies. Table 1 lists the findings of the survey on the generative models for synthetic time series.

Medical longitudinal data

Table 2 lists the survey’s findings on the generative models for synthetic longitudinal data. As can be seen, privacy is the main objective of 16 of the 17 studies, with case studies comprising kidney diseases, patients with hearing loss, Parkinson’s and Alzheimer’s diseases, chronic heart failure, diabetes, hypertension, and hospital admissions. In the great majority of the studies, GAN-based methods were reported as the optimal model, outperforming the baselines.

Medical text data

Medical texts are an important part of an EHR, reflecting the medical assessments and decisions. Reading these texts can put the underlying EHR at risk of identification, and therefore privacy is regarded as an important objective when it comes to the SHR. This was confirmed by our study, as privacy was the objective of 9 of the 12 studies that participated in this survey, with various case studies. Table 3 lists the findings of the survey on the generative models for synthetic text data.

In contrast with the synthetic health time series generation where the proposed models were dominantly based on the GAN, GPT-style models are employed in approximately 40% of studies, surpassing individual usage rates of other generative methods. This is justifiable considering the versatility of GPT models. Such elaborative capabilities of GPT provided the ability to generate synthetic medical texts in different languages, spanning from far eastern countries, e.g., Chinese, to European countries, e.g., Dutch, and English (see the case studies in Table 3). Nevertheless, the GAN-based models were seen in some studies.

Discussion

The need for a rich dataset for training ML methods on the one hand, and the difficulties in collecting patient data, e.g., privacy issues, on the other hand, make the generation of SHR a practical strategy. This is subjected to a high level of security in terms of re-identification along with acceptable fidelity. Countries adopt different regulations that intensively restrict the sharing of patient data, which is sometimes administered in a federated way. The use of SHR allows sharing of data, which can be employed by researchers to develop advanced ML methods for different medical applications, where access to the real data is problematic. Another application of SHR is the cases in which a heavy class imbalance negatively affects the learning process. In such cases, generative models are employed to create synthetic medical data for the minority classes. This is different from data augmentation where the minority data is reproduced, as the statistical distribution of the data is taken into account for generating SHRs.

Several surveys and review studies have been previously conducted on different models for generating SHRs. However, a comprehensive study on this topic with sufficient pervasiveness to explore different aspects of the studies, cannot be found in the existing literature, from a practical perspective. In addition, unlike other review papers (Table 4), ours covers more data modalities and DL models, thereby providing readers with novel perspectives on the topic. The outcomes of such a pervasive study can unveil practical limitations and bottlenecks of the existing methodologies to choose an appropriate model for such a demanding application.

This study provided a scoping review of the most popular generative models for producing SHRs. Our analysis showed that researchers employed GAN-based models more often than the alternatives for generating synthetic time series (see Supplementary Note 1). In addition to the GANs, the probabilistic models were widely used for generating synthetic longitudinal data. However, several studies reported that GAN-based models generally suffered from (i) the mode collapse issue12,13,14,15,16, (ii) requiring preliminary experiments to identify optimal hyperparameters, and (iii) having biases towards high-density classes11. Diffusion models demonstrated promising results in synthesizing time series compared to GANs. Nevertheless, resolving the expensive computational costs and interoperability difficulties of diffusion models is considered an ongoing research endeavor. Finally, current works could gain significant advantages by integrating domain-specific expertise from physicians into the learning process17,18.

Generating synthetic clinical notes is a less explored area in the literature. Recent advancements of LLMs19,20,21 have demonstrated significant improvements in generating synthetic clinical notes. Nevertheless, LLMs require massive processing power (ref. 21 leveraged 560 A100 GPUs for 20 days to train the LLM). Furthermore, refs. 22,23 reported that LLMs struggle with complex reasoning problems. Non-reasoned outputs for generating synthetic clinical notes lacked coherence, consistency, and certainty22. Chain-of-Thought prompting24 stood out as a leading method aimed at improving complex reasoning capabilities through intermediate reasoning steps. While Chain-of-Thought has shown promising results, its effectiveness in improving the reasoning of LLMs for complex multi-modal inputs and for tasks requiring compositional generalization remains an unresolved problem22. Despite the success of generative models, tuning effort was sometimes required to achieve optimal performance25. It is worth mentioning that, despite the success of the studied papers in generating SHRs, the reproducibility of the results of several studies is in question because (i) the implementation code is not available, and (ii) details of the training hyper-parameters have not been reported.

Generating SHR necessitates real datasets to train the generative model, and the quality of the training data accessible defines the caliber of synthetic data4,26,27,28,29,30. The EHRs collected at healthcare sites are usually multi-dimensional and longitudinal datasets, recording patient history over multiple visits. However, secondary use of this data is restricted by privacy laws31,32,33; nevertheless, there are several de-identified datasets available for generating synthetic data. The popular databases for generating SHRs used by eligible publications of this review study can be found in Supplementary Table 1. Our findings show that despite the existence of public datasets available for generating SHRs, the majority of public longitudinal medical datasets primarily focus on ICU records, prioritizing acute patient cases and overlooking non-acute medical conditions. Furthermore, these public datasets often lack a comprehensive representation of all demographic groups and geographic regions, which limits their relevance and generalizability to broader populations. Finally, it is worth noting that most longitudinal records are reported in English, underscoring the current shortage of public resources across diverse languages.

One of the objectives of this survey was to help researchers find an optimal generative model for SHR among a great variety of existing ones, which in turn demanded a set of objective performance measures for comparison. Various statistical and intuitive metrics have been employed for comparing the performance of generative models which made choosing an optimal model for a case study complicated. In addition, some of the metrics are based on the discrimination power of the physicians11,17,34,35, whereas some others rely on the performance of a benchmark binary classifier to distinguish between SHR and EHR8,36. Supplementary Note 2 elaborates further on the techniques used for evaluating SHRs, while Supplementary Table 2 compiles the metrics that the included papers employed to assess the generated synthetic data. An important challenge, observed in this study is the lack of generic methods and metrics for comparing the performance of different generative models. Figure 3 represents the distribution of the three performance measurement objectives: fidelity, utility, and re-identification, over the data modalities. As can be seen, generating high-fidelity SHRs appears to be the main objective for all data modalities. It is implied from Fig. 3 that introducing appropriate performance measures to address the privacy of SHR is a contemporary research gap due to the shortage of pertinent studies. This is the case for the utility when it comes to the longitudinal SHR.

Statistical diversity of the data has been recently introduced as a measure for comparing the utility and fidelity of SHRs28,37. Furthermore, fairness of SHR was also defined as another comparative objective for the performance measures38. These two measures were not widely accepted by the researchers according to the citation records. All in all, there are no best-established systematic criteria or practices on how to evaluate SHRs.

Generative models for time series prediction showed promising results in various medical applications for classification and identification problems39,40. This topic was indeed a starting point for creating SHR, as was previously reported by the reviewed studies. Recent scientific endeavors revealed that the application of SHR is not limited to the privacy of patient data, but can be extended towards statistical planning for clinical trials41 and, furthermore, towards addressing ethical issues42 by mitigating bias in the original dataset (e.g., black patients were less likely to be admitted to cardiology for heart failure care43). In terms of methodology, the recent trend of the practical models for the creation of SHR shows a shift from the GAN-based methods to the statistical models such as graph neural networks and diffusion models9,11, and data fairness was addressed by one of the recent studies38.

For further reading, we recommend the following key papers that complement our work and provide a deeper understanding of the subject of generating SHR. Ref. 27 demonstrated that the evaluation metrics currently available for generic LLMs lack an understanding of medical and health-related concepts, which aligns closely with the findings of our study. In addition, the authors introduced a comprehensive collection of LLM-based metrics tailored for the evaluation of healthcare chat-bots from an end-user perspective. Social determinants of health (SDoH) encompass the conditions of individuals’ lives, influenced by the distribution of resources and power at various levels, and are estimated to contribute to 80–90% of modifiable factors affecting health outcomes44. However, documentation of SDoH is often incomplete in the structured data of EHRs. In ref. 44, the authors extracted six categories of SDoH from the EHR using LLMs to support research and clinical care. To address class imbalance and fine-tune the extraction model, the authors leveraged an LLM to generate synthetic SDoH data.

Methods

Definitions

The methodological contents of the reviewed papers addressed different study objectives, identified by their applicative terms. The main objectives of the introduced methods are summarised as follows:

- Privacy: Reliable SHRs can be generated based on patient data to be utilized for training and validation of ML methods.
- Class Imbalance: In many applications of health studies, access to different classes of data is not feasible in a consistent form, and a single class is dominantly seen in the study population. This can introduce bias to ML methods towards better learning of the dominant class. A reliable SHR can be invoked to generate synthetic data for minority classes.
- Data Scarcity: Access to data of a specific class can sometimes be problematic. In this case, the scarce class is identified and modeled using the absolute minority samples along with meta-learning methods. The SHR is generated based on the identified model to explore the characteristics of the scarce class.
- Data Imputation: EHR is heavily sparse with missing values, which are not uniformly obtained over the visits. Data imputation implies the methods to estimate the missing values that happened systematically or randomly in data collection.
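The class-imbalance objective in the list above can be sketched with a deliberately simple generator. The example below fits an independent Gaussian to each feature of the minority class and samples new rows until the classes are balanced. This is an illustrative assumption, not a method taken from the reviewed studies: it ignores correlations between features, and all data values and function names are hypothetical.

```python
import random
import statistics


def fit_gaussian_per_feature(rows):
    """Estimate the mean and standard deviation of every feature column."""
    columns = list(zip(*rows))
    return [(statistics.mean(c), statistics.stdev(c)) for c in columns]


def sample_synthetic(params, n, rng):
    """Draw n synthetic rows from the fitted independent Gaussians."""
    return [[rng.gauss(mu, sigma) for mu, sigma in params] for _ in range(n)]


rng = random.Random(0)
# Toy data with two features per sample (hypothetical, not real patient data).
majority = [[rng.gauss(0.0, 1.0), rng.gauss(5.0, 1.0)] for _ in range(200)]
minority = [[rng.gauss(3.0, 0.5), rng.gauss(1.0, 0.5)] for _ in range(20)]

# Model the minority class, then generate enough rows to balance the classes.
params = fit_gaussian_per_feature(minority)
n_needed = len(majority) - len(minority)
synthetic_minority = sample_synthetic(params, n_needed, rng)
balanced_minority = minority + synthetic_minority

print(len(majority), len(balanced_minority))  # 200 200
```

Unlike duplicating minority samples, the synthetic rows here are new draws from an estimated distribution; the deep generative models reviewed in this paper replace the per-feature Gaussian with a far more expressive model.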

Generative models

The existing methods for generating synthetic data typically fall into two categories: probability distribution techniques and neural network-based methods4. Probability distribution techniques involve estimating a probability distribution of the real data and then drawing random samples that fit such a distribution as synthetic data. Generative Markov-Bayesian probabilistic modeling is a technique used for synthesizing longitudinal EHRs35. On the other hand, recent developments in synthetic data generation are adopting advanced neural networks. Below are the most commonly used neural network-driven generative models:

- Generative adversarial networks (GANs)4, comprising a generator and a discriminator, produce synthetic data resembling real samples drawn from a specific distribution. The discriminator distinguishes between real and synthetic samples, refining the generator’s ability to create realistic data through adversarial training, enabling accurate approximation of the data distribution and generation of high-fidelity novel samples. GANs can generate sequences of data points that closely resemble the patterns observed in the original image or time series data.
- Diffusion models18 gradually introduce noise into original data until it matches a predefined distribution. The core idea behind diffusion models is to learn the process of reversing this diffusion process, allowing for the generation of synthetic samples that closely resemble the original data while capturing its essential characteristics and variability.
- Variational auto-encoders (VAEs)4 are a category of generative models that learn to encode and decode data points while approximating a probability distribution, typically Gaussian, in the latent space. VAEs are trained by optimizing a variational lower bound on the log-likelihood of the data, enabling them to learn meaningful representations and create new data samples.
- Large language models (LLMs)21 can predict the probability of the next word in a text sequence based on preceding words, typically leveraging transformer architectures adept at capturing long-range dependencies. LLMs are effective for generating contextually appropriate text by learning the probability distribution of natural language data on vast corpora of text.
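To make the forward (noising) half of a diffusion model from the list above concrete, the following sketch applies the closed-form noising step commonly used in denoising diffusion formulations, x_t = sqrt(ᾱ_t) x_0 + sqrt(1 − ᾱ_t) ε, to a toy signal. The linear noise schedule, its start and end values, the number of steps, and the sine-wave signal are all assumptions chosen for illustration; the learned reverse process, which is what actually generates synthetic samples, is not shown.

```python
import math
import random


def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Noise variances beta_1..beta_T growing linearly (an assumed schedule)."""
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]


def cumulative_alpha(betas):
    """alpha_bar_t = product over s <= t of (1 - beta_s)."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars


def noise_signal(x0, alpha_bar, rng):
    """Sample x_t = sqrt(alpha_bar) * x0 + sqrt(1 - alpha_bar) * noise."""
    scale = math.sqrt(alpha_bar)
    sigma = math.sqrt(1.0 - alpha_bar)
    return [scale * x + sigma * rng.gauss(0.0, 1.0) for x in x0]


rng = random.Random(0)
# Toy "physiological" signal: one sine cycle (hypothetical, not real data).
x0 = [math.sin(2 * math.pi * i / 50) for i in range(50)]
alpha_bars = cumulative_alpha(linear_beta_schedule(1000))

x_early = noise_signal(x0, alpha_bars[9], rng)   # step 10: signal mostly intact
x_late = noise_signal(x0, alpha_bars[-1], rng)   # last step: close to pure noise
print(alpha_bars[9], alpha_bars[-1])
```

Because ᾱ_t decreases monotonically towards zero, the original signal fades while the Gaussian noise term grows, which matches the description of noise being introduced until the data matches a predefined distribution.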

Performance Measurement

Evaluating the strengths and weaknesses of generative models has become increasingly critical as these models continue to advance in complexity and capacity. The evaluation of generative models can be seen from different perspectives. In this study, we categorized evaluation metrics based on three different objectives: (i) Fidelity: degree of faithfulness in which the synthetic data preserves the essential characteristics, structures, and statistical properties of the actual data. Fidelity can be seen in either population-based (e.g., examining marginal and joint feature distributions) or individual-based (e.g., synthetic data must adhere to specific criteria, such as not including prostate cancer in a female patient) levels, (ii) Re-identification: concerning protection of sensitive information and confidentiality of individuals’ identity, and (iii) Utility: using synthetic data as a substitute for actual data for training/testing any medical devices and algorithms.
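Two of the three objectives above can be illustrated on toy records. The sketch below computes a population-level fidelity check (gap between feature means), an individual-level fidelity check (the plausibility rule mentioned in the text), and a crude re-identification proxy (share of synthetic rows that exactly copy a real row). These are simplified stand-ins chosen for illustration, not the metrics used by the reviewed studies; the record schema and all values are hypothetical. Utility, which would require training a model on synthetic data and testing it on real data, is omitted.

```python
import statistics


def marginal_mean_gap(real, synthetic, feature):
    """Population-level fidelity: absolute gap between feature means."""
    real_mean = statistics.mean(r[feature] for r in real)
    synth_mean = statistics.mean(s[feature] for s in synthetic)
    return abs(real_mean - synth_mean)


def rule_violations(synthetic):
    """Individual-level fidelity: records breaking a plausibility rule."""
    return [s for s in synthetic
            if s["sex"] == "F" and "prostate_cancer" in s["diagnoses"]]


def record_key(rec):
    """Hashable summary of a record used for exact-match comparison."""
    return (rec["sex"], rec["age"], tuple(sorted(rec["diagnoses"])))


def exact_match_rate(real, synthetic):
    """Crude re-identification proxy: share of synthetic rows copying a real row."""
    real_keys = {record_key(r) for r in real}
    hits = sum(record_key(s) in real_keys for s in synthetic)
    return hits / len(synthetic)


# Toy records (hypothetical values, not real patient data).
real = [
    {"sex": "M", "age": 70, "diagnoses": ["prostate_cancer"]},
    {"sex": "F", "age": 54, "diagnoses": ["diabetes"]},
    {"sex": "F", "age": 61, "diagnoses": ["hypertension"]},
]
synthetic = [
    {"sex": "M", "age": 68, "diagnoses": ["prostate_cancer"]},
    {"sex": "F", "age": 54, "diagnoses": ["diabetes"]},         # copies a real row
    {"sex": "F", "age": 59, "diagnoses": ["prostate_cancer"]},  # implausible record
]

print(marginal_mean_gap(real, synthetic, "age"))
print(len(rule_violations(synthetic)))     # 1
print(exact_match_rate(real, synthetic))
```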

Study Taxonomy

The study on SHRs covers a wide scope spanning from tabular medical data of a single record to longitudinal data from several visits and events. Although a variety of learning methods have been adopted to create synthetic health data, the methodological suitability of the proposed methods depends solely on data modality. This review study is hence performed according to the following taxonomy: (i) longitudinal medical/health data, (ii) medical/health time series, and (iii) medical/health texts.

Research method

To perform this scoping review, we followed the recommendations outlined in the PRISMA-ScR guidelines45. Supplementary Note 3 provides the PRISMA-ScR checklist. The research method comprised five steps, described as follows:

-

1.

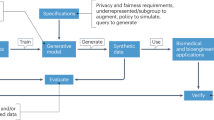

Search: A systematic search is performed on the three widely accepted platforms of scientific publications in this domain: PubMed, Web of Science, and Scopus using combinations of {Synthetic}, {Time Series, Text, Longitudinal}, and {Medical, Medicine, Health} keywords in the title and/or abstract of the publications. Our search queries are shown in Fig. 1b. We adapted the string for each database, using various forms of the terms.

-

2.

Identification: The outcomes of the search were explored in terms of duplication and repeated publications were excluded from the study.

-

3.

Screening: In this phase Title and Abstract of the identified papers were studied and the topical relevance of the publications was investigated. Some of the publications from a different scientific topic were identified to participate in the study because of similarities in keywords. These publications were detected and excluded from the study.

-

4.

Eligibility (inclusion criteria): After the search phase, only those publications fulfilling all the below criteria were allowed to participate in the study: (i) published within 2018–2023, (ii) the full paper is available, and (iii) addressing an ML topic for electronic health record (EHR) generation. Papers with only the Abstract available, cannot be analyzed and hence, excluded from the study, in addition to those addressing synthetic organs, without addressing the ML objectives.

-

5.

Included Studies: This study focuses on reproducible ML methods for generating synthetic, time series, longitudinal, or text contents of medical record. Therefore, the validity of the proposed methods in terms of implementation feasibility is an important criterion for consideration. We consolidated the scientific quality of the study by using the following Exclusion Criteria: (i) lack of the peer-reviewed process for the publication, (ii) EHR generation is not the major objective of the publication, and (iii) limited to tabular and image data only.
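The first steps of the procedure above can be sketched in Python. The keyword groups are those quoted in the Search step; everything else is an assumption made for illustration: the Boolean query format (the actual queries are those shown in Fig. 1b), de-duplication by normalised title, and the record fields used to encode the inclusion criteria. The records are hypothetical.

```python
from itertools import product

# Keyword groups quoted from the Search step.
GROUP_A = ["Synthetic"]
GROUP_B = ["Time Series", "Text", "Longitudinal"]
GROUP_C = ["Medical", "Medicine", "Health"]


def build_queries():
    """Every AND-combination with one keyword from each group."""
    return [" AND ".join(f'"{k}"' for k in combo)
            for combo in product(GROUP_A, GROUP_B, GROUP_C)]


def deduplicate(records):
    """Identification step: keep the first record for each normalised title."""
    seen, unique = set(), []
    for rec in records:
        key = rec["title"].strip().lower()
        if key not in seen:
            seen.add(key)
            unique.append(rec)
    return unique


def is_eligible(rec):
    """Inclusion criteria (i)-(iii) expressed on an assumed record schema."""
    return (2018 <= rec["year"] <= 2023
            and rec["full_text_available"]
            and rec["addresses_ml_ehr_generation"])


# Hypothetical records for illustration only.
records = [
    {"title": "Paper A", "year": 2021, "full_text_available": True,
     "addresses_ml_ehr_generation": True},
    {"title": "paper a ", "year": 2021, "full_text_available": True,
     "addresses_ml_ehr_generation": True},   # duplicate of Paper A
    {"title": "Paper B", "year": 2016, "full_text_available": True,
     "addresses_ml_ehr_generation": True},   # outside 2018-2023
    {"title": "Paper C", "year": 2022, "full_text_available": False,
     "addresses_ml_ehr_generation": True},   # abstract only
]

queries = build_queries()
eligible = [r for r in deduplicate(records) if is_eligible(r)]
print(len(queries), queries[0])        # 9 "Synthetic" AND "Time Series" AND "Medical"
print([r["title"] for r in eligible])  # ['Paper A']
```

Screening for topical relevance and the exclusion criteria of step 5 require reading the publications and are therefore not automated in this sketch.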

Data availability

The raw data employed for this study was entirely obtained from the public datasets using three well-known search engines: PubMed, Scopus, and Web of Science. The research methodology is fully described in the text, where inclusion and exclusion criteria are completely described.

Code availability

Not applicable. No codes were developed for the analysis.

References

Rajpurkar, P., Chen, E., Banerjee, O. & Topol, E. J. AI in health and medicine. Nat. Med. 28, 31–38 (2022).

McGenity, C. et al. Artificial intelligence in digital pathology: a systematic review and meta-analysis of diagnostic test accuracy. NPJ Digital Med. 7, 114 (2024).

Regulation (eu) 2024/1689 of the european parliament and of the council of 13 june 2024 laying down harmonised rules on artificial intelligence https://eur-lex.europa.eu/legal-content/EN/TXT/PDF/?uri=OJ:L_202401689 (2024). Accessed on 13 June, 2024.

Ghosheh, G. O., Li, J. & Zhu, T. A survey of generative adversarial networks for synthesizing structured electronic health records. ACM Comput. Surv. 56, 1–34 (2024).

Khoury, B., Kogan, C. & Daouk, S. International classification of diseases 11th edition (icd-11). In Encyclopedia of Personality and Individual Differences, 2350–2355 (Springer, 2020).

Li, J., Cairns, B. J., Li, J. & Zhu, T. Generating synthetic mixed-type longitudinal electronic health records for artificial intelligent applications. NPJ Digital Med. 6, 98 (2023).

Mosquera, L. et al. A method for generating synthetic longitudinal health data. BMC Med. Res. Methodol. 23, 1–21 (2023).

Kaur, D. et al. Application of bayesian networks to generate synthetic health data. J. Am. Med. Inform. Assoc. 28, 801–811 (2021).

Nikolentzos, G., Vazirgiannis, M., Xypolopoulos, C., Lingman, M. & Brandt, E. G. Synthetic electronic health records generated with variational graph autoencoders. NPJ Digital Med. 6, 83 (2023).

Murtaza, H. et al. Synthetic data generation: State of the art in health care domain. Computer Sci. Rev. 48, 100546 (2023).

Alcaraz, J. M. L. & Strodthoff, N. Diffusion-based conditional ecg generation with structured state space models. Computers in Biology and Medicine 107115 (2023).

Boukhennoufa, I. et al. A novel model to generate heterogeneous and realistic time-series data for post-stroke rehabilitation assessment. IEEE Transactions on Neural Systems and Rehabilitation Engineering (2023).

Brophy, E., De Vos, M., Boylan, G. & Ward, T. Multivariate generative adversarial networks and their loss functions for synthesis of multichannel ecgs. IEEE Access 9, 158936–158945 (2021).

Brophy, E. Synthesis of dependent multichannel ecg using generative adversarial networks. In Proceedings of the 29th ACM international conference on Information & Knowledge Management, 3229–3232 (2020).

Lee, M., Tae, D., Choi, J. H., Jung, H.-Y. & Seok, J. Improved recurrent generative adversarial networks with regularization techniques and a controllable framework. Inf. Sci. 538, 428–443 (2020).

Nikolaidis, K. et al. Augmenting physiological time series data: A case study for sleep apnea detection. In Joint European Conference on Machine Learning and Knowledge Discovery in Databases, 376–399 (Springer, 2019).

Asadi, M. et al. Accurate detection of paroxysmal atrial fibrillation with certified-GAN and neural architecture search. Sci. Rep. 13, 11378 (2023).

Koo, H. & Kim, T. E. A comprehensive survey on generative diffusion models for structured data. arXiv preprint arXiv:2306.04139v2 (2023).

Khademi, S. et al. Data augmentation to improve syndromic detection from emergency department notes. In Proceedings of the Australasian Computer Science Week, 198–205 (ACM Digital Library, 2023).

Hiebel, N., Ferret, O., Fort, K. & Névéol, A. Can synthetic text help clinical named entity recognition? a study of electronic health records in French. In 17th Conference of the European Chapter of Association for Computational Linguistics (2023).

Peng, C. et al. A study of generative large language model for medical research and healthcare. NPJ Digital Med. 6 (2023).

Huang, J. & Chang, K. C.-C. Towards reasoning in large language models: A survey. In 61st Annual Meeting of the Association for Computational Linguistics, ACL 2023, 1049–1065 (Association for Computational Linguistics (ACL), 2023).

Valmeekam, K., Olmo, A., Sreedharan, S. & Kambhampati, S. Large language models still can’t plan (a benchmark for LLMs on planning and reasoning about change). arXiv preprint arXiv:2206.10498 (2022).

Wei, J. et al. Chain-of-thought prompting elicits reasoning in large language models. Adv. Neural Inf. Process. Syst. 35, 24824–24837 (2022).

Tornede, A. et al. AutoML in the age of large language models: Current challenges, future opportunities and risks. Transactions on Machine Learning Research https://openreview.net/forum?id=cAthubStyG (2024).

Bandi, A., Adapa, P. V. S. R. & Kuchi, Y. E. V. P. K. The power of generative AI: A review of requirements, models, input–output formats, evaluation metrics, and challenges. Future Internet 15, 260 (2023).

Abbasian, M. et al. Foundation metrics for evaluating effectiveness of healthcare conversations powered by generative AI. NPJ Digital Med. 7, 82 (2024).

Alaa, A., Van Breugel, B., Saveliev, E. S. & van der Schaar, M. How faithful is your synthetic data? sample-level metrics for evaluating and auditing generative models. In International Conference on Machine Learning, 290–306 (PMLR, 2022).

El Emam, K., Mosquera, L., Fang, X. & El-Hussuna, A. Utility metrics for evaluating synthetic health data generation methods: validation study. JMIR Med. Inform. 10, e35734 (2022).

Kaabachi, B., Despraz, J., Meurers, T., Prasser, F. & Raisaro, J. L. Generation and evaluation of synthetic data in a university hospital setting. In Challenges of Trustable AI and Added-Value on Health, 141–142 (IOS Press, 2022).

Hernandez, M., Epelde, G., Alberdi, A., Cilla, R. & Rankin, D. Synthetic data generation for tabular health records: a systematic review. Neurocomputing 493, 28–45 (2022).

Jordon, J. et al. Hide-and-seek privacy challenge: Synthetic data generation vs. patient re-identification. In NeurIPS 2020 Competition and Demonstration Track, 206–215 (PMLR, 2021).

van Breugel, B. & van der Schaar, M. Beyond privacy: Navigating the opportunities and challenges of synthetic data. arXiv preprint arXiv:2304.03722 (2023).

Wickramaratne, S. D. & Parekh, A. Sleepsim: Conditional GAN-based non-REM sleep EEG signal generator. In 45th Annual International Conference of the IEEE Engineering in Medicine & Biology Society, 1–4 (IEEE, 2023).

Wang, X. et al. Using an optimized generative model to infer the progression of complications in type 2 diabetes patients. BMC Med. Inform. Decis. Mak. 22, 1–9 (2022).

Lee, D. et al. Generating sequential electronic health records using dual adversarial autoencoder. J. Am. Med. Inform. Assoc. 27, 1411–1419 (2020).

Bahrpeyma, F., Roantree, M., Cappellari, P., Scriney, M. & McCarren, A. A methodology for validating diversity in synthetic time series generation. MethodsX 8, 101459 (2021).

Bhanot, K., Qi, M., Erickson, J. S., Guyon, I. & Bennett, K. P. The problem of fairness in synthetic healthcare data. Entropy 23, 1165 (2021).

Gharehbaghi, A. & Lindén, M. A deep machine learning method for classifying cyclic time series of biological signals using time-growing neural network. IEEE Trans. Neural Netw. Learn. Syst. 29, 4102–4115 (2017).

Gharehbaghi, A., Linden, M. & Babic, A. An artificial intelligent-based model for detecting systolic pathological patterns of phonocardiogram based on time-growing neural network. Appl. Soft Comput. 83, 105615 (2019).

Ghosh, S., Boucher, C., Bian, J. & Prosperi, M. Propensity score synthetic augmentation matching using generative adversarial networks (PSSAM-GAN). Computer Methods Prog. Biomedicine Update 1, 100020 (2021).

Ive, J. Leveraging the potential of synthetic text for AI in mental healthcare. Front. Digital Health 4, 1010202 (2022).

Eberly, L. A. et al. Identification of racial inequities in access to specialized inpatient heart failure care at an academic medical center. Circulation: Heart Fail. 12, e006214 (2019).

Guevara, M. et al. Large language models to identify social determinants of health in electronic health records. NPJ Digital Med. 7, 6 (2024).

Tricco, A. C. et al. PRISMA extension for scoping reviews (PRISMA-ScR): Checklist and explanation. Ann. Intern. Med. 169, 467–473 (2018).

Yang, Z., Li, Y. & Zhou, G. TS-GAN: Time-series GAN for sensor-based health data augmentation. ACM Trans. Comput. Healthc. 4, 1–21 (2023).

Haleem, M. S. et al. Deep-learning-driven techniques for real-time multimodal health and physical data synthesis. Electronics 12, 1989 (2023).

Festag, S. & Spreckelsen, C. Medical multivariate time series imputation and forecasting based on a recurrent conditional Wasserstein GAN and attention. J. Biomed. Inform. 139, 104320 (2023).

Li, X., Metsis, V., Wang, H. & Ngu, A. H. H. TTS-GAN: A transformer-based time-series generative adversarial network. In International Conference on Artificial Intelligence in Medicine, 133–143 (Springer, 2022).

Wang, J., Chen, Y. & Gu, Y. A wearable-HAR oriented sensory data generation method based on spatio-temporal reinforced conditional GANs. Neurocomputing 493, 548–567 (2022).

Foomani, F. H. et al. Synthesizing time-series wound prognosis factors from electronic medical records using generative adversarial networks. J. Biomed. Inform. 125, 103972 (2022).

Bing, S., Dittadi, A., Bauer, S. & Schwab, P. Conditional generation of medical time series for extrapolation to underrepresented populations. PLOS Digital Health 1, e0000074 (2022).

Habiba, M., Brophy, E., Pearlmutter, B. A. & Ward, T. ECG synthesis with neural ODE and GAN models. In International Conference on Electrical, Computer and Energy Technologies, 1–6 (IEEE, 2021).

Lee, W., Lee, J. & Kim, Y. Contextual imputation with missing sequence of EEG signals using generative adversarial networks. IEEE Access 9, 151753–151765 (2021).

Maweu, B. M., Shamsuddin, R., Dakshit, S. & Prabhakaran, B. Generating healthcare time series data for improving diagnostic accuracy of deep neural networks. IEEE Trans. Instrum. Meas. 70, 1–15 (2021).

Li, X., Luo, J. & Younes, R. ActivityGAN: Generative adversarial networks for data augmentation in sensor-based human activity recognition. In ACM International Joint Conference on Pervasive and Ubiquitous Computing and Proceedings of the 2020 ACM International Symposium on Wearable Computers, 249–254 (2020).

Kiyasseh, D. et al. PlethAugment: GAN-based PPG augmentation for medical diagnosis in low-resource settings. IEEE J. Biomed. Health Inform. 24, 3226–3235 (2020).

Wang, S., Rudolph, C., Nepal, S., Grobler, M. & Chen, S. PART-GAN: Privacy-preserving time-series sharing. In Artificial Neural Networks and Machine Learning, 578–593 (Springer, 2020).

Dahmen, J. & Cook, D. Synsys: A synthetic data generation system for healthcare applications. Sensors 19, 1181 (2019).

Theodorou, B., Xiao, C. & Sun, J. Synthesize high-dimensional longitudinal electronic health records via hierarchical autoregressive language model. Nat. Commun. 14, 5305 (2023).

Li, R. et al. Improving an electronic health record–based clinical prediction model under label deficiency: Network-based generative adversarial semisupervised approach. JMIR Med. Inform. 11, e47862 (2023).

Shi, J., Wang, D., Tesei, G. & Norgeot, B. Generating high-fidelity privacy-conscious synthetic patient data for causal effect estimation with multiple treatments. Front. Artif. Intell. 5, 918813 (2022).

Wendland, P. et al. Generation of realistic synthetic data using multimodal neural ordinary differential equations. NPJ Digital Med. 5, 122 (2022).

Wang, Z. & Sun, J. PromptEHR: Conditional electronic healthcare records generation with prompt learning. In Conference on Empirical Methods in Natural Language Processing, 2873–2885 (2022).

Zhou, N., Wang, L., Marino, S., Zhao, Y. & Dinov, I. D. Datasifter ii: Partially synthetic data sharing of sensitive information containing time-varying correlated observations. J. Algorithms Comput. Technol. 16, 17483026211065379 (2022).

Abell-Hart, K., Hajagos, J., Zhu, W., Saltz, M. & Saltz, J. Generating longitudinal synthetic ehr data with recurrent autoencoders and generative adversarial networks. Data Management, Polystores, and Analytics for Healthcare 153 (2021).

Zhang, Z., Yan, C., Lasko, T. A., Sun, J. & Malin, B. A. Synteg: a framework for temporal structured electronic health data simulation. J. Am. Med. Inform. Assoc. 28, 596–604 (2021).

Yoon, J., Drumright, L. N. & Van Der Schaar, M. Anonymization through data synthesis using generative adversarial networks (ADS-GAN). IEEE J. Biomed. Health Inform. 24, 2378–2388 (2020).

Christensen, J. H. et al. Fully synthetic longitudinal real-world data from hearing aid wearers for public health policy modeling. Front. Neurosci. 13, 850 (2019).

Baowaly, M. K., Lin, C.-C., Liu, C.-L. & Chen, K.-T. Synthesizing electronic health records using improved generative adversarial networks. J. Am. Med. Inform. Assoc. 26, 228–241 (2019).

Wang, Y., Meng, X. & Liu, X. Differentially private recurrent variational autoencoder for text privacy preservation. Mobile Networks and Applications 1–16 (2023).

Zhou, N., Wu, Q., Wu, Z., Marino, S. & Dinov, I. D. Datasiftertext: Partially synthetic text generation for sensitive clinical notes. J. Med. Syst. 46, 96 (2022).

Shim, H., Lowet, D., Luca, S. & Vanrumste, B. Synthetic data generation and multi-task learning for extracting temporal information from health-related narrative text. In Proceedings of the Seventh Workshop on Noisy User-generated Text, 260–273 (2021).

Kasthurirathne, S. N., Dexter, G. & Grannis, S. J. Generative adversarial networks for creating synthetic free-text medical data: a proposal for collaborative research and re-use of machine learning models. AMIA Summits Transl. Sci. Proc. 2021, 335 (2021).

Al Aziz, M. M. et al. Differentially private medical texts generation using generative neural networks. ACM Trans. Comput. Healthc. 3, 1–27 (2021).

Libbi, C. A., Trienes, J., Trieschnigg, D. & Seifert, C. Generating synthetic training data for supervised de-identification of electronic health records. Future Internet 13, 136 (2021).

Guan, J., Li, R., Yu, S. & Zhang, X. A method for generating synthetic electronic medical record text. IEEE/ACM Trans. Comput. Biol. Bioinform. 18, 173–182 (2019).

Kasthurirathne, S. N., Dexter, G. & Grannis, S. J. An adversorial approach to enable re-use of machine learning models and collaborative research efforts using synthetic unstructured free-text medical data. MEDINFO 2019: Health and Wellbeing e-Networks for All 1510–1511 (2019).

Syed, M., Marshall, J., Nigam, A. & Chawla, N. V. Gender prediction through synthetic resampling of user profiles using SeqGANs. In 8th International Conference on Computational Data and Social Networks, 363–370 (Springer, 2019).

Lee, S. H. Natural language generation for electronic health records. NPJ Digital Med. 1, 63 (2018).

Li, I. et al. Neural natural language processing for unstructured data in electronic health records: a review. Comput. Sci. Rev. 46, 100511 (2022).

Hahn, W. et al. Contribution of synthetic data generation towards an improved patient stratification in palliative care. J. Personalized Med. 12, 1278 (2022).

Acknowledgements

This study received no specific funding. We are thankful to the anonymous reviewers whose valuable insights and constructive feedback have significantly enhanced the quality of this paper.

Funding

Open access funding provided by Mälardalen University.

Author information

Contributions

The authors confirm their contributions to the paper as follows: conceptualization: A.Gh.; database searching: M.L. and M.A.; data curation and analysis: M.L., M.A., and A.Gh.; data interpretation: A.Gh. and M.L.; funding acquisition: not applicable; methodology: M.L. and A.Gh.; project administration: A.Gh.; resources: M.L. and A.Gh.; software: not applicable; supervision: A.Gh.; validation: M.L., A.Gh., and F.P.; visualization: M.L.; writing (original draft): M.L. and A.Gh.; and writing (review and editing): A.Gh. and M.L. All authors reviewed the results and approved the final version of the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note: Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Loni, M., Poursalim, F., Asadi, M. et al. A review on generative AI models for synthetic medical text, time series, and longitudinal data. npj Digit. Med. 8, 281 (2025). https://doi.org/10.1038/s41746-024-01409-w

DOI: https://doi.org/10.1038/s41746-024-01409-w
