Abstract

Adolescent depression remains a major public health concern, and Behavioral Activation (BA), a brief therapeutic intervention designed to reduce depression-related avoidance and boost engagement in rewarding activities, has shown encouraging results. Still, few studies directly measure the hypothesized mechanism of “activation” in daily life, especially using low-burden, ecologically valid methods. This proof-of-concept study evaluates the validity of two technology-based approaches to measuring activation in adolescents receiving BA: smartphone-based mobility sensing and large language model (LLM) ratings of free-response text. Adolescents (n = 38, ages 13–18) receiving 12-week BA therapy for anhedonia completed daily ecological momentary assessment (EMA) reporting on positive and negative affect. GPT-4o was used to rate behavioral activation from EMA free-text entries. A subsample (n = 13) contributed passive smartphone sensing data (e.g., accelerometer activity, GPS-derived mobility). Activation and symptoms were assessed weekly via self-report. GPT-derived activation ratings correlated positively with passive sensing indicators (number of places visited, time away from home) and self-reported activation. Within-person increases in GPT-rated activation were associated with higher daily positive affect and lower negative affect. Passive sensing features also forecasted weekly improvements in anhedonia and depressive symptoms. Associations emerged primarily at the within-person level, suggesting that changes in activation relative to one’s own baseline are clinically meaningful. This study demonstrates the feasibility and validity of passively measuring behavioral activation in adolescents’ daily lives using smartphone data and LLMs. These tools hold promise for advancing data-informed psychotherapy by tracking therapeutic processes in real time, reducing reliance on self-report, and enabling personalized, adaptive interventions. 
Clinical Trial Registry: NCT02498925.

Lay summary

Behavioral Activation therapy helps depressed teens by encouraging them to engage in more rewarding activities. We tested whether smartphones and large language models (LLMs) could automatically track this 'activation' in daily life. Using movement data from phones and LLM-based ratings from 38 teens in therapy, we found that these digital tools accurately detected when teens were more active, and that higher activation predicted better mood and fewer depression symptoms. This technology could help therapists monitor progress in real time and personalize treatment more effectively.

Introduction

Rates of depression increase significantly during adolescence, marking this as a critical developmental period for intervention [1]. Yet, even first-line treatments, such as cognitive behavioral therapy, are not consistently effective for youth [2]. Anhedonia, or loss of interest and pleasure, is a core feature of depression that predicts poorer course of illness and worse treatment outcomes [3]. Treatments that target anhedonia may lead to reductions in other depressive symptoms [4].

Behavioral Activation (BA) therapy aims to reduce anhedonia by targeting patterns of avoidance and withdrawal and increasing engagement with rewarding activities [5]. Given its relative simplicity and clear focus on behavioral reinforcement of rewards, BA may be especially well-suited to efficiently target adolescent anhedonia, and promising evidence exists in youth samples [6, 7]. In this article, we use “Behavioral Activation” or “BA” to refer to the manualized treatment approach described in [5] and [6]; by contrast, we use “activation” to refer to the construct of behavioral activation, i.e., the putative mechanism through which patients become activated in this treatment (see [8] for a review of how this construct is often measured). A core assumption of BA is that increased “activation” in daily life leads to symptom change [9], but few studies have rigorously measured whether this is the case [8]. To assess activation, most of these studies rely on self-report questionnaires, which are limited by recall bias, social desirability, and participant burden [8]. There is a pressing need for objective, low-burden, and ecologically valid methods to track activation in daily life. Tools that assess activation between sessions could help therapists monitor treatment progress and make timely adjustments, consistent with calls for data-informed psychotherapy [10].

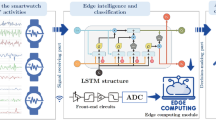

Passive sensing through smartphones offers a low-burden, continuous, and objective way to assess real-world behavior [11]. Mobility-related passive sensing features are particularly relevant for assessing behavioral proxies for activation, such as activity levels (accelerometer) or percent of time spent outside the home (GPS). Growing evidence suggests smartphone sensors can be used to predict daily and even hourly fluctuations in depressed mood [12], including in youth [13,14,15]. One BA study [16] demonstrated congruence between questionnaire-assessed activation and several smartphone metrics, including step count, time spent at home, time in conversation, and screen unlocks, albeit in a very small sample (3 case studies) that requires replication. Despite these substantial advantages, passive sensing measures alone lack qualitative context and therefore provide limited insight into the emotional or motivational aspects of behavior.

Unobtrusive language samples offer another key source of information, providing both qualitative context and quantitative features (e.g., sentiment) that can be used in conjunction with passive sensing to estimate or forecast emotional states and behavior. For example, promising results have been obtained by applying Linguistic Inquiry and Word Count (LIWC) to measure activation in online therapy chat logs [17]. However, that approach required a lexicon of activation-relevant words derived from the specific dataset, limiting generalizability. In contrast, large language models (LLMs) have been shown to accurately identify psychological constructs in text, while requiring no sample-specific training data [18]. Recent work has demonstrated the potential of LLMs to estimate emotion from daily free response text [19]. Additionally, frequent self-reports of daily activities provide ideal material for LLMs to rate activation, with less risk of typical self-report biases.

This proof-of-concept study aimed to evaluate the validity and utility of two novel, technology-based measures of activation: LLM-derived ratings of daily text entries and smartphone-based passive sensing of mobility patterns. These measures were examined in relation to daily positive and negative emotion, as well as weekly anhedonia, depressive symptoms, and a traditional self-report measure of activation. We hypothesized that the LLM-derived and passive sensing indicators of activation would be positively associated with each other and with self-reported activation, supporting their convergent validity. Furthermore, we hypothesized that these measures would also demonstrate criterion validity, such that higher activation as captured by these methods would be associated with increased daily positive emotion, decreased daily negative emotion, and greater weekly improvement in anhedonia and depression symptoms.

Materials and methods

Participants and procedure

Participants were 38 adolescents recruited from the greater Boston area for a BA treatment trial for adolescent anhedonia from January 2016 to November 2021. See supplement (Table S1) for demographic and clinical characteristics. For more information on study design and inclusion/exclusion criteria, see [7]. Approval was obtained from the Mass General Brigham IRB, and all participants gave informed consent and/or assent.

Participants received 12 weekly, 60-minute, individual therapy sessions, per the BA manual [6]. Before each session, participants were asked to rate their anhedonia, using the 14-item Snaith‐Hamilton Pleasure Scale (SHAPS; [20, 21]); depression, using the 20-item Center for Epidemiological Studies Depression scale (CES-D; [22]); and activation, using the 9-item Behavioral Activation for Depression Scale - Short Form (BADS-SF; [23]). (See Supplement Section S1 for more details about the weekly self-report measures.) During treatment, every other week participants completed 5-day bursts (2-3 surveys per day) of ecological momentary assessment (EMA) using the MetricWire app. At each prompt, participants rated their positive affect (PA) and negative affect (NA) by responding to 6 items, each rated on a 5-point Likert scale. Next, they were asked to provide free-text responses about what they were doing right before the prompt, with whom they were interacting, and the most enjoyable and stressful events since the previous prompt. (See Supplement Section S1, https://clinicaltrials.gov/study/NCT02498925 and [4] for further details about the EMA items and sampling protocol.) Passive sensor data were collected continuously using the Beiwe [24] smartphone platform for a subset of participants (n = 13), as this data collection method was added to the protocol after initial participant enrollment had begun.

Feature derivation

EMA-derived PA was calculated as the mean score of “happy,” “interested,” and “excited” items; NA was the mean of “sad,” “nervous,” and “angry” items. Typed free-text responses provided by participants as part of their EMA surveys (see Section S2 for the specific open questions) were analyzed using OpenAI’s GPT-4o model, accessed via the Python openai package. Using the API ensured application of the same standardized prompt, fixed model parameters (e.g., temperature), and consistent, reproducible outputs across the dataset. The standardized prompt included the clinical definition of behavioral activation (Dimidjian et al., 2011), rating criteria (1 = least active/passive/avoidant to 5 = very high activity with strong reinforcement of pleasure, mastery, or problem-solving), and guidelines for evaluating current activities, social interactions, enjoyable events, and responses to stressful situations (see Supplement Section S2 for the full prompt). The model was instructed to output a single numeric rating [1,2,3,4,5] along with a rationale, based on explicit rating criteria (e.g., passivity vs. activity, social engagement, mastery/enjoyment, and problem-solving vs. avoidance). See Table S8 for examples of participant text with corresponding GPT ratings and explanations. Participant responses were manually deidentified prior to analysis by removing or replacing personal identifiers (e.g., names, places). To evaluate validity, a trained human rater applied the same prompt and criteria to a random 25% subsample (n = 478) of EMA responses. Human and GPT-4o ratings demonstrated substantial agreement (weighted Cohen’s κ = 0.77, 95% CI [0.74, 0.81]), supporting the validity of the automated rating.
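The agreement statistic reported above can be illustrated with a minimal sketch of weighted Cohen's κ for two raters on the 1 to 5 scale. The text does not state whether linear or quadratic weights were used, so the `weights` argument, the function name, and the toy ratings below are assumptions for illustration only, not the authors' code.

```python
def weighted_kappa(rater_a, rater_b, categories=(1, 2, 3, 4, 5), weights="linear"):
    """Weighted Cohen's kappa for two raters on an ordinal scale.

    The weighting scheme ("linear" or "quadratic") is an assumption here;
    the article does not specify which one was used.
    """
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("rating lists must be non-empty and of equal length")
    k = len(categories)
    index = {c: i for i, c in enumerate(categories)}
    n = len(rater_a)

    # Observed joint proportions for every (rater A, rater B) category pair.
    observed = [[0.0] * k for _ in range(k)]
    for a, b in zip(rater_a, rater_b):
        observed[index[a]][index[b]] += 1 / n

    # Marginal proportions of each rater, used for chance-expected agreement.
    marg_a = [sum(row) for row in observed]
    marg_b = [sum(observed[i][j] for i in range(k)) for j in range(k)]

    def disagreement(i, j):
        # Penalty grows with the distance between the two ratings.
        d = abs(i - j) / (k - 1)
        return d if weights == "linear" else d ** 2

    obs = sum(disagreement(i, j) * observed[i][j]
              for i in range(k) for j in range(k))
    exp = sum(disagreement(i, j) * marg_a[i] * marg_b[j]
              for i in range(k) for j in range(k))
    if exp == 0:
        # Both raters used a single identical category: kappa is undefined.
        return float("nan")
    return 1.0 - obs / exp


# Hypothetical ratings, not study data.
human = [1, 2, 3, 4, 5]
model = [1, 2, 3, 4, 4]
kappa = weighted_kappa(human, model)
```

Weighted κ credits near-misses (e.g., a 4 versus a 5) more than distant disagreements, which suits an ordinal activation scale better than unweighted κ.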

Passive sensor features were extracted from participants’ smartphone data to characterize behavioral patterns linked to mood and functioning using the publicly available DPlocate and DPphone software packages [25]. Derived measures included: an hourly activity score (ActScore), derived from aggregated accelerometer data to reflect physical activity intensity throughout the day; daily percent home (percentHome), indicating the proportion of time spent at the inferred home location based on nighttime GPS clustering; daily distance from home (homDist), computed as the mean Euclidean distance between GPS samples and the home location; daily mobility area (radiusMobility), representing the spatial dispersion of movement via radius of gyration; and places visited daily (DayPlaces), estimated using spatiotemporal clustering to identify distinct locations visited each day. These features were selected based on prior evidence linking mobility, location stability, and physical activity to affective states. (See Supplement Section S3 for detailed definitions of each passive sensor variable.)
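To make the verbal definitions above concrete, the following is a minimal sketch of three of the mobility features: percent of time at home, mean Euclidean distance from home, and radius of gyration. It is not the DPlocate implementation. It assumes GPS samples are evenly spaced in time and already projected to planar coordinates in meters, and the 100 m home radius and all function names are hypothetical.

```python
import math


def percent_home(points, home, radius_m=100.0):
    """Percent of samples within radius_m of home.

    With evenly spaced samples (an assumption), this approximates the
    percent of time spent at home. The 100 m radius is illustrative.
    """
    at_home = sum(1 for p in points if math.dist(p, home) <= radius_m)
    return 100.0 * at_home / len(points)


def mean_home_distance(points, home):
    """Mean Euclidean distance (meters) between GPS samples and home."""
    return sum(math.dist(p, home) for p in points) / len(points)


def radius_of_gyration(points):
    """Root-mean-square distance of samples from their centroid (meters)."""
    cx = sum(x for x, _ in points) / len(points)
    cy = sum(y for _, y in points) / len(points)
    squared = sum((x - cx) ** 2 + (y - cy) ** 2 for x, y in points)
    return math.sqrt(squared / len(points))


# Hypothetical day: half the samples at home, half at a place 500 m away.
home = (0.0, 0.0)
day = [(0.0, 0.0), (0.0, 0.0), (300.0, 400.0), (300.0, 400.0)]
features = {
    "percentHome": percent_home(day, home),
    "homDist": mean_home_distance(day, home),
    "radiusMobility": radius_of_gyration(day),
}
```

Note that distance from home depends on where the home location sits, whereas radius of gyration depends only on how dispersed the day's samples are around their own centroid.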

Analytic strategy

First, we computed daily means for the hourly passive sensing variables, EMA affect variables, and GPT ratings. The daily variables were used to examine associations with NA and PA at the daily level. For week-level analyses, we predicted BADS, SHAPS, and CES-D scores using weekly means of the GPT ratings and passive sensing variables. For all analyses, we used multilevel modeling (MLM) to estimate both within-person (person-mean centered) and between-person (participant mean) effects. Models included random intercepts for participants and were fit using the lme4 package in R. To assess convergent validity, we examined associations between GPT ratings, passive sensing variables, and BADS scores. We also conducted repeated measures correlation (rmcorr) analyses; because the rmcorr results were nearly identical to those obtained from multilevel models, we report them in the supplement only (see Supplementary Figures S1 to S4). To assess criterion validity, we tested whether higher GPT and passive sensor-derived activation were associated with more positive and less negative daily affect, controlling for prior-day affect, and with lower weekly symptoms of anhedonia and depression, controlling for prior-week scores. Models were fit using restricted maximum likelihood (REML), which provides unbiased variance component estimates in multilevel models, especially with small or unbalanced samples. Missing data were handled through REML estimation, which uses all available observations under the missing at random assumption, allowing participants to contribute data even when some time points are missing [26, 27]. Comprehensive evaluations of missing data mechanisms are reported in Supplement Section S4. No a priori sample size calculation was conducted, as this was a secondary proof-of-concept analysis using data from an existing treatment trial.
The analytic sample consisted of all participants who completed daily EMA (n = 38) and a subset who contributed passive smartphone sensing data (n = 13). All analysis code is publicly available at https://osf.io/jhczf/files/osfstorage.
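The within-person versus between-person decomposition described in this section can be sketched as follows. This is an illustrative Python version of person-mean centering, not the authors' R code; the record layout and function name are assumptions.

```python
from collections import defaultdict
from statistics import mean


def decompose(records):
    """Split each observation into between- and within-person parts.

    records: list of (participant_id, value) pairs, e.g. daily GPT ratings.
    Returns a list of (participant_id, between, within) tuples, where
    `between` is the participant's own mean and `within` is the deviation
    of that observation from the participant's mean.
    """
    by_person = defaultdict(list)
    for pid, value in records:
        by_person[pid].append(value)
    person_means = {pid: mean(vals) for pid, vals in by_person.items()}
    return [(pid, person_means[pid], value - person_means[pid])
            for pid, value in records]


# Hypothetical ratings for two participants, not study data.
rows = decompose([("a", 1.0), ("a", 3.0), ("b", 4.0)])
```

Both components would then enter the multilevel model as separate predictors alongside a random intercept for participant; in lme4 syntax this might look like `outcome ~ within + between + (1 | id)`, although the exact model formulas are given only in the authors' published code.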

Results

Thirty-eight participants completed 50.24 EMA surveys on average (SD = 27.52) across the entire 12-week treatment period. EMA compliance decreased over the course of treatment, from 62% in the first two weeks to 52% in the last two weeks (Table S2). For the 13 participants with passive sensor data, a total of 46,056 hourly observations were collected (1,919 days of data in total; M = 147.62 days; SD = 99.82 days). Descriptive statistics for daily assessment of smartphone passive sensing features and GPT-derived ratings are presented in the supplement (Table S3).

Convergent validity: associations between activation indicators

Passive sensors and GPT-derived ratings

On days with higher-than-usual smartphone-derived activity scores (Est. = 0.178, p = 0.024) and more places visited daily (Est. = 0.045, p = 0.021), participants tended to have higher GPT-rated activation, while spending more time at home predicted lower GPT-rated activation that day (Est. = −0.007, p = 0.012). No significant between-person associations were found (see Table 1 and Figure S1).

Predicting self-report activation

In weeks when participants’ GPT-rated activation was higher, their BADS scores were also higher (Est. = 2.05, p = 0.005; see Table 2). Figure S2 shows individual trajectories of GPT-rated activation and BADS score over time. For passive sensing, at the within-person level, weeks with a greater number of places visited and less time spent at home were associated with higher BADS scores (all ps < 0.01; see Table 3 and Figure S3). At the between-person level, participants who generally spent more time at home had lower BADS scores. See Tables S5 and S6 for models using the BADS activation and avoidance subscales.

Criterion validity: associations with daily affect and weekly symptoms

Predicting daily affect

Higher GPT-rated activation was associated with lower same-day negative affect (Est. = −0.09, p < 0.001) and higher positive affect (Est. = 0.16, p < 0.001; see Table 4). Among passive sensing features, only daily mobility radius significantly predicted daily positive affect at the within-person level (Est. = 0.002, p = 0.024; see Table 4 and Figure S4). Because including lagged (t-1) affect scores in the models substantially reduced the number of observations, we report results from models without lagged predictors; the results were consistent when controlling for lagged affect (see Supplementary Table S4).

Predicting weekly symptoms

At the weekly level, GPT-derived activation ratings were not significantly associated with changes in SHAPS or CES-D scores (see Table 2). For passive sensing variables, in weeks with a greater number of places visited, participants had lower SHAPS (Est. = −0.82, p = 0.022) and CES-D scores (Est. = −2.51, p < 0.001). Conversely, weeks with more time spent at home were related to higher SHAPS (Est. = 0.15, p = 0.001) and CES-D scores (Est. = 4.47, p < 0.001). No between-person effects reached statistical significance (see Table 5 and Figure S5). A visual summary of the passive sensing results is presented in Table S7.

Discussion

This initial proof-of-concept study demonstrates the potential of scalable, smartphone-derived technologies to track therapeutic processes in adolescents’ daily lives. Specifically, we validated two novel technology-based measures of activation, mobility indicators from passive smartphone sensing and LLM-derived text ratings, against traditional questionnaire-rated activation. To our knowledge, this is the first study to integrate both LLMs and passive sensing in adolescents’ daily lives to monitor therapeutic mechanisms.

LLM-derived activation ratings and select passive sensing indicators were positively associated with each other and with an established measure of behavioral activation (BADS), supporting their convergent validity. Notably, only some passive sensing features showed significant associations, suggesting that specific aspects of mobility may be more reliable markers of activation than others. Specifically, when adolescents visited more locations and spent less time at home, they also were found to have greater activation via self-report and LLM-derived ratings of their EMA text entries. In contrast, other mobility indicators, such as distance from home, were not associated with self-reported or LLM-derived activation, suggesting that activation is better reflected by time spent away from home and visiting different locations, rather than the distance traveled. These findings are consistent with prior studies linking fewer unique locations visited and more time spent at home to increased depression risk [15, 28]. However, previous research has typically assumed that mobility patterns are related to depression because they reflect activation, without directly testing whether this is the case. To that end, our results demonstrate a direct association of mobility with a therapeutic target—activation—rather than with depression symptoms alone.

The association between LLM-rated activation and BADS scores contributes to recent evidence suggesting that LLMs, such as GPT, can extract psychologically meaningful information from unstructured text [18]. Relevant to the treatment of adolescent depression, we build on emerging work showing that LLMs can be used to analyze language from psychotherapy sessions to inform clinical decision-making [29]. Importantly, our study shows that LLM-based assessments can also provide clinically relevant insights based on language generated outside the therapy room, offering scalable and unobtrusive ways to monitor therapeutic processes in patients’ daily lives.

These two technology-based approaches demonstrated distinct patterns in their associations with emotional (daily) and clinical (weekly) outcomes. LLM-rated activation was associated with same-day increases in positive affect and decreases in negative affect. In contrast, passive sensing features were more strongly related to weekly changes in symptoms. These distinct timescales suggest that linguistic measures may be better suited to capturing short-term emotional changes, whereas passive sensing may tap into behavioral processes that unfold over longer periods. This finding is clinically significant: it implies that daily text assessments could help clinicians monitor affective responses to activation efforts in real time, while mobility patterns could indicate whether treatment is gradually translating into symptom improvement.

Interestingly, associations between both the LLM-derived and passive sensing indicators of activation with BADS and symptom measures were significant at the within-person level, but not the between-person level. That is, when individuals showed more activation relative to their own mean via passive sensing or text, they also reported higher activation and lower symptoms; however, individuals who, on average, moved more or reported more engagement via EMA text were not necessarily less symptomatic. This pattern is consistent with recent research suggesting that daily mobility features, such as time spent at home or number of locations visited, are more predictive of within-person changes in depression than between-person differences [28]. Clinically, this highlights the need for personalized interventions that focus on deviations from an individual’s own baseline, rather than comparing them to normative averages that may not reflect any one individual. If replicated, our study suggests that digital measures of activation could be used to tailor treatment in real time, identifying when a patient’s activation is dropping and intervening accordingly.

Limitations and future directions

Several limitations should be noted. First, the small sample size, particularly for the passive sensor data collected from a subset of participants, limits generalizability, and replication in larger, more diverse samples is necessary. Second, the LLM-based ratings were generated using a specific prompt and model version. Although recent work has shown that LLM-based ratings using GPT-4o are surprisingly stable and human-like [18], slight variations in prompts or model updates could influence results. Third, passive sensing features capture only certain dimensions of activation, mainly physical mobility, and may miss other important nuances. Future work should integrate additional passive data streams (e.g., phone use patterns, conversation detection, sleep onset/offset) and physiological markers to capture a broader spectrum of activation-relevant processes. Similarly, while language-based ratings provide valuable context, they depend on participant compliance and openness within a specific EMA sampling scheme. Integrating insights from NLP as applied to continuously collected text data, such as from social media and text messages, would improve the richness and ecological validity of this data stream [30].

In conclusion, this proof-of-concept study provides initial evidence that passive smartphone sensing and LLM-based language analysis can be used to measure activation in adolescents during treatment for depression, offering insights into therapeutic processes as they unfold in daily life. By validating these digital tools against self-report measures and demonstrating their links to emotional and clinical outcomes, we illustrate their potential to enhance treatment monitoring, personalize interventions, and ultimately improve outcomes for depressed youth.

Data availability

Data are available upon reasonable request to the corresponding author (HF) and following the execution of a data use agreement.

References

1. Avenevoli S, Swendsen J, He JP, Burstein M, Merikangas K. Major depression in the national comorbidity survey- adolescent supplement: prevalence, correlates, and treatment. J Am Acad Child Adolesc Psychiatry. 2015;54:37–44.e2.

2. Eckshtain D, Kuppens S, Ugueto A, Ng MY, Vaughn-Coaxum R, Corteselli K, et al. Meta-analysis: 13-year follow-up of psychotherapy effects on youth depression. J Am Acad Child Adolesc Psychiatry. 2020;59:45–63.

3. Khazanov GK, Xu C, Dunn BD, Cohen ZD, DeRubeis RJ, Hollon SD. Distress and anhedonia as predictors of depression treatment outcome: a secondary analysis of a randomized clinical trial. Behav Res Ther. 2020;125:103507.

4. Webb CA, Murray L, Tierney AO, Gates KM. Dynamic processes in behavioral activation therapy for anhedonic adolescents: modeling common and patient-specific relations. J Consult Clin Psychol. 2024;92:454–65.

5. Martell C, Dimidjian S, Herman-Dunn R. Behavioral Activation for Depression: A Clinician’s Guide. 2nd ed. New York, NY: Guilford Press; 2022.

6. McCauley E, Schloredt KA, Gudmundsen GR, Martell CR, Dimidjian S. Behavioral activation with adolescents: A clinician’s guide. Guilford Publications; 2016.

7. Webb CA, Murray L, Tierney AO, Forbes EE, Pizzagalli DA. Reward-related predictors of symptom change in behavioral activation therapy for anhedonic adolescents: a multimodal approach. Neuropsychopharmacology. 2023;48:623–32.

8. Manos RC, Kanter JW, Busch AM. A critical review of assessment strategies to measure the behavioral activation model of depression. Clin Psychol Rev. 2010;30:547–61.

9. Kanter JW, Manos RC, Bowe WM, Baruch DE, Busch AM, Rusch LC. What is behavioral activation?: a review of the empirical literature. Clin Psychol Rev. 2010;30:608–20.

10. Lutz W, Vehlen A, Schwartz B. Data-informed psychological therapy, measurement-based care, and precision mental health. J Consult Clin Psychol. 2024;92:671–3.

11. Torous J, Kiang MV, Lorme J, Onnela JP. New tools for new research in psychiatry: a scalable and customizable platform to empower data driven smartphone research. JMIR Ment Health. 2016;3:e5165.

12. Jacobson NC, Chung YJ. Passive sensing of prediction of moment-to-moment depressed mood among undergraduates with clinical levels of depression sample using smartphones. Sensors (Basel). 2020;20:3572.

13. Ren B, Balkind EG, Pastro B, Israel ES, Pizzagalli DA, Rahimi-Eichi H, et al. Predicting states of elevated negative affect in adolescents from smartphone sensors: a novel personalized machine learning approach. Psychol Med. 2023;53:5146–54.

14. Sequeira L, Perrotta S, LaGrassa J, Merikangas K, Kreindler D, Kundur D, et al. Mobile and wearable technology for monitoring depressive symptoms in children and adolescents: a scoping review. J Affect Disord. 2020;265:314–24.

15. Funkhouser CJ, Weiner LS, Crowley RN, Davis JF, Koegler FH, Allen NB, et al. Early Changes in Passively Sensed Homestay Predict Depression Symptom Improvement During Digital Behavioral Activation. Behaviour Research and Therapy [Internet]. In press; Available from: https://doi.org/10.1016/j.brat.2025.104815.

16. Lee J, Solomonov N, Banerjee S, Alexopoulos GS, Sirey JA. Use of passive sensing in psychotherapy studies in late life: a pilot example, opportunities and challenges. Front Psychiatry. 2021;12:732773.

17. Burkhardt HA, Alexopoulos GS, Pullmann MD, Hull TD, Areán PA, Cohen T. Behavioral activation and depression symptomatology: longitudinal assessment of linguistic indicators in text-based therapy sessions. J Med Internet Res. 2021;23:e28244.

18. Rathje S, Mirea DM, Sucholutsky I, Marjieh R, Robertson CE, Van Bavel JJ. GPT is an effective tool for multilingual psychological text analysis. Proc Natl Acad Sci. 2024;121:e2308950121.

19. Fisher H, Jaffe N, Pidvirny K, Tierney A, Pizzagalli D, Webb C. Using Natural Language Processing to Track Negative Emotions in the Daily Lives of Adolescents [Internet]. Research Square; 2025 [cited 2025 Jun 23]. Available from: https://www.researchsquare.com/article/rs-6414400/v1.

20. Franken IHA, Rassin E, Muris P. The assessment of anhedonia in clinical and non-clinical populations: further validation of the Snaith-Hamilton Pleasure Scale (SHAPS). J Affect Disord. 2007;99:83–9.

21. Snaith RP, Hamilton M, Morley S, Humayan A, Hargreaves D, Trigwell P. A scale for the assessment of hedonic tone the Snaith–Hamilton Pleasure Scale. Br J Psychiatry. 1995;167:99–103.

22. Radloff LS. The CES-D scale: a self-report depression scale for research in the general population. Appl Psychol Meas. 1977;1:385–401.

23. Manos RC, Kanter JW, Luo W. The behavioral activation for depression scale–short form: development and validation. Behav Ther. 2011;42:726–39.

24. Onnela JP, Dixon C, Griffin K, Jaenicke T, Minowada L, Esterkin S, et al. Beiwe: A data collection platform for high-throughput digital phenotyping. Journal of Open Source Software. 2021;6:3417.

25. Rahimi-Eichi H, Coombs 3rd G, Onnela JP, Baker JT, Buckner RL. Measures of Behavior and Life Dynamics from Commonly Available GPS Data (DPLocate): Algorithm Development and Validation. medRxiv [Preprint]. 2022. Available from: https://www.medrxiv.org/content/10.1101/2022.07.05.22277276v1.full.

26. Bloom PA, Lan R, Galfalvy H, Liu Y, Bitran A, Joyce K, et al. Identifying factors impacting missingness within smartphone-based research: implications for intensive longitudinal studies of adolescent suicidal thoughts and behaviors. J Psychopathol Clin Sci. 2024;133:577–97. https://doi.org/10.1037/abn0000930.

27. Little RJ. Missing data analysis. Annu Rev Clin Psychol. 2024;20:149–73.

28. Zhang Y, Folarin AA, Sun S, Cummins N, Vairavan S, Bendayan R, et al. Longitudinal relationships between depressive symptom severity and phone-measured mobility: dynamic structural equation modeling study. JMIR Ment Health. 2022;9:e34898.

29. Kuo PB, Tanana MJ, Goldberg SB, Caperton DD, Narayanan S, Atkins DC, et al. Machine-learning-based prediction of client distress from session recordings. Clin Psychol Sci. 2024;12:435–46.

30. Funkhouser CJ, Trivedi E, Li LY, Helgren F, Zhang E, Sritharan A, et al. Detecting adolescent depression through passive monitoring of linguistic markers in smartphone communication. J Child Psychol Psychiatry. 2024;65:932–41.

Acknowledgements

We thank all participants who took part in this study and staff who helped acquire the dataset used in this analysis.

Funding

This research was supported by NIMH K23MH108752 and the Tommy Fuss Fund (CAW). CAW was partially supported by R01MH116969, R01MH135844, R01AT011002, a NARSAD Young Investigator Grant from the Brain & Behavior Research Foundation and the Klingenstein Third Generation Foundation. DAP was partially supported by the NIMH grants P50MH119467 and R37MH068376.

Author information

Contributions

HBF: conceptualization, methodology, formal analysis, visualization, writing-original draft. NMJ: conceptualization, investigation, formal analysis, writing-original draft. HRE: software, methodology, data curation, formal analysis, writing-review and editing. EEF: conceptualization, investigation, supervision, writing-review and editing. DAP: conceptualization, investigation, resources, supervision, writing-review and editing. JTB: methodology, software, data curation, resources, supervision, writing-review and editing. CAW: conceptualization, investigation, methodology, project administration, funding acquisition, supervision, writing-review and editing.

Ethics declarations

Competing interests

Over the past three years, Dr. Pizzagalli has received consulting fees from Arrowhead Pharmaceuticals, Boehringer Ingelheim, Compass Pathways, Engrail Therapeutics, Neumora Therapeutics, Neurocrine Biosciences, Neuroscience Software, Sage Therapeutics, Sama Therapeutics, and Takeda; he has received honoraria from the American Psychological Association, Psychonomic Society and Springer (for editorial work) and from Alkermes; he has received research funding from the BIRD Foundation, Brain and Behavior Research Foundation, Dana Foundation, DARPA, Millennium Pharmaceuticals, NIMH and Wellcome Leap MCPsych; he has received stock options from Ceretype Neuromedicine, Compass Pathways, Engrail Therapeutics, Neumora Therapeutics, and Neuroscience Software. Dr. Webb has received consulting fees from King & Spalding law firm. Dr. Baker has received consulting fees from Sama Therapeutics and Tetricus Labs, Inc. Dr. Baker is currently a Senior Editor at Digital Psychiatry and Neuroscience. Dr. Pizzagalli’s, Dr. Webb’s, and Dr. Baker’s interests were reviewed and are managed by McLean Hospital and Mass General Brigham in accordance with their conflict of interest policies. No funding from these entities was used to support the current work, and all views expressed are solely those of the authors. The other authors declare no competing financial interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Fisher, H., Jaffe, N.M., Rahimi-Eichi, H. et al. Measuring activation during behavioral activation therapy: a proof-of-concept study using smartphone sensors and LLM-derived ratings in adolescents with anhedonia. NPP—Digit Psychiatry Neurosci 3, 24 (2025). https://doi.org/10.1038/s44277-025-00045-w

DOI: https://doi.org/10.1038/s44277-025-00045-w