Abstract

Deep learning has shown strong performance in musculoskeletal imaging, but prior work has largely targeted conditions where diagnosis is relatively straightforward. More challenging problems remain underexplored, such as detecting Bankart lesions (anterior-inferior glenoid labral tears) on standard MRIs. These lesions are difficult to diagnose due to subtle imaging features, often necessitating invasive MRI arthrograms (MRAs). We introduce ScopeMRI, the first publicly available, expert-annotated dataset for shoulder pathologies, and present a deep learning framework for Bankart lesion detection on both standard MRIs and MRAs. ScopeMRI contains shoulder MRIs from patients who underwent arthroscopy, providing ground-truth labels from intraoperative findings, the diagnostic gold standard. Separate models were trained for MRIs and MRAs using CNN- and transformer-based architectures, with predictions ensembled across multiple imaging planes. Our models achieved radiologist-level performance, with accuracy on standard MRIs surpassing radiologists interpreting MRAs. External validation on independent hospital data demonstrated initial generalizability across imaging protocols. By releasing ScopeMRI and a modular codebase for training and evaluation, we aim to accelerate research in musculoskeletal imaging and foster the development of datasets and models that address clinically challenging diagnostic tasks.

Similar content being viewed by others

Introduction

Deep learning (DL) in medical imaging has transformed healthcare research, improving non-invasive diagnosis and creating clinical decision aids across numerous specialties1,2,3,4. In musculoskeletal imaging, DL has achieved impressive results in diagnosing conditions such as anterior cruciate ligament (ACL) tears, meniscus injuries, and rotator cuff disorders5,6,7. However, these studies target pathologies where clinicians already excel. For example, initial diagnosis of ACL tears rarely requires imaging because the Lachman test, a physical exam for ACL tears, achieves a sensitivity and specificity of 81%8. On magnetic resonance imaging (MRI), sensitivity and specificity are as high as 92% and 99%, respectively9. Similarly, radiologists routinely achieve high diagnostic accuracy for meniscus and rotator cuff tears10,11. This reduces the potential clinical impact of applying DL to the diagnosis of these pathologies.

In contrast, anterior-inferior glenoid labral tears, or Bankart lesions, represent a far more challenging diagnostic task. These lesions are a leading cause of shoulder instability, and frequently occur in patients with traumatic shoulder dislocations12. Without accurate diagnosis and timely treatment, Bankart lesions can lead to chronic instability, progressive bone loss, and significant reductions in quality of life12,13. However, diagnosing these lesions on standard (non-contrast) MRIs is exceptionally challenging due to their subtle imaging features and frequent overlap with normal anatomy, resulting in high inter-observer variability, even among experienced radiologists14.

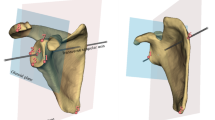

Current clinical practice often relies on MRI arthrograms (MRAs), where contrast is injected into the joint to enhance visualization of labral structures15,16. The improved visualization of intra-articular structures is apparent in Fig. 1, which depicts the same Bankart lesion on an MRA and a standard (non-contrast) MRI. While MRAs achieve sensitivity and specificity rates of 74–96% and 91–98%, respectively17,18,19, they are invasive, more expensive than standard MRIs, and associated with patient discomfort and risks such as allergic reactions and joint infections15,20,21,22,23,24. Standard (non-contrast) MRIs, on the other hand, are non-invasive and more widely available but are significantly less reliable for detecting Bankart lesions, with reported sensitivity rates as low as 52–55%25,26. This disparity underscores the urgent need for non-invasive diagnostic approaches that can match the diagnostic accuracy of MRAs while reducing patient burden and healthcare costs.

To address this unmet need, we introduce SCOPE-MRI (Shoulder Comprehensive Orthopedic Pathology Evaluation-MRI), the first publicly available, expert-annotated dataset for detecting Bankart lesions on shoulder MRIs. ScopeMRI provides a curated resource for advancing machine learning research in musculoskeletal imaging, a domain currently lacking accessible datasets. In addition to annotations for Bankart lesions, ScopeMRI includes expert labels for other clinically significant pathologies, such as superior labrum anterior-to-posterior (SLAP) tears, posterior labral tears, and rotator cuff tears. While this study focuses exclusively on Bankart lesions to demonstrate our approach, the inclusion of additional labels facilitates future research addressing diagnostic challenges in musculoskeletal imaging.

Our prior work demonstrated the initial feasibility of detecting Bankart lesions on standard MRIs using deep learning27. Building on that preliminary work, this article introduces the ScopeMRI dataset, incorporates external validation data, includes a detailed comparison of model architectures and pretraining strategies, uses stratified cross-validation for stable architecture selection, and provides an analysis of model interpretability. These enhancements provide a comprehensive evaluation of our approach and its implications for tackling challenging diagnostic tasks in musculoskeletal imaging.

We present a comprehensive workflow demonstrating best practices for leveraging deep learning in small, imbalanced datasets, using Bankart lesions as a representative case study of a challenging diagnostic task. Our workflow includes several components. (1) Domain-Relevant Transfer Learning: we evaluated several state-of-the-art architectures on MRNet28, including convolutional neural networks (CNNs) and transformers, and selected AlexNet29, Vision Transformer (ViT)30, and Swin Transformer V131 for fine-tuning on our dataset; comparisons with ImageNet-initialized32 models demonstrated superior performance with MRNet pretraining across all models and modalities, highlighting the value of domain-specific pretraining for specialized tasks. (2) Stratified Cross-Validation for Stable Architecture Selection: we employed an eight-fold stratified cross-validation strategy to evaluate model performance variability across dataset partitions. The most stable architecture for each view-modality was identified and re-trained on the initial dataset split, ensuring a consistent validation set for training the multi-view ensemble. (3) Multi-View Ensembling: by combining predictions across sagittal, axial, and coronal MRI views, we leveraged complementary diagnostic information to improve overall accuracy. (4) External Validation and Interpretability: external testing on an independent dataset provided initial evidence of generalizability. Grad-CAM33 visualizations highlighted alignment with clinically relevant features, offering insights into model decision-making.

This study underscores the importance of curating and publicly releasing datasets for challenging diagnostic tasks, as widespread availability of high-quality datasets is essential for developing DL models that can generalize across diverse patient populations and imaging protocols. By demonstrating that even small, imbalanced datasets can yield clinically relevant performance when combined with effective DL methodologies, we highlight the feasibility and value of DL in low data regimes. Notably, our models achieved diagnostic performance on standard MRIs comparable to radiologists interpreting MRAs—a less accessible and more invasive imaging modality—demonstrating the impact of targeted dataset creation. With the release of ScopeMRI, we enable future research in musculoskeletal imaging and encourage broader contributions in this underexplored domain. To further support these efforts, we also release a publicly available code repository containing a general-purpose training pipeline for medical imaging, including cross-validation, hyperparameter tuning, and Grad-CAM visualization, which is readily adaptable to other binary classification tasks on MRI and computed tomography (CT) data.

Results

Model selection and hyperparameter tuning

Results from the single best trial using the method described in Section “Model Architecture & Pretraining” for each of the nine model types are presented in Section S4. AlexNet, Vision Transformer, and Swin Transformer V1 performed the best on the MRNet dataset28 (highest AUC), so were selected for fine-tuning on our dataset.

To further optimize performance, we conducted 100 Hyperband34 hyperparameter tuning trials for each of the six view-modalities using the three model architectures (AlexNet, ViT, Swin Transformer). Each trial was initialized with MRNet-pretrained weights. We repeated this process with ImageNet initialization for comparison, resulting in 18 MRNet Hyperband loops and 18 ImageNet Hyperband loops—each loop consisting of 100 trials, with the “best” trial from each loop being the one achieving the highest validation AUC. The results of these “best” trials are presented in Fig. 2, where we show the AUC difference between MRNet and ImageNet pretraining for each model type and view-modality.

Positive values indicate higher performance with MRNet pretraining compared to ImageNet pretraining. Each cell represents the AUC difference for the corresponding model and view-modality pair, with results derived from the best-performing hyperparameter set for each model and view-modality. The AUC differences have been scaled by 100 for readability and are presented as percentages.

Model stability across splits

Table 1 and Fig. 3 show the cross-validation results for the models selected based on stability, defined by the lowest standard deviation in validation fold AUC. For Sagittal MRA, ViT was selected despite its lower mean AUC (0.725) compared to Swin Transformer (0.755), due to its substantially lower standard deviation (0.054 compared to 0.123). For the other view modalities, the model with the lowest standard deviation of AUC also had the highest mean AUC, demonstrating that stability was generally aligned with performance. The full cross-validation results for the final models selected for each view-modality are presented in Fig. 3. The hyperparameters for these models are provided in Section S5.

ROC AUC quantifies the model’s ability to distinguish between classes. Each box shows the interquartile range (IQR, 25th–75th percentile), with whiskers extending to 1.5 times the IQR. The horizontal line within each box represents the median AUC, while green triangles indicate the mean AUC. Black dots depict individual split AUCs, with dots outside the whiskers representing outliers. This visualization demonstrates the model’s performance stability on different validation folds across sagittal, axial, and coronal views for both standard MRIs and MRI arthrograms (MRAs).

Multi-view ensemble performance

The receiver operating characteristic (ROC) curves in Fig. 4 depict the performance of the single-view models and multi-view ensemble on the hold-out test set. For the model to have predicted a Bankart lesion diagnosis, the multi-view ensemble’s output probability must have exceeded the thresholds of 0.71 and 0.19 for standard MRIs and MRAs, respectively. These thresholds were chosen as described in Section “Re-Training on Initial Split and Multi-View Ensembling.” Results of the multi-view ensemble on the hold-out test set using these thresholds compared to radiologists are depicted in Table 2.

Results are shown on a internal standard MRIs, b MRI arthrograms (MRAs), and c external standard MRIs. The single-view models correspond to those included in the multi-view ensemble. Shaded regions around each curve represent 95% confidence intervals, calculated through bootstrapping with 1000 iterations. Radiologist performance is marked with red X symbols, illustrating sensitivity and false positive rates derived from original radiology reports (internal datasets only). The dashed diagonal line indicates the performance of a random classifier (AUC = 0.50).

Model interpretability

Grad-CAM33 visualizations highlight regions relevant to a model’s predictions. Figure 5 presents Grad-CAM heatmap visualizations for the axial view for four representative cases: an MRA without a tear, an MRA with a Bankart lesion, a standard MRI without a tear, and a standard MRI with a Bankart lesion. For all heatmaps, annotations highlight the anterior labrum, placed by a shoulder/elbow fellowship-trained orthopedic surgeon. The model attended to the relevant portions of the image, as determined by the surgeon, on all four cases.

Cases with and without Bankart lesions are presented. The model correctly classified all four cases. White circles highlight the anterior labrum (the region of interest), annotated by a shoulder/elbow fellowship-trained orthopedic surgeon. Heatmaps indicate regions most influential to the model’s prediction, with warmer colors (red/yellow) signifying higher relevance.

Discussion

The heatmap in Fig. 2 reveals that all model-view-modalities showed positive performance improvements when MRNet pretraining was used compared to ImageNet initialization, confirming the benefit of using domain-specific pretraining across all models and view-modalities. Interestingly, when comparing the AUC improvements between standard MRIs and MRAs, we observed a significantly higher mean AUC difference (two-tailed unpaired t-test p < 0.001) for standard MRIs (mean = 8.55%, s.d. = 1.06) compared to MRAs (mean = 4.42%, s.d. = 1.69). This suggests that MRNet pretraining has a more substantial positive impact on performance for the more challenging, imbalanced standard MRIs than for MRAs, where contrast makes lesion detection is easier. These results further highlight the importance of domain-specific pretraining when working with challenging datasets.

The cross-validation results illustrate the variability in model performance across dataset splits. This variability underscores the need for careful model selection when working with small and imbalanced datasets. While we prioritized stability as a selection criterion, it is important to acknowledge that even the most stable models showed some variation in performance (Fig. 3). This underscores the importance of re-training on the initial random split (where hyperparameter tuning was performed) to avoid potential bias in selecting particularly performant splits from the cross-validation. Notably, the MRA results exhibited lower variability compared to standard MRI. This is expected, as the intra-articular contrast in MRAs generally enhances labrum visualization15, possibly facilitating more stable and predictable model performance. For the easiest-to-interpret view-modality, the axial MRA35, the simplest architecture, AlexNet, was selected. In contrast, the Swin Transformer, being more complex than both AlexNet and ViT, was chosen for the two most challenging view-modalities—sagittal MRI and coronal MRI35. The Vision Transformer, which represents an intermediate level of complexity, was selected for the remaining view-modalities. This pattern suggests that simpler models perform more reliably on easier view-modalities, while more complex models are better suited for interpreting more challenging view-modalities.

The multi-view ensemble in Fig. 4 consistently outperformed single-view models across all datasets, demonstrating the value of integrating diagnostic information from sagittal, axial, and coronal views. Note that the single-view models in Fig. 4 performed better than during cross-validation (Fig. 3), likely due to differences in dataset size, tuning, and training schedules. The larger hold-out test set provided more stable estimates compared to the smaller validation folds, and hyperparameter tuning was specifically optimized for this dataset split. Additionally, the final re-training allowed for up to 100 epochs, compared to 30 epochs during cross-validation, potentially allowing the models to better learn Bankart lesion features. For standard MRIs (Table 2), the ensemble achieved a sensitivity of 83.33% and a specificity of 90.77%, substantially exceeding the radiology reports on this dataset (16.67% sensitivity, 86.15% specificity). These results also compare favorably to the literature-reported sensitivity (52–55%) and specificity (89–100%) for radiologists interpreting standard MRIs14,25. The ensemble’s strong performance on standard MRIs addresses a critical limitation of this non-contrast imaging modality, which often struggles to reliably detect subtle Bankart lesions. Notably, the ensemble’s sensitivity and specificity on standard, non-invasive MRIs compare to those typically reported for radiologists interpreting invasive MRAs (74–96% sensitivity, 91–98% specificity)17,18,19, further underscoring its utility. Of note, it is expected that the radiology report performance on our standard MRI cohort is lower than published ranges. This is because our figures reflect routine clinical reports, whereas many literature estimates come from retrospective reader studies with consensus from multiple radiologists, settings that typically produce higher sensitivities than day-to-day reporting26. The standard MRI test set also included only six Bankart-positive cases, making sensitivity volatile (one additional true positive would shift sensitivity by 16.7 percentage points). Together, the use of routine reports and the small number of positives plausibly explain the lower sensitivity observed here.

For MRAs (Table 2), the ensemble achieved a sensitivity of 94.12% and a specificity of 86.21%, exceeding the sensitivity and matching the specificity of radiology reports on this dataset (82.35% sensitivity, 86.21% specificity). These results align well with literature benchmarks for radiologists interpreting MRAs, which report sensitivity and specificity ranges of 74–96% and 91–98%, respectively17,18,19. The ensemble’s ability to achieve performance comparable to radiologists interpreting MRAs reinforces its ability to effectively combine diagnostic information across views and leverage the enhanced contrast provided by MRAs. Moreover, this performance on the less-imbalanced MRA test set (37% positive cases) increases confidence in the results, as the MRAs provide a more balanced evaluation compared to the standard MRI dataset.

The external dataset results in Fig. 4c and Table 2 provide preliminary evidence of generalizability, with the ensemble achieving an AUC of 0.90, perfect sensitivity (100%), and a specificity of 80%. These results suggest that the model’s performance is not overly dependent on the internal dataset’s characteristics. However, the small size of the external dataset (n = 12) and the low number of positive cases (n = 2) underscore the need for further validation on larger, more diverse datasets to confirm broader applicability. Overall, the ensemble’s performance highlights its ability to address the diagnostic challenges associated with Bankart lesions, a subtle pathology that often eludes detection on non-contrast imaging. By achieving sensitivity and specificity comparable to radiologists interpreting MRAs, the ensemble offers a potential non-invasive alternative to MRAs, particularly in settings where invasive imaging is less accessible or contraindicated. Further research is needed to validate its performance across diverse clinical settings and patient populations.

The Grad-CAM visualizations in Fig. 5 for the MRAs demonstrate focused activation within the anterior labrum for both the no-tear case and Bankart lesion case. For the no-tear MRA, the area of highest activation is in the anterior-inferior labrum region, but there is an additional lesser area of activation near the posterior

labrum. In the Bankart lesion case, there is strong overlap between the activation and the surgeon-annotated region highlighting the tear, likely indicating that the model successfully identified the pathological features associated with the tear. For the standard MRIs, the Grad-CAM visualizations show less localized activation compared to MRAs, with several peripheral areas of minor activation near the image edges in both cases. This reduced specificity in the activation maps may result from the lower visual clarity of labral structures on standard MRIs due to the lack of intra-articular contrast. Additionally, as noted in Table 3, the standard MRIs had a significantly lower percentage of 3.0 T exams (i.e. higher proportion of lower quality 1.5 T exams)—suggesting that the lower average image quality in the standard MRI group may also have contributed to the less focused heatmap. Although the standard MRI dataset was larger overall (335 MRIs vs. 251 MRAs), it contained far fewer positive cases (8.6% vs. 31.9%), creating substantial class imbalance. This imbalance likely limited the model’s ability to learn localized features, leading to more diffuse activations. The broader activations likely reflect a combination of these factors, underscoring the need for cautious interpretation. For the no-tear standard MRI case, the heatmap shows the highest activation in the anterior labrum, suggesting that the model still prioritizes this region as a key diagnostic feature, even in the absence of pathology. For the Bankart lesion case, the anterior labrum remains the area of highest activation, but the heatmap also strongly highlights additional regions within the joint space. Unlike for the MRA, the annotating surgeon noted no obvious tear characteristics for the standard MRI with a Bankart lesion. Consequently, the additional highlighted regions in the model’s heatmap may reflect subtle imaging cues associated with the tear that are not easily discernible to human observers. This suggests that the model may capture features indicative of the tear that are challenging for radiologists or surgeons to detect on standard MRIs due to the lack of intra-articular contrast.

Recent advancements in deep learning (DL) have significantly enhanced the detection of musculoskeletal pathologies in MRI scans. Notably, DL models have been developed for diagnosing anterior cruciate ligament (ACL) tears, meniscus injuries, and rotator cuff disorders4,6,7. For example, Zhang et al.5 applied DL algorithms to ACL tear detection, achieving diagnostic performance comparable to that of experienced radiologists. However, like many studies in musculoskeletal imaging, the data used in this work are not publicly available. While such studies demonstrate impressive results, they largely focus on pathologies where clinicians already excel. For instance, the Lachman test—a physical exam for detecting complete ACL ruptures—has a sensitivity and specificity of 81%4,8, and imaging is often used for confirmation and surgical planning rather than for primary diagnosis. Similarly, MRI-based models for meniscus and rotator cuff tears address diagnostic tasks where radiologists already achieve high performance10,11, limiting their clinical impact. In contrast, the detection of subtle musculoskeletal injuries, such as Bankart lesions, on MRI presents significant challenges, particularly in the absence of intra-articular contrast36. A recent survey of publicly available MRI datasets aimed to comprehensively catalog existing resources, but notably, no shoulder datasets were identified, underscoring a critical gap in the field37. In contrast, datasets like MRNet28 and fastMRI38 have catalyzed progress in knee and other anatomical regions by providing resources for reproducibility and benchmarking. The lack of comparable datasets for shoulder pathologies severely limits the development of DL models targeting several clinically challenging diagnoses. Existing studies on labral tears have primarily focused on superior labrum anterior-to-posterior (SLAP) tears. Ni et al.39 used a convolutional neural network (CNN) to detect SLAP tears on MRI arthrograms (MRAs), which improve diagnostic clarity but are invasive, costly, and associated with procedural risks15,21,22,24. Clymer et al., in contrast, examined SLAP tears on standard MRIs but focused on pretraining methodologies rather than addressing broader clinical challenges40. Importantly, no prior studies have targeted Bankart lesion detection—which, on standard MRIs, is a task that radiologists themselves find exceedingly difficult, achieving only 16.7% sensitivity in our dataset. Our study is the first to address this unmet clinical need by developing DL models for Bankart lesion detection on both standard MRIs and MRAs. Unlike prior studies, we use all available MRI sequences (e.g., T1, T2, MERGE, PD, STIR) across sagittal, axial, and coronal views, and explored state-of-the-art architectures, including both CNNs and transformers. By leveraging domain-specific pretraining, stratified cross-validation, and multi-view ensembling, our models achieve diagnostic performance on non-invasive standard MRIs that rivals radiologist performance on MRAs—addressing a longstanding clinical challenge. Additionally, we introduce ScopeMRI, the first publicly available, expert-annotated shoulder MRI dataset. ScopeMRI includes image-level annotations for Bankart lesions, SLAP tears, posterior labral tears, and rotator cuff injuries, providing a critical resource for advancing DL applications in shoulder imaging. By addressing both a clinically significant unmet need and the systemic lack of public datasets, our work demonstrates the potential of DL to improve diagnostic workflows for challenging diagnoses in musculoskeletal imaging.

Several limitations of this study should be noted. First is the small number of positive cases in the test set (6/71, 8.45%) for standard MRIs. This imbalance reflects current clinical practice, where standard MRIs are rarely ordered when a Bankart lesion is suspected due to their perceived lower diagnostic utility compared to MRAs. While our findings demonstrate the potential of deep learning to improve the utility of standard MRIs, the limited sample size and class imbalance constrained statistical power and may reduce the stability of reported performance metrics. Larger, multi-institutional datasets will be required for future validation. By publicly releasing ScopeMRI, our goal is to help enable such studies. Additionally, the external dataset of 12 standard MRIs provides an initial effort to assess generalizability by introducing variability in imaging protocols, including differences in manufacturers and acquisition settings. However, this dataset does not represent a distinct patient population, as all included patients underwent surgery at our institution. Further, all 12 scans were standard MRIs, so the generalizability of our MRA models to external scans remains untested. Despite these limitations, incorporating variability in standard MRI imaging protocols is an important step toward understanding how models might perform across diverse imaging conditions. Finally, while our cross-validation approach was designed to identify the most stable architectures, our results still demonstrated performance variability across splits. This variability reflects the inherent challenges of small datasets, and results derived from such datasets should always be interpreted with caution.

A future reader/observer study is critical to evaluate the model’s impact on clinical decision-making. While our models outperformed radiologists’ original reports on our dataset, they should be used as decision-support tools rather than standalone decision-makers1,4. A reader study would assess how clinicians interact with the model’s outputs and compare clinician model-assisted and model-unassisted performance, providing insights into the practical utility of the models and their potential to enhance diagnostic confidence and accuracy.

Enhancing model interpretability remains a critical step toward clinical adoption. This study used Grad-CAM33 as a post-hoc interpretability tool to visualize the regions most contributing to the model’s predictions. While Grad-CAM provides useful insights, it has limitations, including variability based on chosen model layer and input characteristics41,42,43. Given these challenges, Grad-CAM is best viewed as a tool for approximate understanding rather than a definitive validation mechanism. Future work should explore integrating interpretability techniques directly into the model architecture, such as the prototype-based “This Looks Like That” framework44, which enables models to reason through clinically interpretable features. This approach has shown promise in other medical domains, such as breast cancer1, electroencephalography45, and electrocardiography46, and could improve trust and usability among clinicians by aligning model reasoning with familiar diagnostic patterns.

A transformative direction for this research lies in exploring techniques to convert standard MRIs into MRA-like images. This would be similar to the “virtual staining” approach that has shown success in digital histopathology47, and could enhance interpretability and usability for radiologists by creating synthetic MRA-like images that preserve the diagnostic familiarity of traditional arthrograms. However, this would be most feasible with paired standard MRI-MRA datasets, which may be challenging to acquire. Future work should investigate the feasibility of generating such datasets and the performance of models trained on these synthetic images.

We propose a deep learning workflow that successfully detects Bankart lesions on both standard MRIs and MRAs, achieving high accuracy, sensitivity, and specificity on both modalities. Our models address a critical clinical need by enabling diagnostic performance on non-invasive standard MRIs comparable to radiologists interpreting MRAs, a more invasive, more costly, and less accessible modality. This approach has the potential to reduce reliance on arthrograms, minimizing patient burden and enhancing diagnostic accessibility, particularly in resource-limited settings. Alongside addressing this diagnostic challenge, we introduce ScopeMRI, the first publicly available, expert-annotated shoulder MRI dataset. By providing annotations for multiple clinically significant shoulder pathologies, ScopeMRI supports future research and facilitates the development of diagnostic models for complex musculoskeletal conditions. Our study demonstrates that even small, imbalanced datasets can achieve clinically relevant results if effectively used, highlighting the value of similar dataset creation efforts for other diagnostic challenges. To enable reproducibility and broader application, we also release a modular deep learning codebase designed for binary classification on MRIs and CTs. While developed for Bankart lesion

detection, the framework supports training, cross-validation, hyperparameter tuning, and Grad-CAM visualization, and is readily adaptable to other diagnostic tasks and imaging modalities. Future work should validate our findings on larger, external datasets to confirm their generalizability across diverse clinical environments. Furthermore, integrating interpretability methods into diagnostic workflows will be essential for fostering clinical trust and adoption. These efforts are critical for translating technological advancements into meaningful improvements in patient care.

Methods

SCOPE-MRI dataset collection

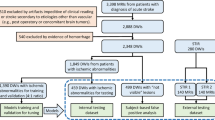

Figure 6 overviews the data collection and labeling protocol. The ScopeMRI dataset consists of patients who underwent shoulder arthroscopy for any diagnosis at University of Chicago Medicine between January 2013 and January 2024. Patients aged 12 to 60 who had received a standard shoulder MRI and/or MRI arthrogram (MRA) within one year prior to arthroscopic surgery of the ipsilateral shoulder were included. Children under the age of 12 typically have open physes and therefore different pathology of injury in the shoulder. We excluded patients greater than 60 years old as our study focused on acute, potentially operative labral tears, and older patients typically have degenerative labral tears. University of Chicago Medicine’s clinical research database was queried to identify medical record numbers (MRNs) and clinical information for patients meeting these criteria. This information included accession numbers for the appropriate pre-operative standard MRI/MRA for each patient. University of Chicago Medicine’s research imaging office then de-identified the scans and provided them along with a unique identifier key. This process yielded 601 patients with 743 MRIs. Additional exclusion criteria included patients with prior shoulder surgery on the ipsilateral shoulder, insufficient intraoperative photos, or operative notes with insufficient detail. Manual exclusion was performed for these criteria through chart review, narrowing the dataset down to 546 patients with 586 MRIs (335 standard MRIs and 251 MRAs). Labels were obtained during this manual chart review by accessing intraoperative arthroscopy photos alongside the operative note associated with the surgery following each standard MRI or MRA. The anterior-inferior labrum was then classified as either torn (Bankart lesion) or intact based on this information. Binary labels were also created for SLAP tears, posterior labral tears, and rotator cuff tears (i.e. any type of rotator cuff tear vs. no tear). Labels were curated by two shoulder/elbow fellowship-trained orthopedic surgeons and two orthopedic surgery residents trained by the surgeons. A common subset of 20 MRIs was labeled by all raters to measure inter-rater reliability—they achieved a Fleiss’s kappa = 1.0 for all four labels included in ScopeMRI, indicating complete agreement.

SCOPE-MRI dataset characteristics

After applying exclusion criteria (detailed in Fig. 6), our final dataset was narrowed down to 558 patients and 586 MRAs/standard MRIs (586 shoulders; some patients had bilateral surgeries). Dataset demographics and characteristics are in Table 3.

Out of the 586 MRIs, there were 109 (18.5%) with Bankart lesions based on intraoperative photos in correlation with the surgeon’s operative note (diagnostic gold standard). This ratio was approximately the same in the training, validation, and testing sets due to using random stratified sampling. The MRA and standard MRI groups varied significantly. Of the 251 MRI arthrograms, 80 (31.9%) had Bankart lesions, while only 8.6% (29/335) of standard MRIs had Bankart lesions (p < 0.001). MRA patients were significantly younger than standard MRI patients (31.0 versus 48.6 years old, p < 0.001). Moreover, there was a lower percentage of female patients in the MRA group than the standard MRI group (35.1% versus 50.1%; p < 0.001). The percent of right- sided exams between both groups was similar (61.0% MRA versus 63.3% standard MRI; p = 0.565). However, there was a higher proportion of 3.0 T scans for MRAs than for standard MRIs (73.3% versus 54.6%; p < 0.001). The remaining exams were all 1.5 T; all were taken with either a GE Medical Systems or Philips Healthcare machine.

The differences in the demographic and clinical characteristics between the MRA and standard MRI cohorts are consistent with current clinical practices. Younger patients, who are more likely to be suspected of having labral tears, are often preferentially given MRAs due to their demographic and activity levels. Consequently, the higher prevalence of labral tears in the MRA group aligns with the expectation that MRAs are ordered when there is a stronger suspicion of labral pathology19.

External dataset collection and description

In addition to the ScopeMRI dataset, we included an external dataset to evaluate the model’s ability to generalize to imaging protocols from other institutions. External dataset demographics and characteristics are displayed in Table 4. This external dataset consisted of 12 standard shoulder MRIs performed at outside institutions; no MRAs were available. These standard MRIs were imported into University of Chicago Medicine’s records because the patients subsequently underwent arthroscopic surgery at our institution. Ground truth labels for these MRIs were derived using the same method as for the ScopeMRI dataset: findings from arthroscopy photos and documented in the surgical notes. The external dataset differs from the ScopeMRI dataset in imaging protocols, including variations in MRI machine manufacturers, magnet strengths, and acquisition settings. Specifically, it includes four 3.0 T, seven 1.5 T, and one 1.0 T scan; manufacturer information is as follows: eight Siemens, one Philips Healthcare, one GE Health Systems, and two Hitachi machines.

Image preprocessing

Imaging data was preprocessed to prepare it for our deep learning study. Preprocessing involved resizing the volumes to a shape of n × 400 × 400 pixels, where n was the number of slices in the MRI. Then, the slices were center-cropped to 224 × 224 to isolate the region of interest. Standardization was performed for exams of each series/sequence type—T1, T2, MERGE, PD, or STIR (separately for with vs. without fat-saturation, as applicable)—after computing the corresponding intensity distribution from the training set. These values were used to adjust the intensities in all datasets, including for training, validation, and testing. Intensities were then scaled so that the voxel values ranged between 0 and 1. Both classes in the training set were augmented ten-fold using random rotations, translations, scaling, horizontal flips, vertical flips, and Gaussian noise.

Deep learning workflow

This section outlines the process of selecting and optimizing model architectures for detecting Bankart lesions.

We did not pool standard MRIs and MRAs into a single training set as the two modalities differed significantly in patient demographics and lesion prevalence (see Table 3), so mixing them without harmonization would risk the model learning modality or population-specific cues rather than pathology. We therefore trained and evaluated separate models per modality to avoid confounding.

We leveraged pretraining on the MRNet dataset28, a publicly available knee MRI dataset, to address the challenges posed by our small, imbalanced dataset. We also used pretrained weights from the ImageNet32 dataset for comparison. Model selection began with hyperparameter tuning across various architectures. Top-performing models underwent additional optimization on our ScopeMRI shoulder dataset (Section “Hyperparameter Tuning”).

To ensure stable model selection, we employed stratified cross-validation and prioritized stability across splits (Section “Dataset Split & Cross-Validation”). Final models were re-trained on the initial dataset split for sagittal, coronal, and axial views, which were combined into a multi-view ensemble for evaluation on a hold-out test set and an external dataset (Section “Re-Training on Initial Split and Multi-View Ensembling”). Performance metrics included accuracy, sensitivity, specificity, and area under the receiver operating characteristic curve (AUC). Post-hoc interpretability was assessed using Grad-CAM33, providing visual insights into model predictions (Section “Interpretability”). Implementation details and system requirements are described in Section S1 and Section S6, respectively. Figure 7 contains a schematic overview of our training and inference setup.

Model architecture and pretraining

We evaluated multiple state-of-the-art architectures to address the diagnostic challenges of Bankart lesion detection. These included AlexNet29, DenseNet48, EfficientNet49, ResNet3450, ResNet5050, Vision Transformer (ViT)30, Swin Transformer V131, and Swin Transformer V251, all initialized with ImageNet32 weights. For each MRI, individual slices were passed through the 2D model feature extractor, and features were aggregated across slices using max pooling, resulting in vector v. Vector v was then fed into a classifier layer followed by a sigmoid function to output a probability for each scan. Probabilities from MRI sequences from the same overall MRI were averaged together to get probabilities for each view. See Fig. 7 for additional details.

We also evaluated a custom 3D CNN. The custom 3D CNN architecture was inspired by AlexNet due to its strong performance in the detection of knee pathologies on MRIs28. It consisted of five convolutional layers with 96, 256, 384, 384, 256 filters, followed by ReLU activations, batch normalization, and max pooling after the first, second, and fifth convolutional layers. An adaptive average pooling layer and two fully connected layers (4096 neurons each) were included in the classifier, with dropout applied before the final layer.

For pretraining, we used the MRNet dataset28, a publicly available knee MRI dataset chosen for its anatomical and contextual relevance to musculoskeletal imaging. Pretraining for the initial architecture search was conducted on the sagittal view of MRNet using the “abnormal” label as the target. The top-performing architectures—AlexNet, Vision Transformer, and Swin Transformer V1—were identified based on their AUC on the MRNet validation set. These models were subsequently pretrained on the axial and coronal views of MRNet to prepare for fine-tuning on our shoulder dataset. Additional details on MRNet pretraining can be found in Section S3.

Hyperparameter tuning

Hyperparameter tuning was conducted separately for standard MRIs and MRAs using Hyperband optimization34. The search space included learning rate (log-uniform distribution: 1e-8 to 1e-1), weight decay (log-uniform distribution: 1e-6 to 1e-1), dropout rate (uniform distribution: 0–0.5), and learning rate scheduler (choice between CosineAnnealingLR with Tmax = 10 and ReduceLROnPlateau with factor=0.5 & patience=3).

To assess if MRNet pretraining resulted in performance improvements compared to ImageNet-initialization29, for each view (sagittal, axial, coronal) and modality (standard MRI, MRA), two Hyperband hyperparameter tuning runs were conducted: one where model weights were initialized via the MRNet pretraining strategy described in Section “Model architecture and pretraining” and Section S3, and one using ImageNet-weights29. For both cases, weights for the classifier were randomly initialized. The hyperparameter combination achieving the highest AUC on our dataset’s validation set was designated as the “best trial”. The hyperparameters and weight initialization strategy from this “best trial” were then applied to the stratified 8-fold cross-validation on our dataset.

Dataset split and cross-validation

Our dataset was initially split into a 20% hold-out test set, as well as an 80% training-validation (70% training & 10% validation) set using random stratified sampling to preserve class ratios. The initial training-validation split was used for all hyperparameter tuning.

All splitting was performed at the MRI (shoulder) level. Each shoulder contributed at most one standard MRI and/or one MRA; if repeat imaging was obtained due to poor quality, the initial scan was excluded. Patients with prior ipsilateral shoulder surgery or prior injuries were excluded. In rare cases where a patient underwent both a standard MRI and an MRA of the same shoulder, both scans were retained, but the standard MRI and MRA datasets were modeled and evaluated separately. This design ensured that all sequences/views from a given shoulder remained within a single split (train, validation, or test), preventing data leakage.

Given the variability inherent in small, imbalanced datasets, we conducted cross-validation to evaluate model stability across different training-validation splits. This was achieved by recombining the original 10% validation and 70% training sets (in total, comprising 80% of the original data), then using random stratified sampling to generate 8 folds. The cross-validation was performed using the hyperparameters identified from the best trial in the tuning phase described in Section “Hyperparameter tuning”. For each view-modality pair, models were trained on seven folds and validated on the remaining fold. Validation AUC was recorded for each fold, and the architecture exhibiting the highest stability (lowest standard deviation in validation AUC) was selected for each view-modality combination.

Re-training on initial split and multi-view ensembling

To minimize potential biases introduced by selecting specific cross-validation splits, we re-trained the most stable model for each view-modality pair on the initial random training-validation split. This re-training also ensured consistency in the validation set across views so it could be used for prediction threshold selection.

Probabilities from the coronal, sagittal, and axial view models were averaged to generate a multi-view ensemble prediction for both MRAs and standard MRIs. The prediction threshold for accuracy, sensitivity, and specificity was selected based on the point where sensitivity and specificity were equal on the unified validation set. This method was chosen to balance avoiding missed diagnoses while minimizing overcalls. The multi-view ensemble model was then evaluated on the hold-out test set and small external validation set, providing the final performance metrics for Bankart lesion detection.

Interpretability

Grad-CAM33 was used as a post-hoc interpretability tool to visualize model attention and identify regions within each image that contributed most to the model’s predictions. For convolutional architectures (i.e. AlexNet), the final convolutional layer was used to compute Grad-CAM outputs. For transformer-based architectures (i.e. Vision Transformer and Swin Transformer), the outputs from the final attention block were used.

Heatmaps were generated for representative examples from the axial view for both modalities (standard MRI & MRA) for both positive (Bankart lesion present) and negative cases. These heatmaps were scaled to the original preprocessed image dimensions and overlaid on the corresponding MRI slices for visualization. This allowed for a qualitative assessment of model attention alignment with clinically relevant features.

Ethics approval and consent to participate

This study received approval from the University of Chicago’s Institutional Review Board (IRB24-0025).

Data availability

SCOPE-MRI has been deposited to https://www.midrc.org/. Exact access instructions are found in the code repository. Due to Institutional Review Board (IRB) restrictions, data collected from external institutions cannot be publicly released, and therefore, the external dataset will not be included.

Code availability

To support reproducibility and broader applicability, we publicly release our training and evaluation code as a modular framework for binary classification on MRI and CT scans. The codebase includes support for training, hyperparameter tuning, cross-validation, and Grad-CAM visualizations. While originally developed for Bankart lesion detection, it is designed to apply deep learning to any binary classification task on MRIs or CTs. It is available at: https://github.com/sahilsethi0105/scope-mri/.

References

Barnett, A. J. et al. A case-based interpretable deep learning model for classification of mass lesions in digital mammography. Nat. Mach. Intell. 3, 1061–1070. https://www.nature.com/articles/s42256-021-00423-x (2021).

C, allı, E., Sogancioglu, E., van Ginneken, B., van Leeuwen, K. G. & Murphy, K. Deep learning for chest X-ray analysis: A survey. Medical Image Analysis 72, 102125. https://www.sciencedirect.com/science/article/pii/S1361841521001717 (2021).

Sun, R. et al. Lesion-Aware Transformers for Diabetic Retinopathy Grading, 10933–10942. https://ieeexplore.ieee.org/document/9578017/ (IEEE, 2021).

Fritz, B. & Fritz, J. Artificial intelligence for MRI diagnosis of joints: a scoping review of the current state-of-the-art of deep learning-based approaches. Skelet. Radiol. 51, 315–329 (2022).

Zhang, L., Li, M., Zhou, Y., Lu, G. & Zhou, Q. Deep learning approach for anterior cruciate ligament lesion detection: evaluation of diagnostic performance using arthroscopy as the reference standard. J Magn Resonance Imaging 52, 1745–1752. https://onlinelibrary.wiley.com/doi/pdf/10.1002/jmri.27266 (2020)

Rodriguez, H. C. et al. Artificial intelligence and machine learning in rotator cuff tears. Sports Med. Arthrosc. Rev. 31, 67 http://journals.lww.com/sportsmedarthro/fulltext/2023/09000/artificial_intelligence_and_machine_learning_in.3.aspxURL (2023).

Lin, D. J. et al. Deep learning diagnosis and classification of rotator cuff tears on shoulder MRI. Investig. Radiol. 58, 405 http://journals.lww.com/investigativeradiology/fulltext/2023/06000/deep_learning_diagnosis_and_classification_of.5.aspx (2023).

van Eck, C. F., van den Bekerom, M. P. J., Fu, F. H., Poolman, R. W. & Kerkhoffs, G. M. M. J. Methods to diagnose acute anterior cruciate ligament rupture: a meta-analysis of physical examinations with and without anaesthesia. Knee Surg. Sports Traumatol. Arthrosc. 21, 1895–1903 (2013).

Smith, C. et al. Diagnostic efficacy of 3-T MRI for knee injuries using arthroscopy as a reference standard: a meta-analysis. Am. J. Roentgenol. 207, 369–377. https://ajronline.org/doi/10.2214/AJR.15.15795 (2016).

Rutten, M. J. C. M. et al. Detection of rotator cuff tears: the value of MRI following ultrasound. Eur. Radiol. 20, 450–457 http://www.ncbi.nlm.nih.gov/pmc/articles/PMC2814028 (2010).

Bolog, N. V. & Andreisek, G. Reporting knee meniscal tears: technical aspects, typical pitfalls and how to avoid them. Insights Imaging 7, 385–398 https://insightsimaging.springeropen.com/articles/10.1007/s13244-016-0472-y (2016).

Rutgers, C. et al. Recurrence in traumatic anterior shoulder dislocations increases the prevalence of Hill–Sachs and Bankart lesions: a systematic review and meta-analysis. Knee Surg. Sports Traumatol. Arthrosc. 30, 2130–2140 https://www.ncbi.nlm.nih.gov/pmc/articles/PMC9165262/URL (2022).

Kang, R. W. et al. Complications associated with anterior shoulder instability repair. Arthrosc. J. Arthrosc. Relat. Surg. 25, 909–920 http://linkinghub.elsevier.com/retrieve/pii/S0749806309002059URL (2009).

Loh, B., Lim, J. B. T. & Tan, A. H. C. Is clinical evaluation alone sufficient for the diagnosis of a Bankart lesion without the use of magnetic resonance imaging? Ann. Transl. Med. 4, 419 http://www.ncbi.nlm.nih.gov/pmc/articles/PMC5124608/URL (2016).

Chang, E. Y. et al. SSR white paper: guidelines for utilization and performance of direct MR arthrography. Skelet. Radiol. 53, 209–244, https://doi.org/10.1007/s00256-023-04420-6 (2024).

Chandnani, V. P. et al. Glenoid labral tears: prospective evaluation with MRI imaging, MR arthrography, and CT arthrography. Am. J. Roentgenol. 161, 1229–1235 (1993).

Rixey, A. et al. Accuracy of MR arthrography in the detection of posterior glenoid labral injuries of the shoulder. Skelet. Radiol. 52, 175–181 (2023).

eview : Journal Type Magee, T. 3-T MRI of the shoulder: is MR arthrography necessary? Am. J. Roentgenol. 192, 86–92 http://www.ajronline.org/doi/10.2214/AJR.08.1097URL (2009).

Woertler, K. & Waldt, S. MR imaging in sports-related glenohumeral instability. Eur. Radiol. 16, 2622–2636, https://doi.org/10.1007/s00330-006-0258-6 (2006).

Liu, F. et al. Comparison of MRI and MRA for the diagnosis of rotator cuff tears. Medicine 99, e19579 http://www.ncbi.nlm.nih.gov/pmc/articles/PMC7220562/URL (2020).

Newberg, A. H., Munn, C. S. & Robbins, A. H. Complications of arthrography. Radiology 155, 605–606. https://pubs.rsna.org/doi/10.1148/radiology.155.3.4001360 (1985)

Hugo, P. C., Newberg, A. H., Newman, J. S. & Wetzner, S. M. Complications of arthrography. Semin. Musculoskeletal Radiol. 2, 345–348 https://www.thieme-connect.com/products/ejournals/abstract/10.1055/s-2008-1080115 (1998).

Giaconi, J. C. et al. Morbidity of direct MR arthrography. Am. J. Roentgenol. 196, 868–874 https://www.ajronline.org/doi/10.2214/AJR.10.5145 (2011).

Ali, A. H. et al. Radio-carpal wrist MR arthrography: comparison of ultrasound with fluoroscopy and palpation-guided injections. Skelet. Radiol. 51, 765–775 (2022).

Arnold, H. Non-contrast magnetic resonance imaging for diagnosing shoulder injuries. J. Orthop. Surg. 20, 361–364 (2012).

Magee, T. H. & Williams, D. Sensitivity and specificity in detection of labral tears with 3.0-T MRI of the shoulder. Am. J. Roentgenol. 187, 1448–1452 http://ajronline.org/doi/10.2214/AJR.05.0338 (2006).

Sethi, S. et al. Toward non-invasive diagnosis of Bankart lesions with deep learning, 13407, 860–869 (SPIE, 2025). https://www.spiedigitallibrary.org/conference-proceedings-of-spie/13407/134073G/Toward-non-invasive-diagnosis-of-Bankart-lesions-with-deep-learning/10.1117/12.

Bien, N. et al. Deep-learning-assisted diagnosis for knee magnetic resonance imaging: development and retrospective validation of MRNet. PLOS Med. 15, e1002699 http://journals.plos.org/plosmedicine/article?id=10.1371/ (2018).

Krizhevsky, A., Sutskever, I. & Hinton, G. E. ImageNet Classification with Deep Convolutional Neural Networks, Vol. 25. https://proceedings.neurips.cc/paper_files/paper/2012/hash/c399862d3b9d6b76c8436e924a68c45b-Abstract.html (Curran Associates, Inc., 2012).

Dosovitskiy, A. et al. An image is worth 16x16 words: transformers for image recognition at scale. http://arxiv.org/abs/2010.11929 (2021).

Liu, Z. et al. Swin transformer: hierarchical vision transformer using shifted windows. http://arxiv.org/abs/2103.14030 (2021).

Deng, J. et al. ImageNet: a large-scale hierarchical image database, 248–255 (2009).

Selvaraju, R. R. et al. Grad-CAM: visual explanations from deep networks via gradient-based localization, 618–626. https://ieeexplore.ieee.org/document/8237336 (2017).

Li, L., Jamieson, K., DeSalvo, G., Rostamizadeh, A. & Talwalkar, A. Hyperband: a novel Bandit-based approach to hyperparameter optimization. http://arxiv.org/abs/1603.06560 (2018).

Knight, J. A., Powell, G. M. & Johnson, A. C. Radiographic and Advanced Imaging Evaluation of Posterior Shoulder Instability. Curr. Rev. Musculoskelet. Med. 17, 144–156 http://www.ncbi.nlm.nih.gov/pmc/articles/PMC11068713/URL (2024).

Fallahi, F. et al. Indirect magnetic resonance arthrography of the shoulder; a reliable diagnostic tool for investigation of suspected labral pathology. Skelet. Radiol. 42, 1225–1233 (2013).

Dishner, K. A. et al. A survey of publicly available MRI datasets for potential use in artificial intelligence research. J. Magn. Reson. Imaging 59, 450–480 http://onlinelibrary.wiley.com/doi/abs/10.1002/jmri.29101URLeprint (2024).

Zbontar, J. et al. fastMRI: an open dataset and benchmarks for accelerated MRI. http://arxiv.org/abs/1811.08839 (2019).

Ni, M. et al. A deep learning approach for MRI in the diagnosis of labral injuries of the hip joint. J. Magn. Reson. Imaging 56, 625–634 (2022).

Clymer, D. R. et al. Applying machine learning methods toward classification based on small datasets: application to shoulder labral tears. J. Eng. Sci. Med. Diagn. Ther. 3. https://doi.org/10.1115/1.4044645 (2019).

Omeiza, D., Speakman, S., Cintas, C. & Weldermariam, K. Smooth Grad-CAM++: an enhanced inference level visualization technique for deep convolutional neural network models. http://arxiv.org/abs/1908.01224 (2019).

Buono, V., Mashhadi, P. S., Rahat, M., Tiwari, P. & Byttner, S. Expected Grad-CAM: Towards gradient faithfulness. http://arxiv.org/abs/2406.01274 (2024).

Lucas, M., Lerma, M., Furst, J. & Raicu, D. Visual Explanations from Deep Networks via Riemann-Stieltjes Integrated Gradient-based Localization http://arxiv.org/abs/2205.10900 (2022).

Chen, C. et al. This looks like that: deep learning for interpretable image recognition. http://arxiv.org/abs/1806.10574 (2019).

Barnett, A. J. et al. Improving Clinician Performance in Classifying EEG Patterns on the Ictal–Interictal Injury Continuum Using Interpretable Machine Learning. NEJM AI 1, https://doi.org/10.1056/aioa2300331, http://www.ncbi.nlm.nih.gov/pmc/articles/PMC11175595/ (2024).

Sethi, S. et al. ProtoECGNet: case-based interpretable deep learning for multi-label ECG classification with contrastive learning. Proceedings of the 10th Machine Learning for Healthcare Conference, Vol. 298 (Proceedings of Machine Learning Research, 2025). https://proceedings.mlr.press/v298/sethi25a.html.

Latonen, L., Koivukoski, S., Khan, U. & Ruusuvuori, P. Virtual staining for histology by deep learning. Trends Biotechnol. 42, 1177–1191 https://www.sciencedirect.com/science/article/pii/S0167779924000386 (2024).

Huang, G., Liu, Z., van der Maaten, L. & Weinberger, K. Q. Densely Connected Convolutional Networks http://arxiv.org/abs/1608.06993 (2018).

Tan, M. & Le, Q. V. EfficientNet: Rethinking Model Scaling for Convolutional Neural Networks http://arxiv.org/abs/1905.11946 (2020).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. http://arxiv.org/abs/1512.03385 (2015).

Liu, Z. et al. Swin transformer V2: scaling up capacity and resolution. http://arxiv.org/abs/2111.09883 (2022)

Zlatkin, M. B., Hoffman, C. & Shellock, F. G. Assessment of the rotator cuff and glenoid labrum using an extremity MR system: MR results compared to surgical findings from a multi-center study. J. Magn. Reson. Imaging 19, 623–631 http://onlinelibrary.wiley.com/doi/abs/10.1002/jmri.20040URLeprint (2004).

Acknowledgements

We would like to thank the University of Chicago Center for Research Informatics (CRI) High-Performance Computing team for providing resources and support throughout this project. We would also like to thank the University of Chicago CRI Clinical Research Data Warehouse & Human Imaging Research Office (HIRO) for their roles in data collection. Finally, we would like to thank Steven Song and the UChicago AI in Biomedicine Journal Club for their constructive feedback on our manuscript. The CRI is funded by the Biological Sciences Division at the University of Chicago with additional funding provided by the Institute for Translational Medicine, CTSA grant number UL1 TR000430 from the National Institutes of Health. This project was supported by the National Center for Advancing Translational Sciences (NCATS) of the National Institutes of Health (NIH) through Grant Number UL1TR002389-07 that funds the Institute for Translational Medicine (ITM). The content is solely the responsibility of the authors and does not necessarily represent the official views of the NIH. M.S. was funded by the U.S. Department of Energy, Office of Science, Office of Advanced Scientific Computing Research, Department of Energy Computational Science Graduate Fellowship under Award Number DE-SC0023112.

Author information

Authors and Affiliations

Contributions

S.S., N.M., and L.S. designed the study. S.R., J.S., D.N., N.M., and L.S. collected data. S.S. and M.S. developed the code, ran the experiments, and analyzed the results. S.S., S.R., and M.S. wrote the main manuscript text. All authors reviewed and approved the manuscript.

Corresponding author

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Sethi, S., Reddy, S., Sakarvadia, M. et al. SCOPE-MRI: Bankart lesion detection as a case study in data curation and deep learning for challenging diagnoses. npj Artif. Intell. 1, 41 (2025). https://doi.org/10.1038/s44387-025-00043-5

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s44387-025-00043-5