Abstract

Amid the heated debate on whether artificial intelligence possesses a human-like capacity for understanding, the compatibility and interaction between human and algorithmic visual attention remain unclear. Here, we address this issue through the lens of spatial and feature-based attention. Using autonomous driving as an epitome of safety-critical domains, we show that human attention in driving tasks can be divided into three phases, each characterized by spatial, feature-based, and mixed visual attention. Comparisons between each phase of human attention and algorithmic attention revealed a complex landscape of human-AI resemblance. For specialized detection and planning algorithms, incorporating semantic-rich, feature-based human attention markedly enhanced performance, suggesting these models lack human-like semantic visual understanding. In contrast, for large-scale Vision-Language Models, the effect was task-dependent, suggesting that while foundation models have bridged the “reasoning gap” through massive pre-training, a “grounding gap” persists in fine-grained visual tasks. Crucially, our findings demonstrate that incorporating human semantic attention offers an effective and economic pathway to compensating for these gaps, enhancing model understanding in safety-critical and grounding-heavy tasks without the need for massive scale.

Similar content being viewed by others

Introduction

Visual attention, as both a cognitive phenomenon and a mechanism for visual processing, is common to human and artificial intelligence1,2. Attention-based algorithms, particularly Transformer-based networks such as large language models3, are increasingly being utilized in various aspects of our daily lives. For instance, as active control of vehicles shifts gradually from human drivers to AI algorithms, autonomous driving has demonstrated the potential to revolutionize the way we travel4,5.

Despite this encouraging trend, however, there has been an ongoing debate over whether artificial intelligence truly possesses the capacity for understanding. In the realm of autonomous driving, recent years have witnessed exceptional technological advancements and impressive performance of attention-based algorithms in addressing visual challenges on the road2,6,7,8,9,10. Various strategies have been proposed to enhance these algorithms’ ability to identify and focus on critical information within a scene11,12,13,14,15. However, current autonomous driving systems still appear to lack a genuine understanding of driving scenes, as evidenced by their struggles in responding appropriately to situations such as rare long-tail cases16 and moral dilemmas17.

Human drivers, by contrast, utilize their semantic understanding and situational awareness18 when processing complex driving scenes. This understanding is often reflected in their attention distribution during driving tasks such as hazard detection19,20, maneuver decision making based on situational affordances21,22,23, and anomaly detection24. Most recently, efforts have been made to incorporate human attention data into autonomous driving algorithms to enhance model performance25,26,27.

Despite the promising results elicited by these approaches, it remains unclear whether incorporating human attention data can help algorithms achieve true “understanding”. In other words, we have yet to determine the compatibility between human and algorithmic visual attention, identify the aspects unique to human perception, and understand how this uniqueness could assist algorithms in visual processing.

This lack of alignment between human and algorithmic attention can obscure the explainability of the model’s decisions, making it challenging to interpret and trust the outcomes. It could also lead to unpredictable model behavior and reduce the system’s robustness, as the algorithm might learn the noise and biases inherently embedded in human attention data. These issues highlight the need for a theoretical framework of visual attention that is generalizable from the human to the AI domain and based on quantifiable metrics.

Psychological research on the time course of human visual attention suggests a distinction between visual spatial and feature-based attention28,29,30. Essentially, spatial attention determines the the locations to which attention is allocated, and feature-based attention determines what is attended at those specific locations. Following this vein, attention-based algorithms, which operate on algorithmic optimization and assign weight to locations of elements in the scene, seem not to have awareness of what these elements are.

Adopting this framework of visual attention, we parsed the spatial and feature-based components of human visual attention in driving tasks and compared them with algorithmic attention. To achieve this, we invited human drivers to perform driving tasks in a driving simulator while we recorded their eye movements. We divided the drivers’ visual attention between trial onset and response into three phases: scanning, examining, and reevaluating18,31 (Fig. 1a). The boundaries between these phases were defined by the time points at which the driver’s eye fixation entered and exited the most semantically salient region of the scene for the first time. Accordingly, the scanning phase was primarily spatial, the examining phase was primarily feature-based, and the reevaluating phase was a mixture of spatial and feature-based attention. (See the next section for a detailed explanation).

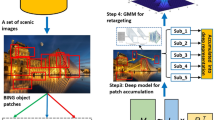

a An illustration of the three phases of human attention distribution in a driving task. The time points t0, t1, and t2 correspond to trial onset, the first time human fixation enters the area of interest (AOI), and the first time human fixation exits the AOI. b Instead of incorporating human attention data in its full length, the theoretical framework and results of the present study suggest that incorporating the examining phase of, or feature-based human attention data would most substantially enhance model performance. (The real-life driving scenes are selected from the DRAMA and BDD-OIA datasets.).

Then, the extent to which algorithmic attention resembled each phase of human attention should reflect the degree of human-likeness in algorithmic processing. Higher levels of resemblance observed after model training would suggest that the algorithm had learned visual information analogous to the corresponding component of human attention. Conversely, lower levels of resemblance would indicate aspects of visual attention that were unique to human visual processing.

To further elucidate what unique aspects of human visual processing might enhance algorithmic performance, we analyzed the outcomes of incorporating different phases of human attention into both specialized deep-learning models and Vision Language Models (VLMs) (Fig. 1b). We identified a critical divergence between spatial and semantic attention: while human spatial attention appeared redundant for algorithmic visual processing, the semantic information inherent in the examining phase–or feature-based human attention–markedly improved model performance in safety-critical detection and trajectory planning tasks. This suggests that specialized algorithms, despite their geometric precision, lack the intrinsic semantic prioritization that characterizes human driving. Furthermore, our investigation into foundation models revealed a complex landscape of human-AI resemblance. While large-scale VLMs appear to have bridged the "reasoning gap" for high-level logic, they continue to struggle with a "ground gap" in fine-grained visual tasks, where human semantic attention provided significant performance gains.

Thus, the findings of the present study suggest that it is the capacity for semantic understanding--specifically the precise grounding of semantics in the visual scene- that distinguishes human visual attention from algorithmic visual attention--regardless of scale. Crucially, we show that incorporating this human semantic prior offers an effective and economic pathway to compensating for these gaps, enhancing the safety and reliability of autonomous driving systems in complex, real-world environments.

Results

Three phases of human attention distribution

We invited expert and novice drivers to perform three driving tasks while we tracked their eye movements. These tasks, which are safety critical for autonomous driving, are common to autonomous vehicles and human drivers, namely, hazard detection, affordance recognition, and anomaly detection. In the hazard detection task, drivers and the autonomous vehicle must identify the most probable traffic hazard in the driving scene. In the affordance recognition task, they decided whether the ego vehicle could safely execute a turn or lane change to the left in the given situation. In the anomaly detection task, they identified the object least likely to appear in the driving scene.

All three tasks were target-oriented, with the targets being specific objects that rendered a driving scene safe or hazardous, providing or denying affordance, normal or anomalous. Hazard detection differed from anomaly detection in that the hazardousness of an object was determined by its interaction with the ego vehicle, whereas the anomalousness of an object was based on its semantic relationship with the other objects in the scene. The target in each scene contained the richest information critical for completing the task and was therefore the most semantically salient32. We hence referred to the target as the Area of Interest (AOI, see Methods) of the scene where critical task-related information resided24.

Previous research suggests the human visual system processes different types of information before, during, and after target detection. According to the feature-integration theory of attention33, low-level visual features such as color and shape are encoded at the early “preattentive” stage of visual processing34. During this stage, observers could perceive the gist of the scene35,36 but not how features are conjoined to be distinguished as a semantically meaningful object37. The latter requires serial focal attention allocated to each object. When observers detect a target, the sensory representation of the selected object is enhanced, and the properties associated with that object are processed more efficiently38,39. Activation of representation of the selected object also seems to activate representations of other objects semantically related to it40, which helps explain why, in a target detection task, observers often shift their attention in an object-based manner before their final decision making41,42. The shift could be to verify that, within all available and semantically related options, the selected object was indeed the target.

Based on these findings, we hypothesized that human attention in driving tasks could be divided into three phases as time-locked to its interaction with the AOI. The first, scanning phase, started at the timestamp of the trial onset (t0) and ended at the timestamp of the first fixation in the time-sorted list whose (x, y) coordinates fell within the pre-defined bounding box of the AOI (t1). During this phase, observers perceived the gist of the scene and oriented their attention to the AOI either overtly (i.e., through searching) or automatically41,43,44,45, which makes this phase primarily spatial. At t1, the target was already distinguished as semantically critical for the task. Then, the examining phase started at t1 and ended at the timestamp of the first subsequent fixation (i.e., the first fixation occurring after t1) whose (x, y) coordinates fell outside the AOI bounding box (t2). During this phase, observers examined the target, perceiving its features and evaluating its semantic meanings41,46, which determined the object-level feature-based nature of this phase. Then, the reevaluating phase started at t2 and lasted until the timestamp of the participant’s key press or mouse click response (treaction), during which time observers reevaluated and verified their judgment through an object-based comparison as objects served as convenient units organizing semantically related features47. The reevaluating phase was thus a mixture of spatial and feature-based human attention.

To test this hypothesis, we recruited both expert and novice drivers in an eye-tracking experiment (see Supplementary Table 1 for details). During the experiment (see Supplementary Fig. 1 for the experimental set up), participants completed the driving tasks in random order on each of three image sets which were randomly selected from the DRAMA, BDD-OIA, and ANOMALY datasets (see Methods). Supplementary Fig. 2 shows the experimental procedure in each trial. The participants completed all three tasks with high accuracy (hazard detection, acc = 0.88, SD = 0.14; affordance recognition, acc = 0.86, SD = 0.08; anomaly detection, acc = 0.98, SD = 0.04).

We computed the duration of each phase and the proportion of time each phase took relative to the participants’ reaction time (see Methods). We also calculated the participant’s number of fixations, average fixation duration, and horizontal variance of fixations in each phase. These variables have been shown to reflect the influence of bottom-up (visual salience, spatial density, configuration of the stimuli, etc.) and top-down (task goal, expertise, complexity of decision, etc.) factors on attention allocation48,49,50,51.

The results showed a significant main effect of phase on all dependent variables (Supplementary Table 2). There was a significant interaction between phase and expertise on average fixation duration (Supplementary Table 3), a significant interaction between phase and image set (Supplementary Table 4) as well as a significant three-way interaction between phase, image set, and task goal on all dependent variables except average fixation duration (Supplementary Tables 5). Although the interaction between phase and task goal was non-significant for all dependent variables (Supplementary Table 6), these results nevertheless supported the three-phase hypothesis of human attention allocation (see Fig. 2a for an illustration of human attention allocation in each phase) and urged for a phase-by-phase analysis of the effects of the other variables.

a Examples of human attention distribution in each task and phase. Red squares indicate areas of interest. Redder colors indicate more and longer fixations located in an area. b Factors impacting human attention distribution in each phase. The line charts show the results of phase proportion in each phase. Points in the line charts correspond to the mean values, and error bars represent the means minus and plus one standard error. See Supplementary Fig. 4 for a more detailed illustration of significant results. (The real-life driving scenes are selected from the DRAMA and BDD-OIA datasets.).

The results of a phase-by-phase analysis (Fig. 2b) showed that, in the scanning phase, there was a significant main effect of image set on phase duration, phase proportion, and number of fixations (Supplementary Table 7). On average, on the DRAMA image set, the scanning phase consisted 1.25 fixations (SD = 0.08) and lasted 399.52 ms (SD = 60.85 ms) which accounted for. 14 (SD = 0.02) of the average reaction time (M = 3017.34 ms, SD = 177.74 ms). On the BDD-OIA image set, the scanning phase consisted 0.76 fixations (SD = 0.06) and lasted 238.87 ms (SD = 28.48 ms) which accounted for. 10 (SD = 0.01) of the average reaction time (M = 2974.78 ms, SD = 148.06 ms). On the ANOMALY image set, the scanning phase consisted 3.73 fixations (SD = 0.19) and lasted 1259.45 ms (SD = 93.41 ms) which accounted for. 41 (SD = 0.02) of the average reaction time (M = 3432.66 ms, SD = 232.82 ms). The dominant role of image set in the scanning phase was consistent with previous findings that the preattentive, orienting stage of attention distribution was primarily stimulus-driven33,34.

As in Supplementary Fig. 3 and Supplementary Table 8, in the examining phase, expert drivers had a longer phase duration (M = 705.75 ms, SD = 27.69 ms) and average fixation duration (M = 284.02 ms, SD = 12.01 ms) than novice drivers (phase duration, M = 622.52 ms, SD = 27.69 ms; average fixation duration, M = 246.00, SD = 12.01 ms), which was consistent with previous findings that experienced drivers dwell and fixate longer on areas related to their task52 and corroborated the feature-based nature of this phase. The main effect of image set was significant for all dependent variables except average fixation duration. For phase proportion specifically, there was a significant three-way interaction between expertise, image set, and task, which suggested the influence of expertise was specific to particular combinations of tasks and image sets in this phase.

As in Supplementary Table 9, in the reevaluating phase, there was a significant main effect of image set on phase proportion, average fixation duration, and horizontal variance of fixations. The two-way interaction between expertise and task was significant for all dependent variables except average fixation duration, and the interaction between image set and task was significant for all dependent variables. These results suggested that task goal played a major role during the reevaluating phase, interacting respectively with expertise and image set. These results, particularly the significant effects on horizontal variance of fixation, went in line with our definition of the function of this phase–to finalize a decision through comparison between objects in order to fulfill the task goal, which further supported that human attention in this phase was both spatial and feature-based.

To summarize, the results from the eye-tracking experiment supported the hypothesis that human attention in target-oriented driving tasks could be divided into three phases each characterized by human visual spatial, feature-based, and mixed attention respectively.

Attention-based algorithm and its human-likeness

We went on to examine the resemblance between algorithmic attention and each phase of human attention. Towards this end, we constructed an attention-based deep learning algorithm and, on each dataset, performed the same tasks as in the human eye-tracking experiment with it. As illustrated in Fig. 3a, our algorithm comprised two primary components: an image encoder and a task-specific decoder. The encoder employed a conventional neural network for initial image feature extraction, which was augmented by a transformer encoder to enhance feature interaction. The decoder utilized a multi-layer Transformer decoder to aggregate image information into a learnable embedding. The learnable embedding was then processed by task-specific prediction heads, enabling translation of the embedding into specific outputs such as bounding boxes and maneuver decisions.

a Architecture of our deep learning algorithm. b Model accuracy of the non-pretrained algorithm with different model sizes. c Model accuracy with and without pretraining. Dashed line indicates human averages in each task. d Examples of the algorithm’s attention distribution as finetuning progressed. Red squares indicate areas of interest (The real-life driving scenes are selected from the DRAMA and BDD-OIA datasets.).

For clarity, we hereby refer to pretraining as the training process on a general object detection task and finetuning as the training process on specific tasks such as hazard detection, affordance recognition, and anomaly detection. Object detection serves as a prerequisite for hazard detection, affordance recognition, and anomaly detection, as one must first detect an object to determine if it is hazardous, affords maneuvers, or is anomalous. Additionally, the information required for each specific task differs; for instance, the algorithm does not need to know if an object impedes a maneuver when identifying it as hazardous. Therefore, pretraining can equip the algorithm with the foundational knowledge necessary for downstream tasks, and finetuning refines the algorithm to be more task-specific.

Given that a) the non-pretrained algorithm achieved sufficiently high accuracy on all three tasks starting from a medium model size (Fig. 3b), and b) when loaded with pretrained encoder weights, the algorithm exhibited higher performance post finetuning, surpassing human averages in hazard detection and affordance recognition (Fig. 3c), we used the pretrained algorithm with a medium model size (i.e., 59.10 M) for further analysis unless otherwise specified.

As an initial step, we examined whether algorithmic visual attention was influenced by bottom-up and top-down processes similarly to human visual attention. For this purpose, we calculated the correlation coefficient between different image sets (on the same task goals) and task goals (on the same image sets) for human visual attention in each phase (see Methods). As shown in Fig. 3d, the algorithm’s attention distribution changed with the training progress (here converging towards the AOI as finetuning progressed), we thus calculated the correlation coefficient between different image sets and task goals for algorithmic attention under each training setting (see Methods). This correlation coefficient should reflect the degree to which human attention and algorithmic attention exhibited consistent patterns across image sets, task goals, and training settings. To establish a more general human reference frame for algorithmic attention, we did not differentiate between novice and expert drivers.

As shown in Fig. 4a, human attention distribution appeared highly correlated across image sets and task goals during the scanning phase and relatively less correlated during the examining and reevaluating phases. As in Fig. 4b, pretraining decreased the correlation coefficient of algorithmic attention across image sets and task goals, with finetuning further so from the results post pretraining. Considering that human visual attention was increasingly influenced by task goals from the scanning phase to the reevaluating phase, and that algorithmic processing became task-specific through finetuning, these results indicated a similar tendency in human and algorithmic visual attention–they became less homogeneously distributed as task goal played a greater role in visual processing.

a Correlation coefficient of human attention distribution (absolute value) across image sets, tasks, and phases. b Correlation coefficient of algorithmic attention distribution (absolute value) across image sets, tasks, and training settings. “Without finetuning” was defined as one epoch of finetuning. c Human-likeness of our algorithm’s attention distribution under different training settings. Note that only positive correlation coefficients indicate similarity between algorithmic and human attention distribution. d Examples from the DRAMA and ANOMALY image sets of visualization of algorithmic attention under different training settings and human attention in each phase. (The real-life driving scenes are selected from the DRAMA and BDD-OIA datasets.).

Interestingly, comparing Fig. 4b to Fig. 4a, it is noticeable that the correlation coefficients for all phases of human attention were substantially higher than those for algorithmic attention under all training settings. This suggested that certain aspects of human visual attention were robust against bottom-up and top-down influences, and these aspects might be hard for the algorithm to acquire through model training.

To better investigate whether this was so, we directly compared human and algorithmic visual attention in each driving scene. For each phase of human visual attention, we calculated the correlation coefficients between human and algorithmic attention on the same tasks (see Methods). As mentioned earlier, there were a) two levels of human expertise: novices and experts, b) three phases of human visual attention: scanning, examining, and reevaluating, and c) three levels of model training: without pretraining and without finetuning, with pretraining and without finetuning, and with both pretrainign and finetuning.

As illustrated in Fig. 4c, the results of a repeated measures ANOVA showed that pretraining increased the correlation coefficient between human and algorithmic attention in all phases of human attention distribution (scanning phase, F(1, 49) = 49.23, p < 0.001, η2 = 0.50; examining phase, F(1, 57) = 14.62, p < 0.001, η2 = 0.20; reevaluating phase, F(1, 57) = 41.83, p < 0.001, η2 = 0.42; full duration, F(1, 57) = 33.73, p < 0.001, η2 = 0.37), suggesting that the algorithm did acquire a certain level of human-like visual attention. However, some of these human-like aspects of visual attention appeared to be sifted out during finetuning, as finetuning decreased the correlation coefficient between algorithmic attention and all but the examining phase of human visual attention (scanning phase, F(1, 49) = 8.43, p = 0.006, η2 = 0.15; examining phase, F(1, 57) = 0.28, p = 0.60, η2 = 0.005; reevaluating phase, F(1, 57) = 8.15, p = 0.006, η2 = 0.13; full duration, F(1, 57) = 2.37, p = 0.13, η2 = 0.04). Figure 4d provides examples from the DRAMA and ANOMALY image sets of algorithmic attention under different training settings and human attention in each phase. Please refer to Supplementary Fig. 5 for examples from all image sets and to Data Availability for a link to the full list of visualizations of human and algorithmic attention in each scene and task.

Human expertise had no significant impact on the correlation coefficient between human and algorithmic attention (main effect of human expertise, F(1, 49) = 0.04, p = 0.84, η2 = 0.001; interaction between human expertise and phase, F(2.09, 102.15) = 0.51, p = 0.61, η2 = 0.01; interaction between human expertise and training setting, F(2, 98) = 0.28, p = 0.76, η2 = 0.006; interaction between human expertise, phase, and training setting, F(3.13, 153.23) = 0.26, p = 0.86, η2 = 0.005). This suggested the human-like aspects of visual attention acquired by the algorithm through pretraining and retained through finetuning were common to both novice and expert human drivers.

It was notable that the correlation coefficient between algorithmic and human attention was low post both pretraining and finetuning (post pretraining, M = 0.15, SD = 0.04; post finetuning, M = 0.07, SD = 0.03), which suggested it was challenging for the algorithm to extract and capture the underlying information driving human attention through model training alone. This aligns with previous findings that incorporating human attention into attention-based algorithms could improve model performance; the incorporated human attention data likely contained information that the algorithms struggled to learn independently but could leverage effectively. This raises the question: what specific information underlying human attention, which could enhance model performance, was so difficult for the algorithm to learn?

With regards to this question, notice that pretraining increased and finetuning decreased the correlation coefficient between algorithmic attention and each phase of human attention differently (interaction between pretraining and phase, F(1.90, 93.09) = 12.06, p < 0.001, η2 = 0.20; interaction between finetuning and phase, F(1.62, 79.50) = 6.46, p = 0.005, η2 = 0.12), and recall that the three phases of human visual attention were primarily spatial, feature-based, and mixed, respectively. This raises the possibility that different components of human attention might carry information that affects model performance differently, and this information might vary in difficulty for the algorithm to learn. We tested this hypothesis through incorporating different phases of human attention data into the algorithm’s training process and examining the effects on model performance.

Incorporating human attention in the deep learning algorithm

As human performance exceeded algorithmic performance in the anomaly detection task both with and without pretraining (Fig. 3c), we used this task to test the effects of incorporating human attention data on enhancing model performance.

To collect the human attention data for incorporation, we recruited five novice drivers (see Supplementary Table 10 for details) who each performed the anomaly detection task on 1000 images from the ANOMALY dataset. This sample size was economical and yet sufficient for the purpose of incorporation (see the following paragraph). Then we extracted the participants’ fixation data and divided it into the scanning, examining, and reevaluating phases according to the phase proportions observed in the anomaly detection task performed on the ANOMALY image set in the eye-tracking experiment (see Methods). Due to the relatively small number of participants, we refrained from calculating the exact phase cutting points. On average, across trials and individuals, the three phases accounted for 41.79% (SD = 22.33%), 29.15% (SD = 24.20%), and 29.06% (SD = 28.80%) of the total reaction time of the task, respectively. During each phase, the percentage of time that human fixations fell within the AOI was 11.18% (SD = 19.43%), 61.21% (SD = 36.40%), and 62.23% (SD = 37.28%) of the phase duration, respectively.

We then utilized an eye-movement prediction model (see Methods) to emulate human attention distribution. As shown in Fig. 5a, our methodology involves a two-stage pipeline to prevent label leakage: A. “Offline” Generator Training: We first finetuned an eye-movement prediction model (the “Human attention generator”) using the collected human fixation data as supervision. B. “Online” Model Inference: This generator learns to predict a human-like attention map (“pseudo human attention”) using only the road scene image as input.

a AxANet’s algorithmic architecture. b Performance of the pretrained AxANet when incorporated with different phases of human fixation data. c Performance of the non-pretrained AxANet with different model sizes when incorporated with no and the examining phase of human fixation data. d Performance of the pretrained and non-pretrained AxANet when incorporated with no and the examining phase of human fixation data. e Examples of AxANet’s attention distribution when incorporated with no and the examining phase of human fixation data and the corresponding human attention distribution on the original input.

This predicted “pseudo human attention” map–not the ground-truth AOI–was then incorporated as an additional input into our main algorithm (AxANet) during its finetuning process. At no point during training or inference does the AxANet model have access to the ground-truth AOI coordinates, thus preventing label leakage.

As shown in Fig. 5b, as compared to incorporating no human fixation data (acc = 0.724), incorporating the scanning phase of human fixation data decreased model performance (acc = 0.709). Incorporating the examining phase increased model performance the most (acc = 0.736), surpassing the current state-of-the-art approach which incorporated the full duration of human fixation (acc = 0.729). Incorporating the reevaluating phase also increased model performance (acc = 0.731), though to a letter extent than incorporating the examining phase. Recall that the phases of human visual attention were characterized by human visual spatial, feature-based, and mixed attention, respectively. These results suggested that human visual spatial attention introduced information that might confound algorithmic attention. By contrast, human feature-based attention enhanced algorithmic attention by providing information that the algorithm could not acquire independently.

Note that these effects occurred in the pretrained AxANet. Going back to Fig. 4c, among all phases of human visual attention, algorithmic attention had the lowest similarity to the examining phase post pretraining (F(1.66, 81.33) = 10.75, p < 0.001, η2 = 0.18). This finding, coupled with the fact that incorporating the examining phase of human attention into the algorithm enhanced model performance the most, further suggested that the algorithm had difficulty learning information that supported human feature-based attention.

Additionally, the enhancement effect of incorporating feature-based human attention appeared to be unaffected by model complexity. As shown in Fig. 5c, with the same size of training data, we compared the performance of the non-pretrained AxANet across different model sizes after incorporating the examining phase of human attention data. The improvement in model accuracy remained consistent across model sizes (and therefore the model’s presumed learning abilities), which suggested the supplementary information provided by human feature-based attention was indeed challenging for the algorithm to learn on its own.

In fact, incorporating human fixation data enhanced model performance both with and without pretraining (Fig. 5d). With pretraining, incorporating the examining phase of human fixation data increased model accuracy from 0.724 to 0.736. Without pretraining, incorporating the examining phase of human fixation data increased model accuracy from 0.684 to 0.701. The increase was slightly greater without pretraining by 0.005, but the overall enhancement of incorporating human attention data in the non-pretrained algorithm was 0.017. These results suggested a minor overlap between the information the algorithm could learn on its own and the information supplemented by human attention data; the majority of the supplementary data was beyond the algorithm’s learning capacity.

Recall that, during the examining phase of human attention, the observers focused on the features of the most semantically salient region of the driving scene. Human attention in this phase thus reflected the observers’ ability to understand the semantic saliency distribution of the scene. In this sense, the above results suggested that the semantic understanding of the scene, which forms part of human drivers’ common driving knowledge, was challenging for the algorithm to learn. This semantic understanding appeared to differentiate human visual attention from algorithmic attention. A detailed discussion of this issue is provided below.

Generalizing to spatial-oriented task and VLM

The tasks we have examined so far–hazard detection, affordance recognition, and anomaly detection, fall along a spectrum of target-to-spatial orientation. Anomaly detection, serving as the most spatial among the three, assess human and algorithmic global spatial scene understanding through identifying semantically out-of-place targets. However, anomaly detection might not be sufficiently representative on the spatial nature of many driving tasks as it is in essence a target-oriented task. In this case, we went on to examine the effects of incorporating different phases of human attention in a highly spatial task, trajectory planning.

In the trajectory planning task, the primary goal is to compute a collision-free and dynamically feasible trajectory for the ego vehicle. Unlike the previous detection tasks, this requires a continuous understanding of the global spatial context and future vehicle states. We utilized two state-of-the-art end-to-end planning models, UniAD53 and VAD54, as our baselines. We incorporated the generated “pseudo human attention” from different phases into these models to observe their impact on planning accuracy, measured by L2 displacement error and Collision Rate (see Methods) 1 s, 2 s, and 3 s ahead of the present timestamp.

As shown in Table 1, the experimental results on the trajectory planning task provide a nuanced view of how human attention impacts algorithmic performance. Consistent with our original findings, the semantic attention embedded in the Examining Phase (t1-t2) yielded the most significant and robust improvements across architectures. Specifically, for the UniAD model, the examining phase reduced the average L2 error from a baseline of 0.90 m to 0.82 m. Similarly, for the VAD model, it reduced the average L2 error from 0.72 m to 0.62 m and achieved the lowest collision rate of 0.19%, compared to the baseline of 0.22%.

However, the results for the Scanning Phase (t0-t1) revealed a critical divergence between localization accuracy and safety metrics. While the scanning phase provided a slight benefit to the L2 displacement error–reducing it to 0.88 m for UniAD (from a baseline of 0.90 m) and 0.71 m for VAD (from a baseline of 0.72 m)–it negatively impacted the collision rate. For the UniAD model, incorporating the scanning phase increased the collision rate to 0.36% (from a baseline of 0.29%), and for the VAD model, it increased to 0.23% (from a baseline of 0.22%).

This suggests that while the spatial information in the scanning phase may assist with general trajectory curve fitting, its serial search pattern introduces noise that conflicts with the algorithm’s optimization for safety-critical collision avoidance.

To examine whether the performance gain elicited by incorporating human semantic attention was equivalent to introducing a linguistic module, we went on to apply this approach to DriveLM55 and TOD3Cap56, two state-of-the-art VLM benchmarks for autonomous driving. The idea is, if incorporating human attention data (particularly the Examining Phase) into a VLM could not enhance its performance, it would suggest the VLM had already acquired the semantic priors inherent in human visual attention, thus indicating a higher level of resemblance between the attentional information captured by the two. Otherwise, if incorporating human visual attention further enhanced VLM performance, it would suggest that even large-scale foundation models have not yet fully acquired the specific semantic priors inherent in human visual attention, indicating a lower level of resemblance.

As shown in Table 2, the results reveal an intriguing divergence between the two VLM tasks. For DriveLM, which focuses on high-level reasoning and QA, the baseline model achieved a final score of 0.6057. Incorporating the Examining Phase (t1-t2) resulted in a comparable score of 0.6001. Notably, incorporating the spatial Scanning Phase (t0-t1) and Reevaluating Phase (t2-end) led to a marked reduction in performance, dropping scores to 0.5847 and 0.5762, respectively. This suggests that for logic-heavy QA tasks, the VLM has already acquired sufficient semantic priors, rendering external attention redundant or noisy.

In contrast, we observed a different pattern with TOD3Cap, a benchmark focused on dense captioning in outdoor 3D scenes. Using the standard CIDEr metrics, the baseline model achieved a CIDEr score of 120.3 when IoU (Intersection-of-Union) was larger than 0.25 and a CIDEr score of 108.0 when IoU was larger than 0.50. Incorporating the Examining Phase yielded significantly improved scores of 139.3 and 122.5, respectively. However, consistent with the DriveLM results, the spatial Scanning Phase (122.4/110.7) and Reevaluating Phase (127.6/117.5) yielded significantly lower improvements compared to the semantic Examining Phase.

These results suggest a complex landscape of human-AI resemblance in foundation models. For high-level reasoning tasks (DriveLM), the VLM appears to have bridged the semantic gap, as human attention offers no benefit. However, for dense grounding and captioning tasks (TOD3Cap), where precise object-to-text alignment is required, the semantic priors provided by the Examining Phase still offer substantial value. This indicates that while VLMs possess a higher level of general resemblance to human drivers than specialized models, specific semantic gaps remain in tasks requiring detailed spatial-semantic grounding.

Discussion

When processing a semantically meaningful scene, human visual attention appears to be dominated by a “semantic saliency map” representing the spatial distribution of semantic informativeness across the scene32. It has been shown that semantic saliency–the amount of information a patch carries relative to the other parts of the scene–is more dominant than image salience in predicting attention allocation in real-life scenes57. Indeed, neural networks involved in semantic processing are activated during image salience processing as well58.

The establishment of a saliency map appears to be influenced by top-down factors. Observers assign meaning to features of the scene in a manner consistent with top-down influences such as task goals and expertise59,60,61. For example, in a hazard detection task, a pedestrian crossing the street might be considered a potential hazard because they could be hit by the ego vehicle, whereas in an anomaly detection task, the pedestrian might not be the target because it is normal for pedestrians to cross the street. Regarding expertise, expert drivers can detect early clues to hidden hazards more effectively than novices60, while novice drivers require a greater degree of danger to be present before recognizing a hazardous situation61. In this sense, non-spatial features become spatial cues when they are assigned meaning and hence semantic saliency from the top down.

Accordingly, in a real-life scene, human spatial attention seems inherently driven by feature-based semantic saliency, with visual attention consecutively being allocated to the next most semantically salient feature(s) until the task goal is fulfilled. In our case, this process involved shifting attention from the fixation cross to the AOI, and back and forth between the AOI and other potential targets. In contrast, our findings suggest that specialized algorithmic attention struggles with extracting this semantic saliency. This represents a critical gap. On the feature-based front, the examining phase of human attention–where the human eye fixated on the most semantically salient region (the AOI)–showed the lowest level of resemblance to algorithmic attention post pretraining. This finding, coupled with the fact that incorporating the examining phase of human attention into the algorithm enhanced model performance the most, strongly suggests that the algorithm had difficulty learning information that supported human feature-based attention.

The robustness of this finding was further confirmed when extending our approach to the spatial domain of trajectory planning. Even for state-of-the-art end-to-end planning models like UniAD and VAD, which require global context, incorporating the semantic-rich examining phase yielded the most significant safety improvements, achieving the lowest collision rates and displacement errors.

It is important to emphasize that this performance gain is not an artifact of label leakage. As detailed in our Methods, our AxANet model was never provided with the ground-truth AOI location. Instead, it was guided by a predicted attention map from a generator that had learned, from image features alone, to mimic the semantic exploration patterns characteristic of a human driver’s examining phase. The gain thus represents a genuine enhancement from incorporating learned, feature-based semantic priors that the algorithm could not discover on its own.

A more complex picture emerged on the spatial front. For the target-oriented detection tasks, algorithmic visual attention appeared incompatible with human spatial attention, as incorporating the “scanning phase” decreased performance. However, our results from the trajectory planning task reveal a critical distinction between geometric precision and semantic safety. While incorporating the scanning phase slightly improved the L2 displacement error in both UniAD and VAD models, it paradoxically increased the collision rate.

This divergence suggests that the serial, scanning nature of human spatial attention captures useful geometric priors–helping the algorithm “fit” the curve of the road better–but introduces noise regarding safety priorities. A Transformer’s attention, with its positional encodings, can “attend” to all parts of an image in a non-human-like, parallel manner. Forcing it to follow the serial search path of a human may assist in identifying navigable space (improving L2) but distracts the optimization process from the critical obstacles required for collision avoidance. Thus, while human spatial attention contains valid geometric information, it is the semantic attention–identifying what matters rather than where to look–that is essential for the safety-critical components of autonomous driving.

On the front of generalizing these results to VLMs, our findings regarding the gap in semantic understanding are primarily observed in specialized driving architectures (e.g., AxANet, UniAD, VAD). A critical question remains: does this gap persist in large-scale foundation models? Our investigation into DriveLM and TOD3Cap, two VLM benchmarks, reveals a nuanced landscape where the utility of human attention appears highly task-dependent.

For DriveLM, which focuses on high-level reasoning and question answering, incorporating the semantic “Examining” phase did not enhance performance compared to the baseline. This suggests that for logic-heavy reasoning tasks, VLMs have likely already acquired the necessary high-level semantic priors through massive pre-training, rendering external attentional guidance redundant.

In contrast, our results with TOD3Cap, a benchmark focused on dense captioning and grounding, showed a different pattern. While the baseline model achieved a strong CIDEr score, incorporating the Examining Phase yielded a substantial improvement. This indicates that while VLMs possess robust general reasoning capabilities, they may still lack the fine-grained, object-centric feature extraction capabilities required for precise dense captioning. In these “grounding-heavy” tasks, the semantic cues provided by human visual attention still offer unique, complementary information that foundation models have not fully learned from text-image pairs alone. Thus, the “semantic gap” appears closed for high-level reasoning but remains open for fine-grained visual grounding.

These findings carry significant economic implications for the development of autonomous driving systems. While foundation models (VLMs) demonstrate superior semantic understanding, deploying such massive models in real-time, resource-constrained vehicle environments remains computationally prohibitive. Our results demonstrate that incorporating human feature-based attention allows smaller, more efficient algorithms (e.g., AxANet, VAD) to acquire comparable semantic priors without the need for massive scale. Since our “pseudo human attention” generator was trained on a small, economical dataset of just five drivers, this approach offers a cost-effective pathway: rather than relying solely on ever-larger foundation models to achieve understanding, we can effectively “distill” human semantic intelligence into lightweight driving agents through targeted attention guidance.

Despite these promising findings regarding semantic attention, it is crucial to delineate the boundaries of our approach. First, our counter-intuitive finding that human spatial guidance can be detrimental is scoped to the specific tasks we investigated. We explicitly acknowledge that the utility of human spatial attention may indeed be beneficial in other space-oriented driving contexts, such as continuous lane-keeping or low-level motor control, which remains an important area for future work. Second, one might wonder whether combining different phases of human attention, such as the scanning and examining phases, could yield further performance gains. Given our findings that the spatial scanning phase decreased performance while the semantic examining phase enhanced it, a simple aggregation of the phases would likely introduce confounding signals and dilute the clear semantic benefit. However, while our work suggests these components are best understood as functionally distinct, more complex fusion models remain a potential avenue for future research.

To conclude, in the present study, we parsed the spatial and feature-based components of human visual attention in real-life driving scenarios. By comparing algorithmic attention and different components of human visual attention within safety-critical, target-oriented driving tasks, we propose that the ability to extract semantic saliency distinguishes human visual attention from specialized algorithmic attention. For the standard end-to-end planning and detection architectures investigated here, algorithms struggle to learn the semantic saliency of features through model training alone, a limitation that can be mitigated by incorporating human attention data. However, this limitation appears to be less pronounced in large-scale foundation models for high-level reasoning tasks. Nevertheless, our findings indicate that even foundation models benefit from human attention in tasks requiring fine-grained visual grounding, suggesting that while scale closes the “reasoning gap,” human data remains vital for closing the “grounding gap” in artificial visual attention.

Methods

Eye-tracking experiment

The eye-tracking experiment adopted a 3 × 3 × 2 mixed design. The within-subject variables were Image Set and Task. Each image set contained 30 images randomly selected from an original dataset (Supplementary Fig. 6). The first image set was from the DRAMA dataset62 and featured daytime urban driving scenes. The second image set was from the nighttime urban scenes in the BDD-OIA dataset63. The third image set was from the ANOMALY dataset that we generated using Tian et al.‘s protocol64 and featured daytime urban driving scenes simulated in the CARLA simulator65. The three tasks were hazard detection, affordance recognition, and anomaly detection as described in Introduction.

The between-subject variable was Expertise. As in Introduction, we invited both novice and expert drivers to participate in the experiment.

We recruited 18 novice drivers (7 females and 11 males, mean of age = 22.78, s.d. of age = 3.41, mean of years of driving = 1.58, s.d. of years of driving = 1.40) and 18 expert drivers (18 males, mean of age = 42.44, s.d. of age = 8.28, mean of years of driving = 16.76, s.d. of years of driving = 5.50) (see Supplementary Table 1 for details) each of whom went through all nine within-subject experimental conditions. All participants held a valid Chinese driver’s licence at the time of the experiment.

During the experiment, participants completed the three driving tasks in random order. Each task was performed on images from all three image sets; the sequence of the images was randomized. As in Supplementary Fig. 2, each task began with five self-paced introduction trials, showing examples of the most probable hazard in the scene, conditions under which the ego vehicle could and could not execute a turn or lane change to the left, and objects least likely to appear in the scene. Participants were instructed to press the SPACE key to see the next example when they had understood the previous one. The introduction trials were followed by 15 training trials and 90 experimental trials. Each trial began with a red cross presented at the center of the screen, and the participants were instructed to focus their attention at the intersection of the bars. Each cross lasted 800–1200 ms at random before a training or experimental image appeared. For the hazard detection and anomaly detection tasks, participants selected the target object by double-clicking on it with the mouse. For the affordance recognition task, participants pressed the ENTER key and the SPACE key respectively when the ego car could and could not make a turn or lane change to the left. Upon reaction, the display turned into a red fixation cross with which the subsequent trial began.

The study was approved by the Artificial Intelligence Internal Review Board Committee at Tsinghua University. All participants signed an informed consent form before participation. All participants were debriefed about the purpose of the study and received monetary compensation after the experiment.

As in Supplementary Fig. 1, participants were seated in front of a 24-inch Dell monitor (537.80 × 179.57 × 496.11 mm) with a resolution of 1920 × 1080 pixels and a refresh rate of 60 Hz. The screen was placed 60–80 cm away from the participants’ eyes. We used a 7invensun aSee Pro system to monitor participants’ eye movements during the eye-tracking experiment. The system was mounted to the bottom of the presenting screen with a sampling rate of 250 Hz and a spatial accuracy of within 0.5°. The system used a velocity-threshold identification (I-VT) algorithm to calculate point-to-point velocity for each sampling point in the experiment. A fixation was defined as a series of continuous sampling points with velocity below the threshold (3 pixels per ms by default) and which lasted for more than 60 ms; otherwise the sampled gaze was classified as a saccade. The stimuli were programmed and presented using E-Prime 3.0 (https://support.pstnet.com/) at a 16:9 aspect ratio with a resolution of 1920 × 1080 pixels.

Human data analysis

We defined an AOI as the smallest rectangular bounding box containing the critical object(s) in a traffic scene. AOIs in the daytime urban image set contained the most probable hazard in the scene and were marked according to the ground truth provided by the original DRAMA dataset. AOIs in the nighttime urban image set contained the objects prohibiting the left-turning maneuver when the ego vehicle could not take a turn or lane change to the left; we marked the AOIs manually according to the descriptions provided by the BDD-OIA dataset. When the ego vehicle could make a left turn, the dataset provided no description but a decision, in which case we used the left half of the scene as the AOI. AOIs in the simulated daytime urban image were marked according to the anomalous object placed in the simulated scene. We used the same AOIs across tasks to control for factors such as size and location of the AOI that could confound the effects of visual features and task goal.

Having acquired raw human eye movement data, we first matched the data with their corresponding experimental images based on the locations of pre-defined digital markers (see Supplementary Methods Table 3). Then we removed all saccade data, located the AOIs in each trial, and separated the remaining fixation data into the scanning, examining, and reevaluating phases according to their definitions (see Introduction). The Python scripts we used to preprocess the data are provided in the link in Code Availability.

Here is a step-by-step definition for the phase boundaries: To algorithmically and consistently define the three attention phases, we first processed the raw eye-tracking data for each trial to identify a time-sorted list of all fixations and their corresponding start timestamps and (x, y) coordinates.

For each trial, the phase boundaries were then defined as follows:

-

A.

t0 (Start of Scanning): The timestamp of the trial onset (i.e., stimulus appearance).

-

B.

t1 (End of Scanning/Start of Examining): The start timestamp of the first fixation in the time-sorted list whose (x, y) coordinates fell within the pre-defined bounding box of the AOI.

-

C.

t2 (End of Examining/Start of Reevaluating): The start timestamp of the first subsequent fixation (i.e., the first fixation occurring after t1) whose (x, y) coordinates fell outside the AOI bounding box.

-

D.

treaction (End of Reevaluating): The timestamp of the participant’s key press or mouse click response.

The phase durations were then calculated from these objective timestamps (e.g., Scanning Duration = t1 - t0).

To operationally define the dependent variables, durations of the scanning, examining, and reevaluating phases were calculated as t1 - t0, t2 - t1, and time of response - t2, respectively (see Introduction). Accordingly, phase proportion was calculated as the ratio of phase duration divided by reaction time (i.e., time of response - t0).

For number of fixations, we first removed all saccade samplings in each trial and counted the number of different fixation averages; each fixation average represented a unique fixation point. The duration of each fixation was calculated by deducting the onset timestamp of the fixation from the offset timestamp. Horizontal variance of fixations was attained by calculating the standard deviation of the horizontal coordinates of the fixations.

We calculated all dependent variables using Python scripts (see Code Availability) on a trial-by-trial basis first and then averaged over all trials for each participant.

Statistical analysis was conducted using SPSS Version 27. For all F-tests, we used the Greenhouse-Geisser corrected value when the assumption of sphericity was violated. We used two-tailed tests for all our analyses.

Architecture of our deep learning algorithm

As in Fig. 3a, our deep learning algorithm was generally composed of two components: an encoder that encoded the image into a latent representation and a decoder that utilized the latent representation to conduct downstream tasks.

On the encoder front, given an image I∈RH’*W’*3, we first used a conventional neural network66 as the image backbone to encode the image into feature maps F∈RH*W*C where H = H’/32, W = W’/32. Then we tokenized the image feature maps into H×W image tokens with a size of C.

These tokens were then passed as the input into the Transformer encoder where each token interacted with one another. Specifically, a 2D positional encoding was added to the image tokens first so the model could obtain location information67. Then the tokens were fed into N self-attention layers68 with nh heads, resulting in H×W latent tokens which we represent with Ri.

On the decoder front, we then utilized a decoder to conduct downstream tasks. Following Dosovitskiy et al., we concatenated a learnable embedding to the latent image tokens Ri, resulting in H×W + 1 tokens. These tokens were then fed into M encoder layers to interact with one another, which enabled the learnable embedding to capture the information of the image representation. Then the learnable embedding was fed into different prediction heads to perform different tasks. For hazard detection, we exploited a Multilayer Perceptron (MLP) to predict the bounding box of the hazard and a classification MLP to categorize it. For affordance recognition, we employed a binary classification MLP to determine whether or not the ego vehicle could make a turn or lane change to the left. For anomaly detection, we used a binary classification MLP to determine whether or not there was an anomaly in the scene and a regression MLP to regress the bounding box of an anomaly.

In terms of loss function, we referred to Mean Square Error (MSE) loss as L2, Generalized Intersection over Union (GIoU) loss69 as Lg, and Cross Entropy loss as Lc.

For hazard detection, the final loss was defined as the sum of the bounding box loss (MSE loss and GIoU loss) and the classification loss (Cross Entropy loss), formulated as Lh = L2 + Lg + Lc. For affordance recognition, the final loss was defined as a binary classification loss (Cross Entropy loss), formulated as Lt = Lc. For anomaly detection, the final loss was defined as the sum of the bounding box loss (MSE loss and GIoU loss) and the binary classification loss (Cross Entropy loss), formulated as La = L2 + Lg + Lc.

To decide which model size to adopt, we conducted experiments with different hyper-parameters of the model. The values of the hyper-parameters of the algorithm with an increasing model size were: N = 2, M = 2, C = 128, nh = 4; N = 4, M = 4, C = 256, nh = 8; N = 6, M = 6, C = 256, nh = 8; N = 8, M = 8, C = 512, nh = 8; N = 12, M = 12, C = 1024, nh = 16.

Pretraining was conducted by loading the pretrained model in DETR68.

Training Implementation Details

All models were implemented using PyTorch and DeepSpeed with mixed-precision training (fp16, zero optimization stage 1). We employed the AdamW optimizer with a warm-up scheduler for the first 10% of training steps followed by linear decay, and applied a smaller effective learning rate coefficient of 0.10 to the backbone. The hazard detection model was trained for 100 epochs with an initial learning rate of 2e-4 using 4 NVIDIA A100 GPUs. The affordance recognition model was trained for 300 epochs with an initial learning rate of 5e-4 on a single NVIDIA A100. The anomaly detection model was trained for 400 epochs with an initial learning rate of 2e-4 using 4 NVIDIA A100 GPUs. All tasks utilized a batch size of 32 per GPU.

Evaluation metrics

We adopted different evaluation metrics for different tasks.

For hazard detection, we evaluated algorithmic performance with Box Accuracy (BAcc) which was defined as:

where (x1,y1,x2,y2) and (x1’,y1’,x2’,y2’) were corner coordinates.

For the affordance recognition task, we evaluated algorithmic performance with Classification Accuracy which was defined as:

\({Classification\; Accuracy}=\frac{{\sum }_{{\rm{i}}=1}^{{\rm{n}}}I\left({\hat{{\rm{y}}}}_{{\rm{i}}}={{\rm{y}}}_{{\rm{i}}}\right)}{n}\), where n represented the number of test samples and I represented an indicator function. The indicator function was assigned a value 1 when the predicted label equated the ground truth, and 0 if not.

For the anomaly detection task, we evaluated algorithmic performance with Box Accuracy (BAcc).

Attention visualization

To visualize human attention, we separated human fixation data in the three phases according to the phase proportions in the eye-tracking experiment. When comparing within human participants (Fig. 4a), we averaged over tasks when comparing across image sets and over image sets when comparing across tasks.

Given the human fixation data in each phase, we converted the data into attention heatmaps using the PyGaze70 toolbox. It took two steps to generate a heatmap from the fixation data: (a) blurring the fixation points with a Gaussian filter, and (b) superimposing each point onto the original image.

The Gaussian function G(x,y) used to blur the fixation points was defined as:

\(G\left({\rm{x}},{\rm{y}}\right)=\frac{1}{2{\rm{\pi }}{\sigma }^{2}}{{\rm{e}}}^{-\frac{{{\rm{x}}}^{2}+{{\rm{y}}}^{2}}{2{\sigma }^{2}}}\), where σ was the standard deviation of the Gaussian distribution and impacted the blur radius.

The heatmap intensity at point H(x,y) was formulated as:

\(H\left({\rm{x}},{\rm{y}}\right)={\sum }_{i=1}^{n}G\left({\rm{x}}-{{\rm{x}}}_{i},{\rm{y}}-{{\rm{y}}}_{i}\right)\times {w}_{i}\) where G was the Gaussian function, n was the number of gaze points, (xi,yi) was the coordinates of the i-th fixation point, and wi was the weight (e.g., duration) associated with the i-th gaze point.

To visualize algorithmic attention, we utilized the Grad-cam65 to generate our algorithm’s attention heatmap. Let Y be the score of the result (the definition of the score follows pytorch-grad-cam, https://github.com/jacobgil/pytorch-grad-cam) provided by the network, and Ak be the k-th feature map of the last encoder layer. First, we computed the gradient of Yc with respect to Ak, denoted as \(\frac{\partial {Y}^{c}}{\partial {A}^{k}}\). Then we obtained the weights \({\alpha }_{k}^{c}\) by applying Global Average Pooling (GAP) to these gradients \({\alpha }_{k}^{c}=\frac{\overline{\partial {Y}^{c}}}{\partial {A}^{k}}\). Finally, we applied the ReLU activation function \({L}^{c}={ReLU}\left({{\sum }_{k}\alpha }_{k}^{c}{A}^{k}\right)\) to obtain the heatmap. This process generated a heatmap of the same size as the input image, highlighting the regions most critical for predicting class C.

Correlation coefficient

We first calculated the correlation coefficient between image sets and between tasks for human and algorithmic attention distribution respectively using the same methods.

For each task j (hazard detection, affordance recognition, and anomaly detection) and dataset k (DRAMA, BDD-OIA, and ANOMALY), the attention maps were denoted as \({M}_{j,k}={\left\{{m}_{j,k}^{n}\right\}}_{n=1}^{N}\), where N represented the total number of maps of each task and each dataset. Here, M can represent either human or algorithmic attention maps. Before calculation, each attention map was normalized to ensure the sum of its values equaled 1. We then resized the normalized attention map to a smaller, standardized size S.

For any two given tasks j = j0 \({and}\) j = j1, we first calculated the correlation coefficient between each corresponding image \({m}_{{j}_{0},k}^{n}\) and \({m}_{{j}_{1},k}^{n}\), where k was one of the three datasets. Then we averaged over the absolute values of the correlation coefficients over all 3 N images of the three image sets. We took the absolute values before taking an average because our goal here was to determine the extent to which each two sets of attention maps exhibited consistent patterns of attention distribution, and opposite signs of the correlation coefficients essentially reflected the same patterns being captured. Formally, the final correlation coefficient was calculated as follows:

For the correlation coefficients between any two given image sets k = k0 and k = k1, we followed the same method except that the absolute values were averaged over the three tasks.

We continued to compare between human and algorithmic attention distribution. Towards this end, we calculated the correlation coefficients for paired heatmaps of the two modalities. Specifically, for each human attention phase i (scanning, examining, and reevaluating), task j (hazard detection, affordance recognition, and anomaly detection), image set k (DRAMA, BDD-OIA, and ANOMALY), and level of driving experience l (novice and expert), we executed a cross-comparison between the human and algorithmic attention sets \({H}_{i,j,k,{l}_{h}}\) and \({A}_{j,k,{l}_{a}}\). Notably, while the task j and image k were consistently applied to both human and algorithmic attention sets, the phase i was exclusive to human attention, and the driving experience level l could vary between the two sets. The analysis was applied to each paired set of attention maps \({\left\langle {H}_{i,j,k,{l}_{h}},{A}_{j,k,{l}_{a}}\right\rangle =\left\{\left\langle {h}_{i,j,k,{l}_{h}}^{n},{a}_{j,k,{l}_{a}}^{n}\right\rangle \right\}}_{n=1}^{N}\), where N was the total number of maps. As before, each attention map was first normalized and then resized to the size S. During this process, any data pair \(\left\langle {h}_{i,j,k,{l}_{h}}^{n},{a}_{j,k,{l}_{a}}^{n}\right\rangle\) was discarded if \({h}_{i,j,k,{l}_{h}}^{n}\) was entirely zero-valued. The correlation coefficient was then calculated between \({h}_{i,j,k,{l}_{h}}^{n}\) and \({a}_{j,k,{l}_{a}}^{n}\) as follows:

where \({{h}_{i,j,k,{l}_{h}}^{n}}_{s}\) and \({{h}_{i,j,k,{l}_{h}}^{n}}_{s}\) represented the per-pixel values of the respective attention maps.

The significance level of these correlations was quantified as follows:

Note that, to compare human and algorithmic attention distribution without being influenced by the interaction between image set and task goal, we used attention maps derived from one out of the three tasks for each image set. In other words, we ensured that j = k in the calculation process.

Repeated-measures ANOVA

To examine the effects of factors influencing the similarity between human and algorithmic attention, we conducted a repeated-measures ANOVA on the correlation coefficient between human and algorithmic attention. Note that, this time, we did not take the absolute value of the correlation coefficients. The independent variables were Expertise (novice and expert drivers), Phase (the scanning, examining, and reevaluating phases and full duration) of human attention and Training Setting (without pretraining and finetuning, with pretraining and without finetuning, and with both pretraining and finetuning) of the attention-based algorithm.

Integrating human fixation data with the algorithm

For human eye-tracking data collection, as Expertise had no significant effect on human and algorithmic attention resemblance (see Introduction), we recruited novice drivers for human eye-tracking data collection (anomaly detection: 2 females and 3 males, mean of age = 26.20, s.d. of age = 6.94, mean of years of driving = 2.76, s.d. of years of driving = 2.14; trajectory planning: 2 females and 3 males, mean of age = 23.6, s.d. of age = 3.14, mean of years of driving = 3.4, s.d. of years of driving = 1.96). All drivers held a valid Chinese driver’s licence at the time of data collection. In the anomaly detection task, participants went through the same procedure as the anomaly detection task in the eye-tracking experiment except that there were 1000 experimental trials. In the trajectory planning task, the participants were instructed to press a button as fast as possible to indicated whether they considered a given driving command reasonable or unreasonable. The participants each viewed 835 driving scenes, each extracted from a video of the nuScenes66 dataset, in random orders. In both experiments, participants were encouraged to take a self-paced break after 400 and 750 trials, and they pressed the SPACE key to continue with the procedure.

To investigate the effects of incorporating human attention data on algorithmic performance, it was necessary to address the challenge of acquiring large-scale human eye movement data.

Collecting large-scale human eye-tracking data for the full training set was infeasible. We, therefore, used the abovementioned, smaller, dataset to revise an eye-movement prediction model, which could then generate “pseudo human attention” maps for the full dataset. The steps of this procedure can be described as follows:

-

A.

Data collection: We first collected a new human eye-tracking dataset for the anomaly detection task and the trajectory planning task respectively.

-

B.

Data segmentation: We segmented the raw fixation data into three separate datasets, one for each phase (scanning, examining, and reevaluating). For the anomaly detection task, this segmentation was done by applying the average phase porportions observed in our main eye-tracking experiment for the ANOMALY dataset. These quantified proportions were 41.79%, 29.15%, and 29.06% of total reaction time for the scanning, examining, and reevaluating phases, respectively. For the trajectory planning task, the phases were segmented following the strict definition of the phase boundaries.

-

C.

Model finetuning (offline pipeline, Fig. 5a): We used the Adaptive Driver Attention71 model as our base generator. We then trained three separate, specialized versions of this model. Each version was initialized with the pretrained model weights provided (ref. 71) and then finetuned exclusively on the data from its corresponding phase. For example, the “examining phase generator” was finetuned only on the “examining phase” fixation data.

-

D.

Output and integration (online pipeline, Fig. 5a): The heatmaps generated by these finetuned models are what we term “pseudo human attention”, which was then used as the “gaze image” input for the gaze encoder in our AxANet architecture.

For model finetuning specifically, the model consists of three main modules: a visual feature extraction module, a visual feature processing module, and a temporal processing module. The visual feature extraction module first passes each frame through AlexNet to extract feature maps from the conv5 layer. This module is pretrained on ImageNet and its weights are frozen during training. The extracted features are upsampled to a 64 × 36 spatial resolution before being passed to subsequent layers. The visual feature processing module includes three 1 × 1 convolutional layers, each followed by dropout layers, which reduce the feature dimensionality from 256 to 8 channels. The temporal processing module is a convolutional LSTM network with a 3 × 3 kernel that models temporal dependencies across frames. The final stage of the model includes a Gaussian smoothing layer (σ = 1.5) and a softmax layer, producing a normalized attention probability map.

During training, the model was optimized using a cross-entropy loss between the predicted attention distribution and the ground-truth human attention map for each frame, aligning the network’s output with human gaze behavior. The model was trained using the Adam optimizer with parameters: Learning rate = 0.001, β₁ = 0.9, β₂ = 0.999, ε = 1 × 10−8. Each training batch contained 10 sequences of 6 frames each. The model was trained for 5000 iterations, after which the testing error stabilized.

To incorporate the pseudo human attention data into our algorithm, we modified the anomaly detection framework. Towards this end, we added another gaze encoder to our original algorithm to encode the pseudo data (Fig. 5a). The framework of the gaze encoder was the same as the original image encoder, and it treated human eye movement data as a gaze image and encoded it into latent gaze tokens Rg. Note that the original image encoder encoded each image into latent image tokens Ri. We aggregated Ri and Rg through an aggregation function, which resulted in latent tokens \(\bar{{\rm{R}}}\). Then \(\bar{{\rm{R}}}\) was passed as input to the decoder to perform downstream tasks. The aggregation functions we used were mean pooling, max pooling, log sum, dot product and concatenation.

For the trajectory planning task, we adopted off-the-shelf end-to-end planning models, UniAD and VAD, as our backbones. These models take multi-camera images as input and output a sequence of future waypoints representing the ego-vehicle’s planned trajectory.

To incorporate human attention, we introduced a human-attention auxiliary branch on top of each backbone. The “pseudo human attention” maps (generated as described in the “Finetuning the eye movement prediction model” section) were first encoded by the attention transformer encoder (identical to the one used in AxANet), producing a compact human-attention feature representation aligned with the input images.

After the planning model generated its planning features, these human-attention features were fused with the planning features via a cross-attention module. The fused features were then fed into the planning head for trajectory decoding. During training, we followed the standard optimization settings of UniAD and VAD and jointly trained the added attention branch and cross-attention module, keeping the original task objective and loss formulation unchanged.

We utilized standard trajectory planning metrics: L2 Error (Displacement Error), the Euclidean distance between the predicted trajectory waypoints and the ground truth waypoints, averaged over time horizons of 1 s, 2 s, and 3 s. Collision Rate (Col), the frequency with which the predicted trajectory collides with other agents or obstacles in the scene.

For the VLMs, we modified DriveLM and TOD3Cap separately. For DriveLM, we utilized the official baseline of DriveLM as our primary VLM backbone. The model employs BLIP-2 as the vision-language learner, which consists of a frozen image encoder (EVA-CLIP), a Querying Transformer (Q-Former), and a Large Language Model (LLM). To incorporate human attention, we injected the “pseudo human attention” maps into the Q-Former stage. The attention maps were first processed by our Human Attention Encoder (identical to the one used in AxANet and the trajectory planning tasks) to generate human-attention features. These features were then concatenated with the queries in the Q-Former, allowing the human attention priors to interact with the visual features extracted by EVA-CLIP before being fed into the LLM. We finetuned the model on the DriveLM dataset following the official training protocols, optimizing for the Graph Visual Question Answering (GVQA) task. The performance was evaluated using the Final Score metric defined by the DriveLM benchmark, which aggregates the accuracy of the model’s answers across perception, prediction, and planning questions.

For TOD3Cap, we adopted the official architecture, which consists of a BEV-based detector, a Relation Q-Former, and a frozen LLM (LLaMA-7B) equipped with adapters. The Relation Q-Former is designed to capture context-aware relationships between object proposals and the environment. To incorporate human attention, we encoded the “pseudo human attention” maps using our Human Attention Encoder to obtain spatial attention features. These features were then fused with the object queries in the Relation Q-Former via an additional cross-attention layer. This integration allowed the human attentional priors to guide the model’s understanding of object relationships and scene context. The context-aware features were subsequently processed by the LLaMA-Adapter and fed into the LLM to generate dense captions. We finetuned the model on the TOD3Cap dataset, optimizing for the dense captioning task using the cross entropy loss.

Data availability

All data that support our findings are publicly available at https://osf.io/rc67f/overview?view_only=756b0bf1f69a4d9fa64b1ea5b03ada76. The data provided are experimental stimuli in the eye-tracking experiment, raw human eye-tracking and behavioral data in the eye-tracking experiment, raw human eye-tracking and behavioral data in the later eye-tracking data collecting session, full list of algorithmic attention visualization, and correlation coefficient between human and algorithmic attention.

Code availability

The scripts and code for human data collection, human data analysis, comparison between human and algorithmic attention distribution, and model training is available at https://github.com/sammichenco-z/AxANet.

References

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems, 30 (NIPS, 2017).

Xu, R. et al. V2x-vit: Vehicle-to-Everything Cooperative Scanning with Vision Transformer, 107–124 (ECCV, 2022).

Brown, T. et al. Language models are few-shot learners. Adv.Neural.Inf.Process.Syst. 33, 1877–1901 (2020).

Anderson, J. M. et al. Autonomous Vehicle Technology: A Guide for Policymakers (Rand Corporation, 2014).

Fagnant, D. J. & Kockelman, K. Preparing a nation for autonomous vehicles: opportunities, barriers and policy recommendations. Transp. Res. Part A Policy Pract. 77, 167–181 (2015).

Prakash, A., Chitta, K. & Geiger, A. Multi-modal fusion transformer for end-to-end autonomous driving. In Proceedings of IEEE/CVF Conference on CVPR 7077–7087 (IEEE, 2021).