Abstract

Continuous glucose monitoring (CGM) combined with AI offers new opportunities for proactive diabetes management through real-time glucose forecasting. However, most existing models are task-specific and lack generalization across patient populations. Inspired by the autoregressive paradigm of large language models, we introduce CGM-LSM, a Transformer decoder-based Large Sensor Model (LSM) pretrained on 1.6 million CGM records from patients with different diabetes types, ages, and genders. We model patients as sequences of glucose time steps to learn latent knowledge embedded in CGM data and apply it to the prediction of glucose readings for a 2-h horizon. Compared with prior methods, CGM-LSM significantly improves prediction accuracy and robustness: a 48.51% reduction in root mean square error in 1-h horizon forecasting and consistent zero-shot prediction performance across held-out patient groups. We analyze model performance variations across patient subgroups and prediction scenarios and outline key opportunities and challenges for advancing CGM foundation models.

Introduction

Diabetes imposes significant burdens on individuals, families, and healthcare providers. Uncontrolled diabetes has been associated with a variety of severe medical complications, including heart attacks1, kidney failure2, and diabetic neuropathy3. In 2021, 38.4 million Americans (11.6% of the U.S. population) had diabetes, making it the eighth leading cause of death4. By 2022, diabetes management costs had soared to ~$412.9 billion annually (25% of total healthcare expenditures)4. The rising societal and economic burden of diabetes combined with a growing global shortage of healthcare professionals necessitates new approaches to diabetes care and prevention5.

Effective self-management is crucial for people with diabetes to manage their condition and avoid complications. To inform their daily decision-making processes, patients need actionable information. Artificial intelligence (AI) holds considerable promise to provide such information by generating personalized interventions tailored to individual needs. However, most AI applications in diabetes management have been centered on predicting the overall risk of diabetes progression over long time horizons, and such predictions are difficult for individuals to convert into daily diabetes management activities. This shortcoming underscores the need for AI solutions in diabetes management that provide timely, micro-level, personalized, actionable predictions to enable patients to improve their self-care6,7,8.

In recent years, continuous glucose monitoring (CGM) systems, which continuously measure glucose values every 5 min, have emerged as a critical tool in diabetes control9,10. One particularly promising approach to meeting the patient needs described above lies in combining CGM with AI to predict near-future glucose values11. However, little research has addressed this task, which is inherently challenging due to the complexity and variability of individual behaviors captured by CGM. This makes it difficult to accurately predict a patient’s glucose levels for the next 2 h12. Studies using simulated CGM data or datasets from a limited number of patients report poor prediction performance13,14,15,16,17,18. Such poor performance can be caused not only by inadequate data but also by training the model using random initialization with zero glucose generation knowledge. In this context, the question of how to utilize the hidden knowledge within CGM data patterns to empower prediction models remains unaddressed.

To address this gap, we present a new approach to glucose prediction for diabetes management that harnesses the pretraining technique exemplified in large language models (LLMs). We pretrained the model to learn the latent glucose generation mechanisms hidden in massive CGM data. Considering that language is represented as a sequence of tokens and LLMs learn through next-token prediction, we also modeled person-generated glucose data as a sequence of time steps (e.g., every 5 min) and proposed a large sensor model (LSM) to learn massive sensor data with next-step prediction. We hypothesize that an LSM pretrained on CGM data (CGM-LSM) could learn glucose fluctuation patterns and achieve high performance in predicting glucose values.

We developed the CGM-LSM on a large CGM dataset with 15.96 million glucose records from 592 patients with diabetes. We validated its effectiveness in improving near-future glucose prediction using metrics of accuracy and robustness. Benchmarked against the public OhioT1DM dataset, CGM-LSM achieved a root Mean Squared Error (rMSE) of 15.90 mg/dL for 1-h horizon prediction, which is 48.51% lower than that of the baseline approaches, even though our model was not trained on the OhioT1DM dataset16. The model’s robustness was confirmed through analyses assessing generalizability across varying prediction horizons, performance metrics, diabetes types, unseen patients, and age and gender groups. Notably, prediction accuracy declined during high-variability contexts, such as daytime hours, mealtimes, and glucose extremes. This highlights the need for future studies to address underrepresented scenarios.

This study proposes the LSM pretrained with glucose sensor data and demonstrates its significant improvement in near-future glucose prediction. It offers a new methodology not only for timely and personalized diabetes self-management but also for learning from massive sensor data.

Results

Datasets

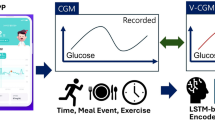

The key data point in our study is an “instance,” defined as a combination of a patient and an observation datetime. This datetime represents the time when the model is triggered to observe the patient’s records and generate predictions. As shown in Fig. 1B, inclusion as a valid instance requires 288 CGM entries from the 24 h prior to the datetime and 24 entries in the subsequent 2 h, for a total of 26 h of CGM data.

A The instance selection process used to construct the internal test set, temporal test set, held-out test set, and training set. B The input-output pair construction process for one instance. Each instance is a combination of a patient and an instance datetime. The instance contains the 288 CGM records from the 24 h before the datetime and the 24 CGM records from the 2 h after it. C The pretraining process for one instance given 26 h of CGM records. D The prediction (generation) process for one instance given 24 h of CGM records.

To pretrain our CGM-LSM model, we constructed a dataset of 592 individuals diagnosed with diabetes (291 T1D and 301 T2D). The dataset contains 15,961,183 valid instances, 7,788,836 from T1D patients and 8,172,347 from T2D. The distribution is consistent across various demographics, with 2,901,601 instances involving T1D individuals aged 18–39 and 524,354 involving their T2D counterparts. Female T1D patients account for 4,150,047 instances, while T2D females contribute 2,983,547 instances.

To benchmark its performance against baseline methods, we evaluated our pretrained model on the OhioT1DM dataset19. After applying a similar preprocessing method, we obtained 12 T1D patients with a total of 58,414 instances (44,275 for training, 4,925 for validation, and 9,214 for testing). The details of the data processing are provided in the Supplementary Information.

CGM-LSM pretraining and generation

As shown in Fig. 1A, to test the model’s robustness and generalizability, we randomly split the dataset into two patient groups: held-in and held-out. Data from held-out patients (10% of patients) were excluded from the training phase and reserved exclusively as the “held-out” test set. Each “held-in” patient’s instances were chronologically sorted based on observation datetimes. The latter 10% were reserved to form the “temporal” test set, intended for evaluating model performance on unseen future periods. The remaining 90% of instances were further randomly split, with 10% used as the “internal” test set and 80% as the “training” dataset. This stratification allowed the model to be evaluated on unseen future periods and on unseen patients. To mitigate overfitting and enhance computational efficiency, a random 10% down-sampling was applied to each dataset. The final numbers of patients and instances are summarized in Table 1.
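The splitting procedure described above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the study's actual code: the function name `split_instances`, the `(patient_id, observation_time)` tuple layout, and the rounding rules are hypothetical, and the final 10% down-sampling step is omitted.

```python
import random


def split_instances(instances, held_out_frac=0.10, temporal_frac=0.10,
                    internal_frac=0.10, seed=0):
    """Split (patient_id, observation_time) instances into four sets.

    Hypothetical helper: fractions follow the text, rounding is an assumption.
    """
    rng = random.Random(seed)

    # 1) Patient-level split: held-out patients never appear in training.
    patients = sorted({pid for pid, _ in instances})
    rng.shuffle(patients)
    n_held_out = max(1, round(len(patients) * held_out_frac))
    held_out_patients = set(patients[:n_held_out])

    splits = {"held_out": [], "temporal": [], "internal": [], "training": []}
    by_patient = {}
    for pid, t in instances:
        if pid in held_out_patients:
            splits["held_out"].append((pid, t))
        else:
            by_patient.setdefault(pid, []).append((pid, t))

    for rows in by_patient.values():
        # 2) Temporal split: the latest 10% of each held-in patient's instances.
        rows.sort(key=lambda r: r[1])
        cut = len(rows) - round(len(rows) * temporal_frac)
        splits["temporal"].extend(rows[cut:])

        # 3) Random split of the earlier 90% into internal (10%) and training (80%).
        earlier = rows[:cut]
        rng.shuffle(earlier)
        n_internal = round(len(rows) * internal_frac)
        splits["internal"].extend(earlier[:n_internal])
        splits["training"].extend(earlier[n_internal:])
    return splits
```

Splitting by patient first and by time second is what lets the held-out set measure generalization to unseen patients while the temporal set measures generalization to unseen future periods of known patients.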

The training process (Fig. 1C) employed a Transformer decoder architecture20 to develop CGM-LSM through autoregressive training. This design is particularly advantageous for learning temporal dependencies in continuous glucose data. Compared to convolutional neural networks (CNNs)21, which are limited in receptive field unless heavily stacked, and recurrent models, such as RNNs22, LSTMs23, and GRUs24, which suffer from vanishing gradients and sequential bottlenecks, the Transformer decoder enables efficient access to all past timesteps via self-attention. This allows the model to flexibly capture both short- and long-range glucose dynamics without relying on fixed context windows or handcrafted lag features. Furthermore, unlike Transformer encoder-only architectures, which process sequences non-autoregressively and are commonly used for classification or embedding tasks, the decoder structure is well-suited for sequence generation. It operates autoregressively, predicting each future glucose value based on previously generated values, making it highly aligned with the objective of pretraining via next-step prediction. This autoregressive setup closely mirrors the downstream forecasting task and enables the model to learn structured temporal patterns in a self-supervised manner. As a result, CGM-LSM demonstrates improved generalization and robustness across patients and time horizons.

In the training process, the model predicts the glucose value in the next time step by iteratively processing each time step across a 26-h window. To model glucose trajectories, we discretize glucose values into categorical tokens and apply a cross-entropy loss over the token vocabulary. This design enables us to utilize the autoregressive pretraining frameworks used in large language models. It is also motivated by the nonlinear clinical significance of glucose values: a change from 60 to 80 mg/dL (crossing from hypoglycemia to euglycemia) carries different clinical implications than a change from 180 to 200 mg/dL, which remains within a hyperglycemic range. By learning token embeddings rather than using raw values, the model can capture these asymmetric and context-dependent relationships effectively. This token-based formulation also aligns well with recent time-series foundation models such as Time-LLM25 and Chronos26, which demonstrate that discretization combined with learned embeddings can generalize well across complex temporal sequences. In the generation process (Fig. 1D), for each instance, the model takes the previous 24 h of CGM data as input to predict the glucose values for the subsequent 2 h.
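The tokenization, next-step objective, and generation loop described above can be illustrated with a small standard-library sketch. Everything named here is an assumption made for illustration: the glucose range of 40 to 400 mg/dL, the 1 mg/dL bin width, and the helpers `glucose_to_token`, `next_step_pairs`, `cross_entropy`, and `generate` are hypothetical and do not reproduce the actual CGM-LSM vocabulary or training code.

```python
import math

GLUCOSE_MIN, GLUCOSE_MAX = 40, 400  # assumed sensor range in mg/dL
BIN_WIDTH = 1                       # assumed: one token per mg/dL


def glucose_to_token(value_mg_dl):
    """Clip a reading to the assumed range and map it to a token id."""
    clipped = min(max(value_mg_dl, GLUCOSE_MIN), GLUCOSE_MAX)
    return int(round((clipped - GLUCOSE_MIN) / BIN_WIDTH))


def token_to_glucose(token):
    """Map a token id back to a glucose value in mg/dL."""
    return GLUCOSE_MIN + token * BIN_WIDTH


VOCAB_SIZE = glucose_to_token(GLUCOSE_MAX) + 1


def next_step_pairs(tokens):
    """Autoregressive pairs: the prefix before step t is used to predict step t."""
    return [(tokens[:t], tokens[t]) for t in range(1, len(tokens))]


def cross_entropy(logits, target):
    """Negative log-likelihood of `target` under softmax(logits)."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(x - m) for x in logits))
    return log_z - logits[target]


def generate(predict_next, context_tokens, steps=24):
    """Roll forward `steps` times, feeding each predicted token back as input."""
    history = list(context_tokens)
    outputs = []
    for _ in range(steps):
        nxt = predict_next(history)  # any callable: token history -> next token
        outputs.append(nxt)
        history.append(nxt)
    return outputs
```

With 5-min sampling, `steps=24` corresponds to the 2-h horizon, and `predict_next` stands in for the pretrained decoder (for example, the argmax over its output logits).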

Evaluation of pretraining effectiveness

To assess whether the model benefits from large-scale pretraining, we begin by examining what it learns about the structure of glucose values during pretraining. The first question is whether the model, despite using a categorical tokenization scheme, can recover the inherent continuity and ordering of numerical glucose values. We tokenize glucose values into discrete bins to enable autoregressive sequence modeling with a Transformer decoder. While this discretization simplifies model input and lets each glucose value carry its own learned representation, it risks discarding the continuous nature of glucose signals unless the model can infer this structure implicitly.

To evaluate this, we visualize the CGM-LSM’s token embeddings after pretraining using t-SNE (Fig. 2). Each point corresponds to an individual glucose token, and points are colored by their glucose value range. The embeddings form smooth, coherent trajectories in the low-dimensional space, with adjacent tokens aligning in a physiologically meaningful order. This spatial organization suggests that the model learns to encode ordinal and contextual relationships among glucose values through pretraining. These results indicate that categorical tokenization, combined with large-scale pretraining, enables the model to learn the latent numerical structure of CGM data.

To evaluate whether the model’s learned token representations and attention-based dynamics contribute to meaningful improvements in forecasting, we assess CGM-LSM’s performance on the publicly available OhioT1DM dataset19. This benchmark was not used during pretraining or fine-tuning, providing a rigorous test of zero-shot generalization. As shown in Table 2, CGM-LSM significantly outperforms traditional sequence models across all forecasting horizons. At the 30-min horizon, it achieves an rMSE of 9.02 mg/dL, representing more than a 65% reduction in error compared to baseline models. At the 1-h horizon, CGM-LSM maintains strong performance with an rMSE of 15.90 mg/dL, representing a 48.51% reduction compared to vanilla Transformer27. At the 2-h prediction horizon, CGM-LSM achieves an rMSE of 26.88 mg/dL, outperforming the strongest baselines by 26.67%. These results indicate that CGM-LSM generalizes well across patients and longer time horizons.

We believe CGM-LSM’s strong performance on OhioT1DM can be attributed to the pretraining on a large and diverse corpus of CGM sequences spanning different patient types, diabetes subgroups, and glycemic patterns. Through exposure to a broad distribution of glucose trajectories, the model learns to capture latent structure and hidden physiological patterns that small datasets alone cannot reveal. This enables CGM-LSM to make accurate forecasts even for unseen patients by utilizing its attention mechanism and token representations. In contrast, models trained directly on limited datasets like OhioT1DM are prone to underfitting and often fail to exploit the full modeling capacity of deep architectures.

CGM-LSM performance for temporal test and held-out patients

Assessing the model’s ability to generalize to real-world scenarios, such as future periods and unseen patients, is crucial for practical disease management. To this end, taking advantage of the Welldoc dataset, we focus on two key evaluation settings: the temporal test, which simulates future glucose prediction for existing patients based on their prior CGM history, and the held-out test, which evaluates zero-shot performance on entirely new patients at onboarding. We hypothesized that both settings would pose greater challenges for CGM-LSM than the internal test set, due to temporal shifts in the temporal set and patient variability in the held-out set, both of which deviate from the training data distribution.

Despite these added complexities, CGM-LSM’s performance gap between the temporal and held-out sets versus the internal set remained minimal. For T1D-2H predictions, rMSE was 28.28 mg/dL for the internal set, increasing slightly to 29.43 mg/dL (+4.07%) for the temporal set and 29.81 mg/dL (+5.41%) for the held-out set. Similarly, for T2D-2H predictions, rMSE increased modestly from 22.65 mg/dL for the internal set to 23.22 mg/dL (+2.51%) for the temporal set and 23.49 mg/dL (+3.71%) for the held-out set. These results show that, although temporal and held-out predictions posed additional challenges, CGM-LSM maintained high accuracy in both settings. Notably, performance on the temporal test was consistently better than on the held-out set, suggesting that access to a patient’s prior CGM data confers an advantage. This indicates that continued pretraining or adaptation using a patient’s longitudinal data may further enhance personalized forecasting accuracy. Additional model performances are available in the Supplementary Information.
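For reference, the rMSE metric and the relative increases quoted above can be reproduced with a few lines of Python. This is a sketch: the function names `rmse` and `relative_increase_pct` are hypothetical. Percentages recomputed from the rounded rMSE values in the text may differ from the reported ones in the last digit.

```python
import math


def rmse(y_true, y_pred):
    """Root mean squared error between two equal-length sequences (mg/dL)."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("sequences must be non-empty and of equal length")
    squared = [(t - p) ** 2 for t, p in zip(y_true, y_pred)]
    return math.sqrt(sum(squared) / len(squared))


def relative_increase_pct(reference, value):
    """Percentage change of `value` relative to `reference`."""
    return 100.0 * (value - reference) / reference


# T1D, 2-h horizon: internal set (28.28 mg/dL) vs. temporal set (29.43 mg/dL).
t1d_temporal_gap = round(relative_increase_pct(28.28, 29.43), 2)  # 4.07
```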

Performance across diabetes type, age, and gender

Diabetes is a complicated disease with distinctive glucose generation mechanisms among patients. A patient’s diabetes type, age, and gender significantly influence how glucose levels evolve over time28,29. Considering this heterogeneity, we analyzed CGM-LSM’s performance across diabetes type, age, and gender.

CGM-LSM demonstrated strong performance for both T1D and T2D patients. For T1D patients, rMSE scores were 8.93 mg/dL (30 min), 16.91 mg/dL (1H), and 29.81 mg/dL (2H). Performance was better for T2D patients, with lower rMSE scores of 7.77 mg/dL (30 min), 13.88 mg/dL (1H), and 23.49 mg/dL (2H). These results underscore the model’s robustness across diabetes types. For T2D, the rMSE rose slightly by 3.71% from 22.65 mg/dL (internal) to 23.49 mg/dL (held-out), as shown in Table 2. For T1D, the rMSE increase was larger, rising by 5.41% from 28.28 mg/dL (internal) to 29.81 mg/dL (held-out), which was both proportionally and absolutely higher. This indicates that CGM-LSM has higher generalizability for unseen T2D patients than T1D patients.

Figure 3A shows rMSE-2H performance differences across age groups and evaluation sets. These results indicate stable CGM-LSM performance across age groups. In T1D held-out sets, rMSE scores ranged from 24.77 to 31.78 mg/dL, or 83.09% to 106.61% of the overall rMSE (29.81 mg/dL). For T1D, rMSEs peaked in the 18–39 group, decreased in the 40–64 group, and stabilized further in the 65+ cohort. The 65+ cohort also had the smallest gap between internal and held-out sets. These findings suggest that our model provides greater accuracy and robustness for older groups, possibly due to differences in disease management or progression. For T2D, CGM-LSM showed a high error in the held-out set for the 18–39 group. This spike likely reflects the small sample size, with only one T2D patient in the held-out set (Table 1). Excluding this outlier, T2D rMSEs were lower and more uniform across age groups compared to T1D. This indicates greater accuracy and robustness for T2D.

Figure 3B highlights CGM-LSM’s performance across gender, showing stable results across groups. For T1D held-out sets, rMSE scores ranged from 27.00 to 31.88 mg/dL (90.57–106.94% of the overall 29.81 mg/dL). Males consistently had better prediction performance than females across all evaluation sets for both T1D and T2D. Among T1D patients, the rMSE gap between internal and held-out sets was notably larger for females (3.19 mg/dL) than for males (−0.8 mg/dL). These findings suggest the need for tailored model improvements to better accommodate unseen female T1D patients. Additional model performances for age and gender are available in the Supplementary Information.

To explore the higher prediction errors observed in specific subgroups—particularly females, younger adults, and patients with Type 1 diabetes—we conducted an error analysis based on CGM-derived features. As summarized in Table 3, these groups exhibited greater glucose variability (higher standard deviation and coefficient of variation), faster glucose shifts (higher mean rate of change), and less time spent in the healthy glucose range (lower TIR). For example, females had a standard deviation of 45.18 mg/dL compared to 41.42 mg/dL in males, along with a higher time-above-range (32.7% vs. 29.5%). Similarly, the 18–39 age group showed the highest variability and fastest glucose dynamics. These physiological patterns likely contribute to increased modeling difficulty and reduced prediction accuracy.
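The CGM-derived features used in this error analysis can be computed along the following lines. This is a sketch under stated assumptions: the 70 to 180 mg/dL target range and the use of the mean absolute rate of change are common conventions assumed here, since this section does not give the exact definitions behind Table 3, and `cgm_features` is a hypothetical helper.

```python
import statistics


def cgm_features(glucose, low=70, high=180, step_min=5):
    """Summary features for a CGM trace sampled every `step_min` minutes.

    `low`/`high` define the assumed target range in mg/dL.
    """
    n = len(glucose)
    mean = statistics.fmean(glucose)
    sd = statistics.pstdev(glucose)
    # Absolute rate of change between consecutive readings, in mg/dL per minute.
    rates = [abs(b - a) / step_min for a, b in zip(glucose, glucose[1:])]
    return {
        "mean": mean,
        "sd": sd,
        "cv_pct": 100.0 * sd / mean,
        "mean_abs_roc": statistics.fmean(rates) if rates else 0.0,
        "tir_pct": 100.0 * sum(low <= g <= high for g in glucose) / n,
        "tar_pct": 100.0 * sum(g > high for g in glucose) / n,
        "tbr_pct": 100.0 * sum(g < low for g in glucose) / n,
    }
```

Comparing these features between subgroups gives a quick check of whether higher forecasting error coincides with higher variability or less time in range.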

Performance across prediction scenarios

We evaluated CGM-LSM’s prediction performance across diverse real-world contexts, focusing on two key factors at prediction time: the hour of day and the current glucose level. These factors reflect underlying physiological rhythms and behavioral influences that affect blood glucose dynamics.

Human glucose generation is influenced by lifestyle factors like diet, exercise, and medications. Glucose levels and fluctuations vary by time of day due to their association with human activities like eating and exercise. Given this link, understanding CGM-LSM’s performance at different hours is crucial for its safe application. Figure 4A, B shows CGM-LSM’s prediction performance at different hours of the day, measured via rMSE over 30-min, 1-h, and 2-h horizons using the held-out set for T1D and T2D patients. Each line in Fig. 4 represents the model’s rMSE values across different hours of the day. The model performed consistently well across prediction settings. Clear trends emerged based on prediction horizons and time of day. Shorter horizons (e.g., 30 min) had lower rMSE, reflecting better capture of short-term fluctuations. The rMSE score peaked at noon and evening hours. The higher rMSE during these hours likely reflects impacts from diet, exercise, and treatment routines. In contrast, rMSE was notably lower at night. Nocturnal glucose levels, which are less influenced by behavior, allowed CGM-LSM to predict more accurately. These findings demonstrate the importance of temporal factors and human behaviors in glucose prediction.

In addition to time-based variation, prediction performance also differed based on the glucose level at the time of prediction (Fig. 4C, D). These plots reveal rMSE across a range of glucose values, providing insight into the model’s reliability across glycemic states. Performance was strongest in euglycemic ranges (~100–150 mg/dL), where predictions remained relatively stable. However, rMSE rose sharply in both hypoglycemic (<70 mg/dL) and hyperglycemic (>250 mg/dL) ranges, particularly for longer horizons. This trend likely reflects a combination of physiological volatility and data imbalance. Extreme glucose states are rarer in the training set, leading to undertrained token embeddings in autoregressive prediction. Furthermore, glucose behavior in these regions is more dynamic and less predictable due to compensatory mechanisms (e.g., insulin action, counterregulation, carb intake). These findings emphasize that model performance is not uniform across the glucose spectrum and underscore the need for cautious interpretation in edge cases.
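Scenario-level analyses of this kind amount to grouping prediction errors by a context variable before computing rMSE. The sketch below assumes a hypothetical record layout (`hour`, `current`, `true`, `pred`) and an arbitrary 50 mg/dL band width. It is meant only to illustrate the grouping, not to reproduce the figures.

```python
import math
from collections import defaultdict


def rmse_by_group(records, key):
    """Compute rMSE separately for each group returned by `key(record)`."""
    squared_sum = defaultdict(float)
    count = defaultdict(int)
    for r in records:
        group = key(r)
        squared_sum[group] += (r["true"] - r["pred"]) ** 2
        count[group] += 1
    return {g: math.sqrt(squared_sum[g] / count[g]) for g in squared_sum}


def hour_of_day(record):
    """Group by the hour (0-23) at which the prediction was triggered."""
    return record["hour"]


def glucose_band(record, width=50):
    """Group by the lower edge of the band holding the current glucose value."""
    return (record["current"] // width) * width
```

Calling `rmse_by_group(records, hour_of_day)` yields one rMSE per hour, while `rmse_by_group(records, glucose_band)` yields one rMSE per glucose band at prediction time.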

Discussion

Our study demonstrates that CGM-LSM is a robust, high-performance model for glucose forecasting across a range of real-world scenarios. This is made possible in large part by the richness and diversity of our pretraining dataset, which includes over 15 million CGM records from 592 patients spanning different diabetes types, ages, and genders. This diversity ensures that the model is exposed to a wide spectrum of glucose trajectories during pretraining, enabling it to learn generalizable temporal patterns.

Our model is a decoder-only Transformer based on the GPT-2 architecture, designed to predict glucose trajectories by modeling CGM values as categorical tokens in an autoregressive manner. Each instance includes a 26-h CGM sequence, where the model predicts the next token one step at a time based on preceding values. This architecture is particularly suited for glucose forecasting due to its ability to capture both short- and long-range temporal dependencies via self-attention. Unlike RNNs (e.g., LSTM, GRU), which process sequences sequentially and may suffer from vanishing gradients over long context windows, the Transformer decoder attends over all prior time steps in parallel, effectively modeling complex physiological patterns such as insulin response or circadian rhythms. Several prior works have explored various model architectures for glucose prediction, including attention-based RNNs16, CNNs15, and ensemble approaches30, but have achieved limited performance improvement. These approaches suffer from: (1) unsuitable model structures that either struggle with long-range dependencies or lack parallel processing capabilities; and (2) insufficient data leading to underfitting, as highlighted by transfer learning and augmentation attempts in recent studies17. Importantly, the autoregressive setup aligns naturally with the forecasting task: the model is pretrained to generate the next glucose token based on past values, which directly mirrors the downstream prediction objective. This allows CGM-LSM to learn temporal patterns in a self-supervised fashion.

A key design decision in CGM-LSM is the treatment of glucose values as categorical tokens, learned through a Transformer decoder in an autoregressive fashion. This token-based design accounts for the nonlinear and asymmetric physiological meaning of glucose values. Quantitatively, CGM-LSM outperforms prior methods by a substantial margin. On the OhioT1DM dataset, CGM-LSM reduced rMSE by 48.51% at the 1-h horizon compared to widely used baselines. These gains were consistent across both short-term (30 min) and long-term (2 h) prediction horizons, demonstrating CGM-LSM’s ability to capture both immediate fluctuations and longer-range glucose dynamics. Moreover, on the Welldoc dataset, CGM-LSM achieved strong prediction performance in challenging generalization settings, including temporal splits (predicting future periods) and held-out patients. These findings imply that CGM-LSM can serve as a more reliable forecasting tool in dynamic and diverse clinical environments, where patient profiles and care contexts vary over time. The model also demonstrated demographic robustness, performing consistently across patient subgroups defined by age, gender, and diabetes type. This performance evaluation is essential for ensuring fair access and effectiveness across heterogeneous populations, and highlights the model’s suitability for broad deployment in real-world diabetes management16. We believe CGM-LSM’s superior prediction accuracy and robustness are driven by its pretraining on the large-scale CGM data, which allows the model to learn glucose generation mechanisms from sensor curves. Its success indicates the value of applying foundation models to large-scale sensor data for improved prediction.

At the same time, we found subgroups with higher prediction errors (e.g., T1D, younger, or female patients). These differences suggest that certain populations may present greater physiological complexity or variability, potentially requiring more tailored modeling strategies. Motivated by these disparities, we further examined the model’s performance across different application scenarios—including time of day and glucose level at time of prediction. Accuracy tended to decline during daytime hours, particularly around meals, and at glucose extremes (e.g., hypoglycemia and hyperglycemia). These are periods when human behavior (e.g., eating, exercising) or physiological instability likely introduce higher variability and fewer representative training examples. These findings are important for two reasons. First, they demonstrate the value of subgroup-aware and context-aware evaluations, extending beyond aggregate accuracy to assess where model performance may falter. Second, these results offer actionable guidance for future foundation model design. On one hand, they motivate personalization efforts, such as subgroup-specific fine-tuning or adaptive calibration. On the other hand, they highlight a need to incorporate human behavior and temporal rhythms more explicitly into model architectures, possibly through auxiliary inputs or multimodal pretraining. While large-scale pretraining enables CGM-LSM to generalize across many settings, these remaining limitations emphasize that scale alone is not sufficient. Continued progress will require models that are not only data-rich but behavior-aware and fairness-conscious, capable of adapting to the diversity inherent in real-world clinical deployment.

Our study has several key limitations that warrant future analysis. First, our approach models glucose values as discrete categorical tokens, enabling the model to learn meaningful representations through token embeddings. However, how a numeric representation strategy might work in a pretrained foundation model remains unexplored and requires further empirical research. Second, many modeling design questions remain unaddressed, such as how model size, model structure, and data scale affect forecasting performance. Investigating whether scaling laws, observed in language models, hold in the CGM context could yield insights into the trade-offs between scale and generalization. Third, we rely solely on CGM sequences without incorporating contextual information such as medication, meals, physical activity, or education events. These behavioral and clinical inputs are known to affect glucose dynamics but are often missing or sparsely recorded. Integrating such data streams into foundation models poses challenges in both modeling and data availability. Fourth, we did not explore patient-specific fine-tuning or adaptation. While CGM-LSM generalizes well across populations, individual responses to interventions or lifestyle changes are highly personalized. Future work should examine methods for fine-tuning with a few days of CGM to support truly personalized diabetes management. Together, these limitations highlight both the promise and the complexity of building general-purpose foundation models for physiological time series. Addressing them will require innovations in representation learning, model architecture, data integration, and adaptive training strategies.

In conclusion, this study uses CGM sensor data to pretrain a large sensor model that improves near-future glucose prediction. It extends AI for diabetes management from long-term risk prediction to near-future prediction, enabling patients to act to keep their glucose in range. This glimpse into the near future can give patients quick feedback and help them improve their diabetes self-management.

Methods

Datasets

The protocol was approved by the Johns Hopkins School of Medicine Institutional Review Board (IRB00447704). For this study, we used two datasets for our analysis: CGM data collected from Welldoc, Inc. for pretraining and the OhioT1DM data for benchmarking.

Welldoc data

This dataset, used for pretraining the CGM-LSM model, initially contained 21,215,912 CGM records from 617 patients. The data were de-identified, with only patient age, gender, and diabetes type available for analysis.

To ensure compliance with de-identification requirements, we applied a random-day shift method to the datetime values associated with each patient, consistent with the approach utilized in the MIMIC-III dataset31. This technique enhances data security by anonymizing the temporal aspects of the dataset while preserving the relative patterns and temporal coherence of the datetime information for each individual. By maintaining the internal structure of temporal data, this method facilitates meaningful analysis without compromising patient confidentiality.
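A per-patient random-day shift of this kind can be sketched as follows. The function name `shift_patient_times`, the one-year maximum shift, and the record layout are assumptions for illustration. The seeded generator is used here only for reproducibility, whereas a real de-identification pipeline would draw offsets from a secure source and keep them secret.

```python
import random
from datetime import datetime, timedelta


def shift_patient_times(records, max_shift_days=365, seed=0):
    """Shift each patient's timestamps by one random whole number of days.

    records: iterable of (patient_id, datetime, glucose) tuples.
    The same offset is reused for all records of a patient, so intervals
    between readings and the time of day are preserved.
    """
    rng = random.Random(seed)  # illustrative only, not a secure source
    offsets = {}
    shifted = []
    for patient_id, timestamp, glucose in records:
        if patient_id not in offsets:
            days = rng.choice([-1, 1]) * rng.randint(1, max_shift_days)
            offsets[patient_id] = timedelta(days=days)
        shifted.append((patient_id, timestamp + offsets[patient_id], glucose))
    return shifted
```

Because the offset is a whole number of days, hour-of-day analyses remain valid after shifting.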

We constructed instances to mirror the deployment context, in which the model predicts future health outcomes for a given patient at a given instance datetime based on the patient information available at that time. Thus, each instance was associated with a patient and a specific instance datetime. Instance datetimes were derived from the entry times recorded in the CGM system. In the application scenarios, when new glucose data are recorded from the CGM system, our model is triggered to observe patient information and then predict glucose levels for the next 2 h.

To ensure that each instance had sufficient glucose readings for effective learning by the model, we applied specific filtration criteria to retain only valid instances from the raw dataset. First, we assessed the adequacy of input data based on the number of CGM records available prior to the instance datetime. Specifically, we required that a patient have 288 CGM readings recorded in the 24 h preceding the instance datetime to be included. Additionally, to mitigate the influence of consistent measurement errors that might skew the data, we calculated the mode rate of the CGM sequence. Any instance with a mode rate exceeding 40% was excluded to avoid potential noise in the dataset.

Second, for an instance to be considered valid, we imposed the restriction that it contains reliable “output” data for prediction. This required the data to include a complete record of the subsequent 2 h of CGM data (24 CGM records) following the instance datetime. We imposed the requirement of a mode rate below 40% for these 2-h CGM records as well.
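The two validity checks can be sketched as below. The text does not define the mode rate formally; the sketch assumes it is the share of readings equal to the most frequent value, and it treats exactly 40% as acceptable. Both choices, and the function names, are assumptions of this example.

```python
from collections import Counter

INPUT_LEN = 288       # readings required in the preceding 24 h
OUTPUT_LEN = 24       # readings required in the following 2 h
MAX_MODE_RATE = 0.40  # threshold from the text; boundary handling is assumed

def mode_rate(values):
    """Fraction of readings equal to the most frequent value (assumed definition)."""
    if not values:
        return 1.0
    return Counter(values).most_common(1)[0][1] / len(values)

def is_valid_instance(past_24h, next_2h):
    """Check input and output windows around one instance datetime."""
    return (len(past_24h) == INPUT_LEN
            and len(next_2h) == OUTPUT_LEN
            and mode_rate(past_24h) <= MAX_MODE_RATE
            and mode_rate(next_2h) <= MAX_MODE_RATE)

# Hypothetical windows: a varied day, a sensor stuck at one value, a rising 2 h
varied = [100 + (i % 50) for i in range(INPUT_LEN)]
stuck = [400] * INPUT_LEN
rising = [120 + i for i in range(OUTPUT_LEN)]
```

Here `is_valid_instance(varied, rising)` passes, while `is_valid_instance(stuck, rising)` fails because a single value accounts for the whole input window.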

We chose a 24-h window prior to the instance datetime to encapsulate daily patterns and provide a comprehensive view of a patient’s typical day. The subsequent 2-h window was selected because it is deemed clinically significant. Compared to shorter spans such as 30 min or 1 h, a 2-h prediction window offers a balance of enabling accurate short-term forecasts and avoiding the increased uncertainty associated with longer prediction horizons, such as 3 h, which may introduce unreliability due to numerous unobserved variables.

After filtering the data as described above, we retained 15,961,183 valid CGM records from 592 individuals. Of these patients, 291 have type 1 diabetes (T1D) and 301 have type 2 diabetes (T2D). The participants are 52.2% male (309 individuals) and 47.8% female (283 individuals). The dataset includes time-stamped glucose records that were utilized for model training. Compared with previous benchmark data, our novel Welldoc dataset is substantially larger, with CGM data from diverse patient types.

OhioT1DM data

The OhioT1DM CGM dataset19 is a publicly available collection of continuous glucose monitoring (CGM) data from patients with type 1 diabetes. The dataset was divided into a training and a test set. For comparison with baseline models from previous studies, we focused on the test dataset, which included 166,532 CGM records from 12 patients with time-stamped glucose values. After applying the inclusion criteria used for Welldoc data, we retained 58,414 valid instances from 12 T1D patients. This dataset did not include patient demographic information.

Training and evaluation datasets

To ensure robust evaluation of model performance in terms of accuracy and robustness, we adopted a stringent methodology for splitting data. For the Welldoc dataset, a held-out test set, comprising 10% of the patients, was excluded from the training process and reserved exclusively for evaluation. We also used the OhioT1DM data as a second held-out test set.

The remaining dataset, which included 90% of the patients, was processed further. We ordered each patient’s instances chronologically by instance datetime. The latest 10% of these instances were designated as the “temporal” test set, reserved for temporal validation. The remaining 90% of instances were randomly divided into the “internal” test set (10% of the instances) and the training set (80%). This approach allowed the model to be tested on later time periods as well as on patients it had not previously encountered, thereby enhancing the evaluation of its predictive capabilities.

To prevent overfitting and to improve computational efficiency, we applied a random 10% down-sampling strategy to each dataset. This down-sampling was strategically chosen based on the proximity of instance datetimes within instances. Closely timed instances often have substantial overlap in their CGM inputs and outputs, potentially skewing the model’s learning with redundant information. For example, if a patient had two instances with instance datetimes of 11:00 am and 11:30 am on the same day, their CGM input data and CGM output data would overlap substantially. This down-sampling thus ensured a sparser distribution of instance times, optimizing training efficiency. All random splits were performed using a fixed random seed of 42 to ensure reproducibility.
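The splitting and down-sampling steps can be sketched as follows. This is an illustration under assumptions, not the authors' code: whether the temporal cut is made per patient or globally is not stated (the sketch cuts per patient), and the function names and rounding are choices of the example. Only the proportions and the seed of 42 come from the text.

```python
import random

def split_instances(instances, seed=42):
    """instances: list of (patient_id, instance_datetime); datetimes must be sortable."""
    rng = random.Random(seed)
    patients = sorted({pid for pid, _ in instances})
    rng.shuffle(patients)
    n_held = max(1, round(0.10 * len(patients)))
    held_out_patients = set(patients[:n_held])      # 10% of patients, never trained on

    splits = {"held_out": [], "temporal": [], "internal": [], "train": []}
    by_patient = {}
    for pid, ts in instances:
        if pid in held_out_patients:
            splits["held_out"].append((pid, ts))
        else:
            by_patient.setdefault(pid, []).append((pid, ts))

    for pid in sorted(by_patient):
        rows = sorted(by_patient[pid], key=lambda r: r[1])
        n_tenth = round(0.10 * len(rows))
        cut = len(rows) - n_tenth
        earlier, latest = rows[:cut], rows[cut:]
        splits["temporal"].extend(latest)            # latest 10% of the instances
        rng.shuffle(earlier)
        splits["internal"].extend(earlier[:n_tenth]) # random 10%
        splits["train"].extend(earlier[n_tenth:])    # remaining 80%
    return splits

def downsample(rows, rate=0.10, seed=42):
    """Keep a random fraction of instances to thin out overlapping windows."""
    rng = random.Random(seed)
    return rng.sample(rows, round(rate * len(rows)))

# Hypothetical data: 20 patients with 100 instances each (integers stand in for datetimes)
instances = [(f"p{p:02d}", t) for p in range(20) for t in range(100)]
splits = split_instances(instances)
train_sample = downsample(splits["train"])
```

With these toy inputs, two patients are held out entirely, and each remaining patient contributes 10 temporal, 10 internal, and 80 training instances before down-sampling.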

Additionally, since each instance was associated with a specific patient and datetime, we were able to subdivide the data further into more granular datasets based on available patient characteristics of age, gender, and diabetes type, and the temporal feature of time of day. To illustrate, using the held-out set as an example, we generated specific test sets based on patient characteristics, such as “held-out T1D,” “held-out T1D female,” or “held-out T1D 18–30 years old.” Similarly, we created test sets based on datetime features, such as “held-out T1D 2 pm,” which would include instances from T1D patients in the held-out group with instance datetimes at 2 pm.
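Building such subgroup test sets amounts to filtering instances on their attributes. The sketch below assumes each instance is a dictionary with hypothetical field names (`diabetes`, `gender`, `age`, `hour`); the actual data layout is not described in the text.

```python
def subset(instances, **criteria):
    """Keep instances that satisfy every criterion.

    A criterion is either a plain value (tested by equality) or a predicate.
    """
    def matches(inst):
        for key, want in criteria.items():
            value = inst[key]
            ok = want(value) if callable(want) else value == want
            if not ok:
                return False
        return True
    return [inst for inst in instances if matches(inst)]

# Hypothetical held-out instances
held_out = [
    {"patient": "a", "diabetes": "T1D", "gender": "F", "age": 25, "hour": 14},
    {"patient": "b", "diabetes": "T1D", "gender": "M", "age": 47, "hour": 14},
    {"patient": "c", "diabetes": "T2D", "gender": "F", "age": 62, "hour": 9},
]
t1d_female = subset(held_out, diabetes="T1D", gender="F")
t1d_18_30 = subset(held_out, diabetes="T1D", age=lambda a: 18 <= a <= 30)
t1d_2pm = subset(held_out, diabetes="T1D", hour=14)
```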

Problem definition for pretraining

We represented a sequence of 312 CGM values with timestamps as \(\left({s}_{1},{s}_{2},{s}_{3},\ldots {s}_{n}\right)\) for a 26-h period (24 h before the focal datetime and 2 h after). This sequence has a natural sequential ordering, similar to natural language. Following the logic of a language model, the large sensor model is trained to use the autoregression method to predict the next glucose value from the CGM sensor data sequence.

Mathematically, this can be described as maximizing the likelihood of a glucose value \({s}_{i}\) given the preceding glucose values \({s}_{1},\ldots ,{s}_{i-1}\). The objective function for training the CGM-LSM model is formalized as follows:

\[ L\left(\theta \right)=\sum_{i=1}^{n}\log {p}_{\theta }\left({s}_{i}\mid {s}_{1},\ldots ,{s}_{i-1}\right) \]

where \(\theta\) represents the parameters of the model, \(n\) is the length of the input sequence, and \({p}_{\theta }({s}_{i}|{s}_{1},...,{s}_{i-1})\) is the probability of the glucose value \({s}_{i}\) conditioned on the preceding glucose values, as computed by the model. This training paradigm encourages the development of internal representations that capture the dependencies between elements of the input sequence arising from the underlying glucose generation mechanism, enabling the model to generate coherent and contextually relevant glucose values in future glucose prediction tasks. By repeatedly adjusting \(\theta\) to maximize \(L\left(\theta \right)\), the CGM-LSM model learns to anticipate subsequent glucose values effectively. In our CGM-LSM model, we adopted the popular transformer decoder-only structure to model the above task, and we conducted pretraining on the CGM data.

Problem definition for prediction/generation

After the CGM-LSM was trained, it could be used to generate new glucose sequences. The generation process of CGM-LSM is similar to that of the GPT models. It involves iteratively sampling a new generated glucose value (token) from a probability distribution of possible next glucose values (tokens), conditioned on a sequence of input glucose values (tokens). This is mathematically represented as:

\[ {s}_{i+1}\sim {p}_{\theta }\left(\cdot \mid {s}_{1},\ldots ,{s}_{i}\right)=\mathrm{softmax}\left({\bf{W}}{h}_{i}\right) \]

where \(\theta\) denotes the model parameters, \({h}_{i}\) is the hidden state derived from transformer blocks, and \({\bf{W}}\) is the output projection matrix. The generation starts with an initial sequence, i.e., the preceding 24-h CGM data sequence. The hidden state at each time step is computed and used to generate a probability distribution over the vocabulary, from which the glucose value at the next time step is chosen. This can be done using random sampling or techniques like top-k or top-p sampling, which trade off diversity against coherence. For our application, we used the greedy method, which selects the glucose value with the highest probability. This predicted glucose value is then treated as the real value and appended to the original sequence for the next glucose value prediction. The process repeats until the maximum length, i.e., 24 glucose values for the next 2 h, is reached. Finally, the generated 2-h glucose values are compared with the real glucose values to measure the model’s prediction performance.
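The greedy generation loop can be sketched in a few lines. The trained model is replaced here by a hypothetical stand-in, `toy_scores`, which simply favors continuing the most recent change; tokens are taken to equal glucose values, ignoring any special tokens. Neither simplification reflects the actual CGM-LSM implementation.

```python
def greedy_generate(next_token_scores, context, n_steps=24):
    """Autoregressive greedy decoding.

    next_token_scores: callable mapping a token sequence to one score per
    vocabulary entry (a stand-in for the trained model's output layer).
    """
    sequence = list(context)
    for _ in range(n_steps):
        scores = next_token_scores(sequence)
        best = max(range(len(scores)), key=scores.__getitem__)  # argmax
        sequence.append(best)  # the prediction is fed back as if it were observed
    return sequence[len(context):]

def toy_scores(sequence, vocab_size=401):
    """Hypothetical model: the highest score goes to 'last value + last change'."""
    trend = sequence[-1] - sequence[-2]
    target = min(max(sequence[-1] + trend, 0), vocab_size - 1)
    return [-abs(v - target) for v in range(vocab_size)]

history = [150] * 287 + [152]  # placeholder for 288 readings (24 h)
forecast = greedy_generate(toy_scores, history)  # 24 values (2 h)
```

Because each predicted value is appended to the context before the next step, an early error propagates through the rest of the 2-h forecast, which is one reason accuracy is reported separately for different horizons.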

CGM-LSM model structure

Input representation

The tokens used in CGM-LSM for glucose prediction were the glucose values themselves. Various methods exist for converting numerical values into categorical tokens. For simplicity and without loss of generality, we directly used the glucose readings as tokens; for instance, a glucose level of 153 becomes the token “153”. In total, we had 400 glucose value tokens in the vocabulary because the maximum value of glucose measured by the CGM device is 400. In the pretraining tasks involving next glucose value prediction, the model was expected to learn the semantic meanings of the embeddings for these glucose value tokens.
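A value-to-token mapping of this kind can be sketched as below. The counts (17 special tokens, 400 glucose tokens) are those reported in the implementation details; the layout of the ids (special tokens first, glucose values 1 to 400 after them) and the clipping of out-of-range readings are assumptions of this example.

```python
NUM_SPECIAL = 17    # count reported in the implementation details
MIN_GLUCOSE = 1     # assumption: glucose tokens cover the values 1..400
MAX_GLUCOSE = 400
VOCAB_SIZE = NUM_SPECIAL + (MAX_GLUCOSE - MIN_GLUCOSE + 1)

def encode(glucose):
    """Map a glucose reading to a token id (assumed layout, values clipped)."""
    g = min(max(int(round(glucose)), MIN_GLUCOSE), MAX_GLUCOSE)
    return NUM_SPECIAL + (g - MIN_GLUCOSE)

def decode(token_id):
    """Map a glucose token id back to its glucose value."""
    if not NUM_SPECIAL <= token_id < VOCAB_SIZE:
        raise ValueError("not a glucose token")
    return token_id - NUM_SPECIAL + MIN_GLUCOSE

token_ids = [encode(g) for g in (153, 70, 400)]
```

Treating each integer reading as its own token keeps the mapping lossless, at the cost of leaving the model to learn from data that neighboring values such as 153 and 154 are similar.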

Model structure

CGM-LSM adopted the transformer decoder-only structure27, which is widely used in GPT model series20,32. In contrast to traditional recurrent neural networks23, this approach relies entirely on self-attention mechanisms to generate sequences. Such an architecture facilitates more parallelizable computations and effectively captures long-range dependencies in data.

In a decoder-only transformer, the model is composed exclusively of a stack of decoder blocks that process the input sequence to generate one glucose value token at a time. Each decoder block in the transformer comprises two main components: a multi-head self-attention mechanism and a position-wise fully connected feed-forward network.

The input to each decoder layer is initially transformed into three vectors, the query (\({\rm{Q}}\)), key (\({\rm{K}}\)), and value (\({\rm{V}}\)) vectors, through linear projection of the embeddings of the input tokens. The self-attention mechanism in the decoder is mathematically represented as:

\[ \mathrm{Attention}\left(Q,K,V\right)=\mathrm{softmax}\left(\frac{Q{K}^{\top }}{\sqrt{{d}_{k}}}\right)V \]

where \({d}_{k}\) is the dimension of the key vectors. This mechanism allows each position in the decoder to attend to all positions up to and including that position in the previous layer. This is crucial for autoregressive models where the prediction for the next token can only depend on the known previous tokens, thereby preserving the causality in the sequence generation.
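The causal restriction can be made concrete with a single-head, pure-Python sketch. It omits the learned projections, multiple heads, and batching of a real decoder block, and the function names are chosen for this example only.

```python
import math

def softmax(xs):
    """Numerically stable softmax over a list of floats."""
    m = max(xs)
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def causal_attention(Q, K, V):
    """Scaled dot-product attention where position i sees only positions 0..i.

    Q, K, V: lists with one row (list of floats) per sequence position.
    """
    d_k = len(K[0])
    out = []
    for i, q in enumerate(Q):
        scores = [sum(a * b for a, b in zip(q, K[j])) / math.sqrt(d_k)
                  for j in range(i + 1)]          # later positions are excluded
        weights = softmax(scores)
        out.append([sum(w * V[j][c] for j, w in enumerate(weights))
                    for c in range(len(V[0]))])
    return out

Q = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
K = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
V = [[1.0, 0.0], [0.0, 1.0], [2.0, 2.0]]
out = causal_attention(Q, K, V)
```

The first output row equals the first value row, since position 0 can attend only to itself, and changing the last rows of `K` or `V` leaves the earlier output rows unchanged.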

Normalization and residual connections are employed around each of these sublayers (self-attention and feed-forward networks), which help in stabilizing the learning process. Mathematically, the output of each sublayer can be described as:

\[ \mathrm{LayerNorm}\left(x+\mathrm{Sublayer}\left(x\right)\right) \]

where \(\text{Sublayer}\left({\rm{x}}\right)\) is the function implemented by the sublayer itself, either self-attention or a feed-forward network, and \(x\) is the input to the sublayer.

Finally, after passing through the series of decoder blocks, the output is projected onto a vocabulary-sized vector using a linear transformation followed by a softmax layer to produce a probability distribution over possible next tokens:

\[ P\left({s}_{i+1}\mid {s}_{1},\ldots ,{s}_{i}\right)=\mathrm{softmax}\left(W{h}_{i}\right) \]

where \({h}_{i}\) is the final layer’s output at position \(i\) and \(W\) is the weight matrix. The glucose value corresponding to the highest probability is then selected as the next token in the sequence. The decoder-only transformer architecture leverages self-attention to process all positions of a sequence in parallel during training, which allows for a highly parallelizable implementation.

Loss function

Because we treated each glucose value as a unique token, we used cross-entropy to calculate the loss for the next glucose value prediction task. In the pretraining of the CGM-LSM model, the softmax function transforms the logits (raw output scores for each glucose value in the vocabulary) into a probability distribution over all potential next glucose values. This distribution defines the cross-entropy loss, which quantifies the difference between the predicted probabilities and the actual next glucose value. Specifically, given the logits \({{\bf{z}}}_{t}\) for possible next glucose values at position \(t+1\), the softmax function calculates the probability that the \(k\)-th vocabulary token is the next token \({s}_{t+1}\) as follows:

\[ {p}_{\theta }\left({s}_{t+1}=k\mid {s}_{1},\ldots ,{s}_{t}\right)=\frac{\exp \left({z}_{t,k}\right)}{\sum_{j=1}^{V}\exp \left({z}_{t,j}\right)} \]

where \(V\) is the vocabulary size and \({z}_{t,k}\) represents the logit corresponding to the \(k\)-th vocabulary token. The cross-entropy loss for predicting a single token is thus:

\[ {{\mathcal{L}}}_{t}=-\log {p}_{\theta }\left({s}_{t+1}\mid {s}_{1},\ldots ,{s}_{t}\right) \]

This loss measures the model’s effectiveness in predicting the actual next token \({s}_{t+1}\) using the probabilities output by the softmax function. By minimizing the negative log-likelihood, or equivalently, the cross-entropy loss across all tokens in the training dataset, the model learns to generate accurate and coherent glucose sequences. The total loss for a batch of \(B\) sequences aggregates the individual token losses \({{\mathcal{L}}}_{b,t}\) of each sequence \(b\), ensuring comprehensive learning across diverse glucose contexts:

\[ {{\mathcal{L}}}_{\mathrm{total}}=\sum_{b=1}^{B}\sum_{t=1}^{n-1}{{\mathcal{L}}}_{b,t} \]

Through iterative minimization of this total loss during pretraining, the CGM-LSM model refines its parameter set \(\theta\), thereby enhancing its capabilities in glucose sequence modeling and next-value prediction.
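The softmax and cross-entropy computation for next-token prediction can be sketched in pure Python. The sketch averages the token losses over a sequence; whether the original implementation sums or averages is not stated, so that choice, like the function names, is an assumption.

```python
import math

def log_softmax(logits):
    """Log-probabilities from raw logits, using the log-sum-exp trick."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_z for z in logits]

def token_loss(logits, target):
    """Cross-entropy for one position: negative log-probability of the true token."""
    return -log_softmax(logits)[target]

def sequence_loss(all_logits, tokens):
    """Mean next-token loss: logits at position t are scored against tokens[t + 1]."""
    losses = [token_loss(all_logits[t], tokens[t + 1])
              for t in range(len(tokens) - 1)]
    return sum(losses) / len(losses)

uniform = token_loss([0.0, 0.0, 0.0, 0.0], target=2)  # equals log(4)
confident = token_loss([10.0, 0.0, 0.0], target=0)    # small loss
mistaken = token_loss([10.0, 0.0, 0.0], target=1)     # large loss
```

A model that spreads probability evenly over a vocabulary of size \(V\) incurs a loss of \(\log V\) per token, which is a useful reference point when reading training curves.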

CGM-LSM implementation details

We utilized the GPT-2 model, as implemented in the Hugging Face library33, as the foundation for CGM-LSM. The model’s token vocabulary contained 17 special tokens and 400 glucose value tokens. For the CGM-LSM structure, the embedding size was 768, the number of attention heads was 12, and the number of layers was 12.

For model training with the Welldoc training dataset, we set the batch size to 256. For the optimizer, we used AdamW34 with \({\beta }_{1}=0.9\) and \({\beta }_{2}=0.999\). Grid search was used to obtain the optimal hyperparameters. We set the learning rate to 0.00005, the layer norm epsilon to 0.00001, and the dropout rate to 0.1. We conducted the training process on a single A100 80GB GPU over ten epochs. Notably, towards the end of the third epoch, we observed an increase in loss on the validation sets. We therefore used the model checkpoint from the end of the third epoch as our final model.

Post-training, the model’s capability to generate new sequences was tested using Hugging Face’s generation functions on the same type of GPU. We employed the greedy sampling method35 to predict glucose values over a 2-h horizon, terminating after the 24th token. After that, we calculated evaluation metrics for the model’s prediction performance by comparing these predicted glucose sequences against actual glucose data.

Model evaluation

In this study, we evaluated model performance using four metrics: root Mean Squared Error (rMSE), Mean Absolute Error (MAE), MAE with a tolerance of 10 units (MAEWith10), and Region Accuracy. These metrics capture the precision and reliability of predictive models in continuous glucose monitoring (CGM) and have been widely used in previous studies of CGM glucose prediction12,18.

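Root Mean Squared Error (rMSE) quantifies the average magnitude of the error between predicted values and actual values, penalizing large errors more heavily than small ones. It is computed using the formula:

\[ \mathrm{rMSE}=\sqrt{\frac{1}{n}\sum_{i=1}^{n}{\left({y}_{i}-\hat{{y}_{i}}\right)}^{2}} \]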

where \({y}_{i}\) are the actual values, \(\hat{{y}_{i}}\) are the predicted values, and \(n\) is the number of instances.

Mean Absolute Error (MAE) measures the average magnitude of the errors in prediction without considering their direction. It is simple to interpret because it expresses the typical error in the same units as the data. MAE is calculated as:

\[ \mathrm{MAE}=\frac{1}{n}\sum_{i=1}^{n}\left|{y}_{i}-\hat{{y}_{i}}\right| \]

MAE with Tolerance (\(\mathrm{MAE}\left(\tau \right)\)), an adaptation of the traditional MAE, measures the proportion of absolute errors that fall within a specified tolerance level \(\tau\), here set at 10 units. This metric is particularly useful in applications where errors within a certain range are considered acceptable. The calculation is:

\[ \mathrm{MAE}\left(\tau \right)=\frac{1}{n}\sum_{i=1}^{n}{\mathbb{1}}\left(\left|{y}_{i}-\hat{{y}_{i}}\right|\le \tau \right) \]

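Glucose Region Accuracy evaluates the accuracy of predictions by categorizing continuous glucose values into predefined regions and comparing these categorical predictions to actual categorizations. The regions are defined as Very Low (<54 mg/dL), Low (54–69 mg/dL), Time-in-Range (TIR) (70–180 mg/dL), High (181–250 mg/dL), and Very High (>250 mg/dL)36. Letting \(R\left(\cdot \right)\) denote the region of a glucose value, the accuracy is then calculated by:

\[ \mathrm{Region\ Accuracy}=\frac{1}{n}\sum_{i=1}^{n}{\mathbb{1}}\left(R\left({y}_{i}\right)=R\left(\hat{{y}_{i}}\right)\right) \]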

Collectively, these metrics offered us a comprehensive view of the model’s performance, each adding a unique dimension to our understanding of model accuracy and reliability in prediction of glucose levels. The use of varied metrics ensured a balanced evaluation that addressed both the magnitude of errors and their clinical significance.
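The four metrics can be computed with a few short functions. This is a minimal sketch with hypothetical function names; it assumes integer-valued readings in mg/dL and treats an error of exactly 10 as within tolerance.

```python
import math

REGION_EDGES = [54, 70, 181, 251]  # lower bounds of Low, TIR, High, Very High

def region(g):
    """0 Very Low (<54), 1 Low (54-69), 2 TIR (70-180), 3 High (181-250), 4 Very High (>250)."""
    return sum(g >= edge for edge in REGION_EDGES)

def rmse(y, y_hat):
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(y, y_hat)) / len(y))

def mae(y, y_hat):
    return sum(abs(a - b) for a, b in zip(y, y_hat)) / len(y)

def within_tolerance(y, y_hat, tol=10):
    """Share of predictions whose absolute error is at most tol."""
    return sum(abs(a - b) <= tol for a, b in zip(y, y_hat)) / len(y)

def region_accuracy(y, y_hat):
    """Share of predictions that fall in the same glucose region as the truth."""
    return sum(region(a) == region(b) for a, b in zip(y, y_hat)) / len(y)

# Hypothetical actual and predicted values (mg/dL)
y = [100, 180, 60, 260]
y_hat = [110, 185, 50, 255]
scores = (rmse(y, y_hat), mae(y, y_hat),
          within_tolerance(y, y_hat), region_accuracy(y, y_hat))
```

In this toy case every error is at most 10, so the tolerance metric is 1.0, yet the region accuracy is only 0.5 because two predictions cross a region boundary (180 vs. 185 and 60 vs. 50). This illustrates why the clinically defined regions add information beyond the error magnitudes.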

Baseline model evaluation

To establish baseline performance for glucose prediction on the OhioT1DM dataset, we evaluated a range of time series forecasting models, starting with classical recurrent neural networks and advancing to more sophisticated transformer architectures. Our evaluation began with fundamental recurrent architectures including vanilla RNN22, followed by the more capable GRU24 and LSTM23 networks, which better capture long-term dependencies in glucose time series. We then progressed to advanced transformer-based architectures, including the standard VanillaTransformer27, the more efficient Informer37, and the specialized Autoformer38 designed specifically for time series forecasting. All models were tasked with predicting future glucose values at clinically relevant horizons of 30, 60, and 120 min, using input sequences calibrated to capture meaningful patterns in continuous glucose monitoring data. We utilized the Nixtla NeuralForecast library39 to implement these models efficiently.

For hyperparameter optimization, we implemented an Optuna-based framework that dynamically scales training steps based on dataset size and batch configuration40. This approach allows for efficient exploration of the hyperparameter space while maintaining consistent epoch-based training across different model configurations. The framework automatically converts epoch-based training targets to step-based execution, enabling fair comparison between models with varying computational requirements. Each model was optimized over 10–20 trials to identify the best configuration of hidden dimensions, number of layers, learning rate, and dropout rate. Each model was trained to predict the next 24 values (2 h) from an input of 289 values (24 h). All experiments were executed on RTX 4090 GPUs.
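The conversion from an epoch target to a step budget is simple arithmetic, sketched below. The function name and the example numbers are hypothetical; the sketch assumes that a final partial batch counts as one step.

```python
import math

def epochs_to_steps(n_samples, batch_size, n_epochs):
    """Number of optimizer steps needed to pass over the data n_epochs times."""
    steps_per_epoch = math.ceil(n_samples / batch_size)  # last partial batch counts
    return steps_per_epoch * n_epochs

# The same 5-epoch target under two candidate batch sizes (hypothetical numbers)
steps_large_batch = epochs_to_steps(10_000, 256, 5)
steps_small_batch = epochs_to_steps(10_000, 64, 5)
```

Fixing the number of epochs rather than the number of steps means that a trial with a smaller batch size receives proportionally more steps, so every configuration sees the training data the same number of times.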

Data availability

The OhioT1DM dataset is publicly available at https://webpages.charlotte.edu/rbunescu/data/ohiot1dm/OhioT1DM-dataset.html. The Welldoc dataset is proprietary and can only be accessed upon request with prior approval from Welldoc. This research study was approved by the Johns Hopkins University Institutional Review Board (IRB# IRB00447704). The study is a retrospective analysis, and no patient protected health information (PHI) was used during model training or evaluation.

Code availability

All analyses conducted for this study were performed using Python version 3.11.9. The Python packages PyTorch version 1.9.0, HuggingFace version 4.44.2, and datasets version 2.21.0 were used for developing the CGM-LSM models. Visualizations were produced using the Python packages matplotlib version 3.9 and Pandas version 2.2.2. The code is available on GitHub: https://github.com/JHU-CDHAI/cgmlsm.

References

Einarson, T. R., Acs, A., Ludwig, C. & Panton, U. H. Prevalence of cardiovascular disease in type 2 diabetes: a systematic literature review of scientific evidence from across the world in 2007–2017. Cardiovasc. Diabetol. 17, 83 (2018).

Reidy, K., Kang, H. M., Hostetter, T. & Susztak, K. Molecular mechanisms of diabetic kidney disease. J. Clin. Invest. 124, 2333–2340 (2014).

Pop-Busui, R. et al. Diabetic neuropathy: a position statement by the American Diabetes Association. Diab. Care 40, 136–154 (2017).

Parker, E. D. et al. Economic costs of diabetes in the U.S. in 2022. Diab. Care 47, 26–43 (2024).

Dall, T., West, T., Ritashree, C., Reynolds, R. & Iacobucci, W. Update the complexities of physician supply and demand: projections from 2016 to 2030 final report association of American Medical Colleges. https://doi.org/10.13140/RG.2.2.25694.48963 (2018).

Sheng, B. et al. Large language models for diabetes care: potentials and prospects. Sci. Bull. 69, 583–588 (2024).

Kolb, L. An effective model of diabetes care and education: the ADCES7 self-care behaviors. M. Sci. Diab. Self Manag. Care 47, 30–53 (2021).

Midyett, L. K. One size fits all versus individualized medicine in type 1 diabetes management. Diab. Technol. Therapeutics 25, S-42 (2023).

Klonoff, D. C. Continuous glucose monitoring. Diab. Care 28, 1231–1239 (2005).

Galindo, R. J. et al. Increase access, reduce disparities: recommendations for modifying medicaid CGM coverage eligibility criteria. J. Diab. Sci. Technol. 18, 974–987 (2024).

Vettoretti, M., Cappon, G., Facchinetti, A. & Sparacino, G. Advanced diabetes management using artificial intelligence and continuous glucose monitoring sensors. Sensors 20, 3870 (2020).

Doorn et al. Machine learning-based glucose prediction with use of continuous glucose and physical activity monitoring data: The Maastricht Study. PLOS ONE 16, e0253125 (2021).

Zecchin, C., Facchinetti, A., Sparacino, G., De Nicolao, G. & Cobelli, C. Neural network incorporating meal information improves accuracy of short-time prediction of glucose concentration. IEEE Trans. Biomed. Eng. 59, 1550–1560 (2012).

Li, K., Daniels, J., Liu, C., Herrero, P. & Georgiou, P. Convolutional recurrent neural networks for glucose prediction. IEEE J. Biomed. Health Inform. 24, 603–613 (2020).

Li, K., Liu, C., Zhu, T., Herrero, P. & Georgiou, P. GluNet: a deep learning framework for accurate glucose forecasting. IEEE J. Biomed. Health Inform. 24, 414–423 (2020).

Zhu, T., Li, K., Herrero, P. & Georgiou, P. Personalized blood glucose prediction for type 1 diabetes using evidential deep learning and meta-learning. IEEE Trans. Biomed. Eng. 70, 193–204 (2023).

Deng, Y. et al. Deep transfer learning and data augmentation improve glucose levels prediction in type 2 diabetes patients. npj Digit Med. 4, 1–13 (2021).

Zhu, T. et al. Edge-based temporal fusion transformer for multi-horizon blood glucose prediction. In 2023 IEEE International Symposium on Circuits and Systems (ISCAS), 1–5 (IEEE, 2023).

Marling, C. & Bunescu, R. The OhioT1DM dataset for blood glucose level prediction: update 2020. CEUR Workshop Proc. 2675, 71–74 (2020).

Radford, A. et al. Language models are unsupervised multitask learners. OpenAI (2019).

Lecun, Y., Bottou, L., Bengio, Y. & Haffner, P. Gradient-based learning applied to document recognition. Proc. IEEE 86, 2278–2324 (1998).

Rumelhart, D. E., Hinton, G. E. & Williams, R. J. Learning representations by back-propagating errors. Nature 323, 533–536 (1986).

Hochreiter, S. & Schmidhuber, J. Long short-term memory. Neural Comput. 9, 1735–1780 (1997).

Cho, K. et al. Learning phrase representations using RNN encoder–decoder for statistical machine translation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP) (eds, Moschitti, A., Pang, B., Daelemans, W.) 1724–1734 (Association for Computational Linguistics, 2014).

Jin, M. et al. Time-LLM: time series forecasting by reprogramming large language models. In Proceedings of the International Conference on Learning Representations. (ICLR, 2024).

Ansari, A. F. et al. Chronos: learning the language of time series. Trans. Mach. Learn. Res. (2024).

Vaswani, A. et al. Attention is all you need. In Advances in Neural Information Processing Systems. vol 30. (Curran Associates, Inc.; 2017).

Kalyani, R. R. & Egan, J. M. Diabetes and altered glucose metabolism with aging. Endocrinol. Metab. Clin. 42, 333–347 (2013).

Tramunt, B. et al. Sex differences in metabolic regulation and diabetes susceptibility. Diabetologia 63, 453–461 (2020).

Zhu, T. et al. Enhancing self-management in type 1 diabetes with wearables and deep learning. npj Digit Med. 5, 1–11 (2022).

Johnson, A. E. W. et al. MIMIC-III, a freely accessible critical care database. Sci. Data 3, 160035 (2016).

Brown, T. et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems, vol 33, 1877–1901 (Curran Associates, Inc., 2020).

Wolf, T. et al. Transformers: state-of-the-art natural language processing. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing: System Demonstrations (eds, Liu, Q. & Schlangen, D.) 38–45 (Association for Computational Linguistics; 2020).

Loshchilov, I. & Hutter, F. Decoupled weight decay regularization. In Proceedings of the International Conference on Learning Representations. (ICLR, 2019).

Wiher, G., Meister, C. & Cotterell, R. On decoding strategies for neural text generators. Trans. Assoc. Computational Linguist. 10, 997–1012 (2022).

Battelino, T. et al. Clinical targets for continuous glucose monitoring data interpretation: recommendations from the international consensus on time in range. Diab. Care 42, 1593–1603 (2019).

Zhou, H. et al. Informer: Beyond efficient transformer for long sequence time-series forecasting. Proc. AAAI Conf. Artif. Intell. 35, 11106–11115 (2021).

Wu, H., Xu, J., Wang, J. & Long, M. Autoformer: decomposition transformers with auto-correlation for long-term series forecasting. In Proceedings of the 35th International Conference on Neural Information Processing Systems. NIPS ’21, 22419–22430 (Curran Associates Inc.; 2021).

Nixtla/neuralforecast: Scalable and user friendly neural forecasting algorithms. https://github.com/Nixtla/neuralforecast. (2022).

Akiba, T., Sano, S., Yanase, T., Ohta, T. & Koyama, M. Optuna: a next-generation hyperparameter optimization framework. In Proceedings of the 25th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining. KDD ’19, 2623–2631 (Association for Computing Machinery; 2019).

Acknowledgements

We thank Johns Hopkins University's Rockfish and Discovery high-performance computing (HPC) clusters for providing the computational resources used in this study. We also appreciate the thoughtful feedback from anonymous reviewers, which significantly improved the quality of this paper.

Author information

Contributions

J.L. led the study, drafted the manuscript, and conducted the study design and data analysis. A.K. extracted data, edited drafts, and contributed critical discussion points. A.I. assisted with study design and added critical discussion points. R.H. conducted data preprocessing, contributed to data analysis, and edited the draft. M.S. and G.A. provided clinical insights. A.R. provided critical editing and offered valuable suggestions to improve the manuscript. G.G., the principal investigator of the project, conceived the original idea, provided overall supervision, and critically edited the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

M.S., A.K., and A.I. are employees of Welldoc, Inc. The remaining authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Luo, J., Kumbara, A., Shomali, M. et al. A large sensor foundation model pretrained on continuous glucose monitor data for diabetes management. npj Health Syst. 2, 35 (2025). https://doi.org/10.1038/s44401-025-00039-y
