Abstract

Arousal is fundamental for affective experience and, together with valence, defines the core affective space. Precise brain models of affective arousal are lacking, leading to continuing debates about whether the neural systems generalize across valence domains and are separable from those underlying autonomic arousal or wakefulness. Here, we combine naturalistic fMRI with predictive modeling to develop a brain affective arousal signature (BAAS; discovery-validation design, n = 60 and 36). We demonstrate its (1) sensitivity and generalizability across mental processes, valence, and stimulation modality and (2) neural distinction from autonomic arousal and wakefulness (24 studies, n = 868). Affective arousal is encoded in distributed cortical-subcortical (e.g., prefrontal, periaqueductal gray) systems, with local similarities between affective and autonomic arousal in thalamo-amygdala-insula systems. We demonstrate the application of the BAAS to improve the specificity of established valence-specific neuromarkers. Our study provides a biologically plausible model of affective arousal that aligns with the affective space and has high application potential.


Introduction

The stream of subjective affective experiences (feelings) represents a continuous assessment of the internal state and its relation with the environment, facilitating adaptive responses, avoiding harm, and identifying opportunities1. The mental topology of highly subjective affective experiences remains controversially debated2, but most current theories converge on a dimensional space with affective domains representing pleasure-displeasure (i.e., valence) and activation-deactivation (i.e., arousal or intensity)3,4, which together underlie conscious affective experiences5,6. These fundamental affective dimensions5, which together form ‘core affect’, a consciously accessible neurophysiological state, represent critical defining features that distinguish affective experiences from other mental states7 and form a two-dimensional affective space encompassing specific emotional states (e.g., anger: negative valence and high arousal). Within this affective space, arousal and valence exhibit a V-shaped architecture such that arousal increases in conjunction with either positive or negative valence8,9 (see Fig. 1), while increasingly intense arousal may progressively overshadow specific emotional states at the subjective, perceptual, and neural levels10,11.

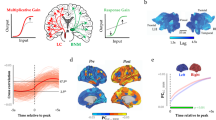

a Building a brain affective arousal signature. A whole-brain model (brain affective arousal signature, BAAS) was developed with the support vector regression algorithm on the discovery cohort, which underwent an arousal induction fMRI paradigm (study 1, n = 60), and validated in study 2 (n = 36). b Evaluating the brain affective arousal signature. Based on dimensional models of ‘core affect’, the BAAS was evaluated in terms of generalizability in sixteen independent datasets (studies 3–18, n = 635) and in terms of specificity in nine independent datasets (study 2 and studies 19–26, n = 294). c Identifying the neurofunctional representation of affective arousal in the brain. Multivariate and univariate approaches were employed to determine the contribution of specific brain systems to the prediction of subjective affective arousal. The thresholded affective arousal signature was compared with the conjunction of meta-analytic maps for positive and negative affect to further validate the biological plausibility of the identified affective arousal brain systems. Prediction and conjunction analyses were then conducted to test the performance of isolated brain systems in predicting subjective affective arousal experience. Finally, the neural representations of the affective arousal and autonomic arousal signatures were compared to validate existing affective arousal-related domains. d Improving the specificity of neuroaffective signatures. The specificity of two affective neural signatures (VNAS and VIDS) was tested before and after correcting for BAAS responses. VNAS, visually negative affect signature from Čeko et al.34; VIDS, visually induced disgust signature from Gan et al.13. The icons were sourced from Pixabay under the Pixabay License or created in PowerPoint 2016. All icons are free to use in both commercial and noncommercial print and digital media.

The behavioral and neural dynamics of the valence dimension have been extensively mapped at different levels of granularity, ranging from neural signatures for general negative affect12 to signatures that distinguish emotion-specific mental states13,14 or that separate subjective affective experiences from accompanying physiological responses15,16. In contrast, and despite the central position of arousal in both classical theoretical accounts and the everyday subjective experience of emotion17,18, an integrative and generalizable neural model of conscious affective arousal in humans is lacking.

Additionally, most neurobiological studies to date have focused on physiological (autonomic) or wakefulness (vigilance) aspects of arousal19,20,21 rather than on the conscious affective experience. Sophisticated animal studies have established comprehensive and intricate neurobiological models of wakefulness and autonomic arousal, encompassing subcortical systems such as brainstem, thalamic, and hypothalamic nuclei22,23,24. While the brain systems controlling wakefulness and hard-wired autonomic reactivity partly overlap with those involved in affective arousal23,25,26, accumulating evidence suggests that the subjective experience that characterizes affective arousal in humans may operate through distinct neural mechanisms19,27. In contrast to the anatomical precision of the animal models, human studies on the subjective affective components of arousal have focused on large-scale brain systems (for review see e.g., Satpute et al.19), including default mode network subsystems (i.e., anterior medial prefrontal cortex) and the ‘salience network’ (including the anterior cingulate cortex, insula, and amygdala)28,29, as well as brainstem regions30. However, the specific neural systems underlying subjective affective arousal, and whether their distributed neural representations differ from those of other forms of arousal, remain to be determined.

Traditionally, neuroimaging research on affective processes in humans has relied on experimental paradigms employing isolated and sparsely presented stimuli in a single modality (e.g., affective pictures) and on analytic approaches that aim to localize the brain regions showing the greatest increase in activity by conducting numerous tests across individual ‘voxels’ or regions. These strategies are characterized by low ecological validity for dynamic emotional processes in everyday life31 and by low to moderate effect sizes32 and reliability33 in characterizing affective mental processes12,14,16,34. Recent advances have successfully combined neuroimaging with machine learning algorithms (i.e., multivariate pattern analysis, MVPA) to develop predictive, more precise, and more comprehensive neural signatures for subjective affective experiences characterized by a specific emotional state and high negative affective arousal, including general negative affect12,34, pain35, disgust13, fear14,15 and anxious anticipation16. These advances made it possible to predict specific subjective affective states with greater effect sizes and robustness than traditional local region-based approaches36,37, and to demonstrate that specific subjective emotional states and high affective arousal are characterized by both common and distinct brain-wide neural representations15,16. This holds the potential to improve the description of mental processes at the brain level38. This approach has refined our understanding of affective experiences by demonstrating that they are encoded in distributed brain systems, in line with meta-analytic evidence indicating that affective experiences are linked to activations across a wide array of brain regions39.

While initial neuroimaging studies have demonstrated the potential of MVPA to decode stimulus-induced arousal, these studies have neither tracked the subjective experience of arousal40 nor tested the generalization of the neurofunctional signatures across contexts, socio-culturally diverse samples, states of consciousness or valence, and paradigm classes41,42. These aspects form integral components of contemporary affective models, which emphasize the critical significance of subjective experience and appraisal as well as context-dependent affective and neurofunctional computations43,44,45,46. Very recent conceptualizations further stress the need to segregate the neural representations of affective arousal from those of autonomic arousal and wakefulness to establish an integrative framework and neural reference space for arousal (e.g., Satpute et al.19; for neurofunctional decoding-based support for such a differentiation in other affective domains see also Taschereau-Dumouchel et al.15 and Liu et al.16). Despite initial findings demonstrating the promise of neural decoding of arousal, it remains unknown whether affective arousal, a core aspect of affective experiences, exhibits generalizable distributed neural representations in the human brain, and whether these representations are independent of valence and distinguishable from the neural representations of physiological arousal and wakefulness.

Moreover, consistent with conceptualizations of the affective space proposing that both positive and negative affect are associated with high levels of arousal, our recent study showed that neural signatures for negative emotions exhibited strong responses to positive compared to neutral stimuli47. This suggests that these signatures may partly capture the arousal inherent to strong affective experiences48,49,50. Together with the observed overshadowing effect of high arousal on specific emotions across domains10,11, this has led to conceptual debates about whether the arousal dimension is represented across valence in the brain and whether accounting for arousal may increase the specificity of decoding specific emotional states from the brain.

Against this background, we aimed to (1) develop a sensitive and generalizable brain signature (i.e., brain affective arousal signature, BAAS) that precisely captures the subjective experience of affective arousal under ecologically valid conditions by combining naturalistic functional magnetic resonance imaging (fMRI) with an MVPA-based neural decoding approach, (2) determine whether common neural representations of affective arousal can be identified across valence domains, senses, and modalities, and (3) establish a comprehensive and biologically informed model of how affective arousal is represented in the human brain. Given the intricate interaction between autonomic and affective arousal and initial studies demonstrating that the neural representations of autonomic and affective experience might be distinguishable15,16, we further examined (4) whether the subjective affective arousal experience is separable from autonomic arousal in humans. Finally, based on previous literature suggesting that progressively increasing arousal overshadows the representation of specific emotions11, we tested (5) whether accounting for a precise neural signature of arousal can enhance the specificity of existing affective neural signatures.

To this end, we combined naturalistic fMRI with machine learning-based neural decoding to identify a brain signature predictive of affective arousal intensity in healthy participants (study 1, n = 60) as they experienced a range of positive and negative emotions induced by carefully selected video clips. To enhance the ecological validity of the signature and facilitate immersive and intense arousal experiences, we used naturalistic fMRI with audiovisual movie clips and asked participants to report their affective arousal experience (Fig. 1a). The predictive performance and generalizability of the identified neural signature were assessed in an independent validation cohort (study 2, n = 36) using a modified arousal induction paradigm with new and longer movie clips covering different aspects of the emotional space. We next used 16 studies (total n = 610, details see Supplementary Table S1) to test its generalization and sensitivity across valence domains, modalities, paradigms, populations, and image acquisition systems (Fig. 1b), and an additional 8 studies (total n = 258, see Supplementary Table S1) to evaluate its specificity. The neural representations underlying affective arousal were systematically determined using backward (prediction) and forward (association) models. The neurobiological validity of the BAAS was further examined against maps of negative and positive emotions from a large-scale meta-analysis51, and its separability from autonomic arousal was determined using a validated decoder for autonomic arousal (fMRI data from Taschereau-Dumouchel et al.15, n = 25) (Fig. 1c). Moreover, we investigated whether adjusting for arousal ratings and BAAS responses could enhance the precision of two previously developed and widely employed affective signatures for specific emotional states13,34 (Fig. 1d).
Overall, this study aims to establish a comprehensive neural model of affective arousal, advancing our understanding of how the brain represents affective arousal and refining the prediction specificity of neural signatures for affective experiences.

Results

Behavioral results

In study 1, a total of 40 video stimuli (~25 s) were employed to induce varying levels of arousal experience. Participants were asked to experience video stimuli immersively and report their current level of affective arousal for each stimulus on a 9-point Likert scale ranging from 1 (very low arousal) to 9 (very high arousal). As shown in Supplementary Fig. S1a, stimuli induced a wide range of subjective arousal in the discovery cohort which was used to develop the neural signature of subjective affective arousal. Study 2, the validation cohort, employed 28 new video stimuli 9 (~60 s), which were entirely different from stimuli used in study 1, to elicit different levels of subjective affective arousal feelings in an independent sample (Supplementary Fig. S1b).

Identifying a brain signature for affective arousal

In line with previous studies12,13,14,15, we employed support vector regression (SVR) in study 1 to develop a brain activity-based signature that predicted the intensity of self-reported arousal experience during the videos. To evaluate the performance of the BAAS, we conducted a 10 × 10-fold cross-validation procedure in the discovery cohort and additionally validated the signature in the independent validation cohort (study 2).
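The repeated cross-validation scheme can be illustrated with a minimal sketch. It assumes a linear-kernel SVR from scikit-learn with default C, folds formed over participants (so that all maps from one participant are held out together), and synthetic data in place of real activation maps. The helper name `repeated_subject_cv` and all parameter values are hypothetical and are not the authors' implementation.

```python
import numpy as np
from sklearn.svm import SVR


def repeated_subject_cv(X, y, subjects, n_repeats=10, n_folds=10, seed=0):
    """Hypothetical helper: held-out SVR predictions, shape (n_repeats, n_samples).

    Folds are built over participants, so all maps of a participant are
    held out together (an assumption about the fold scheme).
    """
    rng = np.random.default_rng(seed)
    unique_subjects = np.unique(subjects)
    predictions = np.empty((n_repeats, len(y)))
    for r in range(n_repeats):
        # Reshuffle participants and split them into n_folds groups.
        folds = np.array_split(rng.permutation(unique_subjects), n_folds)
        for held_out in folds:
            is_test = np.isin(subjects, held_out)
            model = SVR(kernel="linear", C=1.0)
            model.fit(X[~is_test], y[~is_test])
            predictions[r, is_test] = model.predict(X[is_test])
    return predictions


# Synthetic stand-in for activation maps: 20 participants x 9 rating levels x 50 voxels.
rng = np.random.default_rng(1)
n_subjects, n_voxels = 20, 50
levels = np.arange(1, 10)
subjects = np.repeat(np.arange(n_subjects), levels.size)
y = np.tile(levels, n_subjects).astype(float)
true_pattern = rng.normal(size=n_voxels)
X = np.outer(y, true_pattern) + rng.normal(scale=2.0, size=(y.size, n_voxels))

preds = repeated_subject_cv(X, y, subjects)
mean_pred = preds.mean(axis=0)  # average held-out prediction over the 10 repeats
print(f"cross-validated r = {np.corrcoef(mean_pred, y)[0, 1]:.2f}")
```

Because every participant lands in exactly one fold per repeat, each map receives one held-out prediction per repeat; averaging over repeats reduces the dependence on any single random fold assignment.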

The developed BAAS was sensitive to subjective arousal experience in both discovery and independent validation cohorts, as evidenced by significant associations between observed and predicted ratings (Fig. 2a, b). Specifically, in the discovery cohort, the averaged within-subject correlation was 0.88 ± 0.01 (standard error (SE)), the average root mean squared error (RMSE) was 1.36 ± 0.05, the coefficient of determination (R2) was [0.61, 0.64], the overall (between- and within-subjects) prediction-outcome correlation was 0.82 (R2 = 0.65, bootstrapped 95% confidence interval (CI) = [0.80, 0.85]) (Fig. 2a). These findings were replicated in the validation cohort such that BAAS effectively predicted subjective arousal ratings yielding a high prediction-outcome correlation (average within-subject correlation coefficient r = 0.80 ± 0.03 SE, RMSE = 1.68 ± 0.08 SE, R2 = 0.42; overall prediction-outcome correlation coefficient r = 0.70, R2 = 0.49, bootstrapped 95% CI = [0.65, 0.75], Fig. 2b), indicating a sensitive and robust neurofunctional arousal signature. In addition, we categorized video clips in the validation cohort into low (ratings 1, 2, and 3), medium (ratings 4, 5, and 6), and high (ratings 7, 8, and 9) arousal conditions, according to self-reported arousal ratings and applied the two-alternative forced-choice test to examine whether the BAAS could distinguish different arousal conditions. We found that the BAAS response accurately classified high versus moderate (81 ± 6.9%, P = 5.35 ×10−4, Cohen’s d = 1.29), moderate versus low (92 ± 4.6%, P = 2.56 ×10−6, Cohen’s d = 2.56), and high versus low (100 ± 0.0%, P = 4.66 ×10−10, Cohen’s d = 3.21) conditions. Of note, in this analysis, we excluded 4 subjects because they did not have ratings of high or moderate arousal.
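The summary statistics reported above (mean within-subject correlation and RMSE with SE, overall prediction-outcome correlation, R², and a bootstrapped CI) can be computed as in the following sketch. The exact definitions used in the study are not stated here, so the choices below are assumptions: R² is taken as 1 − SSE/SST, and the CI is a percentile bootstrap over individual observations. The function names are hypothetical.

```python
import numpy as np


def _se(values):
    """Standard error of the mean."""
    return values.std(ddof=1) / np.sqrt(values.size)


def prediction_metrics(y_true, y_pred, subjects, n_boot=1000, seed=0):
    within_r, within_rmse = [], []
    for s in np.unique(subjects):
        m = subjects == s
        within_r.append(np.corrcoef(y_true[m], y_pred[m])[0, 1])
        within_rmse.append(np.sqrt(np.mean((y_true[m] - y_pred[m]) ** 2)))
    within_r, within_rmse = np.asarray(within_r), np.asarray(within_rmse)

    # Overall (between- and within-subject) agreement across all observations.
    overall_r = np.corrcoef(y_true, y_pred)[0, 1]
    r2 = 1 - np.sum((y_true - y_pred) ** 2) / np.sum((y_true - y_true.mean()) ** 2)

    # Percentile bootstrap CI for the overall r, resampling observations.
    rng = np.random.default_rng(seed)
    n = y_true.size
    boot = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)
        boot[b] = np.corrcoef(y_true[idx], y_pred[idx])[0, 1]
    ci_low, ci_high = np.percentile(boot, [2.5, 97.5])

    return {
        "within_r": (within_r.mean(), _se(within_r)),
        "within_rmse": (within_rmse.mean(), _se(within_rmse)),
        "overall_r": overall_r,
        "r2": r2,
        "overall_r_ci": (ci_low, ci_high),
    }
```

Note that 1 − SSE/SST differs from the squared overall correlation whenever predictions are biased or mis-scaled, so a reported R² need not equal r².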

a and b depict predicted arousal experience compared to the actual level of arousal for the cross-validated discovery cohort (study 1, n = 60) and the independent validation cohort (study 2, n = 36), respectively. The colored lines show individual linear regression fits comparing predicted versus actual ratings, while the black line shows the regression fit for the complete dataset. The raincloud plots on the right show the distribution of within-participant predictions. c Distribution of within-subject trial-wise prediction-outcome Pearson correlation coefficient for each participant in the discovery and validation cohorts, respectively. Yellow color represents correlation coefficients in the discovery cohort (all P ≤ 3.8 × 10−3), while green color shows coefficients from the validation cohort, where all subjects except four showed significant correlations (P ≤ 0.04; four subjects had P > 0.05). Notably, the boxplot component of the raincloud plots in (a)–(c) represents the interquartile range with the box boundaries at the 25th and 75th percentiles, the median line at the 50th percentile, and the whiskers extending to the minimum and maximum values within 1.5 times the interquartile range from the quartiles. d BAAS could accurately predict (two-alternative forced-choice test) or strongly respond (one-sample t-test) to high arousal experiences induced by stimuli across multiple modalities. 
Upper panel: the BAAS accurately classified or predicted highly arousing negative affect in studies 3−6 (total n = 210; prediction performance is shown as forced-choice classification accuracy (Cohen’s d) or prediction-outcome Pearson correlation (uncorrected); P values in forced-choice tests were based on two-sided binomial tests). Left lower panel: the BAAS responded (marginally) significantly to highly arousing positive affect in studies 7–16 (total n = 224, two-sided one-sample t-test). Middle lower panel: the BAAS accurately classified highly arousing negative and positive affect in study 17 (total n = 150; prediction performance is shown as forced-choice classification accuracy (Cohen’s d), two-sided binomial test). Right lower panel: the BAAS responded strongly to highly arousing positive and negative imagined events in study 18 (total n = 26, two-sided one-sample t-test, uncorrected). The violin and box plots in (d) show the distributions of the signature response. The boxplot’s boundaries are defined by the first and third quartiles, while the whiskers extend to the maximum and minimum values within the range of the median ± 1.5 times the interquartile range. See Supplementary Table S1 for the details of each contrast. # marginally significant, *P < 0.05, **P < 0.01, ***P < 0.001. BAAS Brain affective arousal signature. The icons were sourced from Pixabay under the Pixabay License or created in PowerPoint 2016. All icons are free to use in both commercial and noncommercial print and digital media. Source data are provided as a Source Data file.

Given that previous studies showed visual cortex activity in response to arousing emotional scenes, suggesting that visual processing contributes to arousal52,53,54,55, we tested to what extent the BAAS depended on the visual cortex. To this end, we retrained the decoder after excluding the entire occipital lobe. The modified decoder showed similar performance, including significant prediction-outcome correlations and coefficients of determination, suggesting that the prediction does not rely solely on the encoding of emotional schemas within visual regions (for further details see Supplementary Results and Supplementary Fig. S2).
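A lesion-style control of this kind amounts to dropping a set of voxels from the feature matrix and rerunning the same cross-validation. The sketch below assumes a hypothetical per-voxel lobe label array (in practice derived from an anatomical atlas), a linear SVR, and scikit-learn's `GroupKFold` with participants as groups; the data are synthetic, with signal spread over all voxels, so removing the "occipital" half should cost little here.

```python
import numpy as np
from sklearn.model_selection import GroupKFold, cross_val_predict
from sklearn.svm import SVR


def cv_r(X, y, subjects, voxel_mask, n_folds=10):
    """Subject-wise cross-validated prediction-outcome r using only masked voxels."""
    preds = cross_val_predict(
        SVR(kernel="linear"),
        X[:, voxel_mask],
        y,
        groups=subjects,
        cv=GroupKFold(n_splits=n_folds),
    )
    return np.corrcoef(preds, y)[0, 1]


rng = np.random.default_rng(2)
subjects = np.repeat(np.arange(20), 9)
y = np.tile(np.arange(1, 10), 20).astype(float)
# Hypothetical per-voxel lobe labels (in practice these would come from an atlas).
lobe = np.array(["occipital"] * 25 + ["frontal"] * 25)
X = np.outer(y, rng.normal(size=50)) + rng.normal(scale=2.0, size=(y.size, 50))

r_full = cv_r(X, y, subjects, np.ones(lobe.size, dtype=bool))
r_no_occipital = cv_r(X, y, subjects, lobe != "occipital")
print(f"all voxels r = {r_full:.2f}; occipital excluded r = {r_no_occipital:.2f}")
```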

Within-subject trial-wise prediction

Affective arousal experience is a highly subjective state that can fluctuate rapidly, typically on the order of minutes or even seconds27,56,57. We next examined to what extent the population-level BAAS can accurately predict individual-level variations in arousal on a trial-by-trial basis. To this end, a single-trial analysis was used to obtain individual-specific trial-wise activation maps. The BAAS pattern expression of each single-trial activation map was then calculated and correlated with the corresponding ratings for each subject separately. In the discovery cohort, the BAAS accurately predicted individual arousal experience (n = 40 trials; within-participant r = 0.55 ± 0.01 SE, 10 × 10 cross-validated; Fig. 2c). Of note, the BAAS successfully predicted trial-by-trial arousal ratings in all participants of the discovery cohort (all P ≤ 3.8 × 10−3). We additionally validated the BAAS (developed on data from the discovery cohort) with respect to predicting single-trial responses in each participant of the validation cohort. The predictive performance in the validation cohort was similar to that observed in the discovery cohort (n = 28 trials; within-participant r = 0.60 ± 0.03 SE, Fig. 2c), and the BAAS significantly predicted trial-wise affective experience in 32 of the 36 participants (88.9%) of the validation dataset. Taken together, our findings suggest that the BAAS accurately predicts the level of momentary arousal feelings at the individual level.
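The trial-wise analysis can be sketched as follows, assuming that "pattern expression" is the dot product between the signature weight map and each single-trial activation map (a common convention, assumed here). The helper name, array layouts, and synthetic data are hypothetical.

```python
import numpy as np
from scipy.stats import pearsonr


def within_subject_trial_r(weights, trial_maps, ratings, subjects):
    """Per-participant Pearson r between BAAS-style pattern expression and ratings.

    weights: (n_voxels,) signature map; trial_maps: (n_trials, n_voxels)
    single-trial activation maps; ratings, subjects: (n_trials,).
    """
    expression = trial_maps @ weights  # dot-product pattern expression per trial
    out = {}
    for s in np.unique(subjects):
        m = subjects == s
        r, p = pearsonr(expression[m], ratings[m])
        out[s] = (r, p)
    return out


rng = np.random.default_rng(3)
n_subj, n_trials, n_vox = 5, 40, 100
weights = rng.normal(size=n_vox)
subjects = np.repeat(np.arange(n_subj), n_trials)
ratings = rng.integers(1, 10, size=subjects.size).astype(float)
trial_maps = 0.1 * np.outer(ratings, weights) + rng.normal(size=(subjects.size, n_vox))

res = within_subject_trial_r(weights, trial_maps, ratings, subjects)
rs = np.array([r for r, _ in res.values()])
n_sig = sum(p < 0.05 for _, p in res.values())
print(f"mean r = {rs.mean():.2f} ± {rs.std(ddof=1) / np.sqrt(rs.size):.2f} SE; "
      f"{n_sig}/{n_subj} participants significant")
```

The signature weights stay fixed across participants here, mirroring the idea of applying a population-level model to individual trials without refitting.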

Evaluating generalization of BAAS in independent datasets

We examined whether the BAAS could capture affective arousal across valence, various stimulus modalities, and different experimental approaches. A substantial body of evidence suggests a V-shaped relationship between affective valence and arousal, wherein individuals experiencing more intense positive or negative emotions generally report heightened arousal9 (Fig. 1). We first determined whether the BAAS distinguishes high arousal negative experiences from low arousal negative experiences across affective domains and stimulus modalities, and whether it generalizes to a stimulus-free state (aversive anticipation) (Fig. 2d). Study 3 employed a naturalistic affective video-watching fMRI paradigm involving strongly disgust-inducing, arousal-matched negative, and neutral video stimuli (affective ratings see Supplementary Table S2). The BAAS successfully classified disgust and negative from neutral experiences with high accuracy (disgust versus neutral: accuracy = 97 ± 1.8% SE, P < 1.00 × 10−10, Cohen’s d = 2.64; negative versus neutral: accuracy = 100 ± 0.0% SE, P < 1.00 × 10−10, Cohen’s d = 3.08), supporting its generalizability (Fig. 2d ‘Study 3’). Of note, the BAAS could not discriminate arousal-matched negative experiences (arousal: mean ± standard deviation (SD) = 7.15 ± 0.41) from disgust experiences (arousal: mean ± SD = 7.23 ± 0.50) in this dataset (accuracy = 60 ± 5.5% SE, P = 0.09, Cohen’s d = 0.31, Supplementary Fig. S3), further supporting that the signature captures arousal across negative affective states. Study 4 induced negative experiences with four types of aversive stimuli: painful heat, painful pressure, aversive images, and aversive sounds34.
The BAAS effectively distinguished between high and low levels (i.e., level 4 and level 1) of aversive images (accuracy = 78 ± 5.6% SE, P = 3.31 × 10−5, Cohen’s d = 1.08), thermal pain (accuracy = 76 ± 5.7% SE, P = 1.14 × 10−4, Cohen’s d = 0.96), mechanical pain (accuracy = 73 ± 6.0% SE, P = 1.00 × 10−3, Cohen’s d = 0.90), and aversive sounds (accuracy = 67 ± 6.3% SE, P = 0.01, Cohen’s d = 0.48) (Fig. 2d ‘Study 4’). Additionally, the BAAS accurately predicted the aversiveness ratings induced by aversive images (r = 0.30, P = 6.70 × 10−6), thermal pain (r = 0.24, P = 4.09 × 10−4), mechanical pain (r = 0.40, P = 6.04 × 10−10), and aversive sounds (r = 0.16, P = 0.02). These results indicate that the BAAS generalizes to pain-associated states and across stimulus modalities. In line with this extension to pain assessment, the BAAS successfully predicted subjective pain ratings in a thermal pain dataset58 (study 5; prediction-outcome r = 0.41, P = 2.15 × 10−9) and accurately discriminated high from low pain (48.8 °C vs. 44.8 °C; accuracy = 85 ± 6.2% SE, P = 6.62 × 10−5, Cohen’s d = 1.16) (Fig. 2d ‘Study 5’). Moreover, we tested whether the BAAS is independent of actual stimulus exposure by testing its generalization to data from an ‘Uncertainty-Variation Threat Anticipation’ (UVTA) task16 (study 6). During this task, participants anticipated aversive electrical stimulation with varying levels of uncertainty and retrospectively rated their anxious arousal during the anticipation period. The BAAS accurately predicted the subjective anxious experience (prediction-outcome r = 0.45, P < 1.00 × 10−10) (Fig. 2d ‘Study 6’), confirming its generalization to stimulus-free internal mental processes.
Together, these findings demonstrate that within the negative affect domain, the BAAS shows strong generalizability across stimuli and modalities, and this generalizability also extends to affective experiences that do not involve animate stimuli, such as noxious heat and pressure.
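The two-alternative forced-choice classifications reported in this section compare, within each participant, the signature response to two conditions. The sketch below assumes that a participant counts as correct when the response to the condition expected to be higher exceeds the other (ties counted as incorrect), a two-sided binomial test against chance (`scipy.stats.binomtest`), the binomial standard error sqrt(acc(1 − acc)/n), and Cohen's d computed on the paired differences. These choices are assumptions; the binomial SE does, however, appear consistent with values such as 81 ± 6.9% for 32 participants.

```python
import numpy as np
from scipy.stats import binomtest


def forced_choice(resp_high, resp_low):
    """Two-alternative forced-choice test on paired per-participant responses.

    A participant counts as correct when the response to the condition
    expected to be higher exceeds the response to the other condition
    (ties count as incorrect in this sketch).
    """
    diff = np.asarray(resp_high, dtype=float) - np.asarray(resp_low, dtype=float)
    n = diff.size
    n_correct = int(np.sum(diff > 0))
    accuracy = n_correct / n
    se = np.sqrt(accuracy * (1 - accuracy) / n)  # binomial standard error
    p = binomtest(n_correct, n, p=0.5, alternative="two-sided").pvalue
    d = diff.mean() / diff.std(ddof=1)  # Cohen's d of the paired differences
    return accuracy, se, p, d


acc, se, p, d = forced_choice([2, 2, 2, 2, 2, 2, 2, 2, 0, 0], [1] * 10)
print(f"accuracy = {acc:.0%} ± {se:.1%} SE, P = {p:.4f}, d = {d:.2f}")
```

Because the test only uses the sign of each within-participant difference, it is insensitive to differences in overall signal scale between datasets, scanners, and preprocessing pipelines.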

We next determined the generalizability of the BAAS in the positive domain by capitalizing on data from 10 independent studies that employed different strategies and modalities to induce highly arousing positive affect during experience and anticipation periods (e.g., music, images of appetizing food, erotic images, cues of monetary rewards, and socially relevant stimuli, with 2 studies in each category)41,42,59,60,61,62,63,64,65,66. The BAAS exhibited significantly stronger responses to positive compared to control conditions in nine out of ten studies (see Table 1 and Fig. 2d ‘Studies 7–16’) and a marginally significant stronger response (P = 0.07) in the remaining study, suggesting that the BAAS captures high-arousal positive experiences across stimulus modalities (i.e., visual and auditory). Importantly, in line with the findings in the negative affect domain, the BAAS showed good predictive performance for positive affective experiences induced by both animate stimuli (e.g., erotic images) and inanimate objects (e.g., cues of monetary rewards).

These findings were further replicated in a large dataset with arousal-matched negative and positive affect induction67 (n = 150, Fig. 2d ‘Study 17’), where the BAAS accurately classified negative and positive from neutral conditions (negative vs. neutral: accuracy = 83 ± 3.1% SE, P < 1.00 × 10−10, Cohen’s d = 1.12; positive vs. neutral: accuracy = 92 ± 2.2% SE, P < 1.00 ×10−10, Cohen’s d = 1.78). Overall, BAAS showed remarkable generalizability across valence domains and multiple modalities.

Finally, we evaluated BAAS response in an imagination task (Fig. 2d ‘Study 18’). The task required participants to imagine various scenarios, including both positive and negative events during fMRI scanning68. We compared the neural responses to these positive and negative imagined events to a baseline condition. The BAAS exhibited significantly stronger responses to both positive (t(25) = 4.97, P = 4.03 × 10−5, 95% CI = [0.06, 0.13], Cohen’s d = 0.97) and negative (t(25) = 3.32, P = 2.80 × 10−3, 95% CI = [0.03, 0.11], Cohen’s d = 0.65) imagined events relative to the baseline. These results demonstrate that the BAAS, originally identified based on responses to external stimuli, is also capable of predicting internally generated affective experiences.
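The one-sample statistics reported here can be computed from per-participant contrast values (e.g., the BAAS response to positive imagined events minus baseline) with a short SciPy sketch. Cohen's d is assumed to be the mean divided by the SD, which equals t/√n; this is consistent with the reported values (4.97/√26 ≈ 0.97 and 3.32/√26 ≈ 0.65). The function name and the toy input are hypothetical.

```python
import numpy as np
from scipy import stats


def one_sample_summary(contrast, alpha=0.05):
    """t-test of per-participant contrast values against zero, with CI and Cohen's d."""
    x = np.asarray(contrast, dtype=float)
    n = x.size
    res = stats.ttest_1samp(x, popmean=0.0)
    se = x.std(ddof=1) / np.sqrt(n)
    half_width = stats.t.ppf(1 - alpha / 2, df=n - 1) * se
    ci = (x.mean() - half_width, x.mean() + half_width)
    d = x.mean() / x.std(ddof=1)  # equals t / sqrt(n)
    return res.statistic, res.pvalue, ci, d


t, p, ci, d = one_sample_summary([1.0, 2.0, 3.0, 4.0, 5.0])
print(f"t({5 - 1}) = {t:.2f}, P = {p:.3g}, 95% CI = [{ci[0]:.2f}, {ci[1]:.2f}], d = {d:.2f}")
```

Applied to paired differences (condition A minus condition B per participant), the same function yields paired-test statistics of the kind reported for the cognitive and memory tasks.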

Notably, these sixteen datasets were collected with diverse MRI scanners and populations and were preprocessed using various pipelines. Together, our findings highlight the robust generalizability and high sensitivity of the BAAS, originally developed in a movie-watching paradigm, in predicting high affective arousal experiences across valence domains, diverse stimuli, modalities, MRI systems, preprocessing pipelines, and populations. Additionally, the BAAS is not limited to capturing affective responses to animate objects (for more evidence see Supplementary Results and Supplementary Table S6).

Validating specificity of BAAS in independent datasets

To evaluate the specificity of the BAAS, we tested it in a series of independent datasets. We first tested whether valence, the other core dimension of affective experience, contributes to BAAS predictions. Given that both positive and negative video clips induced high arousal in the validation cohort (positive: mean ± SD = 7.37 ± 0.35; negative: mean ± SD = 7.94 ± 0.29; details of videos see Supplementary Table S2), we hypothesized that if the BAAS primarily captures arousal rather than valence information, it should accurately classify highly arousing positive and negative video clips from low arousing neutral video clips but should not differentiate between the negative and positive video clips. Consistent with this hypothesis, the BAAS showed significant discrimination of unspecific high arousal experiences (positive versus neutral: 97 ± 2.7%, P = 1.08 × 10−9, Cohen’s d = 2.63; negative versus neutral: 97 ± 2.7%, P = 1.08 × 10−9, Cohen’s d = 2.90) but poor accuracy in discriminating between the two high-arousal experiences (negative versus positive: 53 ± 8.3%, P = 0.87, Cohen’s d = 0.04) (Supplementary Fig. S4), suggesting high specificity of the BAAS for capturing arousal across valence.

Next, to estimate the effect of potential confounding factors (e.g., attention or working memory), we examined BAAS performance during several effortful cognitive tasks (studies 19–22). Specifically, BAAS responses to incongruent and congruent trials showed low discriminability in both the Eriksen Flanker task69 (t(25) = 0.34, P = 0.74, 95% CI = [−0.04, 0.06], Cohen’s d = 0.07) and the Simon task70 (t(20) = −0.16, P = 0.87, 95% CI = [−0.07, 0.06], Cohen’s d = −0.04) (Supplementary Fig. S5a). Additionally, the BAAS did not differentiate 1-back blocks from baseline in the N-back task71 (accuracy = 67 ± 8.6% SE, P = 0.10, Cohen’s d = 0.72) (Supplementary Fig. S5b). Given the well-established role of emotional arousal in modulating memory consolidation72,73,74,75,76, we further evaluated the BAAS in an emotional memory task77. The BAAS exhibited significantly stronger responses to all remembered items than to all forgotten items (t(26) = 9.43, P = 7.12 × 10−10, 95% CI = [1.00, 1.56], Cohen’s d = 1.81; classification accuracy = 89 ± 6.0%, P = 4.92 × 10−5, Cohen’s d = 1.59), consistent with the observation that all remembered items received higher subjective arousal ratings than all forgotten items (t(26) = 5.88, P = 3.35 × 10−6, 95% CI = [0.27, 0.56], Cohen’s d = 1.13). To further determine whether the BAAS captures affective arousal independently of memory processes, we conducted additional analyses. All forgotten items had higher subjective arousal ratings than remembered neutral items (t(26) = 3.83, P = 7.07 × 10−4, 95% CI = [0.14, 0.48], Cohen’s d = 0.74), and, in line with this, the BAAS exhibited higher responses to all forgotten items relative to remembered neutral items (t(26) = 2.54, P = 0.02, 95% CI = [−0.24, −0.03], Cohen’s d = 0.49; classification accuracy = 70 ± 6.2%, P = 3.84 × 10−3, Cohen’s d = 0.65).
Additionally, the BAAS showed significantly stronger responses to remembered positive and negative items than to remembered neutral items (positive: t(26) = 8.23, P = 1.02 × 10−8, 95% CI = [0.24, 0.39], Cohen’s d = 1.59; classification accuracy = 89 ± 6.0%, P = 4.92 × 10−5, Cohen’s d = 2.24; negative: t(26) = 7.97, P = 1.89 × 10−8, 95% CI = [0.27, 0.46], Cohen’s d = 1.53; classification accuracy = 96 ± 3.6%, P = 4.17 × 10−7, Cohen’s d = 2.17) (Supplementary Fig. S5b). These findings suggest that the affective arousal decoder is not solely driven by the higher memorability of high-arousing stimuli.

To further verify that the affective arousal signature is dissociable from wakefulness and autonomic arousal, we examined its discriminability in a sleep-wake study with concomitant fMRI-electroencephalography (EEG) recordings (study 23) and in a Pavlovian threat conditioning task (study 24). In the sleep-wake study78, 33 participants completed two 10-min resting-state sessions and several sleep sessions with simultaneous fMRI and EEG acquisition, and exhibited both sleep and wake stages (for details, see Supplementary Methods). Of these, 28 participants with complete data were included, and the BAAS was applied to their time series to obtain mean pattern responses for sleep and wake stages. The BAAS could not significantly differentiate wakefulness from sleep (accuracy = 54 ± 9.4% SE, P = 0.85, Cohen’s d = −0.27) (Supplementary Fig. S6a). In the Pavlovian threat conditioning task79, fMRI and psychophysiological arousal responses (i.e., galvanic skin response, GSR) were acquired simultaneously for each participant. Consistent with our previous study79, we only included participants (n = 58) who exhibited stronger psychophysiological threat responses (i.e., mean CS+ > mean CS−; CS, conditioned stimulus) during threat acquisition. The BAAS was unable to discriminate CS+ (associated with high GSR) from CS− (associated with low GSR) (accuracy = 60 ± 6.4% SE, P = 1.15, Cohen’s d = 0.49, see Supplementary Fig. S6b). Additionally, we employed the BAAS to predict subjective fear experiences (levels 0–5) and GSR (levels 0–5) induced by images of animals in study 25. The BAAS predicted fear experience (r = 0.18, P = 0.02) but not GSR (r = −0.14, P = 0.08; of note, the association was negative). Similar findings were observed in study 26 (details are provided in the section below). Together, the results from these three studies do not reveal a consistent prediction of GSR responses by the BAAS.

While our extensive specificity tests across several datasets indicate that the BAAS does not strongly respond to autonomic arousal, wakefulness, or cognitive demand, these findings should be interpreted cautiously, and future studies with well-controlled designs are required to determine the extent to which specific factors (e.g., memorability) interact with affective arousal within the BAAS.

The neurofunctional characterization of the affective arousal signature

To characterize the core neurofunctional system of affective arousal in humans, we determined the brain regions that reliably contribute to BAAS prediction performance. To this end, we conducted bootstrap tests to identify the voxels that most reliably contribute to the prediction (q < 0.05, false discovery rate (FDR) corrected). As shown in Fig. 3a, activity in the amygdala, periaqueductal gray (PAG), parahippocampal gyrus, lateral orbitofrontal cortex (lOFC), dorsomedial prefrontal cortex (dmPFC), subgenual anterior cingulate (sACC), and superior frontal gyrus (SFG) strongly predicted increased affective arousal. Conversely, the most reliable negative weights (i.e., higher arousal with decreased activity) were found in the posterior insula/rolandic operculum, supplementary motor area (SMA), middle frontal gyrus (MFG), inferior parietal lobule (IPL), and posterior cingulate cortex (PCC). These regions have been reported in the literature for a range of highly arousing emotional experiences. Moreover, a parcellation-based analysis was employed to determine local brain regions predictive of affective arousal. Specifically, for each of the 275 brain regions80,81, we trained and cross-validated parcel-wise models predictive of affective arousal in the discovery dataset and further tested them in the independent validation dataset. As shown in Fig. 3b, affective arousal experience could be significantly predicted by activations in widely distributed regions (P < 0.001, uncorrected), which overlapped with a number of areas in the BAAS pattern thresholded at q < 0.05 (FDR corrected, 5000-sample bootstrap).
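The bootstrap thresholding step can be sketched as below. The paper trains a linear SVR; to keep the sketch dependency-light we substitute closed-form ridge regression, a different linear model, so its weights will not match an SVR's. The helper names, the resampling of rows (maps) rather than subjects, and the normal approximation for the bootstrap P values are our assumptions, not the authors' code.

```python
import numpy as np
from scipy.stats import norm


def ridge_weights(X, y, alpha=1.0):
    """Linear ridge weights via the dual (n x n) solve; a stand-in for a linear SVR."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    n = X.shape[0]
    return Xc.T @ np.linalg.solve(Xc @ Xc.T + alpha * np.eye(n), yc)


def fdr_bh(p, q=0.05):
    """Benjamini-Hochberg FDR: boolean mask of P values surviving level q."""
    p = np.asarray(p)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if below.any():
        k = np.nonzero(below)[0].max()  # largest rank meeting the BH criterion
        mask[order[: k + 1]] = True
    return mask


def bootstrap_weights(X, y, n_boot=5000, alpha=1.0, q=0.05, seed=0):
    """Bootstrap voxel weights; return z-scores, two-sided P values, and FDR mask."""
    rng = np.random.default_rng(seed)
    n = X.shape[0]
    boot = np.empty((n_boot, X.shape[1]))
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample rows with replacement
        boot[b] = ridge_weights(X[idx], y[idx], alpha)
    z = boot.mean(axis=0) / boot.std(axis=0, ddof=1)
    p = 2.0 * norm.sf(np.abs(z))  # normal approximation to the bootstrap distribution
    return z, p, fdr_bh(p, q)


# Synthetic example: 100 maps x 20 "voxels", signal carried by the first 5 voxels.
rng = np.random.default_rng(1)
X = rng.standard_normal((100, 20))
y = X[:, :5].sum(axis=1) + 0.1 * rng.standard_normal(100)
z, p, mask = bootstrap_weights(X, y, n_boot=200)
```

The dual solve is used because in whole-brain data the number of voxels far exceeds the number of maps, so inverting an n x n matrix is much cheaper than a voxel x voxel one.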

a Shows the thresholded BAAS weight maps based on 5000 samples bootstrap test (q < 0.05, FDR corrected). b Displays brain regions that significantly (two-sided P < 0.001, uncorrected, equivalent to FDR q < 0.002) predict affective arousal experience in both discovery (10 × 10-fold cross validation) and validation cohorts revealed by parcellation-based analysis. Statistical significance was assessed using Pearson correlations between predicted and actual outcomes. c Summarizes multivariate patterns trained on individual subjects and depicts brain regions that consistently predict subjective affective arousal across n = 60 participants (the discovery cohort) using a one-sample t-test (q < 0.05, FDR corrected) based on training a separate model on each subject’s trial-by-trial ratings. In (a) and (c), hot color indicates positive values, whereas cold color indicates negative values. In (b), hot color indicates the averaged prediction-outcome Pearson correlation coefficients from the discovery cohort and validation cohort. BAAS Brain affective arousal signature, aIns anterior insula, Amyg amygdala, dmPFC dorsomedial prefrontal cortex, FG fusiform gyrus, IFG inferior frontal gyrus, lOFC lateral orbitofrontal cortex, MFG middle frontal gyrus, MOG middle occipital gyrus, PAG periaqueductal gray, PCC posterior cingulate cortex, PHG parahippocampal gyrus, pIns posterior insula, sACC subgenual anterior cingulate, SFG superior frontal gyrus, SMA supplementary motor area. Source data are provided as a Source Data file.

Given that affective experience is a highly subjective and individually constructed state that varies substantially across individuals82, we further developed a predictive model for each participant based on single-trial data and performed a one-sample t-test (treating participants as a random effect) on the weights derived from these individualized multivariate predictive models (q < 0.05, FDR corrected). This approach fits a separate linear SVR model for each participant in the discovery cohort using their single-trial activation maps; the one-sample t-test then identifies arousal-predictive regions consistently engaged across participants. In line with the population-level BAAS model, the amygdala, parahippocampal gyrus, PAG, anterior insula, dmPFC, and SFG robustly predicted enhanced affective arousal, whereas reductions in affective arousal were strongly linked to signals from the posterior insula, SMA, MFG, and PCC (Fig. 3c). Notably, a univariate parametric modulation approach identified a similar set of broadly distributed regions associated with affective arousal (see Supplementary Results and Supplementary Fig. S7).
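The subject-level procedure (one model per participant, then a voxel-wise one-sample t-test on the weights) can be sketched as follows. As an assumption for illustration, ridge regression again stands in for the linear SVR, and `group_weight_map` is a hypothetical helper name.

```python
import numpy as np
from scipy.stats import ttest_1samp


def ridge_weights(X, y, alpha=1.0):
    """Linear ridge weights via the dual solve (stand-in for a per-subject linear SVR)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    return Xc.T @ np.linalg.solve(Xc @ Xc.T + alpha * np.eye(X.shape[0]), yc)


def fdr_bh(p, q=0.05):
    """Benjamini-Hochberg FDR mask."""
    p = np.asarray(p)
    m = p.size
    order = np.argsort(p)
    below = p[order] <= q * np.arange(1, m + 1) / m
    mask = np.zeros(m, dtype=bool)
    if below.any():
        mask[order[: np.nonzero(below)[0].max() + 1]] = True
    return mask


def group_weight_map(subject_data, alpha=1.0, q=0.05):
    """Fit one linear model per subject and t-test the weights across subjects.

    subject_data: list of (X_s, y_s) pairs, where X_s holds single-trial maps
    (trials x voxels) and y_s the trial-by-trial arousal ratings.
    """
    W = np.vstack([ridge_weights(X_s, y_s, alpha) for X_s, y_s in subject_data])
    res = ttest_1samp(W, popmean=0.0, axis=0)  # subjects treated as a random effect
    return res.statistic, fdr_bh(res.pvalue, q)
```

Testing weights against zero across participants asks whether a voxel contributes with the same sign in most individual models, which is a different question from whether it carries reliable weight in a single pooled model.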

To further validate the biological plausibility of the identified affective arousal system, we compared the thresholded BAAS (q < 0.05, FDR corrected) with the conjunction of the meta-analytic maps of ‘negative’ and ‘positive’ affective experiences obtained from Neurosynth (association test, q < 0.05, FDR corrected; maps in Supplementary Fig. S8). Given that feelings of arousal are more likely to be accompanied by valenced (positive or negative) feelings9, we hypothesized that the overlap between these two meta-analytic maps would share common brain regions with the BAAS. Consistent with this prediction, conjunction analyses revealed several regions common to both patterns (e.g., amygdala, parahippocampal gyrus, inferior frontal gyrus (IFG), lOFC, and sACC) (Fig. 4a). As shown in Supplementary Fig. S8, brain regions including the IFG, SFG, dmPFC, amygdala, parahippocampal gyrus, and insula that were positively associated with both the ‘negative’ and ‘positive’ meta-analytic maps were also significant in the BAAS, although they did not directly overlap. Conversely, SMA and precentral gyrus regions were negatively associated with both meta-analytic maps and reliably predicted decreased arousal in the BAAS. These findings provide convergent evidence for the regions identified as involved in affective arousal processing.

a Displays overlapping areas between thresholded BAAS and the conjunction of the meta-analytic maps of ‘negative’ and ‘positive’ affect from Neurosynth. b Shows that the performance of the model was evaluated by using an increasing number of voxels/features (on the x-axis) to predict subjective affective arousal in different regions of interest, such as the whole brain (in black), cerebellar brain (in pink), subcortical regions (in dark blue) or large-scale resting-state networks. The y-axis depicts the cross-validated correlation between predicted and actual outcomes. The colored dots demonstrate the average correlation coefficients, while the solid lines indicate the average parametric fit, and the shaded regions reflect the standard deviation. The model’s performance is optimized by randomly sampling approximately 10,000 voxels across the entire brain. c Shows the relative proportions of the voxels of thresholded BAAS within each large-scale functional network given the total number of voxels within each network. Red represents voxels with positive weight in the BAAS, while blue represents voxels with negative weight. BAAS Brain affective arousal signature, VN Visual network, SMN Somatomotor network, DAN Dorsal attention network, VAN Ventral attention network, LIN Limbic network, FPN Frontoparietal network, DMN Default mode network, SCT Subcortical network, CB Cerebellar brain. Source data are provided as a Source Data file.

Together, our results indicate that the conscious experience of affective arousal is represented in distributed subcortical and cortical regions involved in salience processing (e.g., amygdala, insula)83,84, emotional awareness (e.g., frontal regions)85, and emotional memory (e.g., hippocampus)86, as well as in areas that have been emphasized as a core system mediating arousal in animal models (brainstem)19.

Alternative models to determine the contribution of isolated arousal-prediction models: BAAS predicts more precisely than isolated networks

Previous studies have emphasized contributions of the DMN, salience, and subcortical networks to affective arousal processing28,29, and psychological construction theories of emotion propose arousal as one component of the affective space, with underlying functions that are neurobiologically supported by large-scale networks39. To examine the contribution of these networks, we trained predictive SVR models in the discovery cohort restricted to masks corresponding to (1) each of seven large-scale resting-state functional networks, (2) a subcortical network, and (3) the cerebellum (see Methods section). This allowed us to examine the extent to which these models could predict arousal experiences compared with the whole-brain BAAS. Several networks, especially the visual and subcortical networks, predicted subjective affective arousal ratings to some extent (see Fig. 4b and Supplementary Table S3). Because the number of features (voxels) can influence prediction performance, we implemented a control procedure (Fig. 4b). Specifically, we conducted 5000 repeated random voxel selections from different brain systems, including the whole brain, the subcortical network, and individual resting-state networks, and averaged performance over the 5000 iterations to assess the impact of varying the sampled brain systems. Interestingly, the asymptotic prediction achieved when sampling from all brain systems, as in the BAAS (black line in Fig. 4b), was progressively stronger than the asymptotic prediction within individual networks (colored lines in Fig. 4b) and substantially better than any single network when more than 1000 voxels were randomly sampled. Of note, although several networks showed higher prediction performance than the BAAS with few voxels (e.g., n = 50), the differences between these networks and the BAAS were not significant (Z scores ≤ 1.17).
This analysis confirmed that whole-brain models exhibit significantly larger effect sizes than models using features from a single network. Moreover, consistent with previous studies on affective neural signatures13,14, the optimum BAAS performance was achieved when ~10,000 voxels were randomly sampled across the whole brain. Crucially, the performance was contingent upon a diverse selection of voxels derived from multiple brain systems. These findings further support the notion that information about affective arousal experiences is combined in the neural signature that extends across multiple systems.
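The random voxel-sampling control can be sketched as a learning curve over subset size. This is a simplified illustration under stated assumptions: a single train/test split replaces the paper's cross-validation, ridge regression replaces the SVR, and `sampling_curve` and its parameters are hypothetical names.

```python
import numpy as np


def ridge_weights(X, y, alpha=1.0):
    """Linear ridge weights via the dual solve (stand-in for a linear SVR)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    return Xc.T @ np.linalg.solve(Xc @ Xc.T + alpha * np.eye(X.shape[0]), yc)


def sampling_curve(X_tr, y_tr, X_te, y_te, pool, sizes, n_iter=100, alpha=1.0, seed=0):
    """Mean held-out prediction-outcome correlation for random voxel subsets.

    pool: column indices of the voxels in the sampled system (whole brain or one network).
    sizes: number of voxels drawn without replacement in each iteration.
    """
    rng = np.random.default_rng(seed)
    pool = np.asarray(pool)
    curve = []
    for k in sizes:
        rs = []
        for _ in range(n_iter):
            vox = rng.choice(pool, size=k, replace=False)
            w = ridge_weights(X_tr[:, vox], y_tr, alpha)
            # Intercept omitted: a constant shift does not change Pearson's r.
            rs.append(np.corrcoef(X_te[:, vox] @ w, y_te)[0, 1])
        curve.append(np.mean(rs))
    return np.array(curve)
```

Comparing curves obtained from a whole-brain pool and from a single-network pool at matched subset sizes separates the effect of drawing from diverse systems from the effect of simply having more features.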

In addition, we calculated the overlap ratio between the significant features of the BAAS and each large-scale functional network, the subcortical network, and the cerebellum (Fig. 4c). The positive predictive weights of the model showed substantial overlap with voxels of the visual (26.75%), default mode (16.72%), ventral attention (22.13%), and subcortical (10.83%) networks, whereas the negative weights overlapped mainly with the visual (31.37%), frontoparietal (21.24%), somatomotor (16.67%), and dorsal attention (15.69%) networks. These findings further support the notion that arousal is represented across widely distributed brain networks.
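One plausible reading of the overlap ratio, following the Fig. 4c caption ("given the total number of voxels within each network"), is sketched below: count thresholded positive and negative voxels per network, divide by network size, and rescale so the proportions sum to 100%. This normalization is our assumption, and `network_proportions` is a hypothetical helper.

```python
import numpy as np


def network_proportions(weights, significant, labels, n_networks):
    """Size-normalised share of thresholded positive/negative voxels per network.

    weights: signature weight per voxel; significant: boolean FDR mask per voxel;
    labels: integer network label (0..n_networks-1) per voxel.
    """
    weights = np.asarray(weights, dtype=float)
    significant = np.asarray(significant, dtype=bool)
    labels = np.asarray(labels, dtype=int)
    sizes = np.bincount(labels, minlength=n_networks).astype(float)
    out = {}
    for sign, sel in (("positive", significant & (weights > 0)),
                      ("negative", significant & (weights < 0))):
        counts = np.bincount(labels[sel], minlength=n_networks)
        # Fraction of each network's voxels that survive thresholding with this sign.
        density = np.divide(counts, sizes, out=np.zeros(n_networks), where=sizes > 0)
        total = density.sum()
        out[sign] = 100.0 * density / total if total > 0 else np.zeros(n_networks)
    return out
```

Normalizing by network size prevents large networks (e.g., the visual network) from dominating the proportions merely because they contain more voxels.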

Affective arousal and autonomic arousal engage distinct neural representations in humans

Previous conceptualizations have hypothesized that affective arousal and autonomic arousal may operate through distinct neural mechanisms19. Given that GSR has been validated as a stable and valid index of autonomic arousal87, we developed a whole-brain pattern for decoding autonomic arousal based on brain activation maps corresponding to various levels of GSR in study 25 (n = 25, leave-one-subject-out (LOSO) cross-validation procedure; see Supplementary Methods), following the approach described by Taschereau-Dumouchel et al.15. The developed autonomic arousal signature accurately predicted the level of autonomic arousal (within-subject correlation coefficient r = 0.79 ± 0.03 SE; overall prediction-outcome correlation coefficient = 0.61). To further validate the developed decoder, a two-alternative forced-choice test was applied in the Pavlovian threat conditioning task (study 24). In line with CS+ being accompanied by higher GSR than CS−, the autonomic arousal decoder response accurately classified CS+ versus CS− with 78 ± 5.5% SE accuracy (P = 3.01 × 10−5, Cohen’s d = 0.91) (Fig. 5a). Of note, the autonomic arousal decoder developed in the current study used the same training dataset as that developed by Taschereau-Dumouchel et al.15. The only difference is that the current study employed a gray matter mask, whereas Taschereau-Dumouchel et al.15 used a whole-brain mask. As expected, the two decoders exhibited similar functional performance (the decoder from Taschereau-Dumouchel et al.15 classified CS+ versus CS− with 78 ± 5.5% accuracy (P = 3.01 × 10−5, Cohen’s d = 0.87)) and spatial similarity (Pearson’s r across gray matter voxels = 0.92). Moreover, the generalizability of the autonomic arousal decoder was additionally validated in the original study using three independent datasets (for details see ref. 15). Notably, in study 26, the autonomic arousal decoder also showed good generalizability (see below for detailed results).
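The LOSO procedure and the two summary statistics reported above (mean within-subject correlation ± SE and the pooled prediction-outcome correlation) can be sketched as follows. Ridge regression with an intercept stands in for the decoder actually used, and all function names are hypothetical; this is an illustration of the evaluation logic, not a reimplementation of the published signature.

```python
import numpy as np


def ridge_fit(X, y, alpha=1.0):
    """Ridge regression with intercept via the dual solve; returns (weights, intercept)."""
    mx, my = X.mean(axis=0), y.mean()
    Xc, yc = X - mx, y - my
    w = Xc.T @ np.linalg.solve(Xc @ Xc.T + alpha * np.eye(len(y)), yc)
    return w, my - mx @ w


def loso_predict(X_list, y_list, alpha=1.0):
    """Leave-one-subject-out: train on all other subjects, predict the held-out one."""
    preds = []
    for s in range(len(X_list)):
        X_tr = np.vstack([X for i, X in enumerate(X_list) if i != s])
        y_tr = np.concatenate([y for i, y in enumerate(y_list) if i != s])
        w, b = ridge_fit(X_tr, y_tr, alpha)
        preds.append(X_list[s] @ w + b)
    return preds


def prediction_summary(preds, y_list):
    """Mean within-subject r, its SE across subjects, and the pooled r."""
    r = np.array([np.corrcoef(p, y)[0, 1] for p, y in zip(preds, y_list)])
    se = r.std(ddof=1) / np.sqrt(r.size)
    overall = np.corrcoef(np.concatenate(preds), np.concatenate(y_list))[0, 1]
    return r.mean(), se, overall
```

The pooled correlation is typically lower than the mean within-subject correlation because it is also penalized by between-subject offsets in predicted levels, which is consistent with the pattern of the two values reported above.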

a During a Pavlovian threat conditioning fMRI task (study 24, n = 58), the developed autonomic arousal signature could classify CS+ (associated with high GSR) versus CS− (associated with low GSR) (shown as two-alternative forced-choice classification accuracy and Cohen’s d). b In study 26 (n = 43), BAAS more accurately discriminates high anxious arousal experience from low anxious arousal experience, while the autonomic arousal signature more accurately discriminates high autonomic arousal from low autonomic arousal (shown as two-alternative forced-choice classification accuracy and Cohen’s d). c Scatter plot displaying normalized voxel weights for affective arousal (BAAS, x-axis) and autonomic arousal (AAS, y-axis) signatures. Bars on the right side represent the sum of squared distances from the origin (0,0) for each Octant. Each Octant is assigned a different color, indicating voxels with shared positive or shared negative weights (Octants 2 and 6, respectively). Octant 1 represents selectively positive weights for the autonomic arousal signature, Octant 3 represents selectively positive weights for the BAAS, Octant 5 represents selectively negative weights for the autonomic arousal signature, Octant 7 represents selectively negative weights for the BAAS, and Octants 4 and 8 represent voxels with opposite weights for the two neural signatures. The numbers at the top of each bar indicate the number of voxels in each Octant. d Regions that significantly predict both subjective affective arousal ratings and GSR. *P < 0.05, ***P < 0.001, NS P > 0.05. P values in forced-choice tests were based on two-sided binomial tests. BAAS Brain affective arousal signature, GSR Galvanic skin response, CS conditioned stimulus. Source data are provided as a Source Data file.

To test whether affective arousal and autonomic arousal exhibit (partially) distinguishable neural representations, we employed a double dissociation approach, a weaker form of the separate modifiability criterion that has been one of the main ways of dissociating mental processes in neuropsychological studies88,89. To this end, we applied the BAAS and the autonomic arousal signature to an independent fMRI dataset collected during a UVTA task in study 26 (ref. 16). For each subject, this produced beta maps corresponding to anxious arousal ratings and brain activation maps categorized by five levels of GSR during anxious anticipation (see Supplementary Methods for details). The BAAS significantly predicted anxious arousal ratings (r = 0.26, P = 1.55 × 10⁻⁴) and discriminated high (average of ratings 4 and 5) from low anxious arousal experience (average of ratings 1 and 2) with high accuracy (77 ± 6.4%, P = 6.06 × 10⁻⁴, Cohen’s d = 0.99). In contrast, the BAAS showed low predictive performance for GSR responses (r = 0.06, P = 0.41) and failed to classify high GSR (average of levels 4 and 5) versus low GSR (average of levels 1 and 2) above chance level (60 ± 7.5%, P = 0.22, Cohen’s d = 0.05). Conversely, the autonomic arousal signature predicted GSR levels (r = 0.21, P = 1.81 × 10−3) and successfully classified high GSR (average of levels 4 and 5) versus low GSR (average of levels 1 and 2) with 67 ± 7.1% accuracy (P = 0.03, Cohen’s d = 0.61), but could neither predict anxious arousal ratings (r = 0.08, P = 0.23) nor discriminate high anxious experience (average of ratings 4 and 5) from low anxious experience (average of ratings 1 and 2) (53 ± 7.6% accuracy, P = 0.76, Cohen’s d = 0.26).

To further test the hypothesis of partially separable neural representations underlying affective arousal and autonomic arousal, we explored the joint distribution of normalized (Z-scored) voxel weights of these two patterns by plotting the BAAS on the x-axis and the autonomic arousal signature on the y-axis (for a similar approach, see ref. 90). As visualized in Fig. 5c, stronger weights across the whole brain (sum of squared distances to the origin [SSDO]) were located in the non-shared Octants (1 and 5, i.e., positive/negative weights for the autonomic arousal signature but not for the BAAS).
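The octant analysis can be sketched as follows: z-score both weight maps, assign each voxel to one of eight 45° sectors of the joint weight plane, and sum squared distances from the origin per sector. The sector boundaries (±22.5° around each axis and diagonal) and the clockwise numbering starting at +y are our assumptions, chosen to be consistent with the Fig. 5c caption (2 and 6 shared, 4 and 8 opposite, 1 and 5 selective for the y-axis signature); the helper names are hypothetical.

```python
import numpy as np


def zscore(w):
    """Z-score a weight map across voxels."""
    w = np.asarray(w, dtype=float)
    return (w - w.mean()) / w.std()


def octant_ssdo(zx, zy):
    """Per-octant sum of squared distances from the origin (SSDO) and voxel counts.

    Assumed numbering: octant 1 is centred on +y (y-selective positive) and
    numbers increase clockwise, giving 2 = shared positive, 3 = x-selective
    positive, 4 = opposite signs, 5 = y-selective negative, 6 = shared
    negative, 7 = x-selective negative, 8 = opposite signs.
    Index 0 of the returned arrays corresponds to octant 1.
    """
    zx = np.asarray(zx, dtype=float)
    zy = np.asarray(zy, dtype=float)
    theta = np.degrees(np.arctan2(zy, zx)) % 360.0  # counterclockwise from +x
    octant = np.floor(((112.5 - theta) % 360.0) / 45.0).astype(int)  # 0..7
    ssd = zx ** 2 + zy ** 2
    ssdo = np.bincount(octant, weights=ssd, minlength=8)
    counts = np.bincount(octant, minlength=8)
    return ssdo, counts


# Hypothetical usage with random maps standing in for BAAS (x) and autonomic (y) weights.
rng = np.random.default_rng(0)
ssdo, counts = octant_ssdo(zscore(rng.standard_normal(1000)), zscore(rng.standard_normal(1000)))
```

Summing squared distances rather than counting voxels weights each octant by how strongly its voxels contribute to the signatures, so a few high-weight voxels can outweigh many near-zero ones.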

These dissociations support the notion that the two signatures do not rely exclusively on a single shared neurofunctional representation at the whole-brain level. However, this does not imply that affective arousal and autonomic arousal cannot be highly correlated, nor that the two measures have distinct neural representations in every brain system. To evaluate this aspect, we next tested whether some brain systems exhibit common representations of subjective affective arousal ratings and GSR at the local level. To this end, we retrained the two signature models restricted to each of 275 regions80,81 using a LOSO cross-validation procedure following Taschereau-Dumouchel et al.15, and then predicted affective arousal ratings in study 1 and GSR in study 25 (see Methods for details). As shown in Fig. 5d, the regions most commonly involved in both predictions include subcortical regions such as the amygdala, hippocampus, basal ganglia, and thalamus; cortical regions including the ACC, insula, precentral gyrus, and superior parietal lobule; and some cerebellar regions.
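The parcel-wise retraining can be sketched as one model per parcel, each evaluated by the prediction-outcome correlation on independent data. For brevity this sketch uses a single train/test split instead of LOSO and ridge regression instead of the original model; `parcelwise_prediction` and the integer parcel labels are hypothetical.

```python
import numpy as np
from scipy.stats import pearsonr


def ridge_weights(X, y, alpha=1.0):
    """Linear ridge weights via the dual solve (stand-in for the signature model)."""
    Xc = X - X.mean(axis=0)
    yc = y - y.mean()
    return Xc.T @ np.linalg.solve(Xc @ Xc.T + alpha * np.eye(X.shape[0]), yc)


def parcelwise_prediction(X_train, y_train, X_test, y_test, parcels, alpha=1.0):
    """Train one model per parcel and test it on an independent dataset.

    parcels: integer parcel label for every voxel (column of X).
    Returns {label: (r, P)} for the prediction-outcome Pearson correlation.
    """
    parcels = np.asarray(parcels)
    results = {}
    for label in np.unique(parcels):
        cols = parcels == label
        w = ridge_weights(X_train[:, cols], y_train, alpha)
        r, p = pearsonr(X_test[:, cols] @ w, y_test)
        results[int(label)] = (float(r), float(p))
    return results
```

Running this once with arousal ratings and once with GSR as the outcome, and intersecting the parcels that are significant in both, gives the kind of shared local map summarized in Fig. 5d.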

Together, these results suggest that the neural representations of affective arousal are distinguishable from those of autonomic arousal on the whole-brain level, while common brain representations may underlie both affective and autonomic arousal processes at the local level.

BAAS improves the specificity of previously established affective neural signatures

To examine whether the BAAS could enhance the specificity of previously developed affective neural signatures, we conducted a series of analyses with two signatures. The visually negative affect signature (VNAS) was designed to respond specifically to visually induced negative affect, without encompassing other forms of negative experience or positive experiences34. In study 1, the VNAS distinguished negative (i.e., fear and disgust) from positive (i.e., happiness) and neutral stimuli with 76.19% and 93.65% accuracy, respectively (Fig. 6a and Supplementary Table S4; for detailed descriptions of each emotional video category in study 1, see Supplementary Methods). Despite these high accuracies, the specificity of the VNAS was limited by its higher response to positive compared with neutral videos (accuracy = 75.40%, P = 9.61 × 10−9, Cohen’s d = 1.22). We therefore assessed whether controlling for arousal could enhance the prediction specificity of the VNAS. After adjusting for arousal ratings, predictions for negative affect were maintained, with the VNAS distinguishing negative from positive and neutral videos with 67.46% and 61.91% accuracy, respectively. Importantly, after adjustment, the VNAS showed comparable responses to positive and neutral videos (accuracy = 51.59%, P = 0.79, Cohen’s d = −0.26). These findings demonstrate the potential of arousal-rating regression for refining the specificity of the VNAS. Crucially, adjusting VNAS responses for BAAS responses improved prediction specificity to levels comparable, if not identical, to those achieved through direct arousal-rating adjustment (Fig. 6a; see also Supplementary Table S4). Following this correction, the VNAS showed comparable responses to positive and neutral videos (accuracy = 52.38%, P = 0.66, Cohen’s d = −0.10).
Notably, the adjusted approaches also allowed the VNAS to discriminate negative from positive videos more accurately than negative from neutral videos, underscoring the utility of controlling for arousal in refining prediction specificity. These findings were replicated in study 17, in which positive, negative, and neutral emotions were elicited by pictures and no arousal ratings were acquired. Regressing out the BAAS response consistently enhanced the prediction specificity of the VNAS (see Supplementary Results for statistical details).
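The arousal adjustment can be sketched as an ordinary least-squares regression of the target signature response on a covariate (arousal ratings or BAAS responses), keeping the residuals for subsequent forced-choice comparisons. Fitting a single regression across all subject-by-condition observations is our assumption; the authors may instead have fit it within subjects. The data below are synthetic and the helper names hypothetical.

```python
import numpy as np


def residualize(target, covariate):
    """Remove the linear contribution of a covariate (with intercept) by OLS."""
    target = np.asarray(target, dtype=float)
    covariate = np.asarray(covariate, dtype=float)
    design = np.column_stack([np.ones_like(covariate), covariate])
    beta, *_ = np.linalg.lstsq(design, target, rcond=None)
    return target - design @ beta


def pairwise_accuracy(resp_a, resp_b):
    """Proportion of subjects whose response to A exceeds their response to B."""
    return float(np.mean(np.asarray(resp_a) > np.asarray(resp_b)))


# Synthetic subjects x conditions (columns: negative, positive, neutral).
rng = np.random.default_rng(6)
n = 30
baas = np.column_stack([rng.normal(2.0, 0.3, n), rng.normal(2.0, 0.3, n), rng.normal(0.0, 0.3, n)])
vnas = baas + np.array([1.0, 0.0, 0.0]) + rng.normal(0.0, 0.3, (n, 3))
adjusted = residualize(vnas.ravel(), baas.ravel()).reshape(vnas.shape)
print(pairwise_accuracy(vnas[:, 1], vnas[:, 2]), pairwise_accuracy(adjusted[:, 1], adjusted[:, 2]))
```

Because the OLS residuals are orthogonal to the covariate, any remaining difference between conditions cannot be explained by a linear effect of arousal, which is the logic behind using the adjusted responses to probe valence specificity.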

a In the left panel, the VNAS displayed higher responses to negative affect but also responded significantly more to positive than to neutral conditions; in the middle and right panels, after the two correction methods (actual arousal ratings, BAAS response correction), the VNAS showed similar responses to positive and neutral conditions (study 1, n = 63). For details, see Supplementary Results. b In the left panel, the VIDS showed higher responses to the targeted emotion (i.e., disgust) but also responded significantly more to happiness and fear than to neutral emotions; in the middle and right panels, after arousal-rating or BAAS-response correction, the VIDS still showed a higher response to disgust, while it showed comparable responses to happiness, fear, and neutral emotions (study 1, n = 63). Of note, the violin and box plots show the distributions of the signature response. The boxplot boundaries are defined by the first and third quartiles, while the whiskers extend to the maximum and minimum values within the range of the median ± 1.5 times the interquartile range. *P < 0.05, **P < 0.01, ***P < 0.001, NS P > 0.05. P values in forced-choice tests were based on two-sided binomial tests. VNAS visually negative affect signature, VIDS visually induced disgust signature. Source data are provided as a Source Data file.

To further examine the reliability of our approach, we replicated the procedures using another affective neural signature, the visually induced disgust signature (VIDS)13. In study 1, the VIDS predicted subjective disgust with robust discriminative power, distinguishing disgust from fear, happiness, and neutral videos with 71–97% accuracy (Fig. 6b and Supplementary Table S5). However, the VIDS also showed a heightened response to happiness (accuracy = 81.75%, P < 1.00 × 10−10, Cohen’s d = 1.33) and fear (accuracy = 83.33%, P < 1.00 × 10−10, Cohen’s d = 1.42) compared with neutral videos. Additionally, the VIDS exhibited a higher response to fear than to happiness videos (accuracy = 64.29%, P = 1.70 × 10−3, Cohen’s d = 0.32). To address this issue, we adjusted for arousal ratings and BAAS responses. Following these adjustments, the VIDS showed comparable responses to happiness and neutral videos, fear and neutral videos, and fear and happiness videos (see Fig. 6b and Supplementary Table S5).

Collectively, our findings demonstrate the substantial potential of BAAS in refining the specificity of affective neural signatures, highlighting its prospective utility in advancing future models of affect prediction.

Discussion

Arousal represents a key dimension of the affective space and accompanies all affective experiences91. Despite its central role in overarching models of emotion and extensive animal models focusing on the autonomic or vigilance components of arousal, a comprehensive and accurate neurofunctional model of the conscious affective experience component is lacking. Establishing such a model may allow testing whether arousal is represented independently of valence and separably from autonomic arousal, and may facilitate accurate neural decoding of specific emotional states47,49. We combined multisensory naturalistic neuroimaging with predictive models to develop and extensively evaluate a sensitive and generalizable brain signature for affective arousal (BAAS). The signature accurately predicted affective arousal at the group and individual level in the discovery cohort and showed robust predictive performance in an independent replication sample that covered a different emotional space. Moreover, extensive evaluation of the BAAS across a series of independent neuroimaging datasets confirmed the efficacy of the BAAS in predicting affective arousal across valence domains, modalities (e.g., visual, auditory, sensory, and noxious stimulation), experimental paradigms, and samples, as well as the high specificity of the BAAS in capturing the affective arousal component. To determine the core systems of affective arousal in the human brain, we combined diverse multivariate and univariate analyses. The biological plausibility of the BAAS was further supported by features shared between the BAAS and the conjunction of Neurosynth meta-analytic maps of positive and negative affect; together with the topographic distribution of the most predictive BAAS regions, this indicates that the amygdala, parahippocampus, PAG, and medial prefrontal systems contribute to high affective arousal across valence domains.
Our work also showed that the neural representation and predictive domain of the BAAS were separable from those of a signature predictive of autonomic arousal, indicating that the conscious emotional experience and the autonomic components are represented in partly dissociable neural representations (in line with refs. 15,16). Lastly, in line with the concept of high arousal ‘overshadowing’ the determination of specific emotional states, we demonstrated that the BAAS can be employed to considerably enhance the specificity of existing neural signatures for their target emotional states, suggesting a high application potential for generating more specific neuromarkers of mental processes. Together, our findings demonstrate that affective arousal can be accurately decoded from distributed brain systems encompassing both subcortical and cortical areas, with no single region or network surpassing the predictive accuracy of the comprehensive whole-brain model. This underscores the complexity and integrative nature of the neural mechanisms underlying affective arousal39,82. We provide a comprehensive neurofunctional representation of affective arousal and demonstrate that this distributed neural representation cuts across the valence domain, is separable from autonomic arousal, and overshadows precise decoding of specific emotional states.

The current findings revealed that affective arousal depends on the coordinated engagement of distributed representations throughout the entire brain rather than on activity in isolated brain systems, consistent with recent neural decoding studies demonstrating that affective processes are represented across brain systems12,13,14,92. Specifically, affective arousal experience requires concerted engagement of brain-wide distributed representations, with comparably strong contributions from subcortical regions involved in affective, autonomic, and motor responses to highly salient stimuli in the environment (amygdala, parahippocampus, PAG, and caudate)30,74,93,94, anterior cortical midline structures associated with global arousal and implicit emotion regulation (medial prefrontal cortex, ACC)79,95,96, anterior insular systems involved in interoceptive and affective awareness97,98, sensorimotor integration regions (occipital cortex, SMA)99, and regions that have been identified as key mediators of wakefulness and alertness in animal models (brainstem)19,25. Furthermore, no individual large-scale brain network significantly outperformed the BAAS in predictive performance (see Fig. 4b). The overlap analysis revealed that the core systems (thresholded BAAS) of affective arousal are distributed across several large-scale brain networks. Notably, the DMN, ventral attention network, and subcortical network exhibited substantial proportions of positive predictive weights for the BAAS. These findings align with previous literature suggesting that the DMN (e.g., anterior medial prefrontal cortex, portions of the ventromedial prefrontal cortex) and salience network (e.g., ACC, insula, amygdala) play crucial roles in affective arousal and conscious emotional experience19,29,100.
From an evolutionary perspective, the engagement of these systems may facilitate the detection of salient information in the environment and trigger autonomic processes that generate adaptive cognitive and homeostatic responses83. Together, these findings indicate that affective arousal relies on distributed representations, supporting appraisal101 and constructionist82 theories of emotion, which propose that shared but also distinct distributed neural systems spanning cortical and subcortical regions give rise to subjective affective experiences.
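
The network-level summary of the thresholded BAAS can be illustrated with a minimal sketch. It assumes the thresholded signature is available as a voxel vector (zeros for non-significant voxels) and that each voxel carries an integer label from some network parcellation; both inputs, the network names, and the function name are hypothetical. The sketch computes the share of all positive suprathreshold weights that falls within each network. The statistical test used to judge which proportions are significant is not described in this section and is not shown.

```python
import numpy as np


def positive_weight_proportions(weights, network_labels, network_names):
    """Share of all positive suprathreshold weights that falls in each network.

    weights: 1-D array of thresholded signature weights (0 = not significant).
    network_labels: 1-D int array of the same length giving each voxel's network.
    network_names: dict mapping network index -> network name.
    """
    weights = np.asarray(weights, dtype=float)
    labels = np.asarray(network_labels)
    positive = weights > 0
    total = positive.sum()
    if total == 0:
        # No positive voxels survive thresholding: every share is zero.
        return {name: 0.0 for name in network_names.values()}
    return {
        name: float((positive & (labels == idx)).sum() / total)
        for idx, name in network_names.items()
    }


# Toy example with six hypothetical voxels assigned to three networks
weights = np.array([0.2, 0.4, -0.1, 0.5, 0.0, 0.0])
labels = np.array([0, 0, 1, 1, 2, 2])
names = {0: "DMN", 1: "VAN", 2: "Subcortical"}
shares = positive_weight_proportions(weights, labels, names)
print(shares)
```

Normalizing by the total number of positive voxels answers "where do the positive weights sit?"; dividing by each network's voxel count instead would answer "how much of each network is positively weighted?", which is an equally plausible reading of the text.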

From a broader conceptual perspective, affective neuroscience and affective space theories propose that high affective and autonomic arousal cut across the valence domain and characterize high-intensity positive and negative emotional experiences. In support of these conceptualizations, a previous imaging meta-analysis uncovered overlapping brain regions (e.g., amygdala, striatum, thalamus, medial prefrontal cortex, ACC, and SMA) implicated in both positive and negative emotions28, which may represent systems involved in general emotion processing (e.g., appraisal) as well as in affective and autonomic arousal5,9. Testing the generalization of the BAAS revealed that the signature robustly decoded high-arousal positive and negative experiences to a similar extent but did not differentiate between them. Together with a complementary meta-analytic strategy that identified regions overlapping between the BAAS and both positive and negative emotional experiences, these findings indicate that the amygdala, parahippocampal gyrus, and inferior and dorsomedial frontal regions code affective arousal irrespective of valence (for supporting evidence from conventional strategies see refs. 27,28,102,103,104). To further differentiate the contribution of hard-wired physiological arousal responses to the valence-independent affective arousal signature, we employed a validated autonomic arousal signature and demonstrated that the BAAS has considerably higher specificity for affective arousal. These findings are in line with a growing literature suggesting that the neural representations of hard-wired autonomic responses and of conscious affective experience are distinguishable (for example, refs. 15,16), and they underscore that the BAAS captures the affective component.
Notably, consistent with previous research105, we found that, at the local level, a number of subcortical (amygdala, thalamus), cerebellar, and insular-ACC regions predicted both affective and autonomic arousal, emphasizing the critical role of these systems in general arousal processes. Our work thus provides converging evidence that, while distributed neural representations constitute distinguishable substrates of different arousal facets (i.e., affective and autonomic arousal), some regions encode affective and autonomic arousal with similar neural representations.

As outlined across different domains, including subjective emotional experience, emotion recognition, and neural and autonomic signatures, increasing arousal progressively overshadows the characteristics of specific emotional states. From the perspective of affective neuroimaging, it is therefore critical to control for non-specific emotional processes such as arousal, which is inherently associated with affective experience11,47,49. We explored this conceptual and technical perspective by employing the BAAS in combination with previously evaluated, robust signatures for general negative affect (VNAS34) and disgust (VIDS13). In line with the overshadowing concept, these signatures showed limited specificity during immersive, high-arousing emotion processing (studies 1 and 17; for convergent evidence see also refs. 35,47). Although the arousal contribution considerably affects neural decoding as well as conventional affective neuroimaging experiments106, effective solutions to this issue have not yet been identified. Here, we capitalized on the BAAS to enhance the predictive specificity of these affective neural signatures by directly regressing out the experience of arousal. The enhanced specificity demonstrates the effectiveness of controlling for the influence of affective arousal on the prediction of emotional states. Furthermore, our findings indicate that adjusting for the BAAS response yields an effect comparable to adjusting for actual subjective arousal ratings. This is particularly important given that arousal ratings are not consistently collected in affective neuroimaging research (or their assessment can strongly interfere with the mental process under investigation). Our work thus provides a simple yet potent strategy that employs the BAAS to mitigate the impact of affective arousal and thereby significantly improves the predictive specificity of other affective neural signatures.
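
A minimal sketch of the arousal adjustment is given below. It assumes that signature responses are computed as pattern expression (the dot product of a signature's voxel weights with an activation map) and that "regressing out" means taking ordinary least-squares residuals of the target signature's response on the BAAS response. The function names, the synthetic data, and this particular residualization are illustrative assumptions, not the exact pipeline used in the study.

```python
import numpy as np


def pattern_expression(weights, activation_maps):
    """Signature response per map: dot product of voxel weights with each row."""
    return np.asarray(activation_maps, dtype=float) @ np.asarray(weights, dtype=float)


def regress_out(target_response, covariate_response):
    """Residuals of target_response after OLS on covariate_response plus an intercept."""
    y = np.asarray(target_response, dtype=float)
    x = np.asarray(covariate_response, dtype=float)
    design = np.column_stack([np.ones_like(x), x])
    beta, *_ = np.linalg.lstsq(design, y, rcond=None)
    return y - design @ beta


# Synthetic illustration (hypothetical data): an arousal component loads on the
# activation maps and therefore inflates the responses of both signatures.
rng = np.random.default_rng(0)
n_maps, n_voxels = 200, 500
arousal = rng.normal(size=n_maps)
baas_weights = rng.normal(size=n_voxels)
target_weights = rng.normal(size=n_voxels)
maps = rng.normal(size=(n_maps, n_voxels)) + 0.2 * np.outer(
    arousal, baas_weights + target_weights
)

baas_resp = pattern_expression(baas_weights, maps)
target_resp = pattern_expression(target_weights, maps)
adjusted = regress_out(target_resp, baas_resp)

r_before = np.corrcoef(target_resp, baas_resp)[0, 1]
r_after = np.corrcoef(adjusted, baas_resp)[0, 1]
print(f"r(target, BAAS) before: {r_before:.2f}, after: {r_after:.2f}")
```

In this synthetic case the residualized target response is, by construction, uncorrelated with the BAAS response. Whether the adjustment improves specificity for a target emotion in real data is the empirical question addressed in the study. The same `regress_out` helper could take subjective arousal ratings as the covariate, mirroring the comparison with rating-based adjustment described above.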

While the current study shows that the BAAS generalizes to multimodal affective arousal, this does not imply that each feature (i.e., voxel) contributes similarly to the BAAS predictions across stimulus types. Previous studies have shown that there are common and stimulus-type-specific brain representations of affective experience1,34, and future work is necessary to characterize the stimulus-type-specific neural representations of affective arousal. Additionally, some important brain systems of affective arousal are anatomically and functionally connected (e.g., amygdala and hippocampus), and previous studies have demonstrated the engagement of brain networks in fluctuations of wakefulness arousal (for example, ref. 107). This underscores a potential role of brain pathways and network-level communication in affective arousal74,108, and future research may deepen our understanding of its neurobiological basis by mapping the underlying brain pathways. Moreover, the current study primarily focused on the functional brain architecture underlying general affective arousal. However, specific neural representations may correlate strongly with self-reported arousal for either positive or negative events, and future studies could investigate the distinct neural patterns underlying the intensity of positive and negative affective experiences. Lastly, given the characteristics of affective arousal, the BAAS was mainly tested in processes related to sympathetic arousal; distinguishing the contributions of parasympathetic and sympathetic arousal to these neural patterns may provide valuable insights into the regulatory mechanisms of affective states.

In summary, we established a comprehensive distributed, generalizable, and specific predictive neural signature of affective arousal. The signature was validated and generalized across modalities, valence, paradigms, participants, and MRI systems. We showed that the neural representations of affective arousal are encoded in multiple distributed (large-scale) brain systems rather than isolated brain regions. The affective arousal signature cut across the valence domain and was distinguishable from hard-wired autonomic arousal, indicating a valence-unspecific, yet affective arousal-specific neural representation. Based on the characterization of the BAAS, we further developed a novel approach to enhancing the specificity of affective neural signatures. The current work extends our understanding of the neurobiological underpinnings of affective arousal experience and provides objective neurobiological measures of affective arousal. These measures can complement self-reported affective arousal and serve as effective supplementary tools to enhance the prediction specificity of affective models.

Methods

Overview

This research used data from 26 studies. Study 1 served as the primary study and discovery cohort and was used for the main analyses and for developing the affective arousal signature (i.e., BAAS). Study 2 involved new emotional video stimuli to validate the affective arousal signature in an independent cohort. Studies 3–18 comprised multiple stimulus types and modalities and were used to evaluate generalizability. Studies 19–24, which involved cognitive processes, were used to test the effect of potential confounding factors on the model. The autonomic arousal signature was developed in study 25 and validated in study 24. Finally, the affective arousal signature and the autonomic arousal signature were compared using data from study 26. Additional details for each study are provided in Supplementary Table S1, and an overview of the main analysis is depicted in Fig. 1.

Participants in study 1

Sixty-three healthy, right-handed participants were recruited from the University of Electronic Science and Technology of China (UESTC) to undergo fMRI during a subjective arousal rating paradigm using movie clips. None of the participants had a current or past physical, neurological, or psychiatric disorder, and none had taken any medication in the month before the experiment. Three participants were excluded due to a lack of high arousal experience (fewer than 2 high-arousal clips, with high arousal defined as an arousal rating above 6), resulting in a final sample of n = 60 participants (23 females; mean ± SD age = 19.55 ± 1.87 years).
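
The exclusion rule can be written as a short check. This is a minimal sketch; the participant IDs and ratings are hypothetical, and the rule is read literally from the text, so a rating of exactly 6 does not count as high arousal.

```python
HIGH_AROUSAL_CUTOFF = 6      # "high arousal" = rating above 6 on the 9-point scale
MIN_HIGH_AROUSAL_CLIPS = 2   # participants with fewer such clips are excluded


def keep_participant(ratings):
    """True if at least MIN_HIGH_AROUSAL_CLIPS clips were rated above the cutoff."""
    n_high = sum(1 for r in ratings if r > HIGH_AROUSAL_CUTOFF)
    return n_high >= MIN_HIGH_AROUSAL_CLIPS


# Hypothetical per-participant arousal ratings (one value per clip)
ratings_by_participant = {
    "sub-01": [2, 7, 8, 3, 9],
    "sub-02": [1, 6, 7, 2, 5],
}
included = [pid for pid, r in ratings_by_participant.items() if keep_participant(r)]
print(included)  # ['sub-01']
```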

The experimental procedure was approved by the local ethics committee at the University of Electronic Science and Technology of China (No. 06142292724710) and was conducted in accordance with the latest version of the Declaration of Helsinki. Before the experiment, all participants were informed that they would be shown fearful and disgusting movie clips and provided written informed consent. After the experiment, participants received monetary compensation for their participation.

Movie stimuli, arousal rating paradigm, and procedure in study 1

The movie stimuli were selected by two research assistants from a large pool of clips downloaded from an online sharing platform. A total of 80 movie clips, each lasting ~25 s, were categorized as positive, negative, or neutral. In a pre-study, independent participants (n = 26; 10 females, mean ± SD age = 21.62 ± 1.92 years) were asked to attentively watch the clips, rate their level of arousal, valence, and emotional consistency on a 9-point Likert scale (1 = low arousal, negative, or low consistency; 9 = high arousal, positive, or high consistency), and select one emotional word (such as ‘fearful’, ‘disgusting’, ‘angry’, ‘sad’, ‘surprising’, ‘happy’, ‘neutral’, or ‘ambiguous’) that accurately described their emotional experience following each clip. The stimulus rating experiment was delivered using PsychoPy109 version 1.85 under Windows. Low-arousing neutral videos and high-arousing positive and negative videos with high emotional consistency (rating > 7) were selected as fMRI stimuli, with the low-arousing neutral clips serving as the reference condition.

The current study employed a within-subject design. In the arousal rating fMRI paradigm (see Fig. 1a), participants were presented with 40 movie clips over 3 runs. Each run included 14 or 12 clips depicting humans, animals, and scenes. The order of runs and of trials within a run was the same for all participants, and the order of conditions was counterbalanced before the experiment. Each video lasted approximately 25 s and was followed by a white fixation cross on a black background for 1–1.2 s. During the subsequent 5-s period, participants reported their level of arousal during the movie on a 9-point Likert scale (1 = very low arousal, 9 = very high arousal). This was followed by a 10–12-s wash-out period. In total, participants watched 20 low-arousal neutral movie clips, 10 high-arousal positive clips, and 10 high-arousal negative clips (details provided in Supplementary Table S2). Stimulus presentation and behavioral data acquisition were controlled using MATLAB 2014a (Mathworks, Natick, MA) and Psychtoolbox (http://psychtoolbox.org/).
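
The trial structure described above can be laid out as an event schedule, which also bounds the length of each run. This is a minimal sketch under stated assumptions: the ~25-s video is treated as exactly 25 s, and the jittered fixation and wash-out durations are drawn uniformly from their reported ranges, since the text gives only the ranges. The function names are hypothetical and are not taken from the study's MATLAB/Psychtoolbox code.

```python
import random

# Phase durations in seconds from the paradigm description; the ~25-s video
# is treated as exactly 25 s here (assumption).
VIDEO_S = 25.0
FIXATION_S = (1.0, 1.2)
RATING_S = 5.0
WASHOUT_S = (10.0, 12.0)


def build_run(n_clips, seed=None):
    """Return (phase, onset_s, duration_s) events for one run.

    Jittered phases are drawn uniformly from their ranges (assumption).
    """
    rng = random.Random(seed)
    events, t = [], 0.0
    for _ in range(n_clips):
        for phase, dur in (
            ("video", VIDEO_S),
            ("fixation", rng.uniform(*FIXATION_S)),
            ("rating", RATING_S),
            ("washout", rng.uniform(*WASHOUT_S)),
        ):
            events.append((phase, t, dur))
            t += dur
    return events


def run_length_bounds(n_clips):
    """Shortest and longest possible run duration in seconds."""
    lo = n_clips * (VIDEO_S + FIXATION_S[0] + RATING_S + WASHOUT_S[0])
    hi = n_clips * (VIDEO_S + FIXATION_S[1] + RATING_S + WASHOUT_S[1])
    return lo, hi


schedule = build_run(14, seed=1)
print(run_length_bounds(14))
```

Each trial spans 41 to 43.2 s, so a 14-clip run lasts roughly 574 to 605 s. At the reported TR of 2 s, that corresponds to about 287 to 302 volumes, not counting any additional baseline periods at the start or end of a run.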

Before the fMRI experiment, all participants were trained on a laptop to ensure that they understood the definition of ‘arousal’. After scanning, they completed a post-test in which they were asked to select the correct screenshots of the movie clips presented during the fMRI experiment.

fMRI data acquisition in study 1

fMRI data were collected using standard sequences on a 3.0-Tesla GE Discovery MR750 system (General Electric Medical System, Milwaukee, WI, USA). Structural images were acquired using a T1-weighted MPRAGE sequence (TR = 6 ms; TE = 2 ms; flip angle = 9°; field of view = 256 × 256 mm²; matrix size = 256 × 256; voxel size = 1 × 1 × 1 mm). Functional images were acquired using a T2*-weighted echo-planar imaging sequence (TR = 2000 ms; TE = 30 ms; slices = 39; flip angle = 90°; field of view = 240 × 240 mm²; matrix size = 64 × 64; voxel size = 3.75 × 3.75 × 4 mm).
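
As a quick consistency check on the reported parameters, the in-plane voxel sizes follow from dividing the field of view by the matrix size. The through-plane coverage computed below additionally assumes contiguous slices with no gap, which the text does not state.

```python
def in_plane_voxel_mm(fov_mm, matrix):
    """In-plane voxel size: field of view divided by acquisition matrix (one axis)."""
    return fov_mm / matrix


t1_voxel = in_plane_voxel_mm(256, 256)   # structural scan
epi_voxel = in_plane_voxel_mm(240, 64)   # functional scan
# Through-plane coverage, assuming contiguous 4-mm slices with no gap
# (a slice gap is not reported in the text).
epi_coverage_mm = 39 * 4
print(t1_voxel, epi_voxel, epi_coverage_mm)  # 1.0 3.75 156
```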

Participants in study 2

A total of 38 healthy, right-handed UESTC students were initially recruited. After excluding participants with low arousal ratings for the positive or negative videos in the fMRI experiment, 36 participants (17 females; mean ± SD age = 21.03 ± 2.22 years) were included in the final analysis. All participants were free of current and past physical, neurological, or psychiatric disorders and had no contraindications for MRI. All participants provided written informed consent before participation, and the experimental procedures were approved by the local ethics committee at UESTC (No. 06142292724710) in accordance with the latest version of the Declaration of Helsinki. All participants received monetary compensation for their participation.

Movie stimuli, arousal rating paradigm in study 2

The stimuli used in study 2 were entirely different from those used in study 1. All movie clips (each ~60 s) were selected by two researchers to ensure a diverse and non-overlapping set of stimuli. An independent sample of 52 participants (32 females; mean ± SD age = 21.00 ± 2.51 years) reported the valence, arousal, emotional consistency, and emotional experience (‘fearful’, ‘disgusting’, ‘sad’, ‘ambiguous negative’, ‘happy’, ‘exciting’, ‘romantic’, ‘ambiguous positive’, or ‘neutral’) while watching each video. When participants experienced multiple positive or multiple negative emotions (e.g., a video evoking both happiness and excitement), they chose the label ‘ambiguous positive’ or ‘ambiguous negative’. Based on the results of this behavioral experiment, videos were selected as fMRI stimuli if (1) they received high emotional consistency ratings (> 7) and (2) their core emotional experience was accurately described (i.e., no positive videos were labeled with negative emotions, and no negative videos were labeled with positive emotions).
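
The two inclusion criteria can be expressed as a per-clip filter. This is a minimal sketch under assumptions not stated in the text: the consistency criterion is applied to each clip's mean rating across raters, any single cross-valence label disqualifies a clip, and neutral clips are checked for consistency only. The function name and example inputs are hypothetical.

```python
POSITIVE_LABELS = {"happy", "exciting", "romantic", "ambiguous positive"}
NEGATIVE_LABELS = {"fearful", "disgusting", "sad", "ambiguous negative"}


def select_clip(category, mean_consistency, labels):
    """Apply the two inclusion criteria to one clip.

    category: intended clip category ("positive", "negative", or "neutral").
    mean_consistency: mean emotional-consistency rating on the 1-9 scale
        (using the mean across raters is an assumption).
    labels: emotion words chosen by raters for this clip.
    """
    # Criterion 1: high emotional consistency (rating > 7)
    if mean_consistency <= 7:
        return False
    # Criterion 2: no cross-valence labels (any single one disqualifies here)
    labels = set(labels)
    if category == "positive" and labels & NEGATIVE_LABELS:
        return False
    if category == "negative" and labels & POSITIVE_LABELS:
        return False
    return True


print(select_clip("positive", 7.5, ["happy", "exciting"]))  # True
print(select_clip("positive", 7.5, ["happy", "sad"]))       # False
```

A more lenient variant could require that only the modal label match the intended valence; the text does not specify how individual raters' labels were aggregated.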

In the arousal rating fMRI paradigm, there were a total of 28 one-minute videos. These videos represented two affective states (9 positive clips and 9 negative clips) as well as a neutral state (10 neutral clips). More details can be found in Supplementary Table S2. During fMRI, all stimuli were presented in three runs, with either three or four clips from each category in each run. The fMRI paradigm used in the validation cohort was the same as the one used in the discovery cohort, except for differences in the presentation time settings for the stimuli.

fMRI data acquisition in study 2