Abstract

This study offers a new method for measuring polarization by using advanced computer vision techniques that involve object detection and measurements of physical distances between pedestrians. Motivated by escalating political polarization around the world, and specifically by the ideological divide between secularism and political Islam in Turkey, we analyze more than 1,400 publicly available YouTube videos recorded on the streets of Turkey. From these videos, we extract and use approximately 170,000 frames that show pedestrians. The analysis detects and categorizes pedestrians based on their gender and level of religiosity by using the YOLOv5 algorithm and develops and refines two innovative distance estimation techniques for calculating the relative distances between pairs of pedestrians. Our unique technical approach allows us to convert the 2D distances in the street videos into 3D relative distances between pedestrians of different genders and levels of religiosity. These distances are then used as a proxy for measuring the extent of polarization. The study concludes that social factors significantly influence these distances, with individuals from similar backgrounds (i.e., religious people, religious females, and non-religious females) tending to walk closer to their in-group. The greatest distances are measured between non-religious males and religious females, as well as between religious females and non-religious males, reflecting traditional gender boundaries in predominantly Muslim communities and highlighting how religious and cultural norms shape social interactions. The image dataset we have assembled stands as the most extensive collection of thematic street imagery found in computational social science research and represents the largest dataset ever gathered for analyzing political polarization in Turkey.

Introduction

“Science is the most reliable guide in life.”

– Mustafa Kemal Atatürk.

“We will raise a religious generation.”

– Recep Tayyip Erdoğan.

In recent years, the surge of political polarization between secularism and political Islam within Turkish society has become a subject of significant concern and scholarly inquiry. In the streets and alleys of a Turkish city like Ankara or Istanbul, a street scene unfolds: a woman dressed in soigné attire and with a relaxed posture crosses paths with another woman, a believer, draped in a colorful hijab (as is common in Turkish culture) and wrapped in heavy, silky clothes that cover almost all of her head and body. This juxtaposition vividly encapsulates the status quo of politically polarized Turkish streets. Measuring the extent of this phenomenon is crucial for developing effective strategies to mitigate its adverse effects and for understanding the political repercussions of polarization. This project takes a multidisciplinary approach, integrating advanced computer vision techniques with statistical estimation methodologies to understand how identities are potentially causally linked to political polarization. Although the growing polarization between religious and secular groups in Turkey serves as a vivid backdrop, the foremost contribution of this paper is methodological. Specifically, we propose a novel computer-vision approach that can be applied to a variety of socio-political contexts, with Turkey functioning here as an illustrative and empirically rich case study. By emphasizing our new technique rather than any single country’s political trajectory, we highlight how this framework can be generalized and adapted to different forms of polarization worldwide. Understanding political polarization, especially as it visibly manifests on the streets, is crucial in today’s global landscape. In the United States, political polarization has led to significant societal divisions, while in India, religious tensions are influencing political discourse. In Brazil, political clashes between supporters of different parties have become more visible and heated. In France, debates over secularism and the integration of Muslim communities have led to social unrest. Similarly, in the United Kingdom, Brexit has deepened visible ideological rifts within society. By examining Turkey’s secular and Islamic divide, this study provides valuable insights into how religious and cultural norms shape social interactions. These findings can inform global strategies to mitigate polarization, offering policymakers and community leaders data-driven approaches to foster social cohesion and bridge ideological divides in increasingly polarized societies.

Previous studies have shed light on how visual cues in political discourse contribute to polarization. Schill1 highlighted how visual symbols create political unity and highlight differences with opponents. Pauwels2 pointed out that the presentation of visual data can shape partisan views. Krogstad3 traced how visual persuasion has historically affected polarization, while Gerodimos4 emphasized the need for interdisciplinary research on visual stimuli’s emotional and cognitive impacts. Campante5 suggested that less media variety might lead to more uniform but polarized opinions. Simon6 saw politicization, often through visuals, as a precursor to polarization, and Barber7 associated visual branding with shifts to party-line politics. Together, these studies demonstrate the powerful role of visuals in political polarization, despite not using large-N data.

The main goal of this article is to understand the extent of political polarization in an ideologically diverse country such as Turkey by focusing on an important aspect of religious clothing: the headscarf. The headscarf, varieties of which are also known as the hijab in some other cultures, has been a crucial marker of cultural identity and, in some cases, a prominent political symbol in religious women’s lives in Turkey, as it is part of the embodiment of their pious beliefs in Islamic identity8. Political Islam plays a crucial part in Turkey’s politics and appears to be one of the drivers of polarization. “There is significant tension around the issue of secularism or laicism in the country”9. Also, as evidenced by the parliamentary and presidential election results since the 2000s, Turkey’s politics has been characterized by political fragmentation, and the main points of contestation are religiousness (the two main groups are political Islamists and laicists) and ethnic identity (again, the two main groups are Turks and Kurds). Recep Tayyip Erdoğan, the current president of Turkey, reduced political fragmentation among political Islamists by centralizing power and co-opting key factions into the ruling AKP. He strategically used alliances, such as the one with the Gülen movement until their fallout, and later with the Nationalist Action Party (MHP), to consolidate his control, thereby minimizing rival Islamist movements and creating a more unified political front. His political restructuring resulted in a less fragmented political Islamist landscape compared to secular groups and their political formations10,11. This political landscape, together with past studies spanning multiple decades and aspects of political polarization, points to the necessity of a large-N study to analyze polarization in Turkish politics.

While these studies underscore the impact of visual cues, the physical and spatial dynamics of social interactions also play a crucial role in political polarization. Proxemics, as originally conceptualized by Edward T. Hall12, provides a framework for understanding how physical space and social environments reflect cultural dynamics, particularly in culturally diverse societies like Turkey. In this context, religious and non-religious groups occupy distinct social environments—such as different districts and venues—and maintain greater physical distances in public spaces13,14. This segregation deepens political polarization by reinforcing group identities and reducing cross-group interactions. Recent literature, building on these foundations, shows that such spatial behaviors foster group polarization, where homogeneous environments intensify preexisting beliefs, leading to more extreme views and further entrenching ideological divides15,16,17.

Recent large-N studies leveraging visual cues have advanced our understanding of political and social polarization. Bucy18 uses computer vision to analyze visual data from protests and candidate self-presentation, revealing how visual elements reinforce partisan divides. Dietrich19 employs motion detection in C-SPAN videos, finding that decreased cross-party interactions predict a 14.61 percentage point increase in party-line voting. The closest related literature by Dietrich and Sands20 extends this analysis to racial dynamics, showing pedestrians in New York City maintain a greater distance from Black individuals, averaging 3.1 to 4.4 inches more, translating to a 3.19% to 3.93% deviation of sidewalk width. These studies highlight the critical role of visual data in capturing subtle social and political interactions, providing robust quantitative evidence of how visual cues influence behavior and polarization.

Lastly, there is strong evidence that survey analyses do not always lead to a satisfactory understanding of the political division in the context of Turkey21. The question then becomes: How do we capture, clean, and deeply and meticulously analyze the extent of polarization? We believe that analyzing visual data from the streets could be the answer. Pioneering technical approaches used by Dietrich and Sands20 and by Wang and his colleagues22 in their detection of pedestrians on the campuses of Fudan University and the University of Pennsylvania have motivated us methodologically.

Drawing from the theoretical background and the current state of the literature, we aim to examine the following two groups of hypotheses in this paper:

H1: Visible religious choices will influence social interactions between people of different levels of religiosity.

H1a: Religious people will tend to walk closer to in-group members (versus the out-group).

H1b: Non-religious people will tend to walk closer to in-group members; however, the effect will be less differentiated versus the out-group because of the effects of political fragmentation.

H2: Visible gender identities will influence social interactions between people of different gender identities.

H2a: People of the same perceived gender will tend to walk closer to in-group members (versus the out-group).

H2b: The influence of gender on social interactions will be more pronounced for females (compared to males) because religiosity is more easily perceived due to the headscarf.

An important theoretical rationale behind H1b is that religious individuals often rely on distinctive outward signals—such as specific attire, beards, or headscarves—that immediately convey group membership. Because these markers are relatively standardized within each religious group, strangers can easily recognize a fellow in-group member. By contrast, non-religious individuals do not share a single visible ‘marker’ of non-adherence; they are more heterogeneous in their appearance. This heterogeneity makes it less likely that secular or non-religious pedestrians will spontaneously identify fellow ‘non-religious’ individuals as members of the same in-group. Consequently, we expect religious individuals on the street to group together at closer distances than non-religious individuals, whose group identity is not readily signaled to observers.

Our second main goal in this paper is to curate a comprehensive visual dataset comprising diverse elements that computational social science researchers can use to measure social interactions among human beings and to develop innovative methods for measuring political polarization. The compiled dataset encompasses over 1,400 video clips showcasing human-centric street views from various cities of Turkey, thousands of frames extracted from these clips, and official voting and poll results, collectively representing the socio-political spectrum. The visual dataset we have compiled is the largest thematic street dataset presented in the computational social science literature; it is also the largest dataset collected to understand political polarization in Turkey.

To fulfill this purpose, datasets were meticulously selected, and machine learning models were trained. “Turkey Videos Reservoir” (TVR), a dataset that contains links to human-centric Turkey street YouTube videos, was scraped. By cutting those videos into frames, hand-labeling, and relevant cleaning, a training set (called HN-7450) and a test set (called HN-178413) were generated. These two datasets contain images that depict Turkish pedestrians. YOLOv5, an object detection model (ODM), was carefully selected among potential alternatives. Based on YOLOv5, HN-7450 is used to train Hijab-Net, a series of algorithms that can classify these pedestrians on images into various gender and political spectrums. Based on what was classified, relative distances between pedestrians among different genders and political spectrums were estimated.

In summary, our paper offers improvements on multiple different aspects of the empirical computer vision literature. The following methodological improvements have been explained in different sections of this paper: (1) Finding the Best Object Detection Model to Detect Humans on Streets: we discuss why YOLOv5, instead of other alternatives, was picked to detect pedestrians on the streets; (2) Human Labeling: a process to generate HN-7450 (which resulted in the collection of a training dataset of over 27,000 human objects that was later used to create our classification model called Hijab-Net) so that each pedestrian on each training image was labeled with designated genders and political categories; (3) Custom Object Detection Model based on YOLOv5: the training, analysis, and prediction process using the Hijab-Net model; (4) 3D Distance Estimation: estimation of relative distances based on the output of the Hijab-Net and conversion of 2D distances to 3D; and (5) Empirical Approach and the Presentation of Results: the use of regression analysis to demonstrate the extent of polarization. Figure 1 below shows the detailed data pipeline used in this study.

Discussion on object detection

A review of different object detection models

Since the purpose of this study is to compare the distances between citizens with different levels of religiosity on streets using frames extracted from videos, the first step is to choose a suitable object detection model to accurately detect pedestrians on the streets. This has been achieved by first looking at the existing models and evaluating their performance using various image datasets.

In the realm of machine learning and computer vision, object detection techniques have evolved significantly. Initially, hand-crafted features like Histogram of Oriented Gradients (HOG) were prominent. With advancements in methodology, the empirical literature at large has transitioned to neural networks and witnessed a shift from two-stage to one-stage detection models.

Our methods section delves into the nature of the models evaluated for our project.

The models include:

- HOG (Histogram of Oriented Gradients)23: Analyzing gradient orientations to detect objects, emphasizing shape and structure.
- R-CNN Family24,25,26: A paradigm shift in object detection, addressing localization accuracy and efficient training.
- YOLO Family27,28: Known for real-time detection, performing detection in a single network pass21,7. YOLOv3 introduced an expansive feature extractor and improved detection capabilities.
- RetinaNet29: A single-stage model with Feature Pyramid Networks and Focal Loss for improved detection.
- EfficientDet30: Utilizing compound scaling, EfficientNet backbone, BiFPN, and optimized loss functions for enhanced performance.

Object detection models performance evaluation

In this step, three different datasets (each containing 100 randomly selected images) were chosen to evaluate the accuracy of different object detection models. First, two notable datasets used in past computer vision (CV) studies come to the forefront: Cityscapes31 and Fudan-UPenn Pedestrian22. The Cityscapes dataset, curated from 50 European cities, presents a rich tapestry of diverse urban scenes at a high resolution of 2048 × 1024 pixels. Each image is intricately labeled down to the pixel level, making Cityscapes an excellent choice for projects requiring comprehensive city scene analysis31. On the other hand, the Fudan-UPenn Pedestrian dataset, a collaboration between Fudan University and the University of Pennsylvania, exclusively focuses on pedestrian identification. The Cityscapes and Fudan-UPenn Pedestrian datasets will be referred to as the “Berlin” and the “Fudan-UPenn” datasets, respectively. Second, 100 frames taken from 29 videos of Istiklal Street in Istanbul’s Beyoglu district were selected as the primary dataset (referred to as the “Istiklal dataset”), as this is one of the most bustling streets in Turkey. The first two datasets were used to test the models in general, and the Istiklal dataset was used to ensure that the chosen object detection model is the most suitable one for this specific project.

To identify the best-performing object detection model, YOLOv3, YOLOv4, YOLOv5, YOLOv8, RetinaNet, EfficientDet, Faster R-CNN, and HOG + SVM were run on the Berlin, Fudan-UPenn, and Istiklal datasets. The results from hand labeling and from machine detection with each model were then compared on several metrics: Euclidean Distance, standard deviation, F1 score, and their respective averages. The results are presented in Tables 1, 2, 3, 4, 5, and 6. The mathematical details and meaning of these metrics are discussed in “Appendix C: Performance Measurements for Benchmarking ODMs”.

Based on the results presented in the tables, it is evident that, for the Fudan-UPenn dataset, YOLOv3, YOLOv4, and EfficientDet exhibited relatively poor performance. Conversely, YOLOv5 and YOLOv8 demonstrated an overall superior performance, showcasing the lowest standard deviation and Euclidean Distance, respectively. In the case of the Berlin dataset, the HOG + SVM model displayed significantly higher Euclidean Distance and standard deviation compared to the other models, while YOLOv5 and Faster R-CNN performed exceptionally well. For the Istiklal dataset, Faster R-CNN emerged as the top-performing model, showcasing the lowest Euclidean Distance and standard deviation. The F1 score, the harmonic mean of precision and recall, also aligned with the other evaluation metrics. The cells highlighted in bold, denoting the lowest Euclidean Distance, lowest standard deviation, and highest F1 score, further emphasize these findings.
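As a reminder of how the F1 score combines precision and recall, the following minimal sketch computes it from detection counts. The function name and the example counts are illustrative assumptions and are not taken from the paper's tables; how detections are matched to hand labels is described in Appendix C and is not reproduced here.

```python
def f1_from_counts(true_pos: int, false_pos: int, false_neg: int) -> float:
    """Harmonic mean of precision and recall, computed from detection counts."""
    if true_pos == 0:
        # No correct detections: precision and recall are both zero.
        return 0.0
    precision = true_pos / (true_pos + false_pos)
    recall = true_pos / (true_pos + false_neg)
    return 2 * precision * recall / (precision + recall)


# Hypothetical counts for one image set (not from the paper).
score = f1_from_counts(true_pos=80, false_pos=20, false_neg=20)
```

A model that misses many pedestrians (low recall) or reports many spurious boxes (low precision) is penalized in either case, which is why F1 is a useful single-number companion to the distance-based metrics.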

The comprehensive results reveal that there is no single best algorithm for all datasets. Thus, to arrive at a well-rounded decision for further implementation in this study, a normalization process was undertaken. Upon normalization, both YOLOv5 and YOLOv8 exhibited satisfactory results, showcasing relatively small average standard deviation and Euclidean Distance. Because YOLOv5 outperformed YOLOv8 in all aspects and has been readily available for over three years, whereas YOLOv8 is a more recent release with limited testing and debugging, YOLOv5 appears to be the most appropriate model for further implementation.
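The text does not specify which normalization was applied. One common choice, shown here purely as an assumption, is min-max scaling of each metric within a dataset followed by averaging across datasets, so that datasets with larger raw values do not dominate the comparison. The model names and numbers below are hypothetical.

```python
from statistics import mean


def min_max(values):
    """Rescale a list of numbers to [0, 1]; a constant list maps to zeros."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]


# Hypothetical Euclidean distances (lower is better), one list per dataset,
# in the same model order. These are NOT the values from Tables 1-6.
models = ["model_a", "model_b", "model_c"]
distances = {
    "dataset_1": [2.0, 4.0, 6.0],
    "dataset_2": [10.0, 30.0, 20.0],
}

normalized = {name: min_max(vals) for name, vals in distances.items()}
average = {
    model: mean(normalized[name][i] for name in normalized)
    for i, model in enumerate(models)
}
best = min(average, key=average.get)  # lowest average normalized distance
```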

Additionally, the runtime is a crucial aspect to consider in the evaluation, especially given the need to process over 170,000 frames for the actual experiment. Although the specific runtime varies based on hardware conditions, Faster R-CNN requires a longer runtime due to its two-stage nature. Therefore, choosing YOLOv5 aligns well with the consideration of runtime efficiency.

Data collection and labeling

As part of the study, we built a custom object detection model that can detect genders and levels of religiosity based on the architecture of the previously chosen object detection model, YOLOv5. This section describes how we produced a gallery of images from publicly available YouTube videos that capture street pedestrians and the urban environment of major cities in Turkey. After pedestrians in the frames were carefully hand-labeled by gender and level of religiousness, the resulting training dataset was used to train the custom object detection model. Overall, based on TVR, an unlabeled raw image collection named the “Unlabeled HN-9000 dataset” was generated. By carefully applying the “Degree of Religiousness Criteria” (DRC), HN-7450 was manually labeled and pruned from Unlabeled HN-9000. All images utilized in this research are publicly available, eliminating the need for consent regarding their collection, storage, and subsequent empirical analysis.

Collection of unlabeled HN-9000

The relevant video content for the study was retrieved through a systematic search approach. Specifically, using both English and Turkish, all matching responses to the following search queries were extracted from YouTube search results: “[Province Name] street video”. Next, the PyTube package in Python was used to access the YouTube videos and to target specific keywords in the video descriptions. In the following step, keywords extracted from the metadata, such as “street video”, district names, and dates, were used to filter relevant street video content. For the compilation of a general-purpose video dataset, we also iterated through the TVR, filtering again by the keywords of interest, such as city names, street names, and video contents. In the final stage, each video was matched with a city and district pair. Based on the information in the TVR, training, validation, and test sets were created in which each pedestrian is labeled with a bounding box whose color indicates the category. Nine cities in Turkey were selected to create a diverse dataset that aims to distinguish people based on different levels of religiousness. The chosen cities were Ankara, Antalya, Bursa, Gaziantep, Istanbul, Izmir, Konya, Samsun, and Van. These cities represent the population centers of seven different geographic regions of Turkey. For each of these cities, 10 videos were randomly selected from the TVR to extract the frames used in the training set. Using the OpenCV (cv2) Python package, we automated the capture of screenshots at a rate of 10 frames per second. From these, 1,000 video frames were randomly selected for each city, yielding a dataset of 9,000 video frames evenly distributed across the nine cities. This dataset is denoted as Unlabeled HN-9000. Finally, a total of 27,582 pedestrians were extracted from the randomly sampled 9,000 frames.
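The frame-selection logic described above (sampling at a fixed rate, then drawing a random subset) can be sketched without any video library. The function names, the 30 fps native rate, and the fixed seed are assumptions made for illustration; the actual decoding was done with OpenCV and is not reproduced here.

```python
import random


def frame_indices_at_rate(total_frames: int, native_fps: float, target_fps: float) -> list:
    """Indices of frames to keep so that about target_fps frames are kept per second."""
    if native_fps <= 0 or target_fps <= 0:
        raise ValueError("frame rates must be positive")
    # Never step by less than one frame, even if target_fps exceeds native_fps.
    step = max(native_fps / target_fps, 1.0)
    indices = []
    position = 0.0
    while int(position) < total_frames:
        indices.append(int(position))
        position += step
    return indices


def sample_frames(candidates: list, k: int, seed: int = 0) -> list:
    """Draw up to k candidate frames at random, without replacement."""
    rng = random.Random(seed)  # fixed seed so the draw is reproducible
    return sorted(rng.sample(candidates, min(k, len(candidates))))


# Hypothetical 10-second clip recorded at 30 fps, sampled at 10 fps.
kept = frame_indices_at_rate(total_frames=300, native_fps=30.0, target_fps=10.0)
chosen = sample_frames(kept, k=20)
```

With a real video, each index in `chosen` would be decoded and written to disk; pooling the candidates from all ten videos of a city before sampling would give the 1,000 frames per city described in the text.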

The contents of the videos from TVR span the street views of 111 distinct districts and 81 unique provinces. The names and explanations of specific variables are included in “Appendix B: Variables in TVR”. In addition, Unlabeled HN-9000 is a collection of 9,000 raw frames. For further labeling, those frames were uploaded to Roboflow, a Software as a Service (SaaS) site that allows programmers to label data for and train their neural-network-based object detection models.

Manual labeling process of HN-7450: forming and applying the DRC

The empirical goal is to train a neural-network based model to identify the “level of religiousness” of human objects in Unlabeled HN-9000. Understanding the level of religiousness is crucial to understanding the politics of Turkey. In the following sections, distances will be estimated between human objects. The distances will then be used to quantify the polarization.

Prior to model training, manual labeling is necessary so that the model can reach adequate accuracy. All frames are labeled according to the “Degree of Religiousness Criteria” (DRC). The DRC proposed herein is a framework for assessing individuals’ religious affiliation based on observable attributes, primarily attire, with separate criteria for each gender. For women, the criteria stratify religiousness into four distinct categories. Non-religious females are identified by uncovered heads and clothing that accentuates the body, with exposed arms or shoulders or otherwise revealing attire. Neutral females have uncovered heads, while their clothing conceals the body fully or mostly. In the religious female category, women’s heads are veiled, yet their clothing remains body-revealing, although full body coverage would be expected. The very religious female category comprises women with covered heads and non-revealing clothing, characterized by full body concealment. The criteria for men follow a similar classification: non-religious males wear body-revealing attire, neutral males wear attire that fully or mostly covers the body, religious males wear headwear that indicates religious affiliation, and very religious males wear Islamic-style clothing together with religious headwear.
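To make the structure of the DRC explicit, the sketch below encodes it as a small decision rule. This is a simplification for illustration only: the labeling in the study was done by human annotators, and the boolean attributes (`head_covered`, `body_covered`, `islamic_clothing`) and the function name are assumptions that reduce judgment calls to yes/no inputs.

```python
def drc_category(gender, head_covered, body_covered, islamic_clothing=False):
    """Map simplified visual attributes to one of the eight DRC labels.

    head_covered: veil for women, religious headwear for men.
    body_covered: clothing fully or mostly conceals the body.
    islamic_clothing: only consulted for men (Islamic-style clothing).
    """
    if gender == "female":
        if head_covered:
            level = "very-religious" if body_covered else "religious"
        else:
            level = "neutral" if body_covered else "non-religious"
    elif gender == "male":
        if head_covered and islamic_clothing:
            level = "very-religious"
        elif head_covered:
            level = "religious"
        else:
            level = "neutral" if body_covered else "non-religious"
    else:
        raise ValueError("gender must be 'female' or 'male'")
    return f"{level}-{gender}"


example = drc_category("female", head_covered=True, body_covered=False)
```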

This framework provides a systematic means of evaluating religious adherence, considering gender-specific clothing and its implications. We also believe that our dataset is representative for measuring religiousness and polarization in Turkey. The impact of potential noise in the data, such as the assumptions made regarding adherence to Islam in the Turkish context, as well as the inclusion of non-Turkish individuals in the frames, is limited.

First, a strong majority of the Turkish population has a Muslim identity. Different studies indicate that 94% of the Turkish population are self-declared Muslims32. Second, a very high percentage of the people captured in the videos are believed to be Turkish citizens. In this regard, a source of concern might have been the number of non-Turkish tourists visiting the country; however, this issue also has a limited impact on our findings, since the average number of tourists visiting Turkey does not exceed 5–7% of the total population of Turkey on a monthly basis33.

As a result, using the Roboflow platform, a total of 7,450 frames have been annotated and over 27,000 people have been labeled. Children, people in uniforms whose category is hard to distinguish, and all people captured indoors were excluded from the labeling process. Our labeling standards for pedestrians include specific criteria to ensure the accuracy and reliability of the data. We do not label pedestrians if they overlap with each other by more than one-third of their bounding box area or if more than one-third of their bounding box area is outside the image frame. Additionally, bounding boxes with heights less than one-fourth of the frame height (indicating that the pedestrian is far from the camera) are not labeled. This rigorous standard helps maintain the quality of the labeled data and avoids introducing errors that could affect the validation of our distance measurement methodology. The two main reasons for the loss of 1,550 images were (1) ambiguity when applying the DRC and (2) frames that lack human objects. The labeled image collection is referred to as HN-7450. A discussion of the intercoder reliability scores can be found in “Appendix D”. Following the labeling process, the Roboflow platform automatically divides the outcome into training, test, and validation sets. Additionally, prior to training a custom object detection model based on YOLOv5, a descriptive analysis is conducted to examine the distribution of the labeled individuals more thoroughly.
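The three geometric exclusion rules above (overlap, out-of-frame area, and minimum height) can be expressed as a simple filter. The sketch assumes boxes given as `(x_min, y_min, x_max, y_max)` in pixels and interprets "overlap" as the share of a box's own area covered by another box; both the representation and the function names are assumptions, since annotators applied these rules by eye.

```python
def box_area(box):
    """Area of an (x_min, y_min, x_max, y_max) box; degenerate boxes give 0."""
    x1, y1, x2, y2 = box
    return max(0.0, x2 - x1) * max(0.0, y2 - y1)


def intersection_area(a, b):
    """Area shared by two boxes (0 if they do not overlap)."""
    return box_area((max(a[0], b[0]), max(a[1], b[1]),
                     min(a[2], b[2]), min(a[3], b[3])))


def is_labelable(box, others, frame_w, frame_h):
    """Apply the height, out-of-frame, and overlap rules to one pedestrian box."""
    area = box_area(box)
    if area == 0:
        return False
    # Rule 1: box height must be at least one-fourth of the frame height.
    if (box[3] - box[1]) < frame_h / 4:
        return False
    # Rule 2: at most one-third of the box may lie outside the frame.
    visible = intersection_area(box, (0, 0, frame_w, frame_h))
    if (area - visible) / area > 1 / 3:
        return False
    # Rule 3: at most one-third of the box may be covered by any other box.
    return all(intersection_area(box, other) / area <= 1 / 3 for other in others)


# Hypothetical boxes in a 1920 x 1080 frame.
ok = is_labelable((100, 100, 300, 700), [], 1920, 1080)
```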

Descriptive findings from HN-7450

Figure 2, a numerical representation of which is Table 7, shows the distribution of religiousness in each city found in the training set. Blue/purple represents males and red represents females. The plot shows that the neutral male category is heavily over-represented, while religious males and very religious males are both highly under-represented. Males make up over 50% of each category and about 70% of all observations. Also, people with religious affiliations were less visible on the streets than their non-religious counterparts: the ratio between the religious and non-religious groups is approximately 3 to 7. It is noteworthy that this ratio is slightly underestimated because neutrally dressed religious people are inevitably considered as “neutral” (and not potentially religious or non-religious). This is acceptable because pedestrians also tend to perceive others based on appearances. A study shows that during common human interactions, how people dress is a shallow but frequent representation of themselves and each other34. How we identify the pedestrians in those images is most likely not significantly different from how adjacent pedestrians perceive each other.

Model building: custom object detection

As there are no effective pre-trained object detection models available for public use that distinguish people by level of religiousness, we needed to build a custom object detection model with relatively high accuracy. Based on YOLOv5, HN-7450 is used as the training set to generate Hijab-Net, the customized ODM that forms the backbone of this study. Hijab-Net is able to classify human figures in frames into different levels of religiousness. Models 1 to 5 are designed for various sets of categories.

After we trained and benchmarked five different object detection models (ranging from Model 1 through 5), we decided to choose Models 2, 4, and 5 (our reasoning is provided in the next section). The series of models were then used to make predictions with the HN-178413 dataset (which will also be introduced later).

Models comparison

Model 1: This model provides predictions for each of the eight categories shown in Fig. 2, which include four categories for males and four for females. It makes individual predictions for each subcategory. The model performance is summarized in Table 8. The training took approximately 2.015 h for 100 epochs, and the custom object detection model was trained on a personal laptop with an NVIDIA GeForce RTX 2080 Super GPU and an Intel Core i9-10980HK CPU. We evaluate the performance of the models mainly using the mAP50 score. The mAP (mean Average Precision) score is calculated and interpolated from the precision/recall curve (see “Appendix D”, 3.3.3), and the 50% level was designated as the threshold between positive and negative classification: to be considered positive, the overlap ratio $a_o$, calculated by

$$a_o = \frac{\operatorname{area}\left(B_{\text{predicted}} \cap B_{\text{ground truth}}\right)}{\operatorname{area}\left(B_{\text{predicted}} \cup B_{\text{ground truth}}\right)},$$

must exceed 50%, where $B_{\text{predicted}}$ stands for the predicted bounding box and $B_{\text{ground truth}}$ represents the manually labeled bounding box35. The overall mAP50 for all categories combined was 0.352, with the “neutral-male” category achieving the highest mAP50 score of 0.705 and “very-religious-male” obtaining the lowest score at 0.011.
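The 50% overlap criterion used for mAP50 is the intersection-over-union test between a predicted box and a hand-labeled box. A minimal sketch follows, assuming boxes given as `(x_min, y_min, x_max, y_max)`; the function names are illustrative, and the full mAP computation (ranking by confidence and interpolating the precision/recall curve) is not shown.

```python
def iou(pred, truth):
    """Intersection over union of two (x_min, y_min, x_max, y_max) boxes."""
    inter_w = max(0.0, min(pred[2], truth[2]) - max(pred[0], truth[0]))
    inter_h = max(0.0, min(pred[3], truth[3]) - max(pred[1], truth[1]))
    intersection = inter_w * inter_h
    area_pred = (pred[2] - pred[0]) * (pred[3] - pred[1])
    area_truth = (truth[2] - truth[0]) * (truth[3] - truth[1])
    union = area_pred + area_truth - intersection
    return intersection / union if union > 0 else 0.0


def is_positive(pred, truth, threshold=0.5):
    """A detection counts as positive only if the overlap exceeds the threshold."""
    return iou(pred, truth) > threshold


# Hypothetical boxes: the prediction covers the label plus some extra area.
overlap = iou((0, 0, 10, 10), (0, 0, 10, 8))
```

Note that the comparison is strict, so an overlap of exactly 0.5 is not counted as a positive detection in this sketch.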

Model 2: For this model, predictions are made for four aggregated categories: religious females (combining religious females and very religious females); religious males (combining religious males and very religious males); non-religious females (combining neutral females and non-religious females); and non-religious males (combining neutral males and non-religious males). The training spanned 2.287 h over 100 epochs. The model achieved an overall mAP50 of 0.581. Among the categories, “non-religious-male” exhibited the best mAP50 result at 0.735. The model performance is summarized in Table 9.

Model 3: The third model focuses on predicting all categories of females, encompassing non-religious females, neutral females, religious females, and very religious females. The training duration was 2.211 h across 100 epochs. The combined mAP50 for all categories was 0.371, with “non-religious-female” demonstrating the highest individual mAP50 score of 0.576. Table 10 summarizes the performance of Model 3.

Model 4: This model predicts two distinct categories: religious females (merging religious females and very religious females) and non-religious females (combining neutral females and non-religious females). Training required 2.390 h for 100 epochs, and the model achieved an overall mAP50 of 0.579. Individually, “non-religious-female” secured a mAP50 of 0.626. Table 11 summarizes the performance of Model 4.

Model 5: The fifth model streamlines predictions into two broad categories: religious people and non-religious people. The religious people category combines religious females, very religious females, religious males, and very religious males. Conversely, the non-religious people category groups neutral females, non-religious females, neutral males, and non-religious males. The training took 2.493 h over 100 epochs. The overall mAP50 was measured at 0.654, with “non-religious-people” leading with a mAP50 score of 0.766. Table 12 summarizes the performance of Model 5.

In summary, across the five models, consolidating categories appears to enhance precision and mAP scores. Specifically, Models 2, 4, and 5, which employ broader classifications, demonstrate superior performance metrics. This suggests that, for the given dataset, generalized categories might yield more consistent and reliable results than finer classifications. Balancing granularity with performance during model training is vital, and in this scenario the merged categories seem to offer greater efficacy. YOLOv5, the object detection model (ODM) underlying those three models, produces an output for each frame that describes every detected human object: its category (a numerical value, with different numbers representing different categories), the x and y coordinates of the center of its bounding box, and the width and height of the bounding box. All coordinates and dimensions are normalized to the range from 0 to 1.
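A minimal sketch of how such an output could be read, assuming the plain-text format in which YOLOv5 can save one detection per line as `class x_center y_center width height` with normalized values. The `Detection` type and the `parse_detections` helper are hypothetical names introduced for illustration.

```python
from typing import List, NamedTuple


class Detection(NamedTuple):
    category: int   # numeric class id
    x: float        # bounding-box center, relative to frame width
    y: float        # bounding-box center, relative to frame height
    width: float    # relative box width
    height: float   # relative box height


def parse_detections(text: str) -> List[Detection]:
    """Parse one frame's detections; extra fields (e.g. a confidence score) are ignored."""
    detections = []
    for line in text.splitlines():
        fields = line.split()
        if len(fields) < 5:
            continue  # skip blank or malformed lines
        category = int(fields[0])
        x, y, w, h = (float(v) for v in fields[1:5])
        detections.append(Detection(category, x, y, w, h))
    return detections
```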

The implementation of prediction set: HN-178413

The prediction set is generated using a method similar to the one used for HN-9000; in this case, one frame is sampled every 10 s across all 1439 videos. As a result, we obtain 178,413 frames, which are then passed to Hijab-Net Models 2, 4, and 5 for the detection of human objects. The results are recorded so that they can be studied further in the distance estimation section.
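The sampling rule can be sketched as follows. This is an illustrative helper only: the function name, the choice to start at the first frame, and the rounding of the frame step are assumptions, since the text specifies only the 10 s interval.

```python
def sample_frame_indices(total_frames: int, fps: float, interval_s: float = 10.0) -> list:
    """Indices of the frames kept when one frame is sampled every `interval_s` seconds."""
    if total_frames <= 0 or fps <= 0 or interval_s <= 0:
        return []
    step = max(1, round(fps * interval_s))  # number of frames between two samples
    return list(range(0, total_frames, step))
```

Under these assumptions, a 30 s clip recorded at 30 frames per second contributes three frames.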

Methodology: distance estimation

To understand which religiousness and gender groups tend to walk closer to one another on the street, we estimate relative distances. Based on the gender and religiousness groups detected and identified by the three Hijab-Net models (Models 2, 4, and 5), this section examines the distances between them. Multispectral Imaging, Detection, and Active Inference (MiDaR)36, a widely used tool specialized in 2-dimensional image displays, is combined with data pruning, calculation, and normalization steps to produce this estimate through an iteratively refined process.

Review of image distance estimation tools

Notably, real-world distances between two objects are measured in 3D, whereas images are 2D displays that cannot by themselves convey the depth of the scene. Although a wide variety of image-based 3D estimation methods exist, none of them suits our aim, because each requires at least one of the following properties: standardized formatting, a fixed camera angle, or reference objects, none of which the HN-7450 images have. For example, in “Real-Time 3D Object Detection From Point Cloud Through Foreground Segmentation”22, a LIDAR-based 3D object detection method is used with a high-resolution camera at a fixed angle and height and with known reference objects on the streets. The LIDAR provides a point cloud, which is turned into a sparse BEV feature map. Without the fixed-angle high-resolution camera, the task cannot be accomplished33. In “Monitor Social Distance Using Python, YOLOv5, OpenCV”37, the idea of a bird's eye view transformation is introduced: in the perspective view image the streets are at an angle, while in the bird's eye view image the streets are parallel to each other. Without bird's eye view images, the actual distances between pedestrians are impractical to calculate. In “Measure Distance in Photos and Videos Using Computer Vision”38, bounding boxes are drawn around the objects of interest. Computer vision is then used to calculate the distance between objects, provided that the objects' length is known. Roboflow's API is used to measure the pixels and convert them into inches to estimate the actual distance.

Since existing methods would not be able to provide a feasible solution to the current problem, the development of a new method is required, which is detailed in the next section.

Relative distance calculation

When calculating the distance between two points in a 2D image, the Euclidean Distance formula is widely used. The formula is shown below, where the only information required is the x and y coordinates of the two points, $(x_1, y_1)$ and $(x_2, y_2)$.

$$d = \sqrt{(x_1 - x_2)^2 + (y_1 - y_2)^2}$$

The output of YOLOv5 from the previous phase provides information on the x and y of each of the bounding boxes. In other words, YOLOv5 returns results for the position, in terms of relative x and relative y, of the center point for pedestrians detected. An important thing to notice is that YOLOv5 only returns the relative values but not the actual ones, meaning that all the values have been normalized within each frame, to the range of 0 to 1.

With relative x and relative y, the Euclidean Distance formula could be used. However, considering only x and y is questionable, since real streets are three-dimensional and the depth of each pedestrian relative to the camera also plays an important role. Therefore, several approaches to generating a relative depth were implemented one after another, each addressing problems in the previous one and improving the accuracy of the model. The way the x, y, and z values are combined was refined in the same manner.

Approach 1: Besides the x and y of the center of the bounding boxes, YOLOv5 also returns the relative width and relative height of each bounding box. Assuming that every male is about the same height and width, the areas of the bounding boxes would be the same if all human objects were at the same relative depth from the camera. In other words, the greater the area of the bounding box, the closer the pedestrian is to the camera in terms of relative depth. It follows that, when comparing relative depth between different object pairs, the area ratio reflects the closeness of the object pairs to some extent.

To be more specific, when the area ratio is defined as the larger bounding box’s area divided by the smaller bounding box’s area, the area ratio should be larger for object pairs that are farther apart in depth and smaller for closer object pairs. Imagine a setting where several individuals are shown at varying distances from the camera. Specifically, “person 1” and “person 2” occupy the foreground and are closer to one another in depth than “person 1” and “person 3,” who appears further in the background. Accordingly, the area ratio for persons 1 and 2 is smaller than that for persons 1 and 3. The example also shows that outline size does not scale proportionally with proximity in three-dimensional space, underscoring the nuanced relationship between physical distance and how subjects appear in a two-dimensional projection.

The positive correlation between the area ratio and the relative depth could be used in interpreting the relative z. For instance, z could be reflected using the equation below.

Moreover, a combination of z with x and y could be calculated as follows in order to get the relative distance:
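A rough sketch of Approach 1 as the surrounding text describes it, assuming that z is taken to be the area ratio itself and that the relative distance is the product of z and the 2D Euclidean distance. Both forms are inferred from the prose rather than copied from the original equations, and the function names are hypothetical.

```python
import math


def area_ratio(box_a, box_b):
    """Larger bounding-box area divided by the smaller one; boxes are (x, y, w, h)."""
    area_a = box_a[2] * box_a[3]
    area_b = box_b[2] * box_b[3]
    return max(area_a, area_b) / min(area_a, area_b)


def approach1_distance(box_a, box_b):
    """Assumed form of Approach 1: area ratio (used as z) times the 2D Euclidean distance."""
    z = area_ratio(box_a, box_b)
    planar = math.hypot(box_a[0] - box_b[0], box_a[1] - box_b[1])
    return z * planar
```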

However, a potential problem with this approach is that the relationship between the area ratio and the relative depth is not linear. The logarithmic nature of the relationship between the area of the bounding box and the depth is described in greater detail in the Appendix (see Fig. 14). Because of this nonlinearity, using the area ratio directly to represent the relative depth loses accuracy. Secondly, simply calculating the product of z and the result of the Euclidean Distance formula may not be a robust approach. Figure 3 shows a sample output for an image from Istiklal Street, Istanbul, taken by one of the authors.

Approach 2: Approach 2 was developed to solve the problems of Approach 1 and thus improve the accuracy of the result. As mentioned previously, since the positive correlation between the relative depth and the area ratio of the bounding box is not strictly linear, discovering the actual relationship between these two variables helps improve the accuracy. Therefore, a self-test was conducted (see “Appendix I”), which indicated an inverse square root relationship between the area of a bounding box and the depth of the human object relative to the camera. The relative depth z for each bounding box could therefore be expressed as below.

Secondly, the combination of x, y, and z needs to be improved, and the 3D Euclidean Distance formula has been used to ensure credibility.
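The two ingredients of Approach 2 can be sketched as follows. The depth function assumes the inverse square root relationship with a proportionality constant of 1, which is a simplification made for this example (the text does not state the constant), and both function names are hypothetical.

```python
import math


def relative_depth(width, height):
    """Assumed Approach 2 depth: inverse square root of the bounding-box area."""
    return 1.0 / math.sqrt(width * height)


def distance_3d(p, q):
    """3D Euclidean distance between two (x, y, z) points."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(p, q)))
```

With an unscaled constant, z is not confined to the 0 to 1 range of x and y, so in practice some rescaling would be needed before the three values are combined.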

Although Approach 2 solved the problem in Approach 1, it still has some limitations. First, the method of calculating the relative depth z works in theory but still needs numerous systematic rounds of testing to establish its credibility, since researchers have rarely used this formula before. Self-testing that involves depth is time-consuming: unlike vertical or horizontal distances, which can be measured within a frame, depth requires manual measurement of distances in real-life situations. Secondly, the relationship between the two variables strictly follows the formula only when the camera is at eye level. Although most of the street videos are shot at nearly eye level, some slightly tilted camera angles would lead to inaccurate results. Therefore, a further refinement of the depth estimation method is required.

Approach 3: Approach 3 uses an existing depth estimation method, MiDaR, which largely solves the problems of Approach 2. MiDaR refers to Multispectral Imaging, Detection, and Active Inference; it needs only images as input and places no technical requirements on camera angle or focal length. The MiDaR technology has been used by many researchers and thus does not require the self-testing needed for the self-developed formulas in the previous two approaches. For example, NASA used a transmitter with MiDaR technology to create maps of coral reefs in Guam.

As mentioned, MiDaR takes a single frame as input and outputs the relative depth of each pixel, where higher values represent pixels that are closer in depth, and vice versa. In this study, each frame has been reshaped to 1024 × 1024 pixels.

The first step is to reverse the result so that closer pixels are represented by smaller values; the second is a normalization that keeps the relative depth within the range of 0 to 1. Both operations ensure that the relative z value is consistent with the x and y results from YOLOv5, as they need to run in the same direction and share the same range of magnitude before they are combined. As a result, the relative z has been generated using the output from MiDaR and the formula below, where z′ stands for the depth before normalization and z is the relative depth:
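One way to carry out both operations at once is an inverted min-max rescaling, sketched below. This particular form is an assumption: the text specifies only that the values are reversed and brought into the 0 to 1 range, and the function name is hypothetical.

```python
def invert_and_normalize(depth_map):
    """Flip a depth map so nearer pixels get smaller values, then rescale to [0, 1].

    `depth_map` is a list of rows of raw values z' in which larger means closer.
    """
    values = [v for row in depth_map for v in row]
    lo, hi = min(values), max(values)
    if hi == lo:
        return [[0.0 for _ in row] for row in depth_map]  # flat map: no depth variation
    return [[(hi - v) / (hi - lo) for v in row] for row in depth_map]
```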

At this point, each of the 1024 × 1024 pixels in a frame has a relative depth value. The equation below converts the relative x and relative y (denoted as x and y in the equation below) to the corresponding pixel positions, with the result rounded up to the nearest integer.
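The conversion can be sketched as a multiplication by the frame size followed by rounding up. The clamping to the valid pixel range and the shift to 0-based indexing are assumptions added so that the lookup is safe at the frame borders; the helper names are hypothetical.

```python
import math

FRAME_SIZE = 1024  # frames are reshaped to 1024 x 1024 pixels


def to_pixel(relative_coord, size=FRAME_SIZE):
    """Convert a relative coordinate in [0, 1] to a 1-based pixel position, rounding up."""
    return min(size, max(1, math.ceil(relative_coord * size)))


def depth_at_center(depth_map, x, y, size=FRAME_SIZE):
    """Look up the relative depth at a bounding-box center given in relative coordinates."""
    col = to_pixel(x, size) - 1  # shift to 0-based indexing for the lookup
    row = to_pixel(y, size) - 1
    return depth_map[row][col]
```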

Because the center of each bounding box now has a relative depth, combining the results from MiDaR and YOLOv5 through the 3D Euclidean Distance formula yields the relative distance for each pair of human objects. Although the method might be inaccurate if the center point of a pedestrian is occluded by another object in front of it, such cases rarely occur in the dataset. Therefore, combining the normalized result from MiDaR with the output from YOLOv5 would be the most accurate and efficient method among the alternatives, and it is the method we use for our analysis.

Although the methodology of the distance measurement is sound in theory, we found it necessary to validate the method and ensure that it reaches satisfactory accuracy for our research. A validation of the final method, with a test of its accuracy, can be found in “Appendix I”.

Table 13 summarizes the progress and improvements across the three approaches.

Actual distance vs. relative distance

After a successful calculation of the relative distances within each single frame using YOLOv5, MiDaR, and the 3D Euclidean Distance formula, we need to offer a discussion on the usefulness of these approaches in comparison. In other words, how does the robustness of the relative distance results extracted from each method compare across different frames?

Converting the relative distance to the actual distance using a reference object would be a typical approach since the topic of this study is to address a real-world problem. However, in this circumstance, converting relative distance to actual distance is neither feasible nor suitable.

First of all, converting relative distance to actual distance is not feasible. The video dataset contains a vast number of observations, including videos from different streets with different camera angles, and there is no fixed reference object that could be used for the conversion. Even if we assumed an average height for every male and female object detected in the videos (for example, the average height of males and females in Turkey) and used one pedestrian’s height as a reference point, the conversion would still not be feasible. For instance, in an image where two people are far apart along the depth axis but not along the horizontal axis, the resulting actual distance would differ notably depending on whether the left or the right human object is used as the reference.

Secondly, even if the relative distance could be accurately converted to the real distance, doing so would remain questionable within the context of this research. The paper aims to discuss the distancing between pedestrians due to differences in levels of religiousness, not due to the level of crowdedness of each street. Comparing actual distances takes the level of crowdedness into account, which could significantly affect the results. For instance, consider two scenarios: the first involves two religious pedestrians who are 3 m apart on a less crowded street, while the second involves a non-religious pedestrian and a religious pedestrian who are 0.5 m apart on a crowded street. It would not be reasonable to claim that the religious pair distance themselves more than the religious vs. non-religious pair. Therefore, the results would be more persuasive if the impact of street crowdedness were minimized, and comparing relative distances is more appropriate for this research. The logic of using relative distance essentially places every group of pedestrians in the same imaginary space (with the same area and level of crowdedness) and observes how they distance themselves from each other, as shown in Fig. 4. In this manner, relative distance has been calculated and used for comparison across the frames; the approach to using relative distance pairs and conducting the comparison is explained in detail in the subsequent section.

Distance score aggregation and normalization method

After obtaining the frames and determining the distances for each pair of individuals, the subsequent phase computes a class score for each frame. This marks a crucial progression in the analytical process, as it shifts the focus from individual distance measurements to an aggregated class-level evaluation that makes comparison possible.

Step 1: Frame selection criteria

The initial stage involves a careful selection of frames that are helpful for an insightful analysis of spatial relationships. Selection is based on criteria ensuring that only frames contributing to inter-class dynamics are included:

1. Exclusion of homogeneous class frames: Frames that depict individuals from a single class are omitted from the analysis due to the absence of a comparative element vital for assessing inter-class distances.

2. Elimination of frames with an insufficient number of individuals: Frames with fewer than three individuals are excluded for their limited contribution to the analysis:

(a) One individual: A frame with only one individual does not yield any distance score, because a pairwise distance requires the x, y, and z values of two individuals.

(b) Two individuals: Frames with two individuals result in a normalized score of one, as no other comparative distances within the frame exist. Such frames could bias the data towards the maximum normalized score and are thus excluded.

Tables D14 and D15 in “Appendix D” (Data Lost During Distance Estimation) show the data loss after each step of dropping frames for Model 2, Model 4, and Model 5.
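The two selection criteria amount to a simple per-frame filter, sketched here. The function name and the representation of a frame as a list of class labels are assumptions made for illustration.

```python
def keep_frame(categories):
    """Return True if a frame passes both selection criteria.

    `categories` lists the class label of every individual detected in the frame.
    """
    has_enough_people = len(categories) >= 3       # fewer than three individuals are dropped
    has_mixed_classes = len(set(categories)) >= 2  # single-class frames are dropped
    return has_enough_people and has_mixed_classes
```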

Step 2: Normalization of distance scores

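The normalization step is essential for comparing distance measurements across frames. It involves adjusting individual distance scores relative to the frame’s maximum distance score. For a pairwise distance $d$ in a frame whose maximum pairwise distance is $d_{\max}$, this is expressed mathematically as:

$$d_{\text{normalized}} = \frac{d}{d_{\max}}$$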

Such scaling ensures that all distance scores are adjusted to a uniform scale, ranging from 0 to 1, allowing for meaningful comparison and aggregation.

Step 3: Calculation of average distance scores

After normalization, providing a single distance for each class in each frame is crucial.

1. Average Score Computation: For each class pair within a frame, the mean and the median of the normalized distance scores are computed. This average represents the typical distance relationship for that class pair, extracted from the normalized individual pairs.

2. Handling Class Absence: When a frame does not feature representatives from all possible classes, the average distance score for the class pairs with no data is set to ‘NaN’. This ensures the integrity of the dataset by clearly marking the absence of data.
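Steps 2 and 3 can be sketched together for a single frame. The function name, the input layout, and the decision to treat (A, B) and (B, A) as the same class pair are assumptions made for this example; the sketch also assumes that at least one distance in the frame is non-zero.

```python
import math
from collections import defaultdict
from statistics import mean, median


def frame_scores(pair_distances, all_class_pairs):
    """Normalize one frame's pairwise distances and aggregate them per class pair.

    `pair_distances` is a list of ((class_a, class_b), distance) tuples for one frame.
    Returns {class_pair: (mean, median)}; class pairs absent from the frame get (nan, nan).
    """
    max_distance = max(d for _, d in pair_distances)
    grouped = defaultdict(list)
    for (class_a, class_b), distance in pair_distances:
        key = tuple(sorted((class_a, class_b)))  # treat (A, B) and (B, A) as the same pair
        grouped[key].append(distance / max_distance)
    scores = {}
    for pair in all_class_pairs:
        key = tuple(sorted(pair))
        values = grouped.get(key)
        scores[key] = (mean(values), median(values)) if values else (math.nan, math.nan)
    return scores
```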

Empirical approach

As noted previously, the goal of the empirical analysis is to measure the pairwise physical distances between categories of people on the streets. For each pairwise distance j obtained from each frame i (δij), we define a dummy variable D equaling one when we detect more than two people in a frame to whom a gender and religiousness category can be assigned. The reason behind this minimum threshold is the need to have at least two pairs of distances to normalize the relative distance between 0 and 1. For example, in a frame that fulfills this requirement, if we detect a pairwise distance that equals 0.5 between two religious people (RP vs. RP), we have δ = 0.5 and DRP vs. RP = 1. Using this approach, we created one dummy variable per category pair. For the regressions, we are using baseline categories that represent the (assumed) theoretically most distant categories of people. Specifically, we are using religious female vs. non-religious male (RF vs. NRM), religious male vs. non-religious female (RM vs. NRF), religious female vs. non-religious female (RF vs. NRF), and religious people vs. non-religious people (RP vs. NRP) pairs as the baseline categories in three different sets of results (each set of results is associated with a different object detection model). These pairs represent the out-group connections that are expected to provide the highest physical distances. In all model specifications, robust standard errors were used.
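The dummy coding described above can be illustrated with a short sketch. The list of class pairs is an illustrative subset, and the constant and function names are hypothetical; only the idea of one dummy per category pair with an omitted baseline comes from the text.

```python
CLASS_PAIRS = ["RF vs. RF", "RM vs. RM", "RF vs. RM", "RF vs. NRM"]  # illustrative subset
BASELINE = "RF vs. NRM"  # baseline pair, omitted from the dummies


def encode_pair(pair, class_pairs=CLASS_PAIRS, baseline=BASELINE):
    """One dummy per non-baseline class pair; the baseline is the all-zero row."""
    return {f"D[{p}]": int(p == pair) for p in class_pairs if p != baseline}
```

With this coding, each regression coefficient is read as the difference in relative distance between a category pair and the baseline pair.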

We acknowledge that the YouTube videos compiled by individuals on the streets of Turkey may exhibit unobserved differences, stemming from the filming decisions made by the videographers. To address these potential discrepancies, we apply normalization to the pairwise distances extracted from each individual frame. This process controls for unobserved differences between our samples coming from different users.

In addition, we have introduced several other dummy variables that fulfill the role of fixed effects. Specifically, our full model contains dummy variables for each city and district within a city, a dummy indicating the time of day (day or night), and another dummy indicating when the video was taken (summer or not summer). The accuracy for the day/night image classification model was around 80.55% and the intercoder reliability score was approximately 0.99. A discussion on the development of a custom object detection model for day/night classification, the intercoder reliability scores, and the associated accuracy can be found in “Appendix C”. For robustness, we are using three alternative approaches: (1) a categorical variable regression (one per object detection model, three in total), (2) a categorical variable regression that uses a logarithmic transformation of the relative distance measure as the dependent variable, and (3) a traditional t-test approach that allows us to compare the variation in pairwise distances across different category pairs.

The regression specification we use is the following equation

where αcity, αdistrict, αnight, and αsummer represent the fixed effects for cities, districts, time of day, and the season of the video upload, respectively. The summary of our findings can be found in the next section.
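One form consistent with this description is written out below. It is a reconstruction under stated assumptions, not necessarily the exact specification used: the intercept $\beta_0$, the index $k$ over non-baseline category pairs, and the error term $\varepsilon_{ij}$ are notational choices made here.

```latex
\delta_{ij} = \beta_0 + \sum_{k \neq \text{baseline}} \beta_k D_{k,ij}
  + \alpha_{\text{city}} + \alpha_{\text{district}}
  + \alpha_{\text{night}} + \alpha_{\text{summer}} + \varepsilon_{ij}
```

Here $D_{k,ij}$ equals one when pairwise distance $j$ in frame $i$ belongs to category pair $k$, so each $\beta_k$ measures the difference in relative distance with respect to the baseline pair.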

Results

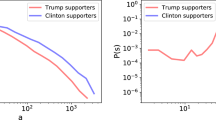

The results associated with the largest object detection model (Model 2) are reported in the main part of the article, while the results for the remaining models can be found in “Appendix H”. Figures 5 and 6 demonstrate how in-group interactions significantly differ from out-group interactions. Across all statistical estimations and specifications, the results are very similar, and the findings are robust. In almost all specifications, the physical distances between citizens of similar gender identity and level of religiosity are statistically significantly smaller than those in other categories. Within religious groups, especially among religious females, much smaller physical pairwise distances are observed compared to distances between ideologically different categories.

The robustness of the findings has been tested through the application of sensitivity analysis (including fixed effects and additional specifications), feature engineering (log-transformation of the dependent variable), and the use of three different object detection models. The latter approach was specifically applied to address classification challenges related to identifying citizens who cannot be clearly assigned to a religiosity category. This issue became apparent in the visual separation of religious females from very religious females, as well as in the differentiation between neutral and non-religious males. To address these issues, relatively broad definitions of religiosity were employed in Models 4 and 5.

The main findings of the analysis are reported below. Tables 14 and 15 present the coefficients associated with the largest object detection model used (Model 2) across various specifications. Both tables suggest that citizens of similar gender and ideological backgrounds tend to maintain smaller physical distances on the street compared to their compatriots; this observation is particularly pronounced for religious females and religious males. The findings are highly robust, with the coefficients showing only minimal changes when fixed effects are introduced and log-transformation is applied. Overall, we can say that religious citizens (females and males) have a relative distance that is 23.2% to 93.4% less compared to distances between ideologically opposite groups. For in-group interactions between non-religious citizens, the findings are mixed and vary from being 38.1% less to 11.6% more than the distances between ideologically opposite groups. In addition, Tables 21, 22, 23, and 24 offer additional model specifications to complement those presented in the main text.

The regression tables associated with Models 4 and 5 are provided in “Appendix H”. All model specifications yield statistically significant results and demonstrate a significantly smaller distance between religious females and religious people at large. The statistical significance is not sensitive to the choice of different variations of robust standard errors.

Lastly, the results of a traditional t-test have been provided below. The whisker plot shows the variation in the physical distances within pairs of categories. As visible from the plot, this variation is consistently low for most of the categories, which further emphasizes the robustness of the findings in this paper. Pairwise t-test results show that almost all pairwise distances are significantly different from each other, except for one test result (see Figs. 7 and 8).

Conceptual clarification and methodological framing

It is important to distinguish clearly between social distance, polarization, and homophily, acknowledging that these concepts are related yet not interchangeable. In our study, social distance refers to the observable or perceived separation in everyday interactions between groups that differ in, for instance, religious or secular identity. This measure does not necessarily prove animosity; it can also result from selective avoidance, differing routines, or other structural factors. By contrast, polarization implies both attitudinal extremity and mutual antagonism between groups, going beyond mere spatial or social separation. Although social distance may correlate with polarized attitudes, one can observe greater clustering of similar individuals (or avoidance of out-groups) without genuine ideological hostility39.

Homophily plays a key role in clarifying why social distance need not always equate to polarization. As outlined in ref. 39, people naturally gravitate toward those who share their interests, demographic traits, or beliefs, which can create in-group clusters. Such patterns do not always stem from negative sentiments about out-groups. In many contexts, limited cross-group interaction arises from benign or structural reasons (e.g., individuals sharing the same workplaces, educational institutions, or social circles), rather than from ideological aversion.

In light of these distinctions, our method should be seen as capturing a particular facet of social separation in Turkey’s streets rather than a complete measure of polarization. Building on approaches used in other domains, such as those in refs. 40 and 41, we leverage visual data to document how often, and how closely, people walk with those from different (or similar) religious backgrounds. Although these measures can illuminate possible symptoms or consequences of polarization, they do not, on their own, prove the existence of hostile, ideologically polarized camps. Instead, our core methodological contribution lies in demonstrating how advanced computer vision techniques can reveal social distance patterns at scale.

By framing Turkey as a case study, we demonstrate how this technique can highlight interaction patterns within a highly visible secular–religious cleavage. The presence or absence of religious attire provides a salient visual cue for measuring when and where groups cluster, allowing us to test whether everyday proximity aligns with well-known social or political fault lines. That said, we emphasize that this measure reflects observed social boundaries, which may have multiple causes. In other words, low levels of cross-group interaction could indicate deep political schisms or merely reflect self-selection and homophily. Ultimately, our findings help illustrate how these observable forms of social distance relate to broader social and political divisions, without claiming to capture every dimension of polarization.

Limitations

One major limitation of using personal distance to gauge political polarization is that it does not fully capture the emotional and cognitive nuances of affective polarization42. Deeply held beliefs and strong emotional reactions can shape people’s partisan identities in ways that remain invisible in simple measurements of interpersonal space, even if greater distancing may be correlated with out-group animosity43.

A second issue arises from the numerous confounding factors that affect interpersonal distance, making it difficult to attribute observed patterns solely to politics. Socioeconomic status, for instance, can determine one’s housing situation and transportation options, which in turn shape proximity-related behavior44. Moreover, cultural norms play a critical role in defining “comfortable” personal space45. Meanwhile, individual traits such as introversion or extroversion further complicate distancing tendencies46. Without accounting for these varied influences, interpreting observed spacing as a political signal risks oversimplification.

Third, contextual factors can temporarily distort personal distance as an indicator of deeper or longer-term polarization. Highly charged political contexts, such as elections or divisive public debates, may prompt people to avoid out-groups more conspicuously—but this avoidance could be momentary rather than permanent. During the COVID-19 pandemic, for example, ideological differences47 did shape social-distancing practices, yet these patterns were also driven by local health guidance, infection rates, and personal risk assessments48. Thus, isolating the precise impact of partisan identity becomes inherently difficult.

Fourth, the reliability and generalizability of findings based on personal distance are hindered by methodological challenges. In video-based studies, selection bias can skew which interactions end up being analyzed, while manual labeling of religious or partisan symbols introduces the possibility of coding errors or subjective interpretations49. Even automated methods may misinterpret ambiguous signals, and focusing on one geographic area or community can limit broader applicability50. Knowledge of whether individuals already share a relationship or recognize each other’s affiliations often remains unobservable, making it hard to determine causality.

Finally, personal distance by itself rarely clarifies why individuals choose to stand closer or farther apart. People might simply be avoiding physical touch, adhering to cultural taboos, or responding to nonpolitical preferences such as personal comfort51. Hence, without corroborating evidence (for instance, surveys of group attitudes or explicit markers of political hostility), it is risky to assume that distancing reflects underlying polarization. Such nuances indicate that while personal distance can yield valuable insights into social dynamics, it should be combined with other measures and carefully contextualized so as not to overstate its significance as a standalone gauge of political polarization.

Conclusion

Our study demonstrates how a new methodological framework—rooted in object detection and distance estimation—can provide valuable, quantifiable measures of polarization. While we used Turkey as the testing ground, the same pipeline could be adapted to analyze how visible markers of identity operate in other culturally distinct settings. Future research might build on our method to incorporate more nuanced contextual measures or combine it with deeper qualitative insights, thereby offering an even fuller picture of polarization dynamics across diverse societies.

Highlights of the results from the previous section and possible explanations thereof include:

- Religious Female vs. Religious Male: The distances between religious females and religious males are consistently higher in every estimation compared to in-group distances measured among religious females. We believe established gender identities within Muslim culture may have a causal relationship with this outcome.

- Non-Religious Female vs. Non-Religious Female: The distances within this group are comparatively higher than the in-group distances among religious females. We think that the political heterogeneity and divided identities within the secular population of Turkey, along with the relatively more united political representation of religious citizens (largely captured by Erdogan’s AKP), may be underlying causes for this phenomenon.

- Religious Female vs. Non-Religious Female: Despite being relatively higher than the in-group distances among both religious and non-religious female categories, the average distance between females from ideologically opposite groups is significantly lower than that between the most opposite reference groups. This finding may suggest that, although less pronounced than religion, established gender identities (especially in the case of females) are also major drivers of social interaction on the streets.

In conclusion, some of the significant findings are consistent and may potentially be explained by social factors. For instance, in-group distances (i.e., distances between religious individuals, religious women, and non-religious women, respectively) are smaller compared to out-group distances. This observation is in line with traditional gender roles in patriarchal societies and with customary social roles in predominantly Muslim communities. The convergence of social boundaries corresponds with proximity on Turkish streets, which again depicts the complicated polarization of the nation.

It is important to note that Islam has frequently been described as patriarchal, with cultural expectations often confining religious women to domestic or female-dominated spheres. This could help explain the especially close in-group distances we observe among religious women: if their social norms and daily experiences already limit broader cross-gender or out-group interactions, women may become all the more inclined to cluster together in public spaces. At the same time, these findings may not generalize to contexts where patriarchal norms operate differently or where religious affiliation is not a primary mechanism of social ordering. Future research would benefit from testing similar computer vision approaches in non-Muslim or less patriarchal societies, to see whether gender plays a similarly strong role in conditioning visible patterns of in-group closeness.

The sections on data collection, the methodological discussion on object detection, the training of a custom object detection model (Hijab-Net), the methodological estimation of distances, and finally, the empirical approach to understanding the results underscore the technical sophistication and rigor of our methodology. The details provided in the methodology and empirical analysis sections not only enhance the precision of our analysis but also offer nuanced insights into how a variety of computer vision techniques can be utilized to understand social and political behaviors. The most noteworthy methodological contribution of our paper to the field of political methodology is the integration of a 2D–3D distance estimation technique with object detection. Its most important substantive contribution, in turn, is the creation of a new metric for understanding political polarization.

This study, through its innovative approach that combines computational techniques with an economic analysis of social interactions, illuminates the complex relationship between religious expression and political polarization in Turkey. Understanding the extent of political polarization through visual cues is especially important for countries with citizens who associate themselves with a variety of cultural, ideological, racial, and religious backgrounds. Visual cues can be particularly important for nations where formal participation in the political system is limited for certain groups and identities.

Thus, these findings not only contribute to a deeper understanding of the dynamics at play in Turkish society but also suggest a scalable framework for examining similar phenomena in diverse global contexts. The implications of this research extend beyond the academic realm, offering policymakers and social scientists a new lens through which to view the complexities of religious expression and its political ramifications. Future research could expand upon this foundation, exploring other forms of religious and cultural symbolism and their impact on societal polarization.

Data availability

The data that support the findings of this study are available from the authors upon reasonable request. For your request, please contact Dr. Cantay Caliskan (cantay.caliskan@gmail.com).

Abbreviations

- RF: Religious female
- RM: Religious male
- NRF: Non-religious female
- NRM: Non-religious male
- NRP: Non-religious people
- FE: Use of fixed effects

References

Schill, D. The visual image and the political image: a review of visual communication research in the field of political communication. Rev. Commun. 12(2), 118–142 (2012).

Pauwels, L. Visual methods for political communication research: modes and affordances. In Visual Political Communication (eds Veneti, A. et al.) 75–95 (Springer International Publishing, 2019).

Krogstad, A. A political history of visual display. Poster 4(1), 7–29 (2017).

Gerodimos, R. The interdisciplinary roots and digital branches of visual political communication research. In Visual Political Communication (eds Veneti, A. et al.) 53–73 (Springer International Publishing, 2019).

Campante, F. R. & Hojman, D. A. Media and polarization: evidence from the introduction of broadcast TV in the United States. J. Public Econ. 100, 79–92 (2013).

Simon, B., Reininger, K. M., Schaefer, C. D., Zitzmann, S. & Krys, S. Politicization as an antecedent of polarization: evidence from two different political and national contexts. Br. J. Soc. Psychol. 58(4), 769–785 (2019).

Barber, M., McCarty, N., Mansbridge, J. & Martin, C. J. Causes and consequences of polarization. Polit. Negotiat. Handb. 37, 39–43 (2015).

Slininger, S. Veiled Women: Hijab, Religion, And Cultural Practice (Springer, 2014).

Çarkoğlu, A., Toprak, B. & Fromm, C. A. Religion, Society and Politics in a Changing Turkey (TESEV Publications, 2007).

Akcay, U. Authoritarian consolidation dynamics in Turkey. Contemp. Polit. 27(1), 79–104. https://doi.org/10.1080/13569775.2020.1845920 (2021).

Cagaptay, S. The New Sultan: Erdogan and the Crisis of Modern Turkey (Bloomsbury Academic, 2023).

Hall, E. T. The Hidden Dimension (Doubleday & Company, Inc., 1966).

Goff, P. A., Steele, C. M. & Davies, P. G. The space between us: stereotype threat and distance in interracial contexts. J. Personal. Soc. Psychol. 94(1), 91 (2008).

Sommer, R. Personal Space: The Behavioral Basis of Design. A Spectrum Book (Prentice-Hall, 1969).

Kahan, D. M., Jenkins-Smith, H. & Braman, D. Cultural cognition of scientific consensus. J. Risk Res. 14(2), 147–174 (2011).

Sunstein, C. R. Deliberative trouble? Why groups go to extremes. In Multi-Party Dispute Resolution, Democracy And Decision-Making 65–95 (Routledge, 2017).

Talisse, R. B. Overdoing Democracy: Why We Must Put Politics in its Place (Oxford University Press, 2019).

Bucy, E. P. Politics through machine eyes: what computer vision allows us to see. J. Vis. Polit. Commun. 10, 59 (2023).

Dietrich, B. J. Using motion detection to measure social polarization in the US House of Representatives. Polit. Anal. 29(2), 250–259 (2021).

Dietrich, B. & Sands, M. Seeing racial avoidance on New York City streets. Nat. Hum. Behav. 7, 1275–1281 (2023).

Aydaş, I. et al. Yineleme Kodları [Replication Data for]: “Türkiye’de Seçim Anketlerinin Toplam Anket Hatası Perspektifinden Bir İncelemesi” [An Examination Of Election Polls In Turkey From The Total Survey Error Paradigm Perspective] (2022).

Wang, L., Shi, J., Song, G. & Shen, I.-f. Object detection combining recognition and segmentation. In Computer Vision—ACCV 2007 189–199 (2007).

Dalal, N. & Triggs, B. Histograms of oriented gradients for human detection. In 2005 IEEE Computer Society Conference on Computer Vision and Pattern Recognition (CVPR’05) (2005).

Girshick, R. Fast r-cnn. In 2015 IEEE International Conference on Computer Vision (ICCV) (2015).

Girshick, R., Donahue, J., Darrell, T. & Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In 2014 IEEE Conference on Computer Vision and Pattern Recognition (2014).

Ren, S., He, K., Girshick, R. & Sun, J. Faster r-cnn: towards real-time object detection with region proposal networks. IEEE Trans. Pattern Anal. Mach. Intell. 39(6), 1137–1149 (2017).

Du, J. Understanding of object detection based on cnn family and yolo. J. Phys: Conf. Ser. 1004, 012029 (2018).

Terven, J. & Cordova-Esparza, D. A Comprehensive Review of yolo: From yolov1 and Beyond (2023).

Lin, T.-Y. et al. Focal loss for dense object detection (2018).

Tan, M., Pang, R. & Le, Q. V. Efficientdet: Scalable and Efficient Object Detection (2020).

Cordts, M. et al. The cityscapes dataset for semantic urban scene understanding. In 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2016).

Nevzat Çiçek | Türkiye’de İnanç ve Dindarlık araştırması yayımlandı: Dindarlaştık mı sekülerleştik mi? (2023).

Turkey: foreign tourist arrivals monthly (2023).

Hester, N. & Hehman, E. Dress is a fundamental component of person perception. Pers. Soc. Psychol. Rev. 27(4), 414–433 (2023).

Everingham, M., Van Gool, L., Williams, C. K., Winn, J. & Zisserman, A. The pascal visual object classes (voc) challenge. Int. J. Comput. Vision 88(2), 303–338 (2009).

Pacific Islands Climate Adaptation Science Center. Guam coral reef mapping continues testing midar technology (2023, accessed 16 Dec 2023).

Joseph, J. Monitor Social Distancing Using Python, Yolov5, Openc (2022).

Odenthal, T. Computer Vision to Measure Distance (2022).

McPherson, M., Lovin, L. S. & Cook, J. M. Birds of a feather: homophily in social networks. Ann. Rev. Sociol. 27, 415–444 (2001).

Dietrich, B. J. Using motion detection to measure social polarization in the US House of Representatives. Polit. Anal. 29(2), 250–259 (2021).

Dietrich, B. J. & Sands, M. R. Seeing racial avoidance on New York City streets. Nat. Hum. Behav. 7, 1275–1281 (2023).

Iyengar, S., Lelkes, Y., Levendusky, M., Malhotra, N. & Westwood, S. J. The origins and consequences of affective polarization in the United States. Annu. Rev. Polit. Sci. 22(1), 129–146 (2019).

Karakayali, N. Social distance and affective orientations. Sociol. Forum 24(3), 538–562 (2009).

Zang, E., West, J., Kim, N. & Pao, C. US regional differences in physical distancing: evaluating racial and socioeconomic divides during the COVID-19 pandemic. PLoS ONE 16(11), e0259665 (2021).

McCall, C. Proxemics. In Open Encyclopedia of Cognitive Science (MIT Press, 2024).

Sorokowska, A. et al. Preferred interpersonal distances: a global comparison. J. Cross Cult. Psychol. 48(4), 577–592 (2017).

Grimalda, G. et al. The politicized pandemic: Ideological polarization and the behavioral response to COVID-19. In Kiel Working Paper 2207, Kiel Institute for the World Economy (2022).

Allcott, H. et al. Polarization and public health: partisan differences in social distancing during the coronavirus pandemic. J. Public Econ. 191, 104254 (2020).

Renier, L. A., Schmid-Mast, M., Dael, N. & Kleinlogel, E. P. Nonverbal social sensing: what social sensing can and cannot do for the study of nonverbal behavior from video. Front. Psychol. 12, 606548 (2021).

Tonin, M., Lepri, B. & Tizzoni, M. Physical partisan proximity outweighs online ties in predicting US voting outcomes. arXiv:2407.12146 [cs.SI] (2024).

Psychology Today Staff. Proxemics (2022).

Acknowledgements

We thank Marla Litsky and Moran Guo for their research assistance and support. We also thank Benjamin Laughlin, Jeff Gill, and all other scholars who provided us their insightful comments to improve our work.

Appendices

A: Geographical distribution of religiosity

To understand how religious and non-religious people are distributed across Turkey, we calculated a normalized value by dividing the number of religious or non-religious people detected in each extracted YouTube video by the population density of the corresponding province. The resulting visualizations are shown in Figs. 9 and 10.
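The normalization described above can be illustrated with a minimal Python sketch. It is not the authors' code: the function name, the record layout, the province labels, and all numbers are hypothetical placeholders, and the sketch assumes that each video is matched to the population density of a single province.

```python
def normalized_count(detections: int, population_density: float) -> float:
    """Divide the detections in one video by the province's population density."""
    if population_density <= 0:
        raise ValueError("population_density must be positive")
    return detections / population_density


# Hypothetical per-video records: (province, religious count, non-religious count).
videos = [
    ("Province A", 120, 300),
    ("Province B", 80, 40),
]

# Hypothetical population densities (people per square kilometre).
density = {"Province A": 3000.0, "Province B": 100.0}

# One normalized value per video and per group, as assumed in the lead-in.
normalized = [
    {
        "province": province,
        "religious": normalized_count(religious, density[province]),
        "non_religious": normalized_count(non_religious, density[province]),
    }
    for province, religious, non_religious in videos
]

for row in normalized:
    print(row)
```

Under this reading, a densely populated province needs proportionally more detections per video to reach the same normalized value as a sparsely populated one.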

Stacked bar charts for the training (Fig. 11), validation (Fig. 12), and test (Fig. 13) sets cover the 9 cities used in data collection.

B: Variables in TVR