Abstract

Intuitionistic fuzzy similarity measures (IFSMs) play a significant role in applications involving complex decision-making, pattern recognition, and image processing. Several researchers have introduced different methods of IFSMs, yet these IFSMs fail to provide rational decisions. Therefore, in this research, we present a novel IFSM by considering the global maximum and the minimum differences in membership, non-membership, and hesitancy degrees between two intuitionistic fuzzy sets (IFSs). We show that the proposed IFSM meets the fundamental properties and provide numerical examples to prove its superiority. We implement it to solve pattern recognition problems and demonstrate its applicability and feasibility by using the parameter ‘degree of confidence’ as a performance index. Additionally, an image fusion method using the proposed IFSM is developed in this work. To construct an image fusion algorithm, initially, we employ a two-layer decomposition method based on Gaussian filtering to the source images of different modalities to decompose them into the base subimages and the detailed subimages. Then, we use the proposed IFSM to extract the features of base subimages and define two fusion rules to fuse the base subimages and detailed subimages. Then, we show the applicability of this method by conducting extensive experiments using three different types of medical image datasets. We evaluated the effectiveness of the proposed image fusion method using six metrics: Mean, Standard Deviation, feature mutual information, Spatial Frequency, Average Gradient, and Xydeas. Experimental results reveal that the proposed IFSM and fusion approach achieve superior performance compared to most existing methods.

Similar content being viewed by others

Introduction

The fuzzy set invented by Zadeh1 serves as a fundamental framework for managing uncertainty, particularly in image processing and decision-making. In image processing, a fuzzy set is a strategy aimed at resolving the ambiguity inherent in the qualitative characteristics of images via the construction of membership functions. However, accurately determining the membership values for the grayscale intensity of each pixel in an image is challenging. To address this issue, the intuitionistic fuzzy set (IFS), proposed by Atanassov2, has been utilized in image processing tasks3,4,5. In IFS-based techniques, membership degree, non-membership degree, and hesitation degree are usually considered when analyzing the image features, which makes it more powerful than fuzzy sets. Due to their effectiveness in handling uncertainty and vagueness, IFSs have been widely applied in various fields, including decision-making6,7, image processing3,8, clustering9,10, and pattern recognition11.

The intuitionistic fuzzy (IF)-based image processing methods typically employ information measures like entropy measure12, distance & similarity measure3, for image analysis and pattern recognition. They have demonstrated superior performance compared to other traditional methods. Among them, IF similarity measure (IFSM) has been applied in various domains, including fusion, segmentation, enhancement, feature extraction, and pattern recognition. Yet, the absence of a standardized approach for IFSMs has spurred researchers to develop new methodologies. As a result, several IFSMs have been developed. Nevertheless, these IFSMs have certain limitations, such as the ‘zero denominator’ problem, violations of fundamental IFSM properties, and difficulties in decision-making due to counterintuitive outcomes. Consequently, further research is required to overcome these challenges. Therefore, this work aims to contribute by establishing a strong theoretical foundation for IFSM and implementing it in pattern recognition and image fusion.

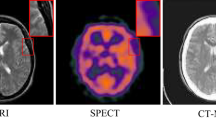

Image fusion has recently become a hot topic because of its applications in medical diagnosis. In several medical specialties, such as neurology, cancer, cardiology, and orthopedics, medical image fusion techniques help to enhance the diagnostic data available to medical practitioners, raising the standard of medical diagnosis and promoting more effective treatment planning. The single-modality medical picture cannot capture all the features of tissues or organs. For instance, computed tomography (CT) images provide detailed structural information on bones and implants, while magnetic resonance images (MRI) provide structural information on organs, soft tissues, and tumors but cannot detect calcification or lesions within bone tissues or human metabolic activity. In medical imaging, MR images are classified into T1-weighted (MR-T1) and T2-weighted (MR-T2) based on the variation of the radio frequency over time. MR-T1 visualizes anatomy and provides good contrast between different structures, while MR-T2 images evaluate pathology and abnormalities in soft tissues, both related to fatty acids and water content. MR-proton density (MR-PD) weighted images manifest when there is a reduction in the contrast of both T1 and T2 MR images. A radiologist might need to combine unique details from several modalities without compromising the localization characteristics of the source image. The integration of CT and MRI is beneficial for brain tumor surgery, as it provides a unified image that simultaneously captures both bone and tumor structures. Additionally, combining several MRI modalities shows superior tissue lesions and aids in analyzing tumor progression for a more thorough clinical diagnosis13.

Several methods for image fusion exist in the literature, utilizing different frameworks, including spatial domain methods, transform domain methods, deep learning-based methods, hybrid algorithms, and approaches based on fuzzy and IF logic. Spatial domain-based methods14,15,16 typically start by segmenting the input image, which can be done manually or using optimization, and then proceed to fuse it according to a predefined fusion strategy. In transform-domain approaches17,18,19, a decomposition transform disintegrates source images into multi-scale coefficients. The coefficients are combined using specific fusion rules, and lastly, the inverse transform is used to reconstruct the fused image. Furthermore, deep learning-based algorithms include convolutional neural networks (CNN)20, pulse coupled neural networks (PCNN)21, auto-encoder-based models22, and generative adversarial network (GAN)-based methods23. These methods are extensively dependent on data, and data availability is a major challenge in the medical image domain because collecting large, well-labeled datasets is difficult due to privacy restrictions, high costs, and the requirement for expert annotation. In addition to that, some hybrid methods16,24 have been constructed by combining the different base models. Recently, fuzzy and IF-based methods3,12,13,25 have been developed for image fusion. These techniques first convert images into fuzzy and IF representations, enhance them using entropy, distance, and similarity measures, and then apply fusion rules to combine the images. These techniques have been used in multimodal image fusion to address challenges such as blurry source images and effectively manage the uncertainty and imprecision inherent in medical images. Therefore, this study introduces an image fusion method based on IFS.

Motivation

We have noticed that existing IFSMs exhibit certain drawbacks, including unreasonable outcomes, the ‘zero denominator problem,’ counter-intuitive results, and violations of similarity axioms. These deficiencies inherent in the current approaches have the potential to adversely affect the outcomes of decision-making processes. Consequently, there is a pressing need to develop an innovative and efficacious similarity measure to overcome these shortcomings. In this context, this study introduces a novel IFSM and depicts its superiority by conducting a comparative analysis with the existing methods.

Furthermore, several pattern recognition methods have been developed using the various IFSMs. Some methods failed to classify the category of the unknown pattern for certain cases, and some are unreliable in pattern recognition as their degree of confidence is very low. Therefore, this study introduces a pattern recognition approach with the proposed IFSM.

Different imaging modalities can have noise, artifacts, or missing details, which creates uncertainty in the fusion process. Also, some parts of an image may need more focus than others. Additionally, medical image fusion depends on the calculation of pixel weight using that pixel value and its surrounding pixel values, presenting a challenge marked by fuzziness and uncertainty. Traditional methods could not produce accurate results in such situations, whereas IFSs address this challenge by allowing pixels to have partial membership and non-membership in multiple categories. In addition to that, traditional image fusion methods have several drawbacks, including extensive dependency on data sets and difficulties in describing edges and outlines. As a result, important information can be lost during the fusion process. On the other hand, classical fuzzy theory-based models offer several advantages. These include noise reduction, better handling of uncertainty in image fusion, and reduced reliance on extensive datasets and high-powered computing resources. However, accurately determining the membership values for the grayscale intensity of each pixel is challenging in conventional fuzzy-based image processing. IF-based approaches successfully overcome this issue by introducing this uncertainty as a hesitancy degree. Moreover, very few studies have been reported in the literature that incorporate the IF theory in image fusion. To the best of our knowledge, there is only one study3 that employs IFSM for medical image fusion. Nevertheless, the IFSM used in this method has several shortcomings, such as generating counter-intuitive and illogical results for certain pairs of IFSs, which affects the outcomes of image fusion.

Novelty of the study

The novelty of the proposed study is based on two key mechanisms:

-

Unlike existing IFSM and IF distance measure (IFDM) that focus on the difference in membership, difference of non-membership, and difference of hesitancy between two IFSs for each element of the set, the proposed method incorporates the impact of the global maximum and minimum of these differences along with their differences for each element. Here, the term global stands for maximum and minimum over the entire set. This comprehensive approach strengthens the IFSM by providing results with a higher degree of confidence.

-

A completely unsupervised image fusion method utilizing a novel image IFSM is proposed. IFSMs are employed to enhance the image, and an image fusion rule based on IFSM is defined. The integration of IFSs improves image fusion performance by effectively handling uncertainty in gray pixel intensity.

Contributions

Driven by the aforementioned motivation and novelty, the main contributions of this study are as follows:

-

Introduction of a novel IFSM: Introduces a new IFSM that meets all the essential criteria necessary for an IFSM. A comprehensive comparison of the proposed IFSM with existing methods is presented.

-

Pattern recognition algorithm based on IFSM: Presents a pattern recognition algorithm using the proposed IFSM. The reliaibilty of the proposed method is demonstrated by using the performance index ‘degree of confidence’.

-

Design of an image fusion method: Constructs a new unsupervised image fusion method using the proposed IFSM with Gaussian filtering for multimodal medical images. The IFS breaks down the images into three components: membership, non-membership, and hesitancy, each representing different features of the image. The IFSM is then applied to enhance the images and define a new fusion rule, ensuring that key image features are preserved.

-

Comparative study and analysis: Conducts extensive experiments using three different pairs of medical images: CT and MRI, MR-T1 and MR-T2, & MR-T2 and MR-PD. Its effectiveness and superiority are demonstrated by comparing five state-of-the-art (SOTA) methods across six different evaluation metrics. Additionally, an experiment on a large-scale dataset is performed to check the computational complexity.

The remainder of the paper is organized as follows. Literature Survey discusses the existing IFSMs and traditional image fusion methods. Section Intuitionistic fuzzy similarity measure presents some fundamental notations and definitions of IFSs, which enable us to understand the further discussion and propose a new IFSM with some numerical examples. Section A novel image fusion method based on IFSM presents a novel image fusion technique for multimodality images employing IFSM and Gaussian curvature filter. Section Experiments, Results and Discussion provides experimental analysis on image fusion for medical images and presents results and comparisons. Section Conclusion summarizes the study and Section Limitations and future work provides limitations of the proposed work and future direction for further research in this area.

Literature survey

This study primarily focuses on the required background of the approaches, namely, IFSM and image fusion methods.

Intuitionistic fuzzy similarity measures

As an extension of fuzzy sets, IFSs inherit several distance and similarity measures that are direct generalizations of those available for fuzzy sets. Moreover, concepts like triangular fuzzy numbers, probabilistic divergence measures, trigonometric functions, etc., have been used to construct IFSM. Additionally, the duality relation between distance and similarity established by Hung and Yang26 is also implemented to derive IFSM. The duality relation implies that one measure can be used to derive the other. Therefore, our literature review will cover both similarity and distance measures for IFSs and their shortcomings and advantages. A detailed discussion is provided in Table 1.

In Table 1, the term counterintuitive indicates that the corresponding similarity measure generates identical similarity values for two different pairs of IFSs. Unreasonable or irrational denotes that the corresponding IFSM fails to satisfy human intuition. A zero denominator problem indicates that the corresponding IFSM cannot produce a finite value for a certain pair of IFSs because of the zero divisor problem. All these drawbacks are observed for certain pairs of IFSs.

Image fusion methods

Various medical image fusion techniques have been explored in the literature and classified into spatial domain methods, transform domain methods, hybrid approaches, and fuzzy logic-based methods. Spatial domain-based methodologies14,15,48 offer advantages such as simplicity, ease of implementation, and minimal computing costs. However, these methods cannot fully describe edges and outlines, leading to a loss of information during fusion. Transform-domain approaches17,19,49,50,51,52 exhibit limitations, such as Laplacian pyramid is ineffective in capturing precise shapes and contrasts within images. Additional techniques, including CoT, DWT, and ST, tend to introduce artifacts and Gibbs phenomena in the fused outputs due to their insufficient feature representation capabilities. Furthermore, some hybrid methods including PCA-DWT24, CNP-MIF53, DTNP-MIF54 and MDLSR-RFM116 have been developed by combining different base models. However, these methodologies are computationally complex due to the intricate steps involved. Moreover, very limited studies3,12,13,25 available in the literature that uses the IF concepts in image fusion methods.

We have provided a brief overview of fundamental image fusion methods based on spatial domain, transform domains, hybrid methods, and IF-based techniques in Table 2.

Intuitionistic fuzzy similarity measure

Basic concepts

This section covers basic definitions and mathematical properties related to IFS, including the definition of IFS, operations on IFSs, and an axiomatic definition of IFSM. We denote Universe of discourse (UOD) as \(\mathcal {U} = \{u_{1}, u_{2},..., u_{n} \}\) and \(IFS(\mathcal {U})\) represents the collection of IFSs on \(\mathcal {U}.\)

Definition 1

An IFS \(\mathcal {P}\) on \(\mathcal {U}\) is defined by Atanassov2, as \(\mathcal {P}= \lbrace {\langle u,\mu _{\mathcal {P}}(u),\nu _{\mathcal {P}}(u)\rangle |\hspace{0.1cm}u\in \mathcal {U}}\rbrace\), where \(\mu _{\mathcal {P}}:\mathcal {U}\longrightarrow [0,1]\) and \(\nu _{\mathcal {P}}:\mathcal {U}\longrightarrow [0,1]\) are membership and non-membership mappings respectively, and \(\forall u\in \mathcal {U}\), \(0\le \mu _{\mathcal {P}}(u)+\nu _{\mathcal {P}}(u)\le 1\). A hesitancy degree is given by \(\tau _{\mathcal {P}}(u)= 1- \mu _{\mathcal {P}}(u)-\nu _{\mathcal {P}}(u)\), \(\forall u\in \mathcal {U}\).

Remark 1

The fuzzy set is a particular case of IFS, when \(\tau _{\mathcal {P}}(u)=0\), then IFS becomes a fuzzy set.

Operations of IFS: Let \(\mathcal {P}= \lbrace {\langle u,\mu _{\mathcal {P}}(u),\nu _{\mathcal {P}}(u)\rangle }\rbrace\) and \(\mathcal {Q}= \lbrace {\langle u,\mu _{\mathcal {Q}}(u),\nu _{\mathcal {Q}}(u)\rangle }\rbrace\) be two IFSs on \(\mathcal {U}\) with hesitancy degree \(\tau _{\mathcal {P}}(u)\) and \(\tau _{\mathcal {Q}}(u)\), respectively. To facilitate the subsequent discussion, some fundamental operations on IFSs are outlined below:

-

1.

\(\mathcal {P}\subseteq \mathcal {Q}\) iff \(\mu _{\mathcal {P}}(u)\le \mu _{\mathcal {Q}}(u)\), \(\nu _{\mathcal {P}}(u)\ge \nu _{\mathcal {Q}}(u)\), \(\forall u\in \mathcal {U}\).

-

2.

\(\mathcal {P}=\mathcal {Q}\) iff \(\mu _{\mathcal {P}}(u)=\mu _{\mathcal {Q}}(u)\), \(\nu _{\mathcal {P}}(u)=\nu _{\mathcal {Q}}(u)\), \(\forall u\in \mathcal {U}\).

-

3.

\(\mathcal {P}^{c}= \lbrace {\langle u,\nu _{\mathcal {P}}(u),\mu _{\mathcal {P}}(u)\rangle }\vert u\in \mathcal {U} \rbrace\), where \(\mathcal {P}^{c}\) is the complement of \(\mathcal {P}\).

Several IF distance measures and IFSMs functions (provided in Table S1 of the supplementary file) have been developed in the literature to compute the discrimination and resemblance between two IFSs, respectively. They have several flaws, including unreasonable outcomes, counter-intuitive values, and ‘the zero denominator’ problem. Therefore, to verify their feasibility and validity, Mitchell30 provided some fundamental properties, which are given as Definition 2.

Definition 2

30 Let \(\mathcal {S}\) be a mapping \(\mathcal {S}:IFSs(\mathcal {U})\times IFSs(\mathcal {U})\longrightarrow [0,1]\). Then \(\mathcal {S}\) is known as an IFSM if \(\mathcal {S}(\mathcal {P},\mathcal {Q})\) satisfies the following axioms:

-

S1:

\(0 \le \mathcal {S}(\mathcal {P},\mathcal {Q}) \le 1\)

-

S2:

\(\mathcal {S}(\mathcal {P},\mathcal {Q})= 1\) iff \(\mathcal {P}=\mathcal {Q}\)

-

S3:

\(\mathcal {S}(\mathcal {P},\mathcal {Q})=\mathcal {S}(\mathcal {Q},\mathcal {P})\)

-

S4:

If \(\mathcal {P}\subseteq \mathcal {Q} \subseteq \mathcal {R}\), \(\mathcal {P}, \mathcal {Q}, \mathcal {R} \in IFSs(\mathcal {U})\), then \(\mathcal {S}(\mathcal {P},\mathcal {R})\le \mathcal {S}(\mathcal {P},\mathcal {Q})\) and \(\mathcal {S}(\mathcal {P},\mathcal {R})\le \mathcal {S}(\mathcal {Q},\mathcal {R})\).

The proposed similarity measure

The expressions of the existing IFSMs are given in Table 1 of the supplementary file. From this table, it is evident that most of the IFSMs27,30,32,35,41,43 have considered the difference between memberships and the difference of non-membership degrees for each element of the universal set. Few methods8,47,57 also considered the terms like maximum and minimum of membership and non-membership. In contrast to that, we consider the effect of the entire set while defining the IFSM. We take into account the maximum and minimum of differences in all belongingness degrees (membership, non-membership, and hesitancy) over the entire universal set, along with their differences for each element. Below, we have defined a few essential terms that are necessary for formulating the similarity function.

\(\Delta {\mu _i}=|\mu _{\mathcal {P}}(u_{i})-\mu _{\mathcal {Q}}(u_{i})|\) = Difference between membership degrees

\(\Delta {\nu _i}=|\nu _{\mathcal {P}}(u_{i})-\nu _{\mathcal {Q}}(u_{i})|\) = Difference between non-membership degrees

\(\Delta {\tau _i}=|\tau _{\mathcal {P}}(u_{i})-\tau _{\mathcal {Q}}(u_{i})|\) = Difference between hesitancy degrees

\(\Delta \mu _{\max }=\max \limits _i\{\Delta {\mu _i}\}=\max \limits _i\{|\mu _{\mathcal {P}}(u_{i})-\mu _{\mathcal {Q}}(u_{i})|\}\) = Global maximum of difference of memberships

\(\Delta \nu _{\max }=\max \limits _i\{\Delta {\nu _i}\}=\max \limits _i\{|\nu _{\mathcal {P}}(u_{i})-\mu _{\mathcal {Q}}(u_{i})|\}\) = Global maximum of difference of non-memberships

\(\Delta \tau _{\max }=\max \limits _i\{\Delta {\tau _i}\}=\max \limits _i\{|\tau _{\mathcal {P}}(u_{i})-\tau _{\mathcal {Q}}(u_{i})|\}\) = Global maximum of difference of hesitancy degrees

\(\Delta \mu _{\min }=\min \limits _i\{\Delta {\mu _i}\}=\max \limits _i\{|\mu _{\mathcal {P}}(u_{i})-\mu _{\mathcal {Q}}(u_{i})|\}\) = Global minimum of difference of memberships

\(\Delta \nu _{\min }=\min \limits _i\{\Delta {\nu _i}\}=\max \limits _i\{|\nu _{\mathcal {P}}(u_{i})-\mu _{\mathcal {Q}}(u_{i})|\}\) = Global minimum of difference of non-memberships

\(\Delta \tau _{\min }=\min \limits _i\{\Delta {\tau _i}\}=\max \limits _i\{|\tau _{\mathcal {P}}(u_{i})-\tau _{\mathcal {Q}}(u_{i})|\}\) = Global minimum of difference of hesitancy degrees

The IFSM is constructed using these terms and given in Eq. (1).

where, \(f_{\mu _i}=\dfrac{2-\Delta \mu _{i}-\Delta \mu _{\max }}{2+\Delta \mu _{\min }-\Delta \mu _{\max }}\), \(f_{\nu _i}=\dfrac{2-\Delta \nu _{i}-\Delta \nu _{\max }}{2+\Delta \nu _{\min }-\Delta \nu _{\max }}\), and \(f_{\tau _i}=\dfrac{2-\Delta \tau _{i}-\Delta \tau _{\max }}{2+\Delta \tau _{\min }-\Delta \tau _{\max }}.\)

The inclusion of the global maximum and minimum differences in membership (\(f_{\mu _i}\)), non-membership (\(f_{\nu _i}\)), and hesitancy degrees (\(f_{\tau _i}\)) helps avoid counter-intuitive and unreasonable results (discussed in Table 1). Moreover, by considering the influence of the entire universal set, the method produces outcomes with a higher degree of confidence. We have normalized these functions to prevent a zero denominator, thereby ensuring that the proposed measure adheres to the axiomatic definition of an IFSM. Most existing IFSMs fail to identify unknown pattern categories in pattern classification problems, as they often produce identical values for different pairs of IFSs. In contrast, the proposed method effectively distinguishes these patterns, yielding results with a higher level of confidence.

The proposed IFSM is valid if it satisfies the axioms provided in Definition 2. In order to prove the properties of IFSM, we need to prove the following lemma 1.

Lemma 1

A function \(f:[0,1] \longrightarrow [0,1]\) such that \(f(\alpha ,\beta ,\gamma )= \dfrac{2-\alpha -\beta }{2+\gamma -\beta }\) is monotonically decreasing.

Proof

Differentiate f partially with respect to \(\alpha\), \(\beta\), \(\gamma\)

\(\frac{\partial f}{\partial \alpha }=\dfrac{-1}{2+\gamma -\beta },\quad \frac{\partial f}{\partial \beta }=\dfrac{-\gamma -\alpha }{(2+\gamma -\beta )^{2}},\quad \frac{\partial f}{\partial \gamma }=\dfrac{-2+\alpha +\beta }{(2+\gamma -\beta )^{2}}\)

\(\implies\) \(\frac{\partial f}{\partial \alpha }<0\), \(\frac{\partial f}{\partial \beta }\le 0\), \(\frac{\partial f}{\partial \gamma }<0\)

Since all the partial derivatives of f are negative for \(\alpha\), \(\beta\) and \(\gamma\), therefore f is monotonically decreasing. \(\square\)

Theorem 1

\(S_{A}(\mathcal {P},\mathcal {Q})\) is a similarity measure between two IFSs \(\mathcal {P}\) and \(\mathcal {Q}\) defined on UOD \(\mathcal {U}.\)

Proof

-

S1:

We have \(0\le \Delta \mu _{i}\le 1\), \(0\le \Delta \nu _{i}\le 1\), \(0\le \Delta \tau _{i}\le 1\). \(\Rightarrow 0\le \Delta \mu _{\min }\le 1\), \(0\le \Delta \nu _{\min }\le 1\), \(0\le \Delta \tau _{\min }\le 1\), \(0\le \Delta \mu _{\max }\le 1\), \(0\le \Delta \nu _{\max }\le 1\), \(0\le \Delta \tau _{\max }\le 1\). \(\Rightarrow 0\le f_{\mu _i} \le 1\), \(0\le f_{\nu _i}\le 1\), \(0\le f_{\tau _i}\le 1\). \(\Rightarrow\) \(0\le f_{\mu _i}(1-\Delta \mu _{i})+f_{\nu _i}(1-\Delta \nu _{i})+f_{\tau _i}(1-\Delta \tau _{i}) \le 3\). Therefore, we have \(0 \le S_{A}(\mathcal {P},\mathcal {Q}) \le 1\)

-

S2:

Let \(S_{A}(\mathcal {P},\mathcal {Q})=1\). \(\Leftrightarrow \frac{1}{3n}\sum \limits _{i=1}^{n}\left[ f_{\mu _i}(1-\Delta \mu _{i})+f_{\nu _i}(1-\Delta \nu _{i})+f_{\tau _i}(1-\Delta \tau _{i})\right] =1\). \(\Leftrightarrow f_{\mu _i}(1-\Delta \mu _{i})+f_{\nu _i}(1-\Delta \nu _{i})+f_{\tau _i}(1-\Delta \tau _{i})=3\), \(\forall i=1,2,...,n\). \(\Leftrightarrow f_{\mu _i}(1-\Delta \mu _{i})=1\), \(f_{\nu _i}(1-\Delta \nu _{i})=1\) & \(f_{\tau _i}(1-\Delta \tau _{i})=1\). \(\Leftrightarrow f_{\mu _i}=1\), \(1-\Delta \mu _{i}=1\), \(f_{\nu _i}=1\), \(1-\Delta \nu _{i}=1\) and \(f_{\tau _i}=1\), \(1-\Delta \tau _{i}=1\). \(\Leftrightarrow \Delta \mu _{i}=0\), \(\Delta \nu _{i}=0\) and \(\Delta \tau _{i}=0\), \(\forall i\). \(\Leftrightarrow \mu _{\mathcal {P}}(u_{i})=\mu _{\mathcal {Q}}(u_{i})\), \(\nu _{\mathcal {P}}(u_{i})=\nu _{\mathcal {Q}}(u_{i})\) & \(\tau _{\mathcal {P}}(u_{i})=\tau _{\mathcal {Q}}(u_{i})\). \(\Leftrightarrow \mathcal {P}=\mathcal {Q}\).

-

S3:

This part of the proof is trivial.

-

S4:

Suppose \(\mathcal {P}\subseteq \mathcal {Q} \subseteq \mathcal {R},\) \(\mathcal {P}, \mathcal {Q}, \mathcal {R} \in IFSs(\mathcal {U}).\) Then, \(\mu _{\mathcal {P}}(u_{i})\le \mu _{\mathcal {Q}}(u_{i})\le \mu _{\mathcal {R}}(u_{i}),\) \(\nu _{\mathcal {P}}(u_{i})\ge \nu _{\mathcal {Q}}(u_{i})\ge \nu _{\mathcal {R}}(u_{i})\) and \(\tau _{\mathcal {P}}(u_{i})\ge \tau _{\mathcal {Q}}(u_{i})\ge \tau _{\mathcal {R}}(u_{i}).\) \(\implies\) \(\Delta \mu _{i}^{\mathcal {P}\mathcal {R}}\ge \Delta \mu _{i}^{\mathcal {P}\mathcal {Q}},\) \(\Delta \nu _{i}^{\mathcal {P}\mathcal {R}}\ge \Delta \nu _{i}^{\mathcal {P}\mathcal {Q}},\) and \(\Delta \tau _{i}^{\mathcal {P}\mathcal {R}}\ge \Delta \tau _{i}^{\mathcal {P}\mathcal {Q}}\)\(.\) \(\implies\) \(\Delta \mu _{\min }^{\mathcal {P}\mathcal {R}}\ge \Delta \mu _{\min }^{\mathcal {P}\mathcal {Q}},\) \(\Delta \nu _{\min }^{\mathcal {P}\mathcal {R}}\ge \Delta \nu _{\min }^{\mathcal {P}\mathcal {Q}},\) \(\Delta \tau _{\min }^{\mathcal {P}\mathcal {R}}\ge \Delta \tau _{\min }^{\mathcal {P}\mathcal {Q}},\) \(\Delta \mu _{\max }^{\mathcal {P}\mathcal {R}}\ge \Delta \mu _{\max }^{\mathcal {P}\mathcal {Q}},\) \(\Delta \nu _{\max }^{\mathcal {P}\mathcal {R}}\ge \Delta \nu _{\max }^{\mathcal {P}\mathcal {Q}},\) \(\Delta \tau _{\max }^{\mathcal {P}\mathcal {R}}\ge \Delta \tau _{\max }^{\mathcal {P}\mathcal {Q}}.\) Let \(x=(\Delta \mu _{i}^{\mathcal {P}\mathcal {R}}, \Delta \mu _{\min }^{\mathcal {P}\mathcal {R}}, \Delta \mu _{\max }^{\mathcal {P}\mathcal {R}} )\) and \(y=(\Delta \mu _{i}^{\mathcal {P}\mathcal {Q}}, \Delta \mu _{\min }^{\mathcal {P}\mathcal {Q}}, \Delta \mu _{\max }^{\mathcal {P}\mathcal {Q}}),\) then \(x\ge y.\) From lemma 1, \(f_{(at\hspace{0.1cm}x)}\le f_{(at\hspace{0.1cm}y)}\) \(\implies\) \(\dfrac{2-\Delta \mu _{i}^{\mathcal {P}\mathcal {R}}-\Delta \mu _{\min }^{\mathcal {P}\mathcal {R}}}{2+\Delta \mu _{\max }^{\mathcal {P}\mathcal {R}}-\Delta \mu _{\min }^{\mathcal {P}\mathcal {R}}} \le \dfrac{2-\Delta \mu _{i}^{\mathcal {P}\mathcal {Q}}-\Delta \mu _{\min }^{\mathcal {P}\mathcal {Q}}}{2+\Delta \mu _{\max }^{\mathcal {P}\mathcal {Q}}-\Delta \mu _{\min }^{\mathcal {P}\mathcal {Q}}}.\) \(\Rightarrow\) \(f_{\mu _i}^{\mathcal {P}\mathcal {R}} \le f_{\mu _i}^{\mathcal {P}\mathcal {Q}}\). Similarly, we have \(f_{\nu _i}^{\mathcal {P}\mathcal {R}} \le f_{\nu _i}^{\mathcal {P}\mathcal {Q}}\) and \(f_{\tau _i}^{\mathcal {P}\mathcal {R}} \le f_{\tau _i}^{\mathcal {P}\mathcal {Q}}.\) Then, we can have \(S_{A}(\mathcal {P},\mathcal {R})\le S_{A}(\mathcal {P},\mathcal {Q})\). In the similar manner, we prove \(S_{A}(\mathcal {P},\mathcal {R})\le S_{A}(\mathcal {Q},\mathcal {R}).\)

\(\square\)

Experiments on IFSM

Ideally, a feasible IFSM should precisely compute the level of similarity across different pairs of IFSs, and it is considered valid if it satisfies the basic properties of IFSM provided in Definition 2. So, in this section, we have shown the feasibility and superiority of the proposed IFSM by using two examples. Example 1 illustrates that the proposed IFSM geometrically fulfills the fundamental properties of a similarity measure. Example 2 aims to compare the proposed IFSM with several existing IFSMs3,6,29,33,35,37,38,39,42,43,46,58,59. We demonstrate that the proposed IFSM is capable of distinguishing between two cases of different pairs of IFSs.

Example 1

Let \(P=\{\langle \mu , \nu \rangle \}\) be an IFSs on UOD \(\mathcal {U}\) where \(0 \le \mu , \nu \le 1\) and \(0\le \mu +\nu \le 1\). Then, the similarity values between P and IFSs \(Q_1=\{\langle 1,0\rangle \}\), \(Q_2=\{\langle 0,1\rangle \}\) and \(Q_3=\{\langle 0.5,0.5\rangle \}\) are computed using the proposed IFSM and demonstrated in Fig. 1. These surfaces have been drawn by taking varying values of \(\mu\) & \(\nu\) and their similarity between three IFSs (\(Q_1\), \(Q_2\) and \(Q_3\)). The plots for similarity of P from \(Q_1\), \(Q_2\) and \(Q_3\) are given in Fig. 1a–c, respectively. We can see from these figures that all the similarity values lie between 0 and 1. For instance, In Fig. 1a, for \(\mu =1\) and \(\nu =0\), the similarity value for \(Q_1\) is 1, for \(\mu =0\), \(\nu =1\); the similarity value for \(Q_2\) is 1 in Fig. 1b and in Fig. 1c, the similarity value is highest at the point \(\langle 0.5, 0.5 \rangle\), which show that the similarity measure satisfies the axiom S2. Moreover, all these graphs show the symmetricity property of the similarity measure.

Example 2

There are five pairs of different IFSs on UOD \(X=\{u\}\) as demonstrated in Table 3. In case 1 and case 2, the IFSs \(M_{1}=M_{2}\) but \(N_{1}\) and \(N_{2}\) are different and in case 3, case 4, and case 5, \(M_{3}=M_{4}=M_{5}\) but \(N_{3}\), \(N_{4}\) and \(N_{5}\) are different. The ideal IFSM should not produce the same value for case 1 & case 2 as they are different pairs and should not generate identical values for case 1, case 2, and case 3 as \(N_{3}\ne N_{4} \ne N_{5}\). We have computed similarity values using the proposed IFSM and existing ones. The outcomes are tabulated in Table 3.

Discussion on Table 3:

-

The IFSMs \(S_H\), \(S_{HY}^{1}\), \(S_{HY}^{2}\), \(S_{HY}^{3}\), \(S_{HY}^{4}\), \(S_{YC}\), \(S_{BA}\), \(S_{Y1}\), \(S_{Y2}\), \(S_{MP}\) and \(S_{Z}\) could not differentiate between the case 1 and case 2. They produce the same value for both cases.

-

The IFSMs \(S_H\), \(S_{HY}^{1}\), \(S_{HY}^{2}\), \(S_{HY}^{3}\), \(S_{HY}^{4}\), \(S_{VC}\), \(S_{YC}\), \(S_{BA}\), \(S_{Y1}\), \(S_{Y2}\), \(S_{MP}\), \(S_{G}\), \(S_{Z}\), \(S_{J}\) and \(S_{D}\) could not discriminate between the case 4 and case 5 as these measures generate the same value for both cases.

-

The similarity measures of \(S_{DC}\), \(S_{Y}\), \(S_{P}^2\) violates the fundamental properties of IFSM. For instance, in case 1, the similarity value by \(S_{DC}\) is 1 while both the sets are different, which is a violation of S2. Similarly, \(S_{P}^2\) provides similarity value 1 for cases 1, 2, and 3, while all the three pairs are different, i.e., \(M_i\ne N_i; i=1,2,3\).

-

The IFSMs methods \(S_{VC}\), \(S_{Y}\), and \(S_{X}\) failed to generate finite value for case 1 & case 2 and also violate property S1 of Definition 2.

It is clear from the above discussion that existing methods have several shortcomings and fail to discriminate between all the cases. At the same time, the suggested IFSM provides different values for all the cases and can discriminate between all the pairs of IFSs.

Experiment on pattern recognition

A strong similarity measure ought to facilitate rational decisions when identifying relationships between various patterns. Several studies47,60,61 have demonstrated the applicability of IFSMs in pattern recognition problems. Most of them correctly classified the results by using the maximum similarity principle but did not verify the superiority of their outcomes. As a result, Hatzimichailidis60 introduced the ‘degree of confidence’ as a performance index in pattern recognition by IFSMs. Therefore, we have provided an algorithm for pattern recognition with a ‘degree of confidence’ using IFSMs. The stepwise procedure for pattern recognition using IFSM is presented below.

Suppose we have m number of different patterns \(M_1,M_2, M_3,...,M_m\) and an unknown pattern N. Then, we aim to determine the class of the unknown pattern among the given patterns. Therefore, we use the proposed IFSM to evaluate the resemblance between the N and \(M_i\)s. The step-wise algorithm is given below.

- Step 1::

-

Evaluate the similarity values between the unknown pattern N and each given pattern \(M_i\), \(i=1,2,...,m\) by employing the intuitionistic fuzzy similarity measure.

- Step 2::

-

Compute a maximum (\(\mathcal {S}^{*}\)) of the similarity values using given formula (2):

$$\begin{aligned} \mathcal {S}^{*}=arg \max _{i}\{S(M_{i},N)\}. \end{aligned}$$(2)where \(S(M_{i},N)\) is the IFSM between the test pattern N and alternative \(M_i\).

- Step 3::

-

Select the pattern for which we obtain the maximum similarity value. Suppose, the maximum similarity is obtained for the pattern \(M_{k}\), then \(M_{k}\) is the desired alternative.

- Step 4::

-

Compute the ‘degree of confidence (DOC)’ for the selected pattern. The formula for DOC is provided in equation (3).

$$\begin{aligned} {\textbf {DOC}}^{(k)}=\sum _{i=1, i\ne k}^{m}|S(M_i, N)-S(M_k,N)|. \end{aligned}$$(3)Thus, the unknown pattern is classified as pattern \(M_{k}\) with \({\textbf {DOC}}^{(k)}\).

The higher DOC denotes the reliability of the similarity measure62.

To show the effectiveness of the our suggested IFSM, we have applied it to two pattern recognition problems using the above algorithm. We have used \({\textbf {DOC}}\) as a performance index to evaluate the performance of the proposed IFSM and the existing ones.

Example 3

Let the three known patterns are \(M_i\) (\(i=1,2,3\)) and the unknown pattern N is defined as IFSs on UOD \(X=\{u_1,u_2\}\) are \(M_1=\{\langle 0.35,0.35\rangle ,\langle 0.20,0.47\rangle \}\), \(M_2=\{\langle 0.33,0.35\rangle ,\langle 0.21,0.46\rangle \}\), \(M_3=\{\langle 0.31,0.31\rangle ,\langle 0.20,0.47\rangle \}\) and \(N=\{\langle 0.37,0.31\rangle ,\langle 0.23,0.44\rangle \}\). Our aim is to determine the class of unknown pattern N. Therefore, we have computed the similarity between all the known patterns \(M_i\) (\(i=1,2,3\)) and an unknown pattern N. The results of the proposed method and existing ones are listed in Table 4. Moreover, we have computed the DOC to show the effective and reliability of the outcomes.

From Table 4, it is evident that several existing methods \(S_H\), \(S_{DC}\), \(S_M\) , \(S_{VS}\) , \(S_{HY}^1\), \(S_{HY}^2\), \(S_{HY}^3\), \(S_{HY}^4\), \(S_{Y}\), \(S_{BA}\), \(S_{Y1}, S_{Y2}\)\(S_{MP}\), \(S_{p}^1\), \(S_{G}\), \(S_{Z}\), \(S_{P}^2\) , \(S_{J}\) and \(S_{D}\) are unable to identify the category of unknown pattern. In addition to that, IFSM of \(S_{P}^2\) provide similarity value 1, while \(M_i \ne N \forall i=1,2,3\), which is a violation of S2. Nevertheless, IFSMs \(S_{WX}\), \(S_{YC}\), \(S_{X}\) and the proposed method successfully identify the category of unknown pattern as \(M_2\). However, the proposed IFSM has a higher degree of confidence compared to the other three IFSMs \(S_{WX}\), \(S_{YC}\), \(S_{X}\), as shown in Fig. 2. Therefore, the suggested IFSM is more reliable.

Degree of confidence for the pattern recognition problem of example 3.

Example 4

We have the assessment of three candidates who applied for a vacant position in a company. The assessment of three applicants as IFSs are \(M_1=\{\langle 1,0 \rangle ,\langle 0.8,0\rangle ,\langle 0.7,0.1 \rangle \}\), \(M_2=\{\langle 0.8,0.1\rangle ,\langle 1,0\rangle ,\langle 0.9,0 \rangle \}\) and \(M_3=\{\langle 0.6,0.2\rangle ,\langle 0.8,0\rangle ,\langle 1,0\rangle \}\) and the desired criteria set for the open position is \(N=\{\langle 0.5,0.3\rangle ,\langle 0.6,0.2\rangle ,\langle 0.8,0.1\rangle \}\). We aim to choose a suitable candidate for the open position using IFSM. We have calculated similarity values between N and other \(M_i\), \(i=1,2,3\) by the proposed method and some existing ones, and the obtained values are listed in Table 5.

From this table, we can see that the similarity methods by Vlachos and Sergiadis37 \(S_{VS}\) and Xiao42 \(S_{X}\) could not identify the suitable candidate because of ‘zero denominator’ issue for all the pairs. In addition to that, the methods \(S_H\), \(S_M\), \(S_{WX}\), \(S_{HY}^2\) and \(S_{BA}\) produce counter-intuitive outcomes. These methods identify the suitable one but cannot rank the other two candidates. Our method successfully discriminates between all the applicants and chooses the suitable one. Most of the existing methods support our decision. Therefore, we computed the degree of confidence by using Eq. (3) for the performance evaluation of the IFSMs. The evaluation of DOC is provided in Fig. 3. It is evident from Fig. 3 that the proposed method has higher DOC compared to others. Therefore, it is more effective and reliable.

Degree of confidence for the pattern recognition problem of example 4.

Thus, these examples demonstrate that SOTA methods for computing similarities between two IFSs have several flaws, and the proposed similarity measure outperforms them.

A novel image fusion method based on IFSM

This section presents a new unsupervised image fusion approach using the proposed IFSM and Gaussian filter. Image processing utilizing fuzzy sets is designed to address the vagueness in the qualitative attributes of images through the formulation of membership functions. However, this approach encounters challenges in precisely quantifying the gray level of a specific pixel. The primary objective of the proposed methodology is to merge two or more images, thereby mitigating the ambiguity associated with gray levels and the uncertainty in assigning membership values to indistinct image pixels.

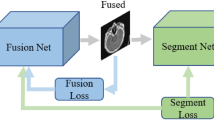

Firstly, we have two different source images of different modalities. Then, a two-scale Gaussian decomposition method is utilized to disintegrate the images into the base and detail layers. We have applied two fusion rules to fuse the base and detail layers. At last, a final fused image is reconstructed by integrating the obtained fused detail layer and base layer. The architecture of the proposed algorithm is presented in Fig. 4.

Two layer decomposition

A Gaussian filter is utilized to disintegrate the source image into two sub-images. Let \(I_1\) and \(I_2\) be two source images with dimension \(p\times q\). We aim to fuse these images. At first, we decompose them in the base layer and detail layer. We applied the Gaussian filter to the source images to obtain base subimages using Eq. (4).

where \(\mathcal {G}\) is the Gaussian filter. The base layer contains more crucial information, and the detail layer reflects texture information. The detailed sub-images can be obtained using Eq. (5).

Thus, we have obtained the base and detailed subimages for both the source images.

Fusion of base subimages

The base layer contains more crucial information but lacks texture information. The base images involve uncertainty and noise due to the lack of sharpness; therefore, IFSs are used to enhance the base images because they have the power to analyze vagueness and uncertainty. Membership functions for fuzzy sets are typically established based on an expert’s intuition to address ambiguity, with the understanding that the selection of the optimal membership function cannot be made with absolute certainty. Driven by these shortcomings, IFSs are utilized to diminish the uncertainty in assigning values to the gray levels of a not-clearly-defined image, as well as to tackle the hesitancy encountered in defining membership functions, which may stem from a lack of knowledge or potential errors. The proposed approach aims to mitigate this intrinsic uncertainty. Therefore, we have introduced an intuitionistic fuzzification method, which is presented as follows.

Initially, a pixel-valued matrix of an image B of dimension \(p\times q\) is fuzzified using Eq. (6).

where B(x, y) is the pixel value of the image B at (x, y) and \(B_{\max }(x,y)\) and \(B_{\min }(x,y)\) are maximum and minimum of the pixel values of B, respectively. Then, the membership values for IFSs can be calculated using Eq. (7).

Then, the non-membership function is given by the following Eq. (8).

The hesitancy degree is given in Eq. (9).

Here \(\alpha >0\) is the experimental value. Thus, we obtain an Intuitionistic fuzzy image (IFI) of dimension \(p\times q\).

To fuse the base sub-images \(B_1\) and \(B_2\) obtained from Eq. (4), we define the fusion rule using the proposed similarity measure. The fusion algorithm for base sub-images is given as follows:

- (Step1)::

-

Convert the base subimages \(B_1\) and \(B_2\) into IFSs using the Eqs. (6–9).

- (Step2)::

-

Compute the IFSM between each \(B_i\) and a crisp IFS C (i.e., \(\mu =1\), \(\nu =0\), and \(\tau =0\) for all the elements) of the same dimension, using the proposed IFSM. The formulas to calculate the similarity values between \(B_1\) and C & \(B_2\) and C are provided in Eqs. (10) and (11), respectively.

$$\begin{aligned} \begin{aligned} S_A^{B_1C}(x,y)=\frac{1}{3}\left[ \dfrac{2-\Delta \mu ^{B_1C}(x,y)-\Delta \mu _{\max }^{B_1C}}{2+\Delta \mu _{\min }^{B_1C}-\Delta \mu _{\max }^{B_1C}}(1-\Delta \mu ^{B_1C}(x,y)) +\dfrac{2-\Delta \nu ^{B_1C}(x,y)-\Delta \nu _{\max }^{B_1C}}{2+\Delta \nu _{\min }^{B_1C}-\Delta \nu _{\max }^{B_1C}}(1-\Delta \nu ^{B_1C}(x,y)) \right. \\ \left. +\dfrac{2-\Delta \tau ^{B_1C}(x,y)-\Delta \tau _{\max }^{B_1C}}{2+\Delta \tau _{\min }^{B_1C}-\Delta \tau _{\max }^{B_1C}}(1-\Delta \tau ^{B_1C}(x,y))\right] \end{aligned} \end{aligned}$$(10)$$\begin{aligned} \begin{aligned} S_A^{B_2C}(x,y)=\frac{1}{3}\left[ \dfrac{2-\Delta \mu ^{B_2C}(x,y)-\Delta \mu _{\max }^{B_2C}}{2+\Delta \mu _{\min }^{B_2C}-\Delta \mu _{\max }^{B_2C}}(1-\Delta \mu ^{B_2C}(x,y)) +\dfrac{2-\Delta \nu ^{B_2C}(x,y)-\Delta \nu _{\max }^{B_2C}}{2+\Delta \nu _{\min }^{B_2C}-\Delta \nu _{\max }^{B_2C}}(1-\Delta \nu ^{B_2C}(x,y)) \right. \\ \left. +\dfrac{2-\Delta \tau ^{B_iC}(x,y)-\Delta \tau _{\max }^{B_iC}}{2+\Delta \tau _{\min }^{B_2C}-\Delta \tau _{\max }^{B_2C}}(1-\Delta \tau ^{B_2C}(x,y))\right] \end{aligned} \end{aligned}$$(11) - (Step3)::

-

Refer these two images as \(IFSMI_1\) and \(IFSMI_2\).

- (Step4)::

-

Decompose these images into blocks of size \(m\times n\) and \(k^{th}\) block of images \(IFSMI_1\) and \(IFSMI_2\) are represented as \(IFSMI_1^k\) and \(IFSMI_2^k\), respectively.

- (Step5)::

-

Compute the sum of pixel values of each block of these images using the following Eqs. (12) and (13).

$$\begin{aligned} \Omega (IFSMI_1^k)=\sum _{i=1}^m \sum _{j=1}^n IFSMI_1^k(i,j) \end{aligned}$$(12)$$\begin{aligned} \Omega (IFSMI_2^k)=\sum _{i=1}^m \sum _{j=1}^n IFSMI_2^k(i,j) \end{aligned}$$(13) - (Step6)::

-

Apply the following fusion rules defined in Eq. (14).

$$\begin{aligned} B_{fused}= {\left\{ \begin{array}{ll} B_1^k(x,y) & \Omega (IFSMI_1^k) > \Omega (IFSMI_2^k)\\ B_2^k(x,y) & \Omega (IFSMI_1^k) < \Omega (IFSMI_2^k)\\ \dfrac{B_1^k(x,y)+ B_2^k(x,y)}{2} & \text {Otherwise} \end{array}\right. } \end{aligned}$$(14)where \(B_1^k(x,y)\) and \(B_2^k(x,y)\) is \(k^{th}\) block of image \(B_1\) and \(B_2\), respectively.

Thus, we obtain a fused base image \(B_{fused}\) of dimension \(p\times q\). The architecture of the base fusion model is provided in Fig. 5.

Fusion of detail subimages

The detailed images capture the approximate characteristics of medical images, and the amplitude of the coefficient provides sufficient information for fusion. Therefore, we apply a simple fusion rule defined as follows:

where \(D_{fused}\) represents the fused detailed layer coefficients; \(D_1(x,y)\) and \(D_2(x,y)\) are detailed layer coefficients for images \(I_1\) and \(I_2\) of order \(p\times q\), respectively.

Reconstruction of the final fused image

The final fused image is generated by combining the fused base image and the detailed image, as described in Eq. (16).

Hence, \(I_{fused}\) is the required fused image of the source images \(I_1\) and \(I_2\).

Experiments, results and discussion

This section compares the proposed image fusion method with some SOTA methods to show their superiority and effectiveness. Extensive experiments on medical images were carried out to demonstrate the advantages of the proposed image fusion technique over existing ones. In addition to that, we have implemented the proposed method on infrared and visible image fusion to check the generalizability. The outcomes for this experiment are provided in Section 2 of the supplementary file.

Image fusion experiments

To show the effectiveness of the proposed fusion algorithm, we applied it to fuse the medical images. The experiment was conducted using three types of datasets: CT and MRI, MR-T1 and MR-T2, MR-T2, and MR-PD. These datasets are publicly available on the Harvard Medical School website https://www.med.harvard.edu/aanlib and also available at github https://github.com/xianming-gu/CNN-Image-Fusion/tree/main/MyDatasets and google sites https://sites.google.com/view/durgaprasadbavirisetti/datasets?authuser=0.

The architecture of the proposed fusion method is shown in Fig. 4 by using one sample pair of CT (\(I_1\)) and MRI (\(I_2\)) images. At first, we decomposed both the images into two different subimages: base subimages (\(B_1\) and \(B_2\)) and detailed subimages (\(D_1\) and \(D_2\)). The base layer involves more crucial information, and the detail layer reflects texture information. Next, we employ the proposed similarity measure on base subimages to extract the features. Thus, we have obtained enhanced base subimages \(IFSMI_1\) and \(IFSMI_2\). Then, we decomposed these images into blocks of size \(2\times 2\) and reconstructed fused images using Eq. (14). Thus, we obtained base fused image \(B_{fused}\). After that, we fuse detailed images (\(D_1\) & \(D_2\)) using Eq. (15). The detailed sub-images present the approximate features of the medical images, and the coefficient amplitude provides sufficient information for fusion. The final fused image (\(I_{fused}\)) is computed by using Eq. (16). We have compared our proposed method with some recent image fusion methods, including IFS-based method3 and four other SOTA methods PCA-DWT24, CNP-MIF53, DTNP-MIF54, MDLSR-RFM16. Jiang et al.3’s method is constructed using IFSM, PCA-DWT is a hybrid method of PCA and DWT, CNP-MIF and DTNP-MIF are NSCT domain-based methods, and MDLSR-RFM is an SR-based model. The program codes for these methods are readily available to the public or provided directly by the authors. To ensure a fair and unbiased comparison, all parameters are set according to the recommendations specified in the relevant scholarly articles. Three distinct datasets, labeled 1, 2, and 3, are considered for comparison. Dataset 1 contains five pairs of CT and MRI images, as depicted in Fig. 6. Dataset 2 consists of five pairs of MR-T2 and MR-PD images, as shown in Fig. 7. Dataset 3 includes three pairs of MR-T1 and MR-T2 images, as shown in Fig. 8. The subjective and objective assessment of the results obtained is provided in the following sections.

Subjective assessment

The visual outcomes for the Dataset 1 are provided in Fig. 6. In Fig. 6, it is clear that the PCA-DWT method fails to capture CT details, while the CNP-MIF and DTNP-MIF methods fall short in delivering adequate contrast. It is noteworthy that the MDLSR-RFM method introduces unwanted artifacts, leading to the distortion of local features. Likewise, the IFS method fails to display MRI image edges in the fused image. Despite these shortcomings, our proposed fusion technique outperforms other sophisticated methods in delivering enhanced contrast.

Dataset 2 includes the fusion of MR-PD and MR-T2 images, where this fusion process highlights the brain’s soft tissues with sharp edge definition. The fusion results for Dataset 2, utilizing both SOTA and proposed methods, are illustrated in Fig. 7. Initially, the PCA-DWT performance indicates a significant loss of brain edge information. In particular, the fusion outcomes from CNP-MIF and DTNP-MIF methods poorly represent anatomical structures. Moreover, there is a notable decrease in contrast at the intensity level for the fused images obtained by the MDLSR-RFM. On the other hand, the IFS-based method and the proposed method demonstrate favorable results. Nonetheless, the proposed method offers superior fusion quality, particularly in terms of detail clarity and intensity levels.

Dataset 3 includes MR-T1 and MR-T2 images, which are differentiated by their radio-frequency pulse sequences that emphasize fat tissue and water in the body. Combining these images yields comprehensive details that are beneficial for complex image analyses. Figure 8 displays the fusion results for Dataset 3. It is observed that PCA-DWT fails to retain the gray and white matter details. Concurrently, the images fused using CNP-MIF and DTNP-MIF appear blurred and lack essential complementary details. Visually, the MDLSR-RFM does not maintain the critical features of the source images and produces unnecessary artifacts, leading to the distortion of local features. Furthermore, findings of the fusion with the IFS method do not adequately contain the white matter from the original images, showing a significant loss of energy from the source images. Thus, the proposed fusion method outperforms the others by effectively reducing uncertainties and demonstrating all the features of source images.

Objective assessment

Visual evaluation alone is insufficient to assess the quality of the fusion outcomes. So, six popular evaluation metrics are used to carry out a thorough, objective evaluation, including mean63, Standard Deviation (SD)63, Average Gradient (AG)64, Spatial Frequency (SF)65, feature mutual information (FMI)66 and Xydeas67. These metrics are frequently utilized to assess the accuracy of the proposed fusion method. Higher values of these metrics indicate superior performance. The evaluation metric Mean indicates average intensity or brightness in the fused image; SD indicates the contrast; AG represents local contrast and edge sharpness and SF represents the frequency of intensity changes and global contrast in the fused image; FMI values suggest how many features from both source images are well preserved in the fused image; Xydeas indicates the edge information from the source images to the fused image.

The findings of the objective measures for Dataset 1 are given in Table 6. This table clearly shows that, PCA-DWT performs poorly compared to all other methods. CNP-MIF and MDLSR-RFM yield low values for SF, indicating poor contrast and poor edge preservation in the fused images. The proposed approach achieves greater values for all parameters except for FMI, compared to the existing methods across all pairs. Thus, five out of six parameters produce higher values for the proposed methodology. This evaluation indicates that the fusion outcomes by the proposed approach preserve more detailed information and features of source images compared to existing methods.

The objective evaluation for Dataset 2 is illustrated in Table 7. This table shows that the proposed method has better results in several aspects compared to the SOTA methods. The mean, SD, SF, and AG values are larger than the existing method for all the fused images. The higher values of these metrics indicate that the fused images preserve local contrast, global contrast, and edge sharpness better than the SOTA methods. However, FMI and Xydeas have high values for IFS-based method, but the difference between the proposed method and IFS-based method3 for FMI and Xydeas values is very much less.

The objective evaluation of Dataset 3, consisting of MR-T1 and MR-T2 images is presented in Table 8. It is evident from this table that the PCA-DWT method achieves very low values across all metrics, demonstrating poor performance. In contrast, CNP-MIF and MDLSR-RFM yield low values for SF, indicating poor contrast in the fused images. The IFS-based method produces competitive results compared to the proposed approach. The majority of the evaluation metrics, such as mean, SD, SF, and AG, exhibit higher values than those of the existing methods. Consequently, this indicates that our proposed approach delivers enhanced performance relative to the other techniques.

The graphical representations of an average of all the objective metrics for various methods are illustrated in Fig. 9. For a better visual representation, we scale all the values to the same range by calculating percentiles. From Fig. 9, it is evident that the performance curves of the proposed image fusion method outperform those of many existing approaches. Mean and SD are crucial metrics for image fusion as they assess pixel intensity values in fused images. As depicted in Fig. 9, our method scores higher in Mean, SD, SF, AG, and Xydeas values than competing methods. This indicates that our method is more effective at integrating and highlighting features from the original medical images than the other methods. Moreover, our method’s FMI scores are superior to those of most other methods, except the IFS method. Overall, the evaluation metrics demonstrate that our newly proposed medical image fusion technique, utilizing a novel fuzzy similarity measure between IFSs, is more effective than most competing methods.

Moreover, we compared the computational complexity of the proposed image fusion method based on user parameter dependency and time complexity. The methods CNP-MIF and DTNP-MIF require five user-defined parameters, including the iteration number \(t_{\max }\), threshold \(\tau _0\) for each neuron, the value of spikes p in the spiking rule for neurons, the neighborhood radius r and the weight matrix \(W_{r\times r}\). The MDLSR-RFM algorithm requires two user-defined inputs: the regularization parameter t and a number of iterations n. The IFS-based method depends on three parameters: the maximum decomposition level N, window size for decomposition, and experimental parameter \(\alpha\) for fuzzification, whereas the proposed technique requires only two parameters: the window size for decomposition and the experimental parameter \(\alpha\) for fuzzification. Furthermore, we compared the runtime of all methods, as shown in Fig. 10. The proposed technique requires less time than three other methods: CNP-MIF, DTNP-MIF, and MDLSR-RFM.

Thus, the proposed method demonstrates clear advantages over existing methods due to its simplicity, requiring less number of user-defined parameters. This streamlined approach reduces the complexity and risk of human error, saves time in parameter tuning, and maintains consistent performance across different datasets. It is clear from the above discussion that the IF-based image fusion methods outperform the traditional methods objectively and subjectively. The main benefit of these techniques is that they do not necessitate a large volume of data & high-power computers, so the overall computational cost is much less.

Significance test for statistical comparison

A significance test is first conducted to assess whether the obtained results exhibit a meaningful difference. The null hypothesis (\(H_0\)) suggests no effect or difference, while the alternative hypothesis (\(H_1\)) indicates there is an effect or distinction exists between methods. The absence of a benchmark dataset and universally accepted protocols for conducting comparative studies makes statistical analysis essential for ensuring high-quality research in image fusion. The motivation for implementing statistical analysis for image fusion algorithms comes from the study by Liu et al.68. Several methods produce nearly identical objective values, making it challenging to determine which one performs better. Therefore, it is crucial to analyze the statistical significance of the proposed technique compared to existing ones for more meaningful comparisons68.

This section aims to determine whether the proposed methodology shows a significant difference compared to the other five fusion algorithms across all datasets and six evaluation fusion metrics used in the experiments. Initially, we organized the datasets so that the columns represent the methods and the rows represent metric values for all images. Then, we applied the Friedman test to check for significant differences. For Dataset 1, we obtained a p-value of \(2.6882e-16\), indicating significant differences among the fusion techniques and prompting further tests to identify the sources of these differences. Subsequently, we conducted Dunn’s post-hoc analysis to compare each existing method to the proposed method, and the results are presented in Table 9. Table 9 displays the average rankings and corrected p-values for each comparison. A star (\(*\)) in the second row indicates a significant difference, as shown by the corrected p-value, which is less than 0.05. The optimal ranking is presented in bold, with smaller values indicating better performance. Based on the table, there is no significant difference between the IFS-based and proposed methods, although both differ significantly from the other algorithms. Similarly, results for Dataset 2 and Dataset 3 are tabulated in Tables 10 and 11, respectively. These tables clearly show that the proposed methods differ significantly from the first four methods and hold the lowest ranking for Dataset 1, Dataset 2, and Dataset 3.

Sensitivity analysis

To evaluate the influence of the parameter \(\alpha\) used in the intuitionistic fuzzification process, a sensitivity analysis was conducted by varying \(\alpha\) over the range \(\{0, 0.5, 1, 1.5, 2, 2.5\}\) as shown in Table 12. The impact of \(\alpha\) was assessed across three different types of datasets using widely accepted objective image fusion metrics. The results indicate that while smaller values of \(\alpha\) led to minor variations in the fusion quality, the performance metrics gradually stabilized as \(\alpha\) increased. Notably, the outcomes for \(\alpha = 2\) and \(\alpha = 2.5\) were nearly identical across all datasets, suggesting that the model reaches a level of insensitivity to \(\alpha\) beyond a certain point. Based on this observation, \(\alpha = 2\) was selected for all experiments to ensure consistency and computational efficiency, without compromising the robustness or quality of the fused results.

Ablation study

We conducted a detailed ablation study to understand the impact and significance of the fusion rules in our proposed image fusion method. The rule we developed for fusing the base layers is referred to as Rule 1, while the rule for the detail layers is called Rule 2. We created four different combinations of fusion rules, as shown in Fig. 11 and Table 13. We then calculated various metric values for image S1 from Dataset 1. Our method achieved higher scores in three of the five metrics, indicating that the first combination is the most suitable for the image fusion process.

Implementation on large-scale data set

We have applied the proposed method to a large-scale dataset consisting of 160 pairs of CT and MRI images. The evaluation of the fusion metric is given in Table 14.

Since the proposed method is completely unsupervised, the evaluation is performed one by one; therefore, the computational complexity is the same for a single pair of images as it was in the previous experiment. The proposed method achieves higher values for the metrics AG, SF, and Xydeas, which are crucial for edge preservation, higher contrast, and detailed information in image fusion. The IFS-based method yields the highest values for Mean, SD, and FMI; however, the proposed method achieves the second-best values for these metrics, demonstrating its effectiveness in preserving feature information and brightness. The last column of Table 14 shows the total evaluation time taken for the fusion of 160 images; however, the average execution time is the same as before (as shown in Fig. 10). Therefore, the proposed method is applicable for a large-scale dataset with the same performance efficiency and computational complexity.

Conclusion

We have conducted a comprehensive literature survey on IFSMs and identified several drawbacks in the existing IFSMs, such as violation of fundamental axioms, producing counter-intuitive or unreasonable outcomes, and the inability to produce a finite value for certain pairs of IFSs. These flaws can lead to inaccurate outcomes and negatively impact the decision-making process. As a result, we introduced a novel IFSM based on the global maximum and minimum of the difference in membership, non-membership, and hesitancy degrees between the two IFSs. We have shown that the proposed IFSM adheres to the essential properties and axiomatic definitions of IFSM. Then, the proposed IFSM was compared to some existing ones by using five different pairs of IFSs, and its superiority was demonstrated. In addition to that, we provided a pattern recognition algorithm using the proposed IFSM and provided two examples to prove its effectiveness. The performance of the IFSMs is evaluated by using the ‘Degree of confidence’ as the performance index. We found that the suggested method has a higher degree of confidence compared to existing ones. Additionally, we developed an image fusion technique for multi-modality images. First, we applied a two-scale decomposition strategy using a Gaussian filter to separate the source images into two sub-images: a base image and a detailed image. Since the base layer holds essential information but lacks texture and is prone to uncertainty and noise due to its softness, we transformed the base images into IF form using the IFG for better clarity and precision. IFSM was then used to extract features from the base images and establish a new fusion rule to merge them. Additionally, we applied a different rule to merge the detailed images. Finally, the fused base and detailed sub-images were merged to produce the final fused image. Furthermore, an extensive experimental analysis was carried out to validate the superiority and effectiveness of the proposed image fusion approach. The proposed technique is compared with five SOTA methods using three distinct datasets: CT & MRI, MR-PD & MR-T2, and MR-T1 & MR-T2. The comparison is based on subjective analysis and objective assessment using objective measures: mean, SD, SF, FMI, AG, and Xydeas. In addition to that, we have implemented the proposed image fusion method on a large-scale dataset containing 160 CT and MRI images. The results reveal that the proposed image fusion technique consistently achieves greater objective values across various parameters than existing methods, underscoring its enhanced performance across multiple dimensions. To evaluate the generalizability of the proposed method, we have also applied it to infrared and visible images, and the results are provided in the supplementary file.

Limitations and future work

Although the proposed IFSM and image fusion method demonstrate improved performance, there is still potential for enhancement. Some of the key limitations of the proposed work are as follows:

-

In the intuitionistic fuzzification process, the parameter \(\alpha\) was selected based on the optimal values of evaluation metrics. However, alternative approaches, such as entropy-based methods could also be explored to determine a more theoretically grounded or adaptive choice of \(\alpha\).

-

The method employs a Gaussian filter to decompose images into sub-images; however, the potential of alternative filters or decomposition methods, such as the Laplacian pyramid or wavelet transform, for creating sub-bands could be further explored.

-

While IFSM-based fusion performs well for specific medical images (e.g., CT and MRI), applying it to other modalities and color image domains may require adjustments, limiting its broader use.

Moreover, the research directions based on the proposed work are provided below:

-

The proposed similarity measure could be extended to other generalized fuzzy sets, including Pythagorean fuzzy sets (PFS), Fermatean fuzzy sets (FFS), and interval-valued intuitionistic fuzzy sets.

-

The other intuitionistic fuzzification methods could be constructed based on the intuitionistic fuzzy generator to convert the crisp data and image data into intuitionistic fuzzy form.

-

The proposed IFSM could be utilized to construct other approaches, including multi-criteria decision-making, clustering, and image segmentation.

-

The proposed approach could be integrated with deep learning techniques to boost the fusion method’s effectiveness and to reduce the time complexity for large datasets.

Furthermore, several emerging complex fuzzy environments such as neutrosophic fuzzy environments and spherical neutrosophic environments, have demonstrated potential in complex decision-making problems by utilizing distance and similarity measures69,70. However, their application in image processing tasks remains limited due to the absence of suitable generators and appropriate methods for transforming image data into these environments. Researchers have also begun employing IFGs in other extended fuzzy sets, such as PFS and FFS, to handle image-processing tasks. Recently, Jana et al.71 attempted to construct a Pythagorean fuzzy generator; nevertheless, standardized generators for other fuzzy environments are still lacking. Therefore, our future work will aim to develop effective generators and similarity measures for these advanced fuzzy environments to enable their application in image processing.

Data availability

The datasets used and/or analyzed in this study are available from the corresponding author on reasonable request.

References

Zadeh, L. A. Fuzzy sets. Inf. Control 8, 338–353 (1965).

Atanassov, K. Intuitionistic fuzzy sets vii itkr s session. Sofia 1, 983 (1983).

Jiang, Q. et al. A lightweight multimode medical image fusion method using similarity measure between intuitionistic fuzzy sets joint laplacian pyramid. IEEE Trans. Emerg. Top. Comput. Intell. (2023).

Selvam, C., Jebadass, R. J. J., Sundaram, D. & Shanmugam, L. A novel intuitionistic fuzzy generator for low-contrast color image enhancement technique. Inf. Fusion 108, 102365 (2024).

Ragavendirane, M. & Dhanasekar, S. Low-light image enhancement via new intuitionistic fuzzy generator-based retinex approach. IEEE Access (2025).

Patel, A., Jana, S. & Mahanta, J. Intuitionistic fuzzy em-swara-topsis approach based on new distance measure to assess the medical waste treatment techniques. Appl. Soft Comput. 2023, 110521 (2023).

Rani, P., Mishra, A. R., Cavallaro, F. & Alrasheedi, A. F. Location selection for offshore wind power station using interval-valued intuitionistic fuzzy distance measure-rancom-wisp method. Sci. Rep. 14, 4706 (2024).

Patel, A., Jana, S. & Mahanta, J. Construction of similarity measure for intuitionistic fuzzy sets and its application in face recognition and software quality evaluation. Expert Syst. Appl. 237, 121491 (2024).

Garg, H. & Rani, D. Novel distance measures for intuitionistic fuzzy sets based on various triangle centers of isosceles triangular fuzzy numbers and their applications. Expert Syst. Appl. 191, 116–228 (2022).

Zhang, Y. & Huang, H.-L. The distance and entropy measures-based intuitionistic fuzzy c-means and similarity matrix clustering algorithms and their applications. Appl. Soft Comput. 169, 112581 (2025).

Patel, A., Jana, S. & Mahanta, J. Two new 3d distance measures for ifss and their applications in pattern classification and pathological diagnosis. In 2022 IEEE Silchar Subsection Conference (SILCON) 1–6 (IEEE, 2022).

Jiang, Q. et al. Medical image fusion using a new entropy measure between intuitionistic fuzzy sets joint gaussian curvature filter. IEEE Trans. Radiat. Plasma Med. Sci. (2023).

Palanisami, D., Mohan, N. & Ganeshkumar, L. A new approach of multi-modal medical image fusion using intuitionistic fuzzy set. Biomed. Signal Process. Control 77, 103762 (2022).

Wang, H.-q. & Xing, H. Multi-mode medical image fusion algorithm based on principal component analysis. In 2009 International Symposium on Computer Network and Multimedia Technology 1–4 (IEEE, 2009).

Liu, Y. et al. Deep learning for pixel-level image fusion: Recent advances and future prospects. Inf. Fusion 42, 158–173 (2018).

Wang, J., Qu, H., Zhang, Z. & Xie, M. New insights into multi-focus image fusion: a fusion method based on multi-dictionary linear sparse representation and region fusion model. Inf. Fusion 105, 102230 (2024).

Jin, X., Nie, R., Zhou, D. & Yu, J. Color image fusion researching based on s-pcnn and laplacian pyramid. In Cloud Computing and Big Data: Second International Conference, CloudCom-Asia 2015, Huangshan, China, June 17–19, 2015, Revised Selected Papers 2 179–188 (Springer, 2015).

Liu, Z., Chai, Y., Yin, H., Zhou, J. & Zhu, Z. A novel multi-focus image fusion approach based on image decomposition. Inf. Fusion 35, 102–116 (2017).

Suriya, T. & Rangarajan, P. An improved fusion technique for medical images using discrete wavelet transform. J. Med. Imaging Health Inf. 6, 585–597 (2016).

Zhang, Y. et al. Ifcnn: a general image fusion framework based on convolutional neural network. Inf. Fusion 54, 99–118 (2020).

Sinha, A. et al. Multi-modal medical image fusion using improved dual-channel pcnn. Med. Biol. Eng. Comput. 62, 2629–2651 (2024).

Feng, X. et al. Mmif-vaefusion: an end-to-end multi-modal medical image fusion network using vector quantized variational auto-encoder. Biomed. Signal Process. Control 102, 107407 (2025).

Deng, X. & Han, B. Tq-cgan: a trible-generator quintuple-discriminator conditional generative adversarial network for multimodal grayscale medical image fusion. Biomed. Signal Process. Control 102, 107322 (2025).

Atyali, R. K. & Khot, S. R. An enhancement in detection of brain cancer through image fusion. In 2016 IEEE International Conference on Advances in Electronics, Communication and Computer Technology (ICAECCT) 438–442 (IEEE, 2016).

Balasubramaniam, P. & Ananthi, V. Image fusion using intuitionistic fuzzy sets. Inf. Fusion 20, 21–30 (2014).

Hung, W.-L. & Yang, M.-S. Similarity measures of intuitionistic fuzzy sets based on hausdorff distance. Pattern Recogn. Lett. 25, 1603–1611 (2004).

Hong, D. H. & Kim, C. A note on similarity measures between vague sets and between elements. Inf. Sci. 115, 83–96 (1999).

Chen, S.-M. Similarity measures between vague sets and between elements. IEEE Trans. Syst. Man. Cybern. Part B (Cybern.) 27, 153–158 (1997).

Dengfeng, L. & Chuntian, C. New similarity measures of intuitionistic fuzzy sets and application to pattern recognitions. Pattern Recogn. Lett. 23, 221–225 (2002).

Mitchell, H. B. On the dengfeng-chuntian similarity measure and its application to pattern recognition. Pattern Recogn. Lett. 24, 3101–3104 (2003).

Hung, W.-L. & Yang, M.-S. Similarity measures of intuitionistic fuzzy sets based on lp metric. Int. J. Approx. Reason. 46, 120–136 (2007).

Wang, W. & Xin, X. Distance measure between intuitionistic fuzzy sets. Pattern Recogn. Lett. 26, 2063–2069 (2005).

Atanassov, K. T. Intuitionistic fuzzy sets. In Intuitionistic Fuzzy Sets 1–137 (Springer, 1999).

Grzegorzewski, P. Distances between intuitionistic fuzzy sets and/or interval-valued fuzzy sets based on the hausdorff metric. Fuzzy Sets Syst. 148, 319–328 (2004).

Hung, W.-L. & Yang, M.-S. On similarity measures between intuitionistic fuzzy sets. Int. J. Intell. Syst. 23, 364–383 (2008).

Pappis, C. P. & Karacapilidis, N. I. A comparative assessment of measures of similarity of fuzzy values. Fuzzy Sets Syst. 56, 171–174 (1993).

Vlachos, I. K. & Sergiadis, G. D. Intuitionistic fuzzy information-applications to pattern recognition. Pattern Recogn. Lett. 28, 197–206 (2007).

Yang, Y. & Chiclana, F. Intuitionistic fuzzy sets: spherical representation and distances. Int. J. Intell. Syst. 24, 399–420 (2009).

Ye, J. Cosine similarity measures for intuitionistic fuzzy sets and their applications. Math. Comput. Model. 53, 91–97 (2011).

Boran, F. E. & Akay, D. A biparametric similarity measure on intuitionistic fuzzy sets with applications to pattern recognition. Inf. Sci. 255, 45–57 (2014).

Ye, J. Similarity measures of intuitionistic fuzzy sets based on cosine function for the decision making of mechanical design schemes. J. Intell. Fuzzy Syst. 30, 151–158 (2016).

Xiao, F. A distance measure for intuitionistic fuzzy sets and its application to pattern classification problems. IEEE Trans. Syst. Man Cybern. Syst. 51, 3980–3992 (2019).

Mahanta, J. & Panda, S. A novel distance measure for intuitionistic fuzzy sets with diverse applications. Int. J. Intell. Syst. 36, 615–627 (2021).

Patel, A., Kumar, N. & Mahanta, J. A 3d distance measure for intuitionistic fuzzy sets and its application in pattern recognition and decision-making problems. New Math. Nat. Comput. https://doi.org/10.1142/S1793005723500163 (2022).

Gohain, B., Chutia, R. & Dutta, P. Distance measure on intuitionistic fuzzy sets and its application in decision-making, pattern recognition, and clustering problems. Int. J. Intell. Syst. 37, 2458–2501 (2022).

Zeng, W., Cui, H., Liu, Y., Yin, Q. & Xu, Z. Novel distance measure between intuitionistic fuzzy sets and its application in pattern recognition. Iran. J. Fuzzy Syst. 19, 127–137 (2022).

Dutta, D., Dutta, P. & Gohain, B. Similarity measure on intuitionistic fuzzy sets based on benchmark line and it s diverse applications. Eng. Appl. Artif. Intell. 133, 108522 (2024).

Qu, G., Zhang, D. & Yan, P. Medical image fusion by independent component analysis. In Proc. 5th Int. Conf. Electron. Meas. Instrum. 887–893 (2001).

Wang, A., Sun, H. & Guan, Y. The application of wavelet transform to multi-modality medical image fusion. In 2006 IEEE International Conference on networking, sensing and control 270–274 (IEEE, 2006).

Mankar, R. & Daimiwal, N. Multimodal medical image fusion under nonsubsampled contourlet transform domain. In 2015 International Conference on Communications and Signal Processing (ICCSP) 0592–0596 (IEEE, 2015).

Guo, K. & Labate, D. Optimally sparse multidimensional representation using shearlets. SIAM J. Math. Anal. 39, 298–318 (2007).

Wang, L., Li, B. & Tian, L. A novel multi-modal medical image fusion method based on shift-invariant shearlet transform. Imaging Sci. J. 61, 529–540 (2013).

Li, B. et al. Medical image fusion method based on coupled neural p systems in nonsubsampled shearlet transform domain. Int. J. Neural Syst. 31, 2050050 (2021).

Li, B., Peng, H. & Wang, J. A novel fusion method based on dynamic threshold neural p systems and nonsubsampled contourlet transform for multi-modality medical images. Signal Process. 178, 107793 (2021).

Nawaz, Q., Bin, X., Weisheng, L. & Hamid, I. Multi-modal medical image fusion using 2dpca. In 2017 2nd International Conference on Image, Vision and Computing (ICIVC) 645–649 (IEEE, 2017).

Calhoun, V. D. & Adali, T. Feature-based fusion of medical imaging data. IEEE Trans. Inf Technol. Biomed. 13, 711–720 (2008).

Gohain, B., Dutta, P., Gogoi, S. & Chutia, R. Construction and generation of distance and similarity measures for intuitionistic fuzzy sets and various applications. Int. J. Intell. Syst. 36, 7805–7838 (2021).

Szmidt, E. & Kacprzyk, J. Distances between intuitionistic fuzzy sets. Fuzzy Sets Syst. 114, 505–518 (2000).

Chen, T.-Y. A note on distances between intuitionistic fuzzy sets and/or interval-valued fuzzy sets based on the hausdorff metric. Fuzzy Sets Syst. 158, 2523–2525 (2007).

Hatzimichailidis, A. G., Papakostas, G. A. & Kaburlasos, V. G. A novel distance measure of intuitionistic fuzzy sets and its application to pattern recognition problems. Int. J. Intell. Syst. 27, 396–409 (2012).

Wu, X. et al. Nonlinear strict distance and similarity measures for intuitionistic fuzzy sets with applications to pattern classification and medical diagnosis. Sci. Rep. 13, 13918 (2023).

Singh, S. & Singh, K. Novel construction methods for picture fuzzy divergence measures with applications in pattern recognition, madm, and clustering analysis. Pattern Anal. Appl. 28, 46 (2025).

Jinju, J., Santhi, N., Ramar, K. & Bama, B. S. Spatial frequency discrete wavelet transform image fusion technique for remote sensing applications. Eng. Sci. Technol. Int. J. 22, 715–726 (2019).

Haddadpour, M., Daneshvar, S. & Seyedarabi, H. Pet and mri image fusion based on combination of 2-d hilbert transform and ihs method. Biomed. J. 40, 219–225 (2017).

Das, S. & Kundu, M. K. Nsct-based multimodal medical image fusion using pulse-coupled neural network and modified spatial frequency. Med. Biol. Eng. Comput. 50, 1105–1114 (2012).

Haghighat, M. B. A., Aghagolzadeh, A. & Seyedarabi, H. A non-reference image fusion metric based on mutual information of image features. Comput. Electr. Eng. 37, 744–756 (2011).

Xydeas, C. S. & Petrovic, V. S. Objective pixel-level image fusion performance measure. In Sensor Fusion: Architectures, Algorithms, and Applications IV, vol. 4051 89–98 (SPIE, 2000).

Liu, Z., Blasch, E. & John, V. Statistical comparison of image fusion algorithms: recommendations. Inf. Fusion 36, 251–260 (2017).