Abstract

Reliable zero-watermarking is a distortion-free approach to copyright protection and has been a primary focus of digital watermarking research. Traditional zero-watermarking techniques often struggle to remain resilient against geometric and signal processing attacks while ensuring high security and imperceptibility. Many existing methods fail to extract stable and distinguishable features, making them vulnerable to image distortions such as compression, filtering, and geometric transformations. This paper presents a robust zero-watermarking technique for color images that combines Local Binary Patterns (LBP) with deep features extracted from the CONV5-4 layer of the VGG19 neural network to overcome these limitations. Frequency-domain transformations, using the Discrete Wavelet Transform (DWT) and the Discrete Cosine Transform (DCT), enhance feature representation and improve resilience. Furthermore, a chaotic encryption scheme based on the Lorenz system and the Logistic map scrambles the feature matrix and the watermark, thereby increasing security. The zero watermark is generated through an XOR operation, enabling imperceptible and secure ownership verification. Experimental results show that the proposed method is highly resilient to various attacks, including scaling, noise, filtering, compression, and rotation. The extracted watermarks maintain a low Bit Error Rate (BER) and a high Normalized Cross-Correlation (NCC): the BER values were below 0.0022 and the NCC values were above 0.9959, while the average Peak Signal-to-Noise Ratio (PSNR) of the attacked images reached 34.0692 dB. Compared to existing zero-watermarking algorithms, the proposed method shows superior robustness and security, making it well suited to multimedia copyright protection.

Introduction

The widespread use of computers, the internet, and multimedia has enabled the global sharing of digital data. However, the ease of access to image processing tools has increased the risk of unauthorized copying and modification, raising concerns about intellectual property protection and data integrity.

Various information security techniques have been proposed to address copyright protection issues. These techniques are broadly categorized into cryptography and information hiding methods. Cryptography transforms messages into secure formats accessible only to authorized individuals. However, once decrypted, the message becomes vulnerable to potential misuse. Additionally, cryptographic methods are often more computationally complex than information hiding techniques. In contrast, information hiding approaches such as watermarking and steganography offer alternatives that overcome some of the complexity and limitations of cryptography1.

The term steganography is derived from the Greek words steganos (meaning “covered”) and graphein (meaning “writing”), which together form the phrase “hidden writing.” In steganography, the hidden information is imperceptible, and only the intended recipient knows that concealed data is present. However, a key limitation of steganography is that the hidden message is difficult for anyone other than the intended recipient to detect or retrieve, making it less practical for specific multimedia applications.

The primary distinction between steganography and watermarking lies in the method of embedding. In steganography, the cover object and the hidden message are generally unrelated, whereas in watermarking, the watermark and the host media may be related or unrelated. Moreover, watermarking techniques can be visible or invisible, while steganography is inherently invisible1.

Digital image watermarking effectively ensures owner identification, privacy protection2, content authentication3, and digital image validity4,5,6,7. Traditional methods embed watermark information into images for protection8,9,10,11,12, but they have limitations. These methods often distort images, compromising data integrity, which is problematic for military imaging, medical diagnosis, and artwork scanning applications. Moreover, balancing robustness and imperceptibility remains a challenge13.

Watermarking techniques are generally categorized based on the embedding domain into spatial domain and frequency domain techniques. Spatial domain methods directly modify pixel values, which makes them simpler but more vulnerable to attacks. Frequency domain methods, such as those based on the Discrete Wavelet Transform (DWT) and the Discrete Cosine Transform (DCT), embed watermarks in transformed coefficients, thereby improving robustness against common image-processing attacks14.

Deep learning, a branch of machine learning, is a significant area of artificial intelligence research that utilizes neural networks to analyze vast datasets15. It enhances watermarking by extracting visual attributes and adaptively embedding them16. Convolutional Neural Networks (CNN) play a key role in this process, utilizing convolution and pooling layers to capture essential features17.

Zero-watermarking technology enhances copyright protection for digital multimedia, preserving visual quality, particularly for images. Unlike traditional watermarking, zero-watermarking18,19,20,21,22,23 associates the watermark sequence with the image without embedding it, thereby ensuring the integrity of the watermark. Ownership is verified through a zero watermark, transmitted securely over public channels, relying on intrinsic features and a master share.

Zero-watermarking approaches are classified into four categories based on significant image features23,24: CNN-based features25,26, frequency-domain features, spatial-domain features14, and moment-based features27. In CNN-based methods, deep feature maps from the layers of a convolutional neural network (CNN) are combined to form the image features25,26. Frequency-domain methods use transformed features but struggle with rotational and scaling invariance14. Spatial-domain methods derive features directly from pixel values but are highly sensitive to geometric and image-processing attacks14. Moment-based approaches, in comparison, leverage moment invariants for feature extraction27,28.

The zero-watermarking technique aims to produce a watermark without altering the original data29. It extracts robust features from the host image, converts them into numerical values, and combines them to form the zero-watermark, which is then encrypted with copyright data30. Pang et al.31 introduced a blind watermarking method for protecting open-source datasets using a GAN-based deep learning model. This approach applies invisible watermarks to dataset images and utilizes UNet with GAN for watermark embedding. If a model is suspected of improper training, the watermark is extracted for verification. Thanh et al.32 proposed a resilient zero-watermarking technique that employs QR decomposition and a visual map, utilizing permutation attributes to enhance resilience and minimize computational costs. Daoui et al.33 suggested a zero-watermarking strategy integrated with robust image encryption to strengthen security during image sharing over the internet. Ge et al.16 proposed a DNN-based watermarking method for document images, utilizing an encoder-decoder framework for embedding and extraction. A noise layer simulates various attacks, while a text-sensitive loss mechanism limits changes to character embeddings. Despite achieving adequate PSNR and SSIM, the method suffers from poor visual quality due to the visible background in the document image. To address this issue, they introduced an embedding strength adjustment technique that enhances image quality while maintaining extraction accuracy. Shao et al.34 developed a resilient double-zero watermarking technique to simultaneously safeguard the copyrights of two images. Han et al.25 proposed a resilient zero-watermarking method leveraging the VGG19 deep convolutional neural network.

Despite extensive research in zero-watermarking, existing approaches still face several limitations. These issues can be summarized as follows:

(1) Grayscale vs. Color Image Protection: Most traditional zero-watermarking techniques were designed for grayscale images, while color image protection is increasingly relevant in real-world applications.

(2) Weak Resistance to Combined Attacks: Many traditional zero-watermarking methods demonstrate weak resistance to combined signal-processing and geometric attacks, compromising the watermark’s robustness.

(3) Instability in Moment-based Methods: Moment-based zero-watermarking approaches often rely on approximation methods, which can lead to instability, inaccuracy, and inefficiency, ultimately resulting in degraded feature extraction performance.

(4) Weakness in Frequency Domain Feature Extraction: Feature extraction within the frequency domain is often ineffective against geometrical distortions and suffers from high time complexity, making it less practical for real-time applications.

To overcome the challenges outlined above, this paper presents a robust zero-watermarking approach that enhances resilience against various attacks while ensuring security and imperceptibility. Unlike traditional watermarking methods, zero watermarking protects copyright without embedding visible or hidden markers into the content. The proposed method integrates LBP with deep features from the CONV5-4 layer of VGG19, capturing both local texture details and high-level semantic information. This hybrid approach significantly enhances resistance to geometric distortions, signal processing attacks, and their combinations, including noise, scaling, and compression. Security is enhanced through frequency domain transformations and chaotic encryption based on the Lorenz system and the Logistic map. This framework strengthens multimedia security, providing a solid foundation for future research in digital information protection.

The main contributions of this paper are summarized as follows:

1. The proposed method integrates deep features from the CONV5-4 layer of VGG19 with LBP to enhance watermark resilience against various distortions and attacks. LBP extracts essential texture details, while VGG19 features capture high-level semantic representations to increase robustness.

2. The DWT decomposes the image into frequency sub-bands, while the DCT selects key coefficients for embedding, further enhancing robustness against signal processing and geometric attacks.

3. A secure encryption mechanism combines the Lorenz system and 2D Logistic Adjusted Chaotic Map (2D-LACM) to scramble the extracted features and the watermark data. The final zero watermark is generated through an XOR operation, ensuring ownership verification and strong resistance to compression, filtering, and transformation attacks.

4. The proposed approach achieves near-optimal results in terms of BER (0) and NCC (1), while maintaining high PSNR, thereby preserving image quality and watermark robustness.

The novelty of this work lies in the following aspects:

1. The unique fusion of VGG19 deep features and LBP in a zero-watermarking framework, which has not been explored in previous literature, provides complementary strengths for texture and semantic analysis.

2. The application of both DWT and DCT in a unified framework to address vulnerabilities in both spatial and frequency domains.

3. Applying a hybrid chaotic encryption system (Lorenz and 2D-LACM) to the watermark and the feature matrix introduces an extra security layer not typically present in existing watermarking methods.

4. Experimental results demonstrate that the proposed method consistently achieves near-perfect robustness and high imperceptibility under a wide range of attacks, significantly outperforming existing approaches in the literature.

The rest of the paper is structured as follows. “Proposed method” section outlines the fundamentals of the proposed method. “Results and analysis of experiments” section presents the results and analysis of experiments. Finally, “Conclusion” section concludes the paper.

Proposed method

The proposed scheme, Hybrid Deep-Chaotic Zero Watermarking Scheme, is designed to achieve robust and imperceptible zero watermarking by combining deep learning-based feature extraction with chaotic encryption. Figures 1 and 2 illustrate the overall workflow of the proposed method, which consists of two main phases: zero-watermark generation and zero-watermark verification.

The host and watermark images are input into the system in the generation phase. Features are extracted from the host image using a hybrid approach that combines Local Binary Patterns (LBP) and deep features obtained from the VGG19 model. These features are fused and binarized to form a robust binary feature matrix. Meanwhile, the watermark image is preprocessed by inverting its pixel values to improve robustness, then encrypted using a combination of Lorenz and Logistic chaotic maps. The resulting encrypted watermark is embedded into the high-frequency (HH) sub-band of the feature matrix, utilizing a combination of DWT and DCT. After embedding, the inverse transforms (IDCT and IDWT) are applied to reconstruct the modified feature matrix. Finally, a zero-watermark is generated by performing an XOR operation between the reconstructed matrix and the encrypted watermark.

In the verification phase, the attacked image undergoes the same feature extraction process using LBP and VGG19. The resulting binary feature matrix is reordered using the same chaotic permutation key employed during watermark generation. This reordered matrix is XOR-ed with the stored zero-watermark to recover the encrypted watermark. Decryption is then performed using the binary chaotic sequence to retrieve the original watermark. The extracted watermark is compared to the original watermark to assess the robustness and imperceptibility of the scheme under various common image processing attacks, including JPEG compression, rotation, scaling, filtering, cropping, translation, and noise addition. The detailed implementation steps and corresponding mathematical formulations are presented in the following subsections.
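The XOR relationship between the two phases can be illustrated with a small NumPy sketch. The random matrices below are hypothetical stand-ins for the binary feature matrix and the encrypted watermark; feature extraction, chaotic permutation, and encryption are deliberately left out, and the 20 flipped bits only simulate the effect of an attack.

```python
import numpy as np

rng = np.random.default_rng(0)

# Hypothetical stand-ins for the 64x64 binary feature matrix and encrypted watermark.
features = rng.integers(0, 2, size=(64, 64), dtype=np.uint8)
encrypted_wm = rng.integers(0, 2, size=(64, 64), dtype=np.uint8)

# Generation phase: only the zero-watermark is stored; the host image is untouched.
zero_wm = np.bitwise_xor(features, encrypted_wm)

# Verification phase: simulate an attack that flips 20 distinct feature bits.
attacked = features.copy()
flip = rng.choice(features.size, size=20, replace=False)
attacked.flat[flip] ^= 1

# XOR with the stored zero-watermark recovers the encrypted watermark.
recovered = np.bitwise_xor(attacked, zero_wm)
errors = int(np.count_nonzero(recovered != encrypted_wm))
print(errors)  # 20: every flipped feature bit becomes one watermark bit error
```

The sketch shows why stable features matter: the recovered watermark differs from the original in exactly the positions where the attacked feature matrix differs from the original one.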

Reading the host image and the watermark image

The suggested zero-watermarking technique begins by reading a color image (I) with size M × N, where M = N = 512. A binary watermark image (WI) is also used, with a size of m × n, where m = n = 64. These images serve as inputs for feature extraction and watermark embedding.

Feature extraction

Feature extraction is crucial in watermarking, as it ensures resilience against various attacks. The proposed method extracts features from the host image using two complementary techniques: LBP for texture representation and VGG19 deep learning features for high-level semantic information.

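LBP

LBP is a widely used texture descriptor that encodes the local structure of an image by comparing pixel intensity values with those of their neighbors35. The image is divided into regions, and LBP values are extracted and combined into a global representation. For a 3 × 3 neighborhood, the LBP value of a given pixel is computed as follows36:

$$LBP=\sum_{p=0}^{7}s\left(g\left(p\right)-g\left(c\right)\right){2}^{p},\quad s\left(x\right)=\left\{\begin{array}{ll}1,& x\ge 0\\ 0,& x<0\end{array}\right.$$(1)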

Here, g(p) and g(c) denote the pixel intensities of the neighboring and center pixels, respectively. This process enables texture analysis while ensuring robustness against variations in illumination. To illustrate the LBP computation process, a sample 3 × 3 patch is extracted from the “House” image, which is 512 × 512 in size, centered at pixel (50, 50), as shown in Fig. 3. The center pixel has a value of 101. A binary pattern is generated by comparing each neighboring pixel with the center pixel: if the neighbor is greater than or equal to 101, a binary 1 is assigned; otherwise, a binary 0 is assigned.

The resulting binary matrix is then multiplied element-wise by a predefined weight matrix, following the clockwise order of the neighbors starting from the top-left. Finally, the sum of the weighted binary values yields the LBP value, which is 56 in this case.

After computing the LBP value for a single pixel in a 3 × 3 patch, the same operation was systematically applied across the entire “House” image using a sliding 3 × 3 window as shown in Fig. 4. For each non-border pixel, the LBP value is calculated based on its local neighborhood using the same procedure previously illustrated in the 3 × 3 patch example. The process is repeated across the image, producing a matrix of LBP values that encodes local texture and edge information at each position, forming the LBP feature map.

Figure 4 visualizes the full LBP feature map using a color map, where each pixel’s color reflects its computed LBP value. Blue regions correspond to low LBP values, indicating smoother or low-contrast areas with less texture variation. Red and yellow regions indicate high LBP values, typically found in textured regions, object boundaries, and fine details.

This complete LBP map effectively captures the spatial distribution of textural features. It is later fused with high-level semantic features extracted from the VGG19 network to enhance the robustness and discrimination capability of the proposed hybrid zero-watermarking system.
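The LBP computation described above can be sketched in NumPy as follows. This is a minimal illustration, not the authors' implementation: the weight order (powers of two assigned clockwise from the top-left neighbor) is an assumption, and the 3 × 3 patch is a made-up example with center value 101, not the actual pixel values from Fig. 3.

```python
import numpy as np

# Neighbour offsets (row, col), clockwise from the top-left.
# Assumed weights: 2^0 for the first neighbour up to 2^7 for the last.
OFFSETS = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]

def lbp_map(gray):
    """Return the LBP value of every non-border pixel of a 2D grayscale array."""
    gray = np.asarray(gray, dtype=np.int32)
    h, w = gray.shape
    center = gray[1:-1, 1:-1]
    out = np.zeros((h - 2, w - 2), dtype=np.uint8)
    for bit, (dy, dx) in enumerate(OFFSETS):
        # Shifted view holding, for each centre pixel, one particular neighbour.
        neighbour = gray[1 + dy:h - 1 + dy, 1 + dx:w - 1 + dx]
        out += (neighbour >= center).astype(np.uint8) * np.uint8(1 << bit)
    return out

# Hypothetical 3x3 patch with centre value 101.
patch = np.array([[90, 95, 100],
                  [98, 101, 110],
                  [97, 105, 120]])
print(lbp_map(patch)[0, 0])  # 56 = 8 + 16 + 32 (right, bottom-right, bottom)
```

Applied to a full grayscale image, `lbp_map` returns a map two pixels smaller in each dimension, since border pixels lack a complete neighborhood.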

VGG19 Deep Features (Using the CONV5-4 Layer):

In addition to local texture descriptors, the proposed scheme incorporates deep semantic features extracted with the pre-trained VGG19 convolutional neural network.

VGG19, developed by Simonyan and Zisserman37 in 2015, is a deep CNN comprising 16 convolutional layers and 3 fully connected layers, totaling 19 layers. It is widely adopted for hierarchical feature extraction and image classification due to its uniform architecture and strong generalization capabilities38. The features of a convolutional layer are computed as:

$${E}_{i}^{out}=\tau \left({H}_{i}*{E}_{i}^{in}+{d}_{i}\right)$$(2)

Here, \({E}_{i}^{out}\) and \({E}_{i}^{in}\) are the output and input feature maps, respectively, \({H}_{i}\) is the convolutional filter, \({d}_{i}\) is the bias, and \(\tau\) is the ReLU activation function. Pooling layers reduce computational complexity while preserving important details of the features. The final classification is performed using a softmax layer:

$${F}_{j}=\frac{{e}^{{z}_{j}}}{\sum_{k=1}^{C}{e}^{{z}_{k}}}$$(3)

where \({F}_{j}\) represents the probability of class \(j\), \({z}_{j}\) is the network output (logit) for that class, and \(C\) is the number of classes.
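As a toy illustration of the two operations just described, the following NumPy sketch applies a single-channel filter with bias and ReLU, and then a softmax. It is not VGG19: the function names, the single-channel "valid" sliding window (cross-correlation, as is usual in CNN frameworks), and the example values are all assumptions made for illustration.

```python
import numpy as np

def conv_relu(e_in, h, d):
    """Single-channel 'valid' filtering followed by ReLU: tau(H * E_in + d)."""
    e_in = np.asarray(e_in, dtype=float)
    h = np.asarray(h, dtype=float)
    kh, kw = h.shape
    oh, ow = e_in.shape[0] - kh + 1, e_in.shape[1] - kw + 1
    out = np.empty((oh, ow))
    for i in range(oh):
        for j in range(ow):
            out[i, j] = np.sum(e_in[i:i + kh, j:j + kw] * h) + d
    return np.maximum(out, 0.0)  # ReLU

def softmax(z):
    """Numerically stable softmax over a 1D vector of class scores."""
    z = np.asarray(z, dtype=float)
    e = np.exp(z - z.max())
    return e / e.sum()

feature_map = conv_relu(np.ones((3, 3)), np.ones((2, 2)), d=0.0)
probs = softmax([1.0, 2.0, 3.0])
print(feature_map)   # each output equals 4.0 (sum of four ones)
print(probs.sum())   # probabilities sum to 1
```

In the zero-watermarking scheme only the convolutional feature maps are used, so the softmax stage matters for the classification task VGG19 was trained on rather than for feature extraction.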

In our zero-watermarking approach, we utilize the VGG19 network architecture, as illustrated in Figure 5. Feature extraction is performed by passing the input image through the network to the conv5_4 layer, the last convolutional layer in the fifth block. The output of this layer is selected as the feature map because it provides high-level semantic representations that are more robust to common image transformations and distortions.

Figure 5 shows the internal structure of the VGG19 network used in this work. The model comprises five convolutional blocks, each followed by a max-pooling operation. As the image progresses through the network, the depth of the feature maps increases, allowing the network to learn increasingly abstract visual representations. In the proposed scheme, feature maps are extracted from the conv5_4 layer, highlighted in the final block, as it retains rich semantic information needed for robust zero-watermark generation.

Feature Fusion

The extracted LBP and VGG19 features are fused into a single feature vector to construct a robust and discriminative representation. The LBP captures fine-grained local texture information, while VGG19 provides high-level semantic context. Combining these complementary features enables the system to leverage the image’s regional and global characteristics. This hybrid representation significantly enhances the system’s resilience against various image distortions and attacks, including noise, filtering, compression, and geometric transformations.

Watermark embedding

The Lorenz system and the Logistic map generate chaotic sequences that provide strong encryption for the watermark, ensuring both robustness and security. The watermark is incorporated into the high-frequency components of the image using the DCT and the DWT, followed by encryption using the Exclusive-OR (XOR) operation. This ensures that the watermark is safely and invisibly embedded while maintaining the quality of the original image. Each step is explained in detail below.

1. Chaotic Encryption Using Lorenz System and Logistic Map:

To improve security, the watermark undergoes encryption using two chaotic systems:

Lorenz system: a chaotic system used for scrambling the watermark features.

Logistic Map: a chaotic system used to generate binary sequences.

Step 1 Generating a Chaotic Sequence with the Lorenz System

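The Lorenz system, introduced in 1963, is a chaotic system represented by the following differential equations39:

$$\left\{\begin{array}{l}\dot{x}=\sigma \left(y-x\right)\\ \dot{y}=x\left(\rho -z\right)-y\\ \dot{z}=xy-\beta z\end{array}\right.$$(4)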

For parameters \(\sigma\) = 10, ρ = 28, and β = \(\frac{8}{3}\), the system exhibits chaotic behavior, making it ideal for encryption due to its sensitivity to initial conditions and unpredictability. The chaotic properties enable secure scrambling of features and watermark data. The initial conditions are selected as follows:

The values of \(\text{x}\), \(\text{y}\), and \(\text{z}\) are then calculated over time using the previous formulae, resulting in a chaotic sequence that is utilized to scramble the watermark:

The initial values \(x\left(1\right), y\left(1\right),\) and \(z\left(1\right)\) are saved as SK1 (chaotic key).
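A minimal sketch of how such a sequence might be generated and turned into a permutation is given below. The parameter values are those listed in the parameter settings of this paper; the forward-Euler integration, the use of the x component only, and the argsort-based mapping from sequence to permutation are assumptions, since the text does not fix these details.

```python
import numpy as np

def lorenz_sequence(n, x0=0.8633, y0=0.9234, z0=0.1,
                    sigma=10.0, rho=28.0, beta=8.0 / 3.0, dt=0.01):
    """Return n samples of the x component, integrated with forward Euler (assumed)."""
    xs = np.empty(n)
    x, y, z = x0, y0, z0
    for i in range(n):
        xs[i] = x
        dx = sigma * (y - x)
        dy = x * (rho - z) - y
        dz = x * y - beta * z
        x, y, z = x + dt * dx, y + dt * dy, z + dt * dz
    return xs

def permutation_from_sequence(seq):
    """Assumed mapping: sort the chaotic samples and use the sort order as indices."""
    return np.argsort(seq, kind="stable")

seq = lorenz_sequence(64 * 64)        # one sample per watermark bit
L = permutation_from_sequence(seq)    # index vector usable for scrambling
print(L[:5])
```

Because the trajectory depends sensitively on the initial state, a receiver without SK1 cannot regenerate the same index vector.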

Step 2 Generating a Chaotic Sequence with the Logistic Map

The 1D logistic map is another chaotic function that produces pseudo-random sequences40.

This map is used to construct another chaotic sequence, further scrambling the watermark. The logistic map equation is presented below:

$${x}_{n+1}=\mu {x}_{n}\left(1-{x}_{n}\right)$$(7)

Here, \({x}_{n}\) is the current value within the range [0, 1], while \(\mu\) is the control parameter, which ranges from 0 to 4. The logistic map exhibits chaotic behavior when \(\mu\) falls within the range (3.569945972…, 4]. Therefore, it is typically set to 3.999 to ensure a highly chaotic sequence.

The generated sequence is then thresholded, resulting in a binary sequence suitable for encryption.
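The logistic-map step can be sketched as follows. The control parameter 3.999 comes from the text; the initial value 0.3 and the threshold 0.5 are hypothetical choices, because neither is stated here.

```python
import numpy as np

def logistic_bits(n, x0=0.3, mu=3.999, threshold=0.5):
    """Iterate x <- mu * x * (1 - x) and threshold each value to one bit."""
    bits = np.empty(n, dtype=np.uint8)
    x = x0
    for i in range(n):
        x = mu * x * (1.0 - x)
        bits[i] = 1 if x >= threshold else 0
    return bits

S2 = logistic_bits(64 * 64)  # one key bit per watermark bit
print(S2[:16])
```

The resulting vector plays the role of the binary sequence that is XOR-ed with the scrambled watermark.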

Watermark embedding using DWT and DCT

Step 1 Decomposition using DWT

The input image is decomposed using DWT into four frequency sub-bands: \(LL\), \(LH\), \(HL\), and \(HH\). The \(HH\) sub-band, which contains the high-frequency components, is selected for embedding the watermark. This band strikes a balance between imperceptibility and sensitivity to specific types of attacks.

Step 2 Applying DCT

DCT is then applied to the HH sub-band. This transformation concentrates the image’s energy into a small number of significant coefficients, allowing the watermark to be embedded in a compact yet robust manner. By embedding the watermark in the frequency domain rather than directly in the spatial domain, it becomes more resistant to compression and filtering attacks. This combination of DWT and DCT ensures imperceptibility and robustness, making the embedding process suitable for zero watermarking applications.

Step 3 Chaotic Encryption of the Watermark.

The watermark undergoes additional security measures before embedding:

1. Inversion and Flattening: The watermark is inverted to increase security. The inversion is mathematically represented as:

$${W}_{b}={\text{reshape}}\left(\sim WI,[1,m\cdot n]\right)$$(8)

where \(\sim WI\) represents the bitwise inversion of the watermark.

2. Chaotic Reordering: The binary watermark is reordered utilizing the Lorenz chaotic sequence \(L\):

$${W}_{c}={W}_{b}\left(L\right)$$(9)

3. XOR-based Encryption: The reordered watermark is encrypted using XOR with the Logistic chaotic sequence \(S2\):

$${W}_{\text{en}}={\text{xor}}\left({W}_{c},S2\right)$$(10)

The encrypted watermark is reshaped back to a 2D matrix:

$${W}_{e}={\text{reshape}}\left({W}_{\text{en}},[m,n]\right)$$(11)
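The inversion, reordering, XOR, and reshape steps can be sketched in NumPy as below. The permutation and key bits are drawn from a seeded random generator purely as hypothetical stand-ins for the Lorenz-derived index vector and the Logistic-derived binary sequence; the function name is also an illustration, not taken from the paper.

```python
import numpy as np

def encrypt_watermark(wi, perm, s2):
    """Invert, flatten, permute, XOR, and reshape a binary watermark."""
    m, n = wi.shape
    w_b = (1 - wi).reshape(m * n)      # Eq. (8): bitwise inversion and flattening
    w_c = w_b[perm]                    # Eq. (9): chaotic reordering
    w_en = np.bitwise_xor(w_c, s2)     # Eq. (10): XOR with the binary key sequence
    return w_en.reshape(m, n)          # reshape back to a 2D matrix

rng = np.random.default_rng(1)
wi = rng.integers(0, 2, size=(64, 64), dtype=np.uint8)    # hypothetical watermark
perm = rng.permutation(64 * 64)                            # stand-in for L
s2 = rng.integers(0, 2, size=64 * 64, dtype=np.uint8)      # stand-in for S2

w_e = encrypt_watermark(wi, perm, s2)
print(w_e.shape)  # (64, 64)
```

Both the permutation and the key bits are needed to undo the encryption, which is why they act as two separate secret keys.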

Step 4 Embedding the Encrypted Watermark.

The encrypted watermark is incorporated within the DCT coefficients of the HH sub-band. This process modifies the DCT coefficients to embed the watermark securely:

Here, \(\alpha\) is the embedding strength factor.

Step 5 Inverse Transformations (IDCT and IDWT)

After embedding the encrypted watermark into the DCT coefficients of the HH subband, the Inverse Discrete Cosine Transform (IDCT) is applied to transform the frequency-domain data back to the spatial domain. Subsequently, the Inverse Discrete Wavelet Transform (IDWT) is used to reconstruct the full watermarked image by combining the modified HH subband with the original LL, LH, and HL subbands. These inverse transformations ensure that the watermarked image retains high visual quality while preserving the embedded watermark.
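The forward and inverse transforms in Steps 1, 2, and 5 can be sketched with plain NumPy. The Haar wavelet and the orthonormal DCT-II are assumptions (the text does not name the wavelet or the DCT normalization), sub-band naming conventions vary between libraries, and the embedding rule of Step 4 is intentionally omitted. The input must have even dimensions.

```python
import numpy as np

def haar_dwt2(a):
    """One-level 2D Haar DWT; returns (LL, LH, HL, HH) for an even-sized array."""
    a = np.asarray(a, dtype=float)
    r = np.sqrt(2.0)
    lo = (a[:, 0::2] + a[:, 1::2]) / r      # low-pass along rows
    hi = (a[:, 0::2] - a[:, 1::2]) / r      # high-pass along rows
    ll = (lo[0::2] + lo[1::2]) / r
    lh = (lo[0::2] - lo[1::2]) / r
    hl = (hi[0::2] + hi[1::2]) / r
    hh = (hi[0::2] - hi[1::2]) / r
    return ll, lh, hl, hh

def haar_idwt2(ll, lh, hl, hh):
    """Inverse of haar_dwt2."""
    r = np.sqrt(2.0)
    h, w = ll.shape
    lo = np.empty((2 * h, w))
    hi = np.empty((2 * h, w))
    lo[0::2], lo[1::2] = (ll + lh) / r, (ll - lh) / r
    hi[0::2], hi[1::2] = (hl + hh) / r, (hl - hh) / r
    a = np.empty((2 * h, 2 * w))
    a[:, 0::2], a[:, 1::2] = (lo + hi) / r, (lo - hi) / r
    return a

def dct_matrix(n):
    """Orthonormal DCT-II matrix C, so that C @ C.T is the identity."""
    k = np.arange(n)[:, None]
    i = np.arange(n)[None, :]
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)
    return c

def dct2(b):
    return dct_matrix(b.shape[0]) @ b @ dct_matrix(b.shape[1]).T

def idct2(c):
    return dct_matrix(c.shape[0]).T @ c @ dct_matrix(c.shape[1])

rng = np.random.default_rng(2)
block = rng.random((64, 64))                 # hypothetical input matrix
ll, lh, hl, hh = haar_dwt2(block)
coeffs = dct2(hh)                            # DCT of the HH sub-band
restored = haar_idwt2(ll, lh, hl, idct2(coeffs))
print(np.allclose(restored, block))          # True: the transforms invert exactly
```

With unmodified coefficients the round trip reproduces the input up to floating-point error, so any difference after embedding comes only from the changes made to the DCT coefficients.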

Final Watermark Embedding Using XOR:

To further secure the watermark, an XOR operation is applied between the watermarked image and the encrypted watermark:

This ensures that the watermark remains imperceptible while resisting various attacks.

Watermark extraction

The watermark extraction process aims to accurately recover the embedded watermark from an attacked image while preserving its integrity and authenticity. This is achieved through XOR-based decryption and inverse chaotic scrambling using the Lorenz system sequence.

The extraction process follows these steps:

(1) XOR Decryption: The encoded watermark is decrypted by executing an XOR operation with the binary chaotic sequence \(S2\):

$$WI\left(x,y\right)={W}_{e}\left(x,y\right)\oplus S2\left(x,y\right)$$(14)

where \(WI(x,y)\) is the extracted watermark, \({W}_{e}(x,y)\) is the encrypted watermark from the attacked image, and \(S2(x,y)\) is the chaotic sequence.

(2) Restoring the Original Order: To reconstruct the original watermark, inverse chaotic scrambling is applied using the Lorenz system sequence \(\text{L}\). This reverses the initial scrambling performed during the embedding phase.

(3) Reshaping the Watermark: The binary watermark is reshaped into a matrix of size \(m\times n\) to match the original watermark dimensions.

This ensures accurate recovery of the watermark while preserving its integrity against various attacks.
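The extraction steps can be sketched as the inverse of the encryption. The permutation and key bits below are hypothetical stand-ins for the Lorenz and Logistic sequences, and the final bit inversion is an assumption: it is not listed among the steps above, but it is needed to undo the inversion applied before encryption so that the round trip returns the original watermark.

```python
import numpy as np

def decrypt_watermark(w_e, perm, s2):
    """XOR-decrypt, undo the permutation, undo the inversion, and reshape."""
    m, n = w_e.shape
    w_c = np.bitwise_xor(w_e.reshape(m * n), s2)  # step (1): XOR decryption
    w_b = np.empty_like(w_c)
    w_b[perm] = w_c                               # step (2): inverse of w_c = w_b[perm]
    return (1 - w_b).reshape(m, n)                # assumed: undo inversion; step (3): reshape

rng = np.random.default_rng(3)
wi = rng.integers(0, 2, size=(64, 64), dtype=np.uint8)   # hypothetical watermark
perm = rng.permutation(64 * 64)                           # stand-in for L
s2 = rng.integers(0, 2, size=64 * 64, dtype=np.uint8)     # stand-in for S2

# Encrypt inline (invert, flatten, permute, XOR, reshape) to obtain a test input.
w_e = np.bitwise_xor((1 - wi).reshape(-1)[perm], s2).reshape(64, 64)

recovered = decrypt_watermark(w_e, perm, s2)
print(np.array_equal(recovered, wi))  # True
```

Assigning through `w_b[perm] = w_c` inverts the indexing used during scrambling without explicitly computing the inverse permutation.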

The steps of the proposed method are summarized as follows:

This methodology ensures robust, imperceptible, and secure zero-watermarking while achieving high resilience against attacks.

Results and analysis of experiments

Generally, effective zero-watermarking necessitates both durability and invisibility. Zero-watermarking is exceptionally imperceptible by design, and durability is a critical requirement. In this section, we will conduct a series of tests to evaluate the efficiency of the zero-watermarking method proposed in this paper.

Experimental dataset: We selected eight 512 × 512-pixel color images from the USC-SIPI41 and the Computer Vision Group (CVG)42 datasets as the host images, as shown in Fig. 6a–h, and four 64 × 64-pixel binary images as watermarks, as shown in Fig. 6i–l.

Parameter setting: The parameters of the proposed zero-watermarking approach are as follows. The host image size is M = N = 512, and the watermark image size is m = n = 64. The Lorenz system’s initial state values and parameters are defined as \({x}_{0}=0.8633\), \({y}_{0}=0.9234\), \({z}_{0}=0.1\), \(\sigma =10\), \(\rho =28\), \(\beta =\frac{8}{3}\), and the integration step is \(\Delta t=0.01\). The Logistic map control parameter is set as \(\mu =3.999\). The watermark embedding strength factor is \(\alpha =0.05\).

Evaluation metrics: The proposed zero-watermarking approach utilizes the PSNR to assess the visual quality of the distorted color images compared to the originals. The PSNR is computed as follows:

where \({I}_{h}\left(x,y\right)\) and \({II}_{h}\left(x,y\right)\), represent the original and distorted images of size \(M \times N\), respectively, and \(h\in \left\{R,G,B\right\},\) refers to the color channels.

The watermarking system’s resilience is evaluated using the BER and NCC. These measures calculate the similarity and error between the original and retrieved watermarks after attacks. The definitions are as follows:

where \(WI\left(i,j\right)\) and \(WI^{\prime } \left( {{\text{i}},{\text{j}}} \right)\) represent the original and extracted watermark images of size \(m\times n\), respectively, and \(\oplus\) denotes the XOR operation. A lower BER value indicates greater robustness. Conversely, a higher NCC value indicates greater similarity between the two watermarks and therefore improved robustness.
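The three metrics can be sketched in NumPy as follows. The exact forms are assumptions based on common definitions: PSNR from the mean squared error over all pixels and the three color channels with a peak value of 255, BER as the fraction of differing bits, and NCC as the normalized inner product of the two binary watermarks. Other variants exist, so the values may differ slightly from those computed with the paper's own formulas.

```python
import numpy as np

def psnr_color(original, distorted, peak=255.0):
    """PSNR in dB, with the MSE averaged over all pixels and color channels."""
    original = np.asarray(original, dtype=float)
    distorted = np.asarray(distorted, dtype=float)
    mse = np.mean((original - distorted) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(peak ** 2 / mse))

def ber(w, w_ext):
    """Fraction of bits that differ between the two binary watermarks."""
    w = np.asarray(w, dtype=np.uint8)
    w_ext = np.asarray(w_ext, dtype=np.uint8)
    return np.count_nonzero(np.bitwise_xor(w, w_ext)) / w.size

def ncc(w, w_ext):
    """Normalized cross-correlation (assumed form: normalized inner product)."""
    w = np.asarray(w, dtype=float)
    w_ext = np.asarray(w_ext, dtype=float)
    return float(np.sum(w * w_ext) /
                 (np.sqrt(np.sum(w ** 2)) * np.sqrt(np.sum(w_ext ** 2))))

rng = np.random.default_rng(4)
wm = rng.integers(0, 2, size=(64, 64), dtype=np.uint8)  # hypothetical watermark
wm_ext = wm.copy()
wm_ext[0, 0] ^= 1                                        # one bit error

print(ber(wm, wm_ext))   # 1/4096, about 0.000244
print(ncc(wm, wm))       # 1.0 for identical watermarks
```

A perfectly recovered watermark gives BER = 0 and NCC = 1, which are the ideal values referred to in the result tables.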

Anti‑attack performance analysis

The suggested zero-watermark approach is tested against conventional attacks to ensure its robustness. Color images in this subsection are subjected to various attacks, including rotation, scaling, brightness adjustments, filtering process, noise, and JPEG compression. The extracted and original watermarks are compared to calculate the BER and NCC values. Table 1 provides thorough explanations of traditional signal-processing and geometrical attacks.

This section evaluates the technique’s robustness against popular image processing and geometrical attacks. The tests performed are split into two primary categories.

- We began with a 512 × 512 color image called “House,” as shown in Fig. 6. Tables 2, 3, 4, 5, 6, 7, 8, and 9 show the PSNR, BER, and NCC values for each suggested attack and the recovered watermark images. The watermark was created using a binary image named ‘Flower.’ These tables demonstrate that the watermarks recovered by the proposed technique closely match the original. The obtained BER and NCC values are near optimal.

- Experiments were conducted on seven standard color images, as shown in Fig. 6. Tables 10, 11, 12, 13, 14, and 15 present the values of PSNR and NCC for the proposed method in response to each attack.

Robustness to geometrical attacks

Geometrical attacks often cause watermark detection to desynchronize. The two most common geometrical attacks are rotation and scaling. This experiment performs rotation-based attacks using nearest, bilinear, and bicubic interpolations. The test image undergoes rotation at 1°, 3°, 5°, 10°, and 50°, with the results shown in Table 3. For most angles, the NCC values become 1.0, and the BER values become 0, demonstrating that this approach is robust against rotation-based attacks. After that, the test image undergoes resizing using several methods, including scaling with factors of 0.25, 0.5, 2.0, and 4.0. Scaling attacks employ three interpolations: ‘Nearest,’ ‘Bilinear,’ and ‘Bicubic,’ with the results shown in Table 4. Most factors have ideal NCC values of 1.0 and ideal BER values of 0, suggesting complete robustness to scaling attacks.

Robustness to image processing attacks

The resistance of the suggested algorithm is assessed using standard image processing attacks, including filtering, noise addition, JPEG compression, unsharp masking (sharpening), and histogram equalization, on the test color image “House.” Filtering attacks were first conducted, as shown in Table 2, which presents the filtered images, PSNR values, and BER and NCC values for the retrieved watermark images. Most values of BER and NCC achieve the ideal values of 0 and 1.0, respectively, indicating a high resemblance between the original and extracted watermarks. These results confirm the suggested approach’s strong resilience against image-filtering attacks. The test color image was subjected to noise attacks utilizing salt-and-pepper and Gaussian noises. Table 5 presents the images after noise addition, their PSNR values, the extracted watermark images, and their accompanying BER and NCC values.

For Gaussian noise, all values of BER and NCC achieved the optimal values of 0 and 1.0, respectively. In the case of Salt-and-Pepper noise, three values reached the ideal BER and NCC values of 0 and 1.0. These results demonstrate that the suggested method exhibits strong resilience to noise attacks, ensuring the retrieved watermarks remain highly similar to the original ones. Subsequently, the test color image underwent JPEG compression attacks with quality factors of 5%, 10%, 50%, and 90%. Table 6 presents the images after compression, their PSNR values, and the corresponding BER and NCC values for the retrieved watermarks. Across all quality factors, the BER and NCC consistently achieved their ideal values of 0 and 1.0, respectively, with the retrieved watermarks being identical to the originals.

These results demonstrate the proposed method’s complete resistance to JPEG compression attacks. Lastly, the effect of sharpening and histogram equalization attacks was evaluated, and the results, including PSNR values, attacked images, and retrieved watermark metrics (BER and NCC), are presented in Table 6. Translation attack outcomes are shown in Table 7, while cropping attack results are detailed in Table 9. Additionally, the effects of conventional combined attacks are summarized in Table 8. Across these scenarios, the BER and NCC values predominantly match the optimal values of 0 and 1.0, respectively. Moreover, the extracted watermarks are either very close to or identical to the originals, underscoring the robustness of the proposed approach against these attacks.
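The two watermark metrics reported throughout these tables can be sketched as follows. The code assumes binary watermarks stored as 2-D lists and uses one common form of the normalized cross-correlation (inner product divided by the product of the Euclidean norms); the exact NCC definition used in the experiments may differ, and the function names are illustrative.

```python
import math


def _flatten(watermark):
    """Flatten a 2-D list of bits into a 1-D list."""
    return [bit for row in watermark for bit in row]


def ber(original, extracted):
    """Bit error rate: fraction of differing bits (0 is ideal)."""
    o, e = _flatten(original), _flatten(extracted)
    if len(o) != len(e):
        raise ValueError("watermarks must have the same number of bits")
    return sum(a != b for a, b in zip(o, e)) / len(o)


def ncc(original, extracted):
    """Normalized cross-correlation (1.0 is ideal), assumed cosine-style definition."""
    o, e = _flatten(original), _flatten(extracted)
    if len(o) != len(e):
        raise ValueError("watermarks must have the same number of bits")
    numerator = sum(a * b for a, b in zip(o, e))
    denominator = math.sqrt(sum(a * a for a in o)) * math.sqrt(sum(b * b for b in e))
    return numerator / denominator if denominator else 0.0
```

With identical watermarks, `ber` returns 0 and `ncc` returns 1.0, which are the ideal values referred to above.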

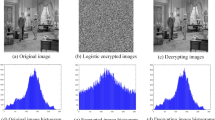

Security analysis

The security of the proposed watermarking scheme is significantly enhanced through a dual-chaotic encryption mechanism and zero-watermarking strategy. Initially, the watermark undergoes permutation based on chaotic sequences generated by the Lorenz system, which is highly sensitive to its initial conditions (x₀, y₀, z₀) and control parameters (σ, ρ, β, ∆t). These parameters act as the first secret key (SK1), introducing high sensitivity and unpredictability to the watermark position. In parallel, a second layer of encryption is applied using a Logistic Map, which produces a binary sequence based on the control parameter μ = 3.999. The watermark is then XORed with this sequence to form the final encrypted watermark. The combination of permutation and XOR-based masking ensures that even if part of the watermark is intercepted, it remains unrecoverable without knowledge of both chaotic keys.

To evaluate the resistance of the proposed scheme against brute-force attacks, the key space of the chaotic encryption mechanism is analyzed. The first key (SK1) is derived from six floating-point parameters in the Lorenz system. Assuming a computational precision of \(10^{15}\), the number of possible combinations for SK1 is approximately:

\[ K_{1} \approx \left(10^{15}\right)^{6} = 10^{90} \]

The Logistic Map encryption introduces two additional parameters (initial value and control parameter), each with similar precision:

\[ K_{2} \approx \left(10^{15}\right)^{2} = 10^{30} \]

Hence, the total key space of the system becomes:

\[ K = K_{1} \times K_{2} \approx 10^{120} \approx 2^{398} \]

This vast key space far exceeds the \(2^{128}\) benchmark commonly required for modern cryptographic security, making brute-force attacks computationally infeasible.
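The comparison with the 128-bit benchmark can be checked numerically. The short sketch below uses only the assumptions stated in the text (six Lorenz parameters, two Logistic-map parameters, and a precision of \(10^{15}\) per parameter); the variable names are illustrative.

```python
import math

PRECISION = 10 ** 15      # distinguishable values per floating-point parameter
LORENZ_PARAMS = 6         # parameters forming the first key (SK1)
LOGISTIC_PARAMS = 2       # initial value and control parameter of the Logistic map

key_space_lorenz = PRECISION ** LORENZ_PARAMS
key_space_logistic = PRECISION ** LOGISTIC_PARAMS
key_space_total = key_space_lorenz * key_space_logistic

# Equivalent key length in bits, for comparison with the 128-bit benchmark.
key_bits = math.log2(key_space_total)
exceeds_benchmark = key_space_total > 2 ** 128
```

Under these assumptions the total key space corresponds to a key length of roughly 398 bits.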

Additionally, the scheme follows a zero-watermarking model, where the host image is not modified directly. Instead, the watermark is logically bound to features extracted from the image (via VGG19 and LBP), further increasing the difficulty of tampering or forgery. Even if the image is stolen or altered, verification cannot be performed without access to the correct feature and encryption information. This layered approach, which combines spatial feature binding, dual chaotic encryption, and logical embedding, provides strong resistance against unauthorized extraction, reverse engineering, and key guessing, thereby fulfilling the requirements for robustness and security.

Computational complexity analysis

The computational complexity of the proposed zero-watermarking scheme is analyzed step-by-step as follows:

1. LBP feature extraction

For an input image of size \(M \times N\), the LBP value is computed for each non-border pixel using a 3 × 3 sliding window.

- Time Complexity: \(O(M \times N)\)

2. VGG19 feature extraction

The image is passed through the VGG19 network up to the conv5_4 layer to extract deep semantic features. This step involves several convolution and pooling operations across multiple layers, and its cost is abstracted as T, representing the internal complexity of VGG19 up to the conv5_4 layer.

- Time Complexity: \(O(T)\)

3. Feature fusion

The extracted LBP and VGG19 features are flattened and concatenated into a single feature vector.

- Time Complexity: \(O(M \times N + P)\), where P is the size of the conv5_4 feature map.

4. Binarization

The combined feature vector is binarized and reshaped into an \(m \times n\) matrix.

- Time Complexity: \(O(m \times n)\)

5. Chaotic encryption using the Lorenz system and the Logistic map

A chaotic permutation using the Lorenz system is applied (requires sorting), followed by XOR operations for watermark encryption.

- Time Complexity: \(O(m \times n \log(m \times n) + 3 \times m \times n)\)

6. Attack simulation (e.g., filtering, noise, compression)

Image attacks are applied over the host image, generally affecting the full \(M \times N\) space.

- Time Complexity: \(O(M \times N)\)

7. Watermark extraction

The same LBP and VGG19 extraction are repeated on the attacked image, followed by binarization and inverse permutation.

- Time Complexity: \(O(M \times N + T + m \times n)\)

8. Quality metric computation (PSNR, BER, NCC)

Metrics are computed over the \(m \times n\) extracted and original watermarks.

- Time Complexity: \(O(3 \times m \times n)\)

Hence, summing the complexities of all steps, the total computational complexity of the proposed scheme is: \(O(4MN + 2T + P + mn \log(mn) + 8mn)\)
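To make the \(O(M \times N)\) cost of the LBP step concrete, the following minimal sketch computes the basic 3 × 3 LBP code for every non-border pixel of a grayscale image stored as a 2-D list. The clockwise neighbor order, the bit weights, and the "greater than or equal" comparison are common conventions assumed here; they are not specified in the analysis above.

```python
def lbp_3x3(gray):
    """Return the (M-2) x (N-2) map of basic 3x3 LBP codes for a 2-D list of intensities."""
    rows, cols = len(gray), len(gray[0])
    # Neighbor offsets in clockwise order, starting at the top-left pixel (assumed order).
    offsets = [(-1, -1), (-1, 0), (-1, 1), (0, 1), (1, 1), (1, 0), (1, -1), (0, -1)]
    codes = [[0] * (cols - 2) for _ in range(rows - 2)]
    for r in range(1, rows - 1):
        for c in range(1, cols - 1):
            center = gray[r][c]
            code = 0
            # Eight comparisons per pixel: constant work, so the total is O(M x N).
            for bit, (dr, dc) in enumerate(offsets):
                if gray[r + dr][c + dc] >= center:
                    code |= 1 << bit
            codes[r - 1][c - 1] = code
    return codes
```

Each interior pixel is visited once and compared with a fixed number of neighbors, which is why the step scales linearly with the number of pixels.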

To further support the analysis presented above, Table 16 presents a comparison between the proposed method and four recent state-of-the-art methods [5, 8, 10, 43], highlighting the overall computational cost in terms of Big-O notation. The results confirm that the proposed method requires less computational effort, making it more suitable for real-time processing and large-scale applications.

Wilcoxon signed-rank test comparison

Table 17 presents a statistical comparison between the proposed watermarking method and four existing techniques [13, 44, 45, 46] under different types of attacks: average filter, median filter, Gaussian noise, JPEG compression, rotation, and scaling. Each row corresponds to a specific kind of attack, while each column represents the outcome of the Wilcoxon signed-rank test comparing the proposed method with one of the compared schemes.

The Wilcoxon signed-rank test was applied independently for each attack type at a significance level of α = 0.05, corresponding to a 95% confidence level. The test is non-parametric and is commonly used to assess whether there is a statistically significant difference between two related samples. For each comparison, the null hypothesis (\({H}_{0}\)) states that there is no statistically significant difference in performance between the proposed method and the compared method. Rejecting \({H}_{0}\) indicates that the proposed method achieves significantly different results, whereas failing to reject \({H}_{0}\) suggests that no meaningful statistical difference could be identified under the given test conditions. For the statistically significant cases, the test also compares the sum of negative ranks (w′) with the corresponding critical value (\({w}_{{\alpha }}^{*}\)).

If w′ ≤ \({w}_{{\alpha }}^{*}\), this provides further evidence that the proposed method outperforms the compared one under the given attack. As observed in the table, the proposed method achieves statistically significant improvements over the compared methods in most attacks, particularly under median filter, Gaussian noise, JPEG compression, and rotation attacks. However, under the scaling and average filter attacks, the difference is not statistically significant against the methods in [13] and [45], which suggests performance parity in those specific scenarios. Overall, this statistical validation supports the robustness of the proposed scheme under a wide range of attack conditions.
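The rank sums used in this test can be sketched as follows. The code pairs the scores of the proposed method with those of a compared method, discards zero differences, ranks the absolute differences (averaging tied ranks), and sums the ranks of the positive and negative differences. The function names and the convention "difference = proposed − compared, with higher scores being better" are assumptions; the critical value must be taken from a standard table for the given sample size and α, and is passed in by the caller.

```python
def signed_rank_sums(proposed, compared):
    """Return (sum of positive ranks, sum of negative ranks, number of non-zero pairs)."""
    diffs = [p - q for p, q in zip(proposed, compared) if p != q]
    order = sorted(range(len(diffs)), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * len(diffs)
    i = 0
    while i < len(order):
        # Extend j over the run of tied absolute differences starting at position i.
        j = i
        while j + 1 < len(order) and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_pos = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_neg = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_pos, w_neg, len(diffs)


def supports_improvement(w_neg, critical_value):
    """Decision rule from the text: negative-rank sum at or below the critical value."""
    return w_neg <= critical_value
```

A small negative-rank sum means that the cases in which the proposed method scored lower are few and small in magnitude.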

Comparing robustness to previous works

To comprehensively assess the efficiency of the proposed method, we performed two comparisons. In the first comparison, various attacks were applied to seven standard color images with the parameters detailed in Table 1. The average PSNR values were then calculated, and the lowest NCC values were chosen, as presented in Tables 10, 11, 12, 13, 14, and 15. The summarized results are provided in Table 18 for clarity and are further illustrated in Fig. 7. Additionally, the results are compared with those of the zero-watermarking methods in [13, 44, 46]. Table 18 and Fig. 7a show that the proposed algorithm’s PSNR values are higher, indicating superior image quality compared to the three methods. Similarly, Fig. 7b and Table 18 show that the NCC values of the proposed method under various attacks are closer to the ideal value of 1 and outperform those of the methods in [13, 44, 46]. These findings highlight the resilience of the proposed approach to different image attacks, surpassing the performance of the zero-watermarking methods in [13, 44, 46].

For the second comparison, the resistance of the proposed algorithm was evaluated against several zero-watermarking techniques reported in previous studies [13, 43, 44, 45, 46, 47, 48]. This evaluation encompassed a diverse range of attacks to ensure comprehensive testing of the algorithm’s capabilities. The tested attacks included JPEG compression with quality factors of 10%, 30%, 50%, 70%, and 90%; rotation at angles of 3° and 5°; and scaling with a factor of 0.5. Additionally, noise attacks were conducted, including salt-and-pepper noise with parameters of 0.01 and 0.03 and Gaussian noise with 0.1, 0.3, 0.5, and 0.01. Filtering attacks were also applied, involving average filtering and median filtering with window sizes of 3 × 3, 5 × 5, and 7 × 7, as well as the sharpening attack. As presented in Table 19, the experimental results demonstrate the superior performance of the proposed algorithm, which consistently achieves bit error rate (BER) values close to the optimal value of 0. This outcome reflects the algorithm’s enhanced resilience to various attacks, surpassing the robustness of existing zero-watermarking methods.

Conclusion

This paper proposes a reliable zero-watermarking technique for color images, which combines Local Binary Patterns (LBP) with deep features from the CONV5-4 layer of VGG19 to enhance resilience and security. Frequency-domain transformations using the DWT and DCT isolate critical features, while chaotic encryption based on the Lorenz system and the Logistic map secures the scheme by scrambling the feature matrix and the watermark image. The zero watermark is generated through an XOR operation without modifying the host image, which ensures imperceptibility. The algorithm is resilient to attacks such as scaling, compression, noise addition, filtering, and rotation, while maintaining excellent image quality and robust ownership verification. Experimental results demonstrate that the proposed approach surpasses conventional zero-watermarking algorithms in terms of resilience, security, and image preservation, effectively protecting the copyright of color images. In the future, the proposed technique will be extended to video watermarking to meet the demands of dynamic multimedia content. Furthermore, its use in e-healthcare, telemedicine, stereoscopic imaging, and images acquired in real time will be investigated.

Data availability

The data supporting this study’s findings are available from the corresponding author upon request.

References

Kadian, P., Arora, S. M. & Arora, N. Robust digital watermarking techniques for copyright protection of digital data: A survey. Wirel. Pers. Commun. 118, 3225–3249 (2021).

Soualmi, A., Laouamer, L. & Alti, A. A novel intelligent approach for color image privacy preservation. Multimed. Tools Appl. 83(33), 79481–79502 (2024).

Hosny, K. M., Darwish, M. M., Li, K. & Salah, A. Parallel multi-core CPU and GPU for fast and robust medical image watermarking. IEEE Access 6, 77212–77225 (2018).

Soualmi, A., Alti, A. & Laouamer, L. An imperceptible watermarking scheme for medical image tamper detection. Int. J. Inf. Secur. Priv. (IJISP) 16(1), 1–18 (2022).

Soualmi, A. & Laouamer, L. A blind watermarking approach based on hybrid imperialistic competitive algorithm and SURF points for color Images’ authentication. Biomed. Signal Process. Control 84, 105007 (2023).

Evsutin, O. O., Melman, A. S. & Meshcheryakov, R. V. Digital steganography and watermarking for digital images: A review of current research directions. IEEE Access 8, 166589–166611. https://doi.org/10.1109/ACCESS.2020.3022779 (2020).

Iwendi, C. et al. KeySplitWatermark: Zero watermarking algorithm for software protection against cyber-attacks. IEEE Access 8, 72650–72660. https://doi.org/10.1109/ACCESS.2020.2988160 (2020).

Singh, R., Pal, R. & Joshi, D. Optimal frame selection-based watermarking using a meta-heuristic algorithm for securing video content. Comput. Electr. Eng. 121, 109857 (2025).

Zeng, C. et al. Multi-watermarking algorithm for medical image based on KAZE-DCT. J. Ambient Intell. Humaniz. Comput. 15, 1–9. https://doi.org/10.1007/s12652-021-03539-5 (2022).

Singh, R., Pal, R., Mittal, H. & Joshi, D. Multi-objective optimization-based medical image watermarking scheme for securing patient records. Comput. Electr. Eng. 118, 109303 (2024).

Singh, R., Mittal, H. & Pal, R. Optimal keyframe selection-based lossless video-watermarking technique using IGSA in LWT domain for copyright protection. Complex Intell. Syst. 8(2), 1047–1070 (2022).

Magdy, M., Ghali, N. I., Ghoniemy, S. & Hosny, K. M. Multiple zero-watermarking of medical images for internet of medical things. IEEE Access 10, 38821–38831 (2022).

Darwish, M. M., Farhat, A. A. & El-Gindy, T. M. Convolutional neural network and 2D logistic-adjusted-Chebyshev-based zero-watermarking of color images. Multimed. Tools Appl. 83(10), 29969–29995 (2024).

Meselhy Eltoukhy, M., Khedr, A. E., Abdel-Aziz, M. M. & Hosny, K. M. Robust watermarking method for securing color medical images using Slant-SVD-QFT transforms and OTP encryption. Alex. Eng. J. 78, 517–529 (2023).

Hosny, K. M., Magdi, A., ElKomy, O. & Hamza, H. M. Digital image watermarking using deep learning: A survey. Comput. Sci. Rev. 53, 100662 (2024).

Ge, S. et al. A robust document image watermarking scheme using deep neural network. Multimed. Tools Appl. 82(25), 38589–38612 (2023).

Kaczynski, M., Piotrowski, Z. & Pietrow, D. High-quality video watermarking based on deep neural networks for video with HEVC compression. Sensors 22(19), 7552 (2022).

Gao, J., Li, Z. & Fan, B. An efficient robust zero watermarking scheme for diffusion tensor-Magnetic resonance imaging high-dimensional data. J. Inf. Secur. Appl. 65, 103106 (2022).

Liu, W., Li, J., Shao, C., Ma, J., Huang, M. & Bhatti, U. A. Robust zero watermarking algorithm for medical images using local binary pattern and discrete cosine transform. In International Conference on Artificial Intelligence and Security 350–362 (Springer, 2022).

Xiyao, L. et al. DIBR Zero-watermarking based on invariant feature and geometric rectification. IEEE Multimed. https://doi.org/10.1109/MMUL.2022.3148301 (2022).

Xiao, X., Li, J., Yi, D., Fang, Y., Cui, W., Bhatti, U. A. & Han, B. Robust zero watermarking algorithm for encrypted medical images based on DWT-Gabor. In Innovation in Medicine and Healthcare: Proceedings of 9th KES-In Med 2021 75–86 (Springer, 2021).

Yi, D., Li, J., Fang, Y., Cui, W., Xiao, X., Bhatti, U. A. & Han, B. A robust zero-watermarking algorithm based on PHTs-DCT for medical images in the encrypted domain. In Innovation in Medicine and Healthcare: Proceedings of 9th KES-In Med 2021. 101–113 (Springer, 2021).

Hosny, K. M. & Darwish, M. M. New geometrically invariant multiple zero-watermarking algorithm for color medical images. Biomed. Signal Process. Control 70, 103007 (2021).

Hosny, K. M., Darwish, M. M. & Fouda, M. M. New color image zero-watermarking using orthogonal multi-channel fractional-order Legendre-Fourier moments. IEEE Access 9, 91209–91219. https://doi.org/10.1109/ACCESS.2021.3091614 (2021).

Han, B., Du, J., Jia, Y. & Zhu, H. Zero-watermarking algorithm for medical image based on VGG19 deep convolution neural network. J. Healthc. Eng. https://doi.org/10.1155/2021/5551520 (2021).

Fierro-Radilla, A., Nakano-Miyatake, M., Cedillo-Hernandez, M., Cleofas-Sanchez, L. & Perez-Meana, H. A robust image zero-watermarking using convolutional neural networks. In 2019 7th International Workshop on Biometrics and Forensics, IWBF. 1–5 (2019).

Gao, G. & Jiang, G. Bessel-Fourier moment-based robust image zero-watermarking. Multimed. Tools Appl. 74, 841–858. https://doi.org/10.1007/s11042-013-1701-8 (2015).

Shao, Z. et al. Robust watermarking scheme for color image based on quaternion-type moment invariants and visual cryptography. Signal Process Image Commun. 48, 12–21 (2016).

Quan, W., Tanfeng, S. & Shuxun, W. Concept and application of zero-watermark. Acta Electron Sin. 31, 214–216 (2003) (In Chinese).

Lu, Z., Wei, J., Li, C., Zhai, J. & Tong, D. Robust copyright tracing and trusted transactions using zero-watermarking and blockchain. Multimed. Tools Appl. 84, 1–38 (2024).

Pang, Z., Wang, M., Cao, L., Chai, X. & Gan, Z. Pairwise open-sourced dataset protection based on adaptive blind watermarking. Appl. Intell. 53(14), 17391–17410 (2023).

Thanh, T. M. & Tanaka, K. An image zero-watermarking algorithm based on the encryption of visual map feature with watermark information. Multimed. Tools Appl. 76, 13455–13471 (2017).

Daoui, A., Karmouni, H., Sayyouri, M. & Qjidaa, H. Robust image encryption and zero-watermarking scheme using SCA and modified logistic map. Expert Syst. Appl. 190, 116193 (2022).

Shao, Z., Shang, Y., Zhang, Y., Liu, X. & Guo, G. Robust watermarking using orthogonal Fourier-Mellin moments and chaotic map for double images. Signal Process 120, 522–531 (2016).

Ramakrishnan, S., Murugavel, A. M., Sathiyamurthi, P. & Ramprasath, J. Seizure detection with local binary pattern and CNN classifier. J. Phys. Conf. Ser. 1767(1), 012029 (2021).

Sedaghatjoo, Z., Hosseinzadeh, H., & Bigham, B. S. Local binary pattern (LBP) optimization for feature extraction. Preprint at arXiv:2407.18665. (2024).

Simonyan, K. & Zisserman, A. Very deep convolutional networks for large-scale image recognition. In 3rd Int Conf Learn Represent ICLR 2015 - Conf Track Proc (2015).

Fierro-Radilla, A., Nakano-Miyatake, M., Cedillo-Hernandez, M. et al. A robust image zero-watermarking using convolutional neural networks. In Proceedings of the 2019 7th International Workshop on Biometrics and Forensics (IWBF) 1–5 (2019).

Lorenz, E. N. Deterministic nonperiodic flow. J. Atmos. Sci. 20(2), 130–141 (1963).

Şahin, S. & Güzelis, C. “Chaotification” of real systems by dynamic state feedback. IEEE Antennas Propag. Mag. 52(6), 222–233. https://doi.org/10.1109/MAP.2010.5723276 (2010).

University of Southern California (2020) USC-SIPI Image Database. In: USC-SIPI Image Database. http://sipi.usc.edu/database/. Accessed 22 Nov 2021.

CVG Bonn (2014) Computer Vision Group. https://pages.iai.uni-bonn.de/gall_juergen/index.html. Accessed 9 Jan 2022.

El-Khanchouli, K. et al. Protecting medical images using a zero-watermarking approach based on fractional Racah moments. IEEE Access 13, 16978 (2025).

Taj, R. et al. A reversible-zero watermarking scheme for medical images. Sci. Rep. 14(1), 17320 (2024).

Huang, T., Xu, J., Yang, Y. & Han, B. Robust zero-watermarking algorithm for medical images using double-tree complex wavelet transform and Hessenberg decomposition. Mathematics 10(7), 1154 (2022).

Kang, X. et al. Robust and secure zero-watermarking algorithm for color images based on majority voting pattern and hyper-chaotic encryption. Multimed. Tools Appl. 79, 1169–1202 (2020).

Farhat, A. A., Darwish, M. M. & El-Gindy, T. M. Resnet50 and logistic Gaussian map-based zero-watermarking algorithm for medical color images. Neural Comput. Appl. 36(31), 19707–19727 (2024).

Tu, S., Jia, Y., Du, J. & Han, B. Application of zero-watermarking for medical image in intelligent sensor network security. CMES-Comput. Model. Eng. Sci. 136(1), 293 (2023).

Funding

Open access funding provided by The Science, Technology & Innovation Funding Authority (STDF) in cooperation with The Egyptian Knowledge Bank (EKB).

Author information

Contributions

Hager A. Gharib: conceptualization, methodology, software, visualization, writing (original draft preparation). Noha M. Abdelnapi: validation, investigation, supervision. Khalid M. Hosny: conceptualization, methodology, formal analysis, supervision, writing (review and editing).

Ethics declarations

Competing interests

The authors declare no competing interests.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Gharib, H.A., Abdelnapi, N.M.M. & Hosny, K.M. Robust zero-watermarking for color images using hybrid deep learning models and encryption. Sci Rep 15, 28906 (2025). https://doi.org/10.1038/s41598-025-09290-7
