Abstract

Shared Decision-Making (SDM), a patient-centered approach to medical care, improves treatment outcomes and patient satisfaction. However, traditional SDM struggles in handling complex medical scenarios, dynamic patient preferences, and multi-issue negotiations, particularly under incomplete information. The key challenge lies in capturing the fuzzy preferences of doctors and patients while ensuring efficient and fair multi-issue negotiations. This study introduces AutoSDM-DDPG, an automated SDM framework based on the Deep Deterministic Policy Gradient (DDPG) algorithm. Using an Actor-Critic network, the framework dynamically optimizes negotiation strategies to address multidimensional demands and resolve preference inconsistencies in treatment planning. Fuzzy membership functions are applied to model the uncertainty in patient preferences, enhancing representation and improving multi-issue negotiation outcomes. Experimental results show that AutoSDM-DDPG outperforms other models in key indicators, including social welfare, satisfaction disparity, and decision quality. It achieves faster and more equitable negotiations while balancing the needs of both doctors and patients. In scenarios involving multi-issue negotiations and complex preferences, AutoSDM-DDPG demonstrates exceptional adaptability, achieving efficient and fair decision-making.

Introduction

The doctor-patient relationship plays a crucial role in medical decision-making, directly influencing the patient’s treatment outcomes and satisfaction1. In recent years, Shared Decision-Making (SDM), a patient-centered approach to healthcare, has gained significant attention. SDM involves both doctors and patients actively participating in medical decisions, engaging in in-depth discussions about treatment goals, methods, and expected outcomes, and ultimately reaching a consensus decision2,3. Research has demonstrated that SDM enhances patients’ sense of involvement in the treatment process, reduces doctor-patient conflicts, and offers considerable benefits in improving patients’ quality of life and clinical treatment outcomes4. In clinical practice, SDM not only aids in optimizing resource allocation but also fosters more equitable and transparent decision-making in complex medical scenarios. However, despite the broad acceptance of SDM in the healthcare field, its practical implementation continues to face significant challenges.

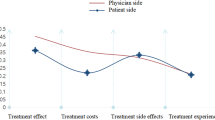

Traditional SDM methods primarily rely on doctors’ experience and limited communication with patients, which makes it difficult to address personalized needs in complex medical situations5,6. This issue is particularly evident in the insufficient modeling of patient preferences. Patient preferences are often multidimensional and complex, shaped by factors such as economic conditions, health status, and psychological factors, which traditional methods struggle to quantify and express accurately3,4. Moreover, there are frequently significant differences in the goals and preferences between doctors and patients. For instance, doctors tend to focus on treatment outcomes, while patients are often more concerned with cost and convenience7,8. This disparity in goals creates complexity and uncertainty in the negotiation process. Furthermore, the multi-issue nature of medical decision-making–covering factors like cost, efficacy, and side effects–makes it challenging to achieve a balance using one-dimensional or simple rule-based methods8. These challenges render traditional SDM methods inadequate for managing complex negotiation scenarios, highlighting the need for more efficient and intelligent solutions.

Agent-based multi-issue negotiation frameworks provide novel solutions to the complex negotiation challenges in SDM. By assigning independent intelligent agents to both parties (e.g., doctors and patients), these frameworks simulate their decision-making processes, significantly enhancing both negotiation efficiency and fairness9. However, these frameworks still encounter several limitations in complex scenarios: First, many frameworks rely on static heuristic rules, which make it challenging to dynamically adjust negotiation strategies in response to real-time changes in the medical context10. Second, treatment plans in real-world medical settings are typically multi-issue and nonlinear. When faced with high-dimensional and nonlinear decision problems, traditional frameworks’ linear utility functions struggle to adequately capture the multidimensional preferences of both parties11. Third, due to patients’ lack of medical expertise, their preferences are often vague and uncertain. Traditional frameworks generally fail to model and express this uncertainty and fuzziness precisely, which can result in lower negotiation efficiency and diminished decision quality12.

In response to the limitations of traditional intelligent agent frameworks in dynamic adjustment and multi-issue handling, Deep Reinforcement Learning (DRL), a cutting-edge technology in artificial intelligence, has demonstrated significant application potential. DRL integrates the dynamic optimization power of reinforcement learning with the feature extraction capabilities of deep neural networks, enabling it to learn optimal strategies in complex, high-dimensional environments13. The key advantage of this approach is its ability to capture the multidimensional preferences of both doctors and patients through historical data and real-time interactions, while dynamically adjusting negotiation strategies based on real-time feedback14. Particularly in multi-issue negotiations, DRL can effectively manage the nonlinear relationships and dynamic changes between issues, helping both parties achieve more efficient and equitable decisions15. Among various DRL algorithms, Deep Deterministic Policy Gradient (DDPG) is considered an ideal choice for addressing multi-issue negotiation problems due to its efficient learning capabilities in continuous action spaces16. Therefore, combining the dynamic optimization strengths of the DDPG algorithm with precise multi-issue modeling offers a new way to overcome the limitations of traditional SDM negotiation frameworks.

Literature review

Shared decision-making related concepts and methods

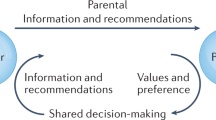

SDM is a crucial patient-centered concept in modern medicine, emphasizing equal collaboration between doctors and patients in medical decision-making. By sharing medical information, discussing treatment goals, and weighing the pros and cons of different treatment options, both parties can collaboratively arrive at a scientific and personalized decision17. The theoretical foundation of SDM is based on respecting patients’ autonomy while ensuring that the decision-making process remains both scientific and rational. Research has demonstrated that SDM can significantly improve patient satisfaction and treatment outcomes, while also enhancing the overall quality of care18.

To standardize the practical application of SDM, researchers have developed various theoretical frameworks. One of the most widely recognized is the Three-Dimensional Interaction Model proposed by Charles et al., which divides the doctor-patient interaction into three stages: information sharing, opinion negotiation, and consensus building1. This model emphasizes the equal participation of both doctors and patients, facilitating personalized decisions through the provision of scientific medical information and the expression of patient preferences. Elwyn et al. proposed a clinical practice model that further enhances the operability of SDM3. This model adopts a three-step approach: inviting patients to participate in decision-making, sharing medical information, and negotiating to reach a consensus. This structured approach provides clinicians with a clear, easy-to-implement guide, demonstrating strong adaptability in information-rich medical environments. However, its effectiveness may be limited in situations where patients possess lower levels of medical knowledge or have difficulty understanding medical information.

Although these theoretical models provide a structured framework for SDM, their practical implementation heavily depends on efficient tools and methods. Decision aids are currently the most widely utilized support tools. These tools assist patients in better understanding medical options and increase their involvement in decision-making by providing structured information, such as the pros and cons, risks, and costs associated with treatment options19. For example, the Cochrane Decision Aid Library presents a variety of tools tailored for different disease scenarios, including breast cancer screening and diabetes treatment8. The Ottawa Decision Support Framework (ODSF) is a comprehensive tool for implementing SDM. It offers extensive support for complex medical scenarios by assessing patient needs, designing personalized decision aids, and evaluating patients’ decision outcomes through follow-up assessments20. The application of ODSF in cancer screening and chronic disease management demonstrates that the framework significantly enhances the scientific rigor and personalization of decisions. However, its implementation typically relies on high-quality decision aids, resulting in higher development and application costs.

Despite these advances, SDM in practice still faces significant challenges, especially in handling multi-issue decisions, accurately modeling patient preferences, and dynamically optimizing negotiation strategies. Moreover, as healthcare delivery becomes increasingly complex, medical decisions often involve not only doctors and patients but also family members, multiple clinicians, or even entire care teams. In such contexts, the process of SDM begins to overlap with group decision-making (GDM), which emphasizes integrating input from multiple stakeholders to reach consensus. While SDM typically focuses on the dyadic interaction between doctor and patient, its foundational principles–mutual respect, joint participation, and information sharing–are consistent with GDM. In fact, SDM can be regarded as a specialized form of GDM, tailored to clinical contexts with a strong emphasis on patient autonomy and individualized care. This convergence is particularly evident in multidisciplinary medical scenarios, where reaching consensus requires balancing scientific evidence, clinical judgment, and patient values.

Agent negotiation technology

An agent is an intelligent computer system that can autonomously perform tasks within a specific environment and interact with other agents or human users21. In complex decision-making problems, agents support automation and efficient collaboration by perceiving the environment, reasoning through decisions, and taking actions. Agent negotiation refers to the process in which multiple intelligent agents interact within a shared environment, exchanging information and employing negotiation strategies based on their respective goals and constraints, ultimately reaching an agreement9. Compared to traditional human-led negotiations, agent-based automated negotiation offers significant advantages, including efficiency, by enabling the rapid processing of complex information; objectivity, by minimizing the influence of human emotions on decisions; and scalability, by handling multi-party, multi-issue negotiation scenarios22. These advantages make automated negotiation a crucial tool for addressing modern complex decision-making challenges.

Agent-based automated negotiation frameworks typically consist of three core components: negotiation goals, negotiation protocols, and negotiation agents23,24. Negotiation goals refer to the issues that need to be addressed during the negotiation process, which can be divided into single-issue and multi-issue negotiations based on the number of issues involved. Single-issue negotiation deals with a single, distinct issue, while multi-issue negotiation involves multiple interconnected issues, which are often more complex due to potential conflicts in preferences across the issues. In multi-issue negotiations, the solution is generally a combination of the selections made for each individual issue. These issues can be either quantitative (e.g., price, duration) or qualitative (e.g., color, size). Additionally, the complexity of the issues can be classified into linear and nonlinear problems, where linear problems involve simple metrics, while nonlinear problems involve more intricate attributes.

Negotiation protocols establish the rules and agreements governing communication between participants, outlining how proposals are exchanged, when agreements are reached, and how conflicts are resolved. A commonly used method in this context is the Alternating Offers Protocol (AOP)25. In AOP, agents take turns making offers until an agreement is reached or time expires. This method facilitates appropriate trade-offs and concessions throughout the negotiation process, helping the parties move closer to an optimal solution.

Negotiation agents are intelligent entities responsible for executing the negotiation process. They generate proposals, evaluate counterparty proposals, and respond according to established negotiation strategies. The design of negotiation agents can be systematically analyzed using the Bidding-Opponent-Acceptance (BOA) model proposed by Baarslag et al.26. The BOA model consists of three main components: a bidding strategy, an opponent model, and an acceptance strategy. The bidding strategy determines the proposals the agent puts forward; the opponent model estimates the preferences and strategy of the other party, whose goals may differ from the agent’s own; and the acceptance strategy determines whether the agent accepts the counterparty’s proposal based on certain conditions. This model offers a flexible decision-making framework for complex and dynamic negotiation environments by highlighting the dynamic interactions between these components.

Agent-based automated negotiation frameworks have been widely applied across various domains, including e-commerce27, resource scheduling28, and workflow planning29. In these fields, agent-based negotiation effectively facilitates negotiations in complex environments, optimizing decision-making processes. In healthcare, agent-based negotiation frameworks are used to resolve conflicts in decision-making between doctors and patients, improving the efficiency of diagnostic and treatment processes. For instance, the multi-agent negotiation model proposed by Elghamrawy30 enhances diagnostic efficiency and reduces the time required for conflict resolution by optimizing negotiations among doctors. However, these frameworks often rely on linear utility models, which are insufficient in addressing complex doctor-patient preference conflicts. In medical decision-making, preferences are typically nonlinear and fuzzy, rendering traditional linear utility-based models ineffective in handling complex negotiation scenarios. In decision-making contexts where nonlinear relationships need to be considered, user-preference-based approaches have been applied successfully in multi-issue negotiations31. Moreover, to address the fuzziness of preferences in the decision-making process, fuzzy theory has gradually been incorporated into automated negotiation models, leading to successful applications in areas such as job scheduling12 and bilateral trade negotiations32.

Deep reinforcement learning for automated negotiation

Recent years have witnessed significant progress in the application of DRL to automated negotiation. Unlike traditional reinforcement learning methods, DRL integrates deep neural networks to model high-dimensional state spaces, enabling intelligent agents to autonomously learn and optimize their decision-making strategies in complex, multi-dimensional environments. The primary advantages of DRL lie in its adaptability and flexibility, as it allows agents to continuously adjust their negotiation strategies by interacting with the environment, thereby responding to real-time changes in the negotiation context and improving both decision-making efficiency and quality. Studies have shown that DRL methods exhibit superior learning capabilities and greater efficiency compared to traditional approaches, especially in complex negotiation scenarios involving multiple issues and participants33,34.

DRL-based automated negotiation has been successfully applied across various fields, particularly in smart grid resource allocation and automated market trading. By interacting with other intelligent agents, DRL dynamically adjusts pricing strategies and optimizes resource allocation, thereby maximizing utility35,36. These applications highlight the advantages of DRL-based algorithms in multi-party decision-making and in scenarios involving complex constraints. However, DRL methods also face several challenges in practical applications, especially in terms of high computational costs and slow convergence speeds, which can lead to efficiency bottlenecks in real-time negotiations. Specifically, the training process of DRL typically requires a large number of samples and significant computational resources, which can slow down system responses when managing large-scale and complex negotiation tasks37,38.

Despite the success of DRL in various fields, it continues to face challenges when applied to complex medical decision-making, particularly in SDM. SDM typically involves multiple dynamically changing factors, with patient preferences often exhibiting fuzziness and uncertainty–issues that traditional DRL methods have not fully addressed. In medical decision-making, patients must consider not only the cost and efficacy of treatments but also balance personalized needs and emotional factors. The nonlinear relationships among these factors further complicate the negotiation process39. As a result, existing DRL methods struggle to efficiently handle such complex, fuzzy, and dynamic negotiation problems. To address these challenges, this study proposes a negotiation framework that combines the DDPG algorithm with fuzzy constraint theory. The framework uses the DDPG algorithm to dynamically adjust negotiation strategies based on real-time feedback, improving the adaptability of the negotiation process. Additionally, fuzzy constraint theory is employed to model the fuzzy preferences of both doctors and patients, allowing the model to more accurately capture the uncertainty in patients’ decisions during complex medical scenarios. By integrating these two approaches, the framework effectively addresses the limitations of traditional DRL algorithms in managing the complexity of multi-issue negotiations and preference fuzziness, offering a more efficient and intelligent solution–particularly in complex doctor-patient shared decision-making scenarios.

Methods

Modeling of the SDM negotiation scenarios

In this section, we focus on modeling the negotiation scenarios within SDM by designing a structured negotiation process and defining the environment in which agents interact. The primary goal is to simulate realistic doctor-patient interactions in multi-issue decision-making scenarios while ensuring that the negotiation framework is adaptable to complex and diverse medical contexts. This involves creating a negotiation environment, implementing a step-by-step process for offer exchanges, and utilizing utility functions to evaluate the outcomes. By doing so, we establish a foundation for understanding how agents can achieve optimal agreements in SDM negotiations.

Design of the negotiation process

SDM is a patient-centered treatment decision-making process where doctors and patients collaboratively discuss and decide on treatment options, considering multiple issues and various factors. To accurately simulate the real doctor-patient relationship, we have established a specific negotiation environment. In this environment, the negotiation involves two agents: the Doctor Agent (DA) and the Patient Agent (PA). Before the negotiation begins, these agents must agree on a negotiation protocol, P, which defines the valid actions each can take in any negotiation state. In this study, we adopt the AOP, a widely used protocol in multi-issue automated negotiations. Under this protocol, the doctor and patient take turns making offers until an agreement is reached or time expires. Throughout this process, both parties continuously exchange and evaluate offers, make appropriate concessions, and ultimately reach a joint treatment decision. The specific negotiation process involves the following key steps:

Negotiation Environment Setup: Each agent negotiates within an SDM environment that includes a domain of issues. This issue domain consists of n distinct, independent issues \(I(I_1,I_2,\ldots ,I_n)\), each representing an important aspect of medical decision-making (e.g., treatment cost, efficacy, side effects, or risks). In general, an issue may take discrete or continuous values; in this experiment, each issue is restricted to a finite set of discrete values.

Initialization: The doctor and patient agents define their preferences based on their individual needs and assign weights to each issue, which reflect the importance of each issue in the decision-making process.

Offer Exchange: Both parties alternately propose treatment plans (i.e., offers), each of which includes specific values for several issues (e.g., treatment cost, efficacy, side effects, etc.).

Utility Evaluation: Both parties evaluate the offers based on a utility function, calculating their satisfaction with each offer and determining the total utility.

Decision: The party receiving an offer either accepts it, in which case an agreement is reached, or rejects it and responds with a counteroffer. The exchange continues until an agreement is reached or time expires.

Through this process, both negotiating parties can make reasonable trade-offs and concessions in a multi-issue environment, ultimately reaching the optimal solution.
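The steps above can be sketched as a simple alternating-offers loop. This is a minimal illustration rather than the framework's actual implementation: the function name `alternating_offers`, the callback signatures, and the convention that the DA moves on even rounds are all assumptions made for the example.

```python
from typing import Callable, Optional, Tuple

Offer = Tuple[float, ...]  # one value per issue (hypothetical representation)


def alternating_offers(
    propose_da: Callable[[int], Offer],
    propose_pa: Callable[[int], Offer],
    accept_da: Callable[[Offer, int], bool],
    accept_pa: Callable[[Offer, int], bool],
    max_rounds: int,
) -> Tuple[Optional[Offer], int]:
    """Run a bilateral alternating-offers exchange.

    Returns (agreed_offer, round_index), or (None, max_rounds) if the
    deadline passes without agreement.
    """
    for t in range(max_rounds):
        # Assumption: the doctor agent proposes on even rounds and the
        # patient agent on odd rounds; the other side decides on acceptance.
        if t % 2 == 0:
            offer, receiver_accepts = propose_da(t), accept_pa
        else:
            offer, receiver_accepts = propose_pa(t), accept_da
        if receiver_accepts(offer, t):
            return offer, t
    return None, max_rounds


# Toy single-issue run (cost only): the DA starts high and concedes,
# the PA starts low and concedes.
result = alternating_offers(
    propose_da=lambda t: (10.0 - t,),
    propose_pa=lambda t: (2.0 + t,),
    accept_da=lambda offer, t: offer[0] >= 5.0,
    accept_pa=lambda offer, t: offer[0] <= 6.0,
    max_rounds=10,
)
```

In this toy run the agents agree in round 3 on a cost of 5.0, the first PA proposal that meets the DA's threshold.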

Modeling of SDM utility functions

In multi-issue negotiations within SDM, the design of the utility function is crucial, as it quantifies the impact of each issue on the decision-making processes of both doctors and patients. Traditional decision modeling often assumes that preferences are precise and quantifiable, with each issue evaluated using specific numerical values. For instance, treatment costs can be represented by a fixed amount, whereas efficacy and side effects are often quantified as single values. However, in medical decision-making, the preferences of patients and doctors are generally more complex. Patients may prioritize goals such as “minimizing treatment costs” or “reducing side effects as much as possible.” These preferences are inherently fuzzy, making them challenging to accurately represent with a single numerical value. Consequently, traditional utility function models struggle to effectively address this fuzziness and uncertainty.

To address this fuzziness, this study utilizes fuzzy constraint theory to model the utility functions of both doctors and patients. By incorporating fuzzy membership functions, we effectively capture this uncertainty, enabling the utility functions to more accurately reflect the actual needs of both parties12,32. In this study, each issue is represented using a trapezoidal fuzzy membership function, with multiple intervals defined to describe the satisfaction levels of patients and doctors regarding factors such as treatment cost, efficacy, and side effects. The definition and shape of the trapezoidal membership function are detailed in Eq. (1) and Fig. 1.

Here, a, b, c, d are the parameters that define the start and end points as well as the “shoulders” of the trapezoid, determining the acceptance interval and transition ranges; k is a normalization constant; and x denotes the value of a specific issue under consideration. Specifically, \(x \in [b, c]\) is the interval where the agent has the highest satisfaction, \(x<a\) or \(x>d\) represent unacceptable values, and \(x \in [a, b]\) or \(x \in [c, d]\) represent gradual transitions in satisfaction.
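A minimal sketch of a trapezoidal membership function that matches this parameter description is given below. Treating k as the height of the plateau (so that satisfaction equals k on \([b, c]\)) is an assumption of the sketch, as is the function name `trapezoid`.

```python
def trapezoid(x: float, a: float, b: float, c: float, d: float, k: float = 1.0) -> float:
    """Trapezoidal fuzzy membership value of x.

    a, d: outer bounds (satisfaction is 0 outside [a, d])
    b, c: shoulders (satisfaction is maximal on [b, c])
    k:    normalization constant, assumed here to be the plateau height
    """
    if not (a <= b <= c <= d):
        raise ValueError("expected a <= b <= c <= d")
    if x < a or x > d:
        return 0.0  # unacceptable value
    if b <= x <= c:
        return k  # highest satisfaction
    if x < b:
        return k * (x - a) / (b - a)  # rising transition on [a, b)
    return k * (d - x) / (d - c)  # falling transition on (c, d]


# Hypothetical cost preference: fully satisfied between 4 and 6,
# unacceptable below 2 or above 8.
satisfaction = trapezoid(3.0, a=2.0, b=4.0, c=6.0, d=8.0)
```

With these illustrative parameters, a cost of 3.0 lies halfway up the rising edge, so the membership value is 0.5.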

The utility functions of both the doctor and the patient need to consider the influence of multiple issues. We calculate the overall satisfaction of the DA or PA with the offer using a weighted sum. Specifically, the aggregate satisfaction function is shown in Eq. (2).

In this equation, n denotes the total number of negotiation issues, \(w_i\) is the weight of the i-th issue, \(f_i (o_i)\) is the fuzzy membership function value for issue i given offer \(o_i\), and o represents a vector of issue values that constitute a specific offer.
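Based on the symbols defined above, the weighted aggregation can be written as \(U(o)=\sum _{i=1}^{n} w_i f_i(o_i)\). The sketch below computes this sum; the function name `aggregate_satisfaction` and the two simple membership functions in the example are illustrative assumptions, not values from the study.

```python
from typing import Callable, Sequence


def aggregate_satisfaction(
    offer: Sequence[float],
    weights: Sequence[float],
    memberships: Sequence[Callable[[float], float]],
) -> float:
    """Weighted sum of per-issue fuzzy membership values for one offer."""
    if not (len(offer) == len(weights) == len(memberships)):
        raise ValueError("offer, weights and memberships must have equal length")
    return sum(w * f(o) for w, f, o in zip(weights, memberships, offer))


# Hypothetical two-issue example: cost (lower is better) and
# effectiveness (higher is better), each mapped into [0, 1].
cost_membership = lambda c: max(0.0, min(1.0, (5.0 - c) / 2.0))
effect_membership = lambda e: max(0.0, min(1.0, (e - 6.0) / 4.0))

utility = aggregate_satisfaction(
    offer=(3.0, 8.0),
    weights=(0.6, 0.4),
    memberships=(cost_membership, effect_membership),
)
```

Here the cost term contributes 0.6 × 1.0 and the effectiveness term 0.4 × 0.5, giving an aggregate satisfaction of about 0.8.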

This study focuses on the negotiation of treatment options for childhood asthma. Basic information about childhood asthma treatment was compiled from experienced doctors and from analysis of actual data, as shown in Table 1. The table lists the characteristics of five treatment options, including their ranges of cost, effectiveness, side effects, risk, and convenience. It is important to note that this data is intended for interpretive and experimental purposes only and is not suitable for direct clinical use. In practice, doctors and patients negotiate based on the treatment regimens provided in Table 1. For example, doctors may favor the “En-High Dose ICS/LABA+LTRA” regimen because it performs better in terms of therapeutic efficacy and risk management, whereas patients may favor the “En-High Dose ICS+Sustained-Release THP” regimen, which has advantages in terms of cost and convenience. Considering the preferences and limitations of both parties, they may agree on a compromise such as the “En-High Dose ICS/LABA+Sustained-Release THP” regimen, which balances treatment efficacy and risk control with cost and convenience to maximize mutual satisfaction.

Negotiation strategy

Negotiation strategy refers to the approach participants, such as doctors and patients, use to make decisions during the negotiation process. This involves considering their preferences, goals, and the current state of the environment to achieve an optimal outcome. In the context of SDM, developing effective negotiation strategies is crucial due to the inherent incompleteness and uncertainty of information. In scenarios with incomplete information, agents must predict the actions of the other party based on available data and past interactions, adjusting their strategies accordingly. Negotiation strategies are generally classified into two categories: time-dependent strategies and behavior-based strategies. Both categories utilize decision functions that map the offer state to the target utility, aiming to optimize the negotiation outcome for both parties.

Time-dependent strategies

Time-dependent strategies refer to strategies that evolve over the course of the negotiation process. The core idea behind these strategies is that, as negotiations progress, the passage of time can influence participants’ decisions. For instance, as discussions continue, participants may gradually relax their demands to eventually reach an agreement. Time-dependent strategies typically incorporate a time variable to adjust offers, thereby affecting participants’ choices and behaviors23. Equation (3) describes the time-based strategy model:

Here, u(t) represents the utility at time t, \(P_{min}\) and \(P_{max}\) are the minimum and maximum utilities, respectively, and F(t) is a time-varying function that controls the dynamic change of the utility u(t), reflecting the participants’ changing attitudes during the negotiation and encouraging the decision to gradually approach the optimal solution23. The function F(t) in the equation can be expressed by Eq. (4).

In this equation, k is a constant, T is the maximum time for negotiation, and c is the concession factor. For simplicity, k is typically set to 0. If \(0<c<1\), the agent holds its position for most of the negotiation and concedes only near the deadline, known as a Boulware strategy. If \(c>1\), the agent concedes quickly and soon approaches its reservation value, which is referred to as a Conceder strategy. If \(c=1\), the agent’s target utility decreases linearly, referred to as a Linear strategy40.
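The three concession behaviors can be compared numerically. The sketch below assumes the widely used polynomial form \(F(t)=k+(1-k)\left( \min (t,T)/T\right) ^{1/c}\) together with \(u(t)=P_{min}+(1-F(t))(P_{max}-P_{min})\), where T denotes the negotiation deadline; this form and the function names are assumptions made for illustration and may differ in detail from the equations used in the study.

```python
def concession(t: float, deadline: float, c: float, k: float = 0.0) -> float:
    """Assumed polynomial time function F(t), rising from k to 1 at the deadline."""
    if deadline <= 0 or c <= 0:
        raise ValueError("deadline and c must be positive")
    return k + (1.0 - k) * (min(t, deadline) / deadline) ** (1.0 / c)


def target_utility(
    t: float, deadline: float, c: float, p_min: float, p_max: float, k: float = 0.0
) -> float:
    """Target utility u(t), falling from p_max toward p_min as time passes."""
    return p_min + (1.0 - concession(t, deadline, c, k)) * (p_max - p_min)


# Target utility halfway through a 10-round negotiation for each strategy type.
boulware = target_utility(5, 10, c=0.5, p_min=0.2, p_max=1.0)
linear = target_utility(5, 10, c=1.0, p_min=0.2, p_max=1.0)
conceder = target_utility(5, 10, c=2.0, p_min=0.2, p_max=1.0)
```

Under these assumptions the Boulware agent still demands about 0.8 at the midpoint, the Linear agent about 0.6, and the Conceder agent about 0.43, which reflects the ordering described above.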

Behavior-based strategies

Behavior-based strategies adjust the current approach based on the actions of the other party in previous interactions. One of the most well-known behavior-based strategies is the Relative Tit-for-Tat (TFT) strategy, which determines an agent’s actions by referencing the opponent’s behavior in earlier rounds41. If the opponent made cooperative offers in previous rounds, the agent will respond with cooperative behavior. Conversely, if the opponent displayed adversarial or non-cooperative behavior, the agent will reciprocate with similar non-cooperative actions. As shown in Eq. (5), the TFT strategy reciprocates by offering concessions proportional to the concessions the opponent made \(\delta\) rounds earlier.

In this equation, \(x_{a\rightarrow b}^{t_{n+1}}[j]\) represents the offer on issue j sent by agent a to agent b at time \(t_{n+1}\). It is obtained by scaling agent a’s previous offer \(x_{a\rightarrow b}^{t_{n-1}}[j]\) by the proportion of the opponent’s earlier concession. \(\min _j^a\) and \(\max _j^a\) denote the minimum and maximum allowable values of issue j for agent a; clipping to these bounds ensures that the offer stays within the specified range.
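A minimal sketch of this reciprocation rule for a single issue is given below. It assumes the standard relative tit-for-tat formulation, in which the agent’s previous offer is multiplied by the ratio of two consecutive opponent offers taken \(\delta\) steps back and then clipped to the allowable range; the function name, the list-based history, and the indexing convention are assumptions of the example.

```python
from typing import Sequence


def relative_tft_offer(
    own_previous: float,
    opponent_history: Sequence[float],
    lower: float,
    upper: float,
    delta: int = 1,
) -> float:
    """Next offer on one issue under an assumed relative tit-for-tat rule.

    opponent_history holds the opponent's past offers on this issue, oldest
    first. The ratio of the two consecutive offers delta steps back measures
    the opponent's relative concession.
    """
    if delta < 1:
        raise ValueError("delta must be at least 1")
    if len(opponent_history) < delta + 1:
        raise ValueError("not enough opponent offers for the chosen delta")
    older = opponent_history[-(delta + 1)]
    newer = opponent_history[-delta]
    if newer == 0:
        raise ValueError("cannot compute a ratio against a zero offer")
    scaled = (older / newer) * own_previous
    # Clip to the agent's allowable range for this issue.
    return min(max(scaled, lower), upper)


# Hypothetical cost issue: the patient raised its offer from 2.0 to 2.5,
# so the doctor lowers its own previous offer of 5.0 by the same proportion.
next_cost = relative_tft_offer(5.0, [2.0, 2.5], lower=1.0, upper=6.0)
```

In this example the opponent's ratio is 2.0 / 2.5 = 0.8, so the doctor's offer moves from 5.0 to 4.0, mirroring the patient's 25% move in the opposite direction.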

SDM negotiation framework overview

In the SDM process, constructing an efficient negotiation framework is essential for facilitating decision-making between doctors and patients. The proposed DDPG-based SDM automated negotiation framework, AutoSDM-DDPG, comprises multiple modules that address key aspects of SDM negotiation. These include the setup of the negotiation environment, decision strategies, bidding strategies, acceptance strategies, and more. The overall structure of the framework is illustrated in Fig. 2.

In this framework, the SDM Agent functions as the core of the negotiation process, leveraging the DRL model to learn and optimize strategies within the SDM context. The primary role of the SDM Agent is to dynamically select optimal strategies based on the state information of the negotiation environment, aiming to maximize the utility for both the doctor and the patient and to reach a consensus. Specifically, the SDM Agent comprises two main components: the acceptance strategy and the offer strategy. Throughout the negotiation process, the SDM Agent employs the DDPG algorithm from deep reinforcement learning to continuously adjust and refine its decision-making process. The overall procedure is outlined as follows:

First, each agent constructs the negotiation domain knowledge based on its own goals \(\Omega\), the issue domain \(I(I_1,I_2,\ldots ,I_n)\), the possible range of values for each issue \(I_i=\langle a,b,c,d\rangle\), and the weight \(w_i\) assigned to each issue. On this basis, the agent uses a fuzzy preference model to quantify and model these preferences and goals, ensuring that the fuzzy and uncertain preferences of both the doctor and the patient on different issues are accurately represented.

Next, the agent uses an Actor-Critic network architecture based on the DDPG algorithm to select and evaluate actions. In the first round of the negotiation, the agent selects an offer from the optimal offer set and sends it to the opponent. If the opponent accepts the offer, the negotiation ends. If not, the opponent agent returns a counteroffer. At this point, the opponent’s counteroffer \(B_t^o\) and the time step t constitute the next round’s environmental feedback state \(S_{(t+1)}\). Starting from the second round, the agent selects the next action based on the current negotiation state \(S_{(t+1)}\) and its fuzzy preference utility function U through the Actor network. This decision includes whether to accept the opponent’s offer \(B_t^o\) (i.e., whether to reach an agreement) or to reject it and propose a new offer \(B_{(t+1)}\) (i.e., propose a new treatment plan). The agent then provides the selected action as feedback to the environment.

Next, the Critic network computes the loss (or value evaluation) based on the action chosen by the Actor network. The Critic’s role is to assess the effectiveness of the agent’s behavior by calculating the difference between the utility of the current action and the expected utility, i.e., the Q-value. This Q-value loss is then used to guide the adjustment of the Actor network, continuously refining its strategy to ensure that the agent makes more efficient and beneficial decisions in subsequent actions.

Through this iterative process, the agent continuously learns and adjusts using the DDPG algorithm, gradually optimizing its offer and acceptance strategies. Specifically, in each round, the agent calculates the satisfaction based on the utility of the current offer and evaluates the satisfaction of each issue using fuzzy membership functions. These evaluations are used as the basis for the next round’s decision, allowing each agent to adjust its offer or acceptance strategy based on the opponent’s response. Ultimately, after several rounds of alternating offers, the agent finds an optimal balance among multiple issues, helping both the doctor and the patient reach a treatment plan that is acceptable to both parties.
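The alternating-offers loop described above can be sketched as follows. The `StubAgent` class is a hypothetical stand-in for the learned acceptance and offer strategies: its linear threshold and concession rules are assumptions chosen only to make the control flow runnable, not the DDPG policies themselves.

```python
from typing import Callable, List, Optional, Tuple

Offer = List[float]


class StubAgent:
    """Hypothetical stand-in for a learned agent (not the paper's networks)."""

    def __init__(self, utility: Callable[[Offer], float], ideal: Offer) -> None:
        self.utility = utility
        self.ideal = ideal

    def accepts(self, offer: Offer, t: int, deadline: int) -> bool:
        # Assumed rule: the acceptance threshold relaxes linearly from 1.0 to 0.5.
        return self.utility(offer) >= 1.0 - 0.5 * t / deadline

    def propose(self, opponent_offer: Optional[Offer], t: int, deadline: int) -> Offer:
        if opponent_offer is None:
            return list(self.ideal)  # opening offer: the agent's own ideal plan
        step = t / deadline  # concede further toward the opponent as time passes
        return [(1 - step) * mine + step * theirs
                for mine, theirs in zip(self.ideal, opponent_offer)]


def negotiate(first: StubAgent, second: StubAgent,
              deadline: int) -> Tuple[Optional[Offer], int]:
    """Alternate offers until one side accepts or the deadline passes."""
    offer = first.propose(None, 0, deadline)
    responder, proposer = second, first
    for t in range(1, deadline + 1):
        if responder.accepts(offer, t, deadline):
            return offer, t  # agreement reached in round t
        offer = responder.propose(offer, t, deadline)  # reject and counteroffer
        responder, proposer = proposer, responder
    return None, deadline  # conflict deal: no agreement by the deadline
```

In the framework itself, `accepts` and `propose` would be driven by the acceptance and bidding strategy networks rather than by fixed rules.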

The main steps of the proposed AutoSDM-DDPG negotiation framework are shown in Algorithm 1:

For example, in a typical negotiation process for childhood asthma treatment, the DA and PA each initialize their preferences and issue weights (see Table 3). In the first round, DA might propose a plan such as [Cost = 4.0, Effectiveness = 9, Side-effects = 10, Risk = 8, Convenience = 8], while PA proposes [Cost = 3.0, Effectiveness = 8, Side-effects = 5, Risk = 6, Convenience = 9]. Both agents evaluate these offers using the fuzzy membership functions and aggregate satisfaction (Eqs. (1) and (2)). If either party is not satisfied, they generate counter-offers with the Actor network and update their negotiation strategies through reinforcement learning (Eqs. (9)–(11)). This process continues, with offers and responses exchanged in each round, until both agents reach an agreement or the negotiation deadline is met. The changes in each agent’s satisfaction value over negotiation rounds can be visualized using a simple line graph to illustrate how the negotiation converges to a mutually acceptable solution.

Pre-training

In the AutoSDM-DDPG framework, pre-training is a crucial step to enhance the learning efficiency and stability of the framework, especially when dealing with complex multi-issue negotiation tasks. The main purpose of pre-training the Actor network is to provide a good initial policy, reduce random exploration, and improve both the learning efficiency and convergence stability of the AutoSDM-DDPG framework. By initializing the Actor with prior knowledge from pre-training, the negotiation agent can adapt more quickly to the multi-issue negotiation environment, leading to better performance during formal training and real-world deployment.

The data used for pre-training are generated through simulation, based on the negotiation scenarios and preference models established in this study. Specifically, we construct simulated doctor-patient negotiation instances by sampling preferences and issue weights within clinically reasonable ranges, reflecting the variability and diversity encountered in actual medical decision-making. This approach ensures that the pre-training phase captures realistic patterns and prepares the model for subsequent learning with real or more complex data.

In this study, we employed a pre-training method based on simulated data, aiming to provide an optimized starting point for the subsequent formal training phase.

The first step of the pre-training is to generate labeled data suitable for the Actor network, primarily by generating the training dataset through Eq. (6):

Here, \({U}_{Issue}(u(t),{V}_{Issue})\) denotes the issue-specific utility value. u(t) represents the utility value of the treatment plan, and \(V_{Issue}\) indicates the preference value for each issue, reflecting the degree of preference that both the doctor and the patient have for different issues (such as treatment cost, efficacy, etc.). The 20 data sets generated through Eq. (6) are used as the training data for the Actor network during the pre-training process. These data include preferences and utility values for different issues, and are used to train the network to make decisions in various scenarios.

During the pre-training process, the Actor network learns through the backpropagation algorithm, gradually adjusting its parameters to learn a reasonable strategy from historical data. Specifically, the goal of the Actor network is to generate the optimal offer based on the current state and historical decisions, and optimize its strategy through feedback provided by the Critic network.

The Critic network, in turn, is responsible for evaluating the value of the current offer, calculating the Q-value, and providing feedback to the Actor network to guide it in adjusting its strategy to maximize long-term utility16. The Critic network evaluates the Q-value of the current state-action pair using Eq. (7) and updates its parameters:

Here, \(r_t\) is the reward at time t, \(\gamma\) is the discount factor, \(Q^{'}\) is the Q-value of the target Critic network, and \(s_{(t+1)}\) and \(a_{(t+1)}\) are the next state and next action, respectively. The Critic network updates its parameters by minimizing the error between the actual Q-value and the target Q-value. After receiving feedback from the Critic network, the Actor network optimizes its strategy through the gradient update using Eq. (8):

In Eq. (8), \({\nabla }_{{\theta }^{\pi }}J({\theta }^{\pi })\) denotes the gradient of the policy objective with respect to the Actor network parameters \({\theta }^{\pi }\); \({\pi }_{{\theta }^{\pi }}({s}_{t})\) is the policy function; and \(Q(s_t, a_t)\) is the Critic’s estimate of the state-action value16.
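For orientation, the two updates described here take the following form in the standard DDPG formulation. This is a sketch in generic notation: the minibatch size \(N\), the Critic loss \(L\), and the target value \(y_t\) are symbols introduced for illustration, and the exact form of Eqs. (7) and (8) may differ.

$$\begin{aligned} y_t&=r_t+\gamma Q^{'}\left( s_{(t+1)},a_{(t+1)}\right) ,\qquad L=\frac{1}{N}\sum _{t}\left( y_t-Q(s_t,a_t)\right) ^{2},\\ {\nabla }_{{\theta }^{\pi }}J({\theta }^{\pi })&\approx \frac{1}{N}\sum _{t}{\nabla }_{a}Q(s_t,a)\big |_{a={\pi }_{{\theta }^{\pi }}(s_t)}\,{\nabla }_{{\theta }^{\pi }}{\pi }_{{\theta }^{\pi }}(s_t) \end{aligned}$$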

This update mechanism ensures that the Actor network can gradually optimize its strategy based on the feedback from the Critic network, making more effective decisions in complex negotiation environments.
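As a small numerical illustration of the Critic side of this mechanism, the sketch below computes a bootstrapped target and a mean squared error over a batch. It assumes the standard DDPG target \(r_t+\gamma Q^{'}(s_{(t+1)},a_{(t+1)})\); the function names and the `done` flag for terminal steps are illustrative assumptions.

```python
from typing import Sequence


def td_target(reward: float, gamma: float, next_q_target: float,
              done: bool = False) -> float:
    """Target value r + gamma * Q'(s', a'); terminal steps do not bootstrap."""
    return reward if done else reward + gamma * next_q_target


def critic_mse(q_values: Sequence[float], targets: Sequence[float]) -> float:
    """Mean squared error between the Critic's estimates and their targets."""
    return sum((q - y) ** 2 for q, y in zip(q_values, targets)) / len(q_values)
```

Minimizing `critic_mse` with respect to the Critic parameters corresponds to reducing the error between the estimated and target Q-values.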

Acceptance strategy net

The acceptance strategy network dynamically optimizes the acceptance and rejection decisions in the multi-issue negotiation process through the Actor-Critic structure. The Actor network generates acceptance probabilities based on the current state and action, and selects the final action through Softmax. The Critic network provides the state-action value function, assisting the Actor network in adjusting its strategy to improve the negotiation outcome. Its architecture is shown in Fig. 3.

Actor Network: Responsible for generating the acceptance or rejection decision. Its main function is to generate a probability distribution for the acceptance action based on the current state. The input to the Actor network is the current state \(s_{(t+1)}\), a six-dimensional vector consisting of the five issue values of the opponent’s offer \(B_t^o\) (cost, risk, treatment duration, efficacy, convenience) and the time t. Through a series of fully connected layers with ReLU activation functions and a Softplus output function, the network finally outputs a non-negative action value a.

Choose action: After the Actor network generates an action value a, it normalizes it using the Softmax function to calculate the probability distribution of each action. Then, a discrete sampling strategy is used to select a specific action a (0: reject, 1: accept) from this distribution.
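The action selection step can be sketched as below, assuming the Actor produces one score per discrete action (index 0 for reject, index 1 for accept). The two-score layout and the function names are assumptions made for illustration.

```python
import math
import random
from typing import List, Sequence


def softmax(scores: Sequence[float]) -> List[float]:
    """Normalize scores into probabilities (shifted by the max for stability)."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def choose_action(scores: Sequence[float], rng: random.Random) -> int:
    """Sample 0 (reject) or 1 (accept) from the Softmax distribution."""
    probs = softmax(scores)
    return rng.choices([0, 1], weights=probs, k=1)[0]
```

Sampling from the distribution, rather than always taking the most probable action, keeps some exploration in the acceptance decision during training.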

Critic Network: Used to evaluate the value function Q(s, a) of a given state-action pair, i.e., the expected return of the action. The input to the Critic network is the concatenation of the current state \(s_{(t+1)}\) and the action a, which passes through multiple fully connected layers and uses a ReLU activation function. The network finally outputs the action value estimate Q(s, a). The loss calculation for the acceptance strategy network is shown in Algorithm 2:

Bidding strategy net architecture

In this study, the bidding strategy network is responsible for generating the offer vector for the treatment plan. In the context of multi-issue negotiations, the agent generates an offer that includes the utility of multiple issues based on the current negotiation state. Specifically, the bidding network outputs a 5-dimensional vector \(B=[I_1,I_2,I_3,I_4,I_5]\), where each element \(I_i\) represents the utility value for a particular issue, ranging from 0 to 1. These issues include treatment cost, efficacy, side effects, risks, and treatment convenience.

If the agent rejects the current offer, a counteroffer will be made based on the current negotiation status. This process is achieved through continuous control, with decision-making utilizing samples drawn from a normal distribution. The normal distribution \(\mathcal {N}(\mu ,{\sigma }^{2})\) is used here to model the utility of the offer, with its probability density function as shown in Eq. (9).

Here, f(x) is the probability density function of the normal distribution. x is the random variable (in our application, the utility value of the offer), \(\mu\) is the mean, and \({\sigma }^{2}\) is the variance. These two parameters are typically generated by a neural network to represent the expected utility and uncertainty of each issue. Based on this distribution, the utility of each issue is generated, which subsequently determines the final offer.

The agent first evaluates whether to accept the current offer and end the negotiation using the acceptance strategy network. If the agent rejects the current offer, it generates a counteroffer through the bidding strategy network. The architecture of the bidding strategy network is shown in Fig. 4. The input to the bidding strategy network is the current state \({s}_{t+1}\), which includes a six-dimensional vector containing the opponent’s offer \({B}_{t}^{o}\) (cost, risk, treatment duration, efficacy, convenience) and the time step t. The Actor network then generates the mean and standard deviation of the utility values for each issue based on the current state. These utility values are generated by sampling from a normal distribution.

In the offer generation process, the mean \({\mu }_{i}\) and standard deviation \({\sigma }_{i}\) for each issue are first output by multiple Actor networks, and the utility value of each issue is then sampled using Eq. (10):

Here, \(z_i\) is a random variable sampled from the standard normal distribution \(\mathcal {N}(\textrm{0,1})\), which generates the utility value for each issue. Through this mechanism, the bidding network is able to balance the expected utility and uncertainty for each issue, and generate an offer \({B}_{t+1}\) that meets the negotiation requirements.
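The sampling mechanism can be sketched as follows, assuming each issue utility is formed as \({\mu }_{i}+{\sigma }_{i}z_i\) with \(z_i\) drawn from the standard normal distribution. Clipping the result to \([0, 1]\) is an added assumption, motivated by the stated range of the offer vector; the function name is illustrative.

```python
import random
from typing import List, Sequence


def sample_offer(means: Sequence[float], stds: Sequence[float],
                 rng: random.Random) -> List[float]:
    """Draw one utility per issue as mu + sigma * z, with z ~ N(0, 1)."""
    offer = []
    for mu, sigma in zip(means, stds):
        z = rng.gauss(0.0, 1.0)  # standard normal noise
        value = mu + sigma * z
        offer.append(min(1.0, max(0.0, value)))  # assumed clipping to [0, 1]
    return offer
```

A larger \({\sigma }_{i}\) spreads the sampled offers more widely around the mean, which trades expected utility for exploration on that issue.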

The Critic network evaluates the utility of the offer by calculating the Q-value of the current state and offer pair. The input to the Critic network is the current state \(s_t\) and the offer \(a_t\), and it outputs the corresponding Q-value, which represents the utility of the offer in the current state. The output of the Critic network is used to provide feedback to the Actor network to optimize the offer strategy. The structure of the Critic network is similar to that of the Actor network, consisting of multiple fully connected layers, and it outputs a scalar Q-value representing the action value. The loss calculation for the bidding strategy network is shown in Algorithm 3.

Reward function

For the reward function, the acceptance strategy network and the bidding strategy network share the same reward function. Given the deadline T, issue weights \(\omega\) and the utility U(o) of the final offer o, the rewards for DA and PA are shown in Eq. (11).

In Eq. (11), \(R_t\) denotes the reward received at the end of negotiation. Here, \(\omega ^t\) is the vector of issue weights at the final negotiation round \(t_f\), and U(o) is the utility of the final agreed offer o. If the two agents reach an agreement before the negotiation deadline (\(t_f < T\) and \(t_f \ne 0\)), the reward is set as the weighted utility of the agreement, directly reflecting the overall satisfaction from the negotiation outcome. If the deadline T is reached without agreement (a conflict deal), the reward is set to zero, which penalizes negotiation failure and discourages agents from prolonging negotiations without reaching consensus. This reward structure encourages both agents to reach a mutually beneficial agreement efficiently and to maximize the weighted sum of their preferences, aligning the negotiation dynamics with the goal of timely, satisfactory shared decision-making.
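The reward rule described above can be sketched as follows. It assumes that the weighted utility is the sum of per-issue utilities multiplied by their weights, and it follows the stated condition \(0< t_f < T\) for a successful agreement; the function name and argument layout are illustrative.

```python
from typing import Optional, Sequence


def terminal_reward(issue_utilities: Optional[Sequence[float]],
                    weights: Sequence[float],
                    t_final: int, deadline: int) -> float:
    """Weighted utility of the agreement, or 0 for a conflict deal."""
    agreed_in_time = issue_utilities is not None and 0 < t_final < deadline
    if not agreed_in_time:
        return 0.0  # no agreement before the deadline
    return sum(w * u for w, u in zip(weights, issue_utilities))
```

Because the reward is zero whenever the deadline passes without agreement, any agreement with positive utility is preferable to a conflict deal.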

Experiment results and discussion

To evaluate the proposed model, we conduct multiple negotiation experiments across various scenarios using the AutoSDM-DDPG simulation. This section presents the evaluation metrics and discusses the experimental results.

Evaluation metrics

- Average Doctor ASV (\(Avg.{ASV}_{doctor}\)): This metric represents the average aggregate satisfaction of doctors across all negotiation experiments where an agreement was reached. The calculation method is shown in Eq. (12).

$$\begin{aligned} Avg.{ASV}_{doctor}=\sum _{i=1}^{Agr_{\textrm{total}}} {U}_{DA}\left( {B}_{agree}^{i}\right) /Agr_{\textrm{total}} \end{aligned}$$ (12)

where \(Agr_{\textrm{total}}\) represents the total number of agreements reached, \({B}_{agree}^{i}\) denotes the agreement reached in the i-th negotiation, and \({U}_{DA}\) stands for the utility function of the doctor.

- Average Patient ASV (\(Avg.{ASV}_{patient}\)): This metric represents the average patient satisfaction across all negotiation experiments where an agreement was reached. The calculation method is shown in Eq. (13).

$$\begin{aligned} Avg.{ASV}_{patient}=\sum _{i=1}^{Agr_{\textrm{total}}} {U}_{PA}\left( {B}_{agree}^{i}\right) /Agr_{\textrm{total}} \end{aligned}$$ (13)

where \({U}_{PA}\) represents the patient’s utility function.

- The Combination of ASV (CASV): This metric represents the combined societal welfare utility, which is the sum of \(Avg.{ASV}_{doctor}\) and \(Avg.{ASV}_{patient}\). It can be calculated using Eq. (14).

$$\begin{aligned} CASV=Avg.{ASV}_{\textrm{doctor}}+Avg.{ASV}_{\textrm{patient}} \end{aligned}$$ (14)

- The Difference of ASV (DASV): This metric represents the absolute difference between \(Avg.{ASV}_{doctor}\) and \(Avg.{ASV}_{patient}\). A smaller DASV indicates an agreement that satisfies both parties rather than an extreme one that favors one side. It can be calculated using Eq. (15).

$$\begin{aligned} DASV=\mid Avg.{ASV}_{\textrm{doctor}}-Avg.{ASV}_{\textrm{patient}}\mid \end{aligned}$$ (15)

- Average Negotiation Rounds (Avg.R): This metric represents the average number of negotiation rounds required per negotiation experiment. It can be calculated using Eq. (16).

$$\begin{aligned} Avg.R=\sum _{i=1}^{Agr_{\textrm{total}}} {R}_{i}/Agr_{\textrm{total}} \end{aligned}$$ (16)

where \(R_i\) denotes the number of negotiation rounds required to reach an agreement in the i-th negotiation experiment between the doctor and the patient.
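The five metrics can be computed together from a list of agreed negotiations, as in the sketch below. It takes DASV as the absolute difference between the two averages, as the metric's name and description indicate; the `Agreement` record and the dictionary keys are illustrative names.

```python
from typing import Dict, List, NamedTuple


class Agreement(NamedTuple):
    doctor_utility: float   # U_DA of the agreed offer
    patient_utility: float  # U_PA of the agreed offer
    rounds: int             # rounds needed to reach the agreement


def summarize(agreements: List[Agreement]) -> Dict[str, float]:
    """Compute Avg.ASV (doctor, patient), CASV, DASV and Avg.R over agreements."""
    n = len(agreements)
    if n == 0:
        raise ValueError("at least one agreement is required")
    avg_doctor = sum(a.doctor_utility for a in agreements) / n
    avg_patient = sum(a.patient_utility for a in agreements) / n
    return {
        "avg_asv_doctor": avg_doctor,
        "avg_asv_patient": avg_patient,
        "casv": avg_doctor + avg_patient,       # combined social welfare
        "dasv": abs(avg_doctor - avg_patient),  # satisfaction gap
        "avg_rounds": sum(a.rounds for a in agreements) / n,
    }
```

Only negotiations that ended in agreement enter these averages, matching the definition of \(Agr_{\textrm{total}}\).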

Experimental design

Table 2 presents the agents used in the experiments. The comparison models include the fuzzy-constrained time-based negotiation model (ANF-TIME)23, the fuzzy constraint-directed agent-based negotiation model for SDM (FCAN)39, and the agent-based negotiation framework with fuzzy constraints and genetic algorithms (ANFGA)42. Two types of experiments were designed to evaluate both the performance of the negotiation models and the effectiveness of the negotiation process.

Experiment 1: To evaluate the negotiation performance of frameworks under different time constraints, this experiment compares the negotiation performance of the AutoSDM-DDPG framework with other negotiation frameworks in environments with varying deadlines. The experimental design includes five different deadlines (10, 15, 20, 25, and 30 rounds), with a fixed number of issues (5 issues).

Experiment 2: To evaluate the negotiation performance of models with varying solution space sizes, this experiment compares the negotiation performance of the AutoSDM-DDPG framework with other negotiation frameworks in environments with different numbers of issues. The experimental design includes four different issue sets (3, 5, 7, and 9 issues), with a fixed number of negotiation rounds (20 rounds).

To ensure the reliability of the negotiation outcomes, this study averages the results of 500 simulations conducted under different time constraints and numbers of issues. The experiment employs data gathered by Lin et al.39 on doctors’ and patients’ preferences for childhood asthma treatment plans. These treatment plans address issues such as cost, effectiveness, side effects, risk, and convenience. Detailed doctor-patient preference data and negotiation domain configurations are provided in Table 3, which includes issue value preferences and weight preferences. Utility preferences are defined using a set of trapezoidal fuzzy membership functions. The weight set W consists of values in \([0, 1]\), chosen so that the issue weights sum to 1. In Eq. (4), \(c=1\) and \(k=0\).

The parameter settings for all comparative methods are as follows. For AutoSDM-DDPG, the learning rate was set to 0.001, the discount factor (\(\gamma\)) to 0.99, the number of hidden units in each neural network layer to 128, and the standard deviation of exploration noise to 0.2. For the baseline methods (ANFGA, FCAN, ANF-TIME), parameters were set according to their original publications23,39,42 or to commonly used defaults when not specified. For example, ANFGA used a population size of 100 and 200 generations; FCAN and ANF-TIME adopted fuzzy membership function parameters based on the clinical issue ranges listed in Table 1. All methods used the same negotiation deadlines and issue domains to ensure fair comparison.

Although we recognize that modern DRL algorithms such as Proximal Policy Optimization (PPO) and Soft Actor-Critic (SAC) are widely adopted and effective in general continuous control and multi-agent domains, there are several reasons why they are not included as baselines in our current experiments. First, the negotiation scenario studied in this work is a highly customized, multi-issue, discrete-and-continuous hybrid environment with fuzzy constraints and unique acceptance/bidding structures, which are quite different from the standard benchmark tasks for which PPO and SAC are typically designed and tuned. Implementing PPO or SAC to fully comply with our framework would require substantial nontrivial modifications, including designing specialized reward shaping, multi-agent extensions, and customized policy structures. Such adaptations would go far beyond a straightforward application of existing PPO/SAC code, and may risk unfairly disadvantaging those methods or requiring a dedicated benchmarking study in its own right. Second, to ensure a fair and meaningful comparison, each algorithm would need to be carefully tuned for the unique characteristics of the SDM negotiation process, which is itself a substantial research effort. Therefore, for this paper, we have chosen to focus on direct comparisons with methods that have been previously established and validated specifically for multi-issue automated negotiation. We believe this choice provides a clear and relevant performance reference for the domain. Nevertheless, we fully acknowledge that the inclusion of advanced DRL algorithms such as PPO and SAC is a valuable direction for future work, and we plan to explore such comparisons in subsequent research.

Experimental results

To assess the effectiveness of the AutoSDM-DDPG in SDM, this section presents a comparison of the model’s performance with other comparative models under various experimental settings. The experimental design includes two types of comparisons: one compares negotiation performance under different deadline rounds, and the other compares the impact of the number of issues on negotiation efficiency and effectiveness. The experimental results demonstrate that AutoSDM-DDPG outperforms the other comparative models across several key metrics, highlighting its superiority in handling complex SDM problems.

Comparative experiments with different number of deadline rounds

Table 4 summarizes the experimental results of multiple models across different deadline rounds (10, 15, 20, 25, and 30). Bold entries mark the best performance on each comparison metric. Our analysis shows that AutoSDM-DDPG delivers strong results, particularly in terms of social welfare (CASV) and doctor-patient satisfaction (\(Avg.{ASV}_{doctor}\) and \(Avg.{ASV}_{patient}\)).

In terms of \(Avg.{ASV}_{doctor}\) and \(Avg.{ASV}_{patient}\), AutoSDM-DDPG performed strongly overall. At deadlines of 10, 15, and 20 rounds, AutoSDM-DDPG achieved significantly higher \(Avg.{ASV}_{doctor}\) than the other models. At the 30-round deadline, AutoSDM-DDPG’s \(Avg.{ASV}_{doctor}\) (0.620) was slightly lower than that of ANF-TIME (0.621), but the difference was minimal (0.001). This indicates that, in extended negotiation processes, AutoSDM-DDPG can maintain relatively high \(Avg.{ASV}_{doctor}\), better meeting doctors’ needs in complex SDM scenarios. In terms of \(Avg.{ASV}_{patient}\), AutoSDM-DDPG performed worse than FCAN, but the gap remained within the range of 0.01 to 0.028. Notably, FCAN’s \(Avg.{ASV}_{doctor}\) is significantly lower than AutoSDM-DDPG’s, suggesting that FCAN achieves higher \(Avg.{ASV}_{patient}\) at the expense of excessive concessions by doctors. From a social welfare perspective, AutoSDM-DDPG performs well overall, effectively capturing patients’ preferences and needs while minimizing concessions from doctors, ensuring a balanced consideration of both parties’ interests.

In terms of societal welfare utility (CASV), as shown in Fig. 5, AutoSDM-DDPG consistently achieves a high CASV, particularly in environments with larger deadline rounds. For instance, in the experiment with a 20-round deadline, the CASV of AutoSDM-DDPG is 1.443, surpassing that of FCAN (1.299) and ANFGA (1.346). When the number of deadline rounds was increased to 30, AutoSDM-DDPG continued to maintain a high CASV level at 1.398. Additionally, both \(Avg.{ASV}_{doctor}\) and \(Avg.{ASV}_{patient}\) remain above 0.6, demonstrating that AutoSDM-DDPG effectively balances the needs of doctors and patients during the negotiation process, promoting fairer and more efficient decision-making.

As shown in Fig. 6, AutoSDM-DDPG achieves a good balance in terms of the doctor-patient satisfaction gap (DASV). At a deadline of 20 rounds, the DASV of AutoSDM-DDPG is 0.113, well below that of FCAN (0.277) and slightly below that of ANFGA (0.122). As the number of deadline rounds increased, the DASV for all models showed a slight increase. However, AutoSDM-DDPG continued to maintain a low DASV. At the 30-round deadline, its DASV was 0.158, still lower than that of the other comparison models. This result highlights that AutoSDM-DDPG effectively reduces the satisfaction gap between doctors and patients in longer negotiations, thus promoting a fairer negotiation outcome.

As shown in Fig. 7, AutoSDM-DDPG demonstrates high efficiency in terms of the average negotiation rounds (Avg.R) across all experimental settings. For example, in the experiment with a 30-round deadline, the average number of negotiation rounds for AutoSDM-DDPG is 7.235, which is significantly lower than that of ANF-TIME (19.765) and FCAN (9.770). This difference highlights the advantage of AutoSDM-DDPG in optimizing the negotiation strategy and responding promptly to the opponent’s needs.

Comparative experiments with different number of issues

Table 5 presents the performance of AutoSDM-DDPG compared to other models at varying numbers of issues (3, 5, 7, 9 issues). As the number of issues increases, the complexity of the negotiation problem rises significantly, and this, in turn, affects the model’s performance. The experimental results demonstrate that AutoSDM-DDPG consistently achieves higher social welfare and fewer negotiation rounds, even as the number of issues increases.

When the number of issues is low, the \(Avg.{ASV}_{doctor}\) of AutoSDM-DDPG (0.735) is lower than that of ANF-TIME (0.778) but higher than that of ANFGA (0.658), and its \(Avg.{ASV}_{patient}\) (0.805) is slightly lower than that of ANFGA (0.812) but well above that of ANF-TIME (0.580). However, as shown in Figs. 8 and 9, its CASV is significantly better than those of ANFGA and ANF-TIME (1.540 vs. 1.470 vs. 1.358). Additionally, its DASV (0.070) is lower than that of the other models. This indicates that AutoSDM-DDPG effectively balances the satisfaction disparity between doctors and patients, helping to avoid conflicts.
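As a quick consistency check, the values quoted above can be combined by hand, taking CASV as the sum and DASV as the absolute difference of the two averages:

$$\begin{aligned} \textrm{AutoSDM-DDPG:}\quad&0.735+0.805=1.540,\qquad \mid 0.735-0.805\mid =0.070\\ \textrm{ANFGA:}\quad&0.658+0.812=1.470\\ \textrm{ANF-TIME:}\quad&0.778+0.580=1.358 \end{aligned}$$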

As the number of issues increases, the \(Avg.{ASV}_{doctor}\) and \(Avg.{ASV}_{patient}\) of AutoSDM-DDPG decrease, but the model still maintains a high level of satisfaction (both remain above 0.6). Additionally, AutoSDM-DDPG sustains a high CASV and a low DASV. As shown in Figs. 8 and 9, the CASV of AutoSDM-DDPG is 1.267 when the number of issues is 9, which is much higher than that of the other models (1.121 for FCAN and 1.133 for ANFGA). Meanwhile, the DASV of AutoSDM-DDPG is 0.043, which is significantly lower than those of the other models (0.255 for FCAN and 0.181 for ANFGA). Furthermore, as shown in Fig. 10, AutoSDM-DDPG requires fewer negotiation rounds (10.100), significantly outperforming the other models. The standard deviation of the average negotiation rounds is 0.45–1.11. This demonstrates that AutoSDM-DDPG can effectively balance the needs of doctors and patients in a complex, multi-issue negotiation environment, improving both fairness and efficiency in the negotiation process.

Computational cost and efficiency

To assess the practicality of the proposed AutoSDM-DDPG framework for real-world deployment, we evaluated its computational cost in terms of training time, convergence speed, and runtime per negotiation. All experiments were conducted on a workstation equipped with an Intel i9 CPU and NVIDIA RTX 3090 GPU. Training the AutoSDM-DDPG model for 500 episodes (with 500 negotiation rounds per episode) required approximately 4.5 hours. The framework typically converged to a stable negotiation policy after about 300 episodes, demonstrating efficient learning behavior. Once trained, the average runtime for a single negotiation session was less than 0.3 seconds, which is sufficiently fast for practical use in clinical or interactive decision-making settings. For comparison, baseline methods such as ANFGA and FCAN did not require model training, but each negotiation session took about 0.7–1.2 s on average due to the iterative optimization or genetic algorithm steps. These results indicate that although AutoSDM-DDPG requires more initial training time, it offers significant advantages in inference speed and real-time decision-making capability after deployment.

Summary and discussion

In conclusion, AutoSDM-DDPG shows clear advantages over the other comparative models on most metrics, in particular social welfare utility, negotiation rounds, and the doctor-patient satisfaction gap, while keeping average doctor and patient satisfaction high. By combining DRL with fuzzy constraint theory, AutoSDM-DDPG not only optimizes the negotiation strategy but also effectively balances the interests of doctors and patients, reducing the satisfaction gap between them and improving the fairness and efficiency of the negotiation process.

These experimental results show that AutoSDM-DDPG demonstrates strong adaptability and learnability under different negotiation conditions (including different numbers of deadline rounds and issues), providing an efficient and intelligent decision support system. Especially in multi-issue and complex SDM scenarios, it is effective in enhancing the aggregate satisfaction of both doctors and patients while reducing the satisfaction gap between them, fully showcasing its potential for real-world applications.

Compared with existing approaches in the literature, the proposed AutoSDM-DDPG framework demonstrates several distinct strengths. First, by integrating deep deterministic policy gradient (DDPG) with fuzzy constraint modeling, our approach can effectively capture both the nonlinear and uncertain nature of doctor-patient preferences, an aspect often oversimplified or ignored in traditional linear utility or rule-based methods39,42. Second, AutoSDM-DDPG dynamically adjusts negotiation strategies in high-dimensional, multi-issue scenarios, outperforming classical agent-based and genetic algorithm-based models in terms of both social welfare and individual satisfaction, as confirmed in comparative experiments. Third, the proposed method achieves fairer negotiation outcomes by significantly reducing the satisfaction gap between parties and requires fewer negotiation rounds to reach agreement, thus improving both efficiency and practicality. Furthermore, AutoSDM-DDPG shows strong adaptability and generalizability, making it more suitable for real-world shared decision-making settings where preferences and decision contexts are complex and dynamic.

Although the proposed AutoSDM-DDPG framework is essentially an automated (reinforcement learning-based) decision-making system, it offers several advantages over traditional supervised and semi-supervised approaches, particularly in the context of complex multi-issue negotiation. Supervised and semi-supervised learning methods require large amounts of labeled data and are primarily designed for static prediction or classification tasks; they often lack the flexibility to adapt to dynamic, interactive environments where negotiation strategies must be continuously updated in response to an opponent’s actions and changing preferences. In contrast, reinforcement learning (RL) frameworks like AutoSDM-DDPG can learn optimal negotiation policies directly through trial-and-error interactions with the environment, enabling agents to adapt their strategies in real time and optimize long-term outcomes. This is especially valuable in medical decision-making scenarios with incomplete information, dynamic preferences, and multidimensional negotiation issues, where pre-labeled data may be scarce or impractical to obtain. Our experimental results further support this advantage, as AutoSDM-DDPG demonstrates strong adaptability, efficiency, and fairness across a variety of negotiation scenarios. We acknowledge that RL methods can require significant computational resources and longer training times compared to supervised approaches. Even so, their ability to operate in data-sparse and dynamically evolving environments makes them particularly suitable for automated negotiation tasks in shared decision-making.

Another limitation of this study is the absence of direct experimental comparison with modern DRL methods such as PPO and SAC. While these algorithms have demonstrated impressive results in a range of reinforcement learning problems, adapting them to our specific multi-issue negotiation context, which is characterized by complex, hybrid action spaces and fuzzy preference modeling, poses unique methodological and engineering challenges. Our current work prioritizes fair and domain-specific comparisons with agent-based negotiation baselines. In future research, we will investigate how to adapt and optimize PPO, SAC, or other modern DRL methods to the SDM negotiation framework, in order to provide an even more comprehensive evaluation of negotiation performance across learning paradigms.

Conclusion and future work

This study proposes AutoSDM-DDPG, a DDPG-based automated negotiation framework for multi-issue shared decision-making (SDM) in healthcare. The comparative experiments reveal three key findings. First, AutoSDM-DDPG consistently achieves higher social welfare (CASV) and better fairness (a lower satisfaction gap) than classical baseline models across different negotiation deadlines and numbers of issues. Notably, our model keeps both doctor and patient satisfaction at high levels even as the negotiation problem becomes more complex, demonstrating strong adaptability and scalability. Second, AutoSDM-DDPG significantly reduces the average number of negotiation rounds required to reach an agreement, indicating improved efficiency in resolving complex multi-issue medical decisions. Third, compared with conventional agent-based and genetic algorithm approaches, AutoSDM-DDPG better captures the nonlinear and fuzzy nature of real-world preferences, resulting in more balanced and equitable outcomes. These patterns suggest that deep reinforcement learning combined with fuzzy modeling can effectively address the inherent uncertainty and diversity of preferences in SDM. Overall, our findings indicate that AutoSDM-DDPG provides a promising and generalizable solution for intelligent, fair, and efficient automated negotiation in medical and other multi-stakeholder domains.
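The three indicators summarized above (social welfare, satisfaction gap, and negotiation rounds) can be aggregated from a set of negotiation outcomes as sketched below. This is an illustrative sketch only: the Outcome record and the summarize function are hypothetical names, and social welfare is assumed here to be the simple sum of the two parties’ satisfaction values, which stands in for and may differ from the CASV definition used in the experiments.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Outcome:
    doctor_satisfaction: float   # assumed to lie in [0, 1]
    patient_satisfaction: float  # assumed to lie in [0, 1]
    rounds: int                  # rounds used to reach the agreement


def summarize(outcomes: List[Outcome]) -> Dict[str, float]:
    """Average the three indicators over a batch of completed negotiations."""
    if not outcomes:
        raise ValueError("at least one outcome is required")
    n = len(outcomes)
    return {
        # Assumption: welfare is the sum of both satisfactions (not necessarily CASV).
        "social_welfare": sum(o.doctor_satisfaction + o.patient_satisfaction
                              for o in outcomes) / n,
        # Fairness proxy: mean absolute difference; lower means more balanced.
        "satisfaction_gap": sum(abs(o.doctor_satisfaction - o.patient_satisfaction)
                                for o in outcomes) / n,
        # Efficiency: mean number of rounds needed to reach agreement.
        "avg_rounds": sum(o.rounds for o in outcomes) / n,
    }


# Hypothetical outcomes, for illustration only (not experimental data).
summary = summarize([Outcome(0.8, 0.6, 10), Outcome(0.7, 0.7, 6)])
```

Reporting the gap alongside welfare matters because a high combined satisfaction can still hide an agreement that strongly favors one party.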

Despite these advances, further validation in real clinical settings and adaptation to broader medical scenarios are needed to fully realize the potential of automated SDM frameworks. We identify three directions for future work. (1) The complexity of medical scenarios suggests that doctor and patient decisions may be influenced by other social relationships. Therefore, exploring group decision-making within the context of these social dynamics is a key area for future research. (2) The model’s application can be expanded to a wider range of disease-related decision-making scenarios to verify its generalization and adaptability across diverse healthcare environments. (3) Given the critical nature of medical decisions, additional empirical validation of the SDM negotiation model through real-world applications is necessary before it can be implemented in clinical practice.

Data availability

The data that support the findings of this study are available on request from the corresponding author. The data are not publicly available due to privacy or ethical restrictions.

Code availability

The core code supporting the findings of this study is openly available at the GitHub repository: https://github.com/5854665/DDPG. Researchers and practitioners are encouraged to access, use, and further develop the code in accordance with the repository’s license.

References

Charles, C., Gafni, A. & Whelan, T. Shared decision-making in the medical encounter: What does it mean? (or it takes at least two to tango). Soc. Sci. Med. 44, 681–692. https://doi.org/10.1016/S0277-9536(96)00221-3 (1997).

Bomhof-Roordink, H., Gärtner, F. R., Stiggelbout, A. M. & Pieterse, A. H. Key components of shared decision making models: a systematic review. BMJ Open 9, e031763. https://doi.org/10.1136/bmjopen-2019-031763 (2019).

Elwyn, G. et al. Shared decision making: a model for clinical practice. J. Gen. Intern. Med. 27, 1361–1367. https://doi.org/10.1007/s11606-012-2077-6 (2012).

Joseph-Williams, N., Edwards, A. & Elwyn, G. Power imbalance prevents shared decision making. BMJ Br. Med. J. 348, g3178. https://doi.org/10.1136/bmj.g3178 (2014).

Drake, R. E., Cimpean, D. & Torrey, W. C. Shared decision making in mental health: prospects for personalized medicine. Dialog. Clin. Neurosci. 11, 455–463. https://doi.org/10.31887/DCNS.2009.11.4/redrake (2009).

Pieterse, A. H., Stiggelbout, A. M. & Montori, V. M. Shared decision making and the importance of time. JAMA 322, 25–26. https://doi.org/10.1001/jama.2019.3785 (2019).

Hillner, B. E. & Smith, T. J. Efficacy and cost effectiveness of adjuvant chemotherapy in women with node-negative breast cancer. N. Engl. J. Med. 324, 160–168. https://doi.org/10.1056/NEJM199101173240305 (1991).

Stacey, D. et al. Decision aids for people facing health treatment or screening decisions. Cochrane Database Syst. Rev. https://doi.org/10.1002/14651858.CD001431.pub5 (2017).

Jennings, N. R. et al. Automated negotiation: Prospects, methods and challenges. Group Decis. Negot. 10, 199–215. https://doi.org/10.1023/A:1008746126376 (2001).

Lin, R., Kraus, S., Wilkenfeld, J. & Barry, J. Negotiating with bounded rational agents in environments with incomplete information using an automated agent. Artif. Intell. 172, 823–851. https://doi.org/10.1016/j.artint.2007.09.007 (2008).

Zhang, L., Song, H., Chen, X. & Hong, L. A simultaneous multi-issue negotiation through autonomous agents. Eur. J. Oper. Res. 210, 95–105. https://doi.org/10.1016/j.ejor.2010.10.011 (2011).

Hsu, C.-Y., Kao, B.-R., Ho, V. L. & Lai, K. R. Agent-based fuzzy constraint-directed negotiation mechanism for distributed job shop scheduling. Eng. Appl. Artif. Intell. 53, 140–154. https://doi.org/10.1016/j.engappai.2016.04.005 (2016).

Gronauer, S. & Diepold, K. Multi-agent deep reinforcement learning: a survey. Artif. Intell. Rev. 55, 895–943. https://doi.org/10.1007/s10462-021-09996-w (2022).

Chen, S., Zhao, J., Weiss, G., Su, R. & Lei, K. An effective negotiating agent framework based on deep offline reinforcement learning (2023).

Bagga, P., Paoletti, N. & Stathis, K. Adaptive strategy templates using deep reinforcement learning for multi-issue bilateral negotiation. Neurocomputing 623, 129381. https://doi.org/10.1016/j.neucom.2025.129381 (2025).

Liang, Y., Guo, C., Ding, Z. & Hua, H. Agent-based modeling in electricity market using deep deterministic policy gradient algorithm. IEEE Trans. Power Syst. 35, 4180–4192. https://doi.org/10.1109/TPWRS.2020.2999536 (2020).

Stiggelbout, A. M., Pieterse, A. H. & De Haes, J. C. J. M. Shared decision making: Concepts, evidence, and practice. Patient Educ. Couns. 98, 1172–1179. https://doi.org/10.1016/j.pec.2015.06.022 (2015).

Joosten, E. A. G. et al. Systematic review of the effects of shared decision-making on patient satisfaction, treatment adherence and health status. Psychother. Psychosom. 77, 219–226. https://doi.org/10.1159/000126073 (2008).

ter Stege, J. A. et al. Development of a patient decision aid for patients with breast cancer who consider immediate breast reconstruction after mastectomy. Health Expect. 25, 232–244. https://doi.org/10.1111/hex.13368 (2022).

Légaré, F. et al. Supporting patients facing difficult health care decisions: use of the Ottawa Decision Support Framework. Can. Fam. Physician 52, 476 (2006).

Wooldridge, M. An Introduction to MultiAgent Systems 2nd edn. (Wiley Publishing, 2009).

Fujita, K. et al. Modern Approaches to Agent-based Complex Automated Negotiation. Studies in Computational Intelligence (Springer, Switzerland, 2017).

Faratin, P., Sierra, C. & Jennings, N. R. Negotiation decision functions for autonomous agents. Robot. Auton. Syst. 24, 159–182. https://doi.org/10.1016/S0921-8890(98)00029-3 (1998).

Imran, K. et al. Bilateral negotiations for electricity market by adaptive agent-tracking strategy. Electric Power Syst. Res. 186, 106390. https://doi.org/10.1016/j.epsr.2020.106390 (2020).

Aydoğan, R., Festen, D., Hindriks, K. V. & Jonker, C. M. Alternating Offers Protocols for Multilateral Negotiation, 153–167 (Springer International Publishing, Cham, 2017).

Baarslag, T. Exploring the strategy space of negotiating agents: a framework for bidding, learning and accepting in automated negotiation (Springer Cham, 2016).

Huang, C.-C., Liang, W.-Y., Lai, Y.-H. & Lin, Y.-C. The agent-based negotiation process for B2C e-commerce. Expert Syst. Appl. 37, 348–359. https://doi.org/10.1016/j.eswa.2009.05.065 (2010).

Wong, T. N., Leung, C. W., Mak, K. L. & Fung, R. Y. K. An agent-based negotiation approach to integrate process planning and scheduling. Int. J. Prod. Res. 44, 1331–1351. https://doi.org/10.1080/00207540500409723 (2006).

Marwa, M., Hajlaoui, J. E., Sonia, Y., Omri, M. N. & Rachid, C. Multi-agent system-based fuzzy constraints offer negotiation of workflow scheduling in fog-cloud environment. Computing 105, 1361–1393. https://doi.org/10.1007/s00607-022-01148-4 (2023).

Elghamrawy, S. Healthcare informatics challenges: A medical diagnosis using multi agent coordination-based model for managing the conflicts in decisions. In Hassanien, A. E., Slowik, A., Snášel, V., El-Deeb, H. & Tolba, F. M. (eds.) Proceedings of the International Conference on Advanced Intelligent Systems and Informatics 2020, 347–357 (Springer International Publishing, 2020).

Ito, T., Klein, M. & Hattori, H. A multi-issue negotiation protocol among agents with nonlinear utility functions. Multiagent Grid Syst. 4, 67–83. https://doi.org/10.3233/MGS-2008-4105 (2008).

Luo, X., Jennings, N. R., Shadbolt, N., Leung, H.-F. & Lee, J.H.-M. A fuzzy constraint based model for bilateral, multi-issue negotiations in semi-competitive environments. Artif. Intell. 148, 53–102. https://doi.org/10.1016/S0004-3702(03)00041-9 (2003).

Canese, L. et al. Multi-agent reinforcement learning: A review of challenges and applications. Appl. Sci. 11, 4948. https://doi.org/10.3390/app11114948 (2021).

Chang, H.-C.H. Multi-issue negotiation with deep reinforcement learning. Knowl.-Based Syst. 211, 106544. https://doi.org/10.1016/j.knosys.2020.106544 (2021).

Binyamin, S. S. & Ben Slama, S. Multi-agent systems for resource allocation and scheduling in a smart grid. Sensors 22, 8099. https://doi.org/10.3390/s22218099 (2022).

Shavandi, A. & Khedmati, M. A multi-agent deep reinforcement learning framework for algorithmic trading in financial markets. Expert Syst. Appl. 208, 118124. https://doi.org/10.1016/j.eswa.2022.118124 (2022).

Liu, D. et al. Scaling up multi-agent reinforcement learning: An extensive survey on scalability issues. IEEE Access 12, 94610–94631. https://doi.org/10.1109/ACCESS.2024.3410318 (2024).

Li, K., Niu, L., Ren, F. & Yu, X. An offer-generating strategy for multiple negotiations with mixed types of issues and issue interdependency. Eng. Appl. Artif. Intell. 136, 108891. https://doi.org/10.1016/j.engappai.2024.108891 (2024).

Lin, K. et al. Fuzzy constraint-based agent negotiation framework for doctor-patient shared decision-making. BMC Med. Inform. Decis. Mak. 22, 218. https://doi.org/10.1186/s12911-022-01963-x (2022).

Baarslag, T. et al. Evaluating practical negotiating agents: Results and analysis of the 2011 international competition. Artif. Intell. 198, 73–103. https://doi.org/10.1016/j.artint.2012.09.004 (2013).

Bahrammirzaee, A., Chohra, A. & Madani, K. An adaptive approach for decision making tactics in automated negotiation. Appl. Intell. 39, 583–606. https://doi.org/10.1007/s10489-013-0434-8 (2013).

Lin, K.-B. et al. An opponent model for agent-based shared decision-making via a genetic algorithm. Front. Psychol. 14. https://doi.org/10.3389/fpsyg.2023.1124734 (2023).

Acknowledgements

We would like to express our gratitude to the Department of Pediatrics in Xiamen Hospital of Traditional Chinese Medicine for providing the research environment.

Funding

This work was supported in part by the Xiamen Institute of Technology Young and Middle-aged Scientific Research Fund Project under Grant No. KYT2023005, and by the Xiamen Institute of Technology Institute-level Scientific Research Fund Project under Grant No. SJKY202305. These funds were used in the design of the study and in the collection, analysis, and interpretation of data, as well as in the writing of the manuscript.

Author information

Contributions

X.C. developed AutoSDM-DDPG, executed the iterative negotiation experiments, and drafted the initial manuscript. F.H. was responsible for data collection and participated in the experimental research. P.L. and Y.L. were responsible for the experimental design and participated in the experimental research. P.L. provided the relevant literature review and the main idea of the developed models, and finalized the manuscript. All authors contributed to revising the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics approval and consent to participate

This study was approved by the Medical Ethics Committee of Xiamen Hospital of Traditional Chinese Medicine, China (approval number 2021-K065-01). All methods were performed in accordance with the relevant guidelines and regulations of the institutional and/or national research committee and with the Declaration of Helsinki. In addition, all participants and their parents or legal guardians provided written informed consent after a complete description of the study.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.
