Abstract

Joint models for longitudinal and survival data are essential in biomedical research, enabling the simultaneous analysis of biomarker progression and clinical events. These models account for the interdependence between longitudinal and survival outcomes, improving insights into disease progression. However, missing data in longitudinal studies pose challenges, particularly when time dependent markers contain missing values, leading to biased estimates. This paper proposes a two-stage joint modeling framework integrating multiple imputation and inverse probability weighting. First, a linear mixed-effects model estimates biomarker trajectories, handling missing data using multiple imputation. Second, predicted biomarker values are incorporated into a Cox model, where inverse probability weight corrects for selection bias in survival estimation. A detailed simulation study has been conducted to study the performance of the proposed method compared to other common approaches. Results demonstrate the framework’s effectiveness in handling incomplete time dependent covariates while providing precise estimates of the relationship between biomarker progression and survival outcomes.

Similar content being viewed by others

Introduction

Time-dependent markers play a pivotal role in survival analysis, offering dynamic insights into the progression of disease and the risk of adverse events over time. Unlike baseline covariates, time-dependent markers, such as biomarkers or clinical measurements, capture longitudinal changes in an individual’s condition, enabling a more accurate and personalized assessment of event risk1,2. These markers are particularly important in medical research, where the progression of chronic diseases, such as cancer or cardiovascular conditions, or the effects of treatment, need to be continuously monitored3,4. By incorporating time-dependent markers, survival models improve prediction accuracy and enhance clinical decision-making5,6.

Joint models for longitudinal and survival data provide a powerful framework to simultaneously analyze both the processes, allowing researchers to account for the interdependence between longitudinal biomarker trajectories and the risk of an event7,8,9,10. Early developments by Wulfsohn et al.11 introduced a foundation for linking random effects in the longitudinal submodel to the survival submodel. This framework has since evolved to accommodate time-varying covariates and measurement error, with Rizopoulos12 extending it for dynamic prediction and clinical applications. More recent advancements have addressed its limitations by allowing flexible hazard functions and incorporating nonparametric approaches13,14,15.

Several studies have explored two-stage approaches to joint modeling of longitudinal and time-to-event data, aiming to improve computational efficiency while maintaining accuracy. Huong et al.16 proposed a modified two-stage method that avoids complex multi-integrations, enabling the use of high-dimensional random effects for non-linear longitudinal data while reducing bias. Similarly, Mehdizadeh et al.17 applied a two-stage model to competing risk data, demonstrating improved performance with larger sample sizes and emphasizing the need for better diagnostic tools. Dutta and Chakraborty18 highlighted the robustness of their two-stage approach against time-to-event model misspecification, while Mauff et al.19 introduced an importance-sampling correction to mitigate bias in survival estimates, particularly in multivariate joint models. Alvares and Leiva-Yamaguchi20 extended the two-stage Bayesian framework by incorporating informative priors and bias correction mechanisms, leading to improved accuracy and reduced computational burden. Finally, Chen et al.21 addressed missingness and left-censoring in longitudinal covariates within a joint modeling framework, ensuring a more comprehensive use of available data. These contributions collectively demonstrate the potential of two-stage approaches in handling complex joint models, balancing computational feasibility with statistical precision.

Despite their utility, joint models face challenges when dealing with missing data, a frequent issue in longitudinal studies due to dropout, nonresponse, or skipped visits21,22,23. Missing data mechanisms can significantly bias parameter estimates, especially when the missingness is informative or dependent on patient characteristics or clinical outcomes24,25. Conventional methods, such as complete-case analysis or last observation carried forward, fail to address the complexity of informative missingness and can lead to biased results when coupled with measurement error26,27.

Seaman and White28 highlighted the importance of integrating robust methods such as multiple imputation (MI) and inverse probability weighting (IPW) to address these biases. MI creates multiple imputed datasets based on the observed data distribution, allowing uncertainty due to missingness to be incorporated into the analysis29,30. IPW, on the other hand, adjusts the likelihood by weighting observations based on their probability of being observed, thereby correcting for selection bias under the missing-at-random (MAR) assumption31,32. Combining these approaches has proven effective in complex settings where missing data arise both intermittently and at informative times28,33,34. The literature provides substantial theoretical and empirical support for the use of combined MI and IPW approaches, rather than applying either method in isolation33. Seaman et al. demonstrated that the IPW/MI approach can inherit the efficiency advantages of MI while avoiding bias from incorrectly imputing larger blocks of missing data, particularly in longitudinal studies with substantial attrition33. The robustness of the combined approach is particularly valuable in complex longitudinal biomarker studies where the method provides protection against misspecification of either the imputation model or the weighting model33. More recently, Thaweethai et al.35 showed that even when the imputation model is misspecified, such as by omitting important interactions, the combined MI and IPW approach can still yield asymptotically unbiased point estimates. Nevertheless, these methods require careful specification of both the imputation model and the weighting mechanism to ensure unbiased and valid results36,37,38.

To address the challenges posed by missing longitudinal data, we propose a two-stage joint modeling approach that incorporates both MI and IPW for handling missingness. This combined framework enables us to account for complex missing data patterns while accurately capturing the longitudinal and survival processes. In the first stage, we fit a linear mixed-effects model to the biomarker trajectory, using MI to address missing data. Imputation is performed using chained equation approach, while IPW weights are derived from a logistic regression model for non-missingness. In the second stage, predicted individual biomarker trajectories from the first stage are used to fit a Cox proportional hazards model, incorporating IPW weights to account for informative missingness in the survival analysis. This framework, implemented using our newly developed package JMtwostage available in https://github.com/kumarbhrigu/JMtwostage, provides robust estimates of the association between biomarkers and survival, even in the presence of informative missingness. Our approach was applied to a real-life dataset containing longitudinal biomarker measurements and survival times, showcasing its effectiveness in handling missing data and preserving key covariate relationships in both the longitudinal and survival components.

Methodology

Let \(Y_{ij}\) denote the observed biomarker measurement for the i-th individual at time \(t_{ij}\), where \(i = 1, \dots , n\) represents the individuals in the dataset, and \(j = 1, \dots , n_i\) represents the time points for repeated measurements, with \(n_i\) indicating the total number of measurements for the ith individual. The kth time fixed covariate is denoted by \(X_{ik}\), where \(k=1,2,\dots ,p\). The survival data for ith individual consist of the censoring time and failure time denoted by \(T_{cens}\) and \(T_{fail}\) respectively. Therefore survival time for each individual is denoted by \(T_i=min(T_{fail},T_{cens})\), which represents the time until the occurrence of a specific event, such as death or disease progression. The event indicator \(\delta _i\), associated with \(T_i\), assumes the value 1 when the event occurs by time \(T_i\), or 0 if the survival time is censored, indicating that the event was not witnessed within the study period.

First stage: mixed-effects model for longitudinal data

To model the longitudinal biomarker measurements, we employ a linear mixed-effects model. This model accounts for the hierarchical structure of the data, where repeated measurements are nested within individuals, allowing for both fixed and random effects. This formulation enables the model to capture both population-level trends in the biomarker and individual-specific deviations from these trends. The model is formulated as follows:

where \(\beta _0\) is the fixed intercept, representing the average baseline level of the biomarker across individuals. The parameter \(\beta _1\) captures the fixed effect of time \(t_{ij}\), representing the average rate of change in the biomarker over time. The terms \(\beta _k\) (for \(k = 2, \dots , p\)) represent the effects of additional time fixed covariates, such as treatment groups or baseline characteristics, included in the model. The random intercept \(b_{0i}\), assumed to follow a normal distribution \(b_{0i} \sim N(0, \sigma _b^2)\), accounts for between-individual variability in the biomarker’s baseline levels. Finally, the residual error \(\epsilon _{ij}\), also assumed to follow a normal distribution \(\epsilon _{ij} \sim N(0, \sigma ^2)\), represents within-individual variability in the biomarker measurements that is not explained by the covariates or random effects.

Second stage: cox proportional hazards model for survival data

In this second stage, we aim to assess the association between the biomarker trajectory and survival by fitting a Cox proportional hazards model. This model is commonly used in survival analysis to relate the hazard of an event (such as death or failure) to a set of covariates, including time-varying covariates. Here, the predicted biomarker trajectory \({\hat{Y}}_{ij}\), derived from the first-stage mixed-effects model, is treated as a time-dependent covariate. The general form of the Cox proportional hazards model is as follows:

where \(h(t \mid {\hat{Y}}_{ij}, {\textbf{X}}_i)\) is the hazard function at time \(t\), representing the instantaneous risk of the event occurring at time \(t\) given the biomarker trajectory \({\hat{Y}}_{ij}\) and the other covariates \({\textbf{X}}_i\). The baseline hazard \(h_0(t)\) is unspecified and left as a non-parametric function of time. The biomarker trajectory \({\hat{Y}}_{ij}\) is obtained from the first-stage mixed-effects model and is included as a time-dependent covariate. The vector \({\textbf{X}}_i = (X_{i2}, X_{i3}, \dots , X_{iq})\) includes other covariates that may affect the hazard of the event. The regression coefficient \(\gamma _1\) quantifies the effect of the biomarker trajectory on the hazard, while \(\gamma _k\) for \(k = 2, 3, \dots , q\) represent the regression coefficients for the other covariates. To estimate the parameters of the Cox model, we use the log partial likelihood approach, which does not require specifying the baseline hazard, allowing for more flexible estimation without estimating the baseline survival function. Instead, this method focuses on the relative risk between individuals at any given time based on their covariates. The partial likelihood function for the Cox model is given by:

where \(n\) is the number of individuals in the study and \(R(t)\) is the risk set at time \(t\), which includes individuals who are at risk of experiencing the event at that time. The numerator represents the risk of the event for individual \(i\) at time \(t\), while the denominator is the total risk at time \(t\) considering all individuals in the risk set. The log partial likelihood function is the natural logarithm of this product:

The parameters \(\gamma _1, \gamma _2, \dots , \gamma _q\) are estimated by maximizing the log partial likelihood function using numerical optimization techniques, such as Newton-Raphson or other gradient-based methods. The estimated coefficients \(\gamma _1, \dots , \gamma _q\) can be interpreted as log hazard ratios, with \(\exp (\gamma _1)\) representing the hazard ratio for a unit increase in the biomarker trajectory. The Cox model can accommodate censoring, where individual’s event times are not observed, and adjusts for this by including only individuals at risk at each time point. Additionally, the model allows for time-varying covariates, such as the biomarker trajectory, which can change over time as the individual’s biomarker level evolves. The log partial likelihood approach thus provides a flexible and efficient way to estimate the parameters, quantify the effect of the biomarker on survival, and handle censored data and time-varying covariates in survival analysis.

Handling missing data: multiple imputation and inverse probability weighting

We adopt a two-stage approach: multiple imputation and inverse probability weighting to address missing values in the longitudinal biomarker measurements \(Y_{ij}\). Under the assumption that the data are missing at random (MAR), multiple imputation is used to generate plausible values for missing measurements. The multiple imputation algorithm (1) systematically handles missing data by iteratively imputing missing values based on conditional distributions. The process begins by specifying an imputation model for the missing values, \(Y_{ij}^{\text {mis}}\), conditioned on the observed values, \(Y_{ij}^{\text {obs}}\), and covariates, \({\textbf{X}}_{i}\). Initial imputed values are generated by sampling from the observed data to provide a starting point. For each imputation iteration, m, and at each time point, j, the algorithm conditions on the available observed values \(Y_{ij}^{\text {obs}}\), draws parameter estimates \(\varvec{\psi }^m\) and random effects \(b_{0i}^m\) from their respective distributions, and subsequently generates imputations for the missing values, \(Y_{ij}^{\text {mis(m)}}\), from the specified conditional distribution. This iterative process is repeated across multiple imputations to account for the uncertainty associated with missing data, ensuring that imputed datasets reflect the underlying variability and provide robust statistical inference.

To further account for potential informative missingness, which may arise when the likelihood of missing data depends on unobserved variables, we compute inverse probability weights. These weights are derived from a generalized linear mixed effect model predicting the probability of observing each biomarker measurement. The model is specified as:

where \(R_{ij}\) is an indicator variable equal to 1 if \(Y_{ij}\) is observed and 0 otherwise. The coefficients \(\alpha _0\) and \(\alpha _k\) represent the effects of covariates \(X_{ik}\) on the probability of observing the biomarker. The model contains the random intercept \(a_{0i}\) which follows \(N(0,\sigma _a^2)\). The weights are then computed as \(w_{ij} = 1 / \text {Pr}(R_{ij} = 1 \mid X_{ij})\), ensuring that the contribution of each observation to the analysis is inversely proportional to its likelihood of being observed.

Once the inverse probability weights \(w_{ij}\) are estimated, they are applied in the second stage of our modeling framework- specifically, during the fitting of the Cox proportional hazards model for the survival outcome. The predicted longitudinal trajectories obtained from the imputed datasets are treated as time-dependent covariates in the survival model. In order to correct for potential selection bias introduced by the informative missingness in these covariates, each subject’s contribution to the partial likelihood function is weighted by the corresponding \(w_{ij}\) (algorithm (3)). This reweighted partial likelihood ensures that subjects who are underrepresented (i.e., have lower probability of being observed) contribute more to the estimation process, thereby mitigating bias due to non-random missingness.

Importantly, this weighting mechanism is integrated within each imputed dataset, and final estimates are obtained using Rubin’s rules to combine the estimates and standard errors across the multiple imputed and weighted analyses. This approach allows us to harness the advantages of both MI and IPW, capturing the uncertainty due to imputation and adjusting for the potential non-random nature of the missing data.

Pooling and combining results

After fitting the two-stage model across all m imputed datasets, the resulting parameter estimates are combined to produce a single set of final estimates that account for both within-imputation and between-imputation variability. This pooling process is achieved using Rubin’s rules, a standard method for combining results from multiple imputations.

Let \(\varvec{\gamma }_m\) represent the parameter estimate derived from the mth imputed dataset, where \(m = 1, \dots , M\). The pooled parameter estimate \(\bar{\varvec{\gamma }}\) is computed as the arithmetic mean of the parameter estimates across all imputed datasets:

where \(\bar{\varvec{\gamma }}\) represents the combined parameter estimate, reflecting the central tendency of the estimates from the m datasets.

The overall variance of \(\bar{\varvec{\gamma }}\), represented by T, consists of two components: the within-imputation variance, reflecting the variability of parameter estimates within each imputed dataset, and the between-imputation variance, addressing the variability among the various imputed datasets. The total variance is expressed as:

where \(U_m\) is the within-imputation variance for the m-th dataset, and B represents the between-imputation variance. The within-imputation variance \(U_m\) is calculated for each dataset as the variance of the parameter estimates within that dataset, reflecting the precision of the estimates in a single imputed dataset. On the other hand, the between-imputation variance B measures the variability of the parameter estimates across the M datasets and is given by:

The formula for T combines these two sources of variability, ensuring that the final pooled estimate \(\bar{\varvec{\gamma }}\) and its associated variance T appropriately reflect the uncertainty introduced by the imputation process.

Rubin’s rules thus provide a robust framework for synthesizing results from multiple imputed datasets. They account for the fact that imputation introduces an additional layer of uncertainty due to the missing data. By combining within and between imputation variances, the methodology ensures that final inferences are valid and properly calibrated. These pooled results are then used to interpret the association between longitudinal biomarker trajectories and survival outcomes, yielding insights that account for the missing data in the analysis.

Model implementation in R

The JMtwostage R package provides a comprehensive solution for handling missing data in time-dependent markers within survival models. It includes five distinct approaches for handling incomplete data: complete case analysis (jmCC), last observation carried forward (jmLOCF), simple two-stage with single imputation (jm2s), multiple imputation (jmMI), and multiple imputation with inverse probability weighting (jmwMI). The general syntax for the functions are as follows:

-

jmCC

jmCC(ldata,sdata,id,visitTime,timeDep,coxModel=NULL,model=“Cox”)

-

jmLOCF

jmLOCF(ldata,sdata,id,visitTime,timeDep,coxModel=NULL,

model=“Cox”)

-

jm2s

jm2s(ldata,sdata,id,visitTime,timeDep, impModel=NULL,

coxModel=NULL,model=“Cox”)

-

jmMI

jmMI(ldata,sdata,M=5,id,visitTime,timeDep,impModel=NULL,

coxModel=NULL,model=“Cox”)

-

jmwMI

jmwMI(ldata,sdata,M=5,id,visitTime,timeDep,impModel=NULL,

ipwModel=NULL,coxModel=NULL,model=“Cox”).

The functions need the following arguments ldata for longitudinal measurements and sdata for survival outcomes, linking them via a subject identifier (id). The function accounts for time-dependent covariates specified in timeDep, measured at visit times (visitTime). Users can specify a survival model (model), choosing between the Cox proportional hazards model or the Aalen additive hazards model. An optional coxModel formula allows customization of the survival model. The jmCC function performs two stage joint modeling of longitudinal and survival data using complete case analysis (CC). The jmLOCF function performs joint modeling of longitudinal and survival data using the last observation carried forward (LOCF) method for handling missing values in time-dependent biomarkers. The jm2s function implements a two-stage joint modeling approach with single imputation for time-dependent covariates in survival analysis. The function imputes missing biomarker values using user-specified imputation models (impModel) and fits a survival model (model), which can be either a Cox proportional hazards model or an Aalen additive model. The visitTime argument defines the time points for longitudinal measurements, while timeDep specifies the time-dependent biomarkers. The coxModel argument allows users to define the structure of the survival model, incorporating the imputed covariates. The jmMI function implements multiple imputation for time-dependent covariates in joint modeling of longitudinal and survival data using algorithm (2). It imputes missing biomarker values using specified imputation models (impModel), performs survival analysis using a Cox or Aalen model (model), and combines results from multiple imputations (M) using Rubin’s rule. The jmwMI function is designed for joint modeling with multiple Imputation and inverse probability weighting for time-dependent covariates in survival analysis. The timeDep argument specifies the time-dependent covariates that require imputation, while impModel defines the imputation models for each of these covariates. Additionally, ipwModel provides models for calculating inverse probability weights, ensuring that missing data are appropriately handled. The function fits a survival model using the formula specified in coxModel, which can be either a Cox proportional hazards model or an Aalen additive model, as indicated by the model argument. The number of imputations is controlled by M, with a default value 5. Compared to jmMI, the jmwMI function extends its approach by integrating inverse probability weighting alongside multiple imputation. While jmMI focuses solely on imputing missing values in time-dependent covariates, jmwMI incorporates IPW to adjust for potential informative missingness, making it more robust in scenarios where missing data mechanisms are not random. A pseudo algorithm used for jmwMI function is given in algorithm (3). The pooled results provide final estimates for the association between longitudinal biomarker measurements and survival outcome, accounting for potential biases due to missing data. The package also provides its own print,summary functions for the fitted objects through different methods. The prederror function helps users to select the best approach using Brier score value for different imputation. Besides these our package includes plot_jmtwostage function to visualize the survival probability for different individual along with it’s time dependent marker values.

Simulation study

Dataset

Simulation studies play a critical role in assessing the performance of statistical models, especially in medical research. They enable researchers to generate data with adequate sample sizes and realistic characteristics, facilitating robust comparisons of competing methods. Following the guidelines outlined in Morris et al.39 and Burton et al.40, we conducted a simulation study to evaluate the impact of missing time dependent marker on patient’s survival times. Our aim was to replicate the characteristics of the real life dataset and evaluate the performance of the proposed approach for a missing time-dependent marker in the relative risk model.

The data generation process involves simulating longitudinal and survival data under various scenarios, incorporating individual-level heterogeneity and missingness mechanisms. For each subject i, we generate the individual-specific trajectories for a time-dependent marker (\(y_{ij}\)), survival times, and event indicators. The marker \(y_{ij}\) is modeled as:

where \(\beta _0 = 1.1\), \(\beta _1 =-0.025\), \(\beta _2 =-0.003\) represent the intercept, the effect of the covariate \(x_{1i}\), and the effect of time \(t_{ij}\), respectively. Random effects \(b_{0i} \sim {\mathcal {N}}(0, \sigma _b^2)\) capture individual-level variability, with \(\sigma _b = 1\), and \(\epsilon _{ij} \sim {\mathcal {N}}(0, 1)\) introduces error term.

The hazard of the event is modeled using a time-dependent Cox proportional hazards model:

where \(\lambda _0(t)\) is the baseline hazard, and \((\gamma _{1},\gamma _{2}) = (0.01,-0.5)\) represents the effects of \(x_{1j}\) and \(y_{ij}\). Event times (\(t_{\text {fail}}\)) are generated using an exponential distribution based on the hazard, and censoring times (\(t_{\text {cens}}\)) are drawn independently from an exponential distribution with rate 0.02. The observed time is given by \(t_{\text {event}} = \min (t_{\text {fail}}, t_{\text {cens}})\), and an event indicator is recorded as \(I(t_{\text {fail}} = t_{\text {event}})\).

The missing data indicator vector \(R_{ij}\) for \(Y_{ij}\) was generated under three different missing data scenerio: MCAR(missing completely at random), MAR(missing at random), MNAR(missing not at random). For MCAR, missingness is assigned with a constant probability \(P(\text {R}_{ij}=1|x_{1i},t_{ij}) = 0.2\). For MAR, missingness is modeled as:

where \((\alpha _0,\alpha _1,\alpha _2) = (-0.6, -0.001, -0.01)\). For MNAR, the model incorporates the value of \(y_{ij}\):

where \((\alpha _0,\alpha _1,\alpha _2)= (-1.5, 0.01, 0.4)\). Iterative adjustments ensure that the overall missingness aligns with predefined threshold \(15\%-28\%\) missingness. The final dataset includes start and stop times, event indicators, individual IDs, \(x_1\), y, survival times, and survival status. This design supports analyses of time-to-event outcomes and longitudinal patterns under realistic missing data conditions.

Analysis

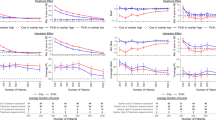

We conducted the simulation study generating 100 datasets of sample size n=100, 200 and 300 each. Under each sample size n=100, 200, 300 we also considered three different missing data generation process MCAR, MAR, MNAR. The estimates for the model parameters of Eqn (10) was obtained using five different methods: cc (complete case), locf (last observation carry forward), twos (two stage with single imputation), mi (multiple imputation) and wmi (multiple imputation with inverse probability weight). We carried out the analysis considering two mixed effect model with random intercept at individual level for imputation and inverse probability weight. We calculated the bias, mean square error(MSE), coverage probability of 95% confidenece interval for the model parameter \(\gamma _1\) and \(\gamma _2\). Tables 1, 2, 3 shows the results of simulation study under MCAR, MAR and MNAR mechanism with three sample sizes n=100, 200, 300 in each case. Figure 1 shows the estimated density plot for \(\gamma _1\) and \(\gamma _2\). For MCAR Fig. 2 shows, the cc method consistently exhibits substantial bias for \(\gamma _1\), particularly in smaller sample sizes. In contrast, mi and wmi demonstrate superior performance by achieving lower bias and MSE for \(\gamma _1\). The locf method also shows relatively high bias and MSE. For the regression coefficient \(\gamma _2\), most methods perform well, with minimal bias observed across all sample sizes, though wmi achieves slightly better efficiency in terms of MSE which is evident from Fig. 3. Regarding coverage probability, mi maintains values closer to the nominal 95% level, while cc and locf underperform, especially in smaller datasets.

Under MAR, the results further emphasize the advantages of mi and wmi methods, which outperform cc and locf in all key metrics. Both methods achieve consistently lower bias and MSE for \(\gamma _2\), and their performance improves with increasing sample sizes. For \(\gamma _1\) Figs. 2, 4 shows, although all methods maintain low bias and adequate coverage, wmi slightly outperforms others in terms of MSE and achieves coverage probabilities closer to the nominal level. On the other hand, cc and locf methods remain limited due to their inability to properly address missing data mechanisms, resulting in higher bias and lower efficiency, especially for \(\gamma _2\).

The findings under MNAR reiterate the limitations of cc and locf methods, which exhibit the highest bias and MSE for \(\gamma _2\) across all sample sizes. In contrast, mi and wmi maintain their superior performance by achieving the lowest bias and MSE values and providing coverage probabilities close to the nominal level. For \(\gamma _1\), wmi consistently delivers the best results across all metrics, underscoring its robustness in handling missing data. Across all missingness mechanisms, mi and wmi methods stand out as the most reliable approaches, particularly for the variable \(\gamma _2\), demonstrating robustness, efficiency, and accuracy. These results emphasize the importance of adopting advanced imputation techniques, such as mi and wmi, in statistical analyses to handle missing data effectively, particularly when assumptions of MCAR and MAR are violated. The consistent performance of wmi in minimizing bias, reducing MSE, and achieving reliable coverage probabilities highlights its utility in scenarios where standard methods may fall short. Additional simulation results with higher missingness levels (29 37%), provided in the supplementary material, further confirm the robustness of the wMI method. The wMI approach shows lower bias and MSE for both \(\gamma _1\) and \(\gamma _2\) across all sample sizes and missing data types, especially under MAR and MNAR (Figs. (S1, S2, S3)). It also maintains coverage probabilities close to the nominal 95% level, even as missingness increases. In contrast, methods like cc and locf show greater bias and poor coverage, particularly for \(\gamma _2\).

Data application

Based on the published work by Noronha et al.41, we created a simulated dataset that closely resembles the real-life data from a Phase 3 randomized clinical trial conducted on patients with non-small cell lung cancer (NSCLC) harboring activating EGFR mutations in a first-line setting. During data simulation, a total of 350 patients were randomly assigned to one of two treatment arms: gefitinib (Gef) monotherapy or gefitinib combined with the chemotherapy agents pemetrexed and carboplatin (Gef+C). Among these participants, 176 were allocated to the Gef arm, whereas 174 were given the Gef+C combination. The median age of the study population was 54 years (range: 27 78 years). The distribution of sex was approximately balanced, with 51% of patients in the Gef+C group and 53% in the Gef group being male. In terms of smoking history, the majority of patients were never-smokers (83% in the Gef+C group and 85% in the Gef group), while a smaller proportion were former smokers (11% and 12%, respectively), and even fewer were current smokers (6% and 3%, respectively). The Eastern Cooperative Oncology Group (ECOG) scale was used to evaluate performance status, quantifying a patient’s functional capability. Most patients had an ECOG status of 1 (79% in the Gef+C group and 74% in the Gef group), indicating they were able to carry out daily activities with some restrictions. A smaller percentage had an ECOG status of 2 (21% and 22%), meaning they were capable of self-care but unable to work. Very few patients had an ECOG status of 0 (1% and 4%), signifying they were fully active without limitations. The presence of comorbidities varied among patients. In the Gef+C group, 56% of patients had no comorbidities, while 12% had hypertension, 5% had diabetes, 5% had COPD/emphysema, and 2% had prior tuberculosis. The remaining 13% had multiple comorbidities. In the Gef group, 46% had no comorbidities, while a higher proportion had hypertension (18%), and similar proportions had diabetes (5%), COPD (3%), and prior tuberculosis (3%). Histological subtypes of NSCLC were also recorded. The majority of patients were diagnosed with adenocarcinoma (98% in the Gef+C group and 97% in the Gef group). Less common histological subtypes included adenosquamous carcinoma (2% and 2%), squamous cell carcinoma (1% and 1%), and sarcomatoid carcinoma (0% and 1%). With respect to disease stage, most patients had stage IV NSCLC (98% in the Gef+C group and 97% in the Gef group), while a small fraction had stage IIIB disease (2% and 3%). The distribution of metastatic sites varied among patients. In the Gef+C group, 17% had pleura or pericardium metastases, 12% had metastases in the opposite lung, 14% had bone metastases, and 1% had liver metastases. Additionally, 52% of patients had metastases in multiple sites. The Gef group showed similar trends, with 14% having pleura/pericardium metastases, 10% opposite lung metastases, 14% bone metastases, and 47% having multiple metastatic sites. A notable finding was the presence of brain metastases, which were diagnosed in 17% of the Gef+C group and 19% of the Gef group. The summary details of the dataset are shown in Table 4.

We aimed to demonstrate our proposed two stage joint model for incomplete time dependent marker and time-to-event data. In our model, serum cholesterol was included as a time-dependent covariate, while age at baseline was treated as a time-fixed covariate. Approximately 20% of serum cholesterol values were missing, necessitating an appropriate strategy for handling missing data. To address this, we employed our proposed two-stage joint model approach combining MI and IPW. MI was used to impute the missing cholesterol values through a linear mixed-effects model, while IPW adjusted for potential biases due to missingness.

To implement our approach, we first imputed missing serum cholesterol values using the following linear mixed-effects model:

where \(b_{0i} \sim N(0, \sigma _b^2)\) represents the random intercept for individual \(i\), capturing between-subject variability, and \(\epsilon _{ij} \sim N(0, \sigma ^2)\) denotes the residual error. For IPW, we estimated the probability of having observed serum cholesterol values using a mixed-effects logistic regression model based on the fully observed covariates (age and visit time):

where \(a_{0i} \sim N(0, \sigma _a^2)\) is the individual-level random intercept, accounting for subject-specific variability in missingness. The inverse probability weights were derived from the fitted probabilities of this model. Finally, we fitted a Cox proportional hazards model to estimate the association between survival time, serum cholesterol, and age:

where \(h_0(t)\) is the baseline hazard function, and \(\text {Age}_i\) represents the time-fixed covariate for individual \(i\). Our modeling approach allows to account for missing data while assessing the relationship between cholesterol levels and survival outcomes in a statistically rigorous manner.

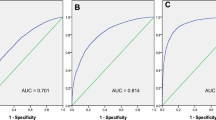

The hazard ratios for serum cholesterol and age were then estimated and results were compared across various missing data handling appproaches, including complete case analysis (cc), two-stage with single imputation (twos), last observation carry forward (locf) and multiple imputation (mi). Table 5 presents the hazard ratio (HR), standard error (SE), 95% confidence intervals (CI), and p-values for \(\gamma _1\)(serum cholesterol (serChol)) and \(\gamma _2\)(age), as estimated using various data imputation and analysis approaches. From the cc, the hazard ratio for age is estimated to be 0.0525 (95% CI: 0.026 to 0.079, \(p < 0.001\)), indicating a significant positive association between age and the hazard. However, the hazard ratio for serum cholesterol is non-significant (HR = 0.345, \(p = 0.818\)). This highlights the challenges of using only complete cases when data are missing. The twos and mi methods yield consistent results for age, with HR estimates of 0.047 and 0.048, respectively, both significant with \(p < 0.001\). Serum cholesterol becomes statistically significant under these methods, with HR estimates of 2.336 (\(p = 0.001\)) for twos and 2.165 (\(p = 0.004\)) for mi. These results suggest that advanced imputation methods may uncover relationships obscured in complete case analysis. Under locf, the hazard ratio for serum cholesterol remains significant (HR = 1.618, \(p = 0.025\)), although the estimate is lower than in the twos and mi methods. This reflects the limitations of carrying forward the last observed value, which might introduce bias. The wmi approach provides a hazard ratio for serum cholesterol of 2.062 (95% CI: -0.368 to 4.492, \(p = 0.096\)), which is not statistically significant.

Discussion

Modeling longitudinal biomarkers in survival analysis is challenging, especially when these biomarkers are measured with error or exhibit complex patterns over time. To address this, various techniques have been developed to better account for measurement errors and time-dependent covariates. For example, Bycott and Taylor42 explored smoothing methods for handling measurement errors in CD4 count data within a Cox model, showing that appropriate error correction can significantly impact survival estimates. Similarly, Fieuws et al.43 demonstrated that jointly modeling multiple biomarkers can improve predictions in clinical settings, such as renal graft failure.

Despite the advantages of joint modeling, one of its major limitations is the computational burden, particularly when working with multiple random effects. Traditional likelihood-based estimation methods are efficient when the number of random effects is small, but their feasibility diminishes as the number of variables increases43. The challenge is even greater in nonlinear settings, where high-dimensional integration is required. Common numerical integration methods such as Monte Carlo and Gauss-Hermite quadrature struggle with multiple random effects, making model estimation difficult and, in some cases, unreliable. These challenges highlight the need for computationally efficient alternatives.

In this paper, we propose a two-stage joint modeling approach that combines MI and IPW to handle missing data in longitudinal and survival data. Recent studies have introduced refinements to two-stage approaches to improve efficiency and reduce bias. For instance, Huong et al.16 proposed a modified two-stage approach that avoids complex multi-integrations in joint modeling, allowing for flexible longitudinal submodels. Similarly, Mehdizadeh et al.17 discussed the application of a two-stage joint model for competing risks data, demonstrating improved performance when sample sizes are large. Other research has highlighted the benefits of importance sampling corrected two-stage approaches, which can provide unbiased estimates while maintaining computational efficiency19. Furthermore, Moreno et al.44 introduced a novel multiple imputation for joint modeling approach, which integrates MI within a two-stage modeling framework to account for time-dependent covariates subject to missingness or measurement error.

Our approach is particularly useful when dealing with missing data in settings where full joint modeling is computationally challenging with existing software. The first stage of our approach involves the modeling of each longitudinal marker and impute the missing values through MI. The second stage consists of fitting a proportional hazards model with IPW, incorporating the predicted marker trajectories from the first stage as time-dependent covariates. Our simulation study demonstrates that the proposed method performs well across various missing data scenarios, yielding smaller biases and confidence intervals that achieve nominal coverage. A variant of our approach, which relies solely on MI without IPW, exhibited more variable performance across scenarios and was generally less reliable. This underscores the importance of combining both components- MI to address missing data uncertainty and IPW to adjust for potential selection bias. While traditional methods such as cc and locf demonstrated clear limitations.

At the same time, the simulation results also show some important limitations. For example, in small samples (such as \(\gamma _1\) in n=100, \(\gamma _2\) in n=200 scenario), the wmi method sometimes had higher bias and variability, especially under MCAR and MNAR. This may be because the weights used in IPW were less stable when estimated from smaller datasets. Also, during simulation, we did not put any restriction on the number of visits per subject, which meant that some subjects had very few observations. This can make it harder for the models to estimate subject-specific effects and can affect the performance of both MI and IPW. In the MNAR setting, where missingness depends on unobserved values, both mi and wmi showed some bias, as expected, since the missing data mechanism was not directly modeled. These points suggest that while our method works well in many realistic cases, its performance depends on sample size, data quality, and the type of missingness, which should be considered when applying it in practice.

Compared to full joint modeling, our approach is computationally efficient and can be implemented using mainstream statistical software. Unlike methods requiring specialized tools, our approach is readily applicable within standard multiple imputation and survival analysis frameworks. Additionally, our method demonstrated substantially lower computation times than existing software packages in R, making it a practical alternative for large-scale datasets containing missing values.

Future research could focus on refining the approach to better accommodate highly correlated markers and extending it to more complex survival models. Another important extension would involve adapting the method to settings with irregular visits, which would require assumptions about the visit process. While our method can be applied in such contexts, the timing of the last available measurement may influence the model’s approximation, warranting further investigation into its impact on model performance. In conclusion, the proposed two-stage joint modeling approach provides computationally feasible solution for handling missing data in longitudinal and survival analyses. While some approximations are inherent in the imputation process, our simulations demonstrate that the method remains robust across a range of realistic scenarios. Given its flexibility and computational efficiency, this approach holds strong potential for broad application in clinical and epidemiological research, enhancing the reliability of statistical inferences and ultimately contributing to improved patient outcomes.

Data Availability

The generated data used here is not available publicly, however example simulated data can be obtained from the corresponding author on reasonable request. Any patients/questionnaire/volunteers humans are not involved in our study.

References

Fisher, L. D. & Lin, D. Y. Time-dependent covariates in the cox proportional-hazards regression model. Annu. Rev. Public Health 20, 145–157 (1999).

Therneau, T. M. & Grambsch, P. M. Modeling Survival Data: Extending the Cox Model (Springer, 2000).

Rizopoulos, D. Joint Models for Longitudinal and Time-to-Event Data: With Applications in R (Chapman and Hall/CRC, 2012).

Rizopoulos, D., Molenberghs, G. & Lesaffre, E. M. Dynamic predictions with time-dependent covariates in survival analysis using joint modeling and landmarking. Biom. J. 59, 1261–1276 (2017).

Cox, D. R. Regression models and life-tables. J. Roy. Stat. Soc.: Ser. B (Methodol.) 34, 187–220 (1972).

Klein, J. P. & Moeschberger, M. L. Survival Analysis: Techniques for Censored and Truncated Data (Springer Science & Business Media, 2006).

Tsiatis, A. A. & Davidian, M. A semiparametric estimator for the proportional hazards model with longitudinal covariates measured with error. Biometrika 88, 447–458 (2001).

Tsiatis, A. A. & Davidian, M. Joint modeling of longitudinal and time-to-event data: An overview. Statistica Sinica 809–834 (2004).

McCrink, L. M., Marshall, A. H. & Cairns, K. J. Advances in joint modelling: A review of recent developments with application to the survival of end stage renal disease patients. Int. Stat. Rev. 81, 249–269 (2013).

Bhattacharjee, A., Rajbongshi, B. K. & Vishwakarma, G. K. jmbig: enhancing dynamic risk prediction and personalized medicine through joint modeling of longitudinal and survival data in big routinely collected data. BMC Med. Res. Methodol. 24, 172 (2024).

Wulfsohn, M. S. & Tsiatis, A. A. A joint model for survival and longitudinal data measured with error. Biometrics 330–339 (1997).

Rizopoulos, D. Dynamic predictions and prospective accuracy in joint models for longitudinal and time-to-event data. Biometrics 67, 819–829 (2011).

Hickey, G. L., Philipson, P., Jorgensen, A. & Kolamunnage-Dona, R. Joint modelling of time-to-event and multivariate longitudinal outcomes: recent developments and issues. BMC Med. Res. Methodol. 16, 1–15 (2016).

Wu, L., Liu, W., Yi, G. Y. & Huang, Y. Analysis of longitudinal and survival data: Joint modeling, inference methods, and issues. J. Prob. Stat. 2012, 640153 (2012).

Wang, J.-L. & Zhong, Q. Joint modeling of longitudinal and survival data. Annual Review of Statistics and Its Application12, (2024).

Huong, P. T. T., Nur, D., Pham, H. & Branford, A. A modified two-stage approach for joint modelling of longitudinal and time-to-event data. J. Stat. Comput. Simul. 88, 3379–3398 (2018).

Mehdizadeh, P., Baghfalaki, T., Esmailian, M. & Ganjali, M. A two-stage approach for joint modeling of longitudinal measurements and competing risks data. J. Biopharm. Stat. 31, 448–468 (2021).

Dutta, S. & Chakraborty, A. Joint model for longitudinal and time-to-event data: A two-stage approach. J. Stat. Appl. Prob. 10, 807–819 (2021).

Mauff, K., Steyerberg, E., Kardys, I., Boersma, E. & Rizopoulos, D. Joint models with multiple longitudinal outcomes and a time-to-event outcome: A corrected two-stage approach. Stat. Comput. 30, 999–1014 (2020).

Alvares, D. & Leiva-Yamaguchi, V. A two-stage approach for Bayesian joint models: Reducing complexity while maintaining accuracy. Stat. Comput. 33, 115 (2023).

Chen, Q., May, R. C., Ibrahim, J. G., Chu, H. & Cole, S. R. Joint modeling of longitudinal and survival data with missing and left-censored time-varying covariates. Stat. Med. 33, 4560–4576 (2014).

Dupuy, J.-F. & Mesbah, M. Joint modeling of event time and nonignorable missing longitudinal data. Lifetime Data Anal. 8, 99–115 (2002).

Bhattacharjee, A., Vishwakarma, G. K. & Banerjee, S. Joint modeling of longitudinal and time-to-event data with missing time-varying covariates in targeted therapy of oncology. Commun. Stat. Case Stud. Data Anal. Appl. 6, 330–352 (2020).

Ibrahim, J. G. & Molenberghs, G. Missing data methods in longitudinal studies: A review. TEST 18, 1–43 (2009).

Andersen, P. K. & Liestøl, K. Attenuation caused by infrequently updated covariates in survival analysis. Biostatistics 4, 633–649 (2003).

Little, R. J. & Rubin, D. B. Statistical analysis with missing data (John Wiley and Sons, 2019).

Lachin, J. M. Fallacies of last observation carried forward analyses. Clin. Trials 13, 161–168 (2016).

Seaman, S. R. & White, I. R. Review of inverse probability weighting for dealing with missing data. Stat. Methods Med. Res. 22, 278–295 (2013).

Rubin, D. B. Multiple imputation for nonresponse in surveys (John Wiley and Sons, 1987).

Van Buuren, S. & Groothuis-Oudshoorn, K. mice: Multivariate imputation by chained equations in r. J. Stat. Softw. 45, 1–67 (2011).

Rotnitzky, A. Inverse probability weighted methods. In Longitudinal Data Analysis (ed. Rotnitzky, A.) 467–490 (Chapman and Hall/CRC, 2008).

Ross, R. K. et al. Accounting for nonmonotone missing data using inverse probability weighting. Stat. Med. 42, 4282–4298 (2023).

Seaman, S. R., White, I. R., Copas, A. J. & Li, L. Combining multiple imputation and inverse-probability weighting. Biometrics 68, 129–137 (2012).

Guo, F., Langworthy, B., Ogino, S. & Wang, M. Comparison between inverse-probability weighting and multiple imputation in cox model with missing failure subtype. Stat. Methods Med. Res. 33, 344–356 (2024).

Thaweethai, T., Arterburn, D. E., Coleman, K. J. & Haneuse, S. Robust inference when combining inverse-probability weighting and multiple imputation to address missing data with application to an electronic health records-based study of bariatric surgery. Annals Appl. Stat. 15, 126 (2021).

Sun, B. et al. Inverse-probability-weighted estimation for monotone and nonmonotone missing data. Am. J. Epidemiol. 187, 585–591 (2018).

Butera, N. M., Zeng, D., Green Howard, A., Gordon-Larsen, P. & Cai, J. A doubly robust method to handle missing multilevel outcome data with application to the china health and nutrition survey. Stat. Med. 41, 769–785 (2022).

Bhattacharjee, A., Vishwakarma, G. K., Rajbongshi, B. K. & Tripathy, A. Miipw: An r package for generalized estimating equations with missing data integration using a combination of mean score and inverse probability weighted approaches and multiple imputation. Expert Syst. Appl. 238, 121973 (2024).

Morris, T. P., White, I. R. & Crowther, M. J. Using simulation studies to evaluate statistical methods. Stat. Med. 38, 2074–2102 (2019).

Burton, A., Altman, D. G., Royston, P. & Holder, R. L. The design of simulation studies in medical statistics. Stat. Med. 25, 4279–4292 (2006).

Noronha, V. et al. Gefitinib versus gefitinib plus pemetrexed and carboplatin chemotherapy in egfr-mutated lung cancer. J. Clin. Oncol. 38, 124–136 (2020).

Bycott, P. & Taylor, J. A comparison of smoothing techniques for cd4 data measured with error in a time-dependent cox proportional hazards model. Stat. Med. 17, 2061–2077 (1998).

Fieuws, S., Verbeke, G., Maes, B. & Vanrenterghem, Y. Predicting renal graft failure using multivariate longitudinal profiles. Biostatistics 9, 419–431 (2008).

Moreno-Betancur, M. et al. Survival analysis with time-dependent covariates subject to missing data or measurement error: Multiple imputation for joint modeling (mijm). Biostatistics 19, 479–496 (2018).

Author information

Authors and Affiliations

Contributions

A.B. and G.K.V.: Supervision, Conceptualization, Review, Writing. B.K.R.: Conceptualization, Methodology, Writing, Software, Editing. All authors reviewed the manuscript.

Corresponding authors

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Supplementary Information

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Vishwakarma, G.K., Bhattacharjee, A. & Rajbongshi, B.K. A two-stage joint model approach to handle incomplete time dependent markers in survival data through inverse probability weight and multiple imputation. Sci Rep 15, 33949 (2025). https://doi.org/10.1038/s41598-025-11125-4

Received:

Accepted:

Published:

Version of record:

DOI: https://doi.org/10.1038/s41598-025-11125-4