Abstract

The challenges of handling imbalanced datasets in machine learning significantly affect model performance and predictive accuracy. Classifiers tend to favor the majority class, leading to biased training and poor generalization on minority classes. Initially, the model incorrectly treated the target variable as an independent feature during data generation, which produced suboptimal outcomes. To address this limitation, the model was adjusted to manage target-variable generation more effectively. This study employed synthetic data generation techniques, namely the Synthetic Minority Oversampling Technique (SMOTE) and Adaptive Synthetic Sampling (ADASYN), to improve the representation of minority classes by generating synthetic samples. In addition, data augmentation with Deep Conditional Tabular Generative Adversarial Networks (Deep-CTGANs) integrated with ResNet was used to improve model robustness and generalizability. For classification, TabNet, a model designed specifically for tabular data, proved highly effective: its sequential attention mechanism dynamically selects features at each decision step, making it well suited to complex and imbalanced datasets. Model performance was evaluated with the train-on-synthetic, test-on-real (TSTR) protocol. The framework was validated on the COVID-19, Kidney, and Dengue datasets, achieving testing accuracies of 99.2%, 99.4%, and 99.5%, respectively. Furthermore, similarity scores of 84.25%, 87.35%, and 86.73% between the real and synthetic data for the COVID-19, Kidney, and Dengue datasets, respectively, indicate that the synthetic data are reliable. TabNet consistently achieved substantially higher F1-scores than Random Forest, XGBoost, and KNN, underscoring the importance of selecting appropriate synthetic data augmentation techniques and classifiers.
Additionally, SHapley Additive exPlanations (SHAP) were used to interpret model predictions, providing insight into feature importance and each feature's impact on predictions. These findings indicate that the proposed approach improves accuracy, robustness, and interpretability, offering a practical solution for addressing data imbalance in classification tasks.


Introduction

The advancement of machine learning (ML) in healthcare has been transformative, enabling breakthroughs in disease prediction, diagnosis, and treatment planning. However, the effectiveness of these models hinges on the availability of high-quality, representative datasets—something that is often hindered by data imbalance, privacy concerns, and limited accessibility1. To address these challenges, researchers have turned to synthetic data generation as a promising solution2. By simulating real-world distributions while avoiding the disclosure of sensitive information, synthetic data support privacy preservation and open collaboration3. Generative models such as GANs and VAEs have demonstrated success in producing realistic tabular data, making them invaluable in healthcare applications where data scarcity is common4. Their application improves generalization, facilitates reproducibility, and enables model validation across decentralized environments5. Importantly, these techniques contribute to responsible AI development by reducing reliance on identifiable patient records while maintaining utility for downstream ML tasks6.

Despite these benefits, the generation of synthetic data for tabular healthcare datasets remains a complex undertaking. Classical oversampling techniques like SMOTE and ADASYN address class imbalance through interpolation, but they often fail to capture non-linear dependencies and multi-modal distributions intrinsic to medical data7. Deep generative models offer more expressive capacity but face obstacles in modeling heterogeneous data types or small class populations8. Moreover, standalone generative models may not ensure statistical fidelity or interpretability required in clinical practice9. To overcome these limitations, hybrid frameworks have emerged that integrate traditional resampling with deep learning–based data synthesis and attention-driven classifiers. These methods aim to enhance predictive accuracy, explainability, and robustness in risk-sensitive settings like healthcare diagnostics. This study investigates whether a hybrid approach combining classical resampling, deep generative modeling (Deep-CTGAN + ResNet), and attention-based classification (TabNet) can improve performance on imbalanced healthcare datasets.

Synthetic data offers a promising solution to the challenges of data scarcity, imbalance, and privacy in machine learning. By generating realistic yet privacy-safe datasets that preserve feature relationships, synthetic data allows organizations to train, test, and deploy models without risking sensitive information. Beyond privacy, synthetic data supports data augmentation—enabling better model generalization and improved performance, especially in domain-specific applications where collecting sufficient labeled data is difficult. It also enables collaborative research across organizational boundaries by mitigating data-sharing restrictions.

In healthcare and similar sensitive domains, access to reliable, privacy-safe data is crucial for accurate machine learning, which makes synthetic data generation an essential innovation. Current approaches, including oversampling techniques such as SMOTE and statistical models such as Synthpop, provide partial solutions for handling data imbalance. However, they often struggle to capture complex relationships and joint distributions in high-dimensional healthcare datasets. Additionally, although models such as GANs and VAEs show considerable potential for generating synthetic data, their use in healthcare-specific contexts, particularly for tabular data that combine categorical and numerical features, remains underexplored. Moreover, there is a noticeable lack of comprehensive validation techniques that ensure synthetic healthcare data preserve the complexity and integrity of real-world data while avoiding bias10. Existing research has neither fully addressed the optimization of advanced generative models for healthcare data nor adequately examined their utility and reliability in practical healthcare applications. To address these gaps, the contributions of this research are as follows:

-

Create a framework to handle class imbalance by producing synthetic data that closely resemble real-world datasets.

-

Investigate the role of data augmentation in enhancing classification accuracy, both when synthetic data augment real data and when they fully replace it.

-

Examine and evaluate how integrating ResNet’s residual connections with Deep-CTGAN improves its ability to capture features and learn complex patterns.

-

Contrast TabNet's performance with that of other models such as Random Forest, XGBoost, and KNN on imbalanced datasets.

-

Analyze model performance across various datasets, such as COVID-19, kidney disease, and dengue, using different synthetic data augmentation strategies.

-

Measure the similarity between synthetic and real data distributions using quantitative similarity scores for validation.

-

Analyze improvements in model performance across synthetic data generation methods by examining confusion matrices, F1-score patterns, and AUC values.

-

Explore the relationship between dataset size and model performance, focusing on how synthetic data proportions influence the outcomes.

-

Leverage explainable AI (SHAP) to interpret model decisions, offering insights into feature importance and each feature's impact on predictions.

Related works

Traditional resampling and statistical techniques

Early approaches to class imbalance in healthcare datasets relied on statistical oversampling methods such as SMOTE and ADASYN, which synthetically interpolate new minority class samples to balance distributions11. While effective in boosting recall, these methods often fail to capture nonlinear dependencies or preserve joint feature distributions in high-dimensional clinical data. Statistical models like Synthpop offer interpretable alternatives but are limited in modeling complex interactions12.

GAN-based synthetic data generation

The emergence of Generative Adversarial Networks (GANs) has significantly advanced synthetic data generation for tabular healthcare applications. CTGAN and TVAE were among the first to address mixed-type features and mode collapse issues in tabular data13. CopulaGAN and TGAN further improved fidelity and diversity by modeling joint distributions and temporal dependencies14. Evaluation frameworks such as SynMeter and TabSynDex have been proposed to assess fidelity, privacy, and downstream utility of synthetic datasets15,16.

Hybrid frameworks integrating resampling and deep models

To overcome the limitations of standalone methods, hybrid pipelines have emerged that combine classical resampling (SMOTE/ADASYN) with deep generative synthesis, such as Deep-CTGAN enhanced by ResNet17. These frameworks improve feature learning and class-specific generation, especially in small-sample or imbalanced clinical datasets. Other hybrid innovations like GenerativeMTD and GAN-BLR integrate residual learning, kNN-based conditioning, or Bayesian regularization to improve fidelity and class control18,19.

Domain-specific innovations in healthcare

Recent work has focused on tailoring synthetic data generation to clinical and biomedical contexts, where privacy, interpretability, and small sample sizes are critical. Models such as CTAB-GAN+ and SynthCity have demonstrated strong performance in generating privacy-preserving, utility-rich synthetic data for healthcare analytics20,21. Meanwhile, NextConvGeN, TabPFGen, and MTabGen introduce structural priors, attention-based conditioning, and diffusion mechanisms to better capture clinical semantics and temporal dependencies22,23,24. Liu et al.25 conducted a comprehensive exploration of the design space for GAN-based tabular data synthesis, proposing architectural optimizations such as conditional vector encoding and mode-specific normalization. While their framework improves fidelity and diversity, it lacks integration with class-balancing strategies, making it less effective for imbalanced healthcare datasets.

Rashidi et al.26 introduced a fully automated platform for synthetic tabular data generation and validation, emphasizing usability and reproducibility. However, their approach primarily targets general-purpose datasets and does not incorporate domain-specific enhancements like attention mechanisms or residual learning, which are critical for clinical feature modeling. Rodriguez-Almeida et al.27 focused on disease prediction using small and imbalanced datasets, leveraging synthetic data to improve classification. Although their work highlights the utility of synthetic augmentation, it does not explore hybrid architectures or interpretability frameworks, limiting its applicability in high-stakes clinical decision-making.

Elshewey et al.28 proposed a hybrid model combining Greylag Goose Optimization (GGO) for feature selection with LSTM networks for heart disease classification, achieving high predictive accuracy. However, the approach lacks mechanisms for handling class imbalance and interpretability, limiting its scalability in broader clinical contexts. Tarek et al.29 proposed a feature selection framework using the Snake Optimization algorithm with multiple classifiers for early cardiovascular disease detection, but the approach does not address class imbalance or incorporate explainable or generative modeling techniques. Elshewey et al.30 proposed an EEG-based eye state classification framework using a Modified Al-Biruni Earth Radius (MBER) metaheuristic algorithm for feature selection, achieving high accuracy with KNN as the classifier. However, the approach does not address class imbalance or incorporate synthetic data generation, limiting its applicability in imbalanced biomedical datasets.

Elshewey and Osman31 proposed a hybrid classification framework for orthopedic diseases by integrating Binary Breadth-First Search (BBFS) for feature selection with a Random Forest (RF) classifier. While the BBFS-RF model achieved high accuracy (up to 99.41%), the approach was limited to a small dataset with only six features and did not incorporate class imbalance handling or explainability techniques. El-Rashidy et al.32 proposed a multitask, multilayer deep learning model using LSTM and MLP architectures to predict both the need for mechanical ventilation and associated mortality in ICU patients. While the model achieved high predictive performance, it did not incorporate synthetic data generation or class imbalance handling, limiting its adaptability to skewed clinical datasets. Elkenawy et al.33 proposed a hybrid model combining Greylag Goose Optimization (GGO) for feature selection with a Multilayer Perceptron (MLP) for lung cancer classification, but the approach lacks mechanisms for handling class imbalance and does not incorporate explainability or synthetic data generation.

Across these methodological categories, ranging from traditional resampling to domain-specific deep synthesis, researchers have made substantial progress in tabular synthetic data generation. However, most existing approaches either optimize for fidelity without addressing class imbalance or focus on oversampling without capturing complex feature dependencies. Few studies effectively integrate multi-stage pipelines that couple class-aware sampling (like ADASYN or SMOTE) with GAN-based augmentation and feature-level enhancement mechanisms like ResNet. Moreover, while some frameworks demonstrate utility on benchmark datasets, their clinical transferability, scalability, and explainability often remain limited. This study bridges these gaps by proposing a hybrid, interpretable framework tailored to healthcare datasets, leveraging Deep-CTGAN, ResNet, and TabNet to simultaneously address class imbalance, synthetic fidelity, and clinical interpretability across multiple disease datasets34,35,36.

Methodology

This research methodology focuses on tackling class imbalance in datasets and enhancing model performance by leveraging advanced techniques for synthetic data generation and augmentation. SMOTE and ADASYN were employed to balance class distributions by creating additional minority-class samples, which strengthened the model's ability to learn from difficult regions of the feature space. Additionally, Deep-CTGANs were used alongside ResNet for data augmentation, preserving data dependencies while producing diverse and realistic synthetic data, thereby improving generalization. ResNet's residual connections enabled the model to better capture complex patterns and features, further strengthening its robustness. These techniques were integrated with TabNet, a deep learning model designed specifically for tabular data that uses sequential attention to dynamically select relevant features, leading to more accurate and interpretable classification results, as illustrated in Fig. 1.

Missing data were handled by first analyzing the dataset to count the missing values in each column, which indicates the extent of missingness. For numerical columns, mean imputation was applied, replacing missing values with the mean of the respective column. For categorical columns, mode imputation was used, filling missing values with the most frequent value in each column. This keeps the dataset complete and preserves its integrity for the subsequent analyses.
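
The imputation procedure described above can be sketched with pandas as follows. This is a minimal illustration, assuming the data sit in a pandas DataFrame and that every non-numeric column is treated as categorical; the function name `impute_missing` is hypothetical and not taken from the study's code.

```python
import pandas as pd


def impute_missing(df: pd.DataFrame) -> pd.DataFrame:
    """Mean-impute numeric columns and mode-impute all other columns (hypothetical helper)."""
    # Step 1: report how many values are missing in each affected column.
    missing_counts = df.isna().sum()
    print(missing_counts[missing_counts > 0])

    out = df.copy()  # leave the caller's DataFrame untouched
    for col in out.columns:
        if not out[col].isna().any():
            continue
        if pd.api.types.is_numeric_dtype(out[col]):
            # Numerical column: replace NaNs with the column mean.
            out[col] = out[col].fillna(out[col].mean())
        else:
            # Categorical column: replace NaNs with the most frequent value.
            modes = out[col].mode(dropna=True)
            if not modes.empty:
                out[col] = out[col].fillna(modes.iloc[0])
    return out
```

In a train/test setting, the means and modes would typically be computed on the training split only and then applied to the test split, so that test statistics do not leak into training.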

Advanced synthetic data generation techniques for dataset balancing

Imbalanced datasets present a significant challenge in machine learning because they can degrade model performance and prediction accuracy. In such cases, models often exhibit a bias towards the majority class, leading to poor generalization for the minority class. To mitigate this, synthetic data generation techniques such as SMOTE and ADASYN are commonly used. These methods generate additional synthetic samples for the minority class, producing a more balanced class distribution. Additionally, data augmentation strategies using Deep-CTGANs combined with ResNet were implemented to boost model robustness and generalization capacity. The deep residual connections in ResNet allow the model to learn complex features more effectively, further improving its overall performance.

SMOTE: generating synthetic samples with interpolation

SMOTE is widely used to address class imbalance by synthetically generating new minority-class instances. For each minority-class instance, the technique identifies its k nearest neighbors and creates synthetic data points between the original sample and those neighbors by linear interpolation, so that each new sample lies on the line segment joining two existing samples. A synthetic data point is generated as

\[ X_{new} = X_{i} + \lambda \left( X_{neighbor} - X_{i} \right) \]

where \(X_{new}\) is the newly generated synthetic instance, \(X_{i}\) is an original minority-class sample, \(X_{neighbor}\) is one of the k nearest neighbors of \(X_{i}\), and λ is a random value in the interval [0, 1], which makes the synthetic sample a convex combination of \(X_{i}\) and its neighbor. Interpolation introduces variety into the minority-class distribution and spreads synthetic instances through the feature space. Compared with simply duplicating minority samples, this reduces the risk of overfitting and yields a more general, better-defined decision boundary.
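
The SMOTE rule \(X_{new} = X_{i} + \lambda (X_{neighbor} - X_{i})\) can be illustrated with a small NumPy sketch. It assumes numeric, already-scaled features and a minority class with at least two samples; `smote_samples` is a hypothetical helper, not the study's implementation. In practice, the `SMOTE` class in imbalanced-learn (with its `k_neighbors` parameter) is a common ready-made implementation; the study does not state which library it used.

```python
import numpy as np


def smote_samples(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Generate n_new synthetic minority samples by SMOTE-style interpolation.

    X_min: (m, d) array of minority-class samples, m >= 2.
    """
    rng = np.random.default_rng(seed)
    m = X_min.shape[0]
    k = min(k, m - 1)  # a point cannot have more neighbours than the other minority points
    # Pairwise Euclidean distances between minority samples.
    diffs = X_min[:, None, :] - X_min[None, :, :]
    dists = np.sqrt((diffs ** 2).sum(axis=-1))
    np.fill_diagonal(dists, np.inf)  # exclude each point from its own neighbour list
    neighbours = np.argsort(dists, axis=1)[:, :k]  # (m, k) neighbour indices

    synthetic = np.empty((n_new, X_min.shape[1]))
    for s in range(n_new):
        i = rng.integers(m)                  # random minority sample X_i
        j = neighbours[i, rng.integers(k)]   # one of its k nearest neighbours
        lam = rng.random()                   # lambda drawn uniformly from [0, 1)
        synthetic[s] = X_min[i] + lam * (X_min[j] - X_min[i])
    return synthetic
```

Because every new point is a convex combination of two minority samples, all synthetic rows stay inside the region already spanned by the minority class.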

ADASYN: adaptive synthetic sampling for imbalanced data

Unlike SMOTE, which samples uniformly across the minority class, ADASYN concentrates synthetic points in regions where classification is most difficult, namely near the decision boundary. It does so by assigning more weight to minority samples that are surrounded by majority-class neighbors. The number of synthetic samples generated for each minority instance i is

\[ G_{i} = \frac{\Delta_{i}}{\sum_{j=1}^{m} \Delta_{j}} \times G \]

where \(G_{i}\) is the number of synthetic samples generated for instance i, \(\Delta_{i}\) is the difficulty level of instance i, calculated from the number of majority-class neighbors, m is the total number of minority-class instances, and G is the total number of synthetic samples to be created for the minority class. By emphasizing hard-to-classify regions, ADASYN improves the model's sensitivity without oversaturating easy regions of the feature space, which makes it a suitable precursor to more expressive generators such as Deep-CTGAN in the proposed hybrid pipeline.

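The difficulty level \(\Delta_{i}\) is computed as follows:

\[ \Delta_{i} = \frac{N_{i}^{maj}}{k} \]

where \(N_{i}^{maj}\) is the number of majority-class samples among the k nearest neighbors of instance i.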

Thus, a higher value of \(\Delta_{i}\) indicates a region where the minority class lies close to the majority class, and more synthetic samples are generated there to reinforce the model's learning. In this way, ADASYN improves the model's ability to generalize over difficult regions of the data distribution and, in turn, its classification performance.
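
The ADASYN allocation, in which each minority instance receives \(G_{i} = G \cdot \Delta_{i} / \sum_{j} \Delta_{j}\) synthetic samples and \(\Delta_{i}\) is the fraction of majority-class samples among its k nearest neighbors, can be sketched as below. This is an illustrative NumPy version assuming a label array with one minority label and brute-force Euclidean neighbor search; `adasyn_allocation` is a hypothetical name. The samples themselves would then be generated by SMOTE-style interpolation around each instance; imbalanced-learn's `ADASYN` class provides a complete implementation.

```python
import numpy as np


def adasyn_allocation(X: np.ndarray, y: np.ndarray, minority_label, G: int, k: int = 5) -> np.ndarray:
    """Return G_i, the number of synthetic samples assigned to each minority instance."""
    min_idx = np.flatnonzero(y == minority_label)
    X_min = X[min_idx]
    # Distances from each minority sample to every sample in the dataset.
    dists = np.sqrt(((X_min[:, None, :] - X[None, :, :]) ** 2).sum(axis=-1))
    # Exclude each minority point from its own neighbour list.
    dists[np.arange(len(min_idx)), min_idx] = np.inf
    nn = np.argsort(dists, axis=1)[:, :k]
    # Delta_i: fraction of the k nearest neighbours that are not minority (i.e. majority).
    delta = (y[nn] != minority_label).sum(axis=1) / k
    if delta.sum() == 0:
        # No minority point has majority neighbours: fall back to a uniform split.
        weights = np.full(len(delta), 1.0 / len(delta))
    else:
        weights = delta / delta.sum()  # normalised difficulty
    # Round the real-valued allocation G * weights to whole samples.
    return np.rint(weights * G).astype(int)
```

Because the real-valued allocations are rounded, the returned counts may not sum exactly to G; the uniform fallback is a design choice of this sketch rather than part of the method described above.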

Data augmentation techniques for enhanced model robustness

After balancing the class distribution using SMOTE and ADASYN, data augmentation was applied to enhance the model generalization and robustness. This process introduces variations in the training data, reducing the risk of overfitting and improving the model’s performance on unseen data. Advanced techniques used for augmentation include Deep-CTGANs and the integration of ResNet. ResNet’s residual connections aid in capturing complex patterns, thereby contributing to better model robustness and overall performance.

Deep-CTGANs: conditional generative adversarial networks for tabular data

Generative Adversarial Networks (GANs) are built around two essential components, a generator and a discriminator, which are trained in an adversarial manner. The generator produces synthetic data from a random noise vector, whereas the discriminator evaluates the synthetic data and attempts to distinguish it from real data. Training is a min-max optimization problem in which the generator attempts to deceive the discriminator by creating data that closely mirror the real distribution. The objective function for training GANs is typically written as

\[ \min_{G} \max_{D} V(D, G) = \mathbb{E}_{x \sim p_{data}(x)} \left[ \log D(x) \right] + \mathbb{E}_{z \sim p_{z}(z)} \left[ \log \left( 1 - D(G(z)) \right) \right] \]

where G is the generator that produces synthetic samples from noise vector z, D is the discriminator that differentiates between real data samples and those generated by G, x is a real data sample drawn from the data distribution \(\:{p}_{data}\) and z is a noise vector sampled from a prior distribution \(\:{p}_{z}\left(z\right)\).
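
To make the min-max objective concrete, the value function \(V(D, G) = \mathbb{E}_{x}[\log D(x)] + \mathbb{E}_{z}[\log(1 - D(G(z)))]\) can be estimated from a batch of discriminator outputs. The sketch below assumes the discriminator already returns probabilities; `gan_value` is a hypothetical helper and does not train anything.

```python
import numpy as np


def gan_value(d_real: np.ndarray, d_fake: np.ndarray, eps: float = 1e-12) -> float:
    """Monte Carlo estimate of V(D, G) = E[log D(x)] + E[log(1 - D(G(z)))].

    d_real: discriminator probabilities D(x) on a batch of real samples.
    d_fake: discriminator probabilities D(G(z)) on a batch of generated samples.
    """
    # Clip to avoid log(0) for saturated discriminator outputs.
    d_real = np.clip(d_real, eps, 1.0 - eps)
    d_fake = np.clip(d_fake, eps, 1.0 - eps)
    return float(np.mean(np.log(d_real)) + np.mean(np.log(1.0 - d_fake)))
```

When the generator matches the data distribution, the optimal discriminator outputs 0.5 everywhere and the value equals \(-\log 4\). In practice, generators are often trained with the non-saturating loss \(-\log D(G(z))\) instead of minimizing \(\log(1 - D(G(z)))\) directly.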

While GANs are well-suited for continuous domains such as image generation, their performance degrades when applied to tabular data due to challenges in modeling heterogeneous feature types and preserving joint distributions across categorical and numerical variables. Conditional Tabular GANs (CTGANs) address these issues through class-conditioning and mode-specific normalization, enabling more accurate generation of mixed-type tabular data. By modeling conditional distributions rather than marginal densities, CTGANs improve the quality and coherence of synthetic records. However, they remain limited in handling severe class imbalance, often underrepresenting minority categories in the generated output.

Deep-CTGAN extends CTGAN by incorporating class-specific conditioning into the generator’s input, allowing it to focus on sparsely represented subpopulations. This targeted generation improves coverage of minority classes and produces more balanced synthetic datasets. While the core adversarial loss remains similar, the architectural modifications enhance minority sample representation and downstream classification performance—particularly in imbalanced clinical datasets.
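
Class conditioning can be illustrated by how generator inputs are assembled: a noise vector is concatenated with a one-hot vector for the requested class. The sketch below is a generic illustration, not Deep-CTGAN's exact conditioning scheme: it assumes a single categorical class label and, by default, draws classes uniformly so that minority classes are requested as often as the majority class. `conditional_generator_input` and `class_probs` are hypothetical names.

```python
import numpy as np


def conditional_generator_input(n: int, noise_dim: int, n_classes: int,
                                class_probs=None, seed: int = 0):
    """Build generator inputs [z, one_hot(c)] with classes c drawn from class_probs."""
    rng = np.random.default_rng(seed)
    if class_probs is None:
        # Uniform over classes, so sparse classes are requested as often as common ones.
        class_probs = np.full(n_classes, 1.0 / n_classes)
    labels = rng.choice(n_classes, size=n, p=class_probs)
    z = rng.standard_normal((n, noise_dim))  # z ~ N(0, I)
    cond = np.eye(n_classes)[labels]         # one-hot condition vectors
    return np.concatenate([z, cond], axis=1), labels
```

Passing a `class_probs` that puts all mass on a minority class would request only minority samples, which is one way a conditioned generator can be steered towards underrepresented subpopulations.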

ResNet

Residual Networks (ResNets) enhance the capacity of deep neural architectures by introducing skip connections that allow the input of a layer to bypass intermediate transformations and be added directly to the output. This facilitates stable gradient flow during training and mitigates the performance degradation commonly observed in deeper networks. In the context of Deep-CTGAN, ResNet blocks are embedded within the generator to improve learning of both low- and high-complexity patterns across mixed-type tabular features. This structure enables the network to retain critical information while modeling the intricate feature dependencies present in healthcare datasets.

Mathematically, a residual block in ResNet can be described as

\[ y = F\left(x, \{W_{i}\}\right) + x \]

where x is the input to the residual block, \(\:F\left(x,\left\{{W}_{i}\right\}\right)\) represents the transformations (such as convolution, normalization, and activation) applied to the input, y is the output, which combines the original input x with the transformed input \(\:F\left(x,\left\{{W}_{i}\right\}\right)\).
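
A residual block \(y = F(x, \{W_{i}\}) + x\) can be sketched for tabular rows, where the transformation F is assumed to be two fully connected layers with a ReLU in between rather than convolutions. The function name and weight shapes are illustrative assumptions, not the configuration used in the study.

```python
import numpy as np


def residual_block(x: np.ndarray, W1: np.ndarray, b1: np.ndarray,
                   W2: np.ndarray, b2: np.ndarray) -> np.ndarray:
    """y = F(x, {W_i}) + x, with F = Linear -> ReLU -> Linear.

    x: (n, d) batch of rows; W1: (d, h); W2: (h, d), so F(x) has the same shape as x.
    """
    h = np.maximum(0.0, x @ W1 + b1)  # first transformation followed by ReLU
    f = h @ W2 + b2                   # second transformation, giving F(x, {W_i})
    return f + x                      # skip connection adds the input back
```

Setting all weights to zero makes F vanish, so the block reduces to the identity mapping. The original ResNet also applies an activation after the addition; it is omitted here to match the equation as written.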

The integration of ResNet into Deep-CTGAN improves generalization and reduces overfitting, particularly when generating synthetic samples for underrepresented classes. When used in conjunction with resampling techniques such as SMOTE and ADASYN, this design contributes to a robust hybrid framework capable of generating high-fidelity, class-aware synthetic tabular data.

Classification—TabNet

TabNet is an interpretable deep learning model designed specifically for tabular datasets. It performs sequential attention-based feature selection using learnable masks that adapt to the data distribution. This mechanism allows the model to focus on the most relevant features at each decision step, which is particularly advantageous in clinical contexts where feature-level explanations are essential.

The core architecture of TabNet comprises two key components: a feature transformer and an attentive transformer. The feature transformer maps the input features into meaningful representations for subsequent steps; it uses dense layers that project the inputs into high-dimensional spaces, allowing the model to learn complex interactions. The attentive transformer, in turn, learns a sequential decision-making process over the features, using an attention mechanism to select and reweight specific features at each decision step. Unlike traditional neural networks that process all inputs in parallel, TabNet operates over multiple decision steps and can focus on a different subset of features at each one. At each step, the attentive transformer produces a feature mask that selects the most relevant features to be processed by the feature transformer. This mask is computed with a soft selection mechanism based on the entmax activation function, which makes feature selection sparse. Sparsity helps limit overfitting and improves model interpretability. Figure 2 presents the TabNet architecture.

Mathematically, given an input feature vector X, the mask at step t, \(M_{t}\), is calculated as:

\[ M_{t} = \mathrm{entmax}\left(A_{t} \cdot X\right) \]

where \(\:{A}_{t}\:\)is a learned matrix that defines the attention weights of each feature. The function entmax is an activation function similar to softmax but promotes sparsity by pushing less important weights closer to zero.
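
The feature mask, taken here as \(M_{t} = \mathrm{entmax}(A_{t} X)\), can be sketched with sparsemax, which is the \(\alpha = 2\) member of the entmax family and has a closed-form solution. Sparsemax is swapped in for illustration because the 1.5-entmax variant requires an iterative solver; the original TabNet paper also used sparsemax. TabNet's prior-scale term, which controls how often the same features are reused across steps, is omitted, and `sparsemax` and `feature_mask` are illustrative names.

```python
import numpy as np


def sparsemax(z: np.ndarray) -> np.ndarray:
    """Sparsemax (entmax with alpha = 2) of a 1-D score vector: a sparse probability vector."""
    z_sorted = np.sort(z)[::-1]               # scores in descending order
    cumsum = np.cumsum(z_sorted)
    ks = np.arange(1, len(z) + 1)
    support = 1 + ks * z_sorted > cumsum      # True for the entries that stay non-zero
    k_z = ks[support][-1]                     # size of the support
    tau = (cumsum[k_z - 1] - 1) / k_z         # threshold subtracted from every score
    return np.maximum(z - tau, 0.0)


def feature_mask(A_t: np.ndarray, x: np.ndarray) -> np.ndarray:
    """M_t = sparsemax(A_t x): one non-negative weight per input feature."""
    return sparsemax(A_t @ x)
```

Unlike softmax, sparsemax can assign exactly zero weight to low-scoring features, which is what makes the per-step masks directly readable as feature selections.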

The feature reuse mechanism allows the model to reuse certain features across different decision steps, which enhances learning efficiency. The transformed features pass through a series of fully connected layers in the feature transformer, producing intermediate outputs, which are then aggregated to generate the final decision. Each decision step contributes to a portion of the final prediction, ensuring that the relevant features across the various steps are effectively utilized.

The aggregated output at each step t, \(Z_{t}\), is formulated as

\[ Z_{t} = \sigma\left(W_{t} H_{t}\right) \]

where \(\:{H}_{t}\:\)is the hidden state produced by the feature transformer, \(\:{W}_{t}\) is a learned weight matrix, and σ is an activation function. The cumulative output of all the decision steps is then combined to produce the final output of the model.
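
The step-wise aggregation \(Z_{t} = \sigma(W_{t} H_{t})\), summed over decision steps, can be sketched as follows. The choice of ReLU for σ is an assumption (it matches the original TabNet formulation), and `aggregate_decision_steps` is a hypothetical helper.

```python
import numpy as np


def aggregate_decision_steps(hidden_states, weight_matrices):
    """Sum of per-step contributions Z_t = ReLU(W_t H_t) over all decision steps."""
    total = None
    for H_t, W_t in zip(hidden_states, weight_matrices):
        Z_t = np.maximum(0.0, W_t @ H_t)  # sigma assumed to be ReLU
        total = Z_t if total is None else total + Z_t
    return total
```

Because each step contributes additively, the magnitude of a step's contribution can be inspected on its own, which is part of what makes the per-step masks interpretable.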

TabNet's loss function includes a regularization term that promotes sparsity in the feature masks, which helps control overfitting and ensures that predictions rely mainly on the most relevant features. The regularization weight, known as the sparsity coefficient \(\lambda_{sparse}\), penalizes the use of irrelevant features and encourages selective feature use:

\[ L = L_{task} + \lambda_{sparse} \sum_{t} \left\| M_{t} \right\|_{1} \]

where \(\:{L}_{task}\:\)is the task-specific loss (e.g., classification loss), and \(\:{‖{M}_{t}‖}_{1}\) is the L1 norm of the feature mask at step t.
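
The sparsity-regularized objective \(L = L_{task} + \lambda_{sparse} \sum_{t} \|M_{t}\|_{1}\) can be sketched as follows. For comparison, the sketch also includes the entropy-based sparsity term used in the original TabNet paper; that term is a swapped-in alternative, not the formulation stated here. The masks are assumed to be arrays of shape (batch, features), one per decision step, and both function names are hypothetical.

```python
import numpy as np


def tabnet_loss_l1(task_loss: float, masks, lambda_sparse: float) -> float:
    """L = L_task + lambda_sparse * sum_t ||M_t||_1, with masks as (batch, features) arrays."""
    return float(task_loss + lambda_sparse * sum(np.abs(M).sum() for M in masks))


def tabnet_sparsity_entropy(masks, eps: float = 1e-15) -> float:
    """Entropy-style sparsity term from the original TabNet paper, averaged over steps and batch."""
    n_steps = len(masks)
    batch = masks[0].shape[0]
    total = sum(np.sum(-M * np.log(M + eps)) for M in masks)
    return float(total / (n_steps * batch))
```

If each mask row is produced by sparsemax or entmax, its entries are non-negative and sum to one, so its L1 norm is fixed by construction; an entropy penalty, by contrast, is smallest when each row concentrates its weight on a few features.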

The training process of TabNet involves updating both the attentive and feature transformer parameters using gradient-based optimization techniques. As the model proceeds through each decision step, the attention mechanism dynamically modifies the importance of features based on the evolving state of the network. This sequential updating of feature importance helps the model learn complex patterns in the data while maintaining interpretability. Furthermore, TabNet supports various optimizers, such as Adam, with learning-rate schedules to improve convergence.

Algorithm hybrid_synthetic_framework

Input: Raw healthcare dataset D.

Output: Trained classifier M, evaluation metrics (AUC, F1, accuracy, TSTR).

-

1.

Preprocessing:

-

Handle missing values and categorical encoding.

-

Normalize/scale numerical features.

-

2.

Class Imbalance Handling:

if Dataset ∈ {‘COVID’, ‘Kidney’}:

Apply ADASYN on minority class → D_syn.

else if Dataset == ‘Dengue’:

Apply SMOTE on minority class → D_syn.

-

3.

Synthetic Generation:

-

Train Deep-CTGAN with ResNet blocks on D_syn.

-

Generate synthetic samples G.

-

4.

Augmented Dataset Creation:

-

D_aug ← D_real ∪ G (augmentation) or D_aug ← G (replacement).

-

5.

Model Training:

-

Train classifiers (TabNet, RF, XGBoost, KNN) on D_aug.

-

6.

Evaluation:

-

Test on real hold-out set.

-

Compute AUC, F1, accuracy, TSTR.

-

Interpret using SHAP for feature importance.

-

7.

Return M and evaluation metrics.
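
Steps 2 to 4 of the algorithm can be expressed as a runnable Python skeleton. The resamplers and the Deep-CTGAN generator are passed in as callables because their concrete implementations are not specified here; `build_training_set`, `resamplers`, `generate`, and `mode` are hypothetical names. The dataset check uses a set-membership test, since in Python `dataset == 'COVID' or 'Kidney'` is always truthy.

```python
from typing import Callable, Dict, Tuple

import numpy as np

# Hypothetical signature shared by resamplers and the generator: (X, y) -> (X', y').
Sampler = Callable[[np.ndarray, np.ndarray], Tuple[np.ndarray, np.ndarray]]


def build_training_set(dataset: str, X: np.ndarray, y: np.ndarray,
                       resamplers: Dict[str, Sampler], generate: Sampler,
                       mode: str = "augment") -> Tuple[np.ndarray, np.ndarray]:
    """Steps 2-4: resample, generate synthetic rows, then augment or replace."""
    # Step 2: pick the resampler per dataset with a set-membership test.
    if dataset in {"COVID", "Kidney"}:
        X_syn, y_syn = resamplers["ADASYN"](X, y)
    elif dataset == "Dengue":
        X_syn, y_syn = resamplers["SMOTE"](X, y)
    else:
        raise ValueError(f"unknown dataset: {dataset}")

    # Step 3: the generator (e.g. Deep-CTGAN + ResNet) is fit on the resampled data.
    X_gen, y_gen = generate(X_syn, y_syn)

    # Step 4: augmentation keeps the real rows; replacement trains on synthetic rows only.
    if mode == "augment":
        return np.vstack([X, X_gen]), np.concatenate([y, y_gen])
    if mode == "replace":
        return X_gen, y_gen
    raise ValueError(f"unknown mode: {mode}")
```

The real hold-out test set used in step 6 is assumed to be split off before this function is called, so that no test rows reach the resamplers or the generator.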

Experimental setup

All experiments were conducted on a workstation equipped with an NVIDIA RTX 3090 GPU (24 GB VRAM), 64 GB system RAM, and an AMD Ryzen 9 5950X processor. The framework was implemented in Python 3.10 using PyTorch 2.0 and SHAP 0.41 libraries. On average, the proposed hybrid configuration (Deep-CTGAN + ResNet + TabNet) required ~ 42 min for training across 50 epochs, while classical resampling setups (e.g., SMOTE + TabNet) completed training in under 10 min.

Results and discussion

This section evaluates the effectiveness of the proposed hybrid model in utilizing synthetic data generated through various augmentation strategies to enhance the performance of classification algorithms on COVID-19, kidney disease, and dengue datasets. The objective is to investigate whether synthetic data can supplement or replace real data during training and assess the resulting impact on model performance. Key evaluation metrics include Area Under the ROC Curve (AUC) and F1-score. Multiple synthetic data generation techniques—such as SMOTE, ADASYN, CTGAN, and Deep-CTGAN integrated with ResNet—were applied to assess their influence on classifiers including TabNet, XGBoost, Random Forest (RF), and K-Nearest Neighbors (KNN). The analysis involved both data augmentation with synthetic samples and complete real-data replacement to examine their efficacy in handling class imbalance and improving generalization.

To further assess the utility of synthetic data, the “Train on Synthetic, Test on Real” (TSTR) protocol was employed to evaluate generalization capability. This approach measures how effectively models trained on synthetic data perform on real-world samples. In addition to performance metrics, similarity assessments between real and synthetic distributions were conducted to validate structural alignment. Confusion matrices, accuracy progression curves, and F1-score trends were analyzed to understand class-wise performance variations and the influence of synthetic data proportions on training effectiveness.
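
The TSTR protocol can be sketched as: fit a classifier only on synthetic rows, then score it on real held-out rows. A nearest-centroid classifier stands in for TabNet, Random Forest, XGBoost, or KNN so that the example stays dependency-free; `tstr` and `f1_binary` are hypothetical helpers, and the F1-score assumes binary labels with 1 as the positive class.

```python
import numpy as np


def f1_binary(y_true: np.ndarray, y_pred: np.ndarray, positive=1) -> float:
    """F1-score for the positive class: 2TP / (2TP + FP + FN)."""
    tp = np.sum((y_pred == positive) & (y_true == positive))
    fp = np.sum((y_pred == positive) & (y_true != positive))
    fn = np.sum((y_pred != positive) & (y_true == positive))
    denom = 2 * tp + fp + fn
    return float(2 * tp / denom) if denom else 0.0


def tstr(X_syn, y_syn, X_real_test, y_real_test) -> dict:
    """Train on synthetic data, test on real data, using a nearest-centroid stand-in classifier."""
    classes = np.unique(y_syn)
    # "Training": one centroid per class, computed from synthetic rows only.
    centroids = np.stack([X_syn[y_syn == c].mean(axis=0) for c in classes])
    # "Testing": assign each real row to the class of its nearest centroid.
    dists = ((X_real_test[:, None, :] - centroids[None, :, :]) ** 2).sum(axis=-1)
    y_pred = classes[np.argmin(dists, axis=1)]
    accuracy = float(np.mean(y_pred == y_real_test))
    return {"accuracy": accuracy, "f1": f1_binary(y_real_test, y_pred)}
```

Comparing this score with the same classifier trained on real data (train on real, test on real) indicates how much utility is lost when synthetic data replace the real data.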

The hyperparameter configuration in Table 1 was chosen to optimize Deep-CTGAN and its built-in ResNet module for efficient synthetic data generation across diverse healthcare datasets. Deep-CTGAN used a learning rate of 0.0002 for stable adversarial training and a batch size of 128, a practical compromise between computational efficiency and gradient smoothness. The embedding dimension, set between 128 and 256 depending on dataset complexity, allowed a flexible representation of feature interactions. Both the generator and the discriminator used LeakyReLU activations to mitigate vanishing gradients and speed up convergence. The Adam optimizer with betas of (0.5, 0.999) provided efficient weight updates and smoother learning dynamics. For the ResNet backbone, the lighter ResNet-18 architecture was used, trading some expressiveness for shorter training time. Dropout (0.3) and weight decay (1e-5) provided regularization, mitigating the risk of overfitting on small healthcare samples. ReLU activations in the residual blocks allowed non-linear transformations while preserving feature integrity, and batch normalization improved training stability. The learning rate for ResNet was kept lower (0.0001) to support the finer feature extraction involved in enriching Deep-CTGAN's output.
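
The Deep-CTGAN and ResNet settings described above can be collected in a small configuration object. The class and field names are hypothetical; the values are those stated in the text, the embedding dimension is only range-checked because the text gives it as 128 to 256 depending on the dataset, and the 50-epoch value comes from the experimental setup.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class DeepCTGANConfig:
    learning_rate: float = 2e-4
    batch_size: int = 128
    embedding_dim: int = 128                 # 128 to 256 depending on dataset complexity
    activation: str = "LeakyReLU"            # generator and discriminator
    optimizer: str = "Adam"
    adam_betas: Tuple[float, float] = (0.5, 0.999)
    epochs: int = 50                         # training length from the experimental setup

    def __post_init__(self):
        # Reject embedding sizes outside the range reported in the text.
        if not 128 <= self.embedding_dim <= 256:
            raise ValueError("embedding_dim is expected to lie in [128, 256]")


@dataclass(frozen=True)
class ResNetConfig:
    backbone: str = "ResNet-18"
    learning_rate: float = 1e-4              # lower than the Deep-CTGAN learning rate
    dropout: float = 0.3
    weight_decay: float = 1e-5
    activation: str = "ReLU"                 # inside the residual blocks
    batch_norm: bool = True
```

Freezing the dataclasses keeps a run's settings immutable once created, which makes it easier to log exactly which configuration produced a given synthetic dataset.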

Dataset

Dengue dataset

This dataset contains 400 records of patients with dengue fever, covering demographic, clinical, and laboratory characteristics. Key columns such as Year, Month, Age, and Sex, along with symptoms such as Fever, Rash, and Myalgia, are complete. Other columns, however, have substantial missing data. For instance, occupation, Marital Status, and residence are missing in 344, 335, and 151 records, respectively. Essential clinical fields also show gaps, including the Date of Symptom Onset (DOS) and diagnostic details such as comorbidities (372 records missing), NS1 Antigen (223 records missing), and PT/INR (261 records missing). Laboratory fields such as Esr (375 missing records), MCH (178 missing records), and TLC (10 missing records) are also incomplete. The columns describing severe dengue conditions are almost entirely empty, with 391 missing entries for Severe Dengue Fever and 395 for Dengue Hemorrhagic Fever.

Covid dataset

The dataset includes 600 rows of COVID-19 patient data covering demographic, clinical, and laboratory characteristics. Columns such as Patient_ID, Status, Age, Hematocrit, Hemoglobin, Platelets, and several other blood measurements, including Red Blood Cells, Lymphocytes, and MCHC, are fully populated. Some fields, however, have substantial missing data: Inf_B_rapid and Inf_A_rapid are each missing in 403 entries, about two-thirds of the records. Other important variables, such as C_reactive_protein (99 missing entries), Creatinine (179), Potassium (231), Sodium (234), and Urea (206), also contain gaps.

Kidney dataset

The dataset comprises 400 rows of patient information related to kidney disease, covering demographic, clinical, and laboratory characteristics. The ID and classification columns are complete, while the other columns have varying levels of missing entries. The rbc and rc columns have the largest gaps, with 152 and 131 missing values, respectively. Columns such as wc (106 missing entries), pot (88), sod (87), and pcv (71) also have considerable missing data. Other columns, such as sg, al, and su, have between 46 and 49 missing values. Smaller gaps are observed in bgr (44 missing entries), bu (19), and bp (12). The columns htn, dm, cad, appet, pe, and ane have only one or two missing entries each. Despite these gaps, the dataset offers a rich set of features for analysis, although the missing values must be handled carefully to obtain reliable results.
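Missing-value counts like those reported for the three datasets can be produced with a short pandas audit. The sketch below assumes missing entries are already parsed as NaN. If a file encodes them with a placeholder token, `pd.read_csv(..., na_values=[...])` can map it. The toy frame only borrows kidney-style column names and is not the actual data. `missing_value_report` is a hypothetical helper.

```python
import pandas as pd


def missing_value_report(df: pd.DataFrame) -> pd.DataFrame:
    """Count and percentage of missing entries per column, most incomplete columns first."""
    counts = df.isna().sum()
    report = pd.DataFrame({"missing": counts, "percent": (100 * counts / len(df)).round(1)})
    return report[report["missing"] > 0].sort_values("missing", ascending=False)


# Toy frame with kidney-style column names (illustrative values only).
toy = pd.DataFrame({
    "id": [0, 1, 2, 3],
    "rbc": [None, "normal", None, "abnormal"],
    "bp": [80.0, None, 70.0, 80.0],
    "classification": ["ckd", "ckd", "notckd", "ckd"],
})
report = missing_value_report(toy)
print(report)
```

Complete columns such as `id` and `classification` drop out of the report, mirroring how the text lists them separately as fully populated.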

Dataset augmentation and class balancing overview

Table 2 compares the number of samples produced by different data augmentation techniques for the dengue [31], COVID-19 [30], and kidney [29] datasets. SMOTE and ADASYN modestly increase the dataset size by the same factor (1.5×) in each case: the dengue and kidney datasets grow from 400 to 600 samples, and the COVID-19 dataset grows from 600 to 900 samples. CTGAN produces a larger augmentation, increasing the sample count to 800 for the dengue and kidney datasets and to 1200 for the COVID-19 dataset. DCTGAN combined with ResNet yields the largest increase, with 1000 samples for the dengue and kidney datasets and 1400 samples for the COVID-19 dataset. This indicates that advanced generative approaches, such as DCTGAN combined with ResNet, provide a more substantial increase in data and potentially richer synthetic datasets for model training.
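SMOTE, one of the methods compared in Table 2, creates each synthetic minority sample by interpolating between a minority sample and one of its k nearest minority-class neighbours. The NumPy sketch below illustrates only that interpolation step. The choice of k = 5, the toy data, and the helper name `smote_sample` are assumptions. In practice a maintained implementation such as imbalanced-learn's `SMOTE` would be used. Its `ADASYN` additionally generates more samples near hard-to-learn minority points.

```python
import numpy as np


def smote_sample(X_min: np.ndarray, n_new: int, k: int = 5, seed: int = 0) -> np.ndarray:
    """Generate n_new synthetic minority rows by SMOTE-style linear interpolation."""
    n = len(X_min)
    if n < 2:
        raise ValueError("need at least two minority samples")
    k = min(k, n - 1)
    rng = np.random.default_rng(seed)
    # Pairwise Euclidean distances within the minority class only.
    d = np.linalg.norm(X_min[:, None, :] - X_min[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)                     # a point is not its own neighbour
    neighbours = np.argsort(d, axis=1)[:, :k]       # k nearest minority neighbours per row
    base = rng.integers(0, n, size=n_new)           # random seed sample for each new row
    nb = neighbours[base, rng.integers(0, k, size=n_new)]  # one random neighbour each
    gap = rng.random((n_new, 1))                    # position along the segment, in [0, 1)
    return X_min[base] + gap * (X_min[nb] - X_min[base])


X_min = np.random.default_rng(42).normal(size=(30, 4))  # toy minority class
X_new = smote_sample(X_min, n_new=60)
print(X_new.shape)
```

Every synthetic row lies on a segment between two real minority rows. SMOTE therefore fills in the minority region but cannot extrapolate beyond it, which is one motivation for the generative methods in Table 2.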

For the kidney dataset, the largest F1-score improvement (+0.20) was achieved with the ADASYN + DCTGAN + ResNet combination using TabNet and 100% synthetic data, whereas the largest decline (-0.25) occurred with NC + Sep. + DCTGAN + ResNet and KNN using only synthetic data. For the COVID-19 dataset, the largest improvement (+0.15) was obtained with ADASYN + DCTGAN + ResNet using TabNet and 50% synthetic data, whereas the largest drop (-0.30) was recorded with SMOTE + DCTGAN + ResNet using KNN and fully synthetic data. For the dengue dataset, the largest improvement (+0.18) was observed with SMOTE + DCTGAN + ResNet and TabNet with 150% synthetic data, whereas the largest decline (-0.24) was obtained with NC + DCTGAN + ResNet using XGB and 150% synthetic data. These results show that although DCTGAN + ResNet with TabNet generally yields the largest F1-score gains, performance varies considerably with the dataset, augmentation method, and model. Table 3 summarizes the effect of the various synthetic data generation and classification techniques, listing the largest F1-score improvements and declines across the three datasets (COVID-19, kidney, and dengue).

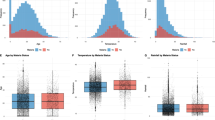

Fig. 3a,b show the impact of varying training data sizes on the F1-score for the kidney and COVID-19 datasets, respectively, using TabNet, XGB, RF, and KNN. In the kidney dataset (top graph), as the sample size increases from 200 to 1000, all models show an upward trend in F1-score, with TabNet consistently outperforming the others and peaking at approximately 0.85. RF and XGB show more moderate improvements, whereas KNN lags behind, especially at smaller sample sizes. In the COVID-19 dataset (bottom graph), by contrast, increasing the sample size led to lower F1-scores for all models. TabNet initially leads but drops to approximately 0.75 by the time the sample size reaches 1400, and KNN shows the steepest decline as the dataset grows. These results suggest that TabNet is highly effective on the kidney data but struggles with the larger COVID-19 training sets, while KNN performs weakly and unstably on both datasets.
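Curves like those in Fig. 3 can be produced by training each classifier on nested subsets of increasing size and scoring the F1-score on a fixed real test set. The sketch below makes several assumptions. It uses scikit-learn's Random Forest and KNN as stand-ins, since TabNet and XGB need separate packages. A second draw from the same toy distribution replaces the generator output. The sizes mirror the kidney axis (200 to 1000). `f1_by_training_size` is a hypothetical helper.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import f1_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier


def f1_by_training_size(X_pool, y_pool, X_test, y_test, sizes, models, seed=0):
    """F1 on a fixed real test set for classifiers trained on growing subsets of a synthetic pool."""
    order = np.random.default_rng(seed).permutation(len(X_pool))
    curves = {name: [] for name in models}
    for m in sizes:
        idx = order[:m]  # nested subsets: each larger size contains the smaller ones
        for name, make_model in models.items():
            clf = make_model().fit(X_pool[idx], y_pool[idx])
            curves[name].append(f1_score(y_test, clf.predict(X_test)))
    return curves


# Stand-in data: one draw split into a "synthetic" pool (1000 rows) and a "real" test set (400 rows).
X, y = make_classification(n_samples=1400, n_features=12, weights=[0.7, 0.3], random_state=1)
X_pool, X_test, y_pool, y_test = train_test_split(X, y, test_size=400, stratify=y, random_state=1)

models = {
    "RF": lambda: RandomForestClassifier(n_estimators=100, random_state=0),
    "KNN": lambda: KNeighborsClassifier(n_neighbors=5),
}
sizes = [200, 400, 600, 800, 1000]
curves = f1_by_training_size(X_pool, y_pool, X_test, y_test, sizes, models)
print(curves)
```

Nested subsets keep the curves comparable across sizes. Otherwise a drop at a larger size could simply reflect a different random draw rather than the added samples.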

Analysis of synthetic data augmentation and replacement

The quality of synthetic data is assessed through two approaches: augmentation, in which the original training data are expanded with synthetic samples, and replacement, in which synthetic data fully replace the original training data. Replacement evaluates how well the synthetic data mirror the real data, whereas augmentation tests whether adding synthetic data improves classification performance. Model performance on the test sets varies with the synthetic data generation technique used. In the augmentation task, DCTGAN-ResNet yielded the highest AUC values, with TabNet reaching 98.2 on the dengue dataset (Table 4). In the replacement task, DCTGAN-ResNet again stood out, with TabNet achieving an AUC of 95.8 on the dengue dataset (Table 5). Overall, DCTGAN-ResNet consistently produced high-quality synthetic data, boosting model performance in both the augmentation and replacement scenarios. Tables 4 and 5 summarize the classification performance in the augmentation and replacement tasks, respectively, across the three datasets (COVID-19, kidney, and dengue). They cover the different combinations of data balancing methods, augmentation methods, machine learning models, and percentages of synthetic data.
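The two evaluation modes differ only in how the training set is built; both are scored on real test data. The sketch below assumes scikit-learn and uses a Random Forest stand-in classifier. Jittered real training rows stand in for generator output. The helper names are hypothetical. The AUC is returned on a 0 to 1 scale; the values in Tables 4 and 5 appear to be reported as percentages.

```python
import numpy as np
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.metrics import roc_auc_score
from sklearn.model_selection import train_test_split


def build_training_set(X_real, y_real, X_syn, y_syn, mode):
    """'augmentation' appends synthetic rows to the real ones; 'replacement' uses synthetic rows only."""
    if mode == "augmentation":
        return np.vstack([X_real, X_syn]), np.concatenate([y_real, y_syn])
    if mode == "replacement":
        return X_syn, y_syn
    raise ValueError(f"unknown mode: {mode!r}")


def auc_on_real_test(X_fit, y_fit, X_test, y_test, seed=0):
    clf = RandomForestClassifier(n_estimators=200, random_state=seed).fit(X_fit, y_fit)
    return roc_auc_score(y_test, clf.predict_proba(X_test)[:, 1])  # column 1 = positive class


X, y = make_classification(n_samples=600, n_features=10, weights=[0.8, 0.2], random_state=2)
X_tr, X_te, y_tr, y_te = train_test_split(X, y, test_size=0.3, stratify=y, random_state=2)
rng = np.random.default_rng(2)
X_syn = X_tr + rng.normal(scale=0.1, size=X_tr.shape)  # stand-in for generator output
y_syn = y_tr.copy()

auc = {mode: auc_on_real_test(*build_training_set(X_tr, y_tr, X_syn, y_syn, mode), X_te, y_te)
       for mode in ("augmentation", "replacement")}
print({mode: round(100 * v, 1) for mode, v in auc.items()})  # percentage scale
```

The replacement mode is the same setting as TSTR. The augmentation mode asks a different question: whether synthetic rows add information on top of the real ones.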

Result analysis of Covid dataset

The evaluation of the COVID-19 dataset using synthetic data provided several important insights (Fig. 4a). The similarity index between the real and synthetic data was 84.25%, indicating strong alignment in the feature distributions, as depicted in the first graph. This suggests that the synthetic data effectively replicated the key patterns and characteristics of the original dataset. Fig. 4b shows the feature importance for the COVID-19 dataset.
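The text does not define how the similarity index is computed. One common choice, used for example by the SDMetrics KSComplement metric, scores each numeric column as 1 minus the two-sample Kolmogorov-Smirnov statistic and averages the scores across columns. The sketch below implements that choice under the assumption that all columns are numeric. It is not necessarily the index used in this study, and `ks_similarity` is a hypothetical name.

```python
import numpy as np
from scipy.stats import ks_2samp


def ks_similarity(real: np.ndarray, synthetic: np.ndarray) -> float:
    """Mean over columns of (1 - KS statistic); 1.0 means identical empirical marginals."""
    if real.shape[1] != synthetic.shape[1]:
        raise ValueError("real and synthetic data must have the same columns")
    per_column = [1.0 - ks_2samp(real[:, j], synthetic[:, j]).statistic for j in range(real.shape[1])]
    return float(np.mean(per_column))


rng = np.random.default_rng(0)
real = rng.normal(size=(500, 4))
close = real + rng.normal(scale=0.05, size=real.shape)  # stand-in for good synthetic data
shifted = real + 3.0                                    # stand-in for poor synthetic data
print(f"{100 * ks_similarity(real, close):.2f}% vs {100 * ks_similarity(real, shifted):.2f}%")
```

This score compares marginal distributions only. Two tables can score highly while having different correlations between columns, and categorical columns would need a different per-column measure.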

The confusion matrix in Fig. 4c reflects the model’s strong performance on both the negative and positive classes. The model correctly identified 99.85% of negative cases and 99.35% of positive cases, with very low error rates (0.15% false positives and 0.65% false negatives), showing that it separates the two classes with minimal misclassification.
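The reported percentages pair up by true class: 99.85% + 0.15% and 99.35% + 0.65% each sum to 100%. This is consistent with a confusion matrix normalized over the true labels, whose diagonal gives specificity and sensitivity. A minimal scikit-learn sketch, with toy labels and a hypothetical helper `class_rates`:

```python
from sklearn.metrics import confusion_matrix


def class_rates(y_true, y_pred):
    """Per-class rates from a confusion matrix normalized over each TRUE class (rows)."""
    cm = confusion_matrix(y_true, y_pred, labels=[0, 1], normalize="true")
    (tnr, fpr), (fnr, tpr) = cm  # row 0: true negatives, row 1: true positives
    return {
        "specificity": float(tnr),
        "false_positive_rate": float(fpr),
        "false_negative_rate": float(fnr),
        "sensitivity": float(tpr),
    }


# Toy labels: 10 negatives (one misclassified) and 10 positives (two misclassified).
y_true = [0] * 10 + [1] * 10
y_pred = [0] * 9 + [1] + [0] * 2 + [1] * 8
print(class_rates(y_true, y_pred))
```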

Fig. 4d shows the accuracy progression on the COVID-19 dataset over 25 epochs, with the model trained on synthetic data and tested on real data. Both curves, for synthetic training and real testing, rise rapidly during the initial epochs and then stabilize. By the 5th epoch, accuracy exceeded 97%, and it continued to increase gradually, reaching 99.2% by the final epoch. The closeness of the two curves indicates a minimal performance gap between synthetic training and real-data testing, showing that the synthetic data closely approximate the real data for this task.

Analysis of kidney dataset

Analysis of the kidney dataset using synthetic data revealed several critical observations (Fig. 5a). The similarity index between the original and synthetic data was 87.35%, indicating a high degree of alignment in the feature distributions, as shown in the first graph. This close match suggests that the synthetic data effectively captured the essential patterns of the real dataset. Fig. 5b shows the feature importance for the kidney dataset.

The confusion matrix demonstrates the model’s strong classification accuracy for both CKD (Chronic Kidney Disease) and non-CKD classes as shown in Fig. 5c. It correctly identified 99.25% of non-CKD cases and 99.43% of CKD cases with very low misclassification rates (0.75% false positives and 0.57% false negatives). This indicates that the model is highly reliable in distinguishing between these two categories.

Fig. 5d depicts the accuracy progression on the kidney dataset over 25 epochs, with the model trained on synthetic data and tested on real data. Both curves exhibited rapid improvements within the first five epochs, with accuracy rising from below 90% to nearly 99%. After this steep rise, accuracy stabilized around 99.4%, indicating excellent model performance. The minimal gap between the two curves highlights the reliability of the synthetic data in reproducing real-world conditions for training.

Analysis of dengue dataset

Analysis of the dengue dataset using synthetic data provided further insights (Fig. 6a). The similarity index between the synthetic and real data was 86.73%, indicating that the synthetic data captured a large portion of the original dataset’s structure, as shown in the first graph, while still deviating slightly from the real distribution. Fig. 6b shows the feature importance for the dengue dataset.

The confusion matrix highlights the robustness of the model, with 99.45% accuracy in identifying negative cases and 99.25% accuracy in identifying positive cases. The error rates were minimal, with only 0.55% false positives and 0.75% false negatives, as shown in Fig. 6c. These results suggest that the model maintains a high accuracy across both classes, with only slight misclassifications, which are expected in real-world datasets. The accuracy progression graph reveals that the model quickly attains a high accuracy, stabilizing at 99.5% after only a few training epochs when synthetic data are used for training and real data are used for testing, as shown in Fig. 6d. This rapid convergence underlines the reliability of synthetic data for training purposes and its effectiveness in delivering a near-perfect classification performance.

Explainable AI: COVID dataset

The summary plot in Fig. 7a ranks features by their average impact on the model’s output, identifying their importance in distinguishing between classes 0 and 1. Leukocytes emerged as the most influential feature, contributing substantially to both classes, with higher SHAP values indicating greater predictive power. Other features, such as Monocytes, Eosinophils, and Platelets, also notably affect the model’s decisions. The color coding (blue for Class 1 and red for Class 0) shows which class each feature predominantly influences, aiding interpretation of the model.
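Bar-style SHAP summary plots such as Fig. 7a conventionally rank features by the mean absolute SHAP value across samples. For class-wise bars, this is computed separately for each class. A minimal NumPy sketch, where the SHAP matrix, the feature names, and the helper `rank_by_mean_abs_shap` are all hypothetical:

```python
import numpy as np


def rank_by_mean_abs_shap(shap_values: np.ndarray, feature_names):
    """Global importance = mean |SHAP| per feature (columns), sorted from most to least important."""
    importance = np.abs(shap_values).mean(axis=0)
    order = np.argsort(importance)[::-1]
    return [(feature_names[i], float(importance[i])) for i in order]


# Hypothetical SHAP matrix: 2 samples (rows) x 3 features (columns).
shap_vals = np.array([[0.5, -0.1, 0.0],
                      [-0.3, 0.2, 0.05]])
ranking = rank_by_mean_abs_shap(shap_vals, ["feat_a", "feat_b", "feat_c"])
print(ranking)
```

Taking the absolute value first matters: a feature that pushes some predictions up and others down would otherwise average toward zero and look unimportant.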

The SHAP waterfall plot in Fig. 7b shows how individual feature values contribute to a single model prediction. The chart starts at the base value E[f(x)] = 0.146, and each feature shifts the prediction step by step toward the final output f(x) = 0.17. Hematocrit and RDW had the largest impact, with positive contributions (red) pushing the prediction upward and negative contributions (blue) lowering it. Other features, such as Platelets, Age, and Leukocytes, also influence the prediction, although their effects are moderate. By decomposing the prediction into additive feature contributions, the visualization makes transparent how each feature value steers the model’s decision.
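The waterfall reading relies on SHAP's additivity (local accuracy) property: the base value plus the per-feature SHAP values equals the model output for that instance. Written out with the values reported for Fig. 7b, where \(\phi_i(x)\) denotes the SHAP value of feature i and M the number of features (notation introduced here):

```latex
f(x) = \mathbb{E}[f(x)] + \sum_{i=1}^{M} \phi_i(x)
\quad\Longrightarrow\quad
\sum_{i=1}^{M} \phi_i(x) = f(x) - \mathbb{E}[f(x)] = 0.17 - 0.146 = 0.024
```

The individual bars in the plot can therefore be large in either direction, but their net effect for this instance is a small upward shift of 0.024.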

The summary plot in Fig. 7c shows the impact of each feature on the model output, where each point’s horizontal position is its SHAP value for one sample. Leukocytes, Monocytes, Eosinophils, and Platelets were the most influential features, with a wide range of SHAP values, indicating that they contribute substantially to the model’s decisions. The color gradient from red (high feature values) to blue (low feature values) shows how the magnitude of each feature relates to its effect on the output. A SHAP value of large magnitude (positive or negative) implies a strong influence on the prediction, whereas points concentrated near zero suggest minimal impact. The spread of these effects shows that individual feature values shape the model’s predictions in complex, non-uniform ways.

Clinical analysis of COVID dataset

Leukocytes.

- Interpretation: Elevated leukocyte counts are strongly associated with positive COVID-19 diagnoses. The SHAP values indicate that high leukocyte levels consistently increase the model’s predicted probability of infection.
- Clinical implication: This reinforces the role of leukocytosis as a diagnostic indicator in inflammatory states like viral infections. It suggests that automated models can flag patients with elevated leukocyte counts for further virological testing or isolation protocols.

Explainable AI: kidney dataset

A summary plot in Fig. 8a shows the average impact of each feature on the model’s output for the two classes (Class 0 in blue and Class 1 in red). Key features, such as hemo, sc, pcv, and sg, had the highest influence on model predictions, contributing significantly to the differentiation between classes. The balance between the blue and red bars indicates how each feature impacts the two classes, with features such as hemo showing a more substantial impact for Class 1, whereas others such as dm and htn have more balanced effects across both classes.

The SHAP waterfall plot in Fig. 8b shows the contribution of each feature value to the final model prediction. The feature “hemo” has the strongest negative effect, reducing the prediction by 0.17, and “pcv” also contributes negatively, lowering it by 0.11. Conversely, features such as “sg,” “sc,” and “dm” increase the model’s output, with shifts such as +0.04 and +0.03. Starting from the base value E[f(x)] = 0.383, the SHAP values of all features together bring the prediction to f(x) = 0.15. The visualization thus shows the direction and magnitude of each feature’s influence, indicating which variables push the prediction higher or lower and how strongly each one affects the model’s output.
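For the Fig. 8b instance, additivity means that all feature contributions, including hemo (-0.17), pcv (-0.11), and the smaller positive shifts, must sum to 0.15 - 0.383 = -0.233. The brute-force sketch below computes exact Shapley values for a toy three-feature model, using a single all-zero baseline to stand in for "feature absent". The model `toy_model`, its coefficients, and the baseline are hypothetical. Libraries such as SHAP estimate these values efficiently against a background dataset rather than enumerating all 2^M coalitions, so this sketch is for illustration only.

```python
from itertools import combinations
from math import factorial
from typing import Callable, List, Sequence


def exact_shapley(f: Callable[[List[float]], float], x: Sequence[float],
                  baseline: Sequence[float]) -> List[float]:
    """Exact Shapley values of f at x; features outside a coalition take their baseline value."""
    n = len(x)

    def value(coalition: set) -> float:
        z = [x[i] if i in coalition else baseline[i] for i in range(n)]
        return f(z)

    phi = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        contribution = 0.0
        for size in range(n):  # coalition sizes 0 .. n-1 drawn from the other features
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for coalition in combinations(others, size):
                s = set(coalition)
                contribution += weight * (value(s | {i}) - value(s))
        phi.append(contribution)
    return phi


def toy_model(z: List[float]) -> float:
    # Hypothetical model: two linear terms plus one interaction between features 0 and 2.
    return 0.2 * z[0] - 0.5 * z[1] + 0.3 * z[0] * z[2]


x = [1.0, 2.0, 1.0]
baseline = [0.0, 0.0, 0.0]
phi = exact_shapley(toy_model, x, baseline)
# Additivity: baseline output plus the Shapley values reproduces the prediction.
assert abs(toy_model(baseline) + sum(phi) - toy_model(x)) < 1e-12
print(phi)
```

The interaction term 0.3·z0·z2 is split equally between features 0 and 2 (0.15 each). This is how SHAP attributes joint effects that no single feature owns.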

The summary plot in Fig. 8c shows the impact of each feature on the model’s predictions, with color indicating the feature value (red for high and blue for low values) and each point’s horizontal position giving its SHAP value. Highly influential features such as “hemo,” “sc,” and “pcv” span a wide range of SHAP values, indicating substantial positive or negative contributions to the model’s predictions.

Clinical analysis of kidney dataset

Hemoglobin and hematocrit (kidney dataset).

- Interpretation: Lower hemoglobin and hematocrit values push the model toward predicting chronic kidney disease (CKD).
- Clinical implication: These features’ predictive influence mirrors real-world anemia patterns in CKD patients due to decreased erythropoietin production. Early model alerts based on these indicators may trigger timely lab panels or nephrology referrals.

Explainable AI: dengue dataset

The SHAP (SHapley Additive exPlanations) values in Fig. 9a show the influence of various features in predicting dengue outcomes, distinguishing between Class 0 (non-severe) and Class 1 (severe) cases. The feature “Year” has the largest impact, especially on Class 1, suggesting that temporal factors play an important role in determining dengue severity. The “Remarks” feature also contributes strongly to predicting severe cases, indicating that clinical notes provide valuable information. Other key features, such as hemoglobin (HB), presenting symptoms, and differential leukocyte count (DLC), notably influence both classes, but more so Class 1, emphasizing their importance for predicting severe cases. Age, duration of admission (DOA), and liver enzyme levels (SGOT) also contribute meaningfully to the model’s decisions. Features such as platelet count, hepatomegaly, and classic dengue symptoms have less impact but still help distinguish between the classes.

The SHAP waterfall plot in Fig. 9b explains the feature contributions to the model output for a specific instance when predicting dengue severity. “Year” has the largest negative impact, reducing the prediction by 0.02, followed by “Presenting Symptoms” and “HB” (hemoglobin), which contribute further reductions of about 0.02. Clinical remarks (“Remarks”) also lower the prediction, whereas chills is the only feature that slightly increases it (+0.02), indicating that the presence of chills slightly raises the likelihood of severity. Other features, such as age, hematocrit (HCT), MCHC, and elevated IGG, make small negative contributions that pull the prediction score further down. Overall, these features cumulatively reduce the prediction score, moving it toward the non-severe class.

The SHAP summary plot for dengue predictions shows how different features impact the output of the model, as shown in Fig. 9c. Each point represents a SHAP value for a feature’s contribution to the prediction. Features like “Year,” “Remarks,” and “HB” (hemoglobin levels) have a significant impact, with higher values in red indicating a positive influence on severity prediction, while lower values in blue reduce the likelihood of severity. “Presenting Symptoms,” “DLC,” and “DOA” also contribute notably, though with a more balanced distribution between high and low values. Age, liver enzymes (SGOT and SGPT), and chills showed a mixed effect, where both high and low values affected the predictions. Features such as platelet count, hepatomegaly, and classic dengue fever symptoms exhibit smaller SHAP values, suggesting a lesser impact on the prediction, although still contributing. Overall, the plot highlights that a combination of demographic, clinical, and temporal factors interact to shape the model’s decision, with certain features like “Year” and “Remarks” playing a more prominent role in determining dengue severity.

Clinical analysis of dengue dataset

Year and clinical remarks (dengue dataset).

- Interpretation: Temporal variables like “Year” had high SHAP impact on severe dengue classification, suggesting disease severity trends may be seasonally or outbreak-driven. Remarks (clinical observations) often reinforced severity predictions.
- Clinical implication: The model learns not just static physiology but incorporates temporal and context-rich features, enabling risk stratification that adjusts based on epidemiological wave patterns or textual flags in EHRs.

Discussions

While the individual components employed in this study—such as SMOTE, ADASYN, CTGAN, ResNet, and TabNet—are established techniques, their integration within a task-specific hybrid framework represents a novel, purpose-driven approach to improving clinical classification. Unlike prior studies that apply these tools in isolation, the proposed model strategically combines generative, discriminative, and interpretability modules tailored to the structural challenges of small, imbalanced healthcare datasets. The framework not only enhances predictive performance but also ensures model transparency via SHAP and validates generalizability through the TSTR protocol, making it well-suited for deployment in real-world, data-constrained clinical environments.

As shown in Table 6, the baseline model, trained on the imbalanced dataset without synthetic data generation or augmentation, achieved an accuracy of 73.5%, an F1-score of 0.70, and an AUC of 0.72. As expected, performance was suboptimal because the training data were not balanced. Combining SMOTE or ADASYN with TabNet significantly improved classification, with both achieving F1-scores above 0.75 and p-values below 0.05, indicating statistically significant improvements. Performance improved further with Deep-CTGAN, a generative model that produces realistic synthetic tabular data: combined with TabNet, it yielded 81.6% accuracy and an AUC of 0.82. Adding ResNet during the data augmentation step also contributed to the improvement, and the best performance was recorded when Deep-CTGAN and ResNet were combined before classification with TabNet. This configuration reached an accuracy of 83.4%, an F1-score of 0.81, and an AUC of 0.85, with a low standard deviation (± 1.1) and a p-value of 0.005, a highly significant improvement over the baseline. Overall, the results show that integrating synthetic data generation with deep learning-based data augmentation substantially improves classification performance, and every approach achieved a statistically significant gain over the baseline.

Statistical significance was determined using a two-tailed paired t-test with a threshold of p < 0.05. The breakdown of performance metrics in Table 7, complementing the summary in Table 6, shows how the various model and augmentation combinations handle classification errors on imbalanced data. For the COVID-19 dataset, combining KNN with SMOTE and DCTGAN + ResNet produced the highest misclassification rates (8.2% false positives and 10.5% false negatives), leading to a 3.4% decrease in accuracy. This is likely due to the model’s failure to recognize subtle clinical overlaps between asymptomatic and mild cases. XGBoost combined with the NC + DCTGAN + ResNet pipeline showed relatively lower error rates, reflecting its better ability to adapt to structured tabular patterns. For the kidney dataset, KNN was less robust with the NC + Sep + DCTGAN + ResNet combination, with error rates above 10% and the largest accuracy drop (4.5%). Random Forest, although more stable, still recorded a 3.1% decrease with the BS-based method. In the dengue dataset, both RF and XGBoost struggled to distinguish class-specific symptomatology, yielding similar FP and FN rates and accuracy losses of more than 3%. Taken together, these findings indicate that the interplay between feature complexity, synthetic data quality, and model architecture directly shapes failure patterns, particularly under class imbalance.
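A two-tailed paired t-test compares two configurations scored on the same matched units, such as the same cross-validation folds or repeated runs. The text does not state which units were paired, so that pairing is an assumption here. The per-fold F1-scores below are hypothetical and are not results from this study. The manual statistic shows what `scipy.stats.ttest_rel` computes.

```python
import numpy as np
from scipy.stats import ttest_rel

# Hypothetical per-fold F1-scores on the same five folds (illustrative, not results from this study).
baseline_f1 = np.array([0.68, 0.71, 0.70, 0.69, 0.72])
proposed_f1 = np.array([0.80, 0.82, 0.81, 0.79, 0.83])

# Paired test: both configurations are scored on identical folds, so the per-fold differences are tested.
result = ttest_rel(proposed_f1, baseline_f1)  # two-sided alternative by default

# Equivalent manual statistic: mean difference divided by its standard error.
diff = proposed_f1 - baseline_f1
t_manual = diff.mean() / (diff.std(ddof=1) / np.sqrt(len(diff)))

significant = result.pvalue < 0.05
print(f"t = {result.statistic:.2f}, p = {result.pvalue:.2e}, significant: {significant}")
```

Pairing removes fold-to-fold difficulty from the comparison. An unpaired test on the same numbers would treat that variation as noise and be less sensitive.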

As summarized in Table 8, the computational complexity of the proposed framework depends mainly on the size and dimensionality of the dataset and on the complexity of the models used for synthetic data generation and classification. Processing imbalanced datasets with techniques such as SMOTE or undersampling has low overhead, with a complexity of \(O(n \cdot d)\), where n is the number of samples and d is the feature dimension. The most computationally expensive phase is synthetic data generation with GAN-based models such as CTGAN or TimeGAN, whose training alternates updates between the generator and the discriminator, giving a complexity of \(O(e \cdot n \cdot d^{2})\) over e epochs. Data augmentation introduces extra instances through transformations (e.g., adding noise or creating feature variations); its complexity is linear, \(O(n \cdot a)\), and it can be parallelized efficiently. Finally, the classification step, whether it uses deep models such as ResNet or tree models such as Random Forest, has a complexity of \(O(e \cdot n \cdot c)\), where c depends on the classifier’s structure and input dimension. Overall, the framework balances data quality against computational cost, and optimizations such as parallel processing and early stopping can further improve its efficiency. TIEO again partitions the population into tribes and encourages parallel search, with a time complexity of \(O(g \cdot t \cdot p \cdot T)\), where g is the number of generations, t is the number of tribes, and p is the population per tribe.

Table 9 compares runtime and performance for the evaluated configurations. The proposed hybrid model (Deep-CTGAN + ResNet + TabNet) achieves the highest AUC (0.85) and F1-score (0.81) across all datasets, reflecting superior predictive ability and consistency. Although it requires a longer training time (about 42 min), this overhead is justified by the statistically significant improvement in performance (p = 0.005) and its robust generalizability across clinical domains. In contrast, baseline configurations such as SMOTE + TabNet and ADASYN + TabNet train in under 10 min but deliver only moderate improvements. The Deep-CTGAN + TabNet variant, which omits ResNet, offers a middle ground with slightly lower performance and a shorter training time (about 32 min). These results highlight a clear accuracy–efficiency trade-off: the proposed pipeline delivers the highest diagnostic utility at a manageable computational cost, which suits settings where precision is prioritized over minimal runtime.

Table 10 shows that the proposed hybrid framework, combining Deep-CTGAN, ResNet, and TabNet, outperforms all other configurations, delivering the highest AUC (0.85) and F1-score (0.81) while addressing data imbalance and interpretability in a single pipeline. Classical methods such as SMOTE and ADASYN train faster but struggle with minority-class diversity and generalization. Intermediate models (e.g., Deep-CTGAN + TabNet) produce more realistic synthetic data but lack the ResNet enhancement module. Despite its longer runtime, the proposed method offers the best balance of accuracy, robustness, and clinical relevance, making it the most effective solution among the compared approaches.

Conclusion

This research provides a comprehensive solution to class imbalance in machine learning through a framework that incorporates advanced synthetic data generation and augmentation methods. Combining SMOTE, ADASYN, and Deep-CTGAN with ResNet substantially improves the representation of underrepresented classes, helping models generalize across classification tasks. TabNet, designed specifically for tabular data, consistently outperformed Random Forest, XGBoost, and KNN on the imbalanced COVID-19, Kidney, and Dengue datasets. Evaluation under the Train on Synthetic, Test on Real (TSTR) protocol showed that the synthetic data were reliable, with close alignment between the real and synthetic distributions. In addition, explainable AI tools such as SHAP provided detailed insight into feature importance and model behavior, adding transparency to how the models reach their decisions. Together, the synthetic data techniques and explainable AI form a robust framework that both improves model performance and offers clear interpretability. These results lay a foundation for future work, particularly on improving synthetic data techniques and applying the approach to more varied and complex domains.

Scaling up the proposed framework opens several promising directions for research and deployment. One primary path is to apply the model to broader clinical datasets with diverse modalities, e.g., genomics, radiology, and electronic health records, to test its versatility beyond structured tabular data. This would help determine how well Deep-CTGAN with ResNet generalizes in producing context-aware synthetic data suited to various healthcare applications.
Another key area of emphasis is the integration of real-time streams of data to support ongoing learning. By allowing the synthetic data generator to adjust to new patterns as they appear—such as shifts in disease progression, treatment response, or demographics—the model can remain highly relevant and performant in ever-changing clinical settings. This responsiveness could be crucial in predictive diagnosis, particularly in conditions that change rapidly, such as infectious disease.

Data availability

The datasets generated and/or analysed during the current study are available in GitHub and figshare repositories: the Covid dataset at https://github.com/soares-f/ER-CoV/blob/master/raw_new.csv, the Kidney dataset at https://github.com/rajib1346/MLCkd-Source_Code/blob/main/Datasets/kidney_disease.csv, and the Dengue dataset at https://figshare.com/articles/dataset/Data_xlsx/21257040/1?file=37786119.

References

Wang, A. X. et al. Challenges and opportunities of generative models on tabular data. Appl. Soft Comput. 112, 223 (2024).

Pezoulas, V. C. et al. Synthetic data generation methods in healthcare: A review on open-source tools and methods. Comput. Struct. Biotechnol. J. 1, 1 (2024).

Arora, A. & Arora, A. Generative adversarial networks and synthetic patient data: current challenges and future perspectives. Future Healthc. J. 9 (2), 190–193 (2022).

Gonzales, A., Guruswamy, G. & Smith, S. R. Synthetic data in health care: A narrative review. PLOS Digit. Health. 2 (1), e0000082 (2023).

Fallahian, M., Dorodchi, M. & Kreth, K. GAN-Based tabular data generator for constructing synopsis in approximate query processing: challenges and solutions. Mach. Learn. Knowl. Extr. 6 (1), 171–198 (2024).

Apellániz, P. A., Jiménez, A., Galende, B. A., Parras, J. & Zazo, S. Synthetic tabular data validation: a divergence-based approach. IEEE Access (2024).

Kumari, N. & Rajabi, E. A comparative exploration of two diffusion generative models on tabular data synthesis. Int. J. Data Sci. Analytics. 1, 1–12 (2024).

Chatterjee, S. & Byun, Y. C. A synthetic data generation technique for enhancement of prediction accuracy of electric vehicles demand. Sensors 23 (2), 594 (2023).

Akkem, Y., Biswas, S. K. & Varanasi, A. A comprehensive review of synthetic data generation in smart farming by using variational autoencoder and generative adversarial network. Eng. Appl. Artif. Intell. 131, 107881 (2024).

Qian, Z., Davis, R. & van der Schaar, M. Synthcity: a benchmark framework for diverse use cases of tabular synthetic data. Adv. Neural. Inf. Process. Syst. 36, 1 (2024).

Xu, L. & Veeramachaneni, K. Synthesizing tabular data using generative adversarial networks. ArXiv Preprint arXiv:1811.11264 (2018).

Krenmayr, L. et al. GANerAid: realistic synthetic patient data for clinical trials. Inf. Med. Unlocked. 35, 101118 (2022).

Cheng, Y., Wang, C. H., Potluru, V. K., Balch, T. & Cheng, G. Downstream task-oriented generative model selections on synthetic data training for fraud detection models. ArXiv Preprint arXiv:2401.00974 (2024).

Hernandez, M., Epelde, G., Alberdi, A., Cilla, R. & Rankin, D. Synthetic data generation for tabular health records: A systematic review. Neurocomputing 493, 28–45 (2022).

Chundawat, V. S., Tarun, A. K., Mandal, M., Lahoti, M. & Narang, P. A universal metric for robust evaluation of synthetic tabular data. IEEE Trans. Artif. Intell. 5 (1), 300–309 (2022).

Sivakumar, J., Ramamurthy, K., Radhakrishnan, M. & Won, D. GenerativeMTD: A deep synthetic data generation framework for small datasets. Knowl. Based Syst. 280, 110956 (2023).

Ma, J., Dankar, A., Stein, G., Yu, G. & Caterini, A. TabPFGen–tabular data generation with TabPFN. ArXiv Preprint arXiv:2406.05216 (2024).

Villaizán-Vallelado, M., Salvatori, M., Segura, C. & Arapakis, I. Diffusion models for tabular data imputation and synthetic data generation. ArXiv Preprint arXiv:2407.02549 (2024).

Mahendra, M., Umesh, C., Bej, B., Schultz, K. & Wolkenhauer, O. Convex space learning for tabular synthetic data generation. ArXiv Preprint arXiv:2407.09789 (2024).

Peng, Y. H. et al. DreamStruct: Understanding slides and user interfaces via synthetic data generation. ArXiv Preprint arXiv:2410.00201 (2024).

Zhao, Z., Kunar, A., Birke, R., Van der Scheer, H. & Chen, L. Y. CTAB-GAN+: enhancing tabular data synthesis. Front. Big Data. 6, 1296508 (2024).

Panfilo, D. et al. A deep learning-based pipeline for the generation of synthetic tabular data. IEEE Access. 11, 63306–63323 (2023).

Miletic, M. & Sariyar, M. Challenges of using synthetic data generation methods for tabular microdata. Appl. Sci. 14 (14), 5975 (2024).

Papadaki, E., Vrahatis, A. G. & Kotsiantis, S. Exploring innovative approaches to synthetic tabular data generation. Electronics 13 (10), 1965 (2024).

Liu, T., Fan, J., Li, G., Tang, N. & Du, X. Tabular data synthesis with generative adversarial networks: design space and optimizations. VLDB J. 33 (2), 255–280 (2024).

Rashidi, H. H., Albahra, S., Rubin, B. P. & Hu, B. A novel and fully automated platform for synthetic tabular data generation and validation. Sci. Rep. 14 (1), 23312 (2024).

Rodriguez-Almeida, A. J. et al. Synthetic patient data generation and evaluation in disease prediction using small and imbalanced datasets. IEEE J. Biomedical Health Inf. 27 (6), 2670–2680 (2022).

Elshewey, A. M. et al. Enhancing heart disease classification based on Greylag Goose optimization algorithm and long short-term memory. Sci. Rep. 15, 1277 (2025).

Tarek, Z., Alhussan, A. A., Khafaga, D. S., El-Kenawy, E. M. & Elshewey, A. M. A snake optimization algorithm-based feature selection framework for rapid detection of cardiovascular disease in its early stages. Biomed. Signal Process. Control. 102, 107417 (2024).

Elshewey, A. M. et al. EEG-based optimization of eye state classification using modified-BER metaheuristic algorithm. Sci. Rep. 14, 24489 (2024).

Elshewey, A. M. & Osman, A. M. Orthopedic disease classification based on breadth-first search algorithm. Sci. Rep. 14, 23368 (2024).

El-Rashidy, N. et al. Multitask multilayer-prediction model for predicting mechanical ventilation and the associated mortality rate. Neural Comput. Applic. 37, 1321–1343 (2025).

Elkenawy, E. S. M. et al. Greylag Goose optimization and multilayer perceptron for enhancing lung cancer classification. Sci. Rep. 14, 23784 (2024).

Covid Dataset. https://github.com/soares-f/ER-CoV/blob/master/raw_new.csv.

Kidney Dataset. https://github.com/rajib1346/MLCkd-Source_Code/blob/main/Datasets/kidney_disease.csv.

Dengue Dataset. https://figshare.com/articles/dataset/Data_xlsx/21257040/1?file=37786119.

Acknowledgements

We extend our sincere thanks to all participants and staff for their valuable contributions.

Author information

Contributions

Kannan M. prepared the initial draft of the manuscript. D. Umamaheswari: conceptualization; Manimekala: supervision and final drafting; Priya Stella Mary: coding implementation; P. Margaret Savitha: validation and conceptualization; Juliet Rozario: project administration and dataset collection.

Ethics declarations

Competing interests

The authors declare no competing interests.

Ethics declarations

The manuscript has not been submitted to any other journal, and it complies with all applicable publication ethics requirements.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Kannan, M., Umamaheswari, D., Manimekala, B. et al. An enhancement of machine learning model performance in disease prediction with synthetic data generation. Sci Rep 15, 33482 (2025). https://doi.org/10.1038/s41598-025-15019-3

DOI: https://doi.org/10.1038/s41598-025-15019-3