Abstract

Research on non-intrusive load monitoring (NILM) often overlooks the temporal characteristics of V–I trajectories, and identification based on a single feature may fail to separate similar loads. This paper therefore proposes a NILM method based on time-enhanced multidimensional feature visualization. A time axis is added to the V–I trajectory, and the rate of change of voltage and current, the power factor, and the third harmonic are integrated to form a three-dimensional spatiotemporal color V–I trajectory that captures the dynamic characteristics missing from conventional trajectories. An ECA-ResNet34 network is used for load identification, which avoids the network degradation and training difficulties caused by excessive depth in traditional convolutional neural networks (CNNs) and enables efficient monitoring of household loads. The method was validated on the PLAID dataset, achieving an average F1 score of 97.3%. Using transfer learning, the model trained and tested on the PLAID dataset was then tested on the WHITED dataset to demonstrate its generalization ability, and it identified loads with similar V–I trajectories and loads with multiple states effectively.

Introduction

As the economy and society develop, global energy demand continues to grow. According to the International Energy Agency, global energy demand is expected to increase by 25% by 2040, with electricity demand projected to grow by 58% relative to its 2018 level1. Effective load monitoring is a necessary means of reducing energy consumption in households and industrial sectors2.

Intrusive Load Monitoring (ILM) requires installing a sensor on each of a user's electrical devices to gather electricity usage data, which incurs high implementation costs and risks leaking private user information. Hart3 proposed Non-Intrusive Load Monitoring (NILM), which does not require a measurement device for each appliance4. By analyzing the aggregate meter data of a home or factory, NILM can obtain the number, type, and electricity consumption of appliances, saving costs and protecting user privacy. NILM has become a hot research topic and a key technology in smart grids and modern energy management systems5.

Early researchers used single steady-state features for load identification. These steady-state features include active power, reactive power, current waveform, steady-state current harmonics, power harmonics6,7,8,9, and V-I trajectories10, among which V-I trajectories are widely used due to their good identification effects11. H. Y. Lam et al.12 proposed a method of classifying household appliances using the shape features of V-I trajectories. De Baets et al.13 utilized the differences in V-I trajectories to detect unknown devices, but the identification accuracy needs improvement. Hassan et al.14 extracted shape features like the area and cyclic direction of V-I trajectories for identification, but it lacks accuracy for loads with similar V-I trajectories.

Compared to steady-state features, transient features generated during load switching processes have a stronger correlation with device characteristics15 and can provide more information16. Common transient features include changes in power during transients, startup current waveform, voltage noise, and the edge size or steepness of current waveform spikes5. Cox et al.17 used transient voltage waveforms measured at power outlets to identify different loads, but the measurement results were not stable enough; Tsai et al.18 analyzed current transient signals by capturing the on and off transient responses of devices, identifying different operational states of devices, but this approach demands high hardware costs and computational capacity for data collection; Liu et al.19 used the “direction” of voltage and current changes between two consecutive points to increase feature uniqueness; Zheng et al.20, Sun et al.21 effectively distinguished low-power loads using harmonic features.

The identification accuracy of single features is limited. Researchers have improved identification accuracy by integrating multiple features on the basis of V-I trajectories using visualization technology. Liu et al.19 integrated changes in voltage and reactive current, power factor, and V-I trajectories, visually representing the integrated features through color coding technology. Xiang Y et al.22 translated the power and current size of loads into color information through color coding and then integrated it with V-I trajectories, but the resulting image colors were monotonous, causing most loads with lower power or current to concentrate in the same color interval, affecting identification accuracy. Chen et al.23 integrated V-I trajectories, the magnitude and phase angle of odd harmonics, and fundamental amplitude into a feature matrix, constructing a matrix heatmap through colors to avoid feature masking, but it lacks universality.

In fact, a V-I trajectory is formed dynamically: some appliances have similar V-I trajectories whose formation processes nevertheless differ. Previous studies have overlooked these dynamic features. Visualizing the formation process of the trajectory would significantly improve the ability to discriminate between V-I trajectories.

Convolutional neural networks (CNNs) are widely used in image classification and can still recognize images that are rotated, inverted, or deformed24. Jia et al.25 employed CNNs to recognize reconstructed V-I trajectory images, verifying their excellent performance in household load classification. However, as network depth increases, problems such as model degradation and vanishing or exploding gradients may occur. ResNet26,27,28,29 adds residual connections to the CNN to alleviate the vanishing gradients caused by increased depth. Madhushan et al.30 and Zou et al.31 added the SE channel attention mechanism to ResNet, but SE must capture the dependencies among all channels, which is inefficient. ECA-ResNet3432,33 captures cross-channel interactions directly through an adaptive one-dimensional convolution, dynamically adjusts attention to different channels, and flexibly adjusts the scaling of feature maps, both improving learning efficiency and saving computational resources.

In terms of model generalization, Liu et al.19 first applied transfer learning to NILM, achieving knowledge transfer between different domains34,35, and improving identification efficiency.

In summary, this paper proposes a non-intrusive load monitoring method based on time-enhanced multidimensional feature visualization. Firstly, it introduces the time dimension, converting two-dimensional V-I trajectories into three-dimensional ones; then, it uses the H, S, V channels to color-code the three-dimensional V-I trajectories: the H channel (hue) depicts the “direction” of voltage and current changes36, the S channel (saturation) represents power factor, and the V channel (value) represents the third harmonic20,21. The ECA-ResNet34 network is used for load identification, and transfer learning is implemented with ECA-ResNet34. Simulation validation on the public datasets PLAID and WHITED proves the effectiveness of this method.

The main contributions of this paper are as follows:

(1) Addressing the issue of recognizing loads with similar features, this paper integrates steady-state features (power factor, third harmonic), transient features (rate of change of voltage and current), and dynamic features (time) into a feature fusion approach. It proposes a time-enhanced multidimensional feature visualization method for load identification, significantly improving the accuracy of load recognition.

(2) In terms of feature extraction, visualization technology is used to construct dynamic color V-I trajectories based on a time axis, enhancing the resolution of load features.

(3) Using ECA-ResNet34, the method achieves cross-domain transfer learning between different datasets, reducing the demand for training data and improving training efficiency. This approach demonstrates strong generalizability and universality.

Data preprocessing method

This section describes how to obtain V-I trajectories, transform them into three-dimensional spatio-temporal trajectories, and then encode them into colored images in the HSV color space.

V-I trajectory collection

Under the NILM framework, aggregated power, current, and voltage information can be obtained through intelligent sensors. Assuming that only one load’s operational state changes at a certain moment, extracting the current and voltage signals before and after the load state change allows for the acquisition of the load’s V-I trajectory.

The steady-state current and voltage waveforms for T periods before and after the event are extracted, represented as \({v_{on}}\), \({v_{off}}\), \({i_{on}}\) and \({i_{off}}\). Using the fundamental voltage phase angle as a reference, a fast Fourier transform is performed on the periodic voltage waveforms. The intersection of the fundamental voltage with the horizontal axis as the initial sampling point defines the load’s voltage an

The V-I trajectory can be formed by taking the voltage and current within a cycle as the abscissa and ordinate respectively37.

Constructing three-dimensional spatio-temporal trajectories

The original V-I trajectories only retain their spatial shapes, and different loads may have similar trajectories. For example, as shown in Fig. 1, it is difficult to accurately distinguish between (a) a hairdryer and (b) a heater using only the shape of the trajectory.

Figure 2 reflects the formation processes of two types of loads. Although the trajectories appear consistent, the direction of the trajectory cycle differs. Dynamically displaying the V-I trajectories significantly enhances the feature details.

Constructing a three-dimensional coordinate system

First, define a three-dimensional coordinate system, where voltage (V), current (I), and time (t) correspond to the X-axis, Y-axis, and Z-axis, respectively. To ensure comparisons within a unified scale, it is necessary to normalize the voltage and current data.

The normalization formula for voltage is:

\[{V_{norm}}=\frac{{V - {V_{\hbox{min} }}}}{{{V_{\hbox{max} }} - {V_{\hbox{min} }}}}\]

where \({V_{\hbox{max} }}\) and \({V_{\hbox{min} }}\) respectively represent the maximum and minimum values of the voltage. The normalization formula for current is:

\[{I_{norm}}=\frac{{I - {I_{\hbox{min} }}}}{{{I_{\hbox{max} }} - {I_{\hbox{min} }}}}\]

where \({I_{\hbox{max} }}\) and \({I_{\hbox{min} }}\) respectively represent the maximum and minimum values of the current. These normalization steps scale the voltage and current data, which have different physical units, to the same range, facilitating comparison and analysis.

Next, generate a sequence of equidistant timestamps from 0 to the total collection time T. The time interval \(\Delta t\) is determined by the sampling frequency \({f_s}\) with the formula:

\[\Delta t=\frac{1}{{{f_s}}}\]

Each point in the time series can be represented as:

\[{t_i}=i \cdot \Delta t,\quad i=0,1, \ldots ,N - 1\]

where N is the total number of sampling points. This formula ensures that each voltage and current data point has a corresponding timestamp.

Finally, map each voltage and current reading to a point in three-dimensional space. Each point \(P({t_i})\) represents the state of voltage and current at time \({t_i}\), defined as:

\[P({t_i})=\left[ {{V_{norm}}({t_i}),{I_{norm}}({t_i}),{t_i}} \right]\]

where \(P({t_i})\) represents a point in three-dimensional space, \({V_{norm}}({t_i})\) and \({I_{norm}}({t_i})\) are the normalized voltage and current values at time \({t_i}\), and \({t_i}\) is the corresponding timestamp.
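The mapping described in this subsection (min-max normalization, timestamps spaced by \(1/{f_s}\), and one three-dimensional point per sample) can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `min_max` and `spatiotemporal_points` are hypothetical, timestamps are assumed to start at zero, and the toy sampling rate is not taken from the datasets used in this paper.

```python
def min_max(values):
    """Scale a sequence to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    if span == 0:
        return [0.0 for _ in values]
    return [(x - lo) / span for x in values]


def spatiotemporal_points(voltage, current, fs):
    """Return [(V_norm, I_norm, t_i), ...] with t_i = i / fs (assumed to start at 0)."""
    if len(voltage) != len(current):
        raise ValueError("voltage and current must have the same length")
    v_norm = min_max(voltage)
    i_norm = min_max(current)
    dt = 1.0 / fs
    return [(v, c, k * dt) for k, (v, c) in enumerate(zip(v_norm, i_norm))]


# Toy example: four samples at a hypothetical 4 Hz sampling rate.
points = spatiotemporal_points([0.0, 5.0, 10.0, 5.0], [-1.0, 0.0, 1.0, 0.0], fs=4.0)
```

Each tuple in `points` holds the normalized voltage, the normalized current, and the timestamp of one sample, in that order.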

Draw 3D trajectory plot

After the data have been mapped to three-dimensional space, the next step is to draw a three-dimensional trajectory by connecting the points. Given the position of each \({P_i}=\left[ {{V_{norm}}(i),{I_{norm}}(i),t(i)} \right]\) in three-dimensional space, we construct a continuous trajectory that passes through these points. For any pair of consecutive points in the sequence, \(\left[ {{V_{norm}}(i),{I_{norm}}(i),t(i)} \right]\) and \(\left[ {{V_{norm}}(i+1),{I_{norm}}(i+1),t(i+1)} \right]\), the line segment connecting them has the parametric equation

\[P(\lambda )=(1 - \lambda ){P_i}+\lambda {P_{i+1}},\quad 0 \le \lambda \le 1\]

Here, λ is a parameter between 0 and 1, representing the interpolation from \({P_i}\) to \({P_{i+1}}\).

The entire trajectory is formed by sequentially connecting these line segments, so it can be represented mathematically as the collection of the segment equations

\[\text{Trajectory}=\bigcup\limits_{{i=1}}^{{n - 1}} {\left\{ {(1 - \lambda ){P_i}+\lambda {P_{i+1}}\;|\;0 \le \lambda \le 1} \right\}} \]

where n is the total number of data points.
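As an illustration of the piecewise-linear construction, the following sketch interpolates between consecutive points with a parameter λ in [0, 1] and samples the resulting polyline. The helper names `segment_point` and `sample_trajectory`, and the number of samples per segment, are assumptions made for this example rather than details from the paper.

```python
def segment_point(p_start, p_end, lam):
    """Point on the segment from p_start to p_end for 0 <= lam <= 1."""
    if not 0.0 <= lam <= 1.0:
        raise ValueError("lam must lie in [0, 1]")
    return tuple((1.0 - lam) * a + lam * b for a, b in zip(p_start, p_end))


def sample_trajectory(points, steps_per_segment=4):
    """Densely sample the polyline through `points` (n points, n - 1 segments)."""
    if not points:
        return []
    samples = []
    for p_start, p_end in zip(points, points[1:]):
        for s in range(steps_per_segment):
            samples.append(segment_point(p_start, p_end, s / steps_per_segment))
    samples.append(tuple(points[-1]))  # finish with the final data point
    return samples


mid = segment_point((0.0, 0.0, 0.0), (1.0, 0.5, 0.25), 0.5)
dense = sample_trajectory(
    [(0.0, 0.0, 0.0), (1.0, 0.5, 0.25), (0.0, 1.0, 0.5)], steps_per_segment=2
)
```

Setting λ = 0 returns the start of a segment and λ = 1 its end, so consecutive segments join without gaps.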

As shown in Fig. 3, unfolding the V-I trajectory along the time axis reveals much richer detail. The trajectories of (a) incandescent light bulb (ILB), (d) hairdryer, (f) heater, and (h) air conditioner (AC) show significant differences, making them easy to distinguish.

Fig. 3 Three-dimensional spatio-temporal trajectory images of different electrical loads, including (a) incandescent light bulb (ILB), (b) laptop, (c) fridge, (d) hairdryer, (e) fan, (f) heater, (g) compact fluorescent lamp (CFL), (h) air conditioner (AC), (i) microwave, (j) vacuum cleaner, and (k) washing machine.

Color-coding technique

To enhance the feature representation of the 3D V-I trajectories, hue, saturation, and brightness19 are used to encode each trajectory as a color image based on its temporal features, as shown in Fig. 4. The steady-state features (power factor, third harmonic), transient features (rate of change of voltage and current), and dynamic features (time) are fused to form a three-dimensional color V-I trajectory.

(1) Voltage and current rate of change. The hue (H) represents the direction of trajectory movement, defined as

where \(\operatorname{atan2}( \cdot )\) is the four-quadrant inverse tangent function, which calculates the phase angle between two consecutive points in the trajectory and yields an angle in the range 0° to 360°.

A new temporal hue matrix \(H({n_x},{n_y})\) is defined, with elements calculated as the average hue of trajectory segments through grid \(({n_x},{n_y})\). It is expressed as

where \(A=\{ a\;|\;\text{the } a\text{th point crosses grid } ({n_{{I_j}}},{n_{{V_j}}})\}\), and \(| \cdot |\) is the cardinality of a set.

\(H({n_{{I_j}}},{n_{{V_j}}})\) includes temporal features, meaning that it is calculated from the rate of change of trajectory segments in the time series. This provides a time-variant hue value for each grid cell in a 2N × 2N matrix.
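A rough sketch of the hue computation is given below. It assumes that the direction of each segment is taken as the atan2 of the current difference over the voltage difference between consecutive normalized samples, that the angle is rescaled to [0, 1), and that a segment is assigned to the grid cell containing its start point. The paper's exact argument order and cell-assignment rule may differ, and the names `segment_hue` and `hue_matrix` are hypothetical.

```python
import math


def segment_hue(v0, i0, v1, i1):
    """Direction of motion from (v0, i0) to (v1, i1), mapped to [0, 1)."""
    angle = math.atan2(i1 - i0, v1 - v0)           # radians in (-pi, pi]
    return ((angle % math.tau) / math.tau) % 1.0   # wrap to one full turn


def hue_matrix(v_norm, i_norm, size):
    """Average the hue of the segments that start in each cell of a size x size grid."""
    sums = [[0.0] * size for _ in range(size)]
    counts = [[0] * size for _ in range(size)]
    for k in range(len(v_norm) - 1):
        col = min(int(v_norm[k] * size), size - 1)   # voltage selects the column
        row = min(int(i_norm[k] * size), size - 1)   # current selects the row
        sums[row][col] += segment_hue(v_norm[k], i_norm[k], v_norm[k + 1], i_norm[k + 1])
        counts[row][col] += 1
    return [[s / c if c else 0.0 for s, c in zip(s_row, c_row)]
            for s_row, c_row in zip(sums, counts)]


H = hue_matrix([0.0, 0.0], [0.0, 1.0], size=2)
```

Note that a plain arithmetic mean of angles is sensitive to wrap-around near 0° and 360°; a circular mean would be one alternative if that matters in practice.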

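(2) Power factor. Saturation (S) represents the power factor of the load, which is the ratio of active power to apparent power

\[S=\frac{{{P_{active}}}}{{{P_{apparent}}}}=\frac{{\frac{1}{N}\sum\limits_{{n=1}}^{N} {v(n)i(n)} }}{{{V_{rms}}{I_{rms}}}}\]

Here, N is the total number of sampling points, \(v(n)\) and \(i(n)\) are the sampled voltage and current, \({P_{active}}\) is the active power, \({P_{apparent}}\) is the apparent power, and \({V_{rms}}\) and \({I_{rms}}\) are the effective (RMS) values of the load voltage and current, respectively.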
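The ratio of active to apparent power can be checked numerically on synthetic data. The sketch below assumes sampled voltage and current over whole periods; the function names and the 60° phase lag are illustrative choices, not values from the paper.

```python
import math


def rms(samples):
    """Root-mean-square value of a sequence of samples."""
    return math.sqrt(sum(x * x for x in samples) / len(samples))


def power_factor(voltage, current):
    """Active power divided by apparent power over the sampled window."""
    if not voltage or len(voltage) != len(current):
        raise ValueError("voltage and current must be non-empty and equally long")
    p_active = sum(v * i for v, i in zip(voltage, current)) / len(voltage)
    p_apparent = rms(voltage) * rms(current)
    return p_active / p_apparent if p_apparent else 0.0


# Synthetic check: the current lags the voltage by 60 degrees,
# so the ratio should be close to cos(60 deg) = 0.5.
n = 1000
v = [math.sin(2 * math.pi * k / n) for k in range(n)]
i = [math.sin(2 * math.pi * k / n - math.pi / 3) for k in range(n)]
pf = power_factor(v, i)
```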

(3) Third harmonic. Brightness (V) represents harmonic features. A fast Fourier transform (FFT) is used to decompose the steady-state current signal

Here, \({A_1},{A_2}, \ldots ,{A_k}\) are the amplitudes of the harmonics, and \({\theta _1},{\theta _2}, \ldots ,{\theta _k}\) are the phase angles of the harmonics.
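To make the harmonic decomposition concrete, the sketch below evaluates a single bin of the discrete Fourier transform directly, which gives the same result as the corresponding FFT bin. It assumes the input covers exactly one fundamental period; the function name `harmonic` and the test signal are hypothetical, and the returned phase is referenced to a cosine, which may differ from the convention used in the paper.

```python
import cmath
import math


def harmonic(samples, k):
    """Amplitude and phase of the k-th harmonic of exactly one period of samples."""
    n = len(samples)
    coeff = sum(x * cmath.exp(-2j * math.pi * k * m / n) for m, x in enumerate(samples))
    coeff *= 2.0 / n  # scale so that a unit-amplitude sinusoid gives amplitude 1
    return abs(coeff), cmath.phase(coeff)


# Test signal: fundamental of amplitude 1 plus a third harmonic of amplitude 0.3.
n = 256
current = [
    math.sin(2 * math.pi * m / n) + 0.3 * math.sin(3 * 2 * math.pi * m / n)
    for m in range(n)
]
a1, _ = harmonic(current, 1)
a3, _ = harmonic(current, 3)
```

Here `a3` recovers the third-harmonic amplitude of the synthetic signal, which is the quantity the brightness channel is meant to carry.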

Harmonic amplitudes and phase angles are numerically large, so integrating them with the V-I trajectory requires rescaling the voltage, current, amplitude, and phase data. The selected data are normalized as follows:

\[K^{\prime}=\frac{{K - {K_{\hbox{min} }}}}{{{K_{\hbox{max} }} - {K_{\hbox{min} }}}}\]

where K represents the original value, \({K_{\hbox{min} }}\) represents the minimum value within the period, \({K_{\hbox{max} }}\) represents the maximum value within the period, and \(K^{\prime}\) is the normalized value.

Rounding off the normalized data is as follows:

where \({K_{sure}}\) and \({A^\prime}_{f}\) represent the final feature values, \({A_f}\) represents the base magnitude, \({x_f}\) represents the quotient, and \({y_f}\) represents the remainder.
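Once hue, saturation, and brightness values in [0, 1] are available for a grid cell, they can be turned into a displayable color. The sketch below uses Python's standard `colorsys` module for the HSV-to-RGB conversion; the helper names and the example cell values are hypothetical, and the paper's own image-generation pipeline may differ.

```python
import colorsys


def clamp01(x):
    """Clip a value to the closed interval [0, 1]."""
    return max(0.0, min(1.0, x))


def hsv_pixel(hue, saturation, value):
    """Convert one (H, S, V) triple in [0, 1] to an 8-bit RGB tuple."""
    r, g, b = colorsys.hsv_to_rgb(clamp01(hue), clamp01(saturation), clamp01(value))
    return tuple(int(round(255 * c)) for c in (r, g, b))


# Hypothetical cell: hue 0.5 (a direction of 180 degrees), power factor 1.0, full brightness.
pixel = hsv_pixel(0.5, 1.0, 1.0)
```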

Construction of the recognition network

ResNet26 effectively mitigates gradient vanishing and degradation in deep networks by introducing residual blocks and skip connections, significantly enhancing the model’s stability and feature extraction capabilities38. The residual structure increases the depth and accuracy of feature extraction: the lower convolutional layers excel at extracting details such as edges and textures, and the middle layers capture medium-complexity patterns such as color and shape. ResNet34 can effectively extract and distinguish features in a dataset, accurately capturing details of edges, colors, and shapes. It has fewer parameters than ResNet50 or ResNet10139, making it better suited to the data used in this study.

The network structure of ResNet34

Figure 5 presents both the standard network structure and the ResNet structure. The basic residual learning unit neither introduces new parameters nor increases computational complexity.

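The principle of ResNet is as follows:

\[{y_l}=h({x_l})+F({x_l},{W_l}),\quad {x_{l+1}}=f({y_l})\]

where \(h( \cdot )\) is the direct mapping, \(f( \cdot )\) is the activation function, and \(F({x_l},{W_l})\) is the residual function of layer l with weights \({W_l}\).

When \(h({x_l})={x_l}\) and \(f( \cdot )\) is also taken as an identity mapping, the residual block can be represented as

\[{x_{l+1}}={x_l}+F({x_l},{W_l})\]

The relationship between a deeper layer L and a shallower layer l is

\[{x_L}={x_l}+\sum\limits_{{i=l}}^{{L - 1}} {F({x_i},{W_i})} \]

According to the chain rule of derivatives used in backpropagation, the gradient of the loss function \(\varepsilon\) with respect to \({x_l}\) is

\[\frac{{\partial \varepsilon }}{{\partial {x_l}}}=\frac{{\partial \varepsilon }}{{\partial {x_L}}}\frac{{\partial {x_L}}}{{\partial {x_l}}}=\frac{{\partial \varepsilon }}{{\partial {x_L}}}\left( {1+\frac{\partial }{{\partial {x_l}}}\sum\limits_{{i=l}}^{{L - 1}} {F({x_i},{W_i})} } \right)\]

During training, it is extremely unlikely that \(\frac{\partial }{{\partial {x_l}}}\sum\limits_{{i=l}}^{{L - 1}} {F({x_i},{W_i})}\) always equals −1, so the factor in parentheses does not stay at zero and the gradient does not vanish in residual networks. Moreover, the term \(\frac{{\partial \varepsilon }}{{\partial {x_L}}}\) implies that the gradient at layer L can be transmitted directly to any shallower layer l.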
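The effect of the skip connection on the backpropagated gradient can be illustrated with a scalar toy model. The sketch below is not the network used in this paper: it assumes a hypothetical one-dimensional residual function F(x, w) = w · tanh(x) and compares the derivative of the final activation with respect to the input for a chain with skip connections and for the same chain without them.

```python
import math


def chain_gradients(x0, weights):
    """Return (gradient with skip connections, gradient without) for F(x, w) = w * tanh(x)."""
    x_res, x_plain = x0, x0
    g_res, g_plain = 1.0, 1.0
    for w in weights:
        d_res = w * (1.0 - math.tanh(x_res) ** 2)      # dF/dx on the residual path
        d_plain = w * (1.0 - math.tanh(x_plain) ** 2)  # dF/dx on the plain path
        g_res *= 1.0 + d_res    # residual layer: x_next = x + F(x, w)
        g_plain *= d_plain      # plain layer:    x_next = F(x, w)
        x_res = x_res + w * math.tanh(x_res)
        x_plain = w * math.tanh(x_plain)
    return g_res, g_plain


# Twenty layers with small weights: the plain chain's gradient collapses, the residual one does not.
g_res, g_plain = chain_gradients(0.5, [0.1] * 20)
```

With positive weights every residual factor is at least 1, so `g_res` stays above 1, while `g_plain` is a product of twenty factors no larger than 0.1 each.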

The structure of ResNet34 is shown in Fig. 6.

The features extracted by the convolutional layers stacked in a CNN are high-dimensional, and some of them may be lost as depth increases. The residual blocks in ResNet34 skip over some convolutional layers, blending the features from before layer n with the convolutional features after layer n, thereby preserving both high-dimensional and low-dimensional features and improving network performance. Global Average Pooling (GAP) is also used to replace the fully connected (fc) layers of classic CNNs. GAP strengthens the correspondence between feature maps and categories, making it more suitable for convolutional structures. Additionally, GAP has no parameters to optimize, which helps avoid overfitting at this layer. Furthermore, GAP aggregates spatial information, making it more robust to spatial transformations of the input.

ECA mechanism

As shown in Fig. 7, the ECA (Efficient Channel Attention) module is applied directly after the global average pooling layer. Instead of the fully connected layers used by SE attention, it applies a one-dimensional convolution across the channel descriptors, with a kernel size that is chosen adaptively. The module avoids dimensionality reduction and effectively captures local cross-channel interactions, achieving significant results with only a few parameters.
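The channel-attention step can be sketched without a deep learning framework. The example below assumes the adaptive kernel-size rule reported in the ECA-Net paper (the nearest odd number derived from (log2 C + b) / γ with γ = 2 and b = 1) and uses a hypothetical, fixed convolution kernel where a trained network would use learned weights; the function names are illustrative.

```python
import math


def eca_kernel_size(channels, gamma=2, b=1):
    """Odd 1-D kernel size derived from the channel count (ECA-Net style rule)."""
    t = int(abs((math.log2(channels) + b) / gamma))
    return t if t % 2 else t + 1


def eca_weights(channel_means, kernel):
    """Zero-padded 1-D convolution across channel descriptors, followed by a sigmoid."""
    k = len(kernel)
    pad = k // 2
    padded = [0.0] * pad + list(channel_means) + [0.0] * pad
    out = []
    for c in range(len(channel_means)):
        z = sum(kernel[j] * padded[c + j] for j in range(k))
        out.append(1.0 / (1.0 + math.exp(-z)))
    return out


k64 = eca_kernel_size(64)
# Channel descriptors would come from global average pooling; these values are made up.
attention = eca_weights([0.2, -0.1, 0.4, 0.0], kernel=[0.5, 1.0, 0.5])
```

Each output weight lies in (0, 1) and would multiply the corresponding channel of the feature map.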

Transfer learning

Transfer learning can be categorized into Appliance Transfer Learning (ATL) and Cross-Domain Transfer Learning (CTL). ATL refers to transferring from one device to another, while CTL involves transferring from one data domain to another. In this paper, the model trained and tested on the PLAID dataset will be transferred to the WHITED dataset for further testing, achieving cross-domain transfer learning.

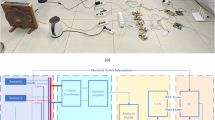

Figure 8 illustrates the model transfer experiment scheme for NILM. By treating the convolutional and fully connected layers of the pretrained ECA-ResNet34 model as a cascaded feature extractor, it is assumed that the initial layers can be transferred between different tasks. As long as the load features are converted into visual representations, and the last fully connected layer is replaced with one that matches the size of the load categories, this experimental scheme can be generalized to any other dataset to achieve cross-domain transfer learning.

Identification process

The identification process of this paper is shown in Fig. 9 and includes data preprocessing, feature fusion, and load identification.

Firstly, voltage and current data from various household appliances are collected, and the data are normalized to standardize the data range. Then, the voltage and current waveforms are converted into three-dimensional V-I trajectories. At the same time, color coding technology is used to color the three-dimensional V-I trajectories, combining three types of feature data (trajectory motion information, power factor, and harmonic characteristics) to enhance the expressiveness of the data. Finally, these data are fed into the ECA-ResNet34 network for training and testing to achieve effective identification of household appliance loads.

Experiment analysis

All test cases were run on a computer with Windows 10, PyTorch 1.7, and NVIDIA CUDA 11.8, using the following hardware: an Intel i5-13400 CPU and an NVIDIA GeForce RTX 3060 Ti GPU.

Dataset

In this section, we use two public datasets, PLAID40 and WHITED41, to validate the proposed load signature and machine learning methods. In this paper, the model is trained and tested on the PLAID dataset and then transferred to the WHITED dataset for further testing. The PLAID dataset includes 1,074 current and voltage data samples from 11 different types of devices in over 55 households in Pittsburgh, Pennsylvania, USA, with a sampling rate of 30 kHz. The WHITED dataset is a global transient energy dataset for home and industrial applications, containing 1,259 data samples from 54 different types of devices from various regions around the world, with a sampling rate of 44.1 kHz. The PLAID dataset shows richer internal variations of devices, whereas the WHITED dataset covers a wider range of differences among devices. Both are recognized high-resolution electrical measurement datasets used for NILM assessment.

Model parameter settings and training

The training process uses the Adam optimizer with default parameters β1 = 0.9 and β2 = 0.999 and a learning rate of 0.001. The basic parameters for training and transfer learning in all experiments are listed in Table 1.

The sample set is constructed as described in this paper: 80% of the samples of each device type form the training set, and the remaining 20% serve as the test set. The loss curve of the ECA-ResNet34 model trained on the PLAID dataset is shown in Fig. 10.
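A per-class 80/20 split of this kind can be sketched as follows. The function name, the fixed random seed, and the toy labels are assumptions for illustration; the paper does not state how samples were shuffled or which were assigned to each subset.

```python
import random
from collections import defaultdict


def stratified_split(labels, train_fraction=0.8, seed=0):
    """Return (train_indices, test_indices), splitting each class separately."""
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    train, test = [], []
    for label in sorted(by_class):
        indices = by_class[label]
        rng.shuffle(indices)
        cut = int(round(train_fraction * len(indices)))
        train.extend(indices[:cut])
        test.extend(indices[cut:])
    return train, test


# Toy label list: 10 samples of one device type and 5 of another.
labels = ["fridge"] * 10 + ["heater"] * 5
train_idx, test_idx = stratified_split(labels)
```

Splitting within each class keeps rare device types represented in both subsets, which matters for datasets with uneven class sizes.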

Results on PLAID

The assessment uses confusion matrices42,43, where each row of the matrix represents instances of a predicted category and each column represents instances of an actual category. The diagonal cells display the number of correct predictions for each category, that is, the number of matches between actual and predicted categories. Values off the diagonal indicate where the classifier made errors, allowing mispredictions to be inspected visually. The values in the far-right column of the matrix give the accuracy for each predicted category, denoted Precision (P), while the values in the bottom row give the accuracy for each actual category, denoted Recall (R). The cell in the bottom right corner shows the overall accuracy.

This paper selects Precision (P), Recall (R), the harmonic mean of Precision and Recall (F1), and overall Accuracy (A) as evaluation metrics. From the confusion matrix, TP, FP, FN, and TN can be calculated, where TP indicates that the prediction is true and the actual result is also true; FP indicates that the prediction is true but the actual result is false; FN indicates that the prediction is false but the actual result is true; TN indicates that the prediction is false and the actual result is also false. Based on TP, FP, FN, and TN, P, R, F1, and A can be calculated. The formulas for each metric are as follows:

Precision (P): Measures the proportion of actual positives among instances predicted as positive.

\[P=\frac{{TP}}{{TP+FP}}\]

Recall (R): Measures the proportion of actual positives that were correctly predicted as positive.

\[R=\frac{{TP}}{{TP+FN}}\]

F1-score (F1): The harmonic mean of precision and recall, serving as a balance between the two metrics. The F1-score is particularly useful when precision and recall need to be balanced.

\[F1=\frac{{2 \times P \times R}}{{P+R}}\]

Overall Accuracy (A): Measures the proportion of correct predictions among all predictions, i.e., the proportion of instances (regardless of positive or negative class) that the model predicted correctly out of all instances.

\[A=\frac{{TP+TN}}{{TP+FP+FN+TN}}\]
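The four metrics can be computed per class directly from a confusion matrix. The sketch below follows the convention described in this section (rows are predicted categories, columns are actual categories); the function name and the 2 × 2 toy matrix are hypothetical and unrelated to the reported results.

```python
def per_class_metrics(confusion):
    """confusion[p][a] = number of samples predicted as class p whose true class is a."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    results = []
    for c in range(n):
        tp = confusion[c][c]
        fp = sum(confusion[c]) - tp                        # rest of the predicted row
        fn = sum(confusion[r][c] for r in range(n)) - tp   # rest of the actual column
        tn = total - tp - fp - fn
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        accuracy = (tp + tn) / total if total else 0.0
        results.append(
            {"precision": precision, "recall": recall, "f1": f1, "accuracy": accuracy}
        )
    return results


toy = [[8, 2],
       [1, 9]]
metrics = per_class_metrics(toy)
macro_f1 = sum(m["f1"] for m in metrics) / len(metrics)
```

Averaging the per-class F1 values, as in `macro_f1`, gives the macro-averaged score (F-macro) used to summarize multi-class performance.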

The confusion matrix on the PLAID dataset is shown in Fig. 11.

According to Table 2, the average F1 score reached 0.973. Among them, the F1 scores for compact fluorescent lamps and heaters reached 1 and 0.988, respectively. The V-I trajectories of heaters and hair dryers are very similar, with F1 scores of 0.988 and 0.987, respectively. Multi-state loads such as air conditioners, refrigerators, microwaves, and washing machines achieved F1 scores of 0.929, 0.870, 0.993, and 0.963, respectively.

As Table 2 shows, the precision (P), recall (R), F1, and accuracy (A) for the various types of loads mostly remain above 0.95, with averages of 0.977, 0.969, 0.973, and 0.997, respectively. Figure 12 visualizes the F1-score (%).

Comparative analysis

To verify that the inclusion of temporal information can significantly improve the accuracy of identification, experiments were conducted under the exact same conditions as this study, excluding temporal information, and the identification accuracy was represented using confusion matrices, as shown in Fig. 13.

Tables 2 and 3 indicate that the average F1 score without time features was 0.850, which increased to 0.973 after incorporating time features, an improvement of 0.123. Additionally, the minimum F1 score increased from 0.494 to 0.870, an improvement of 0.376. The F1 scores for multi-state loads such as air conditioners, refrigerators, and washing machines were 0.771, 0.494, and 0.814, respectively, which improved to 0.929, 0.870, and 0.963 after adding time features, increases of 0.158, 0.376, and 0.149. Heaters and hair dryers, whose trajectories are similar, had F1 scores of 0.702 and 0.917; these improved to 0.988 and 0.987 with time features, increases of 0.286 and 0.070, showing that incorporating time features effectively enhances identification resolution.

Figures 11 and 13 show the confusion matrices for the two methods on the PLAID dataset, demonstrating that adding time features can effectively reduce recognition confusion. Refrigerators, as multi-state loads, showed more confusion with air conditioners, representing 1.0% (11 instances), but this was reduced to 0.2% (2 instances) after adding time features, significantly lowering the confusion probability. The hair dryer and heater, being devices with similar trajectory characteristics, had confusion incidents of 0.9% (10 instances) and 0.3% (3 instances), which dropped to only 0.1% (1 instance) after incorporating time features. Moreover, confusion between refrigerators and incandescent bulbs, and between laptops and compact fluorescent lamps, occurred at rates of 0.6% (6 instances) and 1.3% (14 instances), respectively, with no confusion after adding time features. In summary, incorporating time features can make trajectory differences more pronounced, providing significant advantages in distinguishing multi-state devices and devices with similar trajectories.

From Table 3, the average values of P, R, F1, and A over the various loads without time features are 0.856, 0.856, 0.850, and 0.983, respectively. These values are lower than those obtained with time features, which are 0.977, 0.969, 0.973, and 0.997. Figure 14 visualizes the F1-scores (%).

Comparison of the features of this paper with other methods

To verify the universality of the features, this study combined the feature set described herein with the identification models from references19,23 and validated them experimentally on the PLAID dataset, using the F1-score as the evaluation metric. The results are shown in Fig. 15. The F-macro scores of the three models using this article’s feature set were 97.3%, 95.1%, and 94.6%, respectively, demonstrating the effectiveness and universality of the features. The F1-scores of this study’s model were higher for all devices than those of the other two models, indicating the superiority of our identification model. For all three models, air conditioners and fridges generally had lower F1-scores than other devices. This is because continuously variable devices have variable power consumption characteristics and no fixed number of states, making them more prone to confusion with other loads; air conditioners, with operating conditions such as cooling and heating modes, are easily confused with refrigerators and hair dryers; and the working frequency of refrigerators usually changes with temperature, leading to uncertain V-I trajectories.

Results on WHITED

To verify the feasibility of transfer learning, the transfer model described in Sect. 3.3 of this article was experimentally validated on the WHITED dataset. In the ECA-ResNet34 model, trained on the PLAID dataset, all layers except the last fully connected layer were extracted, and a new fully connected layer replaced the original model’s last one for a new device classification task.

The WHITED dataset contains 54 types of loads. To assess the classification performance for each device, we chose to use the F1-score. As shown in Fig. 16, it is clear that our method has excellent classification performance for all loads, with most F1-scores remaining above 99% and an F-macro of 98.8%. Among them, the LaserPrinter exhibited irregular waveform fluctuations, resulting in lower accuracy. Unlike the results on the PLAID dataset, air conditioners and fridges had high accuracy, which is because this dataset only included one working mode for these devices.

Example analysis

This section compares methods that use the same datasets but select different features or different recognition models, and evaluates the effectiveness of each method. The results are shown in Table 4.

Chen et al.23 fused V–I trajectories, the amplitudes and phase angles of odd harmonics, and the fundamental amplitude into a feature matrix, then constructed a heatmap from the magnitudes of its values to address the feature masking caused by feature fusion, achieving an F-macro of 96% on both the REDD and PLAID datasets. Han et al.45 proposed a CGAN algorithm for detecting unknown devices and identifying known ones. V–I trajectory features are fed to a capsule network in the generator, which produces probability capsules that represent the latent features of devices; multiple learnable Gaussian priors learn the feature distribution of each known device and keep the devices separated in the feature space. The method achieved F-macro scores of 95.3% and 98.31% on the PLAID and WHITED datasets, respectively. Wang et al.46 used the VGG16 convolutional neural network for a preliminary classification of load V–I trajectories, followed by maximum-relevance minimum-redundancy feature selection and a support vector machine in a two-stage identification scheme, achieving an average F1 score of 0.982 on the PLAID dataset. However, as a deeper convolutional network, VGG16 is parameter-intensive and computationally complex, and is less computationally efficient than the method described in this paper. Shi et al.47 used an LSTM denoising autoencoder to decompose the mixed current signals of household busbars into independent load current signals, combined them with voltage signals to generate multi-cycle color V–I trajectories, and recognized these with an optimized CNN, reporting a result of 0.87.

Lu et al.48 proposed a Siamese network that combines a fixed CNN with a backpropagation (BP) network for online retraining, extracting low-dimensional features of V–I trajectories and fine-tuning the BP network, and achieved an average F1 score of 0.992 on the PLAID dataset. Although this average F1 score is slightly higher than that of the method in this paper, the approach does not employ transfer learning and has limited generality. Ou et al.49 proposed a residual convolutional neural network model based on energy normalization and squeeze-excitation blocks (EN-SE-RECNN) that uses weighted V–I trajectories, Markov transition fields, and GAF images based on current spectral sequences, achieving average F1 scores of 0.9744, 0.9543, and 0.9684 on the PLAID, WHITED, and HRAD datasets, respectively. Li et al.50 combined the short-time Fourier transform (STFT) for time-frequency feature extraction with a sparse stacked autoencoder (SSAE) that optimizes the extracted features, and built a load identification architecture from a Bi-LSTM and DTW model, obtaining an average F1 score of 0.9578 on the PLAID dataset. The method proposed in this paper achieves average F1 scores of 0.973 and 0.988, which compare favorably with most of the methods above.
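Because the comparison above rests on average F1 and F-macro scores, a minimal sketch of how such a score can be computed may be helpful. It assumes that "average F1" means the unweighted mean of per-class F1 scores (macro averaging); the function name `macro_f1` and the appliance labels are hypothetical and are not taken from the paper or from the cited works.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (assumed meaning of 'average F1')."""
    labels = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1          # correct prediction for class t
        else:
            fp[p] += 1          # class p was predicted wrongly
            fn[t] += 1          # class t was missed
    scores = []
    for c in labels:
        denom = 2 * tp[c] + fp[c] + fn[c]
        # F1 = 2TP / (2TP + FP + FN); defined as 0 when the class never occurs
        scores.append(2 * tp[c] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical labels, for illustration only.
y_true = ["fan", "fan", "heater", "heater", "laptop", "laptop"]
y_pred = ["fan", "heater", "heater", "heater", "laptop", "fan"]
score = macro_f1(y_true, y_pred)
print(round(score, 4))
```

Macro averaging weights every appliance class equally, so a class with few samples influences the score as much as a frequent one; this is one reason scores reported under different averaging conventions are not directly comparable.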

Conclusion

This paper proposes a NILM technique based on time-enhanced multidimensional feature visualization, which adds color coding and temporal characteristics to V–I trajectories to form three-dimensional spatiotemporal V–I trajectories, visualizing steady-state, transient, and dynamic features together. This improvement is particularly important for distinguishing V–I trajectories that appear similar but have different formation processes: with the added time dimension, the formation process of a V–I trajectory can be observed directly, so similar loads that are difficult to identify with traditional methods can be told apart. For image recognition, a transfer learning-based ECA-ResNet34 recognition model is constructed, which avoids the network degradation problem of deep CNNs and allows a single classifier model to recognize multiple loads, reducing training time and improving computational efficiency. Finally, validation on the PLAID and WHITED datasets shows that the proposed method achieves high accuracy relative to other methods. However, designing NILM methods better suited to multi-state loads remains a challenging problem that will require more advanced modeling methods in future work.
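As an illustration of the time-axis idea summarized above, the following is a minimal sketch under stated assumptions, not the paper's implementation. It assumes that one cycle of voltage and current samples is min-max normalized and that the sample index, scaled to [0, 1], serves as the third coordinate. The function names, the synthetic waveforms, and the normalization choice are hypothetical, and the color channels (rate of change, power factor, third harmonic) are omitted.

```python
import math


def min_max(values):
    """Scale a sequence to [0, 1]; a constant sequence maps to all zeros."""
    lo, hi = min(values), max(values)
    span = hi - lo
    return [(v - lo) / span if span else 0.0 for v in values]


def spatiotemporal_trajectory(voltage, current):
    """Return (v, i, t) points: a V-I trajectory with a normalized time axis."""
    if len(voltage) != len(current) or len(voltage) < 2:
        raise ValueError("voltage and current need equal length >= 2")
    n = len(voltage)
    v = min_max(voltage)
    i = min_max(current)
    t = [k / (n - 1) for k in range(n)]  # time runs from 0 to 1 over the cycle
    return list(zip(v, i, t))


# Synthetic single cycle (assumption): current lags voltage by 60 degrees.
n = 64
voltage = [math.sin(2 * math.pi * k / n) for k in range(n)]
current = [0.5 * math.sin(2 * math.pi * k / n - math.pi / 3) for k in range(n)]
points = spatiotemporal_trajectory(voltage, current)
print(points[0], points[-1])
```

Two loads whose flat V–I loops overlap can still differ in the order in which the loop is traced, and the third coordinate makes that order visible as a separation along the time axis.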

Data availability

The datasets generated and/or analyzed during the current study are available in the DataSet repository, https://github.com/Yym212612/DataSet.

References

Yan, L., Tian, W., Han, J. & Li, Z. Event-driven two-stage solution to non-intrusive load monitoring. Appl. Energy. 311, 118627 (2022).

Tu, J., Zhou, M., Li, G. & Luan, K. A potential game based distributed optimization strategy for the electricity retailer considering residential demand response. Proc. CSEE. 40 (2), 400–410 (2020).

Hart, G. W. Nonintrusive appliance load monitoring. Proc. IEEE 80 (12), 1870–1891 (1992).

Tekler, Z. D. et al. Near-real-time plug load identification using low-frequency power data in office spaces: experiments and applications. Appl. Energy. 275, 115391 (2020).

Deng, X. P., Zhang, G. Q., Wei, Q. L., Peng, W. & Li, C. D. A survey on the non-intrusive load monitoring. Acta Autom. Sin. 48, 644–663 (2022).

Bonfigli, R. et al. Non-intrusive load monitoring by using active and reactive power in additive factorial hidden Markov models. Appl. Energy. 208, 1590–1607 (2017).

Liang, J., Ng, S. K., Kendall, G. & Cheng, J. W. Load signature study—part I: Basic concept, structure, and methodology. IEEE Trans. Power Delivery. 25 (2), 551–560 (2009).

He, D., Du, L., Yang, Y., Harley, R. & Habetler, T. Front-end electronic circuit topology analysis for model-driven classification and monitoring of appliance loads in smart buildings. IEEE Trans. Smart Grid. 3 (4), 2286–2293 (2012).

Wichakool, W., Avestruz, A. T., Cox, R. W. & Leeb, S. B. Modeling and estimating current harmonics of variable electronic loads. IEEE Trans. Power Electron. 24 (12), 2803–2811 (2009).

Mulinari, B. M. et al. A new set of steady-state and transient features for power signature analysis based on VI trajectory. In 2019 IEEE PES Innovative Smart Grid Technologies Conference-Latin America (ISGT Latin America) 1–6 (2019).

Gao, J., Kara, E. C., Giri, S. & Bergés, M. A feasibility study of automated plug-load identification from high-frequency measurements. In IEEE Global Conference on Signal and Information Processing (GlobalSIP) 220–224 (IEEE, 2015).

Lam, H. Y., Fung, G. S. K. & Lee, W. K. A novel method to construct taxonomy electrical appliances based on load signatures. IEEE Trans. Consum. Electron. 53 (2), 653–660 (2007).

De Baets, L., Develder, C., Dhaene, T. & Deschrijver, D. Detection of unidentified appliances in non-intrusive load monitoring using siamese neural networks. Int. J. Electr. Power Energy Syst. 104, 645–653 (2019).

Hassan, T., Javed, F. & Arshad, N. An empirical investigation of VI trajectory based load signatures for non-intrusive load monitoring. IEEE Trans. Smart Grid. 5 (2), 870–878 (2013).

Chang, H. H. Non-intrusive demand monitoring and load identification for energy management systems based on transient feature analyses. Energies 5 (11), 4569–4589 (2012).

Reddy, R., Garg, V. & Pudi, V. A feature fusion technique for improved non-intrusive load monitoring. Energy Inf. 3 (1), 1–15 (2020).

Cox, R., Leeb, S. B., Shaw, S. R. & Norford, L. K. Transient event detection for nonintrusive load monitoring and demand side management using voltage distortion. In Twenty-First Annual IEEE Applied Power Electronics Conference and Exposition, APEC’06 7 (2006).

Tsai, M. S. & Lin, Y. H. Modern development of an adaptive non-intrusive appliance load monitoring system in electricity energy conservation. Appl. Energy. 96, 55–73 (2012).

Liu, Y., Wang, X. & You, W. Non-intrusive load monitoring by voltage–current trajectory enabled transfer learning. IEEE Trans. Smart Grid. 10 (5), 5609–5619 (2018).

Zheng, Z., Chen, H. & Luo, X. A supervised event-based non-intrusive load monitoring for non-linear appliances. Sustainability 10 (4), 1001 (2018).

Zhou, M., Song, X., Tu, J., Li, G. & Luan, K. Residential electricity consumption behavior analysis based on non-intrusive load monitoring. Power Syst. Technol. 40 (12), 3912–3917 (2016).

Xiang, Y. et al. Non-invasive load identification algorithm based on color coding and feature fusion of power and current. Front. Energy Res. 10, 899669 (2022).

Chen, T., Qin, H., Li, X., Wan, W. & Yan, W. A non-intrusive load monitoring method based on feature fusion and SE-ResNet. Electronics 12 (8), 1909 (2023).

Gai, R. L., Cai, J. R. & Wang, S. Y. Research review on image recognition based on deep learning. J. Chin. Comput. Syst. 42, 1980–1984 (2021).

Jia, D., Li, Y., Du, Z., Xu, J. & Yin, B. Non-intrusive load identification using reconstructed voltage–current images. IEEE Access. 9, 77349–77358 (2021).

He, K., Zhang, X., Ren, S. & Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition 770–778 (2016).

Zhang, Y. et al. A novel non-intrusive load monitoring method based on ResNet-seq2seq networks for energy disaggregation of distributed energy resources integrated with residential houses. Appl. Energy. 349, 121703 (2023).

Oh, C. & Jeong, J. Non-intrusive load monitoring based on regularized ResNet with multivariate control chart. In Computational Science and Its Applications–ICCSA 2020: 20th International Conference, Cagliari, Italy, July 1–4, Proceedings, Part II, vol. 20, 646–661 (Springer, 2020).

Shastri, H. & Batra, N. Neural network approaches and dataset parser for NILM toolkit. In Proceedings of the 8th ACM International Conference on Systems for Energy-Efficient Buildings, Cities, and Transportation 172–175 (2021).

Madhushan, N., Perera, U., Wijewardhana, U. & Dharmaweera, N. Real-time non-intrusive load monitoring for low-power appliances using odd current harmonics. Int. J. Electr. Electron. Eng. Telecommunications. 12, 385–394 (2023).

Zou, L., Liu, W., Lei, M. & Yu, X. An improved residual network for pork freshness detection using near-infrared spectroscopy. Entropy 23 (10), 1293 (2021).

Li, K., Feng, J., Xing, Y. & Wang, B. A self-training multi-task attention method for NILM. In 2022 IEEE 11th Data Driven Control and Learning Systems Conference (DDCLS) 11–15 (IEEE, 2022).

Yu, H., Pang, C., Xuan, Y., Chen, Y. & Zeng, X. Sequence-to-sequence based beta-VAE combined with IECA attention mechanism for energy disaggregation algorithm. IEEE Trans. Instrum. Meas. 72, 1–13 (2023).

Zhou, Z. et al. A novel transfer learning-based intelligent nonintrusive load-monitoring with limited measurements. IEEE Trans. Instrum. Meas. 70, 1–8 (2020).

D’Incecco, M., Squartini, S. & Zhong, M. Transfer learning for non-intrusive load monitoring. IEEE Trans. Smart Grid. 11 (2), 1419–1429 (2019).

Han, Y., Li, K., Feng, H. & Zhao, Q. Non-intrusive load monitoring based on semi-supervised smooth teacher graph learning with voltage–current trajectory. Neural Comput. Appl. 34 (21), 19147–19160 (2022).

Wang, A. L., Chen, B. X., Wang, C. G. & Hua, D. Non-intrusive load monitoring algorithm based on features of V–I trajectory. Electr. Power Syst. Res. 157, 134–144 (2018).

Zaeemzadeh, A., Rahnavard, N. & Shah, M. Norm-preservation: why residual networks can become extremely deep? IEEE Trans. Pattern Anal. Mach. Intell. 43 (11), 3980–3990 (2020).

Zhao, Z. et al. Research on blast furnace air outlet state identification model based on improved resNet18. Arab. J. Sci. Eng. 1, 1–15 (2024).

Gao, J., Giri, S., Kara, E. C. & Bergés, M. Plaid: a public dataset of high-resolution electrical appliance measurements for load identification research: demo abstract. In Proceedings of the 1st ACM Conference on Embedded Systems for Energy-Efficient Buildings 198–199 (2014).

Kahl, M., Haq, A. U., Kriechbaumer, T. & Jacobsen, H. A. Whited-a worldwide household and industry transient energy data set. In 3rd International Workshop on Non-intrusive Load Monitoring 1–4 (2016).

De Baets, L., Ruyssinck, J., Develder, C., Dhaene, T. & Deschrijver, D. Appliance classification using VI trajectories and convolutional neural networks. Energy Build. 158, 32–36 (2018).

Sadeghianpourhamami, N., Ruyssinck, J., Deschrijver, D., Dhaene, T. & Develder, C. Comprehensive feature selection for appliance classification in NILM. Energy Build. 151, 98–106 (2017).

Wang, S. X., Guo, L. Y., Chen, H. & Deng, X. Y. Non-intrusive load identification algorithm based on feature fusion and deep learning. Autom. Electr. Power Syst. 44 (9), 103–110 (2020).

Han, Y., Li, K., Wang, C., Si, F. & Zhao, Q. Unknown appliances detection for non-intrusive load monitoring based on conditional generative adversarial networks. IEEE Trans. Smart Grid. 14 (6), 4553–4564 (2023).

Wang, L. et al. Non-intrusive load monitoring method with multi-state characterization of loads. Power Syst. Technol. 48 (11), 4417–4426 (2024).

Shi, J., Zhi, D. & Fu, R. Research on a non-intrusive load recognition algorithm based on high-frequency signal decomposition with improved VI trajectory and background color coding. Mathematics 12, 30 (2024).

Lu, L., Kang, J. S., Meng, F. & Yu, M. Non-intrusive load identification based on retrainable siamese network. Sensors 24, 2562 (2024).

Ou, L. et al. A residual convolutional neural network with multi-block for appliance recognition in non-intrusive load identification. Energy Build. 281, 112749 (2023).

Li, M., Tu, Z., Wang, J., Xu, P. & Wang, X. Dynamic time warping optimization-based non-intrusive load monitoring for multiple household appliances. Int. J. Electr. Power Energy Syst. 159, 110002 (2024).

Author information

Contributions

Y.Y. wrote all manuscript text. T.C. and J.G. assisted in experimental design. S.G. and P.Y. jointly proofread the manuscript. All authors reviewed the manuscript.

Ethics declarations

Competing interests

The authors declare no competing interests.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution-NonCommercial-NoDerivatives 4.0 International License, which permits any non-commercial use, sharing, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if you modified the licensed material. You do not have permission under this licence to share adapted material derived from this article or parts of it. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by-nc-nd/4.0/.

About this article

Cite this article

Chen, T., Yuan, Y., Gao, J. et al. Non-intrusive load monitoring based on time-enhanced multidimensional feature visualization. Sci Rep 15, 4800 (2025). https://doi.org/10.1038/s41598-025-89191-x

DOI: https://doi.org/10.1038/s41598-025-89191-x